Abstract

Critical thinking (CT) is widely regarded as an important competence to acquire in education. Exposing students to problems and engaging them in collaboration have been shown to promote CT processes. These elements are present in student-centered instructional environments such as problem-based and project-based learning (P(j)BL). In addition to CT, higher-order thinking (HOT) and critical-analytic thinking (CAT) also involve elements that are present in and fostered by P(j)BL. However, HOT, CT, and CAT are often ill-defined, and their definitions overlap. The present systematic review, therefore, investigated how HOT, CT, and CAT were conceptualized in P(j)BL environments. A second aim was to review the evidence on the effectiveness of P(j)BL environments in fostering HOT, CT, or CAT. Results demonstrated an absence of CAT in P(j)BL research and a stronger focus on CT processes than on CT dispositions (i.e., the trait-like tendency or willingness to engage in CT). Further, although we found positive effects of P(j)BL on HOT and CT, researchers were often unclear and inconsistent in how they conceptualized and measured these forms of thinking. Also, essential components of P(j)BL were often overlooked. Finally, we identified various design issues in effect studies, such as the lack of control groups, that call the reported outcomes of those investigations into question.


Critical thinking (CT) is widely regarded as an important competence to learn, and its importance has only increased over time (Pellegrino & Hilton, 2012). Mastery of this ability is necessary not only for students but also for working professionals and informed citizens (Bezanilla et al., 2021). Therefore, fostering critical thinking is a central aim of education (e.g., Butler & Halpern, 2020). A meta-analysis demonstrated that the opportunity for dialogue, exposing students to authentic or situated problems and examples, and mentoring them in these habits of mind positively affected CT (Abrami et al., 2015). All of these pedagogical elements are, to some extent, present in student-centered instructional environments. Thus, it would seem that a good way to teach CT is by using active, student-centered instructional methods (Bezanilla et al., 2021; Lombardi et al., 2021). Problem-based learning (PBL) and project-based learning (PjBL) are prototypical examples of such methods. In addition to CT, higher-order thinking (HOT) and critical-analytic thinking (CAT) also involve elements that are present in and fostered by student-centered learning environments such as PBL and PjBL. However, CT, HOT, and CAT are often ill-defined, and their definitions overlap.

For the aforementioned reasons, the present study investigated CT, HOT, and CAT in student-centered learning environments. We focus on PBL and PjBL because these two formats are the most frequently studied in the research literature and hence account for the vast majority of research on student-centered instructional methods (Authors, 2022; Nagarajan & Overton, 2019). In addition, both PBL and PjBL are included as acknowledged instructional formats in the Cambridge Handbook of the Learning Sciences (Sawyer, 2014), and both have specific criteria that must be fulfilled for an environment to be labeled PBL or PjBL. Despite their unique characteristics and origins, PBL and PjBL share common ground because of their joint roots in constructivist learning theory (Authors, 2022; Loyens & Rikers, 2017). As pedagogies, they can be seen as manifestations of constructivist learning. Constructivism is a theory of how learning happens, which holds that learners construct knowledge out of experiences. It has roots in philosophy and stresses the student’s active role in his or her knowledge-acquisition process (Loyens et al., 2012).

What Is PBL?

There are many definitions of PBL in the research literature, each presenting different perspectives and ideas about this pedagogy (Dolmans et al., 2016; Zabit, 2010). Notwithstanding this variety, different PBL implementations share some characteristics. Based on the original method developed at McMaster University (Spaulding, 1969), Barrows (1996) described six core characteristics of PBL. First, learning is student centered. Second, learning occurs in small student groups under the guidance of a tutor. Third, the tutor acts as a facilitator or guide. Fourth, students encounter so-called “authentic” (i.e., relating to real life) problems in the learning sequence before any preparation or study has occurred. Fifth, these problems trigger the activation of students’ prior knowledge, which leads to the discovery of knowledge gaps. Finally, students overcome these knowledge gaps through self-directed learning, which requires sufficient time for self-study (Schmidt et al., 2009).

Beyond these core characteristics, different PBL environments also share a similar process. For example, Wijnia et al. (2019) highlighted that the PBL process consists of three separate phases: an initial discussion phase, a self-study phase, and a reporting phase. First, students are given a meaningful problem that describes an observable phenomenon or event. The instructional goal of the problem presented to the students can differ. For example, the problem could originate from professional practice or be related directly to distinctive events in a particular domain or field of study. An example of a problem related to a specific domain of study, taken from an introductory psychology course, reads as follows (Schmidt et al., 2007):

“Coming home from work, tired and in need of a hot bath, Anita, an account manager, discovers two spiders in her tub. She shrinks back, screams, and runs away. Her heart pounds, a cold sweat is coming over her. A neighbor saves her from her difficult situation by killing the little animals using a newspaper.” Explain what has happened here. (p. 92)

During the first stage, prior knowledge is important as students come up with theories to explain the problem based on their life experiences. Because their knowledge is often limited and insufficient, students formulate learning issues (formulated as questions) to guide their research and further self-study. All this takes place in a class discussion, usually in classes with fewer than 12 students. During the second stage, students consult learning resources to gain knowledge relevant to the problem and to address the learning issue questions. These resources can be selected by the students, the tutor, or a combination of both (Wijnia et al., 2019). In tandem with these steps, students have to plan and monitor study activities that need to be carried out before the next class meeting (Loyens et al., 2008). In the final stage, students reconvene under the guidance of their tutor to share and evaluate their findings critically and elaborate on their newly acquired knowledge. Students apply this knowledge to the problem to identify plausible solutions or explanations (Loyens & Rikers, 2017; Wijnia et al., 2019).

What Is PjBL?

In project-based or project-centered learning (PjBL), the learning process is organized around activities that drive students’ actions (Blumenfeld et al., 1991). Students learn central concepts and principles of a discipline through the projects. This “learning-by-doing” approach of PjBL could help motivate students to learn, as they play an active role in the process (Saad & Zainudin, 2022). Students have a significant degree of control over the project they will work on and what they will do in it. The projects are hence student-driven and, similar to PBL, are intended to generate learner agency. Specific end products need to result from the work, although the processes to get to the end product can vary. The end products (e.g., a website, presentation, or report) serve as the basis for discussion, feedback, and revision (Blumenfeld et al., 1991; Helle et al., 2006; Tal et al., 2006). Although different forms of PjBL exist, most start with a driving question or problem and typically incorporate the following features (Krajcik, 2015; Thomas, 2000):

1. Projects for which the students seek solutions or clarifications are relevant to their lives.
2. PjBL involves planning and performing investigations to answer questions.
3. Students collaborate with other students, teachers, and members of society.
4. PjBL is centered around producing artifacts.
5. Technology is used when appropriate.

In sum, students perform a series of collaborative inquiry activities that should help them acquire new, domain-specific knowledge and thinking processes to solve real-world problems. The PjBL end products must reflect learners’ knowledge of the project topic and their metacognitive knowledge (Grant & Branch, 2005). A project can be a problem to solve (e.g., How can we reduce the pollution in the schoolyard pond?), a phenomenon to investigate (e.g., Why do you stay on your skateboard?), a model to design (e.g., Create a scale model of an ideal high school), or a decision to make (e.g., Should the school board vote to build a new school?; Yetkiner et al., 2008).

Students work together, and projects last for considerable periods (Helle et al., 2006). The instructor’s role is to facilitate the project. That is, the instructor helps with framing and structuring the projects, monitors the development of the end product, and assesses what students have learned (Chiu, 2020; David, 2008; Helle et al., 2006).

What PBL and PjBL Foster

One aim of schools and colleges implementing student-centered approaches such as PBL and PjBL is to increase students’ competence in tackling complex problems common in an ever-changing world (Gijbels et al., 2005). To that end, several goals and desired outcomes have been put forward for PBL and PjBL. The primary goal is to educate students to a level at which they can comfortably retrieve and use information when needed and identify situations in which specific knowledge and strategic processes are applicable. With these strategies and knowledge, students can start developing plausible explanations of phenomena that represent important disciplinary understandings (Loyens et al., 2012; McNeill & Krajcik, 2011). Several studies have investigated the effectiveness of P(j)BL for knowledge acquisition (e.g., Chen & Yang, 2019; Strobel & Van Barneveld, 2009). In addition, both PBL and PjBL consist of collaborative learning sessions that could foster effective interpersonal communication. Such abilities can enable learners to contribute to discussions in clear and appropriate ways, reach conclusions and answers more easily, and identify inconsistencies and unresolved issues (Loyens et al., 2008, 2012).

Further, students could develop problem-solving strategies while working on problems or projects (Krajcik et al., 2008). Even when problems are highly complex and ill-structured, students can analyze them effectively and identify plausible responses (Loyens et al., 2012). Also, because the problems and projects are specific to the students’ domain of study, the knowledge and strategies they acquire are applicable to their future professional practice. Therefore, problems and projects are believed to be more engaging, motivating, and interesting for students (Hmelo-Silver, 2004; Larmer et al., 2015; Saad & Zainudin, 2022). Finally, as noted, student-centered instructional methods such as PBL and PjBL imply a different, less directive role for the teacher. Consequently, students take on more responsibility during the learning process. The success of PBL and PjBL also rests on the “preparedness of a student to engage in learning activities defined by him- or herself, rather than by a teacher” (Schmidt, 2000, p. 243), a process referred to as self-directed learning.

Even though the research literature on P(j)BL does not explicitly state that these instructional formats should foster HOT, CT, and CAT, their design and implementations do appear to require students’ engagement in these forms of thinking. Thus, we will seek to define these constructs within the context of student-centered learning environments.

HOT, CT, and CAT

Like PBL and PjBL, HOT, CT, and CAT have been defined in many ways in the literature. We acknowledge that we cannot comprehensively cover the different domains and traditions (i.e., philosophical, psychological, educational) in which definitions of HOT, CT, and CAT have been put forward. Rather, we will focus on definitions that help describe the role of HOT, CT, and CAT in student-centered learning environments.

What Is Higher-Order Thinking (HOT)?

First, HOT can be seen as an overarching concept defined as “skills that enhance the construction of deeper, conceptually-driven understanding” (Schraw & Robinson, 2011, p. 2). Framed in more traditional terms, HOT corresponds with Bloom’s taxonomy. In this taxonomy, remembering or recalling facts reflects lower-order thinking (i.e., concerned with the acquisition of knowledge or information), whereas comprehending, applying, analyzing, synthesizing, and evaluating reflect higher-order thinking, referring to more intellectual abilities and skills (Lombardi, 2022; Miri et al., 2007). The focus on thinking skills does not imply that knowledge is unimportant. In fact, knowledge is needed for, and related to, thinking processes, a point that comes to the fore in, for example, later revisions of Bloom’s taxonomy (Lombardi, 2022).

HOT has been put forward as having four components (Schraw et al., 2011): (a) reasoning (i.e., induction and deduction), (b) argumentation (i.e., generating and evaluating evidence and arguments), (c) metacognition (i.e., thinking about and regulating one’s thinking), and (d) problem-solving and critical thinking (CT). Problem-solving involves three steps carried out consecutively: (1) identifying and representing the problem at hand, (2) selecting and applying a suitable solution strategy, and (3) evaluating the process and solution (Chakravorty et al., 2008). CT refers to reflective thinking that leads to certain outcomes (i.e., decision-making) and actions (Ennis, 1987). In this view, CT is considered a subcomponent of HOT (Schraw et al., 2011). Indeed, measures of CT have also addressed HOT processes such as analysis and synthesis (Lombardi, 2022).

Yen and Halili (2015) also characterize HOT as an umbrella term for all manner of reflective thinking, including creative thinking, problem-solving, decision-making, and metacognitive processing. Several components of this definition also map onto the taxonomy of Schraw and colleagues (2011): reflection (component d), metacognition (component c), problem-solving (component d), and decision-making (CT, component d). The only exception is creative thinking. Schraw and colleagues (2011) do not include it as a subcomponent, although they acknowledge that it could be part of a broader taxonomy of HOT, together with, for example, moral reasoning.

What Is CT?

The literature on CT traces back to the Greek philosophers, who sought to explain the origin and meaning of such thinking. As Van Peppen (2020) points out, “the word critical derives from the Greek words ‘kritikos’ (i.e., to judge/discern) and ‘kriterion’ (i.e., standards),” and hence “CT implies making judgments based on standards” (p. 11). A second important forerunner of CT was John Dewey, who spoke of “reflective thinking” when referring to CT. Since then, many traditions and definitions of CT have been formulated. Ennis (1987), for example, defined CT as the thinking process focused on deciding what to believe or what to do. He further expanded on the idea of Glaser (1941), who acknowledged the role of dispositions in CT. Ennis (1962) distinguished two CT components, dispositions and abilities. The former are more trait-like tendencies (e.g., inquisitiveness, open-mindedness, sensitivity to other points of view, cognitive flexibility), whereas the latter refer to actual cognitive activities (e.g., focusing, analyzing arguments, asking questions, evaluating evidence, comparing potential outcomes; Schraw et al., 2011).

Scholars of the American Philosophical Association, working as what is referred to as the Delphi Panel, sought to reach a consensus on the definition of CT (Facione, 1990b). The processes associated with CT in the panel’s report were interpretation (i.e., understanding and articulating meaning), analysis (i.e., identifying relationships between information, including argument analysis), evaluation (i.e., making judgments and assessments about the credibility of information, including assessing arguments), inference (i.e., identifying the necessary information for decision-making, including coming up with hypotheses), explanation (i.e., articulating and presenting one’s position, arguments, and analysis used to determine that position), and self-regulation (i.e., self-analyzing and examining one’s inferences and correcting them when necessary; Facione, 1990b). The latter component (i.e., self-regulation) has a strong metacognitive character (Zimmerman & Moylan, 2009).

Finally, another conceptualization of CT, which resulted in a widely used instrument for measuring CT, comes from Halpern. Halpern (2014) focuses mainly on abilities/cognitive activities and less on dispositions in her definition of CT. Specifically, she states that CT entails “cognitive skills or strategies that increase the probability of a desirable outcome” (p. 8). Halpern’s taxonomy consists of five main elements: verbal reasoning, argument analysis, hypothesis testing, likelihood and uncertainty, and decision-making/problem-solving.

What Is CAT?

CAT refers to the processes we use “when we question or at least do not simply passively accept the accuracy of claims as givens” (Byrnes & Dunbar, 2014, p. 479). Its distinguishing feature compared to CT is its focus on justification, that is, on determining whether appropriate and credible evidence supports a claim or proposed response (Murphy et al., 2014). Although some frameworks of HOT and CT include analysis and evaluation components, the CAT research literature puts the process of “weighing the evidence” at the forefront. Alexander (2014) describes CAT as four consecutive steps. The process starts with a claim or task, for which one then collects data or evidence. Individuals then evaluate or judge these data or evidence and, as the last step, integrate them with their knowledge and beliefs. Dispositions are not considered in the CAT literature, although individual differences, such as prior knowledge and goals, can act as moderators.

In sum, unequivocal definitions of HOT, CT, and CAT are hard to find, although definitions do share important attributes. As Byrnes and Dunbar (2014) point out, “operational definitions follow from theoretical definitions” (p. 482). Indeed, the definitions introduced in this overview have led to several operationalizations and measurements of HOT, CT, and CAT. Those operationalizations are also important in the discussion on how HOT, CT, and CAT are framed within student-centered learning and whether P(j)BL might foster such valued forms of thinking.

The Link Between HOT, CT, and CAT and P(j)BL

To establish the link between HOT, CT, and CAT and P(j)BL learning environments, we carefully examined two lines of research literature: literature on how to effectively teach HOT, CT, and CAT, and literature on the learning processes involved in P(j)BL (i.e., a synthesis between cognitive and instructional science). Most of the research on teaching reflective forms of thinking has addressed CT as a form of HOT (e.g., Abrami et al., 2015; Miri et al., 2007; Schraw et al., 2011). For example, Miri and colleagues (2007) defined three teaching strategies that should encourage students to engage collaboratively in CT-aligned processes (e.g., asking appropriate questions and seeking plausible solutions). Those teaching strategies are (a) dealing with real cases in class, (b) encouraging class discussions, and (c) fostering inquiry-oriented experiments.

The link with P(j)BL is evident, as both formats center on dealing with real problems and cases in collaborative class discussions. In addition, PjBL sometimes requires the execution of experiments. Knowledge sharing and collaboration have a place in both instructional formats: in PBL during the reporting phase, and in PjBL because students collaborate with other students, teachers, and members of society. In their meta-analysis, Abrami and colleagues (2015) reviewed possible instructional strategies that could foster CT. They concluded that two types of interventions helped develop CT processes: discussion and “authentic or situated problems and examples … particularly when applied problem solving … is used” (Abrami et al., 2015, p. 302). Again, there is a clear link, because authentic problems and class discussions are central components of PBL and PjBL. Also, Torff (2011) labeled several activities that are core to P(j)BL as “high-CT activities” (p. 363): Socratic discussion, debate, problem-solving, problem finding, brainstorming, decision-making, and analysis.

With respect to CAT, Byrnes and Dunbar (2014) put forward some instructional approaches that should prove facilitative. In their view, “students should pose unanswered questions that require the collection of data or evidence” (p. 488). Subsequently, they need to “engage in appropriate methodologies to gather this evidence.” Finally, students need to “have opportunities to be surprised by unanticipated findings and discuss or debate how the anticipated, unanticipated, and missing evidence should be interpreted” (p. 488). These authors also stress the importance of working in teams, engaging in discussions, identifying sources of uncertainty and problems of interpretation, and presenting findings and conclusions for peer review. Regarding CAT, too, parallels can be drawn with PBL and PjBL. In both formats, a problem or question is the starting point, and students engage in several learning activities to develop an understanding and potentially a solution.

The second line of research that is useful for establishing the link between HOT, CT, and CAT and P(j)BL deals with learning processes. For example, Krajcik et al. (2008) argued that processes common to project-based approaches involve learners in “scientific practices such as argumentation, explanation, scientific modeling, and engineering design” (p. 3). Furthermore, Krajcik and colleagues mention that students learning in these environments use problem-solving, design, decision-making, argumentation, weighing of different pieces of evidence, explanation, investigation, and modeling. Some scholars also mention the development of metacognitive knowledge as an outcome of PjBL (Grant & Branch, 2005).

A similar case can be made for PBL. W. Hung and colleagues (2008) indicated that students who experience PBL possess better hypothesis-testing abilities due to their more coherent explanations of hypotheses and hypothesis-driven reasoning. Further, the PBL process relies heavily on group discussions of real-life problems, discovering knowledge gaps, gathering information/evidence to answer the learning issues/questions, analyzing the evidence, resolving ambiguities, and deciding on the outcome.

The Present Study

The present study aimed to situate HOT, CT, and CAT in PBL and PjBL environments. As we have set out to establish, fostering HOT, CT, and CAT may not be an explicit goal of these student-centered approaches, but there are theoretical and empirical reasons to expect an association. First, the research literature on how to effectively teach HOT, CT, and CAT has mentioned instructional formats that use discussion and problem-solving. Second, the research literature on the learning processes that take place in student-centered learning environments like P(j)BL mentions HOT, CT, and CAT processes such as decision-making, argumentation, weighing different pieces of evidence, explanation, and investigation (Abrami et al., 2015; Krajcik et al., 2008). Therefore, the first research question we posed was: How are HOT, CT, and CAT conceptualized in student-centered learning environments? In addition, parallels exist between the processes involved in HOT, CT, and CAT on the one hand and the learning activities/processes in P(j)BL on the other. Moreover, effective instructional activities to foster HOT, CT, and CAT (e.g., Abrami et al., 2015) are core activities in P(j)BL. Therefore, the second aim of this study was to review the evidence on the effectiveness of student-centered environments in fostering HOT, CT, or CAT. To that end, we carried out a systematic review of studies investigating HOT, CT, and CAT in the context of PBL and PjBL.

Method

Search Strategy

For this review, we systematically searched six online databases: Web of Science (Core Collection) and five EBSCO databases (ERIC, Medline, PsycInfo, Psychology and Behavioral Sciences, and Teacher Reference Center). We included Medline because problem-based learning originated in medical education (Spaulding, 1969; see also Servant-Miklos, 2019) and is often researched in that context (W. Hung et al., 2019; Koh et al., 2008; Smits et al., 2002; Strobel & Van Barneveld, 2009). We used the following Boolean string of search terms (Oliver, 2012): “project based learning” OR “project based instruction” OR “project based approach” OR “PjBL” OR “problem based learning” OR “problem based approach” OR “problem based instruction” OR “PBL” AND “higher order thinking” OR “critical thinking” OR “critical analytic* thinking.” Because of its medical connotations (i.e., it is also an abbreviation for “peripheral blood lymphocytes”; e.g., Caldwell et al., 1998), “PBL” was not used for our Web of Science search. The search terms match our research questions in that they cover both PBL and PjBL, as well as HOT, CT, and CAT, thus leading us to studies that included those variables. In addition, we limited our search to peer-reviewed records written in English.
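Read literally, the Boolean string above leaves operator precedence implicit. The Python sketch below assumes the intended grouping is (any learning-environment term) AND (any thinking term). It shows how the same string could be assembled with explicit parentheses, including the Web of Science variant without the bare “PBL” term. The names `build_query` and `include_pbl_abbreviation` are hypothetical, and database-specific syntax such as field tags is not modeled.

```python
# Hypothetical sketch: assemble the review's Boolean search string with
# explicit parentheses. The grouping (environment terms) AND (thinking terms)
# is an assumption about the intended logic; database-specific syntax
# (field tags, truncation rules) is not modeled.

ENVIRONMENT_TERMS = [
    "project based learning",
    "project based instruction",
    "project based approach",
    "PjBL",
    "problem based learning",
    "problem based approach",
    "problem based instruction",
    "PBL",
]

THINKING_TERMS = [
    "higher order thinking",
    "critical thinking",
    "critical analytic* thinking",  # * is the truncation wildcard from the original string
]


def or_group(terms):
    """Quote each term, join the terms with OR, and wrap the group in parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(include_pbl_abbreviation=True):
    """Return the combined query. Drop the bare "PBL" term when requested
    (the review omitted it for Web of Science because of its medical meaning)."""
    env = [t for t in ENVIRONMENT_TERMS
           if include_pbl_abbreviation or t != "PBL"]
    return or_group(env) + " AND " + or_group(THINKING_TERMS)


if __name__ == "__main__":
    print("EBSCO:", build_query())
    print("Web of Science:", build_query(include_pbl_abbreviation=False))
```

Writing the grouping out explicitly avoids relying on how a given database resolves mixed AND/OR operators, which can differ between platforms.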

Selection Process

Inclusion Criteria

In addition to the search parameters established by our search terms, we used the following inclusion criteria to determine our final sample. Studies had to (a) use a quantitative measure of HOT, CT, or CAT, (b) take place in a PBL or PjBL environment, (c) be empirical studies conducted in a classroom context, (d) investigate K-12 or higher education students, and (e) be published as peer-reviewed journal articles. Regarding criterion (a), studies had to include a dedicated measure of HOT, CT, or CAT that gives insight into the authors’ conceptualization of these constructs. The measure could be a standardized instrument or a researcher-designed one.

Also, in line with our research aims, we only included studies that focused on PBL or PjBL and met the basic criteria for these student-centered approaches. To judge whether a learning environment met these criteria, we assessed its definition or description in the theoretical framework and its implementation against the defining characteristics established in the literature (Barrows, 1996; Hmelo-Silver, 2004; Schmidt et al., 2009). For PBL, those criteria included (a) student-centered, active learning, (b) the guiding role of teachers, (c) collaborative learning in small groups, (d) the use of realistic problems as the start of the learning process, and (e) ample time for (self-directed) self-study. Furthermore, PBL had to contain the three process phases (i.e., initial discussion, self-study, and reporting phases). For PjBL, those criteria included (a) the project starts with a driving question or problem, (b) the project is relevant and authentic, (c) collaborative, inquiry learning activities take place, (d) there is room for student autonomy alongside the guiding role of teachers, (e) the project is central to the curriculum, and (f) a tangible product is created (Authors, 2022). We only included studies in a classroom context in K-12 and higher education because we wanted to focus on the effectiveness of these learning environments in formal educational settings. We focused on peer-reviewed articles to better ensure the quality of the included studies.

Exclusion Criteria

We excluded self-report items (often from course evaluations) such as “I have improved my ability to judge the value of new information or evidence presented to me” or “I have learned more about how to justify why certain procedures are undertaken in my subject area” (Castle, 2006). We also excluded the critical thinking scale from the Motivated Strategies for Learning Questionnaire (MSLQ; Duncan & McKeachie, 2005), in which critical thinking is viewed as a learning strategy. In light of strong criticism of relying on the MSLQ to gauge these forms of reflective thinking (see Dinsmore & Fryer, 2022, this issue), we excluded studies that used this scale (e.g., Sungur & Tekkaya, 2006).

We also excluded studies that did not meet the defining criteria for PBL or PjBL. One example is a study labeled as PBL in which the learning process started with a lecture instead of a problem. Furthermore, we excluded studies that did not adequately describe the process the researchers labeled as PBL or PjBL (e.g., Razali et al., 2017). We also excluded studies that combined P(j)BL with additional activities (e.g., concept maps with PBL; Si et al., 2019) or interventions (e.g., a CT or motivation intervention combined with PBL; Olivares et al., 2013).

Moreover, because of our focus on formal education, we excluded several kinds of studies: studies that took place in a laboratory setting; intervention studies in settings such as summer camps, tutoring, or afterschool programs; and studies with employee samples (e.g., health nurses; T.-M. Hung et al., 2015). We further excluded theoretical, conceptual, or “best practices” articles. Finally, we excluded peer-reviewed conference papers and abstracts (“wrong format”), as they were often hard to retrieve or provided too little information to code the outcome measures and learning environment.

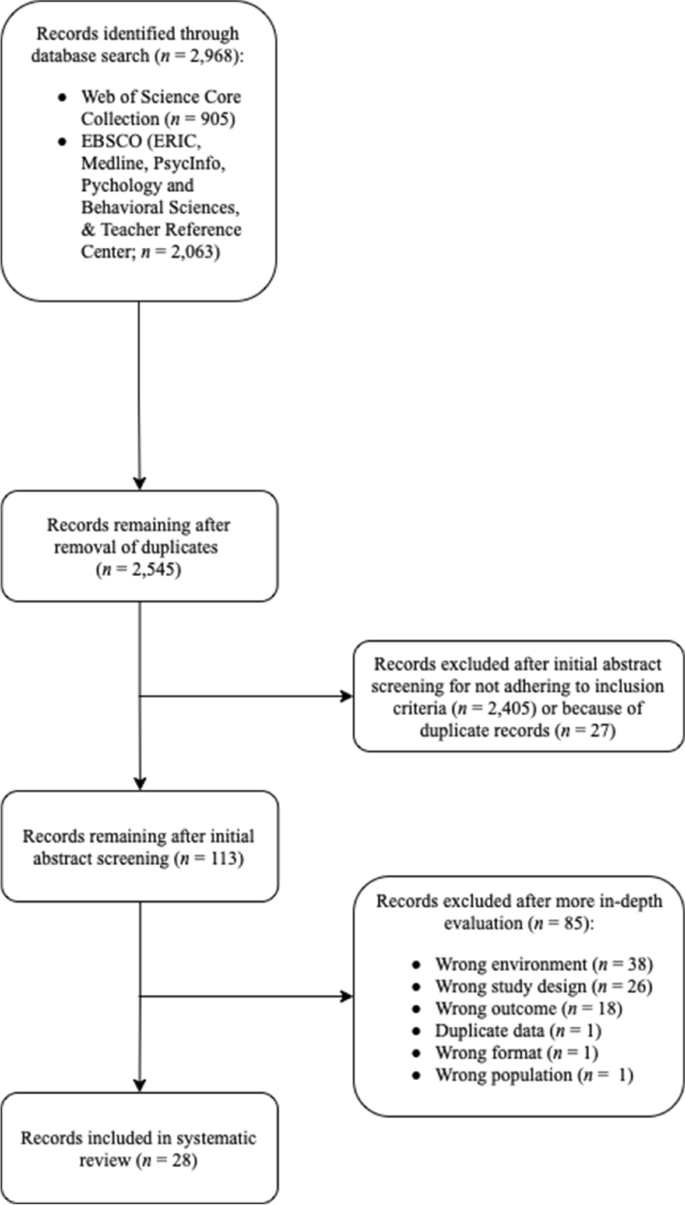

Coding and Final Sample

Figure 1 outlines our entire search process, in line with PRISMA (Preferred Reporting Items for Systematic Review and Meta-Analysis) guidelines (Moher et al., 2015). As can be seen there, our initial searches in the Web of Science and EBSCO databases provided us with 2,968 results, which we uploaded to the Rayyan platform (Ouzzani et al., 2016). After removing duplicates identified by the Rayyan platform, 2,545 papers remained, of which we screened the titles, abstracts, and, if needed, the full texts. We identified an additional 27 duplicates and excluded 2,405 papers because the studies did not meet our inclusion criteria.

We selected 113 studies for further inspection and coding. The specific codes were in line with our inclusion and exclusion criteria and were designed to streamline the final elimination round (i.e., excluding articles after in-depth reading). Table 1 provides a detailed overview of the codes used.

Of these 113 studies, 84 were excluded (see Fig. 1 for exclusion reasons). We excluded one further paper because it contained duplicate data (Yuan et al., 2008b) reported in another paper (Yuan et al., 2008a). We retained the Yuan et al. (2008a) paper rather than the Yuan et al. (2008b) paper because the former also reported the results of the control group (i.e., lecture-based learning), whereas the latter included only the data of the PBL group. Overall, the search process resulted in a final sample of 28 studies.
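As a quick consistency check, the screening counts reported in this section can be reproduced with simple arithmetic. The sketch assumes that the duplicate-data paper (Yuan et al., 2008b) was excluded in addition to the 84 studies excluded after in-depth reading. Under that reading, the reported numbers reconcile to the final sample. The variable names are illustrative only.

```python
# Illustrative check of the screening counts reported in the Method section.
# Assumption: the one duplicate-data paper (Yuan et al., 2008b) is excluded
# in addition to the 84 studies excluded after in-depth reading.

initial_records = 2968          # Web of Science + EBSCO results
after_rayyan_dedup = 2545       # remaining after Rayyan duplicate removal
extra_duplicates = 27           # additional duplicates found during screening
excluded_screening = 2405       # did not meet inclusion criteria
excluded_full_text = 84         # excluded after in-depth reading
excluded_duplicate_data = 1     # Yuan et al. (2008b)

# Derived value: not reported directly in the text.
rayyan_duplicates = initial_records - after_rayyan_dedup
selected_for_coding = after_rayyan_dedup - extra_duplicates - excluded_screening
final_sample = selected_for_coding - excluded_full_text - excluded_duplicate_data

print(f"Duplicates removed by Rayyan: {rayyan_duplicates}")
print(f"Selected for coding: {selected_for_coding}")
print(f"Final sample: {final_sample}")
```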

Results and Discussion

Descriptives of the Final Sample

Before answering our research questions, we describe the characteristics of the 28 included studies (see Table 2). Of these, 22 were conducted in higher education and 6 in K-12. Twelve studies took place within the Health Sciences domain (e.g., nursing education, medical education), 8 within the Science, Technology, Engineering, Art, and Math (STEAM) domain, and 8 in other domains (e.g., financial management or psychology). The studies covered 12 different countries, most often the USA (n = 7), Turkey (n = 4), Indonesia (n = 4), and China (n = 3). Two studies investigated the effects of PjBL; all other studies examined a PBL setting.

Research Question 1: Conceptualization of HOT, CT, and CAT

The first research question of this review was “How are HOT, CT, and CAT conceptualized in student-centered learning environments (i.e., PBL and PjBL)?” Importantly, none of the identified studies investigated the effect of P(j)BL on CAT, and only two investigated HOT: one examined the effect of PBL on HOT, and the other the effect of PjBL on HOT and CT. All other studies investigated the effect of P(j)BL on CT. Hence, to answer this research question, we mainly focus on how CT was conceptualized and, to a lesser extent, HOT, discussing how each was defined and measured.

Conceptualization of CT

CT conceptualizations in P(j)BL consisted of CT dispositions and CT processes. The included studies referred to these processes as “skills” or “abilities” (e.g., W.-C. W. Yu et al., 2015); in this review, we use the term “processes” instead. A CT disposition is “the constant internal motivation to engage problems and make decisions by using CT” (Facione et al., 2000, p. 65). Facione et al. (2000) use the word disposition to refer to individuals' characterological attributes, such as being open-minded, analytical, or truth-seeking. In the included studies, dispositions were described as the “will” or “inclination” to evaluate situations critically (e.g., Temel, 2014; W.-C. W. Yu et al., 2015) and as a necessary pre-condition for CT processes (Temel, 2014).

Although the correlation between CT dispositions and processes is not particularly high (e.g., Facione et al., 2000), both seem necessary for “reasonable reflective thinking focused on deciding what to believe or do” (Ennis, 2011, p. 10). The studies included in this review defined CT in terms of both dispositions and processes seven times, solely in terms of processes 13 times, and solely in terms of dispositions four times. Three studies did not define the concept or described it only in general terms, such as “a way to find meaning in the world in which we live” (Burris & Garton, 2007, p. 106). Regarding measurement, 7 studies measured CT dispositions, 18 measured CT processes, and one measured both. One study reported having measured CT dispositions, but its results section only reported statistics for CT processes (Hassanpour Dekhordi & Heydarnejad, 2008). Sixteen studies were congruent in how they defined and measured CT; for example, authors who defined CT in terms of dispositions also measured dispositions. The remaining 11 studies were incongruent in this respect (e.g., mentioning processes but measuring dispositions, or mentioning both but measuring only one component).

In sum, although CT consists of dispositions and processes, most P(j)BL studies conceptualized CT in terms of processes only and, to a lesser extent, in terms of dispositions or both. Moreover, 11 studies were incongruent in the focus (dispositions and/or processes) of their description of CT and that of their measurement instrument.

CT Dispositions

The studies included in this review used many different terms to describe CT disposition(s). All nine studies that reported measuring CT dispositions used the California Critical Thinking Disposition Inventory (CCTDI; Facione, 1990a), which assesses students’ willingness or inclination to engage in critical thinking. The CCTDI contains seven disposition subscales (Facione, 1990a; Yeh, 2002). Truth-Seeking refers to being objective, honest, and seeking the truth even when findings do not support one’s opinions or interests. Open-Mindedness refers to tolerance, openness toward conflicting views, and sensitivity to the possibility of one’s own bias. Analyticity concerns a disposition to anticipate possible consequences, results, and problematic situations. Systematicity measures an organized, orderly, and focused approach to problem-solving. CT Self-Confidence measures trust in one’s own reasoning process. Inquisitiveness concerns intellectual curiosity, whereas Cognitive Maturity refers to the expectation of making timely, well-considered judgments. A qualitative analysis of the descriptions showed that most of the terms used corresponded with CCTDI subscales (Facione, 1990a; Yeh, 2002; see Appendix Table 5). Other terms were harder to classify according to the CCTDI dispositions but pointed toward a willingness to engage in critical thinking or toward the role of self-regulation and metacognition.

CT Processes

The included studies used even more terms to describe CT processes than to describe dispositions. Many of these terms appear as specific components of the three most used instruments (see Appendix Table 6). The most commonly used instruments were the California Critical Thinking Skills Test (CCTST; 4 studies) and the Watson–Glaser Critical Thinking Appraisal (WGCTA; 2 studies). One study used the Cornell Critical Thinking Test (CCTT), and three other studies used the theoretical framework underlying this test to measure CT processes. The CCTST, WGCTA, and CCTT are well-known commercial standardized measures of critical thinking.

The CCTST is a companion test of the CCTDI and measures five critical thinking processes: Analysis, Evaluation, Inference, Deductive Reasoning, and Inductive Reasoning (Facione, 1991). Analysis refers to accurately identifying problems and processes such as categorization, decoding significance, and clarifying meaning. Evaluation concerns the ability to assess statements’ credibility and arguments’ strength. The Inference subscale measures the ability to draw logical and justifiable conclusions from evidence and reasons. Deductive Reasoning relies on strict rules and logic, such as determining the consequences of a given set of rules, conditions, principles, or procedures (e.g., syllogisms, mathematical induction). Finally, Inductive Reasoning refers to reasoned judgment in uncertain, risky, or ambiguous contexts.

Another well-validated test used in the included studies is the WGCTA (Watson & Glaser, 1980), which presents problems and situations requiring CT abilities. It measures CT as a composite of attitudes of inquiry (i.e., recognizing the existence of problems and accepting the need for evidence), knowledge (i.e., about valid inferences, abstractions, and generalizations), and skills in applying these attitudes and knowledge (Watson & Glaser, 1980, 1994, 2009).

The WGCTA consists of five subscales: Inferences, Recognition of Assumptions, Deduction, Interpretation, and Evaluation of Arguments. The Inferences subscale measures the extent to which participants can determine the truthfulness of inferences drawn from given data. Recognition of Assumptions concerns recognizing implicit presuppositions or assumptions in statements or assertions. The Deduction subscale measures the ability to determine whether conclusions necessarily follow from the given information. Interpretation concerns weighing evidence and deciding whether generalizations based on the given data are justifiable. Finally, the Evaluation of Arguments subscale measures the ability to distinguish strong and relevant arguments from weak and irrelevant ones. The long version of the scale consists of 80 items (parallel Forms A and B; Watson & Glaser, 1980), and the short version (Form S) contains 40 items (Watson & Glaser, 1994). Newer versions of the test are available (Watson & Glaser, 2009), but the included studies relied on the older, abbreviated version (Burris & Garton, 2007; Şendağ & Odabaşı, 2009).

The CCTT level Z, designed by Ennis, measures Deduction, Semantics, Credibility, Induction, Definition and Assumption Identification, and Assumption Identification (Bataineh & Zghoul, 2006; Ennis, 1993). The CCTT is a commercial measure of critical thinking with two versions: level X is intended for students in Grades 4–14, whereas level Z is intended for advanced and gifted high school students, college students, graduate students, and other adults. Possibly due to copyright restrictions, many articles gave only very brief descriptions of the test subscales, and we found some inconsistencies in how the subscales were described in the literature. We could not retrieve the original CCTT manuals from 1985 or the revised version from 2005. Leach et al. (2020) gave a detailed description of the CCTT level X subscales and offered a possible explanation for the inconsistencies found in the literature.

According to Leach et al. (2020), the level X test measures five latent dimensions: Induction, Deduction, Observation, Credibility, and Assumption. However, the test manual reduces these five dimensions to four parts: two dimensions (i.e., Observation and Credibility) are combined, and some items of one dimension are counted as part of another (Leach et al., 2020). Bataineh and Zghoul (2006) described the subscales of the CCTT level Z in more detail. According to them, the CCTT level Z measures six dimensions: Deduction, Semantics, Credibility, Induction, Definition and Assumption Identification, and Assumption Identification. The Deduction subscale measures the extent to which a person can detect valid reasoning. The Semantics subscale measures the ability to assess verbal and linguistic aspects of arguments. Credibility concerns the extent to which a participant can estimate the truthfulness of a statement. Induction refers to the ability to judge conclusions and identify the best possible predictions. Definition and Assumption Identification measures the extent to which a person can identify the best definition of a given situation. Finally, Assumption Identification asks participants to choose the most probable unstated assumption in a text.

In summary, as this descriptive analysis shows (see Appendix Tables 5 and 6), many terms are used to characterize CT dispositions and processes. The conceptualization of CT differs per measurement instrument, as evidenced by the three most commonly used instruments (i.e., CCTDI, CCTST, WGCTA) and by the instruments based on Ennis’s conceptualization of CT (e.g., CCTT). We also observed a tendency to create new instruments, often based on or inspired by existing ones, that introduce new terms to describe CT.

HOT Processes

In some studies measuring CT, the authors mentioned that CT was a component of HOT (Cortázar et al., 2021; Dakabesi & Louise, 2019; Sasson et al., 2018). Only two studies measured HOT (Sasson et al., 2018; Sugeng & Suryani, 2020). In both, the authors defined HOT solely by reference to the more complex thinking levels of Bloom et al.’s (1956; Krathwohl, 2002) taxonomy for the cognitive domain: application, analysis, synthesis, and evaluation. Sasson et al. (2018) also included comprehension as one of the higher cognitive processes, whereas Sugeng and Suryani (2020) treated comprehension as a form of lower-order thinking. Both studies used Bloom’s taxonomy as a framework for coding student work. Consequently, the two studies largely used the same terms to conceptualize HOT, and both characterized and measured HOT processes congruently, based on Bloom’s taxonomy.

Research Question 2: Can PBL and PjBL Foster HOT, CT, and CAT?

To answer Research Question 2, we summarized the main findings regarding the effects of PBL and PjBL on CT and HOT (see Tables 3 and 4). When possible, we calculated the standardized mean difference (Cohen’s d) using the Comprehensive Meta-Analysis statistical software (version 3; Borenstein et al., 2009). We first discuss the effects on CT and then the two studies that examined HOT.

Effects of P(j)BL on Critical Thinking

Description of Studies

Table 3 reports the main findings of the 27 studies that investigated the effects of P(j)BL on CT. Most studies investigated the effects of PBL; only two examined the effects of PjBL (Cortázar et al., 2021; Sasson et al., 2018). Most studies (n = 16) compared P(j)BL with a control group using pre- and posttest measures of CT. Five studies compared PBL with a control group but only reported posttest scores (see Table 3). Five other studies had no control group; for these, we used the pre-post data of the single P(j)BL group. One study used another design (comparing year groups; Pardamean, 2012).

The most commonly reported control condition was (traditional) lecture-based learning (e.g., Carriger, 2016; Choi et al., 2014; Gholami et al., 2016; Lyons, 2008; Rehmat & Hartley, 2020; Tiwari et al., 2006; W.-C. W. Yu et al., 2015; Yuan et al., 2008a), traditional or conventional learning (e.g., Dilek Eren & Akinoglu, 2013; Fitriani et al., 2020; Saputro et al., 2020; Sasson et al., 2018; Temel, 2014), or instructor-led instruction (Şendağ & Odabaşı, 2009). In Burris and Garton (2007), the control group was a supervised study group, which, according to the authors, corresponded with Missouri’s recommended curriculum for (secondary) agriculture classes. The control group in Siew and Mapeala (2016) focused on conventional problem-solving.

Some studies included additional experimental groups (Carriger, 2016; Cortázar et al., 2021; da Costa Carbogim et al., 2018; Fitriani et al., 2020; Siew & Mapeala, 2016; W.-C. W. Yu et al., 2015). In these groups, PBL was combined with another intervention (Cortázar et al., 2021; da Costa Carbogim et al., 2018; Fitriani et al., 2020; Siew & Mapeala, 2016; W.-C. W. Yu et al., 2015) or with lectures (Carriger, 2016). For example, these interventions included a critical thinking intervention (da Costa Carbogim et al., 2018) or a socially shared regulation intervention (Cortázar et al., 2021). To describe the main findings and calculate effect sizes, we only report the data for the “regular” PBL groups and the control groups (if reported) of these studies. Overall, results showed positive effects of P(j)BL on critical thinking. Based on the statistical tests the authors performed, 19 studies reported positive effects on CT, meaning that students’ CT disposition or skills scores increased from pretest to posttest or were higher than those of the control group. Seven studies reported non-significant findings, and only one study reported a negative effect.

Meta-Analysis

In the meta-analysis section of this review, we only included studies with a pre-post and/or independent groups design. Both designs give insight into whether PBL and PjBL affect students’ CT: independent groups designs test whether the instructional method is more effective than “traditional” education, whereas pre-post designs test for differences before and after the implementation.

We were able to calculate effect sizes for 23 studies. For the studies with independent groups pre-post designs, we used the pretest and posttest means and standard deviations (SDs) and sample size per group to compute effect sizes. Posttest SD was used to standardize the effect size. Because most studies did not report the correlation between the pretest and posttest scores, we assumed a conservative correlation of 0.70 if the correlation was not reported. Studies suggest strong test–retest correlations for standardized critical thinking measures (Gholami et al., 2016; Macpherson & Owen, 2010). For example, Macpherson and Owen (2010) reported a strong positive correlation (r = 0.71) between two test moments among medical students for WGCTA. We used both groups’ means, SDs, and sample sizes to calculate the effect sizes for the studies with independent groups posttest-only designs. We used the mean difference, t, and sample size for the single group pre-post studies to calculate the effect size (da Costa Carbogim et al., 2018; Iwaoka et al., 2010) or the pretest and posttest means and SDs, sample size, and pre-post correlation. Again, we assumed a correlation of 0.70 if it was not reported.
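The effect size computations described above can be illustrated with standard textbook formulas for standardized mean differences. The Python sketch below is a minimal illustration under stated assumptions, not the Comprehensive Meta-Analysis implementation used in the review. The function names are hypothetical, no small-sample (Hedges' g) correction is applied, the variance of a standardized mean change assumes that pretest and posttest SDs are similar (so the posttest SD approximates the within-group SD), and the independent groups pre-post effect is computed as the difference between the two groups' standardized changes, which is one common approach but an assumption here. The default pre-post correlation of 0.70 mirrors the value the review assumed when none was reported.

```python
import math

# Assumed pre-post correlation when a study does not report one (the review used 0.70).
R_PREPOST = 0.70


def d_independent(m1, sd1, n1, m2, sd2, n2):
    """Cohen's d and its variance for an independent groups posttest-only design."""
    # Pooled within-group SD across the two groups.
    s_within = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))
    d = (m1 - m2) / s_within
    var = (n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2))
    return d, var


def d_single_prepost(m_pre, m_post, sd_post, n, r=R_PREPOST):
    """Standardized mean change for one group, standardized by the posttest SD.

    The variance formula assumes pretest and posttest SDs are similar, so the
    posttest SD approximates the within-group SD.
    """
    d = (m_post - m_pre) / sd_post
    var = (1 / n + d**2 / (2 * n)) * 2 * (1 - r)
    return d, var


def d_from_paired_t(t, n, r=R_PREPOST):
    """Standardized mean change recovered from a paired t statistic and sample size."""
    d = t * math.sqrt(2 * (1 - r) / n)
    var = (1 / n + d**2 / (2 * n)) * 2 * (1 - r)
    return d, var


def d_independent_prepost(treat, ctrl, r=R_PREPOST):
    """Difference between the standardized changes of a treatment and a control group.

    `treat` and `ctrl` are (m_pre, m_post, sd_post, n) tuples. Because the groups
    are independent, their variances add.
    """
    d_t, v_t = d_single_prepost(*treat, r=r)
    d_c, v_c = d_single_prepost(*ctrl, r=r)
    return d_t - d_c, v_t + v_c
```

For example, `d_from_paired_t(3.0, 30)` gives d of about 0.42. Lowering the assumed correlation `r` increases both the recovered d and its variance, which is why the choice of r matters when a study does not report it.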

When we included all 23 studies in one analysis (random-effects model), this resulted in a medium effect size of 0.644 (SE = 0.10, 95% CI [0.45, 0.83]). These results suggest that P(j)BL could positively affect students’ CT dispositions and processes. However, the effect was heterogeneous, Q(22) = 237.46, p < 0.001, I² = 90.74, T² = 0.17 (SE = 0.11), which implies that the variability in effect sizes has sources other than sampling error. Because only one of the studies in this analysis investigated PjBL, we repeated the analysis for the PBL studies only, which yielded a similar effect size of 0.635 (SE = 0.10, 95% CI [0.44, 0.83]). The analysis included three types of research designs. Overall, studies that compared P(j)BL with a control group reported larger effect sizes (independent groups: n = 4, d = 0.707, SE = 0.22; independent groups pre-posttest: n = 14, d = 0.831, SE = 0.13) than studies with a single-group pre-post design (n = 5, d = 0.213, SE = 0.18).
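The random-effects pooling and heterogeneity statistics reported above can be illustrated with a short Python sketch. It assumes the DerSimonian-Laird (method-of-moments) estimator of the between-study variance (T²) and a normal-approximation 95% CI; the review does not state which estimator its software settings used, so this is an illustration rather than a reproduction of its analysis. The function name `random_effects` is hypothetical.

```python
import math


def random_effects(effects, variances):
    """DerSimonian-Laird random-effects pooling with Q, I² and T² (tau²)."""
    k = len(effects)
    w = [1 / v for v in variances]  # fixed-effect (inverse-variance) weights
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    # Cochran's Q: weighted squared deviations from the fixed-effect mean.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = k - 1
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c)  # between-study variance, truncated at zero
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0  # share of non-sampling variance (%)
    # Random-effects weights add tau² to each study's sampling variance.
    w_star = [1 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1 / sum(w_star))
    return {
        "d": pooled,
        "se": se,
        "ci": (pooled - 1.96 * se, pooled + 1.96 * se),
        "Q": q,
        "df": df,
        "I2": i2,
        "tau2": tau2,
    }
```

As a consistency check on the reported statistics, I² = (Q - df)/Q = (237.46 - 22)/237.46, which is about 0.907 (roughly 90.7%), in line with the reported I² = 90.74.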

As Table 3 reveals, some studies had extreme effect sizes (e.g., Saputro et al., 2020). We therefore conducted leave-one-out analyses, in which the effect sizes ranged from 0.533 to 0.686 (SE ≈ 0.10), with 95% CIs ranging from [0.37, 0.70] to [0.48, 0.89]. Thus, the conclusion that P(j)BL has a positive effect on critical thinking would not change if we left out the study by Saputro et al. (2020).
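A leave-one-out analysis re-estimates the pooled effect k times, each time omitting one study, so that the influence of any single study (such as one with an extreme effect size) becomes visible. The Python sketch below assumes DerSimonian-Laird random-effects pooling with a normal-approximation 95% CI; the helper names `dl_pool` and `leave_one_out` are hypothetical and not taken from the software used in the review.

```python
import math


def dl_pool(effects, variances):
    """DerSimonian-Laird random-effects estimate and its standard error."""
    w = [1 / v for v in variances]
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (len(effects) - 1)) / c)
    w_star = [1 / (v + tau2) for v in variances]
    est = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    return est, math.sqrt(1 / sum(w_star))


def leave_one_out(effects, variances):
    """Re-pool the studies k times, omitting study i on the i-th pass.

    Returns (omitted_index, estimate, ci_lower, ci_upper) tuples.
    """
    results = []
    for i in range(len(effects)):
        rest_e = effects[:i] + effects[i + 1:]
        rest_v = variances[:i] + variances[i + 1:]
        est, se = dl_pool(rest_e, rest_v)
        results.append((i, est, est - 1.96 * se, est + 1.96 * se))
    return results
```

The robustness argument rests on the spread of these re-pooled estimates: in the review, they stayed between 0.533 and 0.686, and none of the reported intervals included zero.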

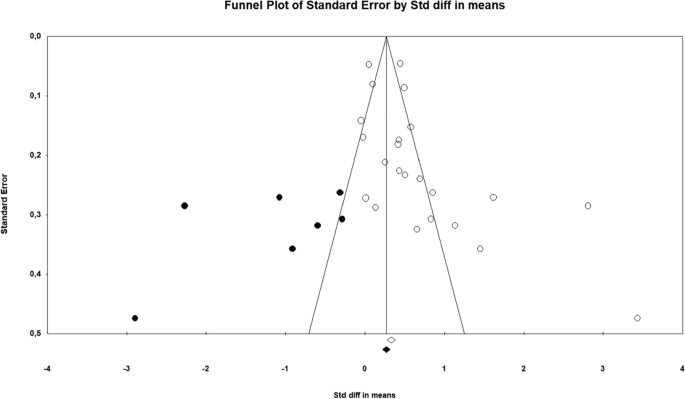

We further examined publication bias by inspecting the funnel plot and Egger’s regression intercept (Egger et al., 1997). We also applied Duval and Tweedie’s (2000) trim-and-fill technique and conducted a classic fail-safe N analysis. Figure 2 presents the funnel plot of all included studies, plotting each study’s effect size against the standard error of its estimate. The funnel plot indicated publication bias. Egger’s linear regression test for asymmetry further supported this observation, t(21) = 2.78, p = 0.006. Duval and Tweedie’s trim-and-fill technique (7 studies trimmed at the left side) reduced the effect size from a medium effect of 0.644 to a small effect of 0.298 (95% CI [0.09, 0.51]). The fail-safe N suggested that 1,376 missing studies would be needed to render the result of this meta-analysis nonsignificant (p > 0.05). Overall, the results suggested that P(j)BL can have a small-to-medium positive effect on students’ CT processes and dispositions.
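Egger’s test and the classic fail-safe N can be sketched in Python as follows. Egger et al. (1997) regress each study’s standardized effect (d/SE) on its precision (1/SE); an intercept that deviates from zero indicates funnel-plot asymmetry and is tested with t on k - 2 degrees of freedom (hence t(21) for 23 studies). Rosenthal’s classic fail-safe N estimates how many unpublished null studies would bring the combined Z below the critical value. The function names are hypothetical, and the default critical value of 1.96 (two-tailed α = .05) is an assumption: Rosenthal’s original formulation used a one-tailed 1.645, and the review does not state which was used. Duval and Tweedie’s iterative trim-and-fill procedure is not shown.

```python
import math


def egger_test(effects, ses):
    """Egger's regression test: regress d/SE on 1/SE by ordinary least squares.

    Returns (intercept, t statistic for the intercept, degrees of freedom).
    """
    y = [d / s for d, s in zip(effects, ses)]  # standardized effects
    x = [1 / s for s in ses]                   # precision
    k = len(y)
    mx, my = sum(x) / k, sum(y) / k
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    intercept = my - slope * mx
    resid = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    s2 = sum(r**2 for r in resid) / (k - 2)    # residual variance on k - 2 df
    se_int = math.sqrt(s2 * (1 / k + mx**2 / sxx))
    return intercept, intercept / se_int, k - 2


def fail_safe_n(effects, ses, z_alpha=1.959964):
    """Rosenthal's classic fail-safe N: (sum of Z)^2 / z_alpha^2 - k."""
    z_sum = sum(d / s for d, s in zip(effects, ses))
    return max(0.0, z_sum**2 / z_alpha**2 - len(effects))
```

A positive intercept, as in the review’s result, means that less precise (smaller) studies tend to report larger standardized effects than the precise ones, which is the pattern trim-and-fill then tries to correct.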

As mentioned, the effect was heterogeneous. Due to the limited number of studies, we could not statistically investigate moderating factors that might explain this heterogeneity. The variation in effect sizes is likely partly due to variation in how PBL was implemented, as implementation often differs per institute even when the defining characteristics have been met (Maudsley, 1999; Norman & Schmidt, 2000). To limit this source of variation, we only included studies that met the defining criteria of PBL and PjBL, and we excluded studies that contained additional activities (e.g., concept mapping) that could affect the results.

Differences in the exact operationalization of CT could also affect the results. To explore this, we calculated the effect size separately for studies reporting outcomes on CT processes (n = 17) and on CT dispositions (n = 7). Analyses suggested a larger effect size for studies reporting results for CT processes (d = 0.720, SE = 0.14, 95% CI [0.46, 0.99]) than for studies reporting on CT dispositions (d = 0.411, SE = 0.13, 95% CI [0.16, 0.66]). However, these results must be interpreted with caution due to the limited number of studies and the extreme effect sizes in the CT processes group.

Other variables that could potentially explain the heterogeneity are sample level (e.g., K-12 or higher education) and the duration of the intervention or of exposure to PBL. For example, a meta-analysis of the effects of student-centered learning on students’ motivation showed smaller effects for K-12 samples and curriculum implementations than for studies conducted in higher education and course implementations (Authors, 2022). Similar factors could moderate the effect of P(j)BL on CT, but more research is needed to investigate this.

Additional Findings

As noted, the meta-analysis only included studies with a pre-post and/or independent groups design; two studies deviated from these designs. Tiwari et al. (2006) not only compared the effects of PBL and lecture-based learning immediately after the PBL course but also included two follow-ups, one and two years later. As Table 3 shows, the PBL group showed significant gain scores in CT immediately after the course, and the gain score remained positive at the first follow-up. However, the gain score was no longer significant at the second follow-up (two years later). At the subscale level, results revealed significant gains in favor of PBL for Truth-Seeking, Analyticity, and CT Self-Confidence. The gain score for Analyticity remained positive at the first follow-up, whereas the Truth-Seeking gain score remained significant at both the first and second follow-ups. These results suggest that PBL can have long-term effects on CT dispositions.

Pardamean (2010) did not include a control group but examined CT processes in first-, second-, and third-year students. The study revealed no differences among the three year groups in overall CT. There was one statistically significant difference, on the Inductive Reasoning subscale, on which the second-year students obtained the highest score and the third-year students the lowest. This study therefore does not support the notion that CT increases across year groups in a PBL curriculum. However, no information was available on the baseline CT of each group.

Not all studies reported results for the test subscales, even when the original scale consisted of subscales. Seven of the nine studies using the CCTDI reported subscale results. Of these, three studies reported positive results for Open-Mindedness, Inquisitiveness, Truth-Seeking, or Systematicity, and two for Analyticity or CT Self-Confidence. Of the five studies that examined Cognitive Maturity, only one reported a positive effect of P(j)BL (see Table 3). Five studies reported CCTST subscale scores. Of these, two found positive effects of P(j)BL on Analysis or Evaluation, and one on Inference. No studies reported positive effects on the Deduction subscale. For Induction, one study found a positive effect and another a negative effect. Overall, results at the subscale level were mixed (see Table 3).

Effects of P(j)BL on Higher-Order Thinking

Only two studies investigated the effects of P(j)BL on HOT (see Table 4): one in a PBL setting (Sugeng & Suryani, 2020) and the other in a PjBL setting (Sasson et al., 2018). We could not calculate effect sizes based on the data provided in the papers. Sugeng and Suryani (2020) compared a PBL group with a lecture-based group on HOT and lower-order thinking. The PBL group scored significantly higher on HOT, whereas the lecture-based group scored higher on lower-order thinking. Sasson et al. (2018) reported a positive effect of a 2-year PjBL program: HOT increased for the PjBL group, but not for the control group, from Measurement 1 (beginning of 9th grade) to Measurement 3 (end of 10th grade).

Conclusions and Implications

This systematic review focused on two questions: “How are HOT, CT, and CAT conceptualized in student-centered learning environments?” and “Can PBL and PjBL foster HOT, CT, and CAT?” We presented and discussed findings related to those questions in the preceding section. Here we offer a more global examination of the trends that emerged from our analysis of two popular forms of student-centered approaches to instruction, PBL and PjBL, and share lingering issues that should be explored in future research. However, we first address certain limitations of this systematic review that warrant consideration.

Limitations

As noted, several limitations of this systematic review have a bearing on the conclusions we proffer. First, we set out to understand how higher-order, critical, and critical-analytic thinking were conceptualized in PBL and PjBL. However, we could not analyze the role of CAT because CAT was not part of any investigation in the context of P(j)BL. Further, the attention given to HOT was quite limited, with only two studies investigating it. More generally, the included studies were unequally distributed across thinking measures and learning environments: CAT was absent from the P(j)BL literature, and HOT was studied far less often than CT.

Similarly, most studies in this review reported findings from a PBL environment; only two investigated a PjBL environment. Consequently, the conclusions we draw rest primarily on empirical studies of PBL rather than PjBL.

As mentioned, the CT measures used in the included studies often consisted of several subscales. For example, the WGCTA consists of the subscales Inferences, Recognition of Assumptions, Deduction, Interpretation, and Evaluation of Arguments. The presence of multiple indicators was a limitation because effects can differ across these subcomponents, which makes a global interpretation of effectiveness more difficult. However, we did not use those components as search terms in the literature search, and most studies did not report or define these subscales. Future studies could use finer-grained search terms, including the subscales or processes of the thinking measures.

While the present study demonstrated positive effects of P(j)BL on HOT and CT, it remains unknown what exactly led to these positive effects, given that multiple links between P(j)BL and HOT and CT could be identified. Also, associations of HOT, CT, and CAT with performance were not investigated in the present study. More controlled experimental studies could shed light on these issues and help overcome the design issues associated with effect studies.

Finally, future research could relate HOT, CT, and CAT in P(j)BL environments to other learning processes, such as self-regulated learning (SRL) and self-directed learning (SDL). Components such as metacognition also play a prominent role in SRL and SDL processes. Future research could shed light on the relationships between thinking and regulating processes in the context of P(j)BL.

Research Question 1: CAT Is Not Embedded and HOT Not Frequently Studied in the P(j)BL Literature

Concerning conceptualizations (RQ1), we must first acknowledge the skewed distribution of studies over the three types of thinking (i.e., HOT, CT, and CAT): CAT was not part of any investigation in the context of P(j)BL, HOT was examined in only two studies, and the vast majority focused on CT. The feature that distinguishes CAT from CT has been described as its focus on determining how appropriate and credible evidence is (Byrnes & Dunbar, 2014). Remarkably, this component is clearly present in P(j)BL. In PBL, students work on a problem, which they first approach using their prior knowledge, and during the PBL group discussion they formulate learning questions/issues for further self-directed study; they then search for and study different literature resources (e.g., Loyens et al., 2012). During this knowledge acquisition process, students need to check whether different literature resources agree with each other or whether dissimilarities can be detected. When they detect dissimilarities, it is up to the students to decide how to deal with them and, later, during the reporting phase, to discuss this with the group. Why do different sources provide different answers to the learning questions/issues, and what does that say about the credibility of the sources themselves? In PjBL, students undergo the same process when dealing with conflicting information while working on their projects. Importantly, these conflicting pieces of information are resolved through group discussion in P(j)BL. Initially, however, they might cause some uncertainty in the learning process. Indeed, several scholars acknowledge learning uncertainty as a potential consequence of the open set-up of student-centered learning environments (Dahlgren & Dahlgren, 2002; Kivela & Kivela, 2005; Lloyd-Jones & Hak, 2004).
Nevertheless, the four steps described by Alexander (2014) are present in P(j)BL, which probably implies that the concept of CAT is not yet well known or embedded in the P(j)BL literature and that, in this literature, CAT would be a more accurate term than CT.

Similarly, only two studies examined HOT, which could be explained by the fact that HOT is an umbrella term that encompasses CT (Schraw et al., 2011). This means that when researchers investigate HOT, they are also investigating CT. For the sake of conceptual clarity, however, we recommend examining concepts at the most detailed level.

More Focus on CT Processes Than CT Dispositions

Another finding was that the P(j)BL literature focuses more on CT processes, referred to as skills or abilities, than on CT dispositions or combinations of processes and dispositions. This is not surprising, as P(j)BL has been more focused on and related to several interpersonal and self-directed learning skills (Loyens et al., 2008, 2012; Schmidt, 2000). More problematic is the incongruence between the definitions (CT dispositions and/or processes) and the measurement instruments. From an educational point of view, processes seem the most natural target to foster in education, although research has also demonstrated that learning environments can foster CT dispositions (Mathews & Lowe, 2011). Applied to P(j)BL environments, dispositions such as “inquisitiveness,” “open-mindedness,” “analyticity,” and “self-regulatory judgment” are certainly helpful. However, more empirical research is necessary to see whether and how P(j)BL environments can foster CT dispositions. Moreover, the exact meaning of dispositions, which are frequently measured in this literature, is unclear. The measures, subscales, or items labeled as dispositions range from rather stable personality traits, such as open-mindedness, to more malleable individual-difference factors, such as prior knowledge. Some components included under dispositions, such as analyticity, also have a strong cognitive character.

Lack of Conceptual Clarity Troubles Measurements and Findings

Regarding the conceptualizations of HOT, CT, and CAT, we definitely ended up in muddy waters. The largest percentage of excluded articles was due to flawed conceptualizations (also of P(j)BL, which we explain below). These flawed conceptualizations produce a domino effect because conceptualizations (i.e., theoretical definitions) determine measurements (Byrnes & Dunbar, 2014). In addition, we observed that conceptualizations were often, at best, operationalizations in which authors named specific (sub)processes without any theoretical grounding. The measurement tool used often determined which specific processes were included. However, we often observed a mismatch: processes were mentioned that were not part of the measurement instrument, or definitions (e.g., of processes) were incongruent with the measurement instruments (e.g., measuring dispositions).

Of course, attempts have been made to reach a consensus regarding conceptualizations. For example, the Delphi Report (Facione, 1990b) and the special issue on CAT (e.g., Alexander, 2014; Byrnes & Dunbar, 2014) tried to create conceptual clarity regarding CT and CAT, respectively. Consensus should, however, not be a goal in itself. HOT, CT, and CAT are such broad concepts that consensus is far from easy. It is more important to reflect on what the learning objectives of the learning environment (P(j)BL or other) are, determine whether fostering HOT, CT, or CAT is one of them, and then create an educational practice that is in line with these objectives (i.e., constructive alignment; Biggs, 1996). Depending on the learning objectives of a learning environment (e.g., the construction of flexible knowledge bases, the development of inquiry skills, or a tool for “learning how to learn”; Schmidt et al., 2009), one could emphasize specific (sub)CT processes and use different measurement instruments.

A consequence of these flawed conceptualizations is flawed measurements. Measurements were often problematic in terms of their psychometric properties. Another issue in this respect is that many CT measurements are commercial and not readily available.

In addition to the issues with the conceptualizations of HOT, CT, and CAT, serious problems were also seen in the conceptualization of P(j)BL. Descriptions were absent, unclear, too broad and general (e.g., “active learning”), or indicative of a learning environment other than P(j)BL. This is not a new finding. In fact, PBL, for example, was identified as troublesome in terms of its definitions a long time ago (e.g., Lloyd-Jones et al., 1998). It is troubling, however, that this is still the case; as a result, we had to exclude many studies. On the other hand, we also noticed that more recent studies pay more attention to this issue (e.g., Lombardi et al., 2022).

Design Issues in Effect Studies of P(j)BL on HOT, CT, and CAT

While investigating the second research question of this study on the effects of P(j)BL on HOT, CT, and CAT, we made several observations. First, there is still a lack of controlled studies in this domain. The great majority of studies did not use a control group (note: those included in the meta-analysis did), making it impossible to determine the effects of interventions. Like the unclear conceptualizations of P(j)BL, this is not new and has been indicated as an issue before (Loyens et al., 2012).

Another observation was that many studies took it as a given that P(j)BL fosters CT, usually without any explanation. As explained in the introduction, fostering HOT, CT, or CAT skills is not mentioned as one of the goals of P(j)BL, despite the links that can be made. Stating a priori that P(j)BL fosters CT is hence premature.

Finally, we noticed that in several studies, PBL was combined with other interventions (e.g., concept mapping). In those cases, the PBL group served as the control group and the PBL-plus-intervention group as the experimental condition, making it impossible to establish the effects of PBL itself on HOT, CT, and CAT. Most studies used a pretest-posttest design.

Research Question 2: Positive Effects of P(j)BL on HOT and CT

Overall, results showed positive effects of P(j)BL on CT and HOT (note that no studies on CAT met the inclusion criteria of this review), with scores increasing from pretest to posttest or with P(j)BL groups obtaining higher scores than control groups. These findings imply that P(j)BL does carry elements that can foster CT and HOT. As mentioned above, the positive effects can be explained by both the cognitive and the instructional science literature. Literature on how to effectively teach HOT, CT, and CAT has identified several techniques that help foster these skills. Not surprisingly, these techniques, such as dealing with real cases/problems in class, encouraging (Socratic) class discussions/debate, fostering inquiry-oriented experiments, problem-solving, problem finding, brainstorming, decision making, and analysis (Abrami et al., 2015; Miri et al., 2007; Torff, 2011), are all linked to P(j)BL. Likewise, literature on the learning processes involved in P(j)BL also mentions processes linked to thinking skills. For example, students working on their projects in PjBL engage in problem-solving, design, decision-making, argumentation, using and weighing different pieces of knowledge, explanation, investigation, and modeling (Krajcik et al., 2008). Similarly, students working on problems in PBL hold group discussions about authentic problems, engage in evidence-seeking behavior, analyze the evidence, resolve unclarities, and decide on the outcome.

While the majority of findings indicated that P(j)BL is effective in fostering CT and HOT, several studies also reported negative or null effects. Given the wide variety of P(j)BL formats, these results might be due to implementation issues, but they could also be ascribed to the design issues mentioned above. Further research needs to shed light on the null and negative findings.

As a final note, it should be mentioned that effects were found for CT, but the studies usually do not make claims about the finer-grained subprocesses. For example, four components of HOT have been identified (Schraw et al., 2011), while outcome measures are usually calculated at the “general” level and not at the subcomponent level. However, the HOT component of metacognition is quite different from the HOT component of reasoning or problem-solving, which demonstrates the importance of constructive alignment (Biggs, 1996). Constructive alignment implies clearly defining the learning objectives of the learning environment (P(j)BL or other). When fostering HOT, CT, or CAT is one of the learning objectives, an educational practice should be developed that aligns with these objectives. To make claims about whether learning environments are effective in fostering HOT, CT, or CAT processes, one must first determine whether these processes are, or can be, part of the learning objectives of these learning environments.

Implications

Several implications for theory and practice follow from this review study. The first implication is that there is much room for improvement in terms of conceptual clarity. CAT has not been investigated in the context of P(j)BL, and HOT only sporadically. Nevertheless, when looking at the respective definitions of these thinking processes, the “analytical” part of CAT is certainly present in P(j)BL environments when students weigh the evidence during the analysis of specific problems or projects (Alexander, 2014). In addition, since CT is considered a component of HOT, we propose investigations at the most detailed level. Practitioners looking for ways to foster HOT, CT, and CAT should be aware that definitions (and, consequently, measurements) are ambiguous. Given that the results seem positive in terms of the capability of P(j)BL to foster HOT and CT, it is important not to throw the baby out with the bathwater. Exposure to problems and collaboration with fellow students seem beneficial for fostering thinking skills.

The second implication is that, given the lack of studies investigating CAT in P(j)BL, this form of thinking is not yet embedded in the P(j)BL research literature. Given the characteristics of the P(j)BL process, CAT processes should be an object of investigation in these learning environments to further advance the theoretical understanding of CAT in P(j)BL.

Conclusion

In sum, the present review study led to several conclusions regarding conceptualizations of HOT, CT, and CAT in the P(j)BL literature. First, CAT is not embedded, and HOT is not frequently studied, in the P(j)BL literature. Second, the research literature focuses more on CT skills than on CT dispositions. Next, a lack of clear conceptualizations of HOT, CT, and CAT complicates the measurements and findings. This lack of conceptual clarity carries over into instruments and tools of assessment that are limited in number (and sometimes availability) and of questionable validity. The lack of conceptual clarity also extends to P(j)BL environments, where essential components of PBL and PjBL were not always articulated or addressed in studies claiming to implement these approaches. Design issues in effect studies add to these complications. Further, the reference to HOT or CT skills conflicts with the literature on what differentiates skills from more intentional and purposefully implemented processes (i.e., cognitive and metacognitive strategies). Finally, the effects of P(j)BL on HOT and CT were mainly positive.

Data Availability

The data that support the findings of this study are available from the corresponding author, S.L., upon reasonable request.

Notes

Note that in the search terms for the student-centered learning environments, we did not use a hyphen, that is, “problem based learning” instead of “problem-based learning”.

References

References preceded by an * were included in the review

Abrami, P. C., Bernard, R. M., Borokhovski, E., Waddington, D. I., Wade, C. A., & Persson, T. (2015). Strategies for teaching students to think critically: A meta-analysis. Review of Educational Research, 85(2), 275–314. https://doi.org/10.3102/0034654314551063

Alexander, P. A. (2014). Thinking critically and analytically about critical-analytic thinking. Educational Psychology Review, 26, 469–476. https://doi.org/10.1007/s10648-014-9283-1

Authors. (2022). [Blinded for review].

Barrows, H. S. (1996). Problem-based learning in medicine and beyond: A brief overview. In L. Wilkerson & W. H. Gijselaers (Eds.), New Directions for Teaching and Learning: Issue 68. Bringing problem-based learning to higher education: Theory and practice (pp. 3–12). Jossey-Bass. https://doi.org/10.1002/tl.37219966804

Bataineh, R. F., & Zghoul, L. H. (2006). Jordanian TEFL graduate students’ use of critical thinking skills (as measured by the Cornell Critical Thinking Test, Level Z). International Journal of Bilingual Education and Bilingualism, 9(1), 33–50. https://doi.org/10.1080/13670050608668629

Bezanilla, M. J., Galindo-Domínguez, H., & Poblete, M. (2021). Importance of teaching critical thinking in higher education and existing difficulties according to teacher’s views. REMIE-Multidisciplinary Journal of Educational Research, 11(1), 20–48. https://doi.org/10.4471/remie.2021.6159

Biggs, J. B. (1996). Enhancing teaching through constructive alignment. Higher Education, 32, 347–364. https://doi.org/10.1007/BF00138871

Bloom, B. S., Engelhart, M. D., Furst, E. J., Hill, W. H., & Krathwohl, D. R. (1956). Taxonomy of educational objectives: The classification of educational goals. Handbook I: Cognitive domain. David McKay Co.

Blumenfeld, P. C., Soloway, E., Marx, R. W., Krajcik, J. S., Guzdial, M., & Palincsar, A. (1991). Motivating project-based learning: Sustaining the doing, supporting the learning. Educational Psychologist, 26(3–4), 369–398. https://doi.org/10.1080/00461520.1991.9653139

Borenstein, M., Hedges, L. V., Higgins, J. P. T., & Rothstein, H. R. (2009). Introduction to meta-analysis. Wiley.

*Burris, S., & Garton, B. L. (2007). Effect of instructional strategy on critical thinking and content knowledge: Using problem-based learning in the secondary classroom. Journal of Agricultural Education, 48(1), 106–116. https://files.eric.ed.gov/fulltext/EJ840072.pdf

Butler, H. A., & Halpern, D. F. (2020). Critical thinking impacts our everyday lives. In R. J. Sternberg & D. F. Halpern (Eds.), Critical thinking in psychology (2nd ed., pp. 152–172). Cambridge University Press. https://doi.org/10.1017/9781108684354.008

Byrnes, J. P., & Dunbar, K. N. (2014). The nature and development of critical-analytic thinking. Educational Psychology Review, 26, 477–493. https://doi.org/10.1007/s10648-014-9284-0

Caldwell, D. J., Caldwell, D. Y., McElroy, A. P., Manning, J. G., & Hargis, B. M. (1998). BASP-induced suppression of mitogenesis in chicken, rat and human PBL. Developmental & Comparative Immunology, 22(5–6), 613–620. https://doi.org/10.1016/S0145-305X(98)00037-8

*Carriger, M. S. (2016). What is the best way to develop new managers? Problem-based learning vs. lecture-based instruction. The International Journal of Management Education, 14(2), 92–101. https://doi.org/10.1016/j.ijme.2016.02.003

Castle, A. (2006). Assessment of the critical thinking skills of student radiographers. Radiography, 12(2), 88–95. https://doi.org/10.1016/j.radi.2005.03.004

Chakravorty, S. S., Hales, D. N., & Herbert, J. I. (2008). How problem-solving really works. International Journal of Data Analysis Techniques and Strategies, 1(1), 44–59. https://doi.org/10.1504/IJDATS.2008.020022

Chen, C., & Yang, Y. (2019). Revisiting the effects of project-based learning on students’ academic achievement: A meta-analysis investigating moderators. Educational Research Review, 26, 71–81. https://doi.org/10.1016/j.edurev.2018.11.001

Chiu, C.-F. (2020). Facilitating K-12 teachers in creating apps by visual programming and project-based learning. International Journal of Emerging Technologies in Learning, 15(1), 103–118. https://www.learntechlib.org/p/217066/

*Choi, E., Lindquist, R., & Song, Y. (2014). Effects of problem-based learning vs. traditional lecture on Korean nursing students’ critical thinking, problem-solving, and self-directed learning. Nurse Education Today, 34(1), 52–56. https://doi.org/10.1016/j.nedt.2013.02.012

*Cortázar, C., Nussbaum, M., Harcah, J., Alvares, J., López, F., Goñi, J., & Cabezas, V. (2021). Promoting critical thinking in an online, project-based course. Computers in Human Behavior, 119, Article 106705. https://doi.org/10.1016/j.chb.2021.106705