Abstract

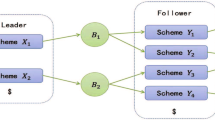

This paper presents the OPTGAME algorithm, developed to iteratively approximate equilibrium solutions of ‘tracking games’, i.e. discrete-time nonzero-sum dynamic games with a finite number of players who face quadratic objective functions. Such a tracking game describes the behavior of decision makers who act upon a nonlinear discrete-time dynamical system and who aim to minimize the deviations from individually desirable paths of multiple states over a joint finite planning horizon. Among the noncooperative solution concepts, the OPTGAME algorithm approximates the feedback Nash and feedback Stackelberg equilibrium solutions and the open-loop Nash solution; in addition, it approximates the cooperative Pareto-optimal solution.

Notes

Linear quadratic dynamic games (LQDG) are, for example, a model class that is very well understood and extensively investigated, especially in continuous time (Engwerda 2005). For a recent piece of work applying deterministic, finite-dimensional, zero-sum LQDGs formulated in discrete time, see, e.g. Pachter and Pham (2010).

The computer program DYNGAME (McKibbin 1987) is, for example, designed to solve rational expectations models and related deterministic dynamic game problems for economic applications, but is not appropriate for approximating solutions for nonlinear problems because the algorithm linearizes the nonlinear dynamic constraint prior to initializing the solution procedure. The LQDG Toolbox (Michalak et al. 2011) exclusively solves linear quadratic (LQ) open-loop deterministic dynamic games (DG) in continuous time and does not provide feedback solutions. Regarding dynamic stochastic games, the Pakes & McGuire computer algorithm (Pakes et al. 1993; Pakes and McGuire 1994, 2001), which calculates Markov-perfect equilibrium solutions, is set up to investigate dynamic industries with heterogeneous firms, i.e. the dynamic competition in an oligopolistic industry with investment, entry, and exit (Ericson and Pakes 1995). Here, intensive work is ongoing with respect to both theoretical and computational aspects (see, e.g. Doraszelski and Satterthwaite 2010; Fershtman and Pakes 2012; Farias et al. 2012), with potential future applicability to (macro)economic problems such as those for which OPTGAME was developed.

It is the number of decision makers involved that distinguishes OPTGAME 2.0 from its predecessor OPTGAME 1.0, which is designed for only two players; see Behrens et al. (2003) and Hager et al. (2001). Moreover, the MATLAB implementation of OPTGAME 2.0 offers a wider spectrum of numerical equation solvers than its predecessor.

Decision makers may also care about what other decision makers do, i.e., it is possible that \(\mathbf {R}_t^{ij}\!\ne \!\mathbf {0}\) for \(j\ne i\). In other words, deviations in the levels of someone else’s control variables from what is desired (seen from one’s own perspective) can be punished in one’s own objective function.

Particularly in economic models, one often accounts for time preferences and assumes the penalty matrices to be \(\varvec{\Omega }_t^{i}\!:=(\vartheta ^{i})^{t-1}\varvec{\Omega }_0^{i}\) \(\forall t\!\in \!\{1,\ldots ,T\}\), where \(\vartheta ^{i}\!\in \!(0,1)\) is player \(i\)’s individual discount factor and \(\varvec{\Omega }_0^{i}\!\in \!\mathbb {R}^{m\!\times \!m}\) is \(i\)’s nonnegative definite penalty matrix for the initial time period.
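
As a minimal sketch of this weighting scheme, the following Python snippet builds the sequence \(\varvec{\Omega }_1^{i},\ldots ,\varvec{\Omega }_T^{i}\) for one player. The function name `discounted_penalties` and the numerical values are hypothetical and serve only as an illustration; they are not part of OPTGAME.

```python
import numpy as np

def discounted_penalties(omega0, theta, T):
    """Return [Omega_1, ..., Omega_T] with Omega_t = theta**(t-1) * Omega_0.

    omega0 : nonnegative definite penalty matrix for the initial period
    theta  : the player's time-preference weight, assumed to lie in (0, 1)
    T      : length of the planning horizon
    """
    omega0 = np.asarray(omega0, dtype=float)
    if not 0.0 < theta < 1.0:
        raise ValueError("theta must lie in the open interval (0, 1)")
    return [theta ** (t - 1) * omega0 for t in range(1, T + 1)]

# Illustrative values only (assumption): two penalized variables, three periods.
penalties = discounted_penalties(np.diag([1.0, 2.0]), theta=0.5, T=3)
```

The first matrix equals \(\varvec{\Omega }_0^{i}\) itself, and every later period is weighted down geometrically.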

The assumption of a first-order system of difference equations is not restrictive as higher-order difference equations can always be reduced to systems of first-order difference equations by appropriately redefining variables. Lagged state variables can also be easily removed by introducing additional state variables. In OPTGAME, this is done using the procedure proposed in de Zeeuw and van der Ploeg (1991).
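
The reduction can be illustrated for a scalar linear difference equation of order \(p\). The sketch below is a generic companion-form construction, not the specific procedure of de Zeeuw and van der Ploeg (1991); the function name `companion_matrix` and the coefficients are assumptions chosen for illustration.

```python
import numpy as np

def companion_matrix(coeffs):
    """First-order form of y_t = a_1 y_{t-1} + ... + a_p y_{t-p}.

    The augmented state is z_t = (y_t, y_{t-1}, ..., y_{t-p+1}), so that
    z_t = C z_{t-1} is a first-order system.
    """
    a = np.asarray(coeffs, dtype=float)
    p = a.size
    C = np.zeros((p, p))
    C[0, :] = a                   # first row reproduces the original equation
    C[1:, :-1] = np.eye(p - 1)    # remaining rows shift the lags down by one
    return C

# Illustrative second-order equation (assumed coefficients).
a = [0.5, 0.3]
C = companion_matrix(a)

# Direct recursion on the second-order equation, starting from y_0 and y_1.
y = [1.0, 2.0]
for _ in range(5):
    y.append(a[0] * y[-1] + a[1] * y[-2])

# Same path from the first-order system, starting from z_1 = (y_1, y_0).
z = np.array([2.0, 1.0])
for _ in range(5):
    z = C @ z
```

Both recursions produce the same value for the latest period; the lagged variable has simply become an additional state.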

For \(k\!=\!0\), either zero-vectors may be assigned to \(\{\bar{\mathbf {u}}_t^1\!(k)\}_{t=1}^{T}\), ..., \(\{\bar{\mathbf {u}}_t^{n}\!(k)\}_{t=1}^{T}\) or historical data or other external information may be used.

Note that we do not linearize the nonlinear system prior to executing the optimization procedure but rather linearize the system repeatedly during the optimization process along the current reference path \(\{\bar{\mathbf {X}}_t\!(k)\}_{t=1}^T\) (for \(k\!=\!1,\ldots ,k_\mathrm{max}\)).
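
A minimal sketch of one such linearization step is given below. It assumes, for simplicity, an explicit system \(\mathbf {x}_t=f(\mathbf {x}_{t-1},\mathbf {u}_t)\) with a single stacked control vector and uses central finite differences; the function name `linearize`, the step size, and the example system are hypothetical, and OPTGAME's own implementation may differ.

```python
import numpy as np

def linearize(f, x_prev, u, eps=1e-6):
    """Linearize x_t = f(x_prev, u) around a reference point.

    Returns A, B, c such that f(x, v) is approximated by A x + B v + c
    near (x_prev, u). Jacobians are computed by central differences.
    """
    x_prev = np.asarray(x_prev, dtype=float)
    u = np.asarray(u, dtype=float)
    f0 = np.asarray(f(x_prev, u), dtype=float)
    A = np.empty((f0.size, x_prev.size))
    B = np.empty((f0.size, u.size))
    for j in range(x_prev.size):
        d = np.zeros_like(x_prev)
        d[j] = eps
        A[:, j] = (np.asarray(f(x_prev + d, u)) - np.asarray(f(x_prev - d, u))) / (2 * eps)
    for j in range(u.size):
        d = np.zeros_like(u)
        d[j] = eps
        B[:, j] = (np.asarray(f(x_prev, u + d)) - np.asarray(f(x_prev, u - d))) / (2 * eps)
    c = f0 - A @ x_prev - B @ u   # offset so the approximation is exact at the reference point
    return A, B, c

# Illustrative nonlinear system (assumption): x_t = x_{t-1} - 0.1 x_{t-1}^2 + u_t.
f = lambda x, u: np.array([x[0] - 0.1 * x[0] ** 2 + u[0]])
A, B, c = linearize(f, x_prev=[2.0], u=[0.5])
```

Calling `linearize` once per period along the current reference path yields a linear time-varying system; repeating this in every iteration \(k\) is what distinguishes the approach from a one-off linearization.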

Again a Gauss-Seidel, a Newton-Raphson, a Levenberg-Marquardt, or a trust region solver is applied for determining the state variable.

If convergence has not been obtained before \(k\) reaches its maximum value, \(k_\mathrm{max}\), the iterative optimization procedure terminates without succeeding in finding an equilibrium feedback solution. It can then be re-started with an alternative initial tentative control path (to be specified by the user; see Fig. 1).
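
The termination logic can be sketched as follows. The convergence measure (maximum absolute change of the control path), the tolerance, and the names `iterate_to_convergence` and `update` are assumptions made for illustration; the criterion actually used by OPTGAME is not restated in this note.

```python
import numpy as np

def iterate_to_convergence(update, u_init, k_max=100, tol=1e-8):
    """Repeat u <- update(u) until the path stops changing or k_max is hit.

    Returns (path, iterations_used, converged_flag).
    """
    u = np.asarray(u_init, dtype=float)
    for k in range(1, k_max + 1):
        u_next = np.asarray(update(u), dtype=float)
        if np.max(np.abs(u_next - u)) < tol:
            return u_next, k, True
        u = u_next
    return u, k_max, False   # no convergence: caller may restart elsewhere

# Toy update maps (assumptions): a contraction and an oscillating map.
path, k_used, ok = iterate_to_convergence(lambda u: 0.5 * u + 1.0, np.zeros(3))
_, _, ok_osc = iterate_to_convergence(lambda u: -u, np.ones(3), k_max=50)
```

When the flag is false, the procedure can be called again with a different `u_init`, which mirrors the restart with an alternative initial tentative control path.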

Under our assumptions, for the linear time-varying dynamic system approximating the nonlinear system, there always exists a (not necessarily unique) feedback Nash equilibrium solution. For the nonlinear dynamic system, this is not always guaranteed, even if the algorithm converges fairly quickly. In any case, alternative tentative initial control paths can be used to provide more insight into the nature of the solution obtained.

Note that the open-loop Nash equilibrium solution of the linearized quadratic game is determined using Pontryagin’s maximum principle, while the feedback Nash and feedback Stackelberg solutions of the linearized quadratic game are approximated using the dynamic programming technique.

References

Basar, T., & Olsder, G. J. (1999). Dynamic noncooperative game theory (2nd ed.). Philadelphia: SIAM.

Dockner, E., Jorgensen, S., Long, N. V., & Sorger, G. (2000). Differential games in economics and management science. Cambridge: Cambridge University Press.

Doraszelski, U., & Satterthwaite, M. (2010). Computable Markov-perfect industry dynamics. RAND Journal of Economics, 41(2), 215–243.

Fershtman, C., & Pakes, A. (2012). Dynamic games with asymmetric information: A framework for empirical work. Quarterly Journal of Economics, 127(4), 1611–1661.

Doraszelski, U., & Markovich, S. (2007). Advertising dynamics and competitive advantage. RAND Journal of Economics, 38(3), 557–592.

Jørgensen, S., & Zaccour, G. (2004). Differential games in marketing. Boston: Kluwer.

Acocella, N., Di Bartolomeo, G., & Hughes Hallett, A. (2013). The theory of economic policy in a strategic context. Cambridge: Cambridge University Press.

Blueschke, D., & Neck, R. (2011). “Core” and “periphery” in a monetary union: A macroeconomic policy game. International Advances in Economic Research, 17(3), 334–346.

Neck, R., & Behrens, D. A. (2004). Macroeconomic policies in a monetary union: A dynamic game. Central European Journal of Operations Research, 12(2), 171–186.

Neck, R., & Behrens, D. A. (2009). A macroeconomic policy game for a monetary union with adaptive expectations. Atlantic Economic Journal, 37(4), 335–349.

Petit, M. L. (1990). Control theory and dynamic games in economic policy analysis. Cambridge: Cambridge University Press.

Plasmans, J., Engwerda, J., van Aarle, B., Di Bartolomeo, G., & Michalak, T. (2006). Dynamic modeling of monetary and fiscal cooperation among nations. Dynamic modeling and econometrics in economics and finance (Vol. 8). Dordrecht: Springer.

Van Long, N. (2011). Dynamic games in the economics of natural resources: A survey. Dynamic Games and Applications, 1(1), 115–148.

Behrens, D. A., Caulkins, J. P., Feichtinger, G., & Tragler, G. (2007). Incentive Stackelberg strategies for a dynamic game on terrorism. In S. Jørgensen, M. Quincampoix, & T. L. Vincent (Eds.), Advances in dynamic game theory. Annals of the international society of dynamic games (Vol. 9, pp. 459–486). Boston: Birkhäuser.

Grass, D., Caulkins, J. P., Feichtinger, G., Tragler, G., & Behrens, D. A. (2008). Optimal control of nonlinear processes. With applications in drugs, corruption, and terror. Berlin/Heidelberg: Springer.

Novak, A. J., Feichtinger, G., & Leitmann, G. (2010). A differential game related to terrorism: Nash and Stackelberg strategies. Journal of Optimization Theory and Applications, 144(3), 533–555.

Engwerda, J. C. (2005). LQ dynamic optimization and differential games. Chichester: Wiley.

Pachter, M., & Pham, K. D. (2010). Discrete-time linear-quadratic dynamic games. Journal of Optimization Theory and Applications, 146(1), 151–179.

McKibbin, W. J. (1987). Solving rational expectations models with and without strategic behavior. Reserve Bank of Australia, Research Discussion Paper 8706.

Michalak, T., Engwerda, J., & Plasmans, J. (2011). A numerical toolbox to solve n-player affine LQ open-loop differential games. Computational Economics, 37, 375–410.

Pakes, A., Gowrisankaran, G., & McGuire, P. (1993). Implementing the Pakes-McGuire algorithm for computing Markov-perfect equilibria in GAUSS. Working Paper, Department of Economics, Yale University.

Pakes, A., & McGuire, P. (1994). Computing Markov-perfect Nash equilibria: Numerical implications of a dynamic differentiated product model. RAND Journal of Economics, 25, 555–589.

Pakes, A., & McGuire, P. (2001). Stochastic algorithms, symmetric Markov perfect equilibrium, and the ‘curse’ of dimensionality. Econometrica, 69, 1261–1281.

Ericson, R., & Pakes, A. (1995). Markov-perfect industry dynamics: A framework for empirical work. Review of Economic Studies, 62(1), 53–82.

Farias, V., Saure, D., & Weintraub, G. Y. (2012). An approximate dynamic programming approach to solving dynamic oligopoly models. RAND Journal of Economics, 43(2), 253–282.

Anderson, B. D. O., & Moore, J. B. (1998). Optimal control: linear-quadratic methods. New York: Prentice Hall.

Pachter, M. (2009). Revisit of linear-quadratic optimal control. Journal of Optimization Theory and Applications, 140(2), 301–314.

de Zeeuw, A. J., & van der Ploeg, F. (1991). Difference games and policy optimization: A conceptional framework. Oxford Economic Papers, 43(4), 612–636.

Lucas, R. E. (1983). Econometric policy evaluation: A critique. In K. Brunner & A. H. Meltzer (Eds.), Theory, policy, institutions: Papers from the Carnegie-Rochester conference series on public policy (pp. 257–284). Amsterdam: North-Holland.

Behrens, D. A., Hager, M., & Neck, R. (2003). OPTGAME 1.0: A numerical algorithm to determine solutions for two-person difference games. In R. Neck (Ed.), Modelling and Control of Economic Systems 2001. A Proceedings volume from the 10th IFAC Symposium, Klagenfurt, Austria, 6–8 September 2001 (pp. 47–58). Oxford: Elsevier.

Hager, M., Neck, R., & Behrens, D. A. (2001). Solving dynamic macroeconomic policy games using the algorithm OPTGAME 1.0. Optimal Control Applications and Methods, 22(5–6), 301–332.

Chow, G. C. (1975). Analysis and control of dynamic economic systems. New York: Wiley.

Chow, G. C. (1981). Econometric analysis by control methods. New York: John Wiley & Sons.

Reinganum, J. F., & Stokey, N. S. (1985). Oligopoly extraction of a common property natural resource: The importance of the period of commitment in dynamic games. International Economic Review, 26(1), 161–173.

Matulka, J., & Neck, R. (1992). OPTCON: An algorithm for the optimal control of nonlinear stochastic models. Annals of Operations Research, 37, 375–401.

Boyd, S. P. (2005). Linear dynamical systems. Linear quadratic regulator: Discrete-time finite horizon. http://www.stanford.edu/class/ee363.

Hamada, K., & Kawai, M. (1997). International economic policy coordination: Theory and policy implications. In M. U. Fratianni, D. Salvatore, & J. von Hagen (Eds.), Macroeconomic policy in open economies (pp. 87–147). Westport, CT: Greenwood Press.

Neck, R., & Blueschke, D. (2014, forthcoming). Policy interactions in a monetary union: An application of the OPTGAME algorithm. In J. Haunschmied, V. M. Veliov, & S. Wrzaczek (Eds.), Dynamic games in economics. Berlin: Springer.

Additional information

We thank five anonymous referees, the editor, and Engelbert Dockner for fruitful discussions; Christina Kopetzky for indefatigable organizational support; and delegates of numerous workshops where earlier versions of this paper were presented for inspiring comments. We gratefully acknowledge financial support from the EU Commission (project no. MRTN-CT-2006-034270 COMISEF), the Austrian Science Foundation (FWF) (project no. P12745-OEK), the Austrian National Bank (OeNB) (projects no. 9152, no. 11216, and no. 12166), the European Regional Development Fund (ERDF) & the Carinthian Economic Promotion Fund (KWF) (grant no. 20214/23793/35529), the Carinthian Chamber of Commerce & the Provincial Government of Carinthia, and the former Ludwig Boltzmann Institute for Economic Policy Analyses, Vienna.

Appendix

1.1 Derivation of the Feedback Nash Equilibrium Solution for an LQDG

All players have access to the complete state information and seek control rules that respond to the currently observed state. Here we will describe the corresponding feedback Nash equilibrium solution for iteration step \(k\), \(\{\hat{\mathbf {x}}_t^*\!(k)\}_{t=1}^T\) and \(\{\hat{\mathbf {u}}_t^{i*}\!(k)\}_{t=1}^T\) \(\forall i\!\in \!\{1,\ldots ,n\}\), that minimizes Eq. 1 subject to Eq. 5 by applying the method of dynamic programming. We set up player \(i\)’s (\(i\!=\!1,\ldots ,n\)) individual cost-to-go function for the terminal period, \(T\),

For \(\mathbf {P}_T^{i}\!(k)\!:=\!\mathbf {Q}_T^{i}\) and \(\mathbf {p}_T^{i}\!(k)\!:=\!\mathbf {Q}_T^{i}\tilde{\mathbf {x}}_T^{i}\), Eq. 50 is equivalent to

where the scalar \(\xi _T\!(k)\) is the sum of all terms that do not depend on \(\hat{\mathbf {x}}_T^{*}\!(k)\) and \(\mathbf {u}_T^{i}\!(k)\) and is, thus, without any relevance for our further calculations. From Eq. 5 we know that the optimal state vector for the terminal period can be derived by the use of the state vector optimized for the previous time period, \(\hat{\mathbf {x}}_{T-1}^{*}\!(k)\), and the optimal control variables, \(\hat{\mathbf {u}}_{T}^{i*}\!(k)\) \(\forall i\!\in \!\{1,\ldots ,n\}\). To derive the latter, in Eq. 51 we replace \(\hat{\mathbf {x}}_T^{*}\!(k)\) by the right-hand side of Eq. 5, and compute the optimal values of \(J^{i*}_T\!(k)\) \(\forall i\!\in \!\{1,\ldots ,n\}\) by minimizing \(J^{i}_T\!(k)\) with respect to \(\mathbf {u}_T^i\!(k)\), i.e.,

Note that, since \(J^{i}_T\!(k)\) is strictly convex with respect to \(\mathbf {u}_T^i\!(k)\) \(\forall i\!\in \!\{1,\ldots ,n\}\), the first-order conditions (Eq. 52) are necessary and sufficient.

Under the assumption that all players act simultaneously, we can derive optimal control variables of the form \(\hat{\mathbf {u}}_{T}^{i*}\!(k)\!=\mathbf {G}_T^{i}\!(k)\hat{\mathbf {x}}_{T-1}^*\!(k)+\mathbf {g}_T^{i}\!(k)\). Plugging these into Eq. 52 we arrive at Eq. 13 and Eq. 14 for \(t=T\) respectively, from which we can compute the feedback matrices, \(\mathbf {G}_T^{i}\!(k)\) and \(\mathbf {g}_T^{i}\!(k)\). The optimal state can then be determined by \(\hat{\mathbf {x}}_{T}^*\!(k)\!=\mathbf {K}_T\!(k)\hat{\mathbf {x}}_{T-1}^*\!(k)+\mathbf {k}_T\!(k)\) (cf. Eq. 17 for \(t=T\)) with \(\mathbf {K}_T\!(k)\) and \(\mathbf {k}_T\!(k)\) given by Eq. 11 and Eq. 12 for \(t=T\) respectively.

For the derivation of period-\((T\!-\!1)\) parameter matrices of the value function, i.e., the Riccati matrices for time period \(T\!-\!1\), we set up the cost-to-go function \(J^{i*}_T\!(k)+J^{i}_{T-1}\!(k)\) and replace \(\hat{\mathbf {x}}_{T}^*\!(k)\) and \(\hat{\mathbf {u}}_{T}^{i*}\!(k)\) by Eq. 17 and Eq. 18 for \(t=T\) respectively.

where the scalar \(\psi _{T-1}\!(k)\) is without any relevance for further calculations since it is the sum of all terms that do not depend on \(\hat{\mathbf {x}}_{T-1}^{*}\!(k)\) and \(\mathbf {u}_{T-1}^{i}\!(k)\). Collecting all terms containing \(\hat{\mathbf {x}}_{T-1}^{*}\!(k)\) we get

and can identify the Riccati matrices for \(T\!-\!1\) by comparing coefficients with Eq. 53. The Riccati matrices are then determined by Eq. 9 and Eq. 10 for \(t=T\!-\!1\) respectively. Then, we minimize the objective function of player \(i\) (\(i=1,\ldots ,n\)), i.e.,

analogously to what was done for period \(T\): In Eq. 53 we replace \(\hat{\mathbf {x}}_{T-1}^{*}\!(k)\) by the linearized system dynamics, \(\mathbf {A}_{T-1}\!(k)\hat{\mathbf {x}}_{T-2}^*\!(k)+\sum \nolimits _{i=1}^n\mathbf {B}_{T-1}^{i}\!(k)\mathbf {u}_{T-1}^{i}\!(k)+\mathbf {c}_{T-1}\!(k)\), compute the expression’s first derivative with respect to \(\mathbf {u}_{T-1}^{i}\!(k)\) \(\forall i\!\in \!\{1,\ldots ,n\}\), and set the derivative equal to zero. A little algebra yields optimal control variables of the form \(\hat{\mathbf {u}}_{T-1}^{i*}\!(k)\!=\mathbf {G}_{T-1}^{i}\!(k)\hat{\mathbf {x}}_{T-2}^*\!(k)+\mathbf {g}_{T-1}^{i}\!(k)\) with \(\mathbf {G}_{T-1}^{i}\!(k)\) and \(\mathbf {g}_{T-1}^{i}\!(k)\) being derived by solving \(2n\) linear matrix equations consisting of Eq. 13 and Eq. 14 \(\forall i\!\in \!\{1,\ldots ,n\}\) (for \(t\!=\!T\!-\!1\)) respectively. The optimal state variable for period \(t\!=\!T\!-\!1\) can, then, be determined by \(\hat{\mathbf {x}}_{T-1}^*\!(k)\!=\mathbf {K}_{T-1}\!(k)\hat{\mathbf {x}}_{T-2}^*\!(k)+\mathbf {k}_{T-1}\!(k)\) (cf. Eq. 17 for \(t\!=\!T\!-\!1\)) with \(\mathbf {K}_{T-1}\!(k)\) and \(\mathbf {k}_{T-1}\!(k)\) given by Eq. 11 and Eq. 12 for \(t\!=\!T\!-\!1\) respectively, with the Riccati matrices being determined by Eq. 9 and Eq. 10 (determined again by comparing coefficients).

The procedure sketched for \(t\!=\!T\) and \(t\!=\!T\!-\!1\) can be extended to period \(t\!=\!T\!-\!2\) and generalized to any other period \(t\!=\!\tau \) (\(\tau \ge 1\)) by induction. The existence of uniquely determined Riccati matrices for all periods \(t\!\in \!\{1,\ldots ,T\}\) of the LQDG, i.e., each player \(i\) seeking to minimize Eq. 1 subject to Eq. 5, can readily be verified according to, e.g. Basar and Olsder (1999), if the penalty matrices for the states are nonnegative definite (which is what we assumed).

To conclude, the LQDG at iteration step \(k\) is solved by starting with the terminal conditions \(\mathbf {P}_T^{i}\!(k)\) and \(\mathbf {p}_T^{i}\!(k)\), and solving the Riccati equations (Eqs. 9 and 10) backward in time. Utilizing both Riccati matrices, \(\mathbf {P}_t^{i}\!(k)\) and \(\mathbf {p}_t^{i}\!(k)\), and feedback matrices, \(\mathbf {G}_t^{i}\!(k)\) and \(\mathbf {g}_t^{i}\!(k)\), computed for all players and for all time periods, i.e., \(\forall i\!\in \!\{1,\ldots ,n\}\) and \(\forall t\!\in \!\{1,\ldots ,T\}\), the \(k\)th iteration of the feedback Nash equilibrium path for the state variable, \(\{\hat{\mathbf {x}}_t^*\!(k)\}_{t=1}^T\), and the \(k\)th iteration of player \(i\)’s equilibrium path for their own control variable, \(\{\hat{\mathbf {u}}_t^{i*}\!(k)\}_{t=1}^T\), are determined by Eq. 17 and Eq. 18 respectively, both being initiated with \(\hat{\mathbf {x}}_{0}^*\!(k)\!=\!\bar{\mathbf {x}}_{0}\) (where \(\mathbf {K}_t\!(k)\) and \(\mathbf {k}_t\!(k)\) are defined by Eq. 11 and Eq. 12 respectively).

Given the values of optimal states and controls, the scalar values of the loss functions can be determined.
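
The backward-forward structure of this procedure can be sketched in Python for a deliberately simplified special case. The sketch assumes zero target paths, no constant term \(\mathbf {c}_t\), no penalties on other players' controls, and time-invariant matrices, so that only the matrices \(\mathbf {P}_t^{i}\), \(\mathbf {G}_t^{i}\) and \(\mathbf {K}_t\) appear and the vectors \(\mathbf {p}_t^{i}\), \(\mathbf {g}_t^{i}\), \(\mathbf {k}_t\) vanish. It is not the OPTGAME implementation; the function names and numerical values are hypothetical.

```python
import numpy as np

def feedback_nash_lq(A, B, Q, R, T):
    """Backward recursion for x_t = A x_{t-1} + sum_i B[i] u_t^i, where player i
    minimizes sum_t 0.5 * (x_t' Q[i] x_t + u_t^i' R[i] u_t^i).

    Returns per-period feedback matrices G_t^i and closed-loop matrices K_t.
    """
    n = len(B)
    cuts = np.cumsum([b.shape[1] for b in B])[:-1]
    P = [np.array(q, dtype=float) for q in Q]   # terminal condition P_T^i = Q^i
    G_path, K_path = [None] * T, [None] * T
    for t in range(T, 0, -1):
        # Stack all players' first-order conditions into one linear system M G = rhs.
        M = np.block([[B[i].T @ P[i] @ B[j] + (R[i] if i == j else 0.0)
                       for j in range(n)] for i in range(n)])
        rhs = np.vstack([-B[i].T @ P[i] @ A for i in range(n)])
        G = np.split(np.linalg.solve(M, rhs), cuts, axis=0)
        K = A + sum(B[i] @ G[i] for i in range(n))   # x_t = K_t x_{t-1}
        G_path[t - 1], K_path[t - 1] = G, K
        # Riccati update obtained by comparing coefficients in x_{t-1}.
        P = [Q[i] + K.T @ P[i] @ K + G[i].T @ R[i] @ G[i] for i in range(n)]
    return G_path, K_path

def simulate(x0, G_path, K_path, Q, R):
    """Forward pass: equilibrium state path and each player's loss."""
    x = np.asarray(x0, dtype=float)
    n = len(Q)
    xs, losses = [], np.zeros(n)
    for G, K in zip(G_path, K_path):
        u = [G[i] @ x for i in range(n)]   # controls respond to the previous state
        x = K @ x
        xs.append(x)
        for i in range(n):
            losses[i] += 0.5 * (x @ Q[i] @ x + u[i] @ R[i] @ u[i])
    return np.array(xs), losses

# Illustrative symmetric two-player scalar game (assumed values).
A = np.array([[1.0]])
B = [np.array([[1.0]]), np.array([[1.0]])]
Q = [np.array([[1.0]]), np.array([[1.0]])]
R = [np.array([[1.0]]), np.array([[1.0]])]
G_path, K_path = feedback_nash_lq(A, B, Q, R, T=2)
xs, losses = simulate([3.0], G_path, K_path, Q, R)
```

Solving the stacked system once per period corresponds to determining all players' feedback matrices simultaneously; the tracking version additionally carries the linear terms through the same recursion.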

Cite this article

Behrens, D.A., Neck, R. Approximating Solutions for Nonlinear Dynamic Tracking Games. Comput Econ 45, 407–433 (2015). https://doi.org/10.1007/s10614-014-9420-4
