Abstract

We revisit the gradient projection method in the framework of nonlinear optimal control problems with bang–bang solutions. We obtain the strong convergence of the iterative sequence of controls and the corresponding trajectories. Moreover, we establish a convergence rate, depending on a constant appearing in the corresponding switching function and prove that this convergence rate estimate is sharp. Some numerical illustrations are reported confirming the theoretical results.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Numerical solution methods for various optimal control problems have been investigated during the last decades [6, 8,9,10,11]. However, in most of the literature, the optimal controls are assumed to be at least Lipschitz continuous. This assumption is rather strong, as whenever the control appears linearly in the problem, the lack of coercivity typically leads to discontinuities of the optimal controls. Recently, optimal control problems with bang–bang solutions attract more attention. Stability and error analysis of bang–bang controls can be found in [14, 26, 32]. Euler discretizations for linear–quadratic optimal control problems with bang–bang solutions were studied in [1, 2, 5, 29]. Higher order schemes for linear and linear–quadratic optimal control problems with bang–bang solutions were developed in [24, 27].

On the other hand, among many traditional solution methods in optimization, projection-type methods are widely applied because of their simplicity and efficiency [13, 15, 31].

Recently, the gradient projection method has been reconsidered for solving general optimal control problems [22, 28]. Under some suitable conditions, it was proved that the control sequence converges weakly to an optimal control and the corresponding trajectory sequence converges strongly to an optimal trajectory. However, no convergence rate result has been established.

In this paper, we study the gradient projection method for optimal control problems with bang–bang solutions. In particular we consider the following problem

subject to

and

Here [0, T] is a fixed time horizon, admissible controls are all measurable functions \(u:[0,T]\rightarrow U\), while \(x(t)\in {\mathbb {R}}^n\) denotes the state of the system at time \(t\in [0,T]\) and the functions \(f:{\mathbb {R}}\times {\mathbb {R}}^n\times {\mathbb {R}}^m\rightarrow {\mathbb {R}}^n, g:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) and \(h:{\mathbb {R}}\times {\mathbb {R}}^n\times {\mathbb {R}}^m\rightarrow {\mathbb {R}}\) are given.

Further we assume (see the next section for precise formulations) that the data are smooth enough, that the problem (1.1)–(1.3) is convex and that for the (unique) optimal control \(u^*\) the objective function fulfills a certain growth condition. In particular we show that this condition is satisfied in the bang–bang case if each component of the associated switching function satisfies a growth condition as given in [25, 29].

Under these assumptions, we prove that the control sequence actually converges strongly to the solution. Moreover, the convergence rates for both controls and states are provided, depending on the constant appearing in the growth condition for the switching function. An example is analysed showing that the estimation for these convergence rates is sharp.

The paper is organized as follows: In Sect. 2, we specify the assumptions we use and recall some facts which will be useful in the sequel. Section 3 discusses the convergence properties of the gradient projection method. Some numerical examples of linear–quadratic type are reported in Sect. 4 illustrating the results in the previous section. Some final remarks are given in the last section.

2 Preliminaries

In this section, we will clarify the assumptions used and recall some important facts which are necessary to establish our result.

By \({\mathcal {U}}:=L^2([0,T],U)\) we denote the set of all admissible controls and if not stated otherwise \(\Vert \cdot \Vert \) denotes the \(L^2\)-norm. The first two assumptions guarantee that the problem (1.1)–(1.3) is meaningful.

Assumption A1

For any given control \(u\in {\mathcal {U}}\) there is a unique solution \(x=x(u)\) of (1.2) on [0, T].

Assumption A2

The problem (1.1)–(1.3) has a solution \((x^*,u^*)\).

Now recall the Hamiltonian of (1.1)–(1.3) as

Then by the Pontryagin maximum principle there is an absolutely continuous function \(p^*\) such that \((x^*,u^*,p^*)\) solves the adjoint equation

and for every \(u\in U\)

We define \(J:{\mathcal {U}}\rightarrow {\mathbb {R}}\) via \(J(u):=\psi (x(u),u)\), where x(u) is the solution (1.2). Then we have the following useful formula for the gradient of J (see, e.g. [22, 31]).

where x and p are the unique solution of (1.2) and (2.1) depending on \(u\in {\mathcal {U}}\).

Assumption A3

The objective function J is continuously differentiable on \({\mathcal {U}}\) with Lipschitz derivative.

We denote by L the Lipschitz modulus of the gradient \(\nabla J\) of J and write \(J^*:=J(u^*)\) for its optimal value. The following result is well known (see e.g. [23, Lemma 1.30]).

Lemma 2.1

Suppose that A3 is fulfilled. Then for every \(u,v \in {\mathcal {U}}\) the following estimation holds

Assumptions A1–A3 are common in optimal control. For example the following two Assumptions B1–B2 imply A1–A3 (cf. [22])

Assumption B1

The functions f and h are of the form \(f(t,x,u)=f_0(x)+f_1(x)u\) and \(h(t,x,u)=h_0(x)+\langle h_1(x),u\rangle \) respectively, where \(f_0:{\mathbb {R}}^n\rightarrow {\mathbb {R}}^n, f_1:{\mathbb {R}}^n\rightarrow {\mathbb {R}}^{n\times m}, h_0:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) and \(h_1:{\mathbb {R}}^n\rightarrow {\mathbb {R}}^m\) are twice continuously differentiable.

Assumption B2

There exists \(c\ge 0\) such that for every \(x\in {\mathbb {R}}^n\) and \(u\in U\):

Additionally we assume the following.

Assumption A4

The objective function J is convex.

Note that if the set \({\mathcal {F}}\) of admissible pairs is convex this assumption is equivalent to the statement that the function \(\psi \) is convex on \({\mathcal {F}}\). In particular this is the case if f is affine (i.e. f is of the form \(f(t,x,u)=A(t)x+B(t)u+d(t)\)) as in [25, 29].

Further we will assume a growth condition for J that is similar to (4.7) in [3].

Assumption A5

For a solution \(u^*\) of (1.1)–(1.3) there are constants \(\beta >0\) and \(\theta \ge 0\) such that for every \(u\in {\mathcal {U}}\) we have

Note that in particular A5 implies that the solution \(u^*\) is unique.

Remark 2.2

For coercive optimal control problems (in the sense of [12]) Assumptions A1–A4 are fulfilled as well as A5 for \(\theta =0\). In these problems the objective function J however is even strongly convex and therefore one can apply known results (e.g. [21, Theorem 2.1.15]) directly to show linear convergence of the gradient projection method in this case.

In the following we will show that Assumption A5 is fulfilled for bang–bang controls with no singular arcs. We recall that in the case of bang–bang controls the function \(\sigma ^*:=H_u(\cdot ,x^*,u^*,p^*)\) is called switching function corresponding to the triple \((x^*,u^*,p^*)\). For every \(j\in \{1,\ldots ,m\}\) denote by \(\sigma ^*_j\) its j-th component. The following assumption says that the switching function \(\sigma ^*\) satisfies a growth condition around the switching points, which implies that \(u^*\) is strictly bang–bang.

Assumption B3

There exist real numbers \(\theta ,\alpha ,\tau >0\) such that for all \(j\in \{1,\ldots ,m\}\) and \(s\in [0,T]\) with \(\sigma ^*_j(s)=0\) we have

Assumption B3 plays the main role in the study of regularity, stability and error analysis of discretization techniques for optimal control problems with bang–bang solutions. Many variations of this assumption are used in the literature about bang–bang controls. To our knowledge the first assumption of this type was introduced by Felgenhauer [14] for continuously differentiable switching functions with \(\theta =1\) to study the stability of bang–bang controls. Alt et al. [1, 2, 4] used a slightly stronger version of B3 with \(\theta =1\), that additionally excludes the endpoints 0 and T as zeros of the switching function, to investigate the error bound for Euler approximation of linear–quadratic optimal control problems with bang–bang solutions. Quincampoix and Veliov [26] used a rank condition which implies B3 (including cases where \(\theta \ne 1\)) to obtain the metric regularity and stability of Mayer problems for linear systems. Seydenschwanz [29], Preininger et al. [25], Pietrus, Scarinci and Veliov [24, 27] used this assumption in the study of metric (sub)-regularity, stability and error estimate for discretized schemes of linear–quadratic optimal control problems with bang–bang solutions.

To prove that B3 implies A5 we need the following lemma, which is a simplified version of [29, Lemma 1.3] (see also, [1, Lemma 4.1]).

Lemma 2.3

Let Assumptions A1–A2 be fulfilled and let \(u^*\) be a solution of (1.1)–(1.3) such that B3 is fulfilled for some \(\theta > 0\). Then there exists constants \(\beta >0\) such that for any feasible \(u\in {\mathcal {U}}\) it holds

where \(\Vert \cdot \Vert _1\) is the \(L^1\)-norm.

Proposition 2.4

Let Assumptions A1, A2 and A4 be fulfilled and let \(u^*\) be a solution of (1.1)–(1.3) such that B3 is fulfilled. Then A5 holds.

Proof

From Assumption A4 and (2.2) we obtain

Since \(\Vert \cdot \Vert ^2 \le C\Vert \cdot \Vert _1 \) on \({\mathcal {U}}\) for some constant \(C>0\), from Lemma 2.3 there exists \(\beta >0\) such that

Combining (2.3) and (2.4) we obtain A5. \(\square \)

To define the gradient projection method in the next chapter we will need the following notion of a projection. For each \(u\in {\mathcal {U}}\), there exists a unique point in \({\mathcal {U}}\) (see [17, p. 8]), denoted by \(P_{{\mathcal {U}}}(u)\), such that

It is well known [17, Theorem 2.3] that the projection operator can be characterized by

Further to establish the convergence rate of the gradient projection method, we will need the following lemmas.

Lemma 2.5

[18, Lemma 7.1] Let \(\alpha >0\) and let \(\{\delta _k\}_{k=0}^{\infty }\) and \(\{s_k\}_{k=0}^{\infty }\) be two sequences of positive numbers satisfying the conditions

Then there is a number \(\gamma >0\) such that

In particular, we have \(\lim _{k\rightarrow \infty }s_k=0\) whenever \(\sum _{k=0}^{\infty }\delta _k=\infty .\)

Lemma 2.6

[7, Lemma 3.2] Let \(\left\{ \alpha _{k}\right\} , \left\{ s_{k}\right\} \) be sequences in \({\mathbb {R}}_{+}\) satisfying

the sequence \(\left\{ \alpha _{k}\right\} \) is non-summable and the sequence \(\left\{ s_{k}\right\} \) is decreasing. Then

where the o-notation means that \(s_k = o(1/t_k)\) if and only if \( \lim _{k \rightarrow \infty } s_k t_k = 0\).

3 Convergence analysis

We consider the following Gradient Projection Method (GPM):

Algorithm GPM

-

Step 0: Choose a sequence \(\{\lambda _k\}\) of positive real numbers and an initial control \(u_0\in {\mathcal {U}}\). Set \(k=0\).

-

Step 1: Compute the gradient \(\nabla J(u_k)(t):=f_u(t,x_k(t),u_k(t))^\top p_k(t)+ h_u(t,x_k(t),u_k(t))^\top \) by solving the following differential equations

$$\begin{aligned} \dot{x}_k(t)= & {} f(t,x_k(t),u_k(t)), \quad x_k(0)=x_0;\nonumber \\ \dot{p}_k(t)= & {} -f_x(t,x_k(t),u_k(t))^\top p_k(t)- h_x(t,x_k(t),u_k(t))^\top , \nonumber \\ p_k(T)= & {} \nabla g(x_k(T)). \end{aligned}$$(3.1) -

Step 2: Compute

$$\begin{aligned} {u}_{k+1} = P_{\mathcal {U}}(u_k-\lambda _k \nabla J(u_k)). \end{aligned}$$(3.2) -

Step 3: If \(u_{k+1}=u_k\) then Stop. Otherwise replace k by \(k+1\) and go to Step 1.

It is known (see e.g. [21, Theorem 2.1.14]) that for J continuously differentiable with Lipschitz derivative the gradient (projection) method has the convergence rate \(O(\frac{1}{k})\) in terms of the objective value. I.e. that

For the strongly convex objective function, it is known that the iterative sequence \(\left\{ u_k \right\} \) converges linearly to the unique solution. However, it is not possible to show convergence for the iterative sequence \(\left\{ u_k \right\} \) for the general convex case. Here, thanks to Assumptions A1–A5, we are able to prove that the iterative sequence \(\left\{ u_k \right\} \) generated by the GPM converges strongly to an optimal control. Moreover, the convergence rate is established, depending on the constants \(\theta \) appearing in Assumption A5.

The following estimate will be used repeatedly in our convergence analysis.

Proposition 3.1

Let Assumptions A1–A4 be satisfied, let \(u^*\) be a solution of (1.1)–(1.3) such that Assumption A5 is fulfilled with some \(\theta >0\) and \(\beta >0\). Then for all \(k \in {\mathbb {N}},\) the following estimate holds

Proof

Since \(u_{k+1}=P_{{\mathcal {U}}}(u_k-\lambda _k \nabla J(u_k))\), it follows from (2.5) that

Substituting \(u=u^*\in {\mathcal {U}}\) into the latter inequality yields

or equivalently

This implies that

Since J has Lipschitz derivative, we have from Lemma 2.1 that

Substituting \(u=u_k\) and \(v=u_{k+1}\) into the last inequality yields

Moreover, since J is convex, we obtain

Combining (3.6), (3.7) and (3.8) gives

Using Assumption A5 we obtain

which is (3.4). \(\square \)

We are now in the position to establish the strong convergence and the convergence rate of \(\left\{ u_k \right\} \) to a solution.

Theorem 3.2

Let Assumptions A1–A4 be satisfied, let \(u^*\) be a solution of (1.1)–(1.3) such that Assumption A5 is fulfilled with some \(\theta >0\). Let the sequence \(\left\{ \lambda _{k} \right\} \) be chosen such that

Then we have

-

(i)

\(\Vert u_{k}-u^*\Vert ^2 \le \eta k^{-\frac{1}{\theta }},\) for all k, where \(\eta >0\) is a constant;

-

(ii)

The sequence \(\{J(u_k)\}\) is monotonically decreasing. Moreover \( \sum _{k=0}^{\infty } \left( J(u_k)\right. \left. -J(u^*)\right) < +\infty .\)

Proof

We first prove that \(\{u_{k}\}\) converges strongly to \(u^*\). From (3.4) and \(0 < \lambda _{\min } \le \lambda _{k} \le \frac{1}{L}\), the sequence \(\left\{ \Vert u_{k}-u^*\Vert \right\} \) is decreasing and bounded from below by 0, and therefore it converges. Moreover, since

we conclude that \(\left\{ \Vert u_{k}-u^*\Vert \right\} \) converges to 0, which means \(\{u_{k}\}\) converges strongly to \(u^*\).

Now we can apply Lemma 2.5 for \(s_k= \Vert u_{k}-u^*\Vert ^2, \alpha =\theta \) and \(\delta _k= 2\lambda _{\min } \beta \) to obtain the convergence rate (i) for \(\left\{ \Vert u_{k}-u^*\Vert \right\} \).

Substituting \(u=u_k\) in (3.5) implies

Combining (3.7) and (3.11) we get

Hence the sequence \(\{J(u_k)\}\) is monotonically decreasing. Now from (3.9) and \(0 < \lambda _{\min } \le \lambda _{k} \le \frac{1}{L}\) we have

Summing this inequality from 0 to \(i-1\) we obtain

Finally, taking the limit as \(i \rightarrow \infty \), we obtain (ii). \(\square \)

Remark 3.3

From (ii) in Theorem 3.2, we can conclude that \(J(u_{k}) -J(u^*)=o(\frac{1}{k})\), which significantly improves the error estimate \(J(u_{k}) -J(u^*)=O(\frac{1}{k})\) in (3.3).

The following example illustrates that the estimation (i) in Theorem 3.2 cannot be improved when \(\lambda _k\) is bounded from below by a constant \(\lambda _{\min }\).

Example 3.4

Consider the following optimal control problem

where \(\sigma \) is any continuous function fulfilling Assumption B3. Then \(\nabla J(u)(t)=\sigma (t)\) is independent of u and the optimal control is given by \(u^*(t)=-sgn(\sigma (t))\). Starting the GPM with \(u_0\equiv 0\) and \(\lambda _k=\lambda \) for some \(\lambda \in {\mathbb {R}}^+\) we get

In the special case \(\sigma (t)=t^\theta \), we therefore have \(u_k(t)=\max \{-1,-k\lambda t^\theta \}.\) This implies that for \(k>\frac{1}{\lambda T^{\theta }}\), we have

For the objective value we get

which is stronger than (ii). It remains unknown whether in the general case the estimation (ii) can be improved to an estimation similar to (3.14).

Using the stronger Assumptions B1–B2 the convergence rate of the corresponding trajectories can be obtained as a corollary of Theorem 3.2 and [22, Lemma 2].

Corollary 3.5

Let Assumptions B1, B2 and A4 be satisfied and let \((x^*,u^*)\) be a solution of (1.1)–(1.3) such that Assumption A5 is fulfilled with some \(\theta >0\). Further suppose that \(\lambda _k\in [\lambda _{\min },1/L] \subset (0,1/L]\). Then the sequence \(\{x_{k}(t)\}\) of trajectories converges strongly to the solution \(x^*\). Moreover, there exists a positive constant C such that for all k it holds,

where \(\Vert x(\cdot )\Vert _c=\max _{t\in [0,T]}|x(t)|\).

When the Lipschitz modulus L is difficult to estimate, one can consider the non-summable diminishing stepsizes as follow.

Theorem 3.6

Let Assumptions A1–A4 be satisfied, let \(u^*\) be a solution of (1.1)–(1.3) such that Assumption A5 is fulfilled with some \(\theta >0\). Let the sequence \(\left\{ \lambda _{k} \right\} \) be chosen such that

Then the sequence \(\{u_{k}\}\) converges strongly to \(u^*\). Moreover there exists \(N>0\) such that for all \(k \ge N\), it holds

-

(i)

\( \Vert u_{k}-u^*\Vert ^2 \le C\mu _k^{-\frac{1}{\theta }}\)

-

(ii)

\(J(u_k)-J(u^*)=o\left( \frac{1}{\mu _k}\right) \),

where \(\mu _k:=\sum _{i=N}^{k-1}\lambda _i\) and C is a constant.

Proof

Let \(\beta >0\) be as in Proposition 3.1. Since \(\lim _{k \rightarrow \infty } \lambda _{k} =0\), there exists \(N>0\) such that for all \(k\ge N\) we have \(1-\lambda _k L > 0\) and \(2\lambda _k\beta <1\). From (3.4) we have that \(\left\{ \Vert u_{k}-u^*\Vert \right\} \) is decreasing, therefore it converges. Moreover

Using Lemma 2.5 with \(s_k=\Vert u_{k+N}-u^*\Vert ^2, \alpha =\theta \) and \(\delta _k:=2\lambda _{k+N}\beta \) we get that there exists \(\gamma >0\) such that

which shows (i).

From (3.9), we have

leading to

Applying Lemma 2.6 with \(\alpha _k=\lambda _{N+k}\) and \(s_k=J(u_{N+k})-J(u^*)\) we obtain (ii). \(\square \)

Using the same example as above we can again show that the estimation (i) cannot be improved.

Example 3.7

Consider the problem (3.13) with \(\sigma (t):=t^\theta \) again. As before we use GPM with \(u_0\equiv 0\) but now with non-constant \(\lambda _k\). Denoting \(\mu _k:=\sum _{i=0}^{k-1}\lambda _i\) we get \(u_k(t)=\max \{-1,-\mu _k t^\theta \}.\) Hence for k big enough such that \(\mu _k>\frac{1}{T^\theta }\) we have

and

Similar to Corollary 3.5 we obtain

Corollary 3.8

Let Assumptions B1, B2 and A4 be satisfied and let \((x^*,u^*)\) be a solution of (1.1)–(1.3) such that Assumption A5 is fulfilled with some \(\theta >0\). Further let the sequence \(\left\{ \lambda _{k} \right\} \) be chosen such that

Then the sequence \(\{x_{k}(t)\}\) of trajectories converges strongly to the solution \(x^*\). Moreover, there exists a positive constant C such that for all k it holds,

4 Numerical illustrations

In this section, we present some numerical experiments for a class of optimal control problems with bang–bang solutions namely linear–quadratic problem, described as follow.

where

Here we use the classical Euler discretization where the error estimate can be found in [1, 2, 5]. We choose a natural number N and define the mesh size \(h:=T/N\). Since the optimal control is assumed to be bang–bang, we identify the discretized control \(u^N:=(u_0, u_1,\ldots ,u_{N-1})\) with its piece-wise constant extension:

Moreover, we identify the discretized state \(x^N:=(x_0, x_1,\ldots ,x_{N})\) and costate \(p^N:=(p_0,p_1,\ldots ,p_N)\) with its piece-wise linear interpolations

and

The Euler discretization of (1.1) is given by

where \(\psi _N\) is the cost function defined by

Observe that (\(P_N\)) is a quadratic optimization problem over a polyhedral convex set, where the gradient projection method converges linearly, see e.g., [30]. This means that for each N, there exists \(\rho _N \in (0,1)\) such that

In the following examples, we will consider various values of N which suggest that

This will confirm the sublinear rate obtained in Theorem 3.2. The codes are implemented in Matlab. We perform all computations on a windows desktop with an Intel(R) Core(TM) i7-2600 CPU at 3.4 GHz and 8.00 GB of memory. Since \(\nabla J\) is linear in u, one can roughly estimate its Lipschitz constant by \(L=\Vert \nabla J(u_0)\Vert /\Vert u_0\Vert \). We choose starting control \(u_0(t)=1 \, \forall t \in [0,T]\) and stepsize \(\lambda _k =\lambda < 1/L\). The stopping condition is \(\Vert u^N_k-u^N_{k-1}\Vert \le \epsilon \), where \(\epsilon =10^{-10}\).

The following example is taken from [27].

Example 4.1

Here, with appropriate values of a and b, there is a unique optimal solution \(u^*\) with a switch from \(-~1\) to 1 at time \(\tau \), which is a solution of the equation

As in [27], we choose \(a=1, b=0.1\), then \(\tau =0.492487520\) is a simple zero of the switching function. Therefore, \(\theta =1\) and the exact optimal control is

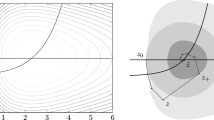

The convergence results for Example 4.1 with some different values of N are reported in Table 1. We can see that when N increases, \(\rho _N\) also increases and approaches 1. This means that we can only guarantee the sublinear convergence for the continuous problem. Figure 1 displays the optimal control and the optimal states when the discretized size \(N=50\).

The following second example is taken from [1, Example 6.1]

Example 4.2

The exact optimal control is given by

where \(\tau = 3.5174292\).

The convergence results for Example 4.2 with some different values of N are reported in Table 2. Again, we see that when N increases, \(\rho _N\) also increases and approaches 1. Figure 2 displays the optimal control and the optimal states for \(N=50\).

Optimal control (left) and optimal states (right) for Example 4.2 when \(N=50\)

In the next example, we consider a problem in which Assumption A5 is satisfied for \(\theta \not =1\) (see also [27, 29]).

Example 4.3

Here we present experiments with a family of problems satisfying Assumption A5 with various values of \(\theta \), given in [29]. Below, the time-interval is [0, 1], the dimension of the state is \(n=\theta +1\) and the dynamics system depends on parameters \(s_j\):

Approximate optimal controls after 1000 iterations when \(\theta =2\) (left) and \(\theta =3\) (right) for Example 4.3 with \(N=500\)

For any natural number \(\theta \), the values of the parameters \(s_j\) are chosen as

Then Assumption A5 is satisfied with the constant \(\theta \) [29] and exact optimal control is given by

if \(\theta \) is odd, and \(u^*(t)=-1\) if \(\theta \) is even. The convergence results for Example 4.3 when \(\theta =2,3\) with some different values of N are reported in Table 3. Figure 3 displays the approximate optimal controls after 1000 iterations for \(N=500\). It seems like the optimal control has \(\theta \) switching points. This is to be expected since \(\sigma ^*\) has a zero of order \(\theta \) at 1 / 2.

5 Concluding remarks

Note that the main results in Theorems 3.2 and 3.6 use Assumption A5 which is more general than just the bang–bang case. For example Assumption A5 is also satisfied in the strongly convex case, where even better convergence results are known. Further it would be interesting to see under what assumptions our results still apply in the case of singular arcs. This is challenging due to the fact that currently there is no condition similar to the bang–bang Assumption B3 that ensures Assumption A5 and therefore remains as a topic for future research.

References

Alt, W., Baier, R., Gerdts, M., Lempio, F.: Error bounds for Euler approximation of linear–quadratic control problems with bang–bang solutions. Numer. Algebra Control Optim. 2(3), 547–570 (2012)

Alt, W., Baier, R., Gerdts, M., Lempio, F.: Approximations of linear control problems with bang–bang solutions. Optimization 62(1), 9–32 (2013)

Alt, W., Felgenhauer, U., Seydenschwanz, M.: Euler discretization for a class of nonlinear optimal control problems with control appearing linearly. Comput. Optim. Appl. (2017). https://doi.org/10.1007/s10589-017-9969-7

Alt, W., Schneider, C., Seydenschwanz, M.: An implicit discretization scheme for linear–quadratic control problems with bangbang solutions. Optim. Methods Softw. 29(3), 535–560 (2014)

Alt, W., Schneider, C., Seydenschwanz, M.: Regularization and implicit Euler discretization of linear–quadratic optimal control problems with bang–bang solutions. Appl. Math. Comput. 287–288, 104–124 (2016)

Bonnans, J.F., Festa, A.: Error estimates for the Euler discretization of an optimal control problem with first-order state constraints. SIAM J. Numer. Anal. 55(2), 445–471 (2017)

Dong, Y.: Comments on the proximal point algorithm revisited. J. Optim. Theory Appl. 166(1), 343–349 (2015)

Dontchev, A.L.: An a priori estimate for discrete approximations in nonlinear optimal control. SIAM J. Control Optim. 34(4), 1315–1328 (1996)

Dontchev, A.L., Hager, W.W.: Lipschitzian stability in nonlinear control and optimization. SIAM J. Control Optim. 31(3), 569–603 (1993)

Dontchev, A.L., Hager, W.W., Malanowski, K.: Error bounds for Euler approximation of a state and control constrained optimal control problem. Numer. Funct. Anal. Optim. 21(5–6), 653–682 (2000)

Dontchev, A.L., Hager, W.W., Veliov, V.M.: Second-order Runge–Kutta approximations in control constrained optimal control. SIAM J. Numer. Anal. 38(1), 202–226 (2000)

Dontchev, A.L., Hager, W.W., Veliov, V.M.: Uniform convergence and mesh independence of Newton’s method for discretized variational problems. SIAM J. Control Optim. 39(3), 961–980 (2000)

Dunn, J.C.: Global and asymptotic convergence rate estimates for a class of projected gradient processes. SIAM J. Control Optim. 19(3), 368–400 (1981)

Felgenhauer, U.: On stability of bang–bang type controls. SIAM J. Control Optim. 41(6), 1843–1867 (2003)

Kelley, C.T., Sachs, E.W.: Mesh independence of the gradient projection method for optimal control problems. SIAM J. Control Optim. 30(2), 477–493 (1991)

Khoroshilova, E.V.: Extragradient-type method for optimal control problem with linear constraints and convex objective function. Optim. Lett. 7, 1193–1214 (2012)

Kinderlehrer, D., Stampacchia, G.: An Introduction to Variational Inequalities and Their Applications. Academic Press, New York (1980)

Li, G., Mordukhovich, B.S.: Hölder metric subregularity with applications to proximal point method. SIAM J. Optim. 22(4), 1655–1684 (2012)

Luo, Z.Q., Tseng, P.: Error bounds and convergence analysis of feasible descent methods: a general approach. Ann. Oper. Res. 46(1), 157–178 (1993)

Luo, Z.Q., Tseng, P.: Error bound and convergence analysis of matrix splitting algorithms for the affine variational inequality problem. SIAM J. Optim. 2(1), 43–54 (1992)

Nesterov, Y.: Introductory Lectures on Convex Optimization. Springer, Berlin (2004)

Nikol’skii, M.S.: Convergence of the gradient projection method in optimal control problems. Comput. Math. Model. 18(2), 148–156 (2007)

Peypouquet, J.: Convex Optimization in Normed Spaces: Theory, Methods and Examples. Springer, Berlin (2015)

Pietrus, A., Scarinci, T., Veliov, V.M.: High order discrete approximations to Mayer’s problems for linear systems. SIAM J. Control Optim. 56(1), 102–119 (2018)

Preininger, J., Scarinci, T., Veliov, V.M.: Metric regularity properties in bang–bang type linear–quadratic optimal control problems. In: Research Report 2017-07, ORCOS, TU Wien http://orcos.tuwien.ac.at/fileadmin/t/orcos/Research_Reports/2017-07.pdf (2017)

Quincampoix, M., Veliov, V.M.: Metric regularity and stability of optimal control problems for linear systems. SIAM J. Control Optim. 51(5), 4118–4137 (2013)

Scarinci, T., Veliov, V.M.: Higher-order numerical schemes for linear quadratic problems with bang-bang controls. Comput. Optim. Appl. (2017). https://doi.org/10.1007/s10589-017-9948-z

Scheiber, E.: On the gradient method applied to optimal control problem. Bull. Transilv. Univ. Brasov 7(56), 139–148 (2014)

Seydenschwanz, M.: Convergence results for the discrete regularization of linear–quadratic control problems with bang–bang solutions. Comput. Optim. Appl. 61(3), 731–760 (2015)

Tuan, H.N.: Linear convergence of a type of iterative sequences in nonconvex quadratic programming. J. Math. Anal. Appl. 423(2), 1311–1319 (2015)

Vasil’ev, F.P.: Optimization Methods. Factorial Press, Moscow (2002). (in Russian)

Veliov, V.M.: Error analysis of discrete approximation to bang–bang optimal control problems: the linear case. Control Cybern. 34(3), 967–982 (2005)

Acknowledgements

Open access funding provided by Austrian Science Fund (FWF). The authors thank Vladimir Veliov for introducing them to the topic and for fruitful discussions. They are also thankful to Ursula Felgenhauer and the two anonymous referees for constructive comments which helped improving the presentation of the paper significantly.

Author information

Authors and Affiliations

Corresponding author

Additional information

This research is supported by the Austrian Science Foundation (FWF) under Grant No. P26640-N25.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Preininger, J., Vuong, P.T. On the convergence of the gradient projection method for convex optimal control problems with bang–bang solutions. Comput Optim Appl 70, 221–238 (2018). https://doi.org/10.1007/s10589-018-9981-6

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10589-018-9981-6