Abstract

During the development of the Third U.S. National Climate Assessment, an indicators system was recommended as a foundational product to support a sustained assessment process (Buizer et al. 2013). The development of this system, which we call the National Climate Indicators System (NCIS), has been an important early product of a sustained assessment process. In this paper, we describe the scoping and development of recommendations and prototypes for the NCIS, with the expectation that the process and lessons learned will be useful to others developing suites of indicators. Key factors of initial success are detailed, as well as a robust vision and decision criteria for future development; we also provide suggestions for voluntary support of the broader scientific community, and for funding priorities, including a research team to coordinate and prototype the indicators, system, and process. Moving forward, sufficient coordination and scientific expertise to implement and maintain the NCIS, as well as creation of a structure for scientific input from the broader community, will be crucial to its success.

1 Introduction

The Third U.S. National Climate Assessment (NCA3; NCA when referring to the effort more broadly) developed new modes of conceptualizing its engagement processes and of integrating evolving scientific insights beyond the quadrennial assessment reports (Moser et al. 2015, this issue). Thus, over the past several years there have been calls for developing a climate indicators system as part of the NCA (Buizer et al. 2013; Janetos et al. 2012). A National Research Council report (2009a) specifically suggested the U.S. Global Change Research Program (USGCRP) should consider developing a report card or climate change indices that could communicate complex scientific information effectively to a variety of audiences. Such indicators were expected to have utility beyond the NCA; for example, Victor and Kennel (2014) called for a broader range of climate indicators and the National Research Council (2013, 2015) recommended the next strategies for national sustainability to include explicit recognition of decision-making and adaptation and the use of metrics and indicators to evaluate progress.

Indicators are reference tools that can be used to regularly update status, rates of change, or trends of a phenomenon using measured data, modeled data, or an index to assess or advance scientific understanding, to communicate, to inform decision-making, or to denote progress in achieving management objectives. Indicators differ from data visualization tools in that they are systematically updated and comparative to a baseline of change.
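The definition above (regular updates, a baseline for comparison, and a status or trend) can be illustrated with a minimal sketch. The function names, the baseline period, and the annual values below are hypothetical and chosen only for illustration; they are not NCIS data or methods.

```python
from statistics import mean


def baseline_anomalies(series, baseline_years):
    """Return {year: value minus baseline mean} for every year in the series."""
    start, end = baseline_years
    baseline = mean(v for y, v in series.items() if start <= y <= end)
    return {y: v - baseline for y, v in sorted(series.items())}


def linear_trend(points):
    """Ordinary least-squares slope (units per year) of a {year: value} mapping."""
    years = list(points)
    values = [points[y] for y in years]
    y_bar, v_bar = mean(years), mean(values)
    num = sum((y - y_bar) * (v - v_bar) for y, v in zip(years, values))
    den = sum((y - y_bar) ** 2 for y in years)
    return num / den


# Hypothetical annual values, for illustration only (not real observations).
observations = {2001: 10.0, 2002: 10.2, 2003: 10.1, 2004: 10.5, 2005: 10.7}

# Status relative to an assumed 2001-2003 baseline, plus the trend in that status.
anomalies = baseline_anomalies(observations, baseline_years=(2001, 2003))
trend = linear_trend(anomalies)
```

Updating the indicator amounts to adding the newest year to `observations` and recomputing, which is what distinguishes it from a one-time data visualization.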

Indicators have long been used to describe status and assess progress for key phenomena that are measurable directly or indirectly (e.g. through the use of proxies). Some economic and health indicators have been developed and used consistently and effectively across locations, and refined strategically over multiple decades (Cobb and Rixford 1998; Lippman 2006), but not all efforts have been successful (Innes 1990) or institutionalized (Ogburn 1933). Drawing on those experiences, more recent efforts have called for market and non-market valuation of environmental assets (National Research Council 1999) and the development of national ecological indicators (National Research Council 2000) that led to The State of the Nation’s Ecosystems effort developed by the Heinz Center (2008). More recently, the National Research Council initiated an effort to consider sustainability metrics (2015). Though physical climate indicators are already presented through a range of Federal agency websites (e.g., https://www.climate.gov/maps-data), a unified effort that could support a sustained NCA process did not previously exist.

In considering establishment of an indicators system during the development of the NCA3, the USGCRP hosted three indicators workshops focused on ecological indicators (USGCRP 2010), physical indicators (USGCRP 2011b), and societal indicators (USGCRP 2011a) (Fig. 1). All the workshop outcomes supported the idea of an indicator system and noted the need for 1) developing processes for a sustained indicators effort, 2) leveraging existing data and indicator products, and 3) including stakeholder perspectives to assure utility for user communities (Online Resource 1: Outcomes of NCA Workshops focused on Indicators).

The workshops started a multi-year process (Fig. 1) to develop recommendations and create a prototype of an interagency indicators system, which we call the National Climate Indicators System (NCIS). The National Climate Assessment and Development Advisory Committee (NCADAC), the Federal Advisory Committee established for NCA3, empaneled an Indicators Working Group (hereafter IWG) in 2011 (Fig. 1; USGCRP 2015), and asked it to develop recommendations (Janetos et al. 2012; Buizer et al. 2013). The IWG included about 25 members from the NCADAC, Federal agencies, academia, and the private sector. It then created 13 Technical Teams of producers and users of information to provide recommendations on specific topics. In addition, an Indicators Research Team, which included both funded and volunteer scientists, coordinated the IWG and Technical Teams (see section 3.1) and developed prototypes in support of the recommendations.

The aspirational goal for the NCIS was that the recommendations of the IWG would be implemented, expanded, and sustained over the long-term to support the NCA and information needs of its users. Additionally, the NCIS was envisioned to be synergistic with Federal climate priorities, including the USGCRP strategic plan and priorities (USGCRP 2012) and later the President’s Climate Action Plan (2013); Climate Resilience Toolkit (https://toolkit.climate.gov/); and Executive Orders 13653 (November 2013), 13514 (October 2009), and 13642 (May 2013).

The objective of this paper is to describe the process of visioning, prototyping, and implementing the recommendations for an NCIS and to present lessons learned. Given the recent release of pilot indicators by the USGCRP (www.globalchange.gov/explore/indicators), we discuss opportunities for further development of the NCIS and the research needed for attaining the IWG’s vision. The authors of this paper do not claim to be impartial; Janetos and Kenney co-led the NCADAC IWG, Kenney led the Indicators Research Team, and Lough facilitated the review of NCIS recommendations in her role at the USGCRP National Coordination Office. In this article, the term “we” is used to reflect the perspective of the authors of this paper; in all other instances we use the name of the scientific or agency group to minimize confusion about different actors’ roles.

2 Vision and decision criteria

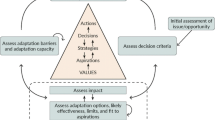

The IWG initially produced NCIS vision and decision criteria (USGCRP 2015), in which the vision outlined a system of physical, natural, and societal indicators that communicate and inform decisions about key aspects of the physical climate, climate impacts, vulnerabilities, and preparedness (Fig. 2). Central to this vision was a set of indicators useful for ongoing tracking of critical changes and impacts important to the NCA as well as indicators that could support adaptation and mitigation decisions by a range of stakeholders. These indicators could be tracked as a part of ongoing assessment, with adjustments made as necessary to adapt to changing conditions and understanding (for expanded discussion see Janetos et al. (2012) and Kenney et al. (2014)).

Fig. 2 Conceptual model recommended for the National Climate Indicators System (adapted from Kenney et al. 2014)

The decision criteria operationalized IWG’s vision by describing the types and qualities of indicators that could be considered for inclusion in the system (Online Resource 2: Recommended Decision Criteria for the NCIS; USGCRP 2015). Key criteria include indicators that: are scientifically defensible and useful, support the conceptual framework (Fig. 2), are nationally important (not necessarily of national scale), and encompass lagging, coincident, and leading indicators.

These criteria provided robust foundations for the subsequent recommendation and development phases. Careful evaluation and consensus about the overall objectives, purpose, and criteria for inclusion are essential in an indicator effort to ensure that any competing and incompatible goals are worked through before developing and choosing indicators.

3 Recommendations and development processes

Moving from the overall goals to building the system, the IWG developed a process for making recommendations and creating prototypes that included establishing Technical Teams, creating conceptual models, developing recommendations and research priorities, reviewing the recommendations and selections, and selecting indicators for the NCIS pilot (Fig. 3).

3.1 Creation of technical teams and selection of experts

To develop the NCIS, the IWG created Technical Teams to provide topical recommendations, loosely structured on the sectors and systems represented in the NCA3 chapters (see Table 1). The 13 Technical Teams were composed of physical, natural, and social scientists and practitioners who had a broad understanding of the multi-stressor climate changes or impacts on the system/sector, experience with existing sustained datasets and indicators, and an understanding of the information needs of stakeholders. In total, the members of the 13 Technical Teams and IWG included over 200 scientists and practitioners who were employed in the Federal agencies (nine of the 13 USGCRP agencies), academia, non-governmental organizations, and the private sector. This range of expertise provided the perspectives needed to meet NCIS goals, to have broad buy-in from the scientific community and the Federal agencies, and to support the goals of broad engagement within the NCA (Cloyd et al. 2015 this issue).

To ensure that the Technical Team recommendations had a strong theoretical foundation, the IWG charged them with producing for each topic a conceptual model, recommendations for indicators drawn from those models, and research priorities. The Indicators Research Team served a coordinating capacity for these teams.

3.2 Conceptual models

In the 2000 National Research Council report, Ecological Indicators for the Nation, one major criterion for indicator selection suggested by the authors (and identified by Cobb and Rixford 1998), was for them to have a clear conceptual basis. Consistent with this recommendation, the indicators system development used a conceptual or mental models approach, both for the overall system (Fig. 2) and for each of the Technical Teams’ recommendations. The Technical Team’s conceptual models transparently defined the system and relationships for each topic area, which framed the team’s recommendations.

We did not constrain each team within a single conceptual modeling framework because of the diversity of the topics and team members and a lack of existing conceptual models from the literature that could accommodate this diversity (Binder et al. 2013; Fisher et al. 2013; Dorward 2014). As a result, the conceptual models ranged from those that were focused on the system components (e.g., Kenney et al. 2014, pp. B-23) to those that provided a risk-based framing (e.g., Kenney et al. 2014, pp. B-42). Because the Technical Teams’ members were from different disciplines and had not worked together previously, the conceptual modeling process promoted a shared understanding of the components and functioning within each sector. One participant remarked that the success of the conceptual modeling process became evident when the individual experts became a team and developed a shared understanding of the critical biophysical and societal factors affecting the sector; we took this as a sign that the process was achieving its intended goal.

After the release of the NCADAC recommendations (Kenney et al. 2014) and building from the first round of conceptual models, the second round of conceptual model development has focused on using a common approach and syntax. Synthesizing across all the conceptual models has allowed us to better understand and describe the interlinkages between the different systems and sectors and to further describe the overall NCIS conceptual model; the synthesis was also used to support the web design of the NCIS. Though the system components are fairly obvious to the Technical Teams, they are more challenging for non-technical experts to identify and connect. We have found the conceptual models to be a useful way to represent systems and describe the types of indicators that could be useful to decision makers.

3.3 Recommendations and research priorities

The large number of existing datasets, indicator products, and graphics provided a solid foundation for the development of the NCIS, including materials created through the development of the NCA3, various Federal agency climate programs, and extramural scientific research. Each Technical Team assessed the available information and made recommendations for indicators that were a balance between those useful for documenting change in the sector and those that would be directly useful for adaptation or mitigation decisions. In either case, the teams needed to demonstrate actual or potential use of the indicator by user audiences to justify their recommendation. At the same time, the teams also suggested research priorities that would build out the information important for each sector.

3.4 Review processes

Each Technical Team’s conceptual model, recommendations, and research priorities were developed into a draft report that was reviewed by a minimum of three experts who were not part of the indicators process. The Indicators Research Team managed this external review process, and suggested priorities for responses by the Technical Teams, analogous to standard review processes used in most scientific publishing. The goal was to ensure that recommendations that ultimately came forward to the USGCRP had passed through rigorous internal and external expert review. The revised reports were delivered to the IWG for discussion and synthesis to suggest refinements of the recommendations by a particular team or to identify linkages of indicator and system concepts between teams. In this way, recommendations from Technical Teams underwent three levels of review and discussion – an external review, a review internal to the teams, and a discussion among all the Technical Teams for any system-wide issues, such as overlaps in proposed indicators or unexpected gaps in the end-to-end nature of the system.

Such extensive review was time-consuming and difficult. On the one hand, it had the potential to slow recommendations from coming forward to the NCADAC (and ultimately the USGCRP). On the other hand, it created confidence that the indicators ultimately recommended through this process were scientifically well founded, had strong potential to be immediately usable, and could be implemented fairly easily.

3.5 Selection of indicators for system

The IWG considered the recommendations of each of the Technical Teams. Because the first phase of the proposed NCIS implementation process was the creation of a pilot indicator system, the IWG asked each Technical Team to provide no more than four indicators that were already scientifically vetted and would be implementable with no additional research or data synthesis. The IWG then developed synthesis recommendations (Kenney et al. 2014), which were approved by the NCADAC (USGCRP 2015), and delivered as advice to the USGCRP. The IWG was well aware that the initial proposals of such a small number of indicators would leave unwelcome gaps in the end-to-end model of the NCIS. However, we felt strongly that it would not be possible to anticipate all the ways in which the indicators of the proposed NCIS might be interpreted and used, and that it would be preferable to make initial progress that could then be evaluated and built on. Technical Teams that had proposed multiple indicators for their topics were thus faced with a difficult tradeoff at the system level of the NCIS.

4 Proposed implementation and phasing

Though the vision, recommendations, and proposed implementation were designed through the IWG, the intent was for the NCIS to transition to the USGCRP for implementation and ongoing management. Specifically, the IWG envisioned that the NCIS would be developed with a process similar to a typical software development cycle. A beta version would be released to the public for review. This pilot version would serve as a proof-of-concept, with a limited number of indicators covering a wide range of topics and providing an opportunity to engage the broader stakeholder community. Such an iterative user-focused approach is rarely employed for decision-support products (Moss et al. 2014), but provides a means to assess the efficacy of the product and to co-design the elements with users to ensure the product better meets their needs (Fig. 3).

To support the implementation recommendations, the Indicators Research Team developed prototypes for a subset of the pilot indicators that were consistent with recommendations from the NCADAC (Kenney et al. 2014). The Indicators Research Team also created a prototype style guide for indicator graphics, text, and metadata (see Online Resource 3: Recommended NCIS Graphic Style Guidance).

It is unusual for USGCRP to receive recommended content from an external group. In order to release the pilot as an interagency product, the agencies needed to establish a process to review, revise, and finalize the indicators figures, text, and metadata. A team of Federal scientists reviewed the content for scientific validity, consistency with other USGCRP products (e.g., NCA3), adherence to the USGCRP’s communication principles, and correctness and completeness of metadata. A number of changes were made to meet the standards for USGCRP products. For example, trends were added to some figures, text was revised to clarify climate impacts, relevance to decision-making was framed more consistently, and metadata was revised to adhere to agency standards. Several prototyped indicators were removed from the pilot set due to competing agency science products (e.g., multiple methods for calculating drought indices), or because the index reflected non-climate variables and a climate signal could not be clearly distinguished. Due to time constraints, these issues could not be resolved for the pilot, but those indicators could be revisited for the future. Consistent with the NCADAC’s recommendations, the USGCRP released a pilot phase in May 2015 (www.globalchange.gov/explore/indicators) and will consider establishing a sustained NCIS that expands the pilot.

5 Co-creation with stakeholders using social science research approaches

One of the tenets of stakeholder engagement processes is that the co-creation of knowledge confers greater legitimacy and salience to the outcomes than simply communicating expert judgment (Moss et al. 2014; Lee 1993; Innes 1990). For the NCIS, the approach to co-creation was serial. The first phase in the NCIS co-production process could be classified as integrating expert knowledge (Meadow et al. 2015). The initial process included experts on the IWG and Technical Teams who were familiar with scientific outputs and broad decision needs; at this stage it did not include non-technical experts who were interested in specific uses for the indicators.

Part of the rationale for the recommended pilot phase was to “road test” the NCIS pilot to understand how the indicators are used and the additional features needed to increase its utility. Involving a diverse group of non-technical experts and stakeholders would create the opportunity to move into a collaborative co-production function (Meadow et al. 2015) to expand and improve the NCIS to meet user needs and preferences.

The two-phase co-development process was purposeful; we focused on planning an inclusive, pragmatic process that would be led by technical experts but informed by decision needs and indicator use. The later phase of the co-development process allows for action-oriented engagement of stakeholders in which people are asked to engage in the process of designing scientific products to increase utility for both the scientific and user communities.

After the pilot NCIS was released by USGCRP, new opportunities emerged to evaluate co-development pathways, including both “top-down” and “bottom-up” approaches. Top-down approaches include web analytics and surveys to provide a broad assessment of the understandability of individual indicators as well as experiments to test the efficacy of visual indicator representations and the system as a whole. Bottom-up case studies engage scientists and decision makers to understand their decision-making contexts, processes, and information needs – particularly, how NCIS indicators or proposed indicators could be modified to better support a range of research questions and climate-informed decisions. The Indicators Research Team is currently conducting and publishing both top-down and bottom-up research that could provide ideas for future development of the NCIS. Such evaluation data can help prioritize indicator modifications, additions, and features for the overall system. An iterative approach to development supports the recommendations by Weaver et al. (2014) to increase global change social science research and National Research Council recommendation (2009b) that future research should include “design and application of decision support process and assessment of decision support experiments.”

Through a combination of evaluation research and targeted co-production, the NCIS could be refined and developed in a way that balances the need for indicators to support sustained assessments and to support decisions. Indicators can be both descriptive (describing existing conditions) and analytic (seeking to understand why those conditions exist) (Cobb and Rixford 1998). The IWG recommendations primarily focused on identifying descriptive indicators and understanding the relationships among them through conceptual models. The approach placed the descriptive indicators within a systems context without being prescriptive, thus allowing for a balance between indicators for assessment and decision-making.

6 Research opportunities

To meet the goals of the NCIS vision, several near-term research foci were suggested by Janetos and Kenney (2015). First, a big gap noted in the NCADAC’s recommendations (Kenney et al. 2014) was the need for adaptation and mitigation indicators. Because adaptation and mitigation indicators are both more experimental and more closely related to policy than others, the Technical Teams chose to identify research questions rather than individual indicators. For example, further research could help develop indicators that demonstrate climate vulnerability with and without adaptation measures (i.e., counterfactual analysis) or the effectiveness of recently implemented policies to reduce greenhouse gases. A particularly useful set of indicators would move beyond accounting for adaptation actions into measuring outcomes and effectiveness of portfolios of climate-resilience actions.

Second, it will be important to develop strategies for creating leading indicators to support climate-resilient decisions. Leading indicators are predictive of future conditions, based either on currently measured indicators that signify something important about the future or on models that can verifiably forecast future conditions. Leading indicators have been created for a number of key climate variables, such as surface temperature and precipitation (Walsh et al. 2014), but leading indicators for climate impacts are less developed yet critically important for informing adaptation actions. To pursue a research strategy related to leading climate impact and vulnerability indicators, it will be advisable when scientifically feasible to identify their optimal temporal scale so that they can be useful in both current and future decision contexts.

Third, there is a need for spatial scalability, i.e., indicators that can be presented at local to national scales. Such scalability of indicators would allow information to match the scale of the decision at hand. The spatial scalability of indicators is not simply a matter of aggregating or disaggregating observations or modeled results. The most appropriate way of representing an indicator such as drought (Steinemann and Cavalcanti 2006), for example, may depend on the scale, decision context, or available datasets. Overall, a system of scalable indicators would have greater utility for a broader range of users and, beyond the communication potential, could truly support decisions.
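A minimal sketch can show why scaling an indicator is more than aggregation. It assumes a hypothetical standardized drought index with a hypothetical threshold, and the region names and values are invented for illustration; it does not represent any agency drought product.

```python
from statistics import mean

# Hypothetical cutoff: index values below it are counted as drought.
DROUGHT_THRESHOLD = -1.0

# Hypothetical standardized index values per locality, grouped by region.
local_index = {
    "region_a": [-2.0, -1.5, -1.8],
    "region_b": [0.5, 1.0, 0.8, 1.2],
}


def in_drought(value):
    """Apply the (assumed) threshold rule to a single index value."""
    return value < DROUGHT_THRESHOLD


all_values = [v for values in local_index.values() for v in values]

# National scale: averaging first, then applying the threshold.
national_mean = mean(all_values)
national_flag = in_drought(national_mean)

# Regional scale: the same rule applied to each regional mean.
regional_flags = {r: in_drought(mean(vs)) for r, vs in local_index.items()}

# Local scale: share of localities that individually meet the rule.
share_in_drought = sum(in_drought(v) for v in all_values) / len(all_values)
```

With these invented values, the national mean does not cross the threshold even though one region and a sizeable share of localities do, so the appropriate representation depends on the scale of the decision being supported.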

7 Conclusions

The recent release of the USGCRP indicators pilot (2015) has been very well received, but the true success of the effort will be demonstrated through ongoing governance and development. In particular, a clear vision of a fuller implementation of the NCIS needs to be defined and refined by user needs and scientific feasibility. In the long-term, a periodic review of the vision and decision criteria could be used to assist USGCRP in meeting, improving, and evolving the goals of the effort. Although the NCIS started with IWG recommendations, the system was intended for Federal agency ownership. Thus, future reconsideration of the goals will likely be a Federal activity, but we believe input from the broader community would prove useful to assure that the NCIS is serving the sustained assessment and user needs.

Reliance on a collaborative and transparent process involving Federal and non-Federal experts and decision makers could guide the evolution of a system of credible, salient, and useful indicators of change that meet the needs of the indicator audiences. In particular, ongoing interaction with stakeholders will allow agencies to better match user needs for climate information with the development of scientifically rigorous indicators. This approach would provide a consistent and regularly updated suite of national indicators to improve understanding of changes and impacts and to strengthen the ability of U.S. communities and the economy to prepare and respond, thus meeting the original vision of the NCIS.

Ultimately, the NCIS could support development of assessment reports by providing consistent and routinely updated indicators of physical, ecological, and societal change. The NCIS could enable an efficient way for each successive national assessment to build on a well-documented time series of indicators, measured from established baselines, clearly showing how conditions have changed since the previous assessment. Finally, an NCIS that successfully includes information on the climate system, climate impacts, and adaptation and mitigation responses should enable future assessments to evaluate the Nation’s progress in responding to climate change.

References

Binder CR, Hinkel J, Bots PW, et al. (2013) Comparison of frameworks for analyzing social-ecological systems. Ecol Soc 18(4):26

Buizer JL, Fleming P, Hays SL et al. (2013) Report on preparing the nation for change: building a sustained national climate assessment process. National Climate Assessment and Development Advisory Committee

Cloyd E, Moser SC, Maibach E, et al. (2015) Engagement in the third national climate assessment: commitment, capacity, and communication for impact. Clim Chang. doi:10.1007/s10584-015-1568-y

Cobb CW, Rixford C (1998) Lessons learned from the history of social indicators, vol 1. Redefining Progress, San Francisco

Dorward A (2014) Livelisystems: a conceptual framework integrating social, ecosystem, development and evolutionary theory. Ecology and Society

Executive Office of the President (2013) The President’s Climate Action Plan http://www.whitehouse.gov/sites/default/files/image/president27sclimateactionplan.pdf

Executive Order 13514 of October 5, 2009. Federal leadership in environmental, energy, and economic performance (Federal Adaptation Plans)

Executive Order 13642 of May 9, 2013. Making open and machine readable the new default for government information

Executive Order 13653 of November 1, 2013. Preparing the United States for the impacts of climate change (Section 4: Providing Information, Data, and Tools for Climate Change Preparedness and Resilience)

Fisher JA, Patenaude G, Meir P, et al. (2013) Strengthening conceptual foundations: analysing frameworks for ecosystem services and poverty alleviation research. Glob Environ Chang 23(5):1098–1111

Innes JE (1990) Knowledge and public policy: the search for meaningful indicators. Transaction Publishers, New Brunswick

Janetos AC, Kenney MA (2015) Developing better indicators to track climate impacts. Front Ecol Environ 13:403–403. doi:10.1890/1540-9295-13.8.403

Janetos AC, Chen RS, Arndt D, et al. (2012) National climate assessment indicators: background, development, and examples. A technical input to the 2013 national climate assessment report. PNNL-21183, Pacific Northwest National Laboratory, Richland. pp. 59. http://Downloads.Globalchange.Gov/Nca/Technical_Inputs/NCA_Indicators_Technical_Input_Report.Pdf

Kenney MA, Janetos AC et al. (2014) National climate indicators system report. National Climate Assessment Development and Advisory Committee. http://www.globalchange.gov/sites/globalchange/files/Pilot-Indicator-System-Report_final.pdf

Lee KN (1993) Compass and gyroscope: integrating science and politics for the environment. Island Press, 255 pp

Lippman LH (2006) Indicators and indices of child well-being: a brief American history. Soc Indic Res 83:39–53

Meadow AM, Ferguson DB, Guido Z, et al. (2015) Moving toward the deliberate coproduction of climate science knowledge. Wea Climate Soc 7(2):179–191

Moser SC, Melillo J, Jacobs KL, et al. (2015) Aspirations and common tensions: larger lessons from the third US national climate assessment. Clim Chang. doi:10.1007/s10584-015-1530-z

Moss R, Scarlett PL, Kenney MA, et al. (2014) Ch. 26: Decision Support: Connecting Science, Risk Perception, and Decisions. Climate Change Impacts in the United States: The Third National Climate Assessment, Melillo JM, Richmond TC, and Yohe GW, eds., U.S. Global Change Research Program, 620–647. doi:10.7930/J0H12ZXG

National Research Council (1999) Nature's numbers: expanding the national economic accounts to include the environment. National Academies Press, Washington, DC

National Research Council (2000) Ecological indicators for the nation. National Academies Press, Washington, DC

National Research Council (2009a) Restructuring federal climate research to meet the challenges of climate change. National Academies Press, Washington, DC

National Research Council (2009b) Informing decisions in a changing climate. National Academies Press, Washington, DC

National Research Council (2013) Sustainability for the nation: resource connection and governance linkages. National Academies Press, Washington, DC

National Research Council (2015) Measuring progress toward sustainability: indicators and metrics for climate change and infrastructure vulnerability. Meeting in Brief, Roundtable on Science and Technology for Sustainability http://sites.nationalacademies.org/cs/groups/pgasite/documents/webpage/pga_168935.pdf

Ogburn WF (1933) Recent social trends in the United States. Report of the president's research committee on social trends. McGraw-Hill Book Company, New York

Steinemann A, Cavalcanti L (2006) Developing multiple indicators and triggers for drought plans. J Water Resour Plan Manag 132(3):164–174

The Heinz Center (2008) The state of the nation’s ecosystems 2008: measuring the lands, waters, and living resources of the United States. The H. John Heinz III Center for Science, Economics and the Environment

USGCRP (2010) Ecosystem Responses to Climate Change: Selecting Indicators and Integrating Observational Networks. NCA Report Series, Vol. 5a. November 30-December 1, 2010, Washington, DC. http://data.globalchange.gov/assets/68/23/2165abcb9561c165fb65ec2dcf3a/ecological-indicators-workshop-report.pdf

USGCRP (2011a) Societal Indicators for the National Climate Assessment. Kenney MA, Chen RS, et al., eds. NCA Report Series, Vol. 5c. April 28–29, 2011, Washington, DC. http://data.globalchange.gov/assets/f7/3f/d552dcec34ae9c23fcdefd61de65/Societal_Indicators_FINAL.pdf

USGCRP (2011b) Monitoring Climate Change and its Impacts: Physical Climate Indicators. NCA Report Series, Vol. 5b. March 29–30, 2011, Washington, DC. http://data.globalchange.gov/assets/04/91/f875575999e1882ac421297f310b/physical-climate-indicators-report.pdf

USGCRP (2012) The National Global Change Research Plan 2012–2021: A Strategic Plan for the U.S. Global Change Research Program. USGCRP Report. http://data.globalchange.gov/assets/d0/67/6042585bce196769357e6501a78c/usgcrp-strategic-plan-2012.pdf

USGCRP (2015) National Climate Assessment & Development Advisory Committee (2011–2014) Meetings, decisions, and adopted documents. http://www.globalchange.gov/what-we-do/assessment/ncadac Accessed 14 Aug 2015

Victor DG, Kennel CF (2014) Climate policy: ditch the 2 °C warming goal. Nature 514:30–31. doi:10.1038/514030a

Walsh J, Wuebbles D, Hayhoe K, et al. (2014) Ch. 2: Our Changing Climate. Climate Change Impacts in the United States: The Third National Climate Assessment, Melillo JM, Richmond TC, and Yohe GW, eds., U.S. Global Change Research Program, 19–67. doi:10.7930/J0KW5CXT

Weaver CP, Mooney S, Allen D, et al. (2014) From global change science to action with social sciences. Nat Clim Chang 4:656–659. doi:10.1038/nclimate2319

Acknowledgments

The authors thank the editors of the special issue, four anonymous reviewers, and Michael Gerst for comments that have improved the manuscript. Kenney’s work was supported by National Oceanic and Atmospheric Administration grants NA09NES4400006 and NA14NES4320003 (Cooperative Institute for Climate and Satellites-CICS) at the University of Maryland/ESSIC. The National Environmental Modeling and Analysis Center at the University of North Carolina-Asheville, and specifically Caroline Dougherty, Karin Rogers, Ian Johnson, and Jim Fox, collaborated with Kenney and Ainsley Lloyd and co-authored the NCIS Style Guide (Online Resource 3). Current and previous Indicators Research Team members include Ainsley Lloyd, Michael Gerst, Rebecca Aicher, Felix Wolfinger, Omar Malik, Sarah Anderson, Julian Reyes, Allison Bredder, Amanda Lamoureux, Maria Sharova, Eric Golman, Ella Clarke, Ryan Clark, Christian McGillen, Justin Shaifer, Olivia Poon, Jeremy Ardanuy, Ying Deng, Marques Gilliam, Andres Moreno, Jordan McCammon, Naseera Bland, and Michael Penansky. Members of the Indicators Technical Teams and NCADAC Indicators Working Group are listed in Kenney et al. (2014).

Additional information

This article is part of a special issue on “The National Climate Assessment: Innovations in Science and Engagement” edited by Katharine Jacobs, Susanne Moser, and James Buizer.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Kenney, M.A., Janetos, A.C. & Lough, G.C. Building an integrated U.S. National Climate Indicators System. Climatic Change 135, 85–96 (2016). https://doi.org/10.1007/s10584-016-1609-1

DOI: https://doi.org/10.1007/s10584-016-1609-1