Abstract

Schizophrenia is characterised by a variety of symptoms, many of which are expressed verbally. However, privacy concerns limit the possibility of collecting and sharing large corpora of schizophrenic speech. As a result, variability in the communicative behaviour of individuals with schizophrenia is currently poorly understood. In this study we explore how far content-free speech activity records can successfully profile the behaviour of patients with schizophrenia. We used data from one of the very few publicly available corpora of conversations with patients with schizophrenia, featuring interviews between a therapist and three patients with different symptoms. Crucially, in this study we annotated only moments of speech and silence during the interview, and we entirely discarded the verbal content of the interview. In this way we perform a type of analysis that fully preserves the speakers’ privacy, while still allowing for comparisons with the full original recorded material. We developed several types of analyses and corresponding visualisations from the activity records. Exemplifying these analyses, clear patient-specific profiles can be derived, based on the dimensions of total silence duration and the speech duration of patients and therapists. These findings are consistent with an independent phenomenological assessment of the three patients.

1 Introduction

Language plays a crucial role in schizophrenia. From the clinicians’ point of view, language can express a variety of symptoms, including positive (e.g. delusion) and negative symptoms (e.g. alogia) (American Psychiatric Association, 2022; Andreasen, 1986), and thus is of fundamental importance in diagnosis and treatment monitoring. From the point of view of patients, the atypical use of language is a major source of difficulties for interpersonal relationships, with important consequences for their wellbeing. The research on “schizophrenic language” goes back to the early stages of modern psychiatry. Emil Kraepelin already proposed the symptom of “schizophasia”, referring to anomalies in the form and content of utterances of patients with schizophrenia (Kraepelin, 1915). In the century that followed, a large body of literature has been produced on the topic (e.g. Kuperberg, 2010; Parola et al., 2020; Schwartz, 1982), which has attracted the interest of psychiatry, linguistics and, more recently, natural language processing (Corcoran et al., 2018, 2020; Corona-Hernández et al., 2022). However, these efforts have not yet yielded a clear picture. Two problems have led to this lack of clarity.

First, most past research rested on an “essentialist” view of schizophrenic language. This includes, for example, a focus on the properties of patients’ productions from the semantic, lexical or discourse points of view (Covington et al., 2005; Linell, 1982). This approach stems from the mainstream view of language as a system, as a theoretical construct that can be isolated and described in itself (Harris, 1980), and mostly on the basis of its content, which is available in a written or at least transcribable format (McNeill, 1985). The interest of this approach lies in the hypothesis that patients’ language can offer a window into the organisation of their thoughts (Amblard et al., 2021), thus contributing to our understanding of the cognitive basis of schizophrenia. However, this approach tends to neglect the social aspects of the condition, overlooking how language is actually used in communication (Bambini et al., 2016; Mueser et al., 1991; also in relation to autism, Bolis et al., 2017; for a recent manifesto placing interaction at the heart of language and cognition, see Dingemanse et al., 2023).

Second, schizophrenia is not a monolithic clinical syndrome and is not stable over time. Individuals diagnosed with schizophrenia exhibit a wide variety of behaviours and symptoms (Andreasen & Flaum, 1991; Brown, 1973). This makes it challenging to find clear-cut differences between the groups of patients and controls. Further, schizophrenia is substantially fluctuating in its time course. This situation is worsened by the small size of most databases used in research on language and communication of people with schizophrenia. On the one hand, collecting and annotating data from patients is resource intensive. On the other hand, privacy concerns make it difficult to share the collected data, especially in the case of unscripted interactions or of less constrained experimental tasks. As a result, most research is carried out on small, private databases (Parola et al., 2020). This situation hampers our understanding of just how variable the linguistic and communicative behaviour of people with schizophrenia can be.

1.1 Minimalist interaction records

In response to the two concerns above, in this study we explore the language of patients with schizophrenia from an intrinsically communicative point of view, while at the same time minimising privacy concerns, thus offering researchers a possibility to share data on the behaviour of individual subjects (Ben-Zeev et al., 2016; Brederoo et al., 2021). To do so, we explore content-free records of speech activity, as extracted from interactions including patients with schizophrenia (see Fig. 1). Records are stored as time-stamped successions of intervals of speech and of silence, separately for each interactant. The resulting records can be visualised as an interaction score, where time flows from left to right, with line breaks if necessary. Interactants are represented by stacked tiers, and intervals of activity are represented by lines or blocks on such tiers. Using different colours for each interactant improves the readability of the score.

Example of interaction score, reprinted from Lucarini et al. (2022). Black lines represent activity of the patient. Red lines represent activity of the interviewing therapist. Note the portions of no activity (e.g. at time 0:51) and of overlapping activity (e.g. at time 3:49). (Color figure online)
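The record format described above can be illustrated with a minimal Python sketch. It is not taken from the authors' repository: the `Interval` class, the `silences` helper and the example timestamps are all hypothetical, and the sketch only assumes that each interactant's tier is a list of time-stamped activity intervals from which silent intervals can be derived.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    """One stretch of activity (or silence) on a tier, in seconds."""
    start: float
    end: float


def silences(intervals, total_duration):
    """Return the gaps between the activity intervals of a single tier."""
    gaps = []
    cursor = 0.0
    for iv in sorted(intervals, key=lambda iv: iv.start):
        if iv.start > cursor:
            gaps.append(Interval(cursor, iv.start))
        cursor = max(cursor, iv.end)
    if cursor < total_duration:
        gaps.append(Interval(cursor, total_duration))
    return gaps


# Hypothetical 12-second record with one tier per interactant.
record = {
    "patient": [Interval(0.0, 2.5), Interval(4.0, 9.0)],
    "therapist": [Interval(2.8, 3.6), Interval(8.5, 10.0)],
}
patient_silences = silences(record["patient"], total_duration=12.0)
```

Nothing in such a record identifies the speakers or the content of their speech; only onsets and offsets are retained.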

Representations of this type were pioneered by Chapple (1939) as a tool for quantifying interaction in anthropological fieldwork. They sparked large amounts of interest, research and criticism (see Watter, 2020). In the following decades, this line of work benefited from innovations in three domains. First, concerning the recording instrumentation, and notably with the development of dedicated hardware such as the “Interaction Chronograph” (Chapple, 1945), the automatisation of data transcription (Hargrove & Martin, 1982; Jaffe & Feldstein, 1970), and even the creation of inexpensive alternative devices such as the “Rotissograph” (Lauver, 1970). Second, experimental paradigms for the elicitation of interactions were standardised (Chapple, 1953) and extensively tested (Matarazzo & Wiens, 1972; Saslow et al., 1955), until converging onto an interview procedure which aimed to minimise the role of the interviewer. Third, the contexts of application were expanded to domains as diverse as the evaluation of salespeople performance (Chapple & Donald, 1947), the training of military aircraft pilots (Finesinger et al., 1948) and, crucially to our interests, the profiling of psychiatric disorders (Chapple & Lindemann, 1942; Feldstein, 1962). In the last two decades, content-free time-based representations of interaction have found wider use in speech sciences (Campbell, 2007; Dingemanse & Liesenfeld, 2022; Edlund et al., 2009; Trouvain & Truong, 2013), including the subfields of human–machine interaction (Gilmartin et al., 2018) and second language acquisition (Sbranna et al., 2020).

The creation of interactional scores like the one in Fig. 1 rests on two stipulations. First, we posit that the behaviour of interactants can be discretised into atomic moments of activity and of inactivity. In truth, it is possible that the concept of activity should rather be understood in continuous (or at least gradual) terms. As a first approximation, in this study we treat activity as a binary construct at the atomic level, i.e. for each observed point in time. However, different degrees of activity emerge as we observe interaction in larger temporal domains, i.e. when pooling atomic observations within a time window. Second, in order to make our atomic binary choices, we need to stipulate what counts as activity. In early work on the Interaction Chronograph, interaction was already understood as a multimodal event. Gestures were annotated as actions, since “A nod of agreement is just as definite a response as a verbal yes” (Appendices to Finesinger et al., 1948, p. 147). To fully understand interaction, it is necessary to integrate all visible and audible aspects, as highlighted by Kendon in recollecting his early work with Chapple (Leeds-Hurwitz & Kendon, 2021, p. 165). Ideally, visual information should be complemented by a close analysis of the content and of the time-course of the interaction (Fatouros-Bergman et al., 2006), and by any other communication modality (e.g. haptic). Our content-free representations of audible activity are thus extremely impoverished. And even at such a degree of simplification, they still require further stipulations. Certain vegetative sounds such as coughing, breathing and lip smacking, for example, might or might not have interactional value (Goldmann-Eisler, 1951; Starkweather, 1956; Swingle, 1984). The most reductionist approach is thus to operationalise speech activity as verbal and vocal material of unambiguous interactional value (Schroeder, 2003; Trouvain & Truong, 2012; Ward, 2006; Wharton, 2003).
Besides verbal material, this definition includes vocal productions such as laughter, interjections (“ouch”), fillers (“uhm”) and backchannels (“mhm”), and it excludes vegetative sounds. The retained activities are represented in Fig. 2 by the white areas.

This definition of speech activity is brutally reductionist. It is discretised, binary, confined to the auditory domain, and restricted to verbal events and to unambiguously communicative vocal events. These are stripped of their phonetic, propositional or interactional content, and reduced to their start and endpoints in linear time. It describes only one component of the rich, multimodal, contextualised approach which is required to make sense of interactions. Because it neglects both the content and the form of those vocal events, as well as other communication channels such as gesture, posture or gaze, our operationalisation of speech activity might at first glance seem particularly ill-suited for studying the language and communication of people diagnosed with schizophrenia. However, it also has two crucial advantages. First, by removing visual, auditory and propositional content and by reducing action to a sequence of timestamps, it provides a fully anonymous record of the interaction. This means that, even though informed consent is still required for data acquisition, such records do not violate the privacy of the patients and, if metadata are appropriately anonymised, can be freely shared with the research community. Second, if the phenomena of interest are binary decisions on interactional speech events, annotation becomes a comparatively trivial task. Annotations can also be largely automated, especially if each interactant is recorded with an individual microphone. In this case manual verification, if required at all, consists merely in discarding intervals of vegetative vocal activity (e.g. coughing) and other spurious noises. As such, it can be performed easily even by phonetically untrained personnel. This aspect further lowers the barrier to collecting, annotating and sharing large amounts of interactional data, thus offering a path for the study of variable behaviour in schizophrenic communication.

1.2 Patient behaviour variability

In the following sections we explore whether, despite their coarse nature, content-free speech activity records can effectively characterise differences in the behaviour of individual patients with schizophrenia, as observed during interactions with their therapist. As a first approximation, we would expect inter-subject variability to exceed intra-subject variability. That is, we expect greater differences between the interactional behaviour of different subjects than across different recordings of one speaker, or across different portions of one recording of the same speaker.

Note that intra-subject variability is to be expected, since all interactions tend to have an internal structure, for example with the succession of phases such as greeting and leave-taking (Laver, 2011). In casual conversation, these phases can be structured into a web of potential paths, which can lead to the recurrence of certain phases, as when multiple topics are serially introduced (Ventola, 1979). We can expect the distribution of activity across participants to be different in these phases. In this sense, conversations can be seen as composed of moments where interactants share the floor in reasonably balanced terms, and by other moments where one of the interactants takes the leading role (respectively “chat” and “chunk” in the terminology of Eggins & Slade, 2004; see Dingemanse & Liesenfeld, 2022; Gilmartin et al., 2018). Moreover, the structures that emerge during casual conversation can be expected to differ from the structure of other types of interaction (Bakhtin, 1986; Biber & Conrad, 2009). This is particularly true for genres characterised by an intrinsic asymmetry of roles, as in the case of interviews, where one of the interactants usually has a more prominent role in managing the conversational exchange (Cangemi & Baumann, 2020; Itakura, 2001; Orletti, 2000; Viaro & Leonardi, 1983). Interviews which take place in medical settings are further characterised not only by their highly institutionalised nature, but also by the complementary needs for flexibility and personalisation (Ha & Longnecker, 2010; ten Have, 1991; Maynard & Heritage, 2005; Pilnick et al., 2009). Moreover, therapist-patient interviews in a psychiatric setting can present further specific modes of organisation (Galasiński, 2011; Ziółkowska, 2012). Thus even in the analysis of the behaviour of a single patient, sampling excerpts from different stages of an interview can show a high degree of intra-subject variability. 
This intra-subject variability can only be expected to increase if excerpts are sampled from interviews recorded at different points in the long-term relationship between patient and therapist, in which the patient can exhibit different overall conditions, potentially linked to the course of the pharmacological treatment.

Crucially, however, we expect a larger portion of the results to be explained by inter-subject variability, especially given the intrinsically heterogeneous nature of schizophrenia. The symptoms employed in diagnosing schizophrenia are in fact quite diverse, and can have different manifestations in the communicative behaviour of patients. Symptoms are usually grouped into negative or positive, where negative symptoms refer to the lack of behaviour that is common among non-diagnosed individuals, such as the expression of emotion or the desire to maintain social interaction. Positive symptoms, on the other hand, indicate the presence of experiences that are rare in non-diagnosed individuals, as in the case of delusions or hallucinations. Since a diagnosis of schizophrenia can be based on the presence of different positive and negative symptoms, we can expect subjects to display substantial differences in their speech activity behaviour. The most direct way to test this expectation is to examine the speech of subjects who have been independently described as representing different aspects of schizophrenia. This is for example the case of the three subjects discussed by Pastore (2013), for whom approximately 10 h of medical interviews with the same therapist are publicly available in the CIPPS corpus (Dovetto & Gemelli, 2013). In a phenomenological reconstruction of the interviews with these subjects (Pastore, 2013), the therapist characterises the patients as living in three different “worlds”, which can be seen as cornerstones of the schizophrenic experience.

The world of subject A is, metaphorically speaking, “frozen”, devoid of the possibility of action and, a fortiori, of interaction. The patient exhibits a subapophanic form of schizophrenia (Blankenburg, 1971; Parnas & Bovet, 1991; Raballo & Maggini, 2005), i.e. with the absence of delusion and the presence of stark negative symptoms. This results in a laboured participation in the interviews, with long silences, scant verbal production, impoverished prosody and limited gestural activity. Therefore, a large share of the “burden of interaction” is put on the interviewer’s shoulders.

Subject B, on the other hand, lives in what Pastore (2013) defines as a “fragmented” world, where isolated thoughts and words fail to converge into meaningful experiences. The subject engages in a continuous effort of recomposing these fragments into a larger frame, and thus jumps from one to the other in the tumultuous “flight of ideas” (Binswanger, 1933) of an unstructured delusion. This process leaves the therapist little room for participation; as a result, speech activity during the interviews is dominated by the patient.

Lastly, the “delusional” world of subject C is characterised by a structured delusion which pivots around proprioceptive and auditory verbal hallucinations. Due to the long-term experience with psychotic episodes, this subject has had the chance to sensorialise, externalise and organise their experiences and, as a result, is able to communicate them clearly, effectively, almost without anomalies (Pastore, 2013). The patient therefore manages the interactional space with the interviewer in a competent way.

The three clinical cases above offer a privileged testbed for the informativeness of content-free speech activity records. They represent three different aspects of the schizophrenia spectrum, thus maximising the possibility of documenting inter-speaker variability. At the same time, they are documented across multiple interviews of substantial duration, so they also allow the study of intra-speaker variability. The audio recordings and the transcripts of the interviews are publicly available, a fact which makes our analyses of inter- and intra-speaker variability fully transparent and verifiable. We will thus use them to evaluate the following claim: content-free speech activity records can capture subject-specific aspects of schizophrenic communication. In the Method section we describe the selection of excerpts (Sect. 2.1) and the creation and visualisation of activity records (Sect. 2.2). We then explore various visualisation strategies in the Results section (Sect. 3) in order to summarise the information provided by the activity records and to evaluate the claim above. After a brief recapitulation of the findings, in the Discussion section we comment on the limitations of the current study (Sect. 4.1) and suggest further avenues for research on different datasets (Sect. 4.2). For readers interested in exploring the claims made here using descriptive or inferential statistics, the annotations used for the creation of records are available in a dedicated Open Science Framework repository (Cangemi, 2023), which also contains scripts for data extraction and visualisation. The source audio files remain available as part of the CIPPS corpus (Dovetto & Gemelli, 2013).

2 Method

2.1 Material

For each of the 3 patients, we excerpted 4 interview segments of 10 min each, for a total of 2 h of speech. The CIPPS corpus features a variable number of recordings for each patient, notably 3 for patient A, 4 for patient B and 2 for patient C. Each recording contains a clinical interview, and is represented by a coloured bar in Fig. 3. The corpus also includes a single interview for speaker D, which we disregard in the following analysis, since this speaker is not discussed in Pastore (2013) and since there is only a single, short recording available for this speaker. Interviews have variable duration, ranging between 28 and 67 min, as indicated by the length of the bars in Fig. 3. Most interviews begin with a warm-up segment, where the therapist asks the patient to discuss a task that had been assigned at the end of the preceding interview (e.g. writing a short paragraph or creating a drawing). These segments have variable duration, and tend to be more monological than the rest of the interview. For this reason, we avoided sampling our subcorpus from the initial portions of each interview.

The four excerpts (1–4) for each speaker (A–C) as drawn from the individual recordings (coloured bars) of the CIPPS corpus (Dovetto & Gemelli, 2013). (Color figure online)

For each speaker, we excerpted from the first interview the segment from minute 10 to 20. These 10 min-long segments are represented in Fig. 3 by the black squares containing a white number 1. We further extracted the segment from minute 30 to 40 in the first recording, represented in Fig. 3 by the black square with a white number 2. The third segment was excerpted from minute 10 to 20 of the second recording. For patient C, the second interview was conducted in the presence of an associate of the therapist. The associate remains almost completely silent for the duration of this excerpt, but their presence does nonetheless represent a difference from the recording conditions for the two other patients. For this reason, the black square for excerpt 3 for patient C is represented in Fig. 3 with white dots. The fourth excerpt was taken from minute 10 to 20 of the third recording for patients A and B, but since the CIPPS corpus only contains two recordings for patient C, we used minute 30 to 40 of the second recording for this speaker. Moreover, in this segment the therapist’s associate takes the role of interviewer, while the therapist remains silent in the recording room. As a result, in Fig. 3 we represent this segment as a white square with black dots.

Compared to speakers A and B, excerpt 3 for speaker C is thus sampled from a different interactional configuration, and excerpt 4 is also sampled from a different phase of a different interview. These choices were necessary given the nature of the material available in the CIPPS corpus. However, they also have the beneficial effect of introducing further intra-speaker variability, and thus of making our analysis more conservative.

2.2 Records

In order to create content-free speech activity records, we first annotated speech activity using the software Praat (Boersma & Weenink, 2022). Since interviews were recorded onto a single channel using a digital audio tape recorder in noisy environments, we opted for manual annotations, which were carried out by the first and fourth author. We created a tier for each interlocutor and marked intervals of speech activity with an “x” (see Fig. 4). Following the rationale provided in Sect. 1.1, speech activity was defined as segments of either verbal material or vocal material of clear interactional value, both preceded and followed by a silence. The annotation instructions did not prescribe thresholds for the duration of either activity or silent intervals. A post-hoc examination shows that out of 1765 intervals perceived as silences, only one had a duration of less than 200 ms, consistent with different strands of literature on the topic (Brady, 1965; Campione & Véronis, 2002; Duez, 1982; Heldner et al., 2011; Schuppler & Kelterer, 2021).

Using a Praat script from Cangemi (2023, §S1), each of the 12 annotation files was transformed into a content-free speech activity record. These records provide a faithful graphical representation of speech activity during the interview, as annotated by the coders. As such, they have both the advantages and the disadvantages of analytical representations.

On the one hand, they retain all the information concerning the frequency, the duration and the timing of each interlocutor’s activity. Figure 5 illustrates two records, one for speaker B (top panel) and one for speaker A (bottom panel). In both panels, the speech activity of the therapist is represented by black lines, while the activity of patients is represented by coloured lines (respectively red for patient B and blue for patient A, using the same colour coding employed in Fig. 3 above and in the rest of this article). The panels convey an intuitive image of the intense activity of subject B (top, in red), who occupies the conversational floor almost continuously. There is very little silence in the interaction, and any given silence does not seem to last longer than a few seconds. Until the last ten seconds of this excerpt, the therapist only contributes a handful of very short actions, apparently only providing short feedback signals to the patient. The bottom panel of Fig. 5 shows a very different picture, with subject A (in blue) speaking for very limited amounts of time. The interaction features many silences, of which many are particularly long (i.e. longer than 10 s). The therapist takes action frequently, in many cases with long durations, which are often longer than the actions of the patient himself. This suggests that the therapist is not simply providing feedback to the patient, but actively trying to incite interaction by asking questions or sharing thoughts. This inviting attitude seems corroborated by how the therapist tends to stay inactive while the patient speaks, perhaps with the sole exception of the exchange at time point 8:51.

Analytical representations, however, also have disadvantages. The wealth of information displayed for a single 10 min excerpt makes it difficult to compare interactions across excerpts and across patients. Figure 5, for example, despite all its complexity, only represents a sixth of the analysed data. For this reason, these analytical records will be complemented by the derivative visualisations introduced in the Results section.

3 Results

3.1 Scatter plots of interpausal units

A first way to simplify the interaction records is by removing information on the sequentiality of events. In this way, every interpausal unit of speech activity is represented as an individual observation, which can be characterised over a variety of dimensions (Cangemi, 2023, §S2 and §S3). Figure 6 shows interpausal units as points in a plane, with the duration of the interpausal unit on the x-axis and the duration of the silence following it (i.e. until that speaker speaks again) on the y-axis.

In this example, the top panel shows data for the interaction between the therapist (in black) and patient B (in red). The distribution of data points for patient B shows a large number of interpausal units (i.e. many red circles), many of which are of long duration (i.e. many points after x = 5 s), and many of which are followed by pauses of short duration (i.e. most points are close to y = 0 s). In other words, consistent with the information from the record in the top panel of Fig. 5, patient B speaks often, for long periods of time, and pauses for a very short time. Notably, however, this scatter plot represents data from all four excerpts recorded for patient B, and not only a single excerpt as in the top panel of Fig. 5.

Figure 6 also shows data points for the therapist; to avoid overlap and clutter, these points are plotted with inverted axes, and thus with the origin in the upper right corner of the graph. In his interaction with patient B, the therapist speaks very rarely (i.e. few black circles) and spends long periods of the interaction in silence (i.e. many points have high values on the inverted y-axis). When the therapist does speak it is only for a relatively short time (i.e. only two points after x = 5 s). The representation in Fig. 6 emphasises the strongly skewed nature of the interactions between patient B and the therapist, since a balanced interaction would be symmetrical along the sinister diagonal represented by the dashed line.

The bottom panel of Fig. 6 shows the interactions between patient C (green) and the therapist (black). This interaction is comparatively much more balanced; as shown by the number of points, the two speakers take the floor almost equally often, with the patient doing so slightly more often. As shown by the x-axes, both speakers tend to be active for up to 5 s at a time (rarely up to 10 s, and never past that). As shown by the y-axes, they only stay silent for up to 10 s in most of the cases; silences rarely last up to 20 s, and in a handful of cases the therapist stays silent for up to 1 min. This pattern is consistent with interactions where one person is speaking slightly more than the interlocutor, while still leaving ample room for exchange.

If necessary, further information can be represented in these plots using different symbols for the interpausal units, depending on the dimensions of interest (e.g. the excerpt from which the unit was extracted, whether the unit is followed by activity from the same interlocutor, whether the interlocutor becomes active during the unit).
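The two coordinates used for each point in these scatter plots can be derived directly from one speaker's tier. The following Python sketch is illustrative only and does not reproduce the scripts in Cangemi (2023, §S2 and §S3); the function name `ipu_points` and the example intervals are hypothetical, and dropping the last unit (whose following silence is cut off by the end of the excerpt) is an assumption.

```python
def ipu_points(intervals):
    """Pair each interpausal unit's duration with the silence that follows it.

    `intervals` holds (start, end) tuples in seconds for ONE speaker.
    The final unit is dropped because its following silence is truncated
    by the end of the excerpt (an assumption of this sketch).
    """
    ordered = sorted(intervals)
    points = []
    for (start, end), (next_start, _) in zip(ordered, ordered[1:]):
        ipu_duration = end - start
        following_silence = next_start - end
        points.append((ipu_duration, following_silence))
    return points


# Hypothetical tier: three interpausal units from one speaker.
patient = [(0.0, 2.5), (4.0, 9.0), (9.5, 10.0)]
points = ipu_points(patient)  # (x, y) pairs for the scatter plot
```

A speaker who holds the floor almost continuously yields points with large x and small y values, which is the pattern described above for patient B.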

3.2 Smooth activity contours

For Fig. 6 above, we discarded sequential information and retained the duration of each unit of activity or silence. The alternative approach to achieving synthetic representations is to discard information on the duration of each unit and to preserve instead information on the time course of the interaction. For the creation of Fig. 7 below, activity data was sampled from the interaction record at a frequency of 10 Hz (for details see Cangemi, 2023, §S4) with a 200 ms analysis window. Results were then locally fitted by polynomial regression within a neighbourhood including 5% of the data points (Cangemi, 2023, §S5) using the loess function (R Core Team, 2022).

This generates smooth curves of activity for each speaker. While necessarily sacrificing some detail, these representations still convey the main features of the excerpts. They have the advantage of presenting time without line breaks on the x axis, as done instead in the speech activity records (Fig. 5). By using transparency, these plots provide an immediate representation of moments of overlapping activity in the interaction. Moreover, they make it easier to compare interactions across several excerpts from the same speaker or, as done in Fig. 7, across several speakers.

The interaction with patient A (top panel, blue) is characterised by a large portion of silence, as evidenced by the small mass of activity curves. Considering the interview setting, it is particularly striking how little activity the patient contributes to the interaction. On the other hand, the interaction with patient B (centre panel, red) is characterised by constantly high levels of activity, as shown by the small amount of empty or white space in the plot. Moreover, the therapist’s activity contour has almost exclusively very low peaks, with the exception of times around 330 and 590 s (corresponding to minute 5:30 and 9:50 in the top panel of Fig. 5). This provides a clear image of the asymmetry of participant roles in this excerpt. In comparison, the interaction with patient C (bottom panel, green) shows a more regular alternation between the interactants. Differently from the cases of patients A and B, the relatively high degree of overlap in activity (e.g. around 500 s) suggests a lively, engaged interaction between patient C and the therapist. At the same time, the amount of activity (i.e. of coloured areas) seems to strike a balance between the cluttering visible in the excerpt for patient B and the excessively slow pace in the interaction with patient A.
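The sampling step that precedes the smoothing can be sketched in Python as follows. This is only one possible reading of the procedure, not the implementation in Cangemi (2023, §S4): it assumes that each sample is the fraction of a 200 ms window, centred on the sample time, that is covered by speech. The function name `sample_activity` and the example intervals are hypothetical, and the loess smoothing step, which the source performs in R, is not reproduced here.

```python
def sample_activity(intervals, duration, rate_hz=10.0, window_s=0.2):
    """Sample one speaker's activity as window coverage between 0 and 1.

    `intervals` holds non-overlapping (start, end) tuples in seconds.
    Each sample is the share of a centred analysis window that is
    covered by speech (an assumption of this sketch).
    """
    n_samples = int(round(duration * rate_hz))
    half = window_s / 2.0
    samples = []
    for i in range(n_samples):
        t = i / rate_hz
        lo, hi = t - half, t + half
        covered = sum(
            max(0.0, min(end, hi) - max(start, lo)) for start, end in intervals
        )
        samples.append(covered / window_s)
    return samples


# Hypothetical one-second stretch with two short units of activity.
contour = sample_activity([(0.0, 0.45), (0.8, 1.0)], duration=1.0)
```

At 10 Hz, a 10 min excerpt yields 6000 samples per speaker, so a neighbourhood of 5% of the data points corresponds to 300 samples, i.e. about 30 s of interaction.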

3.3 Spider web plots of segments

Instead of smoothing activity patterns over a sliding window as done in Fig. 7 above, we can divide each excerpt into segments of an arbitrary duration, and visualise their properties independently. This is the approach taken for the creation of Fig. 8 below, where each line represents a 1 min segment of interaction (Nakazawa, 2022; see Cangemi, 2023, §S6 and §S7). The graph thus contains 120 superimposed lines, i.e. 3 speakers × 4 excerpts per speaker × 10 one-minute segments per excerpt. Lines are colour coded in the usual way, with blue for patient A, red for B and green for C. Like the scatter plots in Fig. 6, this visualisation also destroys information on the sequential development of the interaction. However, it allows the simultaneous representation of several dimensions of variation. For Fig. 8, we chose to represent the amount of the patient’s activity, of the therapist’s activity, and of silence. For these dimensions we used a scale from 0 to 60 s, i.e. the entire duration of each one-minute segment. We further represent overlapping activity, as well as the number of interpausal units (IPU), separately for patient and therapist. For these last three dimensions, we used a custom scale to improve the readability of the plot, with 5 s (instead of 60) for overlap and the maximum attested number (i.e. 30) for interpausal units. As in the case of the scatter plots discussed in Sect. 3.1, other dimensions could be chosen. By using transparency, this representation provides an intuitive account of inter-speaker and intra-speaker variability, since the dimensions with compact, saturated and vibrant colours represent reliable inter-speaker differences.

Spider web plot for the entire dataset. Each line is a 1 min segment extracted from the excerpts. Lines are colour-coded for patients, i.e. blue for A, red for B, green for C. Properties plotted are Silence, Patient and Therapist speech, Patient and Therapist Interpausal units (IPU) and Overlap. (Color figure online)

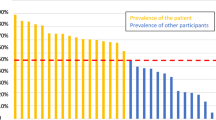
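The six properties plotted for each one-minute segment can be derived from the activity records alone. The following Python sketch illustrates one way to do so. It is not the script used for the figure (which was produced in R). The function names and the `(start, end)` representation are hypothetical, and the sketch assumes that every interval in a speaker's record is one interpausal unit and that a speaker's own intervals never overlap.

```python
from typing import Dict, List, Tuple

Interval = Tuple[float, float]  # (start, end) of one interpausal unit, in seconds


def clip(intervals: List[Interval], lo: float, hi: float) -> List[Interval]:
    """Restrict intervals to [lo, hi], dropping those that fall entirely outside."""
    return [(max(s, lo), min(e, hi)) for s, e in intervals if e > lo and s < hi]


def total(intervals: List[Interval]) -> float:
    """Summed duration of a list of intervals."""
    return sum(e - s for s, e in intervals)


def overlap(a: List[Interval], b: List[Interval]) -> float:
    """Total time during which both speakers are active."""
    return sum(max(0.0, min(e1, e2) - max(s1, s2)) for s1, e1 in a for s2, e2 in b)


def segment_metrics(
    patient: List[Interval],
    therapist: List[Interval],
    start: float,
    length: float = 60.0,
) -> Dict[str, float]:
    """Compute the six spider-web dimensions for the segment [start, start + length]."""
    end = start + length
    p, t = clip(patient, start, end), clip(therapist, start, end)
    both = overlap(p, t)
    return {
        "patient_speech": total(p),
        "therapist_speech": total(t),
        "overlap": both,
        # Silence is the time in which neither speaker is active.
        "silence": length - (total(p) + total(t) - both),
        # A unit that crosses a segment boundary is counted in both segments
        # (an assumption of this sketch).
        "patient_ipu": len(p),
        "therapist_ipu": len(t),
    }
```

Calling `segment_metrics` once per minute of each excerpt yields one row per segment, i.e. one line of the spider web plot.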

3.4 Scatter plot of segments

In order to make the results more readable, the aspects of the information depicted in the spider web plot from Fig. 8 above can be simplified into a traditional bidimensional scatter plot, thus representing the one-minute segments with points, instead of lines. For Fig. 9 below (Cangemi, 2023, §S6 and §S8) we only retain two dimensions from Fig. 8, notably those which show a clearer separation across subjects, i.e. Patient speech and Silence (see also Bartolomeo et al., 2013; Clemmer, 1980).

The resulting scatter plot shows little overlap between the data extracted for the three patients. They present clearly distinct patterns that are consistent with the phenomenological descriptions for patient A (subapophanic schizophrenia with preponderance of negative symptoms) and patient B (unstructured delusion with Ideenflucht). Note that the sum of Silence and Patient speech has an upper bound for each observation, represented by the dexter diagonal in Fig. 9. For example, if a one-minute segment contains 40 s of Silence, it cannot contain more than 20 s of Patient speech. However, given the different possible ways in which the interlocutor (i.e. the therapist) can contribute to the interaction, these two dimensions are neither causally related nor correlated. This is further evidenced by the visual inspection of Fig. 9, from which one can easily anticipate very different correlation slopes for each patient.
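The upper bound mentioned above follows directly from how a one-minute segment is partitioned. The symbols below are introduced only for this illustration and assume that silence is defined as time in which neither interactant is active.

```latex
% S: silence, P: patient speech, T: therapist speech, O: overlapping speech,
% all in seconds within one 60 s segment (notation assumed for this sketch).
\begin{align}
  S + (P + T - O) &= 60 \\
  S + P &= 60 - (T - O) \;\le\; 60 \qquad \text{since } 0 \le O \le T
\end{align}
```

Equality holds only when all of the therapist's speech in the segment overlaps with the patient's, so that the therapist never speaks alone. With S = 40 s, the bound gives P ≤ 20 s, as in the example above.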

We further add points for the mean values for each of the four excerpts, representing them with large points with different geometrical shapes. The results are particularly striking for patient C, who displays little intra-subject variability despite having interacted with two different therapists across the four excerpts (see Fig. 3). For example, patient C interacts with the therapist in excerpt 1 (green square) and with the therapist’s associate in excerpt 4 (green diamond). These two symbols, however, are not further apart than those representing excerpts 1 and 4 for speaker A (blue square and diamond), who interacted with the therapist in both cases. This gives us a visual confirmation of the claim put forth in the Introduction: despite their reductionistic character, content-free speech activity records can capture subject-specific aspects of schizophrenic communication, by documenting a greater difference in inter-subject variability than in intra-subject variability for selected dimensions. For details on mean values per patient and excerpt, as well as overall means, see Cangemi (2023).

4 Discussion

The differences in the interaction styles of the three patients with schizophrenia documented in the CIPPS corpus (Dovetto & Gemelli, 2013; Pastore, 2013) can be characterised even with minimalist speech activity records, devoid of propositional or interactional content. Visual inspection of the distribution of Silence and Patient speech activity for four 10 min excerpts from the corpus (Fig. 9) shows high inter-subject variability and low intra-subject variability. Given the ease with which the content-free speech activity records can be generated and shared, this approach holds promise for the study of communication styles in schizophrenia.

4.1 Limitations

Despite the encouraging results, the present study has a number of apparent shortcomings. First, it is possible that our records are only successful because of the extremely different manifestations of schizophrenia in the three patients chosen for this study. In other words, speech activity measures might be exceptionally well suited to represent the continuum spanning from the predominant negative symptoms of patient A to the hyperactive, verbose behaviour of patient B, but they might be less effective at characterising organised delusion as in the speech of patient C. Indeed, data from patient C show substantial overlap with metrics extracted from a clinical interview between a different therapist and a control subject not diagnosed with schizophrenia (Cangemi, 2022; Cangemi et al., 2023). As a result, the composition of this dataset might have been particularly favourable to the success of our metrics. Since in this study we only aimed to offer a proof of concept, we find this caveat to be acceptable.

Second, the present study aimed at evaluating the success of the most minimalistic characterisation of speech activity possible, in order to comply with constraints on ease of annotation and on respect of privacy. However, even within these boundaries, it would still be possible to incorporate into our approach some well-known insights on how interaction works. So far we have limited ourselves to extracting activity information independently of its sequential nature, for example by characterising periods of inactivity as “silence”. This ignores the well-known difference between “gaps”, i.e. moments of silence occurring between the speech activity of two different interlocutors, and “pauses”, i.e. moments of silence occurring between two activities from the same speaker (Sacks et al., 1974); similar distinctions could be drawn for moments of activity and for moments of overlapping activity (Schultz, 1975; see Heldner & Edlund, 2010). The content-free nature of our records is not incompatible with such finer classifications. Ideally, this characterisation would be further enriched by annotating whether these spurts of activity represent attempts to occupy the conversation floor, or rather support the interlocutor in their ongoing activity (Yngve, 1970). Crucially for our purposes, such classifications do not seem to require manual annotation, but can be successfully approximated using a simple durational threshold for backchannel signals such as “okay” and “mhm” (e.g. maximally 500 ms, see Edlund et al., 2010). Ultimately, instead of relying only on the dichotomous distinctions above, the spurts of activity in our records could be characterised using continuous measures of sequential organisation (de Ruiter et al., 2006; Levinson & Torreira, 2015). In other words, consistently with the minimalistic nature of our claim, in this study we have only captured a small amount of the information available in duration-based content-free representations of speech activity. However, only exploring finer content-free classifications and metrics would do justice to the full potential of such records.
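The finer classifications mentioned above can be derived from the same content-free records without further annotation. The Python sketch below illustrates the idea under stated assumptions: units are hypothetical `(start, end, speaker)` triples, a silence is attributed to the speaker whose activity ended last before it, and the 500 ms threshold is the example value cited in the text rather than a validated setting.

```python
from typing import List, Tuple

Unit = Tuple[float, float, str]      # (start, end, speaker label), times in seconds
Silence = Tuple[float, float, str]   # (start, end, "pause" or "gap")


def classify_silences(units: List[Unit]) -> List[Silence]:
    """Label each joint silence as a 'pause' (same speaker before and after)
    or a 'gap' (speaker change)."""
    ordered = sorted(units)
    if not ordered:
        return []
    silences = []
    # Track the latest end of activity so far and who produced it, so that a
    # short unit overlapped by a longer one does not create a spurious silence.
    last_end, last_speaker = ordered[0][1], ordered[0][2]
    for start, end, speaker in ordered[1:]:
        if start > last_end:  # nobody was active between last_end and start
            kind = "pause" if speaker == last_speaker else "gap"
            silences.append((last_end, start, kind))
        if end > last_end:
            last_end, last_speaker = end, speaker
    return silences


def is_very_short(unit: Unit, threshold_s: float = 0.5) -> bool:
    """Flag units no longer than the threshold as candidate backchannels."""
    start, end, _ = unit
    return (end - start) <= threshold_s
```

Units flagged by `is_very_short` are only candidates for backchannel status. Whether a duration threshold separates floor-taking from supportive activity well enough in clinical interviews would need to be checked against the transcripts.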

In a similar vein, the third limitation of this study is the lack of validation against the richer spectrum of content-based analyses. As noted above, our analysis deliberately focusses on low-resolution content-free representations, in order to provide a proof of concept for the viability of this privacy-compliant approach. However, it is also true that the CIPPS corpus used in this study has been published with both transcriptions and audio recordings, and thus the full extent of the available data could be used as ground truth for the evaluation of our findings. Besides the phenomenological account by Pastore (2013), other essays published with the CIPPS corpus provide content-based analyses of the lexical (Dovetto, 2015), textual (Gemelli, 2013) and conversational (Cacchione, 2013) aspects of the interviews. These broad-stroke analyses could offer a first benchmark to assess the loss (or distortion) of information generated by our content-free representations. At a lower level, a content-based analysis of the audio files could suggest different strategies for our content-free annotations. This is particularly true for the treatment of vegetative sounds. For example, since breathing is known to be relevant for turn-taking (McFarland, 2001; Torreira et al., 2015), if other vegetative sounds such as non-interactional coughing were sufficiently rare, it would become justifiable to include all human sounds in the speech activity records.

A last clear limitation of the current study is the use of only 40 min of interaction for each of the 3 patients, out of the approximately 10 h of recordings available for the CIPPS corpus. While we believe that the current sample gives a reasonably representative picture of inter- and intra-subject variability in interactional behaviour, the annotation of the entire corpus would yield an even more robust database for the evaluation of our claim. Extending the analysis to larger corpora in the future will be straightforward if each interactant is recorded on a separate channel.

4.2 Outlook

Even though our approach is solely based on content-free speech activity records, it has the advantage of capturing macroscopic differences in the behaviour of interactants, while requiring little annotation work and being compliant with privacy requirements. Such requirements could also be met by removing all identifying information from conversation transcripts. Therefore, in the ideal case, when the respect of privacy and the effort invested in annotation are not a constraint, richer representations would of course be preferable. Only the exploration of fully contextualised exchanges, including consideration of the verbal content (Howes et al., 2021) and of the non-vocal information conveyed by gaze, gesture and posture (Perniss, 2018), can do justice to the inherent multimodality of language and, more importantly for our aims, to the subtleties of interaction (see Fig. 2).

So far we have treated visual and verbal information as equally problematic for privacy concerns. In truth, with the appropriate investment it should be possible to account for gaze, gesture and posture in an anonymised way. This is possible with manual annotation, with the automated analysis of video recordings (Holte et al., 2012), or with equipment for the recording of body movements (Gudmundsson et al., 2022; Pfeiffer, 2013) and gaze (Tonsen et al., 2020). As shown by a recent study on triadic interactions involving patients with schizophrenia (Howes & Lavelle, 2023), the inclusion of visual information, while costly, would surely increase the depth of our content-free activity records.

Alternatively, or additionally, it is possible to increase the breadth of application of our approach to different corpora, applications and research questions. These include for example the analysis of interactions other than interviews in a medical setting, such as task-oriented or unplanned conversation. Speech activity records could be useful to assess patients’ reaction to treatment, as well as to assist in quantifying the severity of symptoms across different stages of the disorder. Moreover, content-free records could be used to profile the communication styles of therapists, rather than patients, with potential benefits for the training of medical personnel. These applications and many others could be made possible with the development of dedicated software for portable devices and of secure data sharing platforms, allowing researchers and medical professionals to collect and publish large amounts of privacy-compliant data.

Data availability

Annotations and scripts are available in the Open Science Framework repository at the address https://osf.io/py47w/ (Cangemi, 2023).

References

Amblard, M., Musiol, M., & Rebuschi, M. (2021). (In)coherence of discourse formal and conceptual issues of language (Vol. 10). Springer International Publishing. https://doi.org/10.1007/978-3-030-71434-5

American Psychiatric Association. (2022). Diagnostic and statistical manual of mental disorders (Fifth Edition, Text Revision). American Psychiatric Association. https://doi.org/10.1176/appi.books.9780890425596

Andreasen, N. C. (1986). Scale for the assessment of thought, language, and communication (TLC). Schizophrenia Bulletin, 12(3), 473–482. https://doi.org/10.1093/schbul/12.3.473

Andreasen, N. C., & Flaum, M. (1991). Schizophrenia: The characteristic symptoms. Schizophrenia Bulletin, 17(1), 27–49. https://doi.org/10.1093/schbul/17.1.27

Bakhtin, M. M. (1986). The problem of speech genres. In C. Emmerson, M. Holquist, & V. W. McGee (Eds.), Speech genres and other late essays (pp. 60–102). University of Texas Press.

Bambini, V., Arcara, G., Bechi, M., Buonocore, M., Cavallaro, R., & Bosia, M. (2016). The communicative impairment as a core feature of schizophrenia: Frequency of pragmatic deficit, cognitive substrates, and relation with quality of life. Comprehensive Psychiatry, 71, 106–120. https://doi.org/10.1016/j.comppsych.2016.08.012

Bartolomeo, C., Improta, E. & Senza Peluso, M. (2013). Pause vuote e delirio nella Wahnstimmung. In F. M. Dovetto & M. Gemelli (2013), 221–252.

Ben-Zeev, D., Wang, R., Abdullah, S., Brian, R., Scherer, E. A., & Choudhury, T. (2016). Mobile behavioral sensing for outpatients and inpatients with schizophrenia. Psychiatric Services, 67, 558–561.

Biber, D., & Conrad, S. (2009). Register, genre, and style (1st ed.). Cambridge University Press. https://doi.org/10.1017/CBO9780511814358

Binswanger, L. (1933). Über Ideenflucht. Art. Institut Orell Füssli.

Blankenburg, W. (1971). Der Verlust der natürlichen Selbstverständlichkeit. Enke.

Boersma, P., & Weenink, D. (2022). Praat: Doing phonetics by computer. https://www.praat.org

Bolis, D., Balsters, J., Wenderoth, N., Becchio, C., & Schilbach, L. (2017). Beyond autism: Introducing the dialectical misattunement hypothesis and a bayesian account of intersubjectivity. Psychopathology, 50(6), 355–372. https://doi.org/10.1159/000484353

Brady, P. T. (1965). A technique for investigating on-off patterns of speech. Bell System Technical Journal, 44(1), 1–22. https://doi.org/10.1002/j.1538-7305.1965.tb04135.x

Brederoo, S. G., Nadema, F. G., Goedhart, F. G., Voppel, A. E., De Boer, J. N., Wouts, J., Koops, S., & Sommer, I. E. C. (2021). Implementation of automatic speech analysis for early detection of psychiatric symptoms: What do patients want? Journal of Psychiatric Research, 142, 299–301. https://doi.org/10.1016/j.jpsychires.2021.08.019

Brown, R. (1973). Schizophrenia, language, and reality. American Psychologist, 28, 395–403. https://doi.org/10.1037/h0034694

Cacchione, A. (2013). Se questo è un testo: Coerenza e coesione nel parlato schizofrenico. In F. M. Dovetto & M. Gemelli (2013), 187–220.

Campbell, N. (2007). Approaches to conversational speech rhythm: Speech activity in two-person telephone dialogues. Proceedings of the 16th international congress of phonetic sciences, Saarbrücken (pp. 343–348). ICPHS.

Campione, E., & Véronis, J. (2002). A large-scale multilingual study of silent pause duration. Proceedings of the 1st speech prosody conference, Aix-En-Provence (pp. 199–202). ISCA.

Cangemi, F. (2022). Schizophrenia at the crossroads of thought, language and communication. Proceedings of the thought and language meeting (Institute of Electronics, Information and Communication Engineers), Yamagata (pp. 1–5). https://ken.ieice.org/ken/paper/20221127GCOv/eng/

Cangemi, F. (2023). COSAR: Content-free speech activity records. https://osf.io/py47w

Cangemi, F., Grice, M., Lucarini, V., Spaniol, M., Vogeley, K., & Wehrle, S. (2023). Very short utterances in clinical interviews with patients with schizophrenia. 18th associazione Italiana scienze della voce (AISV) conference. https://youtu.be/VwNJhu4gTmQ

Cangemi, F., & Baumann, S. (2020). Integrating phonetics and phonology in the study of linguistic prominence. Journal of Phonetics, 81, 100993. https://doi.org/10.1016/j.wocn.2020.100993

Chapple, E. D. (1945). Interaction recorder (U.S. Patent No. 2,387,563).

Chapple, E. D. (1939). Quantitative analysis of the interaction of individuals. Proceedings of the National Academy of Sciences of the United States of America, 25(2), 58–67. https://doi.org/10.1073/pnas.25.2.58

Chapple, E. D. (1953). The standard experimental (Stress) interview as used in interaction chronograph investigations. Human Organization, 12(2), 23–32.

Chapple, E. D., & Donald, G. (1947). An evaluation of department store salespeople by the interaction chronograph. Journal of Marketing, 12(2), 173–185. https://doi.org/10.1177/002224294701200203

Chapple, E. D., & Lindemann, E. (1942). Clinical implications of measurements of interaction rates in psychiatric interviews. Applied Anthropology, 1(2), 1–11.

Clemmer, E. J. (1980). Psycholinguistic aspects of pauses and temporal patterns in schizophrenic speech. Journal of Psycholinguistic Research, 9(2), 161–185. https://doi.org/10.1007/BF01067469

Corcoran, C. M., Carrillo, F., Fernández-Slezak, D., Bedi, G., Klim, C., Javitt, D. C., Bearden, C. E., & Cecchi, G. A. (2018). Prediction of psychosis across protocols and risk cohorts using automated language analysis. World Psychiatry, 17(1), 67–75. https://doi.org/10.1002/wps.20491

Corcoran, C. M., Mittal, V. A., Bearden, C. E., Gur, E. R., Hitczenko, K., Bilgrami, Z., Savic, A., Cecchi, G. A., & Wolff, P. (2020). Language as a biomarker for psychosis: A natural language processing approach. Schizophrenia Research, 226, 158–166. https://doi.org/10.1016/j.schres.2020.04.032

Corona-Hernández, H., de Boer, J. N., Brederoo, S. G., Voppel, A. E., & Sommer, I. E. C. (2022). Assessing coherence through linguistic connectives: Analysis of speech in patients with schizophrenia-spectrum disorders. Schizophrenia Research. https://doi.org/10.1016/j.schres.2022.06.013

Covington, M. A., He, C., Brown, C., Naçi, L., McClain, J. T., Fjordbak, B. S., Semple, J., & Brown, J. (2005). Schizophrenia and the structure of language: The linguist’s view. Schizophrenia Research, 77(1), 85–98. https://doi.org/10.1016/j.schres.2005.01.016

de Ruiter, J.-P., Mitterer, H., & Enfield, N. J. (2006). Projecting the end of a speaker’s turn: A cognitive cornerstone of conversation. Language, 82(3), 515–535. https://doi.org/10.1353/lan.2006.0130

Dingemanse, M., & Liesenfeld, A. (2022). From text to talk: Harnessing conversational corpora for humane and diversity-aware language technology. Proceedings of the 60th annual meeting of the association for computational linguistics (Volume 1: Long Papers, pp. 5614–5633). https://doi.org/10.18653/v1/2022.acl-long.385

Dingemanse, M., Liesenfeld, A., Rasenberg, M., Albert, S., Ameka, F. K., Birhane, A., Bolis, D., Cassell, J., Clift, R., Cuffari, E., De Jaegher, H., Dutilh Novaes, C., Enfield, N. J., Fusaroli, R., Gregoromichelaki, E., Hutchins, E., Konvalinka, I., Milton, D., Rączaszek-Leonardi, J., … Wiltschko, M. (2023). Beyond single-mindedness: A figure-ground reversal for the cognitive sciences. Cognitive Science, 47(1), e13230. https://doi.org/10.1111/cogs.13230

Dovetto, F. M., & Gemelli, M. (2013). Il parlar matto. Schizofrenia tra fenomenologia e linguistica. Il corpus CIPPS. (Seconda edizione con DVD-ROM). Aracne.

Dovetto, F. M. (2015). Uso delle parole nella schizofrenia. In L. Mariottini (Ed.), Identità e discorsi. Studi offerti a Franca Orletti (pp. 161–174). Roma Tre-Press.

Duez, D. (1982). Silent and non-silent pauses in three speech styles. Language and Speech, 25(1), 11–28. https://doi.org/10.1177/002383098202500102

Edlund, J., Heldner, M., Moubayed, S. A., Gravano, A., & Hirschberg, J. (2010). Very short utterances in conversation. Working Papers/Lund University, Department of Linguistics and Phonetics, 54, 11–16.

Edlund, J., Heldner, M., & Hirschberg, J. (2009). Pause and gap length in face-to-face interaction. Interspeech. https://doi.org/10.21437/Interspeech.2009-710

Eggins, S., & Slade, D. (2004). Analysing casual conversation. Equinox.

Fatouros Bergman, H., Preisler, G., & Werbart, A. (2006). Communicating with patients with schizophrenia: Characteristics of well functioning and poorly functioning communication. Qualitative Research in Psychology, 3(2), 121–146.

Feldstein, S. (1962). The relationship of interpersonal involvement and affectiveness of content to the verbal communication of schizophrenic patients. The Journal of Abnormal and Social Psychology, 64, 39–45. https://doi.org/10.1037/h0040892

Finesinger, J. E., Cobb, S., Chapple, E. D., & Brazier, M. A. B. (1948). An investigation of prediction of success in naval flight training. Civil Aeronautics Administration, Division of Research.

Galasiński, D. (2011). The patient’s world: Discourse analysis and ethnography. Critical Discourse Studies, 8(4), 253–265. https://doi.org/10.1080/17405904.2011.601634

Gemelli, M. (2013). Anafora nella schizofasia. Note preliminari all’identificazione di uno ‘splitting referenziale’. In F. M. Dovetto & M. Gemelli (2013), 85–122.

Gilmartin, E., Cowan, B. R., Vogel, C., & Campbell, N. (2018). Explorations in multiparty casual social talk and its relevance for social human machine dialogue. Journal on Multimodal User Interfaces, 12(4), 297–308. https://doi.org/10.1007/s12193-018-0274-2

Goldman-Eisler, F. (1951). The measurement of time sequences in conversational behavior. British Journal of Psychology, 42, 355–362.

Gudmundsson, V., Valls-Ratés, Ï., & Niebuhr, O. (2022). The Charles—A new sensor device for measuring body language and stress in Speech Communication. Proceedings of the 33rd Conference on Electronic Speech Signal Processing, Sønderborg (pp. 162–171).

Ha, J. F., & Longnecker, N. (2010). Doctor-patient communication: A review. The Ochsner Journal, 10(1), 38–43.

Hargrove, D. S., & Martin, T. A. (1982). Development of a microcomputer system for verbal interaction analysis. Behavior Research Methods & Instrumentation, 14(2), 236–239. https://doi.org/10.3758/BF03202160

Harris, R. (1980). The language-makers. Duckworth.

Heldner, M., & Edlund, J. (2010). Pauses, gaps and overlaps in conversations. Journal of Phonetics, 38, 555–568. https://doi.org/10.1016/j.wocn.2010.08.002

Heldner, M., Edlund, J., Hjalmarsson, A., & Laskowski, K. (2011). Very short utterances and timing in turn-taking. Proceedings of the 12th annual conference of the international speech communication association, Interspeech Florence (pp. 2837–2840). ISCA.

Holte, M. B., Tran, C., Trivedi, M. M., & Moeslund, T. B. (2012). Human pose estimation and activity recognition from multi-view videos: Comparative explorations of recent developments. IEEE Journal of Selected Topics in Signal Processing, 6(5), 538–552. https://doi.org/10.1109/JSTSP.2012.2196975

Howes, C., Breitholtz, E., Lavelle, M., & Cooper, R. (2021). Justifiable reasons for everyone: Dialogical reasoning in patients with schizophrenia. Proceedings of the 25th Workshop on the Semantics and Pragmatics of Dialogue.

Howes, C., & Lavelle, M. (2023). Quirky conversations: How people with a diagnosis of schizophrenia do dialogue differently. Philosophical Transactions of the Royal Society B, 378, 20210480. https://doi.org/10.1098/rstb.2021.0480

Itakura, H. (2001). Describing conversational dominance. Journal of Pragmatics, 33, 1859–1880. https://doi.org/10.1016/S0378-2166(00)00082-5

Jaffe, J., & Feldstein, S. (1970). Rhythms of dialogue. Academic Press.

Kraepelin, E. (1915). Psychiatrie. Ein Lehrbuch für studierende und Ärzte (Eighth edition). Barth.

Kuperberg, G. R. (2010). Language in schizophrenia part 1: An introduction: Language in schizophrenia part 1. Language and Linguistics Compass, 4(8), 576–589. https://doi.org/10.1111/j.1749-818X.2010.00216.x

Lauver, P. J. (1970). Inexpensive apparatus for quantifying speech and silence behaviors. Journal of Counseling Psychology, 17, 378–379. https://doi.org/10.1037/h0029502

Laver, J. (2011). Communicative functions of phatic communion (pp. 215–238). De Gruyter Mouton.

Leeds-Hurwitz, W., & Kendon, A. (2021). The natural history of an interview and the microanalysis of behavior in social interaction: A critical moment in research practice. Holisms of communication (pp. 145–200). Language Science Press.

Levinson, S. C., & Torreira, F. (2015). Timing in turn-taking and its implications for processing models of language. Frontiers in Psychology. https://doi.org/10.3389/fpsyg.2015.00731

Linell, P. (1982). The written language bias in linguistics. Linköping University Electronic Press.

Lucarini, V., Cangemi, F., Daniel, B. D., Lucchese, J., Paraboschi, F., Cattani, C., Marchesi, C., Grice, M., Vogeley, K., & Tonna, M. (2022). Conversational metrics, psychopathological dimensions and self-disturbances in patients with schizophrenia. European Archives of Psychiatry and Clinical Neuroscience, 272(6), 997–1005. https://doi.org/10.1007/s00406-021-01329-w

Matarazzo, J. D., & Wiens, A. N. (1972). The interview: Research on its anatomy and structure. Aldine-Atherton.

Maynard, D. W., & Heritage, J. (2005). Conversation analysis, doctor–patient interaction and medical communication. Medical Education, 39(4), 428–435. https://doi.org/10.1111/j.1365-2929.2005.02111.x

McFarland, D. H. (2001). Respiratory markers of conversational interaction. Journal of Speech, Language, and Hearing Research, 44, 128–143. https://doi.org/10.1044/1092-4388(2001/012)

McNeill, D. (1985). So you think gestures are nonverbal? Psychological Review, 92, 350–371. https://doi.org/10.1037/0033-295X.92.3.350

Mueser, K. T., Bellack, A. S., Douglas, M. S., & Morrison, R. L. (1991). Prevalence and stability of social skill deficits in schizophrenia. Schizophrenia Research, 5, 167–176. https://doi.org/10.1016/0920-9964(91)90044-R

Nakazawa, M. (2022). Fmsb: Functions for medical statistics book with some demographic data (0.7.3). https://CRAN.R-project.org/package=fmsb

Orletti, F. (2000). La conversazione diseguale. Carocci.

Parnas, J., & Bovet, P. (1991). Autism in schizophrenia revisited. Comprehensive Psychiatry, 32(1), 7–21. https://doi.org/10.1016/0010-440x(91)90065-k

Parola, A., Simonsen, A., Bliksted, V., & Fusaroli, R. (2020). Voice patterns in schizophrenia: A systematic review and Bayesian meta-analysis. Schizophrenia Research, 216, 24–40. https://doi.org/10.1016/j.schres.2019.11.031

Pastore, C. (2013). Tre modi dell’esperire schizofrenico. Mondo congelato, Mondo frammentato, Mondo delirato. In F. M. Dovetto & M. Gemelli (2013), 19–44.

Perniss, P. (2018). Why we should study multimodal language. Frontiers in Psychology. https://doi.org/10.3389/fpsyg.2018.01109

Pfeiffer, T. (2013). Documentation of gestures with motion capture. In C. Müller, A. Cienki, E. Fricke, S. Ladewig, D. McNeill, & S. Tessendorf (Eds.), Body – language – communication: an international handbook on multimodality in human interaction. Handbücher zur Sprach- und Kommunikationswissenschaft/Handbooks of linguistics and communication science (HSK) 38/1 (pp. 857–868). DE GRUYTER. https://doi.org/10.1515/9783110261318.857

Pilnick, A., Hindmarsh, J., & Gill, V. T. (2009). Beyond ‘doctor and patient’: Developments in the study of healthcare interactions. Sociology of Health & Illness, 31, 787–802. https://doi.org/10.1111/j.1467-9566.2009.01194.x

R Core Team. (2022). R: A language and environment for statistical computing. R Foundation for Statistical Computing.

Raballo, A., & Maggini, C. (2005). Experiential anomalies and self-centrality in schizophrenia. Psychopathology, 38, 124–132. https://doi.org/10.1159/000085842

Sacks, H., Schegloff, E. A., & Jefferson, G. (1974). A simplest systematics for the organization of turn-taking for conversation. Language, 50(4), 696–735. https://doi.org/10.2307/412243

Saslow, G., Matarazzo, J. D., & Guze, S. B. (1955). The stability of interaction chronograph patterns in psychiatric interviews. Journal of Consulting Psychology, 19, 417–430. https://doi.org/10.1037/h0047036

Sbranna, S., Cangemi, F., & Grice, M. (2020). Quantifying L2 interactional competence. Proceedings of the 16th associazione Italiana scienze della voce conference, Rende (CS), 383–405. https://doi.org/10.17469/O2107AISV000018

Schröder, M. (2003). Experimental study of affect bursts. Speech Communication, 40, 99–116. https://doi.org/10.1016/S0167-6393(02)00078-X

Schultz, S. J. (1975). A scheme for specifying interaction units. Family Process, 14(4), 559–578. https://doi.org/10.1111/j.1545-5300.1975.00559.x

Schuppler, B. & Kelterer, A. (2021). Developing an annotation system for communicative functions for a cross-layer ASR system. In Proceedings of the first workshop on integrating perspectives on discourse annotation, Tübingen (pp. 14–18). https://aclanthology.org/2021.discann-1

Schwartz, S. (1982). Is there a schizophrenic language? Behavioral and Brain Sciences, 5, 579–626. https://doi.org/10.1017/S0140525X00013650

Starkweather, J. A. (1956). Content-free speech as a source of information about the speaker. The Journal of Abnormal and Social Psychology, 52, 394–402. https://doi.org/10.1037/h0041133

Swingle, P. G. (1984). Temporal measures of vocalization: Some methodological considerations. Journal of Personality and Social Psychology, 47, 1263–1280. https://doi.org/10.1037/0022-3514.47.6.1263

ten Have, P. (1991). Talk and institution: A reconsideration of the ‘asymmetry’ of doctor-patient interaction. Talk and Social Structure: Studies in Ethnomethodology and Conversation Analysis, 1991, 138.

Tonsen, M., Baumann, C. K., & Dierkes, K. (2020). A high-level description and performance evaluation of pupil invisible (arXiv:2009.00508). arXiv. https://doi.org/10.48550/arXiv.2009.00508

Torreira, F., Bögels, S., & Levinson, S. C. (2015). Breathing for answering: The time course of response planning in conversation. Frontiers in Psychology. https://doi.org/10.3389/fpsyg.2015.00284

Trouvain, J., & Truong, K. P. (2013). Exploring sequences of speech and laughter activity using visualisations of conversations. Workshop on Affective Social Speech Signals 2013.

Trouvain, J., & Truong, K. P. (2012). Comparing non-verbal vocalisations in conversational speech corpora. Proceedings of 11th international workshop on corpora for research on emotion sentiment and social signals (ES3 2012) (pp. 36–39). Saarland University.

Ventola, E. (1979). The structure of casual conversation in english. Journal of Pragmatics, 3(3), 267–298. https://doi.org/10.1016/0378-2166(79)90034-1

Viaro, M., & Leonardi, P. (1983). Getting and giving information: analysis of a family-interview strategy. Family Process, 22, 27–42. https://doi.org/10.1111/j.1545-5300.1983.00027.x

Ward, N. (2006). Non-lexical conversational sounds in American English. Pragmatics and Cognition, 14(1), 129–182. https://doi.org/10.1075/pc.14.1.08war

Watter, S. B. (2020). Interaction chronograph: The administration of equilibrium. Grey Room, 79, 40–77. https://doi.org/10.1162/grey_a_00292

Wharton, T. (2003). Interjections, language, and the ‘showing/saying’ continuum. Pragmatics and Cognition, 11(1), 39–91. https://doi.org/10.1075/pc.11.1.04wha

Yngve, V. (1970). On getting a word in edgewise. Papers from the 6th regional meeting, Chicago Linguistic Society, 567–578.

Ziółkowska, J. (2012). The objectifying discourse of doctors’ questions. Qualitative analysis of psychiatric interviews. Social Theory & Health. https://doi.org/10.1057/sth.2012.8

Funding

Open Access funding enabled and organized by Projekt DEAL. This research was funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation)—Project-ID 281511265–SFB 1252 Prominence in Language at the University of Cologne: “Individual behaviour in encoding and decoding prosodic prominence” (Principal Investigators: Martine Grice and Kai Vogeley). Valeria Lucarini was supported by the doctoral grant “Young Talents in Psychiatry 2021” funded by the Fondation FondaMental and the Foundation Bettencourt Schueller. These funding agencies had no further role in the study, in the analyses, or in the decision to submit the article for publication.

Author information

Contributions

Funding for the study was secured by MG and KV. FC conceptualised and designed the study. Material preparation, data collection and analysis were performed by FC, AJ, VL and MS. The first draft of the manuscript was written by FC. All authors commented on the draft, reacted to reviewers’ comments, and approved the final manuscript.

Ethics declarations

Competing interest

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cangemi, F., Grice, M., Janz, A. et al. Content-free speech activity records: interviews with people with schizophrenia. Lang Resources & Evaluation (2023). https://doi.org/10.1007/s10579-023-09666-z

DOI: https://doi.org/10.1007/s10579-023-09666-z