Abstract

The Kustaanheimo–Stiefel (KS) transformation depends on the choice of a preferred direction in the Cartesian 3D space. This choice, seldom mentioned explicitly, is typically the direction of the first coordinate axis in Celestial Mechanics and of the third one in atomic physics. The present work develops a canonical KS transformation with an arbitrary preferred direction, indicated by what we call a defining vector. Using a mix of vector and quaternion algebra, we formulate the transformation in a reference-frame-independent manner. The link between the oscillator and Keplerian first integrals is given. As an example of the present formulation, the Keplerian motion in a rotating frame is re-investigated.

1 Introduction

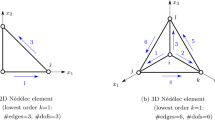

There are many ways to convert the Kepler problem into the isotropic harmonic oscillator. A comprehensive summary can be found in the monograph by Cordani (2003) and in the work of Deprit et al. (1994), the two sources overlapping only partially. Among all the methods, the Kustaanheimo–Stiefel (KS) transformation stands out for its simplicity, popularity, and history [traced back to Heinz Hopf, or even Carl Friedrich Gauss; see Volk (1976)]. The literature concerning theoretical and practical aspects of the KS variables is already vast, yet there is a feature which, to our knowledge, has not attracted enough attention, either because it seems too obvious or because it has gone unnoticed. We mean the existence of a preferred direction in the definition of the KS variables. Kustaanheimo, Stiefel, and most of their followers in the realm of Celestial Mechanics use a set of variables designed so that only the first Cartesian coordinate \(x_1\) involves the squares of the KS coordinates. In the present paper, we will refer to it as KS1. However, in atomic physics a different set, to be named KS3, has been considered standard at least since the late 1970s (e.g. Duru and Kleinert 1979). There, only \(x_3\) involves the squares. The choice of the preferred direction is of marginal significance for the unperturbed Keplerian problem, but it may either simplify or complicate the expressions resulting from added perturbations. Thus we have found it worthwhile to establish a general KS transformation with the preferred direction left unspecified.

In Sect. 2 we give a brief outline of how the KS1 transformation became established in Celestial Mechanics. Without attempting a detailed bibliographic survey, we mark five turning points: (1) the first paper of Kustaanheimo (1964), where the transformation was born in spinor form, (2) its metamorphosis into the L-matrix setup by Kustaanheimo and Stiefel (1965), (3) the early quaternion formulation by Vivarelli (1983), (4) the refinement of the quaternionic form due to Deprit et al. (1994), and (5) the interpretation of the KS variables as rotation parameters (Saha 2009). Save for the last point, the reader may observe how the special role of the \(Ox_1\) axis is transferred from one setup to another, and how the presence of a preferred direction becomes more and more evident.

Once the stage has been set, we discuss the main theme in Sect. 3. Introducing the notion of a defining vector, we build the KS transformation (and its canonical extension) with an arbitrary preferred direction. Working within the general quaternion and vector formalism, we refrain from using explicit expressions in terms of coordinates. This should help to capture the intrinsic, coordinate-independent features of the KS transformation.

Section 4 considers the general properties of the Hamiltonian in KS variables. Special attention is paid to linking the invariants of the unperturbed problem in its two incarnations: the Kepler problem in Cartesian coordinates, and the isotropic oscillator in KS variables.

Finally, to show that the choice of the defining vector does matter in a perturbed problem, we return to the Kepler problem in a rotating reference frame. Compared with earlier works based on the KS1 set, the task can be considerably simplified by an appropriate choice of the preferred direction, as demonstrated in Sect. 5.

2 KS1 transformation

2.1 The roots

What Paul Kustaanheimo announced at the Oberwolfach conference on Mathematical Methods of Celestial Mechanics and published the same year (Kustaanheimo 1964) is worth recalling briefly, because neither the Annales Universitatis Turkuensis nor the Publications of the Astronomical Observatory of Helsinki are widely accessible. Moreover, an awkward notation has masked some features that emerge immediately when more common conventions are applied.

Given a Cartesian position vector \({\varvec{x}}=(x_1,x_2,x_3)^\mathrm {T}\), Kustaanheimo formally extended it to a null 4-vector of the Minkowski space, using the length \(r = \sqrt{{\varvec{x}}\cdot {\varvec{x}}} = x_0\) as an extra coordinate. These four numbers served as the coefficients of a linear combination of the unit matrix \({\mathbf {\sigma }}_0\) and the Pauli matrices (Cartan 1966)

The result is a complex matrix

assigned to the vector \((x_0,x_1,x_2,x_3)^\mathrm {T}\). The different treatment of \(x_1\), placed on the diagonal, and of the complex pair \(x_2 \pm \mathrm {i}x_3\) can already be spotted at this stage (Footnote 1).

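A Hermitian matrix with vanishing determinant and nonnegative trace, such as \(\mathbf {S}\) (for the null vector, \(\det \mathbf {S} \propto x_0^2 - x_1^2 - x_2^2 - x_3^2 = 0\)), can be expressed in terms of two complex numbers \(S_1\) and \(S_2\). Using a complex 2-vector \({\varvec{s}} = (S_1,S_2)^\mathrm {T}\), Kustaanheimo (1964) defined \(\mathbf {S}\) as the Hermitian outer product of \({\varvec{s}}\) with itself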

By the equivalence of (2) and (3), the vector \({\varvec{s}}\) becomes a rank 1 spinor (Footnote 2), and the matrix \(\mathbf {S}\) a rank 2 spinor associated with \({\varvec{x}}\) (cf. Bellandi Filho and Menon 1987; Steane 2013). Equating the respective elements of (2) and (3), one readily finds

As noted by Kustaanheimo, the transformation is not one-to-one: it involves only products of the spinor components with conjugates, so any spinor \({\varvec{q}} = (Q_1, Q_2)^\mathrm {T} = {\varvec{s}} \exp {\mathrm {i}\phi }\) leads to the same result. Geometrically, any pair of complex numbers obtained by rotating \(S_1\) and \(S_2\) in the complex plane by the same angle generates the same position vector \({\varvec{x}}\) in (4).
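A small numerical sketch may make the spinor construction and its phase freedom tangible. Since equations (1) to (4) are not reproduced here, we assume the layout suggested by the text: \(x_0 \pm x_1\) on the diagonal and \(x_2 \mp \mathrm {i}x_3\) off the diagonal, with no extra factor of 1/2; this ordering and all function names are our own illustrative choices, not Kustaanheimo's notation. The sketch builds one spinor for a given \({\varvec{x}}\), checks that its Hermitian outer product reproduces the matrix, and confirms that a common phase factor leaves \({\varvec{x}}\) unchanged.

```python
import cmath
import math


def hermitian_matrix(x):
    """Assumed layout of (2): x0 +/- x1 on the diagonal, x2 -/+ i x3 off it (hypothetical)."""
    x1, x2, x3 = x
    x0 = math.sqrt(x1 * x1 + x2 * x2 + x3 * x3)   # null 4-vector: x0 = r
    return [[complex(x0 + x1), complex(x2, -x3)],
            [complex(x2, x3), complex(x0 - x1)]]


def outer(s):
    """Hermitian outer product s s^dagger of a complex 2-vector s."""
    return [[s[i] * s[j].conjugate() for j in range(2)] for i in range(2)]


def spinor_from_x(x):
    """One member of the fiber, with S1 real and positive (needs x0 + x1 > 0)."""
    m = hermitian_matrix(x)
    s1 = math.sqrt(m[0][0].real)
    s2 = m[1][0] / s1                              # from S2 conj(S1) = x2 + i x3
    return (complex(s1), s2)


def x_from_spinor(s):
    """Read x back from s s^dagger under the same assumed layout."""
    s1, s2 = s
    x1 = (abs(s1) ** 2 - abs(s2) ** 2) / 2
    z = s2 * s1.conjugate()
    return (x1, z.real, z.imag)


x = (0.3, -1.2, 0.5)
s = spinor_from_x(x)
phase = cmath.exp(0.7j)                            # common rotation of S1 and S2
s_rotated = (s[0] * phase, s[1] * phase)
print(x_from_spinor(s))
print(x_from_spinor(s_rotated))                    # same x up to rounding
```

The choice of a real, positive \(S_1\) is merely one convenient gauge; any other phase gives another member of the same fiber.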

The regularization of the Keplerian motion does not appear until the coordinate transformation (4) is augmented by a Sundman-type time transformation. Kustaanheimo proposed a general formula relating the pseudo-time \(\tau \) to its physical counterpart t

where K could be an arbitrary function of position, velocity and time. This flexibility has never been seriously explored, and the simplest choice \(K=0\) has become standard. The regularization converts the Kepler problem with energy constant h into a spinor oscillator problem

yet this simple form does not emerge until the bilinear constraint

is imposed (Footnote 3), where the prime stands for the derivative with respect to the Sundman time \(\tau \). Kustaanheimo justified this choice by observing the invariance of the left-hand side of (7) in the perturbed Kepler problem with a particular form of perturbation (linear in coordinates, velocities and angular momentum).

One can only speculate what the fate of Kustaanheimo’s transformation would have been had it not attracted the attention of Eduard Stiefel, who coauthored the paper published the next year (Kustaanheimo and Stiefel 1965). In the new mise-en-scène, the complex variables and the unnecessary generalization were dropped, and the discussion focused on a real 4-vector \({\varvec{u}}=(u_1,u_2,u_3,u_4)^\mathrm {T}\), containing the parameters of the substitution

Then, Eq. (4) takes the form

and the constraint (7) is turned into

This derivation, however, cannot be found in the paper; Kustaanheimo and Stiefel (1965) burnt the bridge leading back to the 1964 work and started the presentation from the matrix equation relating \({\varvec{u}}\) to a 4-vector \({\varvec{x}} =(x_1,x_2,x_3,0)^\mathrm {T}\) through the matrix product

The L-matrix definition, given by Kustaanheimo and Stiefel (1965) with an intriguing clause ‘for example’, was

This definition leads to the first three equations (9) for \(x_j\), whereas the last of equations (9) had been postulated as a required property of \(\mathbf {L}({\varvec{u}})\). Notably, the special role of \(x_1\) has been conserved, as visible in the first of equations (9): the other coordinates are defined by products \(u_i u_j\), whereas \(x_1\) is composed of pure squares \(u_i^2\).
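The special role of \(x_1\) in the L-matrix setup is easy to check numerically. Because (12) itself is not reproduced here, the sketch below uses a commonly quoted form of the KS L-matrix; this is an assumption on our part, since signs and index order vary between authors. It verifies that \(\mathbf {L}({\varvec{u}}){\varvec{u}}\) has a vanishing fourth component, that \(x_1\) contains only squares, and that \(\mathbf {L}\) is orthogonal up to the factor \(|{\varvec{u}}|^2\), so that \(r = |{\varvec{u}}|^2\).

```python
import random


def l_matrix(u):
    """A commonly quoted KS L-matrix (assumed convention; signs vary by author)."""
    u1, u2, u3, u4 = u
    return [[u1, -u2, -u3, u4],
            [u2, u1, -u4, -u3],
            [u3, u4, u1, u2],
            [u4, -u3, u2, -u1]]


def matvec(m, u):
    return [sum(m[i][k] * u[k] for k in range(4)) for i in range(4)]


def gram(m):
    """L^T L, which should equal |u|^2 times the identity."""
    return [[sum(m[k][i] * m[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


rnd = random.Random(3)
u = [rnd.uniform(-1.0, 1.0) for _ in range(4)]
x = matvec(l_matrix(u), u)
u_sq = sum(ui * ui for ui in u)
# x1 is built from pure squares; x2, x3 from mixed products; x4 vanishes
print(x[0], u[0] ** 2 - u[1] ** 2 - u[2] ** 2 + u[3] ** 2)
print(x[3])                                       # zero up to rounding
print(sum(xi * xi for xi in x) ** 0.5, u_sq)      # r = |u|^2
```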

The work, published in a more widespread journal and written in a manner friendly to the Celestial Mechanics audience, considerably helped to promulgate what is now known as the Kustaanheimo–Stiefel transformation. Under the influence of the Stiefel and Scheifele (1971) monograph, the matrix approach became paradigmatic in the Celestial Mechanics community, and the ‘for example’ choice (12) has been taken for granted, save for occasional renumbering of indices and a change of sign in \(u_4\).

2.2 Enter quaternions

The close relation between spinors and quaternions was already known to Cartan. But in the framework of the L-matrix formulation, the relation of the KS variables to quaternion algebra is merely an additional aspect, mentioned by Kustaanheimo and Stiefel (1965) or Stiefel and Scheifele (1971) as an interesting but probably unimportant curio. Setting the KS transformation in the quaternion formalism, as initiated by Vivarelli (1983) and applauded by Deprit et al. (1994), opened new paths to understanding the known properties of the transformation, as well as to its generalization to higher dimensions.

Following Deprit et al. (1994) we treat a quaternion \(\mathsf {v} = (v_0,v_1,v_2,v_3)\), or \(\mathsf {v}=(v_0,{\varvec{v}})\), as a union of a scalar \(v_0\) and of a vector \({\varvec{v}} = (v_1,v_2,v_3)^\mathrm {T}\). Conjugating a quaternion, we change the signs of its vector part, i.e.

Extracting the vector part is performed by means of the operator \(\natural \), so that for \(\mathsf {v}=(v_0,{\varvec{v}})\)

The usual scalar product, marked with a dot,

is commutative, but the quaternion product

is not. Using a standard basis

we recover the classical ‘1ijk’ multiplication rules of Hamilton for the basis quaternions: \(\mathsf {e}_0 \mathsf {e}_1 = \mathsf {e}_1\) for \(1 i = i\), \(\mathsf {e}_1 \mathsf {e}_2 = \mathsf {e}_3\) for \(ij=k\), etc. The inverse of a quaternion is, in full analogy with complex numbers, \(\mathsf {v}^{-1} = \bar{\mathsf {v}}/|\mathsf {v}|^2\), where the norm is \(|\mathsf {v}| = \sqrt{\mathsf {v}\cdot \mathsf {v}}\). As is typical for noncommutative operations, the conjugate of a product reverses the order of the factors:

Let us observe a useful property of the mixed dot product,

echoing the mixed product rule of the standard vector algebra.
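The algebra above fits in a few lines of code, which is handy for checking formulas numerically. The following sketch (helper names such as qmul and qdot are ours, not the paper's) implements the Hamilton product in the scalar–vector convention, the conjugate, the scalar product and the inverse. It checks \(\mathsf {e}_1 \mathsf {e}_2 = \mathsf {e}_3\), the reversal of factors under conjugation, and mixed dot product identities of the kind expressed by (19), namely \((\mathsf {a}\mathsf {b})\cdot \mathsf {c} = \mathsf {a}\cdot (\mathsf {c}\bar{\mathsf {b}}) = \mathsf {b}\cdot (\bar{\mathsf {a}}\mathsf {c})\); the form printed as (19) may be arranged differently.

```python
import random


def qmul(a, b):
    """Hamilton product (a0, a)(b0, b) = (a0 b0 - a.b, a0 b + b0 a + a x b)."""
    a0, a1, a2, a3 = a
    b0, b1, b2, b3 = b
    return (a0 * b0 - a1 * b1 - a2 * b2 - a3 * b3,
            a0 * b1 + a1 * b0 + a2 * b3 - a3 * b2,
            a0 * b2 + a2 * b0 + a3 * b1 - a1 * b3,
            a0 * b3 + a3 * b0 + a1 * b2 - a2 * b1)


def conj(q):
    """Conjugate: change the signs of the vector part."""
    return (q[0], -q[1], -q[2], -q[3])


def qdot(a, b):
    """Commutative scalar product of quaternions seen as 4-vectors."""
    return sum(ai * bi for ai, bi in zip(a, b))


def qinv(q):
    """Inverse conj(q) / |q|^2, as for complex numbers."""
    n2 = qdot(q, q)
    return tuple(ci / n2 for ci in conj(q))


def close(p, q, tol=1e-12):
    return all(abs(pi - qi) < tol for pi, qi in zip(p, q))


E = [tuple(1.0 if i == k else 0.0 for i in range(4)) for k in range(4)]  # e0..e3
print(qmul(E[1], E[2]))  # (0.0, 0.0, 0.0, 1.0), i.e. ij = k

rnd = random.Random(7)
a, b, c = (tuple(rnd.uniform(-1, 1) for _ in range(4)) for _ in range(3))
print(close(conj(qmul(a, b)), qmul(conj(b), conj(a))))   # conjugate of a product
print(close(qmul(a, qinv(a)), E[0]))                     # inverse
# mixed dot product: (ab).c = a.(c conj(b)) = b.(conj(a) c)
print(abs(qdot(qmul(a, b), c) - qdot(a, qmul(c, conj(b)))) < 1e-12,
      abs(qdot(qmul(a, b), c) - qdot(b, qmul(conj(a), c))) < 1e-12)
```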

Another useful operation is called a quaternion outer product (Morais et al. 2014) or a quaternion cross product (Stiefel and Scheifele 1971; Vivarelli 1988; Deprit et al. 1994). Conventions vary among the authors; we adopt the one of Deprit et al. (1994)

A remarkable property of the cross product, not mentioned by Deprit et al. (1994), is a factor exchange rule

following directly from (20) and (18).

Note that the cross product always results in a quaternion with null scalar part, called a pure vector (Deprit et al. 1994) or, more often, a pure quaternion (e.g. Morais et al. 2014). We adopt the former convention.

Casting the KS transformation in a quaternion form is not a novelty; it can already be found in Stiefel and Scheifele (1971). In spite of the discouraging comments attached by the authors, Vivarelli (1983) returned to this formalism and issued a different quaternion form of the transformation. Feeling obliged to adhere to the ‘for example’ convention of Kustaanheimo and Stiefel (1965), she reconstructed the transformation (9) as a quaternion product

where \(\mathsf {u} = (u_1,u_2,u_3,u_4)\), and the product in square brackets is an ‘anti-involute’ \(\mathsf {u}_*\) of \(\mathsf {u}\) (an operation that actually amounts to a trivial change of sign in \(u_4\); Footnote 4). Of course, the presence of \(\mathsf {e}_3\) in (22) does not give the direction of \(x_3\) any special role; Vivarelli’s transformation remains pure KS1.

Deprit et al. (1994) decided to link a more natural, vector-type quaternion \(\mathsf {x} = (0,x_1,x_2,x_3)\) with a KS quaternion \(\mathsf {v} = (v_0,v_1,v_2,v_3)\) and then, not needing an anti-involute, found that (Footnote 5)

which is not far from the original quaternion formulation of Stiefel and Scheifele (1971). Converting a Stiefel–Scheifele–Vivarelli quaternion \(\mathsf {u}\) to a Deprit–Elipe–Ferrer quaternion \(\mathsf {v}\) can be effected by the rule

With this rule, the outcome of (23) is equivalent to the transformation of Kustaanheimo and Stiefel (1965). In particular, the distinguished role of \(x_1\) remains unaffected, and is clearly marked by the presence of \(\mathsf {e}_1\) in Eq. (23), as follows from the interpretation given below.

2.3 Kustaanheimo–Stiefel meet Euler–Rodrigues

For practitioners, the most appreciated property of quaternions is their straightforward relation to rotation. Rotation of a vector \({\varvec{x}}\), formally treated as a quaternion with null scalar part \((0,{\varvec{x}})\), is specified by a unit quaternion \(\mathsf {q}\), containing the complete information about the rotation angle \(0 \le \theta \le \pi \) and the rotation axis given by the unit vector \({\varvec{n}}\). Then, with

the rotated vector \({\varvec{y}}\) is obtained through the quaternion product

If we write the unit quaternion appearing in (26) simply as \(\mathsf {q}=(q_0,q_1,q_2,q_3)\), its components are the Euler–Rodrigues parameters (sometimes called the Cayley parameters) of the rotation matrix. Indeed, skipping the scalar part, Eq. (26) may be rewritten in the matrix form as

with the rotation matrix

This property reveals the meaning of the quaternion KS transformation (23):

Thus we find another argument in favor of the claim that the KS1 transformation attaches a special role to the axis \(Ox_1\).
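To make the rotational reading concrete, the following sketch implements (25), (26) and the Euler–Rodrigues matrix in its standard form, homogeneous of degree 2 in the quaternion components, which we assume is what (28) denotes. For an arbitrary, non-normalized \(\mathsf {v}\), the vector part of \(\mathsf {v}\,\mathsf {e}_1\bar{\mathsf {v}}\) is the first column of \(\mathbf {R}(\mathsf {v})\), and its first entry contains only squares: the KS1 signature discussed above. The factor \(1/\alpha \) introduced in Sect. 3 is omitted, and the helper names are ours.

```python
import math
import random


def qmul(a, b):
    """Hamilton product in the (scalar, vector) convention."""
    a0, a1, a2, a3 = a
    b0, b1, b2, b3 = b
    return (a0 * b0 - a1 * b1 - a2 * b2 - a3 * b3,
            a0 * b1 + a1 * b0 + a2 * b3 - a3 * b2,
            a0 * b2 + a2 * b0 + a3 * b1 - a1 * b3,
            a0 * b3 + a3 * b0 + a1 * b2 - a2 * b1)


def conj(q):
    return (q[0], -q[1], -q[2], -q[3])


def axis_angle(n, theta):
    """Unit quaternion (cos(theta/2), sin(theta/2) n) for a unit axis n."""
    s = math.sin(theta / 2)
    return (math.cos(theta / 2), s * n[0], s * n[1], s * n[2])


def sandwich(q, x):
    """Vector part of q (0, x) conj(q): rotation if |q| = 1, rotation/scaling otherwise."""
    return qmul(qmul(q, (0.0, *x)), conj(q))[1:]


def rodrigues_matrix(q):
    """Euler-Rodrigues matrix, homogeneous of degree 2 in the components of q."""
    q0, q1, q2, q3 = q
    return [[q0*q0 + q1*q1 - q2*q2 - q3*q3, 2*(q1*q2 - q0*q3), 2*(q1*q3 + q0*q2)],
            [2*(q1*q2 + q0*q3), q0*q0 - q1*q1 + q2*q2 - q3*q3, 2*(q2*q3 - q0*q1)],
            [2*(q1*q3 - q0*q2), 2*(q2*q3 + q0*q1), q0*q0 - q1*q1 - q2*q2 + q3*q3]]


q = axis_angle((0.0, 0.0, 1.0), math.pi / 2)
print(sandwich(q, (1.0, 0.0, 0.0)))              # e1 rotated to e2 (up to rounding)

rnd = random.Random(11)
v = tuple(rnd.uniform(-1, 1) for _ in range(4))  # a KS quaternion, not normalized
x = sandwich(v, (1.0, 0.0, 0.0))                 # KS1: x = v e1 conj(v)
column1 = [row[0] for row in rodrigues_matrix(v)]
print(x, column1)                                # identical up to rounding
```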

The existence of some relation between KS variables and rotation was mentioned ‘for the record’ by Stiefel and Scheifele (1971), who declared no interest in studying it more closely. Vivarelli (1983) then returned to this issue, but her description is based on the statement that, since a unit quaternion \(\mathsf {q}\) ‘represents a rotation’, the product \(\mathsf {q} \mathsf {q}_*\) also ‘represents’ some rotation, whose axis and angle expressions she provided. But, unlike (23), the assignment \(\mathsf {x} = \mathsf {q} \mathsf {q}_*\) is not a formula for the rotation of some vector, which leaves the whole argument in suspense. It took years until Saha (2009) made an explicit reference to rotation. His variant of the KS transformation

differs from (23) in two aspects: the conjugation sequence is different, and the basis quaternion \(\mathsf {e}_3\) is used instead of \(\mathsf {e}_1\) (a rare example of the KS3 convention in Celestial Mechanics). According to Saha (2009), Eq. (29) implies the rotation of the third axis to the \({\varvec{x}}\) direction, although it actually describes the inverse rotation: from \({\varvec{x}}\) to \({\varvec{e}}_3\). Then, up to a sign mismatch, the right-hand side of (29) coincides with the third column of the matrix \(\mathbf {R}({\varvec{v}})\).

3 KS transformation with arbitrary defining vector

3.1 Point transformation

The most straightforward generalization of the quaternion formulation proposed by Deprit et al. (1994) is to consider an arbitrary unit quaternion

and the transformation

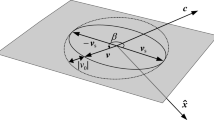

where a positive real parameter \(\alpha \), having the dimension of length, is introduced as in Deprit et al. (1994) to let the components of \(\mathsf {v}\) have the same dimension as \(\mathsf {x}\), as well as to allow a convenient choice of units later on. Since the scalar component of (31) is \(x_0 = c_0 \, \mathsf {v} \cdot \mathsf {v}\), and \(c_0\) does not appear in the vector part of \(\mathsf {x}\), we may simply set \(c_0=0\). Thus \(\mathsf {c} = (0,{\varvec{c}})\), and its vector part will be called a defining vector. Throughout the text, the defining vector is assumed to have unit length, \(||{\varvec{c}}||=1\).

The remaining subsystem of (31) may be set in the matrix-vector form

where \(\mathbf {R}\) is defined in Eq. (28). Alternatively, we can represent (32) as

or

The fibration property, known since Kustaanheimo (1964), may be stated in the general case as follows: quaternions \(\mathsf {v}\) and

generate the same vector \({\varvec{x}}\) for all values of angle \(\phi \). In other words, the point \({\varvec{x}}\) maps onto a fiber consisting of all quaternions \(\mathsf {w}\) generated from a given representative \(\mathsf {v}\). The proof is elementary, once we recall Eq. (18). Then

because the part in square brackets describes the rotation of \({\varvec{c}}\) around itself. Thus, to a given rotation/scaling matrix \(\mathbf {R}(\mathsf {v})\), exactly two quaternions can be assigned (\(\mathsf {v}\) and \(-\mathsf {v}\)), but the product of \(\mathbf {R}\) and a specified vector \({\varvec{c}}\) allows more freedom. This means also that the ‘geometrical interpretation’, stated in Sect. 2.3, refers to only one representative of the fiber.
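The fibration property lends itself to a quick numerical check. The sketch assumes that (31), with \(c_0=0\), reads \(\mathsf {x} = \mathsf {v}\,\mathsf {c}\,\bar{\mathsf {v}}/\alpha \) (consistent with \(\bar{\mathsf {v}}\mathsf {v} = \alpha r\) used in Sect. 4.2.2), and that the fiber (35) is generated by right multiplication with \((\cos \phi , \sin \phi \,{\varvec{c}})\), i.e. by a rotation about \({\varvec{c}}\) itself. Both readings, as well as the helper names, are our assumptions.

```python
import math
import random


def qmul(a, b):
    """Hamilton product in the (scalar, vector) convention."""
    a0, a1, a2, a3 = a
    b0, b1, b2, b3 = b
    return (a0 * b0 - a1 * b1 - a2 * b2 - a3 * b3,
            a0 * b1 + a1 * b0 + a2 * b3 - a3 * b2,
            a0 * b2 + a2 * b0 + a3 * b1 - a1 * b3,
            a0 * b3 + a3 * b0 + a1 * b2 - a2 * b1)


def conj(q):
    return (q[0], -q[1], -q[2], -q[3])


def ks_position(v, c, alpha=1.0):
    """Vector part of v (0, c) conj(v) / alpha; the scalar part vanishes for a pure c."""
    x = qmul(qmul(v, (0.0, *c)), conj(v))
    return tuple(xi / alpha for xi in x[1:])


def fiber_member(v, c, phi):
    """w = v (cos phi, sin phi c), which should give the same x for every phi."""
    return qmul(v, (math.cos(phi), *(math.sin(phi) * ci for ci in c)))


rnd = random.Random(5)
alpha = 2.5
c = tuple(ci / 3.0 for ci in (1.0, 2.0, 2.0))     # unit defining vector
v = tuple(rnd.uniform(-1, 1) for _ in range(4))
x = ks_position(v, c, alpha)
for phi in (0.3, 1.0, 2.5):
    print(ks_position(fiber_member(v, c, phi), c, alpha))   # same as x up to rounding
```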

According to the fibration property, inverting the transformation (32) amounts to picking some particular \(\mathsf {v}\) that serves as the generator of the fiber. Since \(\mathbf {R}\) is homogeneous of degree 2, introducing a unit quaternion \(\mathsf {q} = \mathsf {v}/|\mathsf {v}|\) we obtain

where \(\mathbf {R}(\mathsf {q}) \in \mathrm {SO}(3,{\mathbb {R}})\). Accordingly

since \(||{\varvec{c}}||=1\) by assumption. Thus, the transformation

has the meaning of rotation from \({\varvec{c}}\) to \(\hat{{\varvec{x}}}\).

Recalling the axis-angle decomposition (25) we can aim at some ‘natural’ choice of \(\mathsf {q}\) based upon rotation axis \({\varvec{n}}\) and angle \(\theta \) resulting from elementary vector identities. Thus, the vector part of \(\mathsf {q}\) is

whereas

Note the singular case \({\varvec{c}} \cdot \hat{{\varvec{x}}} = - 1\), when the actual choice should be \(\mathsf {q} = (0,{\varvec{n}})\), with an arbitrary unit vector \({\varvec{n}}\) orthogonal to \({\varvec{c}}\) (and thus to \(\hat{{\varvec{x}}}\)). For a (perturbed) Kepler problem, this may only happen on a polar orbit, with \({\varvec{c}}\) lying in the (osculating) orbit plane. Another problematic situation concerns the collision \({\varvec{x}}={\varvec{0}}\), when the KS quaternion \(\mathsf {v}=\mathsf {0}\) simply cannot be normalized to \(\mathsf {q}\), and the notion of rotation is inappropriate.

Thus we first propose an inversion rule

and

It differs from the rules of Stiefel and Scheifele (1971), which are effectively based on the sign of \({\varvec{c}} \cdot {\varvec{x}}\) (with \({\varvec{c}} = {\varvec{e}}_1\)).
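The natural inversion rule can be sketched as follows. Instead of transcribing (40) to (43), which are not reproduced here, we build \(\mathsf {q}\) directly from the axis \({\varvec{c}}\times \hat{{\varvec{x}}}\) and the angle \(\theta \) with \(\cos \theta = {\varvec{c}}\cdot \hat{{\varvec{x}}}\), using \(\cos (\theta /2) = \sqrt{(1+\cos \theta )/2}\) and \(\sin (\theta /2)\,{\varvec{n}} = {\varvec{c}}\times \hat{{\varvec{x}}}/[2\cos (\theta /2)]\); in the singular case an arbitrary unit \({\varvec{n}}\perp {\varvec{c}}\) is picked. The map \(\mathsf {x} = \mathsf {v}\,\mathsf {c}\,\bar{\mathsf {v}}/\alpha \) is our assumed reading of (31), and the tolerance that detects the singular case is an arbitrary choice.

```python
import math


def qmul(a, b):
    """Hamilton product in the (scalar, vector) convention."""
    a0, a1, a2, a3 = a
    b0, b1, b2, b3 = b
    return (a0 * b0 - a1 * b1 - a2 * b2 - a3 * b3,
            a0 * b1 + a1 * b0 + a2 * b3 - a3 * b2,
            a0 * b2 + a2 * b0 + a3 * b1 - a1 * b3,
            a0 * b3 + a3 * b0 + a1 * b2 - a2 * b1)


def conj(q):
    return (q[0], -q[1], -q[2], -q[3])


def cross3(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def norm3(a):
    return math.sqrt(sum(ai * ai for ai in a))


def unit_orthogonal(c):
    """Some unit vector orthogonal to c (used only in the singular case)."""
    helper = (1.0, 0.0, 0.0) if abs(c[0]) < 0.9 else (0.0, 1.0, 0.0)
    n = cross3(c, helper)
    return tuple(ni / norm3(n) for ni in n)


def invert_natural(x, c, alpha=1.0, tol=1e-12):
    """v = sqrt(alpha r) q, where q rotates c to x-hat about the axis c x x-hat."""
    r = norm3(x)
    xh = tuple(xi / r for xi in x)
    cos_theta = sum(ci * xi for ci, xi in zip(c, xh))
    if 1.0 + cos_theta < tol:                      # x-hat = -c: half-turn about n orthogonal to c
        q = (0.0, *unit_orthogonal(c))
    else:
        k = math.sqrt(2.0 * (1.0 + cos_theta))     # equals 2 cos(theta/2)
        q = ((1.0 + cos_theta) / k, *(ai / k for ai in cross3(c, xh)))
    return tuple(math.sqrt(alpha * r) * qi for qi in q)


def ks_position(v, c, alpha=1.0):
    x = qmul(qmul(v, (0.0, *c)), conj(v))
    return tuple(xi / alpha for xi in x[1:])


c = (0.0, 0.6, 0.8)
for x in [(1.0, -2.0, 0.5), (0.0, -1.2, -1.6)]:    # generic case and x-hat = -c
    v = invert_natural(x, c, alpha=3.0)
    print(x, ks_position(v, c, alpha=3.0))         # the two agree up to rounding
```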

An interesting alternative was proposed by Saha (2009), whose inversion rule yields a pure vector \((0,{\varvec{v}})\). We can obtain it from (42) through a quaternion product of \(\mathsf {v}\) with \((0, {\varvec{c}})\) or its conjugate [both being particular cases of (35)]. The result is indeed considerably simpler:

and (43) otherwise. The plus or minus sign can be chosen at will (both \(\mathsf {v}\) and \(-\mathsf {v}\) belong to the same fiber). In practice, while converting a sequence of positions forming an orbit \({\varvec{x}}(t)\), we can switch the sign at the instants where the motion in \(\mathsf {v}\) would otherwise appear discontinuous. Note that the choice (44) allows plotting the evolution of KS variables in \({\mathbb {R}}^3\), furnishing a spatial trajectory \({\varvec{v}}(t)\). The vector \({\varvec{v}}\) defined by (44) will be called an SKS vector (after Saha, Kustaanheimo, and Stiefel). In order to distinguish general formulae from those referring to the SKS vector, we will use the subscript ‘s’ for the latter.
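The SKS vector admits a compact closed form that is convenient in code. Carrying out the product \(\mathsf {v}(0,{\varvec{c}})\) on the natural representative gives, by our own calculation rather than a transcription of (44), \({\varvec{v}}_\mathrm {s} = \pm \sqrt{\alpha /[2(r+{\varvec{c}}\cdot {\varvec{x}})]}\,({\varvec{x}}+r{\varvec{c}})\), a vector along the bisector of \({\varvec{c}}\) and \(\hat{{\varvec{x}}}\); for a pure vector the KS map reduces to \(\alpha {\varvec{x}} = 2({\varvec{v}}\cdot {\varvec{c}}){\varvec{v}} - |{\varvec{v}}|^2{\varvec{c}}\). The sketch checks both statements against the quaternion form \(\mathsf {x} = \mathsf {v}\,\mathsf {c}\,\bar{\mathsf {v}}/\alpha \) assumed for (31); helper names are ours.

```python
import math


def qmul(a, b):
    """Hamilton product in the (scalar, vector) convention."""
    a0, a1, a2, a3 = a
    b0, b1, b2, b3 = b
    return (a0 * b0 - a1 * b1 - a2 * b2 - a3 * b3,
            a0 * b1 + a1 * b0 + a2 * b3 - a3 * b2,
            a0 * b2 + a2 * b0 + a3 * b1 - a1 * b3,
            a0 * b3 + a3 * b0 + a1 * b2 - a2 * b1)


def conj(q):
    return (q[0], -q[1], -q[2], -q[3])


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def cross3(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def norm3(a):
    return math.sqrt(dot(a, a))


def unit_orthogonal(c):
    helper = (1.0, 0.0, 0.0) if abs(c[0]) < 0.9 else (0.0, 1.0, 0.0)
    n = cross3(c, helper)
    return tuple(ni / norm3(n) for ni in n)


def sks_vector(x, c, alpha=1.0, sign=1.0, tol=1e-12):
    """Pure-vector representative of the fiber over x (bisector of c and x-hat)."""
    r = norm3(x)
    s = r + dot(c, x)
    if s < tol * r:                                # x-hat = -c: any n orthogonal to c will do
        return tuple(sign * math.sqrt(alpha * r) * ni for ni in unit_orthogonal(c))
    k = sign * math.sqrt(alpha / (2.0 * s))
    return tuple(k * (xi + r * ci) for xi, ci in zip(x, c))


def position_from_sks(v, c, alpha=1.0):
    """KS map for a pure vector v: x = (2 (v.c) v - |v|^2 c) / alpha."""
    vc, vv = dot(v, c), dot(v, v)
    return tuple((2.0 * vc * vi - vv * ci) / alpha for vi, ci in zip(v, c))


def ks_position(v, c, alpha=1.0):
    x = qmul(qmul(v, (0.0, *c)), conj(v))
    return tuple(xi / alpha for xi in x[1:])


c = (0.0, 0.0, 1.0)                                # a KS3-like choice, for illustration
x = (0.4, -1.1, 0.7)
vs = sks_vector(x, c, alpha=2.0)
print(position_from_sks(vs, c, alpha=2.0))        # recovers x
print(ks_position((0.0, *vs), c, alpha=2.0))      # the quaternion form agrees
```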

Any KS quaternion \(\mathsf {v}\) with \(v_0 \ne 0\) can be reduced to the SKS vector by the product

where the gauge function is a unit quaternion

This reduction rule can be used if KS coordinates are followed without reference to the Cartesian position \({\varvec{x}}\).
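A minimal sketch of such a reduction follows, assuming that the gauge quaternion has the form \((\cos \phi , \sin \phi \,{\varvec{c}})\) with the angle chosen so that the scalar part of the product vanishes; by our calculation this gives a gauge proportional to \(({\varvec{v}}\cdot {\varvec{c}}, v_0{\varvec{c}})\). This is our own construction and may differ from (45) and (46) in sign or ordering conventions. The checks confirm that the result is a pure vector, lies along \({\varvec{x}}+r{\varvec{c}}\), and leaves \({\varvec{x}}\) unchanged.

```python
import math
import random


def qmul(a, b):
    """Hamilton product in the (scalar, vector) convention."""
    a0, a1, a2, a3 = a
    b0, b1, b2, b3 = b
    return (a0 * b0 - a1 * b1 - a2 * b2 - a3 * b3,
            a0 * b1 + a1 * b0 + a2 * b3 - a3 * b2,
            a0 * b2 + a2 * b0 + a3 * b1 - a1 * b3,
            a0 * b3 + a3 * b0 + a1 * b2 - a2 * b1)


def conj(q):
    return (q[0], -q[1], -q[2], -q[3])


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def cross3(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def gauge(v, c):
    """Unit quaternion (cos phi, sin phi c) with tan phi = v0 / (v.c)."""
    v0, vc = v[0], dot(v[1:], c)
    n = math.hypot(v0, vc)
    return (vc / n, *(v0 * ci / n for ci in c))


def reduce_to_sks(v, c):
    """Remove the scalar part of v by right multiplication with the gauge quaternion."""
    if v[0] == 0.0:
        return v                                   # already a pure vector
    return qmul(v, gauge(v, c))


def ks_position(v, c, alpha=1.0):
    x = qmul(qmul(v, (0.0, *c)), conj(v))
    return tuple(xi / alpha for xi in x[1:])


rnd = random.Random(2)
c = (0.6, 0.0, 0.8)
v = tuple(rnd.uniform(-1, 1) for _ in range(4))
vs = reduce_to_sks(v, c)
print(vs[0])                                       # zero up to rounding: a pure vector
print(ks_position(v, c), ks_position(vs, c))       # same x
```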

3.2 Canonical extension

3.2.1 KS momenta

Being interested in the Hamiltonian formulation of the Kepler problem, we need to match the KS variables \(\mathsf {v}\) with their conjugate momenta \(\mathsf {V}\). This goal can be achieved by a dimension-raising Mathieu transformation \({\varvec{X}}\cdot \mathrm {d}{\varvec{x}} = \mathsf {V} \cdot \mathrm {d}\mathsf {v}\), where \({\varvec{X}}\) are the momenta conjugate to the Cartesian coordinates \({\varvec{x}}\). We will also use a formal quaternion \(\mathsf {X}\), postulating \(X_0 = 0\). Following the standard procedure (Kurcheeva 1977; Deprit et al. 1994), we generalize it from \(\mathsf {e}_1\) to a unit quaternion \(\mathsf {c} = (0,{\varvec{c}})\), obtaining

wherefrom the vector part is

Since the scalar component of (47) should be null, we obtain the constraint

Remarkably, the vector \({\varvec{J}}\), orthogonal to \({\varvec{c}}\), is directly related to the quaternion cross product (20)

so we can rewrite the condition (49) as

valid regardless of \(c_0\). This is the general equivalent of the KS1 phase space constraint (7) or (10) for the transformation (31). Using \(\mathsf {c}=\mathsf {e}_1\), we can recover the formula of Deprit et al. (1994),

The presence of constraint (51) allows a unique determination of \(V_0\) in terms of the remaining variables. This, combined with a possibility of reduction to \({\varvec{v}}_\mathrm {s}\) and \(\mathsf {V}_\mathrm {s}\) implies that in spite of using eight variables, we follow the dynamics of a system with effectively three degrees of freedom.

The inverse of the transformation (47) is given as a quaternion product

or, explicitly (setting \(X_0=0\))

Notably, the definition of the new momenta is given in a mixed form, involving the old momenta \({\varvec{X}}\) and the new coordinates \({\varvec{v}}\). This means that, for given values of \({\varvec{x}},{\varvec{X}}\), the phase space fiber contains not only the family of coordinates \(\mathsf {v}\) implied by (35), but also a family of momenta \(\mathsf {V}\), one quaternion for each member of (35). Thus, to provide an explicit form of \(\mathsf {V}({\varvec{x}},{\varvec{X}})\), we must choose a particular representative of the fiber. It is convenient to choose the SKS vector (44), because the expressions then simplify and remain within the usual vector calculus.

In the absence of \(v_0\), the scalar part \(V_0\) simplifies to \(V_{0\mathrm {s}} = - 2 ({\varvec{v}}_\mathrm {s} \times {\varvec{X}}) \cdot {\varvec{c}}/\alpha \). Substituting \({\varvec{v}}_\mathrm {s}\) from (44), with the plus sign selected, into the first of equations (54), we find

whereas for \(\hat{{\varvec{x}}}= -{\varvec{c}}\), the scalar part of \(\mathsf {V}\) is \(V_{0\mathrm {s}}=0\). In both cases the conclusion is the same: whenever the SKS vector is taken for coordinates, the scalar part of the KS momenta quaternion is a product of a coordinates dependent factor and the projection of angular momentum on the defining vector \({\varvec{c}}\).

The vector part \({\varvec{V}}\) is also linked with familiar quantities when the same SKS vector is used, leading to

where the Cartesian momentum, radial velocity and angular momentum appear.

Finding \(\mathsf {V}_\mathrm {s}\) is possible without the knowledge of \({\varvec{x}}\) and \({\varvec{X}}\). Let us multiply both sides of Eq. (53) by a quaternion product \(\mathsf {c} \mathsf {q}_\mathrm {s} \bar{\mathsf {c}}\). Then, the equation becomes

and we see that its left hand side should represent \(\mathsf {V}_\mathrm {s}\). But one may easily verify that \(\mathsf {c} \mathsf {q}_\mathrm {s} \bar{\mathsf {c}} = \mathsf {q}_\mathrm {s}\), so if momenta \(\mathsf {V}\) are determined by \({\varvec{X}}\) with an arbitrary quaternion \(\mathsf {v}\), then the momenta \(\mathsf {V}_\mathrm {s}\) determined by the same \({\varvec{X}}\) and the equivalent SKS vector \({\varvec{v}}_\mathrm {s}\) are

where the sign choice should be the same as in (45).
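The momentum relations can be checked numerically in the same spirit. The sketch assumes the inverse transformation in the form \(\mathsf {V} = (2/\alpha )\,\mathsf {X}\,\mathsf {v}\,\bar{\mathsf {c}}\), which follows from \({\varvec{X}}\cdot \mathrm {d}{\varvec{x}} = \mathsf {V}\cdot \mathrm {d}\mathsf {v}\) under our reading \(\mathsf {x} = \mathsf {v}\,\mathsf {c}\,\bar{\mathsf {v}}/\alpha \) of (31) and reproduces the quoted scalar part \(V_{0\mathrm {s}} = -2({\varvec{v}}_\mathrm {s}\times {\varvec{X}})\cdot {\varvec{c}}/\alpha \); its inverse is \(\mathsf {X} = \mathsf {V}\,\mathsf {c}\,\bar{\mathsf {v}}/(2r)\). For the SKS representative (plus sign), our own reduction gives \(V_{0\mathrm {s}} = -\kappa \,{\varvec{G}}\cdot {\varvec{c}}\) and \({\varvec{V}}_\mathrm {s} = \kappa \,[r{\varvec{X}} + ({\varvec{x}}\cdot {\varvec{X}}){\varvec{c}} + {\varvec{G}}\times {\varvec{c}}]\), with \(\kappa = \sqrt{2/[\alpha (r+{\varvec{c}}\cdot {\varvec{x}})]}\) and \({\varvec{G}} = {\varvec{x}}\times {\varvec{X}}\) (unit mass, so that \({\varvec{x}}\cdot {\varvec{X}} = r\dot{r}\)). These expressions illustrate, but do not transcribe, (55) and (56).

```python
import math


def qmul(a, b):
    """Hamilton product in the (scalar, vector) convention."""
    a0, a1, a2, a3 = a
    b0, b1, b2, b3 = b
    return (a0 * b0 - a1 * b1 - a2 * b2 - a3 * b3,
            a0 * b1 + a1 * b0 + a2 * b3 - a3 * b2,
            a0 * b2 + a2 * b0 + a3 * b1 - a1 * b3,
            a0 * b3 + a3 * b0 + a1 * b2 - a2 * b1)


def conj(q):
    return (q[0], -q[1], -q[2], -q[3])


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def cross3(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def sks_vector(x, c, alpha):
    """SKS representative with the plus sign (assumes x-hat != -c)."""
    r = math.sqrt(dot(x, x))
    k = math.sqrt(alpha / (2.0 * (r + dot(c, x))))
    return tuple(k * (xi + r * ci) for xi, ci in zip(x, c))


def ks_momenta(X, v, c, alpha):
    """V = (2/alpha) X v conj(c), with X = (0, X) and c = (0, c)."""
    P = qmul(qmul((0.0, *X), v), (0.0, *(-ci for ci in c)))
    return tuple(2.0 * p / alpha for p in P)


def cartesian_momenta(V, v, c, alpha):
    """X = V c conj(v) / (2 r), r = |v|^2 / alpha; returns the full quaternion (X0, X)."""
    r = dot(v, v) / alpha
    return tuple(p / (2.0 * r) for p in qmul(qmul(V, (0.0, *c)), conj(v)))


def sks_momenta_closed_form(x, X, c, alpha):
    r = math.sqrt(dot(x, x))
    kappa = math.sqrt(2.0 / (alpha * (r + dot(c, x))))
    G = cross3(x, X)                               # angular momentum (unit mass)
    Gc = cross3(G, c)
    xX = dot(x, X)                                 # r times radial velocity
    return (-kappa * dot(G, c),
            *(kappa * (r * Xi + xX * ci + gi) for Xi, ci, gi in zip(X, c, Gc)))


alpha, c = 1.5, (0.0, 0.6, 0.8)
x, X = (0.9, -0.3, 0.4), (0.2, 1.1, -0.5)
v = (0.0, *sks_vector(x, c, alpha))
V = ks_momenta(X, v, c, alpha)
print(V)
print(sks_momenta_closed_form(x, X, c, alpha))     # same quaternion up to rounding
print(cartesian_momenta(V, v, c, alpha))           # (0, X) up to rounding
```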

4 KS Hamiltonian and its invariants

4.1 Perturbed Hamiltonian and equations of motion

As long as the defining vector is constant, the canonical KS transformation is time independent, so the Hamiltonian function transforms without a remainder. Thus, the first step is to obtain \(\mathcal {H}^\star (\mathsf {v},\mathsf {V},t) = \mathcal {H}({\varvec{x}},{\varvec{X}},t)\), where \(\mathcal {H}\) is a perturbed two body problem Hamiltonian

with the Keplerian part

depending on the gravitational parameter \(\mu \), and we make no assumptions about the order of magnitude for the perturbation \({\mathcal {R}}\).

The distance r may be considered a known function of \({\varvec{v}}\) thanks to (38), so we retain this symbol in \(\mathcal {H}_0\). The square of \(|{\varvec{X}}|\) is easily found from \(\mathsf {X}\bar{\mathsf {X}} = {\varvec{X}} \cdot {\varvec{X}} + X_0^2\). Substituting Eq. (47), using the rule (18), and defining \(X_0\) by (49), we find (Footnote 6)

Thus the transformed Keplerian Hamiltonian is

Dropping the last, zero-valued term in (62) is allowed, but it should not be done without reflection. It is to be remembered that while \(x_0=0\) is a property of the point transformation (31) itself, \(X_0=0\) is only postulated. Two separate questions should be addressed. First: is the value of \({\varvec{J}}\cdot {\varvec{c}}\) conserved during the motion? Here the answer is conditionally positive: it is not so for an arbitrarily invented Hamiltonian function of \(\mathsf {v}\) and \(\mathsf {V}\). But if the Hamiltonian is a transformed function \(\mathcal {H}({\varvec{x}},{\varvec{X}},t)\), then its KS image \(\mathcal {H}^\star (\mathsf {v},\mathsf {V},t)\) conserves \({\varvec{J}}\cdot {\varvec{c}}\), because all the Poisson brackets \(\left\{ {\varvec{J}}\cdot {\varvec{c}}, x_j\right\} =\left\{ {\varvec{J}}\cdot {\varvec{c}}, X_j\right\} =0\), for \(0 \le j \le 3\), similarly to the argument of Deprit et al. (1994). The second question, less often considered, is: does the presence of \({\varvec{J}} \cdot {\varvec{c}}\) influence the solution? It is worth asking, because a zero-valued function may still have nonzero derivatives. For the moment the answer is obvious, because \(\mathcal {H}_0^\star \) contains the square \(({\varvec{J}} \cdot {\varvec{c}})^2\), whose gradient, proportional to \({\varvec{J}} \cdot {\varvec{c}}\), vanishes on the constraint manifold. But the problem may reappear in the context of variational equations or when the rotating reference frame is considered.

The Hamiltonian (60) remains singular at \(r=0\), so we proceed with the Sundman transformation (5)

with an arbitrary parameter \(\beta \). In the canonical framework, switching from the physical time t to the Sundman time \(\tau \) requires a transition to the extended phase space, appending to the KS coordinates and momenta a conjugate pair \(v^*\) and \(V^*\). The former imitates the physical time (up to an additive constant); the latter serves to fix a zero-energy manifold for the motion, being a doppelganger of the Hamiltonian \(\mathcal {H}^\star \). Thus, on the manifold \(\mathcal {H}^\star +V^*=0\), we can divide the extended Hamiltonian by the right-hand side of (63), obtaining the Hamiltonian function

independent of the new time variable \(\tau \). What remains is a judicious choice of \(\alpha \) and \(\beta \). Assuming

we secure \(\mathsf {v}' = \mathsf {V}\). If, in addition, \(\alpha \) is equal to the major axis of the elliptic orbit (i.e. \(\alpha = 2 a\)), \(\tau \) will run on average at half the rate of t for the Kepler problem, in accord with the angle doubling property of the KS transformation.

Although the constant term has no influence on the equations of motion, we retain it for its role in fixing the \(\mathcal {K}=0\) manifold. And so we finally set up the KS Hamiltonian

The value of \(V^*\) should secure \(\mathcal {K}= 0\).

Equations of motion resulting from (66) are those of a perturbed harmonic oscillator with frequency

namely

where the prime marks the derivative with respect to the Sundman time \(\tau \). Note that using an arbitrary defining vector \({\varvec{c}}\) has no influence on the unperturbed problem in KS variables. Whatever change results from a particular choice of \({\varvec{c}}\) can be revealed only by the form taken by \({\mathcal {P}}\) in a specific problem.
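A compact way to see the regularized dynamics at work is to propagate the unperturbed oscillator analytically and map the result back to Cartesian variables. The sketch assumes \(\beta = 4/\alpha \), which yields \(\mathsf {v}' = \mathsf {V}\) for \(\mathcal {H}_0^\star = \alpha |\mathsf {V}|^2/(8r) - \mu /r\) with \(X_0 = 0\), hence \(\mathrm {d}t/\mathrm {d}\tau = 4r/\alpha \) and \(\omega _0^2 = -8E/\alpha ^2\); these are our reconstructions of (63) to (67), not transcriptions. It uses the SKS representative together with \(\mathsf {V} = (2/\alpha )\,\mathsf {X}\,\mathsf {v}\,\bar{\mathsf {c}}\) (our reading of (53)), and checks that energy and angular momentum are preserved, that \(X_0\) stays zero, and that with \(\alpha = 2a\) one Keplerian period T corresponds to \(\Delta \tau = \pi /\omega _0 = T/2\). The numerical values are illustrative.

```python
import math

MU = 1.0  # gravitational parameter (illustrative units)


def qmul(a, b):
    """Hamilton product in the (scalar, vector) convention."""
    a0, a1, a2, a3 = a
    b0, b1, b2, b3 = b
    return (a0 * b0 - a1 * b1 - a2 * b2 - a3 * b3,
            a0 * b1 + a1 * b0 + a2 * b3 - a3 * b2,
            a0 * b2 + a2 * b0 + a3 * b1 - a1 * b3,
            a0 * b3 + a3 * b0 + a1 * b2 - a2 * b1)


def conj(q):
    return (q[0], -q[1], -q[2], -q[3])


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def cross3(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def to_ks(x, X, c, alpha):
    """SKS coordinates and the momenta V = (2/alpha) X v conj(c)."""
    r = math.sqrt(dot(x, x))
    k = math.sqrt(alpha / (2.0 * (r + dot(c, x))))
    v = (0.0, *(k * (xi + r * ci) for xi, ci in zip(x, c)))
    V = qmul(qmul((0.0, *X), v), (0.0, *(-ci for ci in c)))
    return v, tuple(2.0 * p / alpha for p in V)


def from_ks(v, V, c, alpha):
    """x = v c conj(v) / alpha and X = V c conj(v) / (2 r); also returns X0."""
    r = dot(v, v) / alpha
    cq = (0.0, *c)
    xq = qmul(qmul(v, cq), conj(v))
    Xq = qmul(qmul(V, cq), conj(v))
    return (tuple(p / alpha for p in xq[1:]),
            tuple(p / (2.0 * r) for p in Xq[1:]), Xq[0] / (2.0 * r))


def propagate(v, V, omega, tau):
    """Exact flow of v'' = -omega^2 v in the Sundman time tau."""
    cs, sn = math.cos(omega * tau), math.sin(omega * tau)
    return (tuple(a * cs + b * sn / omega for a, b in zip(v, V)),
            tuple(-a * omega * sn + b * cs for a, b in zip(v, V)))


def energy(x, X):
    return 0.5 * dot(X, X) - MU / math.sqrt(dot(x, x))


def elapsed_time(v, V, omega, alpha, tau_end, steps=256):
    """t(tau_end) from dt/dtau = 4 r / alpha = 4 |v|^2 / alpha^2 (trapezoid rule)."""
    h = tau_end / steps
    total = 0.0
    for i in range(steps + 1):
        vt, _ = propagate(v, V, omega, i * h)
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * 4.0 * dot(vt, vt) / alpha ** 2
    return total * h


x_init, X_init, c = (1.0, 0.0, 0.0), (0.0, 1.2, 0.3), (0.0, 0.0, 1.0)
E = energy(x_init, X_init)                 # negative: a bound orbit
a = -MU / (2.0 * E)
alpha = 2.0 * a                            # alpha equal to the major axis
omega = math.sqrt(-8.0 * E) / alpha        # then omega equals the mean motion
v, V = to_ks(x_init, X_init, c, alpha)
T = 2.0 * math.pi * math.sqrt(a ** 3 / MU)
print(elapsed_time(v, V, omega, alpha, math.pi / omega), T)  # agree: tau runs at half rate
```

The trapezoid rule is adequate here because \(|\mathsf {v}(\tau )|^2\) is a trigonometric polynomial sampled over exactly one of its periods.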

4.2 Invariants

Including the perturbation \({\mathcal {P}}\), we can only mention two invariants: if \({\mathcal {P}}\) does not depend explicitly on \(v^*\) (hence on time t), the momentum \(V^*= - E = \mathrm {const}\), where E is the total energy; and, regardless of \({\mathcal {P}}\), the scalar product \({\varvec{J}}\cdot {\varvec{c}}=0\) is invariant, as already mentioned. Let us therefore focus on the first integrals of the unperturbed system \(\mathcal {K}_0\), i.e. the two-body problem.

There are two points of view for the unperturbed system. We can see it as a four dimensional isotropic harmonic oscillator with its own first integrals. But we can also see it as a transformed Kepler problem with the well known first integrals, potentially expressible in terms of the oscillator constants.

4.2.1 Oscillator

In the absence of perturbation, we can consider the Hamiltonian \(\mathcal {K}_0\) as a separable system of four independent oscillators

Each Hamiltonian \({\mathcal {N}}_j\) is a first integral in involution with the rest, \(\left\{ {\mathcal {N}}_i, {\mathcal {N}}_j\right\} =0\), but only three of them are independent, since their sum is fixed by (74). Yet the system is superintegrable and more integrals can be found. First, we can introduce a four-dimensional variant of the Fradkin tensor \(\mathbf {F}\) (Fradkin 1967)

The symmetric matrix \(\mathbf {F}\) contains 10 different first integrals (not all independent), including four diagonal terms \(F_{ii} = 2{\mathcal {N}}_i/\omega _0\).

Another set of integrals constitutes an antisymmetric angular momentum matrix \(\mathbf {L}\)

with 6 distinct elements (again, not all independent).

Considering \(F_{ij}\) and \(L_{ij}\) as generators of Hamiltonian equations, we note their different, somewhat complementary roles. Equations

define a phase plane rotation: either in a single phase plane \((v_i,V_i/\omega _0)\) (diagonal terms \(F_{ii}\)), or simultaneously in two phase planes \((v_i,V_i/\omega _0)\) and \((v_j,V_j/\omega _0)\) (off-diagonal terms \(F_{ij}\)). The angular momentum terms \(L_{ij}\) lead to equations

that generate simultaneous rotations in the coordinate plane \((v_i,v_j)\) and in the momentum plane \((V_i,V_j)\).

Two important cross products that appeared in the KS transformation are expressible in terms of \(L_{ij}\) and thus are first integrals of unperturbed motion:

Accordingly, the condition (51) can be seen as a constraint on the angular momentum of the oscillator.
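The oscillator integrals are easy to monitor along the exact flow. The sketch assumes the normalization \(F_{ij} = (V_iV_j + \omega _0^2 v_iv_j)/\omega _0\), chosen so that \(F_{ii} = 2{\mathcal {N}}_i/\omega _0\) with \({\mathcal {N}}_i = (V_i^2 + \omega _0^2 v_i^2)/2\), and \(L_{ij} = v_iV_j - v_jV_i\); (75) and (76) may use other factors or signs. By our own bookkeeping, it also checks that the bilinear form \({\varvec{b}} = V_0{\varvec{v}} - v_0{\varvec{V}} + {\varvec{v}}\times {\varvec{V}}\), whose product with \({\varvec{c}}\) is the scalar part of \(\mathsf {V}\,\mathsf {c}\,\bar{\mathsf {v}}\), has components \((L_{23}-L_{01}, L_{31}-L_{02}, L_{12}-L_{03})\), in line with reading the KS constraint as a constraint on the oscillator's angular momentum.

```python
import math
import random


def propagate(v, V, omega, tau):
    """Exact flow of v'' = -omega^2 v in the Sundman time tau."""
    cs, sn = math.cos(omega * tau), math.sin(omega * tau)
    return (tuple(a * cs + b * sn / omega for a, b in zip(v, V)),
            tuple(-a * omega * sn + b * cs for a, b in zip(v, V)))


def fradkin(v, V, omega):
    return [[(V[i] * V[j] + omega ** 2 * v[i] * v[j]) / omega for j in range(4)]
            for i in range(4)]


def angular(v, V):
    return [[v[i] * V[j] - v[j] * V[i] for j in range(4)] for i in range(4)]


def bilinear(v, V):
    """b = V0 v - v0 V + v x V, built from the scalar and vector parts of v and V."""
    v0, v1, v2, v3 = v
    V0, V1, V2, V3 = V
    return (V0 * v1 - v0 * V1 + v2 * V3 - v3 * V2,
            V0 * v2 - v0 * V2 + v3 * V1 - v1 * V3,
            V0 * v3 - v0 * V3 + v1 * V2 - v2 * V1)


def max_diff(A, B):
    return max(abs(A[i][j] - B[i][j]) for i in range(4) for j in range(4))


rnd = random.Random(4)
omega = 0.8
v = tuple(rnd.uniform(-1, 1) for _ in range(4))
V = tuple(rnd.uniform(-1, 1) for _ in range(4))
vt, Vt = propagate(v, V, omega, 2.3)
print(max_diff(fradkin(v, V, omega), fradkin(vt, Vt, omega)))   # rounding-level
print(max_diff(angular(v, V), angular(vt, Vt)))                 # rounding-level
L = angular(v, V)
print(bilinear(v, V), (L[2][3] - L[0][1], L[3][1] - L[0][2], L[1][2] - L[0][3]))
```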

4.2.2 Kepler problem

The energy integral of the Kepler problem has already been discussed in Sect. 4.1, so let us pass to the two vector-valued first integrals: the angular momentum and the Laplace (Runge–Lenz) vector.

Expressing the angular momentum

in terms of the KS variables is a formidable task if a brute-force attack is attempted by substituting (33) and the second line of (48) into (81). It is much better to start by plugging in a cross product \(\mathsf {x} \wedge \mathsf {X}\), so that

with \(x_0=0\). Substituting the quaternion product forms (31) for \(\mathsf {x}\) and (47) for \(\mathsf {X}\), one can resort to the factor exchange rule (21)

where we used \(\bar{\mathsf {v}}\mathsf {v}= \alpha r\), and \(\mathsf {c} \bar{\mathsf {c}} = 1\). Thus we obtain

An analogous expression was obtained by Deprit et al. (1994), with the statement ‘Proof By straightforward calculation using Symbol Processor’. We have decided to provide the proof in full length (or rather shortness), as a good example of a situation where the quaternion formalism beats standard vector calculus.
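For readers who wish to experiment with such quaternion manipulations, the following Python sketch implements the Hamilton product and the conjugate, and checks elementary facts that derivations of this kind rely on: for pure quaternions, \(\mathsf {a}\mathsf {b} = -{\varvec{a}}\cdot {\varvec{b}} + {\varvec{a}}\times {\varvec{b}}\), and conjugation reverses the order of factors, \(\overline{\mathsf {a}\mathsf {b}} = \bar{\mathsf {b}}\bar{\mathsf {a}}\). The definition \(\mathsf {a}\wedge \mathsf {b} = (\mathsf {a}\mathsf {b} - \mathsf {b}\mathsf {a})/2\) used in the code is a working assumption; for pure quaternions such as \(\mathsf {x}\) and \(\mathsf {X}\) (with \(x_0 = X_0 = 0\)) it reduces to the vector cross product, so its vector part reproduces \({\varvec{G}} = {\varvec{x}}\times {\varvec{X}}\).

```python
# Quaternions are tuples (q0, q1, q2, q3) meaning q0 + q1 i + q2 j + q3 k.


def qmul(a, b):
    """Hamilton product a b."""
    a0, a1, a2, a3 = a
    b0, b1, b2, b3 = b
    return (a0 * b0 - a1 * b1 - a2 * b2 - a3 * b3,
            a0 * b1 + a1 * b0 + a2 * b3 - a3 * b2,
            a0 * b2 - a1 * b3 + a2 * b0 + a3 * b1,
            a0 * b3 + a1 * b2 - a2 * b1 + a3 * b0)


def conj(q):
    return (q[0], -q[1], -q[2], -q[3])


def wedge(a, b):
    """Working definition (assumption): a ^ b = (a b - b a) / 2."""
    ab, ba = qmul(a, b), qmul(b, a)
    return tuple((p - q) / 2 for p, q in zip(ab, ba))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


x_vec = (0.7, -1.2, 0.4)     # Cartesian position (arbitrary test values)
X_vec = (0.3, 0.5, -0.9)     # Cartesian momentum (arbitrary test values)
x_q = (0.0, *x_vec)          # pure quaternion, x_0 = 0
X_q = (0.0, *X_vec)          # pure quaternion, X_0 = 0

G = cross(x_vec, X_vec)      # angular momentum, x cross X
print("G from cross product:   ", G)
print("G from quaternion wedge:", wedge(x_q, X_q)[1:])
print("scalar part of x X:     ", qmul(x_q, X_q)[0])   # equals -(x . X)
```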

If the expression (84) is used for the sole purpose of computing the value of \({\varvec{G}}\), then \(X_0=0\) can be safely set. But if \({\varvec{G}}\) is to appear in the perturbing Hamiltonian \({\mathcal {P}}\), one should not forget that derivatives of \(X_0\) do not vanish in general.

Linking the Keplerian \({\varvec{G}}\) with the oscillator’s angular momentum tensor \(\mathbf {L}\) is straightforward: inserting (79) into (84) results in

Notably, the defining vector \({\varvec{c}}\) has no direct effect on the direction of the angular momentum. Its action is only indirect, through the constraint \({\varvec{J}}\cdot {\varvec{c}}=0\).

The Laplace vector \({\varvec{e}}\) is primarily defined through the cross product of the momentum and the angular momentum, but in order to express it in terms of the KS variables, a version resulting from the ‘BAC-CAB’ identity is more convenient
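The equivalence of the two forms of the Laplace vector is easy to confirm numerically. The Python sketch below assumes the Kepler Hamiltonian \(E = \tfrac{1}{2}|{\varvec{X}}|^2 - \mu /r\) with a gravitational parameter \(\mu \) (a normalisation chosen for illustration) and the definition \({\varvec{e}} = {\varvec{X}}\times {\varvec{G}}/\mu - {\varvec{x}}/r\). The BAC-CAB identity gives \({\varvec{X}}\times ({\varvec{x}}\times {\varvec{X}}) = {\varvec{x}}\,({\varvec{X}}\cdot {\varvec{X}}) - {\varvec{X}}\,({\varvec{x}}\cdot {\varvec{X}})\), which removes the nested cross product. The script also checks two standard properties, \({\varvec{e}}\cdot {\varvec{G}} = 0\) and \(|{\varvec{e}}|^2 = 1 + 2 E |{\varvec{G}}|^2/\mu ^2\).

```python
import math


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def laplace_nested(x, X, mu):
    """e = X x G / mu - x / r with G = x x X (nested cross products)."""
    r = math.sqrt(dot(x, x))
    XG = cross(X, cross(x, X))
    return [XG[k] / mu - x[k] / r for k in range(3)]


def laplace_bac_cab(x, X, mu):
    """Same vector after BAC-CAB: (|X|^2 x - (x.X) X) / mu - x / r."""
    r = math.sqrt(dot(x, x))
    XX, xX = dot(X, X), dot(x, X)
    return [(XX * x[k] - xX * X[k]) / mu - x[k] / r for k in range(3)]


mu = 1.0
x = [0.8, -0.3, 0.5]        # arbitrary bound-orbit test state
X = [0.2, 0.9, -0.4]
e1 = laplace_nested(x, X, mu)
e2 = laplace_bac_cab(x, X, mu)
G = cross(x, X)
E = 0.5 * dot(X, X) - mu / math.sqrt(dot(x, x))

print("nested :", e1)
print("BAC-CAB:", e2)
print("e.G =", dot(e2, G))
print("|e|^2 - (1 + 2 E G^2 / mu^2) =", dot(e2, e2) - (1 + 2 * E * dot(G, G) / mu**2))
```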

Most of the building blocks are ready in Eqs. (33), (34), (48), and (61). The remaining product is found elementarily by applying identity (19)

And so the Laplace vector can be expressed as a quaternion product

The formula does not look friendly, but one should expect it to be expressible in terms of Fradkin integrals. Indeed, after rather tedious manipulations, we have found that

where the second term on the right-hand side vanishes due to the KS constraint \({\varvec{J}}\cdot {\varvec{c}}=0\), and the third is null on the Keplerian manifold \(\mathcal {K}_0=0\). It is not by chance that the form of matrix \(\mathbf {E}\)

with diagonal terms

mimics the matrix \(\mathbf {R}({\varvec{v}})\) defined by Eq. (28), present in the KS transformation formula (32). In contrast to the angular momentum \({\varvec{G}}\), the defining vector \({\varvec{c}}\) is explicitly present in the definition of \({\varvec{e}}\).

4.3 Dynamical role of the invariant \({\varvec{J}}\cdot {\varvec{c}}\)

In Sects. 4.1 and 4.2.2, some warnings have been issued concerning the presence of the invariant \({\varvec{J}}\cdot {\varvec{c}}=0\), which should not be dropped blindly in some expressions. Let us now inspect its influence by considering a Hamiltonian \({\mathcal {M}} = \varPsi {\varvec{J}}\cdot {\varvec{c}}\), where \(\varPsi \) is an arbitrary function of the KS variables. The canonical equations of motion generated by \({\mathcal {M}}\) can be cast into a quaternion product form

The solution of this system is a quaternion product

with arbitrary constants \(\mathsf {u}, \mathsf {U}\), provided \(\phi \) is a function of time (possibly implicit) satisfying \(\phi '= - \varPsi \). Recalling the fiber definition (35), we see that the resulting evolution of the KS variables (93) takes place on the fiber corresponding to constant values of the Cartesian variables.
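The fiber structure can be illustrated with a few lines of Python. The sketch assumes the quaternion form \(\mathsf {x} = \mathsf {v}\,\mathsf {c}\,\bar{\mathsf {v}}\) of the KS map with the scaling parameter \(\alpha = 1\) and a unit pure quaternion \(\mathsf {c}\) representing the defining vector; if the paper's form (31) orders the factors differently, the fiber multiplication simply moves to the other side. Under this assumption, right multiplication by \(\cos \phi + \mathsf {c}\sin \phi \) commutes past \(\mathsf {c}\) and therefore leaves \(\mathsf {x}\) unchanged for every \(\phi \). For \(\mathsf {c} = \mathsf {i}\) the same map yields a KS1-like set, in which only \(x_1 = v_0^2 + v_1^2 - v_2^2 - v_3^2\) contains squares.

```python
import math


def qmul(a, b):
    """Hamilton product of quaternions given as (q0, q1, q2, q3)."""
    a0, a1, a2, a3 = a
    b0, b1, b2, b3 = b
    return (a0 * b0 - a1 * b1 - a2 * b2 - a3 * b3,
            a0 * b1 + a1 * b0 + a2 * b3 - a3 * b2,
            a0 * b2 - a1 * b3 + a2 * b0 + a3 * b1,
            a0 * b3 + a1 * b2 - a2 * b1 + a3 * b0)


def conj(q):
    return (q[0], -q[1], -q[2], -q[3])


def ks_map(v, c):
    """Assumed KS map x = v c conj(v) (alpha = 1); c is a unit pure quaternion."""
    return qmul(qmul(v, c), conj(v))


def fiber_shift(v, c, phi):
    """Move along the fiber: v -> v (cos(phi) + c sin(phi))."""
    rot = (math.cos(phi), *(math.sin(phi) * ck for ck in c[1:]))
    return qmul(v, rot)


c = (0.0, 1 / 3, 2 / 3, 2 / 3)      # arbitrary unit defining vector
v = (0.3, -0.8, 0.5, 1.1)           # arbitrary KS coordinates
x = ks_map(v, c)
norm_v2 = sum(q * q for q in v)

print("x =", x)                     # scalar part vanishes: x is pure
print("|x| =", math.sqrt(sum(q * q for q in x)), " |v|^2 =", norm_v2)
for phi in (0.5, 1.7, 3.0):
    print(phi, ks_map(fiber_shift(v, c, phi), c))   # same x for every phi

# KS1-like special case c = i: only x_1 contains squares of the v_j.
x_ks1 = ks_map(v, (0.0, 1.0, 0.0, 0.0))
v0, v1, v2, v3 = v
print(x_ks1[1], v0**2 + v1**2 - v2**2 - v3**2)
```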

Considering any Hamiltonian \({\mathcal {K}}+{\mathcal {M}}\), where \(\mathcal {K}\) is the KS transform of some function of the Cartesian variables, we recall that both terms commute (\(\left\{ \mathcal {K},{\mathcal {M}} \right\} = 0\)), so the solution is a direct composition of the flows generated by each Hamiltonian separately. It follows that adding a \({\varvec{J}}\cdot {\varvec{c}}\) term to a Hamiltonian function, or removing it, can modify a trajectory in the KS phase space, but it has no influence on the resulting motion in the Cartesian variables \({\varvec{x}},{\varvec{X}}\). We encourage the interested reader to consult the related work of Roa et al. (2016) in the framework of the KS1 set.

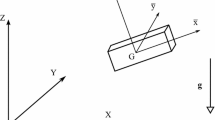

5 Kepler problem in rotating reference frame

5.1 General equations of motion

The Kepler problem in a uniformly rotating reference frame is a necessary building block for a number of dynamical problems handled by analytical perturbation techniques or by symplectic integrators with a partitioned Hamiltonian. The problem has recently been solved by Langner and Breiter (2015), where an account of earlier work can also be found. Since Langner and Breiter (2015) solved the problem in a frame rotating around \({\varvec{e}}_3\) using the KS1 set (based upon the defining vector \({\varvec{c}}={\varvec{e}}_1\)), it may be interesting to compare that solution with a new one, assuming an arbitrary direction of the rotation axis and benefiting from the freedom in the choice of the defining vector. Intuitively, selecting \({\varvec{c}}\) along the rotation axis seems most appropriate, so we assume the angular velocity vector of the reference frame to be \(\varOmega {\varvec{c}}\) from the outset.

The KS transformation, as described in Sect. 3, will be applied to the coordinates \({\varvec{x}}\) and momenta \({\varvec{X}}\) of the rotating frame. If at the epoch \(t=0\) the rotating frame and the fixed frame axes coincide, then the transformation linking \({\varvec{x}},{\varvec{X}}\) with the fixed frame coordinates \({\varvec{x}}_\mathrm {f}\) and momenta \({\varvec{X}}_\mathrm {f}\)

involves the rotation matrix from Eq. (28) with the rotation quaternion

The transformation is canonical and, being time-dependent, it generates the remainder \(-\varOmega {\varvec{G}} \cdot {\varvec{c}}\) that supplements the transformed Hamiltonian. Accordingly, the Hamiltonian function to be considered is the sum of \(\mathcal {K}_0\) from Eq. (66) and of

5.2 Simplification

Recalling the conclusion of Sect. 4.3, and benefiting from the choice of \({\varvec{c}}\), we can modify \({\mathcal {P}}\) and use

According to Eqs. (84), (79) and (50), the modified term is simply

so it contains only the vector parts of the KS quaternions, save for \(v_0\) present in r.

Deriving canonical equations of motion from

we observe that they neatly split into a scalar part

and the vector part

where the frequency

is a constant of motion, because \(H' = \left\{ H,\mathcal {K}\right\} =0\), and the last two equations of motion are

Initial conditions for this system at \(\tau =0\) will be

Thus, the situation is much more convenient than in Langner and Breiter (2015) and all earlier works. Equations (100), describing a simple one-dimensional harmonic oscillator, are easily solved, yielding

similarly to the fixed frame case.

Looking at Eq. (101), we recognize two parts, referring to the harmonic oscillator dynamics and to the kinematics of rotation. Introducing the cross product matrix

we rewrite (101) in the vector-matrix form

which suggests postulating the solution

involving a common matrix \(\mathbf {A}\) and four scalars \(b_j\), with the initial conditions \(\mathbf {A}=\mathbf {I}\), \(b_2=b_3=0\), and \(b_1=b_4=1\) at \(\tau =t=v^*=0\). Substitution into (107) leads to

Collecting the terms preceded by \(\mathbf {A}\), we obtain the system

with an obvious solution

actually known from (105). In the remaining parts of (109) and (110), we change the independent variable using (103) and, letting \(v^*=t\) for brevity, we find

solved by the orthogonal matrix \(\mathbf {A}\), which represents a rotation around \({\varvec{c}}\) by the angle \((-\varOmega t)\). Thus, the final solution consists of the scalar equations (105) and the vector system

where \(\mathsf {q}\) is defined as in (95). Since the solutions for the physical time \(v^*(\tau )\) and the distance \(r(\tau )\) do not depend on the rotation of the reference frame, we omit them; the reader may find them in Stiefel and Scheifele (1971), Langner and Breiter (2015), or any other KS-related text.

It is common to choose the rotation axis of the reference frame along \({\varvec{e}}_3\), i.e. \({\varvec{c}}={\varvec{e}}_3\). In that case the solution is further simplified, because the rotation matrix \(\mathbf {R}(\mathsf {q})\) does not affect \(v_3\) and \(V_3\). Thus the appropriate choice of the rotation axis and of the defining vector leads to the simplest form of the solution, in which only two degrees of freedom, namely \(v_1, V_1\) and \(v_2, V_2\), are affected by the rotation.
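The rotation kinematics used in this section can be sketched in Python: the cross-product matrix \([{\varvec{c}}]_\times \), the Rodrigues form of the rotation \(\exp (\theta [{\varvec{c}}]_\times )\) about a unit axis \({\varvec{c}}\), and its quaternion counterpart built from \(\cos (\theta /2) + \mathsf {c}\sin (\theta /2)\). Taking \(\mathbf {A}(t) = \exp (-\varOmega t[{\varvec{c}}]_\times )\) as an assumed explicit form of the rotation about \({\varvec{c}}\) by \(-\varOmega t\), the script checks by finite differences that \(\mathrm {d}\mathbf {A}/\mathrm {d}t = -\varOmega [{\varvec{c}}]_\times \mathbf {A}\), that \(\mathbf {A}\) is orthogonal and leaves \({\varvec{c}}\) fixed, and that for \({\varvec{c}} = {\varvec{e}}_3\) the third component of a vector is untouched. The sign and half-angle conventions of the quaternion (95) are not reproduced here and may differ.

```python
import math


def cross_matrix(c):
    """[c]_x, chosen so that cross_matrix(c) applied to a gives c x a."""
    c1, c2, c3 = c
    return [[0.0, -c3, c2], [c3, 0.0, -c1], [-c2, c1, 0.0]]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def matvec(A, a):
    return [sum(A[i][k] * a[k] for k in range(3)) for i in range(3)]


def rotation_about(c, theta):
    """Rodrigues formula exp(theta [c]_x) = I + sin(theta) K + (1 - cos(theta)) K^2."""
    K = cross_matrix(c)
    K2 = matmul(K, K)
    s, t = math.sin(theta), 1.0 - math.cos(theta)
    return [[(1.0 if i == j else 0.0) + s * K[i][j] + t * K2[i][j]
             for j in range(3)] for i in range(3)]


def rotate_by_quaternion(c, theta, a):
    """Same rotation from q a conj(q), q = cos(theta/2) + c sin(theta/2)."""
    w, s = math.cos(theta / 2), math.sin(theta / 2)
    u = [s * ck for ck in c]
    # Expanded product: q a conj(q) = (w^2 - |u|^2) a + 2 (u.a) u + 2 w (u x a)
    ua = sum(p * q for p, q in zip(u, a))
    uu = sum(p * p for p in u)
    uxa = matvec(cross_matrix(u), a)
    return [(w * w - uu) * a[k] + 2 * ua * u[k] + 2 * w * uxa[k] for k in range(3)]


Omega, t, h = 0.7, 1.9, 1e-5
c = [2 / 7, 3 / 7, 6 / 7]          # arbitrary unit rotation axis


def A_of(tt):
    """Assumed A(t) = exp(-Omega t [c]_x): rotation about c by -Omega t."""
    return rotation_about(c, -Omega * tt)


KA = matmul(cross_matrix(c), A_of(t))
dA = [[(A_of(t + h)[i][j] - A_of(t - h)[i][j]) / (2 * h) for j in range(3)]
      for i in range(3)]
ode_residual = max(abs(dA[i][j] + Omega * KA[i][j]) for i in range(3) for j in range(3))

At = A_of(t)
AtA = matmul([list(r) for r in zip(*At)], At)
orth_residual = max(abs(AtA[i][j] - (i == j)) for i in range(3) for j in range(3))
axis_residual = max(abs(p - q) for p, q in zip(matvec(At, c), c))

a = [0.5, -1.0, 0.25]
quat_residual = max(abs(p - q) for p, q in
                    zip(matvec(rotation_about(c, 1.2), a), rotate_by_quaternion(c, 1.2, a)))

# Special case c = e3: the third component is not affected by the rotation.
Ae3 = rotation_about([0.0, 0.0, 1.0], -Omega * t)
print(matvec(Ae3, a)[2], a[2])
print(ode_residual, orth_residual, axis_residual, quat_residual)
```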

6 Concluding remarks

We dare to hope that pinpointing the presence of the defining vector in the Kustaanheimo–Stiefel transformation may help in both the understanding and the efficient use of this ingenious device. We encourage those readers who use KS variables to choose the defining vector best suited to the problem at hand, instead of inertially following the ‘for example’ choice made by Kustaanheimo and Stiefel. Actually, a common habit in physics is to align the third axis with a symmetry axis of the potential. One would therefore expect the KS3 set (\({\varvec{c}}={\varvec{e}}_3\)) to be widespread, which is the case in physics but not yet in Celestial Mechanics. Of course (as noticed by a reviewer), from a purely formal point of view, the adjustment of, say, KS1 to a different preferred direction \({\varvec{c}}\) may be achieved by means of a rotation matrix \(\mathbf {M} \in \mathrm {SO(4)}\), applied to the left-hand side of (11) or (23). But then, in the quaternion formalism, one should associate with \(\mathbf {M}\) a unit quaternion \(\mathsf {m}\), so that

and, finally

The next step towards the form (31) is blocked by the non-commutativity of the quaternion product: the relation of \(\mathsf {m}\) to \({\varvec{c}}\) remains unclear, and simplicity is lost unless some trivial \(\mathsf {m}\) is considered.
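The association between a unit quaternion \(\mathsf {m}\) and a matrix \(\mathbf {M}\in \mathrm {SO(4)}\) can be made concrete: left multiplication \(\mathsf {q}\mapsto \mathsf {m}\mathsf {q}\) is a linear map of \(\mathbb {R}^4\) whose matrix is orthogonal with unit determinant. The Python sketch below builds that matrix column by column from the Hamilton product and checks both properties. It covers only this one family of four-dimensional rotations; a general element of SO(4) requires a pair of unit quaternions, acting from the left and from the right.

```python
import math


def qmul(a, b):
    """Hamilton product of quaternions given as (q0, q1, q2, q3)."""
    a0, a1, a2, a3 = a
    b0, b1, b2, b3 = b
    return (a0 * b0 - a1 * b1 - a2 * b2 - a3 * b3,
            a0 * b1 + a1 * b0 + a2 * b3 - a3 * b2,
            a0 * b2 - a1 * b3 + a2 * b0 + a3 * b1,
            a0 * b3 + a1 * b2 - a2 * b1 + a3 * b0)


def left_matrix(m):
    """4x4 matrix of q -> m q; column k is m times the k-th basis quaternion."""
    basis = [tuple(1.0 if i == k else 0.0 for i in range(4)) for k in range(4)]
    cols = [qmul(m, e) for e in basis]
    return [[cols[k][i] for k in range(4)] for i in range(4)]


def det(M):
    """Determinant by cofactor expansion along the first row (adequate for 4x4)."""
    if len(M) == 1:
        return M[0][0]
    return sum((-1) ** j * M[0][j] * det([row[:j] + row[j + 1:] for row in M[1:]])
               for j in range(len(M)))


raw = (0.6, -0.2, 0.5, 0.3)
norm = math.sqrt(sum(q * q for q in raw))
m = tuple(q / norm for q in raw)          # arbitrary unit quaternion

M = left_matrix(m)
MtM = [[sum(M[k][i] * M[k][j] for k in range(4)) for j in range(4)] for i in range(4)]
orth_residual = max(abs(MtM[i][j] - (i == j)) for i in range(4) for j in range(4))
print("orthogonality residual:", orth_residual)
print("det M =", det(M))
```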

Another way of using an arbitrary preferred direction is implicitly present in the theory of generalized L-matrices worked out by Poleshchikov (2003), yet its geometrical interpretation, analogous to the one we propose, would require additional effort.

Performing the canonical extension of the KS coordinate (point) transformation, we went a step further than usual, providing a direct expression for the new momenta \(\mathsf {V}\) in terms of \({\varvec{x}}\) and \({\varvec{X}}\). This has revealed the dependence of the KS momenta on the Cartesian angular momentum vector. The explicit relation between the Fradkin tensor and the Laplace vector, derived in this paper, is another novelty, at least to our knowledge.

While working on some parts of the present study, we were occasionally surprised by the power of quaternion algebra when applied to the KS formulation of Deprit et al. (1994). It was not our intention to contradict the part of their conclusions that praised symbolic processors and lengthy calculations, but some proofs happened to be shorter than expected and we could not help it.

Notes

The disparity of indices associated with \(x_i\) and \(\sigma _j\) was originally not visible, since Kustaanheimo used a different set of Pauli matrices (namely \(\mathbf {i}_x = \sigma _3\), \(\mathbf {i}_y = \sigma _1\), and \(\mathbf {i}_z = - \sigma _2\)). It took some time until physicists switched to \(\mathbf {S}= \sum _{j=0}^3 x_j \sigma _j\), the KS3 convention.

Setting their additional parameter \(\alpha =1\).

The equivalent equation (22) of Deprit et al. (1994) is incomplete: it omits \(X_0^2\).

References

Bellandi Filho, J., Menon, M.: The Kustaanheimo–Stiefel transformation in a spinor representation. Rev. Bras. Fis. 17(2), 302–309 (1987)

Cartan, E.: The Theory of Spinors. MIT Press, Cambridge (1966)

Cordani, B.: The Kepler Problem. Springer Basel AG, Basel (2003)

Deprit, A., Elipe, A., Ferrer, S.: Linearization: Laplace vs. Stiefel. Celest. Mech. Dyn. Astron. 58, 151–201 (1994). doi:10.1007/BF00695790

Duru, I., Kleinert, H.: Solution of the path integral for the H-atom. Phys. Lett. 84B(2), 185–188 (1979)

Fradkin, D.: Existence of the dynamic symmetries \(O_4\) and \(SU_3\) for all classical central potential problems. Prog. Theor. Phys. 37(5), 798–812 (1967)

Kurcheeva, I.V.: Kustaanheimo–Stiefel regularization and nonclassical canonical transformations. Celest. Mech. 15, 353–365 (1977). doi:10.1007/BF01228427

Kustaanheimo, P.: Spinor regularization of the Kepler motion. Ann. Univ. Turku. Ser. A 73, 1–7 (1964)

Kustaanheimo, P., Stiefel, E.: Perturbation theory of Kepler motion based on spinor regularization. J. Reine Angew. Math. 218, 204–219 (1965)

Langner, K., Breiter, S.: KS variables in rotating reference frame. Application to cometary dynamics. Astrophys. Space Sci. 357, 153 (2015). doi:10.1007/s10509-015-2384-6

Morais, J.P., Georgiev, S., Sprössig, W.: Real Quaternionic Calculus Handbook. Birkhäuser, Basel (2014). doi:10.1007/978-3-0348-0622-0

Poleshchikov, S.M.: Regularization of motion equations with L-transformation and numerical integration of the regular equations. Celest. Mech. Dyn. Astron. 85, 341–393 (2003)

Roa, J., Urrutxua, H., Peláez, J.: Stability and chaos in Kustaanheimo–Stiefel space induced by the Hopf fibration. Mon. Not. RAS 459, 2444–2454 (2016). doi:10.1093/mnras/stw780, arXiv:1604.06673

Saha, P.: Interpreting the Kustaanheimo-Stiefel transform in gravitational dynamics. Mon. Not. RAS 400, 228–231 (2009). doi:10.1111/j.1365-2966.2009.15437.x, arXiv:0803.4441

Steane, A.M.: An Introduction to Spinors. ArXiv e-prints arXiv:1312.3824 (2013)

Stiefel, E., Scheifele, G.: Linear and Regular Celestial Mechanics. Springer, Berlin (1971)

Vivarelli, M.D.: The KS-transformation in hypercomplex form. Celest. Mech. 29, 45–50 (1983). doi:10.1007/BF01358597

Vivarelli, M.D.: Geometrical and physical outlook on the cross product of two quaternions. Celest. Mech. 41, 359–370 (1988)

Volk, O.: Miscellanea from the history of Celestial Mechanics. Celest. Mech. 14, 365–382 (1976). doi:10.1007/BF01228523

Waldvogel, J.: Quaternions and the perturbed Kepler problem. Celest. Mech. Dyn. Astron. 95, 201–212 (2006). doi:10.1007/s10569-005-5663-7

Waldvogel, J.: Quaternions for regularizing celestial mechanics: the right way. Celest. Mech. Dyn. Astron. 102, 149–162 (2008). doi:10.1007/s10569-008-9124-y

Acknowledgements

Not by chance, the text was completed and submitted on the day marking the tenth anniversary of the death of Prof. André Deprit. We would like to dedicate this work to the memory of this unforgettable man of science and benevolent spirit of our Observatory. We thank the reviewers for their comments and bibliographic suggestions.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Breiter, S., Langner, K. Kustaanheimo–Stiefel transformation with an arbitrary defining vector. Celest Mech Dyn Astr 128, 323–342 (2017). https://doi.org/10.1007/s10569-017-9754-z

DOI: https://doi.org/10.1007/s10569-017-9754-z