Abstract

The combination of lapatinib and capecitabine (L-CAP) is effective in patients with HER-2 positive metastatic breast cancer (MBC). However, moderate to severe diarrhea and rash (≥ grade 2) are problematic dose-limiting toxicities. Since risk may vary over the course of therapy, we developed repeated measures models to predict the risk of ≥ grade 2 diarrhea and rash prior to each cycle of L-CAP. Data from 197 patients who received L-CAP as part of a clinical trial were reviewed (Cameron, Breast Cancer Res Treat 112:533–543, 2008). Generalized estimating equations were used to develop the risk models with a backward elimination process. Risk scoring algorithms were then derived from the final model coefficients. Finally, a receiver operating characteristic (ROC) curve analysis was undertaken to measure the predictive accuracy of the scoring algorithms. Patient age, presence of skin metastases at baseline, treatment being initiated in the spring, earlier cycles, and grade 1 diarrhea in the prior cycle were identified as significant predictors of ≥ grade 2 diarrhea. The ROC analysis indicated good predictive accuracy for the diarrhea algorithm, with an area under the curve of 0.78 (95 % CI: 0.72–0.82). Prior to each cycle of therapy, patients with risk scores > 125 units would be considered at high risk for developing ≥ grade 2 diarrhea. A similar prediction index was also derived for ≥ grade 2 rash. Our models provide patient-specific risk information that could be helpful in assessing the risks and benefits of L-CAP in patients with MBC.

Introduction

Lapatinib is an orally administered tyrosine kinase inhibitor of the epidermal growth factor receptor (EGFR, ErbB1) and human epidermal growth factor receptor-2 (HER-2) [1]. Early trials reported clinical activity in HER-2 positive breast cancer, even in patients with prior exposure to trastuzumab, suggesting a lack of cross-resistance [2]. In the pivotal trial that led to approval by regulatory agencies, patients with good performance status and advanced stage HER-2 positive breast cancer who had received prior treatment with trastuzumab, anthracyclines, and taxanes were randomized to receive lapatinib 1,250 mg daily with capecitabine (L-CAP) or capecitabine alone. At the interim analysis, the median time to progression was 8.4 months in the experimental group compared with 4.4 months in the control group (HR = 0.49; p = 0.0069) [3]. Since the threshold for statistical significance for the primary endpoint was met, the Data Safety and Monitoring Committee recommended terminating enrollment and allowing women in the control arm to be offered lapatinib [3, 4].

Despite the almost twofold increase in time to progression and substantial clinical activity in patients with brain metastases [4, 5], the L-CAP combination can be associated with significant dose-limiting toxicities (DLTs), particularly diarrhea and, to a lesser extent, skin rash [3, 5]. Moderate to severe diarrhea and skin rash (≥ grade 2, as defined by the National Cancer Institute Common Terminology Criteria for Adverse Events (CTCAE)) were reported in 20 and 5 % of patients in the pivotal trial, respectively [3]. Per the CTCAE, grade 2 diarrhea is defined as an increase of 4 to 6 stools per day over baseline, and grade 2 rash as a scattered macular or papular eruption or erythema with pruritus covering 10–30 % of body surface area. Diarrhea and rash are of clinical concern because they can lead to dose reductions and delays, reduce patient quality of life, increase health care resource use, and can even be life threatening [6–8].

Risk factors for diarrhea and rash have been identified, but they have not been formally quantified, nor are they specific to lapatinib [9, 10]. Therefore, DLTs such as diarrhea and rash during anticancer therapy are largely believed to be unpredictable. As a result, the traditional approach (acting only after a patient develops the toxicity) remains dominant, because no reliable method is available to predict when these episodes will occur, and in whom. The alternative, to intensively monitor all patients and place them on supportive care interventions for every cycle of therapy, is simply too expensive and time consuming for most health care systems to sustain. What may be possible, however, is a highly focused strategy based on mathematical modeling that accurately identifies patients at higher than average risk and is applied “just-in-time” to preempt DLTs such as diarrhea and rash. In other words, it should be possible and economical to intervene preventatively if we knew who was at higher risk and when (i.e., at what cycle) the risk would become unacceptably high. Such predictive models could then be made available as an “add-on” to existing computer-based chemotherapy ordering systems or delivered through a hand-held application.

To our knowledge, models for predicting the risk of diarrhea and rash in breast cancer patients receiving small molecule targeted therapies such as lapatinib have not been previously described. Therefore, cycle-based repeated measures prediction models for ≥ grade 2 diarrhea and rash were developed for patients receiving L-CAP. The advantage of a repeated measures approach over a model using baseline data only is that the former allows risk to be reassessed after each additional cycle of anticancer therapy. Repeated measures models have been successfully developed for other toxicities in cancer patients, such as neutropenia and hand-foot skin reaction [11, 12]. In this study, we describe the development of two independent repeated measures (i.e., by cycle) prediction models, with associated scoring algorithms, for ≥ grade 2 diarrhea and rash in patients receiving L-CAP.

Methods

Patients and treatment

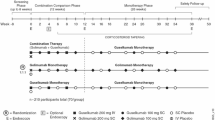

Patient data for developing the repeated measures risk indexes for ≥ grade 2 diarrhea and rash with L-CAP were obtained from the study initially reported by Geyer and colleagues [3]. This was a large randomized trial comparing L-CAP with capecitabine alone in patients with advanced stage HER-2 positive breast cancer [3, 4]. In that trial, a total of 399 patients previously exposed to trastuzumab, anthracyclines, and taxanes were randomized to receive capecitabine (2,000 mg/m² on days 1 through 14 of a 21-day cycle) plus oral lapatinib 1,250 mg daily (n = 198) or capecitabine (2,500 mg/m² on days 1 through 14 of a 21-day cycle) alone [3, 4].

Baseline data collection included patient demographic information, performance status, biochemistry, number and site of metastases, prior therapies, and duration of prior trastuzumab. The median dose per cycle of capecitabine and lapatinib delivered in the experimental group was 22,500 and 26,250 mg, respectively [3, 4]. The mean duration of lapatinib therapy was 135 days, over a median of 6 cycles (range 1–36). Over a total of 1,617 cycles of L-CAP therapy, there were 93 ≥ grade 2 diarrhea events and 19 ≥ grade 2 rash events, as defined by the CTCAE. Therefore, two independent risk models were developed, one for ≥ grade 2 diarrhea and one for ≥ grade 2 rash, over a median of 6 cycles of L-CAP combination therapy.

Predictive factors for diarrhea and rash and the development of risk algorithms

There have been reports that diarrhea in cancer patients receiving chemotherapy is more prevalent in the elderly, when treatment is started in the spring or summer months, in earlier cycles of therapy, and in patients who experienced grade 1 diarrhea in a prior cycle [9, 13, 14]. Similarly, reported risk factors for rash in patients receiving targeted therapies include gender, age, and skin phototype [10, 15]. Certain polymorphisms have also been associated with these toxicities [16]. Therefore, these potential risk factors, as well as others captured within the clinical trial, were considered in the development of each prediction model and its associated scoring algorithm.

Patient demographic and clinical variables were screened for possible inclusion in each risk model. To identify the set of factors with the largest potential contribution to diarrhea and skin rash risk, those with a p value of 0.25 or less in a simple (univariate) logistic regression, with diarrhea or skin rash as the dependent variable, were retained for further consideration. This is a recommended approach for removing weak prognostic covariates so that a more manageable set of variables can be submitted to multivariable techniques [17].
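For a yes/no candidate factor, the univariate screening step can be sketched in plain Python. This is an illustrative sketch only, not the Stata procedure used in the study: it assumes cycles are treated as independent at the screening stage (as in a simple logistic regression) and that the factor is binary; the function names and the 2×2 counts in the usage line are hypothetical. With a single binary predictor, the likelihood ratio statistic of a logistic regression equals the G statistic of the 2×2 table, so no iterative model fit is needed. Continuous candidates such as age or serum creatinine would require an actual logistic fit.

```python
import math


def lr_test_binary_predictor(a, b, c, d):
    """Likelihood ratio (G) test for a univariate logistic regression with a
    single binary predictor, computed from a 2x2 table of cycles:

                        event   no event
        factor present    a        b
        factor absent     c        d

    With one binary predictor the fitted model reproduces the two observed
    event proportions, so 2 * (ll_model - ll_null) equals the G statistic.
    """
    observed = [a, b, c, d]
    n = a + b + c + d
    row_present, row_absent = a + b, c + d
    col_event, col_none = a + c, b + d
    expected = [row_present * col_event / n, row_present * col_none / n,
                row_absent * col_event / n, row_absent * col_none / n]
    # Cells with an observed count of 0 contribute 0 (limit of o * ln(o / e)).
    g = 2.0 * sum(o * math.log(o / e) for o, e in zip(observed, expected) if o > 0)
    g = max(g, 0.0)  # guard against tiny negative values from rounding
    # Upper tail of a chi-square with 1 df: P(X > g) = erfc(sqrt(g / 2)).
    p = math.erfc(math.sqrt(g / 2.0))
    return g, p


def screen(candidates, threshold=0.25):
    """Return {factor: p} for factors whose univariate p value is <= threshold."""
    kept = {}
    for name, table in candidates.items():
        _, p = lr_test_binary_predictor(*table)
        if p <= threshold:
            kept[name] = p
    return kept


# Hypothetical 2x2 counts (a, b, c, d) for two made-up factors.
print(screen({"factor_x": (30, 70, 10, 90), "factor_y": (12, 88, 10, 90)}))
```

The liberal 0.25 threshold is deliberate: the screen is only meant to discard clearly uninformative factors before the multivariable step.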

Generalized estimating equations (GEE), which account for the clustering of repeated cycles of therapy within each patient, were used to determine the final set of risk factors in each model [18, 19]. A GEE model was chosen because observations from multiple cycles within the same patient would be expected to violate the independence assumption of standard logistic regression. The set of initially retained risk factors was analyzed in the GEE model. The likelihood ratio test was then used in a backward elimination process (p < 0.05 to retain) to select the final set of risk factors for retention in the model. The goodness of fit of the final models was then assessed with the Hosmer–Lemeshow test. Model calibration was also evaluated by estimating a smooth calibration line between the observed and predicted outcomes [20]. The slope of the calibration line would equal one (optimal) if the observed and predicted probabilities agreed perfectly.
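The Hosmer–Lemeshow statistic compares observed and expected event counts within groups of predicted risk. The following is a minimal pure-Python sketch, not the Stata routine used in the study. It assumes the conventional ten groups of roughly equal size after sorting by predicted probability (so df = 8, and the chi-square tail probability has a closed form for an even number of degrees of freedom), assumes every group is non-empty with a mean predicted risk strictly between 0 and 1, and, like the textbook test, ignores within-patient clustering. Function names are illustrative.

```python
import math


def chi2_sf_even_df(x, df):
    """Upper-tail probability of a chi-square variable with an even number
    of degrees of freedom: exp(-x/2) * sum_{i < df/2} (x/2)**i / i!."""
    if df <= 0 or df % 2:
        raise ValueError("df must be a positive even integer")
    half = x / 2.0
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total


def hosmer_lemeshow(y, p, groups=10):
    """Hosmer-Lemeshow statistic, df and p value.

    y: 0/1 outcomes; p: predicted probabilities. Observations are sorted by
    predicted risk and split into `groups` chunks of (roughly) equal size.
    """
    pairs = sorted(zip(p, y))
    n = len(pairs)
    stat = 0.0
    for g in range(groups):
        chunk = pairs[g * n // groups:(g + 1) * n // groups]
        n_g = len(chunk)
        observed = sum(yi for _, yi in chunk)
        expected = sum(pi for pi, _ in chunk)
        mean_p = expected / n_g
        # (O - E)^2 / (n * p * (1 - p)) combines the event and non-event cells.
        stat += (observed - expected) ** 2 / (n_g * mean_p * (1 - mean_p))
    df = groups - 2
    return stat, df, chi2_sf_even_df(stat, df)


# Illustrative, perfectly calibrated data: 20 cycles at each of 10 risk levels.
probs = [(2 * k + 1) / 20 for k in range(10) for _ in range(20)]
outcomes = []
for k in range(10):
    outcomes += [1] * (2 * k + 1) + [0] * (20 - (2 * k + 1))
print(hosmer_lemeshow(outcomes, probs))
```

A large statistic (small p value) signals poor agreement between predicted and observed risk; a non-significant result is consistent with, but does not prove, adequate fit.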

Nonparametric bootstrapping was then applied to assess the internal validity of the final prediction models [21, 22]. Resampled data (1,000 iterations) were used to generate bootstrap estimates of the regression coefficients of each multivariable model. The confidence intervals of the regression coefficient estimates from the bootstrap sampling were then compared with those calculated by the GEE regression analysis.
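The bootstrap step can be sketched as follows. The text does not state how the data were resampled; this sketch assumes a cluster bootstrap, in which whole patients are drawn with replacement so that all cycles from a sampled patient stay together (a natural choice for repeated measures data), and uses a percentile confidence interval. To keep the example self-contained, the statistic is a simple per-cycle event rate; in the actual analysis the statistic would be the refitted GEE coefficients. All names and data are hypothetical.

```python
import random


def cluster_bootstrap_ci(cycles_by_patient, statistic, n_boot=1000, alpha=0.05, seed=2014):
    """Percentile CI from a nonparametric bootstrap that resamples whole
    patients (clusters) with replacement.

    cycles_by_patient: {patient_id: [0/1 outcome for each cycle]}
    statistic: function taking a list of per-patient cycle lists.
    """
    rng = random.Random(seed)
    patients = list(cycles_by_patient)
    estimates = []
    for _ in range(n_boot):
        # Draw as many patients as in the original sample, with replacement.
        sample = [cycles_by_patient[rng.choice(patients)] for _ in patients]
        estimates.append(statistic(sample))
    estimates.sort()
    lo = estimates[int((alpha / 2) * n_boot)]
    hi = estimates[int((1 - alpha / 2) * n_boot) - 1]
    return lo, hi


def event_rate_per_cycle(sample):
    """Pooled proportion of cycles with an event across sampled patients."""
    events = sum(sum(cycles) for cycles in sample)
    total = sum(len(cycles) for cycles in sample)
    return events / total


# Hypothetical data: 30 patients, 6 cycles each, with 0, 1 or 2 events.
data = {f"pt{i}": [1 if j < (i % 3) else 0 for j in range(6)] for i in range(30)}
print(cluster_bootstrap_ci(data, event_rate_per_cycle))
```

Resampling patients rather than individual cycles preserves the within-patient correlation that motivated the GEE model in the first place.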

From the GEE regression model results, the contribution of each individual risk factor to diarrhea and skin rash risk was weighted by its final model coefficient. To simplify calculations with these weights, the coefficients were transformed by multiplying each by a constant (derived by trial and error) and then rounding to the nearest whole number. A summary diarrhea or skin rash risk score was then assigned to each patient by adding up the transformed coefficient values (points) for each risk factor the patient possessed.
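The coefficient-to-points transformation can be illustrated with a short sketch. The coefficients, factor names, scaling constant, and offset below are hypothetical and are not the values in Table 3; rounding is assumed to be half away from zero, and numeric factors such as age are assumed to contribute points per unit.

```python
import math


def coefficients_to_points(coefficients, scale):
    """Multiply each regression coefficient by a constant and round half
    away from zero to the nearest integer number of points."""
    points = {}
    for name, beta in coefficients.items():
        scaled = beta * scale
        points[name] = int(math.copysign(math.floor(abs(scaled) + 0.5), scaled))
    return points


def risk_score(points, patient, offset=0):
    """Sum points over the patient's factors (0/1 indicators or numeric
    values such as age in years), plus a constant offset."""
    return offset + sum(points[name] * value for name, value in patient.items())


# Hypothetical coefficients and scaling constant, for illustration only.
pts = coefficients_to_points({"age_per_year": 0.04, "factor_a": 0.72, "factor_b": -1.2}, 25)
print(pts)
print(risk_score(pts, {"age_per_year": 60, "factor_a": 1, "factor_b": 0}, offset=100))
```

Because every coefficient is multiplied by the same constant, the rounded points preserve the relative weights of the factors while keeping the arithmetic simple enough for bedside use.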

The predictive accuracy of the final risk scoring indexes for diarrhea and skin rash was then determined by measuring the sensitivity, specificity, and area under the receiver operating characteristic (ROC) curve [23, 24]. Discrimination refers to the ability of a diagnostic test or predictive tool to correctly distinguish patients at low and high risk for the event under investigation, and is often presented as the area under the ROC curve (AUC). A predictive instrument with an AUC of ≥ 0.70 is considered to have good discrimination, whereas an AUC of 0.5 is equivalent to a “coin toss.” The statistical analyses were performed using Stata, version 11.0 (StataCorp, College Station, Texas, USA).
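The AUC has a direct interpretation: the probability that a randomly chosen cycle with an event has a higher risk score than a randomly chosen cycle without one (ties counted as one half), which is the Mann–Whitney formulation. A minimal sketch of that calculation, together with sensitivity and specificity at a cutoff, is shown below; it is illustrative pure Python rather than the Stata commands used in the study, and it classifies "score > cutoff" as high risk to mirror the "> 125" convention in the text.

```python
def roc_auc(scores, outcomes):
    """AUC as P(score of an event > score of a non-event), ties count 1/2."""
    pos = [s for s, y in zip(scores, outcomes) if y == 1]
    neg = [s for s, y in zip(scores, outcomes) if y == 0]
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def sensitivity_specificity(scores, outcomes, cutoff):
    """Treat score > cutoff as high risk; return (sensitivity, specificity)."""
    tp = sum(1 for s, y in zip(scores, outcomes) if s > cutoff and y == 1)
    fn = sum(1 for s, y in zip(scores, outcomes) if s <= cutoff and y == 1)
    tn = sum(1 for s, y in zip(scores, outcomes) if s <= cutoff and y == 0)
    fp = sum(1 for s, y in zip(scores, outcomes) if s > cutoff and y == 0)
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical scores and outcomes for four cycles.
print(roc_auc([10, 20, 30, 40], [0, 1, 0, 1]))
print(sensitivity_specificity([10, 20, 30, 40], [0, 1, 0, 1], cutoff=25))
```

The pairwise loop is quadratic but adequate for a data set of this size (93 events by roughly 1,500 non-event cycles).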

Results

The clinical and disease characteristics of patients in the model derivation sample are presented in Table 1. Patients who received L-CAP had a median age of 53 years, and over 95 % had a good performance status (as per trial protocol). Prior to the first cycle of anticancer therapy, hematological parameters were within normal limits, and the median number of metastatic sites was two, with liver and bone the most common. Approximately 23 and 18 % of patients started therapy in the spring and summer months, respectively. A total of 1,617 cycles of L-CAP therapy were delivered over a 4–5-month period, and there were 208 grade 1 diarrhea episodes. With respect to the primary endpoints, there were 93 ≥ grade 2 diarrhea episodes and 19 ≥ grade 2 skin rash episodes, for overall event rates per cycle of 5.8 and 1.2 %, respectively (Table 1).
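The per-cycle event rates follow directly from the counts reported above, with every delivered cycle as the denominator. The short calculation below reproduces them; the grade 1 rate is derived from the same counts and is not reported in the text.

```python
cycles = 1617
events = {
    "grade 1 diarrhea": 208,
    ">= grade 2 diarrhea": 93,
    ">= grade 2 rash": 19,
}

# Event rate per cycle, in percent, using all delivered cycles as denominator.
rates = {name: 100 * n / cycles for name, n in events.items()}
for name, pct in rates.items():
    print(f"{name}: {pct:.1f} % of cycles")
```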

Development of a risk prediction model for diarrhea

After the initial univariate screening of potential predictors, the variables associated with ≥ grade 2 diarrhea that were retained for further analysis were age; presence of bone and skin metastases at baseline; treatment being initiated in the spring; serum creatinine, albumin, and total bilirubin levels in the prior cycle; and grade 1 diarrhea in the prior cycle. The diarrhea prediction model was then developed with multivariable GEE regression analysis and backward elimination. The final variables retained in the model as significant predictors of diarrhea were older patient age, skin metastases at baseline, therapy being started in the spring, and grade 1 diarrhea in the prior cycle (Table 2). A negative association between risk and number of cycles was also identified: the risk of diarrhea was highest in the earliest cycles and declined by about 12 % with each additional cycle (Table 2). The confidence intervals of the regression coefficient estimates from the bootstrap sampling were comparable with those calculated by the GEE regression analysis, supporting the internal validity of the model.
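To show how a constant per-cycle decline compounds over a course of therapy, the sketch below assumes that the "about 12 %" figure corresponds to a per-cycle odds ratio of roughly 0.88 in the logistic GEE model, with all other factors (including prior grade 1 diarrhea) held fixed. The 0.88 value and the 24 % starting risk, taken from the worked example later in the Results, are used only for illustration; the function name is hypothetical.

```python
def risk_after_cycles(first_cycle_risk, odds_ratio_per_cycle, extra_cycles):
    """Project risk forward when each additional cycle multiplies the odds of
    the event by a constant odds ratio, all other factors held fixed."""
    odds = first_cycle_risk / (1 - first_cycle_risk)
    odds *= odds_ratio_per_cycle ** extra_cycles
    return odds / (1 + odds)


# Assumed: 24 % risk in cycle 1 and a per-cycle odds ratio of about 0.88.
for k in range(6):
    print(f"cycle {k + 1}: {risk_after_cycles(0.24, 0.88, k):.1%}")
```

Working on the odds scale keeps every projected risk between 0 and 1, which would not be guaranteed by subtracting a fixed percentage from the risk itself.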

Development of a diarrhea scoring system

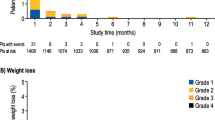

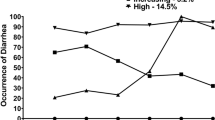

A risk scoring system was then developed from the point estimates of the regression coefficients and the intercept generated from the analysis. Each final regression coefficient retained in the model provided a statistical weight for that factor’s contribution to the overall risk of diarrhea. The scoring system was then adjusted by adding a constant to all scores to ensure that none were below zero. The final product was a scoring system ranging from 0 to 250, in which higher scores were associated with an increased risk of a diarrhea event (Table 3 and Fig. 1).

Factors that increase the overall score are considered positive risk factors for diarrhea. For instance, patient age would be added to the baseline score of 100, so an older patient would be at higher risk for diarrhea than a younger patient (Table 3). In contrast, patients with skin metastases at baseline are at reduced risk for diarrhea, so such patients would have 50 units subtracted from their cumulative risk score. This risk scoring system can then be applied to an individual patient prior to the start of L-CAP and again prior to each subsequent cycle in order to monitor the risk of ≥ grade 2 diarrhea throughout the entire course of therapy. As an illustration, a 60-year-old HER-2 positive patient (base score = 100) with bone-only metastases who started lapatinib and capecitabine in the spring would have a risk score of 185 units prior to starting her first cycle. This would correspond to a model-estimated diarrhea risk of approximately 24 % during the first cycle of therapy (Figs. 1 and 3). However, as therapy continues, the risk of diarrhea would decline with each additional cycle (Fig. 2). These findings therefore suggest that the most critical period for dose-limiting diarrhea with L-CAP is during the first few cycles of therapy.

All patients in the sample were then assigned a risk score based on the algorithm (Table 3), and an ROC analysis was performed. The area under the ROC curve was good at 0.78 (95 % CI: 0.72–0.82), supporting the internal validity of the scoring algorithm.

The final step in the development of the diarrhea prediction tool was the identification of a high-risk score threshold, or “cutoff,” that optimizes sensitivity and specificity. Four risk score categories were developed (Table 4). The analysis identified a risk score of > 125 as the threshold at which sensitivity and specificity were optimal, keeping in mind that tradeoffs between these two measures need to be made (Table 4). Hence, a risk score threshold of > 125 would capture patients with a diarrhea risk of at least 4.2 % prior to each cycle of L-CAP therapy (Fig. 2).
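The text does not name the criterion used to balance sensitivity and specificity. One common choice is Youden's J (sensitivity + specificity − 1), and the sketch below assumes that criterion, scanning every observed score as a candidate cutoff with "score > cutoff" treated as high risk. The scores and outcomes in the usage line are hypothetical.

```python
def best_cutoff_youden(scores, outcomes):
    """Return (cutoff, J) maximizing Youden's J = sensitivity + specificity - 1,
    where score > cutoff is classified as high risk."""
    n_pos = sum(outcomes)
    n_neg = len(outcomes) - n_pos
    best = None
    for c in sorted(set(scores)):
        tp = sum(1 for s, y in zip(scores, outcomes) if s > c and y == 1)
        tn = sum(1 for s, y in zip(scores, outcomes) if s <= c and y == 0)
        j = tp / n_pos + tn / n_neg - 1
        if best is None or j > best[1]:
            best = (c, j)
    return best


# Hypothetical risk scores (one per cycle) and observed outcomes.
print(best_cutoff_youden([50, 80, 110, 130, 150, 170], [0, 0, 0, 1, 0, 1]))
```

Youden's J weights sensitivity and specificity equally; if missing a high-risk cycle were judged more costly than an unnecessary preventive intervention, a lower cutoff could be chosen deliberately.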

Development of a risk prediction model and algorithm for rash

A similar process was used to develop the risk prediction algorithm for rash. However, with only 19 events, it must be acknowledged that there was limited power to identify statistically significant predictors of skin rash. Using a backward elimination process with a preset alpha of 0.05, the factors significantly associated with the development of ≥ grade 2 rash were the presence of brain metastases at baseline, the planned dose of capecitabine (in grams) for the given cycle, and the concomitant use of 5HT3 antiemetics (Table 2).

From the regression coefficients of these predictive factors, a scoring algorithm ranging from 0 to 150 was developed, in which higher scores were associated with an increased risk of skin rash. From the estimated risk score, the probability of developing ≥ grade 2 skin rash during that cycle of lapatinib therapy can then be read from Fig. 2. As with the diarrhea model, all patients in the sample were then assigned a risk score based on this algorithm. The ROC curve analysis suggested an acceptable AUC (0.67; 95 % CI: 0.54–0.81), but with somewhat weaker predictive accuracy than the diarrhea scoring algorithm.

The final step in the development of the skin rash prediction tool was the identification of a high-risk score threshold that optimizes sensitivity and specificity. Four risk score categories were developed (Table 4). The analysis identified a risk score of > 40 as the threshold at which sensitivity and specificity were optimal. A risk score threshold of > 40 would capture patients with a skin rash risk of at least 1.6 % prior to each cycle of L-CAP therapy (Fig. 2).

Discussion

Diarrhea and rash are frequent adverse events (AEs) with agents targeting HER1 and HER2 [25]. Our study describes the development of predictive models and scoring algorithms for ≥ grade 2 (moderate to severe) diarrhea and skin rash in patients with advanced stage HER-2 positive breast cancer receiving lapatinib and capecitabine. From an initial set of 31 and 25 potential predictor variables for diarrhea and skin rash, respectively, the final independent risk models each contained 4–5 variables retained by statistical means. In the case of diarrhea, the identification of older patient age, therapy being started in the spring, a higher incidence in earlier cycles, and grade 1 diarrhea in a prior cycle as risk factors was not unexpected and is consistent with earlier reports [9, 13, 14]. However, a reduced risk of diarrhea in patients with skin metastases has not, to our knowledge, been previously reported and warrants further investigation.

In the case of skin rash, risk increased with higher doses of capecitabine, in patients with brain metastases, and when 5HT3 antiemetics were used concomitantly. Since risk factors for skin rash in patients receiving the L-CAP combination have not previously been evaluated, the identification of these predictors should be considered exploratory, requiring confirmation in a planned external validation study.

There has been considerable research in the area of prediction modeling, but the majority of work has focused on neutropenia and its associated complications [11, 20]. To our knowledge, these are the first predictive tools developed for moderate to severe diarrhea and skin rash in patients receiving targeted therapy with lapatinib. Diarrhea and skin rash are both associated with substantial morbidity, reduced patient quality of life, and high costs to the health care system [7, 8, 26, 27]. Therefore, the ability to preempt these episodes with validated mathematical algorithms could improve patient quality of life, reduce pain and suffering, and avoid unnecessary health care expenditures.

Important advantages of our repeated measures models are that they are straightforward to apply and able to discriminate between high and low risk patients. The risk threshold for medical decision-making can also be shifted up or down, depending on a patient’s risk tolerance. Such scoring systems can also be incorporated into chemotherapy ordering systems or even delivered through a hand-held device. Another advantage of a cycle-based prediction model over one that uses prechemotherapy baseline variables only is that the latter cannot predict at what cycle the risk becomes elevated. With only a baseline risk assessment, the clinician would be unable to forewarn the patient and target the appropriate use of preventative therapies when risk becomes unacceptably high.

Despite these attributes, there are a number of limitations of the models that need to be acknowledged. The current risk prediction algorithms for diarrhea and skin rash should be seen as works in progress because they have yet to undergo external and temporal validation in a new sample of patients [20, 28, 29]. Validation studies to address these limitations are currently underway. The models considered only readily measurable variables that were captured in the clinical trial; for instance, data on patient skin phototype were not available. Therefore, not all of the variability was accounted for in our analysis. In the sample of 197 patients, there were only 19 moderate to severe skin rash events (1.2 % of cycles), which challenged model development because there should be at least 10 events per variable retained in a model [30]. The model development sample consisted of patients enrolled in a clinical trial. It has been well documented that trial patients are predominantly Caucasian, younger, and have a better overall performance status than non-trial patients [31]. As a result, such drawbacks could compromise the generalizability of our models to patients outside the clinical trial setting.
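The events-per-variable concern can be made concrete with the counts reported in the Results. The sketch assumes five retained diarrhea predictors (age, skin metastases, spring start, cycle number, and prior grade 1 diarrhea) and three retained rash predictors, as listed in the text; the helper name is illustrative.

```python
def events_per_variable(events, n_variables):
    """Ratio of outcome events to variables in the model."""
    return events / n_variables


# (events, retained variables) as described in the Results section.
models = {"diarrhea": (93, 5), "rash": (19, 3)}
for name, (events, k) in models.items():
    epv = events_per_variable(events, k)
    verdict = "meets" if epv >= 10 else "falls short of"
    print(f"{name}: {epv:.1f} events per variable ({verdict} the 10:1 rule of thumb)")
```

By this measure the diarrhea model clears the threshold comfortably, while the rash model does not, which is consistent with its wider AUC confidence interval.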

Despite these limitations, we provide an important starting point for optimizing the use of this active drug combination. The mathematical algorithms were easy to apply and able to identify patients at high risk prior to each cycle of lapatinib and capecitabine. The external validation and eventual clinical application of these prediction tools will be an important source of patient-specific risk information for the practicing oncologist and could enhance patient care by enabling preventative strategies to be used proactively.

References

Konecny GE, Pegram MD, Venkatesan N et al (2006) Activity of dual kinase inhibitor lapatinib against HER-2 overexpressing and trastuzumab-treated breast cancer cells. Cancer Res 66:1630–1639

Nelson MH, Dolder CR (2006) Lapatinib: a novel dual tyrosine kinase inhibitor with activity in solid tumors. Ann Pharmacother 40:261–269

Geyer CE, Forster J, Lindquist D et al (2006) Lapatinib plus capecitabine for HER2-positive advanced breast cancer. N Engl J Med 355:2733–2743

Cameron D, Casey M, Press M et al (2008) A phase III randomized comparison of lapatinib plus capecitabine versus capecitabine alone in women with advanced breast cancer that has progressed on trastuzumab: updated efficacy and biomarker analyses. Breast Cancer Res Treat 112:533–543

Bachelot T, Romieu G, Campone M et al (2013) Lapatinib plus capecitabine in patients with previously untreated brain metastases from HER2-positive metastatic breast cancer: a single-group phase 2 study. Lancet Oncol 14:64–71

Grem JL, Shoemaker DD, Petrelli NJ et al (1987) Severe life threatening toxicities observed using leucovorin with 5-fluorouracil. J Clin Oncol 5:1704–1712

Arnold RJ, Gabrail N, Raut M et al (2005) Clinical implications of chemotherapy-induced diarrhea in patients with cancer. J Support Oncol 3:227–232

Dranitsaris G, Maroun J, Shah A (2005) Severe chemotherapy-induced diarrhea in patients with colorectal cancer: a cost of illness analysis. Support Care Cancer 13:18–24

Maroun JA, Anthony LB, Blais N et al (2007) Prevention and management of chemotherapy-induced diarrhea in patients with colorectal cancer: a consensus statement by the Canadian working group on chemotherapy-induced diarrhea. Curr Oncol 14:13–20

Luu M, Boone SL, Patel J et al (2011) Higher severity grade of erlotinib-induced rash is associated with lower skin phototype. Clin Exp Dermatol 36:733–738

Dranitsaris G, Rayson D, Vincent M et al (2008) Identifying patients at high risk for neutropenic complications during chemotherapy for metastatic breast cancer with doxorubicin or pegylated liposomal doxorubicin: the development of a prediction model. Am J Clin Oncol 31:369–374

Dranitsaris G, Vincent M, Yu J et al (2012) Development and validation of a prediction index for hand foot skin reaction in cancer patients receiving sorafenib. Ann Oncol 23:2103–2108

Arbuckle RB, Huber SL, Zacker C (2000) The consequences of diarrhea occurring during chemotherapy for colorectal cancer: a retrospective study. Oncologist 5:250–259

Cascinu S, Barni S, Labianca R et al (1997) Evaluation of factors influencing 5-fluorouracil-induced diarrhea in colorectal cancer patients. Support Care Cancer 5:314–317

Jatoi A, Green EM, Rowland KM Jr et al (2009) Clinical predictors of severe cetuximab-induced rash: observations from 933 patients enrolled in North Central Cancer Treatment Group study N0147. Oncology 77:120–123

Liu S, Kurzrock R (2014) Toxicity of targeted therapy: implications for response and impact of genetic polymorphisms. Cancer Treat Rev 40:883–891

George SL (1988) Identification and assessment of prognostic factors. Semin Oncol 15:462–471

Allison PD (1999) Logistic regression using the SAS system: theory and application, chapter 8. SAS Institute Inc, Cary, NC, pp 79–216

Rabe-Hesketh S, Everitt B (2000) Statistical analysis using Stata, chapter 9. Chapman & Hall/CRC, pp 119–136

Lyman GH, Kuderer NM (2005) A primer in prognostic and predictive models: development and validation of neutropenia risk models. Support Cancer Ther 2:168–175

Steyerberg EW, Eijkemans MJ, Harrell FE Jr et al (2001) Prognostic modeling with logistic regression analysis: in search of sensible strategies in small data sets. Med Decis Making 21:45–56

Steyerberg EW, Harrell FE Jr, Borsboom GJ et al (2001) Internal validation of predictive models: efficiency of some procedures for logistic regression analysis. J Clin Epidemiol 54:774–781

Hanley JA, McNeil BJ (1982) The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology 143:29–36

McNeil BJ, Hanley JA (1984) Statistical approaches to the analysis of receiver operating characteristic (ROC) curves. Med Decis Making 4:137–150

Kollmannsberger C, Mitchell T (2013) Selected toxicities of targeted therapies: presentation and management. Semin Oncol 40:499–510

Rosen AC, Case EC, Dusza SW et al (2013) Impact of dermatologic adverse events on quality of life in 283 cancer patients: a questionnaire study in a dermatology referral clinic. Am J Clin Dermatol 14:327–333

Borovicka JH, Calahan C, Gandhi M et al (2011) Economic burden of dermatologic adverse events induced by molecularly targeted cancer agents. Arch Dermatol 147:1403–1409

Royston P, Moons KG, Altman DG, Vergouwe Y (2009) Prognosis and prognostic research: developing a prognostic model. BMJ 338:b604

Altman DG, Vergouwe Y, Royston P, Moons KG (2009) Prognosis and prognostic research: validating a prognostic model. BMJ 338:b605

Katz MH (2003) Multivariable analysis: a primer for readers of the medical literature. Ann Intern Med 138:644–650

Murthy VH, Krumholz HM, Gross CP (2004) Participation in cancer clinical trials: race-, sex-, and age-based disparities. JAMA 291:2720–2726

Acknowledgments

We are grateful to GlaxoSmithKline for providing access to the clinical trial database.

Conflict of interest

The corresponding author had full access to the data in the study and had final responsibility for the decision to submit the paper. This study was supported by an investigator-initiated research grant from GlaxoSmithKline. The sponsor had no input on the study design, data analysis, or preparation of the final manuscript. Drs. Dranitsaris and Lacouture have participated in advisory boards conducted by GlaxoSmithKline.

Funding

Funding for this study was provided by GlaxoSmithKline.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Dranitsaris, G., Lacouture, M.E. Development of prediction tools for diarrhea and rash in breast cancer patients receiving lapatinib in combination with capecitabine. Breast Cancer Res Treat 147, 631–638 (2014). https://doi.org/10.1007/s10549-014-3126-0
