Abstract

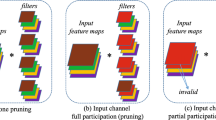

The deployment of convolutional neural networks (CNNs) on resource-constrained devices has been hindered by their large memory footprint and computational cost. To obtain a lightweight network, we propose the feature channels similarity and mutual learning fine tuning (FCS-MLFT) method. First, we focus on the similarity redundancy among the output feature channels of a CNN and propose a novel structured pruning criterion based on cosine similarity. Specifically, we use K-Means to cluster the convolution kernels into several bins according to the L1 norms of their corresponding feature maps, and then calculate the similarity values between feature channels within each bin. Second, unlike the traditional approach of reusing the training strategy to recover the accuracy of the compressed model, we apply mutual learning fine tuning (MLFT) to improve the accuracy of the compact model; the proposed method can match the accuracy of traditional fine tuning (TFT) while requiring significantly fewer epochs. The experimental results not only show that the FCS criterion outperforms existing criteria, such as kernel norm-based and layer-wise feature norm-based methods, but also demonstrate that the MLFT strategy reduces the number of fine-tuning epochs.
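The FCS criterion described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: each channel's feature map is assumed to be already flattened into one vector (for instance, averaged over a batch), K-Means runs in one dimension on the channels' L1 norms with evenly spaced initial centers, and the pruning rule (repeatedly find the most cosine-similar pair of surviving channels within a bin and drop the one with the smaller L1 norm) is an assumption, since the abstract does not state how the similarity values are turned into a pruning decision. The helper names `l1_norm`, `cosine`, `kmeans_1d`, and `fcs_prune` are hypothetical.

```python
import math


def l1_norm(vec):
    """L1 norm of a flattened feature map."""
    return sum(abs(x) for x in vec)


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    nu = math.sqrt(sum(x * x for x in u))
    nv = math.sqrt(sum(x * x for x in v))
    if nu == 0.0 or nv == 0.0:
        return 0.0
    return sum(a * b for a, b in zip(u, v)) / (nu * nv)


def kmeans_1d(values, k, iters=50):
    """Lloyd's K-Means on scalars; returns one bin label per value.

    Centers start evenly spaced between min and max (an assumed, deterministic init).
    """
    lo, hi = min(values), max(values)
    centers = [lo + (hi - lo) * (c + 0.5) / k for c in range(k)]
    labels = [0] * len(values)
    for _ in range(iters):
        labels = [min(range(k), key=lambda c: abs(v - centers[c])) for v in values]
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            if members:  # leave an empty bin's center where it is
                centers[c] = sum(members) / len(members)
    return labels


def fcs_prune(feature_maps, k, n_prune):
    """Return sorted indices of channels to prune (hypothetical greedy rule).

    feature_maps: one flattened feature-map vector per output channel.
    """
    norms = [l1_norm(f) for f in feature_maps]
    bins = kmeans_1d(norms, k)
    alive = set(range(len(feature_maps)))
    pruned = []
    while len(pruned) < n_prune:
        best = None  # (similarity, i, j) of the most similar same-bin pair
        survivors = sorted(alive)
        for a, i in enumerate(survivors):
            for j in survivors[a + 1:]:
                if bins[i] != bins[j]:
                    continue  # similarity is only compared inside a bin
                s = cosine(feature_maps[i], feature_maps[j])
                if best is None or s > best[0]:
                    best = (s, i, j)
        if best is None:
            break  # no bin still holds two surviving channels
        _, i, j = best
        victim = i if norms[i] <= norms[j] else j  # keep the larger-norm channel
        alive.remove(victim)
        pruned.append(victim)
    return sorted(pruned)


# Toy example: channel 1 is a scaled copy of channel 0, so it is the first to go.
maps = [
    [1.0, 2.0, 3.0],
    [0.5, 1.0, 1.5],
    [3.0, -2.0, 1.0],
    [10.0, 0.0, 10.0],
    [0.0, 10.0, 10.0],
]
print(fcs_prune(maps, k=2, n_prune=2))  # prints [1, 3]
```

One plausible motivation for binning before comparing is that it limits the pairwise similarity computations to channels whose activations have comparable magnitude, rather than comparing every channel with every other channel.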
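The abstract does not spell out the MLFT objective. Assuming it follows deep mutual learning (Zhang et al. 2018, listed in the references), each of two peer networks (for example, the pruned model and a second peer) minimizes its own cross-entropy plus the KL divergence from the other peer's predicted distribution to its own. The pure-Python sketch below computes these per-sample losses from raw logits; `softmax`, `cross_entropy`, `kl_div`, and `mutual_losses` are hypothetical helper names, and the choice of peers is an assumption.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, label):
    """Negative log-likelihood of the true class index."""
    return -math.log(probs[label])


def kl_div(p, q):
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


def mutual_losses(logits_a, logits_b, label):
    """Mutual-learning losses for two peers on one sample.

    Peer A minimises CE(y, p_a) + KL(p_b || p_a); peer B is symmetric.
    """
    p_a = softmax(logits_a)
    p_b = softmax(logits_b)
    loss_a = cross_entropy(p_a, label) + kl_div(p_b, p_a)
    loss_b = cross_entropy(p_b, label) + kl_div(p_a, p_b)
    return loss_a, loss_b


# Hypothetical logits for a 3-class sample whose true class is 0.
loss_pruned, loss_peer = mutual_losses([2.0, 0.5, -1.0], [1.0, 1.0, 0.0], label=0)
print(f"pruned model loss: {loss_pruned:.4f}, peer loss: {loss_peer:.4f}")
```

In an actual fine-tuning loop, each peer's KL term would treat the other peer's prediction as a fixed target during that peer's update, with gradients computed by a deep learning framework rather than by hand. When both peers predict the same distribution, the KL terms vanish and each loss reduces to plain cross-entropy.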

References

Krizhevsky A, Sutskever I, Hinton GE (2012) ImageNet classification with deep convolutional neural networks. In: Proceedings of the 26th annual conference on neural information processing systems (NIPS 2012), pp 1106–1114. Lake Tahoe, Nevada, USA

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), pp 770–778. Las Vegas, Nevada, USA

Ren S, He K, Girshick R, Sun J (2017) Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Trans Pattern Anal Mach Intell 39(6):1137–1149

Liu W, Anguelov D, Erhan D, Szegedy C, Reed SE, Fu C-Y, Berg AC (2016) SSD: single shot multibox detector. In: Proceedings of the European conference on computer vision (ECCV 2016), pp 21–37

Redmon J, Divvala S, Girshick RB, Farhadi A (2016) You only look once: unified, real-time object detection. In: 2016 IEEE conference on computer vision and pattern recognition (CVPR 2016), pp 779–788. Las Vegas, NV, USA

Chen LC, Papandreou G, Schroff F, Adam H (2017) Rethinking Atrous convolution for semantic image segmentation. arXiv:1706.05587

Venkateswara H, Chakraborty S, Panchanathan S (2017) Deep-learning systems for domain adaptation in computer vision: learning transferable feature representations. IEEE Signal Process Magazine 34(6):117–129

Kalchbrenner N, Espeholt L, Simonyan K, Oord AVD, Graves A, Kavukcuoglu K (2016) Neural machine translation in linear time. arXiv:1610.10099

Johnson R, Zhang T (2015) Semi-supervised convolutional neural networks for text categorization via region embedding. Adv Neural Inf Process Syst 28:919–927

Wang R, Li Z, Cao J, Chen T, Wang L (2019) Convolutional Recurrent Neural Networks for Text Classification. In: Proceedings of the 2019 International joint conference on neural networks (IJCNN 2019), pp 1–6. Budapest, Hungary

Zhang X, Zou J, Ming X, He K, Sun J (2015) Efficient and accurate approximations of nonlinear convolutional networks. In: Proceedings of the 2015 IEEE conference on computer vision and pattern recognition (CVPR 2015), pp 1984–1992. Boston

Hinton G, Vinyals O, Dean J (2015) Distilling the knowledge in a neural network. arXiv:1503.02531

Han S, Pool J, Tran J, Dally WJ (2015) Learning both weights and connections for efficient neural networks. arXiv:1506.02626

He Y, Lin J, Liu Z, Wang H, Li LJ, Han S (2018) AMC: AutoML for model compression and acceleration on mobile devices. In: Proceedings of the European conference on computer vision (ECCV 2018), pp 815–832. Munich, Germany

Li H, Kadav A, Durdanovic I, Samet H, Graf HP (2016) Pruning filters for efficient convnets. arXiv:1608.08710

Hu H, Peng R, Tai YW, Tang CK (2016) Network trimming: a data-driven neuron pruning approach towards efficient deep architectures. arXiv:1607.03250

Wang W, Zhu L, Guo B (2019) Reliable identification of redundant kernels for convolutional neural network compression. J Vis Commun Image Represent 63(102582):1–12

Prakosa SW, Leu JS, Chen ZH (2021) Improving the accuracy of pruned network using knowledge distillation. Pattern Anal Applic 24:819–830

Yuan L, Tay F, Li G, Wang T, Feng J (2020) Revisit knowledge distillation: a teacher-free framework. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR 2020)

Zhang Y, Xiang T, Hospedales TM, Lu H (2018) Deep Mutual Learning. In: 2018 IEEE conference on computer vision and pattern recognition (CVPR 2018), pp 4320–4328. Salt Lake City

Lebedev V, Ganin Y, Rakhuba M, Oseledets I, Lempitsky V (2014) Speeding-up convolutional neural networks using fine-tuned cp-decomposition. arXiv:1412.6553

Yu X, Liu T, Wang X, Tao D (2017) On Compressing Deep Models by Low Rank and Sparse Decomposition. In: 2017 IEEE conference on computer vision and pattern recognition (CVPR 2017), pp 67–76. Honolulu

Kim YD, Park E, Yoo S, Choi T, Yang L, Shin D (2016) Compression of deep convolutional neural networks for fast and low power mobile applications. In: Proceedings of the 2016 International conference on learning representations (ICLR), San Juan, Puerto Rico. arXiv:1511.06530

Calvi GG, Moniri A, Mahfouz M, Zhao Q, Mandic DP (2019) Compression and interpretability of deep neural networks via tucker tensor layer: from first principles to tensor valued back-propagation. arXiv:1903.06133

Tang R, Lu Y, Liu L, Mou L, Vechtomova O, Lin J (2019) Distilling task-specific knowledge from BERT into simple neural networks. arXiv:1903.12136

Romero A, Ballas N, Kahou SE, Chassang A, Gatta C, Bengio Y (2014) FitNets: hints for thin deep nets. In: Proceedings of the 2015 international conference on learning representations (ICLR), San Diego, CA, United States. arXiv:1412.6550

Yim J, Joo D, Bae J, Kim J (2017) A Gift from Knowledge Distillation: Fast Optimization, Network Minimization and Transfer Learning. In: 2017 IEEE conference on computer vision and pattern recognition (CVPR 2017), pp 7130–7138. Honolulu

Chen G, Choi W, Yu X, Han T, Chandraker M (2017) Learning efficient object detection models with knowledge distillation. In: Proceedings of the 2017 annual conference on neural information processing systems (NIPS 2017), pp 743–752. Long Beach, CA

Gupta S, Agrawal A, Gopalakrishnan K, Narayanan P (2015) Deep learning with limited numerical precision. In: Proceedings of the 2015 International conference on machine learning (ICML 2015), pp 1737–1746. Lille

Han S, Mao H, Dally WJ (2015) Deep compression: compressing deep neural networks with pruning, trained quantization and Huffman coding. In: Proceedings of the 2016 international conference on learning representations (ICLR 2016), San Juan, Puerto Rico. arXiv:1502.0255

Courbariaux M, Bengio Y, David JP (2015) BinaryConnect: training deep neural networks with binary weights during propagations. In: Proceedings of the 2015 annual conference on neural information processing systems (NIPS 2015), pp 3123–3131. Montreal, QC

Iandola FN, Han S, Moskewicz MW, Ashraf K, Dally WJ, Keutzer K (2016) SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and < 0.5MB model size. arXiv:1602.0736

Howard AG, Zhu M, Chen B, Kalenichenko D, Wang W, Weyand T, Andreetto M, Adam H (2017) MobileNets: efficient convolutional neural networks for mobile vision applications. arXiv:1704.04861

Hu J, Shen L, Sun G (2018) Squeeze-and-Excitation Networks. In: 2018 IEEE conference on computer vision and pattern recognition (CVPR 2018), pp 7132–7141. Salt Lake City

LeCun Y (1990) Optimal brain damage. Neural Inf Proc Syst 2(279):598–605

Hassibi B, Stork DG, Wolff GJ (1993) Optimal brain surgeon and general network pruning. In: Proceedings of the IEEE international conference on neural networks (ICNN 1993), pp 293–299. San Francisco

Luo JH, Wu J (2017) An entropy-based pruning method for CNN compression. arXiv:1706.05791

Luo JH, Wu J, Lin W (2017) ThiNet: a filter level pruning method for deep neural network compression. In: Proceedings of the 2017 IEEE international conference on computer vision (ICCV 2017), pp 5068–5076. Venice

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. In: Proceedings of the 2015 international conference on learning representations (ICLR 2015), San Diego, CA, United States. arXiv:1409.1556

Author information

Contributions

Wei Yang contributed to paper writing. Wei Yang and Yancai Xiao contributed to the whole revision process. All authors have read and agreed to the submitted version of the manuscript.

Ethics declarations

Conflict of interests

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work was supported by the National Natural Science Foundation of China under Grant 51577008.

About this article

Cite this article

Yang, W., Xiao, Y. Structured pruning via feature channels similarity and mutual learning for convolutional neural network compression. Appl Intell 52, 14560–14570 (2022). https://doi.org/10.1007/s10489-022-03403-9

DOI: https://doi.org/10.1007/s10489-022-03403-9