Abstract

Nowadays Artificial Intelligence (AI) has become a fundamental component of healthcare applications, both clinical and remote, but the best performing AI systems are often too complex to be self-explaining. Explainable AI (XAI) techniques are defined to unveil the reasoning behind the system’s predictions and decisions, and they become even more critical when dealing with sensitive and personal health data. It is worth noting that XAI has not gathered the same attention across different research areas and data types, especially in healthcare. In particular, many clinical and remote health applications are based on tabular and time series data, respectively, and XAI is not commonly analysed on these data types, while computer vision and Natural Language Processing (NLP) are the reference applications. To provide an overview of XAI methods that are most suitable for tabular and time series data in the healthcare domain, this paper provides a review of the literature in the last 5 years, illustrating the type of generated explanations and the efforts provided to evaluate their relevance and quality. Specifically, we identify clinical validation, consistency assessment, objective and standardised quality evaluation, and human-centered quality assessment as key features to ensure effective explanations for the end users. Finally, we highlight the main research challenges in the field as well as the limitations of existing XAI methods.

1 Introduction

Artificial Intelligence (AI) has in the last few years become a building block of modern health services, improving efficiency and providing concrete support to the decision-making process. However, the lack of transparency and interpretability of AI remains one of the main barriers to its adoption in clinical practice (Topol 2019), and even more so in those systems that require direct interaction with a non-expert user (e.g., remote patient monitoring and personalised support). In fact, in the healthcare domain we have to distinguish among different types of users dealing with AI systems: (i) the clinical/medical personnel (i.e., the domain experts), who need explanations to increase their trust in the system and, at the same time, can provide a clinical validation; (ii) the technical developer, who is in charge of the reliability of the model; (iii) the patient or monitored subject, who needs interpretable and personalised explanations. Explainable AI (XAI) techniques have the potential to support all these types of users by making AI models more expressive and improving human understanding and confidence in AI-empowered Decision Support Systems (DSSs) (Das and Rad 2020), but they generally do not offer a one-size-fits-all solution (Cinà et al. 2022).

In addition, the healthcare domain includes a variety of AI applications related to different research areas, each of them requiring appropriate explanations. To date, biomedical imaging (e.g., X-rays, CT scans, ultrasounds) is one of the most active XAI application fields, in which model classifications are explained by generating saliency maps that highlight the relevance of different image regions (aka super-pixels) to a given prediction (Tjoa and Guan 2020). It is generally applied in Computer-Aided Diagnosis (CAD) with different targets, such as cancer (Gulum et al. 2021) and COVID-19 (Mondal et al. 2021; Faruk 2021). However, many health applications are also based on other data types, such as tabular and time series data that can derive from clinical information, such as Electronic Health Records (EHRs), as well as from real-world data collected by IoT and personal mobile devices. The explainability of models applied to those data has not yet received the same attention from the research community. This trend is unexpected and in contrast with how widespread these data are in real life. EHRs are the major source of tabular data for clinical settings (Payrovnaziri et al. 2020), and contain rich, longitudinal, heterogeneous, and patient-specific information including demographics, clinical information, questionnaire outcomes, lab tests, and vital sign measurements. On the other hand, the recent diffusion of e-health and m-health systems offers increasing opportunities for remote health monitoring and decision making that heavily rely on the analysis of Multivariate Time Series (MTS) (Kok et al. 2022). The integration between these data sources and data-driven AI models may provide a fundamental contribution to the delivery of early, personalised, and high-quality care both in clinical and remote settings, and explainability becomes fundamental to provide effective explanations both to expert and non-expert users.

However, several existing XAI methods are currently not suitable for tabular data due to the unique characteristics that distinguish them from images (and also text records), such as the potential interactions between features and the coexistence of continuous and categorical predictors (Sahakyan et al. 2021). In addition, the majority of methods applied to time series data are generally adapted from computer vision and Natural Language Processing (NLP) fields in order to highlight which specific signal components get the most attention from the model while the classification is performed. As a result, these methods might not account for specific features of time series data, such as recurrent spatio-temporal patterns and correlations between multiple channels and/or sensing modalities. The unintuitive nature of some time series also poses additional challenges as even domain experts may struggle in understanding the information hidden in the most relevant signal components (Rojat et al. 2021).

Selecting existing XAI methods suitable for this data is not sufficient to effectively bring explainability to healthcare applications without an extensive assessment of the generated explanations (Markus et al. 2021). Clinical validation is currently one of the most widely discussed requirements to build trust in AI-empowered decision making in healthcare (Amann et al. 2020), as it is a critical step for a model to gain clinical credibility by matching data-driven knowledge with evidence-based assessment. In addition, evaluating the level of consistency of explanations generated by multiple methods may provide some preliminary insights into AI systems’ reliability, although sensitivity analysis may be needed to better expose model vulnerabilities and draw more accurate conclusions about stability and robustness properties (Linardatos et al. 2020). However, evaluating the clinical soundness and consistency of explanations enables neither a formal quality assessment nor a systematic comparison of XAI methods, and standard metrics and practices are still missing in the research community (Guidotti et al. 2018). According to Doshi-Velez and Kim (2017), quality evaluation approaches can be divided into functionality-grounded, application-grounded, and human-grounded. The first represents an initial and objective assessment of explanations based on the definition of quantitative metrics, which enable the selection of the best method regardless of end users’ needs and preferences. The other two approaches are complementary: human-in-the-loop evaluations are necessary to tune the explanations with respect to the target audience, by considering both domain experts and non-expert users (generally the patient). However, evaluating visual and textual explanations supplied by an algorithm is necessary but not sufficient to enable informed and confident decision-making if the interactivity with the end users is neglected (Arrieta et al. 2020). In fact, multidisciplinary collaboration is the premise to detect relevant interactions between end users and AI systems leading to a better interpretation of model predictions, which should be integrated by design or iteratively added to meet end users’ needs.

1.1 Contribution

This survey focuses on the application of XAI techniques to models learned from clinical data stored in EHRs, and real-world data collected by IoT and personal mobile devices. However, it is not sufficient to focus on the application of existing XAI methodologies in this field: it is essential to understand how explanations can be validated and evaluated to close the loop. For this reason, we investigated those works that also include clinical validation, consistency assessment, and quality evaluation of the proposed XAI techniques for healthcare. During our research we found several works experimenting with XAI methodologies without providing any kind of assessment of the generated explanations. We summarise them in a table to highlight the impact of this research field on the scientific literature, but then we focus on more structured studies that evaluate the explanations from different perspectives. Specifically, we consider clinical validation to satisfy the strict constraints imposed by the medical domain, consistency assessment of explanations across multiple models and/or XAI methods, and finally formal quality evaluations including novel, objective, and quantitative metrics as well as user-centered studies. Therefore, the contribution of this survey can be summarised as follows:

- an overview of the most prominent XAI methods applicable to tabular and time series data (Section 2);
- a literature survey (methodology is reported in Section 3) related to the usage of XAI in healthcare applications targeting these data types and explanations’ assessment (Section 4);
- a discussion section to highlight the main limitations of the presented methods, and open research challenges to improve explainability from both a methodological and user-based perspective (Section 5).

2 Background

Several technical features come into play when analysing the emerging landscape of XAI, which makes the taxonomy of existing methods not unique. Prior surveys addressing XAI from a more general and application-independent perspective classify methods based on different aspects, which can be summarised as follows:

- Scope: local or global. Local methods aim to explain predictions only for single data instances, whereas global methods enable understanding the reasoning of a learning algorithm as a whole.
- Stage of applicability: explainability may be applied throughout the main stages of the AI development pipeline, namely pre-modelling, model-development, and post-modelling. However, this classification generally distinguishes between ante-hoc and post-hoc methods. In the first case, explainability is embedded in the structure of the model and is available directly at the end of the learning phase, whereas in the second case explanatory techniques are used to unveil the “black-box” of complex models after their training.
- Target model: model-agnostic methods can be theoretically applied to any kind of AI model, whereas model-specific ones are tailored to certain model classes, such as Convolutional Neural Networks (CNN).
- Explanation form: attribution methods generate importance scores for each input, also providing input ranking. Similarly, heatmaps, such as saliency and attention maps, compute and visualise adaptive weights related to the relevance of each data point. Decision rules (i.e., IF-THEN rules), as well as decision trees, represent other common explanation formats. Finally, dependency plots show the expected target response as a function of the input features of interest, thus potentially revealing both relationships between target and inputs (e.g., linear, non-linear, monotonic) and interactions among input variables.
- Algorithmic nuances: the underlying algorithm used to extract explanations. Perturbation-based methods manipulate parts of the input by replacing, removing, or masking them in order to generate attributions for individual features, data points, or signal regions. Gradient-based methods are tailored to Deep Neural Networks (DNN), as they obtain attributions by using gradients (i.e., partial derivatives) to compute the impact of each input on model outcomes via one or more forward/backward passes through the network. On the other hand, instance-based methods extract a subset of relevant features that is needed to retain/change a given prediction without applying any perturbation to the original data.

In addition, the taxonomy of XAI methods also depends on the data type that is fed as input to the model to be explained, which can be images, text, graph, tabular, or time series data. As already outlined in Section 1, most existing techniques were originally conceived for image or text data, and therefore they may not be suitable or readily applicable to tabular and time series data. For this reason, in the next subsections we provide the reader with a summary of current XAI methods that are best suited for these data types.

2.1 XAI for tabular data

From the literature analysis, it can be seen that the majority of existing XAI techniques applicable to tabular data are model-agnostic. Feature ablation and permutation methods are straightforward options to estimate feature importance for any black-box estimator, by measuring how the prediction error changes when removing a given feature or randomly shuffling its values, respectively. Mean Decrease in Accuracy (MDA) is a popular choice in permutation studies, but other scoring metrics can be used as well. Tree-based models also provide an alternative measure of feature importance based on the Mean Decrease in Impurity (MDI), in which impurity is quantified by the splitting criterion of the decision tree (normally, the Gini index). MDI computes the importance of a feature as the total decrease in node impurity (i.e., in the heterogeneity of labels within the node) over all splits on that feature across all trees, weighted by the proportion of samples reaching each node.
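The permutation approach described above can be illustrated with a minimal sketch. It is not taken from any reviewed work: the function names, the toy `black_box` model, and the use of mean squared error as the scoring metric are assumptions made for illustration only.

```python
import random

def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def permutation_importance(predict, X, y, n_repeats=20, seed=0):
    """Average increase in error when one feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mean_squared_error(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            score = mean_squared_error(y, [predict(row) for row in X_perm])
            increases.append(score - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances

# Hypothetical black box: strong use of feature 0, weak use of feature 1,
# no use of feature 2.
def black_box(row):
    return 3.0 * row[0] + 0.5 * row[1]

data_rng = random.Random(42)
X = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
y = [black_box(row) for row in X]
importances = permutation_importance(black_box, X, y)
```

In this toy setting the unused feature receives an importance of zero, while the ranking of the other two reflects how strongly the model relies on them.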

Shapley Additive Explanations (SHAP) (Lundberg and Lee 2017) is probably the state-of-the-art method for XAI, and it is built on the concept of Shapley values coming from coalitional game theory. This concept has been transferred to the Machine Learning (ML) domain by considering a prediction task as a game, features as players, and coalitions as all possible feature subsets, thus making it very suitable for tabular data. SHAP computes feature importance scores as the average marginal contribution that each feature brings to an individual prediction, where “marginal” stands for the difference between the actual predicted value and a base value used as a reference. According to Lundberg and Lee (2017), this value is defined as “the value that would be predicted if we did not know any feature for the current output”; in other words, it represents the average prediction over the training/test set. On the other hand, the term “average” implies computing the mean value across all permutations, i.e., all the possible subsets that include a specific feature. Applying SHAP provides several advantages. First, local explanations can also be aggregated to get global explanations. In addition, due to the axiomatic assumptions included in SHAP theoretical foundations, global explanations are more reliable than those obtained by most feature attribution methods. Finally, SHAP offers different algorithmic implementations to explain any kind of model.
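To make the game-theoretic formulation concrete, the following sketch computes exact Shapley values by enumerating all coalitions, which is only feasible for a handful of features. It does not use the SHAP library. The toy `model`, the `background` set, and the choice of replacing absent features with background values are assumptions for illustration.

```python
from itertools import combinations
from math import factorial

def shapley_values(value, n_features):
    """Exact Shapley values for a coalition value function `value`."""
    phi = [0.0] * n_features
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(len(others) + 1):
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                s = frozenset(subset)
                # marginal contribution of feature i to coalition s
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

# Hypothetical model with one additive term and one interaction term.
def model(x):
    return 2.0 * x[0] + x[1] * x[2]

background = [[0.0, 0.0, 0.0], [1.0, 2.0, 0.0], [2.0, 0.0, 2.0], [1.0, 2.0, 2.0]]
instance = [3.0, 1.0, 4.0]

def coalition_value(subset):
    # Assumption: features outside the coalition are replaced by background
    # values and the prediction is averaged over the background set.
    total = 0.0
    for row in background:
        mixed = [instance[j] if j in subset else row[j] for j in range(3)]
        total += model(mixed)
    return total / len(background)

phi = shapley_values(coalition_value, 3)
base_value = coalition_value(frozenset())
prediction = model(instance)
```

The attributions in `phi` sum to the difference between `prediction` and `base_value`, which is the additivity property that SHAP explanations rely on.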

The Local Interpretable Model-agnostic Explanations (LIME) (Ribeiro et al. 2016) technique is another popular model-agnostic method to obtain local interpretability. Although a model may be very complex globally, LIME produces an explanation by approximating it with an interpretable surrogate model (generally, a sparse linear model) only in the neighborhood of the instance to be explained. This is achieved by first creating a new dataset consisting of data points randomly drawn in the proximity of the instance of interest, along with the corresponding predictions of the original model. Then, a linear model is trained on the perturbed dataset, in which each sample is also weighted by its proximity to the target instance through an appropriate weighting kernel. Finally, a method very similar to Least Absolute Shrinkage and Selection Operator (LASSO) regularisation is applied to keep only the most important features. As a result, regression coefficients are used as feature importance scores.
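The local surrogate idea can be sketched as follows. This is a simplified illustration and not the LIME library: it samples Gaussian perturbations, weights them with an exponential kernel, and fits a weighted linear regression, while the LASSO-like feature selection step is omitted. All function names, the kernel, and the toy `black_box` are assumptions.

```python
import math
import random

def solve_linear_system(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b_i] for row, b_i in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def local_surrogate(predict, instance, n_samples=500, scale=0.5,
                    kernel_width=0.75, seed=0):
    """Fit a proximity-weighted linear model around `instance`."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        z = [v + rng.gauss(0.0, scale) for v in instance]  # perturbed sample
        dist2 = sum((a - b) ** 2 for a, b in zip(z, instance))
        rows.append([1.0] + z)                             # leading 1.0 = intercept
        targets.append(predict(z))
        weights.append(math.exp(-dist2 / kernel_width ** 2))
    p = len(instance) + 1
    # Weighted normal equations: (X^T W X) beta = X^T W y
    A = [[sum(w * r[i] * r[j] for w, r in zip(weights, rows)) for j in range(p)]
         for i in range(p)]
    b = [sum(w * r[i] * t for w, r, t in zip(weights, rows, targets))
         for i in range(p)]
    return solve_linear_system(A, b)[1:]                   # drop the intercept

# Hypothetical non-linear black box.
def black_box(x):
    return math.sin(x[0]) + x[1] ** 2

coefficients = local_surrogate(black_box, [0.0, 1.0])
```

Around the point (0, 1) the fitted coefficients should be close to the local slopes of the black box (roughly 1 and 2), although the exact values depend on the sampling scale and kernel width, which is one source of the instability discussed for LIME.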

LIME works better for local interpretability, but global explanations may also be derived. A first option is to simply average importance scores across data instances, but this approach may suffer from a high variance due to multiple local approximations. On the other hand, LIME SubModular Pick (LIME-SP) optimisation algorithm allows the selection of a representative, non-redundant set of explanations as exemplary cases of how the model behaves for each class. However, this method just provides some global understanding, and not a comprehensive picture of the overall model reasoning.

Although the key intuition of using local surrogate models cuts down LIME computational complexity (and time), it reduces outcome stability as well. The choice of a simple sparse linear model implies that if the underlying model is highly non-linear even in the locality of the prediction, the explanations may not be faithful. In addition, explanations originate from random perturbations of the original input space, which may not be representative of the instance to explain. Therefore, several techniques have been proposed to improve LIME stability. ALIME (Shankaranarayana and Runje 2019) exploits an auto-encoder as weighting function, whereas DLIME (Zafar and Khan 2019) replaces random perturbations with hierarchical clustering to group training data, and then selects the cluster closest to the target instance. In addition, an alternative weighting approach has been proposed in ILIME (ElShawi et al. 2019), in which each perturbed instance is weighted based on its influence on the target instance to be explained, and on its distance from it.

Anchors algorithm (Ribeiro et al. 2018) represents an evolution of LIME that exploits reinforcement learning and graph search to detect a region in the neighbourhood, defined by a range of values for some features, representing a sufficient and high-precision condition (i.e., an “anchor”) to guarantee local prediction, such that any changes to other features do not essentially alter model outcomes. These range values are then converted into IF-THEN rules, which can be used to explain not only the target instance but also every other instance meeting the anchor.

All the above-mentioned methods are aimed at explaining how model outcomes are generated. However, there also exist other techniques, falling under the umbrella of counterfactual explanations, that aim at detecting the minimal feature changes that are necessary to drive a prediction towards a desired different output. Counterfactual explanations are generally formulated as an optimisation problem, so the main difference between existing techniques lies in the optimisation method and/or in the loss function to be minimised. A first method, called unconditional counterfactual explanations, has been proposed in Wachter et al. (2017) for differentiable models, such as neural networks, in which the gradients of the loss function can be computed. The loss function to be minimised in this case is the distance between the counterfactual and the original data point, subject to the constraint that the model classifies the counterfactual with the desired (and different) label. Guidotti et al. (2018) proposed Local Rule-Based Explanations of black-box decision systems (LORE), a model-agnostic method to extend counterfactual explanations beyond differentiable models. This approach exploits a genetic algorithm to create a synthetic neighborhood for a target instance, then it retrieves both a decision rule (similar to an anchor) and a set of counterfactual rules to identify changes leading to different predictions. More recently, Looveren and Klaise (2021) proposed a technique to obtain counterfactual explanations for differentiable classifiers based on prototypes, in which each class-specific prototype is computed as the average encoding over the K nearest instances with the same class label in the latent space generated by a CNN encoder. Once found, prototypes are embedded into the model objective function to guide the perturbations towards an interpretable counterfactual.
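As an illustration of the optimisation view, the sketch below searches for a counterfactual of a toy logistic model by gradient descent on a penalised loss, lam * (f(x') - target)^2 + ||x' - x||^2. This is a simplified reading of the approach for differentiable models, not a reference implementation: the weights, the fixed penalty `lam`, the target probability, and the squared Euclidean distance are all assumptions. In practice the penalty weight is usually tuned until the desired label is reached.

```python
import math

# Hypothetical differentiable classifier: logistic regression with fixed weights.
WEIGHTS = [1.5, -2.0]
BIAS = -0.5

def predict_proba(x):
    z = sum(w * v for w, v in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))

def counterfactual(x, target=0.6, lam=100.0, lr=0.005, steps=5000):
    """Minimise lam * (f(cf) - target)^2 + ||cf - x||^2 by gradient descent."""
    cf = list(x)
    for _ in range(steps):
        p = predict_proba(cf)
        # Chain rule through the sigmoid: d/dz of lam * (p - target)^2
        common = 2.0 * lam * (p - target) * p * (1.0 - p)
        cf = [c - lr * (common * w + 2.0 * (c - o))
              for c, w, o in zip(cf, WEIGHTS, x)]
    return cf

original = [0.0, 1.0]
cf = counterfactual(original)
distance_sq = sum((c - o) ** 2 for c, o in zip(cf, original))
```

Here `original` is predicted as the negative class, while `cf` crosses the 0.5 decision threshold with a small change along the direction of the model weights.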

Sensitivity analysis is another category of XAI methods aimed at computing feature relevance, which works by measuring how sensitive model predictions are to changes in one or more input parameters. In addition, it may also be used for model inspection, to detect how altering some internal components/properties affects the model outcomes. Traditional sensitivity analysis methods estimate the importance of each input variable as its contribution to the output model variance. Morris’ method (Morris 1991) is one of the most popular approaches for sensitivity analysis. It works by discretising the range of each input variable into levels and iteratively changing one variable at a time within its range, in order to cluster inputs into three categories: (1) features with no effect, (2) features with linear effect and no interactions, (3) features with non-linear effects and/or interaction effects. Although this method is very complete, it is also computationally very costly, in particular as the number of predictors increases. Therefore, other lightweight solutions have been proposed, such as those based on Analysis of Variance (ANOVA) decomposition (Saltelli et al. 2010). Moreover, adversarial examples represent a more recent and innovative approach to sensitivity analysis, which exploits the vulnerability of AI models to adversarial attacks as a proxy of input relevance. Specifically, they apply intentional changes to input variables in order to generate new samples that can mislead model predictions, then quantify variable relevance depending on how well the changed inputs are able to fool the model. However, adversarial example-based sensitivity analysis methods are currently used for computer vision and NLP tasks, while their effectiveness for other data types, such as tabular data, still needs to be investigated in depth.
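The one-at-a-time screening idea can be sketched with elementary effects. The code below is a simplified variant written for illustration, assuming inputs scaled to [0, 1]; the function names and the toy model are hypothetical. A low mean absolute effect suggests no influence, a high mean with near-zero spread suggests a linear effect, and a large spread suggests non-linearity or interactions.

```python
import random
import statistics

def elementary_effects(model, n_inputs, n_trajectories=50, levels=4, seed=0):
    """One-at-a-time screening: mean |effect| and spread of effects per input."""
    rng = random.Random(seed)
    delta = levels / (2.0 * (levels - 1))       # usual step for an even number of levels
    # Starting values from which a step of size delta stays inside [0, 1].
    grid = [i / (levels - 1) for i in range(levels)
            if i / (levels - 1) + delta <= 1.0 + 1e-12]
    effects = [[] for _ in range(n_inputs)]
    for _ in range(n_trajectories):
        x = [rng.choice(grid) for _ in range(n_inputs)]
        order = list(range(n_inputs))
        rng.shuffle(order)                      # random order of one-at-a-time moves
        y = model(x)
        for i in order:
            x_next = list(x)
            x_next[i] += delta
            y_next = model(x_next)
            effects[i].append((y_next - y) / delta)
            x, y = x_next, y_next
    mu_star = [statistics.mean(abs(e) for e in effs) for effs in effects]
    sigma = [statistics.stdev(effs) for effs in effects]
    return mu_star, sigma

# Hypothetical model: input 0 is unused, input 1 is linear, input 2 is non-linear.
def toy_model(x):
    return 2.0 * x[1] + 5.0 * x[2] ** 2

mu_star, sigma = elementary_effects(toy_model, 3)
```

For this toy model the three inputs fall into the three categories listed above, in that order.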

Visual explanation techniques are also available to highlight the relationship among target and input variables. Partial Dependence Plots (PDP) (Friedman 2001) show the average marginal effect of one or two features on model outcomes, assuming that the features are uncorrelated (which may not always be true). Their equivalent for local predictions, called Individual Conditional Expectation (ICE) plots, has been proposed by Goldstein et al. (2015) to visualise the dependence of the prediction on a feature for each instance separately. Finally, Accumulated Local Effects (ALE) (Apley and Zhu 2020) plots represent an unbiased alternative to PDP, as they account for feature correlation when showing feature influence on model outcomes.
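The relation between PDP and ICE can be shown with a small sketch in which the numeric curves are computed without plotting. The helper names and the toy interaction model are assumptions for illustration.

```python
def ice_curves(predict, X, feature, grid):
    """One curve per instance: prediction as `feature` sweeps over `grid`."""
    return [[predict(row[:feature] + [g] + row[feature + 1:]) for g in grid]
            for row in X]

def partial_dependence(predict, X, feature, grid):
    """PDP = pointwise average of the ICE curves."""
    curves = ice_curves(predict, X, feature, grid)
    return [sum(curve[k] for curve in curves) / len(curves)
            for k in range(len(grid))]

# Hypothetical model with a pure interaction between the two features.
def interaction_model(row):
    return row[0] * row[1]

X = [[0.0, -1.0], [0.0, 1.0], [0.0, -1.0], [0.0, 1.0]]
grid = [0.0, 1.0, 2.0]
ice = ice_curves(interaction_model, X, 0, grid)
pdp = partial_dependence(interaction_model, X, 0, grid)
```

In this example the averaged curve is flat, while the per-instance curves go in opposite directions, which is the kind of heterogeneous effect that ICE plots are meant to reveal.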

To conclude this overview of XAI techniques suitable for tabular data, it is worth briefly mentioning some methods available to globally explain complex models by approximating them with simpler ones, such as decision rules/trees. InTrees (Deng 2019) has been proposed as a framework to extract a compact set of decision rules from tree ensembles, by selecting and pruning rules according to a trade-off among their frequency within tree nodes, their error rate, and their length. Additional methods have also been developed for approximating DNN (Wu 2018) and Support Vector Machine (SVM) models (Barakat and Bradley 2007).

2.2 XAI for time series data

To date, Recurrent Neural Networks (RNN) generally represent the best strategy to deal with time series data, thanks to their memory state and their ability to learn relations through time. CNN with temporal convolutional layers are able to build temporal relationships as well, also extracting high level features from raw data. The introduction of these models to solve MTS classification and forecasting tasks significantly boosted predictive performance without requiring heavy data pre-processing. As a result, the majority of existing XAI methods applicable to this data are specific for these models.

As far as CNN are concerned, almost all methods are inherited from the computer vision field to obtain post-hoc explainability. According to the underlying algorithmic concepts, they can be divided into gradient-based and perturbation-based methods. Gradient-based methods measure how much a change in a local neighborhood of the input corresponds to a change in the model output, by running a forward and a backward pass through the network (i.e., backpropagating the output to the input). They were originally conceived as pixel attribution methods, also referred to as saliency maps, in order to highlight the pixels/super-pixels that are relevant for a certain image classification. However, they may also be adapted to time series data in order to highlight the most relevant data points within a 1-D sequence.

A first gradient-based explanation method has been proposed in Simonyan et al. (2013) to create saliency maps corresponding to the gradient of an output neuron with respect to changes in a small neighborhood around the input, thus highlighting image regions that are relevant for a target class. Afterwards, Sundararajan et al. (2017) proposed Integrated Gradients, which essentially represent a variation of the gradient computing technique implemented in the previous method, by directly attributing the network predictions to its input features.
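A minimal sketch of Integrated Gradients on a 1-D input is given below, assuming a toy differentiable model with an analytic gradient and an all-zero baseline (both are illustrative assumptions, as are the function names). The path integral is approximated with a midpoint Riemann sum.

```python
import math

# Hypothetical differentiable model: tanh of a weighted sum of the inputs.
WEIGHTS = [0.8, -1.2, 0.5]

def model(x):
    return math.tanh(sum(w * v for w, v in zip(WEIGHTS, x)))

def gradient(x):
    z = sum(w * v for w, v in zip(WEIGHTS, x))
    return [w * (1.0 - math.tanh(z) ** 2) for w in WEIGHTS]

def integrated_gradients(x, baseline, steps=200):
    """Average the gradient along the straight path from baseline to x."""
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps                     # midpoint rule
        point = [b + alpha * (v - b) for v, b in zip(x, baseline)]
        total = [t + g for t, g in zip(total, gradient(point))]
    return [(v - b) * t / steps for v, b, t in zip(x, baseline, total)]

signal = [1.0, 0.5, 2.0]
baseline = [0.0, 0.0, 0.0]
attributions = integrated_gradients(signal, baseline)
```

The attributions sum (up to the integration error) to the difference between the model output at `signal` and at `baseline`, which is the completeness property of the method.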

Deep Learning Important FeaTures (DeepLIFT) (Shrikumar et al. 2017) is one of the most popular algorithms for post-hoc explanation of deep networks. It also works by attributing importance scores to input features, in which each score represents the impact on network outcomes of changing the original feature value to a reference baseline, which can be empirically chosen by end users. This approach is also referred to as a “Gradient*Input” method, since it also multiplies the gradient by the input signal. This operation essentially represents a first-order Taylor approximation of the output changes when inputs are set to zero, and it has proven to enhance the visualisation of saliency maps with respect to previous gradient-based methods. Moreover, DeepLIFT has later been combined with Shapley values to build DeepSHAP, a specific framework to approximate SHAP feature attributions for any Deep Learning (DL) model. This method differs from the original DeepLIFT by using a distribution of background samples instead of a single reference value to change each selected feature, and by using Shapley equations to linearise network components such as softmax operators.

Deconvolution (Zeiler and Fergus 2014) is a technique to visualise CNN-based saliency maps by using DeconvNets (Zeiler et al. 2011), which leverage the same CNN layers and operators in exactly reversed order to map encoded features back to the input (i.e., pixels), as opposed to the standard CNN data processing pipeline. Moreover, the guided backpropagation technique (Springenberg et al. 2014), also known as guided saliency, has been proposed as a variant of the deconvolution approach to extend its applicability to all possible CNN architectures to visualise saliency of the learned features.

Class Activation Mapping (CAM) (Zhou et al. 2016) is another CNN-based XAI method, originally developed to detect class-specific image regions used by the network to make predictions. CAM computes a vector by concatenating the average activations of the convolutional feature maps that are placed immediately before the last prediction layer, then it feeds a weighted sum of this vector to the final layer. In this way, the relevance of class-specific image regions, and of features learned in the latent space in general, can be retrieved by projecting back the weights of the output layer onto the convolutional feature maps. However, CAM implementation requires the CNN to have a specific architecture in its final layers, thus limiting its applicability. In addition, it is suitable only to highlight high-level representations learned at the last stages, whereas it cannot provide any explanation of low-level representations that are learned at earlier stages. To overcome these limitations, a more general CAM implementation, Gradient-weighted CAM (Grad-CAM) (Selvaraju et al. 2017), has been developed to extend its applicability to any CNN. It relies on gradient information flowing into the last convolutional layer to locate the most important image regions within a saliency map in an architecture-independent fashion.
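The projection step of CAM can be sketched for a 1-D signal as follows. The feature maps and class weights are made-up numbers standing in for the output of the last convolutional layer and the weights of the final layer, and the bias term is ignored.

```python
def global_average_pool(feature_maps):
    """Average activation of each feature map over time."""
    return [sum(fm) / len(fm) for fm in feature_maps]

def class_activation_map(feature_maps, class_weights):
    """Weighted sum of feature maps using the output-layer weights of one class."""
    length = len(feature_maps[0])
    return [sum(w * fm[t] for w, fm in zip(class_weights, feature_maps))
            for t in range(length)]

# Hypothetical activations of two 1-D feature maps over six time steps.
feature_maps = [
    [0.0, 0.0, 1.0, 3.0, 1.0, 0.0],
    [2.0, 2.0, 0.0, 0.0, 0.0, 2.0],
]
class_weights = [1.5, -0.5]   # assumed weights linking pooled maps to one class

cam = class_activation_map(feature_maps, class_weights)
class_score = sum(w * a for w, a in
                  zip(class_weights, global_average_pool(feature_maps)))
```

The time step with the largest CAM value is the one that contributes most to the class score, and averaging the map over time recovers the score itself.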

Finally, Layer-wise Relevance Propagation (LRP) (Bach et al. 2015) is an interpretability method to decompose DNN predictions by propagating them backward without altering the output magnitude. Starting from the neurons in the final prediction layer, and moving back to the neurons of the input layer, the prediction value is backpropagated in such a way that each neuron redistributes to the preceding layer the same amount of information received from the higher layer.
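The conservation principle can be illustrated on a tiny bias-free network. The sketch below uses a basic proportional redistribution rule with a small stabiliser, which is one of several possible propagation rules; the weights and input are arbitrary illustrative values.

```python
def lrp_linear(inputs, weights, relevance_out, eps=1e-9):
    """Redistribute relevance through a bias-free linear layer.

    weights[j][i] connects input i to output j; each input receives a share
    proportional to its contribution to the output pre-activation.
    """
    relevance_in = [0.0] * len(inputs)
    for j, r_j in enumerate(relevance_out):
        z = [weights[j][i] * inputs[i] for i in range(len(inputs))]
        total = sum(z)
        denom = total + (eps if total >= 0 else -eps)   # stabiliser against division by zero
        for i in range(len(inputs)):
            relevance_in[i] += z[i] / denom * r_j
    return relevance_in

# Hypothetical network: 3 inputs -> 2 ReLU hidden units -> 1 linear output.
W1 = [[1.0, -0.5, 0.0], [0.5, 0.5, -1.0]]
W2 = [[2.0, -1.0]]
x = [2.0, 1.0, 0.5]

hidden = [max(0.0, sum(w * v for w, v in zip(row, x))) for row in W1]
output = sum(w * h for w, h in zip(W2[0], hidden))

relevance_hidden = lrp_linear(hidden, W2, [output])
relevance_input = lrp_linear(x, W1, relevance_hidden)
```

The input relevances sum to the network output (up to the stabiliser), so the prediction is fully decomposed over the input features.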

Unlike gradient-based methods, perturbation-based methods compute the contribution of individual parts of the input by removing or randomly replacing them, then they exploit a distance metric to measure the difference in the model decision function. As a result, a higher difference in the prediction outcome indicates a higher contribution of the input component that has been altered. In this context, the Occlusion method by Zeiler and Fergus (2014) is one of the most used techniques coming from computer vision applications. It acts as a sensitivity analysis method by systematically replacing different contiguous parts of an input with a given baseline, then monitoring the decrease in the prediction function. The implementation of this method is not computationally expensive and can be applied to any network architecture as it does not require specific internal components.
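Adapted to a univariate time series, the occlusion idea can be sketched as a sliding window that is replaced by a baseline value. The window size, the zero baseline, and the toy `peak_detector` score are assumptions for illustration.

```python
def occlusion_importance(predict, series, window=3, baseline=0.0):
    """Drop in the model score when each contiguous window is replaced by a baseline."""
    reference = predict(series)
    scores = []
    for start in range(len(series) - window + 1):
        occluded = series[:start] + [baseline] * window + series[start + window:]
        scores.append(reference - predict(occluded))
    return scores

# Hypothetical model whose score is driven by the highest peak in the signal.
def peak_detector(series):
    return max(series)

series = [0.1, 0.2, 0.1, 0.9, 0.2, 0.1, 0.3, 0.1]
scores = occlusion_importance(peak_detector, series)
```

Only the windows that cover the peak at index 3 produce a drop in the score, so they are flagged as the relevant part of the signal.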

As far as RNN are concerned, the Attention mechanism (Bahdanau et al. 2014), sometimes also referred to as Self-Attention, is currently the state-of-the-art method for explainability. Attention originates from the NLP domain, where it was initially proposed as a solution to overcome the bottleneck of the original Encoder-Decoder RNN model employed for machine translation. That model encodes the input sequence into a single fixed-length vector, from which the output is decoded at each time step. The main criticism of this approach is related to the difficulties for DL models to cope with very long sentences, especially those that are longer than the ones contained in the training corpus. In contrast, the attention model does not encode input sequences as a whole, but rather develops context vectors that are filtered specifically for each output time step. Then, it searches for a set of positions within a source sentence where the most relevant information is concentrated according to the context vectors associated with these positions and all the previously generated target words, in order to predict the next word. Besides providing a gain in predictive performance, attention also represents a powerful explanatory technique, as it highlights which words in a text corpus are the most relevant for a certain prediction.
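The way attention weights double as an explanation can be sketched as follows. For brevity the example scores each encoder state with a dot product, which is a simplification and not the additive scoring network of the original formulation; the vectors are made-up values.

```python
import math

def softmax(scores):
    m = max(scores)                              # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, encoder_states):
    """Return attention weights over time steps and the resulting context vector."""
    scores = [sum(q * h for q, h in zip(query, state)) for state in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [sum(w * state[d] for w, state in zip(weights, encoder_states))
               for d in range(dim)]
    return weights, context

# Hypothetical encoder states for three time steps and one decoder query.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [3.0, 0.0]]
query = [1.0, 0.0]
weights, context = attend(query, encoder_states)
```

The weights form a distribution over input positions, so the position with the largest weight (here the third time step) can be read as the most relevant one for the current output step.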

The attention mechanism is at the foundation of Attentive transformers (Vaswani et al. 2017), which consist of specific RNN modules that can be embedded into the learning process of neural networks to obtain ante-hoc explainability. The inner functioning of a transformer relies on a sparse-max function to obtain a mask, which is subsequently scaled and multiplicatively applied to the input, in order to learn adaptive weights that reflect the impact of each input on the final prediction. Transformers can be used to enable interpretability at different levels, such as input features and time points. For instance, they can detect globally important variables, persistent temporal patterns, as well as significant events within a data trajectory leading to a target outcome. Transformers have found their major applications in NLP, as demonstrated by very popular language models such as BERT (Devlin et al. 2018) and GPT-3 (Radford et al. 2018). However, with the advent of vision transformers for image-based applications (Mondal et al. 2021), they have also been embedded into CNN architectures. As a result, attention is the primary XAI method to explain RNN, while attention-enhanced CNN may also be found in some cases.

Finally, there are also additional data mining methods applicable to time series data, although they are less used in practice. Fuzzy inference systems are a viable solution to simulate logical thinking, whereas Symbolic Aggregate ApproXimation (SAX) (Lin et al. 2003) works by converting time series into strings. Specifically, it first divides each time series into equal-length segments. Then, by assuming a Gaussian distribution of the input data, it assigns a symbol to each segment by mapping the average segment value to the corresponding probability region, in order to discover recurrent patterns. Prototype Learning (PL) is also a compatible approach for time series data, which generates samples to be used as a reference to explain the typical pattern of all data instances belonging to the same class. In this context, Shapelets (Ye and Keogh 2009) is a time series-specific, prototype-based method to explain AI models by extracting input sub-sequences that are representative of each class. This method also provides more interpretable, accurate, and faster results with respect to the standard PL approach, which selects class prototypes from the samples that are nearest to a given data point in the latent embedding space.
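The SAX steps described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the series is z-normalised first, its length is divisible by the number of segments, and a four-letter alphabet is used. The function name and the example series are hypothetical.

```python
from statistics import NormalDist, mean, pstdev

def sax(series, n_segments, alphabet="abcd"):
    """Convert a numeric series into a SAX word of length n_segments."""
    # 1) z-normalise so that the Gaussian assumption is reasonable
    mu, sigma = mean(series), pstdev(series)
    z = [(x - mu) / sigma if sigma > 0 else 0.0 for x in series]
    # 2) Average each equal-length segment
    #    (assumes len(series) is divisible by n_segments)
    seg_len = len(z) // n_segments
    paa = [mean(z[i * seg_len:(i + 1) * seg_len]) for i in range(n_segments)]
    # 3) Breakpoints that split N(0, 1) into equiprobable regions
    k = len(alphabet)
    breakpoints = [NormalDist().inv_cdf(j / k) for j in range(1, k)]
    # 4) Map each segment mean to the symbol of the region it falls in
    return "".join(alphabet[sum(v > b for b in breakpoints)] for v in paa)

word = sax([1, 2, 3, 4, 5, 6, 7, 8], n_segments=4)
print(word)  # abcd
```

A steadily rising series maps to an increasing word, so recurrent shapes in longer signals show up as repeated substrings that standard string-mining tools can search for.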

3 Survey methodology

We searched IEEExplore, Springer, ACM, and Elsevier digital libraries, using different search strings obtained as a combination of the following subsets of keywords:

1. “Explainable”, “Interpretable”;

2. “AI”, “Artificial Intelligence”, “Machine Learning”;

3. “Healthcare”, “Health”, “Wellbeing”.
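As an illustration of how such search strings can be enumerated, the sketch below takes one keyword from each subset. The quoting and the `AND` operator are assumptions, since the exact query syntax differs across digital libraries and is not specified here.

```python
from itertools import product

explainability = ["Explainable", "Interpretable"]
ai_terms = ["AI", "Artificial Intelligence", "Machine Learning"]
health_terms = ["Healthcare", "Health", "Wellbeing"]

# One search string per combination of keywords.
# The AND/quote syntax is an assumption of this sketch.
search_strings = [
    " AND ".join(f'"{term}"' for term in combo)
    for combo in product(explainability, ai_terms, health_terms)
]

print(len(search_strings))  # 18
print(search_strings[0])    # "Explainable" AND "AI" AND "Healthcare"
```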

In addition, we also analysed Scopus to double check the screening process conducted for the previous databases. This search also highlighted an additional cluster of relevant works coming from other sources, such as Nature Research journals, which have been further investigated.

Motivated by the very recent development and application of XAI methods, especially in the healthcare domain, we restricted the search to papers published in the last 5 years (i.e., between 2017 and 2021). Table 2 reports the percentage of selected papers for each year within the date range, and it further confirms the recent exponential increase of XAI applications in the health domain, with the majority of research studies published in the last 2 years.

In order to focus only on XAI methods suitable for tabular and time series/sequence data, we first excluded applications targeting biomedical images, such as computerised tomography scans, magnetic resonance imaging, and ultrasound images, which are typically 3-D data with additional time dimension and/or multiple channels (4-5D) represented by tensors to be fed to DNN models. Then, we also excluded NLP tasks to extract meaning from unstructured medical text records (e.g., patient prescription notes), which generally make use of high-dimensional word embeddings as input data. Finally, we did not consider genomics and molecular biology applications based on graph data structures.

In turn, tabular data include independent (i.e., “static”) EHR, as well as feature datasets derived from physiological signals. In the first case, each patient’s record is treated as an independent observation, or else multiple observations are aggregated over a specific time window, still resulting in static data. In the second case, feature datasets are a high-level and multidimensional input representation resulting from the application of signal processing pipelines, such as signal framing/windowing and handcrafted feature extraction. On the other hand, time series/sequence data may be divided into longitudinal EHR, which consist of a sequence of visits/admissions to model patient trajectories using clinical variables or medical codes (e.g., ICD-10-CM codes), and raw signal time series.

Regarding the stage at which XAI is applied while building a model, we considered as eligible both post-hoc methods, which add interpretability to already developed black-box models (e.g., tree ensembles, CNN), and ante-hoc methods, which embed interpretability in the structure of the model, thus making it available directly at the end of the learning phase. However, we did not include “glass-box” approaches in which interpretability is simply addressed through the development of intrinsically Interpretable ML (IML) models. These typically involve three model classes, namely sparse linear classifiers (e.g., linear/logistic regression, generalised additive models), discretisation methods (e.g., rule-based learners, decision trees), and instance-based models (e.g., k-Nearest Neighbors (k-NN)) (Du et al. 2019). A summary of the inclusion and exclusion criteria used for the literature survey is listed in Table 1.

As a result, we reached a total of 71 publications at the end of the search, including 46 journal articles (\(64.8\%\)) and 25 conference papers (\(35.2\%\)) reporting original studies. The number of selected papers for each digital library is shown in Table 3, whereas Table 4 illustrates the distribution of surveyed articles across the journals.

4 Results

In the previous section, we outlined the search strategy adopted in this survey, with particular reference to the input data type and the stage of application of XAI methods within the AI development pipeline. In this section, we present the reviewed works based on their main contribution in evaluating the explainability applied to the target health application. For a complete list of acronyms used in tables and throughout the text please refer to Table 9 in Section 1 of the Appendix.

A first research branch consists of exploratory studies that experiment with XAI methodologies in order to demonstrate their possible integration with complex models to enable global/local interpretability in specific health applications, while maintaining good predictive performance. The most relevant preliminary works using tabular and time series data are summarised in Table 10 and Table 11 in Section B of the Appendix, respectively. However, these studies do not perform any evaluation of the proposed explanations, leaving room for further investigation, which is a fundamental step to enhance the confidence and trust of the medical community in decision making based on predictive AI. After this analysis, we move a step forward by focusing on studies that also included an explanation assessment from one or more of the following perspectives:

1. clinical validation: alignment with existing medical knowledge/practice;

2. consistency assessment: level of agreement of explanations provided by multiple models and/or XAI methods;

3. quality assessment: it includes both quantitative evaluations based on novel metrics, and qualitative evaluations through clinician ratings and feedback.

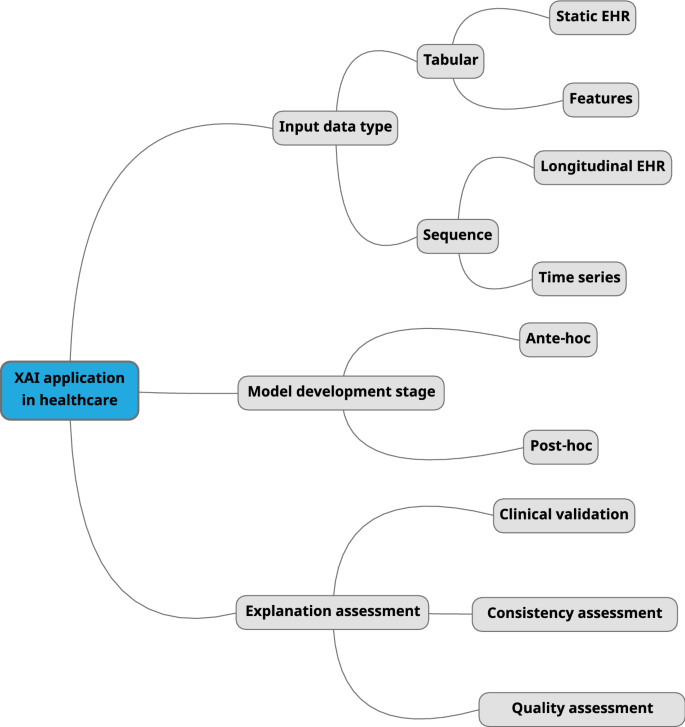

Such evaluations are complementary, so they may be conducted concurrently to strengthen the trust in a model. When more than one of the above explanation assessment procedures is performed, we present the most relevant findings. A taxonomy mind map is shown in Figure 1 to support the reader in understanding the main aspects covered in the overall process of selection, grouping, and analysis of the collected research works.

For each category, the reviewed works are also summarised in tables specifying the target application, input data type and datasets, AI models, and XAI methods. These tables also include a citation analysis derived from Google Scholar and updated to early September 2022, to highlight the impact of the research works. However, it is worth noting that this literature survey is limited to the past 5 years due to the very recent application of XAI in healthcare, and that \(>90\%\) of the selected papers have been published in the last 2 years. Therefore, the number of citations provides only a preliminary analysis of the impact of the research in this field.

4.1 Clinical validation

Evaluating explanations from a clinical standpoint is crucial to guarantee that the inner reasoning of a model follows the domain knowledge, at least with respect to the most important and well-established clinical guidelines. In other words, it demonstrates that model behaviour appears to be “human-like”, which also adds clinical credibility to the model itself. The research works presented in this section propose a preliminary clinical validation of the generated explanations, which is conducted either through domain expert surveys or through the comparison with the related medical literature. For tabular data, this generally involves investigating the global relationships between a target health condition and the input predictors evidenced by feature attribution or rule-based methods. Related research studies are summarised in Table 5. On the other hand, clinical validation of explanations generated by AI systems based on time series data is performed for two main reasons:

- for longitudinal EHR, to assess if the evolution of patient trajectories resulting in a target health condition is aligned with the general clinical knowledge;

- for physiological signals, to investigate whether the most important components highlighted by the model are clinically relevant, in order to assert that decisions are made upon meaningful features.

Research studies in this area are shown in Table 6. Finally, clinical comparison may also support knowledge discovery to learn novel relationships and patterns with emergent risk factors, which might be further investigated for a future integration in the current clinical practice.

XAI methods have been applied to a wide variety of CAD applications based on tabular data, and especially on EHR. Several solutions have been identified in this survey explaining the detection of neurological disorders. Beebe-Wang et al. (2021) proposed an explainable risk assessment for imminent (i.e., within 3 years) dementia diagnosis, by training XGBoost, Multi-Layer Perceptron (MLP), and Long Short-Term Memory (LSTM) models with multi-year, extensive cognitive testing data coming from the Religious Orders Study and Rush Memory and Aging Project (ROSMAP) (Bennett et al. 2012).

Then, they applied SHAP to the best XGBoost classifier for both feature selection and model explanation. Results indicate that the most relevant features come from cognitive tests collected in the most recent year, which is further confirmed by the absence of improvements in model performance when considering older visits or cumulative data to make predictions. This finding suggests that longitudinal testing may not be necessary for future dementia diagnosis, which is consistent with other studies reporting the modest value of gradual cognitive changes in predicting future dementia onset. In addition, SHAP feature ranking shows that the final model focuses on a few cognitive tests that can be collected in a single visit in less than 20 minutes. Specifically, combining episodic memory tests with executive functioning or language tests led to a predictive accuracy comparable with that of a full cognitive test battery (98 minutes), in line with current neuropsychology.
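SHAP attributions are grounded in Shapley values, which can be computed exactly when the number of features is small. The sketch below is only meant to illustrate that quantity: it does not use the SHAP library, and the toy scoring function, feature values, and all-zero baseline are hypothetical and unrelated to the ROSMAP data.

```python
from itertools import combinations
from math import factorial

def shapley_values(predict, x, baseline):
    """Exact Shapley values of predict at x, relative to a baseline input."""
    n = len(x)

    def value(subset):
        # Features outside the coalition are set to their baseline value.
        mixed = [x[i] if i in subset else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight of a coalition with `size` members
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                s = set(coalition)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi

# Hypothetical risk score over three test results, with one interaction term.
def toy_risk(f):
    return 0.5 * f[0] + 0.3 * f[1] + 0.2 * f[0] * f[2]

x, baseline = [2.0, 1.0, 3.0], [0.0, 0.0, 0.0]
phi = shapley_values(toy_risk, x, baseline)
```

The attributions add up to the difference between the prediction at `x` and at the baseline, which is the property that allows SHAP values to be ranked and compared across features. The exact computation scales exponentially with the number of features, which is why practical tools rely on approximations or model-specific algorithms.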

Sha et al. (2021) proposed a novel computational framework, Systems Metabolomics using Interpretable Learning and Evolution (SMILE), for supervised metabolomics data analysis aimed at Alzheimer's disease (AD) diagnosis. SMILE exploits Linear Genetic Programming (LGP) as an evolutionary algorithm to generate a compact predictive model, and it uses metabolite concentrations as input features stored in separate registers in an LGP program. The algorithm has been implemented using a metabolomic dataset reported in Wang et al. (2014), which includes plasma concentration of 242 metabolites from 57 AD subjects, 58 Mild Cognitive Impairment (MCI) subjects, and 57 healthy controls. For explainability purposes, features have been ranked according to their occurrence in the best evolved models, thus providing a way to assess their importance in predicting the disease. In addition, the co-occurrence frequency between each pair of features has been considered as a correlation measure. SMILE analysis highlighted many key metabolites that have been previously linked to AD, but also others less clinically investigated that can be potentially correlated with AD onset. In addition, SMILE performance degraded when distinguishing AD from MCI subjects, suggesting possible similarities in biomarkers between the two conditions.
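The occurrence-based ranking and the pairwise co-occurrence measure can be sketched as simple counting over the feature sets used by the best models. This is an illustrative reading of the description above, not the SMILE implementation, and the feature names are placeholders.

```python
from collections import Counter
from itertools import combinations

def rank_features(models):
    """Rank features by how many of the given models use them."""
    occurrence = Counter()
    co_occurrence = Counter()
    for features in models:
        unique = sorted(set(features))
        occurrence.update(unique)
        # Count every unordered pair of features used by the same model.
        co_occurrence.update(combinations(unique, 2))
    return occurrence.most_common(), co_occurrence

# Hypothetical feature sets used by three evolved models.
best_models = [["m1", "m2", "m3"], ["m1", "m3"], ["m1", "m4"]]
ranking, pairs = rank_features(best_models)

print(ranking[0])           # ('m1', 3)
print(pairs[("m1", "m3")])  # 2
```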

Kim et al. (2021) proposed an interpretable model to predict Early Neurological Deterioration (END) in stroke patients from heterogeneous EHR data. They trained several models by using data from 2363 subjects included in the Korean Atrial Fibrillation Evaluation Registry in Ischemic Stroke Patients (K-ATTENTION) (Jung et al. 2019), a real-world dataset composed of multi-center prospective registries that mainly focus on characteristics, oral anticoagulant use, and clinical outcomes of stroke patients. Then, SHAP has been applied to the best performing Light Gradient Boosting Machine (LightGBM) model to identify the most relevant risk factors for END. Specifically, results obtained from the analysis of SHAP dependence plots reveal clear cut-off values associated with positive and negative probabilities of END occurrence for the 4 main representative features, whose clinical implications may be applicable to real-world clinical practice. For instance, they suggest that patients with severe stroke tend to develop END, thus requiring special attention, while patients with mild to moderate stroke have a lower probability of developing END. In addition, the cut-off value of fasting glucose concentration for predicting END is very similar to the current diagnostic criterion used for diabetes diagnosis (126 mg/dL).

XAI methods can also be used to assess the impact of several risk factors on the development of chronic health disorders. Rashed-Al-Mahfuz et al. (2021) proposed a ML framework to detect Chronic Kidney Disease (CKD) from lab test data. To this aim, they evaluated different tree ensemble algorithms on the UCI CKD dataset (Rubini and Eswaran 2015), which consists of clinical tests collected from 400 patients with a total of 24 variables. SHAP values have been computed for each model, then 13 features with both the highest ranking and overlap across the models have been chosen as an optimised subset to train a smaller RF model. Moreover, these predictors have been further categorised according to their source of acquisition (e.g., blood test, urine test, others) to develop additional RF models based on all possible combinations. The optimised and pathologically-selected subsets obtained by analysing SHAP explanations reach similar performance to the full input set, demonstrating that an accurate and early CKD diagnosis may be achieved by using few, low-cost, and clinically-relevant screening tools.
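One possible way to select features that are both highly ranked and shared across models is sketched below. The selection rule (intersection of the per-model top-k lists, ordered by summed rank) is an assumption made for illustration and not necessarily the procedure followed by the authors. The importance values and feature names are hypothetical.

```python
def shared_top_features(importances_per_model, top_k):
    """Features in the top_k of every model, ordered by summed rank."""
    rankings = []
    for importances in importances_per_model:
        ordered = sorted(importances, key=importances.get, reverse=True)
        rankings.append(ordered[:top_k])
    shared = set(rankings[0]).intersection(*rankings[1:])
    # Lower summed rank first; ties are broken alphabetically.
    return sorted(shared, key=lambda f: (sum(r.index(f) for r in rankings), f))

# Hypothetical mean absolute SHAP values from two tree ensembles.
model_a = {"hemoglobin": 0.9, "albumin": 0.6, "glucose": 0.2, "age": 0.1}
model_b = {"hemoglobin": 0.8, "albumin": 0.7, "age": 0.3, "glucose": 0.05}
selected = shared_top_features([model_a, model_b], top_k=3)
print(selected)  # ['hemoglobin', 'albumin']
```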

Pang et al. (2019) proposed an interpretable ML approach to analyse the main risk factors associated with early childhood obesity, including demographic characteristics, lab parameters, as well as anthropometric markers and vital sign measurements. The authors trained several ML models on the Pediatric Big Data repository, a clinical database derived from the EHR system at the Children’s Hospital of Philadelphia with more than 860 children, then they applied SHAP to explain the best performing XGBoost model. Results show that well-known obesity risk factors such as weight, height, weight-for-height, geographic location, race and ethnicity appear among the most important features to the model. On the other hand, SHAP analysis also highlights novel factors that are related to human metabolism, such as Body Temperature (BT) and respiratory rate, which may deserve further investigation to unveil possible physiological mechanisms causing these associations.

Clinical validation is also fundamental when evaluating explanations related to risk prediction models developed for clinical settings, such as those related to life expectancy, post-operative outcomes, and hospital attendance. Zeng et al. (2021) proposed an explainable ML model for post-operative complication risk prediction of congenital heart surgery patients from patient- and surgery-specific features and intra-operative physiological time series. To this aim, they trained an XGBoost model on a private dataset containing data from 1964 pediatric patients, reaching \(83.1\%\) accuracy and an Area Under the ROC Curve (AUC) of 0.85 for multi-label classification with five complication types (i.e., lung, cardiac, rhythm, infectious, others). Then, SHAP has been used to detect the most relevant factors and to perform an extensive clinical comparison of the main risk profiles learned by the model, all of which proved to be clinically relevant. In particular, high blood pressure and prolonged cardiopulmonary bypass time patterns confirmed a high correlation with worse post-operative outcomes.

Zhang et al. (2021) developed an explainable model for the early prediction of Acute Kidney Injury (AKI) after Liver Transplantation (LT) using more than 100 variables mainly covering patient/donor demographic and clinical characteristics, such as comorbidities, laboratory values, and medications. They performed a retrospective data collection of adult LT cases to build two separate datasets, which have been used for internal and external validation of several ML models, respectively. SHAP explanation analysis for the best performing RF classifier indicated that higher pre-operative indirect bilirubin concentration, lower urine output, lower platelet count, longer anesthesia time, and higher graft steatosis percentage are associated with a higher probability of AKI onset. The distribution of these risk factors and their relation with AKI diagnosis match current pathophysiology, thus adding clinical credibility to the final model.

Explaining health predictions based on static EHR may be useful to detect the most important features, and to evaluate their relevance and correlation with respect to the model outcome from a clinical standpoint. On the other hand, modeling longitudinal EHR data collected during multiple visits/examinations or hospital admissions may enable learning how the evolution of patients’ clinical trajectories impacts a target health condition. Shashikumar et al. (2021) presented Deep Artificial Intelligence Sepsis Expert (DeepAISE), a novel interpretable recurrent survival model for periodical sepsis prediction after ICU admission from longitudinal lab tests and physiological measurements, such as HR, mean arterial pressure, pulse oximetry (SpO\(_2\)), respiration, and BT. DeepAISE combines a Gated Recurrent Unit (GRU)-based RNN with a Weibull Cox Proportional Hazards (WCPH) semi-parametric survival model (Cox 1992) to learn predictive features related to higher-order interactions and temporal patterns among clinical risk factors that maximise the data likelihood of observed time to septic events. Specifically, it starts by producing risk scores 4 hours after ICU admission, then it predicts the probability of onset of sepsis with 2-hour resolution for the next 12 hours. The model has been subjected to an internal validation using the Emory cohort dataset (ICU patients admitted to the hospitals within the Emory Healthcare system in Atlanta, Georgia from 2014 to 2018), and to an external validation using a patient cohort taken from the Medical Information Mart for Intensive Care (MIMIC)-III database (Johnson et al. 2016), in order to ensure robustness against potential changes due to different internal procedures and patients’ characteristics. To get model explanations, feature relevance scores have been computed as the gradient of the sepsis risk score with respect to all input features, in a similar way to CNN-based saliency maps. Analysis of the top-10 features confirmed that the system exploits predictors that have been already identified as risk factors for sepsis, such as recent surgery, length of ICU stay, HR, Glasgow coma score, white blood cell count, and temperature, as well as some less appreciated but known factors such as low blood phosphorus levels. A feature permutation study has also been performed by replacing the top-10 features at both global and local levels with random and/or missing values in order to assess the impact on the model performance. The results indicate that locally important features may provide a better overview of the actual top contributing factors to individual risk scores, since local perturbations yield a significant drop in the model performance with respect to the global replacement strategy.
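The notion of feature relevance as the gradient of a risk score with respect to the inputs can be illustrated on a toy differentiable model. The sketch below uses a logistic score and central finite differences in place of the backpropagated gradients of a recurrent network. The weights and patient values are hypothetical.

```python
import math

def risk_score(features, weights, bias=0.0):
    """Toy differentiable risk model: logistic function of a weighted sum."""
    z = bias + sum(w * f for w, f in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))

def gradient_relevance(score_fn, features, eps=1e-5):
    """Central finite-difference estimate of d(score)/d(feature_i)."""
    grads = []
    for i in range(len(features)):
        up = list(features)
        down = list(features)
        up[i] += eps
        down[i] -= eps
        grads.append((score_fn(up) - score_fn(down)) / (2 * eps))
    return grads

weights = [1.5, -0.5, 0.1]  # hypothetical model parameters
patient = [0.2, 1.0, 0.4]   # hypothetical normalised measurements
grads = gradient_relevance(lambda f: risk_score(f, weights), patient)

# Index of the locally most relevant feature (largest absolute gradient).
top_feature = max(range(len(grads)), key=lambda i: abs(grads[i]))
```

Because the gradient is evaluated at one patient's input, the resulting ranking is local, which is consistent with the observation above that locally important features describe individual risk scores better than a global ranking.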

Sun et al. (2021) proposed AttenSurv, an attention-based RNN for Heart Failure (HF) survival prediction of seriously ill patients from longitudinal and heterogeneous EHR. The network consists of three modules: 1) a Bidirectional LSTM (Bi-LSTM) network to learn the latent representation of a patient trajectory; 2) an MLP network for survival prediction; 3) an attentive transformer to detect global critical risk factors. In addition, the authors also proposed an enhanced variant, named GNNAttenSurv, which also incorporates a Graph Neural Network (GNN) module to extract the latent correlation between risk factors. Both networks have been tested on three public follow-up datasets, namely WHAS (Lemeshow et al. 2011), SUPPORT (Knaus et al. 1995), and METABRIC (Curtis et al. 2012), and on two EHR datasets, the MIMIC-III DB and the Chinese PLAGH dataset, using different sets of dataset-specific features including lab tests, vital signs, demographics, and treatment information. The top-10 features identified by the model for different survival time horizons (ranging from 3 days up to 2 years) have been reviewed by different medical experts, and the resulting assessment demonstrated that they represent truly informative risk factors, with some of them currently adopted for HF survival prediction in the clinical practice.

Zheng et al. (2020) proposed a general DL framework, TRACER, to facilitate accurate and interpretable decision making in healthcare applications, using in-hospital acquired AKI and mortality prediction as case studies. The framework relies on an RNN model as core component, named Time-Invariant and Time-Variant (TITV) network, which is designed to learn both time-variant and time-invariant feature importance scores for each patient in two separate sub-modules, by using a self-attention network and a Feature-wise Linear Modulation (FiLM)-based network (Perez et al. 2018), respectively. The proposed network has been evaluated on the NUH-AKI dataset (\(>100\)k patients from the National University Hospital in Singapore) for AKI diagnosis, and on the MIMIC-III dataset for mortality prediction. Then, extensive feature-level clinical interpretation has been performed in both domains. This analysis highlighted similar temporal patterns for features that share a similar physiological functionality, whereas diverging patterns have been found for features that have contrasting functionalities or reflect different patient clusters. Overall, feature rankings generally agree with the clinical relevance of the corresponding risk factors.

Kwon et al. (2018) developed a novel visual analytics tool, named RetainVis, to enhance interpretability and interactivity of RNN outcomes for disease diagnosis from longitudinal EHR, by integrating model explanations with additional functionalities, such as visualisation of historical patient trends, patient grouping according to desired criteria, and comparison with reference values in selected patient cohorts. As for the DL model, RetainVis relies on a variant of the original REverse Time AttentIoN (RETAIN) network (Choi et al. 2016), named RETAINEx, which exploits Bi-LSTM modules with non-uniform time interval embedding to model irregular time spacing across consecutive visits, and a double attention mechanism at both time and feature levels. The network has been tested over the HIRA-NPS dataset (Kim et al. 2014), containing medical information of approximately 1.4 million Korean patients, using HF diagnosis as main case study. In addition, the authors conducted an extensive analysis to review whether the medical diagnoses and treatments that received the highest attention within the trajectory of patients who develop heart failure are supported by clinical evidence. The results confirmed the premise that hypertensive, metabolic, and ischaemic heart diseases, as well as obesity, are the main leading factors for heart failure and are among its main related comorbidities.

The recent COVID-19 pandemic has also fostered the development of several solutions aimed at explaining disease diagnosis based on different data sources and analysis approaches, involving both tabular data and signal processing methods. Lu et al. (2020) proposed an explainable system to diagnose COVID-19 in suspected patients and then to predict mortality of confirmed cases using lab test data (e.g., nucleic acid test, blood test), also including medical text reports of basic diseases and symptoms. Specifically, they used a Gradient Boosting Decision Tree (GBDT) model for disease diagnosis, whereas RF was the best choice to predict mortality. In the first case, the GBDT model has been evaluated on a private COVID-19 dataset coming from Wuhan hospital (EHR data from 350 patients), whereas a public dataset available in Yan et al. (2020) has been used for the mortality prediction task (485 patients) by considering only lactic dehydrogenase (LDH), lymphocyte, and C-Reactive Protein (CRP) as features. SHAP analysis shows that procalcitonin and white blood cell are the most relevant features for COVID-19 diagnosis, in line with the current clinical findings. Unfortunately, the explanatory power of the textual features extracted through the Term Frequency Inverse Document Frequency (TF-IDF) (Weiss et al. 2010) method is not analysed, although this might have added further value to the explanation assessment. As far as mortality prediction is concerned, results demonstrate that when the levels of LDH and CRP rise and the level of lymphocyte decreases, the death probability is higher, which agrees with the clinical features of death cases. In addition, SHAP dependence plots also highlight clear boundaries associated with rising and decreasing patterns of death probability for each feature, which may act as a starting point to further investigate the impact of these risk factors.

Gupta et al. (2021) distinguished COVID-19 recovered subjects from healthy controls using ECG-based Heart Rate (HR) and Heart Rate Variability (HRV) features. They trained seven ML models by using 1-minute ECG recordings from a total of 447 subjects collected at two hospitals in Delhi, India, then they applied SHAP to the best performing XGBoost model. From this study, it may be inferred that high-frequency power, normalised high-frequency power, HRV standard deviation, low-frequency power, and low-to-high frequency power ratio are the features most affected by COVID-19 infection, and that the changes exhibited by these features are related to an increased vagal activity. These findings match earlier studies suggesting that heart vagal stimulation increases in the post-COVID recovery phase (Bonaz et al. 2020).

Pal and Sankarasubbu (2021) proposed a mixed approach for early COVID-19 diagnosis by integrating symptoms metadata and cough sounds. The model architecture consists of two sub-components that are concatenated to obtain a final prediction: a TabNet (Arık and Pfister 2021) for generating embeddings from patient characteristics, diagnosis, and symptoms, and a DNN to generate cough embeddings from temporal and spectral acoustic features extracted through signal processing. Both networks integrate an attentive transformer to learn feature relevance from each data modality. The evaluation conducted on a medical dataset containing 30k cough audio segments and associated symptoms from 150 patients with four cough classes (COVID-19, asthma, bronchitis, and healthy) pointed out that more accurate predictions can be achieved using symptoms metadata than cough features, while the overall performance increases by combining both data sources. As may be expected, the attention distribution highlights fever, cough, dizziness, and chest pain as the most recurrent symptoms for infected subjects. In addition, the authors performed an in-depth clinical analysis of the main significant differences in the energy distribution of the cough spectrum between COVID-19 and other cough types. Overall, results confirm that the model is able to learn the main relationships between the frequency distribution of the most discriminating features and the underlying cough sound characteristics for each class. These findings are also supported by t-distributed Stochastic Neighbor Embedding (t-SNE) (Van der Maaten and Hinton 2008) visualisation, showing a clear separation between the four clusters of cough features learned by the model.

Several solutions based on signal processing methods have also been proposed to explain the detection of heart disorders, which are mainly based on the analysis of ECG recordings. Ivaturi et al. (2021) presented an XAI framework for AF prediction from single-lead ECG signals. To this aim, they first trained a MobileNet (Howard et al. 2017) CNN architecture on the PhysioNet/Computing in Cardiology Challenge 2017 dataset (Clifford et al. 2017). Then, global explanation analysis has been performed by dividing each ECG cycle into 8 equally sized segments, each one corresponding approximately to a region of interest (e.g., P wave, T wave, isoelectric baseline), and applying feature ablation, feature permutation, and LIME methods to highlight the most relevant segments. Moreover, saliency maps with guided back-propagation technique have also been used to compare the direct contribution of raw input data to local predictions with global, segment-based analysis. Clinical analysis of explanations shows that the network effectively focuses on physiological features that match those used by cardiologists for the clinical AF diagnosis, such as the absence of P-wave, variability of R-R intervals, and electrical activity in the isoelectric region of the ECG.
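Segment-based feature ablation can be sketched as follows: one cycle is split into equally sized segments, each segment is replaced in turn by a constant value, and the resulting change in the model output is recorded. The toy scoring function below is a hypothetical stand-in for a trained classifier, and the zero fill value and 16-sample cycle are assumptions made for illustration.

```python
def split_into_segments(cycle, n_segments=8):
    """Index ranges of n_segments equally sized slices of one cycle."""
    size = len(cycle) // n_segments  # assumes length divisible by n_segments
    return [(k * size, (k + 1) * size) for k in range(n_segments)]

def ablation_importance(predict, cycle, n_segments=8, fill=0.0):
    """Score drop when each segment is replaced by a constant fill value."""
    reference = predict(cycle)
    drops = []
    for start, end in split_into_segments(cycle, n_segments):
        ablated = cycle[:start] + [fill] * (end - start) + cycle[end:]
        drops.append(reference - predict(ablated))
    return drops

# Hypothetical stand-in for a trained model: it only looks at the samples
# in the third segment (indices 4 and 5 of a 16-sample cycle).
def toy_model(cycle):
    return sum(abs(v) for v in cycle[4:6])

cycle = [0.1] * 4 + [1.0, 0.8] + [0.1] * 10
drops = ablation_importance(toy_model, cycle)
most_relevant_segment = drops.index(max(drops))
```

Segments whose removal causes the largest drop are the ones the model relies on most, which can then be compared with the regions a cardiologist would inspect.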

Mousavi et al. (2020) proposed HAN-ECG, an alternative solution for explaining AF predictions from single-lead ECG. This system differs from Ivaturi et al. (2021) as it relies on a stacked Bi-LSTM ensemble with a hierarchical attention mechanism to learn relevant components of the input signal at different levels, namely beats, waves, and time windows. The network has been evaluated over two datasets, namely the PhysioNet 2017 and the MIT-BIH AFIB, then the visualisation of attention layers has been exploited to demonstrate that the model focuses on clinically relevant heart beats and waves for detecting AF arrhythmia. As in Ivaturi et al. (2021), the absence of P-waves, which may be occasionally replaced with a series of small waves called fibrillation waves, and the irregular R-R intervals, in which the heartbeat intervals are not rhythmic, played an essential role in AF detection.

Dissanayake et al. (2020) developed an interpretable DL framework for heart anomaly detection from Mel-Frequency Cepstral Coefficient (MFCC) spectral features (Clifford et al. 2017) extracted from phonocardiogram (PCG) signals (i.e., heart sounds). The framework combines a pre-trained LSTM network for automatic segmentation of the input MFCC maps, a CNN encoder to perform spatial feature learning on the supplied feature map, and an MLP network to get the final prediction. Different network architectures have been tested through the combination of the above modules in order to explicitly examine the importance of signal segmentation as a prior step to classification. Then, both SHAP and occlusion maps have been used to explain the hidden representations learned by the model. Experimental results obtained on the benchmark PhysioNet database (Goldberger et al. 2000) indicate that the network architecture without segmentation module reaches the highest accuracy, outperforming the state-of-the-art methods. In addition, both XAI methods show that a correct classification of PCG signals occurs when the model mainly focuses on learned features that are placed within (or between) two fundamental heart sound locations, namely S1 and S2 segments. This model behaviour accords with the clinical assessment followed in digital phonocardiography. Regarding the role of signal segmentation, these findings suggest that if the model is robust enough to learn the segmentation function while extracting associated features from S1 and S2 locations, then signal segmentation may not be necessary as preliminary data processing.

Finally, XAI is also gaining increasing attention in the field of Human Activity Recognition (HAR), based both on wearable sensing and on smart home environments (Arrotta et al. 2022). Most HAR applications are related to human well-being and fitness through physical activity monitoring, as well as to active and healthy aging by supporting older and impaired subjects in the correct execution of daily activities in their home environment, and/or by detecting abnormal behavioural and locomotion patterns (Khodabandehloo et al. 2021). However, the use of XAI methods in these applications is currently limited to explaining why (and how) simple or complex activities are detected, so the impact of explainability methods on decision making may be limited. By contrast, the contribution of XAI to HAR applications in the healthcare domain is much higher, as it provides evidence for the final diagnosis and justifications for subsequent interventions, with particular reference to fall detection and to gait analysis for detecting orthopedic or neurodegenerative disorders, such as Parkinson's Disease (PD). Such applications are often based on a vast stream of inertial and/or kinematic data, which need interpretability in order to determine which signal characteristics are effectively used by AI models to take meaningful decisions. To this aim, Filtjens et al. (2021) proposed an interpretable DL framework to detect movements preceding the occurrence of Freezing of Gait (FoG) episodes in PD patients. The framework is based on a CNN that models the reduction of movement prior to a FoG episode from 3-D kinematic joint trajectories of the hip, knee, and ankle, and on the LRP explanatory technique to identify the most influential features. The model has been built by using an existing dataset (Spildooren et al. 2010) containing 3-D motion data from 28 PD patients with and without FoG, and 14 healthy subjects.
LRP interpretability analysis indicated that the movements perceived as the most relevant by the model are fixed knee extension during the stance phase, reduced peak knee flexion during the swing phase, and fixed ankle dorsiflexion during the swing phase. On the other hand, very little relevance has been observed for these movements in PD patients without FoG and in healthy controls. Therefore, this behaviour suggests that model decisions are based on meaningful kinematic features, which are actually related to movement reductions during gait.

4.2 Explanation consistency assessment

The level of agreement between explanations generated by different methods is often evaluated by researchers to get some preliminary insights into the stability and robustness of AI models. These two terms are often used interchangeably, as they both refer to the model's ability to withstand perturbations introduced in the input data, even though a slight difference between the two concepts exists. Indeed, stability is evaluated with respect to unintentional perturbations that may occur in the real world, such as data noise, while robustness refers to subtle yet intentional changes in the input data, namely adversarial attacks.

Given a target high-performing model, if similar explanations are generated by different methods, then the model should provide correct outcomes for the same reasons for equal or similar data instances over time. On the other hand, similar explanations obtained from multiple models with the same explanatory technique(s) might indicate that common patterns are discovered within the data and used to make decisions. As a result, the model should be able to deal with both random changes and adversarial examples without systematic misclassification. However, more specific XAI approaches, such as sensitivity analysis, are required to draw more accurate conclusions about these model desiderata.

From a practical standpoint, consistency assessment applies to methods providing the same explanation format, generally by matching rankings coming from feature attribution methods or by evaluating the degree of overlap between different decision rule sets. These methods are mainly applied to tabular data, while saliency and attention remain the benchmark methods to explain models learned from time series. There are also further reasons why explanation consistency assessment is rarely applied to time series. First, saliency methods mainly work for local predictions, so evaluating explanation consistency may not provide any global understanding of models, unless a huge number of data instances is analysed individually. Second, the usefulness of comparing maps obtained by different methods can be questionable if end users have difficulties in understanding the high-level content hidden in the input sub-sequences showing the highest relevance. As a result, this section focuses on the most relevant XAI studies targeting tabular data and performing explanation consistency assessment. Research works are also summarised in Table 7.
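The rank-matching step mentioned above can be sketched in a few lines of Python. This is only an illustration and not a procedure taken from any of the reviewed studies: the function names (`rank_features`, `top_k_overlap`, `spearman_rho`), the feature names, and the attribution scores are hypothetical, and the Spearman formula used here assumes that there are no tied ranks.

```python
from typing import Dict, List


def rank_features(scores: Dict[str, float]) -> List[str]:
    """Order feature names by decreasing absolute attribution."""
    return sorted(scores, key=lambda name: abs(scores[name]), reverse=True)


def top_k_overlap(scores_a: Dict[str, float],
                  scores_b: Dict[str, float], k: int) -> float:
    """Jaccard overlap between the top-k features of two methods (k >= 1)."""
    top_a = set(rank_features(scores_a)[:k])
    top_b = set(rank_features(scores_b)[:k])
    return len(top_a & top_b) / len(top_a | top_b)


def spearman_rho(scores_a: Dict[str, float],
                 scores_b: Dict[str, float]) -> float:
    """Spearman rank correlation over shared features (assumes no ties)."""
    order_a = [f for f in rank_features(scores_a) if f in scores_b]
    order_b = [f for f in rank_features(scores_b) if f in scores_a]
    n = len(order_a)
    if n < 2:
        raise ValueError("need at least two shared features")
    rank_a = {f: i for i, f in enumerate(order_a)}
    rank_b = {f: i for i, f in enumerate(order_b)}
    d_squared = sum((rank_a[f] - rank_b[f]) ** 2 for f in order_a)
    return 1.0 - 6.0 * d_squared / (n * (n * n - 1))


# Hypothetical global attributions produced by two different XAI methods.
method_a = {"f1": 0.40, "f2": -0.25, "f3": 0.10, "f4": 0.05}
method_b = {"f1": 0.30, "f2": 0.05, "f3": -0.20, "f4": 0.01}

print(top_k_overlap(method_a, method_b, k=2))
print(spearman_rho(method_a, method_b))
```

In this toy case the two methods agree on the most important feature but swap the second and third ones, so the top-2 overlap is low (one shared feature out of three distinct ones) while the rank correlation over all four features remains high. Reporting both quantities is one way to separate agreement on the leading features from agreement on the full ranking.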

Thimoteo et al. (2022) compared post-hoc explanations for COVID-19 diagnosis with other glass-box AI approaches. Specifically, they applied SHAP to SVM and RF classifiers trained on lab test data provided by the COVID-19 Data Sharing/BR (over 50k suspected COVID-19 patients), then they compared feature relevance with both LR coefficients and feature importance scores provided by Explainable Boosting Machine (EBM) algorithm (Nori et al. 2019). All global explanations converged in indicating eosinophils and leukocytes, and in general white blood cell-related parameters among the essential features to help diagnose the infection from blood test and pathogen variables.

Alves et al. (2021) proposed a Decision Tree-based eXplainer (DTX) and applied it to COVID-19 diagnosis from lab test (i.e., blood, urine, and others) data. This approach produces a readable tree structure that provides classification rules reflecting the local behaviour of complex models, and it can be considered similar to the LIME method, using decision trees as surrogate models instead of sparse linear models. In addition, DTX outcomes have been aggregated over many patients to identify global patterns, named criteria graphs. DTX rules and criteria graphs have been extracted from a RF classifier trained on the same public COVID-19 dataset used in Leung et al. (2021), then they have been compared with SHAP and LIME explanations at the global and local levels, respectively. Results showed a high level of overlap between the proposed method and these well-established XAI techniques, in particular highlighting a correspondence between the 5 largest nodes in the graph and the top-5 features in the SHAP ranking.
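The general idea of a local tree surrogate can be illustrated with a deliberately small Python sketch. It is not the DTX algorithm of Alves et al. (2021): the black-box classifier, the Gaussian neighbourhood sampling, the depth-one tree (a decision stump), and all names (`black_box`, `sample_neighbours`, `fit_stump`) are simplifying assumptions made for illustration only.

```python
import random
from typing import Dict, List

Row = List[float]


def black_box(row: Row) -> int:
    """Hypothetical opaque classifier; here it only uses feature 0."""
    return 1 if row[0] > 1.0 else 0


def sample_neighbours(instance: Row, n: int, scale: float,
                      rng: random.Random) -> List[Row]:
    """Draw n perturbed copies of the instance with Gaussian noise."""
    return [[v + rng.gauss(0.0, scale) for v in instance] for _ in range(n)]


def fit_stump(X: List[Row], y: List[int]) -> Dict[str, float]:
    """Exhaustively search the single-threshold rule that best matches y."""
    best = None
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            for left, right in ((0, 1), (1, 0)):
                hits = sum(
                    1 for row, label in zip(X, y)
                    if (left if row[j] <= t else right) == label
                )
                # Strict comparison keeps the first rule among equal scores.
                if best is None or hits > best[0]:
                    best = (hits, j, t, left, right)
    hits, j, t, left, right = best
    return {"feature": j, "threshold": t, "left": left,
            "right": right, "fidelity": hits / len(y)}


rng = random.Random(0)
instance = [1.0, 0.0]  # the prediction to be explained
neighbours = sample_neighbours(instance, n=200, scale=0.3, rng=rng)
labels = [black_box(row) for row in neighbours]  # query the black box
rule = fit_stump(neighbours, labels)
print(rule)
```

The resulting rule reads as "if feature `j` is at most `t`, predict `left`, otherwise predict `right`", and `fidelity` is the fraction of neighbours on which the surrogate reproduces the black-box output. A deeper tree would yield a set of such rules per instance; aggregating the features used across many instances is one possible route towards global patterns.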

Okay et al. (2021) developed an interpretable ML approach for early stage diabetes detection from sign and symptom data, which can be easily collected through patient questionnaires. They first trained RF and GBDT models on the Sylhet Diabetes dataset (see Footnote 2; 520 instances, 17 categorical features), then they applied SHAP to compare global explanations. Results indicated that the top-3 features (polyuria, polydipsia, and gender) are shared across the two models using SHAP, with a high degree of overlap also for the top-10 attributes. LIME also provided similar feature rankings between RF and GBDT for the selected local predictions, but this is not enough to assert the convergence of its global explanations. Oba et al. (2021) performed a similar study to analyse explanations related to diabetes aggravation detection from medical records that integrate patient interviews with lab tests and physiological measurements. They used a medical check-up dataset collected by a Japanese health care center to train different tree ensemble models (i.e., XGBoost, LightGBM, and CatBoost) and a TabNet model, then they compared the SHAP values obtained from the former with the attention weights generated by the network. In this case, the top-3 features ranked by SHAP, namely current severity status of diabetes, blood sugar level, and glycated hemoglobin, were the same among all the models, and also equal to those learned by the attentive transformers. In addition, results obtained by TabNet pointed out many highly-ranked indicators that can be obtained by non-invasive tests and interviews, which are less burdensome and expensive for patients.

Elshawi et al. (2019) performed an extensive analysis of the outcomes of ML models for hypertension prediction from cardio-respiratory fitness data obtained after a treadmill test. The authors exploited data from \(>23k\) patients and models coming from the FIT project (Sakr et al. 2018), then they selected the best performing RF classifier to compare a variety of XAI methods, including feature permutation, PDP, ICE plots, feature interaction with Friedman’s H statistic (Friedman and Popescu 2008), and surrogate models for global explanations, as well as LIME and SHAP for local explanations. The results of this experiment suggested that integrating different global interpretations may help to generalise the overall conditional distribution modelled by the trained response function, but local interpretations should be preferred for a better understanding of smaller variations in the conditional distribution for specific instances.
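Among the global methods listed above, feature permutation is simple enough to sketch directly. The following Python example is a generic illustration, not the setup used by Elshawi et al. (2019): the toy classifier, the synthetic data, and the names `accuracy` and `permutation_importance` are all assumptions. Importance is measured as the average drop in accuracy after shuffling one feature column at a time.

```python
import random
from typing import Callable, List

Row = List[float]


def accuracy(model: Callable[[Row], int], X: List[Row], y: List[int]) -> float:
    """Fraction of rows for which the model output matches the label."""
    return sum(1 for row, label in zip(X, y) if model(row) == label) / len(y)


def permutation_importance(model: Callable[[Row], int], X: List[Row],
                           y: List[int], n_repeats: int = 5,
                           seed: int = 0) -> List[float]:
    """Mean accuracy drop per feature after shuffling that feature's column."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and y
            X_perm = [row[:j] + [v] + row[j + 1:]
                      for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_model(row: Row) -> int:
    """Hypothetical classifier that depends on feature 0 only."""
    return 1 if row[0] > 0.5 else 0


data_rng = random.Random(42)
X = [[i / 200, data_rng.random()] for i in range(200)]  # feature 1 is noise
y = [toy_model(row) for row in X]
print(permutation_importance(toy_model, X, y))
```

Because the toy model ignores feature 1, shuffling that column never changes a prediction and its importance is exactly zero, whereas shuffling feature 0 degrades accuracy. In a real study the model would be the trained classifier and the score would be computed on held-out data.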

Another automated and interpretable diagnostic application has been proposed by Seedat et al. (2020) for voice pathology assessment from smartphone-based microphone recordings. To this aim, they conducted a pilot study to collect and analyse voice recordings obtained from 33 healthy and diseased subjects, then they trained several ML models using a set of handcrafted features extracted through audio signal processing. By choosing ExtraTrees as the best performing model, they compared global explanations obtained through SHAP, Morris sensitivity analysis, and feature permutation. All methods converged in identifying the most relevant features, and they also highlighted 6 clinically used MFCC features as the top relevant ones.

Assessing stability should be imperative for predictive models that are supposed to be deployed for survival analysis. Kapcia et al. (2021) proposed ExMed, a tool to enable XAI data analytics and visualisation for clinicians by supporting multiple ML models and feature attribution algorithms, and they tested it using lung cancer life expectancy prediction from EHR as the main application. By exploiting the public Simulacrum dataset (see Footnote 3), a cancer dataset provided by the National Cancer Registration and Analysis Service of Public Health England, they trained different ML models and then selected the best RF classifier to compare SHAP values with the global average of LIME local scores obtained over all the test patients. This analysis shows a very similar impact for almost all of the 20 patient features used. In particular, cancer grade and M-best (i.e., presence/absence of distant metastatic spread) are the two most relevant features with very close importance scores (also in line with current clinical knowledge), while there is a disagreement on the attribution of age. By using the same dataset, Duell et al. (2021) presented an extended comparison of explanations obtained for lung cancer survival prediction. Specifically, they compared the SHAP, LIME, and anchors methods applied to an XGBoost model, as well as the feature importance ranking derived from an EBM model. Overall, all methods converge in identifying M-best as the most relevant feature globally, while the ranking of the remaining features differs between SHAP and LIME. For instance, SHAP considers N-best (i.e., extent of involvement of regional lymph nodes) as the second most important feature, whereas LIME considers the behaviour of the tumour.
Given this discrepancy between LIME and SHAP, the authors studied the scale of their differences by analysing the first 1000 instances of the test set individually to identify priority features, regardless of whether or not they are shared between the two methods for every instance. Results highlight M-best, N-best, and T-best (i.e., size and extent of the primary tumor) as the three most important features, further supporting both the consistency and the clinical relevance of the knowledge representation.
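Two operations recur in the studies above: averaging local scores into a global ranking, and counting how often a feature is among the top-ranked ones across individual instances. The Python sketch below illustrates both under stated assumptions. It does not reproduce the exact procedure of Kapcia et al. (2021) or Duell et al. (2021): the function names, the feature names, and the attribution values are hypothetical, and the mean absolute value is just one common aggregation choice.

```python
from collections import Counter
from typing import Dict, List

Attribution = Dict[str, float]


def global_importance(local_attributions: List[Attribution]) -> Dict[str, float]:
    """Mean absolute local attribution per feature over all instances."""
    totals: Dict[str, float] = {}
    for attribution in local_attributions:
        for feature, value in attribution.items():
            totals[feature] = totals.get(feature, 0.0) + abs(value)
    n = len(local_attributions)
    return {feature: total / n for feature, total in totals.items()}


def priority_feature_counts(local_attributions: List[Attribution],
                            k: int) -> Counter:
    """Count how often each feature is among the top-k of an instance."""
    counts: Counter = Counter()
    for attribution in local_attributions:
        ranked = sorted(attribution,
                        key=lambda f: abs(attribution[f]), reverse=True)
        counts.update(ranked[:k])
    return counts


# Hypothetical local attributions for three instances and three features.
local_scores = [
    {"a": 0.5, "b": -0.2, "c": 0.1},
    {"a": -0.4, "b": 0.3, "c": 0.0},
    {"a": 0.1, "b": 0.6, "c": -0.2},
]

print(global_importance(local_scores))
print(priority_feature_counts(local_scores, k=1))
```

In this toy case feature `b` has the largest mean absolute attribution, yet feature `a` is ranked first in two of the three instances. The two summaries can therefore disagree, which is one reason for inspecting instance-level rankings in addition to global averages when two attribution methods diverge.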

Similarly, Moncada-Torres et al. (2021) compared the performance of a conventional WCPH regression model against three different ML methods, namely Random Survival Forest (Ishwaran et al. 2008), Survival SVM (Pölsterl et al. 2016), and XGBoost, for breast cancer survival prediction from patient, tumor, and treatment-related characteristics. Models have been trained on a dataset built through a retrospective data collection from the Netherlands Cancer Registry between 2005 and 2008, then SHAP has been applied to investigate the differences between a reference WCPH model and the best performing XGBoost model. This comparison resulted in a high degree of overlap between global explanations across the models, while XGBoost reached a considerably better accuracy. Therefore, this increase in model performance may be attributed to XGBoost’s ability to model non-linearities and complex interactions among input variables with respect to a simpler semi-parametric approach.