Abstract

Exercise physiology courses have transitioned to competency-based models, forcing universities to rethink assessment to ensure that students are competent to practise. This study built on earlier research to explore rater cognition, capturing the factors that contribute to assessors' decisions about students' competency. The aims were to determine the sources of variation in the examination process and to document the factors influencing examiner judgement. Examiner judgement was explored from both quantitative and qualitative perspectives. Twenty-three examiners viewed three video-recorded encounters of student performance in an objective structured clinical examination (OSCE). Once the performances had been rated, analysis of variance was performed to identify the sources to which the variance in ratings was attributable. A semi-structured interview then drew out the examiners' reasoning behind their ratings. Results highlighted variability in the processes of observation, judgement and rating, with each examiner viewing student performance through a different lens. However, at a global level, analysis of variance indicated that the examiner had a minimal impact on the variance, with the majority of variance explained by student performance on the task. One anomaly was the assessment of technical competency, where the examiner had a large impact on the rating, linked to assessing according to curriculum content. The thought processes behind judgement were diverse; had the qualitative results been used in isolation, the researchers might have concluded that the examined performances would yield widely different ratings. However, as a cohort, the examiners were able to distinguish good and poor levels of competency, with most of the variation in competency ratings linked to differences in student ability.
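The abstract does not state which ANOVA model was used. As a hedged illustration of what attributing rating variance to the examiner versus the student can look like, the sketch below assumes a fully crossed design (every examiner rates every student performance exactly once) and estimates student, examiner and residual variance components from the standard expected-mean-squares equations of a two-way random-effects ANOVA without replication. This is a common generalizability-theory approach, not necessarily the authors' method; the function name `variance_components` and all ratings are hypothetical.

```python
from typing import Dict, List


def variance_components(ratings: List[List[float]]) -> Dict[str, float]:
    """Proportion of rating variance due to student, examiner and residual.

    Hypothetical helper: ratings[i][j] is the score examiner j gave to student
    performance i. Assumes a fully crossed design with one rating per cell.
    """
    n_s = len(ratings)       # student performances (rows)
    n_e = len(ratings[0])    # examiners (columns)
    grand = sum(sum(row) for row in ratings) / (n_s * n_e)

    student_means = [sum(row) / n_e for row in ratings]
    examiner_means = [sum(row[j] for row in ratings) / n_s for j in range(n_e)]

    # Sums of squares for the two main effects and the total.
    ss_s = n_e * sum((m - grand) ** 2 for m in student_means)
    ss_e = n_s * sum((m - grand) ** 2 for m in examiner_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    # Residual = student x examiner interaction confounded with error;
    # clamp tiny negative values caused by floating-point rounding.
    ss_res = max(ss_total - ss_s - ss_e, 0.0)

    ms_s = ss_s / (n_s - 1)
    ms_e = ss_e / (n_e - 1)
    ms_res = ss_res / ((n_s - 1) * (n_e - 1))

    # Expected-mean-squares estimators; negative estimates are set to zero.
    var_s = max((ms_s - ms_res) / n_e, 0.0)
    var_e = max((ms_e - ms_res) / n_s, 0.0)
    total = var_s + var_e + ms_res
    if total == 0:
        raise ValueError("all ratings are identical; no variance to partition")
    return {
        "student": var_s / total,
        "examiner": var_e / total,
        "residual": ms_res / total,
    }


if __name__ == "__main__":
    # Hypothetical scores: 3 performances (weak, moderate, strong) x 4 examiners.
    ratings = [
        [3, 4, 3, 4],
        [6, 7, 6, 7],
        [8, 9, 8, 9],
    ]
    shares = variance_components(ratings)
    print({k: round(v, 2) for k, v in shares.items()})
    # → {'student': 0.95, 'examiner': 0.05, 'residual': 0.0}
```

The sketch needs at least two performances and two examiners. With only one rating per cell, the student × examiner interaction cannot be separated from random error, so it is folded into the residual term; in this additive toy example that residual is zero, and the examiner share stays small because examiners differ by only one point while performances differ by several.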

References

Albanese, M. A. (2000). Challenges in using rater judgments in medical education. Journal of Evaluative Medical Education, 6, 305–319.

Boud, D. (1995). Assessment and learning: Contradictory or complementary? In P. Knight (Ed.), Assessment for learning in higher education (pp. 35–48). London: Kogan.

Cohen, G. S., Henry, N. L., & Dodd, P. E. (1990). A self-study of clinical evaluation in the McMaster clerkship. Medical Teacher, 12(62), 265–272.

Cook, D. A., Dupras, D. M., Beckman, T. J., Thomas, K. G., & Pankratz, S. (2008). Effect of rater training on reliability and accuracy of mini-CEX scores: A randomized, controlled trial. Society of General Internal Medicine, 24(1), 74–79.

Dudek, N. L., Marks, M. B., Regehr, G. (2005). Failure to fail: The perspectives of clinical supervisors. Academic Medicine, 80(10), S84–S87

Eva, K. W. (2007). Putting the cart before the horse: Testing to improve learning. British Medical Journal, 334, 535.

Gigerenzer, G., & Gaissmaier, W. (2011). Heuristic decision making. Annual Review of Psychology, 62, 451–482.

Gingerich, A., Regehr, G., & Eva, K. (2011). Rater based assessments as social judgments: Rethinking the etiology of rater errors. Academic Medicine, 86(10), S1–S7.

Govaerts, M., Van de Wiel, L., Schuwirth, L., Van der Vleuten, C., & Muijtijens, A. (2013). Workplace-based assessment: Raters’ performance theories and constructs. Advances in Health Science Education, 18, 375–396.

Govaerts, M., Van der Vleuten, C., Schuwirth, L., & Muijtijens, A. (2007). Broadening perspectives on clinical performance assessment: Rethinking the nature of in-training assessment. Advances in Health Sciences Education, 12, 239–260.

Harden, R. M., Stevenson, M., Downie, W. W., & Wilson, G. M. (1975). Assessment of clinical competency using objective structured examination. British Medical Journal, 1, 447–451.

Holmboe, E. S., Sherbino, J., Long, D. M., Swing, S. R., & Frank, J. R. (2010). The role of assessment in competency-based medical education. Medical Teacher, 32, 676–682.

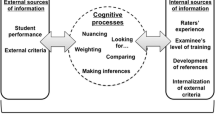

Kogan, J. R., Conforti, L., Bernabeo, E., Iobst, W., & Holmboe, E. (2011). Opening the black box of clinical skills assessment via observation: A conceptual model. Medical Education, 45, 1048–1060.

Kogan, J. R., Hess, B. J., Conforti, L. N., & Holmboe, E. S. (2010). What drives faculty ratings of residents’ clinical competence. The impact of faculty’s own clinical skills. Academic Medicine, 85, 25–28.

Lankshear, A. (1990). Failure to fail: The teacher’s dilemma. Nursing Standard, 4(20), 35–37.

Mazor, K. M., Zanetti, M. L., & Alpher, E. J. (2007). Assessing professionalism in the context of an objective structured clinical examination: An in-depth study of the rating process. Medical Education, 41, 331–340.

Naumann, F. L., Moore, K., Mildon, S., & Jones, P. (2014). Developing an objective structured clinical examination to assess work-integrated learning in exercise physiology. Asia Pacific Journal of Cooperative Learning, 15(2), 81–89.

Newble, D. (2004). Techniques for measuring clinical competence: Objective structured clinical examinations. Medical Education, 38(2), 199–203.

Schuwirth, L., & Ash, J. (2013). Assessing tomorrow’s learners: In competency-based education only a radically different holistic method of assessment will work. Six things we could forget. Medical Teacher, 35(7), 555–559.

Thomas, D. (2006). A general inductive approach for analyzing qualitative evaluation data. American Journal of Evaluation, 27, 237–246.

Van der Vleuten, C. (1996). The assessment of professional competence: Developments, research and practical implications. Advances in Health Science Education, 1(1), 41–67.

Van der Vleuten, C., & Schuwirth, L. (2005). Assessing professional competence: From methods to programmes. Medical Education, 39, 309–317.

Veloski, J., Boex, J., Grasberger, M., Evans, A., & Wolfson, D. (2006). Systematic review of the literature on assessment, feedback and physicians’ clinical performance. BEME Guide No. 7. Medical Teacher, 28(2), 117–128.

Vukanovick-Criley, J. M., Criley, S., Warde, C. M., Boker, J. R., Guevara-Matheus, L., Churchill, W. H., & Nelson, W. P. (2006). Competency in cardiac examination skills in medical students, trainees, physicians and faculty: A multi-centre study. Archives of Internal Medicine, 166(6), 610–616.

Wass, V., Van der Vleuten, C., Shatzer, J., & Jones, R. (2001). Assessment of clinical competence. The Lancet, 357(9260), 945–949.

Watson, R., Stimpson, A., Topping, A., & Porock, D. (2002). Clinical competence in nursing: A systematic review of the literature. Journal of Advanced Nursing, 39(5), 421–431.

Wolf, A. (2001). Competency based assessment. Chapter 25. In J. Raven & J. Stephenson (Eds.), Competence in the learning society (pp. 453–466). New York: Peter Lang.

Wood, T. J. (2014). Exploring the role of first impressions in rater-based assessment. Advances in Health Science Education, 19, 409–427.

Yeates, P., O’Neill, P., Mann, K., & Eva, K. (2013). Seeing the same thing differently. Mechanisms that contribute to assessor differences in directly-observed performance assessments. Advances in Health Science Education, 18, 325–341.

Cite this article

Naumann, F.L., Marshall, S., Shulruf, B. et al. Exploring examiner judgement of professional competence in rater based assessment. Adv in Health Sci Educ 21, 775–788 (2016). https://doi.org/10.1007/s10459-016-9665-x

DOI: https://doi.org/10.1007/s10459-016-9665-x