Abstract

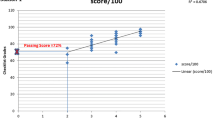

Objective structured clinical examinations (OSCEs) are used worldwide for summative examinations but often lack acceptable reliability. Research has shown that reliability of scores increases if OSCE checklists for medical students include only clinically relevant items. Also, checklists are often missing evidence-based items that high-achieving learners are more likely to use. The purpose of this study was to determine whether limiting checklist items to clinically discriminating items and/or adding missing evidence-based items improved score reliability in an Internal Medicine residency OSCE. Six internists reviewed the traditional checklists of four OSCE stations, classifying items as clinically discriminating or non-discriminating. Two independent reviewers augmented checklists with missing evidence-based items. We used generalizability theory to calculate overall reliability of faculty observer checklist scores from 45 first- and second-year residents and to predict how many 10-item stations would be required to reach a Phi coefficient of 0.8. Removing clinically non-discriminating items from the traditional checklist did not affect the number of stations (15) required to reach a Phi of 0.8 with 10 items. Focusing the checklist on only evidence-based clinically discriminating items increased test score reliability, needing 11 stations instead of 15 to reach 0.8; adding missing evidence-based clinically discriminating items to the traditional checklist modestly improved reliability (needing 14 instead of 15 stations). Checklists composed of evidence-based clinically discriminating items improved the reliability of checklist scores and reduced the number of stations needed for acceptable reliability. Educators should give preference to evidence-based items over non-evidence-based items when developing OSCE checklists.
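To make the station projection concrete, the following is a minimal sketch of how a decision study can estimate the number of stations needed to reach a target Phi. It is not the authors' analysis: the abstract does not report the study design or variance components, so the sketch assumes a persons × (items nested in stations) design, for which the absolute error variance is σ²(s)/n_s + σ²(ps)/n_s + σ²(i:s)/(n_s·n_i) + σ²(pi:s,e)/(n_s·n_i) and Phi = σ²(p) / (σ²(p) + absolute error). The function names and all variance values below are hypothetical.

```python
def phi_coefficient(var, n_stations, n_items):
    """Phi (absolute-decision dependability) for an ASSUMED p x (i:s) design.

    var: dict of variance components with keys
         "p", "s", "ps", "i:s", "pi:s,e" (hypothetical naming).
    """
    # Absolute error variance: every non-person component,
    # divided by the number of conditions it is averaged over.
    abs_error = (
        var["s"] / n_stations
        + var["ps"] / n_stations
        + var["i:s"] / (n_stations * n_items)
        + var["pi:s,e"] / (n_stations * n_items)
    )
    return var["p"] / (var["p"] + abs_error)


def stations_needed(var, n_items=10, target=0.8, max_stations=200):
    """Smallest number of stations whose projected Phi meets the target."""
    for n_stations in range(1, max_stations + 1):
        if phi_coefficient(var, n_stations, n_items) >= target:
            return n_stations
    return None  # target not reachable within max_stations


# Illustrative variance components only; NOT taken from the study.
illustrative = {"p": 0.010, "s": 0.005, "ps": 0.020, "i:s": 0.030, "pi:s,e": 0.100}

for n in (5, 10, 15, 20):
    print(n, round(phi_coefficient(illustrative, n, 10), 3))
print("stations needed:", stations_needed(illustrative))
```

With real data, the variance components would come from a G-study (for example, estimated with software such as urGENOVA or G_String), and the same projection could then be repeated for each checklist version to compare station requirements.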

Acknowledgments

The authors wish to thank Amin Mousavi and Dr. Todd Rogers from the Centre for Research in Applied Measurement and Evaluation at the University of Alberta for their help with generalizability analyses. We are also grateful to our colleagues in the Department of Medicine at the University of Alberta: Dr. Cheryl Goldstein for her assistance in the literature review for evidence-based items, Dr. Zaeem Siddiqi for his input on the Parkinson’s station, and to Drs. Bibiana Cujec, Adriana Lazarescu, Fiona Lawson, Fraulein Morales, Uwais Qarni, Irwindeep Sandhu, and Stephanie Smith for their assistance in classifying checklist items. This work was supported in part by the University of Alberta, Department of Medicine’s Medical Education Research Grant.

Conflict of interest

None.

Ethical standard

This study received approval from the Internal Review Boards at the University of Alberta and the University of Illinois at Chicago.

Cite this article

Daniels, V.J., Bordage, G., Gierl, M.J. et al. Effect of clinically discriminating, evidence-based checklist items on the reliability of scores from an Internal Medicine residency OSCE. Adv in Health Sci Educ 19, 497–506 (2014). https://doi.org/10.1007/s10459-013-9482-4

DOI: https://doi.org/10.1007/s10459-013-9482-4