Abstract

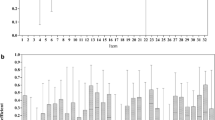

The purpose of this research was to study the effects of violations of standard multiple-choice item writing principles on test characteristics, student scores, and pass–fail outcomes. Four basic science examinations, administered to year-one and year-two medical students, were randomly selected for study. Test items were classified as either standard or flawed by three independent raters, blinded to all item performance data. Flawed test questions violated one or more standard principles of effective item writing. Thirty-six to sixty-five percent of the items on the four tests were flawed. Flawed items were 0–15 percentage points more difficult than standard items measuring the same construct. Over all four examinations, 646 (53%) students passed the standard items while 575 (47%) passed the flawed items. The median passing rate difference between flawed and standard items was 3.5 percentage points, but ranged from −1 to 35 percentage points. Item flaws had little effect on test score reliability or other psychometric quality indices. Results showed that flawed multiple-choice test items, which violate well-established, evidence-based principles of effective item writing, disadvantage some medical students. Item flaws introduce the systematic error of construct-irrelevant variance to assessments, thereby reducing the validity evidence for examinations and penalizing some examinees.


Cite this article

Downing, S.M. The Effects of Violating Standard Item Writing Principles on Tests and Students: The Consequences of Using Flawed Test Items on Achievement Examinations in Medical Education. Adv Health Sci Educ Theory Pract 10, 133–143 (2005). https://doi.org/10.1007/s10459-004-4019-5

DOI: https://doi.org/10.1007/s10459-004-4019-5