Abstract

Timely detection of an invasion event or a pest outbreak is an extremely challenging task of major importance for implementing management actions aimed at eradication and/or containment. Fruit flies (FF; Diptera: Tephritidae) comprise important invasive and quarantine species that threaten fruit and vegetable production worldwide. The current manuscript introduces a recently developed McPhail-type electronic trap (e-trap) and provides data on its field performance in surveilling three major invasive FF (Ceratitis capitata, Bactrocera dorsalis and B. zonata). Using FF male lures, the e-trap attracts the flies and retains them on a sticky surface placed inside the trap. The e-trap captures images of the trapped adults and automatically uploads them to a remote server, where they are identified by a novel deep-learning algorithm. Both the e-trap and the developed code were tested in the field in Greece, Austria, Italy, South Africa and Israel. The FF classification code was initially trained using a machine-learning algorithm and FF images derived from laboratory colonies of two of the species (C. capitata and B. zonata). Field tests were then conducted to investigate the electronic, communication and attraction performance of the e-trap, and the accuracy of the model in classifying FF. Our results demonstrated relatively good communication, electronic performance and trapping efficacy of the e-trap. The classification model achieved average precision of 93–95% for the three target FF on images uploaded remotely from e-traps deployed under field conditions. The developed and field-tested e-trap system complies with the attributes suggested for an advanced camera-based smart-trap.

Key message

- The described McPhail electronic (smart) trap showed good capabilities and could be implemented in the future for the automatic surveillance of invasive and expanding fruit flies of economic importance.

Introduction

Automation of agricultural processes and decision-making in agriculture has advanced enormously during the last decade (Jung et al. 2021). Within these advances, automation of insect monitoring and decision-making in pest management has been extensively developed, and novel instruments (i.e., sensors), principles and agricultural applications have recently been developed and reported (e.g., Deqin et al. 2016; Goldshtein et al. 2017; Potamitis et al. 2018; Ioannou et al. 2019; Miranda et al. 2019; Nestel et al. 2019; Sciarretta et al. 2019; Cardim Ferreira Lima et al. 2020; Preti et al. 2021; Schellhorn and Jones 2021). Moreover, some of these electronic traps have been commercialized (see for instance RapidAIM, https://rapidaim.io/, and trapview, https://trapview.com/), providing services for real-time, wireless insect-pest monitoring and decision-making in pest management, and making pest management supported by artificial intelligence (AI) a reality (Schellhorn and Jones 2021).

Smart-traps (“electronic traps”) developed or proposed for fruit flies (FF) follow a variety of principles (Cardim Ferreira Lima et al. 2020; Schellhorn and Jones 2021) and rely on a range of biological concepts and sensor types. Some of the most successful smart-traps use sensors that detect specific aspects of FF behavior, such as wing-beat frequency (Potamitis et al. 2018) or movement patterns (e.g., the RapidAIM, https://rapidaim.io/). Fruit flies are usually attracted to these smart-traps by lures with a certain level of specificity. These lured smart-traps, which count the attracted insects (the vast majority of which are the target FF), are useful tools to support monitoring of established FF populations and IPM decision-making systems (for an early account, see Jiang et al. 2008). The same research group also pioneered the idea of using smart-traps as early-warning systems for FF in remote areas and as a management tool to control FF outbreaks (Liao et al. 2012). Other types of sensors are based on acquiring images of the insect pest (Preti et al. 2021). Prototypes of such smart traps used to monitor FF include, among others, those tested for the oriental fruit fly, Bactrocera dorsalis (Deqin et al. 2016), the Ethiopian fruit fly, Dacus ciliatus (Nestel et al. 2019), the Mediterranean fruit fly (medfly), Ceratitis capitata (Sciarretta et al. 2019) and the olive fruit fly, B. oleae (Miranda et al. 2019).

While most of these systems provide a basis for real-time remote monitoring of insect pests and are becoming a tool for on-farm pest management, few automated systems and sensors have addressed the surveillance of “invasive” insect pests (i.e., pest species that are exotic to a geographic region and that can spread naturally and/or be unintentionally introduced by humans) (e.g., Rassati et al. 2016). Introduction of exotic pests into areas where they were not previously present has major economic and environmental implications (Pimentel 2011). New exotic species may substantially modify the fauna and vegetation of the invaded region (Lockwood et al. 2007). In addition, the introduction of new species, especially phytophagous insects, may pose economic and environmental risks for the agricultural sector (Guillemaud et al. 2011). Usually, the introduction of a new agricultural pest leads to massive pesticide use to halt its establishment and restrict its spread, and to the application of phytosanitary regulations that may restrict the movement of agricultural produce from the quarantined area, with an important economic impact on farmers and the trading industry (Follett and Neven 2006). If the invasive pest becomes established and spreads in the new region, the agricultural sector has to confront it, usually through costly modifications and adaptations of pest management strategies.

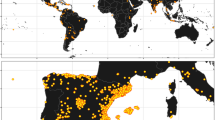

The family of FF (Diptera: Tephritidae), which contains more than 4000 species worldwide, includes a group of insect pests of economic importance that infest fruits and vegetables. Eggs are laid in the rind or the flesh of the fruit, and the hatched larvae tunnel through and consume the flesh, destroying the fruit and rendering it unmarketable (White and Elson-Harris 1992). Owing to their reproductive capacity and ability to multiply rapidly, FF may cause severe economic damage to plantations, destroying up to 100% of the produce in the absence of control (Qin et al. 2015). Several FF species are known for their invasive capacity and ability to expand into new geographic regions. The medfly, for instance, has spread throughout the world in a relatively short period (ca. 200 years) and is currently expanding its geographic range into new areas in response to climate change (Diamantidis et al. 2009; Szyniszewska and Tatem 2014; Gilioli et al. 2021). Similarly, the oriental fruit fly has spread from Southeast Asia to several islands of the Pacific Ocean and to Africa, and is frequently detected in North America (Papadopoulos et al. 2013). It currently threatens the European continent as well, where adults have recently been intercepted in Austria (Egartner et al. 2019), Italy (Nugnes et al. 2018) and France (https://gd.eppo.int/taxon/DACUDO/distribution/FR).

Surveillance systems to detect the introduction of exotic insect pests are active in many places, especially in fruit-exporting and fruit-importing countries, and consist of fruit inspection at ports of entry and extensive networks of insect traps covering vast territories (Shelly et al. 2014). These trap networks require intensive scouting and monitoring and usually impose substantial costs on national plant protection agencies. For instance, in a small area comprising Israel, the territories under the jurisdiction of the Palestinian Authority and the Jordan Valley in Jordan, the IAEA/FAO Regional Cooperation framework helped establish a network of more than 1000 traps spread throughout the area to detect invasive Bactrocera species that threaten fruit production and export markets (IAEA 2019). This trap network is served by many scouts on a biweekly basis and has an important impact on national budgets. Establishing an autonomous FF surveillance network would greatly reduce costs and improve the ability of regulatory agencies to obtain early-warning alerts, facilitating rapid response actions that minimize the probability of establishment of invasive FF. The present study reports on the development and testing of a novel sensor (electronic trap, “e-trap”) for the timely detection of invasive FF, which includes a trapping system, wireless transmission of images of captured insects, an image analysis system, and an algorithm to identify and count the captured adults. The e-trap was tested under field conditions in several regions of the Mediterranean and in South Africa, targeting three important invasive FF: two Bactrocera species (B. dorsalis and B. zonata) and C. capitata, which is currently expanding its distribution into more continental areas of Europe.
This e-trap can also serve as a tool to monitor “low-prevalence” orchards and as part of the “systems approach” to reduce the risk of exporting infested fruits to FF “free areas” (Jang et al. 2014).

Methodology

The fruit fly trap and optical sensor

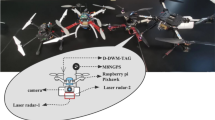

The e-trap is based on the conventional McPhail trap, developed and used by M. McPhail in 1935 to monitor the Mexican fruit fly (Anastrepha ludens) in Mexico (Steyskal 1977). Since then, this trap and several of its variations have become conventional tools for monitoring FF throughout the world. The McPhail e-trap developed in this study (Fig. 1) comprises three plastic units: (a) a central cylinder (13.5 cm in diameter) with an invaginated opening (6 cm in diameter) to the external environment at its bottom, (b) a lid closing the cylinder from the top, and (c) a battery box (holding six rechargeable and replaceable lithium batteries) attached to the external lateral wall of the cylinder (Fig. S1). The central cylinder is internally divided into several lateral chambers that accommodate the electronics and camera, and a central chamber where entering FF are directed (attracted by its color) onto a yellow sticky-board, to which they adhere and die (Figs. S2 and S3). The yellow sticky-board faces a high-resolution camera (Raspberry Pi Camera Module V2, Okdo Technology LTD) that is activated by a Raspberry Pi Zero v1.3 microcomputer (Raspberry Pi Limited), which acquires the image and uploads it to the cloud or server over cellular communication through a 4G USB dongle (any modem compatible with the local cellular network can be used). The positions of the yellow sticky-board and the camera within the trap create an optimal geometry and “focus” that covers the entire sticky-board surface and produces high-resolution images of the adhered insects (Fig. S4). Fruit flies are attracted to the trap using male lures (methyl eugenol, ME, for Bactrocera males; Trimedlure, TML, for Ceratitis males) and protein-based products, such as Biolure, for female flies. In its current configuration, the e-trap is activated twice a day, sending two images per day.
Energy is supplied by six lithium batteries (NCR18650PF), which allow the e-trap to function uninterruptedly for around 6–7 months. Uploaded images are processed by image analysis algorithms developed specifically for this purpose (see following sections). The results are managed and fed into risk models and/or used to send alerts to stakeholders (Fig. 2).

Field-test of e-trap’s fruit fly attraction, electronic functioning and communication

The e-traps were tested, and their performance was compared with that of conventional traps, in five countries (Israel, Greece, Austria, Italy and South Africa). E-trap attractiveness was investigated and compared targeting males of B. dorsalis (in South Africa, where the species is established in the northern parts of the country, and in Italy, where interceptions were reported in 2018), B. zonata (in Israel, where it is present and contained in urban and suburban Tel Aviv), and C. capitata (in Israel and Greece, where it is endemic, and in Austria, where interceptions of the fly are common), using methyl eugenol (ME) dispensers for the first two species and Trimedlure (TML) dispensers for the third. Traps were shipped from the Agricultural Research Organization (ARO) in Israel to partners and deployed in the field for several periods in different ecosystems (Table S1). E-traps were paired with the conventional traps commonly used in each country to monitor these flies. An expert entomologist serviced the conventional traps every 1 to 3 weeks (depending on location, season and distance). In addition, an expert entomologist inspected the digital images uploaded by the e-traps to a Google Drive server daily. Data collected from individual e-traps included the daily upload of images (i.e., communication) and the count of attracted FF (i.e., attractiveness). Data from the paired conventional traps included the inspection period and the number of FF attracted and trapped. These data were used to contrast the trapping performance of e-traps and conventional traps (paired t-test). For this comparison, flies trapped in each e-trap were counted over the same period as those in the paired conventional trap, i.e., the interval between consecutive services of the conventional trap. Thus, each e-trap was reset each time the scout visited the paired conventional trap.
The statistics only included periods with positive captures in either of the two traps. In addition, we derived the frequency of events in which the e-trap reported a capture of the target fly before (even by one day) the scout reported it at the end of the visiting period. Here too, only periods with positive captures of target FF were used. This provided information on the early-warning ability of the e-trap compared with the current practice of scouts servicing non-automatic traps.
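The trap comparison and early-warning statistics described above can be sketched in Python as follows. This is a minimal illustration under our own assumptions, not the authors' analysis code: the `Period` record, its fields and the function names are hypothetical. The sketch keeps only periods with at least one capture in either trap, computes the paired t statistic on per-period differences in flies per trap per day, and counts periods in which the e-trap reported the target fly at least one day before the scout's visit. Obtaining a p-value additionally requires the Student t distribution (for example, `scipy.stats.ttest_rel` applied to the two paired samples).

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Period:
    """One interval between consecutive services of a conventional trap
    (hypothetical record layout)."""
    days: int                       # period length; the scout visits on day `days`
    etrap_count: int                # target flies seen in the e-trap images
    conventional_count: int         # target flies counted by the scout
    etrap_first_day: Optional[int]  # 1-based day of first e-trap detection, or None


def positive_periods(periods: List[Period]) -> List[Period]:
    """Keep only periods with at least one capture in either trap."""
    return [p for p in periods if p.etrap_count > 0 or p.conventional_count > 0]


def paired_t(periods: List[Period]) -> Tuple[float, int]:
    """Paired t statistic on flies/trap/day (e-trap minus conventional)."""
    diffs = [(p.etrap_count - p.conventional_count) / p.days for p in periods]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two paired periods")
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)  # sample variance
    if var == 0:
        raise ValueError("all paired differences are equal; t is undefined")
    return mean / math.sqrt(var / n), n - 1  # (t statistic, degrees of freedom)


def early_warning_rate(periods: List[Period]) -> float:
    """Share of periods in which the e-trap reported the fly at least one day
    before the scout's visit at the end of the period."""
    early = sum(
        1 for p in periods
        if p.etrap_first_day is not None and p.etrap_first_day <= p.days - 1
    )
    return early / len(periods)
```

A detection on the very day of the scout's visit is not counted as early warning, matching the "at least one day before" criterion in the text.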

Training a deep learning detector and classifier of Bactrocera and Ceratitis fruit flies

Source of data

Images to train the detector and classifier were derived from two sources: the “FF-photographic studio” (FF-studio) and the actual field images described in the previous section. The FF-studio consisted of an e-trap body whose camera could be activated manually to photograph, at any time, the yellow sticky-board (populated with different configurations of laboratory FF). Laboratory FF (B. zonata and C. capitata) were made to adhere to the yellow sticky-boards by exposing the e-trap insert (bearing the yellow sticky-board) to living flies within a 40 × 40 cm Perspex cage (Fig. S5). These mock-ups were then inserted into the FF-studio system (Fig. S6), and images were produced under different illuminations by placing and manually activating the FF-studio system outdoors under different tree canopies and at different hours of the day. Pictures with diverse numbers of FF (resulting from the artificial exposure of the yellow sticky-board to flies in the laboratory cage) and different illuminations were produced and uploaded automatically by the FF-studio to the cloud. Several hundred images were obtained, simulating the actual conditions of an active e-trap with the two target FF species. Field data were derived from the e-traps deployed and tested in the field as described previously, from which several hundred images were obtained. Field images contained the three FF species and other insects attracted to and adhered to the yellow sticky-board (ants, lacewings, bees, etc.). Bactrocera dorsalis images were obtained from traps deployed in citrus orchards in South Africa and served to train the classifier (no images of B. dorsalis were artificially obtained with laboratory specimens). All insects in the images obtained by these two methods were manually classified and annotated.

Data labeling and augmentation

The images acquired in the FF-studio mainly contained specimens of a single FF species, either C. capitata or B. zonata. This made it possible to label each image and assign that label to every insect detected in it. To propagate labels from an image to the insects within it, a class-agnostic detector was developed to extract image patches containing insects, regardless of their species. Because the images were collected under controlled conditions, with a strong contrast between the background and the objects, we exploited this contrast and applied the Canny operator to detect insects in the image (Canny 1986). Canny is a classical image-processing algorithm for edge detection that involves three main stages: (i) image smoothing for noise removal, (ii) computing the changes in the x and y directions of the image (i.e., the gradient) using Sobel, Prewitt or Roberts filters (Bhardwaj and Mittal 2012), and (iii) selecting gradients within a specific range determined by the user. The hyperparameters of the Canny algorithm were tuned to reach good detection accuracy. The automatic annotation process is summarized in Fig. 3.
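The three stages above, followed by grouping edge pixels into insect boxes and propagating the image-level label, can be sketched in dependency-free Python. This is a simplified, Canny-style illustration under stated assumptions rather than the authors' detector: it omits Canny's non-maximum suppression step, uses a 3 × 3 Gaussian-like kernel and Sobel filters, implements stage (iii) as double (hysteresis) thresholds `low` and `high`, and turns each connected edge component into a bounding box. The function names and threshold values are hypothetical; in practice a library routine such as OpenCV's `cv2.Canny` would normally be used.

```python
from collections import deque
from typing import List, Tuple

Image = List[List[float]]         # grayscale image as rows of intensities
Box = Tuple[int, int, int, int]   # (x_min, y_min, x_max, y_max), inclusive pixels


def smooth(img: Image) -> Image:
    """Stage (i): 3x3 Gaussian-like smoothing, renormalized at the borders."""
    h, w = len(img), len(img[0])
    k = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = wsum = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += k[dy + 1][dx + 1] * img[yy][xx]
                        wsum += k[dy + 1][dx + 1]
            out[y][x] = acc / wsum
    return out


def sobel_magnitude(img: Image) -> Image:
    """Stage (ii): gradient magnitude from Sobel filters (border pixels = 0)."""
    h, w = len(img), len(img[0])
    mag = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
            gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
            mag[y][x] = (gx * gx + gy * gy) ** 0.5
    return mag


def detect_boxes(img: Image, low: float, high: float, min_pixels: int = 4) -> List[Box]:
    """Stage (iii) with hysteresis: grow 8-connected edge components from
    strong pixels (>= high) through weaker ones (>= low); one box each."""
    mag = sobel_magnitude(smooth(img))
    h, w = len(mag), len(mag[0])
    seen = [[False] * w for _ in range(h)]
    boxes: List[Box] = []
    for y in range(h):
        for x in range(w):
            if seen[y][x] or mag[y][x] < high:
                continue
            seen[y][x] = True
            queue, comp = deque([(x, y)]), []
            while queue:
                cx, cy = queue.popleft()
                comp.append((cx, cy))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        nx, ny = cx + dx, cy + dy
                        if (0 <= nx < w and 0 <= ny < h and not seen[ny][nx]
                                and mag[ny][nx] >= low):
                            seen[ny][nx] = True
                            queue.append((nx, ny))
            if len(comp) >= min_pixels:
                xs = [p[0] for p in comp]
                ys = [p[1] for p in comp]
                boxes.append((min(xs), min(ys), max(xs), max(ys)))
    return boxes


def propagate_label(img: Image, label: str, low: float, high: float) -> List[Tuple[Box, str]]:
    """Assign the image-level species label to every detected insect patch."""
    return [(box, label) for box in detect_boxes(img, low, high)]
```

Because the edges of a dark insect on a bright board form a closed ring, each ring yields one box that slightly exceeds the insect outline (by the spread of the smoothing and gradient kernels).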

Annotating the images collected in the field test described earlier was more challenging, because each e-trap image contained diverse insect species besides the target FF. Each insect was annotated using the SuperAnnotate© tool, which allows the user to manually define the locations of objects in the image by drawing rectangles enclosing them (bounding boxes) (https://www.superannotate.com). The first step consisted of drawing a bounding box around each individual insect in an image. Objects were labeled with one of 11 possible classes: the three FF species, B. zonata (PEACH-FF), C. capitata (MEDFLY) and B. dorsalis (ORIENTAL-FF), and other attracted non-Tephritid insects such as house flies, lacewings, bees and ants. No other FF were detected in any of the e-traps or conventional traps. Besides the above classes, we used an extra class, “other,” for less common species encountered in the e-trap.

To enrich the dataset, a standard data augmentation method was applied. Data augmentation enriches the data by adding slightly modified copies of existing data or by creating new synthetic data from existing data. It can improve classification accuracy by substantially increasing data diversity without collecting new physical samples, and it is known to improve generalization and reduce overfitting (Shorten and Khoshgoftaar 2019). Four augmentation operators were used: (1) horizontal flipping of the input image with probability 0.5 (i.e., the augmentation was applied to 50% of the images); (2) vertical flipping with probability 0.5; (3) rotating the input image by an angle between 0 and 90 degrees, with probability 0.3; and (4) randomly changing the brightness and contrast of the input image, with probability 0.2.
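The four operators and their probabilities can be sketched as follows. This is a minimal, dependency-free Python illustration assuming grayscale images stored as lists of rows and boxes given as (x_min, y_min, x_max, y_max); the authors' implementation may rely on an augmentation library instead. Two details are our own assumptions: a rotated box is replaced by the axis-aligned box enclosing its rotated corners (clipped to the image), and the brightness and contrast ranges (contrast factor 0.8 to 1.2, brightness shift of up to ±20 grey levels) are illustrative, since the text does not specify them.

```python
import math
import random
from typing import List, Tuple

Image = List[List[int]]                   # grayscale rows, values 0-255
Box = Tuple[float, float, float, float]   # (x_min, y_min, x_max, y_max) in pixels


def hflip(img: Image, boxes: List[Box]) -> Tuple[Image, List[Box]]:
    """Mirror left-right; box x-coordinates are reflected about the width."""
    w = len(img[0])
    return [row[::-1] for row in img], [(w - x1, y0, w - x0, y1) for x0, y0, x1, y1 in boxes]


def vflip(img: Image, boxes: List[Box]) -> Tuple[Image, List[Box]]:
    """Mirror top-bottom; box y-coordinates are reflected about the height."""
    h = len(img)
    return img[::-1], [(x0, h - y1, x1, h - y0) for x0, y0, x1, y1 in boxes]


def rotate(img: Image, boxes: List[Box], angle_deg: float) -> Tuple[Image, List[Box]]:
    """Rotate about the image centre on the same canvas (nearest neighbour,
    uncovered pixels set to 0). Each box becomes the axis-aligned box that
    encloses its rotated corners, clipped to the image (an assumption)."""
    h, w = len(img), len(img[0])
    cx, cy = w / 2, h / 2
    c, s = math.cos(math.radians(angle_deg)), math.sin(math.radians(angle_deg))
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # inverse mapping: find the source pixel for each output pixel centre
            dx, dy = x + 0.5 - cx, y + 0.5 - cy
            sx, sy = math.floor(cx + dx * c + dy * s), math.floor(cy - dx * s + dy * c)
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = img[sy][sx]
    new_boxes = []
    for x0, y0, x1, y1 in boxes:
        # forward mapping of the four corners with the same rotation
        pts = [(cx + (px - cx) * c - (py - cy) * s, cy + (px - cx) * s + (py - cy) * c)
               for px, py in ((x0, y0), (x1, y0), (x0, y1), (x1, y1))]
        xs, ys = [p[0] for p in pts], [p[1] for p in pts]
        new_boxes.append((max(0.0, min(xs)), max(0.0, min(ys)),
                          min(float(w), max(xs)), min(float(h), max(ys))))
    return out, new_boxes


def brightness_contrast(img: Image, alpha: float, beta: float) -> Image:
    """Pixel-wise alpha * p + beta (contrast, brightness), clipped to 0-255."""
    return [[min(255, max(0, round(alpha * p + beta))) for p in row] for row in img]


def augment(img: Image, boxes: List[Box], rng: random.Random) -> Tuple[Image, List[Box]]:
    """Apply the four operators independently with the stated probabilities."""
    if rng.random() < 0.5:
        img, boxes = hflip(img, boxes)
    if rng.random() < 0.5:
        img, boxes = vflip(img, boxes)
    if rng.random() < 0.3:
        img, boxes = rotate(img, boxes, rng.uniform(0.0, 90.0))
    if rng.random() < 0.2:
        # hypothetical ranges: contrast factor 0.8-1.2, brightness shift +/-20
        img = brightness_contrast(img, rng.uniform(0.8, 1.2), rng.uniform(-20.0, 20.0))
    return img, boxes
```

For object detection, the geometric operators must transform the boxes together with the pixels, which is why each of them returns both; the photometric operator leaves the boxes unchanged.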

The data were partitioned into three sets (training, validation and test) in a 0.6/0.2/0.2 ratio. The training set is used to fit the model, the validation set to tune the hyperparameters based on the model's performance on this split, and the test set to provide a final evaluation of the trained model on unseen data. To evaluate model performance and explore the generalization ability of the trained model, we randomly split the data into training, validation and test sets five times (k-fold cross-validation; Stone 1974). We fitted the model on each training set, evaluated its performance on the corresponding test set, and report the average result over the five test sets.
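A minimal Python sketch of five repeated 0.6/0.2/0.2 random splits follows, assuming that splitting is done at the image level (so that insects from the same image never appear in both the training and test sets) and that `fit_and_score` is a hypothetical, caller-supplied function that trains on the training set, tunes on the validation set and returns a test-set score. A strict k-fold scheme would instead rotate five disjoint 20% folds as the test set; the random version is shown because the text describes random splitting.

```python
import random
from typing import Callable, List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_train_val_test(items: Sequence[T], seed: int,
                         ratios: Tuple[float, float, float] = (0.6, 0.2, 0.2),
                         ) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle image identifiers and cut them into train/validation/test sets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(ratios[0] * len(shuffled))
    n_val = round(ratios[1] * len(shuffled))
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


def average_test_score(items: Sequence[T],
                       fit_and_score: Callable[[List[T], List[T], List[T]], float],
                       n_repeats: int = 5) -> float:
    """Repeat the random split n_repeats times and average the test scores."""
    scores = [fit_and_score(*split_train_val_test(items, seed)) for seed in range(n_repeats)]
    return sum(scores) / len(scores)
```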

Training and deep learning

A Faster R-CNN ResNet50 model (Ren et al. 2015) was trained. Faster R-CNN is a common deep convolutional network for object detection that has been shown to predict the locations of different objects accurately. First, a region proposal network slides over the convolutional feature map of the image and generates candidate bounding boxes. Then, a fixed-length feature vector is extracted for each candidate. Finally, a small neural network predicts the object class and refines the bounding-box coordinates. The model was initialized with a ResNet50 backbone pre-trained on ImageNet (a benchmark dataset for image classification and object detection) and fine-tuned with the FF-studio dataset.

A second deep detection model was trained using the field dataset. Specifically, we trained the same Faster R-CNN architecture that was used for the FF-studio data for 200 epochs (an epoch being one full pass of the learning algorithm over the entire training dataset). We optimized the model with stochastic gradient descent (Bottou 2010) and tuned the model hyperparameters using the validation set. Specifically, we searched over learning rates in \(\{5\times10^{-4}, 1\times10^{-3}, 2\times10^{-3}, 5\times10^{-3}, 1\times10^{-2}, 2\times10^{-2}, 5\times10^{-2}\}\) and batch sizes in {1, 2, 3, 5}. The best validation loss was obtained with a learning rate of \(2\times10^{-2}\), a momentum of 0.9 and a batch size of 1. We used the standard loss functions of Faster R-CNN: a cross-entropy loss for classification and an \(L_{2}\) loss for bounding-box regression. Results were evaluated using standard metrics for object detection; specifically, we computed the average precision per class and the true positive rate (recall).
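The hyperparameter search can be sketched as an exhaustive grid over the listed learning rates and batch sizes, selecting the combination with the lowest validation loss. In this sketch `train_and_validate` is a hypothetical, caller-supplied function (for example, one that trains the detector with `torch.optim.SGD(params, lr=lr, momentum=0.9)` and returns the validation loss); only the grid values are taken from the text.

```python
from itertools import product
from typing import Callable, Dict, Sequence, Tuple

# Grid values as listed in the text.
LEARNING_RATES = (5e-4, 1e-3, 2e-3, 5e-3, 1e-2, 2e-2, 5e-2)
BATCH_SIZES = (1, 2, 3, 5)


def grid_search(train_and_validate: Callable[[float, int], float],
                learning_rates: Sequence[float] = LEARNING_RATES,
                batch_sizes: Sequence[int] = BATCH_SIZES,
                ) -> Tuple[Tuple[float, int], Dict[Tuple[float, int], float]]:
    """Train once per (learning rate, batch size) pair and return the pair with
    the lowest validation loss, together with all recorded losses."""
    losses = {(lr, bs): train_and_validate(lr, bs)
              for lr, bs in product(learning_rates, batch_sizes)}
    best = min(losses, key=losses.get)
    return best, losses
```

With seven learning rates and four batch sizes, the grid requires 28 training runs.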

A true positive (TP) is a correct detection (a detection with “Intersection over Union,” IoU, ≥ threshold), a false positive (FP) is a wrong detection (a detection with IoU < threshold), and a false negative (FN) is a ground-truth object that is not detected. True negatives (TN) do not apply, since in object detection the data include many possible bounding boxes that should not be detected (i.e., the background) (Padilla et al. 2021). Using these terms, precision and recall are defined as
\[ \mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}, \]
and we computed them for all classes using an IoU threshold of 0.5. We then constructed a precision–recall curve in which each data point represents the precision and recall values for a specific confidence-score threshold varying from 0 to 1, and computed the average precision (AP) for each class. For the accuracy metric, we first filtered out all predictions with IoU lower than 0.5 with every ground-truth box and all predictions with confidence scores lower than 0.5, keeping only valid predictions. We then computed the ratio between the number of correct classifications and the total number of valid predictions.
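The evaluation metrics can be illustrated with a small Python sketch: IoU between two boxes, per-class average precision computed from score-ranked detections matched to ground truth at IoU ≥ 0.5 (using all-point interpolation of the precision–recall curve, one of the conventions reviewed by Padilla et al. 2021), and the classification accuracy over valid predictions. The matching rule (each detection, in descending score order, is matched to the unmatched ground-truth box with the highest IoU ≥ 0.5) and the choice of comparing a valid prediction's class with that of its best-overlapping ground-truth box are our assumptions; the authors' code in the linked repository may differ.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds: Sequence[Tuple[float, Box]], gts: Sequence[Box],
                      iou_thr: float = 0.5) -> float:
    """AP for one class. preds are (confidence, box) pairs; gts are boxes."""
    matched = [False] * len(gts)
    flags: List[bool] = []  # True = TP, False = FP, in descending confidence order
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = -1, iou_thr
        for j, g in enumerate(gts):
            v = iou(box, g)
            if not matched[j] and v >= best_iou:
                best_j, best_iou = j, v
        if best_j >= 0:
            matched[best_j] = True
        flags.append(best_j >= 0)
    if not gts or not flags:
        return 0.0
    precisions, recalls, tp = [], [], 0
    for k, is_tp in enumerate(flags, start=1):
        tp += is_tp
        precisions.append(tp / k)        # TP / (TP + FP)
        recalls.append(tp / len(gts))    # TP / (TP + FN)
    # all-point interpolation: area under the monotone precision envelope
    ap, prev_r = 0.0, 0.0
    for i, r in enumerate(recalls):
        ap += (r - prev_r) * max(precisions[i:])
        prev_r = r
    return ap


def classification_accuracy(preds: Sequence[Tuple[str, float, Box]],
                            gts: Sequence[Tuple[str, Box]],
                            iou_thr: float = 0.5, score_thr: float = 0.5) -> float:
    """Share of valid predictions (score >= score_thr and IoU >= iou_thr with
    some ground truth) whose class matches the best-overlapping ground truth."""
    correct = valid = 0
    for label, score, box in preds:
        if score < score_thr or not gts:
            continue
        true_label, true_box = max(gts, key=lambda g: iou(box, g[1]))
        if iou(box, true_box) < iou_thr:
            continue
        valid += 1
        correct += (label == true_label)
    return correct / valid if valid else 0.0
```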

Evaluation of the trained model using field data

Validation consisted of deploying e-traps in the field for 30 days during June 2021. Five e-traps baited with ME were deployed in suburban areas of Tel Aviv, Israel, to capture male B. zonata, and five e-traps were deployed in orchards in northern Israel, baited with TML (3 e-traps) or Biolure (2 e-traps), to capture male and female C. capitata. Biolure was used to test the ability of the trap to capture female C. capitata and because it attracts several non-Tephritid insects, which allowed us to test the precision of the code when other insect species are present in the image. In addition, we used images generated by the ME-baited e-traps in South Africa (Sect. 2.2) that had not been used for training the code; these images contained male B. dorsalis. All images were automatically uploaded to the cloud and processed with the developed deep-learning detector and classifier code (see Sect. 2.3). An entomologist then evaluated the automatic results generated by the code by comparing them with the uploaded images. The results of this comparison were summarized using a “confusion matrix” approach (Table S2). The image analysis classification code has been uploaded to a repository: https://github.com/ydiller/insect_detection/tree/public (the main files are train.py and test.py).
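The per-class comparison against the entomologist's labels can be sketched as a one-vs-rest confusion matrix. This is a hypothetical Python illustration, assuming that each trapped insect yields one (entomologist label, code label) pair; at this per-insect level true negatives are defined (unlike in the box-level detection metrics), so specificity and accuracy can be computed. The undetected rate mentioned in the Results is not defined in this section and is therefore not reproduced.

```python
from typing import Dict, Sequence, Tuple


def one_vs_rest_metrics(pairs: Sequence[Tuple[str, str]], target: str) -> Dict[str, float]:
    """pairs holds one (entomologist_label, code_label) tuple per insect.
    Returns precision, sensitivity, specificity and accuracy for `target`
    against all other classes."""
    tp = sum(1 for truth, pred in pairs if truth == target and pred == target)
    fp = sum(1 for truth, pred in pairs if truth != target and pred == target)
    fn = sum(1 for truth, pred in pairs if truth == target and pred != target)
    tn = len(pairs) - tp - fp - fn

    def ratio(num: int, den: int) -> float:
        return num / den if den else float("nan")

    return {
        "precision": ratio(tp, tp + fp),        # TP / (TP + FP)
        "sensitivity": ratio(tp, tp + fn),      # TP / (TP + FN), i.e. recall
        "specificity": ratio(tn, tn + fp),      # TN / (TN + FP)
        "accuracy": ratio(tp + tn, len(pairs)),
    }
```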

Results

McPhail e-trap function and attractiveness of fruit flies

E-traps were deployed in the field and functioned continuously for periods ranging from a minimum of 60 days (in Greece) to a maximum of 257 days (in Israel). The average periods of uninterrupted operation in the field were 165 days per trap in Israel (10 traps), 107 days in South Africa (6 traps), 87 days in Italy (4 traps), 80 days in Austria (4 traps) and 76 days in Greece (6 traps). During these periods of field activity, batteries were not recharged, and no electronic malfunction was recorded. The average rate of uploaded images (i.e., communication function and cellular connectivity) in the five countries is shown in Fig. 4. The lowest upload rate was registered in Greece (55% of all potential images). In the other countries, image uploading exceeded 70% of the potential number of images that could have been uploaded by all e-traps. Israel had the highest rate of successful communication and image uploading (84%). Since e-traps use cellular communication and are deployed in agricultural areas, these data indicate the general ability of the e-trap to function in rural environments in the tested countries, where cellular coverage may be limited in space and time.

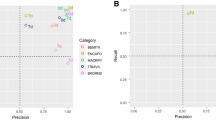

The ability of e-traps to attract the targeted FF compared with conventional traps is shown in Fig. 5. Both B. dorsalis and C. capitata were attracted and trapped by the e-trap in numbers similar to those observed in the conventional traps. In contrast, B. zonata was attracted in significantly lower numbers by the e-trap than by the conventional Steiner trap used in Israel for the surveillance of this FF. Figure 6 shows the results of early-warning events (i.e., the ability of the e-trap to report the capture of the target FF before the scout visits and inspects the conventional trap). The statistics include only periods in which either of the two traps (e-trap or conventional) captured at least one adult of the target FF. Each event spans the period between scout visits, and a positive early-warning event is one in which the e-trap provided information at least one day before the scout's visit and inspection of the conventional trap. In all cases, the e-traps provided early-warning information at a relatively high rate (the frequency of positive events ranged from 77% for C. capitata and B. zonata to 86% for B. dorsalis in South Africa).

Fig. 5 Average number of captured FF (B. dorsalis, B. zonata and C. capitata) per trap per day in the electronic traps (green bars) and in the conventional traps (blue bars). Statistical inference was performed using a matched paired t-test between e-traps and conventional traps deployed close to each other. Only periods with at least one FF in either of the traps were considered. A difference in trap efficacy was found only for B. zonata.

Fig. 6 Proportion of early-warning events, i.e., events in which the e-trap reported a FF capture before the scout did when visiting and servicing the conventional trap. Events (N) denote periods between scout visits and conventional trap services. Only periods in which target flies were captured by either of the paired traps were included.

Training and evaluation of the deep model classifier

Figure 7 shows qualitative examples illustrating the high accuracy obtained on the FF-studio dataset. Following this initial training, we further trained the deep detection model with the field dataset derived from images from the field deployment of e-traps in Austria, Italy, South Africa, Greece and Israel (described previously). Figure 8 shows the loss curve (sum of losses) and the improvement in accuracy during training. Figure 9 shows precision–recall curves for the three FF species targeted in the study. The AP (the area under the precision–recall curve, AUC) for all three classes of interest was high, ranging from 87.41 to 95.80%. The model was also robust to different light conditions and accurate on clustered samples. The total classification accuracy for all classes in the field dataset was 98.29%, with both the confidence-score and IoU thresholds set to 0.5.

Field validation

More than three hundred images from the e-traps in Israel and South Africa (not used in training the classifier) were processed with the developed classifier (see illustrations of images before and after processing in Figs. S7–S9). The classification of the three FF species and non-target insects in the uploaded images by both the code and a human entomologist, and the evaluation metrics for the three target FF, are summarized in Table 1. The classifier showed very high precision for the three target FF, ranging from 86 to 97%. The highest precision was obtained with C. capitata and the lowest (86%) with B. dorsalis (mainly due to the limited number of images from South Africa). This result suggests a high ability of the developed code to classify the three target FF. Non-target insects (insects attracted to the trap and glued to the sticky board, such as other Diptera, lacewings and bees) were also classified with high precision (84–86%). Accuracy (i.e., the ability to discern between target FF and other insects) was usually high (> 86%). Other metrics, including sensitivity, specificity and undetected rate, also yielded good values, suggesting that the developed code provides reliable results and data.

Discussion

Preti et al. (2021) list the requirements to consider when developing a camera-equipped automatic trap. These include, among others, an effective trap design, a trap structure that optimally accommodates all the required electronics, a high-resolution but low-power optical system, a good wireless communication system, data access and picture display, image recognition algorithms, and a reliable power supply. Our e-trap meets most of these requirements. Its design follows a proven trapping system used worldwide to survey and monitor FF, the McPhail trap (Steyskal 1977). In addition, we demonstrated that the functionality and attractiveness of the e-trap are comparable to those of conventional trapping systems for two of the three FF of economic importance considered in this study (Fig. 5). This was not the case for B. zonata, for which the e-trap captured on average about one-third as many flies as the Steiner trap (Fig. 5). Nevertheless, in terms of early warning, the e-trap performed very well (Fig. 6). The modular arrangement of the electronics, optics and transmission systems makes efficient use of the internal space and produces a compact e-trap structure (Fig. S1). Power is supplied by lithium batteries, and at the current rate of energy use the e-trap can function uninterruptedly for at least 8 months in field conditions, as indicated by one of the field e-traps (A003, Table S1). We chose lithium batteries to avoid the problems that arise when solar panels cannot recharge batteries under low solar radiation, whether due to cloudy conditions or to canopy structures with low light penetration where the e-trap may be placed to attract FF. Our e-trap and image analysis system also meets the requirements for a good smart-trap prototype (Preti et al. 2021): it uploads images to the cloud, where they can be inspected by the end-user or processed by the image analysis algorithm to provide scalar results and activate population models and alert systems.
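Multi-month autonomy on batteries generally depends on duty cycling: the electronics sleep most of the day and wake briefly to capture and upload images. The sketch below estimates battery life from a simple average daily charge budget. All numbers are hypothetical placeholders, not measurements or specifications of the e-trap, and the usable-capacity factor `derating` is an assumed allowance for temperature, self-discharge and cut-off losses.

```python
def battery_life_days(capacity_mah, sleep_ma, active_ma, active_s_per_wake,
                      wakes_per_day, derating=0.8):
    """Estimate runtime in days from an average daily charge budget.

    All inputs are hypothetical; `derating` is an assumed usable fraction of
    the nominal capacity (temperature, self-discharge, cut-off voltage).
    """
    active_h = active_s_per_wake * wakes_per_day / 3600  # hours awake per day
    sleep_h = 24 - active_h                                # hours asleep per day
    mah_per_day = sleep_ma * sleep_h + active_ma * active_h
    return capacity_mah * derating / mah_per_day


# Placeholder values, not e-trap specifications.
days = battery_life_days(capacity_mah=10_000, sleep_ma=0.5, active_ma=400,
                         active_s_per_wake=90, wakes_per_day=4)
print(f"{days:.0f} days (~{days / 30.4:.1f} months)")
```

With these placeholder values the budget is about 52 mAh per day, of which 40 mAh is drawn while awake, giving roughly 154 days. In such a design, shortening the wake period or reducing the number of uploads per day is the main lever for extending field autonomy.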

The developed insect classification model discriminated well among the three FF species, and between these FF and other non-tephritid insects. In addition, obtaining training images with the “FF photographic studio” proved to be an appropriate approach. Recently, Huang et al. (2021) proposed a classification system for citrus FF based on a “multi-attention and multi-part convolutional neural network” and demonstrated its efficacy using a simulation approach. Specifically, they trained their model on FF images collected from the web, in which the insects were intact and in optimal structural condition. That approach, although efficient in terms of training and development time, may not generalize well to field data and may result in misclassification when applied to real images from field trapping. In our experience with actual field data and images (Table 1, Figs. S7–S9), flies can be misclassified because of their landing position on the sticky board, or confused with other insects attracted to and caught on the sticky board, such as stable flies. The simulation and training approach of Huang et al. (2021) may nevertheless offer a strategy to reduce the misclassifications of our model, which could otherwise distort the scalar data fed into population dynamics models or mislead alert systems.
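A common way to make an image classifier less sensitive to the orientation in which an insect lands is geometric data augmentation (see the survey by Shorten and Khoshgoftaar 2019 in the reference list). The NumPy sketch below generates the eight rotation and mirror variants of an image crop. It is illustrative only: whether and how augmentation was used to train the developed model is not described in this section, and `orientation_variants` is a hypothetical helper.

```python
import numpy as np


def orientation_variants(image: np.ndarray) -> list:
    """Return the 8 rotation/mirror variants of an image crop.

    Works on H x W (grayscale) or H x W x C arrays; rotations are applied
    in the image plane (axes 0 and 1), so colour channels are preserved.
    """
    variants = []
    for base in (image, np.fliplr(image)):  # original and mirrored crop
        for k in range(4):                  # 0, 90, 180 and 270 degrees
            variants.append(np.rot90(base, k))
    return variants


# Hypothetical 2 x 3 single-channel crop standing in for a trapped-fly image.
crop = np.arange(6).reshape(2, 3)
augmented = orientation_variants(crop)
print(len(augmented), [v.shape for v in augmented])
```

These variants cover only in-plane orientation. Confusion with similar-looking insects such as stable flies is a separate problem that orientation augmentation does not address.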

An important limitation of our e-trap is that the sticky board becomes saturated with insects when fruit fly populations in the environment are large. In that case, the e-trap must be serviced more frequently than planned, and a new adhesive board installed once the yellow sticky board becomes saturated. Huang et al. (2021) provide a theoretical solution to this problem: a carousel mechanism that rolls out fresh yellow adhesive paper at set time intervals. This possibility was considered at the early stages of our e-trap design, but the idea was discarded because of the complications expected from adding a mechanical system to the e-trap. Such a mechanism may create mechanical problems in the functioning of the trap and increase its energy requirements. In addition, our e-trap was conceived as a surveillance tool for exotic FF in environments with low FF populations (i.e., FF-free and low-prevalence areas), which reduces the likelihood of adhesive-board saturation. In any case, the mechanical approach of Huang et al. (2021) may be considered and evaluated in the future if the purpose of our e-trap is extended to IPM and monitoring.
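The trade-off between servicing frequency and FF abundance can be made concrete with a rough estimate of how many days a sticky board lasts before it is too crowded for reliable image analysis. In the sketch below, the board area, the mean footprint of a trapped insect and the maximum usable coverage fraction are hypothetical values chosen for illustration, not specifications of the e-trap. Daily captures should include non-target insects, since they also occupy the board.

```python
import math


def board_capacity(area_cm2: float, footprint_cm2: float, max_coverage: float) -> float:
    """Approximate number of insects the board holds before images become unreliable."""
    return area_cm2 * max_coverage / footprint_cm2


def days_to_saturation(capacity: float, captures_per_day: float) -> float:
    """Days until the board reaches capacity at a constant capture rate."""
    if captures_per_day <= 0:
        return math.inf
    return capacity / captures_per_day


# Hypothetical board: 100 cm2 usable, 0.5 cm2 per insect, usable up to 30% coverage.
capacity = board_capacity(area_cm2=100, footprint_cm2=0.5, max_coverage=0.3)

# Low-prevalence surveillance site vs. an outbreak (hypothetical capture rates).
for label, rate in [("low prevalence", 0.5), ("outbreak", 20)]:
    print(f"{label}: {days_to_saturation(capacity, rate):.0f} days")
```

Under these assumptions the same board lasts about four months at a low-prevalence site but only a few days during an outbreak, which is why saturation matters mainly if the e-trap is used for monitoring established populations.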

Smart-traps that count a single attracted FF species in endemic areas are already in use and have been commercialized (see, for instance, https://rapidaim.io/). These traps are used mainly for monitoring and are combined with decision support systems, giving farmers and consulting companies the ability to detect FF hot spots, forecast population trends and apply mitigation measures in time to protect their crops (Schellhorn and Jones 2021). These systems are a good choice for locations with endemic populations of a single, or dominant, FF pest species. If, however, the identity of the FF needs to be confirmed, camera-based smart-traps are a better option.

Camera-based smart-traps can be used, for example, to certify FF-free orchards or low-prevalence systems (Anderson et al. 2017). They can also be valuable to National Plant Protection Organizations (NPPOs) dealing with surveillance of alien FF pests, allowing them to optimize surveillance costs and effort. In this sense, early talks with potential NPPO users highlighted their interest in an initial application of the early warning system using the e-trap in strategic locations, where information on interceptions is highly critical, and/or in remote areas that are difficult to access and very costly to survey. In camera-based surveillance systems, adding image analysis capabilities can greatly enhance the power of these devices as a surveillance tool and provide early warning alerts to the appropriate end-user. However, electronic traps based on cameras and images have some drawbacks. Cost may be an important limitation (Preti et al. 2021): these systems require expensive electronic equipment that can substantially increase costs, driving small and medium-sized farmers away from this technology. This limitation, however, is expected to become less important in the future as the costs of these electronic components continue to decline. In addition, the energy and power supply required to drive camera-based systems is another important limitation, one that has recently been solved in commercial smart-traps (Preti et al. 2021). Finally, Preti et al. (2021) point to a fundamental problem of image-based smart-traps: the lack of automatic image recognition and classification. The current study is a step toward solving this problem. Although image recognition at the species level is almost impossible, the developed code can provide good information at the FF genus level. This ability, together with other information on the status of FF pests in a given region and on trade patterns, can be integrated into the system, providing a good tool for early warning of exotic FF and for FF-free areas or low-prevalence orchards. In addition, because images can be inspected remotely by a specialist, the system provides an initial filter before scouts are sent to the field, especially in remote areas.
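The remote-inspection filter described above can be pictured as a simple triage rule applied to each detection returned by the image analysis. The sketch below is hypothetical throughout: it assumes the classifier reports a label and a confidence score for each detected insect, and the thresholds, class names, the `Detection` record and the `triage` function are illustrative choices, not part of the developed system.

```python
from dataclasses import dataclass

TARGET_FF = {"C. capitata", "B. dorsalis", "B. zonata"}


@dataclass
class Detection:
    trap_id: str
    label: str          # class predicted by the image classifier
    confidence: float   # assumed score in [0, 1]


def triage(det: Detection, alert_at: float = 0.9, review_below: float = 0.5) -> str:
    """Hypothetical routing rule: alert, specialist review, or log only."""
    if det.label in TARGET_FF and det.confidence >= alert_at:
        return "alert"              # confident target FF: notify the end-user
    if det.label in TARGET_FF or det.confidence < review_below:
        return "specialist_review"  # uncertain call: remote check before sending scouts
    return "log_only"               # confident non-target insect


for det in [Detection("T1", "B. zonata", 0.95),
            Detection("T1", "B. zonata", 0.60),
            Detection("T2", "non-target", 0.30),
            Detection("T2", "non-target", 0.85)]:
    print(det.trap_id, det.label, triage(det))
```

Routing low-confidence non-target calls to a specialist, rather than discarding them, reflects the point made above that information on interceptions is highly critical in exotic-FF surveillance, so a possible missed target is worth an extra remote image check.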

Overall, the e-trap prototype developed in this study showed satisfactory performance in capturing, identifying and counting adults of three major invasive FF species in field conditions. The prototype is ready for the next step: evaluation by stakeholders in FF management for further upscaling and possible commercialization, where dedicated hardware could be incorporated to substantially reduce costs. We believe that our e-trap (at this stage, a prototype) can become a major tool for detecting FF worldwide, providing timely, accurate and economically feasible information to National Plant Protection Organizations, growers and the fresh fruit trading industry.

Author contributions

DN conceived and coordinated the study. GC coordinated the development of the classification code, supported by YD, AS and YA. VA, BS and B-CD coordinated the development of the e-trap electronics and digitization. AM, LS, EB, MW, AE, MC, AS, YA and DN helped plan field work in the different locations and collected field data. DN wrote the first draft of the manuscript, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

References

Anderson C, Low-Choy S, Whittle P, Taylor S, Gambley C, Smith L, Gillespie P, Locker H, David R, Dominiak B (2017) Australian plant biosecurity surveillance systems. Crop Prot 100:8–20. https://doi.org/10.1080/00779962.2019.1707343

Bhardwaj S, Mittal A (2012) A survey on various edge detector techniques. Procedia Technol 4:220–226. https://doi.org/10.1016/j.protcy.2012.05.033

Bottou L (2010) Large-scale machine learning with stochastic gradient descent. In: Proceedings of COMPSTAT'2010. Physica-Verlag, Heidelberg, pp 177–186. https://doi.org/10.1007/978-3-7908-2604-3_16

Canny J (1986) A computational approach to edge detection. IEEE Trans Pattern Anal Mach Intell 8(6):679–698. https://doi.org/10.1109/TPAMI.1986.4767851

Cardim Ferreira Lima M, Damascena de Almeida Leandro ME, Valero C, Pereira Coronel LC, Gonzalves Bazzo CO (2020) Automatic detection and monitoring of insect pests—a review. Agriculture 10:161. https://doi.org/10.3390/agriculture10050161

Deqin X, Qiumei Y, Junqian F, Xiaohui D, Jianzhao F, Yaowen Y, Yongyue L (2016) A multitarget trapping and tracking algorithm for Bactrocera dorsalis based on cost model. Comput Electron Agric 123:224–231. https://www.sciencedirect.com/science/article/pii/S0168169916300680

Diamantidis AD, Papadopoulos NT, Nakas CT, Wu S, Muller H-G, Carey JR (2009) Life history evolution in a globally invading tephritid: patterns of survival and reproduction in medflies from six world regions. Biol J Linn Soc 97:106–117. https://academic.oup.com/biolinnean/article/97/1/106/2448052

Egartner A, Lethmayer C, Gottsberger RA, Blümel S (2019) Survey on Bactrocera spp. (Tephritidae, Diptera) in Austria. Bull OEPP/EPPO Bull 49(3):578–584. https://doi.org/10.1111/epp.12604

Follett PA, Neven LG (2006) Current trends in quarantine entomology. Annu Rev Entomol 51:359–385. https://doi.org/10.1146/annurev.ento.49.061802.123314

Gilioli G, Sperandio G, Colturato M, Pasquali S, Gervasio P, Wilstermann A, Raja DA, Schrader G (2021) Non-linear physiological responses to climate change: the case of Ceratitis capitata distribution and abundance in Europe. Biol Invasions. https://doi.org/10.1007/s10530-021-02639-9

Goldshtein E, Cohen Y, Hetzroni A, Gazit Y, Timar D, Rosenfeld L, Grinshpon Y, Hoffman A, Mizrach A (2017) Development of an automatic monitoring trap for Mediterranean fruit fly (Ceratitis capitata) to optimize control applications frequency. Comput Electron Agric 139:115–125. https://doi.org/10.1016/j.compag.2017.04.022

Guillemaud T, Ciosi M, Lombaert E, Estoup A (2011) Biological invasions in agricultural settings: insights from evolutionary biology and population genetics. Comptes Rendus Biol 334:237–246. https://www.sciencedirect.com/science/article/pii/S1631069110002970

Huang R, Yao T, Zhan C, Zhang G, Zheng Y (2021) A motor-driven and computer vision-based intelligent E-trap for monitoring insect citrus flies. Agriculture 11:460. https://www.mdpi.com/2077-0472/11/5/460

IAEA (2019) Shielding the Middle East against invasive pests through good will and regional cooperation. Insect Pest Control Newslett 93:14. https://www.iaea.org/publications/13566/insect-pest-control-newsletter-no-93-july-2019

Ioannou CS, Papanastasiou SA, Zarpas KD, Miranda MA, Sciarretta A, Nestel D, Papadopoulos NT (2019) Development and field testing of a Spatial Decision Support System to control populations of the European Cherry fruit fly, Rhagoletis cerasi, in commercial orchards. Agronomy 9:568. https://doi.org/10.3390/agronomy9100568

Jang EB, Enkerlin W, Miller C, Reyes-Flores J (2014) Trapping related to phytosanitary status and trade. In: Shelly T, Epsky N, Jang EB, Reyes-Flores J, Vargas R (eds) Trapping and the detection, control, and regulation of Tephritid fruit flies. Springer, Cham, pp. 589–608. https://doi.org/10.1007/978-94-017-9193-9_1

Jiang J-E, Tseng C-L, Lu F-M, Yang E-C, Wu Z-S, Chen C-P, Lin S-H, Lin K-C, Liao C-S (2008) A GSM-based remote wireless automation monitoring system for field information: A case study for ecological monitoring of the oriental fruit fly, Bactrocera dorsalis (Hendel). Comput Electron Agric 62:243–259. https://doi.org/10.1016/j.compag.2008.01.005

Jung J, Maeda M, Chang A, Bhandari M, Ashapure A, Landivar-Bowles J (2021) The potential of remote sensing and artificial intelligence as tools to improve the resilience of agricultural production systems. Curr Opin Biotechnol 70:15–22. https://doi.org/10.1016/j.copbio.2020.09.003

Liao M-S, Chuang C-L, Lin T-S, Chen C-P, Zheng X-Y, Chen P-O, Liao K-C, Jiang J-E (2012) Development of an autonomous early warning system for Bactrocera dorsalis (Hendel) outbreaks in remote fruit orchards. Comput Electron Agric 88:1–12. https://doi.org/10.1016/j.compag.2012.06.008

Lockwood JL, Hoopes MF, Marchetti MP (2007) Invasion ecology. Blackwell Publishing, Malden, p. 304

Miranda MA, Barcelo C, Valdes F, Feliu JF, Nestel D, Papadopoulos N, Sciarretta A, Ruiz M, Alorda B (2019) Developing and implementation of Decision Support System (DSS) for the control of the olive fly, Bactrocera oleae, in Mediterranean olive orchards. Agronomy 9:620. https://doi.org/10.3390/agronomy9100620

Nestel D, Cohen Y, Shaked B, Alchanatis V, Nemny-Lavy E, Miranda MA, Sciarretta A, Papadopoulos NT (2019) An integrated decision support system for environmentally-friendly management of the Ethiopian fruit fly in greenhouse crops. Agronomy 9:459. https://doi.org/10.3390/agronomy9080459

Nugnes F, Russo E, Viggiani G, Bernardo U (2018) First record of an invasive fruit fly belonging to Bactrocera dorsalis complex (Diptera: Tephritidae) in Europe. Insects 9:182. https://www.mdpi.com/2075-4450/9/4/182

Padilla R, Passos WL, Dias TL, Netto SL, da Silva EA (2021) A comparative analysis of object detection metrics with a companion open-source toolkit. Electronics 10(3):279. https://doi.org/10.3390/electronics10030279

Papadopoulos NT, Plant RE, Carey JR (2013) From trickle to flood: the large-scale, cryptic invasion of California by tropical fruit flies. Proc R Soc B 280:20131466. https://doi.org/10.1098/rspb.2013.1466

Pimentel D (2011) Biological invasions: economic and environmental costs of alien plant, animal and microbe species, 2nd edn. CRC Press, Taylor and Francis Group, Boca Raton, p. 446

Potamitis I, Rigakis I, Vidakis N, Petousis M, Weber M (2018) Affordable bimodal optical sensors to spread the use of automated insect monitoring. J Sensors, ID 3949415. https://doi.org/10.1155/2018/3949415

Preti M, Verheggen F, Angeli S (2021) Insect pest monitoring with camera-equipped traps: strengths and limitations. J Pest Sci 94:203–217. https://doi.org/10.1007/s10340-020-01309-4

Qin Y, Paini DR, Wang C, Fang Y, Li Z (2015) Global establishment risk of economically important fruit fly species (Tephritidae). PLoS ONE 10: e0116424. https://doi.org/10.1371/journal.pone.0116424

Rassati D, Faccoli M, Chinellato F, Hardwick S, Suckling DM, Battisti A (2016) Web‐based automatic traps for early detection of alien wood‐boring beetles. Entomol Exp Appl 160(1):91–95. https://doi.org/10.1111/eea.12453

Ren S, He K, Girshick R, Sun J (2015) Faster R-CNN: towards real-time object detection with region proposal networks. Adv Neural Inform Process Syst 28:91–99. https://doi.org/10.48550/arXiv.1506.01497

Schellhorn NA, Jones LK (2021) Real-time insect detection and monitoring: breaking barriers to area-wide integrated management of insect pests. In: Hendrichs J, Pereira R, Vreysen MJB (eds) Area-wide integrated pest management: development and field applications. CRC Press, Boca Raton, pp. 889–902. https://doi.org/10.1201/9781003169239-51

Sciarretta A, Tabilio MR, Amore A, Colacci M, Miranda MA, Nestel D, Papadopoulos NT, Trematerra P (2019) Defining and evaluating a Decision Support System (DSS) for the precise pest management of the Mediterranean fruit fly, Ceratitis capitata, at the farm level. Agronomy 9:608. https://doi.org/10.3390/agronomy9100608

Shelly T, Nishimoto J, Kurashima R (2014) Distance-dependent capture probability of male Mediterranean fruit flies in trimedlure-baited traps in Hawaii. J Asia-Pac Entomol 17:525–530. https://www.sciencedirect.com/science/article/pii/S1226861514000612

Shorten C, Khoshgoftaar TM (2019) A survey on image data augmentation for deep learning. J Big Data 6:60. https://doi.org/10.1186/s40537-019-0197-0

Steyskal GC (1977) History and use of the McPhail trap. Florida Entomol 60:11–16. https://www.jstor.org/stable/3494476

Stone M (1974) Cross-validatory choice and assessment of statistical predictions. J R Stat Soc Ser B (Methodological) 36(2):111–147. https://doi.org/10.1111/j.2517-6161.1974.tb00994.x

Szyniszewska AM, Tatem AJ (2014) Global assessment of seasonal potential distribution of Mediterranean fruit fly, Ceratitis capitata (Diptera: Tephritidae). PLoS ONE 9:e111582. https://doi.org/10.1371/journal.pone.0111582

White IM, Elson-Harris M (1992) Fruit flies of economic importance: their identification and bionomics. CAB International, Oxon, p. 601

Acknowledgements

This project was partially financed by the European Union Horizon 2020 Research and Innovation Program (FF-IPM) (Grant agreement No 818184) and by the International Atomic Energy Agency Research Contract (No. 22412). We thank Maria Rosaria Tabilio (Research Centre for Olive, Citrus and Tree Fruit, CREA, Italy) and Umberto Bernardo (Institute for the Sustainable Protection of Plants, CNR, Italy) for their assistance in identifying locations and deploying e-traps in Italy. We are very grateful to NC Manoukis, USDA-ARS, Hilo, Hawaii, USA, and two anonymous reviewers for their valuable suggestions on a previous version of this manuscript.

Additional information

Communicated by Antonio Biondi.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Diller, Y., Shamsian, A., Shaked, B. et al. A real-time remote surveillance system for fruit flies of economic importance: sensitivity and image analysis. J Pest Sci 96, 611–622 (2023). https://doi.org/10.1007/s10340-022-01528-x

DOI: https://doi.org/10.1007/s10340-022-01528-x