Abstract

Climate change is defined as the shift in climate patterns mainly caused by greenhouse gas emissions from natural systems and human activities. So far, anthropogenic activities have caused about 1.0 °C of global warming above the pre-industrial level, and warming is likely to reach 1.5 °C between 2030 and 2052 if current emission rates persist. In 2018, the world experienced 315 natural disasters, most of them climate-related. Approximately 68.5 million people were affected, and economic losses amounted to $131.7 billion, of which storms, floods, wildfires and droughts accounted for approximately 93%. Economic losses attributed to wildfires in 2018 alone are almost equal to the collective wildfire losses of the past decade, which is quite alarming. Furthermore, food, water, health, ecosystems, human habitat and infrastructure have been identified as the most vulnerable sectors under climate attack. In 2015, the Paris agreement was adopted with the main objective of holding the global temperature increase to well below 2 °C above pre-industrial levels and pursuing efforts to limit the increase to 1.5 °C. This article reviews the main strategies for climate change abatement, namely conventional mitigation, negative emissions and radiative forcing geoengineering. Conventional mitigation technologies focus on reducing fossil-based CO2 emissions. Negative emissions technologies aim to capture and sequester atmospheric carbon to reduce carbon dioxide levels. Finally, radiative forcing geoengineering techniques alter the earth’s radiative energy budget to stabilize or reduce global temperatures. It is evident that conventional mitigation efforts alone are not sufficient to meet the targets stipulated by the Paris agreement; therefore, the use of alternative routes appears inevitable. 
While various technologies presented may still be at an early stage of development, biogenic-based sequestration techniques are to a certain extent mature and can be deployed immediately.


Introduction

Status of climate change

Climate change is defined as the shift in climate patterns mainly caused by greenhouse gas emissions. Greenhouse gas emissions cause heat to be trapped by the earth’s atmosphere, and this has been the main driving force behind global warming. The main sources of such emissions are natural systems and human activities. Natural systems include forest fires, earthquakes, oceans, permafrost, wetlands, mud volcanoes and volcanoes (Yue and Gao 2018), while human activities are predominantly related to energy production, industrial activities and those related to forestry, land use and land-use change (Edenhofer et al. 2014). Yue and Gao statistically analysed global greenhouse gas emissions from natural systems and anthropogenic activities and concluded that the earth’s natural system can be considered as self-balancing and that anthropogenic emissions add extra pressure to the earth system (Yue and Gao 2018).

GHG emissions overview

The greenhouse gases widely discussed in the literature and defined by the Kyoto protocol are carbon dioxide (CO2), methane (CH4), nitrous oxide (N2O), and the fluorinated gases such as hydrofluorocarbons (HFCs), perfluorocarbons (PFCs) and sulphur hexafluoride (SF6) (UNFCCC 2008). According to the emissions gap report prepared by the United Nations Environment Programme (UNEP) in 2019, total greenhouse gas emissions in 2018 amounted to 55.3 GtCO2e, of which 37.5 GtCO2 are attributed to fossil CO2 emissions from energy production and industrial activities. Both total greenhouse gas emissions and fossil CO2 emissions increased by 2% in 2018, compared with an average annual increase of 1.5% over the past decade. The rise in fossil CO2 emissions in 2018 was mainly driven by higher energy demand. Furthermore, emissions related to land-use change amounted to 3.5 GtCO2 in 2018 (UNEP 2019). Together, fossil-based and land-use-related CO2 emissions accounted for approximately 74% of total global greenhouse gas emissions in 2018. Methane (CH4), another significant greenhouse gas, saw emissions rise by 1.7% in 2018, compared with an average annual increase of 1.3% over the past decade. Nitrous oxide (N2O) emissions, which are mainly influenced by agricultural and industrial activities, increased by 0.8% in 2018, compared with a 1% annual increase over the past decade. Fluorinated gas emissions, however, rose significantly in 2018, by 6.1% compared with a 4.6% annual increase over the past decade (UNEP 2019). To put these numbers into perspective, a recent Intergovernmental Panel on Climate Change (IPCC) report estimated that anthropogenic activities have so far caused about 1.0 °C of global warming above the pre-industrial level, with a likely range of 0.8–1.2 °C. The report states that global warming is likely to reach 1.5 °C between 2030 and 2052 if current emission rates persist (IPCC 2018).
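The approximately 74% CO2 share quoted above can be reproduced from the three UNEP (2019) totals. The Python snippet below is only an arithmetic check. It assumes, as the 74% figure implies, that the 55.3 GtCO2e total already includes the land-use CO2; the variable names are illustrative.

```python
# 2018 emissions as reported in UNEP (2019).
TOTAL_GHG_GTCO2E = 55.3  # total greenhouse gas emissions, GtCO2e (assumed to include land use)
FOSSIL_CO2_GT = 37.5     # fossil CO2 from energy production and industry, GtCO2
LAND_USE_CO2_GT = 3.5    # CO2 from land-use change, GtCO2


def co2_share(fossil: float, land_use: float, total: float) -> float:
    """Fraction of total GHG emissions made up of fossil plus land-use CO2."""
    return (fossil + land_use) / total


share = co2_share(FOSSIL_CO2_GT, LAND_USE_CO2_GT, TOTAL_GHG_GTCO2E)
non_co2 = TOTAL_GHG_GTCO2E - FOSSIL_CO2_GT - LAND_USE_CO2_GT

print(f"CO2 share of 2018 GHG emissions: {share:.1%}")         # 74.1%
print(f"Remaining (non-CO2) emissions: {non_co2:.1f} GtCO2e")  # 14.3
```

The remaining 14.3 GtCO2e is the combined contribution of methane, nitrous oxide and the fluorinated gases, expressed in CO2 equivalents.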

Climate change impacts, risks and vulnerabilities

An understanding of the severe impact of climate change on natural and human systems, as well as the associated risks and vulnerabilities, is an important starting point for comprehending the current state of climate emergency. Changes in climate indicators, namely temperature, precipitation, sea-level rise, ocean acidification and extreme weather conditions, have been highlighted in a recent report by the United Nations Climate Change Secretariat (UNCCS). Reported climate hazards included droughts, floods, hurricanes, severe storms, heatwaves, wildfires, cold spells and landslides (UNCCS 2019). According to the Centre for Research on the Epidemiology of Disasters (CRED), the world experienced 315 natural disasters in 2018, most of them climate-related. These included 16 droughts, 26 extreme temperature events, 127 floods, 13 landslides, 95 storms and 10 wildfires. The number of people affected by natural disasters in 2018 was 68.5 million, with floods, storms and droughts accounting for 94% of the total. In terms of economic losses, natural disasters cost a total of $131.7 billion in 2018, with storms ($70.8B), floods ($19.7B), wildfires ($22.8B) and droughts ($9.7B) accounting for approximately 93% of the total. CRED also provides data on disasters over the past decade, which show even higher annual averages in almost all areas, except for wildfires. The economic losses attributed to wildfires in 2018 alone are approximately equal to the collective wildfire losses incurred over the past decade, which is quite alarming (CRED 2019). Moreover, wildfires are a direct source of CO2 emissions. Although wildfires are part of the natural system, human-induced emissions are clearly interfering with and amplifying the impact of natural system emissions. It is evident that human-induced climate change is a major driving force behind many natural disasters occurring globally.
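The CRED (2019) figures above can be cross-checked with a few lines of Python. The sketch only restates the counts and losses given in the text; it shows that the six itemized event types account for 287 of the 315 disasters (the remaining 28 are not itemized here) and that the four listed hazard types account for about 93% of losses.

```python
# CRED (2019) counts and losses for 2018, as quoted in the text.
EVENTS_2018 = {
    "drought": 16,
    "extreme temperature": 26,
    "flood": 127,
    "landslide": 13,
    "storm": 95,
    "wildfire": 10,
}
TOTAL_EVENTS_2018 = 315

LOSSES_2018_BN_USD = {"storm": 70.8, "flood": 19.7, "wildfire": 22.8, "drought": 9.7}
TOTAL_LOSSES_2018_BN_USD = 131.7

listed_events = sum(EVENTS_2018.values())
other_events = TOTAL_EVENTS_2018 - listed_events
loss_share = sum(LOSSES_2018_BN_USD.values()) / TOTAL_LOSSES_2018_BN_USD

print(f"Itemized events: {listed_events}, not itemized: {other_events}")       # 287, 28
print(f"Losses from storms, floods, wildfires and droughts: {loss_share:.1%}")  # 93.4%
```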

Furthermore, climate risks such as temperature shifts, precipitation variability, changing seasonal patterns, changes in disease distribution, desertification, ocean-related impacts and soil and coastal degradation contribute to vulnerability across multiple sectors in many countries (UNCCS 2019). Sarkodie and Strezov empirically examined the climate change vulnerability and adaptation readiness of 192 United Nations countries and concluded that food, water, health, ecosystems, human habitat and infrastructure are the most vulnerable sectors under climate attack, while pointing out that Africa is the region most vulnerable to climate variability (Sarkodie and Strezov 2019). It is also important to note the interconnected nature of these sectors and their associated impacts.

The 15th edition of the Global Risks Report, published by the World Economic Forum in 2020, presents a number of climate realities and lays out the areas most affected. The risks include loss of life due to health hazards and natural disasters, as well as excessive stress on ecosystems, especially aquatic and marine systems. Food and water security are also highly affected. Increased migration is anticipated due to extreme weather, disasters and rising sea levels. Geopolitical tensions and conflicts are likely to arise as countries seek to extract resources along water and land boundaries. The report also discusses the negative financial impact on capital markets as systemic risks soar. Finally, the impact on trade and supply chains is presented (WEF 2020).

An assessment presented in a recent Intergovernmental Panel on Climate Change (IPCC) special report covered the impacts and projected risks associated with two levels of global warming: 1.5 °C and 2 °C. The report investigated the negative impact of global warming on freshwater sources, food security and food production systems, ecosystems, human health and urbanization, as well as on poverty and the changing structure of communities. It also investigated the impact of climate change on key economic sectors such as tourism, energy and transportation. For most of the impacts assessed, the associated risks are lower at 1.5 °C than at 2 °C of warming. Global warming is likely to reach 1.5 °C within the next three decades, and further warming beyond this point would amplify risks; for example, water stress would carry double the risk under 2 °C compared with 1.5 °C. A 70% increase in the population affected by fluvial floods is projected under the 2 °C scenario compared with 1.5 °C, especially in the USA, Europe and Asia. Rates of species extinction in terrestrial ecosystems are projected to double or triple under 2 °C compared with 1.5 °C (IPCC 2018). It follows that the world is currently in a state of climate emergency.

Global climate action

Acknowledgement of climate change realities began in 1979, when the First World Climate Conference was held in Geneva. The conference was convened by the World Meteorological Organization in response to climatic events observed over the previous decade. Its main purpose was to invite technical and scientific experts to review the latest knowledge on climate change and variability caused by natural and human systems, and to assess future impacts and risks in order to formulate recommendations (WMO 1979). This was possibly the first conference of its kind to discuss the adverse effects of climate change. In 1988, the Intergovernmental Panel on Climate Change (IPCC) was set up by the World Meteorological Organization in collaboration with the United Nations Environment Programme (UNEP) to provide governments and official bodies with scientific knowledge and information that can be used to formulate climate-related policies (IPCC 2013).

Perhaps the most critical step in terms of action was the adoption of the United Nations Framework Convention on Climate Change (UNFCCC) in 1992, which entered into force in 1994. Since then, the UNFCCC has been the main driver and facilitator of climate action globally. The main objective of the convention is to stabilize greenhouse gas concentrations in the atmosphere so as to prevent severe impacts on the climate system. The convention set out commitments for all parties, placing major responsibilities on developed countries to implement national policies that limit anthropogenic emissions and enhance greenhouse gas sinks, with the aim of returning emissions to their 1990 levels by the year 2000. The convention also committed developed country parties to assist vulnerable developing country parties financially and technologically in taking climate action. It established the structure, reporting requirements and mechanism for financial resources, fundamentally setting the scene for global climate policy (UN 1992). The convention is currently ratified by 197 countries (UNCCS 2019).

During the third UNFCCC Conference of the Parties (COP-3) in 1997, the Kyoto protocol was adopted; it entered into force in 2005. The Kyoto protocol introduced emission reduction commitments for developed countries over a five-year commitment period from 2008 to 2012. The protocol laid out the related policies and the monitoring and reporting systems, and introduced three market-based mechanisms to achieve those targets. Two of these are project-based: the clean development mechanism and joint implementation. The clean development mechanism allows developed country parties to invest in and develop emission reduction projects in developing countries, driving sustainable development in the host country while offsetting the carbon emissions of the investing party. Joint implementation allows developed country parties to develop similar projects in other developed countries that are parties to the protocol, offsetting the investing party’s excess emissions. The third, the emissions trading mechanism, provides a platform through which protocol members that emit less than their assigned amounts can sell the surplus to members that exceed their limits (UNFCCC 1997). Emission reductions have mainly been achieved through renewable energy, energy efficiency and afforestation/reforestation projects.

The Kyoto protocol defines four types of emission units, each representing one metric ton of CO2 equivalent; all of them are tradeable (UNFCCC 2005):

1. Certified emissions reduction unit, obtained through clean development mechanism projects.
2. Emission reduction unit, obtained through joint implementation projects.
3. Assigned amount unit, obtained through the trading of unused assigned emissions between protocol parties.
4. Removal unit, obtained through reforestation-related projects.
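Because every Kyoto unit represents the same one metric ton of CO2e, a party’s holdings can be modelled as a simple count per unit type. The Python sketch below is a toy ledger for illustration only: `KyotoUnit`, `holdings_tco2e` and `transfer` are hypothetical names, and the code ignores the eligibility and accounting rules of the actual Kyoto registries.

```python
from __future__ import annotations

from enum import Enum


class KyotoUnit(Enum):
    """The four Kyoto protocol unit types; each represents one metric ton of CO2e."""
    CER = "certified emission reduction unit"  # clean development mechanism projects
    ERU = "emission reduction unit"            # joint implementation projects
    AAU = "assigned amount unit"               # assigned amounts traded between parties
    RMU = "removal unit"                       # reforestation-related projects


TONNES_CO2E_PER_UNIT = 1.0


def holdings_tco2e(account: dict[KyotoUnit, int]) -> float:
    """Tonnes of CO2e represented by an account, regardless of unit type."""
    return TONNES_CO2E_PER_UNIT * sum(account.values())


def transfer(seller: dict[KyotoUnit, int], buyer: dict[KyotoUnit, int],
             unit: KyotoUnit, quantity: int) -> None:
    """Hypothetical simplified trade: move `quantity` units from seller to buyer."""
    if quantity <= 0 or seller.get(unit, 0) < quantity:
        raise ValueError("invalid quantity or insufficient holdings")
    seller[unit] -= quantity
    buyer[unit] = buyer.get(unit, 0) + quantity


# Hypothetical scenario: party A has surplus AAUs and sells some to party B.
party_a = {KyotoUnit.AAU: 1_000}
party_b = {KyotoUnit.AAU: 800, KyotoUnit.CER: 50}
transfer(party_a, party_b, KyotoUnit.AAU, 150)
print(holdings_tco2e(party_a), holdings_tco2e(party_b))  # 850.0 1000.0
```

Keeping the unit type as a key, rather than collapsing everything into a single tonnage, preserves the information about which mechanism generated each unit.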

The Kyoto units and general framework laid the structural foundation of a carbon emissions market and the concept of carbon pricing. Many national and regional governments have introduced emissions trading schemes, some mandatory and others voluntary; in some cases, these schemes are linked to Kyoto commitments and regulations. The largest emissions trading scheme introduced thus far is the European Union emissions trading scheme (Perdan and Azapagic 2011). Villoria-Sáez et al. empirically investigated the effect of greenhouse gas emissions trading schemes on actual emission reductions in six major emitting regions. They found that scheme implementation can reduce greenhouse gas emissions by approximately 1.58% annually, and that after 10 years of implementation, emissions can be approximately 23.43% lower than in a scenario without the scheme (Villoria-Sáez et al. 2016). Another emission abatement instrument widely discussed in the literature is carbon taxation. There is growing scientific evidence that carbon taxation is an effective instrument for reducing greenhouse gas emissions; however, political opposition from the public and industry is the main reason many countries have delayed adopting such a mechanism (Wang et al. 2016).

In 2012, the Doha amendment to the Kyoto protocol was adopted, mainly establishing a second commitment period from 2013 to 2020 and updating emission reduction targets. The amendment set a greenhouse gas emission reduction target of at least 18% below 1990 levels. At the time of writing, the amendment had not yet entered into force, as it had not been ratified by the minimum number of parties required (UNFCCC 2012).

During the twenty-first UNFCCC Conference of the Parties (COP-21), held in Paris in 2015, the Paris agreement was adopted; it entered into force in 2016. The agreement added further objectives, commitments, enhanced compliance and reporting rules, and support mechanisms to the existing framework for combating climate change. Its main objective is to hold the global temperature increase to well below 2 °C above pre-industrial levels and to pursue efforts to limit the increase to 1.5 °C. The agreement aims for greenhouse gas emissions to peak globally as soon as possible, so as to achieve a balance between human-induced emission sources and greenhouse gas sinks and reservoirs between 2050 and 2100. It also introduced new binding commitments, requiring all parties to deliver nationally determined contributions and to implement national measures to achieve, and attempt to exceed, those commitments. Enhanced transparency, compliance and clear reporting and communication are advocated under the agreement, which also encourages voluntary cooperation between parties beyond mandated initiatives. Moreover, the agreement mandates financial and technological support, as well as capacity building initiatives, for developing countries. These obligations are to be undertaken by developed country parties to promote sustainable development and establish adequate mitigation and adaptation support measures within vulnerable countries. Perhaps one of the most important goals established under the agreement is that of adaptation and adaptive capacity building in relation to the temperature goal (UN 2015).

Under Article 6 of the agreement, two international market mechanisms were introduced: cooperative approaches and the sustainable development mechanism. All parties may use these mechanisms to meet their nationally determined contributions. Cooperative approaches are a framework that allows parties to use internationally transferred mitigation outcomes (ITMOs) to meet their nationally determined contribution goals and to stimulate sustainable development. The sustainable development mechanism, on the other hand, is a new approach that promotes mitigation and sustainable development and is perceived as the successor of the clean development mechanism. Both mechanisms remain the subject of much debate and negotiation (Gao et al. 2019).

Nieto et al. conducted an in-depth systematic analysis of the effectiveness of Paris agreement policies by evaluating 161 intended nationally determined contributions (INDCs) representing 188 countries. The study investigated sectoral policies in each of these countries and quantified emissions under the INDCs. It concluded that, in the best-case scenario, annual global emissions in 2030 would be approximately 19.3% higher than in the base period (2005–2015). In comparison, a 31.5% increase in global emissions is projected if no measures are taken. If the predicted best-case level of emissions were maintained between 2030 and 2050, a temperature increase of at least 3 °C would be realized, and a 4 °C increase would be assured if annual emissions continued to increase (Nieto et al. 2018).
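Because both of Nieto et al.’s projections are expressed relative to the same 2005–2015 base period, they can be compared directly. The short Python calculation below shows that the best-case INDCs would leave 2030 emissions roughly 9% below the no-measures projection while still well above the base period; this derived percentage is simple arithmetic on the two quoted figures, not a result reported by Nieto et al.

```python
# Nieto et al. (2018): projected 2030 global emissions relative to the 2005-2015 base period.
BEST_CASE_INCREASE = 0.193    # best case under the INDCs
NO_MEASURES_INCREASE = 0.315  # no mitigation measures taken


def reduction_vs_baseline(policy_increase: float, baseline_increase: float) -> float:
    """Fraction by which policy-case emissions sit below baseline-case emissions.

    Both inputs are fractional increases over the same base period, so the
    ratio of the (1 + increase) terms compares absolute emission levels.
    """
    return 1 - (1 + policy_increase) / (1 + baseline_increase)


effect = reduction_vs_baseline(BEST_CASE_INCREASE, NO_MEASURES_INCREASE)
print(f"Best-case INDC emissions in 2030 vs no measures: {effect:.1%} lower")  # 9.3% lower
```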

To meet the 1.5 °C target by the end of the century, the IPCC stated that greenhouse gas emissions should be limited to 25–30 GtCO2e year−1 by 2030. In comparison, the current unconditional nationally determined contributions for 2030 are estimated at 52–58 GtCO2e year−1. Based on pathway modelling for a 1.5 °C warming scenario, net anthropogenic CO2 emissions must decline by 45% from 2010 levels by 2030, and net-zero emissions must be achieved by 2050. To limit global warming to 2 °C by the end of the century, net anthropogenic CO2 emissions should decline by approximately 25% from 2010 levels by 2030, and net-zero emissions should be achieved by 2070 (IPCC 2018). Growing evidence confirms that current mitigation efforts and future emission commitments are not sufficient to achieve the temperature goals set by the Paris agreement (Nieto et al. 2018; Lawrence et al. 2018). Further measures and new abatement routes must be explored if these goals are to be achieved.
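The distance between the two 2030 ranges quoted above can be bounded with simple interval arithmetic. The Python sketch below uses only those two ranges; the function name `gap_bounds` is an illustrative choice.

```python
from __future__ import annotations

# 2030 annual emissions in GtCO2e/yr, as quoted in the text.
PATHWAY_1_5C_2030 = (25.0, 30.0)       # level consistent with 1.5 °C (IPCC 2018)
UNCONDITIONAL_NDC_2030 = (52.0, 58.0)  # projected under current unconditional NDCs


def gap_bounds(projected: tuple[float, float],
               target: tuple[float, float]) -> tuple[float, float]:
    """Smallest and largest difference between a projected range and a target range."""
    smallest = projected[0] - target[1]  # lowest projection vs most lenient target
    largest = projected[1] - target[0]   # highest projection vs strictest target
    return smallest, largest


low, high = gap_bounds(UNCONDITIONAL_NDC_2030, PATHWAY_1_5C_2030)
print(f"2030 gap to a 1.5 °C pathway: {low:.0f}-{high:.0f} GtCO2e/yr")  # 22-33
```

A gap of 22–33 GtCO2e per year is of the same order as the 2030 target level itself, which illustrates why current commitments are considered insufficient.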

Climate change mitigation strategies

Introduction

There are three main climate change mitigation approaches discussed throughout the literature. First, conventional mitigation efforts employ decarbonization technologies and techniques that reduce CO2 emissions, such as renewable energy, fuel switching, efficiency gains, nuclear power, and carbon capture, storage and utilization. Most of these technologies are well established and carry an acceptable level of managed risk (Ricke et al. 2017; Victor et al. 2018; Bataille et al. 2018; Mathy et al. 2018; Shinnar and Citro 2008; Bustreo et al. 2019).

A second route comprises a set of recently proposed technologies and methods that could be deployed to capture and sequester CO2 from the atmosphere; these are termed negative emissions technologies, also referred to as carbon dioxide removal methods (Ricke et al. 2017). The main negative emissions techniques widely discussed in the literature include bioenergy carbon capture and storage, biochar, enhanced weathering, direct air carbon capture and storage, ocean fertilization, ocean alkalinity enhancement, soil carbon sequestration, afforestation and reforestation, wetland construction and restoration, as well as alternative negative emissions utilization and storage methods such as mineral carbonation and using biomass in construction (Lawrence et al. 2018; Palmer 2019; McLaren 2012; Yan et al. 2019; McGlashan et al. 2012; Goglio et al. 2020; Lin 2019; Pires 2019; RoyalSociety 2018; Lenzi 2018).

Finally, a third route revolves around the principle of altering the earth’s radiation balance through the management of solar and terrestrial radiation. Such techniques are termed radiative forcing geoengineering technologies, and their main objective is temperature stabilization or reduction. Unlike negative emissions technologies, this is achieved without altering greenhouse gas concentrations in the atmosphere. The main radiative forcing geoengineering techniques discussed in the literature include stratospheric aerosol injection, marine sky brightening, cirrus cloud thinning, space-based mirrors, surface-based brightening and various radiation management techniques. All these techniques are still theoretical or at very early trial stages and carry considerable uncertainty and risk regarding practical large-scale deployment. At present, radiative forcing geoengineering techniques are not included in policy frameworks (Lawrence et al. 2018; Lockley et al. 2019).

Conventional mitigation technologies

As previously discussed, energy-related emissions are the main driver of increasing greenhouse gas concentrations in the atmosphere; hence, conventional mitigation technologies and efforts should focus on both the supply and demand sides of energy. Mitigation efforts primarily discussed in the literature cover technologies and techniques deployed in four main sectors: power on the supply side, and industry, transportation and buildings on the demand side. Within the power sector, decarbonization can be achieved through renewable energy, nuclear power, carbon capture and storage, and supply-side fuel switching to low-carbon fuels such as natural gas and renewable fuels. On the demand side, mitigation efforts include efficiency gains from energy-efficient processes and sector-specific technologies that reduce energy consumption, end-use fuel switching from fossil-based fuels to renewable fuels, and the integration of renewable power technologies into the energy matrix of these sectors (Mathy et al. 2018; Hache 2015). This section reviews the literature on decarbonization and efficiency technologies and techniques across these four sectors. Figure 1 depicts the conventional mitigation technologies and techniques discussed in the literature and critically reviewed in this paper.

Figure 1. Major decarbonization technologies which focus on the reduction of CO2 emissions related to the supply and demand sides of energy. Conventional mitigation technologies include renewable energy, nuclear power, carbon capture and storage (CCS) as well as utilization (CCU), fuel switching and efficiency gains. These technologies and techniques are mainly deployed in the power, industrial, transportation and building sectors.

Renewable energy

According to a recent global status report on renewables, the share of renewable energy from the total final energy consumption globally has been estimated at 18.1% in 2017 (REN21 2019). An array of modern renewable energy technologies is discussed throughout the literature. The most prominent technologies include photovoltaic solar power, concentrated solar power, solar thermal power for heating and cooling applications, onshore and offshore wind power, hydropower, marine power, geothermal power, biomass power and biofuels (Mathy et al. 2018; Shinnar and Citro 2008; Hache 2015; REN21 2019; Hussain et al. 2017; Østergaard et al. 2020; Shivakumar et al. 2019; Collura et al. 2006; Gude and Martinez-Guerra 2018; Akalın et al. 2017; Srivastava et al. 2017).

In terms of power production, renewable energy accounted for approximately 26.2% of global electricity production as of 2018. Hydropower accounted for 15.8%, wind power 5.5%, photovoltaic solar power 2.4% and biopower 2.2%, while geothermal, concentrated solar power and marine power together accounted for 0.46% (REN21 2019). While large-scale hydropower leads in terms of both generation capacity and production, there has been a significant capacity increase in photovoltaic solar power and onshore wind power over the past decade. By the end of 2018, global installed photovoltaic capacity had reached 505 GW, compared with 15 GW in 2008. Global installed wind capacity reached 591 GW in 2018, compared with 121 GW in 2008. Global biopower capacity was estimated at 130 GW in 2018, with total production of 581 TWh that year. China has maintained its position as the largest renewable energy producing country, from solar, wind and biomass sources. The share of renewable energy in global power capacity reached approximately 33% in 2018 (REN21 2019).
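Two standard metrics help put the REN21 (2019) capacity figures above in perspective: the compound annual growth rate (CAGR) of installed capacity, and the capacity factor implied by a year’s output. The Python sketch below applies both to the quoted numbers. The capacity factor uses year-end 2018 capacity as the denominator, so it is only an approximation, since capacity changed during the year.

```python
HOURS_PER_YEAR = 8760


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


def capacity_factor(energy_twh: float, capacity_gw: float) -> float:
    """Annual output as a fraction of running at full capacity all year."""
    return (energy_twh * 1_000) / (capacity_gw * HOURS_PER_YEAR)  # TWh -> GWh


# Installed capacity in 2008 and 2018 (GW) and 2018 biopower output, from REN21 (2019).
print(f"Solar PV: {cagr(15, 505, 10):.1%} per year")                 # 42.1% per year
print(f"Wind:     {cagr(121, 591, 10):.1%} per year")                # 17.2% per year
print(f"Biopower capacity factor: {capacity_factor(581, 130):.0%}")  # 51%
```

Photovoltaic capacity therefore grew at roughly 42% per year over the decade, about two and a half times the growth rate of wind capacity.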

Besides the power sector, renewable energy can be deployed in the industry, transportation and building sectors. Photovoltaic and thermal solar energy, as well as industrial end-use fuel switching to renewable fuels such as solid, liquid and gaseous biofuels for combined heat and power production, are examples of decarbonization through renewables. Buildings can also benefit from solar and biomass-based technologies for their power, heating and cooling requirements. In the transportation sector, end-use fuel switching is a key determinant of sector decarbonization. Examples of biofuels include biodiesel, first- and second-generation bioethanol, bio-hydrogen, bio-methane and bio-dimethyl ether (bio-DME) (Srivastava et al. 2020; Chauhan et al. 2009; Hajilary et al. 2019; Osman 2020). Furthermore, hydrogen produced through electrolysis using renewable energy is a potential renewable fuel for sector decarbonization. Another example of sector decarbonization through renewable energy deployment is electric vehicles powered by renewable electricity (Michalski et al. 2019). Other mitigation measures within these sectors are discussed further in the following section.

Variable renewables, such as solar and wind, are key technologies with significant decarbonization potential. One of their main technological challenges is the intermittency, or variability, of their power production. This challenge is being addressed by integrating such technologies with storage, other renewable baseload sources and grid technologies. Sinsel et al. discuss four specific challenge areas related to variable renewables, namely quality, flow, stability and balance, and present a number of solutions that mainly revolve around flexibility and grid technologies for both distributed and centralized systems (Sinsel et al. 2020).
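To make the role of storage concrete, the Python sketch below balances a hypothetical solar-like supply profile against a flat demand hour by hour, with and without a battery. It is a toy greedy dispatch under stated assumptions (a single battery, a 0.9 charging efficiency with losses applied only when charging, and invented profile data), not a method from Sinsel et al. or a grid model.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Battery:
    capacity_mwh: float
    stored_mwh: float = 0.0
    charge_efficiency: float = 0.9  # assumed; losses are applied only when charging

    def charge(self, surplus_mwh: float) -> float:
        """Store as much surplus as fits and return the energy that is curtailed."""
        room = self.capacity_mwh - self.stored_mwh
        stored = min(surplus_mwh * self.charge_efficiency, room)
        self.stored_mwh += stored
        return surplus_mwh - stored / self.charge_efficiency

    def discharge(self, deficit_mwh: float) -> float:
        """Cover as much of the deficit as possible and return what remains unmet."""
        delivered = min(deficit_mwh, self.stored_mwh)
        self.stored_mwh -= delivered
        return deficit_mwh - delivered


def dispatch(supply: list[float], demand: list[float],
             battery: Battery) -> tuple[float, float]:
    """Greedy hour-by-hour balancing; returns (unmet demand, curtailed supply) in MWh."""
    unmet = curtailed = 0.0
    for s, d in zip(supply, demand):
        if s >= d:
            curtailed += battery.charge(s - d)
        else:
            unmet += battery.discharge(d - s)
    return unmet, curtailed


# Hypothetical solar-like profile over eight hours against a flat 30 MWh demand.
solar = [0, 0, 40, 80, 100, 60, 10, 0]
load = [30] * 8
print(dispatch(solar, load, Battery(capacity_mwh=0)))    # (110.0, 160.0)
print(dispatch(solar, load, Battery(capacity_mwh=100)))  # unmet demand falls to 60 MWh
```

Without storage, 110 MWh of demand goes unmet while 160 MWh of midday surplus is curtailed. A 100 MWh battery shifts part of that surplus into the evening hours and cuts unmet demand to 60 MWh. The early-morning deficit remains because the battery starts empty, which is one reason storage is typically combined with other flexibility and grid measures.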

Economic, social and policy dimensions influence renewable energy technology innovation and deployment. Pitelis et al. investigated the choice of policy instruments and their effectiveness in driving renewable energy technology innovation in 21 Organisation for Economic Co-operation and Development (OECD) countries between 1994 and 2014. The study classified renewable energy policies into three categories: technology-push, demand-pull and systemic policy instruments. It then investigated the impact of each policy category on innovation activity for various renewable energy technologies: solar, wind, biomass, geothermal and hydro. The study concluded that policy instruments do not all have the same effect on renewable energy technologies and that each technology requires appropriate policies. However, it suggested that demand-pull policy instruments are more effective in driving renewable energy innovation than other policy types (Pitelis et al. 2019). On the barriers to and drivers of renewable energy deployment, Shivakumar et al. highlighted various dimensions that may hinder or enable renewable energy project development. The main points revolve around policy, access to finance, government stability and long-term intentions, administrative procedures, the presence or absence of a support framework, and the profitability of renewable energy investments (Shivakumar et al. 2019). Seetharaman et al. analysed the impact of various barriers on renewable energy deployment and confirmed that regulatory, social and technological barriers play a significant role. The research found no significant direct relationship between economic barriers and project deployment; however, because the economic dimension is interrelated with regulatory, social and technological barriers, it affects deployment indirectly (Seetharaman et al. 2019).

Kim and Park empirically investigated the relationship between financial accessibility and renewable energy deployment by analysing a panel data set of 30 countries over a 13-year period from 2000 to 2013. Statistical evidence shows a positive impact of well-developed financial markets on renewable energy deployment and sector growth. The study also confirms a positive and significant relationship between market-based mechanisms, such as the clean development mechanism, and renewable energy deployment. The impact is strong for photovoltaic solar and wind technologies, while it is marginal for biomass and geothermal technologies (Kim and Park 2016).

Pfeiffer and Mulder studied the diffusion of non-hydro renewable energy (NHRE) technologies in 108 developing countries over a 30-year period from 1980 to 2010. Their results show that economic and regulatory policies played a pivotal role in NHRE deployment, as did governmental stability, higher education levels and per capita income. On the other hand, growth in energy demand, aid and high local fossil fuel production hindered NHRE diffusion. In contrast with Kim and Park, the study finds only weak support for the view that international financing mechanisms and financial market development positively influenced diffusion (Pfeiffer and Mulder 2013). The discrepancy may stem from differences in analytical design, data sets, periods and statistical methods.

Renewable energy deployment is central to decarbonization, and the development of renewable energy projects should be seen as a top priority. The areas that would drive this decarbonization, and on which policymakers, financiers and market participants should focus, include policy instruments, financial support and accessibility, and market-based mechanisms that incentivize project developers. Governmental support frameworks, public education to build social acceptance, and research and development for technological advances and improved efficiency are also important focus areas.

Nuclear power

According to the latest report by the International Atomic Energy Agency (IAEA), 450 nuclear power reactors were operational as of 2018, with a total global installed capacity of 396.4 GW. In the high-case projection, installed capacity increases by 30% by 2030 relative to a base of 392 GW in 2017; in the low-case projection, it decreases by 10% over the same period. In the long term, global capacity might reach 748 GW by 2050 under the high-case scenario (IAEA 2018). Prăvălie and Bandoc provide a useful review of the status of nuclear power. Their investigation demonstrates the significant role nuclear power has played in global energy production, as well as its decarbonization potential in the global energy system. The study estimates that nuclear power prevents approximately 1.2–2.4 Gt CO2 of emissions annually, since the same power would otherwise have been produced by coal or natural gas combustion. The paper suggests that, to be in line with the 2 °C target stipulated by the Paris agreement, nuclear capacity must be expanded to approximately 930 GW by 2050, requiring a total investment of approximately $4 trillion (Prăvălie and Bandoc 2018).
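The scenario figures above can be checked with simple arithmetic. The following Python sketch applies the quoted +30% and -10% changes to the 2017 base of 392 GW and computes the net capacity additions implied by the 930 GW figure for 2050 relative to the 2018 level. Dividing the quoted $4 trillion by those net additions is a deliberately crude average assumed here for illustration only: it ignores the replacement of retiring reactors, financing costs and regional differences, so it should not be read as a cost estimate from the cited studies.

```python
BASE_2017_GW = 392.0      # installed capacity in 2017 (IAEA 2018)
CAPACITY_2018_GW = 396.4  # installed capacity in 2018 (IAEA 2018)
TARGET_2050_GW = 930.0    # capacity consistent with the 2 degC target (Prăvălie and Bandoc 2018)
INVESTMENT_USD = 4e12     # approximate total investment quoted in the same study


def scenario_capacity(base_gw: float, relative_change: float) -> float:
    """Capacity after applying a fractional change, e.g. +0.30 or -0.10."""
    return base_gw * (1.0 + relative_change)


high_2030 = scenario_capacity(BASE_2017_GW, 0.30)
low_2030 = scenario_capacity(BASE_2017_GW, -0.10)

# Net additions only: reactors that retire and must be replaced are ignored (assumption)
additional_gw = TARGET_2050_GW - CAPACITY_2018_GW
crude_usd_per_gw = INVESTMENT_USD / additional_gw

print(f"2030 high case: {high_2030:.1f} GW, low case: {low_2030:.1f} GW")
print(f"Net additions to 2050: {additional_gw:.1f} GW, ~${crude_usd_per_gw / 1e9:.1f} bn per GW")
```

The high and low cases bracket 2030 capacity between roughly 353 and 510 GW, while reaching 930 GW by 2050 would require more than 530 GW of net additions.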

Although nuclear energy is considered a low-carbon solution for climate change mitigation, it has a number of major disadvantages. First, the capital and operating costs associated with nuclear power development are substantial. Second, nuclear power carries the risk of radioactive environmental pollution, arising mainly from the threat of reactor accidents and the hazards of nuclear waste disposal (Prăvălie and Bandoc 2018; Abdulla et al. 2019). While it has been suggested that conventional fission-based nuclear plants be phased out in the future, the introduction of fusion-based nuclear technology may contribute positively to mitigation efforts in the second half of the century. Fusion power is a new generation of nuclear power that is more efficient than conventional fission-based technology and does not carry the same hazardous waste disposal risk. Furthermore, fusion power is characterized as a zero-emission technology (Prăvălie and Bandoc 2018; Gi et al. 2020).

Carbon capture, storage and utilization

Carbon capture and storage is a promising decarbonization technology discussed in the literature for application in both the power and industrial sectors. The technology separates and captures CO2 from processes that rely on fossil fuels such as coal, oil or gas; the captured CO2 is then transported and stored in geological reservoirs for very long periods. The main objective is to reduce emission levels while continuing to use fossil sources. Three capture technologies are discussed in the literature: pre-combustion, post-combustion and oxyfuel combustion. Each follows a specific process for extracting and capturing CO2; of the three, post-combustion capture is the most suitable for retrofit projects and has vast application potential. Once captured, the CO2 is compressed or liquefied and transported through pipelines or by ship to suitable storage sites. Storage options include depleted oil and gas fields, coal beds and underground saline aquifers not used for potable water (Vinca et al. 2018).

Among the main drawbacks of carbon capture and storage are the safety of long-term storage and the possibility of leakage. Ma et al. investigated the negative environmental impacts of accidental leakage from onshore storage sites, focusing on agricultural land (Ma et al. 2020). The risk of leakage and its associated impacts have also been pointed out by Vinca et al. (2018). Other issues include public acceptance (Tcvetkov et al. 2019; Arning et al. 2019) and high deployment costs (Vinca et al. 2018).

Another pathway after carbon capture is the utilization of the captured CO2 in the production of chemicals, fuels, microalgae and concrete building materials, as well as in enhanced oil recovery (Hepburn et al. 2019; Aresta et al. 2005; Su et al. 2016; Qin et al. 2020).

Large-scale deployment of carbon capture, storage and utilization technologies has yet to be proven. According to the International Energy Agency, only 2 carbon capture and storage projects in the power sector were in operation as of 2018, with a combined annual capture capacity of 2.4 MtCO2. Nine more projects are under development and are projected to increase capacity to 11 MtCO2 by 2025; however, this falls far short of the 1488 MtCO2 capacity targeted for 2040 in the International Energy Agency's sustainable development scenario (IEA 2019a).
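To put the gap between the projected 11 MtCO2 in 2025 and the 1488 MtCO2 targeted for 2040 in perspective, the sketch below computes the constant compound annual growth rate that would be needed to close it. It assumes, for illustration only, smooth exponential growth between 2025 and 2040; real deployment would proceed in discrete project additions.

```python
def required_cagr(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


capacity_2025 = 11.0   # MtCO2 per year, projected for 2025
target_2040 = 1488.0   # MtCO2 per year, sustainable development scenario for 2040
rate = required_cagr(capacity_2025, target_2040, 2040 - 2025)
print(f"Required growth: {rate:.1%} per year")
```

Under this assumption, capture capacity would need to grow by roughly 39% every year for fifteen consecutive years.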

Fuel switch and efficiency gains

In the short term, switching from coal to gas in the power sector has been discussed extensively in the literature as an economical way to transition to a low-carbon and, eventually, a zero-carbon economy (Victor et al. 2018; Wendling 2019; Pleßmann and Blechinger 2017). Switching to natural gas is also applicable to the industry, transportation and building sectors; however, as discussed previously, switching to renewable fuels is a more sustainable approach that creates further decarbonization potential in these sectors.
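The emission benefit of a coal-to-gas switch can be approximated as the fuel's combustion emission factor divided by the plant's net efficiency. The sketch below uses emission factors of 94.6 tCO2/TJ for bituminous coal and 56.1 tCO2/TJ for natural gas, which correspond to commonly used IPCC default values, together with assumed net efficiencies of 38% for a subcritical coal plant and 55% for a combined cycle gas plant. These inputs are illustrative assumptions rather than values from the studies cited above, and upstream methane leakage is ignored.

```python
GJ_PER_MWH = 3.6


def intensity_t_per_mwh(ef_t_per_tj: float, efficiency: float) -> float:
    """tCO2 per MWh of electricity from a fuel emission factor (tCO2/TJ) and net efficiency."""
    fuel_t_per_mwh = ef_t_per_tj * GJ_PER_MWH / 1000.0  # tCO2 per MWh of fuel energy
    return fuel_t_per_mwh / efficiency


coal = intensity_t_per_mwh(94.6, 0.38)  # assumed: bituminous coal, subcritical plant
gas = intensity_t_per_mwh(56.1, 0.55)   # assumed: natural gas, combined cycle plant
reduction = 1.0 - gas / coal
print(f"coal {coal:.2f}, gas {gas:.2f} tCO2/MWh, reduction {reduction:.0%}")
```

Under these assumptions, each MWh shifted from coal to gas cuts direct emissions by roughly 59%, but gas generation still emits around 0.37 tCO2/MWh, which is why it is treated as a transition step rather than a zero-carbon option.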

In addition to fuel switching, efficiency gains are highly significant within mitigation efforts. In the power sector, efficiency gains are achieved through improvements in thermal power plants, by enhancing fuel combustion efficiency and improving turbine and generator efficiencies. Recovering waste heat for additional thermal and electric production further enhances efficiency. In gas-fired power plants, combined cycle technology increases efficiency significantly, and combined heat and power units have also played an important role in efficiency gains. Technological advances in transmission and distribution networks also enhance efficiency by reducing losses (REN21 2019).
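The efficiency benefit of combined cycle technology follows from a simple energy balance: the steam cycle converts part of the heat rejected by the gas turbine into additional work. In an idealized form that ignores heat recovery steam generator and stack losses, the overall efficiency is the gas turbine efficiency plus the steam cycle efficiency applied to the remaining heat. The component efficiencies in this sketch are assumed values chosen for illustration.

```python
def combined_cycle_efficiency(eta_gt: float, eta_st: float) -> float:
    """Idealized combined-cycle efficiency.

    The gas turbine converts a fraction eta_gt of the fuel energy into work; the
    steam cycle converts a fraction eta_st of the remaining (1 - eta_gt) heat.
    Heat recovery steam generator and stack losses are ignored.
    """
    return eta_gt + eta_st * (1.0 - eta_gt)


eta_gas_turbine = 0.38  # assumed simple-cycle gas turbine efficiency
eta_steam = 0.33        # assumed bottoming steam cycle efficiency
eta_combined = combined_cycle_efficiency(eta_gas_turbine, eta_steam)
print(f"simple cycle: {eta_gas_turbine:.0%}, combined cycle: {eta_combined:.1%}")
```

With these inputs the overall efficiency rises from 38% to about 58.5%, so the same electricity is generated from roughly a third less fuel.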

In industry, there are many potential areas where efficiency gains may be realized. For example, in steel and cement production, waste heat from exhaust gases can be recovered for onsite power and heat production through waste heat-driven power plants. Industries that use process steam have an excellent opportunity to exploit excess steam pressure to generate electric power for onsite use or to drive rotating equipment: installing back pressure steam turbines where steam pressure must be reduced can significantly enhance energy efficiency. A similar approach, using turboexpanders, can be deployed where gas pressure reduction is required. Waste gases from industrial processes can also be used to generate onsite heat and power in micro- and small gas turbines. In addition, further efficiency gains can be realized by deploying advanced machinery controls across a multitude of processes and industrial sectors.
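The power recoverable when a turboexpander replaces a pressure-reducing valve can be estimated, for an ideal gas, from the isentropic expansion relation scaled by an isentropic efficiency. The sketch below makes several assumptions that are not from the text: an ideal gas with constant specific heat, a natural-gas-like stream (cp of about 2.2 kJ/(kg K), heat capacity ratio of about 1.3), and illustrative flow and pressure values. Real letdown stations usually preheat the gas to avoid excessive cooling at the outlet, which this sketch does not model.

```python
def expander_power_kw(m_dot_kg_s: float, cp_kj_kg_k: float, t_in_k: float,
                      p_in_bar: float, p_out_bar: float, gamma: float,
                      eta_isentropic: float) -> float:
    """Shaft power (kW) from expanding an ideal gas between two pressures.

    Isentropic specific work: cp * T_in * (1 - (p_out / p_in) ** ((gamma - 1) / gamma)),
    reduced by the isentropic efficiency of the machine.
    """
    pressure_ratio = p_out_bar / p_in_bar
    ideal_work = cp_kj_kg_k * t_in_k * (1.0 - pressure_ratio ** ((gamma - 1.0) / gamma))  # kJ/kg
    return m_dot_kg_s * ideal_work * eta_isentropic  # kJ/s, i.e. kW


# Illustrative (assumed) letdown of 10 kg/s from 40 bar to 10 bar
power = expander_power_kw(m_dot_kg_s=10.0, cp_kj_kg_k=2.2, t_in_k=300.0,
                          p_in_bar=40.0, p_out_bar=10.0, gamma=1.3, eta_isentropic=0.8)
print(f"Recoverable shaft power: {power:.0f} kW")
```

In this example, energy that a throttling valve would simply dissipate yields roughly 1.4 MW of shaft power.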

A number of factors influence energy efficiency in buildings. The first is the building design, together with the materials used in construction, e.g. insulation and glazing. Furthermore, the appliances, devices and systems used throughout buildings, e.g. heating, cooling and ventilation systems and lighting, play a pivotal role in energy consumption. Efficiency gains can be realized by using energy-efficient systems and appliances as well as improved construction materials (REN21 2019; Leibowicz et al. 2018).

In the transportation sector, efficiency gains can be realized through more efficient thermal engines, hybrid and electric vehicles, and hydrogen (H2) vehicles (Hache 2015). Further gains can come from technological advances in aviation, shipping and rail, although rail is already one of the most energy-efficient modes. Efficiency measures in the transportation sector can also take other forms. For example, travel demand management, which reduces the frequency and distance of travel, can be an effective approach. Moreover, shifting travel to the most efficient modes where possible, such as electrified rail, and reducing dependence on high-intensity travel methods can play an important role in enhancing efficiency (IEA 2019b).

Negative emissions technologies

Most of the climate pathways investigated by the Intergovernmental Panel on Climate Change (IPCC) included the deployment of negative emissions technologies alongside conventional decarbonization technologies to assess the feasibility of achieving the targets mandated by the Paris agreement. Only two negative emissions technologies have been included in the IPCC assessments so far: bioenergy carbon capture and storage, and afforestation and reforestation (IPCC 2018).

Gasser et al. empirically investigated the negative emissions potentially needed to limit global warming to less than 2 °C. The analysis used the IPCC pathway most likely to keep warming at that level and constructed a number of scenarios based on conventional mitigation assumptions in order to quantify the negative emissions effort required. The results indicated that in the best-case scenario, that is, under the most optimistic assumptions on conventional mitigation efforts, negative emissions of 0.5–3 Gt C year−1 and 50–250 Gt C of storage capacity are required. In the worst-case scenario, negative emissions of 7–11 Gt C year−1 and 1000–1600 Gt C of storage capacity are required (1 Gt C corresponds to approximately 3.667 Gt CO2). These results indicate that negative emissions are needed even at very high rates of conventional mitigation. At the same time, the study suggests that negative emissions alone should not be relied upon to meet the 2 °C target. The investigation concluded that, since negative emissions technologies are still at an early stage of development, conventional mitigation should remain the focus of climate policy, while further financial resources should be mobilized to accelerate the development of negative emissions technologies (Gasser et al. 2015).
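Because the removal and storage figures above are given in gigatonnes of carbon, converting them to gigatonnes of CO2 makes them comparable with the other estimates in this section. The conversion factor is the ratio of the molar masses of CO2 and carbon, 44/12, or about 3.667. The sketch below applies it to each range.

```python
CO2_PER_C = 44.0 / 12.0  # molar mass ratio CO2 : C, about 3.667


def gtc_to_gtco2(gt_c: float) -> float:
    """Convert gigatonnes of carbon to gigatonnes of CO2."""
    return gt_c * CO2_PER_C


# Ranges reported by Gasser et al. (2015), in Gt C
ranges_gtc = {
    "best case, removal per year": (0.5, 3.0),
    "best case, cumulative storage": (50.0, 250.0),
    "worst case, removal per year": (7.0, 11.0),
    "worst case, cumulative storage": (1000.0, 1600.0),
}

for label, (lo, hi) in ranges_gtc.items():
    print(f"{label}: {lo:g}-{hi:g} Gt C = {gtc_to_gtco2(lo):.1f}-{gtc_to_gtco2(hi):.1f} GtCO2")
```

For example, the worst-case removal rate of 7–11 Gt C year−1 corresponds to roughly 26–40 GtCO2 year−1.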

It is argued that negative emissions technologies should be deployed to remove residual emissions once conventional decarbonization efforts have been maximized, targeting emissions that are difficult to eliminate through conventional methods (Lin 2019). Negative emissions should be viewed as a complementary suite of technologies and techniques to conventional decarbonization methods, not a substitute for them (Pires 2019).

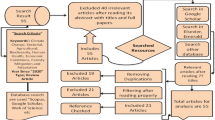

The significant role of negative emissions in meeting climate targets is understood and appreciated amongst academics, scientists and policymakers; however, the social, economic and technical feasibility of large-scale deployment, as well as its associated risks, remain under debate (Lenzi 2018). This section reviews the main negative emissions technologies and techniques, their current state of development, perceived limitations and risks, and their social and policy implications. Figure 2 depicts the major negative emissions technologies and carbon removal methods discussed in the literature and critically reviewed in this article.

Figure 2. Major negative emissions technologies and techniques deployed to capture and sequester carbon from the atmosphere: bioenergy carbon capture and storage, afforestation and reforestation, biochar, soil carbon sequestration, enhanced terrestrial weathering, wetland restoration and construction, direct air carbon capture and storage, ocean alkalinity enhancement and ocean fertilization

Bioenergy carbon capture and storage

Bioenergy carbon capture and storage, also referred to as BECCS, is one of the most prominent negative emissions technologies discussed in the literature. The Intergovernmental Panel on Climate Change (IPCC) relied heavily on it in its assessments as a potential route to meeting temperature goals (IPCC 2018). The technology integrates biopower with the carbon capture and storage technologies discussed earlier, and its basic principle is straightforward. Biomass captures atmospheric CO2 through photosynthesis during growth and is then used for energy production through combustion. The CO2 released upon combustion is captured and stored in suitable geological reservoirs (Pires 2019; RoyalSociety 2018). This technology can significantly reduce greenhouse gas concentration levels by removing CO2 from the atmosphere. Estimates of its carbon dioxide removal potential vary across the literature; however, a conservative assessment by Fuss et al. presents a range of 0.5–5 GtCO2 year−1 by 2050 (Fuss et al. 2018). Global estimates of storage capacity span a wide range, from 200 to 50,000 GtCO2 (Fuss et al. 2018). Cost estimates for carbon dioxide removal through bioenergy carbon capture and storage are in the range of $100–$200/tCO2 (Fuss et al. 2018).
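Combining the removal and cost ranges quoted above gives a rough sense of the annual spending that bioenergy carbon capture and storage at that scale would imply. The sketch below simply pairs the low ends and the high ends of the two ranges; this pairing is a simplifying assumption made here, since the cited estimates do not state that low removal coincides with low cost.

```python
def annual_cost_bounds_usd(removal_gt_per_year: tuple, cost_usd_per_t: tuple) -> tuple:
    """Pair low removal with low cost and high removal with high cost (assumption)."""
    (removal_lo, removal_hi), (cost_lo, cost_hi) = removal_gt_per_year, cost_usd_per_t
    tonnes_lo, tonnes_hi = removal_lo * 1e9, removal_hi * 1e9  # Gt -> t
    return tonnes_lo * cost_lo, tonnes_hi * cost_hi


# BECCS ranges from Fuss et al. (2018): 0.5-5 GtCO2/yr at $100-$200/tCO2
low, high = annual_cost_bounds_usd((0.5, 5.0), (100.0, 200.0))
print(f"~${low / 1e9:.0f} bn to ~${high / 1e12:.1f} trillion per year")
```

The resulting span, from tens of billions to around a trillion dollars per year, illustrates why carbon pricing and policy support are central to the deployment debate discussed below.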

The biomass feedstocks used for this approach can be either dedicated energy crops or wastes from agricultural or forestry sources. Such feedstocks can be burned alone in dedicated biomass plants or combined with fossil fuels in co-fired power plants (RoyalSociety 2018). Besides the standard combustion route, the literature suggests that CO2 can be captured in non-power bio-based applications, such as during fermentation in ethanol production or during the gasification of pulping effluent, e.g. black liquor, in pulp production (McLaren 2012; Pires 2019).

The main challenge associated with this technology is the large amount of biomass feedstock required for it to be an effective emission abatement approach. Under large-scale deployment using dedicated crops, resource demand would be substantial, exerting high pressure on land, water and nutrient resources. A major issue would be direct competition with food and feed crops for land, freshwater and nutrients (RoyalSociety 2018; GNASL 2018). Heck et al. empirically investigated the large-scale deployment of bioenergy carbon capture and storage for climate change abatement and demonstrated its impact on freshwater use, land system change, biosphere integrity and biogeochemical flows. The investigation also identified the interdependencies among these dimensions and the impacts that arise when any one dimension is prioritized (Heck et al. 2018). A sustainable approach to land use is therefore critical for bioenergy carbon capture and storage: competing with food for arable land and converting forest land to dedicated plantations have serious negative social and environmental effects. Harper et al. argue that the effectiveness of this technology in achieving negative emissions depends on several factors, including previous land cover, the initial carbon gain or loss due to land-use change, bioenergy crop yields, and the amount of harvested carbon that is ultimately sequestered. Their empirical investigation highlights the negative impact of bioenergy carbon capture and storage when dedicated plantations replace carbon-dense ecosystems (Harper et al. 2018). Another issue discussed in the literature is the albedo effect of biomass cultivation. This mainly applies in high-latitude locations, where biomass replaces snow cover and reduces surface reflectivity, which partly offsets mitigation efforts (Fuss et al. 2018).

In terms of technology readiness, bioenergy technologies are relatively well developed, whereas carbon capture and storage is still at an early stage. Technology risk is mainly associated with storage integrity and the potential for leakage, as discussed previously for carbon capture and storage. Furthermore, Mander et al. discuss the technical difficulties of scaling up deployment within a short period and question whether this technology can deliver its abatement potential within the projected time frame. In terms of policy, they argue that a strong framework and adequate incentives need to be in place to push the technology forward (Mander et al. 2017). Commercial logic alone may not be enough to drive global deployment. The financial viability of such projects will depend on a functioning carbon market that caters for negative emissions, as well as a carbon price high enough to incentivize deployment (Hansson et al. 2019). Policy should therefore look at ways to strengthen carbon pricing mechanisms and introduce negative emissions as a new class of tradeable credits (Fajardy et al. 2019).

Afforestation and reforestation

During tree growth, CO2 is captured from the atmosphere and stored in living biomass, dead organic matter and soils. Forestation is thus a biogenic negative emissions technology that plays an important role in climate change abatement efforts. It can be deployed either by establishing new forests, referred to as afforestation, or by re-establishing forests in areas that have undergone deforestation or degradation, referred to as reforestation. Depending on tree species, CO2 uptake in established forests may continue for 20–100 years until trees reach maturity, after which sequestration rates slow down significantly. At that stage, forest products can be harvested and used. It is argued that forest management activities and practices have environmental impacts and should be carefully planned (RoyalSociety 2018). Harper et al. discuss several advantages and co-benefits associated with forest-based mitigation, including biodiversity, flood control and improved soil, water and air quality (Harper et al. 2018).

Carbon can be stored in forests for a very long time; however, its permanence is vulnerable to natural and human disturbances. Natural disasters such as fires, droughts and disease, as well as human-induced deforestation, all threaten storage integrity. In general, biogenic storage has a much shorter lifespan than storage in geological formations, as in the case of bioenergy carbon capture and storage (Fuss et al. 2018). Another issue related to forestation is its land requirement and competition with other land uses: significant amounts of land are required to achieve effective abatement results (RoyalSociety 2018). Fuss et al. also discuss the albedo effect. Forests in high latitudes would actually be counterproductive, accelerating local warming as well as the loss of ice and snow cover. They argue that tropical areas would be the most suitable zones for forestation projects, although competition with agriculture and other sectors for land would remain a problem there. Based on global tropical boundary limitations, an estimated total area of 500 Mha is considered suitable for forestation. This would allow a global carbon dioxide removal potential of 0.5–3.6 GtCO2 year−1 by 2050, at estimated removal costs of $5–$50/tCO2 (Fuss et al. 2018).
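Dividing the removal potential by the suitable area gives the average sequestration rate per hectare implied by these estimates. The sketch below assumes, purely for illustration, that the full 500 Mha is forested and sequesters uniformly; actual rates vary widely with species, climate and stand age.

```python
AREA_HA = 500e6          # 500 Mha of suitable tropical land (Fuss et al. 2018)
C_PER_CO2 = 12.0 / 44.0  # converts tCO2 to t C


def per_hectare_rate(removal_gtco2_per_year: float, area_ha: float = AREA_HA) -> float:
    """Average tCO2 removed per hectare per year, assuming uniform sequestration."""
    return removal_gtco2_per_year * 1e9 / area_ha


for removal in (0.5, 3.6):
    rate = per_hectare_rate(removal)
    print(f"{removal} GtCO2/yr -> {rate:.1f} tCO2/ha/yr ({rate * C_PER_CO2:.2f} t C/ha/yr)")
```

The quoted range therefore implies average uptake of about 1–7 tCO2 per hectare per year across the whole area.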

In terms of technology readiness, afforestation and reforestation have already been widely adopted globally and have been integrated into climate policy through the Kyoto protocol’s clean development mechanism programme since the 1990s. To drive forest-based mitigation forward, the protocol introduced removal units that allowed forestation projects to yield tradeable credits. Despite these early policy measures, forest-based mitigation efforts remained limited in scale at that time. Forest-based abatement projects have also been introduced through national regulations and through voluntary systems such as the programme on reducing emissions from deforestation and forest degradation (REDD+), introduced by the United Nations in 2008. However, carbon sequestration through forestation remained insignificant, accounting for only 0.5% of the total carbon traded in 2013 (Gren and Aklilu 2016). The effectiveness of the REDD+ programme is still debated in the literature more than 10 years after its introduction. Hein et al. present a number of arguments regarding the programme’s poor track record in achieving its intended purpose of emissions reduction. Nevertheless, despite these uncertainties and weaknesses, 56 countries indicated their intention to implement REDD+ in their INDC submissions under the Paris agreement (Hein et al. 2018). Permanence, sequestration uncertainty, the availability of efficient financing mechanisms, and monitoring, reporting and verification systems are all difficulties associated with forest-based abatement projects (Gren and Aklilu 2016).

Biochar

Biochar has recently gained considerable recognition as a viable approach to carbon capture and permanent storage and is considered one of the promising negative emissions technologies. It is produced from biomass, e.g. dedicated crops, agricultural residues and forestry residues, through thermochemical conversion: pyrolysis, that is, heating in the absence of oxygen, as well as gasification and hydrothermal carbonization (Matovic 2011; Oni et al. 2020; Osman et al. 2020a, b). The carbon captured by biomass through CO2 uptake during plant growth is thus converted into a char that can be applied to soils and retained there for extended periods. The conversion process stores biomass carbon in a form that is very stable and resistant to decomposition. Stability in soils is perhaps the most important property that makes biochar a robust carbon removal technology. Although biochar is considered more stable than soil organic carbon, there are uncertainties around the decomposition rates of various types of biochar, which depend on the feedstock and process conditions used (Osman et al. 2019; Chen et al. 2019). Depending on the feedstock, it is estimated that this technology can remove between 2.1 and 4.8 tCO2 per tonne of biochar (RoyalSociety 2018). Estimates of carbon removal potential and costs vary greatly in the literature; a conservative range is provided by Fuss et al., who estimate that by 2050 the global carbon removal potential of biochar could be 0.3–2 Gt CO2 year−1, at costs ranging from $90 to $120/tCO2 (Fuss et al. 2018).
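The per-tonne removal factors above also indicate how much biochar the 2050 removal range would require. In the sketch below, the lower bound pairs the smallest removal target with the most effective biochar (4.8 tCO2 per tonne) and the upper bound pairs the largest target with the least effective biochar (2.1 tCO2 per tonne); this pairing is an illustrative choice, not a result from the cited sources.

```python
def biochar_needed_mt(removal_gtco2: float, tco2_per_t_biochar: float) -> float:
    """Million tonnes of biochar per year needed to deliver a removal target."""
    return removal_gtco2 * 1e9 / tco2_per_t_biochar / 1e6


minimum = biochar_needed_mt(0.3, 4.8)  # smallest target, most effective biochar
maximum = biochar_needed_mt(2.0, 2.1)  # largest target, least effective biochar
print(f"{minimum:.1f} to {maximum:.0f} Mt of biochar per year")
```

The range of roughly 60 to 950 Mt of biochar per year makes clear why biomass availability is a limiting factor for large-scale deployment.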

In terms of resource requirements, biochar production would require vast amounts of land to have an effective impact on greenhouse gas concentration levels. Land is required both for feedstock cultivation and for biochar dispersal as a carbon sink. While land for dedicated biomass cultivation may create competition with agriculture and other land-use sectors, as in the case of bioenergy carbon capture and storage, the areas required for biochar dispersal would pose no such issues, as long as the biochar is technically matched to the crop, soil and growing conditions of the specific cropping system. Besides soil, Schmidt et al. proposed other carbon sink applications for biochar, including construction materials, wastewater treatment and electronics, provided that the product does not thermally degrade or oxidize during its life cycle (Schmidt et al. 2019). It has also been argued in the literature that marginal and degraded lands could be used for dedicated plantations, relieving pressure on land that can be used for other purposes. Using waste biomass avoids the need for additional land and provides a waste disposal solution; however, competition for waste from other uses increases feedstock availability risk and price volatility. Biomass availability is one of the factors limiting successful large-scale deployment of biochar projects (RoyalSociety 2018).

In addition to capturing and storing atmospheric CO2, biochar also affects emissions of other greenhouse gases such as CH4 and N2O, according to growing evidence in the literature. Although reduced emissions are reported on many occasions, Semida et al. present mixed results, with biochar application having both positive and negative effects on CH4 and N2O emissions depending on the cropping system, the type of biochar and its processing conditions (Semida et al. 2019). Xiao et al. also present conflicting results, which are highly specific to the condition of the soils amended with biochar (Xiao et al. 2019). The impact on greenhouse gas emissions should, therefore, be studied on a case-by-case basis.

Another benefit widely discussed in the literature is the positive effect of biochar application on soils. It is argued that soil quality and fertility are significantly enhanced: improved nutrient cycling, reduced nutrient leaching, increased water and nutrient retention, and stimulated soil microbial activity are all co-benefits associated with biochar application. However, these benefits depend mainly on the physical and chemical properties of the biochar, which are determined by the type of feedstock, pyrolysis conditions and other processing conditions. Furthermore, despite the general perception that biochar improves plant growth and production, which holds in a large number of cases, there is evidence that biochar application may hinder plant growth in certain cropping systems, depending on the type of biochar, the quantity applied, the specific crops under cultivation and sometimes management practices. Because the evidence is mixed, careful analysis should be carried out to match biochar successfully with appropriate carbon sinks (Oni et al. 2020; Semida et al. 2019; El-Naggar et al. 2019; Maraseni 2010; Purakayastha et al. 2019; Xu et al. 2019).

Regarding the risks of large-scale deployment, the literature mentions the albedo effect. At high application rates of biochar to the soil surface, e.g. 30–60 tons/ha, the resulting decrease in surface reflectivity is argued to increase soil temperature, which in turn would reduce the benefit of carbon sequestration through this route (RoyalSociety 2018; Fuss et al. 2018). Other associated risks and challenges include reversibility and difficulties in monitoring, reporting and verification. Moreover, limited policy incentives and support, as well as the lack of carbon pricing mechanisms that account for CO2 removal through biochar (Ernsting et al. 2011), hinder this technology’s potential for large-scale commercialization. Pourhashem et al. examined the role of government policy in accelerating biochar adoption and identified three types of existing policy instruments that can be used to stimulate biochar deployment in the USA: commercial financial incentives, non-financial incentives and research and development funding (Pourhashem et al. 2019). Building on recent technological advances, in particular blockchain, a number of start-ups are developing carbon removal platforms to promote voluntary carbon offsets for consumers and corporations. A Finnish start-up, Puro.earth, has introduced biochar as a net-negative technology: once verified through the company’s verification system, the carbon removal certificates generated by biochar producers are auctioned to potential offset buyers. However, until carbon removal is adequately monetized and supported through sufficient policy instruments, biochar project development will probably not reach the scale required to have a profound impact within the time frame mandated by international policy.

Soil carbon sequestration

Soil carbon sequestration is the process of capturing atmospheric CO2 by changing land management practices to increase soil carbon content. The soil carbon concentration is determined by the balance between inputs, e.g. residues, litter, roots and manure, and losses through respiration, which are mainly influenced by soil disturbance. Practices that increase inputs and/or reduce losses drive soil carbon sequestration (RoyalSociety 2018; Fuss et al. 2018). It is well noted in the literature that soil carbon sequestration enhances soil fertility and health and improves crop yields owing to the accumulation of organic carbon in soils (Fuss et al. 2018). Various land management practices that promote soil carbon sequestration are discussed in the literature, including cropping system intensity and rotation, zero-tillage and conservation tillage, nutrient management, mulching and the use of crop residues and manure, incorporation of biochar, use of organic fertilizers, and water management (RoyalSociety 2018; Srivastava 2012; Farooqi et al. 2018). The impact of perennial cropping systems on soil carbon sequestration is also well documented. Agostini et al. investigated the impact of herbaceous and woody perennial cropping systems on soil organic carbon and confirmed an increase in soil organic carbon of 1.14–1.88 t C ha−1 year−1 for herbaceous crops and 0.63–0.72 t C ha−1 year−1 for woody crops. These values are reported to be well above the sequestration rate proposed as necessary (0.25 t C ha−1 year−1) for the crop to be carbon neutral once converted to biofuels (Agostini et al. 2015). The positive impact of perennial cropping systems on soil carbon sequestration is supported by several other investigations (Nakajima et al. 2018; Sarkhot et al. 2012).
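The rates reported by Agostini et al. are expressed in tonnes of carbon; multiplying by 44/12, the ratio of the molar masses of CO2 and C, converts them to tonnes of CO2 and makes them comparable with the other figures in this article. The sketch below performs that conversion and checks each range against the 0.25 t C ha−1 year−1 threshold quoted above.

```python
CO2_PER_C = 44.0 / 12.0
THRESHOLD_TC_HA_YR = 0.25  # carbon-neutrality threshold quoted by Agostini et al. (2015)

rates_tc_ha_yr = {
    "herbaceous perennials": (1.14, 1.88),
    "woody perennials": (0.63, 0.72),
}

for crop, (lo, hi) in rates_tc_ha_yr.items():
    lo_co2, hi_co2 = lo * CO2_PER_C, hi * CO2_PER_C
    margin = lo / THRESHOLD_TC_HA_YR  # how many times the threshold the lower bound reaches
    print(f"{crop}: {lo_co2:.2f}-{hi_co2:.2f} tCO2/ha/yr, lower bound = {margin:.1f}x threshold")
```

Even the lower bound for woody crops is about 2.5 times the threshold, which is what "well above" means in quantitative terms.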

The main issues related to this approach revolve around permanence, sink saturation and the impact on other greenhouse gas emissions. According to Fuss et al., the carbon removal potential of soil carbon sequestration is time-limited: once soils reach saturation, no further sequestration is achieved. Saturation may take 10–100 years depending on soil type and climatic conditions, although the Intergovernmental Panel on Climate Change (IPCC) uses a default saturation period of 20 years (Fuss et al. 2018). Once saturation is reached, land management practices need to be maintained indefinitely to prevent reversal, which entails ongoing costs with no further removal benefits. Risks of reversibility are significant and weaken this approach’s storage integrity. Another negative effect discussed in the literature is the impact of soil carbon sequestration on emissions of other greenhouse gases, mainly CH4 and N2O; however, this effect is reported to be negligible (Fuss et al. 2018).
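The time-limited nature of this sink can be illustrated with a simple piecewise model in which soil carbon accumulates at a constant rate until saturation and not at all afterwards. The constant rate and the sharp cut-off are simplifying assumptions; real accumulation typically slows gradually as soils approach saturation. The 20-year default is the IPCC saturation period mentioned above, and the 1.14 t C ha−1 year−1 rate is the lower bound reported for herbaceous perennials by Agostini et al.

```python
def cumulative_soil_carbon(rate_tc_ha_yr: float, years: float,
                           saturation_years: float = 20.0) -> float:
    """Cumulative t C/ha sequestered after `years`, assuming a constant rate that
    stops entirely once the saturation period has elapsed (reversal not modelled)."""
    effective_years = min(max(years, 0.0), saturation_years)
    return rate_tc_ha_yr * effective_years


for horizon in (10, 20, 50):
    stock = cumulative_soil_carbon(1.14, horizon)
    print(f"after {horizon} years: {stock:.1f} t C/ha")
```

The stock after 50 years equals the stock after 20 years: once saturated, the land delivers no further removal, yet the practices must still be maintained to keep the stored carbon from being released.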

By 2050, the global carbon dioxide removal potential of soil carbon sequestration is estimated at 2.3–5.3 GtCO2 year−1, at costs ranging from $0 to $100/tCO2 (Fuss et al. 2018). Although soil carbon sequestration is ready for large-scale deployment, since many of these practices are already in use, lack of knowledge, resistance to change and lack of policy and financial incentives have been identified as barriers to scaling up. Challenges around monitoring, reporting and verification, as well as concerns about sink saturation and potential reversibility, have been the main reasons behind slow policy action. So far, it is mainly non-climate policies that have promoted these land management practices, with the aim of improving soil quality, fertility and productivity and preventing land degradation (RoyalSociety 2018). While policy and market-based mechanisms are required to push this approach forward, international voluntary carbon removal platforms are emerging. A US-based platform, Nori, builds on soil carbon sequestration by linking consumers and businesses that wish to offset their carbon footprint with farmers who offer carbon removal certificates audited by an independent verification party. By using blockchain technology, the company aims to address the challenges associated with monitoring, reporting and verification.

Direct air carbon capture and storage

Direct air carbon capture and storage, referred to as DACCS in the literature, is emerging as a potential synthetic CO2 removal technology. The underlying principle is the use of chemical bonding to remove CO2 directly from ambient air and then either store it in geological reservoirs or use it for other purposes, such as the production of chemicals or mineral carbonates. CO2 is captured by bringing ambient air into contact with chemicals known as sorbents. The sorbents are then regenerated by applying heat or water, which releases the CO2 for storage or utilization. Sorbents work through two main processes: absorption, where the CO2 dissolves in the sorbent, typically a liquid such as potassium hydroxide or sodium hydroxide; and adsorption, where the CO2 adheres to the surface of a solid sorbent, typically an amine-based material (Pires 2019; GNASL 2018; Gambhir and Tavoni 2019; Liu et al. 2018). Both processes require thermal energy to regenerate the sorbent and release the CO2, although the adsorption route requires less energy (Gambhir and Tavoni 2019). A key issue widely discussed in the literature is the large energy demand of direct air carbon capture and storage plants: besides the energy needed for sorbent regeneration, energy is required for fans, pumps and the compressors that pressurize the CO2. It is therefore important to drive the operation with low-carbon energy, preferably renewable energy and waste heat (Fuss et al. 2018). Another major drawback highlighted in the literature is the high cost of developing direct air carbon capture and storage projects (Fuss et al. 2018).
The major risk associated with this technology is CO2 storage integrity, similar to that of carbon capture and storage and bioenergy carbon capture and storage (RoyalSociety 2018).
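Because every unit of energy consumed may itself emit CO2, the energy source determines how much of each captured tonne is actually a net removal. The sketch below assumes that net removal equals one tonne captured minus the emissions of the energy used to capture it. The energy demands and emission factors are hypothetical placeholders, not values from the cited studies, and embodied emissions from plant construction and sorbent production are ignored.

```python
from dataclasses import dataclass


@dataclass
class EnergyInput:
    """One energy demand of a DACCS plant (all example values are hypothetical)."""
    use: str
    gj_per_tco2: float   # energy needed per tonne of CO2 captured
    tco2_per_gj: float   # emission factor of the energy source supplying it


def net_removal_fraction(inputs: list[EnergyInput]) -> float:
    """Net tonnes of CO2 removed per tonne captured: 1 minus energy-related emissions."""
    emitted = sum(i.gj_per_tco2 * i.tco2_per_gj for i in inputs)
    return 1.0 - emitted


if __name__ == "__main__":
    low_carbon = [
        EnergyInput("sorbent regeneration (heat)", 6.0, 0.01),
        EnergyInput("fans and pumps (electricity)", 1.0, 0.02),
        EnergyInput("CO2 compression (electricity)", 0.5, 0.02),
    ]
    fossil = [
        EnergyInput("sorbent regeneration (heat)", 6.0, 0.06),
        EnergyInput("fans and pumps (electricity)", 1.0, 0.2),
        EnergyInput("CO2 compression (electricity)", 0.5, 0.2),
    ]
    print(f"low-carbon supply: {net_removal_fraction(low_carbon):.2f} t net per t captured")
    print(f"fossil supply:     {net_removal_fraction(fossil):.2f} t net per t captured")
```

With these illustrative numbers, the same plant delivers about 0.91 t of net removal per tonne captured on low-carbon energy but only about 0.34 t on fossil energy. A result below zero would mean the plant emits more than it captures.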

Gambhir and Tavoni compare direct air carbon capture and storage with conventional carbon capture and storage and explain that the former is more energy- and material-intensive, because capturing CO2 from ambient air is much more difficult than capturing it from highly concentrated flue gas streams. Per ton of CO2 removed, direct air carbon capture is about three times as energy-intensive as conventional carbon capture (Gambhir and Tavoni 2019). However, direct air carbon capture and storage plants are more flexible and can be located anywhere, provided that low-carbon energy and adequate transport and storage facilities are available. In terms of technology readiness, many processes are currently under development at laboratory or pilot scale. Technology developers are mainly working on reducing energy requirements, as these are one of the main barriers to deployment and scalability (RoyalSociety 2018).

Fuss et al. estimate the global carbon dioxide removal potential of this technology at 0.5–5 GtCO2 year−1 by 2050, potentially rising to 40 GtCO2 year−1 by the end of the century if the challenges associated with large-scale deployment are overcome. CO2 removal costs are estimated at $600–$1000/tCO2 initially, falling to $100–$300/tCO2 as the technology matures (Fuss et al. 2018). As with many of the negative emissions technologies discussed, there are currently no policy instruments to support this technology (RoyalSociety 2018).

Ocean fertilization

Ocean fertilization is the process of adding nutrients, both macronutrients such as phosphorus and nitrate and micronutrients such as iron, to the surface ocean to enhance CO2 uptake by stimulating biological activity. Microscopic organisms called phytoplankton, found in the surface layer of the ocean, are central to oceanic carbon sequestration. Carbon fixed in organic marine biomass is naturally transported to the deep ocean, a process termed “the biological pump”; this downward flux is, to a certain extent, balanced by oceanic carbon respiration. Like land plants, phytoplankton use light, CO2 and nutrients to grow. In the natural system, nutrients become available in the ocean through the death and decomposition of marine life, so marine production is limited by the availability of recycled nutrients. The idea behind ocean fertilization is to supply additional nutrients to increase biological production, which raises the CO2 uptake rate above the natural rate of respiration and produces a net removal of CO2 from the atmosphere (RoyalSociety 2018; Williamson et al. 2012). Although the literature offers little information on carbon removal potential, ocean fertilization is estimated to be able to sequester up to 3.7 GtCO2 year−1 by 2100, with a total global storage capacity of 70–300 GtCO2 (RoyalSociety 2018). Estimated abatement costs range from $2 to $457/tCO2 (Fuss et al. 2018).
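A quick consistency check on these figures: if fertilization were sustained at the upper rate of 3.7 GtCO2 year−1, the estimated storage capacity would be exhausted within decades. The sketch assumes a constant rate for simplicity. Since the cited rate is a maximum reached only by 2100, the result is a lower bound on the filling time.

```python
def years_to_fill(capacity_gtco2: float, rate_gtco2_per_year: float) -> float:
    """Years until a storage capacity is exhausted at a constant removal rate."""
    if rate_gtco2_per_year <= 0:
        raise ValueError("rate must be positive")
    return capacity_gtco2 / rate_gtco2_per_year


if __name__ == "__main__":
    for capacity in (70.0, 300.0):  # GtCO2, lower and upper capacity estimates
        years = years_to_fill(capacity, 3.7)
        print(f"{capacity:.0f} GtCO2 at 3.7 GtCO2/yr: about {years:.0f} years")
```

This gives roughly 19 years for the lower capacity estimate and 81 years for the upper one.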

Side effects of ocean fertilization discussed in the literature include ocean acidification, reduced or depleted oxygen in deep and mid-water layers, increased production of other greenhouse gases, unpredictable impacts on food webs, toxic algal blooms and mixed effects on seafloor and upper-ocean ecosystems (Fuss et al. 2018; Williamson et al. 2012). In addition, the environmental, economic and social impacts, as well as the energy and material resources, associated with producing, transporting and distributing fertilizer are significant. According to Fuss et al., uncertainty about permanence is a major drawback. Permanence depends on whether the sequestered organic carbon remains dissolved in the different layers of the ocean or whether sedimentation allows it to settle into long-term oceanic compartments for extended periods (Fuss et al. 2018). Uncertain permanence, ecosystem impacts, low sequestration efficiency and the lack of adequate monitoring, reporting and verification systems all argue against ocean fertilization as an effective climate change abatement approach (Fuss et al. 2018; Williamson et al. 2012).

Enhanced terrestrial weathering

In the natural system, silicate rocks break down through a process termed weathering. This chemical reaction consumes atmospheric CO2 and releases metal ions as well as carbonate and/or bicarbonate ions. The dissolved ions are carried by groundwater to rivers and eventually reach the ocean, where they are stored as alkalinity, or they precipitate on land as carbonate minerals. Enhanced weathering accelerates this process so that CO2 is taken up on a much shorter timescale. This is achieved by milling silicate rocks to increase their reactive surface area and hence their mineral dissolution rate. The ground material is then applied to croplands, providing a range of co-benefits (RoyalSociety 2018; Bach et al. 2019). Kantola et al. discuss the potential of applying this approach to bioenergy cropping systems (Kantola et al. 2017). According to Fuss et al., enhanced weathering sequesters atmospheric carbon in two forms, inorganic and organic. Inorganic carbon is sequestered through the production of alkalinity and carbonates, as described above. Organic carbon is sequestered through additional biomass production, via photosynthesis, stimulated by the nutrients naturally released from the rocks (Fuss et al. 2018).
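To illustrate the inorganic pathway, the idealized weathering of forsterite (Mg2SiO4, the magnesium end-member of olivine, which makes up most of the rock dunite) can be written as follows. The stoichiometry gives a theoretical upper bound on CO2 uptake per tonne of mineral, assuming complete dissolution and that all released Mg2+ is balanced by bicarbonate. Forsterite is chosen here only as an example mineral.

```latex
\begin{aligned}
&\mathrm{Mg_2SiO_4} + 4\,\mathrm{CO_2} + 4\,\mathrm{H_2O} \longrightarrow 2\,\mathrm{Mg^{2+}} + 4\,\mathrm{HCO_3^{-}} + \mathrm{H_4SiO_4} \\
&\frac{4 \times 44.01\ \mathrm{g\,mol^{-1}}}{140.69\ \mathrm{g\,mol^{-1}}} \approx 1.25\ \mathrm{t\,CO_2\ per\ t\ Mg_2SiO_4}
\end{aligned}
```

If the bicarbonate later precipitates as carbonate mineral (2HCO3− + Mg2+ → MgCO3 + CO2 + H2O), half of this carbon is released again, and the bound falls to about 0.63 t CO2 per tonne. Natural rocks such as basalt contain far less reactive magnesium and calcium per tonne, so practical uptake is much lower than this idealized figure.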

Besides its carbon removal potential, enhanced weathering has a number of positive side effects reported in the literature. These include favourable effects on soil hydrological properties, a supply of plant nutrients that reduces dependence on conventional fertilizers, an increase in water pH, improved soil health, increased biomass production and an opportunity to reduce dependence on conventional pesticides. These benefits depend on the rock type and application rate, the climate, the soil and the cropping system (RoyalSociety 2018; Fuss et al. 2018; de Oliveira Garcia et al. 2019; Strefler et al. 2018).

In terms of technology readiness, enhanced weathering can be deployed now. Current land management practices already include the application of granular materials such as lime, so existing equipment can be used with no additional investment in equipment or infrastructure. The technologies for quarrying, crushing and grinding are well developed, so scalability is not expected to be limited by technology. However, under large-scale deployment, the energy required for extraction, processing and transportation would be considerable (RoyalSociety 2018). The carbon footprint of enhanced weathering operations should therefore be assessed carefully to determine the actual sequestration potential. Lefebvre et al. investigated carbon sequestration through enhanced weathering in Brazil, using a life cycle assessment to quantify the carbon removal potential of applying basalt to agricultural land in São Paulo. The study yielded several key findings. First, the operation emits 75 kg of CO2 per ton of CO2 removed through enhanced weathering and 135 kg of CO2 per ton of CO2 removed through carbonation, based on a distance of 65 km between the production site and the field where the ground rock is applied. Second, the maximum road transport distance is 540 km for carbonation and 990 km for enhanced weathering; beyond these distances, the emissions offset the potential benefits. The authors conclude that transportation is a major drawback that limits the viability of this technology. Finally, the results suggest a capture rate of approximately 0.11–0.2 tCO2e per ton of basaltic rock applied (Lefebvre et al. 2019).
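The two reported figures for each route (emissions at 65 km and the break-even distance) are enough to back out a simple transport penalty. This requires two assumptions: that emissions grow linearly with road distance, and that "break-even" means 1,000 kg of CO2 emitted per ton removed. This linear form is an assumption made here for illustration, not the model used by Lefebvre et al. Under it, enhanced weathering carries about 1 kg CO2 per ton removed per additional kilometre, and carbonation about 1.8 kg.

```python
def fit_transport_penalty(ref_km: float, ref_kg: float, breakeven_km: float,
                          breakeven_kg: float = 1000.0) -> tuple[float, float]:
    """Fit emissions(d) = intercept + slope * d, in kg CO2 per t CO2 removed,
    through the reference point and the break-even point (linear assumption)."""
    slope = (breakeven_kg - ref_kg) / (breakeven_km - ref_km)
    intercept = ref_kg - slope * ref_km
    return intercept, slope


def net_removal_kg(distance_km: float, intercept: float, slope: float) -> float:
    """Net CO2 removal (kg) per tonne of gross removal at a given road distance."""
    return 1000.0 - (intercept + slope * distance_km)


if __name__ == "__main__":
    routes = {
        "enhanced weathering": fit_transport_penalty(65, 75, 990),
        "carbonation": fit_transport_penalty(65, 135, 540),
    }
    for name, (a, b) in routes.items():
        print(f"{name}: {b:.2f} kg per km; net at 300 km = "
              f"{net_removal_kg(300, a, b):.0f} kg per t removed")
```

At a hypothetical 300 km haul, this linear model leaves about 690 kg of net removal per tonne for enhanced weathering but only about 437 kg for carbonation, which shows why the transport distance dominates the viability question.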

Another way to reduce the demand for extracted rock is to use silicate wastes from various industries. Potential materials include wastes from mining, cement, steel and aluminium production, and from coal or biomass combustion (Renforth 2019). However, such materials need careful assessment, as using inappropriate ones risks releasing heavy metals into soils (Fuss et al. 2018). Another risk associated with enhanced weathering is the health hazard from inhaling fine dust during the production and application of finely ground rock (Strefler et al. 2018). Furthermore, uncertainties about the impacts of enhanced weathering on microbial and marine biodiversity require further investigation (RoyalSociety 2018).

In terms of permanence, the sequestered CO2 can be stored in several Earth pools. Initially, CO2 is stored as dissolved inorganic carbon (alkalinity) in soils and groundwater. Depending on conditions, carbonate minerals may precipitate in the soil, where they can remain for very long periods (on the order of 10⁶ years) (Fuss et al. 2018). If precipitation does not occur, the dissolved inorganic carbon is carried by water streams to the ocean, where it is stored as alkalinity, bringing a number of additional benefits and challenges to the oceanic pool. Based on an extensive literature assessment, Fuss et al. estimate a global carbon removal potential of 2–4 GtCO2 year−1 by 2050 at a cost of $50–$200/tCO2 (Fuss et al. 2018). Strefler et al. conducted a techno-economic assessment of the carbon removal potential and costs of enhanced weathering using two rock types, dunite and basalt. Their results are in line with the estimates of Fuss et al. for both removal potential and costs. The study also identified the main factors influencing removal potential and cost, namely rock grain size and weathering rates. Finally, it indicated that warm, humid climates with nutrient-poor soils are the most suitable areas for enhanced weathering (Strefler et al. 2018).
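To put the Fuss et al. (2018) estimates for 2050 side by side, the sketch below multiplies each removal-potential range by the corresponding cost range. The result is a crude envelope of annual spending: low potential at low cost, high potential at high cost. Two assumptions are made here. Pairing the DACCS 2050 potential with its mature-technology cost range ($100–$300/tCO2 rather than the initial $600–$1000/tCO2) is optimistic. Treating cost per tonne as constant across the whole potential range is a simplification.

```python
# Potential (low, high) in GtCO2/yr and cost (low, high) in $/tCO2 for 2050,
# as reported by Fuss et al. (2018); the DACCS row uses the mature cost range.
ESTIMATES_2050 = {
    "soil carbon sequestration": ((2.3, 5.3), (0.0, 100.0)),
    "DACCS (mature cost range)": ((0.5, 5.0), (100.0, 300.0)),
    "enhanced weathering": ((2.0, 4.0), (50.0, 200.0)),
}


def annual_cost_envelope(potential_gt, cost_per_t):
    """Return (low, high) annual cost in billion US$.

    1 GtCO2 = 1e9 tCO2, so GtCO2/yr multiplied by $/tCO2 gives billion $/yr.
    """
    (p_lo, p_hi), (c_lo, c_hi) = potential_gt, cost_per_t
    return p_lo * c_lo, p_hi * c_hi


if __name__ == "__main__":
    for tech, (potential, cost) in ESTIMATES_2050.items():
        lo, hi = annual_cost_envelope(potential, cost)
        print(f"{tech:28s} ${lo:,.0f}-{hi:,.0f} billion per year")
```

Under these assumptions, the envelopes are roughly $0–530 billion per year for soil carbon sequestration, $50–1,500 billion for DACCS and $100–800 billion for enhanced weathering.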

At present, enhanced weathering is not included in any carbon market and has no policy support. For this approach to gain traction, further research on its social and environmental implications is needed, and adequate monitoring, reporting and verification systems must be developed (RoyalSociety 2018). Moreover, integration into carbon markets and adequate carbon pricing are required to incentivize deployment.

Ocean alkalinity enhancement