Abstract

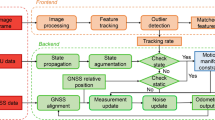

With the rapid development of automated driving technology in recent years, continuous, dependable, and high-precision vehicle navigation has become crucial. The integration of the global navigation satellite system (GNSS) and the inertial navigation system (INS) is a proven technology, but its performance is limited by the grade of the inertial measurement unit and by INS errors that grow with time during GNSS outages. Meanwhile, vision-based navigation yields excellent results because it can perform localization and environment perception simultaneously. Nevertheless, such methods still have to rely on global navigation results to eliminate accumulated errors because of the limitations of loop closing. To address this, we propose a GNSS/INS/Vision integrated solution that provides robust and continuous navigation output in complex driving conditions, especially in GNSS-degraded environments. Raw multi-GNSS observations are used to construct double-differenced equations for global navigation estimation, and a tightly coupled, extended Kalman filter-based visual-inertial method is applied to obtain a high-accuracy local pose. The integrated system was evaluated through both GNSS outage simulation and vehicular field experiments under different GNSS availability conditions. The results indicate that, in GNSS-challenged environments, GNSS navigation performance is significantly improved compared with the GNSS/INS loosely coupled solution.
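The double-differencing step mentioned in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `double_differences`, the dictionary layout, and the choice of a single reference satellite are assumptions made for illustration. In a multi-GNSS setting, one reference satellite would typically be chosen per constellation.

```python
from typing import Dict


def double_differences(
    rover: Dict[str, float],
    base: Dict[str, float],
    ref_sat: str,
) -> Dict[str, float]:
    """Form double-differenced observations relative to ref_sat.

    rover and base map satellite IDs to raw observations in metres
    (for example pseudoranges) taken at the same epoch. Only satellites
    tracked by both receivers are used. Hypothetical helper, for
    illustration only.
    """
    common = set(rover) & set(base)
    if ref_sat not in common:
        raise ValueError("reference satellite must be tracked by both receivers")

    # Between-receiver single difference: cancels the satellite clock error.
    single = {sat: rover[sat] - base[sat] for sat in common}

    # Between-satellite difference against the reference satellite:
    # cancels the receiver clock errors common to all single differences.
    return {
        sat: single[sat] - single[ref_sat]
        for sat in sorted(common)
        if sat != ref_sat
    }
```

With both differences applied, the receiver and satellite clock terms drop out, which leaves the geometry (plus residual atmospheric and noise terms on real data) for the navigation filter to use.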

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgements

This study was financially supported by the National Natural Science Foundation of China (Grant Nos. 41774030 and 41974027), the Hubei Province Natural Science Foundation of China (Grant No. 2018CFA081), and the frontier project of basic application from the Wuhan Science and Technology Bureau (Grant No. 2019010701011395). The numerical calculations in this paper were performed on the supercomputing system in the Supercomputing Center of Wuhan University.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Liao, J., Li, X., Wang, X. et al. Enhancing navigation performance through visual-inertial odometry in GNSS-degraded environment. GPS Solut 25, 50 (2021). https://doi.org/10.1007/s10291-020-01056-0

DOI: https://doi.org/10.1007/s10291-020-01056-0