Abstract

To develop a deep learning-based model for detecting rib fractures on chest X-Ray and to evaluate its performance based on a multicenter study. Chest digital radiography (DR) images from 18,631 subjects were used for the training, testing, and validation of the deep learning fracture detection model. We first built a pretrained model, a simple framework for contrastive learning of visual representations (simCLR), using contrastive learning with the training set. Then, simCLR was used as the backbone for a fully convolutional one-stage (FCOS) objective detection network to identify rib fractures from chest X-ray images. The detection performance of the network for four different types of rib fractures was evaluated using the testing set. A total of 127 images from Data-CZ and 109 images from Data-CH with the annotations for four types of rib fractures were used for evaluation. The results showed that for Data-CZ, the sensitivities of the detection model with no pretraining, pretrained ImageNet, and pretrained DR were 0.465, 0.735, and 0.822, respectively, and the average number of false positives per scan was five in all cases. For the Data-CH test set, the sensitivities of three different pretraining methods were 0.403, 0.655, and 0.748. In the identification of four fracture types, the detection model achieved the highest performance for displaced fractures, with sensitivities of 0.873 and 0.774 for the Data-CZ and Data-CH test sets, respectively, with 5 false positives per scan, followed by nondisplaced fractures, buckle fractures, and old fractures. A pretrained model can significantly improve the performance of the deep learning-based rib fracture detection based on X-ray images, which can reduce missed diagnoses and improve the diagnostic efficacy.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Rib fractures are common blunt chest traumas that occur in 20% of all cases of chest trauma and affect approximately 40% of patients who suffer from severe chest trauma [1, 2]. The clinical symptoms of patients with rib fractures include localized pain, abnormal breathing, and skin bruising. The number of rib fracture locations and the type of fracture can indicate the severity of trauma and can be used to predict complications and mortality rates [3,4,5]. Conventional imaging examinations for identifying and classifying rib fractures are based on chest X-ray and CT. According to the imaging modality selection criteria established by the American College of Radiologists, an X-ray (or DR) is usually obtained to initially diagnose trauma patients because it is convenient and inexpensive [6]. However, missed diagnosis and misdiagnosis of rib fractures often occur due to ambiguity caused by multiple overlapping anatomical structures coupled with different locations and sizes of fractures in X-ray; moreover, image reading requires experience and training for radiologists [7, 8]. Therefore, to reduce miss- and misdiagnosis, an automatic rib fracture detection and localization method is highly desirable to assist radiologists in reading chest X-ray images.

In the literature, deep learning-based (DL) models have demonstrated effectiveness in helping radiologists quickly and precisely identify rib fractures in CT images [9,10,11]. For X-ray images, several studies have illustrated unprecedented success in applying DL-based models to detect suspicious fractures, such as hip fractures [12], mandibular fractures [13], scaphoid fractures [14], and femoral neck fractures [15]. In one study [16], a DL-based model of chest radiography was established to identify four types of features (fracture, pneumothorax, nodule, and opacity), and the sensitivity and specificity for fracture detection based on the public dataset ChestX-Ray14 were 43.1% and 92.8%, respectively. However, many delineated images are needed to train DL-based models. To address this problem, an automatic rib fracture recognition algorithm was designed using mixed supervised learning while considering the spatial information from class activation maps [17]. The experimental results showed that the recognition algorithm outperformed other methods by annotating 20% of the positive samples in 10,966 images.

Moreover, to overcome the weak prominence of fractures in chest X-ray images, a hybrid supervised learning model was established to provide fracture classification decisions and change image interpretation [17]. This model incorporated partial correlation decoupling and example separation enhancement strategies to accurately detect fracture regions [17]. Nevertheless, compared to CT, it is still challenging for deep learning models to achieve radiologist-level merit for rib fracture detection on chest X-ray.

In this study, we compare the performance of different fully convolutional one-stage (FCOS) objective detection pretraining methods [18], such as a model with no pretraining (no pretraining), a model pretrained with ImageNet data (pretrained ImageNet), and a model pretrained with DR images (pretrained DR), to validate a deep learning-based model for detecting rib fractures in X-ray images.

Materials and Methods

This study was performed with adherence to the principles of the Declaration of Helsinki. Approval was granted by the Ethics Committee of Shanghai Changzheng Hospital (No.2022SL071), and the requirement for informed consent of patients was waived due to the retrospective nature of the analysis and the anonymity of the data.

Datasets

A total of 18,631 chest DR scans (anteroposterior or posteroanterior) were collected for the pretraining, training, and testing of the rib fracture detection model, with all images unique. The datasets used for pretraining included a public dataset, ChestX-Ray14 [19], and a dataset collected from multiple hospitals, denoted as DR-data. ChestX-Ray14 consists of 16,253 radiographs in a posteroanterior projection with fracture cases (7424 images) and nonfracture cases (8829), and 1842 cases in DR-data are fracture cases based on the corresponding radiological reports. All these data were labelled as 1 for fracture (with bounding boxes for each fracture) and 0 for nonfracture.

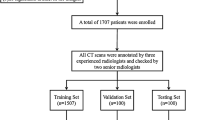

For training and testing, we collected two datasets, Data-CZ and Data-CH. Data-CZ consists of 427 images/cases (1388 fractures) from Shanghai Changzheng Hospital and was used for training (300 fracture cases) and testing (72 fracture and 55 no-fracture cases). Data-CH includes 109 paired chest DR and CT images (290 fractures) from Changhai Hospital and was used for testing. All the fracture cases were also annotated into four types, namely F0, displaced fractures; F1, nondisplaced fractures; F2, buckle fractures; and F3, old fractures [10]. Each fracture in the ground-truth data was considered in the evaluation of the detection models.

Details of the pretraining, training, and testing datasets are listed in Table 1. This retrospective study was approved by the institutional review boards of the respective hospitals, all patient information was anonymized, and informed consent forms were waived. The inclusion criteria for our privately collected data were as follows: (a) age of 18 years or older, (b) DR or CT for acute chest trauma within 2 weeks, and (c) complete DR and CT image data. The exclusion criteria were as follows: (a) images with artifacts caused by malposition, respiratory motion, etc.; (b) patients with post-thoracic spine and rib surgery; (c) pathological fractures or other nonfracture lesions of the ribs; and (d) thoracic deformities. All the data were examined by two radiologists from Shanghai Changzheng Hospital (5 years of experience and 7 years of experience), by referring to the corresponding CT images. In cases when a consensus was not reached, a third expert (12 years of experience) further evaluated the images. Finally, the fracture sites and fracture types were marked on the images by using an internal labelling tool.

Accurate fracture annotations are critical for training and testing DL-based models. In fact, for DR images, neither the hierarchy annotation strategy nor the majority-voting approach can prevent interreader variability and mislabelling. Due to the ambiguity of DR images, and compared to DR images, CT images provide clearer visualization of bone fractures. Therefore, we double-checked the ground truth data, the bounding box drawn on X-ray images, by referring to the corresponding CT images. The corresponding locations of the fractures between 2D chest X-ray (2D bounding box) and 3D CT images (3D volume) were checked and confirmed by a radiologist. The details of the annotations for each stage of model development are given in Fig. 1.

Pretrained Detection Model simCLR

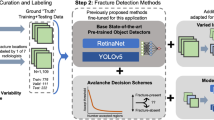

Because the training dataset with four types of rib fractures labelled is limited, we first built a pretraining model for fracture detection using public X-ray datasets, that included fracture labels (0 indicating no fracture, 1 indicating a fracture) but lacked location and type annotations. The model derived from contrastive learning was based on a simple framework, simCLR, which was proposed by Hinton’s group [20] to improve the performance of DL-based models with limited labelled data. simCLR serves as a pretrained model for various downstream tasks such as classification and detection [20]. In this work, we first use simCLR to extract deep features from public datasets (ImageNet and ChestX-Ray14) and then transfer the pretrained network to the downstream tasks of rib fracture detection from chest X-ray images.

One of the mainstream approaches to learn visual representations without supervision is a discriminative algorithm based on contrastive learning. Contrastive learning is a self-supervised learning method, that trains an encoder to yield an effective image representation without labelling. simCLR [20] learns reliable representations in the feature space by maximizing agreement between different augmentations. Specifically, each sample is randomly processed by two separate data augmentation operators, such as cropping, rotation, and style transfer. As a result, given a mini-batch of \(N\) images, \(2N\) samples can be generated. In the augmented mini-batch, two augmented images from the same sample are grouped as a positive pair \((i,j)\), whereas the other \(2(N-1)\) augmented images in the mini-batch are grouped as negative examples. Contrastive learning is driven by contrastive loss, and the goal is to maximize the agreement among positive pairs while minimizing the agreement between positive and negative pairs. Therefore, the learned features are generalized and robust to diversifying operations. A detailed illustration of self-supervised pretraining for rib fracture detection from chest X-ray images is shown in Fig. 2.

The contrastive loss for a positive pair is defined as follows:

where \(\mathrm{sim}({z}_{i},{z}_{j})\) is the dot product (cosine similarity) between the extracted features \({z}_{i}\) and \({z}_{j}\). \({1}_{[k\ne i]}\in \left\{0, 1\right\}\) is the indicator function, which equals 1 when \(\mathrm{k}\ne \mathrm{i}\). \(\tau\) is a temperature parameter, and the network loss is defined as follows:

An original image \(x\) is transformed into images \({\widetilde{x}}_{i}\) and \({\widetilde{x}}_{j}\) via two random augmentations. Then, \({\widetilde{x}}_{i}\) and \({\widetilde{x}}_{j}\) are passed through an encoder network (ResNet50). The representations generated by the encoder network are then passed into a contrastive loss function that promotes similarity between representations \({z}_{i}\) and \({z}_{j}\). The trained model retains the base encoder network, and the 2-layer MLP projection head is removed as a pretrained model for FCOS objective detection.

Fully Convolutional One-Stage Object Detection Model

In this study, a classic detection network, the FCOS objective detector [18], was applied to identify fractures in frontal chest X-rays. The FCOS objective detection approach involves a classic single-view detection network that is able to balance speed and performance and can handle a wide range of object detection tasks. With post-processing non-maximum suppression, the FCOS objective detection is anchor box free and completely eliminates complicated computations related to anchor boxes. The model also performs object detection in a per-pixel prediction fashion and avoids using all the hyperparameters related to anchor boxes. The pretrained model is used to initialize the parameters of the detection model. The derived pretrained model from contrastive learning is adopted as the backbone of the FCOS architecture for the realization of the downstream lesion detection tasks. Therefore, we demonstrate a highly simple and flexible detection framework for rib fracture identification, as shown in Fig. 3.

Statistical Analysis

To evaluate the performance of the fracture detection algorithms, we calculated the sensitivity of fracture detection and summarized the free-response ROC (FROC) curves for each experiment. Referring to the rules of the LUNA challenge [21], the criterion to determine if a prediction result matches the annotation is based on whether the predicted box and the annotated box overlap each other. In this case, the detection result is considered a true positive (TP) if the intersection over union (IoU) is more than 30% [22]; otherwise, it is a false positive (FP) compared with any ground truth bounding boxes. On the other hand, if no predicted nodule matches an annotated nodule, the missed nodule is counted as a false negative (FN). Based on TP, FP, and FN, the sensitivity of the detection is given as \(\mathrm{sensitivity}=\frac{\mathrm{TP}}{\mathrm{TP}+\mathrm{FN}}\). For the FROC curve, the x-axis represents the average number of FPs per scan, and the y-axis represents sensitivity. SPSS 25.0 software (version 25.0; SPSS Inc., Chicago, IL, USA) was used for statistical analysis. A paired-sample t-test was used to compare the sensitivity of detection for the three DL models. A P value <0.05 indicated a statistically significant difference.

With the public datasets ImageNet and ChestX-ray14, we trained the pretrained ImageNet and pretrained DR models, respectively. The pretrained models were then used to initialize the parameters of the FCOS objective detection model. Herein, pretrained ImageNet means the pretraining model was built using the ImageNet dataset (1.2 million labelled images depicting 10,000+ object categories), and the pretrained-DR model was trained using chest DR scans from the dataset of ChestX-ray14 and data from hospitals. Then, the FCOS objective detection model was trained with 300 chest X-ray images, which includes 1111 fractures. Examples of rib fractures detected from Data-CZ with the proposed method are shown in Fig. 4.

Results

Performance of Rib Fracture Detection

To evaluate the performance of the detection models with two pretrained models, we calculated the sensitivity in cases with different numbers of FPs, as shown in Table 2, and the FROC curve results are plotted in Fig. 5. Based on a compromise between sensitivity and the number of FPs, our model reached the best performance with five FPs per scan, which might be tolerable clinically. With the 127 chest DR scans in the testing dataset of Data-CZ, we performed an ablation study, and the detection sensitivities of the no pretraining, pretrained ImageNet, and pretrained DR models were 0.465, 0.735, and 0.822, respectively, as listed in Table 2, based on five FPs per scan. For 109 DR scans in Data-CH, the sensitivities of the three pretrained methods were 0.403, 0.655, and 0.748.

The FCOS objective detection model combined with the pretrained DR model achieved the best performance, and the sensitivity was 0.822 and 0.748 with five FPs for the test datasets Data-CZ and Data-CH, respectively; a comparison of these results with those of the no pretraining and pretrained ImageNet models is shown in Fig. 5. The detection performance of no pretraining and pretrained DR increased significantly from 0.465 to 0.822 (Data-CZ: t=−37.2, P=0.000) and from 0.403 to 0.748 (Data-CH: t=−32.8, P=0.000), respectively. The pretrained ImageNet model did not perform as well as pretrained DR (Data-CZ: t=−7.8, P=0.001, and Data-CH: t=−14.6, P=0.000).

Detection Performance for Four Fracture Types

In the previous section, the detection model was initialized with the pretrained models and achieved significantly better performance than those without pretraining. Based on the detection results, we further analyzed the performance of the models in the detection of different fracture types. For the testing images of Data-CZ and Data-CH, the detection model achieved the highest performance for displaced fractures, with sensitivities of 0.873 and 0.774, respectively, with 5 FPs per scan, followed by nondisplaced fractures and buckle fractures. Old fractures were the most complicated type to detect, with sensitivities of 0.667 and 0.500, for Data-CZ and Data-CH, respectively. The details are shown in Table 3.

In addition, a radar map (Fig. 6) clearly shows that the performance of the detection model was better for Data-CZ than for Data-CH. The fracture detection results indicated that the detection of displaced fractures was better than that of other fracture types at different FP values [1,2,3,4, and 5], followed by nondisplaced fractures and buckle fractures. Old fractures are the most challenging type to detect. The F1 performance was higher than others and had significant differences (Data-CZ F1 vs. F2 P=0.000, F1 vs. F3 P=0.007, F1 vs. F4 P=0.011, and Data-CH F1 vs. F2 P=0.032, F1 vs. F3 P=0.006, F1 vs. F4 P=0.000). For the other three fracture types, there was no significant difference for Data-CZ (all P>0.05), and there was a significant difference for Data-CH (F2 vs. F3 P=0.013, F2 vs. F4 P=0.000, F3 vs. F4 P=0.015).

Radiologist Performance Using the DL Model

The performance of radiologists was evaluated with and without the DL model based on Data-CZ. The sensitivity for rib fractures diagnosed by the radiologist (SEN=0.731) was lower than that for the DL model (SEN=0.822), and the difference was significant (\({\chi }^{2}=6.5,P=0.01\)). The sensitivity of the radiologist was improved with the DL model (SEN=0.865) across all four fracture types compared to that in the case without the DL model, and the difference was significant (\({\chi }^{2}=15.5,P=0.00\)). With the help of the DL model, missing positive cases, such as nondisplaced fractures, were identified by the radiologist.

Discussion

We developed deep learning models for rib fracture detection on chest X-ray and evaluated the diagnostic efficacy of the models with two testing datasets. Among the two pretrained models, for both datasets, the pretrained DR model performed best, yielding a sensitivity of 0.822 for the CZ dataset and 0.748 for the CH dataset at an average of five FPs, which is beyond our expectation and better than the results of previous related studies.

Rib fractures are common and severe injuries in chest trauma cases, and some patients have an obvious manifestation of compound injuries. The threat to patients increases in complicated and aggravated cases [8, 23]. Therefore, there is a need to diagnose and treat these conditions as early as possible. In addition, rib fracture has a high missed diagnosis rate. Cho et al. reported a missed diagnosis rate of up to 20.7% based on CT images [24]. The missed diagnosis rate for DR is even higher (up to 33–50%) [7, 25]. The missed diagnosis of fractures not only affects patient prognosis but also leads to adverse medicolegal disputes. Multilayer spiral CT can improve the accuracy of rib fracture diagnosis by reconstructing multilayer scan data in 3D. However, in the first instance of injury, an X-ray is still the primary diagnostic modality for rib fractures due to the low radiation dose and high convenience and affordability.

Anna Majkowska et al. applied a deep learning model to detect fracture lesions at multiple sites in DR, including clavicle, shoulder, rib, and spine fractures and spanned acute, subacute, and chronic conditions [16]. Compared to manual reading, this model demonstrated higher sensitivity for acute fracture identification (with an AUC of 0.86). Among the different fractures in DR, rib fractures are the most challenging to detect, as they are easily affected by postural and anatomical factors. Our model reduced false positives with high sensitivity and detected four different types of fractures simultaneously. We obtained a sensitivity of 43.1% and a specificity of 92.8% for the public dataset ChestX-Ray14. We believe that this was due to some limitations of X-ray results, such as heavy organ overlap artifacts, and the limited information in X-ray images, resulting in poor performance for the deep learning model.

Therefore, we used a more straightforward and flexible deep learning model, the classical FCOS objective detection network [18], to identify fractures in orthopantomograms of chest DR. The efficiency of this model was significantly improved for the CZ and CH datasets. The sensitivity for the CZ data was 0.607 and 0.822 for one and five FPs on average, respectively; these values were within the acceptable range. In contrast, the sensitivity for CH data was 0.548 and 0.748 for one and five FPs on average, respectively. The experimental results showed that a pretrained model can considerably enhance rib fracture detection. Furthermore, our deep learning model is highly generalizable and can be applied in different hospitals.

Furthermore, we analyzed the detection performance of the models for four different fracture types. The sensitivities were highest for displaced fractures at 0.873 and 0.774 for five FPs per scan based on the CZ and CH test sets, respectively, and these results were consistent with those in other research. Because nondisplaced fractures and cortical bone distortions do not involve significant displacement, it is challenging for clinicians to accurately identify these two types of fractures. However, our model achieved unprecedented success in detecting both types of fractures. In contrast, our model was less sensitive for old fracture detection. We believe the reason may be linked to the small size of the old fracture sample. Moreover, old fractures are characterized by mature calluses, no clear fracture lines, and a morphology similar to that of the surrounding healthy ribs. Therefore, it is difficult to distinguish them from surrounding healthy ribs. In the future, the model will be further optimized, and the accuracy will be further improved as the sample size increases. In addition, only rib fracture detection was considered in this study, and it is equally important for clavicle, scapula, and spine fracture detection [26, 27]. In addition, the detection of severe complications due to trauma, such as pneumothorax issues, atelectasis, and cardiac great vessel injury, should be considered in future models [28,29,30,31]. Risk prediction models could also be established by combining the clinical semantic features of patients (e.g., blood pressure and respiration) [32]. It is believed that future multitasking models will be more suitable in clinical application scenarios to further benefit patients.

There were some limitations in this study. First, this study was retrospective, and although various types of fractures were detected, clinical work with cortical bone distortion and old lesions may not be of significant clinical significance for emergency management. Thus, further important features will be detected according to clinical needs. Second, we did not compare the performance of our model with physician-based models in terms of key factors, such as clinical outcomes and the time required to obtain a diagnosis. Finally, the size of the validation set selected in our model was relatively small. Additional data should be obtained, and prospective studies should be subsequently performed to validate the convolutional neural network (CNN) model.

Conclusion

A DL-based rib fracture detection model was proposed, which achieved good performance in detecting different rib fractures from chest X-ray based on multi-center/multi-parameter validation. The results illustrated that compared to traditional models, the DL model not only improved the diagnostic efficiency but also reduced the diagnostic time and eased the workload of radiologists. Therefore, it can be used to diagnose rib fractures from chest X-ray images with the assistance of artificial intelligence. In the future, we will study ways to incorporate clinical measures and medical images into 3D CNN architectures to achieve risk prediction for rib fracture patients.

Data Availability

The data that support the findings of this study are available on request from the corresponding author. The data are not publicly available due to privacy or ethical restrictions.

Abbreviations

- DR:

-

Digital radiography

- simCLR:

-

Simple framework for contrastive learning of visual representations

- FCOS:

-

Fully convolutional one-stage

- DL:

-

Deep learning

- FROC:

-

Free-response ROC

- IoU:

-

Intersection over union

- CNN:

-

Convolutional neural network

- TP:

-

True positive

- FP:

-

False positive

- FN:

-

False negative

References

Alkadhi H, Wildermuth S, Marincek B, Boehm T. Accuracy and time efficiency for the detection of thoracic cage fractures: volume rendering compared with transverse computed tomography images. Journal of computer assisted tomography. 2004;28(3):378-85.

Ten Duis K, IJpma F. Surgical Treatment of Snapping Scapula Syndrome Due to Malunion of Rib Fractures. The Annals of thoracic surgery. 2017;103(2):e143-e4.

Ahmad M, Delli Sante E, Giannoudis P. Assessment of severity of chest trauma: is there an ideal scoring system? Injury. 2010;41(10):981-3.

Murphy C, Raja A, Baumann B, Medak A, Langdorf M, Nishijima D, et al. Rib Fracture Diagnosis in the Panscan Era. Annals of emergency medicine. 2017;70(6):904-9.

Talbot B, Gange C, Chaturvedi A, Klionsky N, Hobbs S, Chaturvedi A. Traumatic Rib Injury: Patterns, Imaging Pitfalls, Complications, and Treatment-Erratum. Radiographics : a review publication of the Radiological Society of North America, Inc. 2017;37(3):1004.

Stojanovska J, Hurwitz Koweek L, Chung J, Ghoshhajra B, Walker C, Beache G, et al. ACR Appropriateness Criteria® Blunt Chest Trauma-Suspected Cardiac Injury. Journal of the American College of Radiology : JACR. 2020;17:S380-S90.

Livingston D, Shogan B, John P, Lavery R. CT diagnosis of Rib fractures and the prediction of acute respiratory failure. The Journal of trauma. 2008;64(4):905-11.

Omert L, Yeaney W, Protetch J. Efficacy of thoracic computerized tomography in blunt chest trauma. The American surgeon. 2001;67(7):660-4.

Weikert T, Noordtzij L, Bremerich J, Stieltjes B, Parmar V, Cyriac J, et al. Assessment of a Deep Learning Algorithm for the Detection of Rib Fractures on Whole-Body Trauma Computed Tomography. Korean journal of radiology. 2020;21(7):891-9.

Meng X, Wu D, Wang Z, Ma X, Dong X, Liu A, et al. A fully automated rib fracture detection system on chest CT images and its impact on radiologist performance. Skeletal radiology. 2021;50(9):1821-8.

Zhang B, Jia C, Wu R, Lv B, Li B, Li F, et al. Improving rib fracture detection accuracy and reading efficiency with deep learning-based detection software: a clinical evaluation. The British journal of radiology. 2021;94(1118):20200870.

Yamada Y, Maki S, Kishida S, Nagai H, Arima J, Yamakawa N, et al. Automated classification of hip fractures using deep convolutional neural networks with orthopedic surgeon-level accuracy: ensemble decision-making with antero-posterior and lateral radiographs. Acta orthopaedica. 2020;91(6):699-704.

Son D, Yoon Y, Kwon H, An C, Lee S. Automatic Detection of Mandibular Fractures in Panoramic Radiographs Using Deep Learning. Diagnostics (Basel, Switzerland). 2021;11(6).

Yoon A, Lee Y, Kane R, Kuo C, Lin C, Chung K. Development and Validation of a Deep Learning Model Using Convolutional Neural Networks to Identify Scaphoid Fractures in Radiographs. JAMA network open. 2021;4(5):e216096.

Bae J, Yu S, Oh J, Kim T, Chung J, Byun H, et al. External Validation of Deep Learning Algorithm for Detecting and Visualizing Femoral Neck Fracture Including Displaced and Non-displaced Fracture on Plain X-ray. Journal of digital imaging. 2021;34(5):1099-109.

Majkowska A, Mittal S, Steiner D, Reicher J, McKinney S, Duggan G, et al. Chest Radiograph Interpretation with Deep Learning Models: Assessment with Radiologist-adjudicated Reference Standards and Population-adjusted Evaluation. Radiology. 2020;294(2):421-31.

Huang Y, Liu W, Wang X, Fang Q, Wang R, Wang Y, et al. Rectifying Supporting Regions With Mixed and Active Supervision for Rib Fracture Recognition. IEEE transactions on medical imaging. 2020;39(12):3843-54.

Tian Z, Shen C, Chen H, He T. FCOS: A Simple and Strong Anchor-Free Object Detector. IEEE transactions on pattern analysis and machine intelligence. 2022;44(4):1922-33.

Rajpurkar P, Irvin J, Ball R, Zhu K, Yang B, Mehta H, et al. Deep learning for chest radiograph diagnosis: A retrospective comparison of the CheXNeXt algorithm to practicing radiologists. PLoS medicine. 2018;15(11):e1002686.

Chen T, Kornblith S, Norouzi M, Hinton G, editors. A Simple Framework for Contrastive Learning of Visual Representations. International Conference on Machine Learning (ICML); 2020 Jul 13–18; Electr Network2020.

Setio AAA, Traverso A, de Bel T, Berens MSN, van den Bogaard C, Cerello P, et al. Validation, comparison, and combination of algorithms for automatic detection of pulmonary nodules in computed tomography images: The LUNA16 challenge. Medical Image Analysis. 2017;42:1-13.

Liu J, Zhao G, Fei Y, Zhang M, Wang Y, Yu Y, editors. Align, Attend and Locate: Chest X-Ray Diagnosis via Contrast Induced Attention Network With Limited Supervision. 2019 IEEE/CVF International Conference on Computer Vision (ICCV).

Shulzhenko N, Zens T, Beems M, Jung H, O'Rourke A, Liepert A, et al. Number of rib fractures thresholds independently predict worse outcomes in older patients with blunt trauma. Surgery. 2017;161(4):1083-9.

Cho S, Sung Y, Kim M. Missed rib fractures on evaluation of initial chest CT for trauma patients: pattern analysis and diagnostic value of coronal multiplanar reconstruction images with multidetector row CT. The British journal of radiology. 2012;85(1018):e845-50.

Davis S, Affatato A. Blunt chest trauma: utility of radiological evaluation and effect on treatment patterns. The American journal of emergency medicine. 2006;24(4):482-6.

Thangaraju S, Tauber M, Habermeyer P, Martetschläger F. Clavicle and coracoid process periprosthetic fractures as late post-operative complications in arthroscopically assisted acromioclavicular joint stabilization. Knee surgery, sports traumatology, arthroscopy : official journal of the ESSKA. 2019;27(12):3797-802.

Spiegl U, Bork H, Grüninger S, Maus U, Osterhoff G, Scheyerer M, et al. Osteoporotic Fractures of the Thoracic and Lumbar Vertebrae: Diagnosis and Conservative Treatment. Deutsches Arzteblatt international. 2021;118(40):670-7.

Kim C, Lee G, Oh H, Jeong G, Kim S, Chun E, et al. A deep learning-based automatic analysis of cardiovascular borders on chest radiographs of valvular heart disease: development/external validation. European radiology. 2022;32(3):1558-69.

Thachuthara-George J. Pneumothorax in patients with respiratory failure in ICU. Journal of thoracic disease. 2021;13(8):5195-204.

Baltruschat I, Steinmeister L, Nickisch H, Saalbach A, Grass M, Adam G, et al. Smart chest X-ray worklist prioritization using artificial intelligence: a clinical workflow simulation. European radiology. 2021;31(6):3837-45.

Gassert F, Urban T, Frank M, Willer K, Noichl W, Buchberger P, et al. X-ray Dark-Field Chest Imaging: Qualitative and Quantitative Results in Healthy Humans. Radiology. 2021;301(2):389-95.

Holmes J, Wisner D, McGahan J, Mower W, Kuppermann N. Clinical prediction rules for identifying adults at very low risk for intra-abdominal injuries after blunt trauma. Annals of emergency medicine. 2009;54(4):575-84.

Funding

This work was supported by the National Natural Science Foundation of China (grant number (82271994&82001812)); a contract grant sponsor: Pyramid Talent Project of Shanghai Changzheng Hospital; the Shenkang capacity enhancement project (grant number (SHDC22022310-B)); the Military Commission surface project (grant number (22BJZ07)); the National Key Research and Development Program (2022YFC2410000); the Shanghai Science and Technology Commission (19411951300) and the National Health Commission Radiological Imaging Database Construction Project (grant number (YXFSC2022JJSJ010)).

Author information

Authors and Affiliations

Contributions

Our research is a joint project of medical and engineering, involving a lot of data collection, analysis, and processing, which is jointly participated by researchers from multiple centers. As the corresponding author, I promise that every paper author meets the requirements of the author. Hongbiao Sun, Xiang Wang, and Zheren Li completed the task of data annotation and the writing of the medical part of the paper. Aie Liu did the literature retrieval and the initial data collection and analysis. Shaochun Xu and ZhongXue completed the specific implementation of the algorithm and completed the writing of the algorithm. Qinling Jiang and Lei Chen completed the analysis of the algorithm output results and updated the iterative algorithm. Qingchu Li planned the involved direction of our whole research and continuously revised it. Jing Gong formulated our research concept and served as the general person in charge of the algorithm part. Lei Chen and Aie Liu completed the data cleaning and desensitization and made strict control on the data quality. Yi Xiao completed the review of data annotation and edited the written articles. As the general responsible person of the project, Shiyuan Liu was the guarantor for the completion of the whole project and reviewed the final articles. All the staff listed as authors fully participated in the research work and played a very important role in the research. Every link was carried out with the consent of all the staff, including the final version of the paper to be published at the end.

Corresponding authors

Ethics declarations

Ethics Approval and Consent to Participate

This study was performed with adherence to the principles of the Declaration of Helsinki. Approval was granted by the Ethics Committee of Shanghai Changzheng Hospital (No.2022SL071), and the requirement for informed consent of patients was waived due to the retrospective nature of the analysis and the anonymity of the data.

Competing Interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Hongbiao Sun, Xiang Wang, and Zheren Li contributed equally to this work.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sun, H., Wang, X., Li, Z. et al. Automated Rib Fracture Detection on Chest X-Ray Using Contrastive Learning. J Digit Imaging 36, 2138–2147 (2023). https://doi.org/10.1007/s10278-023-00868-z

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10278-023-00868-z