Abstract

It is important for a Cyber-Physical System (CPS) whose behavior is subject to uncertainty, caused by its unpredictable operating environment, to operate reliably. One way to ensure that a CPS handles such uncertainty during operation is to test it with model-based testing (MBT) techniques. However, existing MBT techniques do not explicitly capture uncertainty in test ready models, i.e., they do not capture the uncertain expected behavior of a CPS in the presence of environment uncertainty. To fill this gap, we present an Uncertainty-Wise test-modeling framework, named UncerTum, to create test ready models that support MBT of CPSs facing uncertainty. UncerTum relies on the definition of a UML profile [the UML Uncertainty Profile (UUP)] and a set of UML Model Libraries extending the UML profile for Modeling and Analysis of Real-Time and Embedded Systems (MARTE). UncerTum also benefits from the UML Testing Profile V.2 to support standard-based MBT. UncerTum was evaluated with two industrial CPS case studies, one real-world case study, and one open-source CPS case study from the following three perspectives: (1) Completeness and Coverage of the profiles and Model Libraries with respect to the concepts defined in their underlying models, i.e., the uncertainty conceptual model for CPSs (U-Model) and MARTE, (2) Effort required to model uncertainty with UncerTum, and (3) Correctness of the developed test ready models, which was assessed via model execution. Based on the evaluation, we conclude that we were able to model all the uncertainties identified in the four case studies, which indicates that UncerTum is sufficiently complete. In terms of modeling effort, we found that, on average, UncerTum requires 18.5% more time to apply stereotypes from UUP on test ready models.


1 Introduction

“Cyber-Physical Systems (CPS) are integrations of computation, networking, and physical processes. Embedded computers and networks monitor and control the physical processes, with feedback loops where physical processes affect computations and vice versa” [1]. These systems often operate in an unpredictable physical environment, which leaves them vulnerable to uncertainty during operation [2,3,4]. CPSs are often designed and developed with assumptions about their physical operating environment that may not hold during operation. Currently, a common practice is to develop CPSs by integrating physical units without knowing their internals. Consequently, even during testing, assumptions are often made about the expected behavior of CPSs and their operating environment. Thus, we argue that when applying model-based testing (MBT), uncertainty (i.e., “lack of knowledge” [5, 6] about the internal behavior of a CPS, its constituent physical units, and its operating environment) must be explicitly captured in test ready models, i.e., models that represent the expected behavior of the CPS under test and are detailed enough for test cases to be generated from them. We took a subjective approach to capturing uncertainty, since test ready models are created by one or more test modelers who, while creating them, make assumptions about the internal behavior of a CPS, its physical units, and its operating environment based on their beliefs at the time the models are created.

Uncertainty in the context of CPSs is, in general, an immature area of research, and several efforts to study uncertainty in CPSs have only recently begun [7]. In this paper, we report one such effort, in which we aim to devise a set of modeling methodologies for explicitly creating test ready models (with uncertainty) of CPSs under test, with the ultimate aim of automatically generating test cases from these models with MBT techniques. We report an Uncertainty-Wise Modeling Framework, named UncerTum (Fig. 1), which was developed as part of an EU project [8]. The project has various types of partners contributing to the overall approach, such as researchers, use case providers, a tool vendor, and test bed providers, as shown in Fig. 1. UncerTum, developed by researchers, supports the modeling of test ready models with known uncertainty based on uncertainty test requirements provided by use case providers (Fig. 1). In the project, the first use case provider is Future Position X, Sweden [9], which provides the GeoSports (GS) CPS case study from the healthcare domain, whereas the second use case provider is ULMA Handling Systems [10], which provides the Automated Warehouse (AW) case study from the logistics domain.

The core of UncerTum is the UML Uncertainty Profile (UUP) (Fig. 1), which is defined based on the uncertainty conceptual model for CPSs (U-Model) [7]. The UUP profile consists of three parts (i.e., the Belief, Uncertainty, and Measurement profiles) and an internal library containing the enumerations required in the profiles. In addition, UncerTum defines an extensive set of UML Model Libraries (Model Libraries in Fig. 1), either by extending the UML profile for Modeling and Analysis of Real-Time and Embedded Systems (MARTE) [11] or by defining new ones not covered by existing standards. The key libraries include the Uncertainty Pattern Library, Measure Library, and Time Library. Moreover, UncerTum relies on the UML Testing Profile (UTP) V.2 to model test ready models for the purpose of enabling MBT. Finally, UncerTum includes a set of guidelines (Fig. 1) with recommendations and alternative scenarios for applying the proposed modeling notations.

UncerTum was deployed on IBM Rational Software Architect (RSA) [12], as shown in Fig. 1. Once test ready models are created, they are input into the CertifyIt [13] MBT tool, which is a plugin to IBM RSA. With this tool, a set of executable test cases can be generated based on various test strategies devised and prototyped by researchers. Both the implementation of UncerTum and the test case generation strategies will be integrated into CertifyIt by the tool vendor (EGM [14]). Test bed providers provide facilities to execute the generated test cases on the provided CPS case studies. These include a Test Infrastructure (physical infrastructures and test emulators/simulators) and Test APIs to control and monitor both the test infrastructure itself and the CPS being tested. In the context of the U-Test project, Nordic Med Test [15] (NMT) provides the facility to execute test cases on GS, whereas for AW, ULMA [10] and IK4-Ikerlan [16] provide the corresponding facility. Finally, the tool vendor implements the Test Case Execution Platform, which executes test cases on the CPS (Fig. 1). Note that this paper focuses only on UncerTum, which is indicated by the dashed box in Fig. 1 (i.e., “Scope of the paper”); the remaining parts are ongoing work.

UncerTum was evaluated with two industrial case studies, one real-world case study, and one open-source case study from the literature. The first two case studies, GS and AW, are available to us as part of the project, whereas the third case study is the embedded Videoconferencing Systems (VCSs) developed by Cisco, Norway [17], which was used in the second author’s previous work [18]. We have access to several VCSs in our research laboratory owing to our long-term collaboration with Cisco, and we modeled them ourselves for the purpose of evaluating UncerTum. Thus, this is a real case study, but the evaluation of UncerTum with it was not performed in a real industrial setting. The GS and AW case studies, however, were performed in real industrial settings. The fourth case study (SafeHome) is an open-source case study from [19], which we extended for our purpose. With these case studies, we performed the evaluation from three perspectives: (1) Completeness and Coverage of UUP/Model Libraries with respect to U-Model and MARTE, (2) Effort required to model uncertainty using UncerTum, in terms of the number of model elements and of modeling time, and (3) Correctness of the developed models, assessed by executing the models.

In our previous work, we developed a generic conceptual model (called U-Model) to understand uncertainty independently of its final use [7]. To keep the paper self-contained, we provide U-Model and the definitions of its concepts in “Appendix A” and refer to it when necessary. In this paper, U-Model was implemented as UUP, one of the key contributions of this paper, to enable the development of test ready models that support MBT. Other contributions include a set of Model Libraries (partially extending MARTE) for modeling, for example, various types of uncertainties and their measures, and a set of precise guidelines for creating test ready models using UUP, UTP V.2, and the Model Libraries. Note that the development of UTP V.2 is not a contribution of this paper; rather, its application to create test ready models with uncertainty is one of our contributions. This is also one of the first papers reporting the application of UTP V.2 to industrial case studies. Another contribution of the paper is our modeling approach for checking the correctness of test ready models through model execution. Finally, we consider the extensive evaluation of the applicability of UncerTum with the three real case studies (GS, AW, and VCS) a contribution as well.

The rest of the paper is organized as follows. Section 2 presents the background, followed by a running example (Sect. 3). Section 4 presents the overview of UncerTum. Section 5 discusses details of the UUP profile, and Sect. 6 discusses the Model Libraries. Section 7 presents the guidelines for applying UncerTum. Section 8 presents our modeling approach for checking the correctness of test ready models with model execution. Section 9 provides the evaluation, and Sect. 10 presents the related work. We conclude the paper in Sect. 11.

2 Background

2.1 Cyber-Physical Systems and testing levels

A CPS is defined in [7] as: “A set of heterogeneous physical units (e.g., sensors, control modules) communicating via heterogeneous networks (using networking equipment) and potentially interacting with applications deployed on cloud infrastructures and/or humans to achieve a common goal” and is conceptually shown in Fig. 2. Uncertainty can occur at the following three logical levels [7] (Fig. 2): (1) Application level, due to events/data originating from an application (one or more software components) of a physical unit of the CPS; (2) Infrastructure level, due to data transmission via an information network enabled through networking infrastructure and/or cloud infrastructure; (3) Integration level, due to either interactions of applications across the physical units at the application level, or interactions of physical units across the application and infrastructure levels. Note that we chose the definition of CPS from [7] because it was defined in the context of our project, was further used to define the three levels of uncertainty in CPSs that are modeled in this paper, and conforms to the well-known definition in [1].

2.2 U-Model

In our previous work [7], to understand uncertainty in CPSs, we developed a conceptual model called U-Model that defines uncertainty, its associated concepts, and their relationships at a conceptual level. Some of the U-Model concepts were extended to support MBT of CPSs under uncertainty at all three levels (Sect. 2.1). U-Model was developed based on an extensive review of the existing literature on uncertainty from several disciplines, including philosophy, healthcare, and physics, and on two industrial CPS case studies from the two industrial partners of the U-Test EU project. In this paper, we implement U-Model as UncerTum to support the construction of test ready models with uncertainty. Details of U-Model are given in [7], and part of U-Model is provided in “Appendix A” to keep this paper self-contained.

2.3 UML Testing Profile (UTP)

UML Testing Profile (UTP) [20, 21] is an Object Management Group (OMG) standard for enabling MBT. With UTP, the expected behavior of a system under test can be modeled, and test cases can be derived from it. UTP can also be used to model test cases directly. Models specified with UTP can be transformed into executable test cases using specialized test generators. Since UTP is defined as a UML profile, it is often applied to UML sequence diagrams, activity diagrams, and state machines to describe the behaviors of a system under test or of test cases. Its key purpose is to introduce testing-related concepts [e.g., Test Case, Test Data, and Test Design Model, and Model Libraries such as the various types of test case Verdict (pass, fail)] into UML models to enable the automated generation of test cases. UTP V.2 [21] is the latest revision of the UTP profile and is conceptually composed of five packages of concepts: Test Analysis and Design, Test Architecture, Test Behavior, Test Data, and Test Evaluations. Stereotypes have been defined for some of the concepts in these packages. As with other modeling notations, it has never been an objective to exhaustively apply all the stereotypes when using UTP V.2 to annotate UML models with testing concepts [21]. Which stereotypes to apply and how to apply them are, however, problem/purpose specific and should be decided by the users of the profile. More information about the UTP V.2 standardization and the team can be found in [22, 23].

To enable MBT of CPSs under uncertainty, we rely on UTP V.2 to model the testing aspect of test ready models. In our context, only a subset of UTP V.2 was used.

3 Running example

To illustrate UncerTum throughout the paper, we present a running example in this section, which is a simplified security function of the SafeHome system described in [19]. The developed test ready model of the running example includes a class diagram (Fig. 3), a composite structure diagram (Fig. 4), and a set of state machines (Figs. 5, 6, 7) using IBM Rational Software Architect (RSA) 9.1 [12]. For the sake of simplicity, we only show one security function related to intrusion detection. Notice that, even though we present all the diagrams of the model of the running example in this section (including the application of the profiles and Model Libraries), we illustrate them using the running example when they are discussed in later sections.

In general, the security system controls and configures Alarm and related Sensors through their corresponding interfaces (class diagram in Fig. 3, detailed explanation in Table 1). In Fig. 4, we show a composite structure of the security system. Notice that the alarm and sensors do not talk to each other directly. Instead, they communicate via the provided interface of the port of the system: ISecuritySystem. For example, the security system receives the IntrusionOccurred signal via portSecurity, which is sent by a sensor from portSensor when an intrusion is detected (see the implementation of effect notifyIntrusion in Fig. 6).

The behaviors of the alarm, the sensors, and the system were specified by the first author of the paper (modeled as the belief agent in Fig. 10) as three state machines, shown in Figs. 5, 6, and 7, respectively. The alarm state machine has two states: AlarmDeactivated and AlarmActivated. AlarmDeactivated represents the state in which the alarm is not ringing, whereas AlarmActivated denotes that the alarm is ringing. The sensor state machine (Fig. 6) has two states: SensorDeactivated denotes that a sensor is deactivated and does not detect intrusion, whereas SensorActivated denotes that a sensor is activated to sense intrusion. The security system state machine (Fig. 7) has a composite state MonitoringAndAlarm with two concurrent regions, and a set of sequential states (e.g., Idle and Ready). The top region (Monitor Intrusion) of the MonitoringAndAlarm composite state has two states, Normal and IntrusionDetected, which represent that an intrusion has not been detected and has been detected, respectively. The bottom region (Timer Control) has three states, TimerStopped, TimerStarted, and PoliceNotified, representing that the timer for notifying the police is stopped (TimerStopped), that the timer is running and waits 3 min before notifying the police (TimerStarted), and that the police have been notified (PoliceNotified).

These three state machines communicate via signals through the ports defined in the composite structure (Fig. 4). A typical security-breach scenario is as follows: (1) when a sensor is in the SensorActivated state and an intrusion is detected, it sends the IntrusionOccurred signal to the security system via the effect notifyIntrusion defined on the self-transition of the SensorActivated state (Fig. 6, A; see the UML Action Language (UAL) [24] code in the comment in Fig. 6); (2) when the security system receives the IntrusionOccurred signal, it transitions from the Normal state to the IntrusionDetected state (Fig. 7 B.1). In parallel, as shown in the bottom region (Timer Control) of the MonitoringAndAlarm composite state (Fig. 7), the system sends the StartAlarm signal to the Alarm state machine via activateAlarm (Fig. 7 and effect* in Table 1) and triggers StartTimer() when entering the IntrusionDetected state (Figs. 7 B.2, 8), which leads to the transition from TimerStopped to TimerStarted (Fig. 7). From TimerStarted, after 3 min (time event), the system notifies the police and transitions to PoliceNotified; (3) the Alarm state machine receives the StartAlarm signal in the AlarmDeactivated state, activates the alarm, and transitions to AlarmActivated.
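To make the message flow of this scenario concrete, the following is a minimal Python sketch that hand-codes the three state machines as plain classes. It is not generated from the RSA model and does not follow full UML execution semantics: the single FIFO queue standing in for the ports of Fig. 4, the names `run_scenario` and `tick`, and the use of seconds for the 3-min time event are all assumptions made for illustration.

```python
from collections import deque


class Alarm:
    """Alarm state machine (Fig. 5)."""

    def __init__(self):
        self.state = "AlarmDeactivated"

    def receive(self, signal):
        # Step (3): StartAlarm is consumed only in AlarmDeactivated.
        if self.state == "AlarmDeactivated" and signal == "StartAlarm":
            self.state = "AlarmActivated"


class SecuritySystem:
    """The two concurrent regions of MonitoringAndAlarm (Fig. 7)."""

    TIMEOUT_S = 180  # time event: 3 min before the police are notified

    def __init__(self, queue):
        self.queue = queue
        self.monitor = "Normal"      # region Monitor Intrusion
        self.timer = "TimerStopped"  # region Timer Control
        self.elapsed_s = 0

    def receive(self, signal):
        # Step (2): transition B.1 from Normal to IntrusionDetected.
        if self.monitor == "Normal" and signal == "IntrusionOccurred":
            self.monitor = "IntrusionDetected"
            self.queue.append(("alarm", "StartAlarm"))  # activateAlarm
            self.timer = "TimerStarted"  # StartTimer(): TimerStopped -> TimerStarted
            self.elapsed_s = 0

    def tick(self, seconds):
        # Advance simulated time; fire the 3-min time event in TimerStarted.
        if self.timer == "TimerStarted":
            self.elapsed_s += seconds
            if self.elapsed_s >= self.TIMEOUT_S:
                self.timer = "PoliceNotified"


class Sensor:
    """Sensor state machine (Fig. 6), starting in SensorActivated."""

    def __init__(self, queue):
        self.queue = queue
        self.state = "SensorActivated"

    def sense(self, intrusion):
        # Step (1): self-transition A with effect notifyIntrusion.
        if self.state == "SensorActivated" and intrusion:
            self.queue.append(("system", "IntrusionOccurred"))


def run_scenario(wait_s=180):
    queue = deque()
    alarm, system, sensor = Alarm(), SecuritySystem(queue), Sensor(queue)
    receivers = {"alarm": alarm, "system": system}
    sensor.sense(intrusion=True)
    while queue:  # deliver queued signals in FIFO order
        target, signal = queue.popleft()
        receivers[target].receive(signal)
    system.tick(wait_s)
    return system.monitor, system.timer, alarm.state
```

Calling `run_scenario()` returns `('IntrusionDetected', 'PoliceNotified', 'AlarmActivated')`, whereas `run_scenario(wait_s=60)` leaves the Timer Control region in TimerStarted because the time event has not yet fired.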

Figures 9 and 10 illustrate how we model uncertainty using UUP/Model Libraries, whereas Figs. 3 and 4 show the application of the CPS Testing Levels profile and UTP V.2 on the models of the running example. As an example, the detailed description of the classifier SecuritySystem is shown in Table 1. An example of using the Model Libraries is also shown in Table 1: on transition B.1, the probability of successful intrusion detection is measured on a seven-point probability scale (Probability_7Scale, defined in the Probability library) as VeryLikely (see the Transition row in Table 1 and Success:ReceiveIntrsionOccurred in Fig. 9). More details are presented in the following sections.

4 Overview of UncerTum

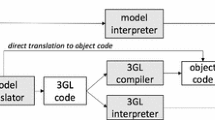

Figure 11 shows the overall flow of using UncerTum, and an overview of UncerTum is presented in Fig. 12. Step 1 in Fig. 11 is about creating test ready models using the UML profiles (e.g., UUP), Model Libraries, and guidelines defined in UncerTum. Section 5 presents the profiles in detail, Sect. 6 presents the Model Libraries, and Sect. 7 presents the guidelines. Step 2 in Fig. 11 involves developing executable test ready models to support the validation of these models, based on the guidelines defined in Sect. 8.1. Step 3 executes these executable test ready models; if errors are found, a test modeler can use our guidelines defined in Sect. 8.2 to fix them. Note that our framework only supports test modeling, i.e., creating test ready models that can be used to generate executable test cases. This type of modeling is less detailed than, e.g., modeling for automated code generation, mainly because during test modeling we are only interested in modeling test interfaces (e.g., APIs to send stimuli to the system and to capture state variables) and the expected behavior of a system.

The core of UncerTum is the implementation of U-Model (the relevant part of U-Model is in “Appendix A” and complete details are in [7]) as UUP (Fig. 12). U-Model was used as the reference model for creating UUP. While developing UUP based on U-Model, we made three types of decisions: (1) some concepts from U-Model (e.g., Uncertainty) were incorporated into UUP as is; (2) some concepts from U-Model (e.g., Belief, which is an abstract concept) were not implemented in UUP; (3) some concepts from U-Model were refined in UUP. For example, the BeliefStatement concept was implemented as «BeliefElement» in UUP since it corresponds to an explicit representation of model elements in the modeling context. At a high level, the core part of U-Model is implemented as UUP, comprising three parts: Belief, Uncertainty, and Measurement. All these profiles import Internal_Library, which defines the enumerations required in the profiles. The framework also includes a small CPS Testing Levels profile, which permits modeling specific aspects of the three testing levels of CPSs, i.e., Application, Infrastructure, and Integration, specifically for MBT.

The framework also consists of three UML Model Libraries: Measure Library, Pattern Library, and Time Library (which extend MARTE [11]). We would like to highlight that we imported Time Library from MARTE without any modifications and thus we will not describe it in the paper. The framework relies on UTP V.2 to bring necessary MBT concepts to test ready models. Finally, the framework provides a set of guidelines on how to use its modeling notations to construct test ready models for enabling MBT of CPSs under uncertainty.

5 UUP and CPS testing levels profile

This section presents UUP, whose modeling notations are composed of stereotypes and classes for Belief (Sect. 5.1), Uncertainty, and Measurement (Sect. 5.2), as shown in Figs. 13, 14, and 15. The complete profile documentation (including constraints) is provided in [25], and the mapping between concepts in UUP and U-Model is provided in Table 2. We also present the CPS Testing Levels profile in Sect. 5.3. Note that in this section we describe the UUP concepts at a high level; please refer to the definitions of the U-Model concepts in “Appendix A” and the detailed profile specification in [25].

5.1 UUP belief

The Belief part is one of the key components of UUP, since we focus on subjective uncertainty (“lack of knowledge”), where one or more test modelers (BeliefAgent(s), see “Appendix A”) create test ready models of a CPS based on their assumptions (Belief, see “Appendix A”) about the expected behavior of the CPS and its operating environment. The Belief part of UUP thus defines concrete concepts for modeling the Belief of test modelers with UML. As shown in Fig. 13, it implements the high-level concepts defined in U-Model:BeliefModel provided in “Appendix A.1” (the mapping is provided in Table 2, and further discussion is provided in Sect. 9.2.1). As shown in Fig. 13 and Table 2, five stereotypes are defined, among which «BeliefElement» is the key stereotype, associated with various UML metaclasses. This stereotype signifies which UML model elements represent the belief of belief agent(s). Generally speaking, any model element may represent a belief, but in the context of our work, we only extend the UML metaclasses required to support MBT. For example, a StateMachine (a subtype of metaclass Behavior) can itself be a belief element (i.e., the expected state-based behavior of a CPS and its operating environment), so that «BeliefElement» can be applied to it to characterize it with additional information, such as the extent to which a test modeler (stereotyped with «BeliefAgent») is confident about the state machine (i.e., beliefDegree of BeliefStatement), all uncertainties (i.e., Uncertainty) associated with the state machine, and their Measurements. In our context, we extend UML state machines; however, «BeliefElement» can also be used, for example, with activity and sequence diagrams if needed. We intentionally kept the profile generic (e.g., by making «BeliefElement» extend the UML metaclass Behavior) so that MBT techniques based on different diagrams can be defined when needed.

If there is evidence, e.g., existing data, supporting the belief, «Evidence» can be used. It is defined to capture any evidence supporting the definition of a measurement for an uncertainty. The stereotype extends the UML metaclass Element, implying that any UML model element (e.g., Class) can be used to specify evidence, e.g., as a ValueSpecification. Each uncertainty is also associated with a set of indeterminacy sources (definition in “Appendix A”), which can be explicitly specified using «IndeterminacySource» and classified with the enumeration IndeterminacyNature (Fig. 13), as defined in “Appendix A.”

The profile also implements OCL constraints defined in U-Model. For example, as shown in Fig. 13, each beliefDegree (an instance of Measurement) of a «BeliefStatement» must have exactly one measure associated with it, which can be specified in three different ways: a «Measure» (via attribute measure of Measurement), DataType (via measureInDT) or Class (via measureInDTViaClass). This OCL constraint is given:
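Independently of its OCL formulation (the complete constraints are documented in [25]), the intent of this rule can be illustrated with a small Python sketch. The `Measurement` class below is a hypothetical stand-in for the UUP element and reuses only the three attribute names mentioned above; `has_exactly_one_measure` is an illustrative name, not part of UUP.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Measurement:
    """Hypothetical stand-in for a UUP Measurement used as a beliefDegree."""
    measure: Optional[str] = None              # a «Measure»-stereotyped element
    measureInDT: Optional[str] = None          # a UML DataType
    measureInDTViaClass: Optional[str] = None  # a UML Class


def has_exactly_one_measure(m: Measurement) -> bool:
    """True iff exactly one of the three alternative measure slots is set."""
    slots = (m.measure, m.measureInDT, m.measureInDTViaClass)
    return sum(slot is not None for slot in slots) == 1
```

For instance, a belief degree measured with the Probability_7Scale data type passes the check (assuming it is referenced via `measureInDT`), while a `Measurement` with no measure, or with both `measure` and `measureInDT` set, is rejected.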

In the running example, the belief agent (Fig. 10) is Man_Simula (stereotyped with «BeliefAgent»), who defines three state machines: one for the alarm, one for the sensors, and one for the security system itself. As shown in Table 1, «BeliefElement» is applied to the IntrusionOccurred transition from Normal to IntrusionDetected (Fig. 7 B.1). The belief agent of this belief element is specified as class Man_Simula (stereotyped with «BeliefAgent», shown in Fig. 10). The belief degree of this belief element is specified as the value specification «VeryLikely» and measured with Probability_7Scale. The belief element has one occurrence uncertainty, which is associated with «BeliefElement, Cause» notifyIntrusion of «IndeterminacySource» Sensor (Table 1).
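The annotations of transition B.1 described above can be pictured as plain data. The following Python sketch is purely illustrative: the class names, their snake_case fields, and the string `"Occurrence"` for the uncertainty kind are assumptions rather than the UUP metamodel; the sketch only records which model element plays which role.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class UncertaintyInfo:
    kind: str                  # assumed literal of UncertaintyKind
    indeterminacy_source: str  # element stereotyped «IndeterminacySource»
    cause: str                 # element stereotyped «BeliefElement, Cause»


@dataclass
class BeliefElementInfo:
    element: str         # UML element carrying «BeliefElement»
    belief_agent: str    # class stereotyped «BeliefAgent»
    belief_degree: str   # value of the belief degree
    degree_measure: str  # data type used to measure the belief degree
    uncertainties: List[UncertaintyInfo] = field(default_factory=list)


b1 = BeliefElementInfo(
    element="IntrusionOccurred: Normal -> IntrusionDetected (B.1)",
    belief_agent="Man_Simula",
    belief_degree="VeryLikely",
    degree_measure="Probability_7Scale",
    uncertainties=[UncertaintyInfo("Occurrence", "Sensor", "notifyIntrusion")],
)
```

Keeping the belief degree and its measure as separate fields mirrors the distinction between a value (VeryLikely) and the scale it is drawn from (Probability_7Scale).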

5.2 UUP uncertainty and measurement

The second key component of UUP implements the concepts related to Uncertainty (in Fig. 14, a «BeliefElement» is composed of Uncertainty) and is presented in Fig. 14. In addition, each Uncertainty may have associated measurements, which are captured in the Measurement part shown in Fig. 15. In Fig. 14, the key element is Uncertainty, which is characterized by a list of attributes such as kind (typed with enumeration UncertaintyKind), indicating the particular type of uncertainty. All of the attributes (except for kind and field) are optional. For example, an uncertainty may or may not have an indeterminacy source (i.e., indeterminacySource as defined in “Appendix A”).

The U-Model concepts of Effect, Pattern, Lifetime, and Risk (“Appendix A”) can be specified with UUP in different ways. For example, one can specify the effect (i.e., the result of an uncertainty, as defined in “Appendix A”) of an uncertainty simply as a string (attribute effect of Uncertainty). Alternatively, one can create a UML model element, stereotype it with «Effect», and associate the uncertainty with it (i.e., via referredEffect). More details regarding the possible alternatives can be found in Sect. 7.

«IndeterminacySource», «BeliefStatement», Uncertainty, «Effect», and «Risk» can be further elaborated with Measurement. A measurement can be specified in four different ways: (1) as a string (attribute measurement of class Measurement), (2) as a value specification (measurementInVS), (3) as a package stereotyped with a subtype of «Measurement», or (4) as a constraint stereotyped with «MeasurementConstraint». «Measurement» is further classified into five subtypes, corresponding to the five types of elements to be measured.

«Measure» is defined to classify different types of measures and to provide users with the option of denoting classes and data types with concrete measure types such as «EffectMeasure». Such a stereotyped class or data type is linked via Measurement to «IndeterminacySource», «Effect», Uncertainty, «Risk», or «BeliefStatement».

A set of OCL constraints has been implemented in UUP. For example, an Element with «Effect» applied should be referred to at least once via the “referredEffect” attribute of an Uncertainty instance:
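Leaving the OCL formulation to the profile documentation [25], the intent of this rule can be sketched in Python as a check that returns the «Effect» elements that no Uncertainty refers to. The `Uncertainty` class and the function name `unreferenced_effects` are hypothetical, and we assume that referredEffect may hold several elements.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Optional


@dataclass
class Uncertainty:
    """Hypothetical stand-in for a UUP Uncertainty instance."""
    name: str
    effect: Optional[str] = None                             # effect as plain text
    referredEffect: List[str] = field(default_factory=list)  # «Effect» elements


def unreferenced_effects(effect_elements: Iterable[str],
                         uncertainties: Iterable[Uncertainty]) -> List[str]:
    """Return «Effect» elements that no Uncertainty refers to via referredEffect.

    An empty list means every «Effect» element is referred to at least once.
    """
    referred = {e for u in uncertainties for e in u.referredEffect}
    return [e for e in effect_elements if e not in referred]
```

In the running example, `unreferenced_effects(["ActivatedAlarm"], [Uncertainty("IntrusionOccurred", referredEffect=["ActivatedAlarm"])])` returns an empty list, so the rule holds.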

For the running example, «BeliefElement, Effect» ActivatedAlarm is associated with Uncertainty of «BeliefStatement» IntrusionOccurred via the “referredEffect” attribute (Table 1).

5.3 CPS testing levels profile

We define a small CPS Testing Levels profile with three stereotypes (Fig. 16) to denote which of the three levels a model element belongs to: «ApplicationElement», «InfrastructureElement», and «IntegrationElement». This enables a test generator to use different mechanisms, if needed, to control and access elements at different levels. For example, infrastructure-level variables may be accessed with different tools/APIs than application-level variables. All three stereotypes extend the UML metaclass Element, so they can be applied to a class, a state, a state machine, and many other model elements.

Note that this profile is defined to enable MBT of CPSs under uncertainty at the three logical levels; we do not intend to break down CPSs according to their system architectures by denoting physical units, sensors, networks, etc. For example, class Sensor in Fig. 3 is stereotyped with «IndeterminacySource» and «InfrastructureElement», meaning that sensors are infrastructure elements. As shown in Fig. 4, the composite structure of the system describes the interactions between the infrastructure elements (Alarm and Sensors) and the application-level elements portSensor, portAlarm, and portSecurity, which are typed by three interfaces (i.e., ISensor, IAlarm, and ISecuritySystem), as shown in Fig. 3. This is why the composite structure is stereotyped as «IntegrationElement».
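The idea of a test generator choosing different control and access mechanisms per level can be sketched as follows. This is a hypothetical Python illustration, not part of CertifyIt or UncerTum: the accessor descriptions in `ACCESSORS` are placeholders, and the stereotype names are taken from the Sensor and composite-structure examples above.

```python
from enum import Enum
from typing import Iterable


class TestingLevel(Enum):
    # Stereotype names of the CPS Testing Levels profile.
    APPLICATION = "ApplicationElement"
    INFRASTRUCTURE = "InfrastructureElement"
    INTEGRATION = "IntegrationElement"


# Hypothetical access mechanisms a test generator might bind per level.
ACCESSORS = {
    TestingLevel.APPLICATION: "application-level test API",
    TestingLevel.INFRASTRUCTURE: "infrastructure monitoring tool",
    TestingLevel.INTEGRATION: "integration test harness",
}


def accessor_for(stereotypes: Iterable[str]) -> str:
    """Select an access mechanism from the stereotypes applied to an element.

    Non-level stereotypes (e.g. «IndeterminacySource») are ignored; if several
    level stereotypes are present, the first in declaration order wins.
    """
    applied = set(stereotypes)
    for level in TestingLevel:
        if level.value in applied:
            return ACCESSORS[level]
    raise ValueError("no CPS Testing Levels stereotype applied")
```

For class Sensor, `accessor_for(["IndeterminacySource", "InfrastructureElement"])` selects the infrastructure mechanism; an element without any level stereotype raises `ValueError`, which makes missing annotations visible early.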

6 Model Libraries

To model uncertainty with advanced modeling features, we define three Model Libraries that can be used together with UUP to model uncertainty Patterns (Fig. 20), uncertainty Measurements (corresponding to Ambiguity, Vagueness, and Probability in Figs. 17, 18, and 19, respectively), and Time-related properties. The Measure, Pattern, and Time libraries import the MARTE_PrimitiveTypes library [11] to facilitate the expression of data in the CPS domain, such as Real. In addition, the Measure library adapts the operations of NFP_CommonType of MARTE [11] related to probability distributions. The Pattern library imports elements related to Pattern from the BasicNFP_Types library of MARTE [11] (e.g., AperiodicPattern) and further extends them. For example, the Transient pattern does not exist in MARTE [11] and has been newly defined. The Time library imports the time concepts from the MARTE_DataTypes library [11] to facilitate the expression of time, e.g., Duration and Frequency; thus, we do not discuss these in this paper.

6.1 Measure Libraries

We define three measure packages (Probability, Ambiguity, and Vagueness) to facilitate modeling with different uncertainty measures (Figs. 17, 18, 19; Table 3). Note that in U-Model (Appendix A), these three concepts were defined only at a very high level; no breakdown of Probability, Ambiguity, and Vagueness was provided in U-Model. In this paper, we substantially extended and implemented the detailed measures belonging to these three categories/packages, based on various theories such as Fuzzy Set and Probability Theory.

In the Ambiguity library, we define the data types corresponding to the relevant Ambiguity measures published in the literature (Fig. 17). Since these measures are well known, we do not provide further details in this paper; however, interested readers may consult the provided references listed in Table 3 for more details and the technical report corresponding to this paper [25]. The concepts of the fuzzy set theory [26] are defined in the Vagueness library (Fig. 18), and Table 3 lists the relevant references.
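For readers less familiar with fuzzy set theory, the core notion behind vagueness measures is a membership degree in [0, 1] rather than a crisp yes/no. The Python sketch below uses a triangular membership function, one common textbook shape; it is not taken from the Vagueness library, and the function name and parameters `a`, `b`, `c` are illustrative assumptions.

```python
def triangular_membership(x: float, a: float, b: float, c: float) -> float:
    """Membership degree of x in a triangular fuzzy set with a < b < c.

    The degree rises linearly from 0 at a to 1 at the peak b and falls
    back to 0 at c; values outside (a, c) have degree 0.
    """
    if not a < b < c:
        raise ValueError("expected a < b < c")
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)
```

With `(a, b, c) = (0, 5, 10)`, the value 2.5 belongs to the set with degree 0.5, the peak 5 with degree 1.0, and 12 with degree 0.0.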

Various data types related to the probability are defined in the Probability library (Fig. 19). All the modeled probability distributions are well known, and thus we do not present further details in this paper. Some details of these distributions are provided in the technical report corresponding to this paper [25]. The other data types such as Percentage, Probability, Probability_Interval, and different qualitative scales of probability (e.g., Probability_4Scale) are from basic statistics and thus are not further explained.

For example, as shown in Fig. 9, the IndeterminacyDegree of Sensor_IntrusionSensed (the self-transition of SensorActivated in Fig. 6), which is used to measure the occurrence of Sensor successfully sensing an intrusion, is expressed as a BeliefInterval [27] composed of a belief of 97% and a plausibility of 99%; BeliefInterval is predefined in the Ambiguity library (Fig. 17). Further details and references are provided in the technical report corresponding to this paper [25].
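In Dempster-Shafer evidence theory, a belief interval [Bel(A), Pl(A)] brackets the support for a proposition A, with 0 ≤ Bel(A) ≤ Pl(A) ≤ 1 and Pl(A) = 1 - Bel(not A). The Python sketch below encodes the 97%/99% example under these standard constraints; the class and its `width` and `disbelief` helpers are our own illustration, not the Ambiguity library's definition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BeliefInterval:
    """Illustrative belief interval [belief, plausibility] from evidence theory.

    Bounds are fractions in [0, 1]. The field names follow the text, but this is
    a sketch, not the data type defined in the Ambiguity library.
    """
    belief: float
    plausibility: float

    def __post_init__(self) -> None:
        # Evidence theory requires 0 <= Bel(A) <= Pl(A) <= 1.
        if not 0.0 <= self.belief <= self.plausibility <= 1.0:
            raise ValueError("expected 0 <= belief <= plausibility <= 1")

    @property
    def width(self) -> float:
        """Pl(A) - Bel(A): mass committed neither for nor against A."""
        return self.plausibility - self.belief

    @property
    def disbelief(self) -> float:
        """Bel(not A), which equals 1 - Pl(A)."""
        return 1.0 - self.plausibility


# IndeterminacyDegree of Sensor_IntrusionSensed (belief 97%, plausibility 99%).
sensor_intrusion_sensed = BeliefInterval(belief=0.97, plausibility=0.99)
print(round(sensor_intrusion_sensed.width, 2))  # 0.02
```

The narrow width (0.02) says that almost all of the available evidence is committed either for or against a successful intrusion sensing.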

6.2 Pattern Library

This section presents the Pattern Library (Fig. 20), which supports modeling various known patterns in which an uncertainty may occur. All the patterns except Transient and PersistentPattern are imported from MARTE [11]; details of these patterns can be found in the MARTE specification and the technical report corresponding to this paper [25]. The definition of Transient is adopted from [7], i.e., "Transient is the situation whereby an uncertainty does not last long". Transient inherits from IrregularPattern and introduces two new attributes, minDuration and maxDuration, describing how long the uncertainty lasts. The definition of PersistentPattern is also adopted from [7], i.e., "the uncertainty that lasts forever". The meaning of "forever" varies: an uncertainty may persist until appropriate actions are taken to deal with it, or it may never be resolved. The duration attribute of PersistentPattern is set to "forever" to indicate that an uncertainty with this pattern persists until it is resolved. In the context of testing, "forever" may be the duration for which a test case is executed on a CPS.
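The Python sketch below mirrors the two newly introduced patterns. IrregularPattern is only a placeholder for the MARTE type, the time unit of the durations is an assumption, and because the text does not state a superclass for PersistentPattern, the sketch leaves it standalone; the `admits` and `is_active` helpers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class IrregularPattern:
    """Placeholder for MARTE's IrregularPattern; its own attributes are omitted."""


@dataclass
class Transient(IrregularPattern):
    """An uncertainty that "does not last long" [7]."""
    min_duration: float  # time unit (e.g., seconds) is an assumption of this sketch
    max_duration: float

    def __post_init__(self) -> None:
        if not 0 <= self.min_duration <= self.max_duration:
            raise ValueError("expected 0 <= min_duration <= max_duration")

    def admits(self, observed_duration: float) -> bool:
        """True if an observed occurrence lasted within [min_duration, max_duration]."""
        return self.min_duration <= observed_duration <= self.max_duration


@dataclass
class PersistentPattern:
    """An uncertainty that "lasts forever" [7], i.e., until it is resolved."""
    duration: str = "forever"

    def is_active(self, resolved: bool) -> bool:
        # "forever" ends only with resolution; during testing it is bounded in
        # practice by how long the test case runs on the CPS.
        return not resolved


glitch = Transient(min_duration=0.5, max_duration=2.0)  # hypothetical values
print(glitch.admits(1.0), glitch.admits(5.0))  # True False
print(PersistentPattern().is_active(resolved=False))  # True
```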

7 UncerTum modeling methodology

In this section, we present the modeling methodology of UncerTum. The rest of this section is organized as follows: Sect. 7.1 presents an overview of the modeling activities; Sects. 7.2, 7.3, and 7.4 present the modeling activities at the Application, Infrastructure, and Integration levels, respectively; and Sect. 7.5 presents the activities of applying UUP, which are invoked at all three levels. Notice that we use activity diagrams only to describe a step-wise procedure for creating test ready models; these activity diagrams are not used for testing. We use structured activities in an activity diagram when an activity needs to be broken down further. When an activity is used by multiple activity diagrams, we define it once and invoke it from each of them using call behavior action nodes.

To facilitate the construction of test ready models, we made a set of design decisions, which are summarized along with their rationales in Table 4. We refer to them in the text where relevant.

7.1 Overview

The modeling methodology is naturally organized from the viewpoints of three types of stakeholders: Application Modeler, Infrastructure Modeler, and Integration Modeler, as shown in Fig. 21. The names of the activities performed by each type of modeler are prefixed with "AP", "IF", and "IT", respectively.

As shown in Fig. 21, all modelers are recommended to start by creating a package (i.e., AP1, IF1, and IT1) to group and contain the model elements of the respective level (DD1 in Table 4). Next, application and infrastructure modelers apply the UUP notations to model the system behaviors of the application and infrastructure levels, respectively (i.e., AP2 and IF2); these two structured activities are elaborated in Sects. 7.2 and 7.3. When these two activities are finished, integration modelers take their results as inputs and perform IT2: Model Integration Behavior, which is discussed in Sect. 7.4.

7.2 Application-level modeling

The application-level modeling activities (Fig. 22) include four sequential steps: creating application-level class diagrams (AP2.1, DD2 in Table 4), creating application-level state machines (AP2.2, DD5 in Table 4), applying the CPS testing levels profile (AP2.3), and applying the UUP notations to the created class diagrams and state machines (AP2.4).

A class diagram (DD2) created for the application level captures application-level state variables (attributes), whose values can be accessed either directly or via dedicated APIs. We also model operations representing APIs to send stimuli to the CPS being tested. Such a class diagram usually also needs to specify Signals; a Signal is a Classifier for specifying send requests communicated across different state machines. In addition, a class in the class diagram may receive signals from other classes (even across levels), which are modeled as signal receptions (DD3/DD4 in Table 4). When creating a class diagram for the application level, each attribute of each class captures an observable system attribute, which may be typed by a DataType from the UUP Model Libraries (Sect. 6) or the MARTE_Library [11]. An attribute may represent a physical observation on a device (e.g., battery status on an X4 device). Each operation of a class represents either an API of the application software or an action physically performed by an operator (e.g., switching an X4 device on or off). Each signal reception represents a stimulus that can be received from a different state machine.

In a state machine (DD5–DD8 in Table 4), each state is precisely defined with an OCL constraint specifying its state invariant (DD6 in Table 4). Such an OCL constraint is constructed based on one or more attributes of one or more classes of an application-level class diagram. Each transition in a state machine should have its trigger defined as a call event corresponding to an API or a physical action defined in the application-level class diagrams, and its guard condition modeled as an OCL constraint on the input parameters of the transition's trigger (DD6/DD7 in Table 4).
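To illustrate how state invariants and guards relate to the class diagram, the Python sketch below uses a hypothetical device class (loosely inspired by the X4 device example) whose attributes play the role of state variables and whose operations play the role of APIs or physical actions. Plain Python predicates stand in for OCL constraints; the class, state, and operation names are assumptions, not elements of the case-study models.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Device:
    """Hypothetical application-level class; attributes are observable state variables."""
    is_on: bool = False
    sampling_rate_hz: int = 0

    # Operations stand for APIs or physical actions (e.g., switching a device on).
    def switch_on(self, sampling_rate_hz: int) -> None:
        self.is_on = True
        self.sampling_rate_hz = sampling_rate_hz

    def switch_off(self) -> None:
        self.is_on = False
        self.sampling_rate_hz = 0


# State invariants (OCL in the real models) as predicates over class attributes.
INVARIANTS: Dict[str, Callable[[Device], bool]] = {
    "Off": lambda d: not d.is_on and d.sampling_rate_hz == 0,
    "On": lambda d: d.is_on and d.sampling_rate_hz > 0,
}


@dataclass
class Transition:
    source: str
    target: str
    trigger: str  # call event: the name of a class operation
    guard: Callable[..., bool] = lambda **params: True  # constraint on trigger parameters


TRANSITIONS: List[Transition] = [
    Transition("Off", "On", "switch_on", guard=lambda sampling_rate_hz: sampling_rate_hz > 0),
    Transition("On", "Off", "switch_off"),
]


def fire(device: Device, state: str, trigger: str, **params) -> str:
    """Fire the first enabled transition, then check the target state's invariant."""
    for t in TRANSITIONS:
        if t.source == state and t.trigger == trigger and t.guard(**params):
            getattr(device, trigger)(**params)  # invoke the API / physical action
            if not INVARIANTS[t.target](device):
                raise AssertionError(f"invariant of state {t.target} violated")
            return t.target
    return state  # no enabled transition: the event is discarded


device = Device()
print(fire(device, "Off", "switch_on", sampling_rate_hz=0))   # Off (guard is false)
print(fire(device, "Off", "switch_on", sampling_rate_hz=10))  # On
```

Checking the target invariant after every firing is what makes the invariants useful as test oracles: a violated invariant signals that the observed attribute values disagree with the expected state.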

Next, application modelers apply UUP to the state machines (AP2.4) to specify uncertainties and apply the UTP profile to add testing information (e.g., indicating the TestItem). Since UUP is applied in the same way at all three levels, we describe it only once, in Sect. 7.5.

7.3 Infrastructure-level modeling

For the infrastructure level, a modeling procedure similar to the one defined for the application level should be followed to derive class diagrams and state machines and to apply UUP and UTP (see Sect. 7.5 for details on applying UUP), as shown in Fig. 23. One difference is that attributes of infrastructure-level class diagrams should capture observable infrastructure attributes. For example, an attribute (isIntrusionOccurred in Fig. 3) can reflect the occurrence of an intrusion sensed by Sensor. Operations of infrastructure-level class diagrams represent APIs for manipulating infrastructure-level components. The state machines should be consistent with the infrastructure-level class diagrams; in other words, states should have their invariants defined as OCL constraints based on the attributes defined in the infrastructure-level class diagrams, and transitions should have their triggers defined as call events or time/change events (DD5–DD7 in Table 4).

7.4 Integration-level modeling

Recall that activity IT2 starts after the class diagrams and state machines for the application and infrastructure levels have been created. As shown in Fig. 24, IT2 starts by creating integration-level class diagrams (IT2.1) and state machines (IT2.2) and applying the CPS testing levels profile (IT2.3), followed by applying UUP and UTP.

A class diagram for the integration level should focus on specifying interactions between the application software and the infrastructure. In particular, signal receptions should be defined to model events that a class can receive from the infrastructure and/or application levels (DD3–DD4). Each signal reception corresponds to an instance of UML Signal defined in an integration-level class diagram (DD3–DD4). Notice that creating class diagrams for the integration level is not mandatory (DD1). Model elements that have been defined in the application and infrastructure-level class diagrams can appear in the integration-level class diagrams, where they should be specified from the perspective of integration-level modelers.

There are different ways of defining model elements for the integration level. One way is to refine the application and infrastructure-level state machines by directly introducing new model elements into them. For example, a state at the application level can send a Signal to the infrastructure level and vice versa; transitions of a state machine at the application (infrastructure) level should then capture triggers of type Signal Reception and effects containing Signals exchanged with the infrastructure (application) level. Another way is to leave the application and infrastructure-level state machines untouched and apply a specific modeling methodology (e.g., an Aspect-Oriented Modeling methodology) to specify crosscutting behaviors separately. In addition, one can benefit from advanced features of UML state machines (e.g., concurrent state machines and parallel regions), for example, to refer to existing state machines defined at the application and infrastructure levels.

7.5 Apply UUP (AP2/IF2/IT2)

Since test ready models can be created in several different ways, we propose a set of options that restrict the ways in which test modelers apply UUP. Notice that our test generators can only generate test cases when one of these options is followed. The same modeling decisions (D12, D13 in Table 4) are taken for several concepts in UUP, including Belief Agent, Evidence, Measurement, Lifetime, Cause, Pattern, Effect, IndeterminacySource, and Risk. First, all of these can simply be modeled as String values. This option is informal, since a test modeler may provide any String value. Second, a more formal way is to model these concepts as classes (as in Fig. 10, e.g., a class representing a Ph.D. student at Simula as a particular BeliefAgent), with possible attributes and operations, inside a dedicated package (e.g., one containing all BeliefAgents for a CPS under test). In this case, we recommend applying the dedicated stereotypes (e.g., «BeliefAgent») to the classes, the package, or both. The rationale for these design decisions is summarized in Table 4.

Recall that the activity of applying UUP is invoked at all three levels. In the activity diagrams of Figs. 25–33, we tag activities with S, C, and A to denote structured activities, call behavior actions, and normal activity nodes, respectively (with standard UML semantics). Note that these activity diagrams explain the step-wise procedure for creating test ready models and are not themselves part of the test ready models. As shown in Fig. 25, applying UUP starts by applying «BeliefElement» to any state machine model element allowed by UUP. Then a modeler can specify values for the "from" and "duration" attributes of the stereotype, model belief agents, model the belief degree, and/or model uncertainties.

As shown in Fig. 26, there are two ways (D12, D13) to model belief agents (S1.1 and S1.2). In Option 1 (S1.1), a modeler specifies belief agents simply as one or more strings via the "beliefAgent" attribute of «BeliefElement». Alternatively (S1.2), she/he can create a package to organize all the belief agents, with each belief agent modeled as a class in the package. Three variants exist: the package is stereotyped with «BeliefAgent» (Option 2); each belief agent class is stereotyped with «BeliefAgent» (Option 3); or both the classes and the package are stereotyped with «BeliefAgent» (Option 4). When choosing Option 2, 3, or 4, one needs to link the created belief agent package to the agent attribute of «BeliefElement» (S2). For example, we modeled the belief agent Man_Simula using Option 3, as shown in Fig. 10.
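The four options can be told apart mechanically from how stereotypes are placed, which is one way a tool could check that a model follows an allowed option. The Python sketch below shows such a classification; `ModelClass`, `ModelPackage`, and `belief_agent_option` are hypothetical stand-ins for UML elements and are not part of UUP or of any modeling tool's API.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class ModelClass:
    """Hypothetical stand-in for a UML class; stereotypes are kept as plain names."""
    name: str
    stereotypes: Set[str] = field(default_factory=set)


@dataclass
class ModelPackage:
    """Hypothetical stand-in for a UML package owning belief agent classes."""
    name: str
    stereotypes: Set[str] = field(default_factory=set)
    classes: List[ModelClass] = field(default_factory=list)


@dataclass
class BeliefElement:
    """The «BeliefElement» values relevant to belief agents (simplified)."""
    belief_agent: List[str] = field(default_factory=list)  # Option 1: plain strings
    agent: Optional[ModelPackage] = None  # Options 2-4: linked package (S2)


def belief_agent_option(element: BeliefElement) -> int:
    """Return which of the four options (Fig. 26) a «BeliefElement» follows."""
    if element.agent is None:
        return 1
    package_tagged = "BeliefAgent" in element.agent.stereotypes
    classes_tagged = bool(element.agent.classes) and all(
        "BeliefAgent" in c.stereotypes for c in element.agent.classes
    )
    if package_tagged and classes_tagged:
        return 4
    if classes_tagged:
        return 3
    if package_tagged:
        return 2
    raise ValueError("linked package, but neither it nor its classes are «BeliefAgent»")


# Man_Simula modeled with Option 3: a stereotyped class inside an untagged package.
agents = ModelPackage("BeliefAgents", classes=[ModelClass("Man_Simula", {"BeliefAgent"})])
print(belief_agent_option(BeliefElement(agent=agents)))  # 3
```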

Modeling BeliefDegree is presented in Sect. 7.5.1, and modeling uncertainties is discussed in Sect. 7.5.2.

7.5.1 Measurement modeling

Modeling measurements and measures is important for applying UUP, since they are used to measure beliefDegree, Uncertainty, indeterminacyDegree, Risk, and Effect. As shown in Fig. 27, one first needs to create a package to contain the measurements for indeterminacyDegree, beliefDegree, uncertaintyMeasurement, Risk, and Effect (A1). Then, a modeler can optionally specify Evidence (S1), followed by specifying each measurement instance and its corresponding measure (S3 and S2, respectively).

A. Specify Evidence

As shown in Fig. 28, there are two ways (D12, D13) to specify evidence. Option 1 is to specify evidence as a String value (in the “measurement” attribute of Measurement). Option 2 is to create a package for evidence if such a package does not exist and optionally stereotype it with «Evidence» (S1.2.1). One can then create any UML model element to represent evidence, according to UUP and optionally stereotype it with «Evidence» (S1.2.2). The last step of Option 2 is to link either the package or UML model elements representing evidence to the “referredEvidence” attribute of Measurement (S1.2.3).

B. Specify Measure

As shown in Fig. 29, to specify a measure, a modeler needs to create a class diagram (A1) and then create instances of Measures (for the measurements of indeterminacyDegree, beliefDegree, uncertaintyMeasurement, Risk, or Effect) as classes or data types (A2). One then adds attributes to these classes or data types using the data types defined in the Measure Libraries (Sect. 6.1) (A3). One can optionally apply the corresponding measure stereotypes (e.g., «UncertaintyMeasure») to the classes or data types (A4). The last step is to link a measure to an instance of Measurement (A5).

C. Specify Measurement

There are three ways (D12, D13) to specify measurements (Fig. 30): as a String value of the "measurement" attribute of Measurement (A1); as a ValueSpecification (A2); or as an OCL constraint owned by a class or data type representing a measure and based on the attributes defined in that class or data type (A3.1). In the last case, one can also optionally apply «MeasurementConstraint» to the OCL constraint (A3.2).
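Only the third form can be checked mechanically against a measure, which is consistent with the String option being the informal one. The Python sketch below illustrates the difference. `BeliefIntervalMeasure` and `evaluate` are hypothetical, a plain number approximates a UML ValueSpecification, and a Python predicate stands in for an OCL constraint.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Union


@dataclass
class BeliefIntervalMeasure:
    """Hypothetical measure class whose attributes use Measure Library types."""
    belief: float
    plausibility: float


# Sketch of the three ways to express a measurement (Fig. 30):
#   A1   a free-form String,
#   A2   a ValueSpecification, approximated here by a plain number,
#   A3.1 a constraint over the measure's attributes (an OCL constraint in the models).
Measurement = Union[str, float, Callable[[BeliefIntervalMeasure], bool]]


def evaluate(measurement: Measurement, measure: BeliefIntervalMeasure) -> Optional[bool]:
    """Check a constraint-style measurement; return None for the other two forms."""
    if isinstance(measurement, (str, int, float)):
        return None  # nothing machine-checkable to evaluate against the measure
    return measurement(measure)


measure = BeliefIntervalMeasure(belief=0.97, plausibility=0.99)
print(evaluate("very likely", measure))  # None
print(evaluate(0.97, measure))  # None
print(evaluate(lambda m: 0.0 <= m.belief <= m.plausibility <= 1.0, measure))  # True
```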

7.5.2 Uncertainty modeling

As shown in Fig. 31, one first needs to specify the kind of an uncertainty (A1). One can then optionally specify values for the attributes "from," "field," and "locality" of the uncertainty; model its Lifetime, Cause, Pattern, or Effect; define its IndeterminacySource(s); and model its uncertaintyMeasurement and Risk.

A. Model Lifetime/Cause/Pattern/Effect of Uncertainty

A modeler has two options (D12, D13) to specify the Lifetime/Cause/Pattern/Effect of an uncertainty, as shown in Fig. 32. Option 1 is to simply specify each of these as a String value owned by the uncertainty (via the attributes "lifetime," "cause," "effect," "pattern," or "risk" of Uncertainty). Option 2 starts by creating a package for Lifetime/Cause/Pattern/Effect, if such a package does not exist, and optionally applying «Lifetime», «Cause», «Pattern», or «Effect» to it (S1.2.1). One then creates Lifetime/Cause/Pattern/Effect as any UML model element and optionally applies the corresponding stereotype. Since Effect can be measured, an Effect can optionally be associated with one or more measurements (Sect. 7.5.1). The last step of Option 2 is to associate each created package or element with the corresponding attribute of Uncertainty, i.e., "referredPattern," "referredEffect," "referredLifetime," or "referredCause."

B. Model IndeterminacySource

As shown in Fig. 33, a modeler can simply specify an indeterminacy source as a String value (D12) of the "indeterminacySource" attribute of Uncertainty (Option 1). Alternatively (Option 2), one can create a package (D13) to organize indeterminacy sources (A2.2.1), create instances of any UML Classifier to represent indeterminacy sources and apply «IndeterminacySource» to them (A2.2.2), specify the nature and description of each indeterminacy source (A2.2.3), specify measurements for each indeterminacy source (C1), and associate the created classifiers with the "referredIndeterminacySource" attribute of Uncertainty.

C. Model Risk

A modeler can optionally associate an uncertainty with Risk (D12, D13). As shown in Fig. 34, one can simply specify Risk as a String value of the "riskLevel" attribute of Uncertainty (Option 1) or as one of the predefined risk levels in the enumeration RiskLevel (Option 2). Alternatively, one can create a package for Risk, if such a package does not exist, create classes and/or data types to represent Risks, and optionally apply «Risk» to them (A4.3.2). Afterward, a modeler can optionally specify measurements for Risk (C1) and link the created classes and data types to Uncertainty via the "riskInDTViaClass" and/or "riskInDT" attributes (A4.3.3).

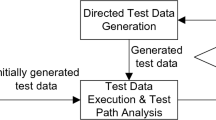

8 UncerTum validation process

In this section, we explain our validation process, which ensures that test ready models are syntactically correct and that communication across the state machines of the various physical units constituting a CPS takes place correctly. Such validation aims to find modeling errors that a test modeler may have introduced accidentally. Once test ready models have been validated without any problems, test cases can then be generated from them. Since executing test ready models requires data to fire triggers, we generate data manually as follows: (1) if a trigger (Call Event/Signal Event) has a guard condition, we generate random values for all the variables involved in the guard condition such that the guard condition is satisfied, use these values to fire the trigger, and generate random values for all the other parameters of the call event/signal event; (2) if a trigger (Call Event/Signal Event) is not guarded, we generate random values for all the parameters of the Call Event/Signal Event to fire the trigger; (3) if a trigger corresponds to a Change Event, we randomly generate values that satisfy the change condition; and (4) if a trigger corresponds to a Time Event, we ensure that the period of time specified in the event has elapsed.
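The data above is generated manually. As a hedged sketch of how rules (1) to (4) could be automated, the Python code below draws random values from finite domains and keeps a draw only if it satisfies the guard or change condition (simple rejection sampling, our choice rather than the authors' procedure). The `Trigger` type, its fields, the value domains, and the example guard are hypothetical.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


@dataclass
class Trigger:
    """Hypothetical trigger description used only for this sketch."""
    kind: str  # "call", "signal", "change", or "time"
    params: Dict[str, range] = field(default_factory=dict)  # variable -> finite domain
    condition: Optional[Callable[[Dict[str, int]], bool]] = None  # guard or change condition
    delay_s: float = 0.0  # period to wait for a Time Event


def firing_data(trigger: Trigger, rng: random.Random, max_tries: int = 10_000) -> Dict:
    """Random data that lets `trigger` fire, following rules (1)-(4) of the text."""
    if trigger.kind == "time":
        # Rule (4): no values to generate; the specified period must elapse.
        return {"wait_s": trigger.delay_s}
    for _ in range(max_tries):
        # Draw every variable/parameter uniformly at random from its domain ...
        values = {name: rng.choice(domain) for name, domain in trigger.params.items()}
        # ... and accept the draw if there is no guard (rule 2) or if it satisfies
        # the guard (rule 1) or the change condition (rule 3).
        if trigger.condition is None or trigger.condition(values):
            return values
    raise RuntimeError("no satisfying values found; the condition may be unsatisfiable")


rng = random.Random(7)
intrusion = Trigger("signal", params={"sensor_id": range(1, 5), "level": range(0, 101)},
                    condition=lambda v: v["level"] > 80)  # hypothetical guard
print(firing_data(intrusion, rng))
```

Rejection sampling keeps the sketch short but can be slow for guards that only a tiny fraction of the domain satisfies; a constraint solver would be the usual alternative in that case.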

To validate test ready models, we use UAL [24] to execute them with the IBM RSA Simulation Toolkit [53] (DD9 in Table 4). We chose UAL and the IBM RSA Simulation Toolkit because our test generators are built in CertifyIt [13], which is a plugin for IBM RSA, as discussed in Sect. 1. Further, in Sect. 8.1 we provide a set of guidelines, as an activity diagram, for adding UAL code to test ready models, and in Sect. 8.2 we propose a set of recommended actions, based on the types of problems identified while executing test ready models, to help test modelers fix them.

8.1 UAL executable modeling guidelines

In this section, we describe the guidelines (Fig. 35) for converting test ready models created according to the guidelines in Sect. 7 into executable models to facilitate validation.

As shown in Fig. 35, (1) in the CD1 activity, a test modeler can optionally specify UAL code on the model elements of classes (e.g., specifying default values for attributes and implementing the bodies of operations). For example, the UAL code of the timeout attribute of SecuritySystem (Fig. 3) is false, i.e., its default value.

(2) As shown in the CSD2 activity in Fig. 35, a test modeler should create a composite structure diagram (DD10, DD11 in Table 4) to model the internal structure of a Classifier (e.g., a physical unit) and its interactions with other associated Classifiers (other physical units) or with the operating environment of the CPS. For example, the portSecurity port of SecuritySystem (Fig. 4) specifies an interaction point through which SecuritySystem can communicate with its surrounding environment or with Alarm or Sensor. The provided interface of portSecurity is ISecuritySystem, which enables the reception of the IntrusionOccurred signal and the other SignalReceptions in Fig. 3. Two connectors between portSecurity and portSensor (Fig. 4) are created to enable two-way communication between SecuritySystem and Sensor.

(3) As shown in SM3 in Fig. 35, a test modeler can specify UAL code on the effects of transitions and on the entry/do/exit activities of states in a state machine to implement a specific activity, especially one that involves sending signals across state machines. For example, the effect of transition A in Fig. 6 is implemented in UAL as portSensor.send(new IntrusionOccurred(this.ID)). Since portSensor and portSecurity are connected (Fig. 4) and the provided interface ISecuritySystem of portSecurity can receive the IntrusionOccurred signal (Fig. 3), the B.1/B.2 transitions (Fig. 7) can be triggered when SecuritySystem receives the IntrusionOccurred signal through portSecurity.
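The Python sketch below mimics, outside any UML tool, how the port/connector wiring of Fig. 4 lets the UAL statement portSensor.send(new IntrusionOccurred(this.ID)) reach SecuritySystem. It is neither UAL nor the IBM RSA Simulation Toolkit API; the `Port` class, the `connect` helper, and the state names "Monitoring" and "IntrusionHandled" are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class IntrusionOccurred:
    """Signal sent by Sensor, carrying the sensor's ID as in the UAL example."""
    sensor_id: int


@dataclass(eq=False)
class Port:
    """Hypothetical port: `provided` maps receivable signal types to receptions."""
    name: str
    provided: Dict[type, Callable[[object], None]] = field(default_factory=dict)
    peers: List["Port"] = field(default_factory=list, repr=False)

    def send(self, signal: object) -> bool:
        """Deliver `signal` to each connected port whose interface can receive it."""
        delivered = False
        for peer in self.peers:
            reception = peer.provided.get(type(signal))
            if reception is not None:
                reception(signal)
                delivered = True
        return delivered


def connect(a: Port, b: Port) -> None:
    """Two connectors, one per direction, enabling two-way communication (Fig. 4)."""
    a.peers.append(b)
    b.peers.append(a)


class SecuritySystem:
    def __init__(self) -> None:
        self.state = "Monitoring"  # hypothetical state name
        # Provided interface (ISecuritySystem): can receive IntrusionOccurred.
        self.port_security = Port("portSecurity",
                                  provided={IntrusionOccurred: self.on_intrusion})

    def on_intrusion(self, signal: IntrusionOccurred) -> None:
        # Stands in for transitions B.1/B.2 of Fig. 7 firing on the signal event.
        self.state = "IntrusionHandled"  # hypothetical state name


class Sensor:
    def __init__(self, sensor_id: int) -> None:
        self.ID = sensor_id
        self.port_sensor = Port("portSensor")

    def intrusion_sensed(self) -> bool:
        # Effect of transition A (Fig. 6): portSensor.send(new IntrusionOccurred(this.ID))
        return self.port_sensor.send(IntrusionOccurred(self.ID))


sensor, system = Sensor(sensor_id=1), SecuritySystem()
connect(sensor.port_sensor, system.port_security)
sensor.intrusion_sensed()
print(system.state)  # IntrusionHandled
```

Removing the `IntrusionOccurred` entry from `provided` makes `send` return `False` and leaves SecuritySystem in its original state, which mirrors the missing-signal-reception problem discussed in Sect. 8.2.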

8.2 Recommendations to fix problems in test ready models

This section presents our recommendations (Table 5) for fixing test ready models once they have been executed and problems have been observed. For example, one observed problem is that the IntrusionOccurred signal event cannot be triggered (Fig. 7) even though the signal was sent (O4, Table 5). One possible reason is that the IntrusionOccurredSignalReception in the ISecuritySystem interface of SecuritySystem is missing (SA7).

9 Evaluation

In Sect. 9.1, we present the development and validation of UncerTum with two industrial case studies (i.e., GS and AW) that were available to us as part of the project, one real-world case study (VCS), and one case study from the literature (SafeHome). The results are described in Sect. 9.2, and an overall discussion and limitations are presented in Sect. 9.3.

9.1 Development and validation of UncerTum and test ready models

As previously discussed, the project has two official CPS case study providers. The first case study is from the healthcare domain: GeoSports (GS), provided by Future Position X (FPX) [9], Sweden. This case study involves attaching devices to Bandy players that periodically record various measurements (e.g., heartbeat, speed, and location). These measurements are communicated during a Bandy game via a receiver station to the sprint system, where coaches can monitor them at runtime. In addition, these measurements can be used offline for analyses, for example, aimed at improving the performance of an individual player or a team. To test this CPS in a laboratory setting without real players, Nordic Med Test (NMT) [15] provides a test infrastructure to execute test cases. The second case study is the Automated Warehouse (AW), provided by ULMA Handling Systems [10], Spain. ULMA develops automated handling systems for warehouses of different natures worldwide, such as Food and Beverages, Industrial, Textile, and Storage. Each handling facility (e.g., cranes, conveyors, sorting systems, picking systems, rolling tables, lifts, and intermediate storage) forms a physical unit, and together they are deployed to one handling system application (e.g., Storage). A handling system cloud supervision system (HSCS) generally interacts with diverse types of physical units, network equipment, and cloud services. Application-specific processes in HSCS span clouds and CPSs and require different configurations. This case study implements several key industrial scenarios, such as introducing a large number of pallets into the warehouse and transferring items with a Stacker Crane. To test these scenarios, ULMA [10] and IK4-Ikerlan [16] developed and provided relevant simulators and emulators. Further details on the case studies can be found in [54].

In addition, we used a real-world case study of an embedded Videoconferencing System (VCS) developed by Cisco Systems, Norway. Simula has been collaborating with Cisco since 2008, and as part of this long-term collaboration under the umbrella of the Certus Center [55], we have access to real VCS systems. We created the test ready models for one of these real CPSs ourselves, without involving Cisco, based on previous work [18] by the second author of this paper. The fourth case study is a modified version of the SafeHome case study provided in [19], which implements various security and safety features of smart homes, including intrusion detection, fire detection, and flood detection.

The development and validation procedure of UncerTum and the test ready models is summarized in Fig. 36 and involves four stakeholders: (1) Simula Researchers (including the first three authors of this paper), who play the key role of developing UncerTum and creating the test ready models; (2) Use Case Providers (i.e., FPX and ULMA), who provided uncertainty test requirements and, in the case of GS, real operational data from previous Bandy games, and who manually checked the conformance of the developed test ready models to the corresponding uncertainty test requirements; (3) Test Bed Providers (NMT and ULMA/IK4-Ikerlan), who provided physical and software infrastructures (including test APIs) to automate the execution of test cases and manually checked that the test ready models conform to the provided implementation of the test APIs; and (4) the Tool Vendor, who is responsible for integrating UncerTum and the proposed test case strategies to facilitate test case generation and execution. Please note that the UncerTum methodology reported in this paper was developed entirely by Simula Research Laboratory; it is generic and can therefore be applied to test CPSs at all three levels. It is also possible to develop modeling methodologies different from the one proposed in this paper; for instance, one such methodology for the application level is reported in [56] by one of our project partners. Such modeling methodologies could be compared in the future if needed.

The development of UncerTum took place incrementally (Activities A1 and A2 in Fig. 36). First, researchers developed UncerTum (A1) based on U-Model and MARTE, in parallel with creating the initial test ready models (B1) for VCS, SafeHome, GS, and AW using this initial version of UncerTum. For GS and AW, uncertainty test requirements were provided by FPX and ULMA; for VCS, some requirements were available to us from our previous work [18]; and SafeHome is taken from the literature. Based on our experience of creating these test ready models, we further refined UncerTum (A2), resulting in UncerTum V.1, which was in turn used to refine the initial test ready models (B2). At this point, both the test ready models and UncerTum were refined once again by the researchers, producing Test Ready Model V.1 and UncerTum V.2 (Fig. 36).

UncerTum V.2 and Test Ready Models V.1 were then used in a two-day modeling technique workshop conducted with the industrial use case providers (FPX and ULMA), the test bed providers (ULMA/IK4-Ikerlan and NMT), the tool vendor (Easy Global Market (EGM) [14]), and two other research partners who focused on their own modeling methodologies and models. During the workshop, UncerTum and the test ready models were presented to the participants and their feedback was collected; the test API documentation was also presented. The key output of the workshop from our side was UncerTum V.3, which is presented in this paper. Based on the feedback and the test API documentation, a plan was devised, and after the workshop we refined the test ready models accordingly (i.e., Test Ready Models V2 in Fig. 36). In parallel, the test bed providers started to develop the test infrastructures to enable the execution of test cases, which is outside the scope of this paper.

To further refine the test ready models, two more workshops were conducted, one for AW and one for GS, each arranged by the respective industrial partner. The first workshop took place at IK4-Ikerlan [16] over three days, with the participation of Simula researchers and ULMA. During the workshop, detailed uncertainty test requirements, test ready models, and the detailed implementation of test execution were discussed. The second workshop lasted two days and took place at NMT's site in Sweden, with the participation of FPX, EGM, and other research partners. Discussions similar to those with ULMA took place with FPX/NMT. In addition, EGM presented their tool (CertifyIt), their plans for integrating UncerTum, and the further implementation of test execution APIs. The outputs of these workshops were Test Ready Models V3, as shown in Fig. 36. Finally, we validated Test Ready Models V3 using the IBM RSA Simulation Toolkit (see Sect. 9.2.3 for results).

9.2 Evaluation results

Descriptive statistics of the test ready models developed for the four case studies are provided in Table 6. For each case study, (1) the first row presents the number of modeled UML diagrams; (2) the second, third, and fourth rows present the numbers of application-, infrastructure-, and integration-level elements, respectively; and (3) the last row shows the number of uncertainties and indeterminacy sources modeled. These statistics give an indication of the complexity and scale of the developed test ready models.

9.2.1 Mapping UUP/Model Libraries to U-Model and MARTE

This section provides the descriptive statistics for the mapping of the UUP model elements and the Model Libraries to concepts defined in U-Model and elements in MARTE.

Table 7 is divided into four main sections. First, we provide the statistics of elements in UUP/Model Libraries that can be directly mapped to U-Model. For example, «BeliefStatement» in UUP can be directly mapped to the BeliefStatement concept defined in U-Model. Second, we provide the statistics of elements in UUP/Model Libraries (e.g., BeliefInterval) that can be indirectly mapped to U-Model concepts (e.g., Ambiguity). Third, we provide statistics of elements that were introduced to UUP/Model Libraries (e.g., «BeliefElement») by extending U-Model concepts (e.g., BeliefStatement). Fourth, since the Model Libraries were developed by extending MARTE, we also provide statistics for mapping elements in UUP/Model Libraries to elements in MARTE. For example, 10 data types in the Measure library can be mapped to MARTE.

As the last row of Table 7 shows, 33% of the elements in UUP/Model Libraries can be directly mapped to U-Model, 13% can be indirectly mapped to U-Model, and 54% were newly introduced by extending U-Model concepts, most of which are measures. In addition, 10% of the UUP/Model Libraries elements were either directly adopted from MARTE or are extensions of MARTE elements. The last column of Table 7 shows the coverage of the U-Model concepts: 83% of the U-Model concepts were implemented in UUP and 9% in the Model Libraries. The remaining 8% of the concepts, which were not mapped to any element of UUP or the Model Libraries, are related to Knowledge. Such concepts are important at the conceptual level and are defined based on well-defined taxonomies of Knowledge [57], but they do not need to be implemented in UUP and the Model Libraries. From these results, we can see that U-Model is comprehensive enough to develop UncerTum and has the potential to serve as a basis for other researchers and practitioners to develop similar uncertainty-related modeling solutions in the future. We therefore consider the data reported here a useful experience to share with the community. Moreover, the reported data give confidence in UncerTum, as it was developed by following a rigorous process and a comprehensive conceptual model.

9.2.2 Application of UUP/Model Libraries

In this section, we present the results of our evaluation aimed at assessing the applicability of UncerTum in terms of the effort required to create test ready models. We conducted the evaluation from two aspects: (1) the percentage of UUP/Model Libraries elements applied in all the test ready models (UML class diagrams and state machines) developed for the four case studies and (2) the time required to apply UUP/Model Libraries. The first aspect assesses effort in terms of the number of model elements and thus provides a surrogate measure of effort, whereas the second aspect measures effort as the time the test modelers took to create the test ready models. In our case studies, the first author (a second-year Ph.D. candidate) created the first version of the test ready models, which were iteratively discussed with the second (a senior scientist) and third (a chief scientist) authors of this paper. In addition, as discussed in Sect. 9.1, the test ready models were discussed with other partners involved in the project. Since no approach comparable with UncerTum exists in the literature (see Sect. 10), we do not have a comparison baseline. Conducting controlled experiments with test modelers would be a better option; such experiments are planned as future work, although they are often expensive in terms of both time and money.

As shown in Table 8, for the SafeHome case study we modeled 21 classes in total in the class diagrams, 7 of which have UUP stereotypes applied (e.g., the «IndeterminacySource» stereotype is applied to Sensor, see Fig. 3). In the modeled state machines, three out of 17 states and seven out of 29 transitions require the application of UUP/Model Libraries. In total, as shown in the last column of the table, around 20% of the modeling elements of the SafeHome case study required the application of UUP/Model Libraries. Similarly, 12%, 16%, and 17% of the modeling elements required the application of UUP/Model Libraries for the VCS, GS, and AW case studies, respectively. Across the four case studies, on average 16.25% of the model elements require applying UUP/Model Libraries.
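The 16.25% figure is the unweighted mean of the four per-case percentages reported above; the short Python sketch below reproduces that arithmetic. It uses only the rounded percentages stated in the text (SafeHome's value is given as "around 20%"), not the underlying element counts of Table 8, so a mean weighted by the number of model elements could differ.

```python
from statistics import mean

# Share (%) of model elements requiring UUP/Model Libraries per case study,
# taken from the rounded values stated in the text for Table 8.
share_by_case = {"SafeHome": 20, "VCS": 12, "GS": 16, "AW": 17}

# Unweighted mean across the four case studies.
average_share = mean(share_by_case.values())
print(f"{average_share:.2f}%")  # 16.25%
```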

Table 9 summarizes the effort (measured in hours) spent by the first author (the modeler) on constructing the test ready models for the four case studies. The effort is divided into two parts: the time for applying standard UML notations and the additional time required for applying UUP/Model Libraries. For example, as shown in Table 9, for SafeHome it took the modeler 4.5 h to model the UML class diagrams, plus an additional 0.5 h to apply UUP/Model Libraries. For the UML state machines, it took 22.5 h, plus an additional 7.5 h to apply UUP/Model Libraries. As shown in the last column (%Time) of Table 9, applying UUP/Model Libraries took an additional 22% of time for SafeHome. Similarly, it took an additional 23% of time for VCS, 15% for GS, and 14% for AW. On average, across the four case studies, modeling with UUP/Model Libraries required an additional 18.5% of the total modeling effort.
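The arithmetic behind the %Time column can be checked with a short Python sketch. Since the formula is not spelled out in this section, the sketch assumes that %Time is the additional UUP/Model Libraries time divided by the total modeling time; under that assumption, SafeHome's 8 additional hours out of 35 total hours give about 22.9%, close to the 22% reported in Table 9 (the rounding convention used there is not stated). The 18.5% average is the unweighted mean of the four reported per-case values.

```python
# SafeHome modeling effort in hours, as stated in the text for Table 9.
uml_hours = {"class diagrams": 4.5, "state machines": 22.5}
uup_extra_hours = {"class diagrams": 0.5, "state machines": 7.5}


def extra_time_share(base, extra):
    """Additional UUP time as a percentage of total modeling time.

    Assumption: %Time = extra / (base + extra); Table 9's exact formula
    and rounding are not stated in this section.
    """
    base_total = sum(base.values())
    extra_total = sum(extra.values())
    return 100 * extra_total / (base_total + extra_total)


safehome_pct = extra_time_share(uml_hours, uup_extra_hours)
print(f"SafeHome: {safehome_pct:.1f}%")  # 8 h of 35 h -> SafeHome: 22.9%

# Unweighted mean of the reported %Time values (SafeHome, VCS, GS, AW).
reported_pct = [22, 23, 15, 14]
print(sum(reported_pct) / len(reported_pct))  # 18.5
```

Dividing instead by the UML-only time (27 h) would give about 29.6% for SafeHome, which does not match the reported value, so the total-time reading appears to be the more consistent one.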

9.2.3 Validation of test ready models via model execution

In this section, we present the results of validating the test ready models developed with UncerTum for the four case studies. The overall aim is to check the correctness of the test ready models against the collected (uncertainty) requirements. The test ready models were enriched with UAL, an implementation of the Action Language for Foundational UML [24] (Alf [58]). UAL is a formal language supported in IBM RSA [12] for executing UML models and is implemented in Java. UML models with UAL can be executed with the IBM RSA Simulation Toolkit [53], as discussed in Sect. 8.

Table 10 shows the results of the validation. We classified the problems identified during the validation process into two main categories, Incorrectness and Incompleteness, for the model elements (states and transitions) of each case study. For State, we report problems identified in state invariants and «BeliefElement». For Transition, we report problems identified in Guard, Trigger, Effect, and «BeliefElement». For State, 79 (17 + 62) problems were identified in total across the four case studies: 17 related to Incorrectness and 62 related to Incompleteness. For «BeliefElement» related to State, we identified 32 missing stereotypes. For Transition, we discovered 122 problems: 22 related to Incorrectness and 100 related to Incompleteness. For «BeliefElement» related to Transition, we identified 32 missing stereotypes.

The typical problems identified include: (1) a transition between two states fired without any event (O7 in Table 5); (2) after a transition fired, the state change did not occur or the state changed to an unexpected one (O1, O2 in Table 5); (3) signals failed to be sent across concurrent state machines (O4 in Table 5); (4) there were no non-deterministic transitions from a state (O8 in Table 5); (5) an unexpected exit, block, or deadlock was observed in a state machine (O1, O9 in Table 5); (6) unreachable states were discovered (O3 in Table 5); and (7) a guard condition was always true (O2, O7 in Table 5). Note that these problems are not a comprehensive set, but they represent the most commonly observed ones. After simulating the test ready models and fixing the identified problems, we ensured that our models are correct and complete and hence can be used to facilitate MBT.
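In our evaluation these problems were found by executing the models in the IBM RSA Simulation Toolkit. As a complementary illustration only, problem (6) can also be flagged statically: the Python sketch below runs a breadth-first search over a state machine's transition graph and reports the states that no path from the initial state reaches. This is a swapped-in static technique, not the model-execution approach used in this paper; the state and trigger names are hypothetical, and guards are ignored.

```python
from collections import deque


def unreachable_states(initial, states, transitions):
    """Return the states that cannot be reached from `initial`.

    `transitions` is a list of (source, trigger, target) triples. Guards are
    ignored, so every transition is treated as enabled; this over-approximates
    reachability, so any state reported here is unreachable regardless of guards.
    """
    successors = {s: set() for s in states}
    for source, _trigger, target in transitions:
        successors[source].add(target)

    # Breadth-first search from the initial state.
    seen = {initial}
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        for nxt in successors[state]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return set(states) - seen


# Hypothetical state machine fragment, loosely inspired by the running example.
states = ["Idle", "Armed", "IntrusionDetected", "AlarmOn", "Maintenance"]
transitions = [
    ("Idle", "arm", "Armed"),
    ("Armed", "motionSensed", "IntrusionDetected"),
    ("IntrusionDetected", "confirm", "AlarmOn"),
    ("AlarmOn", "reset", "Idle"),
]
print(unreachable_states("Idle", states, transitions))  # {'Maintenance'}
```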

9.2.4 Application of UTP V.2

Applying UTP V.2 is the last step of UncerTum modeling, as shown in Fig. 24. In the running example, «TestItem» from the Test Context package of UTP V.2 was applied to SecuritySystem (Fig. 3), and «CheckPropertyAction» from the Arbitration Specification package of UTP V.2 was applied to the state invariant of IntrusionDetected (Fig. 7).

Table 11 reports the results of applying UTP V.2 to the models of the case studies. Note that we only report descriptive statistics for the high-level packages of UTP V.2 (e.g., Arbitration Specification), each of which contains a set of related stereotypes, rather than the number of applications of each individual stereotype. In total, UTP V.2 stereotypes were applied 54 times for SafeHome, 551 times for VCS, 209 times for GS, and 247 times for AW.

Based on our experience of applying UTP V.2, we found that it is a generic UML profile for MBT and does not, on its own, meet all our needs. However, combining UUP/Model Libraries with UTP V.2 was sufficient to create test ready models with uncertainty in our case.

9.3 Overall discussion and limitations

Based on the results presented in Sect. 9.2, we summarize our findings as follows: (1) with UncerTum, we were able to model all the identified uncertainties in the four case studies, which suggests that UncerTum is sufficiently complete to create test ready models of CPSs that explicitly consider various types of uncertainty, to support testing CPSs in the presence of such uncertainty; (2) regarding the effort required to apply the UUP stereotypes and Model Libraries, we needed to apply them to 16.25% of the model elements on average (Table 8), and in terms of time, applying UUP required on average an additional 18.5% of the modeling time (Table 9); (3) with our model execution-based validation, we identified and fixed a total of 264 problems across the four case studies (Table 10). Fixing these problems was necessary before test case generation, as otherwise the generated test cases would have been incorrect.

In terms of evaluation, we would like to highlight that this section reports a preliminary evaluation of UncerTum from various perspectives. A more thorough evaluation would require conducting surveys and questionnaires with participants from our industrial partners to solicit their views on the modeling methodology in terms of, for example, understandability and usability. We plan to conduct such an evaluation at the end of our project, once the complete results have been transferred to the industrial partners, with participants who are not co-authors of this paper, in order to obtain unbiased feedback about UncerTum.

We would also like to mention that UncerTum cannot be used to model the detailed continuous behavior of a CPS, for example, to support analyses during the system design and analysis phase or to generate code. UncerTum only supports test modeling to enable the generation of executable test cases. Such models are less detailed than models used for code generation or for design-time analyses. This is because testing is always concerned with sending a stimulus to the system and observing whether, in response, the system transitions to the correct state according to the expected behavior specified in the test ready model developed for the system.
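To make this stimulus/observe cycle concrete, the minimal Python sketch below treats a test ready model as a table mapping (current state, stimulus) pairs to the expected next state, sends each stimulus to a stand-in system under test, and compares the observed state with the model's prediction. All names (EXPECTED, FakeSecuritySystem, run_test) and states are hypothetical, the fake system is deliberately faulty so that a failing verdict appears, and the sketch ignores uncertainty, belief annotations, and UTP V.2; it is not how UncerTum or IBM RSA generate or execute test cases.

```python
# Hypothetical test ready model: (current state, stimulus) -> expected next state.
EXPECTED = {
    ("Idle", "arm"): "Armed",
    ("Armed", "motionSensed"): "IntrusionDetected",
    ("IntrusionDetected", "reset"): "Idle",
}


class FakeSecuritySystem:
    """Stand-in for the system under test; `step` returns the observed state."""

    def __init__(self):
        self.state = "Idle"

    def step(self, stimulus):
        # Deliberately faulty: ignores "reset", so the last check below fails.
        if (self.state, stimulus) in EXPECTED and stimulus != "reset":
            self.state = EXPECTED[(self.state, stimulus)]
        return self.state


def run_test(sut, stimuli, model=EXPECTED, initial="Idle"):
    """Send each stimulus and compare the observed state with the model.

    Raises KeyError if a stimulus is not allowed by the model in the
    expected state, i.e., the test sequence itself is invalid.
    """
    expected_state = initial
    verdicts = []
    for stimulus in stimuli:
        expected_state = model[(expected_state, stimulus)]
        observed = sut.step(stimulus)
        verdicts.append((stimulus, expected_state, observed, observed == expected_state))
    return verdicts


for verdict in run_test(FakeSecuritySystem(), ["arm", "motionSensed", "reset"]):
    print(verdict)
```

The first two checks pass, and the third reports that the model expected Idle after reset while the fake system stayed in IntrusionDetected.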

10 Related work

There are some works in the literature that deal with modeling uncertainty in UML. For example, the authors of [59] proposed performing fuzzy modeling with UML 1.5 without violating its semantics, based on theoretical analyses of UML 1.5. However, the proposed extensions to UML 1.5 were neither implemented nor validated. Moreover, there is no evidence that the proposed extensions are applicable to UML 2.x.

To model uncertainty (inherent in real-world applications) with UML class diagrams, an extension referred to as fuzzy UML data modeling was proposed in [60,61,62]. The extension relies on two theories, fuzzy sets and possibility distributions, and was later extended in [63] to transform fuzzy UML data models into representations in fuzzy description logic (FDLR) to check the correctness of fuzzy properties. Furthermore, another automated transformation was proposed in [64] to transform fuzzy UML data models into web ontologies to support automated reasoning on fuzzy properties in the context of web services.
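For readers unfamiliar with the two underlying theories, the Python sketch below illustrates them in their textbook form: a trapezoidal membership function defines a fuzzy set that can also be read as a possibility distribution, and the possibility of a crisp set of values is the maximum of the distribution over that set. The function names and the "about 30" age example are hypothetical and do not reproduce the notation of [60,61,62].

```python
def trapezoid(a, b, c, d):
    """Trapezoidal membership function with support (a, d) and core [b, c].

    Requires a < b <= c < d.
    """
    def mu(x):
        if x <= a or x >= d:
            return 0.0
        if x < b:
            return (x - a) / (b - a)  # rising edge
        if x <= c:
            return 1.0  # fully possible values
        return (d - x) / (d - c)  # falling edge
    return mu


def possibility(pi, event):
    """Possibility measure of a crisp set of values: the max of pi over it."""
    return max((pi(x) for x in event), default=0.0)


# Hypothetical fuzzy attribute value: a person's age described as "about 30".
about_30 = trapezoid(25, 28, 32, 35)
print(about_30(30))  # 1.0
print(possibility(about_30, range(20, 27)))  # possibility that the age is 20 to 26
```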

In [65], a UML profile (named fuzzy UML) was proposed to model uncertainty in use case diagrams, sequence diagrams, and state machines. Another work [66] formalizes UML state diagrams with fuzzy information and transforms them into fuzzy Petri nets to support automated verification and performance analysis. In [67], the authors developed two stereotypes for UML collaboration diagrams, moveTo and moveTo?. The first stereotype is applied when a modeler has full confidence, whereas the second is used when the modeler lacks confidence.

In comparison with these works, UncerTum focuses on modeling uncertainty comprehensively and precisely by considering various types of measures such as probability, vagueness, and fuzziness. The methodologies proposed in [60,61,62] for specifying fuzzy UML data can easily be integrated with our Model Libraries when needed. Note that UncerTum is proposed to explicitly capture the uncertainty of CPSs for the purpose of supporting MBT of CPSs under uncertainty, and there is no evidence that these works can be used for this purpose.

The work reported in [68] is the closest to ours: uncertainty in time is modeled in UML sequence diagrams to which the UML-SPT profile [69] is applied. These sequence diagrams are then used for test case generation, taking the uncertainties in time into consideration. This work, however, only supports modeling uncertainty in time on messages of sequence diagrams. In contrast, UncerTum covers other types of uncertainty in addition to time, such as content and environment. Moreover, the work does not account for sources of time uncertainty, which must be explicitly captured in order to introduce uncertainties during test execution.

In [70], the authors presented a solution to transform UML use case diagrams and state diagrams into usage graphs annotated with probability information about the expected use of the software. Such probability information can be obtained in several ways, for example, from domain expertise or usage profiles of the software. Usage graphs with probabilities can eventually be used for testing. This work only deals with modeling uncertainty using probabilities and does not support other types of uncertainty measures, such as ambiguity, as UncerTum does. In addition, the work only supports modeling application-level uncertainties and, unlike UncerTum, cannot be used to model uncertainties at the other two CPS levels.
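As a rough illustration of how a probability-annotated usage graph can drive testing, the Python sketch below encodes a small usage graph as a Markov-chain-style table of outgoing transition probabilities, computes the probability of a given usage path, and samples a test sequence according to those probabilities. The graph, the state names, and both helper functions are hypothetical; this is a generic statistical usage testing sketch, not necessarily the representation used in [70].

```python
import random

# Hypothetical usage graph: state -> list of (next state, transition probability).
USAGE = {
    "Start": [("Login", 1.0)],
    "Login": [("Browse", 0.7), ("Exit", 0.3)],
    "Browse": [("Browse", 0.5), ("Exit", 0.5)],
}


def path_probability(path):
    """Product of transition probabilities along `path` (KeyError if an edge is absent)."""
    prob = 1.0
    for src, dst in zip(path, path[1:]):
        prob *= dict(USAGE[src])[dst]
    return prob


def sample_test_sequence(rng, start="Start", end="Exit", max_len=20):
    """Random walk from `start`, choosing successors by their probabilities."""
    path = [start]
    while path[-1] != end and len(path) < max_len:
        targets, weights = zip(*USAGE[path[-1]])
        path.append(rng.choices(targets, weights=weights)[0])
    return path


print(path_probability(["Start", "Login", "Browse", "Exit"]))  # 0.35
print(sample_test_sequence(random.Random(0)))
```

Passing a seeded `random.Random` instance keeps the generated test sequences reproducible across runs.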

In [71], a language-independent solution was proposed for partial modeling with four types of partiality (May partiality, Abs partiality, Var partiality, and OW partiality) to denote the degree of incompleteness specified by model designers. The work also provides a solution, with tool support [72, 73], for merging and reasoning about partial models. The approach was demonstrated on UML class and sequence diagrams [71]. This work is related to ours in terms of expressing the uncertainty of modelers. In UUP, the Belief-related stereotypes and classes capture the subjective views of modelers and provide modeling notations for specifying their degree of confidence (uncertainty) in the models they build. A set of possible models may have different belief degrees provided by different belief agents at the same time. Their work focuses on uncertainty in partial models to support model refinement and evolution. In contrast, UUP focuses on modeling uncertainty (lack of confidence) in test ready models to support MBT of CPSs under uncertainty.
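The idea of May partiality can be illustrated with a small Python sketch: elements annotated as "maybe" may or may not be present, so a partial model stands for a set of concrete models (its concretizations), optionally restricted by a constraint over the maybe-elements. The function, the encoding of the constraint as a Python predicate, and the element names are hypothetical simplifications; they do not reproduce the notation or tool support of [71,72,73], and the other partiality types are not covered.

```python
from itertools import combinations


def concretizations(certain, maybe, may_constraint=lambda chosen: True):
    """Enumerate the concrete models of a partial model with May partiality.

    certain: elements present in every concretization.
    maybe: elements that may or may not be present.
    may_constraint: predicate over the chosen maybe-elements restricting
        which combinations are allowed (default: no restriction).
    """
    result = []
    for k in range(len(maybe) + 1):
        for chosen in combinations(maybe, k):
            if may_constraint(set(chosen)):
                result.append(frozenset(certain) | frozenset(chosen))
    return result


# Hypothetical partial model: Sensor and Alarm are certain; Camera and Siren are "maybe".
models = concretizations({"Sensor", "Alarm"}, ["Camera", "Siren"])
print(len(models))  # 4: neither, Camera only, Siren only, both

# Constraint: at least one of the maybe-elements must be present.
constrained = concretizations({"Sensor", "Alarm"}, ["Camera", "Siren"],
                              lambda chosen: len(chosen) >= 1)
print(len(constrained))  # 3
```

In UUP terms, one could roughly think of attaching a belief degree to each such concretization, which is where the two approaches differ in intent.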

11 Conclusion and future work