Abstract

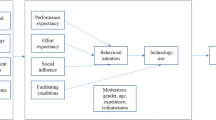

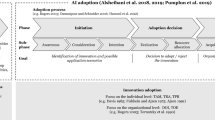

Business intelligence (BI) systems are widely used to guide decisions in all kinds of organizations and across hierarchical levels and functions. Organizations have launched many initiatives to provide adequate and timely decision support, an important factor in achieving and sustaining competitive advantage. In today’s turbulent environments it is increasingly challenging to bridge the gap between establishing a long-term strategy and quickly adapting to the dynamics of market competition. BI must address this field of tension, as it was originally used to reflect an organization’s performance retrospectively and builds upon stability and efficiency. This study aims to understand how agile BI can be achieved from a dynamic capability perspective. We therefore investigate how dynamic BI capabilities, i.e., adoption of assets, market understanding and intimacy, and business operations, impact the agility of BI. Starting from a literature review of dynamic capabilities in information systems and BI, we propose hypotheses that connect dynamic BI capabilities with the agility to provide information. The derived hypotheses were tested using partial least squares structural equation modeling on data collected in a questionnaire-based survey. The results show that the lens of dynamic capabilities provides a useful means to foster BI agility. The study identifies technological advancements such as in-memory technology as an apparent enabler of BI agility. However, an adequate adoption and integration of BI assets as well as a focus on market orientation and business operations are crucial to reach BI agility.

Notes

BI agility may then enable further concepts, e.g., sustainable corporate advantage. However, a study beyond BI agility is outside the scope of this paper.

The result of the measurement item validation from the pre-study is shown in Appendix G.

For the questionnaire statements as well as the detailed literature sources for the items please refer to Appendix A, Table 10.

Tables 11 and 12 in Appendix B provide detailed information about the participants.

Below, the steps “general evaluation” and “organization-specific evaluation” are also referred to as “general view” and “organization-specific implementations”.

See Appendix C for details.

Further details are shown in Appendix D.

Further details are available in Appendix E.

Details for the model evaluation with controlled organization size can be found in Appendix H.

Further details of the model validation are shown in Appendix D.

Indirect effects represent a relationship between constructs via a third construct, e.g., a mediator. If x is the path coefficient between an independent and a mediator variable and y is the path coefficient between the mediator variable and the dependent variable, the indirect effect is the product of x and y. If only one path exists between two variables the total effect equals the direct effect.
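As a worked illustration of this definition, the following minimal Python sketch computes indirect and total effects. The function names and the coefficient values are hypothetical and are not taken from the study. The sketch assumes the usual path-modeling convention that the total effect is the direct effect plus the sum of all indirect effects.

```python
def indirect_effect(x: float, y: float) -> float:
    """Indirect effect via one mediator: product of the two path coefficients."""
    return x * y


def total_effect(direct: float, mediated_paths: list[tuple[float, float]]) -> float:
    """Direct effect plus the sum of all indirect effects.

    mediated_paths holds one (x, y) pair per mediator, where x is the path
    from the independent variable to the mediator and y is the path from
    the mediator to the dependent variable.
    """
    return direct + sum(indirect_effect(x, y) for x, y in mediated_paths)


# Hypothetical coefficients, for illustration only.
print(indirect_effect(0.5, 0.4))        # product of the two paths
print(total_effect(0.3, [(0.5, 0.4)]))  # direct plus indirect effect
print(total_effect(0.3, []))            # no mediator: total equals direct
```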

Further details of the model validation are shown in Appendix E.

Further details can be found in Appendix F.

References

Abbasi A, Chen H (2008) CyberGate: a system and design framework for text analysis of computer-mediated communication. MIS Q 32(4):811–837

Aghina W, De Smet A, Weerda K (2016) Agility: it rhymes with stability. McKinsey Quarterly 2016(1):58–69

Aguirre-Urreta M, Rönkkö M (2015) Sample size determination and statistical power analysis in PLS using R: an annotated tutorial. Commun Assoc Inf Syst (CAIS) 36(3):33–51

Akter S, D’Ambra J, Ray P (2011) An evaluation of PLS based complex models: the roles of power analysis, predictive relevance and GoF index. In: Proceedings of the 2011 Americas conference on information systems (AMCIS 2011)

Aral S, Weill P (2007) IT assets, organizational capabilities, and firm performance: how resource allocations and organizational differences explain performance variation. Organ Sci 18(5):763–780. doi:10.1287/orsc.1070.0306

Baars H, Hütter H (2015) A framework for identifying and selecting measures to enhance BI-agility. In: Proceedings of the 48th Hawaii international conference on system sciences (HICSS 2015). IEEE, Kauai, pp 4712–4721

Baars H, Kemper H (2008) Management support with structured and unstructured data—an integrated business intelligence framework. Inf Syst Manag 25(2):132–148. doi:10.1080/10580530801941058

Baars H, Felden C, Gluchowski P, Hilbert A, Kemper H, Olbrich S (2014) Shaping the next incarnation of business intelligence: towards a flexibly governed network of information integration and analysis capabilities. Bus Inf Syst Eng 6(1):11–16. doi:10.1007/s12599-013-0307-z

Bagozzi RP, Yi Y, Phillips LW (1991) Assessing construct validity in organizational research. Adm Sci Q 36(3):421–458

Banker RD, Bardhan IR, Chang H, Lin S (2006) Plant information systems, manufacturing capabilities, and plant performance. MIS Q 30(2):315–337

Barney J (1991) Firm resources and sustained competitive advantage. J Manag 17:99–120

Beatty P, Herrmann D, Puskar C, Kerwin J (1998) “Don’t know” responses in surveys: Is what I know what you want to know and do I want you to know it? Memory 6(4):407–426

Bhattacherjee A (2012) Social science research: principles, methods, and practices, 2nd edn. Book 3. Open Access Textbooks. http://scholarcommons.usf.edu/oa_textbooks/3. Accessed 14 Apr 2012

Bort J (2015) SAP just shared detailed customer figures for its most important product. http://www.businessinsider.com/sap-shares-hana-customer-numbers-2015-6?IR=T. Accessed 8 Oct 2016

Butler T, Murphy C (2008) An exploratory study on IS capabilities and assets in a small-to-medium software enterprise. J Inf Technol 23(4):330–344

Caruso D (2011) Bringing agility to business intelligence. http://www.information-management.com/infodirect/2009_191/business_intelligence_metadata_analytics_ETL_data_management-10019747-1.html. Accessed 13 April 2012

Chen X, Siau K (2012) Effect of business intelligence and IT infrastructure flexibility on organizational agility. In: Proceedings of the international conference on information systems, ICIS 2012. Association for Information Systems, Orlando

Chen H, Chiang RHL, Storey VC (2012) Business intelligence and analytics: from big data to big impact. MIS Q 36(4):1165–1188

Chenoweth T, Corral K, Demirkan H (2006) Seven key interventions for data warehouse success. Commun ACM 49(1):114–119. doi:10.1145/1107458.1107464

Chin WW (1998) The partial least squares approach to structural equation modeling. In: Marcoulides GA (ed) Modern methods for business research. Lawrence Erlbaum Associates, Hillsdale, pp 294–336

Chung W, Chen H, Nunamaker JF (2005) A visual knowledge map framework for the discovery of business intelligence on the web. J Manag Inf Syst 21(4):57–84

Clavier PR, Lotriet HH, van Loggerenberg JJ (2012) Business intelligence challenges in the context of goods- and service-dominant logic. In: Proceedings of the 45th Hawaii international conference on system science (HICSS 2012)

Cohen J (1988) Statistical power analysis for the behavioral sciences, 2nd edn. Lawrence Erlbaum Associates, Hillsdale

Collier K (2011) Agile analytics: a value-driven approach to business intelligence and data warehousing. Addison-Wesley, Upper Saddle River

Collis DJ (1994) Research note: how valuable are organizational capabilities? Strateg Manag J 15(S1):143–152. doi:10.1002/smj.4250150910

Conboy K (2009) Agility from first principles: reconstructing the concept of agility in information systems development. Inf Syst Res 20(3):329–354

Conboy K, Fitzgerald B (2004) Toward a conceptual framework of agile methods: a study of agility in different disciplines. In: Proceedings of the 2004 ACM workshop on interdisciplinary software engineering research. ACM, New York, pp 37–44

Cosic R, Shanks G, Maynard S (2012) Towards a business analytics capability maturity model. In: Proceedings of the 23rd Australasian conference on information systems (ACIS)

Daniel EM, Wilson HN (2003) The role of dynamic capabilities in e-Business transformation. Eur J Inf Syst (EJIS) 12(4):282–296. doi:10.1057/palgrave.ejis.3000478

Daniel EM, Ward JM, Franken A (2014) A dynamic capabilities perspective of IS project portfolio management. J Strateg Inf Syst 23(2):95–111. doi:10.1016/j.jsis.2014.03.001

Davenport TH, Harris JG (2007) Competing on analytics: the new science of winning. Harvard Business School Press, Boston

de Leeuw ED, Hox JJ, Dillman DA (2008) International handbook of survey methodology. EAM book series. Lawrence Erlbaum Associates, New York

DeVellis RF (2003) Scale development: theory and applications. Applied Social Research Methods Series, vol 26, 2nd edn. Sage, Thousand Oaks

Dillman DA, Smyth JD, Christian LM (2009) Internet, mail, and mixed mode surveys: the tailored design method, 3rd edn. Wiley, Hoboken

Dosi G, Nelson RR, Winter SG (2000) Introduction: the nature and dynamics of organizational capabilities. In: Dosi G, Nelson RR, Winter SG (eds) The nature and dynamics of organizational capabilities. Oxford University Press, New York, pp 1–22

Dove R (2005) Fundamental principles for agile systems engineering. In: Proceedings CSER 2005. Stevens Institute of Technology, Hoboken

Drnevich PL, Croson DC (2013) Information technology and business-level strategy: toward an integrated theoretical perspective. MIS Q 37(2):483–510

Drnevich PL, Kriauciunas AP (2011) Clarifying the conditions and limits of the contributions of ordinary and dynamic capabilities to relative firm performance. Strateg Manag J 32(3):254–279. doi:10.1002/smj.882

Eisenhardt K, Martin J (2000) Dynamic capabilities: what are they? Strateg Manag J 21:1105–1121

El Sawy OA, Malhotra A, Park Y, Pavlou PA (2010) Seeking the configurations of digital ecodynamics: it takes three to tango. Inf Syst Res 21(4):835–848

Erickson J, Lyytinen K, Siau K (2005) Agile modeling, agile software development, and extreme programming: the state of research. J Database Manag 16(4):88–100

Evelson B (2011) Trends 2011 and beyond: business intelligence. Agility will shape business intelligence for the next decade. Forrester Research. www.forrester.com/go?objectid=RES58854. Accessed 13 April 2012

Fainshmidt S, Pezeshkan A, Lance Frazier M, Nair A, Markowski E (2016) Dynamic capabilities and organizational performance: a meta-analytic evaluation and extension. J Manag Stud 53(8):1348–1380. doi:10.1111/joms.12213

Falk RF, Miller NB (1992) A primer for soft modeling, 1st edn. University of Akron Press, Akron

Fettke P (2006) State-of-the-Art des State-of-the-Art—Eine Untersuchung der Forschungsmethode “Review” innerhalb der Wirtschaftsinformatik. Wirtschaftsinformatik 48(4):257–266

Foley É, Guillemette MG (2010) What is business intelligence? Int J Bus Intell Res 1(4):1–28. doi:10.4018/jbir.2010100101

Fornell C, Larcker DF (1981) Evaluating structural equation models with unobservable variables and measurement error. J Mark Res 18(1):39–50

Galliers RD (2007) Strategizing for agility: confronting information systems inflexibility in dynamic environments. In: Desouza KC (ed) Agile information systems: conceptualization, construction, and management. Butterworth-Heinemann, Amsterdam, pp 1–15

Gartner (2009) Gartner Says organisations can save more than $500,000 per year by rationalising data integration tools. http://www.gartner.com/it/page.jsp?id=944512. Accessed 19 Nov 2011

Gartner (2013) Gartner says in-memory computing is racing towards mainstream adoption. http://www.gartner.com/newsroom/id/2405315. Accessed 8 Oct 2016

Gartner (2016) Gartner says worldwide business intelligence and analytics market to reach $16.9 billion in 2016. http://www.gartner.com/newsroom/id/3198917. Accessed 3 Jan 2017

Gefen D, Straub D (2005) A practical guide to factorial validity using PLS-Graph: tutorial and annotated example. Commun Assoc Inf Syst 16(1):91–109

Gefen D, Straub D, Boudreau M (2000) Structural equation modeling and regression: guidelines for research practice. Commun Assoc Inf Syst 4(1):7

Gold AH, Malhotra A, Segars AH (2001) Knowledge management: an organizational capabilities perspective. J Manag Inf Syst 18(1):185–214. doi:10.1080/07421222.2001.11045669

Goldman S, Preiss K, Nagel R, Dove R (1991) 21st century manufacturing enterprise strategy: an industry-led view, vol 1. Iacocca Institute, Lehigh University, Bethlehem

Hair J, Ringle C, Sarstedt M (2011) PLS-SEM: indeed a silver bullet. J Mark Theory Pract 19(2):139–152. doi:10.2753/MTP1069-6679190202

Hair JF, Hult GTM, Ringle CM, Sarstedt M (2014) A primer on partial least squares structural equation modeling (PLS-SEM). Sage, Los Angeles

Hair JF, Hult GTM, Ringle CM, Sarstedt M (2017) A primer on partial least squares structural equation modeling (PLS-SEM), 2nd edn. Sage, Los Angeles

Helfat CE, Peteraf MA (2003) The dynamic resource-based view: capability lifecycles. Strateg Manag J 24(10):997–1010

Henseler J, Ringle CM, Sinkovics RR (2009) The use of partial least squares path modeling in international marketing. Adv Int Mark 20(2009):277–319

Henseler J, Ringle CM, Sarstedt M (2015) A new criterion for assessing discriminant validity in variance-based structural equation modeling. J Acad Mark Sci 43(1):115–135

Hoopes DG, Madsen TL (2008) A capability-based view of competitive heterogeneity. Ind Corp Change. doi:10.1093/icc/dtn008

Hughes R (2008) Agile data warehousing: delivering world-class business intelligence systems using scrum and XP. iUniverse, New York

Hulland J (1999) Use of partial least squares (PLS) in strategic management research: a review of four recent studies. Strateg Manag J 20(2):195–204. doi:10.1002/(SICI)1097-0266(199902)20:2<195:AID-SMJ13>3.0.CO;2-7

Inmon WH (1996) Building the data warehouse, 2nd edn. Wiley Computer Publishing, Wiley, New York

Kim G, Shin B, Kim KK, Lee HG (2011) IT capabilities, process-oriented dynamic capabilities, and firm financial performance. J Assoc Inf Syst 12(7):487

Knabke T, Olbrich S (2011) Towards agile BI: applying in-memory technology to data warehouse architectures. In: Lehner W, Piller G (eds) Proceedings zur Tagung Innovative Unternehmensanwendungen mit In-Memory-Data-Management: Beiträge der Tagung IMDM 2011. GI, Bonn, pp 101–114

Knabke T, Olbrich S (2013) Understanding information system agility—the example of business intelligence. In: Proceedings of the 46th Hawaii international conference on system sciences (HICSS 2013), pp 3817–3826

Knabke T, Olbrich S (2015a) Exploring the future shape of business intelligence: mapping dynamic capabilities of information systems to business intelligence agility. In: Proceedings of the 2015 Americas conference on information systems (AMCIS 2015)

Knabke T, Olbrich S (2015b) In-memory technology and the agility of business intelligence—a case study at a German Sportswear Company. In: Proceedings of the 19th Pacific Asia conference on information systems (PACIS 2015)

Knabke T, Olbrich S, Fahim S (2014) Impacts of in-memory technology on data warehouse architectures—a prototype implementation in the field of aircraft maintenance and service. In: Tremblay MC, VanderMeer DE, Rothenberger MA, Gupta A, Yoon VY (eds) Advancing the impact of design science: moving from theory to practice. Proceedings of the 9th international conference (DESRIST 2014). Springer, Berlin, pp 383–387

Knabke T, Olbrich S, Biederstedt L (2015) Considering risks in planning and budgeting process—a prototype implementation in the automotive industry. In: Donnellan B, Helfert M, Kenneally J, VanderMeer DE, Rothenberger MA, Winter R (eds) New horizons in design science: broadening the research agenda. Proceedings of the 10th international conference (DESRIST 2015). Springer, Berlin, pp 401–405

Köffer S, Ortbach K, Junglas I, Niehaves B, Harris J (2015) Innovation through BYOD? Bus Inf Syst Eng 57(6):363–375. doi:10.1007/s12599-015-0387-z

Kohli R, Devaraj S, Mahmood MA (2004) Understanding determinants of online consumer satisfaction: a decision process perspective. J Manag Inf Syst 21(1):115–136

Krawatzeck R, Dinter B (2015) Agile business intelligence: collection and classification of agile business intelligence actions by means of a catalog and a selection guide. Inf Syst Manag 32(3):177–191. doi:10.1080/10580530.2015.1044336

Krawatzeck R, Dinter B, Pham Thi DA (2015) How to make business intelligence agile: the Agile BI actions catalog. In: Proceedings of the 48th Hawaii international conference on system sciences (HICSS 2015). IEEE, Kauai, pp 4762–4771

Laney D (2001) 3D data management: controlling data volume, velocity, and variety. Application Delivery Strategies

Li X, Hsieh JJP, Rai A (2013) Motivational differences across post-acceptance information system usage behaviors: an investigation in the business intelligence systems context. Inf Syst Res 24(3):659–682. doi:10.1287/isre.1120.0456

Limaj E, Bernroider EW, Choudrie J (2016) The impact of social information system governance, utilization, and capabilities on absorptive capacity and innovation: a case of Austrian SMEs. Inf Manag 53(3):380–397. doi:10.1016/j.im.2015.12.003

Luftman J, Derksen B, Dwivedi R, Santana M, Zadeh HS, Rigoni E (2015) Influential IT management trends: an international study. J Inf Technol 30(3):293–305

Makadok R (2001) Toward a synthesis of the resource-based and dynamic-capability views of rent creation. Strateg Manag J 22(5):387–401. doi:10.2307/3094265

March ST, Hevner AR (2007) Integrated decision support systems: a data warehousing perspective. Decis Support Syst 43(3):1031–1043

Marcoulides GA, Saunders C (2006) Editor’s comments: PLS: a silver bullet? MIS Q 30(2):iii–ix

Marjanovic O (2007) The next stage of operational business intelligence: creating new challenges for business process management. In: Proceedings of the 40th annual Hawaii international conference on system sciences (HICSS’07). IEEE Computer Society, Washington

MarketsandMarkets (2015) In-memory computing market by component (IMDM, IMAP), sub-components (IMDB, IMDG), solutions (OLAP, OLTP), verticals (BFSI, Retail, Government, & others)—global forecast to 2020. http://www.marketsandmarkets.com/Market-Reports/in-memory-computing-820.html. Accessed 8 Oct 2010

McCoy DW, Plummer DC (2006) Defining, cultivating and measuring enterprise agility. Gartner. http://www.gartner.com/id=491436. Accessed 9 April 2012

Members of the Senior Scholars Consortium (2011) Senior Scholars’ Basket of Journals. http://aisnet.org/?SeniorScholarBasket. Accessed 19 Feb 2015

Mikalef P, Pateli AG, van de Wetering R (2016) IT flexibility and competitive performance: the mediating role of IT-enabled dynamic capabilities. In: Proceedings of the 24th European conference on information systems (ECIS 2016), Istanbul, Turkey, Paper 176

Moss L (2007) Extreme scoping—an Agile project management approach. EIMInsight Mag 1(5)

Moss L (2009) Beware of scrum fanatics on DW/BI projects. EIMInsight Mag 3(3)

Negash S (2004) Business intelligence. Commun Assoc Inf Syst 13:15

Nevo S, Wade MR (2010) The formation and value of IT-enabled resources: antecedents and consequences of synergistic relationships. MIS Q 34(1):163–183

Nucleus Research (2014) Analytics pays back $13.01 for every dollar spent. http://nucleusresearch.com/research/single/analytics-pays-back-13-01-for-every-dollar-spent/. Accessed 10 Feb 2016

Nunnally JC, Bernstein IH (2010) Psychometric theory, 3rd edn, international student edn, reprint. McGraw-Hill Series in Psychology. Tata McGraw-Hill Ed, New Delhi

Olszak CM (2014) Dynamic business intelligence and analytical capabilities in organizations. In: Proceedings of the e-skills for knowledge production and innovation conference 2014, pp 289–303

Olszak CM (2016) Toward better understanding and use of business intelligence in organizations. Inf Syst Manag 33(2):105–123. doi:10.1080/10580530.2016.1155946

Overby E, Bharadwaj A, Sambamurthy V (2006) Enterprise agility and the enabling role of information technology. Eur J Inf Syst 15(2):120–131. doi:10.1057/palgrave.ejis.3000600

Pankaj, Hyde M, Ramaprasad A, Tadisina SK (2009) Revisiting agility to conceptualize information systems agility. In: Lytras MD, Ordóñez de Pablos P (eds) Emerging topics and technologies in information systems. Information Science Reference, Hershey, pp 19–54

Pavlou PA, El Sawy O (2006) From IT leveraging competence to competitive advantage in turbulent environments: the case of new product development. Inf Syst Res 17(3):198–227

Pavlou PA, El Sawy OA (2011) Understanding the elusive black box of dynamic capabilities. Decis Sci 42(1):239–273

Pavlou PA, Liang H, Xue Y (2007) Understanding and mitigating uncertainty in online exchange relationships: a principal-agent perspective. MIS Q 31(1):105–136

Plattner H (2009) A common database approach for OLTP and OLAP using an in-memory column database. In: Proceedings of the 35th SIGMOD international conference on management of data. Providence

Plattner H, Zeier A (2011) In-memory data management: an inflection point for enterprise application. Springer, Berlin

Podsakoff PM, MacKenzie SB, Lee J, Podsakoff NP (2003) Common method biases in behavioral research: a critical review of the literature and recommended remedies. J Appl Psychol 88(5):879–903. doi:10.1037/0021-9010.88.5.879

Poonacha KM, Bhattacharya S (2012) Towards a framework for assessing agility. In: Proceedings of the 45th Hawaii international conference on system science (HICSS 2012), pp 5329–5338

Porter ME (1979) How competitive forces shape strategy. Harv Bus Rev 57(2):137–145

Porter ME (2008) The five competitive forces that shape strategy. Harv Bus Rev 86(1):78–93

Rifaie M, Kianmehr K, Alhajj R, Ridley MJ (2008) Data warehouse architecture and design. In: Proceedings of the IEEE international conference on information reuse and integration, IRI 2008. IEEE Systems, Man, and Cybernetics Society, Las Vegas, pp 58–63

Ringle C, Wende S, Will A (2005) SmartPLS: version 2.0.M3. http://www.smartpls.com/. Accessed 19 Nov 2015

Ringle CM, Wende S, Becker J (2015) SmartPLS 3: version 3.2.3. http://www.smartpls.com. Accessed 14 Dec 2015

Rivard S, Raymond L, Verreault D (2006) Resource-based view and competitive strategy: An integrated model of the contribution of information technology to firm performance. J Strateg Inf Syst 15(1):29–50. doi:10.1016/j.jsis.2005.06.003

Roberts N, Grover V (2012) Leveraging information technology infrastructure to facilitate a firm’s customer agility and competitive activity: an empirical investigation. J Manag Inf Syst 28(4):231–270. doi:10.2753/MIS0742-1222280409

Rockart JF, Earl MJ, Ross JW (1996) Eight imperatives for the new IT organization. Sloan Manag Rev 38(1):43–55

Rouse WB (2007) Agile information systems for agile decision making. In: Desouza KC (ed) Agile information systems: conceptualization, construction, and management. Butterworth-Heinemann, Amsterdam, pp 16–30

Sambamurthy V, Bharadwaj AS, Grover V (2003) Shaping agility through digital options: reconceptualizing the role of information technology in contemporary firms. MIS Q 27(2):237–263

Sarstedt M, Henseler J, Ringle CM (2011) Multigroup analysis in partial least squares (PLS) path modeling: alternative methods and empirical results. Adv Int Mark 22:195–218

Schaffner J, Bog A, Krüger J, Zeier A (2009) A hybrid row-column OLTP database architecture for operational reporting. In: Business intelligence for the real-time enterprise. Springer, Berlin, pp 61–74

Schwaber K (1997) SCRUM development process. In: Sutherland J, Patel P, Casanave C, Hollowell G, Miller J (eds) Business object design and implementation: OOPSLA ‘95 workshop proceedings, Austin, Texas. Springer, London, pp 117–134

Sharifi H, Zhang Z (1999) A methodology for achieving agility in manufacturing organisations: an introduction. Int J Prod Econ 62(1/2):7–22

Shin B (2003) An exploratory investigation of system success factors in data warehousing. J Assoc Inf Syst 4(1):141–170

Simon HA (1977) The new science of management decision, revised edn. Prentice-Hall, Englewood Cliffs

Simon HA (1996) The sciences of the artificial, 3rd edn. MIT Press, Cambridge

Singh R, Mathiassen L, Stachura ME, Astapova EV (2011) Dynamic capabilities in home health: IT-enabled transformation of post-acute care. J Assoc Inf Syst 12(2):163–188

Soper DS (2017) Post-hoc statistical power calculator for multiple regression [Software]. http://www.danielsoper.com/statcalc. Accessed 7 Jan 2017

Spanos YE, Lioukas S (2001) An examination into the causal logic of rent generation: contrasting Porter’s competitive strategy framework and the resource-based perspective. Strateg Manag J 22(10):907–934. doi:10.1002/smj.174

Sprague RH (1980) A framework for the development of decision support systems. MIS Q 4(4):1–26

Straub D, Boudreau M, Gefen D (2004) Validation guidelines for IS positivist research. Commun Assoc Inf Syst 13(24):380–427

Teece DJ, Pisano G, Shuen A (1997) Dynamic capabilities and strategic management. Strateg Manag J 18(7):509–533

Towill D, Christopher M (2002) The supply chain strategy conundrum: to be lean or agile or to be lean and agile? Int J Logist Res Appl 5(3):299–309. doi:10.1080/1367556021000026736

Urbach N, Ahlemann F (2010) Structural equation modeling in information systems research using partial least squares. J Inf Technol Theory Appl 11(2):5–40

van Oosterhout M, Waarts E, van Hillegersberg J (2006) Change factors requiring agility and implications for IT. Eur J Inf Syst 15(2):132–145

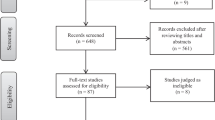

vom Brocke J, Simons A, Niehaves B, Riemer K, Plattfaut R, Cleven A (2009) Reconstructing the giant: on the importance of rigour in documenting the literature search process. In: Newell S, Whitley EA, Pouloudi N, Wareham J, Mathiassen L (eds) 17th European conference on information systems (ECIS 2009), pp 2206–2217

Wade M, Hulland J (2004) Review: the resource-based view and information systems research: review, extension, and suggestions for future research. MIS Q 28(1):107–142

Watson HJ (2009) Tutorial: business intelligence—past, present, and future. Commun Assoc Inf Syst 25(Article 39):487–511

Watson HJ, Wixom BH (2007a) Enterprise agility and mature BI capabilities. Bus Intell J 12(3):4

Watson HJ, Wixom BH (2007b) The current state of business intelligence. Computer 40(9):96–99. doi:10.1109/MC.2007.331

Watson HJ, Hogue JT, White MC (1984) An investigation of DSS developmental methodology. In: Proceedings of the 17th annual Hawaii international conference on system sciences (HICSS’84)

Webster J, Watson RT (2002) Analyzing the past to prepare for the future: writing a literature review. MIS Q 26(2):xiii–xxiii

Wernerfelt B (1984) A resource-based view of the firm. Strateg Manag J 5:171–180

Westerman G, Bonnet D, McAfee A (2014) Leading digital: turning technology into business transformation. Harvard Business Review Press, Boston

Winter S (2003) Understanding dynamic capabilities. Strateg Manag J 24(10):991–995

Wixom BH, Watson HJ (2001) An empirical investigation of the factors affecting data warehousing success. MIS Q 25(1):17–41

Wixom BH, Watson HJ (2010) The BI-based organization. Int J Bus Intell Res 1(1):13–28

Xu H, Hwang MI (2007) The effect of implementation factors on data warehousing success: an exploratory study. J Inf Inf Technol Organ 2:1–14

Zahra SA, Sapienza HJ, Davidsson P (2006) Entrepreneurship and dynamic capabilities: a review, model and research agenda. J Manag Stud 43(4):917–955

Zimmer M, Baars H, Kemper HG (2012) The impact of agility requirements on business intelligence architectures. In: Proceedings of the 45th Hawaii international conference on system science (HICSS 2012), pp 4189–4198

Zollo M, Winter SG (2002) Deliberate learning and the evolution of dynamic capabilities. Organ Sci 13(3):339–351

Appendices

Appendix A: Survey measures

Table 10 contains the items of our constructs and their literature sources. All items are rated on a 7-point Likert scale. For each item, the participants first rate its importance in general and then rate their own organization.

We are well aware of the discussion about the pros and cons of “don’t know responses” (Beatty et al. 1998; Dillman et al. 2009). As the respondents’ best subjective estimation adds value to our analysis, and to avoid missing values, we used mandatory questions in the survey.

Appendix B: Survey statistics

Table 11 illustrates the work experience of the participants. Fifty-three percent have more than 10 years of work experience. Further information about the participants, such as industries or consultant rate, is shown in Table 12.

Appendix C: Common method bias

Common method bias (CMB) is a potential problem in survey research. It exists if a significant amount of spurious variance is attributed to the measurement or data collection method rather than to the constructs that the measures represent. Ex-post statistical analysis, e.g., correlation analysis, helps to identify common method variance in the collected data (Chen and Siau 2012; Urbach and Ahlemann 2010; Podsakoff et al. 2003).

According to Pavlou et al. (2007), Chen and Siau (2012), and Bagozzi et al. (1991), correlation analysis is a useful means to detect common method bias. A correlation of 0.9 or above among the main constructs of a model (Pavlou et al. 2007; Chen and Siau 2012) indicates common method bias. The highest correlation among the constructs in this study is 0.73 for the general view (see Table 13) and 0.83 for the organization-specific implementations (see Table 14). Both values are below this threshold, which suggests that no common method bias exists. The sample size is n = 110.
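The correlation check described above can be sketched in a few lines of Python. This is a minimal illustration, not the procedure used to produce Tables 13 and 14: the function names and the example matrix are hypothetical, and only the maximum of 0.73 mirrors a value reported in the text.

```python
CMB_THRESHOLD = 0.9  # cut-off cited from Pavlou et al. (2007) and Chen and Siau (2012)


def highest_construct_correlation(corr: list[list[float]]) -> float:
    """Largest absolute off-diagonal entry of a symmetric correlation matrix."""
    n = len(corr)
    return max(abs(corr[i][j]) for i in range(n) for j in range(i + 1, n))


def indicates_cmb(corr: list[list[float]], threshold: float = CMB_THRESHOLD) -> bool:
    """True if any construct correlation reaches the threshold."""
    return highest_construct_correlation(corr) >= threshold


# Hypothetical three-construct matrix, for illustration only.
example = [
    [1.00, 0.73, 0.41],
    [0.73, 1.00, 0.55],
    [0.41, 0.55, 1.00],
]
print(highest_construct_correlation(example), indicates_cmb(example))
```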

Appendix D: Model validation (main study): general view

We conducted a series of tests to validate our outer measurement model (n = 110).

4.1 Indicator reliability

Indicator reliability describes the extent to which an item (or a variable or a set of variables) consistently measures what it is intended to measure. It can be assessed by the standardized outer loading of an item. Indicator loadings should be significant at the 0.05 level and should exceed 0.7, or 0.5 if squared loadings are used (Urbach and Ahlemann 2010; Hair et al. 2014; Henseler et al. 2009). For exploratory research with newly developed items, lower thresholds have been proposed. Hulland (1999) suggests dropping an item if its loading is below 0.4 or 0.5. However, reflective indicators have to be eliminated with care, and only if the reliability of the item is low and its elimination substantially increases the composite reliability (Henseler et al. 2009). Table 15 contains the item loadings as well as the t values and p levels.
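To make the thresholds concrete, the following Python sketch classifies a single indicator. It is an illustration under stated assumptions: the function names, the three labels, and the choice of 0.4 as the lower bound (Hulland 1999 names 0.4 or 0.5) are ours, and the example values are hypothetical. Squaring a loading of 0.7 gives roughly 0.5, which is why the two cut-offs for loadings and squared loadings correspond.

```python
def squared_loading(loading: float) -> float:
    """Share of an item's variance that is explained by its construct."""
    return loading ** 2


def assess_indicator(loading: float, p_value: float,
                     keep_above: float = 0.7, drop_below: float = 0.4,
                     alpha: float = 0.05) -> str:
    """Classify one reflective indicator by its standardized outer loading."""
    if loading < drop_below:
        return "candidate for removal"
    if loading > keep_above and p_value < alpha:
        return "reliable"
    # Between the thresholds (or not significant): inspect before deciding,
    # e.g. whether removal would substantially raise composite reliability.
    return "review"


# Hypothetical loadings and p values, for illustration only.
for loading, p in [(0.82, 0.001), (0.55, 0.010), (0.35, 0.200)]:
    print(loading, round(squared_loading(loading), 2), assess_indicator(loading, p))
```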

4.2 Internal consistency reliability

We computed two criteria to assess internal consistency reliability: Cronbach’s alpha (CA) and composite reliability (CR). CA estimates reliability from the inter-correlations of the items within a construct and assumes that the items have the same meaning and range (Urbach and Ahlemann 2010; Henseler et al. 2009). CA has known limitations: it assumes that all indicators are equally reliable and is sensitive to the number of items in a scale. Therefore, we also considered CR as suggested by Hair et al. (2014). Both CA and CR should exceed the threshold of 0.7 and must not be lower than 0.6 (Urbach and Ahlemann 2010). While Nunnally and Bernstein (2010) postulate a CA of 0.95 as a desirable standard in specific cases, Straub et al. (2004) consider values above 0.95 to be suspicious. As our constructs are newly developed, we follow the threshold of 0.70 for “early stage” research for CA and CR (Nunnally and Bernstein 2010; Henseler et al. 2009). The results of the tests for internal consistency reliability are shown in Table 4.
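For readers who want to reproduce the two criteria, the sketch below implements the standard textbook formulas for CA and CR using only the Python standard library. It is not the SmartPLS computation used in the study. The function names are hypothetical, and CR assumes standardized loadings with an error variance of one minus the squared loading.

```python
from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha; items[i] holds all respondents' scores on item i."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # sum score per respondent
    item_variance_sum = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


def composite_reliability(loadings: list[float]) -> float:
    """CR from standardized outer loadings of one construct."""
    squared_sum = sum(loadings) ** 2
    error_sum = sum(1 - loading ** 2 for loading in loadings)
    return squared_sum / (squared_sum + error_sum)


# Hypothetical 7-point Likert answers of five respondents to three items.
answers = [
    [5.0, 6.0, 4.0, 7.0, 3.0],
    [4.0, 6.0, 5.0, 7.0, 2.0],
    [5.0, 7.0, 4.0, 6.0, 3.0],
]
print(cronbach_alpha(answers))
print(composite_reliability([0.8, 0.8, 0.8]))
```

Both values would then be compared with the 0.7 threshold named above.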

4.3 Convergent validity

A commonly accepted criterion of convergent validity is the average variance extracted (AVE) proposed by Fornell and Larcker (1981). It measures the average amount of variance which a construct captures from its indicators in relation to the amount of measurement error. In other words, convergent validity tests whether an item measures the construct that it is supposed to measure. An AVE value of 0.5 or higher is deemed acceptable as it indicates that a construct explains more than half of the variance of its indicators (Urbach and Ahlemann 2010; Hair et al. 2014). Table 4 contains the AVE values.
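For standardized indicators, the AVE of a construct reduces to the mean of its squared outer loadings. A minimal sketch under that assumption follows; the function name and the loadings are hypothetical.

```python
def average_variance_extracted(loadings):
    """AVE of one construct: mean of the squared standardized loadings."""
    return sum(loading ** 2 for loading in loadings) / len(loadings)


# Hypothetical loadings of one construct, for illustration only.
ave = average_variance_extracted([0.8, 0.7, 0.9])
convergent_validity_ok = ave >= 0.5
```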

4.4 Discriminant validity

Discriminant validity describes the extent to which a construct is distinct from other constructs. If discriminant validity is established, it implies that a construct is unique and that its items do not unintentionally measure constructs they are not supposed to measure. Discriminant validity is usually assessed in two ways (Hair et al. 2014; Urbach and Ahlemann 2010). One way is to examine cross-loadings of indicators, and the other approach is to use the Fornell–Larcker criterion (Fornell and Larcker 1981). If cross-loadings are used, each indicator should load higher on its designated construct than on any other construct, and each construct’s highest loadings should come from its own items rather than from the items of other constructs (Urbach and Ahlemann 2010). Gefen and Straub (2005) suggest 0.1 as a threshold for the difference between own loading and loadings on other constructs.
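The cross-loading rule, including the 0.1 gap mentioned above, could be checked along the following lines. This is a sketch only; the data layout, the names, and the values are assumptions made for illustration.

```python
def cross_loading_check(loadings, assignment, min_gap=0.1):
    """Check each item's own loading against its cross-loadings.

    loadings: dict item -> dict construct -> loading.
    assignment: dict item -> name of the item's designated construct.
    An item passes if its own loading exceeds its highest cross-loading
    by at least min_gap.
    """
    result = {}
    for item, row in loadings.items():
        own_construct = assignment[item]
        own = row[own_construct]
        highest_other = max(
            value for construct, value in row.items() if construct != own_construct
        )
        result[item] = own - highest_other >= min_gap
    return result


# Hypothetical loadings for two constructs A and B.
example = {
    "a1": {"A": 0.85, "B": 0.40},
    "b1": {"A": 0.72, "B": 0.78},
}
check = cross_loading_check(example, {"a1": "A", "b1": "B"})
```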

The Fornell–Larcker criterion requires a construct to share more variance with its own indicators than with any other construct. It is fulfilled if the square root of a construct’s AVE is higher than its correlation with any other construct.
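A minimal sketch of this comparison is given below. The construct names, AVE values, and correlations are hypothetical, and the layout of the inputs is an assumption.

```python
from math import sqrt


def fornell_larcker(ave, correlations):
    """Evaluate the Fornell-Larcker criterion for every construct.

    ave: dict construct -> AVE.
    correlations: dict (construct_1, construct_2) -> latent correlation,
    with one entry per unordered pair of constructs.
    A construct passes if sqrt(AVE) exceeds its highest absolute
    correlation with any other construct.
    """
    result = {}
    for name in ave:
        related = [abs(r) for pair, r in correlations.items() if name in pair]
        result[name] = sqrt(ave[name]) > max(related, default=0.0)
    return result


# Hypothetical values, for illustration only (not taken from Table 4).
example_ave = {"A": 0.64, "B": 0.49, "C": 0.36}
example_corr = {("A", "B"): 0.50, ("A", "C"): 0.65, ("B", "C"): 0.30}
fl = fornell_larcker(example_ave, example_corr)
```

In this example, construct C fails because the square root of its AVE (0.6) is lower than its correlation with A (0.65).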

Recently, Henseler et al. (2015) introduced a new criterion for assessing discriminant validity, the heterotrait-monotrait (HTMT) ratio of correlations. Values below the threshold of 0.9 are deemed acceptable (Henseler et al. 2015; Gold et al. 2001). The HTMT analysis was done using SmartPLS 3 (Ringle et al. 2015).
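To make the ratio concrete, the following sketch computes HTMT for one pair of constructs from item correlations: the mean correlation between items of different constructs is divided by the geometric mean of the average within-construct item correlations. It is an illustration of the definition, not the SmartPLS 3 routine used in the study; all names and values are hypothetical.

```python
from itertools import combinations, product
from math import sqrt
from statistics import mean


def htmt(corr, items_i, items_j):
    """HTMT ratio for two constructs.

    corr: dict frozenset({item_a, item_b}) -> correlation of the two items.
    items_i, items_j: item names of the two constructs; each construct
    needs at least two items.
    """
    def r(a, b):
        return corr[frozenset((a, b))]

    # Correlations between items of different constructs.
    hetero = mean(r(a, b) for a, b in product(items_i, items_j))
    # Correlations among items of the same construct.
    mono_i = mean(r(a, b) for a, b in combinations(items_i, 2))
    mono_j = mean(r(a, b) for a, b in combinations(items_j, 2))
    return hetero / sqrt(mono_i * mono_j)


# Hypothetical item correlations for constructs X (x1, x2) and Y (y1, y2).
example_corr = {
    frozenset(("x1", "x2")): 0.8,
    frozenset(("y1", "y2")): 0.5,
    frozenset(("x1", "y1")): 0.4,
    frozenset(("x1", "y2")): 0.4,
    frozenset(("x2", "y1")): 0.4,
    frozenset(("x2", "y2")): 0.4,
}
ratio = htmt(example_corr, ["x1", "x2"], ["y1", "y2"])
discriminant_validity_ok = ratio < 0.9
```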

The cross-loadings are shown in Table 15, whereas the discriminant validity check according to Fornell–Larcker is depicted in Table 4. The HTMT ratio values are shown in Table 16.

Tables 17 and 18 show the path coefficients, the indirect effects, and the corresponding significance levels.

Appendix E: Model validation (main study): organization-specific view

Tables 19, 20, 21, and 22 show the validity assessments for the second part of our study, i.e., the specific implementations of BI at the organizations (n = 110).

Appendix F: Model validation (main study): impact of in-memory experience

The group “non-experienced IM organizations” contains participants who work at organizations that use no IM technology for BI at all. For this group (n = 31), we analyzed their expectations, i.e., the general view (Fig. 7).

The group “experienced IM organizations” contains participants who work at organizations that have been using IM technology for BI for at least three years. For this group (n = 39), we took the organization-specific view (Fig. 8).

Appendix G: Model validation (pre-study): results of scale development validation

The tables below contain the results of the outer measurement model validation of the preliminary study (n = 16). Tables 23 and 24 show the validation of the general view, whereas Tables 25 and 26 consider the statements regarding organization-specific implementations. We assessed internal consistency reliability using Cronbach’s alpha and composite reliability (see Tables 24, 26). All values are above the proposed threshold of 0.7 for both criteria (Urbach and Ahlemann 2010). We also checked for convergent validity with the average variance extracted (AVE). Tables 24 and 26 show that the criterion is met, as all values exceed 0.5 (Urbach and Ahlemann 2010). We used the Fornell–Larcker criterion to check discriminant validity. The criterion is missed in one of six cases (Tables 24, 26). Tables 23 and 25 show the item loadings and cross-loadings.

Appendix H: Control variable: size of organization

We assessed the robustness of the research model by using the size of the organization, i.e., the number of employees, as a control variable (n = 110). This analysis is shown in Table 27 and Fig. 9 (general view) and in Table 28 and Fig. 10 (organization-specific view).

Cite this article

Knabke, T., Olbrich, S. Building novel capabilities to enable business intelligence agility: results from a quantitative study. Inf Syst E-Bus Manage 16, 493–546 (2018). https://doi.org/10.1007/s10257-017-0361-z
