Abstract

We address the problem of determining convergent upper bounds in continuous non-convex global minimization of box-constrained problems with equality constraints. These upper bounds are important for the termination of spatial branch-and-bound algorithms. Our method is based on the theorem of Miranda, which helps to ensure the existence of feasible points in certain boxes. Then, the computation of upper bounds for the objective function over those boxes yields an upper bound for the globally minimal value. A proof of convergence is given under mild assumptions. An extension of our approach to problems including inequality constraints is possible.


1 Introduction

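In this article we propose a method to determine convergent valid upper bounds for the globally optimal value \(v^*\) of nonconvex minimization problems of the form

\[ P(B): \quad \min _{x \in {\mathbb {R}}^n} \ f(x) \quad \text {s.t.} \quad h_j(x) = 0, \ j \in J, \quad x \in B, \]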

where all functions f and \(h_j\), \(j \in J = \left\{ 1,\ldots ,m \right\} \), with \(m \in {\mathbb {N}}\) and \(m \le n\), are at least continuously differentiable on some open set containing the box B. As usual in continuous optimization we are mainly interested in the case \(m < n\). Yet, our results also hold for \(m=n\). We do not assume any convexity properties for P(B) so that, in particular, the functions \(h_j\), \(j\in J\), are not necessarily linear.

Inequalities of the form \(g_i(x) \le 0\), \(i \in I\), may be treated by introducing slack variables \(y_i\), \(i \in I,\) and rewriting the constraints by

\[ g_i(x) + y_i = 0, \quad y_i \ge 0, \quad i \in I, \]

where appropriate box constraints for the slack variables can be determined using interval arithmetic [33], for instance. We point out that in general in nonlinear programming this approach is known to have some drawbacks which will be discussed in the setting of spatial branch-and-bound algorithms in Sect. 6.
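As a small illustration of how box constraints for a slack variable might be obtained, the following sketch assumes the reformulation \(g(x) + y = 0\), \(y \ge 0\), and a hypothetical constraint \(g(x) = x_1^2 - x_2 - 1\). The helper names are ours, and the range computation is a hand-written natural interval extension for this one function, not a call to an interval library.

```python
def square_range(l, u):
    """Exact range of t -> t^2 over the interval [l, u]."""
    lo = 0.0 if l <= 0.0 <= u else min(l * l, u * u)
    return lo, max(l * l, u * u)

def g_range(box):
    """Enclosure of g(x) = x1^2 - x2 - 1 over box = [(l1, u1), (l2, u2)]."""
    (l1, u1), (l2, u2) = box
    s_lo, s_hi = square_range(l1, u1)
    return s_lo - u2 - 1.0, s_hi - l2 - 1.0

def slack_bounds(box):
    """Bounds for y = -g(x), intersected with y >= 0."""
    g_lo, g_hi = g_range(box)
    return max(0.0, -g_hi), max(0.0, -g_lo)

# Hypothetical box for x; g ranges over [-3, 0] here, so y lies in [0, 3].
y_lo, y_hi = slack_bounds([(-1.0, 1.0), (0.0, 2.0)])
print(y_lo, y_hi)
```

Since interval enclosures generally overestimate the true range, bounds obtained this way are valid but may be wider than necessary.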

The box B is defined by

\[ B = \left\{ x \in {\mathbb {R}}^n \left| \ {\underline{b}} \le x \le {\overline{b}} \right. \right\} \]

with \({\underline{b}}, {\overline{b}} \in {\mathbb {R}}^n\), \({\underline{b}} < {\overline{b}}\), and all inequalities meant componentwise. We assume all feasible points to strictly satisfy the box constraints. Although this is in contrast to standard literature assumptions in global optimization, it should usually be fulfilled in practice. Otherwise, it can be achieved by introducing additional, slightly larger box constraints and handling the original box constraints as standard inequality constraints, for example by using the aforementioned slack variable approach.

Additionally, we make the following assumption:

Assumption 1.1

For any globally minimal point \(x^*\) of P(B) the gradients \(\nabla h_j(x^*)\) of all functions \(h_j\), \(j \in J\), are linearly independent.

Assumption 1.1 means that each globally minimal point satisfies the Linear Independence Constraint Qualification (LICQ). Note that this assumption is mild in the sense that it has been proven to generically hold everywhere in the feasible set M(B) [17]. The latter means, in particular, that in case of its violation it may be expected to hold at least under small perturbations of the problem data. Let us remark that in nonlinear optimization the assumption of LICQ even in all locally minimal points is standard for convergence proofs. This means, in particular, that common upper bounding procedures in spatial branch-and-bound methods which rely on the local solution of NLP subproblems implicitly use this or related assumptions as well. In this sense, Assumption 1.1 is not restrictive.

For a problem of type P(B) and some predefined tolerance \(\varepsilon > 0\) a typical aim in global optimization is to determine an \(\varepsilon \)-optimal feasible point \(x^* \in M(B)\), that is, in addition to feasibility it satisfies \(f(x^*) \le f(x) + \varepsilon \) for all \(x \in M(B)\). One of the state-of-the-art approaches to globally solve problems of type P(B) in this sense is to apply spatial branch-and-bound algorithms. In such methods, the problem is iteratively branched into subproblems of the form

\[ P(X): \quad \min _{x \in {\mathbb {R}}^n} \ f(x) \quad \text {s.t.} \quad x \in M(X) \]

with \(M(X) = \left\{ x \in X \left| h_j(x) = 0, \ j \in J \right. \right\} \) and X a sub-box of B. Then, for those subproblems upper and lower bounds are constructed to approximate the globally minimal value \(v^*\) and exclude boxes from the search space.

The application of spatial branch-and-bound algorithms in global optimization goes back to Falk in [12]. Since then, various extensions, improvements and related methods have been proposed, such as branch-and-reduce [37, 38], symbolic branch-and-bound [41, 42], branch-and-contract [46] or branch-and-cut [44]. Several state-of-the-art global optimization solvers are based on implementations of branch-and-bound algorithms, see for example BARON [39], COUENNE [8], ANTIGONE [30] and LINDOGLOBAL [25]. For extensive reviews on deterministic global optimization we refer to [13, 14] and [16]. The latter in particular covers convergence results.

Typically, within a spatial branch-and-bound algorithm, lower bounds are obtained by special bounding procedures. We will discuss some of them in Sect. 2. Upper bounds, on the contrary, can be obtained by evaluating the objective function f at feasible points of P(B), either directly or indirectly by applying local solvers. However, as the problem P(B) is in general nonconvex, finding a feasible point may be as difficult as solving the problem itself. Therefore, there is no guarantee for the occurrence of feasible points within current branch-and-bound algorithms [21]. This is crucial, though, since a branch-and-bound algorithm requires convergent valid upper bounds in the termination criterion. To cope with this, in the literature sometimes it is suggested to accept \(\varepsilon _f\)-feasible solutions [13], but [21] shows that instead of upper bounds this may generate arbitrarily bad lower bounds. We shall discuss this issue in more detail in Sect. 2.2.

Several upper bound generation techniques proposed in literature suffer from the above mentioned theoretical drawbacks, although they provide sufficiently good upper bounds on average for practical applications. As we will discuss more thoroughly in Sect. 2, this also holds for the most common approach to obtain an upper bound by solving the nonconvex problem P(B) locally as proposed in [6]. In fact, we are not aware of any proof of convergence for upper bounds to the true globally minimal value \(v^*\) for this or similar approaches.

In this article, we address this issue and propose a method to determine convergent valid upper bounds for the true globally minimal value \(v^*\). Thus, our research focuses on the theoretical convergence proof. In practice, the proposed method may be used to complement existing upper bounding procedures to ensure convergence. We remark that, regarding upper bounds, by the brief term convergent we will mean convergence to the optimal value \(v^*\) throughout this paper.

To theoretically ensure termination of a spatial branch-and-bound algorithm, it is essential to either determine a specific feasible point or, at least, to verify the existence of such a point in some sub-box X of B. In the latter case, an upper bound for the objective function on X may be used as a valid upper bound for \(v^*\). In this paper, we propose a method to calculate convergent valid upper bounds for \(v^*\) based on this approach.

At least in the absence of rounding errors, by using our upper bounding procedure within a standard branch-and-bound algorithm with convergent lower bounding procedure, it should be possible to obtain a global optimization method that terminates after a finite number of iterations, since the overall lower bound as well as the overall upper bound converge to the globally minimal value. Note that, even without rounding errors, this does not hold for other mentioned upper bounding procedures such as acceptance of \(\varepsilon _f\)-feasible points or by using local solvers, since it is not clear whether sufficiently good and correct upper bounds are found at all. In contrast, for commonly used lower bounding procedures such proofs of convergence are available, and so the combination of these methods with our new upper bounding procedure leads to convergent branch-and-bound methods where lower as well as upper bounds converge to the globally minimal value, at least in the absence of rounding errors. In order to obtain a rigorous global branch-and-bound solver, finite precision arithmetic has to be taken into consideration during the implementation, which we have not done so far. Our new upper bounding procedure may be seen as the mathematical foundation of such a rigorous global solver. This will be discussed in more detail in Sect. 6.

In the literature, the problem of feasibility verification has been mainly addressed by heuristics, which are not considered in this article. In contrast, our focus is on deterministic methods. In the context of rigorous upper bound determination, such approaches are introduced by Kearfott [19, 20] and Domes and Neumaier [9]. They determine approximately feasible points with conventional nonlinear solvers and then construct boxes around such points, for which the existence of a feasible point can be proven via interval Newton methods. Yet, as \(n-m\) variables have to be fixed using heuristic approaches and some parameters have to be carefully chosen, verification of feasible points is not guaranteed in general. A deterministic method to calculate convergent upper bounds for \(v^*\) and ensure the termination of branch-and-bound algorithms for purely inequality constrained problems is presented in [21]. In the context of semi-infinite programming a deterministic upper bounding procedure is derived in [31, 32] by exploiting a certain Slater condition.

The idea of feasibility verification in our method is based on a theorem of Miranda in [28] which extends the intermediate value theorem from the one-dimensional to the multidimensional case with several constraints in some sense. Instead of single points, here facets of boxes have to satisfy certain sign conditions to assure the existence of a feasible point. We will show that under mild assumptions and transformations, the conditions of this generalization are guaranteed to be satisfied in a branch-and-bound algorithm after a finite number of steps. Thus, valid upper bounds for \(v^*\) can be calculated in a deterministic way and, as the boxes gradually become smaller leading to convergent upper bounds, termination of the branch-and-bound algorithm is assured.

We start by presenting the general spatial branch-and-bound framework for global optimization and its shortcomings with respect to upper bounds for nonconvex problems in Sect. 2. In Sect. 3 we introduce the theorem of Miranda, before we use it to develop our algorithm in Sect. 4. Preliminary numerical results are presented in Sect. 5, and Sect. 6 concludes the paper with some final remarks.

The notation in this paper is standard. The gradient of a function \(h_j\), \(j \in J\), is denoted by \(\nabla h_j\) and the Jacobian of the vector-valued function \(h: {\mathbb {R}}^n \rightarrow {\mathbb {R}}^m\) with \(h=(h_1,\ldots ,h_m)\) by \({\nabla h = (\nabla h_1, \ldots , \nabla h_m)}\) or \({Dh = (\nabla h)^\intercal }\). For a box \(X = [{\underline{x}}, {\overline{x}}] \subseteq {\mathbb {R}}^n\) we denote the midpoint by \({{\,\mathrm{mid}\,}}(X) = \frac{1}{2} ({\underline{x}} + {\overline{x}})\) and the diagonal length by \({{\,\mathrm{diag}\,}}(X) = \Vert {\overline{x}} - {\underline{x}} \Vert _2\).

2 Difficulties in branch-and-bound algorithms

In this section we present a general spatial branch-and-bound framework to solve global minimization problems and then discuss its main difficulties in the presence of nonconvex equality constraints. The framework and our notation are based on [21]. It is primarily designed to approximate the minimal value \(v^*\) of problem P(B).

2.1 The spatial branch-and-bound framework

The main principle of the branch-and-bound framework is to branch on the original problem P(B) iteratively, yielding subproblems on sub-boxes. Using these subproblems, convergent upper and lower bounds are calculated to approximate the globally minimal value \(v^*\). Moreover, the bounds can be used to exclude subproblems from further examination if they cannot contain a globally minimal point of the original problem. In this subsection, we will present the framework in a very generic way. Afterwards, we will discuss procedures to determine lower and upper bounds.

The main steps within the general framework are the following.

Branching In each iteration k the current box \(X^k\) is divided into two sub-boxes \(X^{k,1}\) and \(X^{k,2}\), for example along the midpoint of a longest edge. In the literature also different, and more efficient, branching techniques for spatial branch-and-bound algorithms are proposed, for example in [8] in the setting of mixed-integer variables.

Bounding For both sub-boxes \(X^{k,l}, l \in \{1,2\}\), a lower bounding procedure is used to calculate a lower bound \(\ell (X^{k,l})\) for the globally minimal value \(v^l_k\) of P restricted to \(X^{k,l}\). If the latter problem is infeasible, we formally set \(\ell (X^{k,l}):=+\infty \). For boxes \(X^{k,l}\) which satisfy not only \(\ell (X^{k,l})<+\infty \) but also \(\ell (X^{k,l})\le u_{k-1}\) for some known overall upper bound \(u_{k-1}\) of \(v^*\) (see below), the pair \((X^{k,l}, \ell (X^{k,l}))\) is added to a list \({\mathcal {L}}\) of subproblems which are potentially needed to approximate \(v^*\).

Then, some upper bounding procedure is used to determine a valid upper bound \({\bar{u}}_k^l\) for \(v^*\). The value \(u_k\) is defined as the smallest known upper bound for \(v^*\). It is initialized as \(u_0 = +\infty \) and updated in each iteration using \({\bar{u}}_k^1\) and \({\bar{u}}_k^2\), if possible. Commonly \({\bar{u}}_k^l\) is determined by evaluating f at some feasible point \(x^{k,l}\in M(X^{k,l})\), if such a trial point exists, and the point corresponding to the best known upper bound \(u_k\) is stored as the best known feasible point so far.

More generally, in our approach \({\bar{u}}_k^l\) is determined as an upper bound for f on some box which is only known to contain a point from \(M(X^{k,l})\), but the explicit knowledge of this point is not necessarily required. Hence, instead of storing the best known trial point so far, one could store the best known box so far. However, since the proposed algorithm focuses on the approximation of the optimal value \(v^*\), we do not state these possibilities to approximate minimal points in the below pseudo code of Algorithm 1, but we refer to the subsequent discussion for further details.

Fathoming If \({\mathcal {L}}\) contains an element \(({\widetilde{X}}, \ell ({\widetilde{X}}))\) with \(\ell ({\widetilde{X}}) > u_k\), this element can be removed from the list, since the lower bound for the minimal value on the corresponding box is larger than the best known upper bound for \(v^*\). Hence, \({\widetilde{X}}\) cannot contain a globally minimal point.

Update of Lower Bound From all lower bounds \(\ell ({\widetilde{X}})\) for the global minimal value of P(B) restricted to boxes \({\widetilde{X}}\) a valid lower bound for \(v^*\) can be constructed by \(\ell _k := \min \left\{ {\ell ({\widetilde{X}})} \left| \right. ({\widetilde{X}},{\ell ({\widetilde{X}})}) \in {\mathcal {L}}\right\} \).

Stopping Criterion The algorithm terminates if \(u_k-\ell _k \le \varepsilon \) holds for a prescribed tolerance \(\varepsilon > 0\). Otherwise, a new iteration is started in which a box \({\widetilde{X}}\) corresponding to \(\ell _k\) is branched.

Algorithm 1 summarizes the procedure of the branch-and-bound method. Upon termination, the case \({\mathcal {L}}=\emptyset \) yields a certificate for infeasibility of P(B). Otherwise, the value \(v^*\) lies in the interval \([\ell _k,u_k]\) of length at most \(\varepsilon \). Moreover, if, as mentioned above, in each iteration the best known feasible point so far, or the best known box, is stored, then upon termination the point is \(\varepsilon \)-minimal or the box contains an \(\varepsilon \)-minimal point, respectively.
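The steps described above can be sketched in a few lines of Python. This is a minimal illustration under strong assumptions, not the pseudo code of Algorithm 1: the instance (\(f(x) = x_1^2 + x_2^2\), \(h(x) = x_1 + 0.5 x_2 - 0.75\), \(B = [-1,2]^2\)) is hypothetical, all ranges are computed exactly by hand for these two functions, rounding errors are ignored, and the upper bounding step uses a plain sign check of h on one fixed pair of facets, which happens to succeed for this instance but does not work in general. Fathoming is done lazily by always branching on the list element with the smallest lower bound.

```python
import heapq
import math

# Hypothetical instance (not from the paper):
#   min x1^2 + x2^2  s.t.  h(x) = x1 + 0.5*x2 - 0.75 = 0,  x in B = [-1, 2]^2,
# with minimal value v* = 0.45 at x* = (0.6, 0.3).

def f_range(box):
    """Exact range of f(x) = x1^2 + x2^2 over box = [(l1, u1), (l2, u2)]."""
    lo = hi = 0.0
    for l, u in box:
        lo += 0.0 if l <= 0.0 <= u else min(l * l, u * u)
        hi += max(l * l, u * u)
    return lo, hi

def h_range(box):
    """Exact range of the affine function h over a box."""
    (l1, u1), (l2, u2) = box
    return l1 + 0.5 * l2 - 0.75, u1 + 0.5 * u2 - 0.75

def lower_bound(box):
    h_lo, h_hi = h_range(box)
    if h_lo > 0.0 or h_hi < 0.0:
        return math.inf                       # h has no zero in the box
    return f_range(box)[0]

def upper_bound(box):
    """Upper bound for f on the box if a sign check proves that h has a zero in it."""
    (l1, u1), x2 = box
    _, h_max_lower = h_range([(l1, l1), x2])  # facet x1 = l1
    h_min_upper, _ = h_range([(u1, u1), x2])  # facet x1 = u1
    if h_max_lower <= 0.0 <= h_min_upper:
        return f_range(box)[1]
    return math.inf

def bisect(box):
    """Split the box at the midpoint of a longest edge."""
    i = max(range(len(box)), key=lambda j: box[j][1] - box[j][0])
    l, u = box[i]
    mid = 0.5 * (l + u)
    left, right = list(box), list(box)
    left[i], right[i] = (l, mid), (mid, u)
    return left, right

def branch_and_bound(B, eps=1e-2, max_iter=200_000):
    u = math.inf                              # best known upper bound
    heap = [(lower_bound(B), B)]              # list of subproblems, ordered by lower bound
    l = -math.inf
    for _ in range(max_iter):                 # iteration cap as a safeguard
        if not heap:
            return math.inf, u                # empty list: problem infeasible
        l, box = heapq.heappop(heap)          # l is the smallest lower bound in the list
        if u - l <= eps:                      # stopping criterion
            break
        for child in bisect(box):
            u = min(u, upper_bound(child))
            lb = lower_bound(child)
            if lb < math.inf and lb <= u:     # otherwise the child is discarded
                heapq.heappush(heap, (lb, child))
    return l, u

l_k, u_k = branch_and_bound([(-1.0, 2.0), (-1.0, 2.0)])
print(l_k, u_k)
```

In this sketch the upper bound is the maximum of f over a whole box in which a zero of h is known to exist, so no explicit feasible point is ever computed.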

2.2 Bounding and difficulties in presence of nonconvexities

The aim of Algorithm 1 is to solve nonconvex problems of type P(B). The algorithm requires convergent lower and upper bounds for the globally minimal value \(v^*\) to terminate. However, as the feasible set of P(B) is nonconvex, difficulties may occur with regard to the upper bounds, as we will show.

Typically, lower bounds for \(v^*\) are obtained by special bounding procedures. Among those are procedures based on Lipschitz constants [34], interval arithmetic [33] and its enhancements, such as centered forms [22] or optimal centered forms [7]. Another approach is to obtain lower bounds from convex relaxations. This approach is followed in the \(\alpha \)BB-method, which has been originally proposed in [6] and extended in [1,2,3,4,5, 26]. Convex relaxations are also used in COUENNE, where symbolic reformulations [27, 42], determination of convex underestimators [43] and bound tightening techniques [8] are combined. Moreover, lower bounds can be derived by exploiting duality as proposed in [10, 11] or by using piecewise linear approximations of multidimensional functions [15, 29, 35]. For several of those techniques it is proven that the determined lower bounds converge to \(v^*\) for decreasing box sizes, as they occur in Algorithm 1.

Upper bounds are commonly obtained by evaluating the objective function f at feasible points of P(B), either explicitly or implicitly by applying local solvers. However, as the problem P(B) is in general nonconvex, it may be hard to determine feasible points. Thus, it cannot be assured that within Algorithm 1, feasible points with sufficiently good objective function value are found and the current upper bound \(u_k\) is updated. This also implies that the upper bounds are not guaranteed to converge for decreasing box sizes. Therefore, Algorithm 1 is not guaranteed to terminate.

To cope with this, in the literature sometimes it is suggested to accept \(\varepsilon _f\)-feasible solutions, that is, for a given tolerance \(\varepsilon _f > 0\) points \(x \in B\) with

\[ |h_j(x)| \le \varepsilon _f, \quad j \in J, \]

are considered to be feasible (see for example [13]). The idea behind this is that the small distance between approximately feasible points and the feasible set leads to a small distance in function values as well. However, the function value of an \(\varepsilon _f\)-feasible solution is not guaranteed to be a valid upper bound for \(v^*\), as shown by an example in [21] for purely inequality constrained problems. In fact, for any \(\varepsilon _f\) examples can be created where the objective value of an \(\varepsilon _f\)-feasible point is arbitrarily much smaller than \(v^*\). This feasibility tolerance should not be confused with a termination tolerance or a machine precision, since even in the absence of rounding errors and even for a small value of \(\varepsilon _f\) this approach can lead to arbitrarily large errors, depending on the problem at hand.
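A tiny equality-constrained instance makes this effect concrete. The following sketch is our own hypothetical example, not the one from [21], and it reads \(\varepsilon _f\)-feasibility as \(|h(x)| \le \varepsilon _f\): minimize \(f(x) = x\) subject to \(h(x) = \varepsilon _f \cdot x = 0\) on \([-1,1]\). The only feasible point is \(x = 0\), so \(v^* = 0\), yet every point of the box is \(\varepsilon _f\)-feasible.

```python
def f(x):
    return x

def make_h(eps_f):
    # Scaling the constraint by eps_f makes every point of [-1, 1] eps_f-feasible.
    return lambda x: eps_f * x

def is_eps_feasible(h, x, eps_f):
    return abs(h(x)) <= eps_f

eps_f = 1e-6
h = make_h(eps_f)
v_star = 0.0                 # x = 0 is the only point with h(x) = 0

x_trial = -1.0               # far from the feasible set, but eps_f-feasible
accepted = is_eps_feasible(h, x_trial, eps_f)
claimed_upper_bound = f(x_trial)

print(accepted, claimed_upper_bound < v_star)
```

Replacing f by \(K \cdot x\) with a large constant K makes the gap between the claimed bound and \(v^*\) as large as desired, for the same \(\varepsilon _f\).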

Despite providing sufficiently good upper bounds on average in practical applications, upper bound generation techniques proposed in literature suffer from the above mentioned theoretical drawbacks. The naive approach to obtain upper bounds using the optimal point of the convex relaxation after checking its feasibility, for example proposed in [26], is not guaranteed to work, as such points might never be feasible in the course of the algorithm. For box- and inequality-constrained problems in [21] an example is given, for which the \(\alpha \)BB method with such an upper bound procedure does not terminate, since \(u_0\) is never updated.

The current state-of-the-art approach to solve the nonconvex optimization problem P(B) (or some restricted subproblem P(X)) locally, as proposed in [6], has several drawbacks: Firstly, it is computationally expensive. Liberti and Maculan even identify it as the most expensive step in current branch-and-bound implementations [24]. Secondly, for nonconvex feasible sets it is not guaranteed that the local solver will eventually find a feasible point of P(B). Thus, usually \(\varepsilon _f\)-feasible solutions are accepted, see for example [6, 13]. However, as stated before, in such a case it is not ensured that valid upper bounds for the true globally minimal value \(v^*\) are determined.

Hence, neither of the two approaches is suitable to guarantee the termination of Algorithm 1. In the following we will present a method which determines a valid upper bound for \(v^*\) for a sufficiently small box X based on verifying the existence of a feasible point of the problem P(B). If a feasible point in X is verified, an upper bound for \(v^*\) does not have to be determined by evaluating the objective function f at a specific feasible point, but it suffices to apply an upper bounding procedure to the whole box X.

The obtained upper bounds converge for decreasing box sizes and, thus, incorporating our method into Algorithm 1 is sufficient to ensure termination, i.e. convergence to \(v^*\). This can be proven using standard arguments from global optimization [16]. The main idea is that in a branch-and-bound algorithm the boxes eventually become sufficiently small and, hence, upper bounds for \(v^*\) can be determined in a finite number of steps. Feasibility verification will also succeed for any sufficiently small box containing a globally minimal point of P(B), and with smaller such boxes the upper bounds for \(v^*\) gradually become better and converge to \(v^*\). Contrary to lower bounding procedures, we are not aware of any convergent upper bounding procedure proposed so far.

3 Miranda’s theorem

Before presenting our algorithm to calculate convergent upper bounds, we will first introduce the main idea that our approach is based on. As stated before, our algorithm is designed to verify the existence of a feasible point within some sub-box \(X \subseteq B\), this means a point \({\widehat{x}} \in X\) satisfying \(h_j({\widehat{x}}) = 0\) for all \(j \in J\). If the existence of a feasible point in a sub-box X is verified, an upper bound calculated on this whole box can serve as a valid upper bound for \(v^*\).

The main idea of feasibility verification is based on Miranda’s Theorem, sometimes also referred to as Poincaré–Miranda Theorem. It was first proven by Carlo Miranda in 1940, see [28], and in some sense can be thought of as generalizing Bolzano’s intermediate value theorem to the multidimensional case. It states that if not single points but whole facets

\[ {\underline{X}}_i := \left\{ x \in X \left| \ x_i = {\underline{x}}_i \right. \right\} , \qquad {\overline{X}}_i := \left\{ x \in X \left| \ x_i = {\overline{x}}_i \right. \right\} \]

of a box \(X = [{\underline{x}}, {\overline{x}}]\), with \(i=1,\ldots ,n\), satisfy certain sign conditions, the existence of a feasible point in X is guaranteed. Formally, this is stated in the following result.

Theorem 3.1

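(Miranda’s Theorem, from [28]) Let \(X \subseteq {\mathbb {R}}^n\) be a box and \(h=(h_1,\ldots ,h_n): X \rightarrow {\mathbb {R}}^n\) a continuous function. Moreover, let h satisfy the conditions

\[ h_i(x) \le 0 \ \text { for all } x \in {\underline{X}}_i, \qquad h_i(x) \ge 0 \ \text { for all } x \in {\overline{X}}_i \tag{2} \]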

for all \(i=1,\ldots ,n\). Then \(h(x) = 0\) has a solution in X.

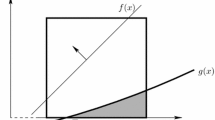

Proofs of this theorem can be found in [23, 45] among others. The number of facets of an n-dimensional box is 2n and, hence, they can be iterated over at low computational cost. Figure 1a shows an example where all conditions of Theorem 3.1 are satisfied.
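To illustrate how the 2n facets can be checked, the following sketch tests the sign conditions of Theorem 3.1 in the form used later in the text, with \(h_i\) nonpositive on the lower facet \({\underline{X}}_i\) and nonnegative on the upper facet \({\overline{X}}_i\). It assumes affine functions \(h_i(x) = a_i^\intercal x + c_i\), for which the range over a box can be computed exactly, and it ignores rounding errors. For general functions a rigorous enclosure, for example from interval arithmetic, would be needed instead. All names are ours.

```python
def affine_range(a, c, box):
    """Exact range of x -> a.x + c over box = [(l_1, u_1), ..., (l_n, u_n)]."""
    lo = c + sum(min(ai * l, ai * u) for ai, (l, u) in zip(a, box))
    hi = c + sum(max(ai * l, ai * u) for ai, (l, u) in zip(a, box))
    return lo, hi

def facet(box, i, upper):
    """Facet of the box on which coordinate i is fixed at its lower or upper bound."""
    fixed = box[i][1] if upper else box[i][0]
    result = list(box)
    result[i] = (fixed, fixed)
    return result

def miranda_conditions_hold(A, c, box):
    """Check h_i <= 0 on the lower facet i and h_i >= 0 on the upper facet i."""
    for i in range(len(box)):
        _, max_on_lower = affine_range(A[i], c[i], facet(box, i, upper=False))
        min_on_upper, _ = affine_range(A[i], c[i], facet(box, i, upper=True))
        if max_on_lower > 0.0 or min_on_upper < 0.0:
            return False
    return True

# Hypothetical data: h_1(x) = x_1 + 0.5 x_2, h_2(x) = -0.25 x_1 + x_2 on X = [-1, 1]^2.
A = [[1.0, 0.5], [-0.25, 1.0]]
c = [0.0, 0.0]
X = [(-1.0, 1.0), (-1.0, 1.0)]
print(miranda_conditions_hold(A, c, X))
```

If the check succeeds, the theorem guarantees a zero of h in X. If it fails, nothing can be concluded.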

Fig. 1 Illustration of Theorem 3.2 and its difficulties

Note that by index changes arbitrary allocations of facet pairs and functions are possible in the conditions (2) and that the sign conditions for the facets could also be reversed to guarantee a zero of h in X. However, later on we will transform the system in such a way that it is reasonable to use the sign conditions as stated above.

Theorem 3.1 can be extended so that it can be applied not only for the same number of equality constraints m and variables n, but more generally for \(m \le n\) and, thus, for the problems we are interested in.

Theorem 3.2

(Extended Miranda’s Theorem) Let \(X \subseteq {\mathbb {R}}^n\) be a box and \(h=(h_1,\ldots ,h_m): X \rightarrow {\mathbb {R}}^m\) a continuous function on X with \(m \le n\). Moreover, let there exist a set of indices \(S := \left\{ s_1, \ldots , s_m \right\} \) with \(S \subseteq \left\{ 1,\ldots ,n \right\} \) so that h satisfies the conditions

\[ h_j(x) \le 0 \ \text { for all } x \in {\underline{X}}_{s_j}, \qquad h_j(x) \ge 0 \ \text { for all } x \in {\overline{X}}_{s_j} \tag{3} \]

for all \(j \in J\). Then \(h(x) = 0\) has a solution in X.

Proof

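Without loss of generality we assume that \(S = \left\{ 1, \ldots , m \right\} \), so that

\[ h_j(x) \le 0 \ \text { for all } x \in {\underline{X}}_j, \qquad h_j(x) \ge 0 \ \text { for all } x \in {\overline{X}}_j \tag{4} \]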

holds for all \(j \in J\). For arbitrary \({\widehat{x}}_\ell \in X_\ell \), with \(\ell \in \left\{ m+1, \ldots , n \right\} ,\) we define \({\widehat{h}} = ({\widehat{h}}_1, \ldots , {\widehat{h}}_m): X_1 \times \cdots \times X_m \rightarrow {\mathbb {R}}^m\) with

\[ {\widehat{h}}_j(x_1, \ldots , x_m) := h_j(x_1, \ldots , x_m, {\widehat{x}}_{m+1}, \ldots , {\widehat{x}}_n), \quad j \in J. \]

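With the conditions in (4) for all \(x \in X_1 \times \cdots \times X_m\) and \(j \in J\) we have

\[ {\widehat{h}}_j(x_1, \ldots , x_{j-1}, {\underline{x}}_j, x_{j+1}, \ldots , x_m) \le 0 \le {\widehat{h}}_j(x_1, \ldots , x_{j-1}, {\overline{x}}_j, x_{j+1}, \ldots , x_m), \]

where \({\underline{x}}_j\) and \({\overline{x}}_j\) denote the lower and upper bound of the interval \(X_j\),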

so that with Theorem 3.1 it follows that \({\widehat{h}}\) has a zero \(({\widehat{x}}_1, \ldots , {\widehat{x}}_m)\) in \(X_1 \times \cdots \times X_m\).

From the definition of \({\widehat{h}}\) it follows \(h({\widehat{x}}_1,\ldots ,{\widehat{x}}_m, {\widehat{x}}_{m+1}, \ldots , {\widehat{x}}_n) = 0\) and since \(({\widehat{x}}_1, \ldots , {\widehat{x}}_n) \in X\), the function h has a zero in X. \(\square \)

Theorem 3.2 provides a sufficient, but not necessary condition for the existence of feasible points in X. One can think of simple examples of feasible points within boxes for which the conditions (3) are not satisfied. Additionally, even if they are, it is not clear in advance which function \(h_j\), \(j \in J\), does satisfy the sign conditions on which pair of facets \(\left\{ {\underline{X}}_i, {\overline{X}}_i \right\} , i = 1,\ldots ,n\). Therefore it is not possible to exclude facets from examination or fix variables without excluding possible feasible points from the search space.
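Since the matching of functions to facet pairs is not known in advance, one naive option for very small m and n is to try all assignments \(j \mapsto s_j\). The sketch below does this by brute force; it is only an illustration, not the approach developed in this article. It again assumes affine functions \(h_j(x) = a_j^\intercal x + c_j\) so that ranges over facets are exact, reads the sign conditions as \(h_j \le 0\) on \({\underline{X}}_{s_j}\) and \(h_j \ge 0\) on \({\overline{X}}_{s_j}\), uses 0-based indices, and ignores rounding errors.

```python
from itertools import permutations

def affine_range(a, c, box):
    """Exact range of x -> a.x + c over box = [(l_1, u_1), ..., (l_n, u_n)]."""
    lo = c + sum(min(ai * l, ai * u) for ai, (l, u) in zip(a, box))
    hi = c + sum(max(ai * l, ai * u) for ai, (l, u) in zip(a, box))
    return lo, hi

def sign_conditions_hold(a, c, box, s):
    """h <= 0 on the lower facet and h >= 0 on the upper facet of coordinate s."""
    lower, upper = list(box), list(box)
    lower[s] = (box[s][0], box[s][0])
    upper[s] = (box[s][1], box[s][1])
    return affine_range(a, c, lower)[1] <= 0.0 <= affine_range(a, c, upper)[0]

def find_index_assignment(A, c, box):
    """Return a tuple (s_1, ..., s_m) of distinct coordinates, or None if none works."""
    n, m = len(box), len(A)
    for S in permutations(range(n), m):
        if all(sign_conditions_hold(A[j], c[j], box, S[j]) for j in range(m)):
            return S
    return None

# Hypothetical data with n = 3, m = 2:
# h_1(x) = 0.1 x_1 + x_3 and h_2(x) = x_1 - 0.1 x_2 on X = [-1, 1]^3.
A = [[0.1, 0.0, 1.0], [1.0, -0.1, 0.0]]
c = [0.0, 0.0]
X = [(-1.0, 1.0)] * 3
print(find_index_assignment(A, c, X))  # (2, 0): h_1 uses coordinate 3, h_2 uses coordinate 1
```

The number of candidate assignments grows like \(n!/(n-m)!\), so this enumeration is not practical beyond small dimensions.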

For an upper bounding procedure that ensures termination of spatial branch-and-bound algorithms in global optimization we shall overcome these difficulties in the remaining part of this article. We will start by discussing different scenarios, in which the conditions (3) are not satisfied even though a feasible point exists in X.

Case 1

(The box X is too large) Despite containing a feasible point the conditions (3) in Theorem 3.2 might not be satisfied for a box X if it is too large, as illustrated exemplarily in Fig. 1b. While for \(h_2\) the conditions are satisfied, they are not for \(h_1\), even though the point \({\widehat{x}} = (0,0) \in X\) is feasible. However, if the box is shrunk sufficiently around \({\widehat{x}}\), the existence of a feasible point can be verified by using Theorem 3.2.
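The effect of the box size can be reproduced with a small hypothetical example, which is not the one shown in Fig. 1b: take \(h_1(x) = x_1 + x_2^2\) and \(h_2(x) = x_2\) on \(X = [-r,r]^2\), with feasible point \({\widehat{x}} = (0,0)\). The extreme values of \(h_1\) on the two facets are worked out by hand in the comments, and the sign convention is \(h_j \le 0\) on the lower and \(h_j \ge 0\) on the upper facet.

```python
def h1_max_on_lower_facet(r):
    # On x_1 = -r: h_1 = -r + x_2^2 with x_2 in [-r, r], maximal at x_2 = +/- r.
    return -r + r * r

def h1_min_on_upper_facet(r):
    # On x_1 = r: h_1 = r + x_2^2, minimal at x_2 = 0.
    return r

def conditions_hold(r):
    h1_ok = h1_max_on_lower_facet(r) <= 0.0 <= h1_min_on_upper_facet(r)
    # h_2 = x_2 equals -r on the facet x_2 = -r and r on the facet x_2 = r.
    h2_ok = -r <= 0.0 <= r
    return h1_ok and h2_ok

print(conditions_hold(2.0))   # False: the box is too large
print(conditions_hold(0.5))   # True: shrinking the box around (0, 0) helps
```

For this example the conditions hold exactly for \(0 < r \le 1\).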

Case 2

(Feasible point on a facet of X) Further difficulties might occur if a feasible point \({\widehat{x}} \in X\) is located on a facet of X. In this case, the conditions (3) might still be satisfied for sufficiently small boxes, but this is not guaranteed, as shown in Fig. 1c. The function \(h_2\) takes solely positive values on \({\underline{X}}_2\). Even if the box X is shrunk with \({\widehat{x}}\) remaining feasible, \(h_2\) will never take negative values on this facet. In Sect. 4.3 we will present an approach which makes it still possible to verify the existence of feasible points using Theorem 3.2 in such a case.

Case 3

(Gradients in feasible point not directed towards the facets) The conditions (3) in Theorem 3.2 might also not be satisfied if the gradients \(\nabla h_j({\widehat{x}})\), \(j \in J\), in a feasible point \({\widehat{x}}\) do not point in the direction of a unit vector and, hence, are not directed towards the facets \({\overline{X}}_j\). This is shown in Fig. 1d for the function \(h_1\). It is not guaranteed that this difficulty vanishes for any type of smaller boxes around \({\widehat{x}}\). As within a branch-and-bound algorithm usually the rule to construct new boxes is predetermined, this case requires a more specific approach, which we will present in Sect. 4.4.
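That shrinking alone does not help here can be seen on a hypothetical affine example, which is not the one from Fig. 1d: \(h_1(x) = x_1 + 2x_2\) and \(h_2(x) = x_2\) on \(X = [-r,r]^2\), with feasible point \((0,0)\) and \(\nabla h_1 = (1,2)\). The sketch evaluates, for several radii, the largest value on the lower facet \(x_1 = -r\) of whichever function is matched with the first coordinate; the closed-form expressions in the comments are derived by hand.

```python
def max_h1_on_lower_facet_1(r):
    # On x_1 = -r: h_1 = -r + 2*x_2 with x_2 in [-r, r], maximal at x_2 = r.
    return -r + 2.0 * r

def max_h2_on_lower_facet_1(r):
    # Alternative matching: h_2 = x_2 on the facet x_1 = -r, maximal at x_2 = r.
    return r

radii = [1.0, 1e-1, 1e-2, 1e-3]

# The condition "h <= 0 on the lower facet" needs these maxima to be nonpositive.
natural_matching_fails = all(max_h1_on_lower_facet_1(r) > 0.0 for r in radii)
swapped_matching_fails = all(max_h2_on_lower_facet_1(r) > 0.0 for r in radii)

print(natural_matching_fails, swapped_matching_fails)
```

Both maxima equal r in exact arithmetic, so for boxes centered at the feasible point the sign conditions are violated for every \(r > 0\), whichever of the two functions is matched with the first coordinate.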

4 Convergent upper bounds for the globally minimal value

In this section we will develop the announced algorithm to calculate convergent valid upper bounds for the globally minimal value \(v^*\) of P(B) based on Theorem 3.2.

A straightforward idea might be to consider every box that occurs during the branch-and-bound algorithm and to check whether the conditions of Theorem 3.2 are fulfilled. If this is the case, a valid upper bound for the globally minimal value could be obtained by computing an upper bound for the objective function over the whole box, for instance by applying interval arithmetic or any other convergent bounding procedure.

However, as already discussed at the end of the previous section, Theorem 3.2 only provides a sufficient, but not necessary condition for verifying the existence of a feasible point. Therefore, this approach does not necessarily lead to a convergent procedure. In this section, we will show how the boxes obtained by standard branch-and-bound algorithms can be altered so that the conditions are fulfilled, provided a sufficiently small box contains a feasible point.

In order to achieve this we start by considering an artificial and very simple case where

- the box midpoint equals the feasible point \({\widehat{x}}\),
- the gradient \(\nabla h_j({\widehat{x}})\) is the j-th unit vector for all \(j \in J\) and
- the box X is sufficiently small.

Then it can be ensured that the conditions of Theorem 3.2 are satisfied, as we will prove in Sect. 4.1. Afterwards in Sects. 4.2, 4.3 and 4.4, we will sequentially generalize our results to more realistic sequences of boxes that occur in spatial branch-and-bound algorithms by addressing the difficulties mentioned in Sect. 3, and finally state our algorithm for the general case.

4.1 Feasibility verification in a simple case

In this subsection we consider the simple case where the gradient of \(h_j\), \(j \in J\), in a feasible point \({\widehat{x}}\) equals the j-th unit vector \(e_j\), that is, \(\nabla h_j({\widehat{x}}) = e_j\) for all \(j \in J\). Moreover, we assume the midpoint \({{\,\mathrm{mid}\,}}(X)\) of a box X to be feasible, so we have \({\widehat{x}} = {{\,\mathrm{mid}\,}}(X)\) with \(h_j({\widehat{x}}) = 0\) for all \(j \in J\).

We will show that in this specific case, for a sufficiently small box X, the conditions of Theorem 3.2 are guaranteed to be satisfied and hence, the existence of a feasible point can be verified. The intuition behind this is that starting from \({\widehat{x}}\) the function \(h_j\) will take positive values in direction \(e_j\) and any direction towards \({\overline{X}}_j\) for sufficiently small steps and for all \(j \in J\). On the other hand, \(h_j\) will take negative values in direction \(-e_j\) and any other direction towards \({\underline{X}}_j\) for sufficiently small steps.

To derive the above result, we will analyze exhaustive sequences of boxes, that is, sequences \(\big (X^k\big )_{k \in {\mathbb {N}}}\) of non-empty boxes \(X^k \subseteq B\) with \(X^{k+1} \subseteq X^k\) (nestedness) and \({\lim _{k \rightarrow \infty } {{\,\mathrm{diag}\,}}\big (X^k\big )=0}\). Additionally, we assume that the feasible point \({\widehat{x}}\) is midpoint of each element \(X^k\). We will show that there is a \({\widehat{k}} \in {\mathbb {N}}\) so that for all \(k \ge {\widehat{k}}\) Miranda’s conditions are satisfied. To make this work, in addition, we have to ensure that boxes do not become increasingly deformed. Therefore we will only consider non-deformed exhaustive sequences of boxes, i.e. sequences for which the maximum ratio of the length of box edges is bounded above by a constant \({\bar{t}} < \infty \) for all boxes \(X^k\).

Definition 4.1

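(Non-Deformed Sequence of Boxes) Let \(\big (X^k\big )_{k \in {\mathbb {N}}}\) be a sequence of boxes with edge lengths \(\varDelta x_i^k\), \(i=1,\ldots ,n\), and with the maximum ratio of the length of box edges

$$\begin{aligned} t^k := \frac{\max _{i = 1,\ldots ,n} \varDelta x_i^k}{\min _{i = 1,\ldots ,n} \varDelta x_i^k} \end{aligned}$$

bounded above by a constant \({\bar{t}} < \infty \) for all \(k \in {\mathbb {N}}\). Then we call \(\big (X^k\big )_{k \in {\mathbb {N}}}\) a non-deformed sequence of boxes.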

The following lemma shows that this additional requirement does not exclude the typical branching rule of standard branch-and-bound algorithms in global optimization to divide a box along a longest edge. We believe that this may also be shown for other branching rules along the lines of this proof.

Lemma 4.2

Let \(\big (X^k\big )_{k \in {\mathbb {N}}}\) be a sequence of boxes where \(X^{k+1}\) is constructed by dividing \(X^k\) along a longest edge. Then \(\big (X^k\big )_{k \in {\mathbb {N}}}\) is non-deformed.

Proof

For simplicity we denote the lengths of the edges \(i=1,\ldots ,n\) of \(X^k\) by \(\varDelta x_i^k\). For \(n=1\) the ratio \(t^k\) is trivially 1 and thus bounded above for all \(k \in {\mathbb {N}}\). For \(n > 1\) we distinguish two cases:

First, let \(t^k > 2\). As a longest edge is bisected we obtain

Now, let \(t^k \le 2\). Then, after dividing a longest edge the length of the shortest edge is exactly half the length of the previously longest edge. That is, we have \(\min _{i = 1,\ldots ,n} \varDelta x_i^{k+1} = \frac{1}{2} \cdot \max _{i = 1,\ldots ,n} \varDelta x_i^k\). With this we obtain

where the second equality follows from the bisection of a longest edge.

Summarizing, the ratio \(t^k\) is bounded above by \({\bar{t}} := \max \left\{ t^1, 2 \right\} < \infty \) for all \(k \in {\mathbb {N}}\).

\(\square \)
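The statement of Lemma 4.2 can be checked numerically. The following minimal sketch assumes that \(t^k\) is the ratio of the longest to the shortest edge length and that "dividing along a longest edge" means bisection; the function names and the random starting box are hypothetical and serve illustration only.

```python
import random

def edge_ratio(lengths):
    """Ratio t of the longest to the shortest edge length of a box."""
    return max(lengths) / min(lengths)

def bisect_longest(lengths):
    """Return the edge lengths after halving one longest edge."""
    new = list(lengths)
    i = max(range(len(new)), key=new.__getitem__)
    new[i] /= 2.0
    return new

def ratios_along_sequence(lengths, steps):
    """Edge ratios t^1, t^2, ... under repeated longest-edge bisection."""
    ratios = [edge_ratio(lengths)]
    for _ in range(steps):
        lengths = bisect_longest(lengths)
        ratios.append(edge_ratio(lengths))
    return ratios

random.seed(0)
start = [random.uniform(0.1, 10.0) for _ in range(4)]  # hypothetical box in R^4
ratios = ratios_along_sequence(start, 200)
bound = max(ratios[0], 2.0)                            # the constant from the proof
print(max(ratios) <= bound)
```

Only the edge lengths are tracked here, since the position of the box plays no role for the ratio.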

For non-deformed exhaustive sequences of boxes we can now show that there is a \({\widehat{k}} \in {\mathbb {N}}\) so that for all \(k \ge {\widehat{k}}\) the conditions of Theorem 3.2 are satisfied. This is stated formally in the following result.

Theorem 4.3

Let \(\big (X^k\big )_{k \in {\mathbb {N}}}\) be a non-deformed exhaustive sequence of boxes with \({\widehat{x}} := {{\,\mathrm{mid}\,}}\big (X^k\big )\) for all \(k \in {\mathbb {N}}\) and \({\widehat{x}}\) feasible. Furthermore, let \(h: {\mathbb {R}}^n \mapsto {\mathbb {R}}^m\), \(h \in C^1({\mathbb {R}}^n,{\mathbb {R}}^m)\) and let the gradients satisfy the conditions \(\nabla h_j({\widehat{x}}) = e_j\) for all \(j \in J\). Then, there is some \({\widehat{k}} \in {\mathbb {N}}\) so that the conditions (3) of Theorem 3.2 are satisfied for all \(k \in {\mathbb {N}}\) with \(k \ge {\widehat{k}}\).

Proof

Consider an arbitrary element \(X^k \in \big (X^k\big )_{k \in {\mathbb {N}}}\). Without loss of generality we examine constraint \(h_1\). Let \({\widetilde{x}}^k\) be a maximum point of \(h_1\) on \({\underline{X}}_1^k\). Using Taylor’s Theorem with \(\lim _{{\widetilde{x}}^k \rightarrow {\widehat{x}}} \omega \big ({\widetilde{x}}^k\big ) = \omega ({\widehat{x}}) = 0\) we obtain

With the feasibility of \({\widehat{x}}\), \(\nabla h_1({\widehat{x}}) = e_1\) and \({\widetilde{x}}^k \in {\underline{X}}_1^k\) it follows

With the box property \(\Vert x - {{\,\mathrm{mid}\,}}(X) \Vert _2 \le \frac{1}{2} {{\,\mathrm{diag}\,}}(X)\) for all \(x \in X\) we obtain

Using the equivalence of norms and that \(\big (X^k\big )_{k \in {\mathbb {N}}}\) is non-deformed we have

and hence,

Since for k approaching infinity we have \({\lim _{k \rightarrow \infty } {{\,\mathrm{diag}\,}}\big (X^k\big )=0}\) but also \({\widetilde{x}}^k \rightarrow {\widehat{x}}\) and hence \(\omega \big ({\widetilde{x}}^k\big ) \rightarrow \omega ({\widehat{x}}) = 0\), the first term on the right hand side determines the sign of the whole expression. With \(n, {\bar{t}} > 0\), it is always negative, so that there is some \(k^1_1 \in {\mathbb {N}}\) so that for all \(k \ge k^1_1\) and all \(x \in {\underline{X}}_1^k\) we have

Analogously, with \({\check{x}}^k\) the minimum point of \(h_1\) on \({\overline{X}}_1^k\) there is some \(k^2_1 \in {\mathbb {N}}\) so that for all \(k \ge k^2_1\) and all \(x \in {\overline{X}}_1^k\) we have

We proceed analogously for the equality constraints \(h_2, \ldots , h_m\). Thus, for all \(k \ge {\widehat{k}} := \max _{\ell = 1,2} \max _{j=1,\ldots ,m} \left\{ k^{\ell }_j \right\} \) the assertion follows. \(\square \)

Consequently, for this specific case an algorithm based on Theorem 3.2 is able to verify the existence of a feasible point within some box \(X^k\) and to determine a valid upper bound for \(v^*\).
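To illustrate the effect of shrinking the box, the following sketch samples the sign conditions on the facets of boxes centered at a feasible point. It assumes that the conditions (3) require \(h_j < 0\) on the lower facet \({\underline{X}}_j\) and \(h_j > 0\) on the upper facet \({\overline{X}}_j\), in line with the intuition given above. The constraint `h1` is a hypothetical example with \(h_1(0) = 0\) and \(\nabla h_1(0) = e_1\). Sampling on a grid is no rigorous verification; a real implementation would need guaranteed bounds, for instance from interval arithmetic.

```python
from itertools import product

def h1(x):
    """Hypothetical constraint with h1(0) = 0 and gradient e_1 at the origin."""
    return x[0] + x[0] * x[1] + x[1] ** 2

def facet_points(lower, upper, j, at_upper, samples=21):
    """Grid points on the facet of [lower, upper] where coordinate j is fixed."""
    axes = []
    for i, (lo, hi) in enumerate(zip(lower, upper)):
        if i == j:
            axes.append([hi if at_upper else lo])
        else:
            axes.append([lo + (hi - lo) * s / (samples - 1)
                         for s in range(samples)])
    return product(*axes)

def sign_conditions_hold(hs, lower, upper):
    """Sampled check: h_j < 0 on lower facet j and h_j > 0 on upper facet j."""
    for j, h in enumerate(hs):
        if max(h(p) for p in facet_points(lower, upper, j, False)) >= 0:
            return False
        if min(h(p) for p in facet_points(lower, upper, j, True)) <= 0:
            return False
    return True

def centered_box(radius, n=2):
    """Box [-radius, radius]^n with the origin as midpoint."""
    return [-radius] * n, [radius] * n

print(sign_conditions_hold([h1], *centered_box(1.0)))   # large box
print(sign_conditions_hold([h1], *centered_box(0.25)))  # small box
```

For the large box the quadratic terms dominate at a vertex of the lower facet, whereas for the small box the linear term \(x_1\) determines the sign, as in the proof of Theorem 4.3.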

4.2 A first extension of the simple case

In Sect. 4.1 we assume the midpoint \({{\,\mathrm{mid}\,}}\big (X^k\big )\) of each box \(X^k\) in \(\big (X^k\big )_{k \in {\mathbb {N}}}\) to be feasible. Now we relax this assumption and generalize our results to exhaustive sequences of boxes for which the feasible point \({\widehat{x}}\) only becomes midpoint asymptotically, but still \(\nabla h_j({\widehat{x}}) = e_j\) holds for all \(j \in J\). We will show that for sufficiently small boxes, i.e. after a finite number of steps within a branch-and-bound algorithm, still the conditions (3) in Theorem 3.2 are guaranteed to be satisfied. To begin with, we introduce the concept of asymptotically centered sequences of boxes.

Definition 4.4

(Asymptotically Centered Sequence of Boxes) Let \(\big (X^k\big )_{k \in {\mathbb {N}}}\) be a sequence of boxes with \({\widehat{x}} \in X^k\) for all \(k \in {\mathbb {N}},\) and

Then, we call the sequence \(\big (X^k\big )_{k \in {\mathbb {N}}}\) asymptotically centered on \({\widehat{x}}\).

Definition 4.4 states that a sequence of boxes is asymptotically centered on a point \({\widehat{x}}\) if this point asymptotically becomes the midpoint of elements of the sequence. Note that for this property it is not sufficient that the midpoint \({{\,\mathrm{mid}\,}}\big (X^k\big )\) of \(X^k\) solely converges to \({\widehat{x}}\) for k approaching infinity.
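The difference between the two properties can be seen in a one-dimensional example. The sketch below uses a hypothetical measure `relative_position`, the distance of \({\widehat{x}}\) from the lower bound divided by the edge length, as a stand-in for the ratio used in Definition 4.4; it equals \(\frac{1}{2}\) exactly when \({\widehat{x}}\) is the midpoint.

```python
def relative_position(x_hat, lower, upper):
    """Position of x_hat within [lower, upper], scaled to [0, 1]."""
    return (x_hat - lower) / (upper - lower)

x_hat = 0.0
rows = []
for k in (1, 10, 20, 30):
    lower, upper = 0.0, 2.0 ** -k       # x_hat is always the left endpoint
    mid = 0.5 * (lower + upper)
    rows.append((k, abs(mid - x_hat), relative_position(x_hat, lower, upper)))

for row in rows:
    print(row)
```

The midpoints converge to \({\widehat{x}}\), but the relative position stays at 0, so under this measure the sequence is not asymptotically centered on \({\widehat{x}}\).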

Although \({\widehat{x}}\) only becomes midpoint of \(X^k\) asymptotically, the following theorem proves that for a non-deformed and exhaustive sequence of boxes \(\big (X^k\big )_{k \in {\mathbb {N}}}\), which is asymptotically centered on a feasible point \({\widehat{x}} \in X^k\) for all \(k \in {\mathbb {N}}\), there still is a \({\widehat{k}} \in {\mathbb {N}}\) so that for all \(k \ge {\widehat{k}}\) the conditions of Theorem 3.2 are satisfied.

Theorem 4.5

Let \(\big (X^k\big )_{k \in {\mathbb {N}}}\) be a non-deformed sequence of boxes asymptotically centered on a feasible point \({\widehat{x}}\) of P(B) and with \(\lim _{k \rightarrow \infty } {{\,\mathrm{diag}\,}}\big (X^k\big ) = 0\). Furthermore, let \(h: {\mathbb {R}}^n \mapsto {\mathbb {R}}^m, h \in C^1({\mathbb {R}}^n,{\mathbb {R}}^m)\) and the gradients satisfy the conditions \(\nabla h_j({\widehat{x}}) = e_j\) for all \(j \in J\). Then, there is some \({\widehat{k}} \in {\mathbb {N}}\) so that the conditions (3) of Theorem 3.2 are satisfied for all \(k \in {\mathbb {N}}\) with \(k \ge {\widehat{k}}\).

Proof

We follow the same approach as in the proof of Theorem 4.3 and obtain

Introducing the ratio

we can express the first term in terms of a box edge. Then, using norm properties and the non-deformity of \(\big (X^k\big )_{k \in {\mathbb {N}}}\) it follows

Since we assume \(X^k\) to be an element of a sequence of boxes asymptotically centered on \({\widehat{x}}\), \(s\big (X^k\big )\) converges to \(\frac{1}{2}\) and thus, \(-\frac{s\big (X^k\big )}{\sqrt{n} \ {\bar{t}}}\) converges to a non-zero constant when \({{\,\mathrm{diag}\,}}\big (X^k\big )\) approaches 0. Hence, there is some \(k_1^1 \in {\mathbb {N}},\) so that for all \(k \ge k_1^1, k \in {\mathbb {N}},\) and for all \(x \in {\underline{X}}_1^k\) we have

For \({\overline{X}}_1^k\) and \(h_2, \ldots , h_m\) we proceed analogously. With the same line of argumentation as for Theorem 4.3 the assertion follows. \(\square \)

4.3 A further extension of the simple case

In Sect. 3 we demonstrated that the conditions (3) of Theorem 3.2 may not be satisfied if a feasible point is located on a facet of X. In this subsection we will address this challenge and present an approach to guarantee that upper bounds to \(v^*\) can be determined in a finite number of steps within a branch-and-bound algorithm.

The main idea to handle such situations is to artificially extend a box X to a box \({\widetilde{X}}\) so that all feasible points in X are interior points of \({\widetilde{X}}\). Thereby, for each exhaustive sequence of boxes \(\big (X^k\big )_{k \in {\mathbb {N}}}\) with \({\widehat{x}} \in X^k\) we obtain an additional sequence \(\big ({\widetilde{X}}^k\big )_{k \in {\mathbb {N}}}\) with \(X^k \subseteq {\widetilde{X}}^k\). This sequence is not guaranteed to be nested any longer, but this is no restriction to our results. Additionally, we construct this sequence such that it is asymptotically centered on \({\widehat{x}}\). Then, using Theorem 3.2 the existence of a feasible point in some box \({\widetilde{X}}^k\) can be verified, as shown in the previous subsection. Generally, the verification of a feasible point in \({\widetilde{X}}^k\) does not guarantee the existence of a feasible point in \(X^k\). However, we will demonstrate that it is sufficient to determine valid upper bounds for \(v^*\).

The following lemma provides a method to construct a sequence with the desired properties for boxes with edge lengths smaller than 1. This is sufficient for our purposes, as we are only interested in convergence properties for small boxes.

Lemma 4.6

(Construction of a Centered Sequence of Super-Boxes) Let \(\big (X^k\big )_{k \in {\mathbb {N}}}\) be an exhaustive sequence of boxes with edge lengths \(\varDelta x_i^k < 1\) for all \(i=1,\ldots ,n\) and \({\widehat{x}} \in X^k\) for all \(k \in {\mathbb {N}}\). Furthermore, let \(\big ({\widetilde{X}}^k\big )_{k \in {\mathbb {N}}}\) be a sequence of boxes with  , \({{\,\mathrm{mid}\,}}\big ({\widetilde{X}}^k\big ) = {{\,\mathrm{mid}\,}}\big (X^k\big )\) and  for all \(i=1,\ldots ,n\) and \(k \in {\mathbb {N}}\). Then, \(X^k \subseteq {\widetilde{X}}^k \) for each \(k \in {\mathbb {N}}\) and the sequence \(\big ({\widetilde{X}}^k\big )_{k \in {\mathbb {N}}}\) is centered on \({\widehat{x}}\).

Proof

With  and  we have \(X^k \subseteq {\widetilde{X}}^k \) for all \(k \in {\mathbb {N}}\). To prove the second assertion, we will show that  for an arbitrary \(i \in \left\{ 1,\ldots ,n \right\} \).

We start with the case \({\widehat{x}}_i \ge {{\,\mathrm{mid}\,}}\big (X^k_i\big )\). Using the definition of \({\widetilde{X}}^k\) and basic box properties it follows

For an exhaustive sequence of boxes \(\big (X^k\big )_{k \in {\mathbb {N}}}\) we have  . Hence, we obtain \(\lim _{k \rightarrow \infty } s\big ({\widetilde{X}}^k\big ) \le \frac{1}{2}\). Moreover, with \({\widehat{x}}_i \ge {{\,\mathrm{mid}\,}}\big (X^k_i\big )\) we have

and hence the assertion.

For the case \({\widehat{x}}_i < {{\,\mathrm{mid}\,}}\big (X^k_i\big )\) with the same line of argumentation we obtain  and

from which the assertion follows for k approaching infinity. \(\square \)

Figure 2 illustrates the proven result for an exemplary sequence of boxes \(X^k\) where consecutive boxes are constructed by division along a longest edge.

Fig. 2 A sequence of boxes \(\big (X^k\big )_{k \in {\mathbb {N}}}\) and the sequence \(\big ({\widetilde{X}}^k\big )_{k \in {\mathbb {N}}}\) constructed using Lemma 4.6. The latter is asymptotically centered on \({\widehat{x}}\)
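The centering effect of such a construction can be sketched numerically. The enlargement rule used below, keeping the midpoint and replacing each edge length by its square root, is an assumption made for illustration; it is one rule that enlarges boxes with edge lengths below 1 and makes the original box vanish relative to the super-box. The measure `centering` is likewise hypothetical: it is the smallest relative distance of \({\widehat{x}}\) to a facet and equals \(\frac{1}{2}\) exactly at the midpoint.

```python
import math

def super_box(lower, upper):
    """Enlarge a box around its midpoint; ASSUMED rule: new edge = sqrt(old edge)."""
    new_lower, new_upper = [], []
    for lo, hi in zip(lower, upper):
        width = hi - lo
        assert 0.0 < width < 1.0, "the sketch requires edge lengths below 1"
        mid = 0.5 * (lo + hi)
        half = 0.5 * math.sqrt(width)
        new_lower.append(mid - half)
        new_upper.append(mid + half)
    return new_lower, new_upper

def centering(x_hat, lower, upper):
    """Smallest relative distance of x_hat to a facet; 1/2 at the midpoint."""
    return min(min(x - lo, hi - x) / (hi - lo)
               for x, lo, hi in zip(x_hat, lower, upper))

x_hat = [0.0, 0.0]                      # sits on a vertex of every box X^k
values = []
for k in range(1, 41):
    lower, upper = [0.0, 0.0], [2.0 ** -k, 2.0 ** -k]
    values.append(centering(x_hat, *super_box(lower, upper)))

print(values[0], values[-1])
```

Although \({\widehat{x}}\) is a vertex of every box \(X^k\) in this example, its relative position in the enlarged boxes increases towards \(\frac{1}{2}\).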

Using this construction we obtain the following result with regard to the verification of the existence of feasible points:

Corollary 4.7

Let \(\big (X^k\big )_{k \in {\mathbb {N}}}\) be a non-deformed exhaustive sequence of boxes with edge lengths \(\varDelta x_i^k < 1\) for all \(i=1,\ldots ,n\) and \(k \in {\mathbb {N}}\), \({\widehat{x}} \in X^k\) for all \(k \in {\mathbb {N}}\) and \({\widehat{x}}\) feasible. Furthermore, let \(h: {\mathbb {R}}^n \mapsto {\mathbb {R}}^m, h \in C^1({\mathbb {R}}^n,{\mathbb {R}}^m)\) and the gradients satisfy the conditions \(\nabla h_j({\widehat{x}}) = e_j\) for all \(j \in J\). Then, there is some \({\widehat{k}} \in {\mathbb {N}}\) so that for all \(k \in {\mathbb {N}}\) with \(k \ge {\widehat{k}}\) the conditions (3) of Theorem 3.2 are satisfied for the boxes \({\widetilde{X}}^k\) constructed as in Lemma 4.6.

Proof

With Lemma 4.6 it follows that the constructed sequence of boxes \(\big ({\widetilde{X}}^k\big )_{k \in {\mathbb {N}}}\) is asymptotically centered on \({\widehat{x}}\). For a sufficiently small box \(X^k\) also \({\widetilde{X}}^k\) becomes sufficiently small, since \({{\,\mathrm{diag}\,}}\big (X^k\big ) \rightarrow 0\) implies \({{\,\mathrm{diag}\,}}\big ({\widetilde{X}}^k\big ) \rightarrow 0\). Consequently, there is some \({\widehat{k}}\) so that all assumptions of Theorem 4.5 are satisfied and the assertion follows. \(\square \)

Therefore, if \(X^k\) contains a feasible point and \(X^k\) is sufficiently small, it is guaranteed that with the construction of \({\widetilde{X}}^k\) the existence of this point can be verified using Theorem 3.2, as \(X^k \subseteq {\widetilde{X}}^k\). In a branch-and-bound algorithm with additional construction of boxes \({\widetilde{X}}^k\) this is the case after a finite number of steps. On the contrary, it is also possible that the conditions (3) in Theorem 3.2 are satisfied due to a point \({\widehat{x}} \in {\widetilde{X}}^k\) with \(h({\widehat{x}}) = 0\) but \({\widehat{x}} \notin X^k\) or even \({\widehat{x}} \notin B\). We will discuss this aspect in detail later on and show that it is no strong restriction to our approach.

4.4 The general case

In this subsection we further generalize our results from the previous subsections by dropping the assumption \(\nabla h_j({\widehat{x}}) = e_j\) for all \(j \in J\). In this case it is possible, but not guaranteed, that the conditions (3) of Theorem 3.2 are satisfied for sufficiently small boxes. The main idea to still guarantee the verification of the existence of feasible points based on this theorem is to transform the system in such a way that at least asymptotically all conditions from Corollary 4.7 are satisfied. In particular, all gradients of constraints in a feasible point must have unit direction at least asymptotically.

First, we will introduce our transformation approach in Sect. 4.4.1. Afterwards, in Sect. 4.4.2, we will present our algorithm and prove that within a branch-and-bound context it determines valid upper bounds for \(v^*\) after a finite number of steps.

4.4.1 Transformation of the system

As before, we will first construct a sequence of boxes \(\big ({\widetilde{X}}^k\big )_{k \in {\mathbb {N}}}\) with midpoints \({{\,\mathrm{mid}\,}}\big (X^k\big )\) which is asymptotically centered on \({\widehat{x}}\). Then, to transform the system in the desired manner, for each \(k \in {\mathbb {N}}\) we will apply a coordinate transformation from the standard basis of \({\mathbb {R}}^n\) to another basis \({\mathcal {B}}_k\).

Such a coordinate transformation can be described with a matrix \(A_k\) whose columns are the basis vectors of \({\mathcal {B}}_k\). Then, we obtain \(x \mapsto y^k = A_k^{-1}x\) for the transformation of a point x in the original coordinates to a point \(y^k\) in the new coordinates and \(y^k \mapsto x = A_k y^k\) for the reverse transformation from the new to the original coordinates. In the following, \(y^k\) will always denote the image of a point x under \(A_k^{-1}\) and \({\widehat{y}}^k\) that of a feasible point \({\widehat{x}}\). The functions \(h_j\), \(j \in J\), can be expressed in the new coordinates by

$$\begin{aligned} {\widetilde{h}}^k_j\big (y^k\big ) := h_j\big (A_k y^k\big ). \end{aligned}$$

Using the chain rule it follows \(\nabla {\widetilde{h}}^k_j ({\widehat{y}}^k) = A_k^{\intercal } \nabla h_j({\widehat{x}})\) for the gradient of \({\widetilde{h}}^k_j\) in the transformed feasible point \({\widehat{y}}^k\).
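The gradient formula can be checked on a small example. The sketch below assumes that the transformed constraint is \({\widetilde{h}}(y) = h(Ay)\), which is consistent with the stated chain rule; the constraint `h`, the matrix `A` and the point `y_hat` are hypothetical. A central finite-difference gradient of \({\widetilde{h}}\) is compared with \(A^{\intercal } \nabla h({\widehat{x}})\).

```python
def h(x):
    """Hypothetical constraint h(x) = x_1^2 + 3 x_2."""
    return x[0] ** 2 + 3.0 * x[1]

def grad_h(x):
    """Analytic gradient of h."""
    return [2.0 * x[0], 3.0]

A = [[2.0, 1.0],
     [0.0, 1.0]]          # columns are the new basis vectors

def to_original(y):
    """Reverse transformation x = A y."""
    return [sum(A[i][j] * y[j] for j in range(2)) for i in range(2)]

def h_tilde(y):
    """Constraint expressed in the new coordinates."""
    return h(to_original(y))

def numeric_grad(f, y, eps=1e-6):
    """Central finite-difference gradient of f at y."""
    grad = []
    for i in range(len(y)):
        up = list(y)
        up[i] += eps
        down = list(y)
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2.0 * eps))
    return grad

y_hat = [0.5, -1.0]
x_hat = to_original(y_hat)
g = grad_h(x_hat)
# Chain rule: gradient in new coordinates equals A^T times gradient in x.
chain_rule = [sum(A[i][j] * g[i] for i in range(2)) for j in range(2)]
numeric = numeric_grad(h_tilde, y_hat)
print(numeric, chain_rule)
```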

Our aim is to determine \(A_k\) in such a way that the gradients of \({\widetilde{h}}^k_j\) in the new coordinates have unit direction at a feasible point \({\widehat{y}}^k\). As the feasible points and their gradients are not yet known, we will use the box midpoints instead. With \(\big ({\widetilde{X}}^k\big )_{k \in {\mathbb {N}}}\) asymptotically centered on \({\widehat{x}}\), asymptotically the feasible point \({\widehat{x}}\) becomes box midpoint and the coordinate transformation will be conducted in \({\widehat{x}}\). This will prove to be sufficient to verify the existence of a feasible point in a finite number of steps in a branch-and-bound algorithm with additional construction of boxes \({\widetilde{X}}^k\).

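We want to choose the (n, n)-matrix \(A_k^{\intercal }\) such that

$$\begin{aligned} A_k^{\intercal } \nabla h_j\big ({{\,\mathrm{mid}\,}}\big (X^k\big )\big ) = e_j \quad \text {for all } j \in J. \end{aligned}$$

If \(Dh({{\,\mathrm{mid}\,}}\big (X^k\big ))\) is square and the gradients \(\nabla h_j({{\,\mathrm{mid}\,}}\big (X^k\big ))\) are linearly independent, using the inverse of \(Dh({{\,\mathrm{mid}\,}}\big (X^k\big ))\) we obtain

$$\begin{aligned} A_k = \left( Dh\big ({{\,\mathrm{mid}\,}}\big (X^k\big )\big )\right) ^{-1}. \end{aligned}$$

If \(Dh({{\,\mathrm{mid}\,}}\big (X^k\big ))\) is not square, since \(m < n\), we will extend the matrix to a basis of \({\mathbb {R}}^n\) by adding a basis of its null space \({{\,\mathrm{null}\,}}(Dh({{\,\mathrm{mid}\,}}\big (X^k\big )))\) and then proceed analogously. Denoting the extended matrix with \(\overline{Dh({{\,\mathrm{mid}\,}}\big (X^k\big ))}\) we obtain

$$\begin{aligned} A_k = \left( \overline{Dh\big ({{\,\mathrm{mid}\,}}\big (X^k\big )\big )}\right) ^{-1}. \end{aligned}$$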

With Assumption 1.1, linearly independent gradients are guaranteed asymptotically for sequences of boxes asymptotically centered on a globally minimal point \(x^*\) of P(B). Moreover, as the following lemma shows, this condition is also satisfied after a finite number of steps within a branch-and-bound algorithm with additional construction of boxes \({\widetilde{X}}^k\).

Lemma 4.8

Let \(\big ({\widetilde{X}}^k\big )_{k \in {\mathbb {N}}}\) be a sequence of boxes centered on a globally minimal point \(x^*\) of P(B) and with \(\lim _{k \rightarrow \infty } {{\,\mathrm{diag}\,}}\big ({\widetilde{X}}^k\big ) = 0\), and let Assumption 1.1 hold. Then, there is a \({\widetilde{k}} \in {\mathbb {N}}\) so that for all \(k \ge {\widetilde{k}}\) the coordinate transformation using \(A_k = \left( \overline{Dh({{\,\mathrm{mid}\,}}\big (X^k\big ))}\right) ^{-1}\) can be applied in \({{\,\mathrm{mid}\,}}\big (X^k\big )\).

Proof

With Assumption 1.1 the gradients of \(h_j\), \(j \in J\), are linearly independent in \(x^*\), and hence asymptotically also in the midpoints of the boxes \({\widetilde{X}}^k\), which converge to \(x^*\). More precisely, since all functions \(h_j\), \(j \in J\), are presumed to be continuously differentiable, linear independence is also guaranteed for midpoints sufficiently close to \(x^*\). Within a branch-and-bound algorithm such midpoints occur after a finite number of steps. \(\square \)

Based on this lemma, in the following we will restrict our results to sequences of boxes asymptotically centered on globally minimal points although they also hold for any sequence asymptotically centered on a feasible point \({\widehat{x}}\) with linearly independent gradients \(\nabla h_j({\widehat{x}})\).

As intended, with the above transformation asymptotically we obtain unit directed gradients in the transformed globally minimal point \(y^{*k}\), as Lemma 4.9 shows. In its proof we shall need that the matrices \(A^{-1}_k = \overline{D h({{\,\mathrm{mid}\,}}\big (X^k\big ))}\), \(k\in {\mathbb {N}}\), form a convergent sequence. While this is clear in the case \(m=n\), for \(m<n\) the bases of the null spaces of the matrices \(Dh({{\,\mathrm{mid}\,}}\big (X^k\big ))\), \(k\in {\mathbb {N}}\), need to be chosen carefully. One such possibility is to collect m linearly independent columns of \(D h({{\,\mathrm{mid}\,}}\big (X^k\big ))\) in a matrix \(R^k\) and write \(D h({{\,\mathrm{mid}\,}}\big (X^k\big ))=(R^k,S^k)\) where, without loss of generality, we assume that one can choose the first m columns. Then the columns of the matrices \(\begin{pmatrix}-\left( R^k\right) ^{-1}S^k\\ I_{n-m,n-m}\end{pmatrix}\) form convergent bases of the null spaces of \(Dh({{\,\mathrm{mid}\,}}\big (X^k\big ))\), \(k\in {\mathbb {N}}\).
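The null space construction can be sketched for a single constraint in \({\mathbb {R}}^3\), that is, \(m=1\) and \(n=3\). The sketch assumes that the extended matrix is obtained by stacking the null space basis vectors as additional rows below \(Dh\); the Jacobian `dh` is a hypothetical example with nonzero first entry, so that \(R^k\) consists of the first column. Exact rational arithmetic is used to avoid rounding effects.

```python
from fractions import Fraction as F

def null_space_rows(dh_row):
    """Null space basis of a 1 x n Jacobian (R, S) with R != 0.

    Each returned row is one column of the matrix (-R^{-1} S ; I).
    """
    r, s = dh_row[0], dh_row[1:]
    rows = []
    for i, s_i in enumerate(s):
        unit = [F(int(i == j)) for j in range(len(s))]
        rows.append([-s_i / r] + unit)
    return rows

def inverse(matrix):
    """Gauss-Jordan inverse with exact rational arithmetic."""
    n = len(matrix)
    aug = [list(row) + [F(int(i == j)) for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        aug[col] = [v / aug[col][col] for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

dh = [F(2), F(-1), F(4)]                 # hypothetical Jacobian at a box midpoint
extended = [dh] + null_space_rows(dh)    # ASSUMED form of the extended matrix
A = inverse(extended)
# Transformed gradient A^T * grad h, where grad h is the only row of Dh.
transformed = [sum(A[i][j] * dh[i] for i in range(3)) for j in range(3)]
print(transformed)
```

Since the first row of the extended matrix is \(Dh\) itself, multiplying it with the inverse yields the first unit vector, which is the unit direction required for the transformed gradient.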

Lemma 4.9

Let \(\big ({\widetilde{X}}^k\big )_{k \in {\mathbb {N}}}\) be a sequence of boxes centered on a globally minimal point \(x^*\) and with \(\lim _{k \rightarrow \infty } {{\,\mathrm{diag}\,}}\big ({\widetilde{X}}^k\big ) = 0\), and let Assumption 1.1 hold. Then for all \(j \in J\) we have

where \({\widetilde{h}}_j^{x^*}\) refers to a transformation conducted in \(x^*\).

Proof

Since \(\big ({\widetilde{X}}^k\big )_{k \in {\mathbb {N}}}\) is centered on \(x^*\) we have \({\lim _{k \rightarrow \infty } {{\,\mathrm{mid}\,}}\big (X^k\big ) = x^*}\). With Lemma 4.8 there is a \({\widetilde{k}} \in {\mathbb {N}}\) so that for all \(k \ge {\widetilde{k}}\) the coordinate transformation is applicable. For all such k, with \(A^{-1}_k = \overline{Dh({{\,\mathrm{mid}\,}}\big (X^k\big ))}\) and h continuously differentiable we obtain \(\lim _{k \rightarrow \infty } A_k^{-1} = A_{x^*}^{-1}\), where \(A_{x^*}\) refers to a transformation conducted in \(x^*\). By construction of the transformation we have

Since inverting a matrix is a continuous transformation and h is continuously differentiable, introducing limits for all \(j \in J\) it follows

\(\square \)

So applying the coordinate transformation we obtain a sequence of functions \(({\widetilde{h}}^k)_{k \in {\mathbb {N}}}\) whose elements at least asymptotically satisfy the conditions from Corollary 4.7. Yet, the box \({\widetilde{X}}^k\) is not necessarily transformed to another box, but to an n-parallelepiped \({\widetilde{Y}}^k\). To cope with that, for each \(k \in {\mathbb {N}}\) we will overestimate \({\widetilde{Y}}^k\) with a box \({\widetilde{Y}}'^k\) and then prove that all conditions from Corollary 4.7 are fulfilled.

To overestimate an n-parallelepiped \({\widetilde{Y}}^k\) with a box we will construct its minimum bounding box \({\widetilde{Y}}'^k\) by determining the maximum and minimum entries for each component \({i=1,\ldots ,n}\) among all vertices of \({\widetilde{Y}}^k\) and then taking them as component-wise interval boundaries. Iterating over all vertices of \({\widetilde{Y}}^k\) is expensive from a computational point of view, but the maxima and minima can also be determined by solving 2n linear optimization problems.
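The minimum bounding box can be sketched without an LP solver, under the assumption that the parallelepiped is the image of a box under a matrix \(M\), standing for \(A_k^{-1}\). Each of the 2n linear problems then optimizes a linear function over a box, so its optimal value is obtained by choosing every coordinate at its lower or upper bound according to the sign of its coefficient. The matrix and the box below are hypothetical; the vertex enumeration is included only as a reference for comparison.

```python
from itertools import product

def bounding_box(matrix, lower, upper):
    """Smallest box containing {M x : lower <= x <= upper}.

    Each bound is the optimal value of a linear program over a box, attained
    by picking every coordinate at its lower or upper bound according to the
    sign of the corresponding coefficient.
    """
    box_lower, box_upper = [], []
    for row in matrix:
        box_lower.append(sum(min(c * lo, c * hi)
                             for c, lo, hi in zip(row, lower, upper)))
        box_upper.append(sum(max(c * lo, c * hi)
                             for c, lo, hi in zip(row, lower, upper)))
    return box_lower, box_upper

def bounding_box_by_vertices(matrix, lower, upper):
    """Reference version that enumerates all 2^n vertices of the box."""
    images = [[sum(c * v for c, v in zip(row, vertex)) for row in matrix]
              for vertex in product(*zip(lower, upper))]
    return ([min(col) for col in zip(*images)],
            [max(col) for col in zip(*images)])

M = [[2.0, -1.0, 4.0],      # hypothetical transformation matrix
     [0.5, 1.0, 0.0],
     [-2.0, 0.0, 1.0]]
lower, upper = [-0.25, 0.0, 1.0], [0.25, 0.5, 1.5]
print(bounding_box(M, lower, upper))
```

The closed-form version needs a number of operations quadratic in n, whereas the vertex enumeration grows exponentially in n.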

To derive these linear optimization problems and some following results, we will make use of the edge vectors of a box \(X^k\), which we define by  for all \(i = 1,\ldots ,n\), with edge length  . Any point \({\widetilde{x}}\) in \(X^k\) can be addressed by

with appropriate \(\alpha _i \in [0,1]\) for all \(i=1,\ldots ,n\). Analogously, we denote the edge vectors of an n-parallelepiped \({\widetilde{Y}}^k\) by \(d_i^{{\widetilde{Y}}^k} := A^{-1}_k d_i^{X^k}\). Any point \({\widetilde{y}}_k\) within the n-parallelepiped can be addressed by

with appropriate \(t_i \in [0,1]\) for all \(i=1,\ldots ,n\).

Using these definitions, for each \(i=1,\ldots ,n\) we have to solve the linear optimization problems

and

Constructing the boxes \({\widetilde{Y}}'^k\) for each \(k \ge {\widetilde{k}}\) we obtain a sequence of boxes \(({\widetilde{Y}}'^k)_{k \in {\mathbb {N}}}\). In order to apply our results from Corollary 4.7 we now have to check whether this sequence has all the required properties.

First, we can state that the transformed midpoint \({{\,\mathrm{mid}\,}}({\widetilde{Y}}^k) := A^{-1}_k {{\,\mathrm{mid}\,}}\big (X^k\big )\) of the box \({\widetilde{X}}^k\) is also midpoint of the n-parallelepiped \({\widetilde{Y}}^k\), i.e.  , which follows from the linearity of the coordinate transformation. As constructing the box \({\widetilde{Y}}'^k\) does not change the componentwise boundaries, \({{\,\mathrm{mid}\,}}({\widetilde{Y}}^k)\) is also midpoint of the box \({\widetilde{Y}}'^k\), and the previous results from this subsection remain valid. In addition, we have to show that \(({\widetilde{Y}}'^k)_{k \in {\mathbb {N}}}\) is a non-deformed sequence of boxes asymptotically centered on the globally minimal point \(y^{*k}\) in the new coordinates and satisfies \(\lim _{k \rightarrow \infty } {{\,\mathrm{diag}\,}}({\widetilde{Y}}'^k) = 0\). The following lemma proves these properties.

Lemma 4.10

Let \(\big ({\widetilde{X}}^k\big )_{k \in {\mathbb {N}}}\) be a non-deformed sequence of boxes asymptotically centered on a globally minimal point \(x^*\) and with \(\lim _{k \rightarrow \infty } {{\,\mathrm{diag}\,}}\big ({\widetilde{X}}^k\big ) = 0\), and let Assumption 1.1 hold. Then the sequence \(({\widetilde{Y}}'^k)_{k \in {\mathbb {N}}}\) of boxes

(a) satisfies \(\lim _{k \rightarrow \infty } {{\,\mathrm{diag}\,}}({\widetilde{Y}}'^k) = 0\),

(b) is asymptotically centered on

$$\begin{aligned} y^{*} = \lim _{k \rightarrow \infty } y^{*k} = \lim _{k \rightarrow \infty } A^{-1}_{k} x^{*} = A^{-1}_{x^{*}} x^{*}, \end{aligned}$$

(c) is non-deformed.

Proof

To prove assertion (a) we use the row-sum norm, i.e. the matrix norm induced by \(\Vert \cdot \Vert _\infty \). With the compatibility of the row-sum norm of \(A^{-1}_k\) we have

for all \(x \in {\mathbb {R}}^n, k \in {\mathbb {N}}\). In particular, for all \(x', x'' \in {\widetilde{X}}^k\) it follows

With \(\big ({\widetilde{X}}^k\big )_{k \in {\mathbb {N}}}\) asymptotically centered on \(x^*\) we have \(\lim _{k \rightarrow \infty } {{\,\mathrm{mid}\,}}\big (X^k\big ) = x^*\) and with h being continuously differentiable it follows that \(\lim _{k \rightarrow \infty } A^{-1}_k = A^{-1}_{x^*}\). With \(\lim _{k \rightarrow \infty } {{\,\mathrm{diag}\,}}\big ({\widetilde{X}}^k\big ) = 0\) we also have  . Moreover, \(\Vert A^{-1}_{x^*} \Vert _\infty \) is a real number. As both limit values exist, we obtain

Hence, for arbitrary \(x', x'' \in {\widetilde{X}}^k\) all components of \(A^{-1}_k\big (x' - x''\big )\) converge to 0. As \({\widetilde{Y}}'^k\) is constructed from these component entries, also all edge lengths of \({\widetilde{Y}}'^{k}\) converge to zero and thus, also \({{\,\mathrm{diag}\,}}({\widetilde{Y}}'^k)\).

Now we turn towards assertion (b). The globally minimal point \(x^*\) can be described as a linear combination of all edge vectors of \({\widetilde{X}}^k\) starting from  , i.e.

with \(\alpha _i^k \in {\mathbb {R}}\) for all \(i=1,\ldots ,n\) and \(k \in {\mathbb {N}}\). As \(x^*\) asymptotically becomes midpoint of the elements of \(\big ({\widetilde{X}}^k\big )_{k \in {\mathbb {N}}}\), we have \(\lim _{k \rightarrow \infty } \alpha _i^k = \frac{1}{2}\) for all \({i = 1,\ldots ,n}\).

Since the coordinate transformation is linear, it follows

Hence, with \(\lim _{k \rightarrow \infty } \alpha _i^k = \frac{1}{2}\) the feasible point \({\widehat{y}}^k\) in the new coordinates also becomes midpoint of \({\widetilde{Y}}^k\) and, thus, \({\widetilde{Y}}'^k\) asymptotically.

To show assertion (c) again we use the row-sum norm of \(A^{-1}_k\). Similarly to (a), for all \(x', x'' \in {\widetilde{X}}^k\) we obtain

Analogously, with the induced norm of \(A_k\) for all \(x', x'' \in {\widetilde{X}}^k\) it follows

Equations (10) and (11) provide the maximum and minimum stretching under the coordinate transformation. These factors also bound the edge lengths of the new box \({\widetilde{Y}}'^k\). Hence, for the maximum ratio of the length of box edges we obtain

where in the last step we used that \(\big ({\widetilde{X}}^k\big )_{k \in {\mathbb {N}}}\) is a non-deformed sequence. Thus, the ratio of the edge lengths of \({\widetilde{Y}}'^k\) is bounded above by a constant, \(\kappa \), containing the condition number of \(A^{-1}_k\).

Since the gradients of h in \(x^*\) are linearly independent because of Assumption 1.1, \(A^{-1}_{x^*}\) is a nonsingular matrix. For nonsingular matrices the condition number is finite. Hence, for k approaching infinity the ratio \(t^{{\widetilde{Y}}'^k}\) is bounded by a finite constant. Thus, the boxes do not become increasingly deformed. \(\square \)

Lemmas 4.9 and 4.10 show that using the, possibly extended, Jacobian of h in \({{\,\mathrm{mid}\,}}\big (X^k\big )\) as the transformation matrix \(A^{-1}_k\) for all \(k \ge {\widetilde{k}}\) the system is transformed in a way that all requirements of Corollary 4.7 are met at least asymptotically. Therefore, we obtain the following result with regard to Theorem 3.2.

Theorem 4.11

Let \(\big (X^k\big )_{k \in {\mathbb {N}}}\) be a non-deformed exhaustive sequence of boxes with edge lengths \(\varDelta x_i^k < 1\) for all \(i=1,\ldots ,n\) and \(k \in {\mathbb {N}}\), \(x^* \in X^k\) for all \(k \in {\mathbb {N}}\) and \(x^*\) a globally minimal point of P(B). Let \(\big ({\widetilde{X}}^k\big )_{k \in {\mathbb {N}}}\) be a sequence of boxes with  , \({{\,\mathrm{mid}\,}}\big ({\widetilde{X}}^k\big ) = {{\,\mathrm{mid}\,}}\big (X^k\big )\) and  for all \(i=1,\ldots ,n\) and \(k \in {\mathbb {N}}\). Furthermore, let \(h: {\mathbb {R}}^n \mapsto {\mathbb {R}}^m, h \in C^1({\mathbb {R}}^n,{\mathbb {R}}^m)\) and let Assumption 1.1 hold. Then, there is some \({\widehat{k}} \in {\mathbb {N}}\) so that for all \(k \in {\mathbb {N}}\) with \(k \ge {\widehat{k}}\) the conditions (3) of Theorem 3.2 are satisfied for the boxes \({\widetilde{Y}}'^k\) obtained by applying the aforementioned transformation of the system.

Proof

With the extension of \(X^k\) for each \(k \in {\mathbb {N}}\) according to Lemma 4.6 we obtain a sequence \(({\widetilde{X}}^k)_{k \in {\mathbb {N}}}\) of boxes which is asymptotically centered on the globally minimal point \(x^*\). According to Lemma 4.8 there is a \({\widetilde{k}} \in {\mathbb {N}}\) so that for all \(k \ge {\widetilde{k}}\) the coordinate transformation and afterwards the extension of \({\widetilde{Y}}^k\) to a box \({\widetilde{Y}}'^k\) can be applied. Thereby we obtain a sequence of boxes \(({\widetilde{Y}}'^k)_{k \in {\mathbb {N}}}\) that is non-deformed, asymptotically centered on \(y^*\) and satisfies \(\lim _{k \rightarrow \infty } {{\,\mathrm{diag}\,}}({\widetilde{Y}}'^k) = 0\), as shown in Lemma 4.10, and a sequence of functions \(({\widetilde{h}}_j^k)_{k \in {\mathbb {N}}}\) in the new coordinates. Using Lemma 4.9 it follows that \(\lim _{k \rightarrow \infty } \nabla {\widetilde{h}}_j^k(y^*_k) = e_j\). Hence, asymptotically, all assumptions from Corollary 4.7 are met, so that we can conclude that \({\widetilde{h}}^k\) asymptotically satisfies the sign conditions of Theorem 3.2 on \({\widetilde{Y}}'^k\). As \({\widetilde{h}}^k\) is continuously differentiable, there is some \({\widehat{k}}\) so that for all \(k \ge {\widehat{k}}\) the sign conditions are actually satisfied. \(\square \)

4.4.2 Determining upper bounds in the transformed system

With the previous results we are now able to present our method to calculate valid upper bounds for the globally minimal value \(v^*\).

In a first phase, the box is extended to cope with feasible points on facets. If the box is too large for our extension approach, we set \({\widetilde{X}} = X\). In a second phase, if possible, the system is transformed such that at least asymptotically the gradients \(\nabla {\widetilde{h}}_j\), \(j \in J\), at a transformed globally minimal point \(y^*\) have unit direction. We note again that the transformation can also be applied for sufficiently small boxes in a sequence of boxes asymptotically centered on a feasible point \({\widehat{x}}\) with linearly independent gradients \(\nabla h_j({\widehat{x}})\). In a third phase, Theorem 3.2 is used to check for feasibility. Theorem 4.11 states that after a finite number of steps within a branch-and-bound algorithm, its conditions are satisfied and the existence of a zero in \({\widetilde{Y}}'^k\) can be verified. However, as checking

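\[ \max _{y \in \underline{{\widetilde{Y}}'^k_j}} {\widetilde{h}}^k_j(y) \le 0 \quad \text{and} \quad \min _{y \in \overline{{\widetilde{Y}}'^k_j}} {\widetilde{h}}^k_j(y) \ge 0 \]

for all \(j \in J\) is not an easy task, we will use quadratically convergent upper bounding procedures, e.g. centered forms [7, 22], to determine upper and lower bounds on the facets instead.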

For all \(j \in J\) we will determine an upper bound \(u_j(\underline{{\widetilde{Y}}'^k_j})\) for the maximal value of \({\widetilde{h}}^k_j\) on the facet \(\underline{{\widetilde{Y}}'^k_j}\) and a lower bound \(\ell _j(\overline{{\widetilde{Y}}'^k_j})\) for the minimal value of \({\widetilde{h}}^k_j\) on the facet \(\overline{{\widetilde{Y}}'^k_j}\). Using such bounds is sufficient to verify the existence of feasible points, since \(u_j(\underline{{\widetilde{Y}}'^k_j}) \le 0\) and \(\ell _j(\overline{{\widetilde{Y}}'^k_j}) \ge 0\) for all \(j \in J\) imply that all conditions of Theorem 3.2 are satisfied.
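The facet test described above can be illustrated by a minimal sketch. It assumes a natural interval extension instead of the centered forms mentioned in the text, ignores rounding errors, and uses hypothetical names throughout (`Interval`, `miranda_sign_conditions`, `h_toy`); the toy system is not taken from the test set.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    """Closed interval [lo, hi]; illustrative only, no outward rounding."""
    lo: float
    hi: float

    def __add__(self, other):
        other = _as_interval(other)
        return Interval(self.lo + other.lo, self.hi + other.hi)

    __radd__ = __add__

    def __sub__(self, other):
        other = _as_interval(other)
        return Interval(self.lo - other.hi, self.hi - other.lo)

    def __mul__(self, other):
        other = _as_interval(other)
        products = [self.lo * other.lo, self.lo * other.hi,
                    self.hi * other.lo, self.hi * other.hi]
        return Interval(min(products), max(products))

    __rmul__ = __mul__


def _as_interval(value):
    """Promote a plain number to a degenerate interval."""
    return value if isinstance(value, Interval) else Interval(value, value)


def miranda_sign_conditions(h, box):
    """Check u_j(lower facet) <= 0 and l_j(upper facet) >= 0 for all j.

    h maps a list of intervals to a list of m intervals (an enclosure of
    the function values); the j-th function is paired with the j-th
    coordinate direction (an assumption of this sketch).
    """
    m = len(h(box))
    for j in range(m):
        lower_facet = list(box)
        lower_facet[j] = Interval(box[j].lo, box[j].lo)
        upper_facet = list(box)
        upper_facet[j] = Interval(box[j].hi, box[j].hi)
        u_j = h(lower_facet)[j].hi  # upper bound on the lower facet
        l_j = h(upper_facet)[j].lo  # lower bound on the upper facet
        if u_j > 0 or l_j < 0:
            return False
    return True


def h_toy(y):
    """Hypothetical system with gradients close to unit directions near 0."""
    return [y[0] + 0.1 * y[1] * y[1], y[1] - 0.1 * y[0] * y[0]]


centered_box = [Interval(-0.5, 0.5), Interval(-0.5, 0.5)]
shifted_box = [Interval(0.2, 0.5), Interval(0.2, 0.5)]
print(miranda_sign_conditions(h_toy, centered_box))  # True
print(miranda_sign_conditions(h_toy, shifted_box))   # False
```

A result of `False` only means that the bounds do not certify the sign conditions on this box; it does not prove that the box contains no zero.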

In general, the verification of a zero of \({\widetilde{h}}^k\) in a box \({\widetilde{Y}}'^k\) guarantees neither the existence of a feasible point in the original box \(X^k\) nor the existence of a feasible point of P(B) at all, since by extending \(X^k\) to \({\widetilde{X}}^k\) and \({\widetilde{Y}}^k\) to \({\widetilde{Y}}'^k\) the considered set of points is enlarged. Applying the coordinate transformation, for each feasible point \({\widehat{x}} \in X^k \subseteq B\) we obtain a feasible point \({\widehat{y}}^k \in {\widetilde{Y}}^{*k}\) in the new coordinates. Hence, if \(X^k\) is sufficiently small, the existence of a feasible point is verified correctly. Conversely, it is also possible that the conditions (3) in Theorem 3.2 are satisfied due to a point \({\widehat{y}}^k \in {\widetilde{Y}}'^k\) with \({\widetilde{h}}^k_j({\widehat{y}}^k) = 0\) for all \(j \in J\) without a corresponding point \({\widehat{x}} \in X^k\) in the original coordinates. While for \({\widehat{x}} \notin B\) the existence of a feasible point of P(B) cannot be concluded, for \({\widehat{x}} \notin X^k\) but \({\widehat{x}} \in B\) a feasible point of the original problem P(B) is still verified. We will show that for a sufficiently small box \(X^k\) the latter condition is guaranteed to be satisfied so that for such a box our algorithm verifies the existence of a feasible point of the problem P(B). Then, an upper bound for the objective function on \({\widetilde{Y}}'^k\) may serve as a new upper bound for \(v^*\).

In practice, it is not clear in advance whether a box is sufficiently small for a detected zero to be located in B. As the zero is not determined explicitly, to check for this, we transform the whole box \({\widetilde{Y}}'^k\) to an n-parallelepiped \({\widetilde{X}}^{'k}\) in the original coordinates and then extend it to a minimum bounding box \({\widetilde{X}}''^k\) with the same ideas as in the previous subsection. Then, we can check whether \({\widetilde{X}}''^k \subseteq B\) which implies \({\widehat{x}} \in B\). The box \({\widetilde{X}}''^k\) is also used to save the best-known approximation of a globally minimal point in the original coordinates within a branch-and-bound algorithm. However, to calculate an upper bound for \(v^*\) that is as tight as possible, the upper bound should be determined on \({\widetilde{Y}}'^k\) in the new coordinates.
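The retransformation check can be sketched as follows, assuming that the back-transformation to the original coordinates is an affine map \(x = My + b\). The names `M`, `b`, `bounding_box_of_affine_image` and `is_subbox` are hypothetical, and rounding errors are again ignored. The image of a box under such a map is a parallelepiped whose minimum bounding box has midpoint \(M \, {{\,\mathrm{mid}\,}}(Y) + b\) and radius \(|M| \, \mathrm{rad}(Y)\).

```python
def bounding_box_of_affine_image(M, b, y_lo, y_hi):
    """Minimum bounding box of {M y + b : y_lo <= y <= y_hi}.

    M is a list of rows, b a list; the affine map is an assumption of
    this sketch and stands in for the inverse coordinate transformation.
    """
    x_lo, x_hi = [], []
    for i in range(len(b)):
        mid = b[i]
        rad = 0.0
        for j in range(len(y_lo)):
            mid_j = 0.5 * (y_lo[j] + y_hi[j])
            rad_j = 0.5 * (y_hi[j] - y_lo[j])
            mid += M[i][j] * mid_j
            rad += abs(M[i][j]) * rad_j
        x_lo.append(mid - rad)
        x_hi.append(mid + rad)
    return x_lo, x_hi


def is_subbox(x_lo, x_hi, b_lo, b_hi):
    """Return True if the box [x_lo, x_hi] is contained in [b_lo, b_hi]."""
    return all(bl <= xl and xh <= bh
               for xl, xh, bl, bh in zip(x_lo, x_hi, b_lo, b_hi))


# Toy data: a sheared map applied to the box [-0.5, 0.5]^2.
M = [[1.0, 1.0], [0.0, 1.0]]
b = [1.0, 1.0]
x_lo, x_hi = bounding_box_of_affine_image(M, b, [-0.5, -0.5], [0.5, 0.5])
print(x_lo, x_hi)  # [0.0, 0.5] [2.0, 1.5]
print(is_subbox(x_lo, x_hi, [-1000.0, -1000.0], [1000.0, 1000.0]))  # True
```

If the containment test fails, the detected zero may still lie in B, but this cannot be concluded from the bounding box alone.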

The following corollary formally proves that Algorithm 2 determines an upper bound for \(v^*\) after a finite number of steps.

Corollary 4.12

Let \(\big (X^k\big )_{k \in {\mathbb {N}}}\) be a non-deformed exhaustive sequence of boxes with  for all \(i=1,\ldots ,n\) and \(k \in {\mathbb {N}}\), \(X^k \subseteq B\), \(x^* \in X^k\) for all \(k \in {\mathbb {N}}\) and \(x^*\) a globally minimal point of P(B). Furthermore, let \(h: {\mathbb {R}}^n \mapsto {\mathbb {R}}^m, h \in C^1({\mathbb {R}}^n,{\mathbb {R}}^m)\) and let Assumption 1.1 hold. Then, there is some \({\widehat{k}} \in {\mathbb {N}}\) so that for all \(k \in {\mathbb {N}}\) with \(k \ge {\widehat{k}}\) Algorithm 2 verifies the existence of a feasible point of P(B) and determines a valid upper bound \(u_f\) for \(v^*\).

Proof

With Theorem 4.11 there is a \(k^1\) so that the conditions (3) of Theorem 3.2 are satisfied for all \(k \ge k^1\). Hence, with \({\widetilde{y}}^k\) the maximum point on \(\underline{{\widetilde{Y}}'^k_j}\) for some arbitrary \(j \in J\) we have

Using a quadratically convergent bounding procedure on \(\underline{{\widetilde{Y}}'^k_j}\) we obtain

Since the first term is negative for sufficiently large k and converges to zero linearly while the second one converges to zero quadratically, there is some \(k^2 \in {\mathbb {N}}\) so that for all \(k \ge k^2\) and for all \(j \in J\) we have

\[ u_j(\underline{{\widetilde{Y}}'^k_j}) \le 0. \]

The proof for the facet \(\overline{{\widetilde{Y}}'^{k}_j}\) follows completely analogously.

In the retransformation phase we obtain a sequence of boxes \(({\widetilde{X}}^{''k})_{k \in {\mathbb {N}}}\) in the original coordinates. Analogously to our ideas in Sect. 4.4 we can conclude that \(\lim _{k \rightarrow \infty } {{\,\mathrm{diag}\,}}({\widetilde{X}}''^k) = 0\). Additionally, we assume all feasible points of P(B) to strictly satisfy the box constraints. Hence, for any box containing a feasible point there is some \(k^3 \in {\mathbb {N}}\) so that \({\widetilde{X}}''^k \subseteq B\) for all \(k \ge k^3\).

So in total, there is some \({\widehat{k}} = \max \left\{ k^1, k^2, k^3 \right\} \) so that Algorithm 2 verifies the existence of a feasible point of P(B) in \({\widetilde{Y}}'^k\) for all \(k \ge {\widehat{k}}\) and a valid upper bound \(u_f\) for \(v^*\) is determined. \(\square \)

5 Preliminary numerical results

In this section we present preliminary numerical results for Algorithm 2. The aim of these results is to demonstrate that the algorithm effectively detects the existence of feasible points of P(B) and, thus, to complement our theoretical results. The results also indicate that our approach could be used within a branch-and-bound framework to calculate convergent upper bounds for \(v^*\).

For the purpose of demonstration, we construct (sequences of) boxes as they typically occur within a branch-and-bound algorithm by division along the longest edge. Then, we verify the existence of feasible points on the obtained boxes. If the existence of a feasible point is verified for a box, the algorithm determines an upper bound for \(v^*\) and terminates. Otherwise, the box is divided along a longest edge, added to a list and the next box is chosen from the list based on the FIFO principle, as long as the list is not empty.
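The box generation scheme can be summarized in a short sketch. The predicate `verify` is a hypothetical stand-in for the feasibility verification of Algorithm 2, and the toy predicate below merely mimics a check that succeeds on sufficiently small boxes around a known point; the exclusion of boxes that cannot contain feasible points is omitted here.

```python
from collections import deque


def bisect_longest_edge(lo, hi):
    """Split the box [lo, hi] at the midpoint of a longest edge."""
    widths = [h - l for l, h in zip(lo, hi)]
    i = widths.index(max(widths))
    mid = 0.5 * (lo[i] + hi[i])
    left_hi = list(hi)
    left_hi[i] = mid
    right_lo = list(lo)
    right_lo[i] = mid
    return (list(lo), left_hi), (right_lo, list(hi))


def search_first_verified_box(initial_box, verify, max_boxes=10000):
    """Process boxes in FIFO order until verify succeeds on one of them.

    Returns the verified box (or None) and the number of processed boxes.
    max_boxes is a safeguard added for this sketch.
    """
    queue = deque([initial_box])
    processed = 0
    while queue and processed < max_boxes:
        lo, hi = queue.popleft()
        processed += 1
        if verify(lo, hi):
            return (lo, hi), processed
        queue.extend(bisect_longest_edge(lo, hi))
    return None, processed


def toy_verify(lo, hi):
    """Hypothetical check: box contains (1, 1) and has width at most 0.5."""
    contains_point = all(l <= 1.0 <= h for l, h in zip(lo, hi))
    small_enough = max(h - l for l, h in zip(lo, hi)) <= 0.5
    return contains_point and small_enough


found_box, processed = search_first_verified_box(([0.0, 0.0], [4.0, 4.0]),
                                                 toy_verify)
print(found_box, processed)
```

The FIFO order corresponds to a breadth-first traversal, so all boxes of one bisection level are examined before any box of the next level.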

Our current implementation is not rigorous in the sense that it does not take rounding errors into account. To the best of our knowledge, there is currently no commercial branch-and-bound solver in global optimization that performs all computations rigorously, so that the user could be sure that the lower bound is actually less than or equal to the globally minimal value, although the proofs for the lower bounds are available for exact arithmetic. So far, we follow this practice for our new upper bounding procedure as well and do not consider rounding errors in our implementation, although the proofs for the case of exact arithmetic are now available in this paper. A more detailed discussion of this subject can be found at the end of this article.

5.1 Implementation details

Algorithm 2 constitutes the basis of the implementation, but some further additions are made to improve its efficiency and the quality of the upper bounds. Figure 3 illustrates the feasibility verification checks and transformations within Algorithm 2 together with these additions.

- Bounds u(X) and \(\ell (X)\) for \(h_j\), \(j \in J\), are calculated on the current box X to exclude it from further examination if it is guaranteed not to contain a feasible point, that is, if \(u(X) < 0\) or \(\ell (X) > 0\) holds for some \(j \in J\).

- Despite the better convergence rate of the former, centered forms as well as interval arithmetic are applied as bounding procedures and the tighter bounds are used in each case.

- We use a cascaded system of feasibility verifications: First we check the sign conditions of Theorem 3.2 for X. If they are satisfied, a feasible point is verified in X and there is no need for a box extension or coordinate transformation. If they are not satisfied, we extend X to \({\widetilde{X}}\) and check the sign conditions for the extended box. If they are still not satisfied, we apply the system transformation and check the conditions for \({\widetilde{Y}}'\). The reason behind this approach is that we obtain tighter upper bounds if we verify the existence of a feasible point in a smaller box. Therefore, it is reasonable to extend the boxes only if necessary.
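The exclusion test and the cascade listed above can be sketched as follows. All callables are hypothetical placeholders: `extend_toy` simply doubles each edge and does not reproduce the extension of Lemma 4.6, the transformation stage is left optional, and the affine toy constraint \(h(x) = (x_1 - 1) + 0.125\,(x_2 - 1)\) is chosen only because its range on a box is known exactly.

```python
def cascaded_verification(box, can_exclude, check, extend,
                          transform=None, check_transformed=None):
    """Return (stage, box) for the first stage that verifies a zero."""
    if can_exclude(box):
        return "excluded", None
    if check(box):
        return "original", box
    extended = extend(box)
    if check(extended):
        return "extended", extended
    if transform is not None and check_transformed is not None:
        transformed = transform(extended)  # may return None if not possible
        if transformed is not None and check_transformed(transformed):
            return "transformed", transformed
    return "unverified", None


def h_bounds(lo, hi):
    """Exact range of the toy constraint (increasing in both variables)."""
    lower = (lo[0] - 1.0) + 0.125 * (lo[1] - 1.0)
    upper = (hi[0] - 1.0) + 0.125 * (hi[1] - 1.0)
    return lower, upper


def can_exclude_toy(box):
    lo, hi = box
    lower, upper = h_bounds(lo, hi)
    return upper < 0 or lower > 0


def check_toy(box):
    """Sign conditions on the pair of facets orthogonal to x_1."""
    lo, hi = box
    _, u_lower_facet = h_bounds([lo[0], lo[1]], [lo[0], hi[1]])
    l_upper_facet, _ = h_bounds([hi[0], lo[1]], [hi[0], hi[1]])
    return u_lower_facet <= 0 and l_upper_facet >= 0


def extend_toy(box):
    """Placeholder extension: add half of each edge length on both sides."""
    lo, hi = box
    half = [0.5 * (h - l) for l, h in zip(lo, hi)]
    return ([l - r for l, r in zip(lo, half)],
            [h + r for h, r in zip(hi, half)])


for box in [([0.5, 0.5], [1.5, 1.5]),   # zero in the interior
            ([1.0, 0.5], [1.5, 1.5]),   # feasible point (1, 1) on a facet
            ([2.0, 0.5], [3.0, 1.5])]:  # no zero in the box
    stage, _ = cascaded_verification(box, can_exclude_toy, check_toy,
                                     extend_toy)
    print(stage)  # original, extended, excluded
```

Returning the stage together with the box makes it easy to record how often an extension or a transformation was actually required.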

As shown in Corollary 4.12, Algorithm 2 guarantees that the conditions (3) of Theorem 3.2 are satisfied for sufficiently small boxes containing feasible points. After applying the coordinate transformation it is clear which function \(h_j\), \(j \in J\), is allocated to which box facet with respect to the sign conditions because their gradients have unit direction. However, a reasonable allocation beforehand might lead to a tighter overestimation of \({\widetilde{X}}\) in the new coordinates and increase the probability that no transformation is required at all, as the conditions (3) might be satisfied for the boxes X or \({\widetilde{X}}\) already. Therefore, we test two different strategies of allocating m of the n facets of a box to the m functions \(h_j\), \(j \in J\). Allocation strategy I simply allocates the j-th function to the j-th facets of X. Allocation strategy II determines the allocation iteratively based on the angles between the m gradients \(\nabla h_j\), \(j \in J\), and the n unit vectors.
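One possible reading of an angle-based allocation is the greedy rule sketched below. This is an assumption made for illustration and not necessarily the exact rule used in the implementation; the function name `allocate_facets_by_angle` is hypothetical. In each step, the pair of an unassigned function and an unused coordinate direction with the largest \(|\cos |\) of the enclosed angle is fixed.

```python
import math


def allocate_facets_by_angle(gradients):
    """Greedily pair each gradient with the best-aligned unit vector.

    gradients is a list of m nonzero vectors of length n with m <= n.
    Returns a dict mapping function index j to coordinate index i.
    """
    scores = []
    for j, g in enumerate(gradients):
        norm = math.sqrt(sum(c * c for c in g))
        for i, c in enumerate(g):
            # |cos| of the angle between gradient j and unit vector e_i
            scores.append((abs(c) / norm, j, i))
    # Best alignment first; ties are broken by the smaller indices.
    scores.sort(key=lambda t: (-t[0], t[1], t[2]))
    allocation = {}
    used_directions = set()
    for _, j, i in scores:
        if j not in allocation and i not in used_directions:
            allocation[j] = i
            used_directions.add(i)
    return allocation


# Toy gradients of two constraints in three variables.
toy_gradients = [[0.1, 1.0, 0.2], [1.0, 0.9, 0.0]]
print(allocate_facets_by_angle(toy_gradients))  # {0: 1, 1: 0}
```

For these toy gradients, allocation strategy I would pair the first function with the first direction, although its gradient points almost along the second unit vector.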

The method is implemented in MATLAB R2018b and executed on an Intel Core i5-6300U (2.4 GHz) processor with 12 GB RAM. In addition to standard MATLAB commands, the INTLAB toolbox V7.1 for reliable computing is used to realize the calculation of bounds, the determination of the box \({\widetilde{Y}}^*\) and interval computations in general [36].

5.2 Results for test problems

Our implementation is tested for a selection of problems from the COCONUT benchmark [40]. All selected problems are also examined by Domes and Neumaier in [9]. In the case that a test problem did not include any box constraints, these were added to the problem to make it applicable to our purposes. We chose \(B=[-1000,1000]^n\) as the initial box. The problems aljazzaf and bt13 were slightly modified for the feasible points to strictly satisfy the box constraints. The examined problems and the main results are summarized in Table 1.

For all but two problems the algorithm successfully verifies the existence of feasible points. For 26 of 35 problems the algorithm terminates successfully for both allocation strategies, as indicated by \(yes \) in Table 1. For the specific problem parabola the following example illustrates the application of our method in detail.

Example 5.1

The problem consists of the following constraints

The only feasible point of this problem is \({\widehat{x}} = (1,1)\).