Abstract

Linked social-ecological systems in which surprise and crisis are interspersed with periods of stability and predictability are inherently difficult to manage. This condition, coupled with the legacies of past management actions, typically leaves policy and decision makers few options other than to incrementally adapt and reinforce the current trajectory of the system. Decision making becomes increasingly reactive and incremental as the system moves from one crisis to another. Eventually the system loses its capacity to cope with perturbations and surprise. Using a combination of dynamical-systems modeling and historical analysis, we studied a process of this kind as it developed in the Goulburn Broken Catchment in southeastern Australia over the past 150 years. Using the model to simulate trajectories of the biohydrological system, we correlate the state of the physical system to historical events and management action. We show how sequential management decisions have eroded the resilience of the system (its ability to cope with shocks and surprises) and reduced options for future change. Using the model in a forward-looking mode, we explore future management options and their implications for the resilience of the system.


INTRODUCTION

As complex nonlinear systems, the behavior of linked social-ecological systems (SES) can be unpredictable, and the effects of policy interventions can be highly uncertain. Surprise and crisis are regular occurrences. This uncertainty, coupled with legacies of past management actions, often leaves decision makers few options other than to reinforce the current trajectory of the system. Decision making becomes reactive and incremental as the system moves from one crisis to another (Gunderson 2001). Management options become limited and typically involve significant trade-offs. Ultimately the system becomes vulnerable to external shocks. This cycle of declining biophysical productivity, leading to actions and institutional change directed at mitigating this decline, leading to yet further decline, is all too common (Perrings 1989). Can this cycle be avoided?

We combine biophysical modeling and historical analysis to explore this question. This work is akin to historical institutional analysis, which seeks to understand both why societies evolve along distinct trajectories (Greif 1998) and why inferior institutions persist (North 1990). This approach relies on the careful analysis of historical cases to understand the dynamic interplay among economic drivers, technology, and institutional change. Ecological factors, however, are typically not considered. What is novel in our work is the emphasis on the role played by ecological and hydrological processes in structuring this dynamic process. We present a study of an agricultural region in southeastern Australia that demonstrates the effects of crisis-driven decision making. We show how institutional responses to ecological and hydrological change have constrained the current system and increased its vulnerability to shocks. We conclude with a discussion of strategies for future management that might increase the resilience of the SES under study.

BACKGROUND

The Case Study: Balancing Salt, Water, and Agriculture

The Goulburn Broken Catchment (GBC) in Victoria, Australia, covers approximately 24,000 km² (200 km × 120 km) (Figure 1). Precipitation ranges from 1200 mm/y in the southeast of the catchment to 400 mm/y in the northwest. The regional population exceeds 190,000, of which 65% reside in the Shepparton Irrigation Region (SIR). The regional economy, which accounts for 25% of Victoria’s export earnings, is based primarily on irrigated dairy, horticulture, and food processing. The GBC is made up of three broad geographic regions (Figure 1):

1. The Shepparton Irrigation Region (SIR): 500,000 hectares on riverine plains in the lower catchment. Approximately 60% of this land area is irrigated. Native vegetation types have been reduced to less than 2% of their extent prior to European settlement. Roughly 88% of irrigated land is pasture for dairy production; horticulture, vegetables, and other crops make up the remainder. Annual commodity output is worth approximately $1 billion, with value-adding industries generating an additional $3 billion.

2. Mid catchment: riverine plains and low foothills between the forested highlands and the SIR. Less than 15% of the native vegetation cover, which provides important habitat for threatened flora and fauna species, now remains. Land use is dominated by broad-acre cropping and grazing, with minor areas of farm forestry, horticulture, and other crops. Given the area’s proximity to Melbourne, land use is shifting toward “lifestyle” farming.

3. Upper catchment: a large area composed predominantly of public land above the catchment’s major water storage, Eildon Reservoir. Total forest cover has remained relatively unchanged since European settlement. The main land uses are forestry, conservation, and recreation.

In the academic resource management literature, government communications, news media, and popular imagination in Australia, the term “salinity” has come to describe the combined processes of soil salinization, increased salt loads in surface drainages, damage to infrastructure, and associated social issues generated by rising saline water tables that threaten many regions. Prior to European settlement, the hydrological balance was maintained by deep-rooted woody vegetation. Replacement of 70% of this vegetation with shallow-rooted crops and pastures, along with intensive irrigation, has caused water tables to rise. Rising water tables mobilize salt stored in the soil. This salt then makes its way into the root zone, rivers, and streams. Salinity is a major threat to the long-term viability of the GBC. Annual economic losses are estimated to reach $100 million by 2020 if the problem is not addressed; 50% of remaining woodland habitat will be threatened; and 40% of wetlands will be impacted. A total of 56 threatened plant and animal species live in areas with high water tables (GBRSAC 1989).

Analytical Perspective

The study of simple, coupled ecological and economic models using the tools of dynamic optimization has played a central role in the effort to better understand resource management problems. Early models of fisheries (Gordon 1954; Clark 1990) have been extended in many directions to incorporate complex ecological dynamics, uncertainty, and investment decisions (for example, Clark and others 1979; Ludwig 1980; Ludwig and Walters 1982; Clark 1990; Beltratti 1997; Carpenter and others 1999). The key message from this literature is the importance of discount rates and resource renewal rates (Clark 1973). High discount rates typically lead to overexploitation, whereas low discount rates can induce conservation. Most relevant to our study is the work of Carpenter and others (1999) on lakes.

What is novel and important in this work is the incorporation of ecological dynamics that include multiple regimes and thresholds. By coupling simple models of ecological and economic processes, the authors explore the policy implications of multiple regimes. The main insight is that more cautious policies are required to avoid welfare losses when there is the possibility of moving from one regime to another. The level of caution increases when managers are uncertain about the location of thresholds or there are time lags in policy implementation.

The GBC has much in common with lakes. In the GBC, there is a conflict between benefits from activities that generate “loading” (as in phosphorus loading in a lake) and the disbenefits generated by the effects of that loading on an ecosystem. In the GBC, loading is irrigation water rather than phosphorus, and the stock is salty groundwater rather than phosphorus in the water column and mud. Ecology links the stocks and flows in lakes, whereas hydrological dynamics create the link in the GBC. Like lakes, the GBC has multiple regimes: groundwater-at-depth (desirable) and groundwater-at-surface (undesirable), separated by a threshold.

An optimization problem very similar to that of Carpenter and others (1999) can be set up for the GBC, yielding very similar insights. Namely, lower discount rates imply lower levels of clearing and irrigation because the future costs of water tables reaching the surface (the analogue of a eutrophic lake) and the associated salinity problems are weighted more heavily. As with lakes, there is considerable uncertainty about the response of the system to revegetation and reduction in irrigation. This uncertainty would lead to lower optimal levels of clearing and irrigation.
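To make the role of the discount rate concrete, the short Python sketch below computes the present-value weight that a planner using standard annual discounting would place on a salinity cost incurred some years in the future. The 60-year horizon and the two discount rates are illustrative assumptions, not values taken from the GBC analysis or from Carpenter and others (1999).

```python
def present_value_weight(rate: float, years: float) -> float:
    """Weight on a cost incurred `years` from now under annual discounting at `rate`."""
    return (1.0 + rate) ** -years


# Illustrative assumptions: water tables reach the surface 60 years from now,
# and we compare a low (2%) with a high (10%) annual discount rate.
HORIZON_YEARS = 60
for rate in (0.02, 0.10):
    w = present_value_weight(rate, HORIZON_YEARS)
    print(f"discount rate {rate:.0%}: $1 of future salinity damage is worth ${w:.4f} today")
```

At 2% the future damage retains roughly 30% of its value today; at 10% it retains well under 1%, so a manager with a high discount rate has little reason to forgo clearing or irrigation now.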

The explanation for the historically high levels of clearing and irrigation in the GBC derived from such a simple bioeconomic model would therefore be a combination of three things: (a) land managers’ discount rates were too high, (b) managers were naive about their lack of understanding of the system, and (c) the usual collective action problem obtained (that is, management occurred at the farm rather than catchment scale, and there were no incentives for cooperation to achieve catchment-scale management objectives). This explanation, however, is quite general. The thorough analysis of Carpenter and others (1999) applies with equal force to any system with conflicting services and complex ecological dynamics. This analysis allows one to determine why, in very general terms, an actual case was not optimal. The shortcoming of this analysis is that important details of system organization that structure economic interactions cannot be carefully considered.

The approach taken here appreciates that details do matter and path dependency is important (North 1990). We believe this adds considerable value by capturing institutional and social constraints to management action typically not considered in the approach outlined above. We combine a biophysical model not with an economic model, but rather with historical analysis. We are interested in how actual management actions affected the biophysical conditions, which then fed back into subsequent institutional arrangements and management actions. An understanding of this process provides important insights for resource management practice and complements the general insights derived from simple coupled economic and ecological models.

To structure our inquiry, we rely on several concepts from resilience theory; namely, ecological systems exhibit threshold behavior (see the many examples cataloged by Walker and Meyers 2004), which is generally controlled by slow variables (Ludwig and others 1978, 1997; Walker and others 1981; Anderies and others 2002; Carpenter and Brock 2004). Resilience theory also emphasizes that systems can move between phases of relatively stable, slowly increasing accumulation and connectedness, and chaotic, rapid phases of breakdown and reorganization (Holling and Gunderson 2002). We do not claim that these concepts explain the historical evolution of the system. Rather, we use them as heuristics to help relate the analysis of the model to historical events and to generalize our findings.

ANALYSIS: BIOHYDROLOGICAL DYNAMICS AND INSTITUTIONAL CHANGE

The biophysical model of the GBC developed by Anderies (2005) captures three key variables in each of the lower and mid catchments: depth to water table, salt concentration in the soil, and salt concentration in the groundwater. Because the upper catchment has remained unchanged through the period we analyze, we do not include it in the model. The model also accounts for surface flows and for the effect of vegetation cover on partitioning water among surface runoff, evaporation, transpiration, and recharge. A summary is given in the online appendix (http://www.Springerlink.com); for full details, see Anderies (2005).

Of central interest is the dynamic feedback among land use, resilience, management action, and institutional change. We contend that the interplay among these factors has caused the system to “lock in” to an unsustainable trajectory and has limited future management options. To explore this issue, we computed the resilience of the desirable system configuration (that allows for water-table-at-depth equilibria) as a function of vegetation clearing. Next, we computed the time at which the resilience of this configuration to exceptionally wet events fell to zero (basin size shrinks to zero). We then used the model to assess the impact of the exceptionally wet event in the 1970s on the system and explored how the crisis induced by this event could have been avoided. Finally, we assessed the impact of this event on the evolution of institutions in the region and the effect of the induced institutional structure on possible future trajectories from the mid 1970s onward.

Rare Events and Crisis

In the 1970s, exceptionally heavy rain generated a crisis in the GBC when water tables rose to the surface in many places. We refer to this event as a “crisis” for two reasons: the scale of the economic losses suffered by those whose crops were damaged, and the fact that, although some people were well aware of the rising water tables and their consequences, most farmers and government agencies were not, so water tables reaching the surface came as a real surprise. It was estimated that, had nothing been done, high water tables could have destroyed the economic base of the region. Under the conditions that prevailed before European settlement, however, such a wet period would not have generated a crisis. To explore the relationship between land-use change and vulnerability, we reconstruct the historical depth of the water table in the lower catchment using actual rainfall data. We compare three scenarios: (a) actual rainfall, (b) average rainfall, and (c) actual rainfall with key rare events removed, to illustrate the effect of rare events and their relation to loss of resilience.
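The three rainfall scenarios can be constructed from a single recorded series, as in the minimal Python sketch below. The recorded series itself is not reproduced here, so the usage example uses made-up values; the 480 mm/y mean is the average quoted for the simulations in Figure 3, and the wet-period windows are assumptions read loosely from the text (late 1940s to late 1950s, and 1968 to 1975).

```python
# Sketch of the three rainfall scenarios, using annual totals in mm/y keyed by year.
MEAN_RAINFALL = 480.0  # mm/y, the long-run average used for the average-rainfall run
WET_WINDOWS = [(1948, 1958), (1968, 1975)]  # hypothetical start/end years, inclusive


def actual_scenario(rainfall: dict[int, float]) -> dict[int, float]:
    """Scenario (a): the recorded series, unchanged (returned as a copy)."""
    return dict(rainfall)


def average_scenario(rainfall: dict[int, float]) -> dict[int, float]:
    """Scenario (b): every year receives the long-run mean."""
    return {year: MEAN_RAINFALL for year in rainfall}


def rare_events_removed(rainfall: dict[int, float], windows=WET_WINDOWS) -> dict[int, float]:
    """Scenario (c): the recorded series with each wet window replaced by the mean."""
    return {
        year: MEAN_RAINFALL if any(start <= year <= end for start, end in windows) else r
        for year, r in rainfall.items()
    }


recorded = {1950: 700.0, 1965: 430.0, 1973: 810.0}  # made-up values for illustration
print(rare_events_removed(recorded))  # {1950: 480.0, 1965: 430.0, 1973: 480.0}
```

Keeping scenario (c) identical to the record outside the chosen windows is what allows the effect of the rare events to be isolated by comparing trajectories.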

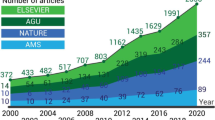

Data on clearing levels and irrigation efficiency over time are scarce. We make rough estimates by combining historical records (Garran 1886) and recent survey data (GBCMA 2000) for the lower catchment and assume that clearing in the mid catchment proceeded somewhat more slowly because the area is less suitable for intensive agriculture. We assume that clearing occurs at a constant annual percentage rate (which generates an exponential decline in vegetation cover) chosen to roughly match the few available historical data points (Figure 2). Similarly, we assume that irrigation efficiency (the proportion of irrigation water applied that is actually used by crops) increased at a constant annual rate until stabilizing at the present level of 90%. Using this scenario, the historical trajectory of the system from 1860 to 2003 was reconstructed. Although there are six state variables, we focus on the depth to the water table in the lower catchment, where the crisis was most severely felt (Figure 3).
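The two land-use drivers described above can be sketched as simple functions of time. All rates and starting values below are hypothetical placeholders, not the values fitted to the historical data points in the paper. Clearing a constant fraction of the remaining vegetation each year gives exponential decline; "increased at a constant annual rate" is read here, as an assumption, as a constant absolute increment in efficiency until the 90% cap is reached.

```python
# Illustrative reconstruction of the two land-use drivers (all values assumed).
START_YEAR = 1860        # clearing assumed to begin when the simulation starts
CLEARING_RATE = 0.02     # assumed fraction of *remaining* native vegetation cleared per year
IRRIGATION_START = 1880  # assumed start of the efficiency trend
EFF_START = 0.50         # assumed initial irrigation efficiency
EFF_STEP = 0.005         # assumed absolute gain in efficiency per year
EFF_CAP = 0.90           # present-day efficiency reported in the text


def native_cover(year: int) -> float:
    """Percent of native vegetation remaining.

    Clearing a constant fraction r of what remains each year gives
    cover(t) = 100 * (1 - r)**t, i.e. an exponential decline.
    """
    t = max(0, year - START_YEAR)
    return 100.0 * (1.0 - CLEARING_RATE) ** t


def irrigation_efficiency(year: int) -> float:
    """Fraction of applied water used by crops: linear rise, then capped at 90%."""
    t = max(0, year - IRRIGATION_START)
    return min(EFF_CAP, EFF_START + EFF_STEP * t)


for year in (1860, 1900, 1950, 2000):
    print(year, round(native_cover(year), 1), round(irrigation_efficiency(year), 3))
```

Subtracting `native_cover` from 100 gives the percentage cleared, which is the quantity that drives recharge in the hydrological model.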

Figure 2. The triangles show data points for the percentage of native vegetation cover. The downward-sloping dotted and dashed curves show simulated native vegetation levels based on clearing at a constant, proportional annual rate (which generates an exponential decline in the vegetation cover). The total cleared area in the lower catchment is the sum of irrigated (roughly 70%) and dryland agriculture and grazing areas (roughly 30%). Cleared area in the mid catchment is due entirely to dryland agriculture and grazing. The upward-sloping dash-dot line shows the simulated evolution of irrigation efficiency (percentage of irrigation water actually used by crops).

Figure 3. a Historical evolution of water table depth in the lower catchment. b Rainfall patterns used in the simulations. The water table depth trajectories shown in (a) were computed using the following rainfall patterns: solid, the top graph in (b); dashed, constant average rainfall of 480 mm/y from 1860 to 2000; dash-dot, the bottom graph in (b), in which actual rainfall between the vertical gray lines (both exceptionally wet periods) has been replaced with mean rainfall.

Comparison of the trajectories that result from the precipitation patterns in Figure 3b enables the effects of exceptionally wet periods (Figure 3a inset) to be isolated. Several points emerge:

- The interaction between fast and slow variables becomes apparent when average and highly fluctuating conditions are compared. Before 1920 the difference between the two trajectories is negligible: the groundwater system (slow variable) smooths out the highly fluctuating rainfall (fast variable).

- Only sustained deviations from average conditions affect groundwater dynamics. During the exceptionally dry period from the mid 1920s to the mid 1940s, the water table rose less than under average conditions (the solid curve falls below the dashed curve). During the extremely wet period from the late 1940s to the late 1950s, the water table rose much faster than average (inset in Figure 3a; the solid curve rapidly crosses back above the dashed curve). Finally, the wet period from 1968 to 1975 caused another sharp rise in the water table that precipitated the water table crisis in the mid 1970s.

- Had these sustained deviations in precipitation occurred when the water table was deeper, they too would probably have been integrated out eventually. As the water table neared the surface, such fluctuations became more important.

- Regardless of rainfall patterns, the level of clearing in the catchment was unsustainable. Comparing the year in which the water table would have reached the surface without the wet periods (1984) with the year in which it actually did (1973) shows that the crisis was brought forward by 11 years. Although this difference is insignificant on hydrological time scales, it is significant on human time scales.
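The filtering role of the groundwater store noted in the first two points can be illustrated with a deliberately minimal stand-in: a first-order linear reservoir (a leaky integrator) whose state relaxes toward the rainfall anomaly with a long time constant. The 30-year time constant and both rainfall series are assumptions chosen for illustration; the model actually used in the paper is nonlinear and has six state variables.

```python
TAU_YEARS = 30.0  # assumed groundwater response time; slow relative to rainfall


def groundwater_anomaly(rain_anomaly, tau=TAU_YEARS):
    """Integrate dg/dt = (a(t) - g) / tau with an explicit Euler step of 1 year."""
    g, trajectory = 0.0, []
    for a in rain_anomaly:
        g += (a - g) / tau
        trajectory.append(g)
    return trajectory


# Large but short-lived swings: +/-200 mm alternating every year for 40 years.
flicker = [200.0 if year % 2 == 0 else -200.0 for year in range(40)]
# A smaller but sustained anomaly: +100 mm for a decade, then average years.
wet_decade = [100.0] * 10 + [0.0] * 30

print(max(abs(g) for g in groundwater_anomaly(flicker)))
print(max(groundwater_anomaly(wet_decade)))
```

Despite having half the amplitude, the sustained wet decade drives the store roughly four times further than the year-to-year swings (about 29 versus under 7 anomaly units), which mirrors the point that only sustained deviations register in the slow variable.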

We turn now to the historical record to explore the conditions leading up to this crisis and the impact it had on the subsequent organization of the system.

Self-organization and Adaptability: Response to Crisis

Setting the Stage

The “surprise” of the water table crisis was, in fact, the inevitable culmination of a sequence of events. Slow hydrological dynamics meant that the feedback between clearing and water tables became apparent only when water tables rose above a critical threshold and began to affect production. The severity of this water table crisis was the impetus for a period of reorganization in the GBC that coincided with a range of social and institutional reforms at the state and federal scale and transformed the management and utilization of natural resources in Australia (Langford and others 1999).

Common sense suggests that responses to such a crisis would involve enhancing the capacity of the system to cope with such shocks. We contend, however, that the responses reduced intervention options and further increased, rather than decreased, the system’s vulnerability to shocks. Understanding such a response requires knowledge of the critical processes and events that led up to the crisis. These events comprised two phases:

- Phase I: Early attempts to develop local irrigation infrastructure highlighted how vulnerable the system was to environmental variation, giving rise to a perceived need for centralized control. Irrigated agriculture began in the 1870s with local water trusts along major rivers. The trusts soon failed due to poor management, insufficient infrastructure, and a run of severe droughts (Langford and others 1999). The state government responded with legislation that vested ownership of all stream and river flows in the state government, established a centralized body to oversee water resources, tied water allocations to land, and required farmers to pay a set annual fee for water delivery (Barr and Carey 1992; Langford and others 1999). The state thus established its central role in the development of irrigation in the region.

- Phase II: A nation-building ideology, coupled with particular environmental and social conditions, motivated the development of massive irrigation infrastructure that would never generate net economic benefits once its true cost was accounted for (Quiggin 1988). The state intervention in Phase I enabled a period of stable growth in irrigated agriculture and food processing from 1900 to 1950. The exceptional dryness of this period prompted significant lobbying by irrigators for additional infrastructure, which resulted in a 700% increase in storage capacity in the GBC. Concurrently, the disastrous effects of droughts across the nation and the need to attract skilled labor to Australia gave rise to the Snowy Mountains Scheme. Begun in 1949 and completed in 1974 with funding from the Commonwealth government, this immense project diverts water from the Snowy River to the Murray Darling Basin, in which the GBC is located.

The Crisis

The stage was now set for the crisis and the subsequent response. Unlike 1900–50, the period from 1950 to 1975 was exceptionally wet. The heavy national investment in irrigation infrastructure during Phase II encouraged low-value, high-water-use activities (dairy) and, because water was priced below its true cost, left little incentive for efficient water use (Langford and others 1999). Meanwhile, little attention was paid to the underground, largely invisible, problems to come.

Historical water table depths were estimated at between 20 and 50 m. Although high water tables were observed in the 1930s and 1940s, they were highly localized and were attributed to soil types and drainage problems rather than rising regional water tables (GBRSAC 1989). High rainfall and inefficient irrigation practices caused rapid water table rise in the mid 1950s, removing the “depth-to-water-table” buffer across large areas of the irrigation region.

Extreme rainfall during 1973–74 caused the water table to rise rapidly into the critical zone (within 2 m of the surface) across more than a third of the region, reducing dairy production and severely affecting high-value horticultural crops. Subsequent investigations revealed that more than half of the irrigation region (274,000 ha) was at risk from high water tables (GBRSAC 1989). Not only had water tables risen; they had also mobilized salt deposits, making much of the groundwater unsuitable for irrigation or domestic use. The problem was further exacerbated by the irrigation infrastructure itself, which both transports 100,000 tons/y of salt from the mid catchment to the lower catchment in irrigation water and leaks directly into the groundwater system (Bethune and others 2004; Sampson 1996; Surapaneni and Olsson 2002; GBRSAC 1989).

The Response

Wet phases in the 1950s, 1960s, and early 1970s brought the water table rise forward by more than a decade, turning a gradual, insidious threat into a crisis (Figure 3a). Dairy and horticultural production and processing underpinned approximately half the regional economy, and the realization that these activities were under threat had an immediate impact on local communities. One consequence of the Phase II processes was to elevate irrigation infrastructure to the national level and to link hydrological processes at very large scales, so a “whole of catchment” response was required. Community leaders prepared an integrated catchment management plan based on community decision making, with costs apportioned according to private and public benefits.

The state government responded by introducing new institutions and devolving responsibility for management to regional communities. Concurrent reforms in the state water management agencies and the appointment of an interstate commission to manage the Murray Darling Basin created the larger-scale institutional framework in which the GBC now operates (Langford and others 1999). The formation of the Murray Darling Basin Commission (MDBC) established a mechanism for community groups to access federal resources for large-scale infrastructure development (see http://www.mdbc.gov.au/). The region now operates within a three-way partnership among the community, local government agencies, and state and national agencies (Barr and Carey 1992; Christen and van Meerveld 2000; Langford and others 1999).

These reforms, essentially at the national scale (see http://www.ndsp.gov.au/ and http://www.napswq.gov.au/), have had several effects. Although irrigation practices have improved markedly, there is a limit to water-use efficiency because some leaching of irrigation water (approximately 10%) through the root zone is required to prevent salt buildup. In areas where local soil types enable groundwater pumping, water tables have been lowered considerably. However, disposal of saline water then becomes a problem (Christen and van Meerveld 2000; Langford and others 1999). Some of it can be used to irrigate pastures, but this practice ultimately concentrates salt in the groundwater. When groundwater is too saline for irrigation, it can be disposed of in the Goulburn River, a tributary of the Murray. However, the current salt quota specified by state and federal agencies is inadequate under wetter conditions, forcing the use of evaporation basins. Although costly and spatially limited, the restoration of perennial vegetation can be a viable option to lower water tables.

Due to drier-than-average rainfall patterns since the late 1970s, water tables began to fall in the mid 1990s (Power and others 1999). Nonetheless, water tables across the region are still sufficiently high that relatively short periods of exceptional rainfall could cause them to cross the critical 2-m threshold (Figure 3a and b). Although improved water-use efficiency and engineering solutions will continue to provide benefits, they cannot reestablish a significant buffer to guard against wet phases (Power and others 1999). The only viable long-term options are groundwater pumping and significant revegetation.

This historical narrative highlights a very important point: The community-developed management plan did not emphasize revegetation but instead generated more complex, large-scale institutional structures to manage an increasingly sensitive system through enhanced efficiency and engineering solutions. The catchment community responded to the crisis by asking “What must be done to keep irrigated dairy running?” rather than “Is irrigated dairy a reasonable use of natural resources in the GBC, given inevitable biohydrological changes that will reduce the ability of the system to cope with minor change? And if not, what changes would move the region toward a more suitable economic basis while simultaneously managing the economic disruption during the transition?”

Land-use Patterns, Groundwater Pumping, and Resilience

Several specific questions emerge from this historical analysis. When did the GBC SES lose the capacity to cope with wet periods? What implications did this have for the evolution of institutions? What mechanisms might have enabled the GBC SES to cope with shocks, and what are their costs? The simple dynamic model developed by Anderies (2005) enables us to explore these questions. Using this model, the size of a perturbation that the system can tolerate (that is, its resilience) can be determined by computing the size of the desirable basin of attraction as a function of key land-use parameters. The hydrological model has two stable equilibria: (a) water table below ground level and (b) water table at the surface. Each equilibrium is characterized by a different set of dynamic processes. The first is dominated by precipitation, balanced by healthy evapotranspiration and leakage from the groundwater system to surface drainages. The second is dominated by a positive feedback loop: rising water tables increase salt concentration near the surface through evapotranspiration, which reduces transpiration capacity and in turn accelerates the rise of the water table (see the online appendix at http://www.Springerlink.com, or Anderies [2005]).
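The idea of resilience as the largest perturbation the system can absorb can be illustrated with a generic bistable stand-in for the two regimes; this is a hypothetical toy, not the Anderies (2005) model. Take dx/dt = c + x - x^3, where x is a dimensionless water-table height (negative means at depth, positive means near the surface) and c is an assumed loading term. For c = 0 the stable equilibria sit at x = -1 (water table at depth) and x = +1 (water table at the surface), separated by an unstable equilibrium at x = 0. The sketch pushes the system away from the deep equilibrium by a wet pulse of a given size and integrates forward to see which regime it settles into.

```python
def rate(x: float, c: float) -> float:
    """Hypothetical bistable dynamics: dx/dt = c + x - x**3."""
    return c + x - x**3


def settle(x0: float, c: float = 0.0, dt: float = 0.01, t_end: float = 50.0) -> float:
    """Integrate with explicit Euler steps from x0 and return the final state."""
    x = x0
    for _ in range(int(t_end / dt)):
        x += dt * rate(x, c)
    return x


DEEP = -1.0  # desirable equilibrium when c = 0 (the threshold sits at x = 0)
for pulse in (0.5, 0.9, 1.1, 1.5):
    final = settle(DEEP + pulse)
    regime = "returns to depth" if final < 0 else "flips to surface"
    print(f"wet pulse of size {pulse}: {regime} (x = {final:.3f})")
```

In this toy the tolerable pulse is exactly the distance from the deep equilibrium to the unstable one (here 1), which is the basin-size measure of resilience used in the text.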

A natural threshold emerges between the system states in which one of the two sets of processes dominates the dynamics. Mathematically, this threshold takes the form of an unstable equilibrium (Figure 4). Figure 4a shows the stable (heavy lines) and unstable (light line) equilibria as a function of the percent of native vegetation cleared in the mid catchment (lower catchment native vegetation cover remains at 100%). Figure 4b is the analogue for clearing in the lower catchment. Increased clearing decreases the distance between the desirable equilibrium and the unstable equilibrium. This distance is a measure of the resilience of the system. Beyond a certain point, the desirable equilibrium vanishes, leaving only the undesirable one.
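The shrinking distance between the desirable and unstable equilibria can be computed directly for the same kind of hypothetical toy, dx/dt = c + x - x^3, with the loading c assumed (purely for illustration) to rise linearly with the fraction of land cleared. The three equilibria come from the trigonometric solution of the cubic, and the desirable equilibrium disappears in a fold (saddle-node) when c reaches 2/(3√3). None of the coefficients are from the Anderies (2005) model.

```python
import math

# Hypothetical toy, NOT the Anderies (2005) model: dx/dt = c + x - x**3, where
# x < 0 means the water table is at depth and c is an assumed loading term.
C_FOLD = 2 / (3 * math.sqrt(3))  # loading at which the deep equilibrium vanishes (~0.385)


def loading(cleared: float) -> float:
    """Assumed linear map from fraction cleared (0..1) to loading c."""
    return -0.3 + 3.0 * cleared


def equilibria(c: float) -> list[float]:
    """The three real roots of c + x - x**3 = 0 (valid only for |c| < C_FOLD)."""
    theta = math.acos(c / C_FOLD) / 3
    radius = 2 / math.sqrt(3)
    return sorted(radius * math.cos(theta - 2 * math.pi * k / 3) for k in range(3))


def resilience(c: float) -> float:
    """Distance from the deep (stable) equilibrium to the unstable threshold."""
    if c >= C_FOLD:
        return 0.0       # fold passed: only the surface equilibrium remains
    if c <= -C_FOLD:
        return math.inf  # no threshold: only the deep equilibrium exists
    deep, threshold, _surface = equilibria(c)
    return threshold - deep


for pct in (0, 5, 10, 15, 20, 25):
    print(f"{pct:2d}% cleared -> resilience {resilience(loading(pct / 100)):.3f}")
```

The middle root is the unstable equilibrium because the slope 1 - 3x^2 is positive there, while the outer two roots have negative slope and are stable; resilience falls monotonically with clearing and reaches zero at the fold.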

Figure 4. a Equilibrium depth to water tables in both the mid (top graph) and lower (bottom graph) catchments as a function of the proportion of cleared land in the mid catchment when the lower catchment is left uncleared. b Analogue of (a) as a function of the proportion of cleared land in the lower catchment when the mid catchment is left uncleared. The heavy curves are stable equilibria; the light curves are unstable equilibria. The arrows indicate the movement of the water tables if perturbed away from equilibrium. At point A (about 9% cleared), perturbations will return to equilibrium. At point B (about 17% cleared), small perturbations still return to equilibrium, but larger perturbations can cause the system to move to the equilibrium with water tables at the surface. At C, the system inevitably tends toward this undesirable state. Points D, E, and F are analogues for clearing in the lower catchment.

Because they are hydrologically connected, the level of clearing for which the desirable equilibrium vanishes in the lower catchment depends on the level of clearing in the mid catchment and vice versa. To determine the land-use patterns for which the desirable equilibrium exists, the joint levels of clearing beyond which the desirable equilibrium vanishes must be computed (Figure 5).

Figure 5. a Possible equilibria as a function of clearing in the lower and mid catchments. The cross-hatched region marked “A” represents all levels of clearing for which the equilibrium will be stable and non-zero (equilibrium water table will be below the surface). In the region marked “B”, the equilibrium water table will be at the surface. The superimposed curve represents a simulated historical clearing trajectory. The historical trajectory leaves Region A at the white circle labeled on the diagram. b Effect of pumping and revegetation on the system. Pumping expands the size of Region A; revegetation moves the system back toward the origin.

For combinations of clearing in Region A in Figure 5, there is an equilibrium with the water table at depth in both the mid catchment and the lower catchment. For combinations in Region B, the equilibrium water table will be at the surface in the mid catchment, the lower catchment, or both. The further the system is below and to the left of the boundary between these regions, the higher its capacity to absorb extremely wet periods. At the boundary, the size of the desirable basin of attraction shrinks to zero, and the system has lost its capacity to absorb perturbations.

The superimposed curve shows a simulated historical clearing trajectory. Each point on the curve represents the level of clearing in the mid and lower catchments for a given time t. The curve, beginning at the origin (no clearing) in 1860 and ending at the black circle in 2000, is constructed by reading a series of values for native vegetation cover from Figure 2 as time increases. Subtracting each of these values from 100 yields the percentage of land that has been cleared. These values are then plotted in Figure 5a. The point at which the historical clearing trajectory leaves Region A is marked with a white circle. At this point, the mid and lower catchments are 18% and 22% cleared, respectively. Referring back to Figure 2, we see that the boundary is crossed roughly 12 years after clearing began (native vegetation reaches 82% in the mid catchment). Note that the system is more sensitive to clearing in the mid than the lower catchment (Region A is taller than it is wide).
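The construction of Region A and the search for the year in which a clearing trajectory leaves it can be sketched with a hypothetical two-catchment toy. Each catchment is given the bistable form dx/dt = c + x - x^3, whose deep equilibrium exists only while c stays below 2/(3√3). The loading coefficients, the assumption that mid-catchment clearing also loads the lower catchment, and the clearing rates are all illustrative choices, not values from the paper; the exit year the toy produces says nothing about the GBC itself.

```python
import math

# Hypothetical two-catchment toy (not the Anderies 2005 model).
C_FOLD = 2 / (3 * math.sqrt(3))


def in_region_a(mid: float, low: float) -> bool:
    """True if both deep equilibria exist for cleared fractions (mid, low)."""
    c_mid = -0.3 + 3.0 * mid              # assumed loading in the mid catchment
    c_low = -0.3 + 1.5 * low + 1.5 * mid  # assumed: mid clearing also loads the lower catchment
    return c_mid < C_FOLD and c_low < C_FOLD


def cleared(year: int, rate: float, start: int = 1860) -> float:
    """Fraction cleared: 1 minus exponentially declining native cover."""
    return 1.0 - (1.0 - rate) ** max(0, year - start)


def first_exit_year(rate_mid=0.015, rate_low=0.02, start=1860, end=2000):
    """First year in which the clearing trajectory leaves Region A (None if never)."""
    for year in range(start, end + 1):
        if not in_region_a(cleared(year, rate_mid, start), cleared(year, rate_low, start)):
            return year
    return None


print(first_exit_year())
```

With these assumed coefficients the toy tolerates roughly twice as much clearing in the lower catchment as in the mid catchment, qualitatively matching the greater sensitivity to mid-catchment clearing noted above.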

Care must be taken in interpreting these results. Because hydrological dynamics are slow, entering Region B does not necessarily imply that water tables will reach the surface. The system must be held in Region B sufficiently long (more than 100 years) to reach equilibrium, so there is a lag between the time when the system enters Region B and the time when it becomes locked into the undesirable basin. Before the system gets locked in, replanting can move the system back toward Region A, and groundwater pumping can increase the size of Region A (Figure 5b). Thus, the groundwater system provides part of the capacity to absorb perturbations (Region A in Figure 5a), while the human system (its potential to move the system along the trajectory in Figure 5b and to increase the size of Region A) provides the other. To address the question of when (and if) the SES lost its capacity to cope with wet periods, we must consider these factors.
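The lag described above can be illustrated with a deliberately crude stand-in for the groundwater model. In this sketch, water-table depth D relaxes toward an equilibrium that lies above the ground while the system is in Region B. The time constant (`tau` = 60 years) and the notional above-ground equilibrium (−2 m) are hypothetical values chosen only for illustration. With them, the water table needs about 60 ln 6 ≈ 107 years in Region B to reach the surface. A 30-year excursion is therefore harmless, while a 150-year one is not.

```python
def min_depth(years_in_b, total_years=200.0, d0=10.0, d_eq_a=10.0,
              d_eq_b=-2.0, tau=60.0, dt=0.1):
    """Euler-integrate the toy relaxation dD/dt = (D_eq - D) / tau.

    D is water-table depth (m). For the first `years_in_b` years the system sits in
    Region B, where the equilibrium head is above the ground (d_eq_b < 0); afterward
    it returns to Region A (d_eq_a). Returns the smallest depth reached; a value
    <= 0 means the water table reached the surface."""
    d = d0
    lowest = d
    for k in range(int(round(total_years / dt))):
        t = k * dt
        d_eq = d_eq_b if t < years_in_b else d_eq_a
        d += dt * (d_eq - d) / tau
        lowest = min(lowest, d)
        if d <= 0.0:
            break
    return lowest


short_excursion = min_depth(years_in_b=30.0)   # leaves Region B after 30 years
long_excursion = min_depth(years_in_b=150.0)   # stays in Region B for 150 years
print(short_excursion > 0.0, long_excursion <= 0.0)  # True True
```

In this toy, the danger depends on how long the system stays in Region B, not simply on whether it enters it. That is why replanting or pumping can still rescue the system after the boundary has been crossed.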

Finally, we must account for momentum in the groundwater system. Even if, for example, a pumping and revegetation program were initiated to expand Region A and move the system to the point marked with the star in Figure 5b, the water table might still reach the surface. This is because the points in Region A describe system behavior near equilibrium, and different conditions are required to restore equilibrium than to maintain it. In the next section, we combine these insights with historical data to explore the potential of different management options to prevent a water table crisis like that of the 1970s, and the implications of these options for future management.

RESILIENCE IMPLICATIONS OF CURRENT INSTITUTIONAL ARRANGEMENTS

The simple management options we explore are: pumping only; cessation of clearing, enabling natural regeneration (no revegetation); active revegetation; and a combination of pumping and revegetation. The key question is under what conditions each of these options can prevent the water table from reaching the surface.

Pumping affects the location of the boundary of Region A in Figure 5. The present pumping rate of 100 gl/y (gl = gigaliters) in the lower catchment expands Region A, as shown in Figure 6a. However, pumping in the lower catchment alone does not compensate for clearing in the mid catchment, even if it is increased well beyond present levels. The light gray region in Figure 6a shows that pumping twice the present amount (200 gl/y) in the lower catchment increases the level of tolerable clearing in the mid catchment by only 3%. To capture the present location of the system (black circle in Figure 5), Region A must expand appreciably in both directions. To accomplish this, pumping must be undertaken in both the mid and lower catchments (Figure 6b). In this case, pumping 200 gl/y in both the mid and lower catchments, combined with approximately 15% revegetation in the mid catchment, would move the system to a water-table-at-depth equilibrium.

There are, unfortunately, problems with pumping. First, because of soil type constraints, groundwater can be pumped in only 30%–40% of the catchment. Second, pumping saline groundwater generates significant salt loads. Under present institutional arrangements, the GBC is severely limited in the amount of salt it is permitted to export from the catchment. Other means to dispose of the salt would have to be devised.

Revegetation, on the other hand, reduces groundwater levels without generating additional salt, but it takes time, and there is a significant lag before benefits are realized. Further, there are initial costs in the form of decreased surface water yield. To explore revegetation as a policy option, we looked at two cases: cessation of clearing with either (a) natural regeneration only or (b) active revegetation. The key question is the water table depth at which action would have to be taken to prevent the water table from reaching the surface in the lower catchment. Figure 7 contrasts the actual clearing history with three scenarios for natural regeneration only. Each scenario relates to the water table depth, D*, at which action is taken (for details of the simple feedback management model, see the online appendix at http://www.Springerlink.com).

a Three stop-clearing scenarios overlaid on the actual clearing history (the estimated data points are represented by triangles). Each of the labels D* indicates the water table depth at which action is taken. The dates corresponding to cessation of clearing in these scenarios are 1920, 1914, and 1912 for D* = 9, D* = 10, and D* = 10.4, respectively. b Water table depth trajectories associated with each scenario shown in (a).

Figure 7a shows the percent area cleared in the lower catchment for each of the three scenarios overlaid on the actual clearing history. These scenarios show that to prevent water tables from reaching the surface, clearing would have to stop about 70 years after it began. Also, the system is very sensitive to the timing of management action. When D* = 9, clearing stops about 8 years later than when D* = 10.4. However, in the former case the water table reaches the surface in 2006 (Figure 7b), whereas in the latter case it never does. The point is that history matters; a small delay can make a huge difference. The “kink” in the clearing curves is caused by water tables nearing the surface, which increases natural tree mortality and causes the “cleared” area to increase. The D* = 10 case highlights an important aspect of the dynamics of complex systems such as this one. Note that although the percentage of cleared area returns to Region A (Figure 6) around the year 2030 (suggesting that the intervention has been successful), the water table nevertheless reaches the surface about 30 years later. Although the system was on its way back to the water-table-at-depth basin of attraction, it did not make it before it fell into the water-table-at-surface equilibrium. (Think of navigating a ridge between two valleys that moves beneath your feet.) This is an example of a hysteretic effect: to reenter the desirable basin of attraction, a larger area of native vegetation is required than was present when the system left it.
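Hysteresis of this kind is a generic property of systems with two alternative stable states. The sketch below uses a standard textbook toy model, dx/dt = c + x − x³, rather than the GBC biohydrological model. Here, x can be read loosely as water-table height and c as a slowly changing driver such as clearing. Sweeping c up and then back down shows that, for the same driver value, the state depends on the direction from which it was approached. The model and all parameter values are illustrative assumptions.

```python
def settle(x, c, dt=0.01, steps=5000):
    """Integrate the toy model dx/dt = c + x - x**3 until it is (nearly) at rest."""
    for _ in range(steps):
        x += dt * (c + x - x**3)
    return x


def sweep(c_values, x0):
    """Quasi-static sweep: change c slowly, letting x settle at each step and
    carrying the settled state forward to the next value of c."""
    states = []
    x = x0
    for c in c_values:
        x = settle(x, c)
        states.append(x)
    return states


c_values = [i / 10 for i in range(-10, 11)]            # driver c from -1.0 to 1.0
going_up = sweep(c_values, x0=-1.0)                    # driver increasing
coming_down = sweep(list(reversed(c_values)), x0=1.0)  # driver decreasing

# Same driver value (c = 0, index 10 in both sweeps), different state:
print(round(going_up[10], 3), round(coming_down[10], 3))  # -1.0 1.0
```

In this toy, the upper state is lost only when c falls below about −0.385, well past the value of about +0.385 at which it was gained. Undoing the driver to its earlier level is therefore not enough to return the state to where it was.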

The management implication of this hysteretic effect is that inaction (often the most politically palatable way to deal with uncertainty) can be fatal. To complicate matters, other historical factors serve to limit options. For example, in the scenario depicted in Figure 7, 80% of the lower catchment would have to be returned to native vegetation by 2050. Given past investment in irrigated agriculture, this is unlikely to be achievable. With stopping clearing early enough eliminated from the feasible set of options, what options are left?

Consider an active revegetation scenario characterized by how early, how fast, and how much total area society is willing to revegetate. Supposing that society is willing to revegetate all the land area (as in the stop-clearing scenario) at a maximum rate similar to the historical annual clearing rate (3% per year, which is highly optimistic), it can wait to take action until \( D_2 = 5.5 \) m (Figure 8a, solid curve). This enables clearing to continue for about 33 years longer than in the stop-clearing scenario. This extra clearing comes at the expense of a very aggressive revegetation program at a later date. This scenario requires that the lower catchment be completely revegetated and remain so from the mid-1980s until roughly 2100. Again, past investments in irrigation infrastructure make this unlikely. The less land society is willing to revegetate, the earlier it must act. If society is willing to revegetate only 65% of the lower catchment, taking action when \( D_2 = 10 \) m (as a point of comparison to the stop-clearing scenario) causes the water table to reach the surface in 2138 (Figure 8a, dotted curve). Thus, rapid revegetation of 65% of the catchment area or less will not solve the problem. Feasible options must include pumping.

a Two revegetation scenarios overlaid on the actual clearing history and the stop-clearing scenario with no revegetation (dashed line). The solid line corresponds to the case where there is no limit on society’s willingness to revegetate. The dotted line represents the case when society is willing to revegetate only up to 65% of the total. b Two pumping scenarios, one with revegetation (dash-dash-dot) and one without revegetation (dash-dot). The corresponding cleared-area levels are shown by the solid (no revegetation) and dashed (revegetation) lines and are overlaid on the actual clearing history.

It is thus no surprise that present policy focuses on establishing a new equilibrium through pumping; past events and (in)actions have closed off the other options. The model shows that even with absolutely no revegetation, if society is willing to pump 250 gl/y out of the lower catchment starting when the water table reaches 7 m, the water table can be prevented from reaching the surface (if no pumping occurs in the mid catchment, the water table will eventually reach the surface in the mid catchment but only after such a long period as to be irrelevant to this discussion). The longer society waits, the more it must be willing to pump (for example, reacting when the water table reaches 3 m requires pumping approximately 420 gl/y to prevent the water table from reaching the surface). The perspicacious reader may recognize from Figure 6 that a lower pumping level should establish a water-table-at-depth equilibrium (300 gl/y would cover the entire parameter space). However, Figure 6 gives the parameter values required to maintain a water-table-at-depth equilibrium, and it requires that all state variables be at their equilibrium values. More drastic action is required to drive the system to the desired equilibrium than to maintain it there. The additional effort is required to overcome the momentum of the groundwater dynamics and prevent the system from being “sucked” into the water-table-at-surface equilibrium. Consider the following analogy: It is much easier to hold a bowling ball above ground than to prevent one that has fallen 10 m from hitting the ground. The pulling power of this equilibrium is evident from the dramatic increase in the rate at which the water table moves toward the surface when the water table depth approaches 2 m (D* = 9 and D* = 10 in Figure 7b).
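The momentum argument can be made concrete with a hypothetical two-bucket cascade. In it, the mid catchment (S1) leaks into the lower catchment (S2), and the lower catchment is pumped at a rate P(t). `P_EQ` is exactly the rate that holds S2 below the surface once both buckets are at equilibrium, which is the kind of information Figure 6 provides. Suppose the mid catchment starts well above its own equilibrium (here S1 = 60 versus 20, an assumed value). Its excess water then keeps draining downslope, and the maintenance rate lets S2 overshoot to about 24: analytically, \( 14 + 40(e^{-0.05t} - e^{-0.1t}) \) peaks at \( t = 20 \ln 2 \approx 14 \) years. A temporarily larger effort, followed by a return to `P_EQ`, keeps S2 below the surface. All rates, storages, and the surface threshold are hypothetical.

```python
def peak_lower_storage(pumping, s1=60.0, s2=14.0, r1=1.0, r2=1.0,
                       k1=0.05, k2=0.1, dt=0.01, years=200.0):
    """Euler-integrate a hypothetical two-bucket cascade and return the peak of s2.

    dS1/dt = r1 - k1*S1                    (mid catchment leaks into lower)
    dS2/dt = r2 + k1*S1 - k2*S2 - P(t)     (lower catchment, pumped at P(t))
    """
    peak = s2
    for k in range(int(round(years / dt))):
        t = k * dt
        leak = k1 * s1                       # flow from mid to lower bucket
        s1 += dt * (r1 - leak)
        s2 += dt * (r2 + leak - k2 * s2 - pumping(t))
        peak = max(peak, s2)
    return peak


SURFACE = 15.0  # hypothetical lower-bucket storage at which water reaches the surface
P_EQ = 0.6      # holds S2 at 14 once both buckets are at equilibrium (S1 = 20)

maintain = peak_lower_storage(lambda t: P_EQ)
push_then_maintain = peak_lower_storage(lambda t: 2.4 if t < 40.0 else P_EQ)
print(maintain > SURFACE, push_then_maintain < SURFACE)  # True True
```

The equilibrium pumping rate is computed from the end state alone. It therefore ignores the water already stored upslope, and that water is the "momentum" the maintenance rate cannot absorb.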

This suggests that pumping alone provides a precarious solution. Even if a new equilibrium is established with the water table between 3 and 5 m, a wet phase could cause the system to be pulled into the water-table-at-surface equilibrium. However, economic and historical contingencies have left few alternatives to pumping. We conclude with one last scenario: Suppose society is willing to revegetate up to 30% of the lower catchment and to pump at the rate necessary to stabilize the water table. Society reacts when \( D_2 = 3 \) m. How important is revegetation?

In Figure 8b, a scenario with revegetation is compared to one without it. The lower two curves show the pumped volume in teraliters per year. The dash-dot pumping curve corresponds to the solid clearing curve (no revegetation), whereas the dash-dash-dot pumping curve corresponds to the dashed clearing curve (revegetation). Although revegetation does not play a significant role early on, after about 30 years it cuts the pumped volume required to stabilize the water table almost in half. Given the salt load implications, this is significant. Unfortunately, there are few economic incentives to revegetate even this small amount.

MANAGING RESILIENCE IN THE REAL WORLD

The preceding scenarios suggest that drastic management actions may be required to prevent water tables from reaching the surface, and thus show how few options decision makers really have. The GBC community is now dealing with the results of complex interactions among historical decision making, political economy, social values, climate variability, and biophysical processes that together directed the system onto an unsustainable trajectory soon after European settlement and the beginning of agricultural development. The collapse of water trusts and the period of legislative reform during the early 1900s, the postwar closer settlement schemes, and the reform period in the mid-1980s were all opportunities for transformative change in the institutional framework. In contrast, Figures 3, 7, and 8 suggest that the windows of opportunity to control the key biophysical drivers of the system opened at different times. If some of these institutional and biophysical windows had opened simultaneously, transformative change to a more sustainable trajectory might have been possible.

Although historical opportunities are easy to identify a posteriori, transformative change is difficult to implement. When presented with an opportunity for dramatic change in the 1970s, the GBC community chose instead to continue with the current production systems and attempt to manage the system to a new hydrological equilibrium (GBRSAC 1989; SPAC 1989). The local community, farmers, and government alike have supported this approach in which they together have invested on the order of $A500 million (Christen and van Meerveld 2000). Although this investment has delayed the impact of rising water tables, the system now sits perilously close to a number of important ecological, social, and economic thresholds.

A shift back to wetter rainfall patterns would increase the risk of a second, and potentially more serious, water table crisis (Power and others 1999). Sunk costs have locked the system into the current approach, and decision makers are highly reluctant to take another course. Groundwater control is a trade-off among economic, social, and environmental costs, and options are becoming increasingly limited (GBRSAC 1989; SPAC 1989). Importantly, these problems are not uniform in extent and severity across the landscape. Local hydrology, soil types, topography, and past and current land use all play a role in determining the spatial and temporal expression of the impacts, which will be borne more heavily by some industries and local communities than by others. In fact, because of this heterogeneity, a water-table-at-surface equilibrium will not eliminate all agricultural production. The exact proportion of production that survives (determination of which would require a finer-scale analysis) will be crucial in determining whether a viable agricultural industry can persist. Ironically, farms higher in the landscape on more “leaky” soil types will remain relatively immune, even though they are major contributors to groundwater recharge (GBRSAC 1989). The influence of this heterogeneity on the future of the system is unclear, but it could create significant stress for various communities and industries.

The catchment community has very little influence over salt quotas. Political or social changes at the state or national levels could alter present arrangements and make pumping-based strategies look far less attractive. Alternative disposal options, such as evaporation basins, are expensive, cannot be developed quickly, and are unattractive. In addition, the potential for pumping as a solution is limited to specific hydrological and geological conditions, which are currently met by less than half the irrigation region (GBRSAC 1989; SPAC 1989). Revegetation may be a viable option, but the opportunity costs are high, response times are slow, and there is little incentive for native revegetation. In areas with saline groundwater, trees accumulate salt in the upper soil layers (by transpiring water and leaving salt behind). This severely inhibits growth and makes agroforestry uneconomical (Heuperman 1999). Simply taking land out of irrigation is not a solution and may, in fact, be more costly in the long run. Without the flushing action of irrigation, when water tables get within 2 m of the surface, salt accumulates in the upper soil profile through capillary action. The land becomes virtually useless for production, and the saline soil and water tables impact infrastructure such as buildings and roads, thus limiting other uses (Bethune and others 2004a; Sampson 1996; Surapaneni and Olsson 2002).

CONCLUSION

The problems faced by the GBC have become very complex. This complexity has, in part, arisen from a pathological cycle: resource degradation, followed by a social response aimed at reestablishing or maintaining productivity of the resource-degrading activity, leading to further degradation, and so on. This focus on improving efficiency typically requires ever-increasing investment in physical and social infrastructure. In this way, the GBC may become highly optimized in its ability to continue to generate high output from irrigated dairy activities while at the same time tolerating high water tables and soil salinity. However, the system has become much more vulnerable to wetter climate phases and shifts in larger-scale social and political processes (for example, salt quotas). Is it inevitable that the pursuit of efficiency, constrained by past investment, forecloses options and drives the system toward a highly optimized but extremely fragile state? The process can at least be observed in many engineering and natural resource systems (for example, Csete and Doyle 2002).

An important message of the GBC is that the maintenance of diversity in the class of “reachable” social-ecological configurations needs to be considered before implementing resource management decisions (Walker and others 2004). The historical development of the GBC provides a clear illustration of the problems that may arise when incremental adaptation is aimed solely at maintaining productivity. Each incremental adaptation has consequences for the economic and social structures within the community. These structures, in turn, generate powerful economic forces and vested interests that work to ensure that the system stays on the current trajectory, continually trading off future options for short-term returns and reinforcing the incremental adaptation process. The capacity to reorganize around a fundamentally different set of principles and escape the incremental adaptation trap is critical to the long-term viability of SESs. To continue to provide a stream of goods and services, the GBC SES may now require a transformation rather than marginal adaptation. But has the inertia generated by historical and biophysical processes become so great that it precludes transformative change?

References

Anderies JM. 2005. Minimal models and agroecological policy at the regional scale: an application to salinity problems in southeastern Australia. Reg Environ Change 5:1–17

Anderies J, Janssen M, Walker B. 2002. Grazing management, resilience, and the dynamics of a fire-driven rangeland system. Ecosystems 5:23–44

Barr N, Carey J. 1992. Greening a brown land: the Australian search for sustainable land use. Melbourne: MacMillan

Beltratti A. 1997. Growth with natural and environmental resources. In: Carraro C, Siniscalco D, Eds. New directions in the economic theory of the environment. Cambridge (UK): Cambridge University Press. pp 7–47

Bethune M, Gyles O, Wang Q. 2004. Options for management of saline groundwater in an irrigated farming system. Aust J Exp Agric 44:181–88

Carpenter SR, Brock WA. 2004. Spatial complexity, resilience and policy diversity: fishing on lake-rich landscapes. Ecol Soc 9:8. Available online at: http://www.ecologyandsociety.org/vol9/iss1/art8.

Carpenter SR, Ludwig D, Brock WA. 1999. Management of eutrophication for lakes subject to potentially irreversible change. Ecol Appl 9:751–71

Christen E, van Meerveld I. 2000. Institutional arrangements in the Shepparton Irrigation Region Victoria, Australia. Technical report. Lahore, Pakistan: International Water Management Institute

Clark CW. 1973. The economics of overexploitation. Science 181:630–34

Clark CW. 1990. Mathematical bioeconomics: the optimal management of renewable resources. New York: Wiley

Clark CW, Clarke FH, Munro GR. 1979. The optimal exploitation of renewable resource stocks: problems of irreversible investment. Econometrica 47:25–47

Csete ME, Doyle JC. 2002. Reverse engineering of biological complexity. Science 295:1664–69

Garran A, Ed. 1886. Picturesque atlas of Australasia. Sydney: Picturesque Atlas

[GBCMA] Goulburn Broken Catchment Management Authority. 2000. Goulburn Broken native vegetation management strategy. Technical report. Shepparton (Victoria, Australia): GBCMA

[GBRSAC] Goulburn Broken Region Salinity Advisory Council. 1989. Shepparton land and water salinity management plan: background papers; vol 1–3. Technical report. Shepparton (Victoria, Australia): GBRSAC

Gordon H. 1954. The economic theory of a common property resource: the fishery. J Polit Econ 62:124–42

Greif A. 1998. Historical and comparative institutional analysis. Am Econ Rev 88:80–84

Gunderson L. 2001. Managing surprising ecosystems in southern Florida. Ecol Econ 37:371–78

Heuperman A. 1999. Hydraulic gradient reversal by trees in shallow water table areas and repercussions for the sustainability of tree-growing systems. Agric Water Manage 39:153–67

Holling CS, Gunderson LH. 2002. Resilience and adaptive cycles. In: Gunderson LH, Holling CS, Eds. Panarchy: understanding transformations in systems of humans and nature. Washington (DC): Island Press. pp 25–62

Langford K, Forster C, Malcom D. 1999. Towards a financially sustainable irrigation system: lessons from the state of Victoria, Australia. Technical paper 413. World Bank, Washington, DC

Ludwig D. 1980. Harvesting strategies for a randomly fluctuating population. J Cons Int Explor Mer 39:168–74

Ludwig D, Walters C. 1982. Optimal harvesting with imprecise parameter estimates. Ecol Model 14:273–92

Ludwig D, Jones DD, Holling CS. 1978. Qualitative analysis of insect outbreak systems: the spruce budworm and forest. J Animal Ecol 47:315–32

Ludwig D, Walker B, Holling CS. 1997. Sustainability, stability, and resilience. Conserv Ecol. Available online at: http://www.consecol.org/vol1/iss1/art7/

North DC. 1990. Institutions, institutional change and economic performance: political economy of institutions and decisions. Cambridge (UK): Cambridge University Press

Perrings C. 1989. An optimal path to extinction: poverty and resource degradation in the open agrarian economy. J Develop Econ 30:1–24

Power S, Casey T, Folland C, Colman A, Mehta V. 1999. Inter-decadal modulation of the impact of ENSO on Australia. Clim Dynam 15:319–24

Quiggin J. 1988. Murray River salinity: an illustrative model. Am J Agric Econ 70:635–45

Sampson K. 1996. Irrigation with saline water in the Shepparton Irrigation Region, Australia. J Soil Water Conserv 9:29–33

[SPAC] Salinity Pilot Program Advisory Council. 1989. Shepparton land and water salinity management plan. Shepparton (Victoria, Australia): Salt Action Victoria

Surapaneni A, Olsson K. 2002. Sodification under conjunctive water use in the Shepparton Irrigation Region of northern Victoria: a review. Aust J Exp Agric 42:249–63

Walker BH, Meyers JA. 2004. Thresholds in ecological and social-ecological systems: a developing database. Ecol Soc. Available online at: http://www.ecologyandsociety.org/vol9/iss2/art3

Walker BH, Ludwig D, Holling CS, Peterman RM. 1981. Stability of semi-arid savanna grazing systems. J Ecol 69:473–98

Walker B, Holling C, Carpenter S, Kinzig A. 2004. Resilience, adaptability and transformability. Ecol Soc. Available online at: http://www.ecologyandsociety.org/vol9/iss2/art5

Appendix

Here we present a very brief summary of the underlying biohydrological model. Although the model is developed and analyzed in detail in Anderies (2005), this appendix provides details for the simple feedback management component that has been added to the model but is not addressed in Anderies (2005).

The Biophysical Model

The model is based on simple mass balance principles. The region of interest is divided into two areas, the lower and mid catchments (Figure A1). In each area, three state variables are considered: volume of groundwater, mass of salt in the groundwater, and mass of salt in the soil profile. Salt and water enter the system through rainfall and move through the groundwater system based on Darcy’s law. Salt moves into the soil profile through capillary action and out of the soil through flushing action. A well-mixed model is assumed as a benchmark; that is, salt is distributed evenly throughout the soil profile and groundwater system. The effects of deviations from this well-mixed case are explored through the use of tunable parameters (the θs in the equations of motion).

The equations of motion for the system are given below. Symbols not defined in Figure A1 are defined as follows: φ = porosity, \( R_{r_i} \) = regional recharge, \( C_b \) = background salinity in rain, \( C_{bs} \) = minimum achievable salt concentration in the soil (cannot be less than the salt concentration of the flushing water), and \( \gamma_{sc} \) and \( \gamma_{sd} \) = parameters controlling capillary action; each concentration C corresponds to the mass variable N with the same subscript (for example, \( C_{s_1} \) is the concentration associated with \( N_{s_1} \)). The dynamics can be conceptualized as two connected leaky buckets: water leaks into the lid of each bucket depending on the vegetation cover, and water leaks out of the buckets through the groundwater system. Some of the water moves from the upper bucket to the lower bucket, and some of it leaves both buckets and enters the river system. Salt is carried along with these water flows.

The equations for the dynamics of the depth of the water table are self-explanatory: the change in height of the water table = (water in − water out)/area. However, the equations for salt dynamics need some explanation. In Eq. (3), salt enters the soil (\( N_{s_1} \) and \( N_{s_2} \)) via rain (computed as the total volume times concentration, \( P_1 A_1 C_b \)), through capillary action (\( \gamma_{sc} C_{g_1} \exp(\gamma_{sd} D_1) \)), or as deposits when water tables fall (\( \theta_{m_1} A_1 C_{s_1}(D_1) \frac{dD_1}{dt} \)). Salt leaves the soil as it is flushed by downward-flowing water (\( \theta_{s_1} R_1 A_1 (C_{s_1}(D_1) - C_{bs}) \)) and lateral subsurface flow (\( S_1 C_{s_1}(d_{sr}) \)); it is washed out as the water table rises (\( \theta_{m_1} A_1 C_{s_1}(D_1) \frac{dD_1}{dt} \)). The rationale for Eq. (4) is identical. In a similar way, salt enters the groundwater system (\( N_{g_1} \) and \( N_{g_2} \)) as it is flushed out of the topsoil (\( \theta_{s_1} R_1 A_1 (C_{s_1}(D_1) - C_{bs}) \)). Salt leaves (Eq. [5]) through capillary action (\( \gamma_{sc} C_{g_1} \exp(\gamma_{sd} D_1) \)), via groundwater leakage (\( \theta_{g_1} G_1 C_{g_1} \) and \( \theta_{l_{12}} L_{12} C_{g_1} \)), and when water tables fall (\( \theta_{m_1} A_1 C_{s_1}(D_1) \frac{dD_1}{dt} \)). It is important to note that salt moves from the mid to the lower catchment via the groundwater system (\( -\theta_{l_{12}} L_{12} C_{g_1} \) in Eq. [5] and \( +\theta_{l_{12}} L_{12} C_{g_1} \) in Eq. [6]).
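The equations of motion themselves are not reproduced in this text. The following Python sketch therefore assembles the soil-salt budget for the mid catchment directly from the verbal description of Eq. (3). Three conventions are assumptions rather than statements from the source:

- D1 is measured as depth below the surface (positive downward).
- \( \gamma_{sd} \) is negative, so capillary supply decays with depth.
- A single signed term \( \theta_{m_1} A_1 C_{s_1}(D_1)\, dD_1/dt \) covers both deposition (falling water table) and wash-out (rising water table).

The concentrations \( C_{s_1}(D_1) \) and \( C_{s_1}(d_{sr}) \) are passed in as plain numbers because their functional forms are not given here. All parameter values are hypothetical.

```python
import math


def soil_salt_budget(p):
    """Signed salt fluxes into the mid-catchment soil store N_s1 and their sum.

    Assembled from the verbal description of Eq. (3); the sign conventions and the
    single signed water-table term are assumptions. D1 is depth below the surface
    (positive downward), so dD1/dt > 0 means the water table is falling."""
    terms = {
        "rain": p["P1"] * p["A1"] * p["Cb"],
        "capillary": p["gamma_sc"] * p["Cg1"] * math.exp(p["gamma_sd"] * p["D1"]),
        # > 0 (deposition) when the water table falls, < 0 (wash-out) when it rises
        "water_table": p["theta_m1"] * p["A1"] * p["Cs1_D1"] * p["dD1_dt"],
        "flushing": -p["theta_s1"] * p["R1"] * p["A1"] * (p["Cs1_D1"] - p["Cbs"]),
        "lateral": -p["S1"] * p["Cs1_dsr"],
    }
    return terms, sum(terms.values())


# Hypothetical parameter values in arbitrary consistent units.
params = {
    "P1": 0.5, "A1": 1.0, "Cb": 0.01,
    "gamma_sc": 0.2, "gamma_sd": -0.5, "Cg1": 5.0, "D1": 4.0,
    "theta_m1": 0.1, "Cs1_D1": 2.0, "dD1_dt": 0.0,
    "theta_s1": 0.5, "R1": 0.1, "Cbs": 0.05,
    "S1": 0.01, "Cs1_dsr": 1.0,
}
terms, net = soil_salt_budget(params)
print(round(net, 4))  # 0.0328
```

Returning the individual terms alongside the total makes it easy to see which process dominates the soil-salt balance for a given water-table depth.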

Perhaps the most challenging aspect of the model is relating groundwater recharge, rainfall, and vegetation cover; that is, determining the relationship between \( P_i \) and \( R_i \) and establishing how it depends on vegetation cover. Figure A2 shows the flowpath of a raindrop through the catchment. The details of the relationships that partition rain into the different processes depicted are based on common-sense considerations outlined in Anderies (2005).

Feedback Management

The objective of the feedback management model component is to build in a simple response to rising water tables in the form of cessation of clearing, active revegetation, and groundwater pumping. A management strategy can consist of any combination of these three options. We assume that feedback management is tied to the depth of the water table in the lower catchment. To implement such a strategy, we have to model vegetation dynamics. This model must incorporate the effects of active revegetation efforts and of soil salinization and water-logging on regeneration. The simplest way to accomplish this is to assume the following:

where \( x_i \) is the biomass of native vegetation (nongrass) in the \( i \)th region rescaled to the carrying capacity of region \( i \); \( r_{x_i} \) is the intrinsic regeneration rate of vegetation; and \( RV_i \), \( CL_i \), and \( M_i \) are revegetation, clearing, and natural mortality rates, respectively. Note that the intrinsic rate of regeneration depends on the transpirative capacity, \( T_{t_i} \), in each region.

Feedback management enters the model through the dependence of the revegetation and clearing rates on \( D_2 \). If feedback management is in place, as \( D_2 \) decreases, clearing stops and revegetation begins. Feedback pumping in each region is modeled in a similar fashion; as \( D_2 \) declines, pumping increases. In all three cases, a sigmoidal function with a threshold near \( D_2^* \) (chosen by the feedback manager) is used. The choice of this critical depth, near which feedback responses occur, has a major influence on the ability of the system to avoid salinization and water-logging in the lower catchment.
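The text specifies only that each feedback response is sigmoidal with a threshold near \( D_2^* \). One minimal way to express that, sketched below in Python, is a logistic switch shared by clearing, revegetation, and pumping. The logistic form, the common steepness parameter, and the helper `management_rates` are assumptions, not the model's actual functions. The maximum rates in the usage loop borrow the 3% clearing rate and the 250 gl/y pumping figure from the main text purely as placeholders.

```python
import math


def feedback_intensity(d2, d2_star, steepness=4.0):
    """Logistic switch in [0, 1]: close to 0 while the lower-catchment water table is
    well below the trigger depth d2_star, close to 1 once it has risen above it."""
    z = steepness * (d2 - d2_star)
    if z >= 0:                       # evaluate in a form that cannot overflow
        e = math.exp(-z)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(z))


def management_rates(d2, d2_star, clearing_max, reveg_max, pumping_max, steepness=4.0):
    """Hypothetical feedback rules sharing one threshold D2* and one steepness."""
    s = feedback_intensity(d2, d2_star, steepness)
    return {
        "clearing": clearing_max * (1.0 - s),   # clearing stops as D2 falls
        "revegetation": reveg_max * s,          # revegetation starts
        "pumping": pumping_max * s,             # pumping ramps up
    }


for depth in (12.0, 10.0, 8.0):                 # water table rising toward the surface
    print(depth, management_rates(depth, d2_star=10.0, clearing_max=0.03,
                                  reveg_max=0.03, pumping_max=250.0))
```

A larger `steepness` makes the manager react more abruptly at the trigger depth. The threshold \( D_2^* \) itself is the parameter whose timing, according to the scenarios in Figures 7 and 8, matters most.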

At equilibrium with no clearing, \( r_{x_i} \rightarrow r^{\max}_{x_i} \) and \( x_i \rightarrow 1 - M_i / r^{\max}_{x_i} \). Because the \( x_i \) have been rescaled to carrying capacity (in arbitrary units of biomass and unit area), they can be interpreted as the proportion of total area under native vegetation. Note that revegetation efforts increase biomass directly (that is, they produce immediate returns through the \( RV_i(D_2) x_i \) term), but they also increase future natural regenerative capacity (the \( r_{x_i}(T_{t_i}) x_i (1 - x_i) \) term).
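The vegetation equation does not survive in this text, but its terms can be partly recovered from the paragraph above. These are logistic regeneration \( r_{x_i} x_i (1 - x_i) \), a revegetation gain \( RV_i x_i \), and losses that yield the stated equilibrium \( 1 - M_i / r^{\max}_{x_i} \). The Python sketch below assumes that clearing and mortality are both proportional losses (\( CL_i x_i \) and \( M_i x_i \)) and holds \( r_{x_i} \) fixed at a hypothetical \( r^{\max}_{x_i} \). It then checks numerically that the no-clearing equilibrium matches \( 1 - M_i / r^{\max}_{x_i} \).

```python
def vegetation_rate(x, r, reveg, clearing, mortality):
    """dx/dt for rescaled native-vegetation biomass x (assumed functional form):
    logistic regeneration + revegetation - clearing - natural mortality."""
    return r * x * (1.0 - x) + reveg * x - clearing * x - mortality * x


def settle_vegetation(x0, r, reveg=0.0, clearing=0.0, mortality=0.0,
                      dt=0.01, years=300.0):
    """Forward-Euler integration for a fixed set of rates."""
    x = x0
    for _ in range(int(round(years / dt))):
        x += dt * vegetation_rate(x, r, reveg, clearing, mortality)
    return x


r_max, m = 0.2, 0.02          # hypothetical rates per year
x_eq = settle_vegetation(0.1, r_max, mortality=m)
print(round(x_eq, 3), round(1.0 - m / r_max, 3))   # 0.9 0.9
```

Adding a revegetation rate of 0.01 to the same call settles at 0.95, that is, \( 1 - (M - RV)/r \). Under this assumed form, sustained revegetation raises the equilibrium share of native vegetation.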

Rights and permissions

Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License ( https://creativecommons.org/licenses/by-nc/2.0 ), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Anderies, J.M., Ryan, P. & Walker, B.H. Loss of Resilience, Crisis, and Institutional Change: Lessons from an Intensive Agricultural System in Southeastern Australia. Ecosystems 9, 865–878 (2006). https://doi.org/10.1007/s10021-006-0017-1

DOI: https://doi.org/10.1007/s10021-006-0017-1