Abstract

About half a century after its little-known beginnings, the quantum topological approach called QTAIM has grown into a widespread, but still not mainstream, methodology of interpretational quantum chemistry. Although often confused in textbooks with yet another population analysis, be it perhaps an elegant but somewhat esoteric one, QTAIM has been enriched with about a dozen other research areas sharing its main mathematical language, such as Interacting Quantum Atoms (IQA) or Electron Localisation Function (ELF), to form an overarching approach called Quantum Chemical Topology (QCT). Instead of reviewing the latter’s role in understanding non-covalent interactions, we propose a number of ideas emerging from the full consequences of the space-filling nature of topological atoms, and discuss how they (will) impact on interatomic interactions, including non-covalent ones. The architecture of a force field called FFLUX, which is based on these ideas, is outlined. A new method called Relative Energy Gradient (REG) is put forward, which is able, by computation, to detect which fragments of a given molecular assembly govern the energetic behaviour of this whole assembly. This method can offer insight into the typical balance of competing atomic energies both in covalent and non-covalent case studies. A brief discussion on so-called bond critical points is given, highlighting concerns about their meaning, mainly in the arena of non-covalent interactions.

1 Introduction

While chemistry used to be mainly the science of the molecule, much of this central discipline has moved on to become the science of the molecular assembly. This part of Nature is governed by so-called non-covalent interactions, which are energetically weaker than covalent interactions. This weakness poses a challenge on two fronts: quantitative computation and qualitative interpretation. On the one hand, the quantitative front is conveniently illustrated by the fact that, so far, no computation has ever predicted a successful drug. Certainly, the energetics of drug design is one where each kilojoule per mole matters in the calculation of protein–ligand interaction. On the other hand, a spectacular example of the qualitative front is the hypothesis [1] that a gecko’s ability to defy gravity is due to attractive van der Waals forces in hairs on its feet. The difficulty here was that later work discredited [2] this interpretation.

Looking at the totality of the “non-covalent literature”, including all scales (from noble gas dimers to proteins in aqueous solution and beyond) and all methods (first principles and force fields), reveals many successes. Yet, there is currently no fully integrated and consistent numerical treatment (i.e. quantitative prediction) of non-covalent interactions across all system scales and research fields. Secondly, interpretative models used by experimentalists often remain too simple, and poorly connected to a rigorous (quantum) physical reality. In summary, it will probably take a long time before scientists meet the challenge of both quantitatively predicting and qualitatively understanding non-covalent interactions.

The importance of properly grasping non-covalent interaction energies and forces cannot be overestimated. One can only marvel at the complexity of the meticulous packing of biomolecules in a living cell. For example, inside the nucleus, each chromosome contains a long DNA double helix, which is cleverly wrapped around proteins called histones. Again, non-covalent interactions govern this apparently delicate yet robust process, not to mention the stability of the double helix itself. Even more stunning is the complexity of transcription in the nucleus. The enzyme RNA polymerase attaches to a gene (a piece of DNA) and starts making messenger RNA, steered by molecular complementarity. This mechanism, as well as the architecture and operation of this whole molecular machine, is again managed by non-covalent interactions. That such an ingenious process automatically materialised in Nature seems to suggest an as yet unstated law; something within irreversible thermodynamics just has to lead to such wonders of organisation. Other biochemical processes are even more breath-taking in their complexity and precision, to the point that one begins to question how all this can actually emerge from the non-covalent forces that quantum chemists hope to master. Will, in a century perhaps, embryology become a special branch of chemistry?

In this article, much more down-to-earth aspects of non-covalent interactions will be discussed from the point of view of Quantum Chemical Topology (QCT), [3,4,5,6] which is a generalisation of the Quantum Theory of Atoms in Molecules (QTAIM) [7,8,9,10]. There are a number of directions that this article will not follow because they have been reviewed elsewhere or do not meet its intent and spirit. One such direction is teaching the technical content behind QCT because several pedagogical accounts (see the four references on QCT mentioned just above) have done so recently. The reader is kindly requested to consult those references because this Conversation is unfortunately not a tutorial. Secondly, the direction of reviewing [11] the by now vast literature that uses the original [10, 12] QTAIM descriptors (to characterise all sorts of atomic interactions across all areas of chemistry) will not be followed either. Many such studies, such as a fairly recent one [13] on E…π (E = O, S, Se, and Te) and E…π interactions, report only local properties evaluated at so-called bond critical points (see Section 2.11). There is also interesting quantum topological work on halogen bonding, for example, that has again been surveyed [14] elsewhere. Another important branch of QCT is the richly documented topology [15] of the so-called Electron Localisation Function (ELF). This function too has been used in the study of non-covalent interactions (see reference [16] for a recent example) but again this noteworthy work falls outside the scope of this paper. What, then, is the direction followed? The bias of this article is towards integrated properties (such as charges, higher rank multipole moments, and energies). A number of rather bold statements (listed in the subsections of Section 2, “Proposals and claims”) will be made, with an eye on complying with the intent and character of this type of article.

2 Proposals and claims

2.1 How to define an atom inside a system?

Many will agree that non-covalent interactions are typically described at atomistic level except perhaps for an elementary description of π-π stacking where the molecular quadrupole moments of, for example, substituted benzenes are brought in. Thus, there is a need to define an atom within a molecule (or molecular assembly). How to do this is a contentious question, which cannot be reviewed here due to space restriction. However, five arguments in favour of the quantum topological proposal can be rehearsed here. To set the scene, Fig. 1 shows examples of topological atoms.

A first argument is that the topological approach takes the (molecular) electron density as its starting point rather than a Hilbert space of basis functions. The topological partitioning is therefore a real space method that operates in ordinary 3D space. This feature has two advantages. Firstly, the definition of the atom survives upon varying the generator of the electron density (e.g. SCF-LCAO-MO, X-ray crystallography, or a grid-based computational scheme). In other words, the topological atom does not depend on the details of how the electron density was obtained. Topological atoms transcend orbitals; their shapes do not depend on any choice made here. Because the electron density is observable, atomic properties can be obtained from both theory and experiment. The second advantage is that atomic charges are robust with respect to the nature of the basis set. By contrast, the concept of Mulliken charges, for example, becomes unstable in the presence of diffuse Gaussian primitive functions and even evaporates altogether when using plane-wave basis functions. Similarly, the well-known Distributed Multipole Analysis (DMA) [17] leads to atomic charges that become unreliable when diffuse Gaussian primitives are used. However, this problem has been overcome [18] by essentially introducing less fuzzy boundaries to the DMA atoms. This modification acknowledges the benefit offered by sharper boundaries, which also underpin the QTAIM philosophy. We note that DMA lies at the heart of a number of next-generation multipolar force fields such as SIBFA, [19] XED, [20] EFP, [21] AMOEBA, [22] NEMO, [23] and the force field behind the crystal structure prediction code DMACRYS, [24] which will hopefully replace classical force fields such as AMBER, CHARMM, OPLS, MM3, or GROMOS. Thorough comparisons [25, 26] between 7 Hirshfeld variants of atomic charge, the QTAIM charge, and 4 ESP-fitted types of charges show that QTAIM charges are the most robust, i.e. not too sensitive to details in the electronic structure calculations from which they are derived (basis set, conformational changes, chemical changes in the environment).

A second argument in favour of the topological approach is that it is minimal (not to be confused with “simple” (see preface of ref [27])) in the sense of Occam’s razor. The only notion needed to reveal the atoms is the gradient path, which is a trajectory that always follows the direction of maximal ascent of the function at hand (i.e. the electron density). This notion is sufficient to recover the language of dynamical systems and algebraic topology (p. 31/32 in ref [6]): atomic basins (i.e. topological atoms), critical points, separatrices (i.e. interatomic surfaces), conflict and bifurcation catastrophes, the gradient vector field, and attractors. The concept of the gradient path is parameter-free other than for practical decisions (i.e. numerical method and settings) on how to solve the system of differential equations that generate the gradient paths. Moreover, no reference density is brought in, unlike in the case of high-resolution crystallographic deformation densities or Hirshfeld charges, for example. We note that the original Hirshfeld approach [28] was made independent of its damaging promolecular reference density, resulting in the so-called Hirshfeld-I method [29]. Interestingly, the new atomic charges thus obtained become larger in magnitude than the original ones. Thus, the iterative Hirshfeld charges moved towards the topological charges, which were ironically often criticised for being too large. The further developments around Hirshfeld charges have more problems and subsequent solutions but they are beyond the scope of this article. Finally, we note that, in addition to Hirshfeld-I, the MBIS and ISA approaches also do not have the problems shown by the original Hirshfeld approach.
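
The role of the gradient path can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the model density is a sum of two Gaussians rather than a molecular electron density, the path is traced with fixed-length Euler steps, and the function names (`follow_gradient_path`, `assign_to_atom`, `nearest_nucleus`) are hypothetical. Production QCT codes use far more careful integrators.

```python
import numpy as np

# Hypothetical model density: two Gaussian "atoms" on the x axis.
# Positions, heights and exponent are arbitrary, not taken from any molecule.
CENTRES = np.array([[-1.0, 0.0, 0.0], [1.2, 0.0, 0.0]])
HEIGHTS = np.array([1.0, 0.6])
ALPHA = 1.0

def density(r):
    """Model electron density at point r (sum of two Gaussians)."""
    d2 = np.sum((r - CENTRES) ** 2, axis=1)
    return float(np.sum(HEIGHTS * np.exp(-ALPHA * d2)))

def gradient(r):
    """Analytical gradient of the model density at point r."""
    diff = r - CENTRES
    d2 = np.sum(diff ** 2, axis=1)
    weights = -2.0 * ALPHA * HEIGHTS * np.exp(-ALPHA * d2)
    return np.sum(weights[:, None] * diff, axis=0)

def follow_gradient_path(start, step=0.02, max_steps=5000):
    """Walk uphill along the gradient in fixed-length Euler steps.

    Stops when a step no longer increases the density, which for generic
    starting points happens within about one step length of a maximum.
    """
    r = np.asarray(start, dtype=float)
    for _ in range(max_steps):
        g = gradient(r)
        norm = np.linalg.norm(g)
        if norm == 0.0:
            break
        trial = r + step * g / norm
        if density(trial) <= density(r):
            break
        r = trial
    return r

def assign_to_atom(start):
    """Index of the nucleus (attractor) near which the gradient path ends."""
    end = follow_gradient_path(start)
    return int(np.argmin(np.linalg.norm(CENTRES - end, axis=1)))

def nearest_nucleus(point):
    """Purely geometric assignment, shown for comparison only."""
    p = np.asarray(point, dtype=float)
    return int(np.argmin(np.linalg.norm(CENTRES - p, axis=1)))

point = [0.2, 0.5, 0.0]
print("topological atom:", assign_to_atom(point))
print("nearest nucleus: ", nearest_nucleus(point))
```

In this toy model the chosen point lies geometrically closer to the second nucleus but its gradient path ends at the first, taller maximum, which shows that the basin boundary is set by the density itself and not by a distance criterion.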

Thirdly, within the real space partitioning approach, there are fuzzy (i.e. interpenetrating) or non-fuzzy (i.e. space-filling) methods. QCT belongs to the latter category because topological atoms do not overlap nor do they leave gaps between them. A thorough and systematic comparison [30] of both types of partitioning concluded that fuzzy partitions give small atomic net charges and enhanced covalency, while space-filling partitions generate larger net charges and smaller covalencies. The smallest deformations respective to a reference are found in space-filling decompositions, which generate a less distorted image of chemical phenomena leading to smaller deformation and interaction energies. This is an important conclusion because it is at the heart of chemistry: space-filling decompositions better preserve the atomic (or fragment) identity from the energetic point of view.

Fourthly, from Gauss’s divergence theorem, it can be proven that a topological atom has a well-defined kinetic energy. Put differently, an arbitrary subspace would suffer from an ambiguous kinetic energy. There are at least two different ways to define a local kinetic energy density, and for an arbitrary subspace, they each return a different kinetic energy. However, for a topological atom, the two energies are the same. An atom with such a well-defined kinetic energy is called a quantum atom. Note that all topological atoms are quantum atoms but not vice versa. When integrating over the special (zero-flux) volume that is a topological atom, the otherwise digressing kinetic energies (for an arbitrary volume) congregate to the same value. However, Anderson et al. [31] showed that all this is only true within what they call the “Laplacian family of local kinetic energies” when introducing quasiprobability distributions. But then again, this expanded knowledge does not reduce [32] the value of the topological atom; it just points out that not all quantum atoms are topological atoms.
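
The equality of the two kinetic energies can be traced in three lines. The sketch below uses the conventional symbols \(K\) and \(G\) for the two common local kinetic energy densities, in atomic units; these symbols, and the restriction to this particular pair, are assumptions that do not appear in the text above.

\[K(\mathbf{r}) = G(\mathbf{r}) - \tfrac{1}{4}{\nabla }^{2}\rho (\mathbf{r})\]

\[{\int }_{\Omega }{\nabla }^{2}\rho (\mathbf{r})\,d\mathbf{r} = {\oint }_{S(\Omega )}\nabla \rho (\mathbf{r})\cdot \mathbf{n}(\mathbf{r})\,dS\]

\[\nabla \rho (\mathbf{r})\cdot \mathbf{n}(\mathbf{r}) = 0 \text{ for all } \mathbf{r} \text{ on } S(\Omega ) \;\Rightarrow\; {\int }_{\Omega }K(\mathbf{r})\,d\mathbf{r} = {\int }_{\Omega }G(\mathbf{r})\,d\mathbf{r}\]

The second line is Gauss’s divergence theorem applied to \(\nabla \rho\), and the third line is the zero-flux condition that bounds a topological atom. For an arbitrary subspace the surface integral generally does not vanish, so the two integrated kinetic energies differ by minus one quarter of the flux of \(\nabla \rho\) through its boundary.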

Fifthly and finally, a significant effort was made to answer the question of whether quantum mechanics can provide a complete description of an atomic subsystem. Schwinger’s principle [33] served as a starting point to answer this question because of the elegance of its unified approach: as a single principle, it provides a complete development of quantum mechanics. The answer to the question posed above is affirmative because the same principle applies [34] to an atomic subsystem when it is a topological atom. However, it was shown [35] much later that the topological atom does not uniquely follow from quantum mechanics as the only quantum atom.

The advantages listed so far need to be balanced by the disadvantage of computational expense and algorithmic difficulties that QCT introduces. A brief literature review on QCT algorithms has been given before, in the Introductions of two previous papers [36, 37] on new algorithms. The computational expense of calculating atomic properties has slowed the uptake of QCT over the decades but this infelicity dwindles by the year through the automatic advent of improved hardware. Furthermore, improved algorithms cope better with the complexity of delineating the boundary of a topological atom as seen by an integration ray (centred at the nucleus) while sweeping the atom’s volume. However, setting up a completely robust and efficient algorithm remains a challenge.

We end this section with a quasi-philosophical comment on the nature of space-filling atoms. The mainstream view on the nature of atoms inside molecules appears to be one that regards atoms as fuzzy, interpenetrating objects. This view then also applies to the molecules that atoms form: molecules too will penetrate each other and thus overlap. We will return to this point in Section 2.4, with the alternative view following from QCT. A central question is, looking at the natural world, can one find support for the idea of non-overlapping objects? Life itself could not have emerged were it not for its sharp boundaries, starting with cells and the many confined, membraned organelles within them. At a conceptual level, there are several more important examples: the phases in a phase diagram do not overlap, and thermodynamics exhibits a sharp distinction between the system and the surroundings. To push examples further, beyond the physical sciences: Portugal and Spain do not overlap, and legally one is dead or alive, or married or not.

2.2 Quantum topological energy partitioning

The birth of QTAIM, now almost half a century ago, can be traced back to a paper published [38] in 1972, which for the first time showed the emblematic shape of an interatomic surface. The authors showed the existence of an atomic subspace obeying its own virial relationship and illustrated this for a number of lithium-containing diatomics. The follow-up paper [39] showed that the total energy of a topological atom can be obtained without calculating its potential energy thanks to the existence of the atomic virial theorem. However, this is only possible if the forces on all the nuclei in the molecule (that the atom is part of) vanish. The fact that atomic energies could only be computed for a molecule at a stationary point on its potential energy surface remained an undesirable restriction for decades. However, in 1997 an algorithm appeared [40] that calculated the (electrostatic) potential energy between two topological atoms. Unwittingly this work paved the way to break free from the constraint of the virial theorem. In 2001, Salvador et al. [41] and ourselves [42] established an algorithm to calculate interatomic electrostatic potential energies, independently of each other, and both independently of that 1997 paper. This work inspired another group to create their own version 3 years later, [43] which then led to the main paper [44] establishing the IQA method. IQA attracts a growing number of users and supporters as recently reviewed. [45]

Today, IQA is the most used topological energy partitioning method although an alternative one exists [46,47,48]. IQA enables the calculation of both intra- and interatomic energies for any molecular geometry. Importantly, it also achieves this for non-stationary points on the potential energy surface, which was not possible with the original QTAIM approach. The water hexamer, for example, has been analysed [49] in terms of hydrogen bond cooperativity and anti-cooperativity. More on this work will be mentioned near the end of this section.

Two IQA alternatives exist when combined with DFT: the first one, [50] which is implemented in the popular and fast program AIMAll, [51] and one that followed shortly after [52]. Recent work [53] compared these two energy partitionings with the original virial-based approach. The current variation in topological energy partitionings is much smaller than that in non-topological ones [54].

The brief history above merely serves to put QTAIM in a context, and definitely one that shows that its origin lies in energy partitioning. Indeed, quite often, QTAIM is introduced and portrayed [55] as a population analysis [56] but this is not doing it justice. In fact, it would be more exciting and useful to point out that QTAIM offers both atomic charges and atomic energies from the same underlying idea. This idea is the integration, over an atomic volume, of relevant quantum mechanical property densities, which produce all atomic properties (including volumes and multipole moments). Such universality cannot be claimed by a slightly more recent (1976) and popular energy partitioning scheme, [57] namely that of Kitaura and Morokuma. This scheme offers no corresponding atomic charges.

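We now briefly explain how IQA partitions the total energy of a system, \({E}_{\mathrm{tot}}\), which can be written as follows:

\[{E}_{\mathrm{tot}}=\int \left(\widehat{T}+{\widehat{V}}_{ne}\right){\rho }_{1}({\mathbf{r}}_{1},{\mathbf{r}}_{1}^{\prime})\,d{\mathbf{r}}_{1}+\frac{1}{2}\iint \frac{{\rho }_{2}({\mathbf{r}}_{1},{\mathbf{r}}_{2})}{{r}_{12}}\,d{\mathbf{r}}_{1}\,d{\mathbf{r}}_{2}+{V}_{nn} \tag{1}\]
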
where \({\rho }_{1}({\mathbf{r}}_{1},{\mathbf{r}}_{1}^{\prime})\) is the non-diagonal first-order reduced density matrix, \({\rho }_{2}({\mathbf{r}}_{1},{\mathbf{r}}_{2})\) the diagonal second-order reduced density matrix, and \({V}_{nn}\) the internuclear repulsion energy. Note that \({\mathbf{r}}_{1}^{\prime}\) is set to \({\mathbf{r}}_{1}\) after the Laplacian operator in the kinetic energy operator has acted on \({\mathbf{r}}_{1}\) only. The one-electron operators \(\widehat{T}\) and \({\widehat{V}}_{ne}\) respectively represent the electronic kinetic energy and the attractive nuclear-electron potential energy while the two-electron operator \({r}_{12}^{-1}\) expresses the interelectronic repulsion. The integrations take place over the whole of three-dimensional space. However, the topological atomic partitioning introduces integration over atomic volumes ΩA and ΩB. For example, the nuclear-electron potential energy between the molecular electron density \(\rho ({\mathbf{r}}_{1})\) within ΩA and the nuclear charge \({Z}_{B}\) of ΩB is given by

\[{V}_{en}^{AB}=-{Z}_{B}{\int }_{{\Omega }_{A}}\frac{\rho ({\mathbf{r}}_{1})}{{r}_{1B}}\,d{\mathbf{r}}_{1} \tag{2}\]

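where \({r}_{1B}\) is the distance between the nucleus in ΩB and an electron. Similarly, the intra-atomic electron–electron repulsion energy within ΩA is defined by

\[{V}_{ee}^{AA}=\frac{1}{2}{\int }_{{\Omega }_{A}}{\int }_{{\Omega }_{A}}\frac{{\rho }_{2}({\mathbf{r}}_{1},{\mathbf{r}}_{2})}{{r}_{12}}\,d{\mathbf{r}}_{1}\,d{\mathbf{r}}_{2} \tag{3}\]
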
The mono-atomic energy contributions (also called [42] self-energy) are collected in a single contribution for ΩA,

\[{E}_{intra}^{A}={T}_{A}+{V}_{en}^{AA}+{V}_{ee}^{AA} \tag{4}\]

where TA is the atomic kinetic energy and \({V}_{en}^{AA}\) the nuclear-electron potential energy between ΩA’s own nucleus and the molecular electron density that is within this atom’s volume. The overall intra-atomic energy \({E}_{intra}^{A}\) has been fitted successfully to the repulsive part of the Buckingham potential for van der Waals complexes, [58] and more general work [59] of this type also confirms that this IQA term represents steric energy.

The interatomic interaction energies are obtained by invoking the fine-structure of \({\rho }_{2}\left({\mathbf{r}}_{1}, {\mathbf{r}}_{2}\right)\), which involves three well-known contributions: Coulomb (C), exchange (X), and correlation (c). The latter two energies are often combined into one term representing exchange–correlation (Xc). Each of the three terms that make up \({\rho }_{2}\left({\mathbf{r}}_{1}, {\mathbf{r}}_{2}\right)\) corresponds to an energy, as shown in Eq. (5). This is formally expressed for a pair of interacting atoms ΩA and ΩB as

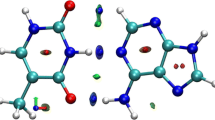

The six-dimensional integrations that are at the heart of the three types of energy contributions are time-consuming but can be carried out by the thousands, on the typical multi-core hardware that most labs have nowadays. Figure 2 illustrates the fine-structure of \({\rho }_{2}\left({\mathbf{r}}_{1}, {\mathbf{r}}_{2}\right)\) and how it relates to various chemical concepts.

A traditional IQA analysis, which operates at atomic resolution and which sees both intra- and interatomic energies, can be generalised. Firstly, due to the space-filling and thus additive nature of topological atoms, it is trivial to obtain intra-group and inter-group energies by simply summing the energies of the participating atoms. A group can be any set of atoms, such as a functional group, but it can also be a molecule inside an assembly of molecules. Secondly, it is possible [49] to lump the intra-group (deformation) energy into the inter-group energy. There is no unique way of doing this but a popular choice is using the ratio of an inter-group energy to the sum of all inter-group energies as a weight that contributes to the intra-group energy. As an example, the various configurations of the water hexamer (ring, prism, cage, book, bag) can then be analysed by IQA interaction energies defined for any monomer inside the hexamer. This value is on average −39 kJ mol−1 between any two adjacent monomers in the so-called homodromic ring. This energy stabilises to −41 kJ mol−1 for two waters in the book configuration, where one water forms a hydrogen bond with another water that acts as a double hydrogen acceptor. This subtle hydrogen bond strengthening effect occurs in the presence of the latter’s anti-cooperative effect and came as a surprise.
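
The two generalisations can be sketched in a few lines of code. All numbers below are invented placeholders in kJ mol−1, and the function names are hypothetical. The weighting in `fold_deformation` is only one possible reading of the ratio-based scheme described above: each group's deformation energy is shared among its inter-group terms in proportion to their size.

```python
import math

def group_energies(e_intra, e_inter, groups):
    """Sum atomic IQA terms into intra-group and inter-group energies.

    e_intra: {atom: intra-atomic energy}
    e_inter: {(atom_a, atom_b): interatomic energy}, each pair listed once
    groups:  {group: [atoms]}
    """
    atom_to_group = {atom: g for g, atoms in groups.items() for atom in atoms}
    intra = {g: sum(e_intra[a] for a in atoms) for g, atoms in groups.items()}
    inter = {}
    for (a, b), energy in e_inter.items():
        ga, gb = atom_to_group[a], atom_to_group[b]
        if ga == gb:
            intra[ga] += energy          # pair lies inside one group
        else:
            key = tuple(sorted((ga, gb)))
            inter[key] = inter.get(key, 0.0) + energy
    return intra, inter

def fold_deformation(e_def, inter):
    """Share each group's deformation energy among its inter-group terms.

    Assumed weight for group g and pair p: inter[p] divided by the sum of
    all inter-group energies that involve g (this sum must be non-zero).
    """
    total = {g: sum(e for pair, e in inter.items() if g in pair) for g in e_def}
    return {
        pair: e + sum(e_def[g] * e / total[g] for g in pair)
        for pair, e in inter.items()
    }

# Placeholder data: two groups of two atoms each.
e_intra = {"A1": 10.0, "A2": 5.0, "B1": 8.0, "B2": 2.0}
e_inter = {("A1", "A2"): -100.0, ("B1", "B2"): -90.0, ("A1", "B1"): -20.0,
           ("A1", "B2"): 3.0, ("A2", "B1"): -4.0, ("A2", "B2"): 1.0}
groups = {"A": ["A1", "A2"], "B": ["B1", "B2"]}
intra, inter = group_energies(e_intra, e_inter, groups)
print(intra, inter)

# Placeholder data: three monomers with deformation and interaction energies.
e_def = {"M1": 6.0, "M2": 9.0, "M3": 4.0}
inter3 = {("M1", "M2"): -40.0, ("M2", "M3"): -35.0, ("M1", "M3"): -5.0}
folded = fold_deformation(e_def, inter3)
print(folded)
```

Because the weights of each group add up to one, the folded pair energies sum to the same total as the deformation and interaction energies taken together, so no energy is lost or counted twice.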

It is helpful to further illustrate IQA with energies occurring in a wider set of hydrogen-bonded systems. An analysis of 9 simple hydrogen-bonded complexes [60] focuses on groups of atoms, namely the molecules (monomers) in each complex. Before we can discuss the data in Table 1, we need to define a few more energies derived from the primary IQA energies already defined above. Firstly, the mono-atomic energy \({E}_{intra}^{\Omega }\) has been identified with steric energy [58, 59]. It is informative to compare it with the energy of a free atom Ω and thus define the resulting deformation energy as

\[{E}_{\mathrm{def}}^{\Omega }={E}_{intra}^{\Omega }-{E}_{\mathrm{free}}^{\Omega } \tag{6}\]

Note that a “free atom” (and thus \({E}_{\mathrm{free}}^{\Omega }\)) can refer to both a single atom in vacuo as well as to an atom in a free or isolated molecule. Hence, “free” can refer to a group of atoms, forming the molecule that one wants the deformation energy to refer to. Changing the reference energy just alters the zero of the energy scale. If one wants to study two interacting molecules, it may make more sense to define each molecule as a quantum fragment. One thus applies an Interacting Quantum Fragments (IQF) analysis, strictly speaking, rather than an IQA analysis.

In analogy with the summation explained above, we can then also sum \({E}_{\mathrm{def}}^{\Omega }\) over the atoms in the proton donor (D) monomer of the hydrogen-bonded complex to obtain \({E}_{\mathrm{def}}^{D}\), and similarly the atoms in the proton acceptor (A) monomer to obtain \({E}_{\mathrm{def}}^{A}\). From Table 1, it is clear that the acceptor molecule is always more deformed (costing more energy) than the donor molecule except for the water dimer and the HF…F− system.

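Secondly, we define the traditional electrostatic energy between two atoms A and B from the Coulomb energy \({V}_{\mathrm{ee},\mathrm{C}}\) (see Eq. (5))

\[{V}_{elec}^{AB}={V}_{nn}^{AB}+{V}_{en}^{AB}+{V}_{ne}^{AB}+{V}_{\mathrm{ee},\mathrm{C}}^{AB} \tag{7}\]
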
by bringing in the nuclear charge density to balance the purely electronic Coulomb interaction (\({V}_{\mathrm{ee},\mathrm{C}}^{AB}\)), where n refers to the nucleus of the atom under whose superscript it directly appears. Thirdly, the full interaction energy (electrons and nuclei) between atoms A and B is defined as

\[{E}_{\mathrm{int}}^{AB}={V}_{elec}^{AB}+{V}_{Xc}^{AB} \tag{8}\]

which can again readily be generalised to the interaction energy between the donor (D) and acceptor (A) molecule, \({E}_{\mathrm{int}}^{DA}={V}_{elec}^{DA}+{V}_{Xc}^{DA}\), by simple summation of the participating atoms, where DA (a shorthand for D…A) refers to the interaction between D and A. Fourthly, the supermolecular complex’s binding energy \({E}_{\mathrm{bind}}\) is defined as

\[{E}_{\mathrm{bind}}={E}_{\mathrm{def}}^{D}+{E}_{\mathrm{def}}^{A}+{E}_{\mathrm{int}}^{DA} \tag{9}\]

which shows that binding is ultimately the result of attractive interatomic interactions partially counteracted by the atoms’ positive deformations. This binding energy is typically associated with the strength of the hydrogen bond. For example, one may read off Table 1 that “the water dimer is held together by a hydrogen bond” of about 21.3 kJ/mol (the typical 5 kcal/mol, familiar to many). Note that each fragment’s (i.e. molecule’s) Edef is obtained by subtracting the total energy of the optimised molecule in vacuo. Group (i.e. fragment or molecule) deformation energies thus include the so-called preparation energy due to the rearrangement undergone by the molecule in transitioning from its in vacuo geometry to the interacting geometry.
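
The bookkeeping behind the binding energy, and the unit conversion quoted above, can be checked with a few lines of arithmetic. The sketch assumes that the binding energy is the sum of the two monomer deformation energies and the donor–acceptor interaction energy, as the description above suggests. The three input values are placeholders and NOT the Table 1 entries; they were picked only so that their sum gives the −21.3 kJ/mol quoted for the water dimer. The function name `binding_energy` is hypothetical.

```python
import math

KJ_PER_KCAL = 4.184  # thermochemical calorie

def binding_energy(e_def_donor, e_def_acceptor, e_int):
    """Binding energy as deformation (positive) plus interaction (negative)."""
    return e_def_donor + e_def_acceptor + e_int

# Placeholder inputs in kJ/mol (not taken from Table 1).
e_bind = binding_energy(e_def_donor=30.0, e_def_acceptor=40.0, e_int=-91.3)
e_bind_kcal = e_bind / KJ_PER_KCAL
print(round(e_bind, 1), "kJ/mol =", round(e_bind_kcal, 2), "kcal/mol")
```

The sign convention matters here: both deformation energies enter with a positive sign, so the interaction energy must be more negative than their sum for the complex to be bound.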

At atomic (rather than monomeric) level, it is useful to inspect the electrostatic energy of the hydrogen bond itself, \({V}_{elec}^{HB}\), involving only the hydrogen-bonded hydrogen atom and the base atom it is bonded to (N, O, or F). Table 1 provides this energy, as well as the exchange–correlation counterpart \({V}_{Xc}^{HB}\). Finally, the charge transfer between the donor and acceptor molecule is gauged by summing the net atomic charges of the donor (just by choice), QD. Table 1 shows that electronic charge (expressed in the atomic unit of “electron, e”) always migrates from the acceptor to the donor because all values of QD are negative.

A final note concerns the relation of IQA to other energy decomposition schemes. The IQA energy terms used in this article are well-defined and consistent within the context of IQA. Already in 2006, work [60] of the Oviedo group made careful comparisons between IQA and SAPT, [61] the paradigm of modern perturbation approaches. Hence, there is a perspective for comparing IQA and non-IQA methods, and IQA is not isolated. However, in order to maximise the extent of comparison, that is, ensuring that energy terms mean the same, physical principles may have to be violated (such as the Pauli principle). The same may be true for published comparisons [60, 62] between IQA on the one hand and KM, [63] NBO, [64] EDA, [65] or NEDA [66] on the other.

2.3 Covalency is a sliding scale

The interatomic exchange energy, denoted VX(A,B), quantifies the covalent character of the interaction between any two atoms A and B no matter how far apart. The fact that VX(A,B) adopts a range of values undermines the traditional binary picture of covalent versus non-covalent interactions. A systematic study [67] of VX(A,B) values for dozens of simple but representative compounds proves this point alongside many other interesting points whose discussion is precluded by space restriction. It is informative to plot the relationship between |VX(A,B)| and internuclear distance d(A,B), as is done in Fig. 3 for the global minimum of the water dimer.

As anticipated, the molecular Lewis diagram is recuperated from its clear signature in such a plot. Indeed, all molecules clearly show a cluster housing all expected (covalent) bonds and thereby recovering the stripes in the Lewis diagram (or the sticks between the balls in a 3D ball-and-stick model). These so-called 1,2 interactions (originating in force field language) appear as rather isolated clusters of several hundreds of kilojoules per mole. Their island-like nature perhaps instigates the perception of the black-and-white covalent/non-covalent divide. But then the 1,3 interactions (where now two covalent bonds link the two nuclei of interest) can be associated with still handsome values for |VX(A,B)|, of the order of 10 kJ mol−1. The 1,4 interactions are typically again weaker than the 1,3 interactions, by an order of magnitude or so.

A look at all 1,n interactions (including n > 4) in molecular (rather than metallic-like or strongly conjugated (e.g. polyaromatic)) systems exposes an exponential decay of |VX(A,B)| with increasing distance. Certainly one can draw a broad line that connects the various 1,n clusters. However, it turns out that hydrogen bonds appear somewhat set aside from this line. For example, the classical O–H…O hydrogen bond occurring in the global minimum of the water dimer has a |VX(A,B)| value that is too high for its internuclear distance according to that overall connecting broad line. This means that the O…H bond is more covalent than expected. Moreover, the O…O interaction is also anomalously strong (i.e. covalent) for its internuclear distance. When taken together, both observations suggest that hydrogen bonding should actually be seen as a three-atom phenomenon, involving the donor atom (D), the acceptor atom (A), and the hydrogen atom. Truly, in a D-H…A system, both |VX(D,A)| and |VX(H,A)| values are anomalous because they do not appear in the expected place in the VX(A,B)- d(A,B) plot.
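
The idea of a broad line in a logarithmic plot, and of an interaction lying above it, can be sketched as a straight-line fit of ln|VX| against distance. The data below are synthetic and NOT taken from the article: reference values follow 1000·exp(−2d) kJ mol−1 with an alternating ±10% scatter, and the "hydrogen bond" candidate is simply set ten times higher than that trend. The function names are hypothetical.

```python
import numpy as np

# Synthetic reference interactions: distance (angstrom) and |V_X| (kJ/mol).
distances = np.linspace(1.0, 5.0, 9)
scatter = np.where(np.arange(distances.size) % 2 == 0, 1.1, 0.9)
abs_vx = 1000.0 * np.exp(-2.0 * distances) * scatter

def fit_exponential_decay(d, v):
    """Least-squares line through ln(v) versus d; returns (slope, intercept)."""
    slope, intercept = np.polyfit(d, np.log(v), 1)
    return float(slope), float(intercept)

def excess_factor(d, v, slope, intercept):
    """Observed |V_X| divided by the value the fitted line predicts at d."""
    return float(v / np.exp(intercept + slope * d))

slope, intercept = fit_exponential_decay(distances, abs_vx)

# Synthetic candidate contact placed well above the reference trend.
candidate_distance = 1.9
candidate_vx = 10.0 * 1000.0 * np.exp(-2.0 * candidate_distance)
factor = excess_factor(candidate_distance, candidate_vx, slope, intercept)
print(f"decay constant: {slope:.3f} per angstrom, excess factor: {factor:.2f}")
```

Fitting the line to the reference interactions only, and then testing a contact against it, keeps the anomalous point from pulling the line towards itself. A factor well above one is the numerical counterpart of a point that sits above the broad line.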

The 529 interatomic interactions of the oligopeptide GlyGlyGly have also been studied [50] by such a plot (up to 1,15 interactions) as shown in Fig. 4. Again the broad line emerges (now in black), connecting very weak interactions (hundredths of kJ mol−1) to the covalent bonds of the completely recovered Lewis diagram (hundreds of kJ mol−1). Curiously, the |VX(N,O)| values arising in the four peptide groups are unexpectedly large. This N…O “through space” contact occurs in the O = C-N group and suggests that the peptidic CN bond is harder to break than expected. As a reason for this observation, one can think of the extra “glue” offered by the N…O interaction stabilising the peptide group.

Logarithmic plot of interatomic distance versus |VX(A,B)| for Gly-Gly-Gly interactions up to 1,6, calculated at both HF/6–31 + G(d,p) and B3LYP/6–31 + G(d,p) level of theory. Key outliers of VX have been labelled in both the plot and the insert molecular image. The black line shows the overall correlation of the B3LYP energies, with a correlation coefficient \({r}^{2}\) of 0.91

A second case study in our work [50] focused on alloxan, a heterocyclic planar molecule. The stability of its crystalline form is puzzling because it lacks any hydrogen bonds, which usually fulfill the role of stabiliser. For 40 years, this crystal structure was regarded as “problematic” until in 2007 Dunitz and Schweizer suggested [68] that it may be explained by important attractive interactions of the type C = O…C = O. Interestingly, strong intermolecular |VX(C,O)| values were found (stronger than intra-molecular 1,4 interactions), of the order of 20 kJ mol−1 each, which supports their hypothesis.

In summary, the image that QTAIM leaves one with, in its description of molecules and their aggregates (condensed matter), is one of “bubbles”. These are the space-filling, topological atoms, which initially appear without sticks, that is, if the system is thought of in terms of balls and sticks in the first place. Each bubble interacts with any other and their shapes do not give away which atoms are bonded to which. Bonding patterns, of various strengths, then emerge from the energy term VX, which acts as a covalency quantifier. We return to the issue of bonded versus non-bonded interactions in Section 2.5 in connection with force field planning but first we follow through the lack of overlap between topological atoms.

2.4 The full consequence of no overlap

The space-filling nature of topological atoms means that there are no gaps between them. This is the correct topological picture, which applies to condensed matter. However, in 1987, Bader et al. [69] defined and calculated atomic volumes, occurring in gas-phase molecules, by considering a practical, finite edge to a molecule. This view was based on the concept of collision diameters, which seem to endow molecules with some finite volume, often based on the ρ = 0.001 a.u. or 0.002 a.u. constant electron density envelope. Similarly, the traditional (non-topological) picture often shows atoms on different molecules being separated by portions of empty space. For example, the Corey-Pauling-Koltun (CPK) picture portrays molecules as having an abrupt edge, presenting them as macroscopic objects that can literally be grabbed.

The consequence of the space-filling nature of condensed matter (e.g. a ligand inside a protein pocket) is that each point in space must belong to an atom; there is no “empty” unassigned space. The full impact of this fact must still be worked out in connection with protein–ligand docking [70]. Admittedly, the CPK picture may serve mainly visual convenience, while traditional quantum descriptions think of molecules as never-ending (overlapping) clouds. These two pictures clash, and both interpretations actually disturb energy bookkeeping. Electrostatic energy, for example, is mathematically connected to electron density (see the first term of Eq. (5)). Thus, if some “empty space” electron density does not belong to an atom then the associated electrostatic energy does not either. This means that some energy will be unaccounted for. Equally, if some electron density simultaneously belongs to two atoms then energy will be double-counted. In summary, space-filling atoms present a clean and minimal picture, certainly when constructing a force field because all energies must be associated with nuclei.
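The bookkeeping argument can be illustrated with a deliberately simple numerical sketch. It uses a made-up one-dimensional density and integrates the electron population, a property that (like the electrostatic energy) is tied to the density, over three hypothetical partitionings: space-filling, gapped, and overlapping. The grid, the density, and the position of the boundary are all assumptions chosen for illustration; nothing here is an actual QTAIM calculation.

```python
import numpy as np

# Toy 1D "electron density": two Gaussians on hypothetical nuclei at x = -1 and x = +1.
x = np.linspace(-8.0, 8.0, 4001)
dx = x[1] - x[0]
rho = np.exp(-(x + 1.0) ** 2) + 0.5 * np.exp(-(x - 1.0) ** 2)
total = rho.sum() * dx  # population of the whole system (simple Riemann sum)

def summed_populations(w_a, w_b):
    """Sum of the two atomic populations for given per-atom weight functions."""
    return (w_a * rho).sum() * dx + (w_b * rho).sum() * dx

boundary = 0.2  # hypothetical interatomic boundary, placed by hand
strip = 0.3     # half-width of the region that is left empty or shared below

# (1) Space-filling atoms: every grid point belongs to exactly one atom.
w_a = (x < boundary).astype(float)
exhaustive = summed_populations(w_a, 1.0 - w_a)

# (2) Gapped atoms: the strip around the boundary belongs to nobody.
gapped = summed_populations((x < boundary - strip).astype(float),
                            (x > boundary + strip).astype(float))

# (3) Overlapping atoms: the same strip is claimed by both atoms.
overlapping = summed_populations((x < boundary + strip).astype(float),
                                 (x > boundary - strip).astype(float))

print(f"total       = {total:.6f}")
print(f"exhaustive  = {exhaustive:.6f}  (recovers the total)")
print(f"gapped      = {gapped:.6f}  (some population unaccounted for)")
print(f"overlapping = {overlapping:.6f}  (some population double-counted)")
```

Only the first partitioning returns atomic contributions that add up to the total; the other two lose or duplicate whatever sits in the strip.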

Figure 5 shows an example of the space-filling character of topological atoms in an intermolecular context. It is clear that the two molecules forming a van der Waals complex do not overlap; instead, they indent each other. If the complex were strongly compressed, then the molecular distortion would increase but the respective atoms would remain well-defined, and so would their properties.

This fully non-overlapping picture is the purest (in the world of QCT) that one can work with but it can be diluted. In that case, a “halfway house” compromise appears in which the molecules themselves consist of non-overlapping atoms but the molecules are allowed to overlap each other. This route was followed in a study [71] of the convergence behaviour of the electrostatic interaction, allowing for two separate and overlapping monomeric wavefunctions. It should be mentioned that this convergence behaviour was also investigated [42] for atoms appearing in supermolecular wavefunctions, which corresponds to the proper topological view of non-overlapping monomers. Further reflection on the nature of overlapping objects has been published in the context of clouds [72] in the sky and even colliding galaxies [73].

“Monomeric simulations” can still be carried out (for example refs [74,75,76]) as a first approximation by allowing the “topological sacrilege” of overlapping molecules. The force field FFLUX, [77] which is still under construction because of its novel architecture and its tabula rasa origin, embraces the idea of non-overlapping molecules. We strive to work out the full impact of that idea once transferability has been incorporated into FFLUX. FFLUX uses the machine learning method kriging [78] to map the atomic energies and multipole moments (output) of a given atom onto the coordinates of the atoms surrounding it (input). How to do this for the atoms in a central water molecule inside a water decamer, for example, has been shown before [79].

2.5 Bonded versus non-bonded interactions

The construction of classical force fields is strongly influenced by the binary divide between bonded (i.e. covalent) and non-bonded (non-covalent) interactions. The bonded interactions are typically of the types 1,2; 1,3; and 1,4. These interactions are solely modelled by bond-stretching potentials (e.g. harmonic, cubic, or even Morse-like), valence potentials, and torsion potentials. The non-bonded interactions (1,n; n > 4) are then suddenly modelled by an electrostatic potential. An honest and innocuous question is why bonded atoms do not interact electrostatically too? Of course, physically they do. Undeniably, within the IQA ansatz, the quantity \({V}_{\mathrm{ee},\mathrm{C}}^{AB}\) appearing in Eq. (5) is not restricted to A…B interactions of the type 1,n > 4. So classical and many next-generation force fields have a non-physical dichotomy at the heart of their design. Perhaps this dichotomy causes the documented ambiguities in energy representation at the level of 1,4 interactions, which are at the border between bonded and non-bonded interactions. Similarly, hydrogen bonds have witnessed a checkered history in the modelling of their energies, with dedicated potentials being added and then eliminated again during the typically protracted chronology of force field development.

A variant of the innocuous question above is why the dispersion interaction does not operate between bonded atoms either in the familiar potentials of force fields. For sure, the dispersion part of the Lennard–Jones potential only seems to act between non-bonded atoms. Looking at Eq. (5) shows that now the atomic electron correlation, denoted \({V}_{\mathrm{ee},\mathrm{c}}^{AB}\), is again not restricted in terms of 1,n types. Indeed, dispersion should be covered by the dynamic correlation behind \({V}_{\mathrm{ee},\mathrm{c}}^{AB}\). Such correlation energies can be routinely calculated at the Møller-Plesset level, initially for very small systems [80] and then upscaled [81] to MP2 correlation energies for bonded and non-bonded interactions in a deprotonated and hydrogen-bonded glycine…water complex, for example. FFLUX is planned to also incorporate this type of energy contribution and thereby break the artificial bonded/non-bonded barrier. Although a proof-of-concept to machine learn \({V}_{\mathrm{ee},\mathrm{c}}^{AB}\) was recently reached, [82] the enormous size of the two-particle density matrix causes the concomitant computation to be very slow too. Work to tackle this challenge is in progress in our lab.

2.6 No perturbation theory

A long time ago, it was decided that FFLUX should not be developed within the context of long-range Rayleigh-Schrödinger perturbation theory [83]. Intermolecular forces at long range are traditionally treated according to this formalism, which operates when the overlap between interacting moieties is small (although textbooks do not seem to quantify “small”). The very idea of perturbation theory has a stronger imprint on classical and next-generation force fields (listed above) than expected at first glance. This imprint consists of the rigid-body nature of the molecule being perturbed. Force fields that incorporate advanced treatments such as distributed multipole moments or polarisabilities were originally limited to handling rigid fragments. This imprint also has an impact on polymorphism prediction, [84] for example, when using force fields. However, the introduction of machine learning into the world of topological atoms has freed FFLUX from the rigid-body shackles. It is possible [85] to carry out simulations with flexible water molecules whose (high-rank) atomic multipole moments vary with the water molecules’ geometries.

Let us return to “monomeric” molecular dynamics simulation. Here, one allows single molecules to interact via their gas-phase (isolated) wavefunctions. In principle, polarisability can be added [86] to the potential governing the simulation. Instead of introducing a single polarisability tensor for the whole molecule, it is better to work with atomically distributed polarisabilities. However, in our group (so not in general), this route was abandoned early on. This local decision was taken in spite of the fact that the topological partitioning generates polarisabilities of excellent stability [87] with respect to basis set variation. Note that some non-topological (atomically distributed) polarisabilities [88] suffer from instability but ISA-Pol is basis set stable. What is then the ultimate strategy (“Plan A”) for FFLUX?

First, Plan B is discussed briefly because it is easier and has already been realised. We are currently running monomeric simulations on liquid water with high-rank multipolar electrostatics implemented by Smooth Particle Mesh Ewald (SPME) summation. A given water, call it central, interacts with the electric field generated by the surrounding waters. This interaction causes electrostatic forces on the nuclei. In turn, these forces cause a geometry change inside the (flexible) water molecule. This change leads to a new intra-molecular energy, which is predicted by machine learnt models, one prediction for each (new) atomic energy. The machine learning only needs the input of that new geometry to make its predictions. Secondly, concomitant models predict the new multipole moments for that geometry. These new moments create a new electric field such that another iteration of energy and geometry adjustment can happen. The whole process is a “negotiation” of intra- and intermolecular energy, which makes the geometry of each participating water molecule fluctuate.

Note that any polarisation effects are based on the change of electron density within a single gas-phase molecule. Unfortunately, these effects are small compared to the more realistic situation where a change in electron density (and thus multipole moments) is obtained from a water wavefunction calculated in an electric field. One could go down this route (and call it Plan B) but it is tempting to take up the challenge of Plan A right away. This plan involves training the models for pieces of matter larger than a single molecule, for example, a water dimer or trimer. We call this “oligomeric modelling”, which will automatically take into account how the electron density of an atom inside an oligomer changes. This change will cover all polarisation effects, including that due to partial covalency in hydrogen bonds and to many-body influence. In summary, FFLUX treats polarisation, not by focusing on the process of polarisation (i.e. polarisability) but on the result of this process (i.e. the final multipole moments including charge (or 0th moment)).

According to the polarisation approximation, [89] the energy of two interacting ground-state molecules A and B consists, up to second order, of the sum of (i) the individual molecules’ energy (0th order), (ii) the purely electrostatic energy between A and B (1st order), and (iii) the induction energy of A and of B, and the dispersion energy between A and B (2nd order). These energy contributions are well-defined within this particular formalism, and can be calculated accordingly. However, how robust is the definition of dispersion outside the polarisation approximation?

Imagine a practical case where the formal distinction between intra- and intermolecular interaction is blurred. Take a chainlike molecule and curl it such that its two endpoints are facing each other over a short distance. The atoms of these endpoints interact as if they were part of two different molecules. In other words, if the chain were not shown in full, then one would not know that these terminal atoms are actually part of the same molecule, that is, a curled chain. The formal difficulty is that the unperturbed Hamiltonian cannot be written as a sum of two Hamiltonians, one for each isolated fragment A or B, because there are no two fragments. Instead, there is a single molecule. Thus, one may conclude that the concept of dispersion mathematically dissolves because of the framework of perturbation theory. Yet, there must be dynamical correlation between these two end-of-chain atoms. The topological interatomic correlation energy, \({V}_{\mathrm{ee},\mathrm{c}}^{AB}\), which exists independently of perturbation theory, will pick up this phenomenon. In fairness to symmetry-adapted perturbation theories such as SAPT, [61] however, it is finally possible to formulate an atomically decomposed version called A-SAPT [90]. A melee of partitioning methods is invoked for the different types of energy contributions, running counter to the minimal and streamlined philosophy behind IQA. Alas, A-SAPT often has difficulty producing chemically useful partitions of the electrostatic energy, due to the buildup of oscillating partial charges on adjacent functional groups. This is why, immediately after the presentation of A-SAPT, F-SAPT was proposed, [91] the functional-group SAPT partitioning. F-SAPT could be used to solve the problem of the curling chainlike molecule mentioned above, as could an incremental fragmentation method [92].
Note, however, that IQA does not need special constructions to be able to calculate any interaction energies between any two atoms, wherever they occur, within the same molecule or not. The “curly chain molecule” situation is not a problem for this method, atoms being atoms, wherever they are; the wavefunction that provides the atomic electron densities can be any justifiable size, whether a single molecule or an assembly thereof.

A second problem with perturbation theory is that, at short-range, Rayleigh-Schrödinger perturbation theory actually breaks down because there is no unique definition of the order of a term in the perturbation expansion. However, exchange perturbation theories have been proposed, of two main types: symmetric methods (e.g. Stone-Hayes [93]) or symmetry-adapted theories (e.g. SAPT [61]). Then again, however, short-range perturbation theory is computationally expensive because one needs to take into account the other molecules that a given molecule interacts with. This is still true for methods such as SAPT(DFT), which are competitive in terms of accuracy and computational expense. In contrast, no knowledge of surrounding molecules is required for long-range perturbation theory. In other words, one can calculate the polarisability of a molecule without having to know which molecule causes the polarisation.

Based on the considerations above, it makes sense to develop FFLUX using supermolecular wavefunctions. The latter are free of any molecular imprint. At a deep level, FFLUX’s architecture does not differentiate between intra- and intermolecular interactions: an atom is an atom wherever it is. Put differently, the electron density of the overall system partitions itself, according to QCT, by following the (nuclear) attractors rather than by where the molecules stop or start. The machine learning is well suited to be trained on atoms that are sufficiently embedded in a relevant piece of matter.

2.7 No penetration, no damping functions

A damping function is a mathematical function that prevents an energy from becoming unreasonably large (and even infinite) at short range. A damping function can occur within the context of electrostatic energy, induction energy, or dispersion energy (e.g. reference [94]). The reason for damping functions can be traced back to the so-called penetration effect, which in turn follows from the (conceptual) picture of electron clouds extending infinitely far and thus being able to substantially overlap with each other. However, if electron clouds do not overlap, as in the topological approach, then no penetration effect emerges. Both the penetration energy and damping function arise from the fact that the point at which we want to know the electrostatic potential resides inside the electronic charge density that generates this potential. However, with a topological atom, it is possible to take a point that is rigorously outside the atom (i.e. the finite object that generates the potential) and calculate [95] the electrostatic potential at that point.
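The origin of the penetration effect can be made concrete with two textbook charge distributions. The sketch below, in atomic units, compares the point-charge potential q/r with the exact potential of (i) a spherical Gaussian cloud that extends infinitely far, and (ii) a uniformly charged ball of finite radius. Both models are assumptions chosen for simplicity: a topological atom is finite but not spherical, so this is only a cartoon of the argument, and the function names are hypothetical.

```python
import math

def v_point(q, r):
    """Potential of a point charge q at distance r."""
    return q / r

def v_gaussian_cloud(q, r, sigma):
    """Exact potential of a spherical Gaussian cloud (total charge q, width sigma)."""
    return q * math.erf(r / (math.sqrt(2.0) * sigma)) / r

def v_uniform_ball(q, r, a):
    """Exact potential of a uniformly charged ball of finite radius a."""
    if r >= a:
        return q / r  # outside the finite object: identical to the point charge
    return q * (3.0 * a * a - r * r) / (2.0 * a ** 3)

q, sigma, a = 1.0, 1.0, 1.0
for r in (0.5, 1.5, 3.0, 10.0):
    pen_cloud = v_point(q, r) - v_gaussian_cloud(q, r, sigma)
    pen_ball = v_point(q, r) - v_uniform_ball(q, r, a)
    print(f"r = {r:5.1f}  error vs infinite cloud = {pen_cloud:.3e}  "
          f"error vs finite ball = {pen_ball:.3e}")
```

For the infinite cloud the point-charge formula is always in error, and badly so at short range, which is what a damping function is meant to repair. For the finite ball the error is exactly zero at every point outside the ball.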

2.8 Point-charges for electrostatics versus monopole moments as a measure for charge transfer

In research circles preoccupied with applied biomolecular simulation, the point-charge is still the standard way of handling electrostatic interaction. While more and more ambitious biomolecular problems are tackled at an accelerated pace, it is a “suppressed truth” that the results ultimately depend on the quality of the potentials used. The electrostatic component of these potentials is crucial in the polar and charged systems that are biosystems. The examples of non-covalent interactions mentioned in the “Introduction” cannot be treated correctly if the electrostatics are faulty. And they are faulty at short and medium range if an atom’s charge density is represented by only one point-charge.

While several research groups (e.g. references [84, 96,97,98,99,100,101,102,103,104] amongst others) continue to improve potentials, the essentially stagnant architecture of classical force fields means that ever growing computer power will yield the wrong answer faster, frankly. A recent and dramatic example [105] of the lack of reliability is the comparison of ensembles of intrinsically disordered proteins, generated by eight all-atom empirical force fields, with primary small-angle X-ray scattering and NMR data. Ensembles obtained with different force fields exhibit marked differences in chain dimensions, hydrogen bonding, and secondary structure content. These differences turned out to be unexpectedly large: changing the force field is found to have a stronger effect on secondary structure content than changing the entire peptide sequence! All this vindicates a fresh start in force field design and FFLUX is such an attempt, commenced several years ago.

A second, more modest but still poignant, case study [106] is that on the paradigm molecule trialanine. This thorough computational and experimental study ran 20 ns simulations with six different force fields [Amber (parm94, parm96), GROMOS (43A1, 45A3), CHARMM (1998) and OPLS (all atom, 1996)]. Their conclusion was disappointing: “…lifetimes of the conformational states differ by more than an order of magnitude, depending on which model.” Indeed, even the minor modification between “parm94” and “parm96” significantly changed the population ratio of the conformational states.

There is considerable evidence [107] that multipolar electrostatics overcome the limitations of the ubiquitous point-charge approach. In particular, a model of one point-charge for each atom fails to capture the anisotropic nature of electronic features such as lone pairs or π-systems. However, high-rank electrostatic terms (involving multipole moments) naturally recover these important electronic features. One extremity is to add (point) multipole moments centred on the nucleus. The other extremity is to surrender to the obsession of point-charges and add more point-charges per nucleus, away from the nuclear position. This route was followed by the TIPnP family of water potentials, for example. However, a third option, of searching for the elusive point-charge that will magically turn out to be correct at short range, is pointless. No work seems to have been done [108] on comparing the computational cost of using multipole moments versus an equivalent number of point-charges yielding the same accuracy.

A final comment relates to an unfair interpretation [55] of the performance of a point-charge in reproducing the molecular dipole moment or the electrostatic potential. We briefly discuss both, in turn. Claiming that QTAIM charges do not reproduce the molecular dipole moment is misleading because the latter is made up of two contributions, often similar in magnitude. One contribution is the typical one, due to the point-charges. This interatomic charge transfer component is the only contribution considered in naïve and incomplete accounts. The other is the intra-atomic (dipolar) polarisation contribution, which should not be ignored. If one omits this contribution, then one cannot explain the nearly vanishing dipole moment of carbon monoxide, for example. QTAIM clearly explains what happens in CO, and does so with its typically large values for the monopole moments (i.e. charges) but which are consistent with the electronegativity difference between carbon and oxygen. When it comes to the electrostatic potential, a QTAIM charge does not claim to be able to reproduce it well. The argument is that it does not have to do so: an atomic charge is a measure or product of charge transfer, no more and no less. If an electrostatic potential [95, 109] or interaction [71] is to be modelled exactly then atomic multipole moments need to be invoked. The latter are necessary to represent the details of an atomic electron density beyond the first “summary” offered by a point-charge.
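The two contributions can be written compactly. In the notation below, which is introduced here purely for illustration and is not taken from the numbered equations of this article, the dipole moment of a neutral molecule reads

\[ \boldsymbol{\mu}_{\mathrm{mol}} = \sum_{A} q_{A}\,\mathbf{R}_{A} + \sum_{A} \boldsymbol{\mu}_{A} \]

where \(q_{A}\) is the net charge of topological atom A, \(\mathbf{R}_{A}\) is the position of its nucleus, and \(\boldsymbol{\mu}_{A}\) is the atomic dipole moment evaluated with that nucleus as origin. The first sum is the interatomic charge transfer term and the second is the intra-atomic polarisation term. In CO the two sums are large but opposed, which is how sizeable atomic charges can coexist with a nearly vanishing molecular dipole moment.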

2.9 Multipolar electrostatics and convergence

We have thoroughly researched [42, 71, 95, 109,110,111,112,113,114,115,116] topological multipolar electrostatics, especially the convergence behaviour of the multipolar expansion. At very long range, the interatomic electrostatic interaction \({V}_{elec}^{AB}\) becomes identical to the usual \(q_{A} q_{B}/r_{AB}\) expression, where q is a net atomic charge (e.g. −1.1 e for oxygen in water). At closer range, expressions for charge-dipole, dipole–dipole, and dipole-quadrupole are necessary to approximate \({V}_{elec}^{AB}\) better.
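A small numerical sketch may help to see how successive terms improve on the charge–charge expression. It replaces each atom by a hypothetical cluster of point charges, so that the exact Coulomb energy is a simple double sum, and compares that with the charge–charge, charge–dipole and dipole–dipole terms written in Cartesian form (atomic units). The clusters, the separation, and the function names are assumptions made for illustration only; quadrupolar and higher terms are deliberately left out, and this is not the spherical-tensor machinery used in the cited work.

```python
import numpy as np

def multipoles(charges, offsets):
    """Net charge and dipole moment of a point-charge cluster about its own centre."""
    charges = np.asarray(charges, float)
    offsets = np.asarray(offsets, float)
    return charges.sum(), charges @ offsets

def exact_energy(qa, ra, qb, rb, R):
    """Direct Coulomb sum; R is the vector from the centre of A to the centre of B."""
    energy = 0.0
    for qi, ri in zip(qa, np.asarray(ra, float)):
        for qj, rj in zip(qb, np.asarray(rb, float)):
            energy += qi * qj / np.linalg.norm(R + rj - ri)
    return energy

def expansion_terms(qA, muA, qB, muB, R):
    """Charge-charge, charge-dipole and dipole-dipole interaction energies."""
    r = np.linalg.norm(R)
    n = R / r
    cc = qA * qB / r
    cd = (qB * (muA @ n) - qA * (muB @ n)) / r**2
    dd = (muA @ muB - 3.0 * (muA @ n) * (muB @ n)) / r**3
    return cc, cd, dd

# Hypothetical clusters; the net charge of A (-1.1 e) merely echoes the value in the text.
qa, ra = [-0.9, -0.2], [[0, 0, 0], [0, 0, 0.5]]
qb, rb = [0.5, 0.05], [[0, 0, 0], [0.3, 0, -0.4]]
R = np.array([0.0, 0.0, 10.0])

qA, muA = multipoles(qa, ra)
qB, muB = multipoles(qb, rb)
cc, cd, dd = expansion_terms(qA, muA, qB, muB, R)
exact = exact_energy(qa, ra, qb, rb, R)

# Absolute error after including each successive term.
errors = [abs(exact - partial) for partial in (cc, cc + cd, cc + cd + dd)]
for label, err in zip(("charge-charge", "+ charge-dipole", "+ dipole-dipole"), errors):
    print(f"{label:16s} error = {err:.3e} hartree")
```

At this separation the error shrinks as terms are added. The residual after the dipole–dipole term is dominated by the omitted charge–quadrupole contributions, which decay with the same power of the distance.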

Figure 6 illustrates convergence (or lack thereof) for the case of the electrostatic interaction between the two hydrogen-bonded atoms (O…H) in the global minimum of the water dimer. The interaction rank L of the multipolar expansion is defined as

\(L = \ell_{A} + \ell_{B} + 1\)

where \(\ell_{\Omega }\) is the rank of a multipole moment centred on atom Ω. For example, \(\ell=2\) for a quadrupole moment, which has 5 (not 6) components in the spherical tensor formalism that we use (instead of the Cartesian one, which contains redundancies).
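The bookkeeping behind the interaction rank is easy to enumerate. The sketch below assumes the convention L = ℓ_A + ℓ_B + 1, which is consistent with L = 1 being the point-charge level in this section, and lists which pairs of multipole moments enter at a given L. It also counts components in the spherical and Cartesian formalisms. All function names are hypothetical.

```python
NAMES = ["charge", "dipole", "quadrupole", "octupole", "hexadecapole"]

def spherical_components(ell):
    """Number of independent components of a rank-ell spherical tensor."""
    return 2 * ell + 1

def cartesian_components(ell):
    """Number of distinct components of a symmetric rank-ell Cartesian tensor."""
    return (ell + 1) * (ell + 2) // 2

def terms_at_rank(L):
    """All (ell_A, ell_B) pairs with ell_A + ell_B + 1 == L."""
    return [(ell_a, L - 1 - ell_a) for ell_a in range(L)]

for L in range(1, 4):
    labels = [f"{NAMES[a]}-{NAMES[b]}" for a, b in terms_at_rank(L)]
    print(f"L = {L}: " + ", ".join(labels))

for ell in range(5):
    print(f"{NAMES[ell]:13s} spherical: {spherical_components(ell)}  "
          f"Cartesian: {cartesian_components(ell)}")
```

With this convention, L = 3 collects the charge-quadrupole, dipole-dipole and quadrupole-charge terms, and the quadrupole moment has 5 spherical components against 6 Cartesian ones.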

Fig. 6 The exact electrostatic interaction energy \({V}_{elec}^{AB}\) between two (topological) atoms (dashed horizontal line) compared with the energy obtained by multipolar expansion according to interaction rank L for the hydrogen-bonded atoms (O5 and H2) in the global minimum of the water dimer [114].

The energy profile in Fig. 6 shows that the point-charge representation (L = 1) is a poor approximation to the exact electrostatic interaction, \({V}_{elec}^{AB}\), obtained without expansion. The situation improves dramatically at L = 2, with the addition of a dipole moment on oxygen and on hydrogen. Worse agreement then appears at L = 3 but at L = 5 the convergence is essentially exact. This stays so as L increases until divergence sets in after L = 13. This whole energy profile is a case of pseudo-convergence because of the convergence breakdown at very high L. However, for all practical purposes, an essentially exact \({V}_{elec}^{AB}\) value can be obtained from the multipolar expansion for a stable plateau between L = 4 and L = 12. Finally, real (full) convergence can be realised while divergence never occurs, no matter how high the interaction rank L. We note that this formal (exact) convergence is elusive if the respective atoms are infinite in size, which is the case for DMA. It would be nice if this topological convergence work of this section were finally reported in a classic and otherwise valuable reference work on intermolecular forces, [83] the second edition of which unfortunately still mentions the same erroneous statement on the poor convergence of topological atoms as in the first edition.

Next, a systematic study [117] on the small protein crambin provides a wealth of information on universal convergence behaviour between the five most common elements of Life (C, H, N, O and S). One of five key questions answered in that study asks: For a given convergence energy error, ΔE, and a given pair of atoms A and B, how does the interaction rank L at which the energy has converged, change with increasing internuclear distance R? Essentially this question reduces to when a multipolar expansion can be truncated. This is actually a five-dimensional function, involving the quantities R, L, and ΔE, and the qualifiers A and B. It is abundantly clear that short-range electrostatics cannot be achieved by multipole moments. Instead, exact electrostatics is achieved via six-dimensional integration over two interacting atoms. However, accurate electrostatic descriptions (within 0.1 kJ mol−1 of the exact answer) can be obtained, for example for O…H interactions, if the charges are far apart. An internuclear distance of around 25 Å would be safe to cover all geometries and particular atoms. However, a distance of around 8–9 Å already suffices for some favourable interactions. An example of good news in this context is that all C…C interactions are within 0.1 kJ mol−1 of the exact answer already for L = 3 (dipole–dipole), and already around 7 Å.

Finally, molecular dynamics simulations on water clusters (25–216 molecules) with a machine-learnt quantum topological monomeric water potential should be mentioned [118]. Both the intramolecular energy and the atomic multipole moments were trained by Gaussian process regression (aka kriging). This is the first time that multipolar electrostatics “negotiated” its intermolecular energies with the monomers’ intramolecular energy. It turned out that while incorporating charge-dipole interactions into the description of the electrostatics resulted in only minor differences, the incorporation of charge-quadrupole, dipole–dipole, and quadrupole-charge interactions resulted in significant changes to the intermolecular structuring of the water molecules.

2.10 Polarisation

Typically, anisotropic polarisabilities handle the complexity of the electron density responding differently depending on direction, and capture the subtleties for all multipole moments. Long-range perturbation theory is the traditional framework in which this approach is formulated. However, one could argue that long-range perturbation theory has two conceptual drawbacks when it operates at too short a range (and SAPT is not invoked): (i) it handles charge transfer in a “bolt on” manner, and (ii) it causes the polarisation catastrophe. A further drawback is more practical in nature: a polarisation scheme governed by a polarisability tensor introduces computational overhead during a molecular simulation. This is so because, for each number of time steps, the new multipole moments resulting from each polarisation process must be computed “on-the-fly” in an iterative manner, until self-consistency is reached.

In order to tackle these drawbacks, we proposed [119] an approach that does not focus on the polarisability itself but on the effect that it has, after the polarisation process has done its work, as it were. The machine-learning-based force field FFLUX embraces this idea and thereby predicts the new multipole moments that an atom must adopt when in the presence of a new atomic environment. A machine learning method is basically a mapping between inputs and outputs, and needs to be trained to achieve a successful mapping. This success is measured by an objective function, which is essentially the difference between an original output and a predicted output. This difference (i.e. prediction error) should be minimised and this is best done by comparing the predictions against an external test set, i.e. data points that do not appear in the training set. FFLUX took up a method called kriging or Gaussian processes in order to predict the effect of polarisation. However, we originally used [120] an artificial neural network but this methodology was abandoned [121] in favour of kriging because the latter is more accurate albeit more CPU intensive. An important advantage of kriging is that it handles a high-dimensional input space better than neural networks.
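The training-and-testing procedure described above can be sketched in a few lines. The example below is a minimal Gaussian process (kriging) regressor with a squared-exponential kernel, trained on a made-up smooth function of three water-like geometric features and then scored on an external test set. Everything specific is an assumption for illustration: the class name, the toy target, the features, and the hand-picked hyperparameters (in practice these would be optimised). It is not the FFLUX implementation.

```python
import numpy as np

def rbf_kernel(X1, X2, length_scale, variance):
    """Squared-exponential covariance between two sets of input points."""
    d2 = ((X1[:, None, :] - X2[None, :, :]) ** 2).sum(axis=-1)
    return variance * np.exp(-0.5 * d2 / length_scale**2)

class SimpleKriging:
    """Bare-bones kriging predictor with fixed hyperparameters (hypothetical helper)."""

    def __init__(self, length_scale=0.3, variance=1.0, nugget=1e-6):
        self.length_scale, self.variance, self.nugget = length_scale, variance, nugget

    def fit(self, X, y):
        self.X, self.mean = X, y.mean()  # sample mean used as the constant trend
        K = rbf_kernel(X, X, self.length_scale, self.variance)
        K += self.nugget * np.eye(len(X))  # small nugget for numerical stability
        self.alpha = np.linalg.solve(K, y - self.mean)
        return self

    def predict(self, X_new):
        K_star = rbf_kernel(X_new, self.X, self.length_scale, self.variance)
        return self.mean + K_star @ self.alpha

REFERENCE = np.array([0.96, 0.96, 1.82])  # hypothetical bond lengths and angle (rad)
rng = np.random.default_rng(0)

def sample_geometries(n):
    """Random distortions around the hypothetical reference geometry (inputs)."""
    return REFERENCE + np.array([0.10, 0.10, 0.20]) * rng.uniform(-1.0, 1.0, size=(n, 3))

def toy_atomic_property(X):
    """Made-up smooth target standing in for an atomic energy or multipole moment."""
    d = X - REFERENCE
    return 2.0 * d[:, 0]**2 + 2.0 * d[:, 1]**2 + 0.3 * d[:, 2]**2 + 0.5 * d[:, 0] * d[:, 2]

X_train, X_test = sample_geometries(80), sample_geometries(40)  # test set is external
y_train, y_test = toy_atomic_property(X_train), toy_atomic_property(X_test)

model = SimpleKriging().fit(X_train, y_train)
mae_test = np.abs(model.predict(X_test) - y_test).mean()
mae_baseline = np.abs(y_train.mean() - y_test).mean()  # "always predict the mean"
print(f"test MAE = {mae_test:.2e}   baseline MAE = {mae_baseline:.2e}")
```

The prediction error is measured only on geometries that the model never saw during training, and it is compared with a trivial baseline so that the number has a scale.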

It is important to realise that all multipole moments (including monopole and dipole moment) are treated in the same way. Essentially, charge transfer is the effect of “monopolar polarisation”. Thus, charge transfer is not in need of special handling but is just another special case of multipolar polarisation. Hence, unlike in perturbation theory, FFLUX handles charge transfer and multipolar polarisation in a unified [122] and streamlined manner. Having a robust way of defining atomic charges is pivotal to make this uniform treatment a reality but, earlier on, QTAIM (which underpins FFLUX) has been argued to do so.

2.11 The bond critical point (BCP)

As discussed in Section 2.2, QTAIM started as an energy partitioning method in 1972. While the concepts of atomic basin and interatomic surface had crystallised first, the concept of a bond path appeared only 5 years later. A bond path was introduced [123] as the two gradient paths that originate at the “internuclear saddle point” and terminate at each of the thus connected nuclei. The name bond critical point (BCP) then appeared [124] another 2 years later. A critical point is a point in space where the gradient of the electron density vanishes. Inspecting the eigenvalue spectrum of the Hessian at a critical point gives four types of critical point: a minimum, a maximum, and two types of saddle point [5, 7, 8]. The BCP is a minimum in the direction of the bond path and a maximum in the two directions orthogonal to this path, at the critical point. It is important to realise two matters: (i) the name BCP was coined by observation; that is, the point appears between nuclei that everyone agrees are bonded by a standard covalent bond, and (ii) there is no connection between the BCP and the energetics of the virial partitioning proposed earlier, which is at the heart of QTAIM.

The four critical points can be characterised by their so-called rank and signature. This is a purely mathematical characterisation without any reference to the chemical meaning behind a critical point. The reason why this comment is important, especially for the bond critical point, will be clear at the end of this section. The critical points can be attributed names such as ring critical point (one type of saddle) or cage critical point (a minimum). Importantly, neither name carries any chemical content: they only describe the geometrical (or even topological) relationship that the critical point has with its environment. Only the bond critical point is “loaded” with chemical meaning because its name goes beyond pure geometry, topology or its mathematical name of (3, −1). While the name BCP is fit for purpose for standard covalent bonds, it will soon be clear that this is not the case for non-covalent bonds. The latter are those interactions for which the community has an increasing and urgent need to know if a BCP can help in proving the localisation of non-covalent interactions and the measurement of their strength. However, BCP patterns helped [125] to define the molecular structures of non-trivial covalent bonds in closo-, nido-, and arachno-boranes.
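The rank-and-signature labelling is a short computation once the Hessian of the electron density at a critical point is known. The sketch below takes a symmetric 3×3 Hessian, counts its non-zero eigenvalues (the rank) and sums their signs (the signature). The function name, the tolerance, and the sample Hessians are hypothetical; real Hessians would come from a wavefunction analysis.

```python
import numpy as np

# Conventional names for the four rank-3 critical points of the electron density.
CRITICAL_POINT_NAMES = {
    (3, -3): "maximum (nuclear attractor)",
    (3, -1): "bond (or line) critical point",
    (3, +1): "ring critical point",
    (3, +3): "cage critical point (minimum)",
}

def classify_critical_point(hessian, tol=1e-8):
    """Return (rank, signature) from the eigenvalues of a symmetric Hessian."""
    eigenvalues = np.linalg.eigvalsh(np.asarray(hessian, float))
    nonzero = eigenvalues[np.abs(eigenvalues) > tol]
    rank = len(nonzero)
    signature = int(np.sign(nonzero).sum())
    return rank, signature

# Made-up diagonal Hessians: curvature along the path is the last diagonal entry.
examples = {
    "two negative, one positive": np.diag([-0.4, -0.3, 0.2]),
    "one negative, two positive": np.diag([-0.1, 0.2, 0.3]),
    "all positive": np.diag([0.1, 0.1, 0.2]),
    "all negative": np.diag([-5.0, -5.0, -5.0]),
}
for description, hessian in examples.items():
    label = classify_critical_point(hessian)
    print(f"{description:28s} -> {label}  {CRITICAL_POINT_NAMES[label]}")
```

Nothing in this procedure refers to chemistry: the label (3, −1) only states that the density is a minimum along one direction and a maximum along the two orthogonal ones.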

Already in the 1980s, the Bader group knew [126] that an external condition was necessary to make a BCP an indicator of a bond. More precisely, a bond path only indicates a bond when the forces on all nuclei in the system vanish. Otherwise, the “bond path” is actually a so-called atomic interaction line. The external condition of vanishing forces solves a problem occurring with Hartree–Fock wavefunctions of noble gas dimers. For example, for He2, there is no energy minimum at any finite internuclear distance for a Hartree–Fock wavefunction. Thus, the forces on the nuclei never vanish and the atomic interaction line never becomes a bond path. Thus, there is no bond between the two He atoms, which is consistent with the Hartree–Fock energy profile. As such, the interpretation remains consistent but at the expense of an external condition. However, very recently it has been suggested to improve the nomenclature for the general (non-covalent) case, and call [127] a BCP a line critical point. [128] This critical point’s name is then on a par with the other critical points and devoid of any judgement on what it means chemically. The same can be done for the bond path, which then is better called a line path.

Similarly to standard covalent bonds, QTAIM provided, [129] already back in 1988, a non-controversial topological picture of standard hydrogen bonds. These occurred in a dozen or so simple van der Waals complexes of the type base…HF. Four of these systems were revisited [60] much later (alongside 5 more elementary complexes including F–H–F−) with more sophisticated topological tools such as IQA. Also, in the late 1990s a successful and widely used relationship was proposed, [130] based on 83 X–H…O (X = C, N, or O) hydrogen bonds, experimentally observed by accurate X-ray diffraction. This simple relationship links the hydrogen bond dissociation energy (calculated at HF level) with the potential energy density (V) evaluated at the hydrogen-bond BCP. So, in partial summary, all was still well up to that point, when staying with standard covalent and non-covalent interactions. However, from the early 1990s onwards, work started to appear that called into question the presence of a BCP as a signature of an attractive interaction that one calls a bond. A gaggle of seven papers, ending with one on torsional motion in biphenyl, [131] feverishly discussed systems (ranging from C(NO2)3−, over kekulene and biphenyl, to push–pull hexasubstituted ethanes) that had some unexpected BCPs. The authors changed their mind about whether the BCPs that they saw represented bonds or steric interactions. The history of this debate can be found in Section 4.3 of reference [73] alongside Bader’s utter rejection [132] of the notion of steric repulsion expressed as “interaction lines” (i.e. the collection of gradient paths springing from the “BCP”). This 56-page essay, [73] about half of which is on bonding, reflects on these matters in a way that is as relevant today as it was 15 years ago, including fresh ideas that have still not been explored further.
However, in 2019, promising progress was made on the case of biphenyl, [133] a system with its own controversial history spanning almost three decades, which may well have been closed by this recent work.

It is worth spending a paragraph on the important case of biphenyl because we consider it solved [133] by the so-called Relative Energy Gradient (REG) method, [134] which is briefly explained in the next section. The controversy concerns the BCP that appears between two ortho-hydrogens (each hydrogen belonging to a different phenyl ring) as the central torsion angle is rotated from its value of ~ 45° (at equilibrium) to a value below 28°, en route to the planar conformation. This BCP was originally interpreted [131] as a signature of the repulsive interaction between ortho-hydrogens, a steric clash to which the origin of the torsional rotation was ascribed. The REG analysis disproved this interpretation. REG looks at all possible intra- and interatomic energies (of all IQA types) and does not project a chemical view onto the individual energy profile, even if following chemical intuition. Instead, REG finds out, in a minimal, unbiased, and mathematical way, which atomic energy profile acts most like (or unlike) the total energy profile. The REG study concluded [133] that the planar energy barrier is caused by the inner destabilisation of the two ortho-hydrogens, which is equivalent to the textbook steric clash. However, this destabilisation is partially counteracted by the formation of a weak covalent bond between the ortho-hydrogens. When energy types are summed, this partial cancellation diminishes the role of the ortho-hydrogens. As a consequence, the REG analysis actually identifies the energy behaviour of the Cortho atoms as the cause of the planar barrier. This means that the role of the BCP as a signature of an attractive interaction is preserved. This conclusion also increases confidence in the status [135] of hydrogen-hydrogen [136] bond paths as markers of stabilising interactions in molecules and crystals, as opposed to nonbonded steric repulsions. 
But then again, in 2016, an extensive study [137] on critical points (and molecular graphs) of promolecular densities of unsubstituted hydrocarbons was published. It showed that the promolecular densities yield the same number and types of critical points for 90% of the hydrocarbons as the real molecular electron densities. The conclusion stated that “the topology of the electron density is not dictated by chemical bonds or strong interactions and deformations induced by the interactions of atoms in molecules have a quite marginal role, virtually null, in shaping the general traits of the topology of molecular electron densities of the studied hydrocarbons, whereas the key factor is the underlying atomic densities.” If IQA’s interatomic exchange energies were introduced to vindicate the covalent nature of the allegedly bonded atoms at stake, then promolecular criticism would collapse. Indeed, all energies would vanish for such promolecular wavefunctions and the BCPs would be exposed as false signatures.

Further damage was done to the elegant but increasingly frail idea that BCPs could be used as simple, and indeed computable, indicators of bonding. A few, but then already sufficient, recent computational experiments [138] showed the horrors that can be inflicted on BCP interpretation, at least to those who wish BCPs to succeed in their originally intended remit. For example, a uniform external field polarising a high-level-of-theory electron density of H− creates a BCP, and so does the positioning of a proton in its vicinity.

A natural question that arises from all this alarming news is: what is the link between the appearance of a BCP and an IQA energy balance? After all, the latter can be trusted as an information source to decide which atoms considerably attract each other as judged by their \({V}_{x}^{AB}\) values. A remarkable answer came from a paper published [139] in 2007, which looked at the classical water formation from O(\({}^{1}D\)) and a \({}^{1}{\Sigma }_{\mathrm{g}}^{+}\) H2 molecule. This system was already studied [124] in 1979 for the topological change that it displayed as the oxygen approaches the hydrogen molecule along its perpendicular symmetry axis. The 2007 work monitored the exchange–correlation energy (\({V}_{xc}^{AB}\), which is dominated by \({V}_{x}^{AB}\)) during the oxygen’s approach. During this process, the H–H bond weakens while the O–H interaction strengthens (covalently). The key point is that these two interactions compete. It was observed that OH defeats HH (i.e. \(|{V}_{xc}^{OH}|\ge |{V}_{xc}^{HH}|\)) very close to the point where HH’s BCP disappears, which is roughly where the BCP of the O–H bond in water appears. This story is slightly simplified because there is a fleeting topological ring involved but the astonishing fact remains that an interpretational connection between energy and topology was established for the first time. A second such classical case is that of the HCN isomerisation to CNH, which shows the same connection.

About 6 years later, Tognetti and Joubert introduced [140] a simple measure (called β) to quantify the competition between atomic interactions. For 36 systems of the type O…X (X = O, S, or halogen), they found that the value of β, which is a \({V}_{x}^{AB}\) ratio between primary and secondary interactions, determines where the BCP will appear. Again about 6 years later, Jabłoński refuted this approach in a paper [141] with an unusually aggressive title. Incidentally, this paper suitably introduces the trials and tribulations of the interpretation of BCPs extensively but could benefit from a couple of additions. Instead of repeating this history here, it is more fruitful to mention these two additions. One addition is a popular method, [142] unhelpfully called non-covalent interactions (NCI; see Footnote 1), and based on the simple observation and assumption that the combination of a vanishing reduced density gradient (a fundamental dimensionless quantity in DFT) and low electron density identifies a non-covalent interaction. When this gradient vanishes, one actually ends up with a critical point in the electron density. So, practically, this method actually boils down to spotting BCPs but with the get-out clause of finding a “near critical point”. Indeed, the method highlights a zone around an elusive BCP by plotting some low-value iso-surface of the reduced density gradient. Of course this procedure gets around the thorny problem of a BCP appearing or disappearing upon a small variation in the system’s geometry. Because of the relaxed “critical point” allocation of NCI, it can claim [143] that QTAIM’s criteria are too stringent and that they can thus miss an intramolecular BCP in 1,n-alkanediols, for example; miss it exactly there where a red-shifted OH-stretching vibrational mode says there should be a hydrogen bond.
In summary, as a result of the contour surface this method is, strictly speaking, not part of the topological approach (although inspired by it) and will not be discussed further. The second addition is unique work [144] on BCP distributions collected from a molecular dynamics simulation of an ethanol…water mixture. This dynamic study of BCPs looked beyond static BCP patterns thereby eliminating the ephemeral nature of the BCP in terms of its presence sensitively depending on the exact nuclear geometry. It was found that the more localised such a dynamical BCP distribution, the higher the average electron density at its BCPs. Furthermore, the hydrogen atoms of water strongly preferred to form H…H interactions with ethanol’s alkyl hydrogen atoms over its hydroxyl hydrogen.
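For reference, the reduced density gradient on which the NCI method mentioned above rests is usually written in DFT as shown below. The prefactor follows the common textbook convention and is not taken from this text; the subscripted symbol is chosen here only to avoid confusion with other uses of the letter s.

```latex
\[
  s_{\mathrm{RDG}}(\mathbf{r}) \;=\;
  \frac{\left|\nabla \rho(\mathbf{r})\right|}
       {2\,(3\pi^{2})^{1/3}\,\rho(\mathbf{r})^{4/3}}
\]
```

The numerator vanishes wherever the gradient of the electron density vanishes, so any critical point in a region of non-zero density is also a zero of this quantity, which is why a low-value iso-surface of it encloses a “near critical point”.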

2.12 Relative Energy Gradient (REG) method

Before explaining REG it is useful to consider the general context that motivates this method. There is a universal challenge at the heart of chemistry and biochemistry, which is due to an unbridged gap between chemical insight and quantum mechanics. This challenge is best explained by a representative example (see below) but it essentially states that we must be able to detect, by computation, which fragment (atoms, functional groups) of a given molecular assembly governs the energetic behaviour of this whole assembly. If solved, this quantum-based insight will rigorously guide predictions on the relative stability of molecular assemblies. REG, which will be explained at the end of this section, is a promising attempt to tackle this challenge. So far all REG case studies have been carried out in conjunction with IQA but REG could in principle operate with non-IQA atomic energies or even other atomic properties.

As announced, an example will sharpen the nature of the challenge described above. Textbooks state that the guanine…cytosine complex is more stable than the adenine…thymine one because the former is held together by three hydrogen bonds while the latter by only two. In this case, the atoms in the hydrogen bonds constitute the fragment that is supposed to govern the energetic stability of the complexes. Yet, the uracil…2,6-diaminopyridine complex, which also has three hydrogen bonds, is two orders of magnitude less stable than guanine…cytosine. Clearly, counting hydrogen bonds does not explain stability. A (bio)chemist wants to understand the behaviour of a system by the presence or action of relevant atoms in the system, i.e. a “localised explanation”. However, this undertaking can derail, as shown in this well-known example, and thereby undermines confidence in standard chemical intuition.

In order to remedy the inadequacy of focusing on hydrogen bonds only, Jorgensen and Pranata proposed [145] their secondary interaction hypothesis. This hypothesis is actually a simple rule, based on more distant atomic interactions than those involved in the hydrogen bonds only. This rule claims to make reliable predictions of stability, and again on the “back-of-an-envelope”, like hydrogen bonds claim to do. Unfortunately, this proposal still did not solve the main problem of how and why a molecular assembly is held together, in terms of key atoms. Sure, the secondary interaction hypothesis was seized upon by supramolecular chemists (e.g. Gellman, Zimmerman, or Rebek) as the next best concept beyond hydrogen bonding, to explain and predict relative stabilities of various complexes. However, the hypothesis fails [146,147,148,149,150] regularly. Furthermore, it was shown that the secondary interaction hypothesis cannot be linked to the underlying quantum reality offered by modern wavefunctions. In other words, if this rule works on the right occasions, it does not do so for the right reasons.

This unsatisfactory state-of-affairs prompts one to look harder to find that much needed, reliable bridge between modern wavefunctions and back-of-an-envelope explanations. This bridge is not just essential for the pivotal example given above but also for a wide variety of recurring questions such as:

- what is the origin of this torsional rotation barrier? [151]
- what holds heterocyclic aromatics (including DNA base pairs) together? [152]
- what is the degree of covalency [153] of this halogen bond?
- which atoms are actually driving this reaction [154] and by which energy type?
- what is the character of this mysterious through-space interaction [155] in this enzyme?
- how is this molecular crystal held together in the curious absence [156] of hydrogen bonds?
- why is the distance between these two atoms suspiciously short, again and again? Is there perhaps a new type of non-covalent interaction [157]?
- if the fluorine gauche-effect is actually electrostatic in nature [158] (unlike what Wikipedia claims) then how does this knowledge influence the future design of compounds in terms of highly desirable conformational control?

So how does REG work [134]? The method is dynamic: its control coordinate s (yellow hydrogen bond length in Fig. 7) induces a geometry change in a molecular system (e.g. motion along a reaction coordinate or a torsional rotation). In this case, this change is a compression of water monomers in a water dimer.
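The idea of ranking energy contributions along a control coordinate can be sketched in a few lines. This is a minimal illustration and not the published implementation. It assumes that the resemblance between a partitioned energy profile and the total energy profile is measured by the ordinary least-squares slope of the former against the latter over the sampled values of s, accompanied by a Pearson correlation coefficient. The function name, the term labels and all energy values are invented for illustration.

```python
from math import sqrt


def reg_analysis(total_energy, partitioned_energies):
    """Rank energy terms by how their profile follows the total profile.

    total_energy: total energies sampled along the control coordinate s.
    partitioned_energies: dict mapping a label to that term's energies at
    the same values of s (hypothetical input format).
    Returns (label, slope, pearson) tuples, largest slope first.
    """
    n = len(total_energy)
    mean_t = sum(total_energy) / n
    dev_t = [e - mean_t for e in total_energy]
    var_t = sum(d * d for d in dev_t)
    if var_t == 0.0:
        raise ValueError("total energy does not vary along s")
    results = []
    for label, values in partitioned_energies.items():
        mean_i = sum(values) / n
        dev_i = [v - mean_i for v in values]
        cov = sum(a * b for a, b in zip(dev_t, dev_i))
        var_i = sum(d * d for d in dev_i)
        slope = cov / var_t  # least-squares slope of E_i against E_total
        pearson = cov / sqrt(var_t * var_i) if var_i > 0.0 else 0.0
        results.append((label, slope, pearson))
    results.sort(key=lambda item: item[1], reverse=True)
    return results


# Invented energy profiles at four values of s (arbitrary units).
terms = {
    "E_intra(H1)": [0.0, 1.0, 2.5, 4.0],
    "V_xc(H1,H2)": [0.0, -0.5, -1.0, -1.5],
    "V_cl(O1,H2)": [0.0, 0.5, 1.0, 1.5],
}
total = [sum(values[k] for values in terms.values()) for k in range(4)]

ranking = reg_analysis(total, terms)
for label, slope, pearson in ranking:
    print(f"{label:12s} slope = {slope:+.3f}  R = {pearson:+.3f}")
```

In this sketch a large positive slope marks a term that behaves like the total energy and a negative slope marks a term that works against it. Because the invented terms add up to the total energy, their slopes add up to one.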