Abstract

Goal-oriented modelling is one of the most important research developments in the requirements engineering (RE) field. This paper conducts a systematic analysis of the visual syntax of i*, one of the leading goal-oriented languages. Like most RE notations, i* is highly visual. Yet surprisingly, there has been little debate about or modification to its graphical conventions since it was proposed more than a decade ago. We evaluate the i* visual notation using a set of principles for designing cognitively effective visual notations (the Physics of Notations). The analysis reveals some serious flaws in the notation together with some practical recommendations for improvement. The results can be used to improve its effectiveness in practice, particularly for communicating with end users. A broader goal of the paper is to raise awareness about the importance of visual representation in RE research, which has historically received little attention.

Similar content being viewed by others

Explore related subjects

Find the latest articles, discoveries, and news in related topics.Avoid common mistakes on your manuscript.

1 Introduction

1.1 Visual syntax: an important but neglected issue

Visual notationsFootnote 1 play a critical role in requirements engineering (RE), and have dominated research and practice from its earliest beginnings. Virtually all RE notations use diagrams as the primary basis for documenting and communicating requirements. For example, the “structured techniques” of the 1970s, probably the first RE techniques, were highly graphical. This was their major distinguishing feature compared to previous (text-based) techniques and claimed as one of their major advantages [16, 22]. This pattern continues to the present day, with UML (the industry standard modelling language) and i* (one of the most influential modern RE notations) also being visual notations.

This makes it all the more surprising that visual representation issues receive so little attention in RE research. Evaluations and comparisons of RE notations tend to be conducted based primarily on their semantics, with issues of visual syntax rarely mentioned. In designing notations, the majority of effort is spent designing the semantics of notations (what constructs to include and what they mean), with design of visual syntax (how to perceptually represent these constructs) taking place largely as an afterthought. There is also little or no attempt to justify the symbols chosen (design rationale) [33, 58].

1.2 i*: A goal-oriented modelling language

Goal-oriented modelling is one of the most important research developments in the RE field. This shifts the focus from what and how (data and processes) as addressed by traditional analysis to who and why (the actors and the goals they wish to achieve). Goal-oriented modelling addresses the early analysis or requirements elicitation phase in the RE process [11]. i* [90] is one of the most widely used goal modelling languages [2] and vies with KAOS [14] as the leading goal modelling notation.

1.2.1 A highly visual language

Like most RE notations, i* is a visual language. In fact, it is more visual than most: every construct in the language is represented graphically and i* models are defined only by diagrams (rather than diagrams plus supporting text as is usually the case). i* uses two diagram types to document requirements, which correspond to different levels of abstraction (Fig. 1):

i* Consists of two diagram types (from [90])

-

Strategic Dependency (SD) Diagrams (intentional level) define dependencies among actors, treating each actor as a “black box”.

-

Strategic Rationale (SR) Diagrams (rational level) define the internal rationale or intentions of each actor (shown within dotted circles), corresponding to a “glass box” view of actors.

1.2.2 Lack of design rationale

Like most RE notations, i* lacks explicit design rationale for its graphical conventions: in all the sources of i* [26, 89, 90], symbols are defined without any explanation of why they were chosen. In fact, i* contains less design rationale than most RE notations: graphical conventions are mostly defined by example without even being described in the text. For example, this is how the visual syntax of SD diagrams is defined in the original source of i*:

Figure 2 shows an SD model of the meeting scheduling setting with a computer-based meeting scheduler.

1.2.3 Lack of adoption in practice

Goal-oriented modelling has been enthusiastically embraced by the RE research community but has so far had negligible impact on practice [19]. As Lockerbie and Maiden say [45]:

Whilst the i* approach [1] has been developed and applied to case studies for some time, it has not been applied widely in industrial requirements projects

A recent survey of practice [15] showed that the most widely used RE notations in practice are Data Flow Diagrams (DFDs) and Entity Relationship (ER) models, both developed in the 1970s. i* was not even mentioned by survey participants, suggesting an adoption rate of close to zero. This should be of major concern to RE researchers: while there is always inertia to adopt new methods [8], it has been over a decade since i* was proposed (around the same time the first version of UML was released).

1.3 Objectives of this paper

This paper conducts an systematic analysis of i* visual syntax, the first so far conducted. We believe such an analysis is long overdue as there has been little debate about or modification to its graphical conventions since it was originally proposed (more than a decade ago). It is always easy to criticise, but our aim in conducting this analysis is constructive: to improve i*’s usability and effectiveness in practice, especially for communicating with end users. In this spirit, rather than simply pointing out problems, where possible, we suggest ways of resolving them.

A broader goal of this paper is to raise awareness about the importance of visual representation issues in RE, which have historically been ignored or undervalued. Visual syntax has a profound effect on the effectiveness of RE notations, equal to (if not greater than) than decisions about semantics [58]. For this reason, it deserves (at least) equal effort and attention in evaluating and designing RE notations.

2 Previous research

A review of the literature revealed no previous analyses of i* visual syntax. i* has stimulated an enormous amount of research, with over 1,000 citations to its primary sources [89, 90]. Given that it is primarily a visual language, it is surprising that none of these papers relate specifically to its visual syntax. While some papers propose changes to its visual syntax, this is typically only to reflect changes or extensions in semantics (e.g. [18]).

Despite the lack of analyses of i* visual syntax, there is a widespread perception in the literature that the i* visual notation is effective (italics added below):

The undoubted strengths of i* include a simple but formal and stable semantics, a graphical modelling notation that is simple to use, models that are amenable to computational analysis, and applicability in both agent-oriented and goal-oriented requirements methods. [46]

i* allows for the clear and simple statement of actor’s goals and dependencies among them. It also includes a graphical notation which allows for a unified and intuitive vision of the environment being modelled, showing its actors and the dependencies among them. [2]

However, the effectiveness of the visual notation is stated rather than shown: in the absence of formal analyses, it is impossible to say whether it is effective or not. One goal of this paper is to determine whether statements like these are justified.

The lack of attention to i* visual syntax reflects a common pattern in RE research, where issues of visual representation are rarely given the attention they deserve. One possible reason for this is that methods for analysing visual syntax are less mature than those available for analysing semantics [29, 48, 87] (an issue this paper also addresses). However, another explanation is that researchers consider visual syntax to be unimportant: a matter of “aesthetics” rather than effectiveness [33]. This view is contradicted by research in diagrammatic reasoning, which shows that the form of representations has an equal, if not greater, influence on human understanding and problem solving performance as their content [42, 76]. Empirical studies confirm that the visual appearance of RE notations significantly affects understanding, especially by novices [51, 62].

3 Theoretical basis

As discussed in the previous section, one possible reason for the lack of attention to i* visual syntax is the lack of accepted principles for evaluating and designing visual notations. In the absence of such principles, evaluations can only be carried out in a subjective manner. The analysis in this paper is based on a recently proposed theory of visual notations, called the Physics of Notations as it focuses on the physical (perceptual) properties of notations rather than their logical (semantic) properties [58]. This provides a scientific basis for comparing, evaluating, improving, and constructing visual notations, which has previously been lacking in the RE field.

3.1 Definitions: the anatomy of a visual notation

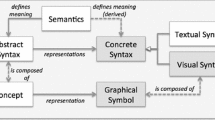

A visual notation consists of a set of graphical symbols (visual vocabulary), a set of compositional rules for forming valid expressions (visual grammar), and semantic definitions for each symbol (visual semantics). The set of symbols and compositional rules together form the visual (concrete) syntax. Graphical symbols are used to signify or symbolise (perceptually represent) semantic constructs, typically defined by a metamodel. An expression in a visual notation is called a visual sentence or diagram. Diagrams are composed of instances of graphical symbols (symbol instances or tokens), arranged according to the rules of the visual grammar. In this paper, we focus only on visual syntax: how constructs are perceptually represented; issues of semantics are specifically excluded (Fig. 2).

3.2 The dependent variable (design goal): what makes a “good” visual notation?

Visual notations are uniquely human-oriented representations: their primary purpose is to facilitate human communication and problem solving [31]. To be most effective in doing this, they need to be optimised for processing by the human mind. Cognitive effectiveness is defined as the speed, ease and accuracy with which a representation can be processed by the human mind [42]. This provides an operational definition of visual notation “goodness” that can be empirically evaluated. The Physics of Notations defines this as the primary dependent variable for evaluating and comparing visual notations and the primary design goal in constructing them. Cognitive effectiveness determines the ability of visual notations to support communication with business stakeholders as well as reasoning and problem solving by requirements engineers.

The cognitive effectiveness of visual notations is one of the most widely accepted and infrequently challenged assumptions in the RE field. However, as Larkin and Simon showed in their seminal paper, “Why a Diagram is (Sometimes) Worth 10,000 Words”, cognitive effectiveness is not an inherent property of visual representations but something that must be designed into them [42]. All visual representations are not equally effective and poorly designed visual representations can be far less effective than text.

3.3 The visual notation design process

The Physics of Notations conceptualises the process of visual notation design as consisting of three “spaces”: the problem space, the design space, and the solution space.

3.3.1 The problem space

In any graphic design task, the starting point is always the information content to be expressed: form follows content. In visual notation design, the problem space is defined by the notation semantics, which should be defined by a metamodel [35, 65] and formal semantics [32].

3.3.2 The (graphic) design space

The design space is the set of all possible graphical encodings of a particular notation semantics. This defines a set of semantically equivalent but cognitively inequivalent visual notations. There are 8 elementary visual variables which can be used to graphically encode information [4]. These are categorised into planar variables (the two spatial dimensions) and retinal variables (features of the retinal image) (Fig. 3). These define the dimensions of the graphic design space. They also define a visual alphabet for constructing visual notations: any graphical symbol can be defined by specifying particular values for the visual variables (e.g. shape = rectangle, colour = green) [49]. Notation designers can create an infinite number of graphical symbols by using different combinations of values of these variables.

3.3.3 The solution (optimisation) space

Designing cognitively effective visual notations is a problem of choosing the most cognitively effective representations from the infinite possibilities in the graphic design space. Principles of visual perception and cognition provide the basis for making informed choices among the alternative graphical encodings in the graphic design space.

3.4 Principles for designing cognitively effective visual notations

The practical (prescriptive) component of the Physics of Notations is a set of nine principles for designing cognitively effective visual notations. These are summarised briefly belowFootnote 2:

-

1.

Semiotic Clarity: there should be a 1:1 correspondence between semantic constructs and graphical symbols

-

2.

Perceptual Discriminability: symbols should be clearly distinguishable from one another

-

3.

Semantic Transparency: use symbols whose appearance suggests their meaning

-

4.

Complexity Management: include explicit mechanisms for dealing with complexity

-

5.

Cognitive Integration: include explicit mechanisms to support integration of information from different diagrams

-

6.

Visual Expressiveness: use the full range and capacities of visual variables

-

7.

Dual Coding: use text to complement graphics

-

8.

Graphic Economy: keep the number of different graphical symbols cognitively manageable

-

9.

Cognitive Fit: use different visual dialects for different tasks and/or audiences

All principles define desirable and measurable properties of notations, so provide a basis for evaluation and design. Improving a visual notation with respect to any of the principles will increase its cognitive effectiveness (subject to tradeoffs among them). The Physics of Notations thus defines a causal theory, which posits (positive) causal relationships between each principle and cognitive effectiveness (Fig. 4). In the language of scientific theories, the principles represent independent variables, while cognitive effectiveness is the sole dependent variable. Most importantly, the principles are evidence based: they were synthesised from theory and empirical evidence from a wide range of fields.

Causal structure of the Physics of Notations (using standard scientific notation for representing theories [68])

3.4.1 Interactions among principles

In all design tasks, there are tradeoffs among design goals [1]. Figure 5 summarises the interactions among the visual notation design principles (effects are not necessarily symmetrical). Knowledge of these interactions can be used to make tradeoffs (where principles conflict with one another) and exploit synergies (where principles aid port one another one another). The objective should be to satisfy all principles to an acceptable level, rather than to optimise some at the expense of others.

3.5 i* Representation of the Physics of Notations

Visual notation design is a goal-oriented activity (like any design task), so it should be possible to represent the Physics of Notations as an i* model. The dependent variable (cognitive effectiveness) is operationally defined, so corresponds to a goal in i*. The principles represent desirable and measurable properties of notations, so also correspond to goals. Logically then, the relationship between the principles and cognitive effectiveness (shown in Fig. 4) could be represented using decomposition relationships. However, goals cannot be decomposed in i* (only tasks can be). Another possibility would be to relate them using means-end relationships. However, i* does not allow goals to act as means: only tasks can.Footnote 3 Finally (and we are running out of relationship types here), we could consider that each principle contributes to the overall goal of cognitive effectiveness: these would be help contributions (as each principle positively affects cognitive effectiveness but satisfying any single principle is not enough to achieve cognitive effectiveness). However, i* does not allow contributions to goals (only to softgoals or beliefs). As a result, there seems to be no way of representing the Physics of Notations using i* (Fig. 6).

The relationships among the principles and cognitive effectiveness defined in the Physics of Notations cannot be represented using i*. The leftmost cell shows the relationships using standard scientific notation (a causal graph), while the cells on the right attempt to show the same relationships using i*: however, all result in violations to i* grammatical rules

It is also not possible to represent the interactions among the principles (shown in Fig. 5), which correspond semantically to help, hurt or combined help/hurt contributions, as i* does not allow goals to be the target of contributions. The inability to represent the Physics of Notations using i* suggests a possible problem with its semantics: its grammatical rules are too restrictive and exclude valid situations that can occur in the real world [28].

4 Research approach

4.1 Unit of analysis: choosing an appropriate source

The first issue we faced in conducting our analysis was to choose a particular source of i* as the basis for our analysis: there is no single definition of the language as there is, say, for UML. There are multiple versions and variants of the i* notation, often not fully defined and even contradictory [2]. A review of the literature revealed 4 leading candidates for our analysis:

-

The original i* notation as defined in Eric Yu’s doctoral thesis [89] and RE’97 paper [90]. These are the most cited sources but are becoming rather dated as both are more than 10 years old. They are also unlikely to be used in practice as they (a) are written for an academic audience; (b) [89] is not electronically available; (c) [90] lacks sufficient detail to apply the language in practice.

-

The goal-oriented modelling component of Tropos [6], which was originally based on i* but has since followed its own evolutionary path. Tropos has the advantage of having an explicit metamodel but differs from standard i* in both syntax and semantics.

-

The Goal-oriented Requirements Language (GRL), which has recently been adopted as an international standard in the telecommunications field [36]. Like Tropos, GRL has an explicit metamodel but differs in both syntax and semantics from standard i*.

-

The i* Guide 3.0 [26], available in the form of a Wiki.

We chose the i* Guide [26] for the following reasons:

-

It so represents the most up-to-date source. Being Wiki-based represents a “living” version of the language, while most other sources represent “snapshots” at a point in time.

-

It provides the most detailed description of i* visual syntax.

-

Being web-based, it is the most easily accessible source, so most likely to be used by potential i* users. Empirical studies show that web-based sources are generally preferred to other sources [43].

-

It is the only interactive source: it is based on Wiki technology, so is able to incorporate feedback from the i* user community. For this reason, it is most likely to reflect i* as actually used in practice.

However, the differences in visual syntax between the different sources of i* are relatively minor, so most of our findings will apply to the other sources as well.

4.2 Structure of analysis

The visual notation design principles defined in the Physics of Notations were used to conduct a systematic, symbol-by-symbol analysis of the i* visual notation. The findings for each principle are reported in separate sections (Sects. 5–13). The analysis for each principle is structured as follows:

-

Definition of principle

-

Results of evaluation

-

Recommendations for improvement

-

Interactions with other principles (where relevant)

4.3 Typographical conventions

Cross-references between principles are indicated by underlining; new or important terms by bolding; and i* concepts by small capitals.

5 Semiotic Clarity

5.1 Definition of principle

The Principle of Semiotic Clarity states that there should be a 1:1 correspondence between semantic constructs and graphical symbols. This is necessary to satisfy the requirements of a notational system, as defined in Goodman’s theory of symbols [24]. When there is not a 1:1 correspondence, one or more of the following anomalies can occur (Fig. 7):

-

Symbol deficit: when a semantic construct is not represented by any symbol

-

Symbol redundancy: when a semantic construct is represented by multiple symbols

-

Symbol overload: when the same symbol is used to represent multiple constructs

-

Symbol excess: when a symbol does not represent any semantic construct.

Semiotic Clarity maximises expressiveness (by eliminating symbol deficit), precision (by eliminating symbol overload), and parsimony (by eliminating symbol redundancy and excess) of visual notations.

Evaluating semiotic clarity involves conducting a two-way mapping between a notation’s metamodel and its symbol set (visual vocabulary). This is problematic for the i* Guide, as it does not include an explicit metamodel. While Yu’s PhD thesis [89] includes a metamodel, it is incomplete and represented using non-standard conventions (using Telos [61]). There have been several proposals for i* metamodels in the literature [2, 21], but these have no official status as they were reverse engineered from text and examples describing i*. There are also metamodels for variants of i* such as GRL [36] and Tropos [5, 6]. A problem with all of these for the purposes of our analysis is that they relate to syntactically different versions of i* (different visual dialects) to that defined in the i* Guide. To conduct semiotic analysis, we need a metamodel specific to the notation: an approximate one is not good enough. For this reason, we reverse engineered a metamodel (or more precisely, a metaclass hierarchy) from the i* Guide.

5.1.1 Why i* needs a metamodel

A clear recommendation from this research is that i* needs an official metamodel. To facilitate communication and tool support, this should be represented using industry standard conventions (e.g. using the Meta Object Facility (MOF) [65] or the recently defined ISO/IEC Standard 24744 [35]). Currently, both i* semantic constructs and grammatical rules are defined using natural language, which leads to problems of inconsistency, ambiguity, and incompleteness [2]: an explicit metamodel would help resolve such issues. i* was developed before meta-modelling was standard practice in defining software language engineering so it is not surprising that it did not have one when it was first defined. What is surprising is that it still doesn’t have an official metamodel after more than 10 years in use. Metamodelling represents current best practice in software language engineering: not having one represents a barrier to learning, correct usage, and tool support.

5.2 Results of evaluation

5.2.1 Metaclass hierarchy

The metaclass hierarchy for the i* Guide is shown in Fig. 8: this defines an inheritance hierarchy consisting of semantic constructs (metaclasses) and generalisation relationships among them. Abstract metaclasses (constructs that cannot be instantiated) are shown as dotted boxes. We adopt the UML convention of inheriting from a single (root) element and distinguishing between relationships and other element types: all i* relationships are directed relationships. It is often difficult to determine from the textual descriptions in the i* Guide what is a separate “construct”. To avoid making arbitrary judgements about this, we considered everything with a separate entry or heading in the Wiki to be a construct. However, without an official metamodel, semiotic analysis can only be conducted in an approximate manner.

5.2.2 i* Visual vocabulary

The i* visual vocabulary is summarised in Fig. 9. Symbols are categorised as 2-D symbols (areas or node types), 1-D symbols (lines or link types), and spatial relationships [13].

5.2.3 Semiotic clarity analysis results

There are 31 semantic constructs in i*, consisting of 9 element types and 21 relationship types: this defines the semantic complexity of the notation. There are 16 visually distinct graphical symbols in the i* visual vocabulary, consisting of 11 node types, 4 line types, and 1 spatial relationship: this defines the graphic complexity of the notation. Two symbols are visually distinct if and only if they have a different value for at least one visual variable. This is based on the definition of a graphical symbol (Sect. 3.1): a graphical symbol is defined by specifying particular values for the 8 visual variables.

Any discrepancy between the number of constructs and the number of symbols in a notation is due to semiotic clarity anomalies. The equation below defines the relationship between the number of constructs, the number of symbols, and violations to semiotic clarity.

The results of the semiotic clarity analysis are summarised in Table 1: there is a minor problem of symbol redundancy, a major problem of symbol overload, and no symbol excess or symbol deficit. There is a negative symbol balance (number of symbols − number of constructs) of 15, mainly due to symbol overload.

5.2.4 Symbol redundancy (synographs)

There are two instances of symbol redundancy or synographs (the graphical equivalent of synonyms) in i*. The first and most obvious case is that two alternative symbols may be used to represent beliefs (Fig. 10). No explanation is given for why a choice is provided, which is not provided for any other construct. This places a burden of choice on the notation user to decide which symbol to use and an additional load on the reader to remember multiple representations of the same construct.

A less obvious form of symbol redundancy (a rare case of between-diagram symbol redundancy) is that actors are shown in different ways on different diagram types: as circles on SD diagrams and as a compound symbol (a circle superimposed on a larger, dotted circle) on SR diagrams (Fig. 11). This is an unusual representation choice, and the rationale for this is not explained. In most visual notations, either the same symbol is used to represent the same construct at different levels of abstraction (e.g. UML packages are shown using the same symbol but larger in size when their contents are expanded) or the symbol disappears at the lower level (e.g. when processes are exploded to lower level diagrams in DFDs). Using different symbols also leads to potential problems of Cognitive Integration.

5.2.5 Symbol overload (homographs)

Symbol overload is the worst type of anomaly as it results in perceptual ambiguity and the potential for misinterpretation [24]. There are 17 instances of symbol overload or homographs (the graphical equivalent of homonyms) in i*. All of these occur among relationship types: there are 22 distinct semantic relationship types but only 5 visually distinct graphic relationships: on average, each graphical link has to convey more than 4 different meaningsFootnote 4 (Table 2). The symbol overload for a given symbol is the number of constructs it represents minus one (as each symbol should represent at most one construct).

The high level of symbol overload in i* is due to:

-

Contextual differentiation: actor associations and contributions use the same graphical link but connect different types of elements. The 4 types of strategic dependencies are also differentiated by context (the type of dependum). Contextual differentiation violates one of the basic properties of the symbol system of graphics: monosemy, which means that all symbols should have a single meaning, defined in advance and independent of context [4].

-

Textual differentiation: labels are used to distinguish between 6 types of actor associations and 9 types of contributions. Adding text to a graphical symbol does not result in a new symbol as text is not a visual variable: a graphical symbol is fully defined by its values for the 8 visual variables. Textual differentiation is discussed in more detail under Perceptual Discriminability.

5.2.6 Symbol excess (visual noise)

There are no instances of symbol excess in i*.

5.2.7 Symbol deficit (visual silence)

There are no instances of symbol deficit in i*. However this is not necessarily a good thing as some level of symbol deficit is normally required to keep graphic complexity manageable (see Graphic Economy). It is highly unusual (and generally undesirable) for any RE notation to show all constructs in graphical form.

5.3 Recommendations for improvement

To improve semiotic clarity of i*, all instances of symbol redundancy and overload should be removed.

5.3.1 Remove synographs

Symbol redundancy can be resolved by choosing one of the symbols to represent the construct and removing the other symbol(s) from the notation. As we will see, later principles provide clear guidelines for choosing between the alternative belief symbols (Perceptual Discriminability and Semantic Transparency). A potential solution to the problem of different symbols for actors on SD and SR diagrams is proposed in Cognitive Integration.

5.3.2 Remove homographs

Symbol overload can be resolved by using visual variables (instead of text or context) to distinguish between symbols. Potential solutions to symbol overload are discussed under Perceptual Discriminability (as symbol overload is a special case of perceptual discriminability).

5.4 Interactions with other principles

Semiotic Clarity has important interactions with Complexity Management and Graphic Economy. It can have either positive or negative effects on these principles (Fig. 12):

Interactions between Semiotic Clarity and other principles (like Fig. 6 this also violates rules for showing contributions in i*)

-

Symbol deficit has a positive effect on Complexity Management by decreasing diagrammatic complexity (the number of diagram elements or symbol tokens) and on Graphic Economy by decreasing graphic complexity (the number of symbol types in the notation).

-

Symbol overload has a positive effect on Graphic Economy but no effect on Complexity Management: it reduces the number of symbol types but does not affect the number of symbol tokens.

-

Symbol excess has a negative effect on both Complexity Management and Graphic Economy as it increases both the number of symbol types and symbol tokens.

-

Symbol redundancy has a negative effect on Graphic Economy but no effect on Complexity Management as it increases the number of symbol types but the number of symbol tokens stays the same.

6 Perceptual Discriminability

6.1 Definition of principle

Perceptual Discriminability refers to the ease and accuracy with which symbols can be differentiated from each other. Accurate discrimination between symbols is a prerequisite for accurate interpretation of diagrams [87]. Discriminability is determined by the visual distance between symbols, which is measured by the number of visual variables on which symbols differ and the size of these differences (measured by the number of perceptible steps). While each visual variable has an infinite number of possible values, it only has a finite number of perceptible steps (values that are reliably discriminable by the human mind). In general, the greater the visual distance between symbols, the faster and more accurately they will be recognised [88]: if differences are too subtle, errors in interpretation can result. Discriminability requirements are much higher for novices than for experts as we are able to make much finer distinctions with practice [7].

6.2 Results of evaluation

6.2.1 Similarity of shapes

Of all visual variables, shape plays a privileged role in perceptual discrimination, as it is the primary basis on which we classify objects in the real world. This means that more than any other variable, shapes used to represent different constructs should be clearly distinguishable from one another. Figure 13 shows the shapes used in i*. Experimental studies show that entities (rectangles) and relationships (diamonds) are often confused by novices on ER diagrams [62]. This suggests that similar—or more likely worse—confusion is likely to occur between i* symbols, as there are more symbols and less obvious differences between them.

In particular, the shapes used to represent goal and belief are very similar and do not represent perceptible steps of the visual variable shape. A similar problem exists with agents and roles, which have a very subtle difference: agents have a straight line at the top of the symbol while roles have a curved line at the bottom. No explanation is given for these graphic design choices (top versus bottom = vertical position and straight versus curved = shape), suggesting they are arbitrary. Having some rationale would help people remember which is which (Semantic Transparency), as these symbols are frequently confused in practice.

6.2.2 Shape inconsistency

Discriminability problems can also occur when dissimilar shapes are used to represent the same or similar constructs. This is a problem of visual-semantic congruence: the visual distance between symbols should be consistent with semantic distance between the constructs they represent. In general, similar shapes should be used to represent similar constructs: family resemblances among shapes can be exploited to show family relationships among constructs [29].

This requirement is clearly violated in i*, where one of the subtypes of actor (position) is represented by a shape from a different shape family (Fig. 14).Footnote 5

A similar issue exists with goals and softgoals: shapes from different families are used to represent these concepts when they represent the same (or very similar) things. While much is often made of the difference between goals and softgoals in i*, the semantic distance between these concepts is really quite small. In ontological terms, both represent states of the world an actor desires to achieve [84]. The only difference is that a goal has explicit measures defined while a softgoal can only be evaluated subjectively. A softgoal can become a goal if it is operationalised (i.e. made measurable), suggesting these are not different constructs but different states of the same construct.

6.2.3 Discriminability of relationships: strategic dependencies

strategic dependencies in i* are represented by lines with the letter “D” attached to each side (Fig. 15), with the orientation of the letters indicating the direction of the dependency (the “D”s point towards the dependee). This convention is one of the most distinctive (and peculiar) characteristics of the i* visual notation and makes i* diagrams immediately recognisable.

However, this is not particularly effective as a visual representation technique:

-

The letter “D” is too symmetrical, making it perceptually difficult to identify the direction of the dependency (which way the “D” is pointing). In contrast, direction of conventional arrows (which use “V”s or triangles) can be perceived unambiguously.

-

Attaching “D”s to both sides of the dependency exacerbates this problem, as it requires conscious effort to determine which actor is the depender (the origin of the dependency, who is vulnerable to the dependee) and which is the dependee (the target of the dependency, who has a commitment to the depender). Using conventional arrows, it is easy to distinguish between the origin and destination simply by looking at which end the arrowhead appears.

6.2.4 Discriminability of relationships: textual differentiation

Textual differentiation is commonly used in UML to distinguish between symbols, and many notations have since followed their lead. However, this is not a graphic design practice that should be emulated, as it is cognitively ineffective [60]. Textual differentiation is an extreme case of perceptual discriminability where symbols are not just similar but visually identical: symbols that are differentiated only by text have zero visual distance.

i* uses textual differentiation: to an even greater extent than UML. In fact, most of its relationship types (around 70%) are differentiated in this way (Fig. 16): 6 types of actor associations and 9 types of contributions, which accounts for most of the symbol overload in the notation (Semiotic Clarity).Footnote 6

There are a number of problems with using text to differentiate between symbols:

-

The first is a syntactic one: visual variables form the building blocks for constructing visual notations while alphabetic characters form the building blocks for constructing textual languages. Text is not a visual variable and using it to differentiate between graphical symbols violates the rules of the symbol system of graphics [4].

-

It reduces speed and accuracy of perceptual discrimination: text processing relies on slower, sequential cognitive processes [60]. To maximise discriminability, symbols should be differentiated using visual variables so that differences can be detected automatically and in parallel using perceptual processes.

-

It confounds the role of labels in diagrams. Labels play a critical role at the diagram (sentence) level to differentiate between symbol instances (tokens) and define their correspondence to the real world (Fig. 17). Using labels to differentiate between symbol types (at the language level) confounds this role. In general, text is an effective way to distinguish between symbol instances but not between symbol types. At the notation level, labels should appear as placeholders (variables) rather than literals (constants).

-

Using labels to distinguish between relationship types precludes the use of user-defined and domain relevant labels for relationships at the diagram level.

Textual differentiation of symbols is a common way of dealing with excessive graphic complexity, a problem that i* and UML both suffer from. However, there are much more effective ways of dealing with this than resorting to such measures (see Graphic Economy). In particular, the most successful RE visual notations (e.g. ER, DFDs [15]) don’t do this. Wherever possible, information should be encoded graphically (i.e. using visual variables) to take advantage of the power of human visual processing and computational offloading [73].

6.3 Recommendations for improvement

6.3.1 Shape similarity

The similarity between the standard symbols used for belief and goal provides a strong reason to use the alternative symbol for belief (the cloud) as the sole representation. This would also resolve the problem of symbol redundancy identified in Semiotic Clarity.

6.3.2 Shape inconsistency

Possible solutions to problems of shape inconsistency (between actor and goal types) are discussed under Semantic Transparency.

6.3.3 Strategic dependencies

strategic dependencies are among the most important relationships in i*, so it is important they are clearly discriminable. dependencies could be represented much more clearly using conventional arrows, making sure to use a different type of arrow to those already used in i* (Fig. 18). It is now a straightforward perceptual task to distinguish between vulnerabilities and commitments by the absence or presence of arrowheads. As with the “Ds” in the original representation, the arrows go from the depender to the dependee.

6.3.4 Dealing with textual differentiation of relationships

Textual differentiation of relationships is the major source of Perceptual Discriminability and Semiotic Clarity problems in i*, so resolving this should be a major priority. To do this requires using visual variables instead of text to distinguish between relationship types. As an example of how to do this, consider the case of contributions, the worst case of textual differentiation in i*, in which labels are used to distinguish between 9 different relationship types.Footnote 7 To address this, we need to go back to the semantics these relationships are designed to express: visual representation should always begin with a thorough analysis of the information to be conveyed [4]. As shown in Table 3, these relationships are encoding a number of different (and orthogonal) properties:

-

Sign (positive, negative, and unknown): is there a positive or negative effect on the softgoal?

-

Sufficiency (sufficient, insufficient, and unknown): is the relationship sufficient to satisfy or deny the softgoal?

-

Logical dependencies (AND/OR): are the contributions inter-dependent?

The different contribution types represent different combinations of values of these properties. To graphically encode these relationships, we need to use visual variables to encode each of these elementary properties.

Sign. As one possibility, we could use different line textures to represent positive, negative, and unknown sign correlations. This represents use of texture (a variable rarely used by visual notation designers but heavily used by graphic designers and cartographers):

-

Positive: ++++++++

-

Negative: | | | | | | | | | | |

-

Unknown sign: ? ? ? ? ? ? ? ? ? ?

Alternatively, we could use different line connectors (in place of the existing arrow heads): this represents use of shape (Fig. 19). This would resolve the problem of symbol overload with actor relationships, which currently use the same type of arrowhead. It would also increase discriminability of contributions from all other relationship types, which mostly use different types of arrows.

Sufficiency. Sufficiency is an ordinal property, so should be encoded using ordinal variables (see Visual Expressiveness for a discussion about matching properties of information to properties of visual variables). For example, if we used texture to encode sign, we could use darker and larger texture elements (making use of brightness and size, both ordinal variables) to encode different levels of sufficiency.

-

Positive sufficient (Make): ++++++++

-

Positive unknown (Some +): + + + + + + + +

-

Positive insufficient (Help): + + + + + + + +

Alternatively, if we used shape to encode sign (as in Fig. 19), we could use darker or lighter fills (brightness) to encode sufficiency (Fig. 20).

Logical relationships. One thing to notice about the analysis in Table 3 is that the last two relationships (AND, OR) are independent of all the others. The reason is that they do not define properties of contributions themselves but logical relationships among them: this suggests that they should not be represented as visual properties of links (like sign and sufficiency) but as relationships between links. One way of doing this would be to use merged lines to represent AND relationships and separate lines for OR relationships (Fig. 21: left). Alternatively, different junctions could be used (as in Event Driven Process Chains): in Fig. 21 (right), shape + brightness are used to differentiate the junctions.

7 Semantic Transparency

7.1 Definition of principle

Semantic transparency refers to the use of graphical representations whose appearance suggests their meaning. While Perceptual Discriminability simply requires that symbols be different from each other, this principle requires that they provide cues to their meaning. Semantically transparent symbols reduce cognitive load because they have built-in mnemonics, making it easier to learn and remember what they mean [70]. Such representations improve speed and accuracy of understanding, especially by naïve users [7, 51].

Semantic transparency is not a binary state but a continuum (Fig. 22). Semantic immediacy means that a novice reader would be able to (correctly) infer the meaning of a symbol from its appearance alone (e.g. stick figure for a person). At the other end of the scale, semantic perversity means a novice reader would be likely to guess a completely different meaning. At the zero point of the scale, semantically opacity means there is an arbitrary association between a symbol and its meaning (e.g. rectangles on UML Class Diagrams).

The concept of semantic transparency formalises and operationalises subjective notions like “intuitiveness” or “naturalness” that are often used when discussing visual notations, as it can be empirically evaluated e.g. by getting novices to guess what symbols mean and measuring the correlation between guesses and correct answers (1 indicates semantic immediacy while −1 indicates semantic perversity).

Icons are symbols that perceptually resemble their referent concepts: this reflects one of the basic distinctions in semiotics [69]. Icons are particularly effective for communication with novices as their meaning can be perceived directly or easily learnt; they also support communication across international boundaries [25]. For this reason, icons are routinely used in design of graphical user interfaces (GUIs) but surprisingly rarely in RE visual notations. Empirical studies show that replacing abstract shapes with concrete icons significantly improves understanding of RE models by novices [51]. Semantic transparency also applies to spatial relationships: certain spatial arrangements of visual elements predispose people towards a particular interpretation of how they are related even before the meaning of the elements is known [29, 87].

7.2 Results of evaluation

i* currently makes very little use of semantic transparency. Most symbols in i* are abstract geometrical shapes whose meaning is purely conventional and must be learnt (i.e. semantically opaque). A novice would be unlikely to be able to guess what any of the symbols in Fig. 23 mean. Ironically, one of the few exceptions to this is a synograph: the cloud (the alternative symbol for belief) is a widely recognised convention for expressing inner thoughts—the ubiquitous “thought bubble”. i* also uses spatial enclosure to show actor viewpoints on SR diagrams. Placing elements inside the actor boundary suggests that they form part of that actor’s rationale (as spatial enclosure naturally suggests containment).

7.3 Recommendations for improvement

The semantic opacity of the i* visual notation represents one of its major weaknesses for communicating with end users. On the positive side, it also represents one of the major opportunities for improving it. Semantic transparency is one of the most powerful tools in the visual notation designer’s bag for improving understanding by novices.

7.3.1 Beliefs

The alternative symbol for belief (cloud) is the only semantically transparent node type in i*. This provides another reason to choose this as the standard (and sole) symbol for belief to resolve the problem of symbol redundancy (Semiotic Clarity).

7.3.2 Actors and actor types

The current symbols used to represent actors and actor types are neither discriminable nor mnemonic. An obvious way to increase the semantic transparency of i* diagrams would be to use stick figures to represent actors (as in UML Use Cases and rich pictures). Such figures are truly semantically immediate, as they are universally interpreted as representing people and have been used for this purpose across cultures and time: stick figures are commonly seen in early cave paintings and children’s drawings. This would also increase the discriminability of actors from other symbols (Perceptual Discriminability).

Different types of actors could be distinguished using variations of stick figures (Fig. 24):

-

An agent could be shown wearing dark glasses and holding a gun (by association with agents of the 007 kind)

-

A position could be shown without a face as it does not represent a specific person (or perhaps by association with faceless bureaucrats!). Alternatively, a position could be shown with a rectangular head (not shown in Fig. 24), suggesting a position in an organisational chart.

-

A role could be shown with a hat, as “wearing different hats” is a common metaphor used across cultures for playing different roles.

Note that all actor types now have the same basic shape, thus resolving the problem of shape inconsistency identified in Perceptual Discriminability. Drawing these figures could present problems for the artistically challenged (Cognitive Fit), but would make diagrams more visually interesting and appealing to novices.

Semantic immediacy versus semantic translucency. While semantic immediacy draws on direct (literal) associations such as perceptual resemblance (e.g. stick figures for people), semantic translucency draws on indirect (mnemonic) associations. To be a good mnemonic, a symbol needs to be able to trigger the appropriate concept in the reader’s mind. For example, we are not suggesting that i* agents wear dark glasses and carry guns, but use this image to trigger the concept of “agent”. If a novice reader sees this for the first time, they would be unlikely to be able to guess what it means (i.e. it is not semantic immediate in the strict sense); however, once the association is explained to them, it will help them to remember it in the future. Such representations can draw on word association (e.g. secret agent → agent), rhetorical devices (e.g. alliteration: green → goal), metaphorical associations (e.g. hats → roles), or cultural associations (e.g. red → danger).

7.3.3 Intentional elements

The remaining node types in i* are the intentional elements: goals, softgoals, tasks, and resources. These are among the most important constructs in i*, yet currently all use abstract symbols that have no association with their referent concepts (semantic opacity), making it difficult for novices to learn and remember what they mean. In general, it is much more difficult to find semantically transparent representations for abstract concepts such as these, which unfortunately represent the majority of constructs in RE notations. It is usually impossible to find a direct (semantically immediate) representation, as this relies on perceptual resemblance, so the best we can hope for is an indirect (semantically translucent) association.

Figure 25 shows a set of semantically translucent symbols for the i* intentional elements. All of these are designed to resemble concrete objects that are somehow associated with the referent concept:

-

The task symbol is designed to look like a “sticky note”, one of the most common ways of recording tasks in everyday life.

-

The resource symbol resembles a tree, one of our most important natural resources.

-

The goal symbol is designed to look like a football, by association with goals in football (drawing on a sporting metaphor). This symbol could be made to look more football-like by using a 3D rather than a 3D symbol (i.e. a sphere rather than a circle). 3D symbols have the added advantage of being more perceptually effective than 2D shapes [34].

-

softgoals are shown as dotted circles, to suggest that they are goals (as they are the same shape) but are less well defined (as shown by their “fuzzy” outline). This resolves the problem of shape inconsistency identified in Perceptual Discriminability: goals and softgoals now have the same shape and are differentiated by a secondary visual variable (brightness).

All of these symbols rely on indirect associations rather than direct perceptual resemblance: sticky note → task; tree → resource; football → goal (Fig. 26). However, once the association between the (concrete) object and the (abstract) concept is explained, it can be used to aid recognition and recall.

The proposed new symbols are also much more discriminable as they use shapes from different shape families (circles, squares, and irregular polygons) so are highly unlikely to be confused. In most cases, improving Semantic Transparency also improves Perceptual Discriminability [58].

7.3.4 Putting the “fun” back into i*

Another, less tangible benefit of using iconic representations is that it makes diagrams look more fun and accessible to novices. Appearances are important and can affect users’ motivation to participate in the analysis process and their perceptions of their ability to do so effectively (self-efficacy) [70]. Pictorial representations appear less daunting to novices (especially technophobes) than diagrams comprised only of abstract symbols [3, 70]. If diagrams look easy to understand, this can go a long way towards breaking down communication barriers between analysts and business stakeholders. Empirical studies also show that people prefer concrete objects to abstract symbols, which makes iconic representations more enjoyable and "fun" [3]. Rich pictures [10], another technique used in early analysis, make extensive use of iconic representations, resulting in diagrams that look more like cartoons than technical diagrams (Fig. 27). These demonstrate that RE visual notations don’t have to be dull (though they almost always are).

Rich pictures [10]: an example of the use of pictorial representations in early analysis

Currently, there is very little about i* diagrams that suggests they are intended for use in early analysis. They are as technical-looking—if not more—as diagrams used in later development stages (e.g. UML). Replacing the existing i* symbol set by a more iconic vocabulary would make diagrams more cartoon-like and accessible to non-technical people (i.e. more like rich pictures).

7.3.5 Task decomposition relationships

task decomposition in i* is currently shown using links with a perpendicular bar at the “parent” end (Fig. 28: left): subtasks may appear above, below, left, or right of their parent task. This is a semantically opaque way to show decomposition and a novice reader would be highly unlikely to guess the relationship among the elements. Decomposition could be shown in a more semantically transparent way using “organisational chart” or “tree structure” lines (right-angled lines which converge into one at the parent end), with children horizontally aligned and vertically below the parent (Fig. 28: right). Experimental studies show that this configuration of elements is naturally interpreted as a hierarchy [87] and using any other spatial layout of elements (e.g. left to right, right to left, or bottom to top) is likely to be misinterpreted [40]. Of course, using such a layout convention is only possible if diagrammatic complexity is reduced (Complexity Management): currently i* diagrams are so complex that elements must be placed wherever they fit.

Task decomposition. Left: existing representation (from [89]); right: this spatial arrangement of elements is naturally interpreted as a hierarchy

There is no longer any need to indicate the direction of decomposition (the perpendicular bar in the existing representation) as this is defined by vertical position (the parent is always above the child). However, circles are used as line connectors to clearly differentiate these relationships from other types of relationships (Perceptual Discriminability).

This representation incorporates 3 visual variables: horizontal position, vertical position, and shape (for both line topology and line connectors). Using multiple visual variables to differentiate between symbols is called redundant coding, which improves Perceptual Discriminability (by increasing visual distance) and Visual Expressiveness.

8 Complexity Management

8.1 Definition of principle

Complexity management refers to the ability to present large amounts of information without overloading the human mind. This relates to diagrammatic complexity: the number of symbol instances or tokens on each diagram (not to be confused with graphic complexity, the number of symbol types in a notation, which is covered under Graphic Economy). Managing complexity is an important issue in RE [37] and was identified as the #1 “hot spot” in a recent review of RE research [11]. It is also one of the most intractable issues in design of visual notations: a well-known problem with visual representations is that they do not scale well [12].

Complexity has a major effect on cognitive effectiveness as the amount of information that can be effectively conveyed by a diagram is limited by human perceptual and cognitive abilities:

-

Perceptual limits: The ability to discriminate between diagram elements increases with diagram size [67].

-

Cognitive limits: The number of diagram elements that can be comprehended at a time is limited by working memory capacity (believed to be seven, plus or minus two elements at a time [54]). When this is exceeded, a state of cognitive overload ensues and comprehension degrades rapidly.

Complexity management is especially important for communicating with end users, who lack strategies for dealing with complexity [58]. Complexity is one of the major barriers to end user understanding of RE diagrams [55, 75].

To effectively represent complex situations, visual notations need to allow diagrams to be divided into perceptually and cognitively manageable “chunks”. The most effective way of doing this is by recursive decomposition: allowing diagram elements to be defined by complete diagrams at the next level of abstraction [16]. This is the common denominator among all visual notations that effectively manage complexity. Visual languages that support this are called hierarchical visual languages [13]. Hierarchical structuring of RE diagrams in this way can improve end user understanding by more than 50% [55]. DFDs provide one of the earliest (and best) examples of how to do this, which may partly explain their longevity in practice despite their well-known semantic limitations (Fig. 29).

8.1.1 The role of tool support

A common misconception is that complexity management should be provided by tools rather than by the notation itself. It is only because so many RE notations lack formal complexity management mechanisms that tool designers are forced to incorporate them to make notations workable in practice. However, leaving this up to tools is likely to result in inconsistent and suboptimal solutions. Each tool vendor will implement complexity management in different ways (“point solutions” [9]), creating problems of learning and communication. To be most effective, complexity management mechanisms should be defined at the notation level [56, 85]. Notations such as DFDs, UML Activity Diagrams, and Statecharts do this, which increases both their usability (for analysts) and communication effectiveness (for end users).

8.2 Results of evaluation

Currently, i* lacks effective complexity management mechanisms. It provides some hierarchical structuring, as SD and SR diagrams define two levels of abstraction. However, to be most effective, the number of levels of abstraction should not be fixed but should vary depending on the complexity of the underlying domain [20]. A much greater limitation is that it provides no way of partitioning either type of diagram, meaning that both SD and SR diagrams must be represented as single monolithic diagrams, no matter how complex they become (Fig. 30). Empirical studies show SD diagrams consist of an average of around 100 elements while SR diagrams can consist of more than 300 elements [19], which exceed perceptual and cognitive limits.

SD diagram example: i* lacks mechanisms for modularising diagrams, which means that each diagram type must be shown as a single monolithic diagram (example from [89])

Without effective complexity management mechanisms, i* stands little chance of being adopted in projects of real world size and complexity, where managing complexity represents one of the greatest challenges [17, 23]. One of the only empirical evaluations of i* in practice identified lack of complexity management as the most serious barrier to its use in industrial projects. The study concluded:

The evaluation has demonstrated that there is a set of issues that need to be addressed by the i* modelling framework to ensure its successful application within industrial software development projects. These issues boil down to a lack of modularization mechanisms for creating and structuring organizational models [19].

The main weakness in i* is that it does not support recursive (element → diagram) decomposition (Fig. 31). Currently, i* provides two forms of decomposition:

-

Element → element decomposition: tasks can be decomposed via task decomposition relationships. However, they can only be decomposed in situ (on the same diagram), which does not reduce diagrammatic complexity.

-

Diagram → Diagram decomposition: actors are decomposed in more detail on the SR diagram, but this is limited to one level of decomposition and all actors are decomposed on a single diagram, which does not reduce diagrammatic complexity at the lowest level (where it is needed most).

8.3 Recommendations for improvement

8.3.1 Partitioning the SR diagram

One way of reducing complexity of the SR diagram would be to “explode” each actor on the SD diagram into a separate SR diagram: this would partition the SR diagram into a set of smaller diagrams (one for each actor). This provides a recursive decomposition capability for actors, though this is limited to a single level.

8.3.2 Allow all elements to be decomposed

Currently, i* only allows actors and tasks to be decomposed. To effectively support complexity management, this capability should be extended to other (possibly all) constructs. Among other things, this would solve the problem of the Physics of Notations not being representable in i* by allowing goals to be decomposed into sub-goals (Sect. 3.5).

8.3.3 Provide recursive decomposition capability

To effectively support complexity management, i* needs to support recursive (element → diagram) decomposition. That is, to allow elements on higher level diagrams to be defined by complete diagrams at the next level, to as many levels as required. For example, tasks could “explode” to task decomposition diagrams, goals to end-means diagrams, and softgoals to contribution diagrams. This would result in a hierarchy of diagrams, with the SD diagram at the top level, SR diagrams (one for each actor) at the second level and lower level diagrams (“exploding” elements on SR diagrams) to as many levels as required (Fig. 32).

9 Cognitive Integration

9.1 Definition of principle

This principle only applies when multiple diagrams are used to represent a problem situation. Using multiple diagrams places additional cognitive demands on the reader to mentally integrate information from different diagrams and keep track of where they are in the system of diagrams [76]. Kim et al. [30, 38] have proposed a theory to address this issue, called the cognitive integration of diagrams (Fig. 33). According to this theory (which has been validated in an RE context), for multi-diagram representations to be cognitively effective, they must include explicit mechanisms to support:

-

Conceptual integration: to enable readers to assemble information from separate diagrams into a coherent mental representation of the system.

-

Perceptual integration: perceptual cues to simplify navigation and transitions between diagrams.

9.2 Results of evaluation

Currently, cognitive integration is not a major problem in i* as there are only two diagrams to integrate (as there are only two diagram types and each is represented as a single diagram). However, introducing complexity management (as described in the previous section) introduces cognitive integration problems, as it multiplies the number of diagrams.

9.2.1 Naming of diagram types

The current names for the i* diagram types (Strategic Dependency and Strategic Rationale) are too similar and easily confused. In particular, "strategic" acts as a “noise word” as it lacks a precise meaning and is overused in most business contexts. It also performs no differentiating function as it prefixes both diagram types.

9.2.2 Linking diagram types (perceptual integration)

The use of different symbols to represent actors on SD and SR diagrams (discussed under Semiotic Clarity) makes the link between the diagram types unclear.

9.2.3 Lack of overview (conceptual integration)

An important mechanism to support conceptual integration is a longshot diagram, a diagram that provides an overview of the system as a whole. This acts as an overall cognitive map into which information from individual diagrams can be assembled [38, 72]. Examples of such diagrams are rich pictures in the Soft System Methodology and context diagrams in DFDs. While i* includes a top level diagram (the SD diagram), this is too complex to provide an effective overview (e.g. see Fig. 30). As Estrada et al. [19] say:

The dependency model is too concrete to serve as starting point for the analysis of a large enterprise. In such cases, it may contain many actors with a large number of dependencies corresponding to different business processes, whose union constitutes a very complicated model to manage.

9.3 Recommendations for improvement

9.3.1 Naming of diagram types

Alternative names for the diagram types could be:

-

Actor Dependency Diagram: top level diagram showing dependencies among actors.

-

Actor Rationale Diagrams: diagrams for each actor showing their internal rationale or intentions.

These names are more indicative of the content of each diagram and less likely to be confused with each other.

9.3.2 Linking diagram types (perceptual integration)

To more clearly show the link between the SD and SR diagrams, the same symbol should be used to represent actors on both diagram types, as the SR diagram is really just a refinement of the SD diagram. One way of doing this would be to use the stick figure on both diagrams, but with the head expanded on the SR diagram to show the “inner workings” of each actor’s mind (Fig. 34). This would also resolve the problem of symbol redundancy identified in Semiotic Clarity.

9.3.3 Contextualisation (focus + context)

Contextualisation (or focus + context) is a technique used in information visualisation where the part of a system of interest (the focus) is displayed in the context of the system as a whole [41, 81]. This could be applied in SR diagrams by exploding a single actor at a time (the focus), while showing dependencies with all other actors (the context). All actors apart from the focus would be shown in “black box” form (as on the SD diagram). Currently, the SR diagram shows all actors in “glass box” form, leading to uncontrolled complexity.

9.3.4 Create an overview diagram (conceptual integration)

Currently, the SD diagram is too complex to provide an effective overview or longshot diagram. This is one of the limitations of the complexity management approach proposed in the previous section: while it provides a way of decomposing the SR diagram, the SD diagram remains as a single monolithic diagram.

This problem is difficult to resolve within the existing i* diagram architecture, where the SD diagram shows all actors and dependencies among them and the SR diagram explodes each actor. One solution would be to create an overview diagram that shows only a subset of elements on the SD diagram e.g. the central actors and their most important goals. This should be limited to 7 ± 2 elements so that it is cognitively manageable. Each element on this diagram can then be recursively decomposed to as many levels as required. This would remove the (somewhat artificial) distinction between SD and SR diagrams that currently exists in i*. In this new proposal, there would be a single diagram type, shown at multiple levels of abstraction. There would be no restriction on the type of elements that can appear on each diagram, only on their number.

10 Visual Expressiveness

10.1 Definition of principle

The visual expressiveness of a notation is defined by the number of different visual variables used and the range of values (capacity) used of each variable: this measures utilisation of the graphic design space. Using a variety of visual variables results in a perceptually enriched representation that exploits multiple visual communication channels.

The choice of visual variables should not be arbitrary but should be based on the nature of the information to be conveyed [4]. Different visual variables have properties that make them suitable for encoding different types of information. For example, colour can only be used for nominal data as it is not psychologically ordered [39].

10.2 Results of evaluation

Currently, i* uses three visual variables (shape, brightness, and orientation), resulting in a visual expressiveness of 3. This is more than most RE visual notations, which typically only rely on a single visual variable (shape). Another positive aspect is that the i* symbol set uses more curved shapes than most RE visual notations, which mostly rely on rectangles or rectangle variants. Curved shapes are both more perceptually efficient and aesthetically pleasing [3, 71]. Overall, i* does well on this criterion compared to most RE visual notations, but there is still room for improvement. In particular, it uses only two levels of brightness (dotted vs. solid lines) and a limited range of shapes (all abstract geometrical shapes). Iconic and 3D shapes are not used at all, even though these are more perceptually and cognitively effective than abstract 2D shapes [34, 87].

10.2.1 Informal and ineffective use of colour

Colour is one of the most cognitively effective of all visual variables: the human visual system is highly sensitive to variations in colour and can quickly and accurately distinguish between them [49, 88]. Differences in colour are detected three times faster than shape and are also more easily remembered [47, 79]. However, if not used carefully, colour can undermine communication.

Currently, colour is not used effectively in i*. Most examples in the i* Guide use blue text on a green background for symbols (e.g. Fig. 35) but use of colour is not explicitly mentioned in the text, making it unclear whether this an official part of the visual syntax. Whether it is or not, it certainly does not represent effective use of colour:

Ineffective use of colour in i* (from [26])

-

All symbols are the same colour, meaning that colour plays no role in differentiating between symbols (Perceptual Discriminability). In fact, colour does not convey any additional information, which is why it is not included in the calculation of i*’s visual expressiveness (a variable only adds to visual expressiveness if it is used to encode information).

-

Coloured text on a coloured background reduces understanding of text and is the worst possible combination for both legibility and aesthetics [86]. This means that the use of colour actively undermines communication.

10.3 Recommendations for improvement

10.3.1 Effective use of colour

A common misconception is that use of colour should be defined as part of tool support rather than the notation itself. In the case of i*, use of colour is not formally prescribed in the notation so different software tools apply different colour schemes (e.g. [50]). However, like complexity management, use of colour should not be left up to tool designers to implement in idiosyncratic and possibly suboptimal ways (e.g. using colours that are not reliably discriminable or reduce legibility of text). Effective use of colour should be prescribed at the notation level to avoid such problems.

Colour could be used to increase the visual expressiveness of i* by using different colours to distinguish between symbols (Fig. 36). The colours are chosen to be as discriminable and mnemonic as possible:

-

tasks are yellow (the standard colour for “sticky notes”)

-

resources are green (like trees)

-

softgoals are pink (suggesting “softness” or “fluffiness”)

-

goals are white (like footballs)

In this case, colour is used to communicate rather than to decorate: it helps both to distinguish between symbols (Perceptual Discriminability) and suggest their meaning (Semantic Transparency). Note that colour is only used redundantly, to reinforce differences in shape and brightness. Colour should never be used as the sole basis for distinguishing between symbols, as it is highly sensitive to differences in visual perception (e.g. colour blindness) and printing/screen technology (e.g. black and white printers). Robust design means designing symbols so they are impervious to such differences. Figure 37 shows how the differences between symbols are preserved even in conversion to black and white (the strongest test for robust design).

10.3.2 Dependency strengths (level of vulnerability)

As discussed in Semiotic Clarity and Perceptual Discriminability, i* makes extensive use of textual encoding to distinguish between different types of relationships. However, it also uses text to encode properties of relationships (e.g. as UML does for multiplicities). For example, different dependency strengths or vulnerability levels for strategic dependencies are distinguished by placing different letters next to the lines (Fig. 38: left). Where possible, information should be encoded graphically (using visual variables rather than text or typographical characteristics) to take maximum advantage of the power of human visual processing. Because dependency strength is an ordinal property, ordinal visual variables need to be used to encode this information. Figure 38 (right) shows how dependency strengths could be encoded graphically. Three visual variables are used in this representation: brightness (dotted lines for open dependencies), size (thick lines for committed dependencies), and colour (red for critical dependencies).

10.3.3 Visual saturation

Many of the recommendations in this paper have involved introducing additional visual variables or expanding the range of values used of a particular visual variable:

-

contributions (Perceptual Discriminability): texture

-

Actors and intentional elements (Semantic Transparency): greater range of shapes (iconic and 3D), brightness

-

task decomposition relationships (Semantic Transparency): vertical position (y), horizontal position (x), and shape

-

Colour coding of intentional elements (Visual Expressiveness): colour

-

dependency strengths (Visual Expressiveness): colour, brightness, and size

Visual saturation refers to the use of the full range of visual variables (the maximum level of visual expressiveness). If all these recommendations were implemented, the visual expressiveness of i* would reach the point of visual saturation, the first RE notation ever to achieve this.

10.4 Interactions with other principles

Increasing Perceptual Discriminability almost always increases visual expressiveness as increasing visual distance involves using a greater range of visual variables and/or a greater range of values. Visual expressiveness also assists Graphic Economy.

11 Dual Coding

11.1 Definition of principle

Perceptual Discriminability and Visual Expressiveness both advise against using text to encode information. However, this does not mean that text has no place in visual notations. According to dual coding theory [66], using text and graphics together to convey information is more effective than using either on their own. This suggests that textual encoding is most effective when it is used in a supporting role: to supplement rather than to substitute for graphics. In particular, text should never be used as the sole basis for distinguishing between symbols, but can be usefully used as a form of redundant coding, to reinforce and clarify meaning.

11.2 Results of evaluation

Currently, i* only uses text or graphics on its own to encode information in its symbol set, so makes no use of Dual Coding.

11.2.1 Labelling of elements

Labels play an important role in interpretation of diagrams and in defining their real world semantics. Many of the examples in the i* Guide use a distinctive convention for labelling diagram elements, called the Type [Topic] notation (e.g. see Fig. 35). However, the rules for formulating such labels and how to interpret them are not explained.

One of the most unusual features of i* is that all relationship labels on diagrams are drawn from a predefined vocabulary of reserved words (e.g. plays, covers, help, and hurt) rather than allowing users to define domain relevant labels. This is something more commonly associated with programming languages than RE notations and is a side-effect of using labels to differentiate between relationship types (Perceptual Discriminability).

A final problem is that most relationships on i* diagrams don’t have labels at all (e.g. see Fig. 35): in particular, strategic dependencies, task decomposition, and means-end relationships. Currently, i* only uses relationship labels for differentiating between relationship types, which is an inappropriate use of labels (as explained in Perceptual Discriminability).

11.2.2 Definitions of elements