Abstract

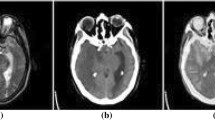

Medical image fusion synthesizes the visual information present in any number of medical imaging inputs into a single fused image without distortion or loss of detail. It enhances image quality by retaining specific features, which improves the clinical applicability of medical imaging for the treatment and evaluation of medical conditions. A major challenge in medical image processing is to incorporate complementary pathological features into one image. Fusion also presents various challenges, such as the existence of fusion artifacts, hardness of the base, comparison of the medical image inputs, and computational cost. Hybrid multimodal medical image fusion (HMMIF) techniques have been designed for pathologic studies, such as neurocysticercosis and degenerative and neoplastic diseases. In this research, two domain algorithms based on HMMIF techniques are developed for several medical image fusion applications: MRI-SPECT, MRI-PET, and MRI-CT. In the proposed method, the nonsubsampled contourlet transform (NSCT) is first used to decompose the input images into low- and high-frequency components. The average fusion rule is applied to the low-frequency NSCT components, and the high-frequency NSCT components are fused by the full fusion rule. The high-frequency NSCT components are further processed with a guided image filtering scheme. The fused image is obtained by applying the inverse transform to the fused coefficients of all frequency bands. The proposed methods are compared with conventional state-of-the-art approaches. Experiments show that the proposed methods are superior in both qualitative and quantitative assessment. The images fused by the proposed algorithms provide information useful for visualizing and understanding the diseases, drawing on the strengths of both source modalities.
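The decompose, fuse per band, and invert pipeline described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation. It assumes a crude two-band split (a box-blur low-pass plus a residual) as a stand-in for NSCT, and it assumes the high-frequency rule selects the coefficient with the larger absolute value, which is a common choice but is not confirmed by the abstract. The guided filtering step is omitted. The names `box_blur`, `decompose`, and `fuse` are hypothetical.

```python
import numpy as np


def box_blur(img, radius=2):
    """Mean filter with edge padding: a crude low-pass stand-in for NSCT."""
    k = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")
    out = np.zeros(img.shape, dtype=np.float64)
    # Sum the k*k shifted copies of the image, then divide to get the mean.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)


def decompose(img, radius=2):
    """Split an image into a low-frequency base and a high-frequency residual."""
    img = np.asarray(img, dtype=np.float64)
    low = box_blur(img, radius)
    high = img - low  # low + high reconstructs the input exactly
    return low, high


def fuse(img_a, img_b, radius=2):
    """Fuse two registered, same-size grayscale images band by band."""
    low_a, high_a = decompose(img_a, radius)
    low_b, high_b = decompose(img_b, radius)
    # Low-frequency band: average fusion rule.
    low_f = 0.5 * (low_a + low_b)
    # High-frequency band: keep the larger-magnitude coefficient
    # (assumed selection rule, see the lead-in).
    high_f = np.where(np.abs(high_a) >= np.abs(high_b), high_a, high_b)
    # Inverse of this simple decomposition is the sum of the bands.
    return low_f + high_f
```

Calling `fuse(mri, ct)` on two co-registered arrays of equal shape returns a float array of the same shape. A real NSCT produces several directional high-frequency sub-bands per scale, so the selection rule would be applied to each sub-band before the inverse transform.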

Cite this article

Rajalingam, B., Al-Turjman, F., Santhoshkumar, R. et al. Intelligent multimodal medical image fusion with deep guided filtering. Multimedia Systems 28, 1449–1463 (2022). https://doi.org/10.1007/s00530-020-00706-0

DOI: https://doi.org/10.1007/s00530-020-00706-0