Abstract

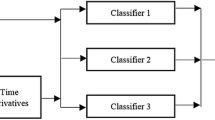

Classifying audio into categories such as speech or music is an important part of many multimedia document retrieval systems. In this paper, we investigate audio features that have not previously been used in music-speech classification, such as the mean and variance of the discrete wavelet transform, the variance of Mel-frequency cepstral coefficients, the root mean square of a lowpass signal, and the difference between the maximum and minimum zero-crossings. We then apply fuzzy C-means clustering to select a viable set of features that enables better classification accuracy. Three classification frameworks are studied: multi-layer perceptron (MLP) neural networks, radial basis function (RBF) neural networks, and hidden Markov models (HMMs); the results of each framework are reported and compared. Our extensive experimentation has identified a subset of features that contributes most to accurate classification and has shown that MLP networks are the most suitable classification framework for the problem at hand.
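For readers unfamiliar with fuzzy C-means, the following is a minimal sketch of the textbook algorithm applied to a single synthetic feature. It is not the authors' feature-selection procedure, which the abstract does not detail; the function name `fuzzy_c_means`, its parameters, and the synthetic data are all assumptions made for illustration.

```python
import numpy as np

def fuzzy_c_means(X, n_clusters=2, m=2.0, n_iter=100, tol=1e-6, seed=0):
    """Textbook fuzzy C-means; X has shape (n_samples, n_features).

    Hypothetical helper for illustration only, not the paper's code.
    """
    rng = np.random.default_rng(seed)
    # Random initial memberships; each row is normalised to sum to 1.
    U = rng.random((X.shape[0], n_clusters))
    U /= U.sum(axis=1, keepdims=True)
    for _ in range(n_iter):
        # Cluster centers: means weighted by memberships raised to the fuzzifier m.
        W = U ** m
        centers = (W.T @ X) / W.sum(axis=0)[:, None]
        # Euclidean distance from every sample to every center (floored to avoid 1/0).
        d = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        d = np.maximum(d, 1e-12)
        # Membership update: u_ik is proportional to d_ik^(-2/(m-1)).
        inv = d ** (-2.0 / (m - 1.0))
        U_new = inv / inv.sum(axis=1, keepdims=True)
        converged = np.abs(U_new - U).max() < tol
        U = U_new
        if converged:
            break
    return centers, U

# Synthetic one-dimensional "feature" with two well-separated groups (assumed data).
data_rng = np.random.default_rng(1)
X = np.concatenate([data_rng.normal(0.0, 0.1, (50, 1)),
                    data_rng.normal(1.0, 0.1, (50, 1))])
centers, U = fuzzy_c_means(X)
labels = U.argmax(axis=1)  # harden the soft memberships
```

A feature whose values separate cleanly into two clusters, as in this synthetic case, is one plausible sign that it discriminates between two classes; how the paper turns cluster structure into a feature ranking is not stated in the abstract.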

Cite this article

Khan, M.K.S., Al-Khatib, W.G. Machine-learning based classification of speech and music. Multimedia Systems 12, 55–67 (2006). https://doi.org/10.1007/s00530-006-0034-0

DOI: https://doi.org/10.1007/s00530-006-0034-0