Abstract

A problem with convolutional neural networks (CNNs) is that they require large datasets to obtain adequate robustness; on small datasets, they are prone to overfitting. Many methods have been proposed to overcome this shortcoming of CNNs. In cases where additional samples cannot easily be collected, a common approach is to generate more data points from existing data using an augmentation technique. In image classification, many augmentation approaches utilize simple image manipulation algorithms. In this work, we propose new methods for data augmentation based on several image transformations: the Fourier transform (FT), the Radon transform (RT), and the discrete cosine transform (DCT). These and other data augmentation methods are considered in order to quantify their effectiveness in creating ensembles of neural networks. The novelty of this research is to consider different strategies for data augmentation to generate training sets from which to train several classifiers that are combined into an ensemble. Specifically, the idea is to create an ensemble based on a kind of bagging of the training set, where each model is trained on a different training set obtained by augmenting the original training set with different approaches. We build ensembles on the data level by adding images generated by combining fourteen augmentation approaches, with three based on FT, RT, and DCT, proposed here for the first time. Pretrained ResNet50 networks are finetuned on training sets that include images derived from each augmentation method. These networks and several fusions are evaluated and compared across eleven benchmarks. Results show that building ensembles on the data level by combining different data augmentation methods produces classifiers that not only compete with the state-of-the-art but often surpass the best approaches reported in the literature.

1 Introduction

Deep learners, especially convolutional neural networks (CNNs), are now the dominant paradigm in image classification, as witnessed by the plethora of articles in the literature that currently spotlight these networks. Learners such as CNNs are attractive, in part, because their architectures learn to extract salient features directly from samples, thus bypassing the need for human intervention in selecting the appropriate feature extraction method for the task at hand. These learned features have been shown to eclipse the power of handcrafted features chiefly because CNNs progressively downsample the spatial resolution of images while at the same time enlarging the depth of the feature maps.

Despite the strengths of CNNs, there are some significant drawbacks. Because the parameter size of CNNs is huge, these networks tend to overfit when trained on small datasets. Overfitting reduces the classifier's ability to generalize its learning so that it can correctly predict unseen samples. Researchers are now pressured to collect colossal datasets to accommodate the needs of deep learners, as exemplified by the ever-growing dataset ImageNet [1], which now contains over 14 million images classified into 1000 plus classes. In many domains, such as medical image analysis and bioinformatics (where samples might only number in the hundreds), collecting sufficient data for proper CNN training is prohibitively expensive and labor-intensive. This need for enormous datasets also requires that researchers have access to costly machines with considerable computational power.

Several solutions to the problem of overfitting that bypass the need for collecting more data have been proposed. Two of the most powerful techniques are (1) transfer learning, where a given CNN architecture is pretrained on a massive dataset and provided to researchers and practitioners so that the network can be finetuned on smaller datasets, and (2) data augmentation, which adds new data points based on the original samples in a training set. Other methods unrelated to the techniques employed here include dropout [2], zero-shot/one-shot learning [3, 4], and batch normalization [2].

This study focuses on data augmentation since it has become a vital technology in fields where large datasets are difficult to procure [5,6,7]. Data augmentation methods aim at increasing the amount of training data by adding slightly modified copies of already existing data or newly created synthetic data from existing data. They act as a regularizer and help reduce overfitting when training a machine learning model. Not only does data augmentation promote learning that leads to better CNN generalization, but it also mitigates overfitting by adding and extracting information that is inherent within the training space. Data augmentation (DA) is a key element in the success of CNN models, as its use can lead to faster convergence and better prediction accuracy.

In the literature (see surveys [5,6,7]), most of the work focuses on geometric transforms, statistical methods for color modification, and, recently, learned methods such as those based on GANs. To the best of our knowledge, this is the first paper to report a very large study of data augmentation approaches based on feature transforms, testing them on several datasets spanning different applications.

Specifically, we focus on data augmentation techniques for image classification. These techniques can be divided into two broad types depending on whether the methods are based on basic image manipulations (such as translating and cropping) or on deep learning approaches [5]. The main object of this study is to evaluate the feasibility of building ensembles at the data level by adding augmented images generated using different sets of image manipulation methods, an approach that was taken in [8]. Unlike [8], however, this work performs a more exhaustive study building ensembles of augmentation methods by assessing over twice the number of techniques across eleven (versus only four) benchmark datasets.

The remainder of this paper is organized as follows: in Sect. 2, we review some of the best-performing image manipulation approaches for data augmentation. In Sect. 3, novel data augmentation algorithms based on the Radon transform (RT) [9], the discrete cosine transform (DCT), and the Fourier transform (FT) are proposed. As described more fully in Sect. 3, ensembles are built with pretrained ResNet50s finetuned on training sets composed of images taken from the original data and generated by an augmentation technique. These networks and their fusions are evaluated on the benchmarks described at the end of Sect. 3. In Sect. 4, we compare the performance of individual augmentation approaches and the ensembles built on them. The best ensemble reported in this work either exceeds the performance of the state-of-the-art in the literature or achieves similar performance on all the tested datasets. In Sect. 5, we conclude with a few suggestions for further research in this area.

The main contributions of this study can be summarized as follows:

- Presented is an extensive evaluation of common image manipulation methods used for data augmentation across eleven freely available and diverse benchmarks.
- Proposed are three new augmentation approaches utilizing RT, FT, and DCT transforms.
- Demonstrated is the value of building deep ensembles of classifiers on the data level by adding to the training sets images generated using different data augmentation approaches: the experimentally derived ensemble developed in this work is shown to achieve state-of-the-art performance on several benchmarks.
- Provided to the public at no charge is the MATLAB source code used in the experiments reported in this work (available at https://github.com/LorisNanni/Feature-transforms-for-image-data-augmentation).

2 Related work

As mentioned in the Introduction, this study focuses on building ensembles with augmentation sets produced by image manipulation methods. In [5], these methods are divided into five groups: (1) simple geometric transformations, (2) random erasing and cutting, (3) image mixing, (4) kernel filters, and (5) color space transforms. Most of these augmentation algorithms are easy to implement. Practitioners must be careful, however, when applying these image manipulations to a sample because it is possible to produce new images that no longer belong to the same class as the original. Flipping an image of the number six, for instance, would result in an image recognized as the number nine.

Flipping, especially along the horizontal axis, is one of the simplest and most popular geometric transforms for data augmentation [5], as are rotation (typically on the right or left axis in the range [1°, 359°]) and translation (where positional bias is avoided by shifting a sample up, down, left, and right) [5]. A problem with translation is that it can introduce undesirable noise [10]. Random cropping is another simple technique that reduces the size of new images, which is often needed to fit the input of a network. Augmented data can also be generated by merely substituting random values in an image, as extensively evaluated in [11]. In [12], the authors compared the performance of these simple augmentation techniques with each trained on AlexNet and assessed on two datasets, ImageNet and CIFAR10 [13]: rotation was found to perform better than translation, random cropping, and random values.

Random erasing [14] and cutting [15] occlude images; these methods model what occurs regularly in the real world, where objects are often only partially presented in the visual field. A review of the literature on augmentation methods based on this category can be found in [6]. Of particular interest is the method proposed in [14] that randomly erases an image with patches that vary in size. This method of partially erasing images was evaluated on ResNet architectures across three datasets: Fashion-MNIST, CIFAR10, and CIFAR100. Results showed consistent performance improvements.

Another simple method for constructing images is to mix them. A simple way to accomplish this task is to average the pixels between two or more images belonging to the same class [16]. Alternatively, images can be submitted to a transform, and the resulting components can be mixed, for example, by chaining, as in [17]. Masks can also be applied. In [16], the authors combined images using several image manipulation techniques: random images were flipped and cropped, and then, the RGB channel values for each pixel were averaged. Some nonlinear methods for mixing images were proposed in [18], and GANs were used in [18] to blend images.

Kernel filters can also be applied to create new images within a sample space. Filters are often used to sharpen or blur images. Filters, such as Gaussian blur, are applied by sliding an n × n window across the image. PatchShuffle, proposed in [19], randomly swaps matrix values in the filter window to make new images.

Novel color images can be created by means of color space transformations. A positive side-effect of this technique is the removal of illumination bias [5]. Transformations of color space can involve making a histogram of pixels in a color channel and applying different filters, much like those positioned over the lenses of cameras to alter the characteristics of the color space in a scene. Alternatively, color spaces can be converted into other color spaces. Care must be taken when transforming the color space, as it has been observed, for example, that changing an RGB image to a grayscale image can reduce the performance of a classifier [20]. New images can be produced by adding noise to color distributions or by jittering and adjusting the brightness, contrast, and saturation of samples [12, 21]. These color adjustments run the risk of removing valuable information. A review of color space transforms for image augmentation and a comparison of this type of image manipulation with geometric transforms is available in [22].

Not all data augmentation techniques take into account the entire training set. One popular technique in this vein is PCA jittering [12, 21,22,23,24], which produces new images by multiplying the PCA components by a small number. In [22], only the first component, which contains the most information, is jittered by being multiplied by a random number selected from a uniform distribution. Finally, in [23], an original image is transformed by PCA and DCT and jittered by adding noise to all components before reconstructing the image.

3 Materials and methods

3.1 Proposed approach

The method proposed here for building ensembles of deep learners is illustrated in Fig. 1. A given training set is augmented using n = 14 approaches, each detailed in Sect. 3.2. These new training sets are then used to finetune fourteen ResNet50s pretrained on ImageNet. In this work, each pre-trained ResNet50 is finetuned with a batch size of 30 and a learning rate of 0.001. In the testing phase, each unknown sample is classified by the 14 CNNs, and the resulting scores are fused by the sum rule.
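
The fusion step described above can be sketched as follows. This is a minimal Python illustration, not the authors' MATLAB code; the function names `sum_rule` and `predict` and the example scores are assumptions introduced here.

```python
def sum_rule(score_lists):
    """Fuse per-network class scores by adding them class by class."""
    n_classes = len(score_lists[0])
    fused = [0.0] * n_classes
    for scores in score_lists:
        if len(scores) != n_classes:
            raise ValueError("all networks must score the same classes")
        for c, s in enumerate(scores):
            fused[c] += s
    return fused


def predict(score_lists):
    """Return the index of the class with the largest fused score."""
    fused = sum_rule(score_lists)
    return max(range(len(fused)), key=fused.__getitem__)


# Hypothetical scores from three networks for one test sample (three classes).
scores = [
    [0.6, 0.3, 0.1],
    [0.2, 0.5, 0.3],
    [0.1, 0.6, 0.3],
]
fused = sum_rule(scores)
label = predict(scores)
```

In this toy case the first network alone would pick class 0, while the fused scores favor class 1, which is the kind of correction an ensemble is meant to provide.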

3.2 Data augmentation methods

In this section, we describe the data augmentation sets (APPs1-14) explored in this study. APPs1-11 have been detailed in [8], so they will receive less attention. APPs12-14 are proposed here for the first time; these augmentations are explained more fully and illustrated in Fig. 2 (resulting images) and Fig. 3 (methods).

The fourteen augmentation sets are the following:

APP1 (number of new images generated: 3) takes a given image and randomly reflects it top–bottom and left–right for two new images. The third transform linearly scales the original image along both axes with two factors randomly extracted from the uniform distribution [1, 2].

APP2 (number of new images generated: 6) replicates App1 with three additional manipulations: image rotation (randomly extracted from [− 10, 10] degrees), translation (along both axes with the value randomly sampled from the interval [0, 5] pixels), and shear (with vertical and horizontal angles randomly sampled in the interval [0, 30] degrees).

APP3 (number of new images generated: 4) replicates App2 without shear.

APP4 (number of new images generated: 3), proposed in [23], applies a transform based on PCA, where the PCA coefficients extracted from a given image are transformed (1) by randomly setting to zero (with a probability of 0.5) each element of the feature vector; (2) by adding noise to each component based on the standard deviation of the projected image; and (3) by selecting five images from the same class as the original image, computing the PCA vector, and randomly selecting components with a probability 0.05 from the original PCA vector and swapping them out with some of the corresponding components of the five other PCA vectors. The PCA inverse transform is applied to the three perturbed PCA vectors of the original image to produce the augmented images.

APP5 (number of new images generated: 3) applies the same perturbation method as those described in App4 using the DCT transform rather than PCA. With APP5, the DC coefficient is never changed.
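
The three perturbations shared by APP4 and APP5 can be sketched on a flat list of transform coefficients. This is a hedged illustration only: the function names are hypothetical, index 0 is assumed to hold the DC coefficient (left untouched, as stated for APP5), and the exact noise formula is an assumption, since the text says only that the noise is based on a standard deviation.

```python
import random
import statistics


def zero_out(coeffs, rng, prob=0.5):
    """Perturbation 1: zero each non-DC coefficient with probability `prob`."""
    return [c if i == 0 or rng.random() >= prob else 0.0
            for i, c in enumerate(coeffs)]


def add_noise(coeffs, rng, half_width=0.5):
    """Perturbation 2: add noise scaled by the spread of the coefficients.

    Assumption: uniform noise in [-half_width, half_width] times the
    population standard deviation of the coefficient vector.
    """
    sigma = statistics.pstdev(coeffs)
    return [c if i == 0 else c + sigma * rng.uniform(-half_width, half_width)
            for i, c in enumerate(coeffs)]


def swap_in(coeffs, same_class, rng, prob=0.05):
    """Perturbation 3: with probability `prob`, replace a non-DC coefficient
    with the one at the same position in a randomly chosen same-class vector."""
    out = list(coeffs)
    for i in range(1, len(out)):
        if rng.random() < prob:
            out[i] = rng.choice(same_class)[i]
    return out
```

Each function returns a new vector; applying the inverse transform (PCA or DCT) to the three results would yield the three augmented images.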

APP6 (number of new images generated: 3) is applied to color images. The three images are constructed by altering contrast, sharpness, and color shifting. The contrast is altered by linearly scaling the original contrast of the image between the values a, the lowest value, and b, the highest value allowed for the augmented image. Every pixel in the original image outside this range is mapped to 0 if less than a or 255 if greater than b. Sharpness is altered by blurring the original with a Gaussian filter (variance = 1) and by subtracting the blurred image from the original. The color is shifted with three integer shifts from three RGB filters. Each shift is then added to one of the three channels in the original image.
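
The contrast alteration in APP6 can be read as a linear stretch with clipping. The sketch below assumes 8-bit pixel values and this reading of the description; the function name `stretch_contrast` and the sample values are illustrative.

```python
def stretch_contrast(pixels, a, b):
    """Map [a, b] linearly onto [0, 255]; clip values outside the range."""
    if not 0 <= a < b <= 255:
        raise ValueError("expected 0 <= a < b <= 255")
    out = []
    for p in pixels:
        if p < a:
            out.append(0)        # below the lower bound
        elif p > b:
            out.append(255)      # above the upper bound
        else:
            out.append(round(255 * (p - a) / (b - a)))
    return out


stretched = stretch_contrast([0, 50, 100, 150, 200, 255], a=50, b=200)
# stretched == [0, 0, 85, 170, 255, 255]
```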

APP7 (number of new images generated: 7) is applied to color images. The first four augmented images are produced by altering the pixel colors in the original image using the MATLAB function jitterColorHSV with randomly selected values in the range [0.05, 0.15] for hue, in the range [− 0.4, − 0.1] for saturation, in the range [− 0.3, − 0.1] for brightness, and in the range [1.2, 1.4] for contrast. Image five is generated with the MATLAB function imgaussfilt, where the standard deviation of the Gaussian filter randomly ranges in [1, 6]. Image six is generated by the MATLAB function imsharpen with sharpening equal to two and the radius of the Gaussian low-pass filter equal to one. Image seven applies the same color-shifting detailed in App6.

APP8 (number of new images generated: 2) is applied to color images and produces the two augmented images by randomly selecting a target image belonging to the same class as a given image followed by the application of two nonlinear mappings: RGB histogram specification and stain normalization using the Reinhard Method [25].

APP9 (number of new images generated: 6), proposed in [8], applies two elastic deformations: one MATLAB method that introduces distortions into the original image and an RGB adaptation of ElasticTransform from the computer vision tool Albumentations (available at https://albumentations.ai/ accessed 01/15/22). Both methods transform a given image by applying a randomly generated displacement field to its pixels by a value extracted from the standard uniform distribution in the range [− 1, 1] for the first method or in the range of [− 1, + 1] for the second. The resulting horizontal and vertical displacement fields are passed through three low-pass filters: (1) circular averaging filter, (2) rotationally symmetric Gaussian low-pass filter, and (3) rotationally symmetric Laplacian of Gaussian filter. For more details, see [8].

APP10 (number of new images generated: 3), proposed in [8], is based on DWT [26] (specifically, Daubechies wavelet db1 with one vanishing moment). DWT produces four matrices: the approximation coefficients (cA) and the horizontal, vertical, and diagonal coefficients (cH, cV, and cD, respectively). APP10 performs three perturbations on these matrices to generate three new images. In the first method, each element in the coefficient matrices is randomly selected, with a probability of 0.5, to be set to zero. In the second method, a constant is added to each element that is calculated by summing the standard deviation of the original image with a number randomly selected in the range [− 0.5, 0.5]. In the third method, five images from the same class as the original image are randomly selected, and the DWT coefficient matrices are calculated for each one. Elements of the original cA, cH, cV, and cD matrices are then replaced, with a probability of 0.05, with values in the matrices of the other five images. The three augmented images are produced by applying the inverse DWT transform on the three sets of perturbed matrices.

APP11 (number of new images generated: 3) was first proposed in [8] and is based on the Constant-Q Transform (CQT) [27]. After the CQT arrays of a given image are calculated, they undergo the same three perturbations as in APP10. The three augmented images are then produced by applying the inverse CQT transform on the perturbed CQT arrays.

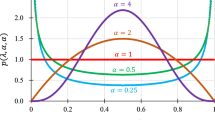

APP12 (number of new images generated: 5) is a new method proposed here based on DCT and the random selection of other images. Three additional images are drawn from the same class as the original image and two from a different class. The original image and the five selected images are projected on the DCT space, with each element of the five images having a probability of 0.2 of being averaged with the original DCT element. Notice that this approach is cumulative, as can be observed in the following pseudo-code:

IDCT is applied at the end. Since there are three channels for each color image, these perturbations are applied to each channel independently.
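
The cumulative averaging in APP12 can be sketched as follows. This is one possible reading of the description, not the authors' code: it operates on DCT coefficient matrices that are assumed to be already computed for one channel, and the name `mix_dct` is hypothetical.

```python
import random


def mix_dct(original, others, rng, prob=0.2):
    """Cumulatively average the DCT coefficients of `original` with `others`.

    `original` is a 2-D list of coefficients; `others` is a list of
    same-shaped 2-D lists (five in APP12).
    """
    mixed = [row[:] for row in original]
    for other in others:
        for r, row in enumerate(other):
            for c, value in enumerate(row):
                if rng.random() < prob:
                    # Average with the running value, so earlier mixes carry over.
                    mixed[r][c] = (mixed[r][c] + value) / 2.0
    return mixed


# With prob=1.0 every element is averaged at every step: ((8 + 4) / 2 + 2) / 2 = 4.
demo = mix_dct([[8.0]], [[[4.0]], [[2.0]]], random.Random(0), prob=1.0)
```

Under this reading, a coefficient selected several times drifts progressively away from its original value, which is what makes the procedure cumulative. For a color image the function would be called once per channel before the inverse DCT.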

APP13 (number of new images generated: 3) takes an original image and builds 3 augmented images, using the Radon transform, as can be observed in the following pseudo-code:

where randSel(num, range) randomly selects num values in a range.

The first image is produced with the Radon transform (RT), which projects the original image's intensity along radial lines oriented at specific angles (with values in [0, 179] degrees); for this image, twenty angles are randomly selected and discarded. The image is then back-projected with the inverse RT (IRT). The second image is generated in the same way as the first, but all angles are used to project the image with RT, after which 15% of the angle values (that is, the columns of the projected image) are set to zero before IRT is applied. The third image combines the two: as in the first image, 20 angles are randomly selected and discarded in the projection step, and, as in the second image, 15% of the remaining angles (columns of the projected image) are set to zero before IRT is applied.
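
The angle selection logic of APP13 can be sketched independently of any particular Radon implementation. In the sketch below, `radon` and `iradon` are caller-supplied callables (hypothetical placeholders for a real forward and inverse transform), a sinogram is assumed to be a list with one column per angle, and `rand_sel` and `zero_columns` are illustrative helper names.

```python
import random


def rand_sel(num, values, rng):
    """Randomly select `num` distinct values, returned in sorted order."""
    return sorted(rng.sample(list(values), num))


def zero_columns(sinogram, fraction, rng):
    """Set a random `fraction` of the sinogram columns (angles) to zero."""
    n = len(sinogram)
    dropped = set(rng.sample(range(n), round(fraction * n)))
    return [[0.0] * len(col) if i in dropped else list(col)
            for i, col in enumerate(sinogram)]


def app13(image, radon, iradon, rng, n_discard=20, zero_fraction=0.15):
    """Build the three APP13 images from caller-supplied transforms."""
    all_angles = list(range(180))
    # Image 1: discard 20 random angles, then back-project.
    kept = rand_sel(len(all_angles) - n_discard, all_angles, rng)
    first = iradon(radon(image, kept), kept)
    # Image 2: use all angles, zero 15% of the columns, then back-project.
    sino = zero_columns(radon(image, all_angles), zero_fraction, rng)
    second = iradon(sino, all_angles)
    # Image 3: discard 20 angles and zero 15% of the remaining columns.
    kept3 = rand_sel(len(all_angles) - n_discard, all_angles, rng)
    sino3 = zero_columns(radon(image, kept3), zero_fraction, rng)
    third = iradon(sino3, kept3)
    return first, second, third
```

Passing the transforms as arguments keeps the sketch free of assumptions about a specific library; any pair of functions with the signatures `radon(image, angles)` and `iradon(sinogram, angles)` would fit.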

APP14 (number of new images generated: 2) builds two images. The first image is generated using the Fast Fourier Transform (FFT). After FFT is applied, 50% of the coefficients are randomly set to zero before performing the inverse FFT. The second image is built by applying DCT; next, a square low-frequency filter (size 40 × 40) is applied on the DCT image before performing the inverse DCT:

where randomMask(image, prob) returns a random pixel mask of the same size as the image, and prob is the probability that each pixel is 1.
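
The two masks used by APP14 can be sketched on coefficient matrices. The transforms themselves are omitted, and two points are assumptions made here: that the low-frequency DCT coefficients sit in the top-left corner, and that the square filter keeps that block while zeroing the rest. The Python names are illustrative counterparts of randomMask.

```python
import random


def random_mask(shape, prob, rng):
    """Return a 0/1 mask of the given shape; each entry is 1 with probability `prob`."""
    rows, cols = shape
    return [[1 if rng.random() < prob else 0 for _ in range(cols)]
            for _ in range(rows)]


def low_frequency_mask(shape, size=40):
    """Return a 0/1 mask that is 1 only in the top-left `size` x `size` block."""
    rows, cols = shape
    return [[1 if r < size and c < size else 0 for c in range(cols)]
            for r in range(rows)]


def apply_mask(coeffs, mask):
    """Multiply coefficients by the mask element by element."""
    return [[c * m for c, m in zip(crow, mrow)]
            for crow, mrow in zip(coeffs, mask)]


# Tiny demonstration on a 3 x 3 coefficient matrix with a 2 x 2 low-pass block.
coeffs = [[9.0, 8.0, 7.0], [6.0, 5.0, 4.0], [3.0, 2.0, 1.0]]
low_passed = apply_mask(coeffs, low_frequency_mask((3, 3), size=2))
```

For the first image, `random_mask(shape, 0.5, rng)` applied to the FFT coefficients would zero roughly half of them before the inverse FFT; `apply_mask` also works on complex values.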

3.3 Datasets

In this work, ensembles of augmentation methods are tested and compared with the literature on eleven benchmark datasets for image classification (see Table 1).

In Table 1, the following information is reported for each dataset: a short name, the original dataset name (if provided in the reference), the number of classes and samples, the size(s) of the images, the testing protocol, and the original reference. The abbreviations for the testing protocols in Table 1 are detailed as follows:

- 5CV and 10CV represent fivefold and tenfold cross-validation, respectively.
- Tr-Te represents a dataset that is pre-divided into training and testing sets. For LAR, a threefold division is provided by the authors. For PBC, the official protocol specifies that 88% of the images be included in the training set and 12% in the test set, with both sets maintaining the same sample per class ratio as in the original dataset. END includes a training set of 3302 images and an external validation set of 200 images.

The performance indicator typically reported on these datasets is accuracy, which measures the rate of correct classifications. For the GRAV dataset, four different views are extracted at different durations from each glitch/image; therefore, the final score is obtained by combining the four classification scores via the average rule. Validation of the superiority of one method over the others is provided by the Wilcoxon signed rank test [39].

4 Experimental results

We start our experiments by comparing the performance of the augmentation sets with ResNet50 (see Table 2), along with the results of these approaches on the following ensembles:

- EnsDA_A: this is the fusion by sum rule among all the ResNet50s trained using App1-11; each ResNet50 is trained with a different data augmentation approach. The data augmentation methods based on color spaces (App6-8) are not reported on VIR, HE, and MA since they are gray-level images.
- EnsDA_B: this fusion is the same as EnsDA_A except for the addition of ResNet50s trained with the new augmentation methods App12-14.
- EnsDA_C: this is the fusion by sum rule among those methods not based on feature transforms. Each approach is iterated twice (three times for datasets VIR, HE, and MA since they are gray-level images; they are trained three times so that the size of the ensemble EnsDA_C is similar to EnsDA_B).
- EnsBase(X): this is a baseline ensemble intended to compare/validate the performance of EnsDA_* (i.e., the three ensembles above); EnsBase(X) combines (via sum rule) X ResNet50 networks trained separately on App3, which produces the best average performance compared with all the other data augmentation sets.

The label NoDA in the first row of Table 2 is a stand-alone ResNet50 trained without data augmentation.

Several conclusions can be drawn by examining Table 2:

- The best augmentation set varies with each dataset: in some, the best approach is a feature transform (see GRAV, Triz, and END); in others, it is a color-based method (see POR); and for some, the best performance is obtained using affine transformations.
- Considering a stand-alone CNN, in some datasets (see PBC), the performance of NoDA is similar to that of the best App augmentation sets.
- In general, though, the ensembles strongly boost the performance of NoDA: both EnsBase(11) and EnsBase(14) outperform NoDA with a p value of 0.1, and all the EnsDA_* outperform NoDA with a p value of 0.001. Across all the datasets, the EnsDA_* ensembles obtain an accuracy higher than or equal to that obtained by NoDA.
- EnsDA_C outperforms EnsBase(14) with a p value of 0.1. Both EnsDA_A and EnsDA_B (which include the augmentation methods based on the feature transform approaches) outperform EnsBase(14) with a p value of 0.05. Among the different tested ensembles, our suggested approach is EnsDA_B since it obtains the highest average performance among the EnsDA_* (EnsDA_A: 93.34%, EnsDA_B: 93.54%, EnsDA_C: 92.98%, EnsBase(14): 90.76%).

The approach proposed in [40] selects only a subset of images from a larger set of built images. Here, we applied it to a large pool made up of the images created by all the methods belonging to EnsDA_B, but it produced no improvement in performance compared to EnsDA_B; for this reason, and for the sake of reducing computation time, it was tested only on a subset of the datasets.

A second experiment was performed to confirm the previous results on a different architecture. In Table 3, the same ensembles reported in Table 2 are evaluated using MobileNetV2 [41] instead of ResNet50. MobileNetV2 is a lightweight architecture that produces results comparable to heavy architectures using far fewer computational resources.

The results in Table 3 substantially confirm the conclusions drawn from Table 2, viz., the proposed approaches for data augmentation are valid methods for increasing diversity among classifiers and designing high-performing ensembles: the EnsDA_* ensembles outperform EnsBase(14) with a p value of 0.1.

Even if accuracy is probably the most used performance indicator for classification problems, it is not the most suitable for comparing classifiers. The area under the curve (AUC) is preferred as a standard measure in tests of predictive modeling performance. The AUC is an estimate of the probability that a classifier will rank a randomly chosen positive instance higher than a randomly chosen negative instance. In this work, we use the one's complement of AUC, i.e., the error under the ROC curve (EUC): EUC = 1 − AUC. Thus, in Tables 4 and 5, the performance of the proposed approaches in terms of EUC is reported. Because EUC is an indicator for binary classifiers, in multiclass problems, the average value of one-versus-all EUC is used (the rocmetrics MATLAB function has been employed). The results in Tables 4 and 5 largely reflect the trend of accuracy, namely, that the proposed ensembles based on data augmentation outperform both base ensembles and stand-alone approaches.
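
The EUC defined above can be sketched directly from the ranking interpretation of AUC. This is a plain Python illustration rather than the rocmetrics MATLAB function used in the experiments; the function names are assumptions, and tied scores are counted as half a win, which is a common convention.

```python
def auc(scores, is_positive):
    """Probability that a random positive is scored above a random negative."""
    pos = [s for s, y in zip(scores, is_positive) if y]
    neg = [s for s, y in zip(scores, is_positive) if not y]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def mean_one_vs_all_euc(score_rows, labels, n_classes):
    """Average EUC = 1 - AUC over the one-versus-all problems of each class."""
    eucs = []
    for k in range(n_classes):
        class_scores = [row[k] for row in score_rows]
        is_k = [y == k for y in labels]
        eucs.append(1.0 - auc(class_scores, is_k))
    return sum(eucs) / n_classes


# Binary example: three of the four positive/negative pairs are ranked correctly.
binary_euc = 1.0 - auc([0.9, 0.2, 0.8, 0.1], [True, True, False, False])
# binary_euc == 0.25
```

The pairwise loop is quadratic in the number of samples, which is acceptable for a sketch; rank-based formulas give the same value more efficiently on large test sets.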

The results reported in Tables 4 and 5 substantially confirm previous conclusions:

EnsDA_A and EnsDA_B outperform EnsBase(14) in terms of EUC with a p value of 0.1 in both the tested network topologies (MobileNetV2 and ResNet50). EnsDA_B obtains an average performance better than that obtained by EnsDA_A.

In Table 6, our best ensemble is compared with the best methods reported in the literature on the same datasets. As can be observed, our proposed method obtains state-of-the-art or similar performance. Note that the performance indicator is the F1-measure with the LAR dataset because that is the measure that is reported most commonly in the literature for this dataset.

In Fig. 4, we examine the disagreement among the predictions of different CNNs to evaluate their degree of diversity [58]. The cosine similarity among scores is calculated for eight networks on the POR dataset: the first five are networks trained separately on App3, whereas the last three are trained on datasets augmented by APP12, APP13, and APP14, respectively. As can be observed from Fig. 4, the dissimilarity among scores is maximized in the last three rows/columns, indicating a higher diversity among these classifiers.
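
The pairwise similarity behind this kind of diversity analysis can be sketched as follows. The sketch assumes that each network's scores over the test set are flattened into one vector; how the scores were arranged for Fig. 4 is not stated, and the function names are illustrative.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equally long score vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


def similarity_matrix(score_vectors):
    """Pairwise cosine similarity; row i, column j compares networks i and j."""
    return [[cosine_similarity(u, v) for v in score_vectors]
            for u in score_vectors]


# Hypothetical flattened scores for three networks.
matrix = similarity_matrix([[0.9, 0.1, 0.2], [0.8, 0.2, 0.3], [0.1, 0.9, 0.7]])
```

Lower off-diagonal values indicate networks whose scores disagree more, which is the property an ensemble benefits from.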

5 Conclusion

In this study, we compare combinations of pretrained ResNet50s finetuned on training sets with the addition of some of the best-performing image manipulation methods for generating new images. The performance of these networks and their fusions was compared across eleven benchmarks representing diverse image classification tasks.

This study shows that constructing ensembles of deep learners on the data level by adding images generated by different data augmentation techniques increases the robustness of CNNs. Given the breadth in the diversity of the selected benchmarks, the approach taken here for building CNN ensembles should work on most image problems.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Deng J, Dong W, Socher R, Li L, Li K, Fei-Fei L (2009) ImageNet: a large-scale hierarchical image database. In: CVPR. IEEE, Miami, pp 248–255

Shirke V, Walika R, Tambade L (2018) Drop: a simple way to prevent neural network by overfitting. Int J Res Eng Sci Manag 1(9):2581–5782

Palatucci M, Pomerleau DA, Hinton GE, Mitchell TM (2009) Zero-shot learning with semantic output codes. In: Neural information processing systems (NIPS), Vancouver, British Columbia, Canada, vol 22

Xian Y, Lampert CH, Schiele B, Akata Z (2019) Zero-shot learning-a comprehensive evaluation of the good, the bad and the ugly. IEEE Trans Pattern Anal Mach Intell 41(9):2251–2265

Shorten C, Khoshgoftaar TM (2019) A survey on image data augmentation for deep learning. J Big Data 6(60):1–48

Naveed H (2021) Survey: image mixing and deleting for data augmentation. ArXiv, https://arxiv.org/abs/2106.07085

Khosla C, Saini BS (2020) Enhancing performance of deep learning models with different data augmentation techniques: a survey. In: International conference on intelligent engineering and management (ICIEM), pp 79–85. https://doi.org/10.1109/ICIEM48762.2020.9160048

Nanni L, Paci M, Brahnam S, Lumini A (2021) Comparison of different image data augmentation approaches. J Imaging 7(12):254

Bracewell RN (1995) Two-dimensional imaging. Prentice-Hall Inc., Prentice-Hall

Mikołajczyk A, Grochowski M (2018) Data augmentation for improving deep learning in image classification problem. In: 2018 International interdisciplinary PhD workshop (IIPhDW), 9–12 May 2018, pp 117–122. https://doi.org/10.1109/IIPHDW.2018.8388338

Moreno-Barea FJ, Strazzera F, Jerez JM, Urda D, Franco L (2018) Forward noise adjustment scheme for data augmentation. In: 2018 IEEE symposium series on computational intelligence (SSCI), pp 728–734

Shijie J, Ping W, Peiyi J, Siping H (2017) Research on data augmentation for image classification based on convolution neural networks. In: Chinese Automation Congress (CAC) 2017, Jinan, CN, pp 4165–4170

Krizhevsky A (2009) Learning multiple layers of features from tiny images. University of Toronto. [Online]. Available: https://www.cs.toronto.edu/~kriz/learning-features-2009-TR.pdf

Zhong Z, Zheng L, Kang G, Li S, Yang Y (2020) Random erasing data augmentation. In: AAAI conference on artificial intelligence, New York, vol 34, no 7, pp 13001–13008

Devries T, Taylor GW (2017) Improved regularization of convolutional neural networks with cutout. ArXiv https://arxiv.org/abs/1708.04552

Inoue H (2018) Data augmentation by pairing samples for images classification. ArXiv https://arxiv.org/abs/1801.02929

Hendrycks D, Mu N, Cubuk ED, Zoph B, Gilmer J, Lakshminarayanan B (2020) AugMix: a simple data processing method to improve robustness and uncertainty. ArXiv https://arxiv.org/abs/1912.02781

Liang D, Yang F, Zhang T, Yang P (2018) Understanding mixup training methods. IEEE Access 6:58774–58783

Kang G, Dong X, Zheng L, Yang Y (2017) PatchShuffle regularization. ArXiv https://arxiv.org/abs/1707.07103

Chatfield K, Simonyan K, Vedaldi A, Zisserman A (2014) Return of the devil in the details: delving deep into convolutional nets. In: Proceedings British machine vision conference, University of Nottingham, Britain. https://doi.org/10.5244/C.28.6

Krizhevsky A, Sutskever I, Hinton GE (2012) ImageNet classification with deep convolutional neural networks. In: Bartlett PL, Pereira FCN, Burges CJC, Bottou L, Weinberger KQ (eds) Advances in neural information processing systems. Curran Associates Inc, Lake Tahoe, pp 1106–1114

Taylor L, Nitschke G (2018) Improving deep learning with generic data augmentation. In: 2018 IEEE symposium series on computational intelligence (SSCI), pp 1542–1547

Nanni L, Brahnam S, Ghidoni S, Maguolo G (2019) General purpose (GenP) bioimage ensemble of handcrafted and learned features with data augmentation. ArXiv, https://arxiv.org/abs/1904.08084

Nalepa J, Myller M, Kawulok M (2020) Training- and test-time data augmentation for hyperspectral image segmentation. IEEE Geosci Remote Sens Lett 17:292–296

Khan AM, Rajpoot N, Treanor D, Magee D (2014) A nonlinear mapping approach to stain normalization in digital histopathology images using image-specific color deconvolution. IEEE Trans Biomed Eng 61(6):1729–1738. https://doi.org/10.1109/TBME.2014.2303294

Gupta D, Choubey S (2014) Discrete wavelet transform for image processing. Int J Emerg Technol Adv Eng 4(3):598–602

Velasco GA, Holighaus N, Dörfler M, Grill T (2011) Constructing an invertible constant-Q transform with nonstationary Gabor frames. In: 14th International conference on digital audio effects (DAFx 11), Paris, France, p 33

Kylberg G, Uppström M, Sintorn I-M (2011) Virus texture analysis using local binary patterns and radial density profiles. In: Martin S, Kim S-W (eds) 18th Iberoamerican congress on pattern recognition (CIARP), Havana, Cuba, pp 573–580

Carpentier M, Giguère P, Gaudreault J (2018) Tree species identification from bark images using convolutional neural networks. In: 2018 IEEE/RSJ international conference on intelligent robots and systems (IROS), pp 1075–1081

Bahaadini S et al (2018) Machine learning for gravity spy: glitch classification and dataset. Inf Sci 444(May):172–186

Liu S, Yang J, Agaian SS, Yuan C (2021) Novel features for art movement classification of portrait paintings. Image Vis Comput 108:104121. https://doi.org/10.1016/j.imavis.2021.104121

Acevedo A, Merino A, Alférez S, Molina Á, Boldú L, Rodellar J (2020) A dataset of microscopic peripheral blood cell images for development of automatic recognition systems. Data Brief 30:105474. https://doi.org/10.1016/j.dib.2020.105474

Boland MV, Murphy RF (2001) A neural network classifier capable of recognizing the patterns of all major subcellular structures in fluorescence microscope images of HeLa cells. Bioinformatics 17(12):1213–1223

Shamir L, Orlov NV, Eckley DM, Goldberg I (2008) IICBU 2008: a proposed benchmark suite for biological image analysis. Med Biol Eng Comput 46(9):943–947

Dimitropoulos K, Barmpoutis P, Zioga C, Kamas A, Patsiaoura K, Grammalidis N (2017) Grading of invasive breast carcinoma through Grassmannian VLAD encoding. PLoS ONE 12:1–18. https://doi.org/10.1371/journal.pone.0185110

Moccia S et al (2017) Confident texture-based laryngeal tissue classification for early stage diagnosis support. J Med Imaging (Bellingham) 4(3):34502

Zhao R et al (2018) TriZ-a rotation-tolerant image feature and its application in endoscope-based disease diagnosis. Comput Biol Med 99:182–190

Sun H, Zeng X, Xu T, Peng G, Ma Y (2020) Computer-aided diagnosis in histopathological images of the endometrium using a convolutional neural network and attention mechanisms. IEEE J Biomed Health Inform 24(6):1664–1676. https://doi.org/10.1109/JBHI.2019.2944977

Demšar J (2006) Statistical comparisons of classifiers over multiple data sets. J Mach Learn Res 7:1–30

Liu Z, Jin H, Wang T-H, Zhou K, Hu X (2021) DivAug: plug-in automated data augmentation with explicit diversity maximization. In: IEEE/CVF international conference on computer vision. Virtual, pp 4762–4770

Sandler M, Howard A, Zhu M, Zhmoginov A, Chen L (2018) MobileNetV2: inverted residuals and linear bottlenecks. In: 2018 IEEE/CVF conference on computer vision and pattern recognition, 18–23 June 2018, pp 4510–4520. https://doi.org/10.1109/CVPR.2018.00474

Nanni L, Ghidoni S, Brahnam S (2021) Deep features for training support vector machines. J Imaging 7(9):177

Nanni L, Luca ED, Facin ML (2020) Deep learning and hand-crafted features for virus image classification. J Imaging 6:143

Geus AR, Backes AR, Souza JR (2020) Variability evaluation of CNNs using cross-validation on viruses images. In: VISIGRAPP. University of Malta, Malta, pp 626–632

Wen Z-J, Liu Z, Zong Y, Li B (2020) Latent local feature extraction for low-resolution virus image classification. J Oper Res Soc China 8:117–132

Backes AR, Junior JJMS (2020) Virus classification by using a fusion of texture analysis methods. In: 2020 International conference on systems, signals and image processing (IWSSIP), pp 290–295

dos Santos FLC, Paci M, Nanni L, Brahnam S, Hyttinen J (2015) Computer vision for virus image classification. Biosyst Eng 138(October):11–22

Nanni L, Ghidoni S, Brahnam S (2021) Ensemble of convolutional neural networks for bioimage classification. Appl Comput Inform 17(1):19–35. https://doi.org/10.1016/j.aci.2018.06.002

Boudra S, Yahiaoui I, Behloul A (2021) A set of statistical radial binary patterns for tree species identification based on bark images. Multimedia Tools Appl 80(15):22373–22404. https://doi.org/10.1007/s11042-020-08874-x

Remeš V, Haindl M (2019) Bark recognition using novel rotationally invariant multispectral textural features. Pattern Recognit Lett 125:612–617. https://doi.org/10.1016/j.patrec.2019.06.027

Remeš V, Haindl M (2018) Rotationally invariant bark recognition. In: Joint IAPR international workshops on statistical techniques in pattern recognition (SPR) and structural and syntactic pattern recognition (SSPR) (S+SSPR), Beijing, China, pp 22–31

Long F, Peng J-J, Song W, Xia X, Sang J (2021) BloodCaps: a capsule network based model for the multiclassification of human peripheral blood cells. Comput Methods Programs Biomed 202:105972. https://doi.org/10.1016/j.cmpb.2021.105972

Ucar F (2020) Deep learning approach to cell classification in human peripheral blood. In: 2020 5th International conference on computer science and engineering (UBMK), pp 383–387. https://doi.org/10.1109/UBMK50275.2020.9219480

Song Y, Cai W, Huang H, Feng D, Wang Y, Chen M (2016) Bioimage classification with subcategory discriminant transform of high dimensional visual descriptors. BMC Bioinform 17:465

Coelho LP et al (2013) Determining the subcellular location of new proteins from microscope images using local features. Bioinformatics 29(18):2343–2352

Zhou J, Lamichhane S, Sterne G, Ye B, Peng H (2013) BIOCAT: a pattern recognition platform for customizable biological image classification and annotation. BMC Bioinform 14:291

Shamir L, Orlov N, Eckley DM, Macura TJ, Johnston J, Goldberg IG (2008) Wndchrm—an open source utility for biological image analysis. Source Code Biol Med 3(1):13

Fort S, Hu H, Lakshminarayanan B (2019) Deep ensembles: a loss landscape perspective. arXiv preprint https://arxiv.org/abs/1912.02757

Acknowledgements

The authors wish to acknowledge the NVIDIA Corporation for supporting this research with the donation of a Titan Xp GPU and the TCSC–Tampere Center for Scientific Computing for generous computational resources.

Funding

Open access funding provided by Università degli Studi di Padova within the CRUI-CARE Agreement.

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nanni, L., Paci, M., Brahnam, S. et al. Feature transforms for image data augmentation. Neural Comput & Applic 34, 22345–22356 (2022). https://doi.org/10.1007/s00521-022-07645-z

DOI: https://doi.org/10.1007/s00521-022-07645-z