Abstract

Feedforward neural networks offer a possible approach for solving differential equations. However, the reliability and accuracy of the approximation remain delicate issues that are not fully resolved in the current literature. Computational approaches generally depend strongly on a variety of computational parameters as well as on the choice of optimisation method, a point that has to be seen together with the structure of the cost function. The intention of this paper is to take a step towards resolving these open issues. To this end, we study the solution of a simple but fundamental stiff ordinary differential equation modelling a damped system. We consider two computational approaches for solving differential equations by neural forms: the classic but still relevant method of trial solutions defining the cost function, and a recent direct construction of the cost function related to the trial solution method. The settings we study can easily be applied more generally, including to the solution of partial differential equations. By means of a very detailed computational study, we show that it is possible to identify preferable choices for parameters and methods. We also illuminate some interesting effects that are observable in the neural network simulations. Overall, we extend the current literature in the field by showing what can be done to obtain useful and accurate results with the neural network approach, and we thereby illustrate the importance of a careful choice of the computational setup.


1 Introduction

Differential equations occur in many fields of science and engineering and provide a useful description of many physical phenomena. They are usually formulated as initial or boundary value problems, where conditions at the beginning of a process or at boundary points are given in order to single out one specific solution. Their solutions are commonly approximated by numerical methods [1, 2] such as finite difference methods; neural networks have also been applied to this end, see e.g. [3,4,5].

The variety of possible neural network architectures is immense [6]. Already in classic works in the field, feedforward neural networks have proven to be useful for solving differential equations [7, 8]. Within this framework of feedforward neural networks (from now on denoted here simply as neural networks), two particular approaches have been investigated in the literature within the last decades that appear to be very promising.

The trial solution (TS) method, which we abbreviate here as TSM, has been proposed for approximating the solution of a given differential equation [9]. The TS, also called neural form in [9, 10], has to contain the neural network output and has to satisfy the given initial or boundary conditions by construction. Under these requirements, there are multiple possible forms of the TS for one differential equation. Recently, a systematic construction approach for the TS has been proposed [10]. However, as indicated in the latter work, the TS construction may become difficult to realise for complex problems. We follow here the original approach proposed in [9]. Let us note that the same TS structure is used, for example, in the recent Legendre neural network [11], so our investigation may also be useful in the context of such extensions.

In 2019, an approach was proposed that avoids constructing a TS [12], as this may be an intricate ingredient of the TSM. Because the corresponding method is motivated by and technically related to the TSM, we call it here the modified trial solution method (mTSM). Instead of building the cost function from a TS that meets the conditions imposed on a differential equation, the approximating solution function is set in [12] to be the neural network output directly. The latter does not satisfy the given initial or boundary conditions by construction as in TSM; instead, these conditions are added as additional terms in the cost function.

In the mentioned works, both TSM and mTSM have proven to be capable of solving ordinary (ODEs) and partial differential equations (PDEs) as well as systems of ODEs and PDEs. This has been demonstrated for several examples and even complex simulations, showing the potential of the methods to obtain high-quality results. This motivated us to consider in greater detail, in a first study [13], some of the computational issues that arise in the application of these methods. Let us also mention a recent complementary work in which the activation functions are the subject of a computational study [14].

Despite these promising developments, there are still many open questions related to both TSM and mTSM. First of all, the original work [12] emphasised the new construction principle and the application of the proposed method in a cosmological context. However, one may wonder about the direct comparison of TSM and mTSM in terms of the quality of results as well as the related computational aspects. Let us stress in this context that the original works [9, 10, 12] mainly describe the network architecture and elaborate on the TS and mTSM construction, but give few details on the computational characteristics of the methods. Yet it turns out that it is not trivial to define a computational framework that gives competitive results.

Our contribution. In this work, we build upon our first parameter study in [13] and extend the investigations. Following the basic line of the first work, we study the deviation between the exact solution of an ordinary differential equation and the approximations provided by TSM and mTSM. In contrast to the procedure in our previous conference paper, we extend the investigation with respect to the number of training points, and we give many more details in the evaluation. We also present additional and, as it turns out, meaningful experiments concerned with the roles of neural network weight initialisation, the number of hidden layers and the number of hidden layer neurons. We perform several experiments on the variety of parameters related to the differential equation, the neural network and the optimisation methods.

Let us stress that the number of parameters for the differential equation, neural network and optimisation is large. Our contribution in the main part of this paper is a study of the variation of (i) weight initialisation methods, (ii) number of hidden layer neurons, (iii) number of hidden layers, (iv) number of training epochs, (v) stiffness parameter and domain size, (vi) optimisation methods, and especially their mutual dependence. Let us note that it has turned out to be a non-trivial task to set up a meaningful procedure that gives an account of the latter aspect. We consider the evaluation presented here as a number of carefully chosen experiments that are in many respects related to each other.

To investigate the computational characteristics, we consider a simple yet important stiff ODE model equation [15] with damping behaviour, which allows us to study the stability and reliability of both methods. As another contribution, we present a detailed study of the influence of the stiffness parameter contained in the ODE. Let us note that a similar solution behaviour is to be expected when solving, for instance, parabolic PDEs.

2 Neural network architecture

Neural networks are usually pictured as neurons (circles) and connecting weights (lines). Figure 1 shows the standard neural network architecture for our experiments. It consists of three layers: an input layer with one neuron for \(x\in D\subset \mathbb {R}\) (where D denotes the domain) and one bias neuron, which can be considered as an offset, a hidden layer with five neurons, and an output layer with one linear neuron. In the experiments on the number of hidden layers and the number of hidden layer neurons, the architecture is extended. Each neuron is connected to every neuron in the next layer by the weights \(w_j\), \(u_j\) and \(v_j\), \(j=1,\ldots ,5\), which are stored in the weight vector \(\mathbf {p}\). The input layer passes the domain data x, weighted by \(w_j\) and \(u_j\), to the hidden layer for processing. The processed data are then weighted by \(v_j\) and sent to the output layer to generate the neural network output \(N(x,\mathbf {p})\). In detail, the hidden layer receives the weighted sum \(z_j=w_jx+u_j\) as input and processes it by the sigmoid activation function \(\sigma _j=\sigma (z_j)=1/(1+e^{-z_j})\). Since the output layer consists of a linear neuron, the neural network output is generated by the linear combination

\[N(x,\mathbf {p})=\sum _{j=1}^{5}v_j\sigma _j.\]

The sigmoid activation function is a continuous and arbitrarily often differentiable function with values between 0 and 1.
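As a minimal sketch of this forward pass, the following Python snippet evaluates the weighted sums \(z_j\), the sigmoid activations and the linear output neuron. It assumes that the output is the plain sum of \(v_j\sigma _j\) without an additional output bias; the function names and the example weights are illustrative only.

```python
import math


def sigmoid(z):
    """Sigmoid activation with values between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def network_output(x, w, u, v):
    """N(x, p) = sum_j v_j * sigmoid(w_j * x + u_j) for one hidden layer."""
    return sum(vj * sigmoid(wj * x + uj) for wj, uj, vj in zip(w, u, v))


# Five hidden layer neurons with illustrative weights.
w = [0.1, -0.2, 0.3, 0.0, 0.5]
u = [0.0] * 5
v = [1.0] * 5

# At x = 0 every z_j equals u_j = 0, so each neuron returns sigmoid(0) = 0.5.
print(network_output(0.0, w, u, v))  # 2.5
```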

Let us note that in order to solve differential equations of order n with neural networks, it is important to choose an activation function that is at least \((n+1)\) times continuously differentiable, since the solution approaches presented later require the n-th derivative of the activation function and the optimisation methods require one further differentiation.
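For the sigmoid function, these derivatives are available in closed form. As a short worked computation, writing \(\sigma =\sigma (z)\) and differentiating with respect to z gives

\[\sigma '=\sigma (1-\sigma ),\qquad \sigma ''=\sigma '(1-2\sigma )=\sigma (1-\sigma )(1-2\sigma ).\]

For a first-order ODE (\(n=1\)), \(\sigma '\) enters through the derivative of the network output with respect to x, and \(\sigma ''\) through the additional differentiation with respect to the weights.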

The universal approximation theorem [16] states that one hidden layer with a finite number of sigmoidal activation functions is able to approximate every continuous function on a compact subset of \(\mathbb {R}\) to arbitrary accuracy.

In general, it is common to initialise the weights with small random values [17]; therefore, the first computation of \(N(x,\mathbf {p})\) returns a random value. This value is used to compute the cost or loss function \(E[\mathbf {p}]\), which is then subject to optimisation. Because this first output is random, several computations started with exactly the same computational parameters (but random weight initialisation) produce different weight updates and hence different results.

Another option is to keep the initialisation constant across several computations. In this case, \(N(x,\mathbf {p})\) initially always returns the same value, the weight updates are identical as well, and computations with the same parameter setting return identical results.

For supervised learning, where both input data \(x_i\) (representing the discrete domain or grid) and correct output data \(d_i,~i=1,\ldots ,n\), are known, the cost function may be chosen as the squared \(l_2\)-norm of the difference between the network outputs \(N(x_i,\mathbf {p})\) and the data \(d_i\). In the case of unsupervised learning, where no correct output data are known, the cost function is part of the modelling process. Our approach follows the latter track.

3 Solution approaches

In this section, we describe the trial solution construction for TSM and mTSM in more detail, as well as how neural networks are used to solve ordinary differential equations (ODEs) of the form

\[G\left( x,u(x),\frac{\mathrm{d}}{\mathrm{d}x}u(x)\right) =0 \tag{1}\]

with given initial or boundary conditions. In Eq. (1), u(x) denotes the exact solution function with x as the independent variable. Although Eq. (1) is a first-order ODE, let us note again that it is also possible to solve higher-order ordinary or partial differential equations (PDEs), as well as systems of ODEs or PDEs, cf. [9, 12].

3.1 Trial solution method (TSM)

Let us now recall the approach from [9]. In order to satisfy the initial or boundary conditions by construction, the TS is written as a sum of two terms

\[u_t(x,\mathbf {p})=A(x)+B(x)N(x,\mathbf {p}). \tag{2}\]

In Eq. (2), A(x) is supposed to satisfy the initial or boundary conditions at the initial or boundary points, while B(x) is constructed to become zero at these points in order to eliminate the impact of \(N(x,\mathbf {p})\) there. Consequently, the TS may be defined in many possible forms for one differential equation, all satisfying the mentioned conditions. In particular, the choice of B(x) determines the impact of \(N(x,\mathbf {p})\) over the domain. Now, the TS transforms Eq. (1) into

\[G\left( x,u_t(x,\mathbf {p}),\frac{\partial }{\partial x}u_t(x,\mathbf {p})\right) =0, \tag{3}\]

so that the partial derivative of the trial solution with respect to the input x, which we have to consider, is

\[\frac{\partial }{\partial x}u_t(x,\mathbf {p})=A'(x)+B'(x)N(x,\mathbf {p})+B(x)\frac{\partial }{\partial x}N(x,\mathbf {p})\]

with

\[\frac{\partial }{\partial x}N(x,\mathbf {p})=\sum _{j=1}^{5}v_jw_j\sigma '(z_j).\]

In order to generate training points for the neural network, we discretise the domain D by a uniform grid with n grid points \(x_i\). Over this discrete domain, Eq. (3) is now solved by an unconstrained optimisation problem using the cost function

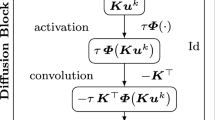

3.2 Modified trial solution method (mTSM)

This method, proposed in [12], introduces

\[u_t(x,\mathbf {p})=N(x,\mathbf {p})\]

as a TS directly for all differential equations. Therefore, \(u_t\) does not satisfy initial or boundary conditions by construction as in Eq. (2); rather, these conditions appear in the cost function as additional terms

where \(K(x_m),~m=1,\ldots ,l\), denote the initial or boundary conditions.

The modelled cost function is now subject to optimisation with respect to the adjustable neural network weights \(\mathbf {p}\).

4 Optimisation

For cost function minimisation, we use first-order methods based on gradient descent. A commonly employed, simple optimisation technique is backpropagation, which uses the gradient of the cost function with respect to the neural network weights to determine their influence on \(N(x,\mathbf {p})\) and to update them. It is well known that backpropagation makes it possible to find a local minimum in the weight space. The training is usually done several times with all training points. One complete pass through all input data constitutes one epoch of training, and for efficient training (finding a minimum in the weight space), several epochs are performed. For the kth epoch, backpropagation with the momentum update rule [18] reads

\[\mathbf {p}(k+1)=\mathbf {p}(k)-\alpha \frac{\partial E[\mathbf {p}(k)]}{\partial \mathbf {p}(k)}+\beta \varDelta \mathbf {p}(k-1), \tag{4}\]

where \(\varDelta \mathbf {p}(k-1)=\mathbf {p}(k)-\mathbf {p}(k-1)\) denotes the weight update of the previous epoch.

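Since only the neural network output \(N(x,\mathbf {p})\) and its derivative with respect to x depend on \(\mathbf {p}\), and as both expressions are given explicitly, the corresponding derivatives used in the gradient of the cost function are computed as

\[\frac{\partial N}{\partial v_j}=\sigma _j,\quad \frac{\partial N}{\partial w_j}=v_j\sigma '_jx,\quad \frac{\partial N}{\partial u_j}=v_j\sigma '_j\]

and

\[\frac{\partial ^2N}{\partial v_j\partial x}=w_j\sigma '_j,\quad \frac{\partial ^2N}{\partial w_j\partial x}=v_j\sigma '_j+v_jw_j\sigma ''_jx,\quad \frac{\partial ^2N}{\partial u_j\partial x}=v_jw_j\sigma ''_j,\]

where \(\sigma '_j\) and \(\sigma ''_j\) denote the first and second derivative of \(\sigma \) evaluated at \(z_j=w_jx+u_j\).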

The momentum term in Eq. (4), with momentum parameter \(\beta \), reuses the weight update of the previous epoch to reduce the chance of getting stuck too early during training in a local minimum or at a saddle point.
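A momentum update of this kind can be sketched in a few lines of Python. The sketch assumes the common form in which the new update is the scaled negative gradient plus \(\beta \) times the previous update; the function name and the toy quadratic cost are illustrative and not taken from our implementation.

```python
def momentum_step(p, grad, prev_delta, alpha=1e-3, beta=0.9):
    """One update: delta = -alpha * grad + beta * prev_delta, p_new = p + delta."""
    delta = [-alpha * g + beta * d for g, d in zip(grad, prev_delta)]
    new_p = [pi + di for pi, di in zip(p, delta)]
    return new_p, delta


# Toy cost E(p) = 0.5 * sum(p_i^2), whose gradient is simply p.
p = [1.0, -2.0]
delta = [0.0, 0.0]
for _ in range(5000):
    p, delta = momentum_step(p, p, delta)

print(p)  # both entries are very close to the minimiser 0
```

The default values for `alpha` and `beta` correspond to the cBP setting used in the experiments.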

The learning rate \(\alpha \) is, in general, a scaling factor for the gradient and has a major influence on the update. A very basic approach is to choose \(\alpha \) as a constant learning rate (cBP). In order to prevent the optimiser from oscillating around a minimum, one may employ a variable learning rate (vBP) as an alternative. Different approaches for learning rate control exist [19]; we opt to employ the linear decreasing model

with an initial learning rate \(\alpha _0\), a final learning rate \(\alpha _e\) and an epoch cap \(k_c\).
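One linear decreasing schedule consistent with these three parameters can be sketched as follows. The sketch assumes that the learning rate falls linearly from \(\alpha _0\) at epoch 0 to \(\alpha _e\) at epoch \(k_c\) and stays at \(\alpha _e\) afterwards; the function name is illustrative, and the default values are the vBP parameters used in the experiments.

```python
def linear_learning_rate(k, alpha_0=1e-2, alpha_e=1e-3, k_c=10_000):
    """Learning rate for epoch k under a linear decrease capped at epoch k_c."""
    if k >= k_c:
        return alpha_e
    return alpha_0 + (alpha_e - alpha_0) * k / k_c


for k in (0, 5_000, 10_000, 50_000):
    print(k, linear_learning_rate(k))
```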

In our experiments, we also consider Adam (adaptive moment estimation) which is an adaptive optimisation method. It uses estimations of first (mean) and second (uncentred variance) moments of the gradient, see [20] for details. An advantage of Adam is the potential for achieving rapid training speed. While backpropagation scales the gradient uniformly in every direction in weight space (by \(\alpha \)), Adam computes an individual learning rate for every weight.
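The per-weight scaling can be illustrated by a single Adam step. The following is a minimal sketch of the standard Adam update with bias correction, using the parameter values employed in the experiments; the function name and the example gradient are illustrative.

```python
import math


def adam_step(p, grad, m, v, k, alpha=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for epoch k (k starts at 1); returns new p, m and v."""
    m = [beta1 * mi + (1.0 - beta1) * g for mi, g in zip(m, grad)]
    v = [beta2 * vi + (1.0 - beta2) * g * g for vi, g in zip(v, grad)]
    # Bias-corrected estimates of the first and second moments.
    m_hat = [mi / (1.0 - beta1 ** k) for mi in m]
    v_hat = [vi / (1.0 - beta2 ** k) for vi in v]
    p = [pi - alpha * mh / (math.sqrt(vh) + eps)
         for pi, mh, vh in zip(p, m_hat, v_hat)]
    return p, m, v


# Two weights whose gradients differ by two orders of magnitude.
p, m, v = adam_step([0.0, 0.0], [10.0, -0.1], [0.0, 0.0], [0.0, 0.0], k=1)
print(p)  # both weights move by roughly alpha, in opposite directions
```

In this first step, both weights change by approximately \(\alpha \) in magnitude although their gradients differ by a factor of 100, whereas plain gradient descent would scale both updates by the same \(\alpha \).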

5 Experiments and results

For experiments on both solution approaches with different parameter variations, as well as optimisation with Adam and backpropagation, we make use of the model problem

\[u'(x)=\lambda u(x),\quad u(0)=1, \tag{5}\]

a homogeneous first-order ordinary differential equation with \(\lambda \in \mathbb {R}\), \(\lambda <0\). The ODE (5) has the exact solution \(u(x)=e^{\lambda x}\) and represents a simple model for stiff phenomena involving a damping mechanism.

The numerical error \(\varDelta u\) shown in subsequent diagrams is defined as the \(l_1\)-norm of the difference between the exact solution and the corresponding trial solution, evaluated at the grid points.
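A minimal sketch of such an error measure is given below. It assumes a plain sum of absolute differences over the grid points; whether the norm is additionally scaled by the number of grid points is not fixed by this sketch, and the function names are illustrative.

```python
import math


def exact_solution(x, lam=-5.0):
    """Exact solution u(x) = exp(lambda * x) of the model problem."""
    return math.exp(lam * x)


def delta_u(grid, trial_solution, lam=-5.0):
    """l1-norm of the difference between exact and trial solution on the grid."""
    return sum(abs(exact_solution(x, lam) - trial_solution(x)) for x in grid)


# Uniform grid with 10 points on [0, 2] (end points included by assumption).
grid = [2.0 * i / 9 for i in range(10)]
print(delta_u(grid, lambda x: math.exp(-5.0 * x)))  # 0.0 for the exact solution
```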

With \(u_t(x,\mathbf {p})=1+xN(x,\mathbf {p})\) we take the form of the trial solution for TSM proposed in [9] to construct the cost function

For mTSM the trial solution \(u_t(x,\mathbf {p})=N(x,\mathbf {p})\) results in the cost function
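For the model problem, both cost functions can be sketched in a few lines of Python. The sketch assumes the initial condition \(u(0)=1\) implied by the TSM trial solution, a squared \(l_2\)-norm of the ODE residual with a factor 1/2, a uniform grid that includes both end points, and one hidden layer of five sigmoid neurons. The function names are illustrative and do not reflect the Fortran implementation.

```python
import math

LAMBDA = -5.0  # stiffness parameter of the model problem


def net(x, w, u, v):
    """Return N(x, p) and dN/dx for one hidden layer of sigmoid neurons."""
    n = dn = 0.0
    for wj, uj, vj in zip(w, u, v):
        s = 1.0 / (1.0 + math.exp(-(wj * x + uj)))
        n += vj * s
        dn += vj * wj * s * (1.0 - s)  # sigmoid' = s * (1 - s)
    return n, dn


def cost_tsm(grid, w, u, v, lam=LAMBDA):
    """TSM: u_t = 1 + x * N satisfies u_t(0) = 1 by construction."""
    total = 0.0
    for x in grid:
        n, dn = net(x, w, u, v)
        u_t = 1.0 + x * n
        du_t = n + x * dn
        total += (du_t - lam * u_t) ** 2
    return 0.5 * total


def cost_mtsm(grid, w, u, v, lam=LAMBDA, x0=0.0, u0=1.0):
    """mTSM: u_t = N, with the initial condition as an additional term."""
    total = 0.0
    for x in grid:
        n, dn = net(x, w, u, v)
        total += (dn - lam * n) ** 2
    n0, _ = net(x0, w, u, v)
    return 0.5 * total + 0.5 * (n0 - u0) ** 2


n_points = 10
grid = [2.0 * i / (n_points - 1) for i in range(n_points)]
zeros = [0.0] * 5
print(cost_tsm(grid, zeros, zeros, zeros))   # 125.0
print(cost_mtsm(grid, zeros, zeros, zeros))  # 0.5
```

With all weights set to zero, the TSM residual equals \(-\lambda \) at every grid point, whereas the mTSM cost reduces to the initial condition term alone.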

In subsequent experiments, we study \(\varDelta u\) with respect to several meaningful variations of computational parameters.

The main parameters and abbreviations of the computational settings are defined as in Table 1. We use our own Fortran implementation for the neural network, the solution approaches and the optimiser, by following the proposed methods in the corresponding papers, without the use of deep learning libraries. Therefore, we have total control over the computations and are able to perform investigations related to every aspect of the methods and the code.

In most subsequent experiments, we used cBP instead of vBP to reduce the number of parameters. The learning rate for cBP is \(\alpha =1\)e-3 with \(\beta =9\)e-1. Only in the optimiser comparison does vBP appear, with \(\alpha _0=1\)e-2, \(\alpha _e=1\)e-3, \(k_c=1\)e4 and again \(\beta =9\)e-1. The Adam parameters are, as employed in [20], \(\alpha =1\)e-3, \(\beta _1=9\)e-1, \(\beta _2=9.99\)e-1 and \(\epsilon =1\)e-8. In addition, some experiments show averaged graphs in order to reveal the general trend with a reduced influence of fluctuations. Unless stated otherwise in the subsequent experiments, the computational parameters are fixed to one hidden layer, five hidden layer neurons, a maximal number of epochs \(k_{\mathrm{max}}=1\)e5, domain data \(x\in [0,2]\) and stiffness parameter \(\lambda =-5\).

Concerning the following experiments, let us stress again that these are not considered to be separate or independent of each other. We will consequently follow a line of argumentation that enables us (i) to reduce step by step the degrees of freedom in the choice of computational settings, and (ii) to clarify the influence of individual computational parameters. In doing this, we also demonstrate how to achieve tractable results. We consider this as an important part of our work since this makes the whole approach more meaningful.

5.1 Experiment 1: weight initialisation

This experiment illustrates differences between the two weight initialisation methods, employing either \(\mathbf {p}^{\mathrm{init}}_{\mathrm{const}}\) or \(\mathbf {p}^{\mathrm{init}}_{\mathrm{rnd}}\). Each point in the graph depicting \(\overline{\varDelta u}_{\mathrm{rnd}}\) is an average over 100 iterations; that is, for each value given on the lower axis we perform 100 computations whose initialisations are random perturbations, with random numbers in a range of 1e-2, around that value. The averaging is important to mention because every iteration with \(\mathbf {p}^{\mathrm{init}}_{\mathrm{rnd}}\), even with exactly the same parameter setup, is expected to return a different result.

Fig. 2 Experiment 5.1. Weight initialisation variation, (orange/solid) \(\varDelta u_{\mathrm{const}}\), (blue/dotted) \(\overline{\varDelta u}_{\mathrm{rnd}}\)

Let us first comment on our choice of \(\mathbf {p}^{\mathrm{init}}_{\mathrm{const}}\). Evidently, one has to choose here some fixed value, and by further experiments not documented here in detail, the value zero appears to be a suitable generic choice for mTSM.

Let us now consider the experiments documented in Fig. 2. In general, TSM with both cBP and Adam (see illustrations (a)–(f)) does not return helpful results for \(\mathbf {p}^{\mathrm{init}}_{\mathrm{const}}\) with the current parameter setup. All experiments for TSM with \(\mathbf {p}^{\mathrm{init}}_{\mathrm{const}}\) give here uniformly a very high error (depicted by orange/solid lines), even when increasing the number of training points.

Turning to mTSM, the clearly best results for \(\mathbf {p}^{\mathrm{init}}_{\mathrm{const}}\), in terms of the largest stable region, are obtained with Adam and \(ntD=40\). All experiments for mTSM with \(\mathbf {p}^{\mathrm{init}}_{\mathrm{const}}\) show that the Adam solver behaves desirably, virtually independently of the number of training points.

When considering \(\mathbf {p}^{\mathrm{init}}_{\mathrm{rnd}}\), the Adam solver also gives reasonable results for TSM in terms of the numerical error, with a large stable region. A similar but less clear error behaviour can be observed when using Adam with mTSM. As a general trend in all experiments with \(\mathbf {p}^{\mathrm{init}}_{\mathrm{rnd}}\), we observe that weight initialisation in a small range around zero seems to work best.

Regarding illustrations (j)–(l), we observe that both \(\mathbf {p}^{\mathrm{init}}_{\mathrm{const}}\) and \(\mathbf {p}^{\mathrm{init}}_{\mathrm{rnd}}\) around zero seem to work reasonably well with cBP. One may conjecture that for other example ODEs there could likewise be some constant initialisation, and a range of random fluctuations around it, that works well.

Since a suitable choice of \(\mathbf {p}^{\mathrm{init}}_{\mathrm{const}}\) and \(\mathbf {p}^{\mathrm{init}}_{\mathrm{rnd}}\) is important in all subsequent experiments, we decided as a consequence of the experiments discussed here to initialise \(\mathbf {p}^{\mathrm{init}}_{\mathrm{const}}\) with zeros and \(\mathbf {p}^{\mathrm{init}}_{\mathrm{rnd}}\) with random values in range of 0 to 1e-2 from now on.
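The two initialisation variants chosen here can be sketched as follows. The sketch assumes a uniform distribution for the random values and 15 weights for the standard architecture (five each of \(w_j\), \(u_j\) and \(v_j\)); the function names and the optional seed are illustrative.

```python
import random


def init_const(n_weights, value=0.0):
    """Constant initialisation: every weight starts at the same value."""
    return [value] * n_weights


def init_rnd(n_weights, upper=1e-2, seed=None):
    """Random initialisation with values drawn uniformly from [0, upper]."""
    rng = random.Random(seed)
    return [rng.uniform(0.0, upper) for _ in range(n_weights)]


p_const = init_const(15)
p_rnd = init_rnd(15, seed=1)
print(p_const[:3], p_rnd[:3])
```

Fixing the seed makes a random initialisation reproducible, which turns it into a constant initialisation in the sense used above.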

5.2 Experiment 2: number of hidden layer neurons

The behaviour of \(\varDelta u_{\mathrm{const}}\) and \(\overline{\varDelta u}_{\mathrm{rnd}}\) when increasing the number of hidden layer neurons is subject to this experiment, where \(\overline{\varDelta u}_{\mathrm{rnd}}\) is averaged over 100 computations for every tested number of hidden layer neurons.

Fig. 3 Experiment 5.2. Number of hidden layer neurons variation, (orange/solid) \(\varDelta u_{\mathrm{const}}\), (blue/dotted) \(\overline{\varDelta u}_{\mathrm{rnd}}\)

There is almost no difference between the experiments for TSM in Fig. 3a–f; they all show a similar saturating behaviour. As discussed in the previous experiment, we have to focus here on the random initialisation, and for this setup we observe desirable results for about five or more neurons.

Turning to mTSM, a higher number of hidden layer neurons leads to an increase in accuracy with Adam for the largest number of training points explored here (\(ntD=40\)). For smaller numbers of training points (\(ntD=10,20\)), we observe that the number of hidden layer neurons, and thus the degrees of freedom introduced by the neural network, should be kept relatively small, e.g. about half the number of training points.

For cBP, the saturation value of the error is also affected by increasing the number of hidden layer neurons. The general trend for \(\overline{\varDelta u}_{\mathrm{rnd}}\) is that slightly higher accuracy is obtained in this way and that the saturation level is already visible when using a small number of neurons.

Generally in all cases, one can clearly observe the benefit of introducing two to three or more neurons, as this leads to a significant drop in all computed numerical errors.

As a consequence of these investigations, we employ five hidden layer neurons in the other experiments (note that this setting has also been used in the previous experiment) as this appears to be justified by the stable solutions and the amount of computational time.

5.3 Experiment 3: number of hidden layers

In order to focus on the impact of the number of hidden layers, we keep the number of neurons in the hidden layers constant, employing five neurons plus an additional bias neuron in each layer. As in previous experiments, \(\overline{\varDelta u}_{\mathrm{rnd}}\) is averaged over 100 iterations.

Results in Table 2 show that one hidden layer is not always enough to provide useful results, especially for \(\varDelta u_{\mathrm{const}}\) and TSM. Increasing the number of training points (ntD) changes the number of hidden layers that give the best approximation in some cases, but it does not seem to have in general a highly beneficial influence.

Turning to the most important aspect of this experiment, one has to distinguish the effect of increasing the number of hidden layers for the individual methods mTSM and TSM. For mTSM, we find that one or two layers are sufficient to obtain, together with Adam optimisation, accurate and convenient results. For TSM, our study shows a very different result, namely that each increase in the number of hidden layers up to about three or four yields a gain of roughly one order of magnitude in accuracy, for both \(\mathbf {p}^{\mathrm{init}}_{\mathrm{const}}\) and \(\mathbf {p}^{\mathrm{init}}_{\mathrm{rnd}}\).

The latter result is to some degree surprising, as the universal approximation theorem suggests that one hidden layer could be enough to give an accurate approximation of our solution function. Let us recall in this context Experiment 5.2, where we have seen that increasing the number of neurons in one hidden layer leads to a saturation in the accuracy for \(\mathbf {p}^{\mathrm{init}}_{\mathrm{rnd}}\), while we observe a clear improvement here. Increasing the number of neurons and using \(\mathbf {p}^{\mathrm{init}}_{\mathrm{const}}\) did not lead to reasonable results there, whereas \(\mathbf {p}^{\mathrm{init}}_{\mathrm{const}}\) in combination with more hidden layers gives good results and a significant improvement in the current study.

As a consequence of this investigation, we decided to use one hidden layer for all computations in the other experiments, having in mind that TSM may allow an accuracy gain for more hidden layers.

5.4 Experiment 4: number of epochs

In this experiment, we investigate whether it is possible to fix the maximal number of training epochs to a convenient value. This relates to the question of whether one could bound the computational load by employing, in general, a small number of training cycles. To this end, we consider the convergence of the training as a function of an increasing maximal number of epochs \(k_{\mathrm{max}}\). In addition, we illuminate the influence of the number of training points.

Fig. 4 Experiment 5.4. Number of maximal epochs variation, (orange/solid) \(\varDelta u_{\mathrm{const}}\), (blue/dotted) \(\overline{\varDelta u}_{\mathrm{rnd}}\)

More precisely, we increased \(k_{\mathrm{max}}\) from 1 to 1e5 and averaged over 100 iterations for each value of \(k_{\mathrm{max}}\). In other words, and to make clear the meaning of the lower axis in Fig. 4, each entry \(k_{\mathrm{max}}\) corresponds to 100 complete optimisations of the neural network. Let us note again that in the case of \(\mathbf {p}^{\mathrm{init}}_{\mathrm{rnd}}\), the convergence behaviour can only be evaluated by average values, and that each computation was done with a new \(\mathbf {p}^{\mathrm{init}}_{\mathrm{rnd}}\).

As can be seen in Fig. 4, the best results are returned by mTSM with Adam for both \(\mathbf {p}^{\mathrm{init}}_{\mathrm{rnd}}\) (especially \(ntD=20\)) and \(\mathbf {p}^{\mathrm{init}}_{\mathrm{const}}\) (especially \(ntD=40\)). Except for TSM and \(ntD=10\), the Adam optimiser clearly reaches a saturation regime, showing convergence for TSM and mTSM with \(\mathbf {p}^{\mathrm{init}}_{\mathrm{rnd}}\). For cBP, \(\varDelta u_{\mathrm{const}}\) and \(\overline{\varDelta u}_{\mathrm{rnd}}\) may still decrease for even higher \(k_{\mathrm{max}}\) than evaluated here. Note, however, that we employed a constant learning rate in cBP; with a decreasing learning rate, as often used for training, a saturation regime may be expected. With Adam, \(\overline{\varDelta u}_{\mathrm{rnd}}\) fluctuates slightly in the convergence regime, so that results for non-averaged computations with \(\mathbf {p}^{\mathrm{init}}_{\mathrm{rnd}}\) may not be satisfactory. The cBP optimiser together with both TSM and mTSM shows only very minor fluctuations, but also provides less accurate approximations, although these tend to improve with higher ntD.

In the context of our results, let us note that in [12] the authors employed 5e4 epochs. Our investigation suggests that the corresponding results can be expected to lie in the convergence regime.

In conclusion, we find that \(k_{\mathrm{max}}\)=1e5 as used for all other experiments is suitable to obtain useful approximations.

5.5 Experiment 5: stiffness parameter \(\lambda \) (part 1) and domain size of D (part 2)

Let us now investigate the solution behaviour with respect to interesting choices of the stiffness parameter \(\lambda \). It turns out that it makes sense to do this together with an investigation of the domain size of D. Informally speaking, both parameters affect the general trend of the exact solution in a similar way, so that from this point of view it also appears natural to evaluate them together in one experiment.

Fig. 5 Experiment 5.5 (part 1). Stiffness parameter \(\lambda \) variation, (orange/solid) \(\varDelta u_{\mathrm{const}}\), (blue/dotted) \(\overline{\varDelta u}_{\mathrm{rnd}}\)

Fig. 6 Experiment 5.5 (part 2). Domain size variation, (orange/solid) \(\varDelta u_{\mathrm{const}}\), (blue/dotted) \(\overline{\varDelta u}_{\mathrm{rnd}}\)

The second part of this experiment, shown in Fig. 6, examines the influence of different domains with increasing ntD. The intervals used for the computations are given in terms of \(x\in [0,x_{\mathrm{end}}]\), with the smallest interval being \(x\in [0,5\mathrm{e}-2]\) and \(x_{\mathrm{end}}\) then increasing in steps of \(5\mathrm{e}-2\). As in the first part of the experiment, \(\overline{\varDelta u}_{\mathrm{rnd}}\) is averaged over 100 iterations for each domain.

Turning to the results, we first point out that for TSM, cBP and \(ntD=20\), some values are displayed as \(\overline{\varDelta u}_{\mathrm{rnd}}=9\mathrm{e}0\) purely for visualisation. These values were actually Not a Number (NaN), which means that at least one of the 100 averaged iterations diverged for small values of \(\lambda \) in Fig. 5c or for large domains.

Furthermore, the solution accuracy for TSM and cBP decreases monotonically for smaller \(\lambda \) and larger domains until it saturates in unstable regions. While some iterations diverged when increasing the number of training points from \(ntD=10\) to \(ntD=20\), a further increase to \(ntD=40\) enlarges the unstable region, with a stabilisation in between.

In total, we observe that there seems to be a relation between the two parts of the experiment, which one may roughly formulate as a relation between \(\lambda \) and the domain size given by \(x_{\mathrm{end}}\) with a factor of \(-2\). We also conjecture that the higher the values of \(-\lambda \) and \(x_{\mathrm{end}}\), the more neurons or layers are required for a convenient solution. As a consequence of these experiments, we decided to fix \(\lambda =-5\) and \(x\in [0,2]\) for all computations in the other experiments.

5.6 Experiment 6: optimisation methods

The final experiment in this paper compares Adam, cBP and vBP optimisation for TSM and mTSM, depending on ntD=10,20,40 with the other computational parameters fixed to one hidden layer, five hidden layer neurons, \(k_{\mathrm{max}}=1\)e5, \(\lambda =-5\) and \(x\in [0,2]\). Figures 7 and 8 show 1e5 (non-averaged) computed results for each parameter setup and weight initialisation.

Fig. 7 Experiment 5.6. Optimiser comparison (part 1), (orange/solid) \(\varDelta u_{\mathrm{const}}\), (blue/dotted) \(\varDelta u_{\mathrm{rnd}}\)

Fig. 8 Experiment 5.6. Optimiser comparison (part 2), (orange/solid) \(\varDelta u_{\mathrm{const}}\), (blue/dotted) \(\varDelta u_{\mathrm{rnd}}\)

Previous experiments led to the conclusion that TSM in combination with \(\mathbf {p}^{\mathrm{init}}_{\mathrm{const}}\) only provides unstable solutions for the chosen parameter setup. Therefore, when evaluating TSM, we will only refer to the non-averaged numerical error \(\varDelta u_{\mathrm{rnd}}\) for \(\mathbf {p}^{\mathrm{init}}_{\mathrm{rnd}}\).

Starting the evaluation with TSM and Adam, there are almost no visible differences between \(ntD=10\) and \(ntD=40\); in both cases, the best and the worst approximation differ considerably, see the first row in Fig. 7. Only for \(ntD=20\) do the solutions tend to be more similar.

In contrast, the difference between the best and the worst approximation for TSM and cBP grows by one order of magnitude with a higher number of training points, while the accuracy of the best approximations increases at the same time, cf. the second row in the figure.

The reason we show results on vBP only in this final experiment (see third row in the figure) is that the efficiency of an adaptive step size method may in general depend strongly on the step size model and parameters used. Nevertheless, the results turn out to be interesting. In combination with \(ntD=10\), vBP and TSM reveal several minima far away from the best approximation. Even more minima appear when the number of training points is increased to \(ntD=20\). However, a further increase to \(ntD=40\) stabilises the solutions. In addition, \(ntD=40\) provides the best approximations for TSM and vBP. One may conjecture that either a critical number of training points has to be reached, or that the weight initialisation is not adequate in combination with lower ntD.

Now, we turn to mTSM and Adam, see first row in Fig. 8. We find that \(\mathbf {p}^{\mathrm{init}}_{\mathrm{const}}\) shows useful results (\(\varDelta u_{\mathrm{const}}\)) and a small gain in accuracy for higher ntD. For \(\mathbf {p}^{\mathrm{init}}_{\mathrm{rnd}}\), the best approximations throughout the whole experiment are provided by \(ntD=10\). However, most of the 1e5 computed results cluster around a lower (but still reasonable) accuracy, with only a few results peaking further in accuracy. Increasing the number of training points to \(ntD=20\) and \(ntD=40\) results in a loss of accuracy relative to the former best solutions, while overall the results become more similar.

For mTSM and cBP, see second row in the figure, \(\varDelta u_{\mathrm{const}}\) behaves similarly to the case of mTSM and Adam. The solutions become slightly more accurate and more similar with higher ntD. However, neither weight initialisation method can compete with the combination of mTSM and Adam.

Now for mTSM and vBP, as displayed in the last row of the figure, we find stable results for all ntD, which is in sharp contrast to TSM and vBP. Again, \(\varDelta u_{\mathrm{const}}\) behaves as in the other computations for mTSM, and we find similarities in the overall behaviour of \(\varDelta u_{\mathrm{rnd}}\) compared to TSM and cBP. Increasing ntD leads to slightly better approximations, while the difference between the best and the worst approximation grows.

Turning to Table 3, we now discuss the statistical quantities for \(\mathbf {p}^{\mathrm{init}}_{\mathrm{rnd}}\), related to the results shown in Figs. 7 and 8. We focus on the results for random weight initialisation, since the diagrams have shown that the constant weight initialisation always returns the same numerical error. The 1e5 complete computations (optimisations) should lend sufficient statistical support to the analysed data.

Regarding the mean value, Adam attains the smallest values overall and seems to be the best choice. However, for TSM and \(ntD=40\), cBP almost equals Adam, and vBP even outperforms Adam in this specific setting. That result is particularly interesting, since vBP yields only very limited approximations for TSM with both \(ntD=10\) and \(ntD=20\). In contrast to TSM, Adam attains the lowest mean value for mTSM without exception.

This statement, however, holds only partially when it comes to the standard deviation. Here, TSM seems to favour cBP over Adam, with vBP for \(ntD=40\) again attaining an even lower value. Excluding vBP for \(ntD= 10\) and \(ntD=20\), the standard deviations in the other cases are in an acceptable range. That is, the mean value and standard deviation could be suitable for evaluating stability and reliability. However, it can be difficult to specify the term reliability. The lower the numerical error, the better the approximation. Nonetheless, defining a threshold needs justification and a discussion of how the neural network methods behave compared to standard numerical algorithms like Runge–Kutta.
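To give a rough idea of what a reference accuracy from a standard method could look like, the following sketch applies the classical fourth-order Runge–Kutta scheme on a uniform grid. It assumes, purely for illustration, the linear test equation \(u'(x)=\lambda u(x)\), \(u(0)=1\) with exact solution \(e^{\lambda x}\); the model ODE itself is defined earlier in the paper and is not restated here. The function name `rk4_max_error` and the step counts are hypothetical and are not meant to correspond one-to-one to the number of training points ntD.

```python
import math


def rk4_max_error(lam=-5.0, x_end=2.0, n_steps=10):
    """Maximum grid error of classical RK4 for the assumed problem u' = lam*u, u(0) = 1."""
    h = x_end / n_steps

    def f(x, u):
        # Right-hand side of the assumed linear test equation
        return lam * u

    x, u = 0.0, 1.0
    max_err = 0.0
    for _ in range(n_steps):
        k1 = f(x, u)
        k2 = f(x + 0.5 * h, u + 0.5 * h * k1)
        k3 = f(x + 0.5 * h, u + 0.5 * h * k2)
        k4 = f(x + h, u + h * k3)
        u += h * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
        x += h
        # Compare with the exact solution exp(lam * x) at the new grid point
        max_err = max(max_err, abs(u - math.exp(lam * x)))
    return max_err


for n in (10, 20, 40):
    print(n, rk4_max_error(n_steps=n))
```

Such a baseline could serve as one possible anchor when discussing an error threshold, although a fair comparison would also have to account for computational cost and for the fact that a neural form yields a closed-form, differentiable approximation.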

We also take different quantiles (10%, 20%, 30%) into account. The percentage specifies the relative amount of data points that lie below the quantile value. Although several minima for TSM and vBP in Fig. 5g,h appear to be less useful than the lowest one, all quantiles for these cases are better than for the same settings with cBP. The situation for mTSM is the same, while Adam outperforms both cBP and vBP in this context. Therefore, one may expect that further adjusting the optimisation parameters for vBP can in general make it perform better than cBP. However, it is questionable whether it would then also perform better than Adam. We find all quantile values to be good in the case of Adam optimisation. In this sense, we consider Adam here as the most reliable optimiser.

In conclusion, mTSM with Adam shows the overall best performance with respect to the numerical error, for both \(\mathbf {p}^{\mathrm{init}}_{\mathrm{const}}\) and \(\mathbf {p}^{\mathrm{init}}_{\mathrm{rnd}}\). Although TSM with vBP appears to have some stability flaws for lower ntD, it stabilises for \(ntD=40\). Overall, neither vBP nor cBP can compete with Adam and mTSM.
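The statistical quantities discussed above (mean value, standard deviation and the 10%, 20% and 30% quantiles) can be computed from a list of numerical errors as in the following minimal sketch. The error values are synthetic placeholder data and not results from the paper; the helper names `summarise_errors` and `fraction_below` are hypothetical, and the use of the sample standard deviation is an assumption.

```python
import random
import statistics


def summarise_errors(errors):
    """Return mean, sample standard deviation and the 10/20/30% quantiles."""
    # statistics.quantiles with n=10 returns the nine cut points 10%, ..., 90%
    deciles = statistics.quantiles(errors, n=10)
    return {
        "mean": statistics.fmean(errors),
        "std": statistics.stdev(errors),  # sample standard deviation (assumption)
        "q10": deciles[0],
        "q20": deciles[1],
        "q30": deciles[2],
    }


def fraction_below(errors, value):
    """Relative amount of data points strictly below the given value."""
    return sum(e < value for e in errors) / len(errors)


# Synthetic placeholder errors spread over several orders of magnitude
rng = random.Random(0)
errors = [10.0 ** rng.uniform(-6.0, -2.0) for _ in range(10_000)]

summary = summarise_errors(errors)
print(summary)
print("fraction below q10:", fraction_below(errors, summary["q10"]))
```

Since the lower quantiles only describe the best share of all runs, they complement the mean and standard deviation, which can be dominated by a few poor approximations.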

6 Conclusion and future work

When solving the stiff model ODE with feedforward neural networks, the solution reliability depends on a variety of parameters. We find the weight initialisation to have a major influence. While the initialisation with zeros does not provide reasonable approximations for TSM with one hidden layer, it works reasonably well for mTSM. Initialising the weights with small random values shows the best results with Adam and mTSM, although the use of more training points may yield less suitable results. This may indicate overfitting and could be resolved by employing more neurons or other adjustments, which may be a subject for a future study.

However, our work also indicates that all the investigated issues may have to be considered together as a complete package, i.e., the investigated aspects may not be evaluated independently of each other. Even after a detailed investigation as provided here, it does not seem possible to single out an individual aspect that dominates the overall accuracy and reliability.

We tend to favour the combination of Adam and mTSM in further computationally oriented research, since it provides the best approximations for both weight initialisation methods. Future research may also include theoretical work, e.g., on sensitivity and different trial solution forms for TSM. One main goal in this context is to decrease the variation of possible solutions together with an increase of the solution accuracy.

Moreover, our third experiment has shown that it may make sense to investigate deep networks, since these could result in a significant accuracy gain, reminiscent of higher-order effects in classic numerical analysis.

Furthermore, our future work will include more difficult differential equations with a focus on initial value problems and the improvement of constant weight initialisation.

Acknowledgements

This publication was funded by the Graduate Research School (GRS) of the Brandenburg University of Technology Cottbus-Senftenberg. This work is part of the Research Cluster Cognitive Dependable Cyber Physical Systems.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Schneidereit, T., Breuß, M. Computational characteristics of feedforward neural networks for solving a stiff differential equation. Neural Comput & Applic 34, 7975–7989 (2022). https://doi.org/10.1007/s00521-022-06901-6
