Abstract

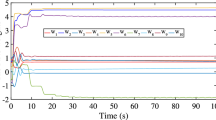

In this paper, a novel scheme for the tracking problem of nonlinear systems is proposed. First, as a new technology of neural network in control field, linear differential inclusion is used to approximate the nonlinear term for the entire system. Based on the equivalent linear system, tracking reference signal is given and a new augmented system is built. According to the mentioned value function, two reinforcement learning algorithms are proposed to design the optimal control law. Notice that the online algorithm does not involve the system dynamics and tracking dynamics. In the simulation section, the model of trolley system is given to prove the effectiveness and accuracy of the scheme proposed in this paper.

Similar content being viewed by others

References

Bai W, Li T, Tong S (2020) NN reinforcement learning adaptive control for a class of nonstrict-feedback discrete-time systems. IEEE Trans Cybern. https://doi.org/10.1109/TCYB.2020.2963849

Song Z, Yang J, Mei X et al (2020) Deep reinforcement learning for permanent magnet synchronous motor speed control systems. Neural Comput Appl. https://doi.org/10.1007/s00521-020-05352-1

Khater A, El-Nagar A, El-Bardini M et al (2020) Online learning based on adaptive learning rate for a class of recurrent fuzzy neural network. Neural Comput Appl 32(12):8691–8710. https://doi.org/10.1007/s00521-019-04372-w

Li H, Wu Y, Chen M (2020) Adaptive fault-tolerant tracking control for discrete-time multiagent systems via reinforcement learning algorithm. IEEE Trans Cybern. https://doi.org/10.1109/TCYB.2020.2982168

Vrabie D, Pastravanu O, Abu-Khalaf M et al (2009) Adaptive optimal control for continuous-time linear systems based on policy iteration. Automatica 45(2):477–484. https://doi.org/10.1016/j.automatica.2008.08.017

Li H, Liu D, Wang D (2014) Integral reinforcement learning for linear continuous-time zero-sum games with completely unknown dynamics. IEEE Trans Autom Sci Eng 11(3):706–714. https://doi.org/10.1109/TASE.2014.2300532

He S, Zhang M, Fang H et al (2019) Reinforcement learning and adaptive optimization of a class of Markov jump systems with completely unknown dynamic information. Neural Comput Appl. https://doi.org/10.1007/s00521-019-04180-2

Wu H, Song S, You K et al (2018) Depth control of model-free auvs via reinforcement learning. IEEE Trans Syst Man Cybern Syst 49(12):2499–2510. https://doi.org/10.1109/TSMC.2017.2785794

Liang Y, Zhang H, Xiao G et al (2018) Reinforcement learning-based online adaptive controller design for a class of unknown nonlinear discrete-time systems with time delays. Neural Comput Appl 30(6):1733–1745. https://doi.org/10.1007/s00521-018-3537-7

Modares H, Nageshrao SP, Lopes GAD et al (2016) Optimal model-free output synchronization of heterogeneous systems using off-policy reinforcement learning. Automatica 71:334–341. https://doi.org/10.1016/j.automatica.2016.05.017

Hu Y, Wang H, He S, Zheng J, Ping Z, Ke S, Cao Z, Man Z (2021) Adaptive tracking control of an electronic throttle valve based on recursive terminal sliding mode. IEEE Trans Veh Technol. https://doi.org/10.1109/TVT.2020.3045778

Zhang J, Wang H, Zheng J, Cao Z, Man Z, Yu M, Chen L (2020) Adaptive sliding mode-based lateral stability control of Steer-by-Wire vehicles with experimental validations. IEEE Trans Veh Technol 69(9):9589–9600. https://doi.org/10.1109/TVT.2020.3003326

Hu Y, Wang H, Cao Z, Zheng J, Ping Z, Chen L, Jin X (2019) Extreme-learning-machine-based FNTSM control strategy for electronic throttle. Neural Comput Appl 32:14507–14518. https://doi.org/10.1007/s00521-019-04446-9

Yang Y, Wan Y, Zhu J et al (2020) \(H_\infty\) tracking control for linear discrete-time systems: model-free Q-Learning designs. IEEE Control Syst Lett 5(1):175–180. https://doi.org/10.1109/LCSYS.2020.3001241

Qin C, Zhang H, Luo Y (2014) Online optimal tracking control of continuous-time linear systems with unknown dynamics by using adaptive dynamic programming. Int J Control 87(5):1000–1009. https://doi.org/10.1080/00207179.2013.863432

Xiao G, Zhang H, Luo Y et al (2016) Data-driven optimal tracking control for a class of affine non-linear continuous-time systems with completely unknown dynamics. IET Control Theory Appl 10(6):700–710. https://doi.org/10.1049/iet-cta.2015.0590

Yang X, He H, Liu D et al (2017) Adaptive dynamic programming for robust neural control of unknown continuous-time non-linear systems. IET Control Theory Appl 11(14):2307–2316. https://doi.org/10.1049/iet-cta.2017.0154

Yang X, Liu D, Wei Q et al (2016) Guaranteed cost neural tracking control for a class of uncertain nonlinear systems using adaptive dynamic programming. Neurocomputing 198:80–90. https://doi.org/10.1016/j.neucom.2015.08.119

Zhang J, Wang H, Cao Z, Zheng J, Yu M, Yazdani A, Shahnia F (2019) Fast nonsingular terminal sliding mode control for permanent magnet linear motor via ELM. Neural Comput Appl 32:14447–14457. https://doi.org/10.1007/s00521-019-04502-4

Ye M, Wang H (2020) Robust adaptive integral terminal sliding mode control for steer-by-wire systems based on extreme learning machine. Comput Electr Eng. https://doi.org/10.1016/j.compeleceng.2020.106756

He S, Fang H, Zhang M et al (2019) Adaptive optimal control for a class of nonlinear systems: the online policy iteration approach. IEEE Trans Neural Netw Learn Syst 31(2):549–558. https://doi.org/10.1109/TNNLS.2019.2905715

Fang H, Zhu G, Stojanovic V, Nie R, He S, Luan X, Liu F (2020) Adaptive optimization algorithm for nonlinear Markov jump systems with parrtial unknown dynamics. Int J Robust Nonlinear Control. https://doi.org/10.1002/rnc.5350

Wang C, Fang H, He S (2020) Adaptive optimal controller design for a class of LDI-based neural network systems with input time-delays. Neurocomputing 385:292–299. https://doi.org/10.1016/j.neucom.2019.12.084

Chen L, Wang H, Huang Y, Ping Z, Yu M, Ye M, Hu Y (2020) Robust hierarchical terminal sliding mode control of two-wheeled self-balancing vehicle using perturbation estimation. Mech Syst Signal Process. https://doi.org/10.1016/j.ymssp.2019.106584

Ye M, Wang H (2019) A robust adaptive chattering-free sliding mode control strategy for automotive electronic throttle system via genetic algorithm. IEEE Access 8(99):68–80. https://doi.org/10.1109/ACCESS.2019.2934232

Suykens JAK, De Moor B, Vandewalle J (2000) Robust local stability of multilayer recurrent neural networks. IEEE Trans Neural Netw 11(1):222–229. https://doi.org/10.1109/72.822525

Modares H, Lewis FL (2014) Linear quadratic tracking control of partially-unknown continuous-time systems using reinforcement learning. IEEE Trans Autom Control 59(11):3051–3056. https://doi.org/10.1109/TAC.2014.2317301

Kleinman D (1968) On an iterative technique for Riccati equation computations. IEEE Trans Autom Control 13(1):114–115. https://doi.org/10.1109/TAC.1968.1098829

Jiang Y, Jiang Z (2012) Computational adaptive optimal control for continuous-time linear systems with completely unknown dynamic. Automatica 48(10):2699–2704. https://doi.org/10.1016/j.automatica.2012.06.096

Acknowledgements

This work was supported in part by National Natural Science Foundation of China (Nos. 62073001, 61673001), Foundation for Distinguished Young Scholars of Anhui Province (No. 1608085J05) and Key Support Program of University Outstanding Youth Talent of Anhui Province (No.gxydZD2017001).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

Equation (7) can be obtained as follows:

Proof

Taking into account the characteristics of the activation function mentioned in Eq. (4), \(\sum _{i=0}^{1}h(i)=0\). Then, for the whole neural network, it can obtain Eq. (7), and the proof is completed. \(\square\)

Rights and permissions

About this article

Cite this article

Tu, Y., Fang, H., Yin, Y. et al. Reinforcement learning-based nonlinear tracking control system design via LDI approach with application to trolley system. Neural Comput & Applic 34, 5055–5062 (2022). https://doi.org/10.1007/s00521-021-05909-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00521-021-05909-8