Abstract

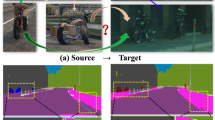

Selective manipulation of data attributes using deep generative models is an active area of research. In this paper, we present a novel method to structure the latent space of a variational auto-encoder to encode different continuous-valued attributes explicitly. This is accomplished by using an attribute regularization loss which enforces a monotonic relationship between the attribute values and the latent code of the dimension along which the attribute is to be encoded. Consequently, post training, the model can be used to manipulate the attribute by simply changing the latent code of the corresponding regularized dimension. The results obtained from several quantitative and qualitative experiments show that the proposed method leads to disentangled and interpretable latent spaces which can be used to effectively manipulate a wide range of data attributes spanning image and symbolic music domains.

Notes

https://faceapp.com/app, last accessed: 20th July 2020.

https://prisma-ai.com, last accessed: 20th July 2020.

https://pytorch.org, last accessed: 20th July 2020.

References

Adel T, Ghahramani Z, Weller A (2018) Discovering interpretable representations for both deep generative and discriminative models. In: 35th international conference on machine learning (ICML), Stockholm, Sweden, pp 50–59

Akuzawa K, Iwasawa Y, Matsuo Y (2018) Expressive speech synthesis via modeling expressions with variational autoencoder. In: 19th Interspeech, Graz, Austria

Aubry M, Maturana D, Efros AA, Russell BC, Sivic J (2014) Seeing 3D chairs: exemplar part-based 2D-3D alignment using a large dataset of CAD models. In: IEEE conference on computer vision and pattern recognition (CVPR), Columbus, Ohio, USA, pp 3762–3769

Bengio Y, Courville A, Vincent P (2013) Representation learning: a review and new perspectives. IEEE Trans Pattern Anal Mach Intell 35(8):1798–1828

Bouchacourt D, Tomioka R, Nowozin S (2018) Multi-level variational autoencoder: learning disentangled representations from grouped observations. In: 32nd AAAI conference on artificial intelligence, New Orleans, USA

Bowman SR, Vilnis L, Vinyals O, Dai AM, Jozefowicz R, Bengio S (2016) Generating sentences from a continuous space. In: SIGNLL conference on computational natural language learning, Berlin, Germany

Brunner G, Konrad A, Wang Y, Wattenhofer R (2018) MIDI-VAE: modeling dynamics and instrumentation of music with applications to style transfer. In: 19th international society for music information retrieval conference (ISMIR), Paris, France

Burgess C, Kim H (2020) 3d-shapes dataset. https://github.com/deepmind/3d-shapes. Last accessed, 2nd April 2020

Burgess CP, Higgins I, Pal A, Matthey L, Watters N, Desjardins G, Lerchner A (2018) Understanding disentangling in $\beta$-VAE. arXiv:1804.03599 [cs, stat]

Carter S, Nielsen M (2017) Using artificial intelligence to augment human intelligence. Distill 2(12):e9. https://doi.org/10.23915/distill.00009

Castro DC, Tan J, Kainz B, Konukoglu E, Glocker B (2019) Morpho-MNIST: quantitative assessment and diagnostics for representation learning. J Mach Learn Res 20:1–29

Chen RTQ, Li X, Grosse R, Duvenaud D (2018) Isolating sources of disentanglement in variational autoencoders. In: Advances in neural information processing systems 31 (NeurIPS)

Chen X, Duan Y, Houthooft R, Schulman J, Sutskever I, Abbeel P (2016) InfoGAN: interpretable representation learning by information maximizing generative adversarial nets. In: Advances in neural information processing systems 29 (NeurIPS), pp 2172–2180

Cuthbert MS, Ariza C (2010) music21: a toolkit for computer-aided musicology and symbolic music data. In: 11th international society of music information retrieval conference (ISMIR), Utrecht, The Netherlands

Dai Z, Yang Z, Yang Y, Carbonell J, Le QV, Salakhutdinov R (2019) Transformer-XL: attentive language models beyond a fixed-length context. In: Association of computational linguistics (ACL), Florence, Italy

Donahue C, Lipton ZC, Balsubramani A, McAuley J (2018) Semantically decomposing the latent spaces of generative adversarial networks. In: 6th international conference on learning representations (ICLR), Vancouver, Canada

Eastwood C, Williams CKI (2018) A framework for the quantitative evaluation of disentangled representations. In: 6th international conference on learning representations (ICLR), Vancouver, Canada

Engel J, Hoffman M, Roberts A (2017) Latent constraints: learning to generate conditionally from unconditional generative models. In: 5th international conference on learning representations (ICLR), Toulon, France

Gatys LA, Ecker AS, Bethge M (2016) Image style transfer using convolutional neural networks. In: IEEE conference on computer vision and pattern recognition (CVPR), Las Vegas, USA, pp 2414–2423

Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y (2014) Generative adversarial nets. In: Advances in neural information processing systems 27 (NeurIPS), pp 2672–2680

Hadjeres G, Nielsen F, Pachet F (2017) GLSR-VAE: geodesic latent space regularization for variational autoencoder architectures. In: IEEE symposium series on computational intelligence (SSCI), Hawaii, USA, pp 1–7

Higgins I, Matthey L, Pal A, Burgess C, Glorot X, Botvinick MM, Mohamed S, Lerchner A (2017) $\beta$-VAE: learning basic visual concepts with a constrained variational framework. In: 5th international conference on learning representations (ICLR), Toulon, France

Hsu WN, Zhang Y, Glass J (2017) Learning latent representations for speech generation and transformation. In: 18th Interspeech, Stockholm, Sweden

Huang CZA, Vaswani A, Uszkoreit J, Simon I, Hawthorne C, Shazeer N, Dai AM, Hoffman MD, Dinculescu M, Eck D (2018) Music transformer: generating music with long-term structure. In: 6th international conference on learning representations (ICLR), Vancouver, Canada

Jozefowicz R, Zaremba W, Sutskever I (2015) An empirical exploration of recurrent network architectures. In: 32nd international conference on machine learning (ICML), Lille, France

Kim H, Mnih A (2018) Disentangling by factorising. In: 35th international conference on machine learning (ICML), Stockholm, Sweden

Kingma DP, Ba J (2015) Adam: a method for stochastic optimization. In: 3rd international conference on learning representations (ICLR), San Diego, USA

Kingma DP, Welling M (2014) Auto-encoding variational Bayes. In: 2nd international conference on learning representations (ICLR), Banff, Canada

Klambauer G, Unterthiner T, Mayr A, Hochreiter S (2017) Self-normalizing neural networks. In: Advances in neural information processing systems 30 (NeurIPS), pp 971–980

Kulkarni TD, Whitney WF, Kohli P, Tenenbaum J (2015) Deep convolutional inverse graphics network. In: Advances in neural information processing systems 28 (NeurIPS), pp 2539–2547

Kullback S, Leibler RA (1951) On information and sufficiency. Ann Math Stat 22(1):79–86

Kumar A, Sattigeri P, Balakrishnan A (2017) Variational inference of disentangled latent concepts from unlabeled observations. In: 5th international conference on learning representations (ICLR), Toulon, France

Lample G, Zeghidour N, Usunier N, Bordes A, Denoyer L, Ranzato MA (2017) Fader networks: manipulating images by sliding attributes. In: Advances in neural information processing systems 30 (NeurIPS), pp 5967–5976

Ledig C, Theis L, Huszar F, Caballero J, Cunningham A, Acosta A, Aitken A, Tejani A, Totz J, Wang Z, Shi W (2017) Photo-realistic single image super-resolution using a generative adversarial network. In: IEEE conference on computer vision and pattern recognition (CVPR), Hawaii, USA, pp 4681–4690

Liu Z, Luo P, Wang X, Tang X (2015) Deep learning face attributes in the wild. In: Proceedings of the IEEE international conference on computer vision (ICCV), Santiago, Chile, pp 3730–3738

Locatello F, Bauer S, Lucic M, Rätsch G, Gelly S, Schölkopf B, Bachem O (2019) Challenging common assumptions in the unsupervised learning of disentangled representations. In: 36th international conference on machine learning (ICML), Long Beach, California, USA

Matthey L, Higgins I, Hassabis D, Lerchner A (2017) dSprites: disentanglement testing sprites dataset. https://github.com/deepmind/dsprites-dataset. Last accessed, 2nd April 2020

Mikolov T, Sutskever I, Chen K, Corrado GS, Dean J (2013) Distributed representations of words and phrases and their compositionality. In: Advances in neural information processing systems 26 (NeurIPS), pp 3111–3119

Mirza M, Osindero S (2014) Conditional generative adversarial nets. arXiv:1411.1784 [cs, stat]

Pati A, Lerch A, Hadjeres G (2019) Learning to traverse latent spaces for musical score inpainting. In: 20th international society for music information retrieval conference (ISMIR), Delft, The Netherlands

Razavi A, van den Oord A, Vinyals O (2019) Generating diverse high-fidelity images with VQ-VAE-2. In: Advances in neural information processing systems 32 (NeurIPS), pp 14866–14876

Reed SE, Zhang Y, Zhang Y, Lee H (2015) Deep visual analogy-making. In: Advances in neural information processing systems 28 (NeurIPS), pp 1252–1260

Rezende DJ, Mohamed S (2015) Variational inference with normalizing flows. In: 32nd international conference on machine learning (ICML), Lille, France. ArXiv: 1505.05770

Ridgeway K, Mozer MC (2018) Learning deep disentangled embeddings with the F-statistic loss. In: Advances in neural information processing systems 31 (NeurIPS), pp 185–194

Roberts A, Engel J, Oore S, Eck D (2018) Learning latent representations of music to generate interactive musical palettes. In: Intelligent user interfaces workshops (IUI), Tokyo, Japan

Roberts A, Engel J, Raffel C, Hawthorne C, Eck D (2018) A hierarchical latent vector model for learning long-term structure in music. In: 35th international conference on machine learning (ICML), Stockholm, Sweden

Rubenstein P, Schölkopf B, Tolstikhin I (2018) Learning disentangled representations with Wasserstein auto-encoders. In: 6th international conference on learning representations (ICLR), workshop track, Vancouver, Canada

Sohn K, Lee H, Yan X (2015) Learning structured output representation using deep conditional generative models. In: Advances in neural information processing systems 28 (NeurIPS)

Sturm BL, Santos JF, Ben-Tal O, Korshunova I (2016) Music transcription modelling and composition using deep learning. In: 1st international conference on computer simulation of musical creativity (CSMC), Huddersfield, UK

Toussaint G (2002) A mathematical analysis of African, Brazilian and Cuban Clave rhythms. In: BRIDGES: mathematical connections in art, music and science, pp 157–168

van den Oord A, Kalchbrenner N, Espeholt L, Kavukcuoglu K, Vinyals O, Graves A (2016) Conditional image generation with PixelCNN decoders. In: Advances in neural information processing systems 29 (NeurIPS), pp 4790–4798

Vincent P, Larochelle H, Bengio Y, Manzagol PA (2008) Extracting and composing robust features with denoising autoencoders. In: 25th international conference on machine learning (ICML), Helsinki, Finland, pp 1096–1103

Wang Y, Stanton D, Zhang Y, Skerry-Ryan RJ, Battenberg E, Shor J, Xiao Y, Ren F, Jia Y, Saurous RA (2018) Style tokens: unsupervised style modeling, control and transfer in end-to-end speech synthesis. In: 35th international conference on machine learning (ICML), Stockholm, Sweden

Yan X, Yang J, Sohn K, Lee H (2016) Attribute2Image: conditional image generation from visual attributes. In: Leibe B, Matas J, Sebe N, Welling M (eds) European conference for computer vision (ECCV), Amsterdam, The Netherlands, pp 776–791

Yang J, Reed SE, Yang MH, Lee H (2015) Weakly-supervised disentangling with recurrent transformations for 3D view synthesis. In: Advances in neural information processing systems 28 (NeurIPS), pp 1099–1107

Zhang Y, Gan Z, Fan K, Chen Z, Henao R, Shen D, Carin L (2017) Adversarial feature matching for text generation. In: 34th international conference on machine learning (ICML), Sydney, Australia, pp 4006–4015

Acknowledgements

The authors would like to thank Nvidia Corporation for their donation of a Titan V awarded as part of the Graphics Processing Unit (GPU) grant program which was used for running several experiments pertaining to this research work.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1: Computation of musical attributes

The data representation scheme from [40] is used: each monophonic measure of music M is a sequence of N symbols \(\left\{ m_t \right\}, t \in [0, N)\), where \(N=24\). The set of symbols consists of note names (e.g., A#, Eb, B, C), a continuation symbol ‘__’, and a special token for Rest. The musical attributes are computed as follows:

(a) Rhythmic Complexity (r): This attribute measures the rhythmic complexity of a given measure. To compute it, a complexity coefficient array \(\left\{ f_t \right\}, t \in [0, N)\) is first constructed, which assigns weights to the metrical locations based on Toussaint’s metrical complexity measure [50]. On-beat locations receive low weights, while off-beat locations receive higher weights. The attribute is the weighted average of the note onsets with respect to the complexity coefficient array f. Mathematically,

$$\begin{aligned} r(M) = \frac{\sum _{t=0}^{N-1} \mathrm{ONSET}(m_t) \cdot f_t}{\sum _{t=0}^{N-1} f_t}, \end{aligned} \tag{10}$$

where \(\mathrm{ONSET}\left( \cdot \right)\) indicates whether there is a note onset at location t, i.e., it is 1 if \(m_t\) is a note name symbol and 0 otherwise.

(b) Pitch Range (p): This is computed as the normalized difference between the maximum and minimum MIDI pitch values:

$$\begin{aligned} p(M) = \frac{1}{R} \left( \underset{t \in [0, N)}{\mathrm{max}}(\mathrm{MIDI}(m_t)) - \underset{t \in [0, N)}{\mathrm{min}}(\mathrm{MIDI}(m_t)) \right), \end{aligned} \tag{11}$$

where \(\mathrm{MIDI}\left( \cdot \right)\) computes the MIDI pitch value of a note symbol. The MIDI pitch values for the Rest and ‘__’ symbols are set to zero. The normalization factor R is based on the pitch range of the dataset.

(c) Note Density (d): This is the number of notes in the measure, normalized by the total length of the measure sequence:

$$\begin{aligned} d(M) = \frac{1}{N} \sum _{t=0}^{N-1} \mathrm{ONSET}(m_t), \end{aligned} \tag{12}$$

where \(\mathrm{ONSET}\left( \cdot \right)\) has the same meaning as in Eq. (10).

(d) Contour (c): This measures the degree to which the melody moves up or down and is computed by summing the pitch differences between successive notes in the measure. Mathematically,

$$\begin{aligned} c(M) = \frac{1}{R} \sum _{t=0}^{N-2} \left[ \mathrm{MIDI}(m_{t+1}) - \mathrm{MIDI}(m_t) \right], \end{aligned} \tag{13}$$

where \(\mathrm{MIDI}\left( \cdot \right)\) and R have the same meaning as in Eq. (11).
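The four attribute definitions in Eqs. (10) to (13) can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the token spellings (`"__"`, `"rest"`), the note format with an octave number (e.g., `"Eb4"`, with C4 mapped to MIDI 60), the coefficient array `COEFFS`, and the default normalization factor `pitch_norm=127.0` are all hypothetical placeholders. Eqs. (11) and (13) are implemented literally, with Rest and continuation symbols mapped to a pitch of 0.

```python
N = 24                          # symbols per measure
CONTINUE, REST = "__", "rest"   # token spellings are assumptions

# Hypothetical coefficients: on-beat ticks low, off-beat ticks higher.
# Placeholders only, NOT the values derived from Toussaint's measure [50].
COEFFS = [1.0 if t % 6 == 0 else 2.0 if t % 6 == 3 else 3.0 for t in range(N)]

PITCH_CLASS = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}


def onset(sym):
    """ONSET(.): 1 if the symbol is a note name, 0 for continuation or rest."""
    return 0 if sym in (CONTINUE, REST) else 1


def midi(sym):
    """MIDI(.): pitch of a note symbol such as 'A#3'; 0 for rest/continuation."""
    if not onset(sym):
        return 0
    letter, tail = sym[0], sym[1:]
    accidental = 0
    while tail and tail[0] in "#b":          # sharps raise, flats lower
        accidental += 1 if tail[0] == "#" else -1
        tail = tail[1:]
    return 12 * (int(tail) + 1) + PITCH_CLASS[letter] + accidental


def rhythmic_complexity(measure, coeffs=COEFFS):
    """Eq. (10): onset indicators weighted by the coefficient array."""
    return sum(onset(m) * f for m, f in zip(measure, coeffs)) / sum(coeffs)


def pitch_range(measure, pitch_norm=127.0):
    """Eq. (11): normalized difference between max and min MIDI values."""
    pitches = [midi(m) for m in measure]
    return (max(pitches) - min(pitches)) / pitch_norm


def note_density(measure):
    """Eq. (12): number of onsets divided by the sequence length."""
    return sum(onset(m) for m in measure) / len(measure)


def contour(measure, pitch_norm=127.0):
    """Eq. (13): normalized sum of successive MIDI differences."""
    pitches = [midi(m) for m in measure]
    return sum(b - a for a, b in zip(pitches, pitches[1:])) / pitch_norm


# Example: four quarter notes, each held for 6 ticks.
measure = []
for note in ["C4", "E4", "G4", "C5"]:
    measure += [note] + [CONTINUE] * 5

print(rhythmic_complexity(measure), pitch_range(measure),
      note_density(measure), contour(measure))
```

Because Rest and continuation symbols are assigned a pitch of 0, the literal forms of Eqs. (11) and (13) are sensitive to where those symbols occur; the sketch makes no attempt to guess at any additional filtering that may be applied in practice.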

Appendix 2: Implementation details

Image-based models. For the image-based models, a stacked convolutional VAE architecture is used. The encoder consists of a stack of N 2-dimensional convolutional layers followed by a stack of linear layers. The decoder mirrors the encoder and consists of a stack of linear layers followed by a stack of N 2-dimensional transposed convolutional layers. The configuration details are given in Table 1.

Music-based models. For the music-based models, the model architecture is based on our previous work on musical score inpainting [40]. A hierarchical recurrent VAE architecture is used. Figure 14 shows the overall schematic of the architecture, and Table 2 provides the configuration details.

Fig. 14 MeasureVAE schematic. Individual components of the encoder and decoder are shown below the main blocks (dotted arrows indicate data flow within the individual components). \(\mathbf{z}\) denotes the latent vector, \(\hat{\mathbf{x}}\) denotes the reconstructed measure, \(b=4\) denotes the number of beats in a measure, and \(t=6\) denotes the number of symbols/ticks in a beat. Figure taken from [40]

Training details. All models for the same dataset are trained for the same number of epochs (models for both image-based datasets and Bach Chorales are trained for 100 epochs, models for the Folk Music dataset are trained for 30 epochs). The optimization is carried out using the Adam optimizer [27] with a fixed learning rate of \(1\mathrm{e}{-4}\), \(\beta _1 = 0.9\), \(\beta _2 = 0.999\), and \(\epsilon = 1\mathrm{e}{-8}\).

All the models are implemented using the Python programming language and the PyTorch library (see Notes).
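To make the role of the optimizer hyperparameters concrete, the following is a minimal plain-Python sketch of a single-parameter Adam update [27] using the values stated above (learning rate \(1\mathrm{e}{-4}\), \(\beta _1 = 0.9\), \(\beta _2 = 0.999\), \(\epsilon = 1\mathrm{e}{-8}\)). It is an illustration only: the models themselves are trained with PyTorch, and the function name `adam_step`, the `state` dictionary, and the toy quadratic objective are hypothetical.

```python
import math


def adam_step(param, grad, state, lr=1e-4, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single scalar parameter."""
    state["t"] += 1
    # Exponential moving averages of the gradient and the squared gradient.
    state["m"] = beta1 * state["m"] + (1 - beta1) * grad
    state["v"] = beta2 * state["v"] + (1 - beta2) * grad * grad
    # Bias correction compensates for the zero initialization of m and v.
    m_hat = state["m"] / (1 - beta1 ** state["t"])
    v_hat = state["v"] / (1 - beta2 ** state["t"])
    return param - lr * m_hat / (math.sqrt(v_hat) + eps)


# Toy objective (hypothetical): minimize (x - 3)^2 starting from x = 0.
x = 0.0
state = {"t": 0, "m": 0.0, "v": 0.0}

first = adam_step(x, 2 * (x - 3.0), state)   # gradient of (x - 3)^2 is 2(x - 3)
x = first
for _ in range(999):
    x = adam_step(x, 2 * (x - 3.0), state)

print(first, x)
```

On the first step the bias-corrected ratio has magnitude close to 1, so the parameter moves by roughly the learning rate regardless of the gradient scale; this is why a fixed rate of \(1\mathrm{e}{-4}\) yields small, steady updates.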

Appendix 3: Additional results

Some additional examples from the image-based datasets are shown in Figs. 15 and 16. The musical scores for the AR-VAE generated interpolations from Fig. 11 are shown in Fig. 17.

Fig. 15 Manipulating attributes for three different shapes from the 2-d sprites dataset images using AR-VAE. The interpolations for each attribute are generated by changing the latent code of the corresponding regularized dimension for the original shapes shown on the extreme left. Attributes can be manipulated independently

Fig. 16 Manipulating attributes for ten different digits from the Morpho-MNIST dataset images using AR-VAE. The interpolations for each attribute are generated by changing the latent code of the corresponding regularized dimension for the original digits shown on the extreme left. AR-VAE is able to manipulate the different attributes and is able to retain the digit identity in most cases

Fig. 17 Musical score corresponding to the AR-VAE generated interpolations from Fig. 11. While the attribute values of the generated measures are controlled effectively, the musical coherence is often lost (particularly in the case of Bach Chorales)

About this article

Cite this article

Pati, A., Lerch, A. Attribute-based regularization of latent spaces for variational auto-encoders. Neural Comput & Applic 33, 4429–4444 (2021). https://doi.org/10.1007/s00521-020-05270-2

DOI: https://doi.org/10.1007/s00521-020-05270-2