Abstract

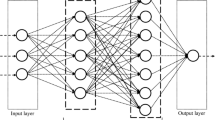

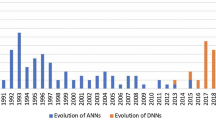

Artificial neural networks have proved useful across many fields of research and engineering. Yet each problem tends to require its own architecture, and in most cases the design relies on trial and error and on the experience of the developer. Many approaches have been investigated for topology modelling, training algorithms, and data processing. This paper proposes a novel automatic method for finding a neural network architecture suited to a given task. When selecting the best topology, our method explores a multidimensional space of possible structures, including the number of neurons, the number of hidden layers, the types of synaptic connections, and the choice of transfer functions. Although the backpropagation algorithm is conventionally used to train neural networks, a known disadvantage of the technique is that it can reach saddle points or local minima and thereby overfit the output data. In this work, we introduce a novel strategy capable of generating a network topology that avoids overfitting in the majority of cases at an affordable computational cost. To validate our method, we present several numerical experiments and discuss the outcomes.

Notes

In this paper, the words topology and architecture are used interchangeably, with the same meaning.

The notation \(n_{l}\times n_{max}\times c_{max}\) specifies the number of layers in the architecture (\(n_{l}\)), the maximum number of neurons in every hidden layer (\(n_{max}\)), and the maximum number of connections from neuron to neuron (\(c_{max}\)).
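
As an illustration only, the three bounds in this notation can be read as the limits of a search space from which candidate topologies are drawn. The sketch below is not the method of the paper: the names `SearchBounds` and `sample_topology` are hypothetical, every layer is assumed to be bounded by \(n_{max}\), and \(c_{max}\) is assumed to cap a per-layer connection count.

```python
import random
from dataclasses import dataclass


@dataclass
class SearchBounds:
    """Hypothetical container mirroring the n_l x n_max x c_max notation."""
    n_l: int    # number of layers in the architecture
    n_max: int  # maximum number of neurons in a layer (assumed to apply to all n_l layers)
    c_max: int  # maximum number of connections per neuron (assumed to be set per layer)


def sample_topology(bounds, rng):
    """Draw one random candidate topology that respects the bounds."""
    # One neuron count per layer, each between 1 and n_max.
    neurons = [rng.randint(1, bounds.n_max) for _ in range(bounds.n_l)]
    # One connection count per layer, each between 1 and c_max.
    connections = [rng.randint(1, bounds.c_max) for _ in range(bounds.n_l)]
    return neurons, connections


# Example: a 3 x 10 x 4 search space, sampled with a fixed seed.
bounds = SearchBounds(n_l=3, n_max=10, c_max=4)
neurons, connections = sample_topology(bounds, random.Random(0))
```

A search procedure, genetic or otherwise, would start from many such candidates and vary them within the same bounds.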

Acknowledgments

This work has been supported by the project EC AComIn (FP7-REGPOT-2012-2013-1), by the Bulgarian Science Fund under Grant DFNI I02/20, and by the Grant DFNP-176-A1.

About this article

Cite this article

Kapanova, K.G., Dimov, I. & Sellier, J.M. A genetic approach to automatic neural network architecture optimization. Neural Comput & Applic 29, 1481–1492 (2018). https://doi.org/10.1007/s00521-016-2510-6

DOI: https://doi.org/10.1007/s00521-016-2510-6