Abstract

Wind energy is the primary energy source for a sustainable and pollution-free global power supply. However, because of its characteristic irregularity, nonlinearity, non-stationarity, randomness, and intermittency, previous studies have only focused on stability or accuracy, and the forecast performances of their models were poor. Moreover, in previous research, the selection of sub-models used for the combined model was not considered, which weakened the generalisability. Therefore, to further improve the forecast accuracy and stability of the wind speed forecasting model, and to solve the problem of sub-model selection in the combined model, this study developed a wind speed forecasting model using data pre-processing, a multi-objective optimisation algorithm, and sub-model selection for the combined model. Simulation experiments showed that our combined model not only improved the forecasting accuracy and stability but also chose different sub-models and different weights of the combined model for different data; this improved the model generalisability. Specifically, the MAPEs of our model are less than 4.96%, 4.60%, and 5.25% in one-, two-, and three-step forecast. Thus, the proposed combined model is demonstrated as an effective tool for grid dispatching.

Similar content being viewed by others

Data availability

Data not available due to legal and commercial restrictions.

References

Abdollahzade M, Miranian A, Hassani H, Iranmanesh H (2015) A new hybrid enhanced local linear neuro-fuzzy model based on the optimized singular spectrum analysis and its application for nonlinear and chaotic time series forecasting. Inf Sci 295:107–125

Aguilar Vargas S, Telles Esteves GR, Medina Maçaira P, Quaresma Bastos B, Cyrino Oliveira FL, Castro Souza R (2019) Wind power generation: a review and a research agenda. J Clean Prod 218:850–870

Bates JM, Granger CWJ (2001) The combination of forecasts. In: Essays in econometrics. Cambridge University Press, Cambridge, pp 451–468

Brown BG, Katz RW, Murphy AH (1984) Time series models to simulate and forecast wind speed and wind power. J Appl Meteorol 23:1184–1195

Bruninx K, Bergh KVD, Delarue E et al (2016) Optimization and allocation of spinning reserves in a low-carbon framework, IEEE Power and Energy Society General Meeting (PESGM). IEEE Trans Power Syst 31(2):872–882

Chang W-Y (2014) A literature review of wind forecasting methods. Power Energy Eng 2:161–168

Contreras J, Espinola R, Nogales F, Conejo A (2003) ARIMA models to predict next-day electricity prices. IEEE Trans Power Syst 18(3):1014–1020

Damousis IG, Alexiadis MC, Theocharis JB, Dokopoulos PS (2004) A fuzzy model for wind speed prediction and power generation in wind parks using spatial correlation. IEEE Trans Energy Convers 19(2):352–361

Deb K, Jain H (2014) An evolutionary many-objective optimization algorithm using reference-point based nondominated sorting approach, part I: solving problems with box constraints. IEEE Trans Evol Comput 18(4):577–601

Diebold FX, Mariano R (1995) Comparing predictive accuracy. J Bus Econ Stat 20(1):134–144

Dorvlo AS, Jervase JA, Al-Lawati A (2002) Solar radiation estimation using artificial neural networks. Appl Energy 71(4):307–319

Egrioglu E, Aladag CH, Günay S (2008) A new model selection strategy in artificial neural networks. Appl Math Comput 195:591–597

Elman JL (1990) Finding structure in time. Cogn Sci 14:179–211

Enrique R, David B, Jorge-Juan B, Ana P (2019) Review of wind energy technology and associated market and economic conditions in Spain. Renew Sustain Energy Rev 101:415–427

Fried L, Qiao L, Sawyer S (2021) Global wind report, global wind energy council. https://gwec.net/members-area-market-intelligence/reports/

Fu T, Zhang S, Wang C (2020) Application and research for electricity price forecasting system based on multi-objective optimization and sub-models selection strategy. Soft Comput 24(20):15611–15637

Gers FA, Schmidhuber J (2000) Recurrent nets that time and count. Ieee-Inns-Enns Int Jt Conf Neural Netw 3:189–194

Global Wind Energy Council. Global wind statistics (2019), p. 2019 www.gwec.net/wpcontent/uploads/vip/GWEC_PRstats2018_EN_WEB.pdf.

Greff K, Srivastava RK, Koutnik J, Steunebrink BR, Schmidhuber J (2017) LSTM: a search space odyssey. IEEE Trans Neural Netw Learn Syst 28:2222–2232

Grigonyte E, Butkeviciute E (2016) Short-term wind speed forecasting using ARIMA model. Energetika 62(1–2):45–55

Guo ZH, Wu J, Lu HY, Wang JZ (2011) A case study on a hybrid wind speed forecasting method using BP neural network. Knowl Based Syst 24:1048–1056

Heng J, Hong Y, Hu J, Wang S (2022) Probabilistic and deterministic wind speed forecasting based on non-parametric approaches and wind characteristics information. Appl Energy 306:118029

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9:1735–1780

Huang GB, Zhu QY, Siew CK (2006) Extreme learning machine: theory and applications. Neurocomputing 70(1–3):489–501

İnan G, GöKtepe AB, Ramyar K et al (2007) Prediction of sulfate expansion of PC mortar using adaptive neuro-fuzzy methodology. Build Environ 42(3):1264–1269

Jang J-SR (1993) ANFIS: adaptive-network-based fuzzy inference system. IEEE Trans Syst Man Cybern 23(3):665–685

Laumanns M, Thiele L, Zitzler E (2006) An efficient, adaptive parameter variation scheme for metaheuristics based on the epsilon-constraint method. Eur J Oper Res 169(3):932–942

Lei M, Shiyan L, Chuanwen J, Hongling L, Yan Z (2009) A review on the forecasting of wind speed and generated power. Renew Sustain Energy Rev 13(4):915–920

Li G, Shi J (2010) On comparing three artificial neural networks for wind speed forecasting. Appl Energy 87(7):2313–2320

Liu M, Ling YY (2003) Using fuzzy neural network approach to estimate contractors’ markup. Build Environ 38(11):1303–1308

Liu Z, Jiang P, Wang J, Zhang L (2022) Ensemble system for short term carbon dioxide emissions forecasting based on multi-objective tangent search algorithm. J Environ Manag 302:113951

Meng K, Yang H, Dong ZY, Guo W, Wen F, Xu Z (2016) Flexible operational planning framework considering multiple wind energy forecasting service providers. IEEE Trans Sustain Energy 7(2):708–717

Neshat M, Adeli A, Sepidnam G (2012) Predication of concrete mix design using adaptive neural fuzzy inference systems and fuzzy inference systems. Int J Adv Manuf Technol 63(1–4):373–390

Niu T, Wang J, Zhang K, Du P (2018) Multi-step-ahead wind speed forecasting based on optimal feature selection and a modified bat algorithm with the cognition strategy. Renew Energy 118:213–229

Riahy G, Abedi M (2008) Short term wind speed forecasting for wind turbine applications using linear prediction method. Renew Energy 33:35–41

Schwenker F, Kestler HA, Palm G (2001) Three learning phases for radial-basis- function networks. Neural Netw 14(4–5):439–458

Sfetsos A (2000) A comparison of various forecasting techniques applied to mean hourly wind speed time series. Renew Energy 21(1):23–35

Shamshad A, Bawadi M, Hussin WW, Majid T, Sanusi S (2005) First and second order Markov chain models for synthetic generation of wind speed time series. Energy 30(5):693–708

Smith DA, Mehta KC (1993) Investigation of stationary and nonstationary wind data using classical Box-Jenkins models. J Wind Eng Indus Aerodyn 49:319–328

Soman SS, Zareipour H, Malik O et al (2010) A review of wind power and wind speed forecasting methods with different time horizons. In: North American Power Symposium (NAPS). IEEE, 2010. 1–8.

Specht DF (1991) A general regression neural network. IEEE Trans Neural Netw 2(6):568–576

Torres JL, Garca A, Blas MD, DeFrancisco A (2005) Forecast of hourly average wind speed with arma models in navarre (Spain). Sol Energy 79(1):65–77

Vapnik V (1997) The nature of statistic learning theory. Springer, Berlin

Wang X, Sideratos G, Hatziargyriou N et al (2004) Wind speed forecasting for power system operational planning. In: International conference on probabilistic methods applied to power systems. IEEE, pp 470–474

Wang J, Zhang W, Wang J et al (2014) A novel hybrid approach for wind speed prediction. Inf Sci 273:304–318

Wang S, Zhang N, Wu L et al (2016) Wind speed forecasting based on the hybrid ensemble empirical mode decomposition and GA-BP neural network method. Renew Energy 94:629–636

Wang JZ, Yang WD, Du P, Niu T (2018) A novel hybrid forecasting system of wind speed based on a newly developed multi-objective sine cosine algorithm. Energy Convers Manag 163:134–150

Wang C, Zhang S, Xiao L, Fu T (2021) Wind speed forecasting based on multi-objective grey wolf optimisation algorithm, weighted information criterion, and wind energy conversion system: a case study in Eastern China. Energy Convers Manage 243:114402

Wu J, Hsu C, Chen H (2009) An expert system of price forecasting for used cars using adaptive neuro-fuzzy inference. Expert Syst Appl 36(4):7809–7817

Xiao L, Wang J, Dong Y, Wu J (2015) Combined forecasting models for wind energy forecasting: a case study in China. Renew Sustain Energy Rev 44:271–288. https://doi.org/10.1016/j.rser.2014.12.012

Xiao L, Dong Y, Dong Y (2018) An improved combination approach based on Adaboost algorithm for wind speed time series forecasting. Energy Convers Manage 160:273–288

Yan J, Li F, Liu Y, Gu C (2017) Novel cost model for balancing wind power fore- casting uncertainty. IEEE Trans Energy Convers 32(1):318–329

Yang Y, Chen Y, Wang Y, Li C, Li L (2016) Modelling a combined method based on ANFIS and neural network improved by DE algorithm: a case study for short- term electricity demand forecasting. Appl Soft Comput. https://doi.org/10.1016/j.asoc.2016.07.053

Yu C, Li Y, Zhang M (2017) An improved wavelet transform using singular spectrum analysis for wind speed forecasting based on elman neural network. Energy Convers Manag 148:895–904

Zhang W, Qu Z, Zhang K, Mao W, Ma Y, Fan X (2017) A combined model based on CEEMDAN and modified flower pollination algorithm for wind speed forecasting. Energy Convers Manag 136:439–451

Zhang S, Wang J, Guo Z (2018) Research on combined model based on multi-objective optimization and application in time series forecast. Soft Comput. https://doi.org/10.1007/s00500-018-03690-w

Zhang S, Wang C, Liao P, Xiao L, Fu T (2022) Wind speed forecasting based on model selection, fuzzy cluster, and multi-objective algorithm and wind energy simulation by Betz's theory. Expert Syst Appl 116509

Acknowledgements

This work was supported by Western Project of the National Social Science Foundation of China (Grant No.18XTJ003).

Funding

The work is funded by 'Western Project of the National Social Science Foundation of China (Grant No.18XTJ003).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

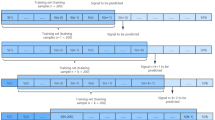

For the data, only with a better understanding of the features of the data we can better select the model to prepare for future work. In order to achieve better results, we must consider the characteristics of the data. Generally speaking, the linear model has a better fitting effect for linear data, as the nonlinear model does for nonlinear data.

Only when we understand the characteristics of the data we can achieve good results in future forecasting work. For the data, it is not just linear or nonlinear, but both. Therefore, it is necessary to judge the linear nonlinearity of the data used in this paper, so we constructed the above experiments.

From the results of Tables 7 and 8, wind speed data are both linear and nonlinear by hypothesis test. So, the linear models and nonlinear models considered in our proposed forecasting model are correct and necessary.

1.1 Basic methods and theories

1.1.1 Support vector machines

The support vector machine (SVM) method is a machine learning method that was proposed by Vapnik (1997) and the nonlinear function can be written as follows:

where \(\phi \left( x \right)\) is the kernel function that maps the data from low-dimensional space to high dimensional space. \({\upomega }\) and b are the coefficient and threshold, respectively.

Using loss function \(\varepsilon\) to optimize regression function, and the best regression function is found by the minimum value of the loss function. \(\varepsilon\) is as follows:

Constraints:

where C is a penalty factor: \(\xi_{i}\), \( \hat{\xi }_{i}\) are slack variables, b is the offset, and \(x_{i}\), \(y_{i}\) are the input and output, respectively.

After the operation, the linear regression function can be obtained as follows:

where \( K\left( {x_{g} x_{i} } \right)\) is the kernel function of SVM.

1.1.2 Long short-term memory network

The long short-term memory (LSTM) network is a recurrent neural network (RNN), which was proposed by Hochreiter and Schmidhuber (Hochreiter and Schmidhuber 1997) in 1997 and can be written as follows (Greff et al. 2017):

where t is the time; N is the cells of LSTM; \(\omega_{z} , \omega_{i} , \omega_{f} , \omega_{o} \in R^{N \times M} \) are the input weights; \(r_{z} , r_{i} , r_{f} , r_{o} \in R^{N \times M}\) are the recurrent weights; \(p_{i} , p_{f} , p_{o} \in R^{N}\) are the peephole weights (Gers and Schmidhuber 2000); \(b_{z} , b_{i} , b_{f} , b_{o} \in R^{N}\) are the biases; \( g\left( x \right), h\left( x \right)\) and \( \sigma \left( x \right)\) are activation functions; \(z^{t}\) is the activation of the input block; \(i^{t}\) is the activation of the input gate; \(f ^{t}\) is the activation of the forget gate; \(c^{t}\) is the cell state at time t; \(o^{t}\) is the activation of the output gate; and \(y^{t}\) is the output of the cell at time t.

1.1.3 Autoregressive integrated moving average

The autoregressive integrated moving average (ARIMA) model is one of the most popular forecasting models in the wind speed forecasting field (Contreras et al. 2003) and can be written as follows:

These above formulas are recorded as ARIMA (p, d, q), where \(B^{q} X_{t} = X_{t - q}\), \(X_{t}\) is a time series at time t, \(\varepsilon_{t}\) is the random error at time t, and B is the backward shift operator.

1.1.4 Back-propagation neural network

Back-propagation neural network (BPNN) is a widely used multi-layer feedforward neural network that is based on a gradient descent method that minimizes the sum of the squared errors between the actual output value and the expected output value. The output function is between 0 and 1, which can convert input to output to achieve continuous non-linear mapping (Guo et al. 2011) and can be written as follows:

The topology of the BPNN is as follows:

where Xmin and Xmax are the minimum and maximum value of the input array or output vectors, and \(X_{i}^{{\prime }}\) denotes the real value of each vector.

Step 1. Calculate the outputs of all hidden layer nodes:

where the activation value of node j is \({\text{net}}_{j}\), \(w_{ji}\) represents the connection weight from input node i to hidden node j, bj represents the bias of neuron j, yj represents the output of hidden layer node j, and f is the activation function of a node, which is usually a sigmoid function.

Step 2. Calculate the output data of the neural network:

where \(w_{0j}\) represents the connection threshold from hidden node j to the output node, b0 represents the bias of the neuron, O1 represents the output data of the network, and f0 is the activation function of the output layer node.

Step 3. Minimize the global error via the training algorithm:

where Z represents the real data vector of the output, and m represents the number of outputs.

1.1.5 Generalized regression neural network

Generalized regression neural networks (GRNN) were proposed by Specht (1991), and the theoretical basis was nonlinear kernel regression analysis. The network was based on nonlinear regression theory and consisted of four layers of neurons: the input layer, pattern layer, summation layer, and output layer.

Definition 1

Setting the joint probability density function of the random variable x and y to f (x, y), the observed value of x is X. Thus, the estimation value as follows:

Definition 2

To set the probability density function f (x, y), which is unknown, but can be obtained using a nonparametric estimation, we use the sample observation values of x and y:

where Xt and Yt are the sample observation values of x and y, respectively; \(\delta\) is the smoothing parameter; n is the number of samples; and p is the dimensionality of the random vector x. Here, we can calculate Y using f (x, y) instead of f (x, y) in formula (30). Finally,

where \(\hat{Y}(X)\) is the weighted average of all sample observations of \(Y_{t}\), and every weight factor \(Y_{t}\) is the Euclidean squared distance index value between the corresponding samples \(X_{t}\) and X.

1.1.6 Radial basis function neural network

The radial basis function neural network (RBFNN) (Schwenker et al. 2001) is an efficient feedforward neural network that exhibits better approximation performance and global optimal ability than other feedforward networks. The structure of the neural network is simple and the training speed is fast. An RBFNN also includes three layers: an input layer, a hidden layer with a nonlinear activation function (the radial basis function), and an output layer.

The RBFNN was proposed by Schwenker, and this network exhibits better approximation performance and global optimal ability than other feedforward networks. The neural network also has a simple structure and fast training speed. There are three layers: an input layer, an output layer, and a hidden layer with a nonlinear activation function.

Definition 1

The modelled input is a real-number vector \(x \in {\mathbb{R}}^{n}\). Then, the output vector of the neural network is a scalar function of the input vector, \(\varphi :{\mathbb{R}}^{n} \to {\mathbb{R}}\), given by

where N represents the total number of neurons in the hidden layer, the centre vector of the ith neuron is represented by \({\text{c}}_{{\text{i}}}\), and \({\text{a}}_{{\text{i}}}\) is the weight of neuron i in the linear output neuron.

Definition 2

A radial basis function is a scalar function that is radially symmetric. Thus, it is defined as a monotone function of the Euclidean distance between any point x in space and \(c_{i}\) of a centre vector. The most commonly used radial basis function is the Gauss kernel function, given as follows:

where \(c_{i}\) is a centre vector and \(\sigma\) is a width parameter that controls the radial scope of the function.

1.1.7 Adaptive network-based fuzzy inference system

Jang (1993) combined the best features of the fuzzy system and neural network to construct an adaptive network-based fuzzy inference system (ANFIS). ANFIS integrates the human inference style of fuzzy inference system (FIS) by using input–output sets and a set of if–then fuzzy rules. FIS (Neshat et al. 2012) has structured knowledge, in which each fuzzy rule describes the current behaviour of the system; however, it lacks adaptability to changes in the external environment. Therefore, the concept of neural network learning and FIS are combined in ANFIS (İnan et al. 2007). ANFIS is a method that uses neural network learning and fuzzy inference to simulate complex nonlinear mapping. This method has the ability to deal with the uncertain noisy and imprecise environments (Liu and Ling 2003). ANFIS uses the training process of the neural network to adjust the membership function and the related parameters close to the expected data set (Wu et al. 2009).

Layer 1: This layer is the input layer, which is responsible for the fuzziness of the input signal. Each node I is a node function represented by square node:

where x (or y) is the input of node i, Ai, Bi are fuzzy sets, and \(O_{i}^{1}\) is the membership function value of Ai and Bi, indicating the degree to which X and Y belong to Ai and Bi. Usually, the \(\mu_{{A_{i} }}\) and \(\mu_{{B_{i} }}\) are chosen as bell-shaped functions or Gaussian functions. The membership function has some parameters; these parameters are called premise parameters.

Layer 2: The nodes in this layer are responsible for multiplying the input signals and calculating the firing strength of each rule. The output is

where the output of each node represents the credibility of the rule.

Layer 3: This layer normalizes all applicability, each node is represented by N. The ratio of the ith rule’s firing strengths to the sum of all rules’ firing strengths is calculated by the ith node:

Layer 4: Calculating the output of the fuzzy rule, the output is

where \(\overline{\omega }_{i}\) is the output of layer 3 and {pi, qi, ri} is the parameter set (consequent parameters).

Layer 5: The single node of this layer is a fixed node that calculates the total output of all input signals:

1.1.8 Extreme learning machine

Extreme learning machine (ELM) is a machine learning algorithm proposed by Huang, which was designed for single-layer feedforward neural networks (SLFNNs) (Huang et al. 2006). The main feature of ELM is that the parameters of hidden layer nodes can be randomly generated without adjustment. The learning process only needs to calculate the output weights.

Definition 3

An SLFNN consists of three parts: an input layer, hidden layer, and output layer. The output function of the hidden layer is given as follows:

where x is the input vector, \(\beta\) is the output weight of the ith hidden node, and h(x) is the hidden layer output mapping, called the activation function, defined as follows:

Here, \(b_{i}\) is the parameter of the feature mapping (also called the node parameter), and \(a_{i}\) is called the input weight. In the calculation, the parameter of the feature mapping is randomly initialized and is not adjusted. Hence, the feature mapping of the ELM is also random.

1.1.9 Elman neural network

The Elman neural network is a machine learning algorithm proposed by Elman designed for SLFNN. In addition to the input layer, the hidden layer, and the output layer, it also has a special contact unit. The contact unit is used to memorize the previous output value of the hidden layer unit. It can be considered as a delay operator. Therefore, the feedforward link part can be corrected for the connection weight, whilst the recursive part is fixed, that is, the learning correction cannot be performed. The mathematic model of Elman can be written as follows (Elman 1990):

where f (x) is a sigmoid function, and \(0 \le \alpha < 1\) is a self-connected feedback gain operator. If the \(\alpha = 0\), then the network is a standard Elman neural network, if the \(\alpha \ne 0\), then the network is a modified Elman neural network, u is the input data with n-dimensional vector, x is a hidden layer output, Xc is the hidden layer output with an n-dimensional vector, y is the output for the network with an m-dimensional vector, and WI1, WI2, and WI3, are connection weights with \(n \times n\)-, \(n \times q\)-, and \(m \times m\)-dimensional matrices, respectively.

We set the actual output of the k-step system to be \(y_{d} (k)\), define the error function as \( E\left( k \right) = \frac{1}{2}(y_{d} (k) - y(k))^{T} (y_{d} (k) - y(k))\), and let derivative E be the connection weights A, WI1, WI2, WI3. The learning algorithm of an Elman network can be obtained using the gradient descent method:

where r is the node number of the input layer, n is the node number of the hidden layer and unit layer, and m is the node number of the output layer. \(\eta_{1}\), \(\eta_{2}\), and \(\eta_{3}\) are the learning steps of \(W^{I1}\), \(W^{I2}\), and \(W^{I3}\), respectively.

1.1.10 Weighted information criterion (WIC)

The weighted information criterion (WIC) was initially proposed to find the best ANN model (Egrioglu et al. 2008). In this paper, WIC was applied to four real-time series datasets in order to measure the forecasting performance of the examined sub-models’ architectures and to decide the sub-models of the combined model. The number of input nodes depends on the number of sub-models of the combined model. The mean absolute percentage error (MAPE), root mean square error (RMSE), Akaike information criterion (AIC), Bayesian information criterion (BIC), and direction accuracy (DA) were chosen as the model selection criteria. The MAPE and RMSE were used to detect deviations between the actual values and the forecasting values. The AIC and BIC were used for penalizing large models. The DA measured the forecasting direction accuracy. Although the MAPE and RMSE can also be used individually to select a model, the models they select tend not to be sufficiently detailed. These criteria are calculated as follows:

where y is the actual value, \(\hat{y}\) is the forecasted value, T is the total number of data items, and m is the number of ANN weights.

In this paper, we used a special criterion called the modified direction accuracy (MDA), which was proposed according to the special direction accuracy criterion. The MDA criterion is calculated in the following way:

The following describes the algorithm of model selection strategy based on WIC:

(1) All of the structure of the sub-models, which consist of the combined model are determined. For instance, we have five input layer nodes, one output layer node, and 15 hidden layer nodes. Thus, the total number of possible structures is 18.

(2) The best weights of AIC, BIC, RMSE, MAPE, DA, and MDA are determined using the training data and calculated with the training data.

(3) The five criteria must be standardized for neural network structures.

(4) Then, the WIC is calculated as follows:

WIC = 0.2 × (MAPE + RMSE) + 0.1 × (AIC + BIC) + 0.2 × (MDA + (1 – DA)).

(5) We choose an architecture with the minimum WIC.

1.1.11 The theory of the combined model

The combination forecasting theory indicates (Bates and Granger 2001) that if the M forecasting models can solve a certain forecasting problem, the weight coefficients should be appropriately selected, and then the results of the M forecasting methods are added to obtain a new model named the combined model. The results of the combined model are better than the M models. Assuming that the actual time series data is presented in the form of y, the number of sample points is i, yi is the forecasting value obtained by the ith forecast model, the forecast error is e, and the weight coefficient of the ith forecasting model is w, then the general combined forecast model can be expressed as follows:

where \(\hat{\omega }_{i}\) is the estimated value of \(\omega_{i}\) and represents the weight of each single model, \(\hat{y}_{t}\) is the forecasting value of the combined model. Determining the weight coefficient of each model is a key step in establishing a combined forecasting model. Then, by solving the optimization problem of the combination model, an optimal combination model can be obtained. Then this optimization problem can be expressed as:

When the predefined absolute error or the maximum number of iterations are reached, the optimization process is stopped.

1.1.12 Multi-objective optimization theory

The optimization problems of the objective function with multiple measurement indexes in the domain of definition can be solved by multi-objective optimizations. The objective function checks and balances the shortcomings of the forecast model in many aspects by assigning weights to each measurement index, to improve the forecasting accuracy and stability. Generally speaking, multi-objective optimization problems (MOPs) can be divided into two categories: constrained problems and non-constrained problems. A constrained problem with j inequality and k equality constraints can be expressed as (Laumanns et al. 2006):

where M is the number of objectives, \(x = (x_{1} , x_{2} , \ldots , x_{n} )^{T}\) is the decision vector, and n is the number of decision variables. In (61), \(\Omega = \mathop \prod \nolimits_{i = 1}^{n} \left[ {x_{i}^{L} , x_{i}^{U} } \right] \subseteq R^{n}\) is called the decision space, where \(x_{i}^{L}\) and \(x_{i}^{U}\) are the lower and upper limits of the decision variables, respectively.

When the inequality and equality constraints in (61) are omitted, an unconstrained multi-objective problem is obtained, which is expressed as follows

And this method was widely used in many fields like carbon dioxide emissions forecast (Liu et al. 2022), electricity price forecast (Fu et al. 2020) and electricity demand forecast (Zhang et al. 2018).

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Fu, T., Zhang, S. Wind speed forecast based on combined theory, multi-objective optimisation, and sub-model selection. Soft Comput 26, 13615–13638 (2022). https://doi.org/10.1007/s00500-022-07334-y

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00500-022-07334-y