Abstract

This paper presents a literature survey on time-dependent statistical modelling of extreme waves and sea states. The focus is twofold: statistical modelling of extreme waves, and space- and time-dependent statistical modelling. The first part consists of a literature review of statistical modelling of extreme waves and wave parameters, most notably the modelling of extreme significant wave height. The second part addresses statistical modelling of time- and space-dependent variables in a more general sense, with emphasis on the methodology and models used in other relevant application areas. It was found that limited effort has been put into developing statistical models for waves incorporating spatial and long-term temporal variability, and it is suggested that model improvements could be achieved by adopting approaches from other application areas. In particular, Bayesian hierarchical space–time models were identified as promising tools for spatio-temporal modelling of extreme waves. Finally, a review of projections of future extreme wave climate is presented.

1 Introduction

According to casualty statistics, one of the major causes of ship losses is bad weather (Guedes Soares et al. 2001), which stresses the importance of taking extreme sea state conditions adequately into account in ship design. Therefore, a correct and thorough understanding of meteorological and oceanographic conditions, most notably the extreme values of relevant wave and wind parameters, is of paramount importance to maritime safety. Thus, there is a need for appropriate statistical models to describe these phenomena.

When designing ships and other marine and offshore structures, relevant safety regulations and design standards should be based on the best available knowledge. Meteorological data for the last 50+ years are available and these are often assumed to be representative also of the current situation. However, ships and other marine structures are designed for lifetimes of several decades, and design codes and standards should be based on knowledge about the operating environment throughout the expected lifetime of the structure, i.e. several decades into the future. Such knowledge will also be crucial for any risk assessment of maritime transportation or offshore operations.

According to the IPCC Fourth Assessment Report (IPCC 2007), the globe is currently experiencing climate change and the Earth is warming. It is also very likely that human activities and emission of greenhouse gases are mainly responsible for the recent rise of global temperatures. Projections of future climate indicate that it is very likely that frequencies and intensities of extreme weather events will increase (IPCC 2007). Model projections also show a poleward shift of the storm tracks with more extreme wave heights in those regions.

Thus, it is increasingly evident that climate change is a reality. An overwhelming majority of researchers and scientists agree on this and it is reasonable to assume that the averages and extremes of sea states are changing and cannot be considered stationary. Hence, it is no longer sufficient to base design codes on stationary wave parameters without any consideration of how these are expected to change in the future. There is a need for time-dependent statistical models that can take the time-dependency of the integrated wave parameters into account, and also adequately model the uncertainties involved, in order to predict realistic operating environments throughout the lifetime of ships and marine structures.

This paper aims at providing a comprehensive, up-to-date review of statistical models proposed for modelling long-term variability in extreme waves and sea states as well as a review of alternative approaches from other areas of application. The paper is organized as follows: Section 2 outlines alternative sources of wave data, Sect. 3 comprises a review of statistical models for extreme waves, Sect. 4 presents a review of relevant spatio-temporal statistical models from other areas of application, Sect. 5 reviews projections of future wave climate and Sect. 6 concludes with some recommendations for further research. An abbreviated version of this work was presented at the OMAE conference this year (Vanem 2010).

Efforts have been made to include all relevant and important work to make this literature survey as complete as possible, and this has resulted in a rather voluminous list of references at the end of the paper. Notwithstanding, due to the enormous amount of literature in this field, some important works might inevitably have been omitted. This is unintended and it should be noted that important contributions to the discussion herein might exist of which I have not been aware. Nevertheless, it is believed that this literature study contains a fair review of relevant literature, that it gives a good indication of the state of the art within the field, and that it may serve as a basis for further research on stochastic modelling of extreme waves and sea states.

1.1 Integrated sea state parameters

The state of the sea changes constantly, and it is therefore neither very practical nor very useful to describe the sea for an instantaneous point in time. Therefore, sea states are normally described by different averages and extreme values for a certain period of time, often referred to as integrated sea state parameters. Typically, such integrated parameters include the significant wave height, mean wave period, mean main wave direction, spread of the wave direction and mean swell. Such integrated wave parameters represent averages over a defined period of time, typically in the order of 20–30 min.

Integrated wave parameters, which are averages over different periods of time, will have their own averages and extremes. Of particular interest may be the m-year return value of the significant wave height, \(\mathrm{SWH}_m\), which is defined as the value of \(H_S\) that is exceeded on average once every m years. In ship design, \(\mathrm{SWH}_{20}\) has traditionally been of particular interest since ships are normally designed for a lifetime of 20 years. The modelling of such extreme values, for example for the significant wave height, is therefore of interest.
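
As a worked illustration of this definition, and under the simplifying assumption (not made explicit in the text above) that annual maxima of \(H_S\) are independent and identically distributed with distribution function \(F\), the m-year return value satisfies

\[ F(\mathrm{SWH}_m) = 1 - \frac{1}{m}, \]

so that the probability of at least one exceedance of \(\mathrm{SWH}_m\) during a lifetime of \(L\) years is

\[ P_L = 1 - \left(1 - \frac{1}{m}\right)^{L}. \]

For \(m = L = 20\) this gives \(P_L = 1 - 0.95^{20} \approx 0.64\); that is, under these assumptions the 20-year return value is more likely than not to be exceeded at least once during a 20-year lifetime.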

It is also of interest to investigate how such average wave parameters vary over time. In particular, long term variations (i.e. how these parameters will vary in the next 50–100 years) will be an important basis for design of marine and offshore structures with expected lifetimes in the range of several decades and also for maritime risk analyses. This is of particular importance at times where climate change indicates that the future is not well represented by today’s situation (i.e. where an increase in extreme weather and sea state is expected).

1.2 Waves as stochastic processes

Although the dynamics of the sea and the mechanisms underlying the generation of waves on the sea surface inevitably follow the laws of physics and therefore, in principle, the sea state could be described deterministically, in reality this is not possible due to the complexity of the system. Hence, the description of waves and the sea must be done probabilistically. The sea is a dynamic system that is influenced by innumerable factors and an infinite number of interrelated parameters would be needed in order to provide an exact description of the sea at any given point in time. It is simply not possible to know each and every one of these parameters. The unknown parameters introduce uncertainties to any description of the system and an exact description of the sea is therefore not feasible. Thus, the problem of describing the sea turns into a statistical problem, and probabilistic models are needed in order to represent waves on the sea surface and to provide a better understanding of the maritime environment in which ships operate. In this regard stochastic models would seem to be the most appropriate approach to describe extreme waves. Also, the fact that the sea state is normally described through different average and extreme properties, as discussed briefly above, indicates that statistical tools are appropriate to model waves and sea states. A comprehensive overview of statistical techniques, methodologies, theories and tools used in climatic analyses is presented in von Storch and Zwiers (1999).

Stochastic modelling of ocean waves can be performed on two very different time scales. In the short-term models, the parameters of most concern are those for individual waves such as individual wave height, wave length and period, etc. The times involved in such models are normally in the order from a few seconds to a couple of hours. The long-term models mainly refer to the description of spectral parameters, and the times that are involved normally span over many years. It is the latter time scales that are of main interest in the present work, considering modelling of possible long-term trends due to climate change.

1.3 Predicting the impact of climate change on extreme sea states

The state of the oceans and the characteristics of the waves are influenced by innumerable external factors, and the most influential boundary conditions are related to the atmosphere and the global and local climate in general. Atmospheric pressure, wind, temperature, precipitation, solar radiation and heat, tidal movements, the rotation of the earth and movements of the seabed (e.g. from earthquakes or volcanic activities) are examples of external factors that jointly influence the generation of waves on the sea surface. In one sense, some of the average and extreme properties of the sea state can be regarded as stationary if the overall average boundary conditions do not change. That is, in spite of the continuous variations of sea states over time, the averages such as seasonal average wave heights and return periods for extreme waves can be considered as stationary if the average boundary conditions (e.g. average atmospheric pressure, average wind, average temperatures, etc.) remain stationary.

However, in recent years it has become increasingly apparent that the climate system overall is not stationary and that the climate will change in the near future—in fact it has been observed that the climate is already undergoing a change with a global long-term trend towards higher temperatures and more frequent and intense severe weather events, although local and regional trends may differ from this global trend. These climate changes—man-made or not—will thus change the overall boundary conditions for the sea, and the assumption that the average sea states can be regarded as stationary ceases to be valid.

In order to predict future trends in sea state parameters in the non-stationary case, one may therefore start with predicting the trends in the boundary conditions such as temperature, atmospheric pressure and wind. Assuming that a significant part of the climate change is man-made and can be ascribed to the increasing emission of greenhouse gases, most notably CO2, and aerosols, predictions of climate change can be made based on various emission scenarios or forcing scenarios (Nakićenović et al. 2000). These forcing scenarios can then be fed into climate models to predict global trends in meteorological variables, which can again be used to predict trends in average and extreme properties of sea waves. However, most wave models are deterministic and not able to handle the inherent uncertainties involved in a rigorous manner.

Estimates of future \(H_S\) return values are difficult to obtain since there are no projections of future \(H_S\) fields. However, projections of sea level pressure provided by climate models are reasonably reliable and it is known that the \(H_S\) fields are highly correlated with sea level pressure fields. Therefore, one approach could be to model \(H_S\) fields by regressing on projected sea level pressure fields, as was done in Wang et al. (2004). Other covariates may also be used to predict changes in extreme wave climate from projected changes in the overall climate, and the utilization of such dependencies may prove important in modelling long-term trends in extreme waves.

2 Wave data and data sources

As in all statistical modelling, a crucial prerequisite for any sensible modelling and reliable analysis is the availability of statistical data. For example, if models describing the spatio-temporal variability of extreme waves are to be developed, wave data with sufficient spatio-temporal resolution is needed. Furthermore, the lack of adequate coverage in the data will restrict the scope of the statistical models that can be used.

Wave data can be obtained from buoys, laser measurements, satellite images, shipborne wave recorders or be generated by numerical wave models. Of these, buoy measurements are most reliable, but the spatial coverage is limited. For regions where buoy data are not available, satellite data may be an alternative for estimation of wave heights (Krogstad and Barstow 1999; Panchang et al. 1999), and there are different satellites that collect such data. Examples of satellite missions are the European Remote Sensing Satellites (ERS-1 and ERS-2), the Topex/Poseidon mission and Jason-1 and -2 missions.

Wave parameters derived from satellite altimeter data were demonstrated to be in reasonable agreement with buoy measurements by the end of last century (Hwang et al. 1998). More recently, further validation of wave heights measured from altimeters has been performed, and the agreement with buoy data is generally good (Queffeulou 2004; Durrant et al. 2009). However, corrections due to biases may be required, and both negative and positive biases for the significant wave height have been reported, indicating that corrections are region-dependent (Meath et al. 2008). Sea state parameters such as significant wave height derived from synthetic aperture radar images taken from satellites were addressed in Lehner et al. (2007).

Ship observations are another source of wave data which covers areas where buoy wave measurements are not available. The Voluntary Observing Ship (VOS) scheme has been in operation for almost 150 years and has a large set of voluntarily collected data. However, because ships tend to avoid extreme weather whenever possible, extreme wave events are likely to be under-represented in ship observations and hence such data are not ideally suited to model extreme wave events (DelBalzo et al. 2003; Olsen et al. 2006).

Recently, a novel wave acquisition stereo system (WASS), based on a variational image sensor and video observational technology, was developed in order to reconstruct the 4D dynamics of ocean waves (Fedele et al. 2009). The spatial and temporal data provided by this system would be rich in statistical content compared to buoy data, but the availability of such data is still limited.

In general, measurements of wave parameters are more scarce than meteorological data such as wind and pressure fields, which are collected more systematically and cover a wider area. An alternative is therefore to use output from wave models that use meteorological data as input rather than to use wave data that are measured directly.

Wave models are normally used for forecast or hindcast of sea states (Guedes Soares et al. 2002). Forecasts typically predict sea states up to 3–5 days ahead. Hindcast modelling can be used to calibrate the models after precise meteorological measurements have been collected. It can also be used as a basis for design, but it is stressed that quality control is necessary and possible errors and biases should be identified and corrected (Bitner-Gregersen and de Valk 2008).

Currently, data are available from various reanalysis projects (Caires et al. 2004). For example, 40 years of meteorological data are available from the NCEP/NCAR reanalysis project (Kalnay et al. 1996) that could be used to run wave models (Swail and Cox 2000; Cox and Swail 2001). A more recent reanalysis project, ERA-40 (Uppala et al. 2005), was carried out by the European Centre for Medium-Range Weather Forecasts (ECMWF) and covers a 45-year period from 1957 to 2002. The data contain six-hourly fields of global wave parameters such as significant wave height, mean wave direction and mean wave period as well as mean sea level pressure and wind fields and other meteorological parameters. A large part of these reanalysis data is freely available for download from their website for research purposes.

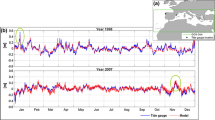

It has been reported that the ERA-40 dataset contains some inhomogeneities in time and that it underestimates high wave heights (Sterl and Caires 2005), but corrected datasets for the significant wave height have been produced (Caires and Sterl 2005). Hence, a new 45-year global six-hourly dataset of significant wave height has been created, and the corrected data show clear improvements compared to the original data. In Caires and Swail (2004) it is stated that this dataset can be obtained freely from the authors for scientific purposes.

3 Review of statistical models for extreme waves

In order to model long-term trends in the intensity and frequency of occurrence of extreme wave events or extreme sea states due to climate change, appropriate models must be used. There are numerous stochastic wave models proposed in the literature, but most of these are developed for other purposes than predicting such long-term trends. Models used for wave forecasting, for example in operational simulation of safety of ships and offshore structures, typically have a short-term perspective and cannot be used to investigate long-term trends. Also, many wave models assume stationary or cyclic time series, which would not be the case if climate change is a reality.

There are different approaches to estimating the extreme wave heights at a certain location based on available wave data, and some of the most widely used are the initial distribution method, the annual maxima method, the peak-over-threshold method and the MEan Number of Up-crossings (MENU) method. The initial distribution method uses data (measured or calculated) of all wave heights, and the extreme wave height of a certain return period is estimated as the quantile \(h_p\) of the wave height distribution \(F(h)\) corresponding to probability \(p\). The annual maxima approach uses only the annual (or block) maxima, and the extreme wave height is then assumed to follow one of the three limit distributions that together form the generalized extreme value family. The peak-over-threshold approach uses data with wave heights greater than a certain threshold, and thus allows for an increased number of samples compared to the annual maxima approach. Waves exceeding this threshold would then be modelled according to the Generalized Pareto distribution. However, estimates from the peak-over-threshold method have been shown to depend clearly on the choice of threshold, which limits their reliability. The MENU method determines the return period of an extreme wave of a certain wave height by requiring that the expected or mean number of up-crossings of this wave height will be one for that time interval.
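
The threshold dependence of the peak-over-threshold approach can be explored with a small numerical sketch. The code below is an illustration only, not a method taken from the surveyed papers: it fits a Generalized Pareto distribution to threshold excesses with simple method-of-moments estimators (an assumption made here to keep the example dependency-free; likelihood-based fitting is more common in practice), and the storm peaks are synthetic Weibull draws with hypothetical parameters. All function names are introduced for this example.

```python
import math
import random


def fit_gpd_moments(excesses):
    """Method-of-moments estimates (sigma, xi) of a Generalized Pareto fit."""
    n = len(excesses)
    mean = sum(excesses) / n
    var = sum((e - mean) ** 2 for e in excesses) / (n - 1)
    ratio = mean * mean / var
    xi = 0.5 * (1.0 - ratio)            # shape parameter
    sigma = 0.5 * mean * (ratio + 1.0)  # scale parameter
    return sigma, xi


def gpd_return_level(u, sigma, xi, rate_per_year, m_years):
    """Level exceeded on average once every m_years, given threshold u."""
    n_exc = rate_per_year * m_years  # expected exceedances of u in m years
    if abs(xi) < 1e-9:               # exponential-tail limit (xi -> 0)
        return u + sigma * math.log(n_exc)
    return u + sigma / xi * (n_exc ** xi - 1.0)


def pot_return_level(peaks, n_years, threshold, m_years):
    """Fit the excesses over `threshold` and return the m-year level."""
    excesses = [h - threshold for h in peaks if h > threshold]
    sigma, xi = fit_gpd_moments(excesses)
    rate = len(excesses) / n_years
    return gpd_return_level(threshold, sigma, xi, rate, m_years)


if __name__ == "__main__":
    random.seed(0)
    n_years = 40
    # Synthetic, purely illustrative storm peaks (m): 30 per year,
    # Weibull with scale 3.0 and shape 1.6 (hypothetical values).
    peaks = [random.weibullvariate(3.0, 1.6) for _ in range(30 * n_years)]
    for u in (3.0, 4.0, 5.0):
        print(u, round(pot_return_level(peaks, n_years, u, 20), 2))
```

Running the script prints one 20-year return level estimate per threshold; comparing these values gives a rough impression of how sensitive the estimate is to the threshold choice for a given data set.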

Another approach useful in extreme event modelling is the use of quantile functions, an alternative way of defining a probability distribution (Gilchrist 2000). The quantile function, \(Q\), is a function of the cumulative probability of a distribution and is simply the inverse of the cumulative distribution function: \(Q(p) = F^{-1}(p)\) and \(F(x) = Q^{-1}(x)\). This function can then be used in frequency analysis to find useful estimates of the quantiles of relevant return periods \(T\) of extreme events in the upper tail of the frequency distribution, \(Q_T = Q(1 - 1/T)\).
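
A minimal sketch of the relation \(Q_T = Q(1 - 1/T)\) is given below, assuming for illustration that annual maxima follow a Gumbel distribution, for which the quantile function has a closed form. The choice of distribution and the location and scale values (`mu`, `beta`) are hypothetical and are not taken from the surveyed literature.

```python
import math


def gumbel_cdf(x, mu, beta):
    """F(x) for a Gumbel distribution with location mu and scale beta."""
    return math.exp(-math.exp(-(x - mu) / beta))


def gumbel_quantile(p, mu, beta):
    """Q(p) = F^{-1}(p), valid for 0 < p < 1."""
    return mu - beta * math.log(-math.log(p))


def return_value(T, mu, beta):
    """Q_T = Q(1 - 1/T), with F the distribution of annual maxima."""
    return gumbel_quantile(1.0 - 1.0 / T, mu, beta)


if __name__ == "__main__":
    mu, beta = 8.0, 1.2  # hypothetical parameters, in metres
    for T in (20, 50, 100):
        print(T, round(return_value(T, mu, beta), 2))
```

Because \(Q\) and \(F\) are inverses, `gumbel_cdf(gumbel_quantile(p, mu, beta), mu, beta)` recovers `p` up to rounding error, which is a convenient sanity check when implementing other quantile functions.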

Yet another approach for estimating the maxima of a stationary process is to model the number of extreme events, defined as the number of times the process crosses a fixed level u in the upward direction, as a Poisson process (a counting process {N(t), t ≥ 0} with N(0) = 0, independent increments and with number of events in a time interval of length t Poisson distributed with mean λt is said to be a Poisson process with rate λ) and apply the Rice formula to compute the intensity of the extreme events (see e.g. Rychlik 2000).
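
For reference, the Rice formula mentioned above can be stated as follows, using notation introduced here for illustration. For a stationary, differentiable process \(X(t)\) with joint density \(f_{X,\dot X}\) of the process and its time derivative, the upcrossing intensity of the level \(u\) is

\[ \mu^{+}(u) = \int_0^{\infty} z\, f_{X,\dot X}(u, z)\, dz . \]

In the special case of a zero-mean stationary Gaussian process with spectral moments \(m_0\) and \(m_2\) (the variances of the process and of its derivative), this reduces to

\[ \mu^{+}(u) = \frac{1}{2\pi}\sqrt{\frac{m_2}{m_0}}\,\exp\left(-\frac{u^2}{2 m_0}\right), \]

and, if upcrossings of a high level are treated as a Poisson process with rate \(\lambda = \mu^{+}(u)\), the maximum \(M_T\) over a period of length \(T\) satisfies approximately

\[ P(M_T \le u) \approx \exp\left(-T\,\mu^{+}(u)\right). \]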

In the following, a brief review of some wave models proposed in the literature will be given. This includes a brief description of some short-term and stationary wave models as well as a more comprehensive review of proposed approaches to modelling long term trends due to global climatic changes. An introduction to stochastic analysis of ocean waves can be found in Ochi (1998) and Trulsen (2006), albeit the latter with a particular emphasis on freak or rogue waves.

3.1 Short-term stochastic wave models

Waves are generated from wind actions and wave predictions are often based on knowledge of the generating wind and wind-wave relationships. Most wave models for operational wave forecasting are based on the energy balance equation; there is a general consensus that this describes the fundamental principle for wave predictions, and significant progress has been made in recent decades (Janssen 2008). Currently, the third-generation wave model WAM is one of the most widely used models for wave forecasting (The WAMDI Group 1988; Komen et al. 1994), computing the wave spectrum from physical first principles. Other widely used wave models are Wave Watch and SWAN, and there exist a number of other models as well (The Wise Group et al. 2007). However, wave generation is basically an uncertain and random process which makes it difficult to model deterministically, and in Deo et al. (2001) and Bazargan et al. (2007) approaches using neural networks were proposed as an alternative to deterministic wave forecasting models.

There are a number of short-term, statistical wave models for modelling of individual waves and for predicting and forecasting sea states in the not too distant future. Most of the models for individual waves are based on Gaussian approaches, but other types of stochastic wave models have also been proposed to account for observed asymmetries (e.g. adding random correction terms to a Gaussian model (Machado and Rychlik 2003) or based on Lagrangian models (Lindgren 2006; Aberg and Lindgren 2008)). Asymptotic models for the distribution of maxima for Gaussian processes for a certain period of time exist, and under certain assumptions, the maximum values are asymptotically distributed according to the Gumbel distribution. However, as noted in Rydén (2006), care should be taken when using this approximation for the modelling of maxima of wave crests. A similar concern was expressed in Coles et al. (2003), albeit not related to waves.

Given the short-term perspective of these types of models, they cannot be used to describe long-term trends due to climate change, nor to formulate design criteria for ships and offshore structures, even though they are important for maritime safety during operation. Improved weather and wave forecasts will of course improve safety at sea, but the main interest in the present study is on long-term trends in ocean wave climate, and the effect this will have on maritime safety and on the design of marine structures. Therefore, short-term wave models will not be considered further herein.

3.1.1 Significant wave height as a function of wind speed

The significant wave height for a fully developed sea, sometimes referred to as the equilibrium sea approximation, given a fixed wind speed has been modelled as a function of the wind speed in different ways, for example as \(H_S \propto U^{5/2}\) or \(H_S \propto U^2\) (Kinsman 1965). This makes it possible to make short-term predictions of the significant wave height under the assumptions of a constant wind speed and unlimited fetch and duration. For developing sea conditions, with limited fetch or limited wind duration, the significant wave height as a function of wind speed \(U\) (m/s) and, respectively, fetch \(X\) (km) and duration \(D\) (h) has been modelled in different ways, for example as \(H_S \sim X^{1/2}U\) and \(H_S \propto D^{5/7}U^{9/7}\) (Özger and Şen 2007).

However, it is observed that the equilibrium wind sea approximation is seldom valid, and an alternative model for predicting the significant wave height for wind waves, \(H_S\), from the wind speed \(U_{10}\) at a reference height of 10 m was proposed in Andreas and Wang (2007), using a different, yet simple parametrization. Eighteen years of hourly data of significant wave height and wind speed from 12 different buoys were used to estimate the model, which can be written in the following form:

\(D\) denotes the water depth and \(C\), \(a\) and \(b\) are depth-dependent parameters. Based on comparison with measurements it was concluded that this model is reliable for wind speeds up to at least \(U_{10} = 25\) m/s.

It is out of scope of the present literature survey to review all models for predicting wave heights from wind speed or other meteorological data. Such models are an integral part of the various wave models available for wave forecasting, but cannot be used directly to model long-term variations in wave height. However, given adequate long-term wind forecasts, such relationships between wind speed and wave height may be exploited in simulating long-term wave data for long-term predictions of wave climate.

3.2 Stationary models

A thorough survey of stochastic models for wind and sea state time series is presented in Monbet et al. (2007). Only time series at the scale of the sea state have been considered, without modelling events at the scale of individual waves, and only at given geographical points. One section of Monbet et al. (2007) discusses how to model non-stationarity such as trends in time series and seasonal components, but for the main part of the paper it is assumed that the studied processes are stationary. The models have been classified in three groups: models based on Gaussian approximations, other non-parametric models and other parametric models. In the following, the main characteristics of these different types of wave models are highlighted.

Even though ocean wave time series cannot normally be assumed to be Gaussian, it may be possible to transform these time series into time series with Gaussian marginal distributions when they have a continuous state space (Monbet et al. 2007). The transformed time series can then be simulated by using existing techniques to simulate Gaussian processes. If \(\{Y_t\}\) is a stationary process in \(\mathbb{R}^d\), assume that there exists a transformation \(f: \mathbb{R}^d \to \mathbb{R}^d\) and a stationary Gaussian process \(\{X_t\}\) so that \(Y_t = f(X_t)\). Such a procedure consists of determining the transformation function \(f\), generating realizations of the process \(\{X_t\}\) and then transforming the generated samples of \(\{X_t\}\) into samples of \(\{Y_t\}\) using \(f\). A number of such models for the significant wave height have been proposed in the literature (e.g. Cunha and Guedes Soares (1999), Walton and Borgman (1990) for the univariate time series for significant wave height, \(H_s\), Guedes Soares and Cunha (2000), Monbet and Prevosto (2001) for the bivariate time series for significant wave height and mean wave period, \((H_s, T)\), and DelBalzo et al. (2003) for the multivariate time series for significant wave height, mean wave period and mean wave direction, \((H_s, T, \Theta_m)\)). However, it is noted that the duration statistics of transformed Gaussian processes has been demonstrated not to fit too well with data, even though the occurrence probability is correctly modelled (Jenkins 2002).
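
The three-step procedure described above (choose \(f\), simulate \(\{X_t\}\), transform to \(\{Y_t\}\)) can be sketched for the univariate case as follows. This is a simplified illustration under assumptions made here, not a reproduction of any of the cited models: the Gaussian process is taken to be a first-order autoregressive series with standard normal marginals, and the target marginal distribution of \(H_s\) is assumed to be Weibull with hypothetical scale and shape values.

```python
import math
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal, used for Phi(x)


def simulate_gaussian_ar1(n, phi, rng):
    """Stationary AR(1) series X_t with standard normal marginals."""
    x = [rng.gauss(0.0, 1.0)]
    innovation_sd = math.sqrt(1.0 - phi * phi)  # keeps Var(X_t) = 1
    for _ in range(n - 1):
        x.append(phi * x[-1] + innovation_sd * rng.gauss(0.0, 1.0))
    return x


def to_wave_height(x, scale, shape):
    """f(x) = G^{-1}(Phi(x)), with G an assumed Weibull marginal cdf."""
    u = min(STD_NORMAL.cdf(x), 1.0 - 1e-12)  # guard against log(0)
    return scale * (-math.log(1.0 - u)) ** (1.0 / shape)


def simulate_hs(n, phi=0.95, scale=2.0, shape=1.5, seed=0):
    """Simulate n sea states of H_s by transforming a Gaussian AR(1) series."""
    rng = random.Random(seed)
    gaussian = simulate_gaussian_ar1(n, phi, rng)
    return [to_wave_height(x, scale, shape) for x in gaussian]


if __name__ == "__main__":
    hs = simulate_hs(8)
    print([round(h, 2) for h in hs])
```

Since \(f\) is monotone here, the marginal distribution of the simulated series is controlled entirely by the assumed Weibull parameters, while the temporal dependence is inherited from the underlying Gaussian series.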

Multimodal wave models for combined seas (e.g. with wind-sea and swell components) have also been discussed in the literature (see e.g. Torsethaugen 1993; Torsethaugen and Haver 2004; Ewans et al. 2006), but these are generally not required to describe severe sea states where extremes occur (Bitner-Gregersen and Toffoli 2009).

A few non-parametric methods for simulating wave parameters have been proposed, as reported in Monbet et al. (2007). One may for example assume that the observed time series are Markov chains and use non-parametric methods such as nearest-neighbor resampling to estimate transition kernels. In Caires and Sterl (2005), a non-parametric regression method was proposed to correct outputs of meteorological models. A continuous space, discrete time Markov model for the trivariate time-series of wind speed, significant wave height and spectral peak period was presented in Monbet and Marteau (2001). However, one major drawback of non-parametric methods is the lack of descriptive power.

An approach based on copulas for multivariate modelling of oceanographic variables, accounting for dependencies between the variables, was proposed in de Waal and van Gelder (2005) and applied to the joint bivariate description of extreme wave heights and wave periods.

Parametric models for wave time series include various linear autoregressive models, nonlinear autoregressive models, finite state space Markov chain models and circular time series models. A modified Weibull model was proposed in Muraleedharan et al. (2007) for modelling of significant and maximum wave height. For short-term modelling of wave parameters, different approaches of artificial neural networks (see e.g. Deo et al. 2001; Mandal and Prabaharan 2006; Arena and Puca 2004; Makarynskyy et al. 2005) and data mining techniques (Mahjoobi and Etemad-Shahidi 2008; Mahjoobi and Mosabbeb 2009) have successfully been applied. A non-linear threshold autoregressive model for the significant wave height was proposed in Scotto and Guedes Soares (2000).

3.3 Non-stationary models

Many statistical models for extreme waves assume the stationarity of extreme values, but there are some non-stationary models proposed in the literature. In the following, some non-stationary models for extreme waves that have previously been presented in the literature will be reviewed. A review of classical methods for asymptotic extreme value analysis used in extreme wave predictions is presented in Soukissian and Kalantzi (2006).

3.3.1 Microscopic models

A number of statistical models have been presented in the literature where the focus has been to use sophisticated statistical methods to estimate extreme values at certain specific geographical points (e.g. based on data measurements at that location). This approach is natural, given the limited spatial resolution of available wave data, and aims at exploiting available data measurements at certain locations to the maximum, i.e. to obtain as good predictions as possible for locations where wave data are available. In the following, some of these will be briefly reviewed, even though it is noted that the aim of this study is to extend the scope and broaden the perspective of the statistical models to also include the spatial dimension.

A method for calculating return periods of various levels from long-term nonstationary time series data of significant wave height based on a new definition of the return period is presented in Stefanakos and Monbet (2006) and Stefanakos and Athanassoulis (2006). This definition is based on the mean number of upcrossings of a particular level and was first introduced in the context of prediction of sea-level extremes in Middleton and Thompson (1986). In Soukissian and Kalantzi (2007) and Guedes Soares and Scotto (2004), new de-clustering methods and filtering techniques are proposed in order to apply the r-largest-order statistics for long-term predictions of significant wave height. A new de-clustering method was also suggested in Soukissian et al. (2006) for applying the peaks-over-threshold method for \(H_s\) time series. An approach using stochastic differential equations for clarifying the relationship between long-term time-series data and its probability density functions in order to extrapolate long-term predictions from shorter historical data is proposed in Minoura and Naito (2006). Two approaches for estimating long term extreme waves are discussed in Hagen (2009) (i.e. an initial distribution approach and a Peak Over Threshold (POT) approach for storm events) and issues related to sampling variability, model fitting and threshold selection (for the POT analysis) are addressed.
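
The idea of defining a return period through the mean number of upcrossings can be illustrated with a simple empirical estimate. The sketch below is a deliberately simplified, stationary version introduced here for illustration and is not the nonstationary procedure of the cited papers: it counts upcrossings of a level in a regularly sampled \(H_s\) record and takes the return period as the record length divided by that count, so that on average one upcrossing occurs per return period. The function names and the sampling interval argument are hypothetical.

```python
def count_upcrossings(series, level):
    """Number of times the series passes from <= level to > level."""
    return sum(1 for a, b in zip(series, series[1:]) if a <= level < b)


def empirical_return_period(series, level, dt_years):
    """Record length (in years) divided by the number of upcrossings."""
    n_up = count_upcrossings(series, level)
    if n_up == 0:
        return float("inf")  # level is never up-crossed in the record
    duration_years = (len(series) - 1) * dt_years
    return duration_years / n_up


if __name__ == "__main__":
    # Toy record sampled twice a year (values in metres, illustrative only)
    record = [1.0, 3.0, 1.0, 3.0, 1.0, 3.0, 1.0]
    print(empirical_return_period(record, level=2.0, dt_years=0.5))
```

For levels that are rarely or never up-crossed in the available record, this direct count is not informative, which is one reason model-based extrapolation is needed for long return periods.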

Duration statistics of long time series of significant wave height \(H_s\) (i.e. the duration of sea states with different intensities) were analyzed in Soukissian and Samalekos (2006) using a bottom-up segmentation algorithm. This analysis makes use of the increasing or decreasing intensity of successive sea state conditions, and subdivides long-term \(H_s\) time series into subsequent series of monotonically increasing or decreasing intensities. These correspond to developing and decaying sea states, and the segmentation algorithm should ensure that a meaningful subdivision of the long-term time series is obtained. A sensitivity analysis of this approach, investigating the effect of the maximum allowed error on the segmentation of the \(H_s\) time series, is reported in Soukissian and Photiadou (2006).
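The idea of subdividing a series into developing and decaying sea states can be illustrated with a much simpler rule than the bottom-up algorithm used in the cited work. The sketch below only splits the series at its turning points and has no error tolerance, so it is an assumption-laden illustration rather than the published method; the function name and data are hypothetical.

```python
from typing import List, Sequence, Tuple


def monotone_segments(hs: Sequence[float]) -> List[Tuple[int, int, str]]:
    """Split a series into maximal runs of rising or falling values.

    Returns (start_index, end_index, label) tuples with inclusive indices;
    neighbouring segments share their turning point.
    """
    if len(hs) < 2:
        return []
    segments: List[Tuple[int, int, str]] = []
    start = 0
    rising = hs[1] >= hs[0]
    for i in range(1, len(hs) - 1):
        next_rising = hs[i + 1] >= hs[i]
        if next_rising != rising:
            # Direction changes at index i: close the current segment there.
            segments.append((start, i, "developing" if rising else "decaying"))
            start = i
            rising = next_rising
    segments.append((start, len(hs) - 1, "developing" if rising else "decaying"))
    return segments


segments = monotone_segments([1.0, 1.5, 2.4, 2.0, 1.1, 1.3, 1.9])
```

Segment durations follow directly from the index pairs. On noisy data this rule would produce many very short segments, which is presumably why an error-controlled segmentation is preferred in practice.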

Return periods of storms with an extreme wave above a certain threshold are found based on an equivalent triangular storm model in Arena and Pavone (2006). This approach is extended to find return periods analytically for storms with two or more waves exceeding the threshold in Arena et al. (2009) and Arena and Pavone (2009). The basic idea behind the equivalent triangular storm model is that, for a fixed location, it associates a triangle to each actual storm and represents a significant wave height time series by means of a sequence of triangular storms. The triangle height is the maximum significant wave height during the actual storm and the triangle base is such that the maximum expected wave height in the actual storm equals the maximum expected wave height in the triangular storm model (Bocotti 2000). The equivalent power storm model was presented in Fedele and Arena (2009) as a generalization of the equivalent triangular storm model to predict return periods for waves above a certain threshold. It is noted that the equivalent triangular storm is firmly based on what has become known as the Borgman Integral (Borgman 1973), which gives the distribution function for the largest wave, \(F_m(h) = P(H_m \le h)\), as follows, with \(H_m\) denoting the largest wave height, \(a^2(t)\) the time-varying Rayleigh parameter and \(T(t)\) the typical wave period at time t:
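In the form in which it is usually quoted for Rayleigh-distributed wave heights, and using the symbols defined above, the integral can be written as below. This is a reconstruction under the assumption that the storm or record occupies the time interval \([0, D]\); the symbol \(D\) is introduced here for illustration only.

\[
F_m(h) = P(H_m \le h) = \exp\left\{ \int_{0}^{D} \ln\left[ 1 - \exp\left( -\frac{h^2}{a^2(t)} \right) \right] \frac{dt}{T(t)} \right\}
\]

The factor \(dt/T(t)\) counts the expected number of waves in the interval \(dt\), so the exponent is the sum of the log-probabilities that each individual wave stays below \(h\), assuming successive waves are independent.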

A nonstationary stochastic model for long-term time series of significant wave height was presented in Athanassoulis and Stefanakos (1995), in which the detrended time series were decomposed into a periodic mean value and a residual time series multiplied by a periodic standard deviation: \(X(\tau) = \overline{X}_{\text{trend}}(\tau) + \mu (\tau) + \sigma(\tau)W(\tau).\) It was then shown that W(τ) could be considered stationary. Short-term and long-term wave characteristics of ocean waves were combined in order to develop nested, stochastic models for the distribution of maximum wave heights in Prevesto et al. (2000). Different time scales were introduced, i.e. fast time and slow time, and a stochastic process was modelled in the fast time where the state variables were modelled as a stochastic process in the slow time.
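The decomposition above can be illustrated with a short Python sketch that estimates the periodic mean μ and periodic standard deviation σ from an already detrended series and returns the standardized residual W. The simple per-phase averaging used here is an assumption made for illustration and is not necessarily the estimation procedure of the cited paper; names and numbers are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def seasonal_standardize(x: Sequence[float], period: int) -> Tuple[List[float], List[float], List[float]]:
    """Estimate periodic mean and standard deviation and return the residual W.

    `x` is assumed to be already detrended; W = (x - mu) / sigma per phase.
    """
    mu: List[float] = []
    sigma: List[float] = []
    for phase in range(period):
        values = x[phase::period]  # all observations sharing this phase
        m = sum(values) / len(values)
        var = sum((v - m) ** 2 for v in values) / len(values)
        mu.append(m)
        sigma.append(math.sqrt(var))
    w = [(v - mu[i % period]) / sigma[i % period] for i, v in enumerate(x)]
    return mu, sigma, w


# Two "years" of a toy detrended series with four seasons each (made-up numbers).
detrended = [3.0, 2.0, 1.0, 2.5, 4.0, 2.4, 1.2, 3.5]
mu, sigma, w = seasonal_standardize(detrended, period=4)
```

If the model is adequate, the residual series `w` should show no remaining seasonal pattern in its mean or variance, which is what the stationarity claim for W(τ) amounts to.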

The seasonal effect on return values of significant wave height was investigated in Menéndez et al. (2009), where a time-dependent generalized extreme value model was used for monthly maxima of significant wave height. Non-stationarity representing annual and semiannual cycles is introduced in the model via the location, scale and shape parameters, and the inclusion of seasonal variability is found to reduce the residuals of the fitted model substantially. Hence, the model provides a way of quantitatively examining the long-term seasonal distribution of significant wave height.
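A minimal Python sketch of such a seasonally varying model is given below. For brevity, only the location parameter carries annual and semiannual harmonics, whereas the cited study also lets the scale and shape vary; the harmonic parameterization, function names and coefficient values are assumptions for illustration.

```python
import math


def seasonal_location(t_years: float, beta: tuple) -> float:
    """Location parameter with annual and semiannual harmonics (t in years)."""
    b0, b1, b2, b3, b4 = beta
    w = 2.0 * math.pi * t_years
    return (b0 + b1 * math.cos(w) + b2 * math.sin(w)
            + b3 * math.cos(2.0 * w) + b4 * math.sin(2.0 * w))


def gev_quantile(p: float, mu: float, sigma: float, xi: float) -> float:
    """Quantile of the GEV distribution at non-exceedance probability p."""
    y = -math.log(p)
    if abs(xi) < 1e-12:
        return mu - sigma * math.log(y)  # Gumbel limit as xi -> 0
    return mu + sigma * (y ** (-xi) - 1.0) / xi


beta = (6.0, 1.5, 0.3, 0.2, 0.0)  # hypothetical coefficients, in metres
january = gev_quantile(0.95, seasonal_location(0.0, beta), sigma=1.0, xi=-0.1)
july = gev_quantile(0.95, seasonal_location(0.5, beta), sigma=1.0, xi=-0.1)
```

With these made-up coefficients the winter quantile exceeds the summer quantile by the difference in location, which shows how a time-dependent parameter translates directly into month-specific return values.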

Various other models for the long-term distribution of significant wave height have been suggested, e.g. using the Beta and Gamma models (Ferreira and Guedes Soares 1999), the Annual Maxima and Peak Over Threshold methods (Guedes Soares and Scotto 2001), non-linear threshold models (Scotto and Guedes Soares 2000), time-dependent Peak Over Threshold models for the intensity combined with a Poisson model for frequency (Méndez et al. 2006, 2008), different autoregressive models (Guedes Soares and Ferreira 1996; Guedes Soares et al. 1996), and a transformation of the data with a Gaussian model for the transformed data (Ferreira and Guedes Soares 2000). Short- and long-term statistics were combined in Krogstad (1985) in order to establish distributions of maximum wave heights and corresponding periods. Some considerations of bias and uncertainty in methods of extreme value analysis were discussed in Gibson et al. (2009), leading to recommended approaches for such analyses, which were applied to a set of wave data.

More recently, an interesting approach to long-term predictions of significant wave height, combining Bayesian inference methodology, extreme value techniques and Markov Chain Monte Carlo (MCMC) procedures, is presented in Scotto and Guedes Soares (2007). The benefit of using a Bayesian approach compared to a traditional likelihood-based approach is that prior knowledge about parameter values θ can be used together with observed data x to update a posterior distribution π(θ|x). Simulations from this posterior distribution can be obtained by constructing a Markov Chain whose invariant distribution, or target distribution, is proportional to the posterior distribution by employing the Metropolis-Hastings algorithm (see Robert and Casella 2004). This Bayesian approach was used to analyze a dataset of significant wave height collected in the northern North Sea.
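To make the sampling step concrete, the following is a minimal random-walk Metropolis sketch in Python for a single parameter. The toy posterior (a normal mean with a vague normal prior) is an assumption chosen so the result can be checked by hand; it is not the extreme value model of the cited study, and all names and data are hypothetical.

```python
import math
import random
from typing import Callable, List


def metropolis_hastings(log_post: Callable[[float], float], start: float,
                        step: float, n_iter: int, seed: int = 0) -> List[float]:
    """Random-walk Metropolis sampler for a one-dimensional parameter."""
    rng = random.Random(seed)
    theta = start
    current = log_post(theta)
    chain: List[float] = []
    for _ in range(n_iter):
        proposal = theta + rng.gauss(0.0, step)
        candidate = log_post(proposal)
        # Accept with probability min(1, posterior ratio); the symmetric
        # proposal density cancels from the Hastings ratio.
        if rng.random() < math.exp(min(0.0, candidate - current)):
            theta, current = proposal, candidate
        chain.append(theta)
    return chain


data = [2.1, 1.9, 2.4, 2.0, 1.6]  # made-up observations


def log_posterior(theta: float) -> float:
    """Unnormalized log posterior: N(0, 10^2) prior, unit-variance normal likelihood."""
    log_prior = -0.5 * (theta / 10.0) ** 2
    log_lik = -0.5 * sum((x - theta) ** 2 for x in data)
    return log_prior + log_lik


chain = metropolis_hastings(log_posterior, start=0.0, step=0.8, n_iter=20000)
kept = chain[2000:]  # discard an initial burn-in period
posterior_mean = sum(kept) / len(kept)
```

Only the unnormalized posterior is needed, which is why the approach extends to extreme value likelihoods where the normalizing constant is unavailable. Successive draws are correlated, so the chain should be checked for convergence before summaries are used.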

Another Bayesian approach to estimating posterior distributions of return periods for extreme waves is proposed in Egozcue et al. (2005). Here, the occurrence of extreme events is modelled as a Poisson-process with extreme wave heights distributed according to a generalized Pareto distribution.
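For a Poisson occurrence model with generalized Pareto exceedances, the return level for a given return period has a closed form once the parameters are fixed. The Python sketch below evaluates it for a single parameter set; in a Bayesian analysis it would be evaluated for each posterior draw to obtain a posterior distribution of the return level. The function name and parameter values are hypothetical.

```python
import math


def pot_return_level(return_period_years: float, rate_per_year: float,
                     threshold: float, scale: float, shape: float) -> float:
    """Level exceeded on average once per `return_period_years`.

    Exceedances of `threshold` arrive as a Poisson process with the given
    yearly rate and have generalized Pareto distributed excesses.
    """
    m = rate_per_year * return_period_years  # expected number of exceedances
    if abs(shape) < 1e-12:
        return threshold + scale * math.log(m)  # exponential-tail limit
    return threshold + scale * (m ** shape - 1.0) / shape


# Hypothetical parameters: 5 storms per year above a 6 m threshold.
hs_100 = pot_return_level(100.0, rate_per_year=5.0, threshold=6.0,
                          scale=1.2, shape=-0.1)
```

The formula follows from setting the yearly rate of exceeding the level equal to one over the return period.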

3.3.2 Combining long and short term wave height statistics

The Borgman Integral (Eq. 2) is a fundamental tool for combining the long-term distribution of significant wave height with the short-term distribution of the individual wave heights (Borgman 1973). This is often desired for estimating the maximum wave or crest height occurring in a long return interval. A similar method was proposed by Battjes (1972). It is noted that the particular expression of the Borgman Integral as presented in Eq. 2 is based on the assumption of a Rayleigh distribution for the individual wave height. A more general form would be, letting \(P(h \mid H_s)\) denote the short-term distribution of the individual wave height conditioned on the sea state,

Long time series of individual wave heights are typically not available and calculations must therefore be based on time series of sea state parameters such as the significant wave height. Hence, the problem of modelling the maximum wave height in a long time interval comprises three aspects: modelling of long-term sea state parameters (e.g. significant wave height), short-term modelling of individual wave heights conditioned on the sea state, and combining the two distributions. This can be done by first fitting a short-term distribution and then applying the Borgman Integral to this distribution. Integration of short-term second order models over time series of measured sea states was performed by Krogstad and Barstow (2004). A recent study concerned with finding the most accurate method for combining long and short term wave statistics was reported in Forristall (2008).
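The combination step can be sketched numerically by replacing the integral with a sum over a discrete sequence of sea states. The Python function below assumes Rayleigh-distributed individual wave heights with \(P(H > h \mid H_s) = \exp(-2h^2/H_s^2)\) (the standard narrow-band result, equivalent to a Rayleigh parameter \(a^2 = H_s^2/2\)) and independent successive waves; the function name, the use of a mean zero-crossing period for T, and the data are assumptions for illustration.

```python
import math
from typing import Sequence


def max_wave_cdf(h: float, hs: Sequence[float], tz: Sequence[float],
                 dt_seconds: float) -> float:
    """P(largest individual wave height <= h) over a sequence of sea states.

    Each sea state lasts `dt_seconds`, has significant wave height hs[i]
    and mean wave period tz[i] (seconds).
    """
    if h <= 0.0:
        return 0.0
    log_cdf = 0.0
    for hs_i, tz_i in zip(hs, tz):
        n_waves = dt_seconds / tz_i  # expected number of waves in the sea state
        p_exceed = math.exp(-2.0 * (h / hs_i) ** 2)
        if p_exceed >= 1.0:
            return 0.0  # h is negligible compared with Hs
        log_cdf += n_waves * math.log1p(-p_exceed)
    return math.exp(log_cdf)


# Three consecutive 3-hour sea states (made-up values).
hs_values = [3.0, 5.5, 4.0]
tz_values = [7.0, 9.0, 8.0]
p_below_10m = max_wave_cdf(10.0, hs_values, tz_values, dt_seconds=3 * 3600.0)
```

Replacing the Rayleigh exceedance probability with another short-term distribution conditioned on the sea state gives the more general form without changing the structure of the calculation.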

3.3.3 Spatio-temporal models for extreme waves

The spatio-temporal variability of ocean wave fields is complex, and the fields will generally be inhomogeneous in space and non-stationary in time, with strong temporal and spatial variation (Jönsson et al. 2002). Different models have been proposed in the literature for modelling this variability and for analyzing and synthesizing spatio-temporal wave data.

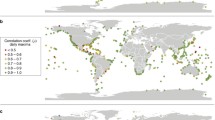

There has been significantly more focus on the temporal variability than on the spatial variability of wave fields, but the spatial behavior (i.e. the spatial inter-dependence and radius of influence of a set of spatially distributed stations) of significant wave height is investigated in Altunkaynak (2005). The methodology is based on the concept of trigonometric point cumulative semivariograms, consisting of cumulative broken lines where the angle between two successive lines connecting two station records is a measure of the regional dependence, ranging from 0 (complete independence) to 1 (complete dependence). Another approach for predicting the maximum wave height over a spatial area was proposed in Fedele et al. (2009), based on 4D video data of sea states acquired through a wave acquisition stereo system (WASS) and using the theory of Euler characteristics. A regional frequency analysis of extreme wave heights, analyzing peaks-over-threshold wave data from nine locations along the Dutch North Sea coast, was reported in Van Gelder et al. (2001). The different locations could be considered as a homogeneous region and it was shown that the Generalized Pareto Distribution is an optimal regional probability distribution for the extreme wave heights for the region. Notable differences were found between the regional quantile estimates and the at-site quantile estimates, indicating that it would be better to rely on the regional estimates in decision making.

Models for stochastic simulation of the annual (Boukhanovsky et al. 2003a) and synoptic (Boukhanovsky et al. 2003b) variability of inhomogeneous metocean fields were proposed as expansions of the field ζ(r, t) in terms of periodical empirical orthogonal functions in Boukhanovsky et al. (2003a, b):

where m(r, t) are the mathematical expectations, \(\phi_{kt}(r, t)\) are the spatio-temporal basis functions, ε(k, t) are inhomogeneous white noise and \(a_k(t)\) are the coefficients. r denotes the geographical coordinates and t time. The result of simulating these models is a set of simulated metocean fields ζ(r, t) in a discrete set of grid points and at discrete times. These could then be used to investigate the field extremes and rare events in terms of both spatial and temporal extremes, and wave data from the Barents Sea region have been used to test the models with reasonable agreement. The stochastic models for annual variability can be regarded as field generalizations of periodically correlated stochastic processes. The model for synoptic variability uses a Lagrangian approach, and the temporal sequence of storm centres is modelled as a finite-state Markov Chain with the storm extensions and field properties as spatio-temporal impulses.

Recently, spatio-temporal statistical models for the significant wave height have been reported that describe the variability of significant wave height over large areas by stochastic fields (Baxevani et al. 2005, 2009). This is based on constructing a homogeneous model valid for a small region and then extending this to a non-homogeneous model valid for large areas. Global wave measurements from satellites have been used for model fitting, providing wave data of spatial variability, but limited physical knowledge about the wave phenomena has been incorporated into the models. The resulting models can then be used to estimate the probability of a maximum significant wave height exceeding a certain level or to estimate the distribution of the (spatial) length of a storm (Baxevani et al. 2007). However, the temporal validity of this model is limited to the order of hours (Baxevani et al. 2009), and therefore it does not seem suited for studying long-term trends and the effects of climate change.

Caires et al. (2006b) used two approaches to model the extremes of non-stationary time series, i.e. the non-homogeneous Poisson process and a non-stationary generalized extreme value model. The non-homogeneous Poisson process was used to model extreme values of the significant wave height, obtained from the 40-year ECMWF re-analysis (ERA-40) (Uppala et al. 2005), and the results were compared to estimates obtained using a nonstationary generalized extreme value model (NS-GEV). The parameter of the Poisson distribution in this model was of the form λ = ∫∫λ(t, x) dt dx, where:

From projections of the sea level pressure under three different forcing scenarios (Nakićenović et al. 2000; Boer et al. 2000), projections of the parameters in the non-homogeneous Poisson process are made up to the end of the twenty-first century. Trends in these parameters are then determined, return value estimates of \(H_s\) are projected and their uncertainties are assessed.

4 Relevant statistical models from other areas of application

Extreme value analysis has a wide area of applications aside from ocean waves, in particular in various environmental sciences where events are also associated with spatio-temporal variations, and it is believed that some lessons can be learned by examining different statistical models for other types of extreme events.

An interesting discussion on the use of asymptotic models for the description of the variation of extremes is available in Coles et al. (2003), within the context of extreme rainfall modelling. It is concerned with the inability of such models to predict extreme, catastrophic events, leading to inadequate designs and lack of preparedness for such rare events. One of the reasons for this, according to Coles et al. (2003), is the use of models that do not take the uncertainties in both model and predictions adequately into account. For example, it is argued that even in cases where data support the reduction of the generalized extreme value model to a Gumbel model, this should not be done without an appraisal of the uncertainty this decision introduces, and as general advice it is suggested to use the generalized extreme value model rather than the Gumbel reduction. Furthermore, the preference for Bayesian analysis over the classical likelihood analysis is emphasized, even if using diffuse priors.

In this section, a review of relevant time- and space-dependent statistical models from other areas of application is presented. Further work will then focus on how these approaches can be used for statistical modelling of extreme waves and sea states.

4.1 Bayesian hierarchical space–time models

Modelling of wave data in space and time is an alternative to the common approach of extreme value analysis based on a point process representation, provided that adequate space–time wave data can be obtained. Wikle et al. (1998) propose a hierarchical Bayesian space–time model as an alternative to traditional space–time statistical models and apply it to an atmospheric data set of monthly maximum temperatures. Such models generally consist of three stages, often referred to as the data stage, the process stage and the parameter stage.
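In the bracket notation commonly used for hierarchical models, where \([A \mid B]\) denotes the conditional distribution of A given B, the three stages combine through Bayes' theorem as sketched below. The notation is an assumption introduced here for illustration.

\[
[\text{process}, \text{parameters} \mid \text{data}] \;\propto\; [\text{data} \mid \text{process}, \text{parameters}] \, [\text{process} \mid \text{parameters}] \, [\text{parameters}]
\]

The data stage describes measurement error around the latent process, the process stage carries the space–time dynamics, and the parameter stage holds the priors. Each factor can therefore be specified separately, which is what makes complex space–time dependence tractable.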

Similar models have also been used for modelling tropical ocean surface winds (Wikle et al. 2001), North Atlantic sea surface temperatures (Lemos and Sansó 2009), concentrations of PM10 pollution (Cocchi et al. 2007), ozone levels (Sahu et al. 2007) and earthquake data (Natvig and Tvete 2007). A brief overview of hierarchical approaches applied to environmental processes is presented in Wikle (2003). More recently, various hierarchical Bayesian space–time models for extreme precipitation events were proposed in Sang and Gelfand (2009). As a representative example of these Bayesian hierarchical space–time models, the model for earthquake data will be briefly reviewed in the following.

The modelling of earthquake data for earthquake prediction using a Bayesian hierarchical space–time approach in Natvig and Tvete (2007) considered a spatial resolution of 0.5 × 0.5° (about 50 × 50 km) and a temporal resolution of 4 months. The observations are, for each time period, the magnitude of the largest earthquake (on the Richter scale) observed within each grid cell. The model is implemented within a Markov Chain Monte Carlo framework using Gibbs sampling and additional Metropolis-Hastings steps. Four different model alternatives were suggested in a hierarchical structure: one main model and three levels of simplified models, each nested within the model at the higher level. The main features of the main model are briefly outlined in the following.

Denoting the discrete spatial and temporal locations x = 1, …, X and t = 1, …, T, respectively, the observed maximal magnitude earthquake at location x and time t is M(x, t). The latent variable Y(x, t) is defined in order to describe the maximal earthquake without a cut point at 0:

Corresponding to the latent variable Y(x, t), for every point in space–time (x, t) there is an underlying potential for a maximal magnitude earthquake, modelled by the hidden system state variables θ(x, t). The latent variable is then modelled as this potential and a random noise term as follows:

where the random noise terms are assumed independent and normally distributed, \(\varepsilon_Y(x, t) \sim N(0, \sigma_Y^2)\), with \(\sigma_Y^2\) being a random quantity. Furthermore, the earthquake potential is assumed to be decomposed into a time-independent contribution μ(x) and a time-dependent contribution with a spatial description \(\theta_S(x, t)\):

Now, the time-independent term is modelled as a Gaussian Markov Random Field, with spatial dependence only on its nearest neighbours, with the notation N = North, E = East, S = South, W = West, and e.g. μ(x)N the μ value in the grid cell north of x:

Here, \(c_{NS}\) and \(c_{EW}\) are spatial dependence parameters in the north–south and east–west directions respectively. The noise terms are again normally distributed with variance \(\sigma_\mu^2\).

In the expression above, \(\mu_0(x)\) denotes the Markov Random Field mean in grid point x and this is modelled as having a quadratic form, letting m(x) and n(x) denote longitude and latitude corresponding to the grid point x, in the following way (for x = 1, …, X):

The space–time dynamic term is modelled with a vector autoregressive model of order 1, with spatial dependence only on its nearest neighbours (with the same notation for North, South, etc. as above, and for x = 1, …, X, t = 1, …, T):

The exponential term in the equation above is included to account for strain which is built up in periods with only small earthquakes and reduced when tension is released as a major earthquake occurs. κ is a positive parameter that regulates the reduction in strain according to the largest earthquake in the previous time period for a grid cell. a(x) and β(x) are modelled in exactly the same way as μ(x) outlined above, introducing parameters \(a_{NS}\), \(a_{EW}\), \(b_{NS}\) and \(b_{EW}\) accordingly. The noise term \(\epsilon_{\theta_S}(x, t)\) is Gaussian \(N(0, \sigma_{\theta_S}^2) \) and independent in space and time. The various simplified, nested model alternatives are obtained by

i. setting β(x) = 0

ii. setting \(e^{-\kappa (M(x, t-1)-3)^2 I(M(x, t-1)> 3)} = 1\) to remove the strain term

iii. setting \(\theta_S(x, t) = 0\) to obtain a time-independent model
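The behaviour of the strain term that is switched off in the second simplification can be seen by evaluating the exponential factor directly. The short Python sketch below computes \(e^{-\kappa (M-3)^2 I(M > 3)}\) for a given previous-period magnitude M; the function name and the value of κ are hypothetical, and how the factor enters the full autoregressive equation is not reproduced here.

```python
import math


def strain_factor(m_prev: float, kappa: float, cut: float = 3.0) -> float:
    """Damping factor exp(-kappa * (m_prev - cut)^2 * I(m_prev > cut))."""
    if m_prev <= cut:
        return 1.0  # indicator is zero: no strain released, no damping
    return math.exp(-kappa * (m_prev - cut) ** 2)


# Factor after small, moderate and large earthquakes, for a made-up kappa.
factors = [strain_factor(m, kappa=0.5) for m in (2.5, 4.0, 6.0)]
```

The factor equals one when the previous maximum magnitude is at most 3 and decays quickly towards zero for larger events, which is how a major earthquake reduces the carried-over potential in the next period.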

The model outlined above contains a large number of parameters, and all prior parameter distributions are considered independent. A Markov Chain Monte Carlo approach using the Gibbs sampler, with an additional Metropolis-Hastings step for some of the parameters, was adopted for generating samples from the posterior distributions in order to arrive at posterior estimates and predictions. For an introduction to Markov Chain Monte Carlo methods, including the Gibbs sampler and the Metropolis-Hastings algorithm, reference is made to Robert and Casella (2004) or similar textbooks.

4.2 Continuous space models

Even though wave data are generally only available at certain specific locations, extreme waves should in principle be considered as a continuous process in space and time rather than a discrete process. Continuous space modelling of the extremes of a process requires the specification of a continuous space model for the marginal behaviour of the extremes and a continuous space specification of the dependence structure of the extremes. Hence, a generalization of the dependence structure of multivariate extremes to the infinite dimensional case is needed, and one such generalization is provided by the theory of max-stable processes (de Haan 1984). By definition, a stochastic process \(\{Y_t\}\) is called a max-stable process if the following property holds:
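In the form commonly given in the literature, the property can be stated as follows. This is a reconstruction in assumed notation, with \(A_{rt}\) and \(B_{rt}\) denoting normalising constants: if \(Y^{(1)}, \ldots, Y^{(r)}\) are independent copies of the process, then for every \(r \ge 1\) there exist constants \(A_{rt} > 0\) and \(B_{rt}\) such that

\[
\left\{ \frac{\max_{1 \le i \le r} Y_t^{(i)} - B_{rt}}{A_{rt}} \right\} \overset{d}{=} \{ Y_t \}
\]

That is, the pointwise maximum of independent copies has, after rescaling, the same law as the original process.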

In the following, a procedure for using the theory of max-stable processes for modelling data which are collected on a grid of points in space is reviewed. This approach is considered as an infinite dimensional extension of multivariate extreme value theory and has the advantage that it can be used to aggregate the process over the whole region and for interpolation to anywhere within the whole region. Models based on the resulting family of multivariate extreme value distributions are suitable for a large number of grid points.

In Coles (1993) and Coles and Tawn (1996), a class of max-stable process models for regional modelling of extreme storms was specified which can be estimated using all relevant extreme data and which is consistent with the multivariate extreme structure of the data. The essence of this approach is to describe the process of storms by the following components:

i. A phase space S of storm types so that the storm type is independent of their size

ii. An index space T for the region, conveniently referred to as the region itself

iii. A measure ν(ds) on S describing the relative frequency of storm types

iv. A function f(s, t) interpreted as the proportion of a storm of type s observed at t

With \(x_j\) interpreted as the size of the jth storm and \(s_j\) the type of the jth storm, if \(\{(x_i, s_i); i = 1, \ldots\}\) are taken to be the points of a Poisson process on (0, ∞) × S with intensity \(\mu(dx, ds) = x^{-2}\,dx\,\nu(ds)\) and f(s, t) is a positive function on S × T, then the process

is a max-stable process for t ∈ T.
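In the standard spectral construction that this statement appears to refer to, the process is the pointwise maximum \(Z_t = \max_i \{x_i f(s_i, t)\}\) (a reconstruction, stated here as an assumption). The Python sketch below simulates such a process in one spatial dimension. It takes the storm type s to be the storm centre, ν to be Lebesgue measure on a finite window, and f to be a Gaussian density, all of which are illustrative choices; the function name and parameter values are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple


def simulate_max_stable(sites: Sequence[float], window: Tuple[float, float],
                        bandwidth: float, n_storms: int = 2000,
                        seed: int = 1) -> List[float]:
    """Approximate draw of Z_t = max_i x_i * f(s_i, t) at the given sites."""
    lo, hi = window
    length = hi - lo
    rng = random.Random(seed)
    z = [0.0] * len(sites)
    arrival = 0.0
    for _ in range(n_storms):
        arrival += rng.expovariate(1.0)  # unit-rate Poisson arrival times
        size = length / arrival          # storm sizes x_i, generated in decreasing order
        centre = rng.uniform(lo, hi)     # storm type s_i, here the storm centre
        for k, t in enumerate(sites):
            u = (t - centre) / bandwidth
            f = math.exp(-0.5 * u * u) / (bandwidth * math.sqrt(2.0 * math.pi))
            z[k] = max(z[k], size * f)
    return z


field = simulate_max_stable(sites=[-1.0, 0.0, 1.0], window=(-10.0, 10.0), bandwidth=1.0)
```

Transforming the unit-rate arrival times to sizes `length / arrival` reproduces the intensity \(x^{-2}dx\) on the finite window. Truncating to a finite number of storms and a finite window makes the draw approximate, most noticeably for sites near the window edges.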

For statistical modelling of extreme storms as such a max-stable process, it was assumed that the spatial variability of storms could be described adequately by variability within a subset of data sites \(T_1\) (Coles 1993). Then, a multivariate extreme value model was fitted to the data for this subset and the model was extended smoothly as a max-stable process through suitable functions f(· , ·) on the basis of information from the remaining data sites. Such a model was fitted to rainfall data collected from 11 sites, and in spite of some interesting qualitative observations, the quality of fit of the model was rather poor.

In Buishand et al. (2008) a somewhat different approach of using max-stable processes for the modelling of spatial extreme rainfall is proposed based on random fields. Whereas Coles (1993), Coles and Tawn (1996) indicate how to analytically calculate quantiles of areal rainfall, in Buishand et al. (2008), the 100-year quantile of the total rainfall over an area in Holland is found by simulating synthetic daily rainfall fields using their estimated model. An extended Gaussian max-stable model for spatial extreme rainfall was also presented in Smith and Stephenson (2009), where Bayesian techniques are used in order to incorporate information other than data into the model, i.e. by using informative priors for the marginal site parameters and non-informative priors for parameters relating to the dependence structure of the process. The extended model is estimated using a pairwise likelihood within the Bayesian analysis and Markov chain Monte Carlo techniques were used to simulate from the posterior distributions, using a Gibbs sampler with a Metropolis step. Max-stable processes have also been applied to e.g. modelling of extreme wind speeds (Coles and Walshaw 1994).

4.3 Process convolution models

Several models for spatio-temporal processes based on process convolution have been proposed in the literature (e.g. Higdon 1998; Calder 2007, 2008; Sansó et al. 2008). The main idea is to convolve independent processes to construct a dependent process by some convolution kernel. This kernel may evolve over space and time thus specifying models with non-stationary dependence structure.

The model proposed in Higdon (1998) is motivated by estimation of the mean temperature field in the North Atlantic Ocean based on 80 years of temperature data for a region. First, the temperature field y(s, t) is modelled as a process over space s and time t as the sum of two processes

where z(s, t) is a smooth Gaussian process and ε(s, t) is an independent error process. The smooth process z(s, t) is constructed to model the data by taking the convolution of a 3-dimensional lattice process. Given a grid process \(x = (x_1, \ldots, x_m)\) with space–time coordinates \((\omega_1, \tau_1), \ldots, (\omega_m, \tau_m)\), the smooth field is expressed as

where the properties of the convolution kernel determine the smoothness of z. A separable kernel was used (i.e. a product of a kernel that smooths over space and one that smooths over time): \(K_s(\Delta s, \Delta t) = C_s(\Delta s) \cdot R(\Delta t)\). Inference on the resulting model was made using a Bayesian approach and simulating the posterior distribution of the mean temperature field over space and time using Markov chain Monte Carlo methods.
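A discrete process convolution with a separable kernel can be sketched in a few lines of Python. The example below uses one spatial dimension and Gaussian kernels for both space and time, which are simplifying assumptions; the cited model works on a 3-dimensional space–time lattice, and the function names and values here are hypothetical.

```python
import math
from typing import Sequence, Tuple


def gaussian_kernel(d: float, width: float) -> float:
    """Unnormalized Gaussian smoothing kernel evaluated at separation d."""
    return math.exp(-0.5 * (d / width) ** 2)


def convolve_field(s: float, t: float, knots: Sequence[Tuple[float, float]],
                   x: Sequence[float], space_width: float, time_width: float) -> float:
    """z(s, t) = sum_j C_s(s - omega_j) * R(t - tau_j) * x_j."""
    return sum(gaussian_kernel(s - omega, space_width)
               * gaussian_kernel(t - tau, time_width) * x_j
               for (omega, tau), x_j in zip(knots, x))


# Lattice knots (omega_j, tau_j) and latent values x_j (made-up numbers).
knots = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
latent = [0.8, -0.3, 0.5, 1.1]
z_mid = convolve_field(0.5, 0.5, knots, latent, space_width=1.0, time_width=1.0)
```

Because z is a linear function of the latent values, placing independent Gaussian priors on them makes z a Gaussian process whose covariance is determined entirely by the kernel widths.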

Following a similar approach, but using nonseparable, discrete convolution kernels, regional temperature measurements were modelled in space and time in Sansó et al. (2008). Two alternative sets of models were suggested. The first was to convolve spatial Gaussian processes with a kernel providing temporal dependencies and the second was to convolve autoregressive models with a kernel providing spatial interactions. In other words, the data could either be considered as a number of time series at each location (temporal convolution model) or as a number of realizations of spatial processes observed at some locations (spatial convolution model).

A dynamic process convolution model extends the discrete process convolution approach by defining the underlying process x to be a time-dependent process that is spatially smoothed by a smoothing kernel at each time-step (Calder 2007, 2008). Such models have been used in air quality assessment (e.g. in bivariate modelling of levels of particulate matter PM2.5 and PM10 in Calder (2008) and for multivariate modelling of the concentration of five pollutants in Calder (2007)). A continuous version in space and time is considered in Brown et al. (2000), where a model is formulated in discrete time and continuous space and a limit argument is applied to obtain continuous time as well. A general approach using cross-convolution of covariance functions for modelling of multivariate geostatistical data was proposed in Majumdar and Gelfand (2007). All of the convolution models discussed above used Bayesian approaches and Markov chain Monte Carlo methods for model specification.

Finally, it is noted that some limitations of the convolution model approach are reported in Higdon (1998) and Calder (2008). One is the impact of prior assumptions on the posterior distributions. Furthermore, it is stated that it would be preferable to allow the data to determine the kernels, which could depend on space and time, rather than specifying them a priori. In addition, the model for particulate matter is not able to handle extreme observations very well and permits nonsensical predictions.

4.4 Nonstationary covariance models

Many spatiotemporal models assume separability in space and time so that the space–time covariance function can be represented as the product of two models: one as a function of space and the other as a function of time. However, the rationale for using a separable model is often convenience rather than the ability of such models to describe the data well, and the assumption is often unrealistic. Other simplifying assumptions often employed are stationarity (e.g. second order stationarity, which means that the mean function is assumed constant and the space–time covariance function is assumed to depend only on the directional distance between measurement sites) and isotropy (i.e. that the covariance function is dependent only on the length of the separation and not on its direction). An example of a spatio-temporal covariance model where the assumptions of stationarity and separability are relaxed is presented in Bruno et al. (2009), applied to tropospheric ozone data.
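What separability implies can be made concrete with a small Python sketch. The exponential forms, the range parameters and the function name below are assumptions for illustration; the point is only the product structure and the identity it forces on the covariance.

```python
import math


def separable_cov(h: float, u: float, sill: float = 1.0,
                  space_range: float = 100.0, time_range: float = 5.0) -> float:
    """C(h, u) = sill * exp(-|h| / space_range) * exp(-|u| / time_range).

    h is the spatial separation and u the temporal lag.
    """
    return sill * math.exp(-abs(h) / space_range) * math.exp(-abs(u) / time_range)


# Separability forces C(h, u) * C(0, 0) == C(h, 0) * C(0, u) for all lags.
lhs = separable_cov(40.0, 2.0) * separable_cov(0.0, 0.0)
rhs = separable_cov(40.0, 0.0) * separable_cov(0.0, 2.0)
```

The identity says that the temporal correlation is the same at every spatial separation. That is rarely plausible for a propagating phenomenon such as a storm system and is one reason nonseparable models are of interest.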

Due to the increased availability of satellite measurements of many geophysical processes, global data are increasingly available. Such data often show strong nonstationarity in the covariance structure. For example, processes may be approximately stationary with respect to longitude but have covariance structures that depend strongly on latitude. In order to capture the nonstationarity in such global data, with a spherical spatial domain, a class of parametric covariance models is proposed in Jun and Stein (2008). These assume that processes are axially symmetric, i.e. that they are invariant to rotations about the earth’s axis and hence stationary with respect to longitude.

Assuming a homogeneous, zero-mean process \(Z_0\), a zero-mean nonstationary process Z may be defined by applying differential operators with respect to latitude and longitude, letting L and l denote latitude and longitude respectively (Jun and Stein 2007),

Now, A and B denote nonrandom functions depending on latitude (and may also in principle depend on longitude, but this would break the axial symmetry). A non-negative constant C corresponds to including homogeneous models for the case A(L) = B(L) = 0. In order to apply this model to real applications, the A and B functions need to be estimated, and it is suggested to use linear combinations of Legendre polynomials (Jun and Stein 2008).

The covariance model is applied to global column ozone level data and it is shown that the strong nonstationarity with respect to latitude as well as the local variation of the process can be well captured with only a modest number of parameters. Thus, it may be a promising candidate for modelling spatially dependent data on a sphere. Furthermore, an extension to spatio-temporal processes would be obtained by introducing a similar differential operator with respect to time in addition to the ones with respect to latitude and longitude. Such models should then be able to capture spatio-temporal nonstationary behaviour and to create flexible space–time interactions such as space–time asymmetry. Reviews of various methods and recent developments for the construction of spatio-temporal covariance models are presented in Kolovos et al. (2004) and Ma (2008).

4.5 Coregionalization models

A multivariate spatial process is a natural modelling choice for multivariate, spatially collected data. When the interest is in modelling and predicting such joint processes, it will be important to account for the spatial correlation as well as the correlation among the different variables. If this is modelled using a Gaussian process, the main challenge is the specification of an adequate cross-covariance function (Schmidt and Gelfand 2003), which can be developed through linear models of coregionalization (LMC). The linear model of coregionalization is reviewed in Gelfand et al. (2004), where the notion of a spatially varying LMC is proposed in order to enhance its usefulness by providing a class of multivariate nonstationary processes.

Traditionally, linear models of coregionalization have been used to reduce dimensions, approximating a multivariate process through a lower dimensional representation. However, they may also be used in multivariate process construction, i.e. obtaining dependent multivariate processes by linear transformation of independent processes. A general multivariate spatial model could be of the form

where \(\varvec{\epsilon}(s)\) is a white noise vector (i.e. \({\varvec{\epsilon}}(s) \sim N(0, {\mathbf{D}})\) where D is a diagonal matrix with \((\mathbf{D})_{jj} = \tau_j^2\)), v(s) arises from a linear model of coregionalization from independent spatial processes \(w(s) = (w_1(s), \ldots, w_p(s))\): v(s) = A w(s), and where \(\varvec{\mu}(s)\) may be assumed to arise linearly in the covariates, i.e. \(\mu_j(s) = X_j^T(s)\beta_j\), where each component may have its own set of covariates \(X_j\) and its own coefficient vector \(\beta_j\). If the term \(\varvec{\mu}(s)\) is ignored and the \(w_j(s)\) processes are assumed to have mean 0, variance 1 and a stationary correlation function \(\rho_j(h)\), then E(Y(s)) = 0 and the cross-covariance matrix associated with Y(s) becomes

with a j the jth column of A. Priors on the model parameters \(\varvec{\theta}\) consisting of {β j }, {τ 2 j }, T and ρ j , j = 1, …, p would then complete the model specification in a Bayesian setting, obtaining the posterior distribution of the model parameters
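As a minimal sketch of the construction, the following Python code builds the cross-covariance matrix of \(\mathbf{v}(s) = \mathbf{A}\mathbf{w}(s)\) at a given spatial lag as the sum over j of \(\rho_j(h)\,\mathbf{a}_j\mathbf{a}_j^T\). The exponential correlation function, the matrix `A`, the decay parameters `phis` and all function names are assumptions made for illustration only; they are not taken from Gelfand et al. (2004).

```python
import math


def exp_corr(h, phi):
    """Exponential correlation rho(h) = exp(-phi * |h|) (an assumed choice)."""
    return math.exp(-phi * abs(h))


def lmc_cross_cov(A, phis, h):
    """Cross-covariance of v(s) = A w(s) at lag h: sum_j rho_j(h) a_j a_j^T.

    A    : p x p coregionalization matrix (list of rows)
    phis : decay parameter of the correlation function of each w_j
    h    : spatial lag (a scalar distance in this sketch)
    """
    p = len(A)
    C = [[0.0] * p for _ in range(p)]
    for j, phi in enumerate(phis):
        rho = exp_corr(h, phi)
        a_j = [A[i][j] for i in range(p)]  # j-th column of A
        for r in range(p):
            for c in range(p):
                C[r][c] += rho * a_j[r] * a_j[c]
    return C


# Illustrative values only
A = [[1.0, 0.0],
     [0.5, 2.0]]
phis = [0.2, 1.0]

C0 = lmc_cross_cov(A, phis, 0.0)  # lag 0: reduces to A A^T
C3 = lmc_cross_cov(A, phis, 3.0)  # lag 3: each component decays at its own rate
```

At lag zero every \(\rho_j\) equals one, so the result reduces to \(\mathbf{A}\mathbf{A}^T\); at larger lags the components decay at different rates, which is what makes the cross-covariance richer than a single shared correlation function would allow.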

The extension to a spatially varying linear model of coregionalization is obtained by letting A be spatially dependent, i.e. replacing A with A(s) in v(s) = A(s) w(s) (Gelfand et al. 2004). v(s) will then no longer be a stationary process. Further extensions to spatio-temporal versions of the model, modelling v(s, t) = A(s, t)w(s, t), where the components of w(s, t) are independent spatio-temporal processes may also be feasible, but this was not further investigated.

A stationary Bayesian linear coregionalization model for multivariate air pollutant data was presented in Schmidt and Gelfand (2003), and Gelfand et al. (2004) present a commercial real estate example of a spatially varying model. Rather than taking the Bayesian approach, an Expectation-Maximization (EM) algorithm for the maximum-likelihood estimation of the parameters in a linear coregionalization model is developed in Zhang (2007) and applied to a spatial model of soil properties.

4.6 Generalized extreme value models

The generalized extreme value (GEV) distribution is a cornerstone of extreme value modelling, and in Huerta and Sansó (2007) non-stationary, location-dependent processes are studied using the GEV distribution where the parameters are allowed to vary in space and time. The modelling is based on a hierarchical structure assuming an underlying spatial model. Parameter changes over time (i.e. for the location, scale and shape parameters) are modelled by use of Dynamic Linear Models (West and Harrison 1997), which are a very general class of time series models. In this way, the trends are not constrained to have a specific parametric form and the significance of short-term changes can be assessed together with the long-term changes. It is also possible to estimate how the effects of covariates change over time. An extension of this model to include changes in space as well as in time is made using a process convolution approach in defining a Dynamic Linear Model on the parameters.

Several approaches for estimation of parameters and quantiles of the GEV distribution have been applied, such as maximum likelihood estimation, L-moments estimation, Probability Weighted Moments estimation and the method of moments. Recently, an alternative to these was developed: a full Bayesian GEV estimation method, which contains a semi-Bayesian framework of generalized maximum likelihood estimators and treats the shape, location and scale parameters as random variables (Yoon et al. 2010). However, these approaches do not consider non-stationarity.
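To illustrate how quantiles follow from the GEV parameters, and how a time-varying parameter changes them, the sketch below evaluates the standard GEV distribution function and the T-block return level, and then applies it with a location parameter that drifts linearly in time. The linear trend and all parameter values are assumptions chosen for illustration; they are not estimates from any wave data set, and the function names are hypothetical.

```python
import math


def gev_cdf(x, mu, sigma, xi):
    """GEV distribution function with location mu, scale sigma, shape xi."""
    if abs(xi) < 1e-9:  # Gumbel limit
        return math.exp(-math.exp(-(x - mu) / sigma))
    t = 1.0 + xi * (x - mu) / sigma
    if t <= 0.0:  # outside the support
        return 0.0 if xi > 0 else 1.0
    return math.exp(-t ** (-1.0 / xi))


def gev_return_level(T, mu, sigma, xi):
    """Level exceeded with probability 1/T in any one block (e.g. one year)."""
    if T <= 1.0 or sigma <= 0.0:
        raise ValueError("need T > 1 and sigma > 0")
    y = -math.log(1.0 - 1.0 / T)
    if abs(xi) < 1e-9:  # Gumbel limit
        return mu - sigma * math.log(y)
    return mu - (sigma / xi) * (1.0 - y ** (-xi))


# Illustrative, assumed values: annual maxima with location drifting by
# 0.01 units per year; scale and shape held constant.
mu0, trend, sigma, xi = 8.0, 0.01, 1.2, -0.1
levels = [gev_return_level(100, mu0 + trend * t, sigma, xi) for t in (0, 25, 50)]
```

With a non-stationary location the 100-block level is no longer a single number but a function of time, which is why stationary estimation methods can be misleading for design over a structure's lifetime.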

A generalized Probability Weighted Moments (PWM) method was suggested in Ribereau et al. (2008) to model temporal covariates and provide accurate estimation of return levels from maxima of non-stationary random sequences modelled by a GEV distribution. This is a generalization of the PWM method, which has proved to be efficient in estimating the parameters of the GEV distribution for IID processes, and is an alternative to Maximum Likelihood Estimation (MLE) for cases when the IID assumption is violated (e.g. in non-stationary cases). The approach is illustrated by applying it to time series of annual maxima of CO2 concentrations and seasonal maxima of cumulated daily precipitation.

An alternative to GEV models could be to use threshold models (Behrens et al. 2004). For example, various statistical methods for exploring the properties of extreme events in large grid point datasets were presented in Coelho et al. (2008), and a flexible generalized Pareto model that is able to account for spatial and temporal variation in the distribution of excesses was outlined. The generalized Pareto distribution parameters may incorporate the dependence of the extreme values on different explanatory variables related to spatial and temporal changes such as climate change. The methods were illustrated using mean surface temperatures of the Northern Hemisphere.

A generalized PWM method was introduced in Diebolt et al. (2007) in order to estimate the parameters of the generalized Pareto distribution (GPD) from finite length time series. A Bayesian framework for analysis of extremes in a non-stationary context was proposed in Renard et al. (2006) with a case study on peak-over-threshold data. Several probabilistic models, including stationary, step-wise changing and linear trend models, and different extreme value distributions were considered allowing modelling uncertainty to be taken into account.
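For orientation, the sketch below implements the classical PWM estimators for the GPD, not the generalized version of Diebolt et al. (2007). It assumes the parameterization with scale σ and shape ξ < 1, for which E[X] = σ/(1 − ξ) and E[X(1 − F(X))] = σ/(2(2 − ξ)); solving these two relations gives the estimators used in the code. The simulated data, the seed and the function names are illustrative assumptions.

```python
import random


def gpd_sample(n, sigma, xi, rng):
    """Draw n GPD excesses by inverting the distribution function."""
    out = []
    for _ in range(n):
        u = rng.random()
        if xi == 0.0:
            out.append(rng.expovariate(1.0 / sigma))  # exponential limit
        else:
            out.append(sigma / xi * ((1.0 - u) ** (-xi) - 1.0))
    return out


def gpd_pwm_fit(excesses):
    """Classical PWM estimates (sigma, xi) from threshold excesses.

    a0 estimates E[X]; a1 estimates E[X (1 - F(X))] using the usual
    unbiased weights (n - i) / (n - 1) on the ordered sample.
    """
    x = sorted(excesses)
    n = len(x)
    a0 = sum(x) / n
    a1 = sum(x[i] * (n - 1 - i) for i in range(n)) / (n * (n - 1))
    xi = 2.0 - a0 / (a0 - 2.0 * a1)
    sigma = 2.0 * a0 * a1 / (a0 - 2.0 * a1)
    return sigma, xi


rng = random.Random(1)  # fixed seed, for reproducibility only
data = gpd_sample(20000, sigma=2.0, xi=0.1, rng=rng)
sigma_hat, xi_hat = gpd_pwm_fit(data)
```

The estimators need only sorting and two weighted averages, which is part of the appeal of PWM methods for short records; they do, however, require a finite mean (ξ < 1).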

An alternative to the standard approach of modelling non-stationarity in threshold models (i.e. retaining a constant threshold and letting the parameters of the GPD be functions of some covariates) is proposed in Eastoe and Tawn (2009). This involves preprocessing: attempting to model the non-stationarity in the entire data set and then removing this non-stationarity from the data. If this preprocessing is successful, the extremes of the preprocessed data will have most, if not all, of their non-stationarity removed and a simple extreme value analysis of the preprocessed data can be employed. It is argued that this approach provides improved description of the non-stationarity of the extremes, clearer interpretation, easier threshold selection and reduced threshold sensitivity. The approach was also found to be superior to approaches with continuously varying thresholds.

4.7 Optimality models

One type of statistical model that has recently been applied in the evolutionary sciences is the optimality model. Such models assume the evolution of some biological trait towards an optimal state dictated by the environmental conditions. Due to a randomly changing environment, the optimal state is assumed to change over time, and the species are assumed to be adapting to this changing optimum with a certain phylogenetic inertia. One choice of process model for analysing such an adaptation-inertia problem is the Ornstein-Uhlenbeck process, as suggested in Hansen et al. (2008), represented by the stochastic differential equation

$$ dy = -\alpha\,(y - \theta)\,dt + \sigma_y\,dW_y $$

Here, \(dy\) is the change in some random variable \(y\) over a time step \(dt\), \(\alpha\) is a parameter measuring the rate of adaptation toward the optimum \(\theta\), \(dW_y\) is a random noise process and \(\sigma_y\) is the standard deviation of the random changes. Thus, evolution according to this model has two components: one is a deterministic pull toward the primary optimum and the other is a stochastic change without direction.
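As a minimal sketch of how such a process behaves, the following Python code simulates an Ornstein-Uhlenbeck path, assuming the form dy = −α(y − θ)dt + σ_y dW_y with dW_y taken as Gaussian white noise. The function name, the parameter values and the use of the exact Gaussian transition over each time step (rather than an Euler approximation) are illustrative choices, not taken from Hansen et al. (2008).

```python
import math
import random


def simulate_ou(y0, theta, alpha, sigma, dt, n_steps, rng):
    """Simulate dy = -alpha * (y - theta) dt + sigma dW on a regular grid.

    Uses the exact transition of the process over a step of length dt:
    the mean decays toward theta by exp(-alpha * dt) and the conditional
    variance is sigma^2 * (1 - exp(-2 * alpha * dt)) / (2 * alpha).
    """
    if alpha <= 0.0 or dt <= 0.0:
        raise ValueError("alpha and dt must be positive")
    decay = math.exp(-alpha * dt)
    step_sd = sigma * math.sqrt((1.0 - decay * decay) / (2.0 * alpha))
    path = [y0]
    for _ in range(n_steps):
        pull = theta + (path[-1] - theta) * decay  # deterministic pull
        path.append(pull + step_sd * rng.gauss(0.0, 1.0))  # undirected noise
    return path


# Illustrative values: a trait starting far from its optimum theta = 1
path = simulate_ou(y0=5.0, theta=1.0, alpha=0.7, sigma=0.3,
                   dt=0.1, n_steps=200, rng=random.Random(0))
```

The two terms inside the loop correspond to the two components described above: the pull toward the optimum, whose strength is set by α, and the undirected random change, whose size is set by σ_y. In the long run the path fluctuates around θ with variance σ_y²/(2α).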

A layered process is introduced for modelling adaptation to a randomly changing optimum, assuming that the optimum at any point on the phylogeny (that is, the history of organismal lineages as they change through time) is a function of a randomly changing predictor variable \(x\). Thus, the model is extended to the coupled stochastic differential equations below, where the predictor changes randomly with standard deviation \(\sigma_x\) and noise process \(dW_x\), and influences the trait only indirectly, through its influence on the optimum \(\theta(x)\).

$$ dy = -\alpha\,(y - \theta(x))\,dt + \sigma_y\,dW_y, \qquad dx = \sigma_x\,dW_x $$

Additional layers of hidden processes may also be modelled in this way, where each layer is responding to changes in the layer beneath. The model may also be extended in that the predictor variable itself may be modelled as an Ornstein-Uhlenbeck process, tracing some optimum. The Ornstein-Uhlenbeck process has also been proposed for modelling of drought and flood risks (Unami et al. 2010) and survival data (Aalen and Gjessing 2004) and has been widely used in financial modelling (Stein and Stein 1991; Barndorff-Nielsen and Shephard 2001; Benth et al. 2007).

It could be worthwhile to investigate whether an analogy to this approach would be appropriate for the development of extreme waves, i.e. whether the distribution of extreme sea states is adapting to a changing mean state due to the changing environment. For example, will there be a certain average wave climate given the changing environmental conditions such as the level of CO2 concentration in the atmosphere, global temperatures, greenhouse gas emissions etc.? In other words, it could be investigated whether the distribution of extreme waves in a changing environment could be adequately modelled using layered Ornstein-Uhlenbeck processes in some way.

4.8 Bayesian maximum entropy models

Bayesian maximum entropy (BME) models have been used to model spatiotemporal random fields. For example, in Choi et al. (2009), this approach was used for developing a systematic epidemic forecasting methodology used to study the space–time risk patterns of influenza mortality in California during wintertime. Influenza mortality rates were represented as spatiotemporal random fields and the Bayesian maximum entropy method was used to map the rates in space and time and thus generate predictions. Bayesian maximum entropy models have also been used for space–time mapping of soil salinity (Douanik et al. 2004), urban climate (Lee et al. 2008) and the contamination pattern from the Chernobyl fallout (Savelieva et al. 2005) and for modelling geographic distributions of species (Phillips et al. 2006).

In short, the principle of maximum entropy states that the probability distribution best representing the current state of knowledge, which may be incomplete, is the one with the largest entropy. If some testable information about a probability distribution function is given, then, among all trial probability distributions that encode this information, the one that maximizes the information entropy is taken to be the best representation of that information. This principle is applicable to problems of inference with a well-defined hypothesis space and incomplete data without noise. The Bayesian maximum entropy method can be used to predict the value of a spatiotemporal random field at an unsampled point in space–time based on precise (hard) and imprecise (soft) data.
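The principle can be illustrated with a small textbook-style example that is not part of the BME literature cited here: among all distributions on a finite set of values with a prescribed mean, the maximum entropy distribution has probabilities proportional to exp(λx), with λ chosen to match the mean. The sketch below finds λ by bisection; the die-face values, the target means and the function names are assumptions for illustration.

```python
import math


def entropy(probs):
    """Shannon entropy -sum p log p (natural logarithm)."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def maxent_with_mean(values, target_mean, tol=1e-12):
    """Maximum entropy distribution on `values` with a given mean.

    The solution has the form p_i proportional to exp(lam * x_i); the mean
    is increasing in lam, so lam can be found by bisection.
    """
    if not min(values) < target_mean < max(values):
        raise ValueError("target mean must lie strictly inside the range")

    def dist(lam):
        shift = max(lam * v for v in values)  # avoids overflow in exp
        w = [math.exp(lam * v - shift) for v in values]
        z = sum(w)
        return [wi / z for wi in w]

    def mean(lam):
        return sum(p * v for p, v in zip(dist(lam), values))

    lo, hi = -50.0, 50.0  # assumed bracket; wide enough for this example
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mean(mid) < target_mean:
            lo = mid
        else:
            hi = mid
    return dist(0.5 * (lo + hi))


faces = [1, 2, 3, 4, 5, 6]
p_fair = maxent_with_mean(faces, 3.5)    # no information beyond a fair mean
p_loaded = maxent_with_mean(faces, 4.5)  # mean constrained to 4.5
```

With the mean fixed at 3.5 the result is the uniform distribution, i.e. nothing is assumed beyond the constraint; fixing the mean at 4.5 tilts the probabilities toward the larger values and lowers the entropy.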

The BME method applied to influenza mortality risk (Choi et al. 2009) consists of three stages with different knowledge bases at each stage: the general knowledge base (core knowledge), the specificatory knowledge base (case-specific knowledge) and the integration knowledge base (union of the general and specificatory knowledge bases). The influenza risk is represented as a spatiotemporal random field X(p) defined at each space–time point p = (s, t). The influenza modelling approach then follows the three BME stages:

a. A probability density function \(f_g({\bf x}_{\text{map}})\) is constructed on the basis of the general knowledge base, where the vector \({\bf x}_{\text{map}}\) denotes a possible realization of the random field associated with the point vector \({\bf p}_{\text{map}}\). In general, \({\bf x}_{\text{map}}\) includes hard data \({\bf x}_{\text{hard}} = (x_1, \ldots, x_{m_h})\) at points \({\bf p}_{\text{hard}} = ({\bf p}_1, \ldots, {\bf p}_{m_h})\), soft data \({\bf x}_{\text{soft}} = (x_{m_h+1}, \ldots, x_m)\) at points \({\bf p}_{\text{soft}} = ({\bf p}_{m_h+1}, \ldots, {\bf p}_m)\) and the unknown estimates \(x_k\) at points \({\bf p}_k\).

b. At the specificatory stage, the specificatory knowledge base considers the hard data and the soft data.

c. At the integration stage, the general and specificatory knowledge bases are combined in a total knowledge base to give the integration pdf \(f_{\kappa}(x_{\kappa})\) at each mapping point \({\bf p}_k\) using the operational Bayesian formula

$$ f_{\kappa}(x_{\kappa}) = A^{-1} \int\limits_D f_g(x_{\text{map}})d\Xi_S(x_{\text{soft}}) $$ (22)

where \(A\) is a normalizing constant, and \(\Xi_S\) and \(D\) denote an integration operator and the range of integration determined by the specificatory knowledge base, respectively.

4.9 Stochastic diffusion models

A continuous time parameter stochastic process is referred to as a diffusion process if it possesses the Markov property and its sample paths \(X(t)\) are continuous functions of time \(t\). Many physical and other phenomena can be reasonably modelled by diffusion processes. Diffusion processes may be characterized by two infinitesimal parameters describing the mean and the variance of the infinitesimal displacements, defined as the following limits. Let the increment of the process accrued over a time interval \(h\) be \(\Delta_h X(t) = X(t + h) - X(t)\); then the infinitesimal parameters of the process are:

$$ \mu(x,t) = \lim_{h \downarrow 0} \frac{1}{h} E\left[\Delta_h X(t) \mid X(t) = x\right], \qquad \sigma^2(x,t) = \lim_{h \downarrow 0} \frac{1}{h} E\left[\left(\Delta_h X(t)\right)^2 \mid X(t) = x\right] $$

\(\mu(x,t)\) is sometimes referred to as the drift parameter, infinitesimal mean or expected infinitesimal displacement, and \(\sigma^2(x,t)\) is called the diffusion parameter or the infinitesimal variance; these are generally continuous functions of \(x\) and \(t\). Alternative characterizations of diffusion processes exist, e.g. based on stochastic differential equations.
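The meaning of the two infinitesimal parameters can be checked numerically in the simplest case. Taking the infinitesimal mean and variance as the limits of E[Δ_h X]/h and E[(Δ_h X)²]/h as h tends to zero, the sketch below simulates a Brownian motion with constant drift m and constant diffusion coefficient s, and recovers both parameters from the increments at a small but finite h. The constant-coefficient model, the parameter values and the function names are assumptions made for illustration.

```python
import math
import random


def simulate_drifted_bm(x0, m, s, h, n_steps, rng):
    """Path of dX = m dt + s dW sampled at spacing h (exact for constant m, s)."""
    path = [x0]
    for _ in range(n_steps):
        path.append(path[-1] + m * h + s * math.sqrt(h) * rng.gauss(0.0, 1.0))
    return path


def estimate_infinitesimal_parameters(path, h):
    """Finite-h estimates of the infinitesimal mean and variance.

    drift     ~ average increment / h
    diffusion ~ average squared increment / h  (carries a bias of m^2 * h,
                which vanishes as h tends to zero)
    """
    increments = [b - a for a, b in zip(path, path[1:])]
    n = len(increments)
    drift = sum(increments) / (n * h)
    diffusion = sum(d * d for d in increments) / (n * h)
    return drift, diffusion


# Illustrative values: drift 0.8, diffusion coefficient 1.2 (variance 1.44)
h = 0.01
path = simulate_drifted_bm(0.0, 0.8, 1.2, h, 100000, random.Random(3))
drift_hat, diffusion_hat = estimate_infinitesimal_parameters(path, h)
```

For state- or time-dependent parameters the same averages would have to be taken conditionally on X(t) being near x, which is the role of the conditioning in the definition.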

A methodology for analysing secular trends in the time evolution of certain variables, modelling the variables by nonhomogeneous stochastic diffusion processes with time-continuous trend functions is proposed in Gutiérrez et al. (2008). The methodology was applied to the evolution of CO2 emissions in Spain with the Spanish GDP as an exogenous factor affecting the trend component and hence introducing nonhomogeneity. The trend can be analysed by means of statistical fit of the trend functions of the stochastic diffusion model to the observed data, and the models were also found appropriate for medium-term forecasts.

Stochastic diffusion models have been applied to other temporal or spatial problems as well, such as modelling of tumor growth (Albano and Giorno 2006), ion channel gating (Vaccaro 2008), financial volatility (Todorov 2009) and scaling behaviour of precipitation statistics (Kundu and Bell 2006).

4.10 Regional frequency analysis

A method commonly used in hydrology, referred to as regional frequency analysis, utilizes data from several similar sites in order to estimate event frequencies, typically of extreme events, at a particular site. The main idea is that data from neighbouring or other sites where the frequency of the event under investigation is similar provide additional information and hence yield more accurate predictions than data from the particular site alone. This approach can also be used to interpolate to ungauged sites where there are no data, based on data from similar sites.