Abstract

Phase-field modeling of fracture has gained popularity within the last decade due to the flexibility of the related computational framework in simulating three-dimensional arbitrarily complicated fracture processes. However, the numerical predictions are greatly affected by the presence of uncertainties in the mechanical properties of the material originating from unresolved heterogeneities and the use of noisy experimental data. The objective of this work is to apply the Bayesian approach to estimate bulk and shear moduli, tensile strength and fracture toughness of the phase-field model, thus improving accuracy of the simulations with the help of experimental data. Conventional approaches for estimating the Bayesian posterior probability density function adopt sampling schemes, which often require a large amount of model estimations to achieve the desired convergence, thus resulting in a high computational cost. In order to alleviate this problem, we employ a more efficient approach called sampling-free linear Bayesian update, which relies on the evaluation of the conditional expectation of parameters given experimental data. We identify the mechanical properties of cement mortar by conditioning on the experimental data of the three-point bending test (observations) in an online and offline manner. In the online approach the parameter values are sequentially updated on the fly as the new experimental information comes in. In contrast, the offline approach is used only when the whole history of experimental data is provided once the experiment is performed. Both versions of estimation are discussed and compared by validating the phase-field fracture model on an unused set of experimental data.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The prediction of fracture is of main concern in many fields of engineering and the goal of several computational methods relying on different modeling frameworks. In the past two decades, a great amount of efforts were made to improve the accuracy of the numerical predictions mainly by (i) developing a more reliable fracture model and computational framework and (ii) identification of mechanical parameters with the help of experimental data.

Computational approaches for simulating the fracture behavior of brittle materials are generally classified into three categories: discrete crack models [1], lattice models [2, 3] and damage models [4]. In the discrete crack models, explicit modeling of cracks requires the mesh update by introducing new boundaries as the crack propagates. The development of the extended finite element method (XFEM), enriching the standard shape functions with discontinuous fields, can avoid remeshing [5]. However, the XFEM is very onerous to implement in three dimensions. In the cohesive zone model (CZM), crack paths are assumed to be known a priori and the insertion of cohesive elements between each pair of continuum elements in a mesh for unknown crack paths may lead to over-estimated crack areas [6]. An alternative is the so-called lattice model, in which the simulation results strongly depend on the chosen fracture criterion and element type [2, 3, 7]. In the local/gradient damage models, the stress-strain relation is hypothesized with the information coming from experiments [4]. Comprehensive summaries on this topic can be found in [8] and [9], and the references therein.

Phase-field modeling of fracture, stemming from Francfort and Marigo’s variational approach [10] is a very elegant and powerful framework to predict cracking phenomena. In this setting fracture is described by using a continuous field variable (order parameter) which provides a smooth transition between intact and fully broken material phases, and thus, fully avoids modeling cracks as discontinuities, see e.g. [11,12,13,14,15]. Purely based on energy minimization, the phase-field framework allows simulations of three-dimensional complicated fracture processes, including crack initiation, propagation, merging and branching in a unified framework without the need for ad-hoc criteria and remeshing. The model shares several features with gradient damage models [16].

In this work, we are concerned with cement mortar, which is known to be brittle or quasi-brittle. In brittle materials, the force resisted across a crack is zero, whereas quasi-brittle materials feature a softening behavior with a cohesive response. For both materials, no apparent plastic deformation occurs before fracture. Wu et al. [17] provided a qualitative and quantitative comparison between mixed-mode fracture test results in cement mortar and numerical simulations through the phase-field approach, and a good agreement was found.

The deterministic phase-field model, however, is not very suitable for the prediction of cracking in materials with unresolved heterogeneities. An example is cement mortar modeled as an homogeneous material but in fact exhibiting a heterogeneous and porous structure at a lower scale. The reason is that the parameterization of the model strongly depends on material characteristics which are not fully known. The choice of the parameter values certainly affects the accuracy of numerical simulations and thereby the estimation of the phase-field parameters given experimental data is required. One widely used and simple way to tune the parameters is to minimize the measured distance between the experimental data and their numerically predicted counterpart. However, the resulting optimization problem is often ill-posed and requires regularization.

The generalization of the optimization methods to the Bayesian approach offers a natural way of combining prior information with experimental data for solving inverse problems within a coherent mathematical framework [18, 19]. In this framework the knowledge about the parameters to be identified is modelled probabilistically, thus a priori reflecting an expert’s personal judgment and/or current state of knowledge about material characteristics. The information gained from the measurement data is used to reduce the uncertainty in the prior description via Bayes’s rule [19]. The posterior is thus the probabilistic prior conditioned on the measurement data. Such an approach is then more suitable than deterministic optimization approaches as if our measurement data do not contain information about the parameter set then Bayes rule will output prior description, whereas deterministic approaches will possibly become unstable and output non-physical values. Therefore, deterministic approaches usually use some type of regularization which further defines the parameter values.

The evaluation of Bayes’s posterior is always challenging. The posterior probability densities can be computed via the Markov chain Monte Carlo (MCMC) integration. However, the method is slowly converging and may lead to high computational costs especially when nonlinear evolution models such as the phase-field model are considered [20]. With the intention of accelerating the MCMC method, the transitional MCMC [21] is often used. The idea is to obtain the samples from series of simpler intermediate PDFs that converge to the target posterior density and are faster to evaluate. Further acceleration of MCMC-like algorithms can be achieved by constructing the proxy-(meta-/surrogate-) models via e.g. a polynomial chaos expansion (PCE) and/or a Karhunen-Loève expansion (KLE) [22,23,24], both of which are cheap to evaluate.

The approaches mentioned above result in the samples from the posterior density as a final outcome. However, a more efficient approach is to focus on posterior estimates that are of engineering importance, such as the conditional mean value or maximum a posteriori point (MAP) in a case of unimodal posterior. In this manner, the posterior random variable representing our knowledge about the parameter mean value can be evaluated via algorithms that are based on approximations of Bayes’s rule [19]. These algorithms give the posterior random variable (RV) as a function of the prior RV and the measurement. The simplest approximation is to try to get the conditional mean correct, as this is the most significant descriptor of the posterior. Such algorithms result from an orthogonal decomposition in the space of random variables [25], which upon restriction to linear maps gives the Gauss–Markov-Kalman filter. Numerical realisations of such maps can be computed again via sampling resulting in the so-called ensemble Kalman filter [26], or the computations can be performed in the framework of a polynomial chaos expansion (PCE) [25]. The latter one allows a direct algebraic way of computing the posterior estimate without any sampling at the update level. Therefore, the computational cost is greatly reduced, while the accuracy of the posterior estimate can be guaranteed as discussed in papers with a broad range of applications, e.g. diffusion [19], elasto-plasticity [19] and damage [27].

Applying the Bayesian approach for parameter identification of the mechanical models can be traced back to the 1970s [28], when elastic parameters describing linear mechanical behavior were estimated. Later on, this framework has been employed for various linear problem applications, e.g. mechanical response of composite [29], dynamic response [30], as well as thermal and diffusion problems [19, 31]. The extension to nonlinear models appeared later on. Rappel et al. [32] adopted the Bayesian approach to identify elastoplastic material parameters of one-dimensional single spring based on uniaxial tensile results, in which the probability density function (PDF) of the posterior was calculated by MCMC with an adaptive Metropolis algorithm. They accounted for the statistical errors which polluted the measurements not only in stress but also in strain. Rosić et al. [19] have applied the sampling-free linear Bayesian update via PCE to estimate the parameters in the elastoplastic model. The method in different variants was thoroughly discussed on a nonlinear thermal conduction example in [31]. Huang et al. [33] have utilized two Gibbs sampling algorithms for Bayesian system identification based on incomplete model data with application to structural damage assessment. The equation-error precision parameter was marginalized over analytically, such that the posterior sample variances were reduced.

The objective of this work is to identify mechanical parameters of cement mortar for phase-field modeling of fracture by using a sampling-free linear Bayesian approach. The measurements which update the prior probability description to a posterior one come from three-point bending tests. However, this paper does not represent just simple combination of nonlinear mechanics model (phase-field model) and sample-free linear Bayesian approach. As the identification procedure is probabilistic and linear, the conditional estimate of the phase-field parameter set will not be optimal due to the nonlinear measurement-parameter relationship. Hence, we develop online sequential algorithm in which the updating is performed step-wise (one measurement at the time) such that the measurement-parameter relationship can be observed as close to linear as possible. This is further compared to the offline approach in which complete measurement history is taken into account for the parameter estimation.

The paper is organised as follows. Section 2 introduces the basics on phase-field modeling of brittle fracture. In Sect. 3, the sampling-free linear Bayesian update of polynomial chaos representations is described. Section 4 is concerned with online and offline procedures for updating. In Sect. 5 the experimental setup of the three-point bending test is presented, and the updating process of the unknown material properties is described. The last section concludes the work with a discussion of the results and possible future work.

2 Phase-field modeling of brittle fracture

The phase-field approach used in this paper originates from the regularization of the variational formulation of brittle fracture by Francfort and Marigo [10]. For brittle materials, the fracture problem is formulated as the minimization problem of the energy functional

in which \({\mathcal {\varOmega }}\subset {\mathbb {R}}^n\), \(n=2,3\), is an open bounded domain representing an elastic n-dimensional body, \(\varGamma \subset \mathcal {\varOmega }\) is the crack set, \(\psi _{\mathrm{el,0}}(\varvec{\varepsilon })\) is the elastic strain energy density, \(G_{c}\) is the fracture toughness (or critical energy release rate), and \(\varvec{\varepsilon }=\frac{1}{2}\left( \nabla {\mathbf {u}} +\nabla {\mathbf {u}}^{T}\right) \) is the infinitesimal strain tensor with \({\mathbf {u}}\) as the displacement field. Introducing regularization as in Bourdin et al. [11], Eq. (2.1) can be recast as

in which s is the phase-field parameter describing the state of the material. This parameter varies smoothly between 1 (intact material) and 0 (completely broken material), \(0\le s\le 1\). Here, \(\ell \) is a length scale parameter characterizing the width of the diffusive approximation of a discrete crack, i.e. the width of the transition zone between completely broken and intact phases. The elastic strain energy density affected by damage, \(\psi _\mathrm{el}\), is given by

in which the split into positive and negative parts is used to differentiate the fracture behavior in tension and compression, as well as to prevent the interpenetration of the crack faces under compression. Therefore, normal contact between the crack faces is automatically dealt with.

In this work we adopt the volumetric-deviatoric split of the density in Eq. (2.3) by Amor et al. [12]:

in which the bulk modulus \(K=\lambda +\frac{2 G}{3}\), \(\langle a\rangle _{\pm }:=\frac{1}{2}(a\pm \arrowvert a\arrowvert )\), and \(\varvec{\varepsilon }_{\mathrm{dev}}:=\varvec{\varepsilon }-\frac{1}{3}\mathrm{tr} (\varvec{\varepsilon }){\mathbf {I}}\) with \({\mathbf {I}}\) as the second-order identity tensor. Here, \(\lambda \) and G are the Lamé constants given in terms of Young’s moduli E and Poisson’s ratio \(\nu \) by

Furthermore, the degradation function g(s) in Eq. (2.3) is defined via

in which \(\eta \) is a very small dimensionless parameter used to prevent numerical difficulties in fully cracked conditions.

The governing system of equations in strong form is obtained from the stationarity conditions of the functional in Eq. (2.2). It is described by the balance equation

with the stress tensor \(\varvec{\sigma }(\varvec{\varepsilon },s)\) taking the form of

and the phase-field state equation

The model is completed with the following boundary conditions

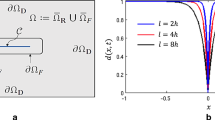

in which \(\bar{{\mathbf {u}}}\) and \(\bar{{\mathbf {t}}}\) are prescribed displacement and traction on the Dirichlet and Neumann portions of the boundary \(\partial \mathcal {\varOmega }_{u}\) and \(\partial \mathcal {\varOmega }_{t}\), respectively, and \({\mathbf {n}}\) is the outward normal unit vector to the boundary.

The governing Eqs. (2.7) and (2.11), with the corresponding boundary conditions, constitute a system of coupled non-linear equations in the unknown fields \({\mathbf {u}}\) and s. Their weak form is appropriately discretized with the finite element method using linear finite elements for both displacement and phase-field. Algorithmically, we adopt a staggered approach, solving alternatively for the displacement and the phase-field [34, 35]. Interpreting s as a damage variable, an irreversibility condition on s is needed additionally to guarantee that damaged points do not “heal”. For this purpose Miehe et al. [36] proposed to introduce the history variable \({\mathcal {H}}^{+}({\mathbf {x}}):= \mathrm{max}_{\tau \in [0,t]} \psi _{el}^{+}({\mathbf {x}},\tau ) \) (\({\mathbf {x}}\) being the spatial coordinate and t the time) ensuring the irreversibility of the evolution of the crack phase-field [36]. Therefore, Eq. (2.9) is used in a slightly different form as follows:

In a one-dimensional setting, the governing equations can be analytically solved for a homogeneous stress state deriving the tensile strength of the material (critical stress) and the corresponding critical strain [12, 14] as

Thus, the tensile strength (for a given E) is a function of \(\ell \) and \(G_{c}\), and increases as \(\ell \) decreases or \(G_{c}\) increases. This suggests the possibility to determine \(\ell \) directly given the tensile strength and the fracture toughness of a material. When the length parameter \(\ell \) goes to zero, the critical stress goes to infinity. This is consistent with the limit case of linear elastic fracture mechanics, which is also not capable of nucleating fractures in the absence of singularities.

3 Bayesian updating

To perform the phase-field modeling of fracture described in the previous section, one requires the qualitative description of the parameters \(q\in {\mathbb {R}}_+^{n}\) consisting of the pair of bulk and shear moduli K and G, the tensile strength \(f_t\), and the fracture toughness \(G_c\). Initially, when no experimental data are available, the only information on q originates from the modeler’s experience and expert’s knowledge. Thus, q is described as uncertain and modeled as a random variable, the probability of which is specified by an a priori probability density function (PDF) \(\pi (q)\). Once the experimental measurement data \(z\in {\mathbb {R}}^{L}\) are available, the a priori description \(\pi (q)\) on q is updated via Bayes’s rule for densities:

in which \(\pi (z\vert q)\) is the likelihood function, \(\pi (q\vert z)\) is the posterior conditional PDF on q given the experimental data z, and P(z) is a normalizing factor enabling the posterior conditional density to integrate to unity. The likelihood factor, hence, plays an important role as it describes how likely the experimental data z are given the a priori knowledge on q. Its form greatly depends on the assumptions made about the observation error \(\epsilon \). For the sake of simplicity, here we assume that the observation error additively sums both the measurement and model errors, and that it is of Gaussian type with zero mean and noise covariance \(C_\epsilon \), as often taken in practice.

3.1 Linear Bayesian update

The evaluation of the posterior density in Eq. (3.1) is not easy, as an analytical solution in most practical cases does not exist, and the numerical evaluation is known to be computationally intensive. On the other hand, we are often not interested in a full posterior distribution, but only in posterior estimates such as the mean and maximum a posteriori (MAP) values. Therefore, instead of evaluating \(\pi (q\vert z)\), the objective of this work is to evaluate only the conditional expectation \({\mathbb {E}}(q\vert z)\) [25] of the parameter q.

Let q be a priori modeled as a random variable \(q_{f}\) (the index f is for “forecast” or a priori knowledge) with values in \({\mathcal {Q}}\), i.e. \(q_{f}:\varOmega \rightarrow {\mathcal {Q}}\) is a measurable mapping with \(\varOmega \) being a basic probability set of elementary events (all possible realisations of q and measurement error). The set \(\varOmega \) carries the structure of a probability space (\(\varOmega , {\mathcal {F}}, {\mathcal {P}}\)), with \({\mathcal {F}}\) being a \(\sigma -\)algebra of subsets of \(\varOmega \) and \({\mathbb {P}}\) being a probability measure. For the sake of simplicity we assume that \(q\in {\mathcal {Q}}_S:=L_{2}(\varOmega ;{\mathcal {Q}}) \) with inner product

i.e. we assume that q has finite variance with the mathematical expectation defined via

As the a priori description of q is a probabilistic one, i.e. q is a RV, the mathematical phase-field model predicts/forecasts the possible measurement values also as RV

Here, Y stands for the measurement operator defined indirectly via the phase-field model given in Eqs. (2.7)–(2.11), and \(\epsilon (\omega )\) is the model of the measurement error. Note that the operator Y may also contain the modeling error that originates in the discretization or the physics based law. Here, the modeling error is assumed to be negligible, and hence will not be modeled. In order to make our estimate reliable, we therefore further assume that the measurement error is not fully known, and in the numerical examples we try to find its optimal value. This further means that \(y_f(\omega )\) is a random variable that takes the values in a Sobolev space \({\mathcal {U}}\) with inner product \(\langle \cdot \vert \cdot \rangle _{{\mathcal {U}}}\), and lives in a space \({\mathcal {U}}_S:=\ L_{2}(\varOmega ,{\mathcal {U}}) \) with inner product defined analogously to \({\mathcal {Q}}_S\).

Following the previous definitions, it is well known that the conditional expectation of the parameter q given the measurement is orthogonal projection of q onto the closed subspace \({\mathcal {Q}}_{n}:= L_{2}(\varOmega , \sigma (y_f), {\mathbb {P}};{\mathcal {Q}})\subset {\mathcal {Q}}_S\) of all RVs representing the measurement information. Here, \(\sigma (y_f)\) is the sub-\(\sigma \) algebra generated by measurements \(y_f\), and by Doob-Dynkin lemma (see [37]) \(L_2(\varOmega ,\sigma (y_f);{\mathcal {Q}})\) is the subspace generated by all measurable maps \(\varphi : {\mathcal {U}}\mapsto {\mathcal {Q}}\). In other words, we are searching for the optimal \({\tilde{q}}\) in \({\mathcal {Q}}_{n}\) such that the quadratic distance

is minimized. This is equivallent to evaluating \(P_{{\mathcal {Q}}_n}(q)\), the projection of q onto the subspace \({\mathcal {Q}}_n\). Furthermore, the closed subspace \({\mathcal {Q}}_n\) induces an orthogonal decomposition \({\mathcal {Q}}_n={\mathcal {Q}}_n\oplus {\mathcal {Q}}_n^\bot \), and correspondingly with the orthogonal projection \(P_{{\mathcal {Q}}_n}\) one arrives at decomposition of the random variable

into the components \(P_{{\mathcal {Q}}_n}(q)\) and \((I-P_{{\mathcal {Q}}_n})(q)\) belonging to \({\mathcal {Q}}_n\) and \({\mathcal {Q}}_n^\bot \), respectivelly. The decomposition is such that the second component has vanishing conditional mean when the conditional expectation \({\mathbb {E}}(\cdot |\sigma (y_f))\) on both sides is taken.

In Eq. (3.6) the measurement z informs us on the first component, and hence a first version for a filter reads:

in which \(q_a\) stands for the updated or “assimilated” q. From the previous remark it is clear that

so that \(q_a(\omega )\) has the correct conditional mean.

The estimator in Eq. (3.5) is computationally not readily accessible. For computational purposes, the space \({\mathcal {Q}}_{n}\) cannot be fully considered, but only approximating subspaces. By focusing only on affine measurable maps \({\mathcal {Q}}\rightarrow {\mathcal {Y}}\), the minimization in Eq. (3.5) results in a generalization of the Gauss–Markov theorem (see [25, 38]), the so-called “Gauss–Markov-Kalman” (GMK) filter

in which \(K_g\) is the “Kalman gain”, taking the form of

for uncorrelated measurement errors. Here, \(C_{q_{f}y_f}:=\text {Cov}(q_{f},y_f)={\mathbb {E}}((q_{f}-{\mathbb {E}}(q_{f})) \otimes (y_f-{\mathbb {E}}(y_f)))\), and similarly \(C_{y_f}=\text {Cov}(y_f,y_f)\) and \(C_{\epsilon }=\text {Cov}(\epsilon ,\epsilon )\) with \(\dagger \) being the Moore-Penrose inverse.

3.2 PCE based sampling-free linear Bayesian update

The estimator in Eq. (3.9) is given in terms of random variables, and not of conditional densities as in Eq. (3.1). In a computational sense this is a great advantage for obtaining \(q_{a}(\omega )\), as one can pick a convenient representation of the corresponding random variables \(q_{f}(\omega )\) and \(y_f(\omega )\). It turns out that choosing an appropriate functional description among all possible representations is the most favorable as the formula in Eq. (3.9) has “deterministic” or purely algebraic flavor. Additionally, if the functional representations are given in terms of the polynomial chaos expansions, all computational tools from the field of uncertainty quantification can be employed in the plain vanilla way of estimating \(q_{a}(\omega )\).

To discretize the infinite dimensional filter in Eq. (3.9) one may further introduce a finite dimensional subspace \(S_{J}\):=span\((\varPsi _{\alpha }({\varvec{\theta }}(\omega )))_{\alpha \in {\mathcal {J}}}\) spanned by the polynomial chaos basis functions \(\varPsi _{\alpha }({\varvec{\theta }}(\omega ))\), i.e. the surrogate model, such that

holds. Here, \(\varPsi _\alpha ({\varvec{\theta }}(\omega ))\) are polynomials with standard random variables \({\varvec{\theta }}(\omega )\) (e.g. standard Gaussian) as arguments indexed by a multi-index \(\alpha \) belonging to a finite set \({\mathcal {J}}\). Analogously, one may discretize \(y_f(\omega )\) by introducing \(\hat{{\mathcal {U}}}_s={\mathcal {U}}_N\otimes S_{\mathcal {J}}\) consisting of the finite element subspace \({\mathcal {U}}_N:=\text {span}(N_i)_{i=1}^n\) spanned by the finite element shape functions \(N_i\) and the discretized stochastic subspace \(S_{\mathcal {J}}\) such that

holds. Here, \(y_f^{(\alpha )}\) are the spatially dependent polynomial chaos coefficients corresponding to the chosen finite element discretisation, i.e.

Substituting Eqs. (3.11) and (3.12) by into Eq. (3.9) one finally obtains

As \(\varPsi _\alpha ({\varvec{\theta }}(\omega ))\) are orthogonal polynomials one may project the previous equation onto \(\varPsi _\beta ({\varvec{\theta }}(\omega ))\) to obtain the index-wise formula

with

being evaluated directly from the polynomial chaos coefficients, e.g.

Finally, in Eq. (3.15) the PCE coefficients \({\varvec{y}}_f^{(\alpha )}\) of the measurement prediction are estimated using the least squares regression technique by using optimised number of Monte Carlo samples.

The framework of the PCE based sampling-free linear Bayesian update can be found in Fig. 1. The input of the programme is the prior PCE information \(q_{f}\) of the material characteristics to be estimated, the experimental data z, as well as the RVs describing the obersvation error \(\epsilon \). This framework outputs the posterior PCE information \(q_{a}\) as a RV from which the mean value, variance and probability of occurence of events can be simply computed. The algorithm of this framework with more details can be found in Table 1 and [38]. Note that Table 1 provides a general description, whereas two specific updating manners, offline and online, will be discussed in the following section.

4 Online and offline identification

As the model described in the previous section is of the nonlinear evolution kind, and the proposed identification procedure is linear, the updating algorithm in Fig. 1 has to be carefully designed. Namely, one may implement the estimator in Eq. (3.9) in at least two different ways, here distinguished as online (sequential) and offline (non-sequential) approaches. By gathering all the data obtained from the loading curve or its parts, the offline approach means estimation of the mechanical properties at once. However, as the whole measurement history is considered, the mapping between the measurement data and the parameter set is highly nonlinear. To allow quasi-linearity, in the online approach the measurement data are observed in pieces, each arriving at a specific moment, and the updates are performed one after each other, i.e. the last posterior is used in time as a new prior until the next observation. Contrary to the offline approach, the online procedure does not use all available measurement data from the load-deflection curve, but only the data at time points marked as the arrival of a new measurement information. In this manner the paremeters can be estimated even when the measurement data set is reduced.

Under offline approach (also known as history matching) we understand a non-sequential procedure in which the experimental data are first collected over a testing period \([t_0,T]\) with regular time intervals \(t_k= k\cdot \Delta t\) to a vector \({\varvec{z}}_c=\{z^{(0)},\ldots z^{(k)},\ldots ,z^{(L)}\}\), and then used for the estimation of the parameter q via

see Fig. 2a for schematic representation. \({\varvec{z}}_c\) stands for the measurement data and \({\varvec{y}}_{fc}\) denotes prediction or forecast of the measurement data. For instance, in numerical examples in Sect. 5, \({\varvec{z}}_c\) stands for the force at the mid-span of the beam on the neutral axis, and \({\varvec{y}}_{fc}\) is the prediction of this quantity by use of the phase-field approach. Here, \({{\varvec{K}}}_g^c={\varvec{C}}_{q_{fc}y_{fc}}({\varvec{C}}_{y_{fc}}+{\varvec{C}}_{\epsilon })^{-1}\) and \({\varvec{y}}_{fc}(\omega )=[y_{fc}^{(0)}(\omega ),\ldots y_{fc}^{(k)}(\omega ),\ldots , y_{fc}^{(L)}(\omega )]\) is the probabilistic prediction of the testing curve over the mentioned time interval. The element-wise components of \({\varvec{y}}_{fc}\) take the form of

in which all \(\epsilon ^{(k)}, {\forall k}\) are assumed to be uncorrelated and independent.

On the other hand, in the online approach, as the name indicates, the estimation of q starts immediately upon arrival of the first portion of the experimental data, see Fig. 2b. After the measurement at time \(t_k\) is available, the data \(z^{(k)}\) are used to estimate

having that

in which the prior \(q_f^{(k)}(\omega ):=q_a^{(k-1)}(\omega )\) equals the last known posterior, and the forecasted measurement \(y_f^{(k)}(\omega )\) is obtained by propagating the prior \(q_f^{(k)}(\omega )\) from \(t_{k-1}\) to \(t_k\) via the incremental version of measurement operator \(Y_{\Delta t}\). Hence, the forecasted measurements are obtained by running the forward problem with the initial conditions taken as the last “updated state” \( w_a^{(k-1)}(\omega )\) consisting of the stress- and strain-like variables corresponding to \(q_a^{(k-1)}(\omega )\). This means that \( w_a^{(k-1)}(\omega )\) has to be evaluated for the new value of updated parameter, which might be computationally expensive. Instead, one may update the state variables as well in a similar form as given in Eq. (4.3), and use the restarting procedure in which the forecast is estimated only one time step ahead instead of running simulation repeatedly from the initial state. Once the parameter posterior is obtained, the process is sequentially repeated until the end of the loading (testing) cycle, see Fig. 2b.

Even though both algorithms essentially work with the same experimental data, the resulting posterior estimates are not necessarily identical. While the offline procedure focuses on the complete time interval and is characterized by high nonlinearity, the online approach takes smaller steps for estimation and thus tends to be quasi-linear when \(\Delta t\rightarrow 0\). On the other hand, the offline approach uses more experimental data acting at once and thus smoothes out the estimate. Additionally, both approaches may result in different posterior variances as the observation errors \(\epsilon ^{(k)}\) in the online approach are integrated in time through the model via updated parameter values \(q_f^{(k)}(\omega )\), and hence the overall uncertainty increases. This is not the case in the offline setting as the observation errors are not integrated through the model but simply added. The accuracy of estimations in the online approach is affected by the time increment chosen for updating, which is not required to be accounted for in the offline approach. Also, the estimated parameter values using the online approach can vary with time, but they do not change in the offline estimation.

In the limit one would expect that both of approaches give same results. However, in general, this is not the case due to many numerical approximations. First we use linear Bayesian procedure, and hence all at once (offline) approach assumes that whole history of the measurement data is linear in the parameter set. Next to that in the offline approach one first carries prediction of whole history of data by propagation of uncertainties through the phase-field model. This means that the uncertainty in the predicted measurement will typically grow in time, and thus is more difficult to build the reliable surrogate model. In an online approach we can better control this, and next to that the linear relationship assumption is more appropriate. It may seem that the online approach is impractical if one first wants to collect data and then analyze them. However, the main idea is to make software that can be built in the machine, and thus estimate parameters online during the experiment. A general conclusion of advantages and disadvantages of online and offline update manners can be found in Table 2. The comparison of both approaches will be further discussed in the following section by using numerical examples.

5 Numerical results

In this section, we employ the PCE based sampling-free linear Bayesian approach to identify the mechanical parameters of cement mortar in the phase-field fracture model given measured forces from three-point bending tests. Both online and offline approaches are employed for this purpose. The online approach is implemented by choosing various time increments \(\Delta t\), the effect of which on the posterior estimate is further studied. Similarly, in the offline approach we do not use the complete time history but its time discretized version, in which either equidistant or non-equidistant time points are preselected for the definition of the measurement data as further numerically analyzed. Besides, we also investigate the effect of the sample size and polynomial expansion order on the estimations of the posterior. All numerical examples are carried out by using a polynomial order of 3 and the sample number of 100, except for a convergence study. As the number of terms in PCE is lower than 100, this number of samples should be sufficient to give reliable estimate. Note that the number of samples can be further reduced but this is not the subject of this study.

5.1 Experimental setup and simulation of the three-point bending test

The experiments utilized in this work are three-point bending tests, conducted at the Institute of Applied Mechanics of the Technische Universität Braunschweig. The material used in the test is a cement mortar with water/cement ratio (w/c) of 0.5 and with hydration time of one week. For the mixture of cement mortar, fine sand with size below 0.7 mm is added to the cement paste. Due to the small aggregate size with no appreciable aggregate interlocking, the material is expected to be quite brittle. The specimen is cast vertically and the upper surface corresponds to the free surface during casting. The dimensions of the specimen are \(120\times 20 \times 36\mathrm{mm}^{3}\) with a central notch of \(2\times 20 \times 24\mathrm{mm}^{3}\) (Fig. 3). The test is performed in displacement control, and both the force and the mid-span deflection of the beam on the neutral axis are recorded until failure.

Figure 4 shows the experimental force-deflection curve of the three-point bending test with each point providing the information on the load increment used for simulations. The finite element mesh must be chosen appropriately to resolve the smallest possible value of \(\ell \). The mesh is refined in the central part of the specimen which is the critical zone of crack propagation. Figure 5 shows sample results of the phase-field fracture simulation of the three-point bending test, with the crack initiating from the notch and propagating upwards.

5.2 Choice of the a priori

In this work four parameters in the phase-field model need to be identified, i.e. bulk modulus K, shear modulus G, tensile strength \(f_{t}\), and fracture toughness \(G_{c}\). These parameters are treated as four random variables, the randomness of which reflects the uncertainty about the true values, allowing the incorporation of measurement information through the Gauss–Markov-Kalman filter. Due to their positive definiteness, the prior of all random variables under consideration are assumed to follow a lognormal distribution. The prior mean values are selected by preliminary numerical simulations, and the chosen standard deviations are 10% of the mean values, see Table 3 for the values and see Fig. 6 for the PDFs. For each combination of the aforementioned properties for phase-field simulation, the length-scale parameter \(\ell \) is calculated by using Eq. (2.12).

This choice of prior is driven by expert’s knowledge as Bayes rule is used to combine our prior knowledge with the data set. If data do not contain information on the parameter in question then Bayes rule will return the experts knowledge, i.e. prior. However, the prior is chosen to be “wide enough” to include the parameter value that we want to estimate. The choice of prior can be also made differently, and in general this will not impact the posterior estimate of the conditional mean as long as the support is wide enough to include the “true” value.

5.3 Results in the elastic region

In the elastic region, we are concerned with the identification of bulk modulus and shear modulus, as fracture toughness and tensile strength do not contribute to the mechanical behavior of the material yet. Figure 7 shows the prior and converged posterior of bulk modulus and shear modulus in the offline and online settings. As the value of the observation error is not known, the updating is repeated for different portions of observation errors. The observation error shown in Fig. 7 is assigned as follows: \(\epsilon =1\%\) indicates that we take 1% of the experimental force as the observation error. In this case the posterior converges with only few experimental data and only few update steps in the offline and online updates, respectively. As shown in Fig. 7, the mean values of bulk modulus and shear modulus move away from their priors. Also, the PDFs of both parameters become narrow, indicating the variances are decreased and the uncertainty is reduced.

The reduction of uncertainty can be treated as a sort of measuring the information gain. The stronger the uncertainty reduction is, the more information one gains from the experimental data. In Fig. 7a, the posterior distribution of the bulk modulus is quite close to its prior information, particularly for the case of choosing a larger observation error, which indicates that no apparent update is captured and one gains less information from the experimental data. The reason is that the prior PDF is chosen already very narrow due to high expert’s confidence in the values of the bulk modulus. This observation also holds for the shear modulus, see Fig. 7b. Moreover, it can be seen that the PDFs of bulk and shear modulus become more narrow as the measurement error decreases. This observation is obvious as a smaller observation error delivers more trustable experimental data. For a given observation error, the results of the online and offline updates are quite close. The small variation can be explained by the choice of seeds.

In Fig. 8 one may distinguish two regions separated by a vertical line. The left part depicts the training set of experimental data, whereas the right part is the validation set and is not used for estimation. Note that the deflection at 0.014 mm is taken to be the end of the elastic zone. The validation of the estimated elastic parameters is conducted by comparing the experimental data with the 95% credible region of the predictive posterior obtained by propagating the estimated elastic parameters through the model. As can be seen in Fig. 8, the mean curve matches the experimental data well, which further means that the estimated parameters are reliable.

5.4 Results in the inelastic region

The next objective is to identify the inelastic mechanical parameters (tensile strength and fracture toughness) by using the experimental data in the inelastic region. Here, the PDFs of the elastic parameters estimated from the aforementioned section remain constant without any update. Figure 9a shows the PDFs of the prior and posterior information of tensile strength at different update steps in the offline framework. The posteriors are denoted by the number of time steps used in the update. Namely, the experimental data are made from loading history in the first ten time increments for update 10, from the first 25 time increments for update 25, etc., see Fig. 11a. In doing so, we are able not only to clearly analyze the influence of selecting the experimental data on the posterior but also to better compare the offline with the online update. Hence, in Fig. 9a one can see that the PDF distribution of tensile strength at update step 10 is quite close to the prior, implying no update of tensile strength as it still remains in the elastic region. The slight change in the prior and posterior PDFs can be explained by the variation of the chosen seeds used for plotting. At the update step 25, the PDF of the tensile strength moves away from the prior, indicating that there is an information gain from the experimental data as the nonlinear material behavior starts occurring. However, the obtained PDF is not optimal or converged, as the chosen experimental data do not contain enough information. When we account for more experimental data, the PDF of tensile strength continues to move in the same direction by shrinking. At update step 70 (the peak of deflection-force curve in Fig. 11a), the PDF of tensile strength starts moving in the opposite direction. When we come to update step 75, the PDF converges due to sufficient information gain from experimental data. Hence, as we take more experimental data, the variance of the updated PDF of tensile strength decreases, indicating that we can be more confident about the value.

In the previous updating process we did not have the exact knowledge on the measurement as well as modelling errors. Therefore, the observation uncertainty is varied and its impact on the posterior is further investigated. Figure 9\(a_{1}\) shows the update results of tensile strength with an observation error \(\epsilon =1\%\) in the offline framework. Figure 9\(a_{2}\) and \(a_{3}\) show the update results of tensile strength in the offline framework with errors \(\epsilon =3\%\) and \(5\%\), respectively. Clearly, the influence of the error on the updated estimates cannot be neglected, which is concluded as follows: (i) a larger error requires more experimental data to initiate the update of tensile strength and leads to lower confidence (higher variance) on the estimated values, see Fig. 11b; (ii) with a smaller error, the updating process admits a high oscillation before the convergence is reached.

Figure 9b shows the update results of tensile strength obtained via the online approach. As the estimation problem is nonlinear and we employ a linear filter, a small update size (performing one update per load increment shown in Fig 11a) is used to ensure the problem to be linear or quasi-linear within the update step, and the accuracy of the results to be guaranteed to the maximum extent. Similarly to the offline update, the PDF of tensile strength does not alter in the elastic region. After arriving to the nonlinear regime, the PDF of tensile strength updates with the reduction of uncertainty and then converges to the final value. Compared to the offline update, there are some essential differences (see Fig. 9): (i) the PDF of tensile strength always updates in one direction from prior to the converged posterior, no matter which observation error is adopted; (ii) for the preselected observation error the PDF reaches convergence with fewer update steps as compared to offline update; and (iii) when the observation error is increased, the update from prior to posterior is less obvious as the information gain from the experimental data is small. In addition, the offline obtained PDF is less narrow than the online one, see Fig. 11b. The reason for this can lie in the uncertainty quantification part of the predicted results. With time the uncertainty in the offline response grows and can be overestimated by regression technique. As previously mentioned, the random variables coming from the observation errors are not propagated through the forward model as in the online procedure, see Sect. 4. Finally, the measurement-parameter relationship is highly nonlinear in an offline approach compared to the one in online approach. Hence, one obtains higher approximation errors.

Figure 10 depicts the convergence of the mean value and deviation of tensile strength obtained by offline and online approaches. Before update step 20 both the mean and variance stay constant as the material remains in the elastic region. After that, the mean increases in value and starts fluctuating as nonlinearity kicks in. The oscillation is more obvious in the offline update due to higher nonlinearity of the measurement operator than in online approach. On the other hand, the variance reduces as more information is gained. Apparently, in Fig. 10b one may see a drastic difference in variances obtained by offline and online approaches for same reasons as explained before. This is confirmed in Fig. 11b, in which the converged posterior of tensile strength between online and offline updates is compared.

After identifying the elastic properties and tensile strength, we focus on the identification of fracture toughness. Fig. 12 shows the prior and offline/online posterior of fracture toughness. The marked update steps in the deflection-force curve used for updating are shown in Fig. 14a. When comparing the offline to online posterior PDFs in Fig. 12, one may notice that the mean values of both update approaches are quite close when converged. However, the offline PDF admits more fluctuations in the process. In the beginning, when the nonlinear regime starts, the linear offline estimator struggles with high-nonlinearity and little information is gained, thus resulting in underestimated posterior. Once the information gain is maximized, the offline PDF behavior is stabilized. This phenomenon is also observed for larger observation errors which, as expected, lead to wider converged posterior PDF outcomes. A comparison of the converged posterior PDFs of fracture toughness with various observation errors is depicted in Fig. 14b. Here, one may notice that the variance of the observation error has an important impact on the online/offline outcomes. If the observation error is large, these two procedures will not essentially lead to an “identical” result. The difference is reflected in the variance of the posterior. The reason is that in an offline update all observation errors are added at once to the already evaluated prior predictions, whereas in an online approach these are added gradually and are further propagated through the forward model.

In a similar manner as for the tensile strength, the results of the convergence of the mean value and deviation of fracture energy obtained via offline/online approaches are depicted in Fig. 13. The only difference is that both approaches for updating fracture toughness lead to a similar variance estimate. In addition, the updating of variance starts bit later than for the tensile strength as expected.

In the examples shown in the previous sections, the sample number 100 (used for the evaluation of the PCE of the predicted results) and the polynomial expansion order of 3 are chosen. Here we conduct a convergence study to prove the reliability of this choice. Figure 15 shows the effects of the polynomial expansion order and the sample number on the convergence of four material properties to be identified. This analysis is carried out in the offline manner. In Fig. 15a we can see that for orders 3 and 4 the bulk modulus converges well when the sample number is around 30, whereas the shear modulus converges for 50 samples, see Fig. 15b. Similarly, Fig. 15c,d show the convergence of tensile strength and fracture toughness for different polynomial expansion orders as the sample number increases. In the process of identifying these two parameters the experimental data from the inelastic region are required, therefore it is reasonable that more samples, i.e. 70 evaluations, are needed to achieve the convergence. The results discussed previously approve the choice of the sample number and the polynomial expansion order that are used in the previous tests.

Effect of update step size on the online updating procedure: \(\mathbf{a} _\mathbf{1 }\) tensile strength with update step size of 1, \(\mathbf{a} _\mathbf{2 }\) tensile strength with update step size of 5, \(\mathbf{a} _\mathbf{3 }\) tensile strength with update step size of 10, \(\mathbf{b} _{1}\) fracture toughness with update step size of 1, \(\mathbf{b} _{2}\) fracture toughness with update step size of 5, and \(\mathbf{b} _{3}\) fracture toughness with update step size of 10

As mentioned before, the online identification result depends on the chosen time increment used for updating. In order to ensure the accuracy of the update results, choosing a small update step size is essential, such that the nonlinear problem can be treated as linear or quasi-linear within the update step. Here we investigate the impact of the update step size on the online updating procedure of tensile strength and fracture toughness. In this analysis, the update step size of 1, 5 and 10 are chosen respectively. Namely, for these three cases we perform the update every 1 time increment, 5 time increments and 10 time increment, see Fig. 4. In Fig. 16, we can see that the results for the update step sizes of 1 and 5 converge, whereas the results for the update step sizes of 10 do not converge, implying the linear filter fails due to strong nonlinearity. Also, in Fig. 17, one can clearly see that the converged results for the update step sizes of 1 and 5 are quite close, indicating that the problem can be considered to be linear or quasi-linear within the update step size of 5.

Finally, the validation of all identified parameters is performed on the part of the loading curve given in Fig. 4 that is not used for estimation purposes. Namely, all identified parameters in their converged posterior PDF form are propagated through the fracture model to obtain the posterior predictive of the loading curve. As seen in Fig. 18, the 95% probability region of the loading curve before the vertical line is not so wide, because this part of the experimental data is used as measurement. On the other hand, the uncertainty grows as one approaches the deflection point due to the nonlinearity of the model. Furthermore, the 95% probability region of the loading curve in the region right to the vertical line represents forecasting of data that are not used for training. As can be seen, both the experimental curve and the mean of the posterior predictive almost perfectly match. This means that the softening part is predicted well by the given model and the identified parameters.

6 Conclusions

The objective of this work is to apply probabilistic Bayesian linear estimation to provide an efficient and reliable parameter identification procedure for phase-field modeling of brittle fracture. The paper focuses on fracture in cement mortar with given load-deflection curves from experimental three-point bending tests. Phase-field modeling is a very powerful and reliable tool to simulate complicated fracture processes, including crack initiation, propagation, merging and branching in a unified framework without the need for ad-hoc criteria and on a fixed mesh. The applied method for parameter identification is based on the conditional expectation approximation via surrogate models, here chosen in the form of the polynomial chaos expansion. In this manner the posterior estimate is evaluated in an algebraic way without any sampling at the update phase. The only sampling stage in the proposed algorithm is related to the estimation of the surrogate model of the forecasted measurement.

The estimation is preformed in online and offline forms varying in the way of updating the parameter set from the perspective of the measurement data arrival. As online approach uses only current data point and offline the complete data history, the online posterior parameter PDFs are wider than in case of those obtained by offline estimation. In contrast to a larger variance, both methods give satisfactory matching results for the posterior mean values. This means that we obtain the same mean estimate from both approaches, only they are characterized by different levels of confidence. These match only when the update frequency increases in both the online and offline stage.

We analyze the influence of the sample (model evaluations) number, the polynomial expansion order and the measurement error on the posterior estimates. Moreover, the effect of the update step size on the online updating procedure is investigated. In this work, the estimation is performed gradually by first considering only the elastic region, and then the inelastic part. For this an a priori assumption is made to split these two stages. As the linear elastic parameters are easy to estimate, the assumption is not so strong and includes only few initial time steps. The probability density functions of the estimated elastic parameters remain constant without any update in the procedure of estimating the inelastic parameters.

The identified mechanical parameters describing the phase-field model of fracture are validated by comparing the set of validation experimental data that are not used for estimation and the numerical results obtained in this work. Numerical analysis show a good matching among them, thus proving the reliability of the posterior estimates.

In this paper only the experimental data from one specimen are utilized. However, cement mortar as material is characterized by a low repetitiveness of different specimens due to the heterogeneities of the material at a lower scale. Moreover, we use a linear filter to resolve the unknown parameters of an obviously nonlinear problem by adopting small time steps in the updating process. With this approach the computational time can drastically increase if the updating time step approaches zero. To improve this, future work will consider non-linear estimation of the parameter values to improve the reliability of the analysis. This further will be extended to quantification of uncertainty in the parameter values using experimental data from more specimens, and accounting for model error arising from many sources, including e.g. model imperfection and discretization error.

References

Ingraffea AR, Saouma V (1985) Numerical modeling of discrete crack propagation in reinforced and plain concrete. Fract Mech Concrete Struct Appl Numer Cal 4:171–225

Ibrahimbegovic A, Nikolic M, Karavelic E, Miscevic P (2018) Lattice element models and their peculiarities. Arch Comput Methods Eng 25(3):753–784

Schlangen E, van Mier JGM (1992) Simple lattice model for numerical simulation of fracture of concrete materials and structures. Mater Struct 25:534–542

Peerlings RHJ, de Borst R, Brekelmans WAM, Geers MGD (1998) Gradient-enhanced damage modelling of concrete fracture. Mech Cohes Frict Mater 3:323–342

Loehnert S, Mueller-Hoeppe DS, Wriggers P (2010) 3D corrected XFEM approach and extension to finite deformation theory. Int J Numer Meth Eng 86:431–452

Wu T, Wriggers P (2015) Multiscale diffusion-thermal-mechanical cohesive zone model for concrete. Comput Mech 55:999–1016

Liu JX, Zhao ZY, Deng SC, Liang NG (2009) Numerical investigation of crack growth in concrete subjected to compression by the generalized beam lattice model. Comput Mech 43:277–295

Rots JG (1988) Computational modeling of concrete fracture. PhD thesis, Delft University of Technology, Delft, The Netherlands

de Borst R (1997) Some recent developments in computational modelling of concrete fracture. Int J Fract 86:5–36

Francfort GA, Marigo JJ (1998) Revisiting brittle fractures as an energy minimization problem. J Mech Phys Solids 46:1319–1342

Bourdin B, Francfort GA, Marigo JJ (2000) Numerical experiments in revisited brittle fracture. J Mech Phys Solids 48:797–826

Amor H, Marigo JJ, Maurini C (2009) Regularized formulation of the variational brittle fracture with unilateral contact: Numerical experiments. J Mech Phys Solids 57:1209–1229

Miehe C, Hofacker M, Welschinger F (2010) A phase field model for rate-independent crack propagation: Robust algorithmic implementation based on operator splits. Comput Methods Appl Mech Eng 199:2765–2778

Borden MJ, Verhoosel CV, Scott MA, Hughes TJR, Landis CM (2012) A phase-field description of dynamic brittle fracture. Comput Methods Appl Mech Eng 217–220:77–95

Ambati M, Gerasimov T, De Lorenzis L (2014) A review on phase-field models of brittle fracture and a new fast hybrid formulation. Comput Mech 55:383–405

de Borst R, Verhoosel CV (2016) Gradient damage vs phase-field approaches for fracture: similarities and differences. Comput Methods Appl Mech Eng 312:78–94

Wu T, Carpiuc-Prisacari A, Poncelet M, De Lorenzis L (2017) Phase-field simulation of interactive mixed-mode fracture tests on cement mortar with full-field displacement boundary conditions. Eng Fract Mech 182:658–688

Idier J (2008) Bayesian approach to inverse problems. Wiley, Hoboken

Rosić BV, Kučerová A, Sýkora J, Pajonk O, Litvinenko A, Matthies HG (2013) Parameter identification in a probabilistic setting. Eng Struct 50:179–196

Gamerman D, Lopes HF (2006) Markov Chain Monte Carlo: stochastic simulation for Bayesian inference. Chapman Hall, Boca Raton (FL)

Ching JY, Chen YC (2007) Transitional Markov chain Monte Carlo method for bayesian model updating, model class selection, and model averaging. J Eng Mech 133:816–832

Matthies H G (2007) Uncertainty quantification with stochastic finite elements. In: Stein E, de Borst R, Hughes TJR (eds) Encyclopaedia of computational mechanics. Wiley, Chichester

Kučerová A, Sýkora J, Rosić B, Matthies HG (2012) Acceleration of uncertainty updating in the description of transport processes in heterogeneous materials. J Comput Appl Math 236:4862–4872

Marzouk Y, Xiu DB (2009) A stochastic collocation approach to Bayesian inference in inverse problems. Commun Comput Phys 6(4):826–847

Matthies HG, Zander E, Rosić BV, Litvinenko A (2016) Parameter estimation via conditional expectation: a Bayesian inversion. Adv Model Simulat Eng Sci 3:1–21

Evensen Geir (2003) The ensemble kalman filter: theoretical formulation and practical implementation. Ocean Dyn 53(4):343–367

Waeytens J, Rosić B (2015) Comparison of deterministic and probabilistic approaches to identify the dynamic moving load and damages of a reinforced concrete beam. Appl Math Comput 267:3–16

Isenberg J (1979) Progressing from least squares to Bayesian estimation. pages 1–11. Proceedings of the 1979 ACME Design Engineering Technical Conference, New York

Lai T, Ip KH (1996) Parameter estimation of orthotropic plates by Bayesian sensitivity analysis. Compos Struct 34(1):29–42

Marwala T, Sibisi S (2005) Finite element model updating using Bayesian framework and modal properties. J Aircraft 42:275–278

Rosić B, Sýkora J, Pajonk O, Kučerová A, Matthies HG (2014) Comparison of numerical approaches to Bayesian updating. Technical Report (Number 10), TU Braunschweig, Germany

Rappel H, Beex LAA, Hale JS, Bordas SPA (2017) Bayesian inference for the stochastic identification of elastoplastic material parameters: introduction, misconceptions and insights. Comput Eng Finance Sci 1:1–40

Huang Y, Beck JL, Li H (2017) Bayesian system identification based on hierarchical sparse Bayesian learning and Gibbs sampling with application to structural damage assessment. Comput Methods Appl Mech Eng 318:382–411

Bourdin B, Francfort GA, Marigo JJ (2008) The variational approach to fracture. J Elast 91:5–148

Gerasimov T, De Lorenzis L (2016) A line search assisted monolithic approach for phase-field computing of brittle fracture. Comput Methods Appl Mech Eng 312:276–303

Miehe C, Welschinger F, Hofacker M (2010) Thermodynamically consistent phase-field models of fracture: Variational principles and multi-field FE implementations. Int J Numer Meth Eng 83:1273–1311

Bobrowski A (2005) Functional analysis for probability and stochastic processes: an introduction. Cambridge University Press, Cambridge

Rosić BV, Litvinenko A, Pajonk O, Matthies HG (2012) Sampling-free linear bayesian update of polynomial chaos representation. J Comput Phys 231:5761–5787

Acknowledgements

L. De Lorenzis and T. Wu would like to acknowledge funding provided by the German Project DFG GRK-2075. H. G. Matthies and B. Rosić acknowledge partial funding provided by the German Projects DFG SPP 1886, DFG SPP 1748 and by the German Project DFG GRK-2075.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wu, T., Rosić, B., De Lorenzis, L. et al. Parameter identification for phase-field modeling of fracture: a Bayesian approach with sampling-free update. Comput Mech 67, 435–453 (2021). https://doi.org/10.1007/s00466-020-01942-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00466-020-01942-x