Abstract

Purpose

Surgical oncologists are frequently confronted with the question of expected long-term prognosis. The aim of this study was to apply machine learning algorithms to optimize survival prediction after oncological resection of gastroesophageal cancers.

Methods

Eligible patients underwent oncological resection of gastric or distal esophageal cancer between 2001 and 2020 at Heidelberg University Hospital, Department of General Surgery. Machine learning methods such as multi-task logistic regression and survival forests were compared with usual algorithms to establish an individual estimation.

Results

The study included 117 variables with a total of 1360 patients. The overall missingness was 1.3%. Out of eight machine learning algorithms, the random survival forest (RSF) performed best with a concordance index of 0.736 and an integrated Brier score of 0.166. The RSF demonstrated a mean area under the curve (AUC) of 0.814 over a time period of 10 years after diagnosis. The most important long-term outcome predictor was lymph node ratio with a mean AUC of 0.730. A numeric risk score was calculated by the RSF for each patient and three risk groups were defined accordingly. Median survival time was 18.8 months in the high-risk group, 44.6 months in the medium-risk group and above 10 years in the low-risk group.

Conclusion

The results of this study suggest that RSF is most appropriate to accurately answer the question of long-term prognosis. Furthermore, we could establish a compact risk score model with 20 input parameters and thus provide a clinical tool to improve prediction of oncological outcome after upper gastrointestinal surgery.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

The demand for adequate prediction of oncological outcome after upper gastrointestinal surgery will increase due to a rising global incidence of esophageal and gastric carcinomas (Malhotra et al. 2017). In this regard, patient profiles are heterogenous due to varying in-hospital courses and additional information about clinical and histopathological characteristics, which make the individual prognosis difficult to predict. While most patient characteristics are hardly improvable and are rather predetermined by the nature of the disease, there might be critical periods during the treatment of a malignancy such as the phase of oncological resection when many fundamental conditions for long-term outcome are set. This way, the in-hospital stay for surgery is literally an incisive event in the clinical course of one individual patient. However, surgical morbidity can significantly influence the overall and long-term survival in gastric cancer (Kulig et al. 2021). Eventually, clinicians are confronted with a patient curious to know what the information gathered so far from surgery actually means for his or her individual prognosis. While the patient is then frequently referred to the oncologist who will further supervise the prospective course, there may be a more concrete estimation to offer at the time of discharge. Surgeons with an oncologic focus need the tools to provide these answers to adequately care for their patients.

The motivation to accurately predict the individual prognosis of one patient also derives from the growing interest to assess individualized patient profiles in times of personalized medicine (Hu and Steingrimsson 2018). Due to the complexity of each patient case, differences in individual prognosis could eventually implicate different (adjuvant) therapeutic regimens. In this context, the vast amount of clinical data should be viewed as another component of “omics”-based technology and as another step toward personalized treatment.

Machine learning techniques can help to determine significant associations based on complex and vast data (Kourou et al. 2015). Retrospective machine learning studies have already delivered suggestive data that artificial intelligence may be promising in case of multiple potential factors with unclear relations to each other. Since the proposal of the proportional hazards model by David Cox (1972) there were many advances to improve survival prediction, especially since computational possibilities have increased. Among those, machine learning algorithms have recently shown promising results (Zhu et al. 2020). According to the current literature there are many works regarding the automated prediction of time-dependent, censored data with machine learning methods. Besides survival being the most typical and classic scenario, there have also been approaches to predict heart failure (Panahiazar et al. 2015), kidney transplant durability (Sekercioglu et al. 2021) and many other settings. Spooner et al. were able to demonstrate that machine learning algorithms for survival analysis were applicable to the clinical task of predicting dementia (Spooner et al. 2020). Based on two separate datasets, it was possible to reach a concordance index (c-index) of 0.82 and 0.93, respectively.

However, the prediction of oncological outcome after diagnosis of a malignant disease is still the most common target variable in machine learning studies. To this date, there are only few medical publications in the current literature dealing with machine learning models for survival analysis of oncologically resected upper gastrointestinal cancers. Akcay et al. have analyzed gastric cancer patients after chemoradiation with a comparable methodology (Akcay et al. 2020) and tested a random forest algorithm to predict tumor relapse in terms of distant metastases or peritoneal recurrence. Compared to other algorithms such as logistic regression, multilayer perceptron and extreme gradient boosting the random forest scored highest with an area under the curve (AUC) of 0.97 for peritoneal recurrence. However, the random forest algorithm did not show convincing results for overall survival with an AUC of only 0.59 and the authors did not apply a random survival forest methodology. Jiang et al. have utilized a LASSO cox analysis to predict oncological outcome of gastric cancer patients analyzing the radiomic signature of PET computer tomography (Jiang et al. 2018). It is notable that the authors evaluated imaging data and reached a powerful prediction for disease-free survival and overall survival with a c-index of 0.786. In the current literature, feature selection and extraction methods both perform poorly on low case numbers such as 500 or less individuals (Pölsterl et al. 2016). Above this minimum amount of 500 cases, machine learning methods should probably yield similar results and the final strategy to be chosen should rather depend on the individual data, the optimization process and the reached performances of the models.

To our knowledge, the topic of survival prediction in case of oncologically resected upper gastrointestinal cancer is not sufficiently covered by machine learning methods. However, the opportunity of pre- and postoperatively supervising an individual patient course offers an ideal setting to predict long-term outcome based on the collected data. The aim of this study was therefore to apply various machine learning algorithms to optimize survival prediction after oncological resection of gastroesophageal cancer compared to previously established methods.

Material and methods

Data collection and follow-up

Eligible subjects of this study were patients with distal esophageal, gastroesophageal junction or gastric cancer who underwent oncological resection between September 2001 and December 2020 at Heidelberg University Hospital, Department of General Surgery. All patients provided written consent for data collection and analysis. The data was initially collected prospectively in a clinical database. The trial protocol was approved by the ethics committee at the University of Heidelberg (committee’s approval: S-635/2013) and was performed in accordance with the Declaration of Helsinki, Good Clinical Practices as well as local ethics and legal requirements. To acquire long-term survival data, all patients were systematically followed up via continuous surveys. The relevant survival data for this patient collective was gathered until October 2020.

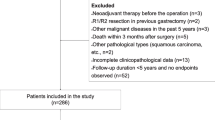

Inclusion and exclusion criteria

Out of the main collective, only those patients were further taken into account with a preoperatively diagnosed and histologically proven adenocarcinoma of the distal esophagus, the esophagogastric junction or stomach. Patients were excluded who had squamous cell carcinoma at any location. Also, only radical oncological resections were evaluated as opposed to exploratory laparotomies with palliative treatment. Furthermore, the type of oncological resection was limited to (sub-) total gastrectomy, gastrectomy with transhiatal extension, proximal gastrectomy, abdominothoracic esophagectomy and discontinuity resection of the esophagus. Variables with a missingness greater than 50% were generally excluded from further analysis. Also, patients were excluded with a case-specific missingness of more than 10%.

Data structure

Eventually, the dataset had a total of 117 features and 1360 patients. Out of the whole dataset, 92 variables were categorical (with 77 Boolean variables) and 25 variables were numeric. Table 1 gives a short overview of the parameters including all dependent variables (or end points, respectively). There were obvious survival predictors and other variables which had to be excluded due to collinearity (see Supplementary Fig. 1). Regarding survival data, 53.5% of the records were censored with an equal distribution over the whole time span. Supplementary Fig. 2 demonstrates the censored data added on top of the patients that verifiably passed away and plotted against the follow-up period.

Missingness and imputation

After the application of the above-mentioned criteria, there was a remaining missingness in 2,033 datapoints and thus an overall missingness of 1.3% without any duplicate values (see Supplementary Fig. 3). For imputation, missingness at random was assumed and multiple imputations with n = 1000 iterations via IterativeImputer from the Scikit learn package (Pedregosa et al. 2011) were performed on Python 3.9 (Rossum and Drake 2009).

Statistical analysis

All statistical analyses were performed on Python 3.9 with packages such as scikit-learn 0.24.2 by Pedregosa et al. (2011). To compare various machine learning algorithms, the package PySurvival by Fotso et al. (2019) was implemented. The performances of the different algorithms were scored according to the concordance index (Uno et al. 2011) which can be regarded as the most important outcome parameter in this study. Hyperparameter optimization and cross-validation are all based on this concordance index, or c-index. The c-index is a generalization of the area under the receiver operating characteristic (ROC) curve (AUC) for censored outcome parameters such as right-censored survival data. It represents the global assessment of the model’s discrimination power which is the model’s ability to correctly provide a reliable ranking of the survival times based on the individual risk scores (Stephane Fotso 2019). The modified version of the c-index by Uno et al. is implemented in PySurvival and thus in this study and works as a simple nonparametric estimator free of censoring under the assumption of random censorship in general.

Furthermore, the inverse probability of censoring weights (IPCW c-index) was considered as an alternative to the c-index which is independent of the distribution of censored cases in the test data. This was relevant due to the comparably high prevalence of censored time points in the present dataset (see Supplementary Fig. 2). To evaluate another performance score, the integrated Brier-score (further abbreviated as IBS) was utilized (Steyerberg et al. 2010). The IBS was eventually plotted to demonstrate prediction error with a cut-off limit of 0.25 considered as critical. Furthermore, the actual survival function was plotted against the predicted function by the model. Finally, time-dependent evaluation of the area under the curve (AUC) was performed for selected machine learning ensembles provided by the scikit-survival package version 0.15.1 (Pölsterl 2020).

Feature selection and importance

We did not perform feature selection before application of algorithms since previous works by Spooner et al. have shown that feature selection in this scenario does not significantly improve model performance (Spooner et al. 2020). The authors have applied seven different feature selection algorithms before running 5-fold cross-validation with 5 repeats. The results did not show any significant improvement of test statistics and most machine learning algorithms have a feature selection method internalized already. Moreover, we tested feature selection methods such as the Boruta variable selection algorithm (Kursa and Rudnicki 2010) for short-term outcome parameters such as in-hospital mortality and 30-day mortality. The results of the outcome analyses based on the selected variables are not shown in this manuscript because of unconvincing results. More importantly, these methods are not appropriate to deal with right-censored survival data and some of the presented machine learning methods have already internalized feature selection algorithms such as the different importance modes of the survival forests.

For eventual interpretation of feature importance, we identified the 20 most relevant predictors after fitting the corresponding machine learning algorithm via permutation-based importance evaluation provided by ELI5 adapted to the scikit-learn package (Arya et al. 2020). The algorithm computes feature importance for any black-box estimator by measuring how the score decreases when a feature is not available.

Machine learning algorithms

The classic Cox proportional hazards model introduced by David Cox in 1972 is probably the most popular survival prediction model with an easy to interpret statistic (Cox 1972). We also tested a non-linear Cox proportional hazards model (also called DeepSurv) which is a multilayer perceptron (Katzman et al. 2018). However, the authors’ work is also known for the focus on recommender functions and treatment decision making. The linear multi-task logistic regression (L-MTLR) was introduced by Yu et al. in 2011 and operates on the basis of multiple logistic regressions (Yu et al. 2011). It may be considered as another general alternative to the Cox regression model. To increase flexibility, the neural multi-task logistic regression (N-MTLR) was introduced in 2019 (Stephane Fotso 2019). To fully represent all available survival prediction models, we also included one parametric model, the Gompertz model, as the relatively strongest test statistic compared to the Weibull and exponential model which are not demonstrated in this work. Last but not least, several random survival forest models were analyzed including the classic random survival forest (RSF) by Ishwaran et al. (2008). The Conditional Survival Forest model was developed by Wright et al. to improve splitting of the RSF by applying maximally selected rank statistics for the split point selection (Wright et al. 2017). The Extra Trees incorporated in PySurvival are an extension of the Extremely Randomized Trees (Geurts et al. 2006) which is a supervised learning method with almost totally randomized decision trees. The model is eventually independent of the output values of the learning sample and impresses with its high computational efficiency.

Hyperparameters and optimization

All machine learning methods provided by PySurvival need specific hyperparameters to be optimized and defined before fitting the model. We performed hyperparameter optimization through defining the closest neighbor parameter values for each algorithm. Thereafter, the optimization was executed via Halving Grid Search included in the latest version of scikit-learn (Pedregosa et al. 2011). Halving Grid Search operates via successive halving of the candidate hyperparameters and their effect on the final test statistic as measured by the c-index. Although Halving Grid Search was not yet applied in many scientific works, it has already been shown to yield equivalent results for hyperparameter tuning compared to usual brute-force Grid Searching, however, with a much higher computation efficiency (Sraitih et al. 2021).

Some of the optimized hyperparameters will be presented in this paragraph. After hyperparameter optimization, the non-linear Cox proportional Hazards (DeepSurv), linear multi-task regression (LMTR), neural multi-task regression (NMTR) and parametric gompertz model used the so-called “Glorot” or “Xavier” uniform method for initialization (Glorot and Bengio 2010) which follows the Sigmoid activation function. The optimal initialization method for the standard Cox proportional hazards model was determined as “zeros” with initial setting of the tensors to 0. Other important hyperparameters for the above-mentioned five models were learning rate, number of epochs and last but not least L2 regularization to fight overfitting through minimization of weights. All survival forest models were fitted with an optimized number of trees of 200. The extra survival trees model (EST) used the “impurity corrected” method for variable importance which is a bias correction for the Gini variable in classification trees introduced by Sandri et al. (Sandri and Zuccolotto 2008). However, the optimal importance mode for conditional survival forest (CSF) and random survival forest (RSF) was determined to be “normalized permutation” which is a normalized version of the permutation importance originally introduced by Breiman et al. (Breiman 2001). While the three survival forest models used all available features, they differed in the determined minimum node sizes which were 15 for the CSF, 8 for the EST and five for the RSF. All hyperparameters are demonstrated in Supplementary Table 2.

Results

The study included 1360 patients with oncological resection between 2001 and 2020 and 117 variables. Table 2 summarizes all patient characteristics with according percentage of missingess which were mainly evaluated as potentially relevant predictors for the machine learning models except for obvious outcome parameters.

First of all, we tested the introduced machine learning algorithms which are demonstrated with the according test statistic in Fig. 1. The standard Cox proportional hazards (CPH) model is demonstrated in Fig. 1a and reached a c-index of 0.645 and an integrated Brier score (or IBS) of 0.221. The prediction error curve is depicted in the last column of Fig. 1 with the values for the Brier score as an integral. The non-linear Cox proportional hazards model (see Fig. 1b) was able to improve the statistic with a c-index of 0.681 and an IBS of 0.194. Note that while both Cox proportional hazards models initially have a comparably good score, the integral reaches the critical limit of 0.25 as the timespan reaches 10 years after cancer diagnosis.

Compared to the first two calculations, the c-index could not be improved by linear multi-task logistic regression (see Fig. 1c, c-index = 0.673, IBS = 0.229). However, the neural multi-task logistic regression reached a clearly better prediction of the actual survival function with a root-mean-square error (RMSE) of the actual predicted survival curve of 9.188 (see Fig. 1d, c-index = 0.672, IBS = 0.254). Note that both regression methods also exceed the IBS cut-off value of 0.25 which is generally considered as an acceptable limit. The Gompertz model as an example for a parametric model was not able to significantly improve the test statistic and showed relatively weak results (see Fig. 1e, c-index = 0.677, IBS = 0.194).

However, the CPH models could be outperformed by all three survival forest methods with the RSF being the strongest prediction model (see Fig. 1h, c-index = 0.736, IBS = 0.166). The Extra Survival Trees (see Fig. 1g, c-index = 0.736, IBS = 0.167) and the Conditional Survival Trees (see Fig. 1f, c-index = 0.726, IBS = 0.166) showed similarly strong results which could still not outperform the RSF even after hyperparameter optimization. The accurate prediction by RSF is again underlined by the direct comparison between actual and predicted survival function demonstrated in the second column. Here, the root-mean-square error (RMSE) of the RSF was calculated as 6.224.

To establish a more practical and compact approach, we selected the most important features identified by the RSF. Table 3 shows the permutation-based importance of the 20 most important parameters identified by the RSF model. While the individual weights of the predictors are not remarkably high (except for lymph node ratio), the RSF model can still build the prediction based on all variables of the dataset. The weights of the predictors are to be interpreted in a fashion that for instance the omittance of lymph node ratio in the model would evoke a change of the resulting c-index by 0.118 with the specified 95% confidence range.

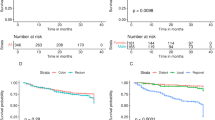

The time-dependent area under the curve (AUC) is separately demonstrated for the six most important predictors. It is remarkable that the AUC factors such as duration of intensive care, postoperative complications and intraoperative blood loss lose their predictive value rapidly as time passes. In contrast, the significance of lymph node ratio increases postoperatively and stays stable on an AUC level above 0.65 more than 5 years after cancer diagnosis (see Fig. 2a).

a Time-dependent AUC of the six most important variables according to permutation-based importange (see Table 3). b Time-dependent AUC of different models. AUC area under the curve; ICU intensive care unit

While predictors have a time-dependent importance, the prediction models also show a time-dependent accuracy. Of note, the RSF algorithm outperforms the CPH model on the time-dependent scale with an AUC of 0.821 (as opposed to 0.720 for the CPH model, see Fig. 2b). The remaining machine learning models also showed better time-dependent performances than CPH, but were not able to outperform the RSF algorithm. While all models were able to predict survival with a very high score above 0.9 in the very first months, the predictions generally tended to decrease in accuracy while time progressed. However, the long-term survival prediction was most successful according to the RSF model.

Figure 3a shows a risk scoring model based on the test statistic calculated by the RSF algorithm. A numeric risk score is assigned to each patient ranging from 4.7 to 7.1. Three different colors were utilized to depict the low-, medium- and high-risk group. The differentiation of three groups was performed manually based on the distribution of risk scores. Figure 3b shows the survival curves of all individuals classified by the scoring system as low, medium or high-risk within the same predefined test group. The three survival curves differ significantly according to the log-rank test (p < 0.0001) with the low-risk group having a 5-year survival rate of 73.18%, the medium-group showing 45.39% and the high-risk group finally 14.87%. Median survival time was 18.754 months in the high-risk group, 44.557 months in the medium-risk group and incalculable in the low-risk group since the survival rate did not fall below 50% in the observed long-term time interval of 10 years. Also, the survival curves can be separated into more groups to enable a further stratification of risk groups (not shown).

Finally, the 20 most relevant predictors from the permutation-based importance scoring (see Table 3) were selected to establish a more compact RSF model. Supplementary Fig. 4 shows the distribution of the risk scores resulting from the compact RSF model. A risk score between 0 and 5.3 was considered as low-risk, a score between 5.3 and 6.1 as medium-risk and a score above 6.1 as high-risk for decease after resection. The according survival curves of the three different groups from the test cohort are demonstrated in Supplementary Fig. 5. The low-risk group had an incalculable median survival longer than 10 years, the medium-risk group had a median survival of 85.639 months and the high-risk group 20.721 months.

Discussion

To improve the prediction of overall survival after gastroesophageal cancer resections we tested eight machine learning algorithms in this retrospective survival analysis to determine the best prediction model for oncological outcome. The Cox proportional hazards (CPH) model is by far the most frequently applied model to evaluate significant survival predictors with coefficient metrics that are easy to interpret. However, the CPH model relies on a manual selection of the most important features with potential omittance of important features. Therefore, the CPH model may not be able to handle complex and vast data with multidimensional features. Machine learning methods have shown since their application in econometrics and biosciences that it is especially useful for prediction problems (Jordan and Mitchell 2015). Using the c-index as a measure of performance, the random survival forest (RSF) model achieved the most accurate prediction with a c-index of 0.736. This value is generally considered in the setting of time-dependent censored data such as long-term survival as a good to strong prediction model. Moreover, we could show that the RSF algorithm was able to outperform the CPH model as well as all other machine learning models. In our setting, the RSF is robust which is proofed by 3-, 4- and 5-fold cross-validation with 5 repeats each (data not shown). We therefore believe that the RSF model is the most suitable machine learning algorithm to predict oncological outcome after curative resection of gastroesophageal adenocarcinoma.

The strength as well as the limitation of this study is the availability of detailed and high-resolution data for each patient. On the one hand, it was possible to establish an excellent prediction model under these circumstances. On the other hand, it might not be feasible in every setting to extract all presented data for each patient who is treated in a surgical hospital. To establish a more practical approach, we selected the 20 most important features identified by the RSF. By reducing the necessary input parameters from 117 to 20 and thus avoiding any tedious or unnecessary data management, it may be possible to make oncological predictions more practicable for the surgical oncologist. The resulting test statistic of this compact RSF model performs in a comparable strength and could be applied in the future. In both cases, extended or compact RSF, the test statistic outperformed the Cox proportional hazards model. It is also imaginable to establish an online application similar to the surgical risk calculator by the American College of Surgeons (Bilimoria et al. 2013) enabling clinicians to enter the 20 variables anonymously, since no identifying information is needed to handle the algorithm. The application can then generate an individual assessment based on the information that is present at the time of discharge. Given the anonymous information, it would also be possible to show specific survival curves according to the trained model and the risk group that the patient belongs to. This is statistically legitimate since the plotted survival curve represents the survival function for all patients with the same risk score range over the time span of 10 years.

The application of an individual and numeric risk score summarizes all available clinical and histopathological data that is predictive for long-term oncological outcome. Future clinical trials could rely on this risk score to find differences for overall survival which is the actual outcome parameter of primary interest. Thus, it is imaginable that subgroups could be treated with or without adjuvant therapy not according to single features such as TNM-status but based on the affiliation to the risk groups presented in this study. Likewise, high-risk patients with worst prognosis could be analyzed separately to eventually achieve improvements in surveillance and treatment. In this context, it is urgent to identify and characterize high-risk patients with unfavorable histopathological and postoperative parameters who still show a comparably good survival. To this date, it is not properly understood if the mere fact that the tumor was resected may nevertheless have an impact on overall survival for this specific patient collective and it is debatable if immunological reactions play a role. It is also necessary to implement a generally accepted risk score such as the presented machine learning model regardless of resectional status to offer a prognostic tool also for palliative situations.

This study is outstanding due to a systematic follow-up, high data quality and a thorough, state-of-the-art imputation with application of documented Python packages. In regard to most medical publications that often do not even deal with missingness at all, the overall data missingness of 1.3% is considered to be comparatively low. Moreover, we imputed the missing data via IterativeImputer from the scikit-learn package. IterativeImputer is a multivariate imputation method which estimates each missing feature from all other existing data points. Thus, it is possible to impute missing values by modeling each missing feature as a function of other features in a round-robin fashion. Even though data missingness is in general a limitation, this very low number of missing data points and our elaborate way of imputation both stand out in contrast to other works with simple data point deletion or imputation via means. All reported machine learning models are retrievable and reproducible via open source and have been cited accordingly. However, the clinical relevance of the reported RSF model as well as the other machine learning models needs to be clarified in prospective analyses with inevitably necessary external validation. Furthermore, the value differences between the several survival forest models are limited to some hundredths of the c-index and the discussion of these differences may be of limited clinical relevance. Nevertheless, we could show in a supervised learning setting that the algorithms were able to automatically select and extract predictors that are verifiably relevant for long-term prognosis.

Conclusions

The results of our study demonstrate how machine learning algorithms can outperform usual prediction models for oncological outcome after resection of gastroesophageal tumors. Our results suggest that compared to other methods the random survival forest is most appropriate to predict long-term prognosis. We provide a clinical tool for clinicians which has to be validated based on further collectives in the future. In the future, interventional trials could be designed in a way that outcome parameters such as the presented risk score are evaluated to measure therapeutic interventions.

References

Akcay M, Etiz D, Celik O (2020) Prediction of survival and recurrence patterns by machine learning in gastric cancer cases undergoing radiation therapy and chemotherapy. Adv Radiat Oncol 5:1179–1187. https://doi.org/10.1016/j.adro.2020.07.007

Arya V, Bellamy RKE, Chen P-Y, Dhurandhar A, Hind M, Hoffman SC, Houde S, Liao QV, Luss R, Mourad S, Pedemonte P, Raghavendra R, Richards JT, Sattigeri P, Shanmugam K, Singh M, Varshney KR, Wei D, Zhang Y (2020) AI explainability 360: an extensible toolkit for understanding data and machine learning models. J Mach Learn Res 21:1–6

Bilimoria KY, Liu Y, Paruch JL, Zhou L, Kmiecik TE, Ko CY, Cohen ME (2013) Development and evaluation of the universal ACS NSQIP surgical risk calculator: a decision aid and informed consent tool for patients and surgeons. J Am Coll Surg 217:833-842.e3. https://doi.org/10.1016/j.jamcollsurg.2013.07.385

Breiman L (2001) Random forests. Mach Learn 45:5–32. https://doi.org/10.1023/A:1010933404324

Cox DR (1972) Regression models and life-tables. J R Stat Soc Ser B 34:187–202. https://doi.org/10.1111/j.2517-6161.1972.tb00899.x

Stephane Fotso (2019) PySurvival: open source package for survival analysis modeling. https://www.pysurvival.io. Accessed 29 May 2021

Geurts P, Ernst D, Wehenkel L (2006) Extremely randomized trees. Mach Learn 63:3–42. https://doi.org/10.1007/s10994-006-6226-1

Glorot X, Bengio Y (2010) Understanding the difficulty of training deep feedforward neural networks. J Mach Learn Res 9:249–256

Hu C, Steingrimsson JA (2018) Personalized risk prediction in clinical oncology research: applications and practical issues using survival trees and random forests. J Biopharm Stat 28:333–349. https://doi.org/10.1080/10543406.2017.1377730

Ishwaran H, Kogalur UB, Blackstone EH, Lauer MS (2008) Random survival forests. Ann Appl Stat 2:841–860. https://doi.org/10.1214/08-AOAS169

Jiang Y, Yuan Q, Lv W, Xi S, Huang W, Sun Z, Chen H, Zhao L, Liu W, Hu Y, Lu L, Ma J, Li T, Yu J, Wang Q, Li G (2018) Radiomic signature of 18F fluorodeoxyglucose PET/CT for prediction of gastric cancer survival and chemotherapeutic benefits. Theranostics 8:5915–5928. https://doi.org/10.7150/thno.28018

Jordan MI, Mitchell TM (2015) Machine learning: trends, perspectives, and prospects. Science. https://doi.org/10.1126/science.aaa8415

Katzman JL, Shaham U, Cloninger A, Bates J, Jiang T, Kluger Y (2018) DeepSurv: personalized treatment recommender system using a Cox proportional hazards deep neural network. BMC Med Res Methodol. https://doi.org/10.1186/s12874-018-0482-1

Kourou K, Exarchos TP, Exarchos KP, Karamouzis MV, Fotiadis DI (2015) Machine learning applications in cancer prognosis and prediction. Comput Struct Biotechnol J 13:8–17. https://doi.org/10.1016/J.CSBJ.2014.11.005

Kulig P, Nowakowski P, Sierzȩga M, Pach R, Majewska O, Markiewicz A, Kołodziejczyk P, Kulig J, Richter P (2021) Analysis of prognostic factors affecting short-term and long-term outcomes of gastric cancer resection. Anticancer Res. https://doi.org/10.21873/anticanres.15140

Kursa MB, Rudnicki WR (2010) Feature selection with the boruta package. J Stat Softw 36:1–13. https://doi.org/10.18637/jss.v036.i11

Malhotra GK, Yanala U, Ravipati A, Follet M, Vijayakumar M, Are C (2017) Global trends in esophageal cancer. J Surg Oncol 115:564–579. https://doi.org/10.1002/jso.24592

Panahiazar M, Taslimitehrani V, Pereira N, Pathak J (2015) Using EHRs and machine learning for heart failure survival analysis. Stud Health Technol Inform. https://doi.org/10.3233/978-1-61499-564-7-40

Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Blondel M, Prettenhofer P, Weiss R, Dubourg V, Vanderplas J, Passos A, Cournapeau D, Brucher M, Perrot M, Duchesnay É, Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Blondel M, Prettenhofer P, Weiss R, Dubourg V, Vanderplas J, Passos A, Cournapeau D, Brucher M, Perrot M, Duchesnay E (2011) Scikit-learn: machine learning in python. J Mach Learn Res 12:2825–2830

Pölsterl S (2020) Scikit-survival: a library for time-to-event analysis built on top of scikit-learn. J Mach Learn Res 21:1–6

Pölsterl S, Conjeti S, Navab N, Katouzian A (2016) Survival analysis for high-dimensional, heterogeneous medical data: exploring feature extraction as an alternative to feature selection. Artif Intell Med 72:1–11. https://doi.org/10.1016/j.artmed.2016.07.004

Van Rossum G, Drake FL (2009) Python 3 reference manual. Scotts Val, CA Creat

Sandri M, Zuccolotto P (2008) A bias correction algorithm for the gini variable importance measure in classification trees. J Comput Graph Stat 17:611–628. https://doi.org/10.1198/106186008X344522

Sekercioglu N, Fu R, Kim SJ, Mitsakakis N (2021) Machine learning for predicting long-term kidney allograft survival: a scoping review. J Med Sci. https://doi.org/10.1007/s11845-020-02332-1

Spooner A, Chen E, Sowmya A, Sachdev P, Kochan NA, Trollor J, Brodaty H (2020) A comparison of machine learning methods for survival analysis of high-dimensional clinical data for dementia prediction. Sci Rep 10:20410. https://doi.org/10.1038/s41598-020-77220-w

Sraitih M, Jabrane Y, El Hassani AH (2021) An automated system for ECG arrhythmia detection using machine learning techniques. J Clin Med 10:5450. https://doi.org/10.3390/jcm10225450

Steyerberg EW, Vickers AJ, Cook NR, Gerds T, Gonen M, Obuchowski N, Pencina MJ, Kattan MW (2010) Assessing the performance of prediction models: a framework for traditional and novel measures. Epidemiology. https://doi.org/10.1097/EDE.0b013e3181c30fb2

Uno H, Cai T, Pencina MJ, D’Agostino RB, Wei LJ (2011) On the c-statistics for evaluating overall adequacy of risk prediction procedures with censored survival data. Stat Med 30:1105–1117. https://doi.org/10.1002/sim.4154

Wright MN, Dankowski T, Ziegler A (2017) Unbiased split variable selection for random survival forests using maximally selected rank statistics. Stat Med 36:1272–1284. https://doi.org/10.1002/sim.7212

Yu CN, Greiner R, Lin HC, Baracos V (2011) Learning patient-specific cancer survival distributions as a sequence of dependent regressors, In: Advances in neural information processing systems 24: 25th annual conference on neural information processing systems 2011. NIPS

Zhu W, Xie L, Han J, Guo X (2020) The application of deep learning in cancer prognosis prediction. Cancers (Basel). https://doi.org/10.3390/cancers12030603

Funding

Open Access funding enabled and organized by Projekt DEAL. The authors declare that no funds, grants, or other support was received during the preparation of this manuscript.

Author information

Authors and Affiliations

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by J-OJ, NC, NMW, HN, LP and TS. The first draft of the manuscript was written by J-OJ and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Ethics approval

All procedures followed were in accordance with the ethical standards of the responsible committee on human experimentation (institutional and national) and with the Helsinki Declaration of 1964 and later versions. The trial protocol was approved by the ethics committee at the University of Heidelberg (committee’s approval: S-635/2013).

Consent to participate

Informed consent was obtained from all individual participants included in the study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jung, JO., Crnovrsanin, N., Wirsik, N.M. et al. Machine learning for optimized individual survival prediction in resectable upper gastrointestinal cancer. J Cancer Res Clin Oncol 149, 1691–1702 (2023). https://doi.org/10.1007/s00432-022-04063-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00432-022-04063-5