Abstract

A conceptual and computational framework is proposed for modelling of human sensorimotor control and is exemplified for the sensorimotor task of steering a car. The framework emphasises control intermittency and extends on existing models by suggesting that the nervous system implements intermittent control using a combination of (1) motor primitives, (2) prediction of sensory outcomes of motor actions, and (3) evidence accumulation of prediction errors. It is shown that approximate but useful sensory predictions in the intermittent control context can be constructed without detailed forward models, as a superposition of simple prediction primitives, resembling neurobiologically observed corollary discharges. The proposed mathematical framework allows straightforward extension to intermittent behaviour from existing one-dimensional continuous models in the linear control and ecological psychology traditions. Empirical data from a driving simulator are used in model-fitting analyses to test some of the framework’s main theoretical predictions: it is shown that human steering control, in routine lane-keeping and in a demanding near-limit task, is better described as a sequence of discrete stepwise control adjustments, than as continuous control. Results on the possible roles of sensory prediction in control adjustment amplitudes, and of evidence accumulation mechanisms in control onset timing, show trends that match the theoretical predictions; these warrant further investigation. The results for the accumulation-based model align with other recent literature, in a possibly converging case against the type of threshold mechanisms that are often assumed in existing models of intermittent control.


1 Introduction

Many human sensorimotor activities that are sustained over time can be understood, on a high level, as the human attempting to control the body or the environment towards certain fixed or time-varying target states. Examples of such behaviours include postural control, tracking of external objects with eyes, hands or tools, and locomotion towards a target or along a path, either by foot or using some form of vehicle. In these types of behaviours, human behaviour has been likened to that of a servomechanism or controller (Wiener 1948), and since the 1940s, many mathematical models of human sensorimotor control behaviour have been proposed based on the continuous, linear feedback mechanisms of classical engineering control theory (e.g. Tustin 1947; McRuer et al. 1965; Nashner 1972; Robinson et al. 1986; Krauzlis and Lisberger 1994; Peterka 2000).

These basic ideas and models have been developed further in various directions. One line of investigation, building on notions from ecological psychology (Gibson 1986) or perceptual control theory (Powers 1978), has investigated the nature of the exact information extracted by humans from their sensory input for purposes of control (e.g. Lee 1976; McBeath et al. 1995; Salvucci and Gray 2004; Warren 2006; Zago et al. 2009; Marken 2014). An important goal in this field has been the identification of perceptual invariants, which provide direct sensory access to task-relevant information (e.g. the ratio between retinal size and expansion of an object is a good approximation of time to collision/interception; Lee 1976) and therefore lend themselves to simple but effective control heuristics, typically formulated as one-dimensional linear control laws.

Another important development has been the uptake of more modern control theoretic constructs, most notably optimal control theory (Kleinman et al. 1970; McRuer 1980). Optimal control models of sensorimotor behaviour suggest that humans act so as to minimise some cost function, typically weighing together control error and control effort, and theoretical predictions from these models have been confirmed experimentally (Todorov and Jordan 2002; Liu and Todorov 2007). Typical engineering-inspired realisations of optimal control models include inverse and forward models of the controlled system (Shadmehr and Krakauer 2008; Franklin and Wolpert 2011), but it remains contentious whether the nervous system has any such internal models, or whether it achieves its apparent optimality by means of other mechanisms (Friston 2011; Pickering and Clark 2014; Sakaguchi et al. 2015).

Another direction of research, which this paper aims to extend upon in particular, has emphasised the intermittency of human control. Already early researchers noted that humans are not always continuously active in their sensorimotor control, but often instead seem to make use of intermittent, ballistic control adjustments (Tustin 1947; Craik 1948); Fig. 1 provides an example. This mode of sensorimotor behaviour is well known from saccadic eye movements (e.g. Girard and Berthoz 2005), but has also been studied and evidenced in visuo-manual tracking (Meyer et al. 1988; Miall et al. 1993; Hanneton et al. 1997; Pasalar et al. 2005; van de Kamp et al. 2013; Sakaguchi et al. 2015), inverted pendulum balancing (Loram and Lakie 2002; Gawthrop et al. 2013; Zgonnikov et al. 2014) and postural control (Collins and De Luca 1993; Loram et al. 2005; Asai et al. 2009). A recurring suggestion in this work has been that control intermittency arises due to a minimum refractory time period that has to pass between consecutive bursts of control activity, and/or minimum control error thresholds that have to be surpassed before control is applied. Based on such assumptions, task-specific computational models of intermittent control have been proposed (e.g. Meyer et al. 1988; Collins and De Luca 1993; Miall et al. 1993; Burdet and Milner 1998; Gordon and Magnuski 2006; Asai et al. 2009; Martínez-García et al. 2016). However, the only complete, task-general, computational framework of intermittent control that we are aware of is that of Gawthrop and colleagues (Gawthrop et al. 2011, 2013, 2015). Their framework is an extension of the continuous optimal control theoretic models by Kleinman et al. (1970), features forward and inverse models, and includes provisions allowing for both a minimum refractory period and error deadzones.

Fig. 1 An early observation of intermittent-looking control by Tustin (1947). The plot is of the operator handle position in a gun turret aiming task. Note how a large fraction of the control signal plateaus with zero rate of change. Originally published in Journal of the Institution of Electrical Engineers - Part IIA: Automatic Regulators and Servo Mechanisms, 94(2), https://doi.org/10.1049/ji-2a.1947.0025 (1947); all rights reserved

This paper introduces an alternative computational framework for intermittent control, which was originally developed in the context of longitudinal and lateral control of ground vehicles. In that specific task context, the basic concepts have been described before (Markkula 2014, 2015). Here, the framework will be presented in a more general context, in the hope that it might prove useful also in other sensorimotor task domains. The framework ideas will also be developed for the first time in full mathematical detail, for the special case of one-dimensional control using stepwise control adjustments (further generalisation will be one topic in Sect. 7). The main example will be an application of the computational framework to specify a model of car steering, and human steering data will be used for testing some of the framework’s assumptions.

The two main theoretical aims of this paper are: (1) to propose a framework for sustained, intermittent control that starts out from a classical control theory standpoint, without incorporating the extra assumptions typical of optimal control theory. This allows direct generalisation to intermittent control from existing psychological models based on perceptual invariants and control heuristics, and it also has some interest in light of the above-mentioned debate about the neurobiological plausibility of optimal control theoretic models. (2) To propose a framework that actively connects with three concepts that are well established in contemporary neuroscience: motor primitives, neuronal evidence accumulation, and prediction of sensory consequences of motor actions; these will all be introduced in further detail in the next section. The use of any one of these three concepts in mathematical modelling of sensorimotor control is not novel in itself. However, to the best of our knowledge, the three have not previously been incorporated into one common framework. Such integration of modelling concepts from different research fields (perceptual psychology, control theory, perceptual decision-making, motor control, etc.) necessarily involves some degree of simplification. Specialists in the fields we borrow from here will hopefully forgive component-level imperfections, in the interest of working towards a meaningful bigger picture.

Section 2 will explain the three main concepts mentioned above and briefly review to what extent they have been adopted in existing models of sensorimotor control, before Sect. 3 introduces the proposed framework on a conceptual, qualitative level. Then, Sect. 4 will present a computational realisation of the framework, for the special case of one-dimensional stepwise control, and briefly describe how it can be applied to a minimal example task, as well as to ground vehicle steering. Next, in Sect. 5, a simple signal reconstruction method will be described. This method, the proposed computational formulations, and two datasets of human steering of cars, will then be put to use in Sect. 6, providing some first empirical support for the framework. Section 7 will provide a discussion of the empirical and theoretical results, the relationship between the proposed framework and existing theories and models, as well as outline some possible future developments, before the conclusion in Sect. 8.

2 Background

2.1 Motor primitives

There is much emerging evidence for the idea that animal and human body movement is constructed from a fixed or only slowly changing repertoire of stereotyped pulses or synergies of muscle activation, which can be scaled in amplitude to the needs of the situation, and combined with each other, for example, by linear superposition, to create complex body movement (Flash and Henis 1991; Flash and Hochner 2005; Bizzi et al. 2008; Hart and Giszter 2010; Giszter 2015). Task-specific models have been proposed, where, for example, manual reaching (Meyer et al. 1988; Burdet and Milner 1998) and car steering (Benderius 2014; Martínez-García et al. 2016) have been modelled as a sequence of superpositioned, ballistic motor primitives, for example bell-shaped pulses of movement speed. Furthermore, some authors have suggested task-general accounts describing motor control as constructed from such sequences of primitives (Hogan and Sternad 2012; Karniel 2013). Here, we integrate this line of thinking into a task-general, fully specified closed-loop computational account.

It should be noted that the term “motor primitive” has been used for a range of related but different concepts in the motor control literature; what we intend here could be further specified as kinematic motor primitives, described by Giszter (2015) as “patterns of motion without regard to force or mass, e.g. strokes [...] or cycles [...]” (p. 156).

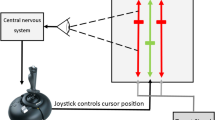

2.2 Evidence accumulation

From laboratory paradigms on perceptual decision-making, where humans or animals have to interpret sensory input to decide on a single correct motor action, there is strong behavioural and neuroimaging evidence suggesting that the initiation of the motor action occurs when neuronal firing activity in task-specific neurons has accumulated to reach a threshold, with noise in the accumulation process explaining action timing variability (Ratcliff 1978; Usher and McClelland 2001; Cook and Maunsell 2002; Gold and Shadlen 2007; Purcell et al. 2010); see Fig. 2 for an illustration. Importantly, the more unambiguous and salient the stimulus being responded to, the quicker the rate of increase of neuronal activity (e.g. Ditterich 2006; Purcell et al. 2010, 2012). It has been shown that by properly adapting the parameters of such an evidence accumulation to the task at hand, including sensory noise levels, the brain could use this type of mechanism to achieve Bayes-optimal perceptual decision-making (Bogacz et al. 2006; Bitzer et al. 2014).

Fig. 2 A schematic illustration of how neuronal evidence accumulation mechanisms explain action onset timing distributions in perceptual decision-making tasks. After the onset of a stimulus (\(t = 0\)), noisy neuronal activity builds up over time. The reaching of a threshold activity level predicts overt action onset in individual trials, and stimulus saliency affects the rate of activity build-up

A novel contribution of the present framework is the suggestion, conceptually and computationally, that (1) sustained sensorimotor control can be regarded as a sequence of such perceptual-motor decisions, and (2) the magnitude of control errors (among other things) might affect the rate of evidence accumulation. These suggestions are in contrast with existing models of intermittent control, which, as mentioned above, predominantly assume that control adjustment timing is determined by thresholds on control errors and/or inter-adjustment time durations. (Some interesting exceptions will be discussed in Sect. 7.2.)

2.3 Prediction of sensory outcomes of motor actions

It has been shown in both primates and other animals that whenever a movement command is issued in the nervous system, it tends to be accompanied by a so-called corollary discharge (possibly mediated by an efference copy of the movement command), biasing sensory brain areas whose inputs will be affected by the motor action in question. There is much evidence to support the idea that these biases are predictions of sensory consequences of the motor action, which might allow the nervous system to infer whether incoming sensory stimulation is due to the organism’s own actions or to external events (Sperry 1950; von Holst and Mittelstaedt 1950; Poulet and Hedwig 2007; Crapse and Sommer 2008; Azim and Alstermark 2015). For example, the image of the outside world translating over the retina could mean either that the outside world is rotating, or that the eyes are.

In sensorimotor control, a specific use of such a discriminating function could be to deal with time delays in the control loop, in a manner similar to the Smith Predictor in engineering control theory (Smith 1957; Miall et al. 1993): after initiating a control action to address a control error, the correct prediction for a time-delayed system is that the error will not disappear immediately, and as long as the control error responds as predicted over time, there is no need to infer that the situation in the external world has changed to warrant further control action than what is already being applied. This type of mechanism is common in continuous models of sensorimotor control (e.g. Kettner et al. 1997; Shadmehr and Krakauer 2008; Friston et al. 2010; Grossberg et al. 2012), and Gawthrop et al. (2011) also include it in their framework as one possible means of triggering control onset.

Here, we will propose a formulation of this type of intermittent predictive control that attempts to align more closely with the neurobiological concept of a corollary discharge. It will be shown how a prediction signal that is useful in the intermittent control context can be generated similarly to the intermittent control itself: as a superposition of simple primitives. Neuronal recordings from animals show time histories of corollary discharge biases that follow a general pattern of rapid initial increase followed by slower decay (Poulet and Hedwig 2007; Chagnaud and Bass 2013; Requarth and Sawtell 2014); interestingly the near-optimal “prediction primitives” proposed here for intermittent sensorimotor control take a similar form.

3 A conceptual framework for intermittent control

On a conceptual level, what is being proposed here is that sustained sensorimotor control can be understood and modelled as a combination of the three mechanisms described above, as follows: perceptual cues (e.g. invariants) that indicate a need for control—i.e. which indicate control error—are considered in a decision-making process that can be modelled as noisy accumulation towards a threshold. At this threshold, a new control action is initiated, in the form of a ballistic motor primitive that is superpositioned, linearly or otherwise, onto any other ongoing motor primitives. The exact motor primitive that is initiated is the one that the nervous system has reason to believe will be most appropriate, based on the available perceptual data and previous experiences. An important part of selecting an appropriate motor primitive might be a heuristic scaling of the primitive’s amplitude with the magnitude of the perceived control error. At motor primitive initiation, a prediction is also made, for example in the form of a corollary discharge, of how the control error will be reduced over time thanks to the new control action. This new prediction is superpositioned onto any previously triggered predictions. The resulting overall prediction signal inhibits (is subtracted from) the control error input, such that what the intermittent control is reacting to (what is being accumulated; what the control actions are scaled by) is actually “control error prediction error” rather than control error per se.

The next section develops this conceptual account into a computational one, for the special case of one-dimensional control using stepwise adjustments of a stereotyped shape, and also shows how it relates to more conventional, continuous linear control models.

4 Computational framework for stepwise one-dimensional control

4.1 Task-general formulation

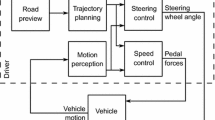

A very general formulation of continuous one-dimensional sensorimotor control is sketched in Fig. 3a. The human is assumed to process sensory inputs \(\mathbf {S}(t)\) and control targets \(\mathbf {T}(t)\) over time t, to yield a one-dimensional quantity P(t), that when delayed and multiplied by a gain K, yields the rate of change \(\dot{C}(t)\) of the control to be applied:

\[\dot{C}(t) = K \cdot P(t - {\tau _\mathrm{d}}) \qquad (1)\]

where \({\tau _\mathrm{d}}\triangleq {\tau _\mathrm{p}}+ {\tau _\mathrm{c}}+ {\tau _\mathrm{m}}\) is a sum of delays at perceptual, control decision, and motor stages, and where a positive \(\dot{C}\) changes the control in a direction that tends to change P in a negative direction, and vice versa. The control thus strives to reduce P to zero, such that P can be construed as a perceptual invariant quantifying a negative control error, or, differently put, quantifying the need for a change in control. Typically, this quantification will be non-exact and heuristic. Note that the control gain K can just as well be absorbed into the P function by fixing \(K = 1\) above, which gives P an even more specific interpretation as the needed rate of control change in the given situation, in units of \(\dot{C}\). As will be further explained below, assuming intermittent, constant-duration control adjustments, another possible interpretation of P, after rescaling by the adjustment duration, is as the needed control adjustment amplitude, in units of C. Among these various interpretations of P, we will mainly refer to it as a “perceptual control error” or just “control error”, to emphasise the connection to classical control theory, omitting the “negative” for ease of reading.

Note that P can take any arbitrary form with, for example, any orders of differentiation or integration of sensory inputs with respect to time, and note that mathematically equivalent control laws could also be obtained by such differentiation or integration of the entire Eq. (1), to instead model control in terms of, for example, C or \(\ddot{C}\), if desirable.

As suggested in Fig. 3b, the computational framework being proposed here can be understood as replacing the “control decision and motor output” component of this type of continuous model with the mechanisms that were outlined in Sect. 2, to generate control that is intermittent, but which in many circumstances will be rather similar in appearance to the continuous control (cf. Gawthrop et al. 2011).

Below, the different parts of the framework will be defined in detail.

4.1.1 Perceptual control error quantity

What is being proposed here is independent of what specific quantity P might appropriately quantify the human’s perceived need for control in the task at hand. In contrast, in many continuous models of human control, the main modelling challenge has in practice been to define a P such that Eq. (1) reproduces observed human behaviour as closely as possible. Below, some examples of continuous models from the literature will be provided, all of which can be written in the form of Eq. (1), thus making them all candidates for generalisation from continuous to intermittent control as proposed here.

For example, for a task of manually tracking a one-dimensionally moving target with a mouse cursor, Powers (2008) showed that the rate of mouse cursor movement could be well described as proportional to the distance \(D(t) = C(t) - C_{\mathrm {T}}(t)\) between actual and target cursor position:

\[\dot{C}(t) = -K \cdot D(t - {\tau _\mathrm{d}}) \qquad (2)\]

i.e. in this case we get simply \(P(t) = -D(t)\).
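As a rough illustration, this continuous tracking law can be simulated with forward-Euler integration. The sketch below assumes \(P(t) = C_{\mathrm {T}}(t) - C(t)\) and borrows the parameter values used later in Sect. 4.2 (\(K = 2.5\), \({\tau _\mathrm{d}}= 0.2\) s); the function name `simulate_continuous`, the step size, and the choice of treating P as zero before the delay has elapsed are illustrative assumptions rather than part of the original model description.

```python
def simulate_continuous(target, K=2.5, tau_d=0.2, dt=0.01, c0=0.0):
    """Forward-Euler sketch of dC/dt = K * P(t - tau_d), with P(t) = C_T(t) - C(t).

    `target` is a list of target positions C_T sampled every `dt` seconds.
    Returns the control signal C sampled at the same instants.
    """
    delay_steps = round(tau_d / dt)
    control = [c0]
    p_hist = []  # history of the perceptual control error quantity P
    for step, c_target in enumerate(target):
        p_hist.append(c_target - control[-1])  # P(t) = -D(t)
        # Assumption: before tau_d has elapsed, no error has reached the output.
        p_delayed = p_hist[step - delay_steps] if step >= delay_steps else 0.0
        control.append(control[-1] + dt * K * p_delayed)
    return control[:-1]


if __name__ == "__main__":
    response = simulate_continuous([1.0] * 1000)  # unit step target, 10 s
    print(f"control after 0.2 s: {response[20]:.3f}, after 10 s: {response[-1]:.3f}")
```

With a unit step in the target, the control stays at zero for the first \({\tau _\mathrm{d}}\) seconds and then settles close to the target.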

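A more general example can be had from McRuer et al. (1965), McRuer and Jex (1967), who, based on their work on the so-called cross-over model, suggested the following generalised Laplace domain expression for a human controller responding to a control error e:

\[C(s) = K \, \frac{T_\mathrm{L} s + 1}{T_\mathrm{I} s + 1} \, \mathrm{e}^{-{\tau _\mathrm{d}} s} \, e(s) \qquad (3)\]

where \(T_\mathrm{L}\) and \(T_\mathrm{I}\) are lead and lag time constants. Rewriting to time domain:

\[\dot{C}(t) = \frac{K}{T_\mathrm{I}} \left[ T_\mathrm{L} \dot{e}(t - {\tau _\mathrm{d}}) + e(t - {\tau _\mathrm{d}}) - \frac{C(t)}{K} \right] \qquad (4)\]

we see that in this case we can write:

\[P(t) = \frac{1}{T_\mathrm{I}} \left[ T_\mathrm{L} \dot{e}(t) + e(t) - \frac{C(t + {\tau _\mathrm{d}})}{K} \right] \qquad (5)\]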

Note that in this expression, the rate of control change that will be applied, after the total neuromuscular delay \({\tau _\mathrm{d}}\), depends also on the control value, and more precisely on what the control value will be just before the new control rate comes into effect.

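Another example of this type of rewriting of continuous controllers to the form of Eq. (1) is the PID-controller type model of upright postural control (quiet standing) proposed by Peterka (Peterka 2000; Maurer and Peterka 2005):

\[\dot{C}(t) = K_{\mathrm {P}} \dot{\theta }(t - {\tau _\mathrm{d}}) + K_{\mathrm {I}} \theta (t - {\tau _\mathrm{d}}) + K_{\mathrm {D}} \ddot{\theta }(t - {\tau _\mathrm{d}}) \qquad (6)\]

with \(\dot{C}\) now the rate of change of a balancing torque around the ankle joint, and where \(\theta \) is the body sway angle. Yet another example is the ecological psychology-based vehicle steering model by Salvucci and Gray (2004):

\[\dot{C}(t) = k_{\mathrm {nI}} {\theta _\mathrm{n}}(t - {\tau _\mathrm{d}}) + k_{\mathrm {nP}} {\dot{\theta }_\mathrm{n}}(t - {\tau _\mathrm{d}}) + k_{\mathrm {f}} {\dot{\theta }_\mathrm{f}}(t - {\tau _\mathrm{d}}) \qquad (7)\]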

with \(\dot{C}\) being rate of steering wheel angle change, and where \({\theta _\mathrm{n}}\) and \({\theta _\mathrm{f}}\) are visual angles to two reference points on the road, one “near” and one “far”. Note that in both of these latter two models, there are control gain parameters (the \(K_{\mathrm {\bullet }}\) and \(k_{\mathrm {\bullet }}\)) for all of the terms in P(t), so one can fix \(K = 1\) in Eq. (1), as mentioned above.

4.1.2 Evidence accumulation

When to perform a control adjustment is modelled here as a process of two-sided evidence accumulation (or drift diffusion; Ratcliff 1978; Ratcliff and McKoon 2008). In this type of model, the accumulation of strictly positive neural firing rates, as schematically illustrated in Fig. 2, is replaced by accumulation of a quantity that can be either positive or negative, with one threshold on either side of zero, \(A_+\) and \(A_-\), representing two different alternative decisions (this is mathematically equivalent to for example having two mutually inhibitory one-sided accumulators; Bogacz et al. 2006). In the present context of one-dimensional control, these two thresholds represent decisions to make a control adjustment in either of the two possible directions of control. Such an accumulator can be defined in many different ways. One rather general possible formulation, based on Bogacz et al. (2006) and Purcell et al. (2010), would be:

\[\frac{\mathrm {d}A(t)}{\mathrm {d}t} = \gamma \left[ \eta \left( \epsilon (t) \right) \right] - \lambda A(t) + \nu (t) \qquad (8)\]

where A(t) is the activation of the accumulator, \(-\lambda A(t)\) is a leakage term, and \(\nu (t)\) is noise, for example Gaussian white noise with zero mean and variance \({\sigma _\mathrm{a}}^2 \Delta t\) across a simulation time step of duration \(\Delta t\). Furthermore, \(\epsilon \) is the error in predicted need for control (or “control error prediction error”):

\[\epsilon (t) \triangleq {P_\mathrm{r}}(t) - {P_\mathrm{p}}(t) \qquad (9)\]

where \({P_\mathrm{p}}(t)\) is the brain’s prediction, to be defined in detail in Sect. 4.1.4, of the perception-delayed control error quantity \({P_\mathrm{r}}(t) \triangleq P(t - {\tau _\mathrm{p}})\). Finally, \(\eta (\epsilon )\) in Eq. (8) is an activation function, for example sigmoidal, and \(\gamma \) is a gating function, zero for small input values, for example defined, with a gating threshold \(\eta _0\), as:

\[\gamma (\eta ) = \mathrm {sgn}(\eta ) \cdot \max (0, |\eta | - \eta _0) \qquad (10)\]

In the example implementations of the framework proposed further below, the accumulators are simplified special cases of Eq. (8) with \(\eta _0 = \lambda = 0\), i.e. without gating or leakage, and with \(\eta (\epsilon ) = k \epsilon \), where k is a gain parameter, thus reducing the accumulation equation to

\[\frac{\mathrm {d}A(t)}{\mathrm {d}t} = k \epsilon (t) + \nu (t) \qquad (11)\]

which is also what is illustrated in Fig. 3.

As for the thresholds of the accumulator, it will in most control tasks probably make sense to select these to be of equal magnitude (\(|A_+| = |A_-|\)), and if so then these can both be set to unity magnitude without loss of generality (\(A_+ = 1; A_- = -1\)), since the accumulator activation is specified in arbitrary units.
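The simplified accumulator (gain k, no gating or leakage, thresholds at plus and minus one, reset to zero after each crossing) can be sketched in discrete time as follows. This is a minimal illustration under stated assumptions: the function name `run_accumulator`, the forward-Euler update, and the convention of reporting a crossing at the end of the time step in which it occurs are choices made here, and the noise is drawn with standard deviation \({\sigma _\mathrm{a}}\sqrt{\Delta t}\) per step to match the stated per-step variance.

```python
import math
import random


def run_accumulator(eps_samples, k=20.0, sigma_a=0.0, dt=0.001, seed=0):
    """Two-sided accumulation of eps with thresholds at +1 and -1.

    Returns a list of (time, direction) tuples, one per threshold crossing,
    where direction is +1 or -1. The accumulator is reset to zero after
    each crossing.
    """
    rng = random.Random(seed)
    activation = 0.0
    events = []
    for step, eps in enumerate(eps_samples):
        # Noise with variance sigma_a**2 * dt across one time step.
        noise = rng.gauss(0.0, sigma_a * math.sqrt(dt)) if sigma_a > 0 else 0.0
        activation += k * eps * dt + noise
        if activation >= 1.0 or activation <= -1.0:
            events.append(((step + 1) * dt, 1 if activation > 0 else -1))
            activation = 0.0  # reset after triggering an adjustment
    return events


if __name__ == "__main__":
    # A constant unit prediction error should trigger roughly every 1/k seconds.
    print(run_accumulator([1.0] * 200))
    # With noise, onset times vary from run to run.
    print(run_accumulator([1.0] * 200, sigma_a=1.0, seed=1))
```

In the noise-free case, a constant prediction error of magnitude \(\epsilon \) reaches threshold after approximately \(1 / (k |\epsilon |)\) seconds, so larger errors trigger adjustments sooner.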

4.1.3 Control adjustments

Upon reaching one of the accumulator thresholds, the accumulator is assumed to be reset to zero, and a new control adjustment primitive is generated (the “reset” and “trig” connections in Fig. 3b). In the framework formulation being proposed here, all control adjustments have the same general shape G, which could be any function which starts out at zero and, after an initial motor delay \({\tau _\mathrm{m}}\), rises to unity over the adjustment duration of \(\Delta T\), i.e. any function which fulfils:

\[G(t) = \begin{cases} 0 & \text{for } t \le {\tau _\mathrm{m}} \\ 1 & \text{for } t \ge {\tau _\mathrm{m}}+ \Delta T \end{cases} \qquad (12)\]

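Consequently, the rate of change of control during a control adjustment is given by a function \(\dot{G}\) that fulfils:

\[\dot{G}(t) = 0 \ \text{ for } t \le {\tau _\mathrm{m}} \text{ and for } t \ge {\tau _\mathrm{m}}+ \Delta T, \qquad \int _{{\tau _\mathrm{m}}}^{{\tau _\mathrm{m}}+ \Delta T} \dot{G}(t) \, \mathrm {d}t = 1 \qquad (13)\]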

For example, as hinted at in Fig. 3b, \(\dot{G}(t)\) could be a bell-shaped pulse beginning after a motor delay (\({\tau _\mathrm{m}}\)).
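One possible way to construct such a bell-shaped adjustment is sketched below, assuming that \(\dot{G}\) is a Gaussian truncated at plus and minus two standard deviations (the shape used in Sect. 4.2), rescaled so that G rises from exactly zero to exactly one. The function names `G` and `G_dot`, the helper `_phi`, and the default values \({\tau _\mathrm{m}}= 0.1\) s and \(\Delta T = 0.4\) s are illustrative choices.

```python
import math

Z_MAX = 2.0  # the pulse spans +/- Z_MAX standard deviations of a Gaussian


def _phi(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


_NORM = _phi(Z_MAX) - _phi(-Z_MAX)  # probability mass inside the truncation


def G(t, tau_m=0.1, delta_t=0.4):
    """Adjustment shape: 0 until tau_m, then a smooth rise to 1 over delta_t."""
    u = (t - tau_m) / delta_t
    if u <= 0.0:
        return 0.0
    if u >= 1.0:
        return 1.0
    z = 2.0 * Z_MAX * u - Z_MAX
    return (_phi(z) - _phi(-Z_MAX)) / _NORM


def G_dot(t, tau_m=0.1, delta_t=0.4):
    """Rate of change of G: a truncated Gaussian pulse with unit area."""
    u = (t - tau_m) / delta_t
    if u <= 0.0 or u >= 1.0:
        return 0.0
    z = 2.0 * Z_MAX * u - Z_MAX
    density = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return density * (2.0 * Z_MAX / delta_t) / _NORM


if __name__ == "__main__":
    for t in (0.0, 0.1, 0.2, 0.3, 0.4, 0.5):
        print(f"t = {t:.1f} s: G = {G(t):.3f}, G_dot = {G_dot(t):.3f}")
```

Because the truncated pulse is symmetric, G passes through one half at the midpoint of the adjustment, \(t = {\tau _\mathrm{m}}+ \Delta T / 2\).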

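The expected value of the amplitude for the ith adjustment, beginning at the time \(t_i\) at which the accumulator threshold was exceeded for the ith time, is obtained as:

\[\tilde{g}_i = K' \epsilon (t_i) = K \Delta T \, \epsilon (t_i) \qquad (14)\]

The relationship introduced above,

\[K' \triangleq K \Delta T \qquad (15)\]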

between the gains of the continuous and intermittent controls, is not a crucial part of the model as such, but ensures that the two controls will typically be close approximations of each other. To see this, consider that for \({P_\mathrm{p}}\approx 0\), \(\epsilon \approx {P_\mathrm{r}}\approx P\), such that the intermittent control will respond to a control error P by adjusting the control by approximately \(K' P\) in a time duration \(\Delta T\), i.e. with an average rate of change of control of

\[\frac{K' P}{\Delta T} = K P \qquad (16)\]

which is also the control rate being applied by the continuous model around the same point in time.

Following up on the earlier discussion about the meaning of P when absorbing all control gains into it, note that fixing \(K' = 1\) in Eq. (14) now indeed makes P a quantification of needed control adjustment amplitude, in units of C (note that this is a consequence of formulating Eq. (1) in terms of \(\dot{C}\)).

Adding motor noise to Eq. (14), for example of a signal-dependent nature, whereby larger control movements will be more likely to have large inaccuracies (Franklin and Wolpert 2011), one can write the actual control adjustment amplitude:

\[g_i = K' \tilde{\epsilon }_i \qquad (17)\]

where:

\[\tilde{\epsilon }_i = (1 + m_i) \, \epsilon (t_i) \qquad (18)\]

with \(m_i\) drawn from a normal distribution of zero mean and variance \(\sigma _m^2\).

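Each new control adjustment is linearly superpositioned onto any adjustments that are already ongoing (see e.g. Flash and Henis 1991; Hogan and Sternad 2012; Karniel 2013; Giszter 2015), yielding, with \(g_i\) the amplitude of the ith adjustment, an output rate of control:

\[\dot{C}(t) = \sum _{i=1}^{n} g_i \dot{G}(t - t_i) \qquad (19)\]

and therefore:

\[C(t) = C_0 + \sum _{i=1}^{n} g_i G(t - t_i) \qquad (20)\]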

where n is the total number of adjustments generated so far, and \(C_0\) is an initial value of the control signal.
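The amplitude scaling and linear superposition described in this subsection can be sketched as below. Two assumptions should be noted: the signal-dependent motor noise is modelled here as a multiplicative factor \((1 + m_i)\) with \(m_i\) drawn from a zero-mean normal distribution of standard deviation \(\sigma _m\), and the adjustment shape is a simple linear ramp, used only as a stand-in for any admissible G. All function names are hypothetical.

```python
import random


def ramp(t, tau_m=0.1, delta_t=0.4):
    """Simplest admissible G: zero until tau_m, then a linear rise to one."""
    return min(1.0, max(0.0, (t - tau_m) / delta_t))


def adjustment_amplitudes(eps_at_onsets, k_prime=1.0, sigma_m=0.0, seed=0):
    """Amplitudes g_i = K' * (1 + m_i) * eps(t_i), with m_i ~ N(0, sigma_m**2).

    The multiplicative noise form is an assumption made for this sketch.
    """
    rng = random.Random(seed)
    return [k_prime * (1.0 + rng.gauss(0.0, sigma_m)) * eps for eps in eps_at_onsets]


def control_signal(t, onsets, amplitudes, c0=0.0, shape=ramp):
    """Control at time t: initial value plus all superposed adjustments."""
    return c0 + sum(g * shape(t - t_i) for t_i, g in zip(onsets, amplitudes))


if __name__ == "__main__":
    onsets = [0.0, 0.2]  # adjustment onset times (s)
    amplitudes = adjustment_amplitudes([1.0, -0.5])  # noise-free
    for t in (0.1, 0.3, 0.5, 1.0):
        print(f"t = {t:.1f} s: C = {control_signal(t, onsets, amplitudes):.3f}")
```

In the usage example the second adjustment starts while the first is still in progress, so the two partially overlap before the control settles at the sum of the two amplitudes.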

4.1.4 Prediction of control error

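The prediction \({P_\mathrm{p}}(t)\) of the perceptual control error quantity P(t) is generated by a similar superposition:

\[{P_\mathrm{p}}(t) = \sum _{i=1}^{n} \tilde{\epsilon }_i H(t - t_i) \qquad (21)\]

where \(\tilde{\epsilon }_i\) is the (motor-noise-perturbed) prediction error that scaled the ith adjustment, and H(t) is a function describing how, in the human’s experience, control errors typically become corrected over time by a control adjustment, in the task at hand. By analogy with Eq. (20), H could be termed a prediction primitive, and it is proposed here that this function should satisfy:

\[H(t) = \begin{cases} 0 & \text{for } t < 0 \\ 1 & \text{for } t = 0 \\ 0 & \text{for } t \ge {T_\mathrm{p}} \end{cases} \qquad (22)\]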

where \({T_\mathrm{p}}\) is the typical time from the triggering of a control adjustment until the controller receives evidence that the control error in question has become completely corrected. For \(0< t < {T_\mathrm{p}}\), H should describe how the perceptual control error quantity is predicted to respond over time to the control adjustment. Mathematically, this part of H should thus be something like the following:

Thus, even though Eqs. (20) and (21) are similar in form, the sensory prediction primitive H is not the same as the motor primitive G; instead the latter is part of shaping the former. It is, however, not necessary to assume that the brain calculates something like Eq. (23) in detail. In practice, it might suffice to have a rather approximate H, for example describing a sigmoidal fall to zero, such as hinted at in Fig. 3b. This will be further exemplified in later sections.

Fig. 4 Simulations of a continuous model by Powers (2008), of tracking an on-screen cursor with a mouse, as well as a generalisation of the same model to intermittent control, using the computational framework proposed here. In these examples, the intermittent control model is simulated completely without noise. In panel (a), the grey vertical line shows the time (t = 0.1 s) at which the evidence accumulator (bottom panel) reaches threshold

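There are two further specific assumptions motivating the exact formulations of Eqs. (21) and (22). First, in the absence of motor noise it is assumed that the predicted control error immediately after the nth control adjustment onset at time \(t_n\) should be equal to the actual current control error at this time, i.e., writing \(t_n^{-}\) and \(t_n^{+}\) for the instants just before and just after the onset:

\[{P_\mathrm{p}}(t_n^{+}) = {P_\mathrm{r}}(t_n) \qquad (24)\]

Thus, after a new adjustment, the prediction should “acknowledge”, and start from, the currently observed control error. Second, over time, predicted control error should fall to zero. That the latter holds true with the proposed formulations is easy to see; it is a trivial consequence of requiring \(H(t > {T_\mathrm{p}}) = 0\). To see that the former assumption is realised, one can write, using \(H(0) = 1\) and the definition of \(\epsilon \):

\[{P_\mathrm{p}}(t_n^{+}) = {P_\mathrm{p}}(t_n^{-}) + \epsilon (t_n) H(0) = {P_\mathrm{p}}(t_n^{-}) + \left[ {P_\mathrm{r}}(t_n) - {P_\mathrm{p}}(t_n^{-}) \right] = {P_\mathrm{r}}(t_n) \qquad (25)\]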

It should be noted that if the prediction H is exact, the linear superpositions in Eqs. (19)–(21) should provide (near-) exact overall predictions for controlled systems that are (near-) linear (i.e. for which a superposition of several individual control adjustments yields a system response which is exactly or approximately a superposition of how the system would have responded to each control adjustment separately).
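The superposition of prediction primitives, and the property that the prediction starts from the currently observed error right after each new adjustment, can be checked numerically with a short sketch. It assumes the noise-free case, a prediction primitive of the form \(H(t) = 1 - G(t - {\tau _\mathrm{p}})\) for \(t \ge 0\) (appropriate when the control signal is itself the controlled quantity, as in the cursor-tracking example of Sect. 4.2), and a linear ramp as a stand-in for G. The names `ramp`, `H` and `predicted_error` are hypothetical.

```python
TAU_P = 0.05   # perceptual delay (s)
TAU_M = 0.1    # motor delay (s)
DELTA_T = 0.4  # adjustment duration (s)


def ramp(t):
    """Stand-in adjustment shape G: zero until TAU_M, then a linear rise to one."""
    return min(1.0, max(0.0, (t - TAU_M) / DELTA_T))


def H(t):
    """Prediction primitive: 1 at onset, falling to 0 as the adjustment is perceived."""
    if t < 0.0:
        return 0.0
    return 1.0 - ramp(t - TAU_P)


def predicted_error(t, onsets, eps_at_onsets):
    """Overall prediction P_p(t): superposition of scaled prediction primitives."""
    return sum(eps * H(t - t_i) for t_i, eps in zip(onsets, eps_at_onsets))


if __name__ == "__main__":
    onsets, eps = [0.0], [1.0]          # first adjustment: unit error at t = 0
    t_new, perceived_error = 0.3, 0.8   # a second threshold crossing at t = 0.3 s
    before = predicted_error(t_new, onsets, eps)
    new_eps = perceived_error - before  # prediction error at the new onset
    after = predicted_error(t_new, onsets + [t_new], eps + [new_eps])
    print(f"prediction just before: {before:.3f}, just after: {after:.3f}")
```

In this example the prediction jumps from its pre-onset value to the perceived error of 0.8 at the moment the second adjustment is triggered, because the new primitive contributes exactly the prediction error at its onset.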

In the next two subsections, the computational framework introduced above will be further explained and illustrated by means of two task-specific implementations.

4.2 A minimal example

Consider the simple continuous model by Powers (2008) in Eq. (2), of a human tracking a target on a screen with a mouse cursor. The panels of Fig. 4 show, in light blue, the response of this model, with \(K' = 1\), \(\Delta T = 0.4\) s (making \(K = K' / \Delta T = 2.5\)), and \({\tau _\mathrm{d}}= 0.2\) s, to a step input (panel a) and a more complex “sum-of-sines” input (panel b).

Also shown in Fig. 4, in black, is the behaviour of the same model when generalised to intermittent control, using the computational framework described above. Here, perceptual and motor delays were set to \({\tau _\mathrm{p}}= 0.05\) s and \({\tau _\mathrm{m}}= 0.1\) s, based on Lamarre et al. (1981), Cook and Maunsell (2002), Morrow and Miller (2003), Purcell et al. (2010); the accumulator gain was \(k = 20\), the accumulator thresholds were at positive and negative unity, and all the other parameters of the accumulator were set to zero (i.e. no gating, leakage, or noise). As shown in Fig. 5, \(\dot{G}\) followed, after the initial \({\tau _\mathrm{m}}\) delay, a Gaussian truncated at \(\pm \,2\) standard deviations, making G reminiscent of (although not identical to) a minimum-jerk movement (Hogan 1984). As for H, since in this task the control signal C is also the quantity being controlled (with appropriate units for mouse and cursor position, and disregarding any delays between them), \(Y(s)F(s) = 1\), and Eq. (23) suggests the following error prediction function:

\[H(t) = \begin{cases} 0 & \text{for } t < 0 \\ 1 - G(t - {\tau _\mathrm{p}}) & \text{for } t \ge 0 \end{cases} \qquad (26)\]

As can be seen in the third panel of Fig. 4a, H here thus specifies that after a control adjustment has been applied, \({P_\mathrm{p}}\) is first just set to \({P_\mathrm{r}}\), acknowledging the control error, then stays at this level for a period \({\tau _\mathrm{m}}\), before the control adjustment begins, and then an additional \({\tau _\mathrm{p}}\), while the effects of the adjustment feed through the perceptual system. Thereafter, \({P_\mathrm{p}}\) simply follows the shape of G down to a zero predicted error. It may be noted that this shape of H bears some resemblance to typical time courses of corollary discharge inhibition, as discussed in Sect. 2.3.
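The shapes of G and H described in this subsection can be sketched numerically. The following is a minimal illustration and not the authors' implementation: it assumes that \(\dot{G}\) is a Gaussian truncated at \(\pm \,2\) standard deviations spanning \(\Delta T = 0.4\) s, renormalised so that G rises from zero to one, and that H is one minus G shifted by \({\tau _\mathrm{p}}\). The names `G`, `H` and `SIGMA` are hypothetical.

```python
import math

TAU_P = 0.05    # perceptual delay (s), value given in the text
TAU_M = 0.10    # motor delay (s), value given in the text
DELTA_T = 0.4   # adjustment duration (s), value given in the text
SIGMA = DELTA_T / 4.0  # assumption: DELTA_T spans +/- 2 SD of the rate profile


def _phi(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def G(t):
    """Assumed adjustment profile: zero until TAU_M, then the integral of a
    truncated Gaussian rate profile, renormalised to rise from 0 to 1."""
    if t <= TAU_M:
        return 0.0
    if t >= TAU_M + DELTA_T:
        return 1.0
    z = (t - TAU_M - DELTA_T / 2.0) / SIGMA  # runs from -2 to +2
    value = (_phi(z) - _phi(-2.0)) / (_phi(2.0) - _phi(-2.0))
    return min(1.0, max(0.0, value))  # guard against rounding at the edges


def H(t):
    """Assumed prediction primitive: stays at 1 for TAU_M + TAU_P, then
    follows the shape of G down to a zero predicted error."""
    return 1.0 - G(t - TAU_P)
```

Under these assumptions, `H` is halfway down at \({\tau _\mathrm{p}}+ {\tau _\mathrm{m}}+ \Delta T/2 = 0.35\) s, which is when the perceived effect of the adjustment is changing fastest.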

Fig. 5. The bell-shaped control adjustment profile used for both the minimal cursor-tracking example in Sect. 4.2 and for the ground vehicle steering model

In Fig. 4a, note that the onset of control is equally delayed for the continuous and intermittent controllers, due to the parameter values for k and \(A_+\) being such that a unity control error accumulates to threshold in a time \({\tau _\mathrm{a}}= 1/k = 0.05\) s, i.e. \({\tau _\mathrm{p}}+ {\tau _\mathrm{a}}+ {\tau _\mathrm{m}}= 0.2\) s, the same as the \({\tau _\mathrm{d}}\) for the continuous model. In Fig. 4b, note that control adjustments often partially overlap, in linear superposition, to yield a less obviously stepwise resulting signal. Furthermore, note that the rate of control \(\dot{C}\) for the continuous model indeed looks much like an average-filtered version of the \(\dot{C}\) for the intermittent model (as discussed in Sect. 4.1.3). Therefore, if a human behaved as the intermittent controller does, the continuous model would still fit the observed behaviour very well. In the terms of Gawthrop et al. (2011), the intermittent control “masquerades” well as the continuous control. As discussed by Benderius (2014) and Gollee et al. (2017), such an underlying control intermittency might be able to account for much of the nonlinear “remnant” that is left unexplained by the continuous model. In other words, although presented here mainly as a first, minimal illustration of the proposed modelling framework, this simple model of visuo-motor tracking could potentially offer many of the same advantages over conventional, continuous models as other intermittent models of this task (Gawthrop et al. 2011; Sakaguchi et al. 2015); closer comparison would be an interesting avenue for future work.
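The delay arithmetic above can be checked with a small simulation. This is a sketch under the simplification stated for this example (no gating, leakage, or noise), so that the accumulator is assumed to reduce to \(\dot{A} = k\epsilon \) with a threshold at unity; the function name `time_to_threshold` and the Euler step size are choices made here, not taken from the paper.

```python
def time_to_threshold(error, k=20.0, threshold=1.0, dt=1e-4):
    """Euler-integrate dA/dt = k * error (assumed noise-free, leak-free
    accumulator) and return the time at which A reaches the threshold."""
    if error <= 0.0:
        raise ValueError("this sketch only handles positive, constant errors")
    activation, t = 0.0, 0.0
    while activation < threshold:
        activation += k * error * dt
        t += dt
    return t


tau_p, tau_m = 0.05, 0.10            # delays given in the text (s)
tau_a = time_to_threshold(1.0)       # unity control error
total_delay = tau_p + tau_a + tau_m  # compare with tau_d of the continuous model
tau_a_small_error = time_to_threshold(0.1)
```

With a unity error the accumulation time comes out at about 0.05 s, giving a total delay of about 0.2 s. A ten times smaller error takes about ten times longer to reach threshold, which is the defining property of accumulation-based onset timing.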

4.3 Application to ground vehicle steering

For the specific sensorimotor task of steering a car, research and control model development have followed the same general directions outlined in the Introduction, with examples of both classical control theoretic models (McRuer et al. 1977; Donges 1978; Jürgensohn 2007), ecological psychology models (Fajen and Warren 2003; Wann and Wilkie 2004; Wilkie et al. 2008), optimal control models (MacAdam 1981; Sharp et al. 2000; Plöchl and Edelmann 2007), and more recently also intermittent control models (Gordon and Magnuski 2006; Roy et al. 2009; Benderius 2014; Markkula 2014; Gordon and Srinivasan 2014; Gordon and Zhang 2015; Johns and Cole 2015; Boer et al. 2016; Martínez-García et al. 2016).

To further illustrate the proposed intermittent control framework, and as a platform for testing its major assumptions, a model of ground vehicle steering will be described here. The full details will be developed over several sections below, but for illustration purposes some examples of the final model’s time series behaviour are provided already in Fig. 6. Compared to the minimal example in Fig. 4, note the effect, in panels (b) and (c), of introducing noise: accumulator noise makes the adjustment timing less predictable, and motor noise causes a more inexact-looking steering profile, where \({P_\mathrm{p}}\) is generally not equal to \({P_\mathrm{r}}\) just after the adjustment onset [cf. Eq. (28)]. The simulation in Fig. 6c also includes noise emulating random disturbances in the vehicle’s contact with the road, in the form of a Gaussian disturbance to the vehicle’s yaw rate, of standard deviation \(\sigma _{\mathrm {R}}\) and band limited to 0.5 Hz with a third-order Butterworth filter (Boer et al. 2016).

Fig. 6. Example simulations of the lane-keeping steering model driving on a straight road, with model parameters as in Table 1

The steering model illustrated in Fig. 6 uses the computational framework proposed here, with the perceptual control error quantity P from Eq. (7), i.e. the model is a generalisation to intermittent control of the steering model proposed by Salvucci and Gray (2004). The adopted control adjustment functions \(\dot{G}\) and G were again those shown in Fig. 5. This choice was based on the results by Benderius and Markkula (2014), who showed that, across a wide range of real-traffic and driving simulator data sets, steering adjustments almost always followed a Gaussian-like rate profile, with average durations of about 0.4 s, encompassing about \(\pm \,2\) standard deviations of the Gaussian. As for H, note that again a sigmoidally decreasing function was used to generate the control error prediction \({P_\mathrm{p}}\).

The plant model Y was on the general form of a linear so-called “bicycle” model of lateral vehicle dynamics (Jazar 2008):

where \(v_{\mathrm {y}}\) is lateral speed in the vehicle’s reference frame, \(\omega \) is the rate of yaw rotation of the vehicle in a global reference frame, and \(\delta \) is the steering wheel angle, i.e. \(C = \delta \). Here, the \(\mathbf {A}\) and \(\mathbf {b}\) matrices were obtained by fitting to observed vehicle response in two experiments with human drivers.

These data sets of human steering, and how they have been analysed to (i) test framework assumptions and (ii) parameterise the model simulations shown in Fig. 6, will be described in Sect. 6. First, however, Sect. 5 will introduce an analysis method that will be needed in the following.

5 A simple method for interpreting sustained control as intermittent

Methods exist for decomposing shorter movement observations into a sequence of stepwise primitives, for example based on optimisation (Rohrer and Hogan 2003; Polyakov et al. 2009), high-order derivatives of the position signal (Fishbach et al. 2005), or wavelet analysis (Inoue and Sakaguchi 2015). Here, given our sustained control data with thousands of control adjustments, we adopt a considerably simpler method, which is less exact but also less computationally expensive, and which requires only first-order derivatives.

For a given digitally recorded control signal with N samples C(j) taken at times t(j), if one can estimate the times \(t_i\) of control adjustment onset, one can use a discretised version of Eq. (20),

to approximately reconstruct C(j) as n stepwise control adjustments with amplitudes \({\tilde{g}_i}\). By rewriting Eq. (32) as the overdetermined matrix equation

where

(i.e. a matrix with N rows and \(n + 1\) columns), and

one can obtain a standard least-squares approximation of \(\varvec{g}\) using:

or more efficient numerical techniques.
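The least-squares step can be sketched as follows. This is an illustration under assumptions rather than the authors' code: the discretised signal is assumed to have the form \(C(j) \approx C_0 + \sum _i {\tilde{g}_i}\, G(t(j) - t_i)\), with a leading column of ones for the initial level \(C_0\) accounting for the \(n + 1\) columns, and the adjustment profile `G` is the assumed truncated-Gaussian shape. The name `reconstruct_amplitudes` is hypothetical, and `numpy.linalg.lstsq` stands in for the "more efficient numerical techniques".

```python
import math
import numpy as np

TAU_M, DELTA_T = 0.10, 0.4   # values given in the text (s)
SIGMA = DELTA_T / 4.0        # assumption: DELTA_T spans +/- 2 SD


def G(t):
    """Assumed adjustment profile rising from 0 to 1 after a delay TAU_M."""
    if t <= TAU_M:
        return 0.0
    if t >= TAU_M + DELTA_T:
        return 1.0
    phi = lambda z: 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    z = (t - TAU_M - DELTA_T / 2.0) / SIGMA
    return (phi(z) - phi(-2.0)) / (phi(2.0) - phi(-2.0))


def reconstruct_amplitudes(t, c, onsets):
    """Fit an initial level and one amplitude per onset by least squares."""
    t = np.asarray(t, dtype=float)
    design = np.ones((t.size, len(onsets) + 1))  # N rows, n + 1 columns
    for i, t_i in enumerate(onsets):
        design[:, i + 1] = [G(t_j - t_i) for t_j in t]
    coeffs, *_ = np.linalg.lstsq(design, np.asarray(c, dtype=float), rcond=None)
    return coeffs[0], coeffs[1:], design @ coeffs


# Synthetic 60 Hz signal (illustration only) with two known adjustments.
t = np.arange(0.0, 3.0, 1.0 / 60.0)
true_c0, true_g, onsets = 0.5, [2.0, -1.0], [0.2, 1.4]
c = true_c0 + sum(g * np.array([G(t_j - t_i) for t_j in t])
                  for g, t_i in zip(true_g, onsets))
c0, g_hat, c_hat = reconstruct_amplitudes(t, c, onsets)
```

On this noise-free synthetic signal the fitted amplitudes match the ones used to build it; with recorded data the fit is only approximate, and its quality depends on how well the onset times are estimated.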

In order to estimate the times \(t_i\) of adjustment onset, one can make use of the fact that if a signal is composed of intermittent discrete adjustments with sufficient spacing between them, then each adjustment will show up as an upward or downward peak in the rate of change of the signal (cf. Figs. 4, 6). Therefore, a simple approach to estimating the \(t_i\) is to look for peaks in the control rate signal, after some appropriate amount of noise filtering, and define the steering adjustment onsets as occurring a time \(T_{\mathrm {peak}}\) before these peaks, where \(\dot{G}(T_{\mathrm {peak}})\) is the control rate maximum; i.e. here \(T_{\mathrm {peak}} = {\tau _\mathrm{m}}+ \Delta T / 2\).
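A possible implementation of this onset estimate is sketched below. It is a simplified illustration: the Gaussian kernel truncated at four standard deviations, the use of local maxima of the absolute rate, and the `min_rate` floor that suppresses numerical ripple on flat stretches are all choices made here, and `estimate_onsets` is a hypothetical name.

```python
import math
import numpy as np


def estimate_onsets(t, c, sigma_filter, t_peak, min_rate):
    """Estimate adjustment onsets from peaks in the smoothed control rate."""
    t = np.asarray(t, dtype=float)
    c = np.asarray(c, dtype=float)
    dt = t[1] - t[0]  # assumes uniform sampling
    # Gaussian-kernel averaging filter, truncated at +/- 4 SD.
    half = int(math.ceil(4.0 * sigma_filter / dt))
    offsets = np.arange(-half, half + 1) * dt
    kernel = np.exp(-0.5 * (offsets / sigma_filter) ** 2)
    kernel /= kernel.sum()
    smoothed = np.convolve(np.pad(c, half, mode="edge"), kernel, mode="valid")
    # Upward and downward rate peaks are both local maxima of |rate|.
    speed = np.abs(np.gradient(smoothed, dt))
    mid = speed[1:-1]
    is_peak = (mid > speed[:-2]) & (mid >= speed[2:]) & (mid > min_rate)
    return t[1:-1][is_peak] - t_peak


def smooth_step(x):
    """Unit step with a Gaussian rate profile (synthetic test shape)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


# Synthetic 60 Hz signal with rate peaks at 0.5 s (upward) and 2.0 s (downward).
t = np.arange(0.0, 3.0, 1.0 / 60.0)
c = np.array([2.0 * smooth_step((t_j - 0.5) / 0.1)
              - smooth_step((t_j - 2.0) / 0.1) for t_j in t])
onsets = estimate_onsets(t, c, sigma_filter=0.06, t_peak=0.3, min_rate=0.1)
```

Here `t_peak=0.3` corresponds to \({\tau _\mathrm{m}}+ \Delta T/2\) with the parameter values used in the text, so the two detected peaks map to onsets near 0.2 s and 1.7 s.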

6 Testing framework assumptions using human steering data

6.1 Data sets

The framework assumptions introduced in Sects. 3 and 4 imply specific predictions about what types of models should best account for human control behaviour. To test these predictions, two data sets of passenger car driving in a high-fidelity driving simulator were used:

(1) One set of 15 drivers recruited from the general public, performing routine lane-keeping on a simulated rural road, in an experiment previously reported on as Experiment 1 in Kountouriotis and Merat (2016). Here, only a subset of these data were used, by extracting the conditions with a straight road, no secondary task distraction and no lead vehicle. In total, there were four segments of such driving per participant, each 30 s long. The average observed speed was 97 km/h.

(2) One set of eight professional test drivers performing a near-limit, low-friction handling task on a circular track (50 m inner radius) on packed snow. The task was to keep a constant turning radius, at the maximum speed at which the driver could maintain stable control of the vehicle. Each driver performed the task four times, and here 15 s were extracted from each such repetition, beginning at the start of the second circular lap, at which point drivers had generally reached a fairly constant speed (observed average 43 km/h). The motivation for including these data here was to study a more extreme form of lane-keeping, where driver steering is arguably operating in an optimizing rather than a satisficing mode (Summala 2007). Three recordings where the driver lost control (identified as heading angle relative to circle tangent \(> 10^{\circ }\)) were excluded.

In both experiments, the University of Leeds Driving Simulator was used. In this simulator, drivers sit in a Jaguar S-type vehicle cockpit with original controls, inside a spherical dome onto which visual input of 310\(^{\circ }\) coverage (250\(^{\circ }\) forward, 60\(^{\circ }\) backward via rear view mirror) is projected. Motion feedback is provided by an eight-degree-of-freedom motion system: a hexapod mounted on a lateral-longitudinal pair of ±5 m rails. In both experiments, the steering wheel angle was recorded at a 60 Hz sample rate, with 0.1\(^{\circ }\) resolution.

Fig. 7. The effect of low-pass filtering on the reconstruction of human steering wheel angle data as intermittent stepwise adjustments. Less filtering (lower \({\sigma _\mathrm{I}}\)) produces more exact signal reconstructions, but with a larger fraction of potentially over-fitted steering adjustments (see the text for details)

6.2 Interpreting steering as intermittent control

The computational framework developed in Sect. 4.1 describes control as a sequence of stereotyped stepwise adjustments, with zero control change in between. Is it possible to understand the human steering in our data sets in this way? As a simple first indication, the fraction of time steps with zero change in steering wheel angle was indeed found to be rather large for both tasks: 45.8% for the circle task, and 91.1% for the lane-keeping; cf. the plateaus in Fig. 1.

Fig. 8. Example reconstructions of observed human steering as intermittent, stepwise control, using the method proposed in Sect. 5. Grey vertical lines and bands indicate identified adjustment centres and durations, respectively

To get a more complete answer, the signal reconstruction method introduced in Sect. 5 was applied, using the bell-shaped control adjustment G described in Sect. 4.3. The noise filtering of the steering wheel signal, here achieved using a Gaussian-kernel averaging filter, does affect the outcome of this method, since a more heavily filtered signal will present fewer control rate peaks. Therefore, as illustrated in Fig. 7, lower values of the filter kernel standard deviation \({\sigma _\mathrm{I}}\) produced reconstructions with larger numbers of steering adjustments and lower reconstruction error, here quantified in terms of 99th percentile of the absolute difference between recorded and reconstructed steering wheel angle.

However, reconstructing with frequent adjustments also means that more of these are partially overlapping. It was found that this could sometimes produce unwanted effects, such as a rapid succession of two large-amplitude adjustments of opposite sign, together producing a near-zero reconstructed steering angle. Such over-fitting tendencies were identified by comparing the peak steering wheel rate of the individual fitted adjustments to the observed steering wheel rate at the same points in time. These need not be identical, but when the fitted peak amplitude was more than 1.25 times larger than the observed steering rate peak, the adjustment was deemed a possible over-fit. The fraction of such adjustments is graphed against the right y axis in Fig. 7. Based on these results, \({\sigma _\mathrm{I}}\) was fixed at 0.1 s and 0.06 s for the lane-keeping and circle tasks, respectively.

With these values for \({\sigma _\mathrm{I}}\), the estimated adjustment frequencies, across the entire data sets, were 1.1 Hz and 2.0 Hz for the two tasks, a 98.2 and 96.6% compression compared to the original 60 Hz signals. As can be seen in Fig. 7, 99th percentile reconstruction errors were 0.33\(^{\circ }\) and 5.0\(^{\circ }\) in the two tasks. These values were seemingly inflated somewhat by certain recordings with atypically large reconstruction errors. At the level of individual recordings, median 99th percentile reconstruction errors were 0.23\(^{\circ }\) and 3.0\(^{\circ }\). Figure 8 shows examples of reconstructions that are typical in terms of estimated adjustment frequencies and reconstruction errors, as well as one example lane-keeping recording with a higher estimated frequency of control adjustment, and a larger reconstruction error.

Overall, these rather exact reconstructions using a small number of adjustments can be taken to suggest that something like intermittent stepwise control was indeed what drivers were making use of in these steering tasks. Such an interpretation seems qualitatively reasonable also from simply looking at the lane-keeping steering data, which, as mentioned, for the most part looked like Fig. 8a. Also the circle task steering, such as exemplified in Fig. 8c, had a decidedly staircase-like aspect. With examples such as the one shown in Fig. 8b, it is less qualitatively clear from the recorded steering signal itself that intermittent control might have been the case, but if one studies this plot closer (e.g. supported by the vertical stripes in the figure), one can see why a reconstruction as a limited number of stepwise changes works also here: basically, the control signal tends to always be either roughly constant (at 0, 1.3, 3.5, 4.8 s) or is changing upward or downward in a manner which can be understood as a single adjustment of about 0.4 s duration or shorter. Crucially, if control changes in the same direction for more than 0.4 s, it tends to do so with several identifiable peaks of steering rate (at 3–3.5 and 3.6–4.2 s). A main cause of less exact reconstruction seems to be cases where two such peaks come close enough together to merge into one peak in the low-pass filtering (around 4.5 s in Fig. 8b and around 0.6, 1.2, and 4 s in Fig. 8c).

Fig. 9. Steering amplitude model fits; one continuous model responding to perceptual control error [CM; panels (a) and (d)], one intermittent model responding to perceptual control error [IM; panels (b) and (e)], and one intermittent model responding to errors in prediction of perceptual control error [PIM; panels (c) and (f)]. The continuous and intermittent models predicted control rates (\(\dot{\delta }\)) and control adjustment amplitudes (\(g_i\)), respectively

6.3 Amplitude of individual steering adjustments

The proposed framework also suggests that it should be possible to predict control adjustment amplitudes from the control situation at adjustment onset, and to do so more accurately than continuous rates of control change can be predicted from the continuously developing control situation. To see whether this is the case here, we first consider a simplified, prediction-free version of Eq. (14), where the expected value of each control adjustment amplitude is:

In Fig. 3b, this corresponds to the lower part of the model (“Superposition of motor primitives”) being fed \({P_\mathrm{r}}\) directly instead of \(\epsilon \).

Note the similarity with the original, continuous Salvucci and Gray (2004) control law in Eq. (7), which, with \(K = 1\) and with the continuous model delay included, is:

This corresponds directly to the model in Fig. 3a.

Here, both the intermittent model in Eq. (38) and the continuous model in Eq. (39) were fitted to the observed \(g_i\) and \(\dot{\delta }\), respectively, by means of a grid search, per driver, across all combinations of \(k_\mathrm{nI}\in \{ 0, 0.01, \ldots , 0.20 \}\), \(k_\mathrm{nP}\in \{ 0, 0.1, \ldots , 2 \}\), and \(k_\mathrm{f}\in \{ 0, 0.4, \ldots , 12 \}\) for the continuous model, and the same search ranges for Eq. (38), but scaled by \(\Delta T = 0.4\) s [cf. Eq. (15)]. The delay in Eq. (39) was fixed at \({\tau _\mathrm{d}}= 0.2\) s, after initial exploration suggested that values close to this one worked well across all drivers. For the intermittent model, the \(g_i\) should correlate with the externally observed P at a point \({\tau _\mathrm{p}}+ {\tau _\mathrm{m}}+ \Delta T/2\) before the peak of the observed adjustment; in this respect we here assumed \({\tau _\mathrm{p}}+ {\tau _\mathrm{m}}+ \Delta T/2 = 0.2\) s and did not vary these delays further. Also the preview times to near point and far point were fixed across drivers, again based on initial exploration, at 0.25 s and 2 s.
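The grid search over gains can be sketched as below for the continuous model. This is an illustration under assumptions: the control law is assumed to be linear in the three gains, in the Salvucci and Gray (2004) form \(\dot{\delta } = k_\mathrm{nI}\theta _\mathrm{n} + k_\mathrm{nP}\dot{\theta }_\mathrm{n} + k_\mathrm{f}\dot{\theta }_\mathrm{f}\) with near and far point angles \(\theta _\mathrm{n}\) and \(\theta _\mathrm{f}\); delays and the \(\Delta T\) scaling for the intermittent model are left out; the input data are synthetic; and `grid_search_gains` is a hypothetical name.

```python
import itertools
import numpy as np

# Gain grids as listed in the text for the continuous model.
K_NI_GRID = [0.01 * i for i in range(21)]  # 0, 0.01, ..., 0.20
K_NP_GRID = [0.1 * i for i in range(21)]   # 0, 0.1, ..., 2
K_F_GRID = [0.4 * i for i in range(31)]    # 0, 0.4, ..., 12


def grid_search_gains(theta_n, theta_n_dot, theta_f_dot, observed):
    """Return the gain combination with the smallest sum of squared errors,
    assuming a control law that is linear in the three gains."""
    best_gains, best_sse = None, float("inf")
    for k_ni, k_np, k_f in itertools.product(K_NI_GRID, K_NP_GRID, K_F_GRID):
        predicted = k_ni * theta_n + k_np * theta_n_dot + k_f * theta_f_dot
        sse = float(np.sum((observed - predicted) ** 2))
        if sse < best_sse:
            best_gains, best_sse = (k_ni, k_np, k_f), sse
    return best_gains, best_sse


# Synthetic, noise-free demonstration data (not from the experiments).
rng = np.random.default_rng(0)
theta_n, theta_n_dot, theta_f_dot = rng.normal(size=(3, 200))
true_gains = (K_NI_GRID[2], K_NP_GRID[2], K_F_GRID[4])
observed = (true_gains[0] * theta_n + true_gains[1] * theta_n_dot
            + true_gains[2] * theta_f_dot)
best_gains, best_sse = grid_search_gains(theta_n, theta_n_dot, theta_f_dot, observed)
```

The same loop could be applied per driver, and to adjustment amplitudes instead of steering rates, by passing the perceptual quantities sampled at the assumed delay before each adjustment.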

Figure 9 shows, for both driving tasks, the entirety of observed and model-predicted control for the best-fitting gain parameterisations, for both the continuous model [panels (a) and (d)] and the intermittent model [panels (b) and (e)].

For the continuous model, note that the previously mentioned large fraction of time steps with zero change in the human steering is visible as vertical stripes in the middle of plots (a) and (d). The fitted gain parameters for this model are thus a compromise between not predicting too large steering rates for these stretches of zero control change, while nevertheless predicting nonzero steering rates of correct sign when the human actually is adjusting the steering; this is what is causing the data points in Fig. 9a, d to scatter at a flatter slope than the \(y = x\) line that signifies perfect model fit. This compromise can be seen in more detail for the three example recordings in the top row of panels of Fig. 10. As discussed in Sect. 4.2, note that the continuous model behaviour looks like an average-filtered version of the steering rates, especially in panels (b) and (c) where there are many control adjustments.

Fig. 10. Example illustrations of observed steering and fitted models of control amplitude, for the same three recordings as shown in Fig. 8. The topmost panels show the continuous model (CM) fitted to observed steering rates. The middle plots show the \({P_\mathrm{r}}\) for both the intermittent and predictive intermittent models (IM and PIM), and the \({P_\mathrm{p}}\) signal computed from the reconstructed adjustments. The bottom panels show the amplitude-predicting quantities of the two intermittent models, as fitted to the reconstructed adjustment amplitudes shown as vertical stems at the reconstructed times of adjustment onset (i.e. not at the adjustment rate peaks); a perfect intermittent model would pass exactly through all circles

For the intermittent model, the vertical stripes of data naturally disappear, as well as most of the flatness of the scatter. A 10,000 resample bootstrap analysis indicated that while average per-driver \(R^2\) for the continuous model was significantly greater than zero in both tasks, so were the increases in average per-driver \(R^2\) from continuous to intermittent model (\(p < 0.0001\) in all cases). Thus, even though the model by Salvucci and Gray (2004) was originally devised to explain continuous rates of steering change, it was here better suited for explaining amplitudes of intermittent control adjustments, nicely aligning with the framework assumption being tested.
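The text does not spell out how the bootstrap was set up, so the following is only one plausible sketch: a one-sided bootstrap on per-driver differences in \(R^2\) between two models, resampling drivers with replacement. The function name `bootstrap_p_value` and the example differences are hypothetical and are not values from the study.

```python
import random


def bootstrap_p_value(differences, n_resamples=10_000, seed=0):
    """Fraction of bootstrap resamples (drivers drawn with replacement)
    whose mean per-driver difference is not greater than zero."""
    rng = random.Random(seed)
    n = len(differences)
    not_positive = 0
    for _ in range(n_resamples):
        resample = [differences[rng.randrange(n)] for _ in range(n)]
        if sum(resample) / n <= 0.0:
            not_positive += 1
    return not_positive / n_resamples


# Hypothetical per-driver R^2 differences, for illustration only.
consistent_gain = [0.10, 0.05, 0.20, 0.15, 0.08]
no_clear_gain = [-1.0, 1.0] * 5
p_consistent = bootstrap_p_value(consistent_gain)
p_unclear = bootstrap_p_value(no_clear_gain)
```

When every driver shows an improvement, no resample can have a non-positive mean and the estimate is zero, which with 10,000 resamples would be reported as \(p < 0.0001\).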

The bottom row of panels in Fig. 10 illustrates how the observed human control adjustment amplitudes \(g_i\) relate to the variations over time of the parameter-fitted \({P_\mathrm{r}}\) quantity in Eq. (38). As one would expect given the residual flatness of the scatter in Fig. 9, some of the above-mentioned model-fitting compromise remains; rather than hitting the observed \(g_i\) directly, the fitted \({P_\mathrm{r}}\) tends to pass below (in absolute terms) the larger \(g_i\), and above the smaller ones.

6.4 Prediction of control errors

Now, consider the full form of amplitude adjustment model proposed here, feeding \(\epsilon \) rather than \({P_\mathrm{r}}\) to the motor control (the bottom part of Fig. 3b):

If the framework proposed here is correct, Eq. (42) should explain adjustment amplitudes better than the prediction-free version in Eq. (38).

To test whether this is the case, one needs to define suitable H functions from which to build \({P_\mathrm{p}}\) (i.e. one needs to determine the “superposition of prediction primitives” component in Fig. 3b). Just as in Sect. 4.2, besides the general requirements on H set out in Eq. (22), we again make use of Eq. (23), suggesting that H should describe how control errors decay when the controlled plant system responds to a control adjustment. In the case of ground vehicle steering, the plant Y is the lateral dynamics of the vehicle, and as mentioned above these dynamics were here approximated using the linear model in Eq. (31). The \(\varvec{A}\) and \(\varvec{b}\) matrices of that equation were least-squares fitted to the two task data sets; Fig. 11 shows the yaw rate response \(\omega _{\mathrm {G}}(t)\) of the linear models thus obtained, when subjected to a steering input of the shape G used here (as depicted in Fig. 5).

Fig. 11. Top: Yaw rate responses to the sigmoidal steering adjustment profile G, of a linear vehicle model fitted to the two data sets of human steering. The vertical line indicates \({\tau _\mathrm{m}}\). Bottom: Prediction functions H for the two tasks, obtained using the yaw rate response profiles. The vertical line indicates \({\tau _\mathrm{m}}+ {\tau _\mathrm{p}}\)

Calculating exactly how an arbitrary \({P_\mathrm{r}}\) responds to a stepwise control adjustment G is non-trivial, but it was found here that the following approximation of Eq. (23) worked rather well in practice:

where \(S(v_{\mathrm {x}})\) is the vehicle’s steady-state yaw rate response at longitudinal speed \(v_{\mathrm {x}}\), i.e. for increasing t, \(\omega _{\mathrm {G}}(t) \rightarrow S(v_{\mathrm {x}})\). This prediction function is shown in the bottom panel of Fig. 11. In words, Eq. (43) says that after applying a control adjustment G to address a perceptual control error \({P_\mathrm{r}}\), this control error will over time fall towards zero with a profile that is the same as the profile of the vehicle’s yaw rate response to G. This is only exactly correct if the actual control error is a pure yaw rate error (without heading or lane position errors). However, note in Fig. 6a that this H nevertheless provides rather good prediction following most of the steering adjustments. For example, during the first rightward steering response to the leftward heading error, the prediction is exact while the adjustment is being carried out, when the far and near point rotations respond to the changing vehicle yaw rate. However, since the original error was not a yaw rate error, P continues increasing above zero (which in turn prompts a sequence of stabilising steering adjustments to the right). Eq. (43) thus serves as an example of what was speculated in Sect. 4.1.4; that also approximate predictions might in many control tasks be enough to allow successful control. Note that again H takes the form of a sigmoid-like fall from one to zero.

Fig. 12. Best-fitting gain parameters for the prediction-extended Salvucci and Gray (2004) model (Eq. 42), when used to explain adjustment amplitudes in the lane-keeping data set. Each vertical line shows the fit for one driver. Slight random variation in \(k_\mathrm{f}\) has been added for legibility; the actual fitted values are the ones indicated on the x axis

Now, since we have fixed \(K' = 1\), we get \({\tilde{\epsilon }_i}= {\tilde{g}_i}\), such that a \({P_\mathrm{p}}\) signal can be constructed using Eq. (21), directly from the reconstructed \({\tilde{g}_i}\). Example prediction signals are shown in the middle row of panels in Fig. 10. As shown in Fig. 9, using this \({P_\mathrm{p}}\) to fit the control gains in Eq. (42), with the exact same free parameters and across the same parameter ranges as for Eq. (38), yielded further increasing \(R^2\) for both tasks. However, in the same bootstrap analysis as mentioned above, these \(R^2\) increases fell short of statistical significance, also when pooling the two tasks (\(p = 0.08\)). In other words, the observed changes in model fit were promisingly in line with the specific framework assumption being tested here (i.e. that adjustment amplitudes are determined by errors in predictions of control error rather than by raw control errors), but further empirical work will be needed before any firm conclusions can be drawn.

Overall, it may be noted that the obtained \(R^2\) values were relatively low across all models; in part attributable to sensory and motor noise affecting human steering amplitudes, but possibly also suggesting that the three-parameter Salvucci and Gray (2004) formulation is insufficient or imperfect for the studied tasks.

Fig. 13. Relationship between time \(\Delta t_i\) since previous steering adjustment, and adjustment amplitude \({\tilde{g}_i}\), in the lane-keeping task. Each dot is one control adjustment, the curves show one-dimensional distributions, and the blue horizontal lines show median \({\tilde{g}_i}\) in bins of \(\Delta t_i\). Panel a shows human steering data; panels b and c computer simulations of best-fitting threshold-based and accumulator-based models, respectively

The bottom row of panels in Fig. 10 provides some further insight into the difference between models with and without prediction: when two adjustments follow each other with a short duration in between, the \(\epsilon \) of the prediction-based model is often better than the prediction-free \({P_\mathrm{r}}\) at capturing the amplitude of the second adjustment, which tends to have a much smaller magnitude than \({P_\mathrm{r}}\), or even the opposite sign. The framework proposed here suggests that these small secondary adjustments occur because the preceding adjustments did not have quite the predicted effect. Especially for the lane-keeping task, this seemed to be happening more for some drivers than for others, and as one might expect it was to some degree related to frequency of steering adjustment. The three lane-keeping drivers for which the shift from Eq. (38) to Eq. (42) improved model fit the most also had the three largest adjustment frequencies in the group.

Figure 12 shows the best-fitting gains obtained for the 15 drivers performing the lane-keeping task. Based on this figure, the gains \(k_\mathrm{nI}= 0.02\), \(k_\mathrm{nP}= 0.2\), and \(k_\mathrm{f}= 1.6\) were adopted for the example simulations in Fig. 6 and also for the further model fittings in the next section.

6.5 Time between steering adjustments

A final theoretical prediction to be tested here is that the timing of observed adjustments should be better explained as generated by a process of evidence accumulation, such as set out in Eqs. (8) or (11), than by control error thresholds or minimal refractory periods, such as adopted in most existing frameworks and models of intermittent control (e.g. Miall et al. 1993; Gawthrop et al. 2011; Benderius 2014; Johns and Cole 2015; Martínez-García et al. 2016).

Figures 13a and 14a show the distributions of not only adjustment amplitudes \({\tilde{g}_i}\) in the two data sets of human steering, across all drivers, but also the inter-adjustment interval \(\Delta t_i \triangleq t_i - t_{i-1}\). In other words, these figures illustrate how the distribution of amplitudes varied with how much time had passed since the previous adjustment. Note that the distributions of \(\Delta t_i\) (visible in collapsed form along the top of the panels) are roughly log-normal in character, skewed towards larger values, something which is typical of timings obtained from accumulator-based models (e.g. Bogacz et al. 2006).

Fig. 14. Further results on timing and amplitudes of steering; as in Fig. 13. Panel a shows the human steering in the circle task, and panels b–h show the effects of varying noise levels in the best-fitting accumulator-based lane-keeping model shown in Fig. 13c. All simulations included road noise, at its fitted value \({\sigma _\mathrm{R}}= 0.02\) rad/s. In panel b, accumulator and motor noises (\({\sigma _\mathrm{a}}\) and \({\sigma _\mathrm{m}}\)) were set to zero, in panels c–e motor noise was zero, and accumulator noise was varied around its fitted value (middle panel), and vice versa in panels (f–h)

Here, an approximate model-fitting of the lane-keeping data was carried out, using the “typical” gain parameters obtained in Sect. 6.4 above, to see if fitting a single model to the data from all drivers would allow reproducing the general patterns seen in Fig. 13a. The remaining parameters of the steering model were grid searched, testing all combinations of the accumulator gain \(k \in \{ 150, 200, \ldots , 400 \}\), the accumulator noise \({\sigma _\mathrm{a}}\in \{ 0.4, 0.5, \ldots , 1.2 \}\), the motor noise \({\sigma _\mathrm{m}}\in \{ 0.2, 0.4, \ldots , 1 \}\), and the road/vehicle noise \({\sigma _\mathrm{R}}\in \{ 0.02, 0.03, 0.04, 0.05 \}\) rad/s. For each model evaluation, lane-keeping was simulated for the same amount of time as the human lane-keeping, i.e. 30 min of simulated driving. The model’s steering adjustments were counted in bins with edges \(\Delta t_i\) at \(\{ 0, 0.2, 0.4, \ldots , 6, \infty \}\) s, and for \({\tilde{g}_i}\) at \(\{ 0, 0.25, 0.5, \ldots , 3, \infty \}\) degrees, and the grid search identified the model parameterisation with minimum

where \(O_j\) and \(E_j\) are numbers of adjustments by humans and model in bin j, and q is number of bins. This is standard Chi-square minimisation distribution fitting, apart from the addition of one in the denominator, an approximate method to handle bins with \(E_j = 0\).
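The binning and fit statistic can be sketched as follows. Two points are assumptions made here rather than statements from the text: the added one is taken to sit in the denominator, i.e. each bin contributes \((O_j - E_j)^2 / (E_j + 1)\), since that is what avoids division by zero when \(E_j = 0\); and amplitudes are binned by absolute value. The names `count_in_bins` and `fit_statistic` are hypothetical.

```python
import bisect
import math

DT_EDGES = [0.2 * i for i in range(31)] + [math.inf]  # 0, 0.2, ..., 6, inf (s)
G_EDGES = [0.25 * i for i in range(13)] + [math.inf]  # 0, 0.25, ..., 3, inf (deg)


def count_in_bins(intervals, amplitudes):
    """Count adjustments per (interval bin, amplitude bin) pair."""
    counts = {}
    for dt_i, g_i in zip(intervals, amplitudes):
        row = bisect.bisect_right(DT_EDGES, dt_i) - 1
        col = bisect.bisect_right(G_EDGES, abs(g_i)) - 1  # assumption: |g|
        counts[(row, col)] = counts.get((row, col), 0) + 1
    return counts


def fit_statistic(observed, expected):
    """Sum over all bins of (O_j - E_j)^2 / (E_j + 1); the +1 is the assumed
    modification that keeps empty model bins from dividing by zero."""
    total = 0.0
    for row in range(len(DT_EDGES) - 1):
        for col in range(len(G_EDGES) - 1):
            o = observed.get((row, col), 0)
            e = expected.get((row, col), 0)
            total += (o - e) ** 2 / (e + 1.0)
    return total


# Tiny made-up example: three "human" adjustments against an empty model.
human = count_in_bins([0.1, 0.3, 10.0], [0.1, -0.3, 5.0])
statistic_vs_empty_model = fit_statistic(human, {})
```

In a grid search, `fit_statistic` would be evaluated once per simulated parameter combination and the combination with the smallest value retained.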

An alternative model was also tested, intended to emulate typical assumptions of previous intermittent control models, as mentioned above. These previous models have been deterministic, and as such they are clearly unable to explain the data observed here. Therefore, an extended stochastic formulation was used: instead of accumulating prediction error \(\epsilon \), this model triggered new adjustments when time since previous adjustment exceeded \(\Delta _{\mathrm {min}}\) and \(\epsilon + \nu _{\mathrm {t}} \ge \epsilon _0\), where \(\nu _{\mathrm {t}}\) is Gaussian noise with zero mean and standard deviation \(\sigma _{\mathrm {t}}\), and \(\epsilon _0\) a threshold parameter. This model was grid searched across all combinations of \(\sigma _{\mathrm {t}} \in \{ 0.06, 0.12, \ldots , 0.52 \}\) degrees (see Footnote 3), \(\epsilon _0 \in \{ 0.2, 0.4, \ldots , 1.2 \}\) degrees, \({\Delta _\mathrm{min}}\in \{ 0, 0.1, 0.2 \}\) s, and \({\sigma _\mathrm{m}}\) and \({\sigma _\mathrm{R}}\) across the same ranges as for the accumulator model.
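The trigger rule of this threshold-based alternative can be written compactly. The sketch below implements the condition exactly as stated, for a positive threshold only; how negative prediction errors were handled is not specified in the text, so that case is left out. The function name `should_trigger` is hypothetical.

```python
import random


def should_trigger(epsilon, time_since_last, eps0, delta_min, sigma_t, rng):
    """Trigger a new adjustment when the refractory period delta_min has
    passed and the noisy prediction error reaches the threshold eps0."""
    noise = rng.gauss(0.0, sigma_t)  # zero-mean Gaussian threshold noise
    return time_since_last > delta_min and (epsilon + noise) >= eps0


rng = random.Random(0)
# With sigma_t = 0 the rule is deterministic, which makes it easy to check.
fires = should_trigger(0.5, 0.3, eps0=0.4, delta_min=0.2, sigma_t=0.0, rng=rng)
too_early = should_trigger(0.5, 0.1, eps0=0.4, delta_min=0.2, sigma_t=0.0, rng=rng)
too_small = should_trigger(0.3, 0.3, eps0=0.4, delta_min=0.2, sigma_t=0.0, rng=rng)
```

In contrast to the accumulator, this rule has no memory of earlier errors: at each time step only the current error, the noise sample and the elapsed time matter.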

For both models, the best solutions from the grid searches were optimised further using an interior point algorithm (Mathworks MATLAB function fmincon).

The best fits obtained are shown in Fig. 13b, c, with a lower \(\chi ^2 = 451\) (i.e. a better fit) for the accumulator model than for the threshold model, \(\chi ^2 = 593\), despite the threshold model having one more free parameter. The main shortcomings of the threshold model seemed to be (i) a tendency to produce a majority of control adjustments just after the \({\Delta _\mathrm{min}}\) duration, thus not generating a very log-normal-looking distribution of \(\Delta t_i\), and (ii) a failure to account for those observed data points which had simultaneously large \(\Delta t_i\) and \({\tilde{g}_i}\).

The fitted values for the accumulator model were used when generating the example simulations in Fig. 6. The full list of parameter values used in those simulations is provided in Table 1.

Panels (b) through (h) of Fig. 14 provide a closer look at how the accumulator-based model’s behaviour varies when the different noise magnitudes are varied. In panel (b), note how, in the absence of any accumulator or motor noise, adjustments become infrequent. This is because they are triggered solely by noise-free accumulation of control errors, which tend to be small due to the noise-free control (with gains fitted to the human steering) being rather well-attuned to the vehicle (cf. Fig. 6a). A pattern of decreasing \({\tilde{g}_i}\) with increasing \(\Delta t_i\), observable for human steering in both tasks with \(\Delta t_i > 0.5\) s, is clear already in this simplified form of the model. This is a somewhat counterintuitive consequence of accumulation-based control (Markkula 2014); integration of a small quantity over a long time is the same as integration of a large quantity over a short time (Footnote 4).
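The last point can be made concrete with a deliberately simplified sketch: a noise-free integrator that accumulates a constant error until a fixed threshold is reached. This is not the accumulator model fitted above, which responds to a time-varying prediction error and includes noise. The function name, the unit threshold, and the time step are all assumptions chosen for illustration.

```python
def time_to_threshold(eps, threshold=1.0, dt=0.001):
    """Integrate a constant error eps (Euler steps) until threshold is reached.

    Returns the elapsed time. Simplifying assumptions: constant positive
    input, no noise, no leakage, accumulator starting at zero.
    """
    if eps <= 0:
        raise ValueError("eps must be positive for the threshold to be reached")
    accumulated = 0.0
    steps = 0
    while accumulated < threshold:
        accumulated += eps * dt
        steps += 1
    return steps * dt


# A small error takes a long time to reach threshold, a large error a short time,
# but the product of error and waiting time is (approximately) the same.
for eps in (0.5, 1.0, 2.0):
    t = time_to_threshold(eps)
    print(eps, t, eps * t)
```

In this idealised case the waiting time is inversely proportional to the error, so long intervals between adjustments go together with small errors at the time of adjustment, in line with the pattern of decreasing \({\tilde{g}_i}\) with increasing \(\Delta t_i\) described above.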

When adding and increasing accumulator noise [panels (c) through (e)], adjustments become more frequent, and smaller \(\Delta t_i\) start occurring. At these lower \(\Delta t_i\), there is now the opposite pattern of increasing \({\tilde{g}_i}\) with increasing \(\Delta t_i\). This happens in the model because the earlier the noise happens to push the accumulator above threshold, the smaller the control error to respond to will be, on average. Interestingly, this sort of pattern can be seen clearly in the human steering in the circle task [panel (a)]. If, instead of accumulator noise, we add and increase motor noise [panels (f) through (h)], we see that this is another way of producing small \(\Delta t_i\), in this case because ill-attuned adjustments soon trigger additional, corrective adjustments. Here, since large motor mistakes will be detected more quickly, the smaller \(\Delta t_i\) are instead associated with larger \({\tilde{g}_i}\), thus counteracting the above-mentioned effect of accumulator noise.

7 Discussion

Below, some relevant existing accounts of sensorimotor control will first be enumerated and briefly contrasted with what has been proposed here. Then, a series of subsections will engage in more detail with some specific topics for discussion.

7.1 Related models and frameworks

As mentioned in Sect. 1, Gawthrop and colleagues have also presented a task-general framework for intermittent control (Gawthrop et al. 2011, 2015). What has been proposed here aligns well with their emphasis on possible underlying control intermittency even in cases where the overt behaviour is seemingly continuous in nature. However, at the level of actual model mechanisms, the two frameworks are rather different, with Gawthrop et al. starting out from an optimal control engineering perspective whereas we have put more focus on adopting concepts from psychology and neurobiology: zero-order or system-matched holds versus motor primitives; explicit inverse and forward system models versus perceptual heuristics and corollary discharge-type prediction primitives; error deadzones and minimum refractory periods versus evidence accumulation.

Another task-general framework has been derived from the free-energy principle, which suggests that minimisation of free energy, or roughly equivalently minimisation of prediction error, is the fundamental governing principle of the brain (Friston 2005, 2010). From this mathematical framework, Friston and colleagues have derived models of sensorimotor control as active inference (Friston et al. 2010, 2012a; Perrinet et al. 2014), but these have focused on continuous rather than intermittent control. The active inference framework, like ours, describes motor action as being generated to minimise sensory prediction errors, and sensorimotor control as near-optimal without being directly based on engineering optimal control mechanisms. In the active inference terminology, the G and H in our framework are examples of generative models. However, the active inference models have not explicitly included notions of superpositioned ballistic motor primitives, or evidence accumulation to decide on triggering such primitives. In our understanding, such mechanisms should be obtainable as special cases of the more generally formulated active inference theory; we would argue that these are important special cases to consider.

In contrast, as mentioned in Sect. 2, some researchers focusing specifically on motor control have proposed superposition of sequences of motor primitives as a main feature of their conceptual frameworks (Hogan and Sternad 2012; Karniel 2013), but so far without developing these into full computational accounts. Others have focused on how the primitives themselves might be constructed using underlying dynamical systems formulations (Ijspeert et al. 2003; Schaal et al. 2007); a description one level below the one we have adopted here. There is also a related, vast literature on neuronal-level models of how individual saccadic eye movements are generated (e.g. Girard and Berthoz 2005; Rahafrooz et al. 2008; Daye et al. 2014). Overall, these motor-level accounts suggest that the kinematic motor primitives considered in the present framework are not truly ballistic, in the sense that there is a closed control loop to support their successful motor completion. However, from a higher-level perspective it might still be correct to consider them ballistic, in the sense that once they are initiated, they are not further affected by how the perceptual situation which triggered them continues to evolve.

There are also task-specific models of sensorimotor control sharing some of the present framework’s assumptions. The task of reaching towards a target has, for example, been modelled as a superposition of two non-overlapping bell-shaped speed pulses by Meyer et al. (1988), or as an arbitrary number of pulses with possible pairwise overlap by Burdet and Milner (1998). Both of these models allow variable-duration primitives, and the latter model also includes provisions for uncertain estimation of predicted final amplitude of an ongoing primitive, in a manner that is related but not identical to the prediction error-based control used here. A more direct analogue exists in models of smooth pursuit of moving targets with the eyes, where the Smith Predictor type approach has long been used (Robinson et al. 1986; Kettner et al. 1997; Grossberg et al. 2012; the same is in fact also true of the above-mentioned models of individual saccades), but these models are instead continuous in nature. Among the models of car steering as intermittent control, the ones by Roy et al. (2009) and Johns and Cole (2015) are more similar to the Gawthrop et al. (2011) framework than to ours, whereas the models by Gordon and colleagues (Gordon and Srinivasan 2014; Gordon and Zhang 2015; Martínez-García et al. 2016) do make use of steering adjustment primitives, but in a hybrid intermittent-continuous control scheme. The model by Benderius (2014) uses motor primitives and perceptual heuristics, but not sensory prediction or evidence accumulation. The only other car steering model that has not used error deadzones is the one by Boer et al. (2016), who used a just noticeable difference mechanism.

The overall impression is that the level of description we have adopted places our framework somewhere in the middle with respect to these existing models. We are arguably one step closer to the neurobiology than the Gawthrop et al. (2011) framework and the existing car steering models, and one step further away from the neurobiology and from detailed behavioural-level knowledge than some of the models of manual reaching or eye movements. One topic of discussion in the sections to follow below will be how these higher-level and lower-level accounts might possibly benefit from adopting some of the ideas proposed here.

7.2 Control onset and evidence accumulation

To the best of our knowledge, no prior models have adopted the idea that evidence accumulation is involved in sustained sensorimotor control, to decide on when to change the current control by, for example, triggering a new open-loop control adjustment. This hypothesis seems a very natural one to explore given the large amount of empirical support for accumulation-type models in the context of single-decision perceptual-motor tasks. What has been proposed here is that sustained sensorimotor control can be regarded as a sequence of such decisions.

More specifically, we have proposed that the rate of accumulation towards the decision threshold might scale with the prediction error of the control error. This provides an interesting possible answer to the long-standing open question of whether control intermittency is caused by minimal refractory periods, by error deadzones, or by both. For example, Miall et al. (1993) found that their data supported neither hypothesis completely, and van de Kamp et al. (2013) reported evidence for a refractory period that varied with the order of the control task. In effect, accumulation of prediction error (or even of just control error, without predictions) will result in both (i) mandatory refractory pauses between control actions and (ii) control error magnitudes at which control actions will most typically be issued, but both of these will vary with the specifics of the control situation leading up to the adjustment, and quite naturally also with the task itself (as between the lane-keeping and circle steering tasks studied here). Furthermore, with noise included in the accumulation process, this type of model also provides a natural means of capturing the inherent stochasticity in control action timing.

There are some related, non-threshold accounts in the recent literature: Zgonnikov and colleagues (Zgonnikov et al. 2014; Zgonnikov and Lubashevsky 2015) have proposed two different models of inverted pendulum balancing where control errors and random noise together contribute to intermittently pushing a dynamical system from an inactive to a transiently active state, and Sakaguchi et al. (2015) modelled visual-manual tracking similarly to Gawthrop et al. (2011) but with durations of individual segments of control instead determined by prior and current accuracy of a predictive model of target movement. Our approximate distribution-fitting analyses here tentatively favoured the accumulator model over the threshold model, and Zgonnikov, Sakaguchi, and co-authors also provided empirical arguments in similar veins. These contributions add up to a converging, although arguably still preliminary, case against intermittent control onset as based on error thresholds. An important next step would be to devise experiments and analyses that can test predictions of these various non-threshold models more directly, both against threshold-based alternatives and against each other.

Such empirical investigations could benefit from considering not only behavioural but also neuroimaging data, to possibly look for direct traces of, for example, ongoing evidence accumulation (see, e.g. O’Connell et al. 2012; Werkle-Bergner et al. 2014). One specific assumption in the present framework that would merit testing with both behavioural and neuroimaging approaches is the currently assumed resetting of the accumulation to zero immediately after each control adjustment.

7.3 Different types of open-loop primitives

The motor primitives we have considered here have been of a rather simple nature: stepwise changes of position, all of the same basic shape and duration regardless of amplitude. In car steering specifically, this approach aligns with a previous report of amplitude-independence in steering adjustments (Benderius and Markkula 2014), and it was also sufficient, here, for making the point that the car steering data could be much better understood as a sequence of such steps than as continuous control. However, if one wanted to apply the computational framework proposed here to other tasks (including car steering in a more general sense than lane-keeping or circle-tracking), one would probably want to consider a wider variety of motor primitives.

Already at the level of simple stepwise position changes, it is clear that humans can adapt the duration of their limb movements to the requirements of the task at hand (Plamondon 1995). Even within the same visuo-manual joystick tracking task, Hanneton et al. (1997) observed stepwise adjustment behaviour where smaller amplitude adjustments were performed faster. Visual inspection suggests that this latter phenomenon might actually be occurring also in the present car steering data sets (see, e.g. the small adjustment at 4 s in Fig. 8a), but if so possibly at amplitudes which would require higher-resolution steering angle measurements to properly characterise.