Abstract

Rotational abnormalities in the lower limbs causing patellar mal-tracking negatively affect patients’ lives, particularly those of young patients (10–17 years old). Recent studies suggest that rotational abnormalities can increase degenerative effects on the joints of the lower limbs. Rotational abnormalities are diagnosed using 2D CT imaging and X-rays, and surgeons then use these data to make decisions during an operation. However, a 3D representation of the data is preferable when examining 3D structures such as bones, with added benefits for medical judgement, pre-operative planning, and clinical training. Virtual reality (VR) can transform standard clinical imaging examinations (CT/MRI) into immersive 3D examination and pre-operative planning. We present a VR system (OrthopedVR) which allows orthopaedic surgeons to examine the patient-specific anatomy of the lower limbs in an immersive three-dimensional environment and to simulate the effect of potential surgical interventions, such as corrective osteotomies, in VR. In OrthopedVR, surgeons can perform corrective incisions and re-align segments into desired rotational angles. From the system evaluation performed by experienced surgeons, we found that OrthopedVR provides a better understanding of lower limb alignment and rotational profiles than isolated 2D CT scans. In addition, the surgeons’ evaluation suggests that using VR software can improve pre-operative planning, surgical precision and post-operative outcomes for patients. Our results indicate that our system can become a stepping stone towards simulating corrective surgeries of the lower limbs, and suggest future improvements that will help bring VR surgical planning into clinical orthopaedic practice.


1 Introduction

Rotational abnormalities of the lower limbs are a relatively common orthopaedic problem (Fig. 1). The estimated incidence of this pathology is approximately 5.8 per 100,000, and it increases in patients 10–17 years old. A misaligned joint axis (Fig. 2) can lead to joint overloading and exercise-induced pain, and can cause early-onset osteoarthritis. Medical diagnosis of rotational abnormalities requires quantifying the rotational profile of the whole limb by both clinical examination and imaging, preferably computed tomography (CT). Surgical treatment of these defects depends on the degree of deformity [26] and the patient’s age [2].

Recently, the amount of data collected before a surgical procedure has increased significantly as modern imaging systems have become widely available. Virtual reality (VR) technologies can lead the way in taking advantage of this now abundant patient-specific data, aiding its immersive 3D visualisation and correct interpretation and substantially improving our understanding of human anatomy [31]. For example, patient-specific 3D models have been used for clip pre-selection in aneurysm surgery, invasive coronary artery bypass, and virtual oncologic liver surgery [22, 32, 34]. In most of these cases, the 3D models were created from CT or MRI scans, which are typically acquired as 3D volumes [12, 42].

In this paper, we investigate how VR can be used to examine patients’ anatomy and visualise surgical outcomes for de-rotational corrective procedures such as femoral, tibial, and tibial-tuberosity osteotomies. We design and implement a VR system, OrthopedVR, in which surgeons can simulate “breaking” the bone and adjust the rotational profile of each bone segment separately. New segment angles are calculated and the post-operative change in angle is visualised. We achieve this by designing an application-specific 3DUI and integrating it into a collaborative VR environment.

Our system was inspired by medical specialists’ growing demand for VR visualisation tools [11]. As standalone VR hardware becomes more affordable, 2D CT and magnetic resonance imaging (MRI) data transformed into 3D models can be viewed and manipulated stereoscopically in an immersive environment, improving (i) medical judgement, (ii) clinical training, and (iii) operation planning [45]. We focus specifically on VR surgical planning, which helps surgeons understand pathological and anatomical spatial relationships and boosts surgical confidence [37]. As complex surgical procedures and the training of future surgeons require teamwork [4, 35], OrthopedVR also supports VR collaboration and remote consultation with colleagues. We evaluate our VR system based on surgeons’ performance when determining bone incision locations and lower limb rotational profile angles in VR, compared with surgical planning using standard clinical Digital Imaging and Communications in Medicine (DICOM) viewers for axial CT images. Our study supports VR’s practical benefit in lower limb clinical assessment and planning.

Our three specific contributions are:

- We developed OrthopedVR, enabling surgeons to examine and manipulate 3D reconstructions of CT scans of patients suffering from rotational abnormalities and patellar mal-tracking in an immersive, multi-user, collaborative environment.
- To facilitate this, we designed an application-specific, hybrid 3DUI combining contextual and static UI designs. The 3DUI controls the surgical planning simulation by providing incision positioning, post-incision leg re-alignment, and calculation and visualisation of post-operative angles.
- We perform a quantitative and qualitative evaluation of corrective osteotomy planning for the lower limbs in VR. Four expert orthopaedic surgeons endorse our methodology.

2 Related work

2.1 Rotational deformities in femur & tibia, and patellar instability in the lower limbs

Several factors ensure a normally working patellofemoral joint (PFJ) (Fig. 2): the soft connective tissues around the knee, the correct geometry of the osseous structures, and properly aligned components of the lower limb. The rotational alignment of the femur and tibia determines correct patellofemoral tracking and a properly working knee joint. Orthopaedic doctors are especially interested in the femoral version (FV), tibial rotation and the knee joint rotation angle (KJRA) [38] (Fig. 3). Significant femoral anteversion leads to an internally rotated gait unless a simultaneous external tibial torsion rotates the leg outward, maintaining a normal foot progression angle during gait. Increased femoral anteversion and tibial torsion produce patellofemoral instability. Patellar mal-tracking manifests as occasional or permanent instability and chronic pain in the knee region. The anatomic relationship between the resultant force of the quadriceps and the line of pull of the patellar tendon is called the Q-angle and is normally 10–15°. The first deformity that affects patellar tracking is valgus (knock-knee) or varus (bow-leg) deformity of the tibia (Fig. 1). In paediatric patients the tibia is commonly in valgus, and varus-valgus deformities of the tibia are the most common. In some cases, secondary deformities are also found in a rotated distal femur [7, 19].
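The Q-angle described above is the angle between two lines. A minimal sketch, assuming the commonly used clinical landmarks (anterior superior iliac spine (ASIS), patella centre and tibial tuberosity) are available as hypothetical 2D frontal-plane coordinates; the function names are illustrative and not part of OrthopedVR:

```python
import math


def angle_between_deg(u, v):
    """Unsigned angle in degrees between 2D vectors u and v."""
    dot = u[0] * v[0] + u[1] * v[1]
    cross = u[0] * v[1] - u[1] * v[0]
    # atan2(|cross|, dot) stays accurate for both very small and large angles
    return math.degrees(math.atan2(abs(cross), dot))


def q_angle_deg(asis, patella_centre, tibial_tuberosity):
    """Q-angle between the quadriceps line of pull (ASIS -> patella centre)
    and the patellar tendon line (patella centre -> tibial tuberosity).
    Inputs are hypothetical (x, y) frontal-plane landmark coordinates."""
    quadriceps = (patella_centre[0] - asis[0], patella_centre[1] - asis[1])
    tendon = (tibial_tuberosity[0] - patella_centre[0],
              tibial_tuberosity[1] - patella_centre[1])
    # Collinear lines (a straight continuation) give a Q-angle of 0 degrees
    return angle_between_deg(quadriceps, tendon)


def in_typical_range(q_deg, low=10.0, high=15.0):
    """Compare against the normal 10-15 degree range quoted in the text."""
    return low <= q_deg <= high
```

Using `atan2` on the cross and dot products, rather than `acos` of a normalised dot product, avoids precision loss near 0° and 180°.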

2.2 Clinical diagnostics and surgical treatment

The patient history, physical examination and gait observations can indicate signs of patellar mal-tracking and in-toeing or out-toeing gait. Sometimes malalignments of the hips and knees go unnoticed, perpetuating pain and discomfort, because two opposing rotations of the femur and tibia compensate for each other and keep the patient’s feet parallel when walking.

Images taken from the front, back or side of the leg during single or bi-planar X-ray examinations do not reveal rotational abnormalities [13, 17]. Moreover, the accuracy of assessment by CT/radiography alone is questionable [23, 33]. Previous studies showed that 3D reconstructions of bi-planar radiographs or CT, compared with standard 2D visualisation of radiological data on PACS systems, did not exhibit significant differences in lower limb length and alignment angle measurements; significant differences were found only for pelvic obliquity and rotation. Even though 3D reconstructions of radiological data are interchangeable with clinically standard 2D viewing methods, they showed superior inter-reader agreement [3, 14].

The Modified Perth Protocol, which acquires selected 2 mm-thick axial CT slices through the femoral necks, knees and ankle joints, is often used to diagnose rotational abnormalities [6, 20]. Conventionally, radiologists determine the lower limb rotational profile by measuring various angles directly on 2D CT slices (Fig. 3). The femoral neck-horizontal axis angle determines the femoral neck anteversion/retroversion angle. The distal femoral condyle-horizontal axis angles are then measured, and the degree of femoral rotation is determined as the relative angle between the proximal femoral neck-horizontal axis and distal femoral condyle-horizontal axis angles, expressed as a femoral internal or external rotation angle. Tibial torsion is calculated as the relative angle between the proximal tibial condyle-horizontal axis angle at the level of the knee and the bimalleolar-horizontal axis angle at the level of the ankle; it is described either as positive (external rotation) or negative (internal rotation) [16].
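The rotational-profile arithmetic can be sketched as follows, assuming each axis is defined by two hypothetical landmark points picked on an axial slice. Because an axis has no direction, its angle to the image horizontal is folded into a 180° window. The sign convention used here (proximal minus distal, counter-clockwise positive in image coordinates) is an assumption; clinical protocols fix signs according to limb side and image orientation.

```python
import math


def axis_angle_deg(p, q):
    """Angle (degrees) of the axis through landmarks p and q relative to the
    image horizontal, folded into [-90, 90) because an axis has no direction."""
    ang = math.degrees(math.atan2(q[1] - p[1], q[0] - p[0]))
    return (ang + 90.0) % 180.0 - 90.0


def relative_angle_deg(proximal_deg, distal_deg):
    """Relative rotation between a proximal and a distal axis, folded into
    [-90, 90). The sign convention (proximal minus distal) is an assumption."""
    return (proximal_deg - distal_deg + 90.0) % 180.0 - 90.0


def rotational_profile(neck, femoral_condyles, tibial_condyles, malleoli):
    """Each argument is a pair of hypothetical (x, y) landmarks on an axial slice."""
    neck_a = axis_angle_deg(*neck)
    fem_cond_a = axis_angle_deg(*femoral_condyles)
    tib_cond_a = axis_angle_deg(*tibial_condyles)
    malleolar_a = axis_angle_deg(*malleoli)
    return {
        "femoral_neck_angle": neck_a,
        # femoral rotation: femoral neck axis versus distal condylar axis
        "femoral_rotation": relative_angle_deg(neck_a, fem_cond_a),
        # tibial torsion: proximal tibial condylar axis versus bimalleolar axis
        "tibial_torsion": relative_angle_deg(tib_cond_a, malleolar_a),
    }
```

Folding into a 180° window makes the result independent of the order in which the two landmarks are clicked, at the cost of not representing relative rotations beyond ±90°, which is well outside clinical ranges.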

Patellar mal-tracking can be treated surgically [8, 18, 27]; when the tibia is in valgus or varus deformity, this is done by guided growth using plates. However, if correcting the varus/valgus deformity does not improve the Q-angle, external rotation of the distal femoral segment must be considered. If mal-tracking persists, a displacement osteotomy of the patellar tendon insertion on the tibia may be required.

2.3 Virtual reality for surgical planning

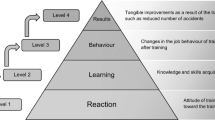

VR applications in orthopaedics mainly relate to procedure training, education, diagnosis, and rehabilitation [1, 15, 25, 30, 41]. By providing a virtual environment, VR enables the evaluation of different anatomical regions of the body, facilitating the development of an accurate pre-operative plan and the visualisation of the entire operative process [43]. For example, in a case report by Kim et al. 2020 [21], surgeons conducted pre-operative planning in VR for total maxillectomy and orbital floor reconstruction and particularly remarked on the strengths of this technology. The use of VR in orthopaedic surgery training has also demonstrated a measurable improvement in surgical skills and knowledge acquisition among orthopaedic surgeons and a flattening of the initial learning curve [10, 15]. However, to the best of our knowledge, there are no studies or solutions targeting VR surgical planning for derotational osteotomies based on patient-specific data.

VR surgical planning has also shown its potential in clip pre-selection for aneurysms [22], where VR provided neurosurgeons with improved spatial understanding of the vascular anatomy, leading to high aneurysm closure rates. VR has also aided surgeons in planning oncologic liver surgery using 3D models of patient-specific liver geometry [32], reducing procedure-related complications. VR planning has further been demonstrated for invasive coronary artery bypass in Kawasaki disease [34], where the interactive reconstruction of CT images helped plan the insertion of thoracoscopic ports and determine the ideal location for an anterior mini-thoracotomy. Overall, VR has shown great potential for improving surgical planning, orthopaedic surgery and rehabilitation, but more research is required to fully understand its benefits and limitations.

3 OrthopedVR

OrthopedVR provides surgeons with a VR environment in which they can rehearse an osteotomy beforehand, based on real patient measurements, and visualise its outcome. Various amounts of angle correction can be appraised and visualised before operating on the patient. A surgeon first indicates the anticipated incision plane. Our system then recalculates the geometry of the segmented bones and generates two superimposed bone segments that can be manipulated independently and then fused back together, collaboratively. OrthopedVR is implemented in Unity3D (Unity Technologies) and deployed on Meta Quest 2 headsets.

A The contextual 3DUI providing bone-cutting actions and visualisation of relevant information. B The world-positioned, omnipresent UI communicating essential information. C The dynamic control panel UI, attached to one hand of the user and controlled with the other hand, containing context-specific actions that are updated dynamically

3.1 Collaborative virtual environment

We implemented a multi-user mode in OrthopedVR because collaborative virtual environments enable surgeons to work on patient cases jointly, improving their ability to understand a case and treat it effectively. The collaborative mode also allowed the study coordinator to give respondents adequate VR training before they were asked to evaluate our system (Fig. 4). During the VR training session, respondents can observe the instructor interacting with the 3DUI elements in the VR environment and also practise the controls themselves.

The Photon Engine PUN2 solution (Exit Games GmbH, Germany) has been integrated into our Unity3D-based implementation to enable communication between individual clients. It synchronises the positions and rotations of interactive objects in the scene and provides remote procedure calls (RPCs), which distribute script execution across all network users.
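Distributing the call that performs an action, rather than its result, keeps every client’s copy of the scene consistent. The toy sketch below illustrates this idea with an in-process relay; `Room`, `Client` and the `rpc_*` methods are hypothetical and do not reproduce the PUN2 API, which is used from C# by marking methods with `[PunRPC]` and invoking them through `PhotonView.RPC` with an `RpcTarget`.

```python
from dataclasses import dataclass, field


@dataclass
class Client:
    """Hypothetical client holding a local copy of the shared scene state."""
    name: str
    cuts: list = field(default_factory=list)
    segment_angles: dict = field(default_factory=dict)

    # Methods a remote peer may invoke by name (the role [PunRPC] plays in PUN2)
    def rpc_cut(self, plane_point, plane_normal):
        self.cuts.append((plane_point, plane_normal))

    def rpc_rotate_segment(self, segment_id, degrees):
        current = self.segment_angles.get(segment_id, 0.0)
        self.segment_angles[segment_id] = current + degrees


ALLOWED_RPCS = {"rpc_cut", "rpc_rotate_segment"}


class Room:
    """Toy stand-in for a relay server that forwards each call to all clients."""

    def __init__(self):
        self.clients = []

    def join(self, client):
        self.clients.append(client)

    def rpc_all(self, method, *args):
        # Only whitelisted method names can be executed remotely
        if method not in ALLOWED_RPCS:
            raise ValueError(f"not an RPC: {method}")
        for client in self.clients:
            getattr(client, method)(*args)
```

One design consequence: a user who joins after a call was broadcast misses it unless calls are buffered (PUN2 offers `RpcTarget.AllBuffered` for this) or the full state is sent on join.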

Environment. The user enters a simple, square room in VR, where all interaction happens. A 3D model of the patient’s lower limbs hovers in the centre of the room. Following preliminary testing, we chose colours for the background and the different osseous structures that maintain a strong perceived contrast and enhance their distinctiveness. Brighter colours (such as the natural beige of bone) were avoided, as they strained users’ eyes during preliminary testing.

Avatars. Virtual avatars have been implemented to help coordinate multiple users (currently up to five) sharing the same virtual environment. An avatar is spawned for each player on joining the virtual room. It consists of a sphere representing the user’s head, with an attached VR headset model indicating the direction in which that user is facing. Models of hands are also visualised, so users can point out areas of the patient’s anatomy to each other.

Audio. Surgeons who do not share the same physical environment with their colleagues need audio chat when discussing cases or investigating and planning surgical procedures. We therefore integrated the Photon Voice plugin to provide this functionality. The Recorder and Photon Voice Network components implement the transmission of players’ voices: the Recorder component records audio from the user’s microphone and distributes it to other users via the Photon Voice Network component. The audio source is located at the centre of the player’s avatar head, so the sound appears to follow each user as they move.

The main task performed in VR is bone cutting and repositioning based on bone segment angles. To do so, the user grabs a Cutting Cube with the controller; the cube defines the position and orientation of the cutting plane and can be manipulated with six degrees of freedom (6-DoF). We devised a set of 3DUI components to aid decision-making during the cutting process, which we present in the next section.
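One way to turn the Cutting Cube’s 6-DoF pose into a cutting plane is to take the cube’s position as a point on the plane and one of its rotated local axes as the plane normal. A minimal sketch, assuming a unit quaternion in (w, x, y, z) order and that the cube’s local +Y axis is the normal (both hypothetical choices, not taken from OrthopedVR):

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), unit length


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by unit quaternion q: v' = v + w*t + qv x t, with t = 2 (qv x v)."""
    w, qv = q[0], (q[1], q[2], q[3])
    t = tuple(2.0 * c for c in cross(qv, v))
    u = cross(qv, t)
    return (v[0] + w * t[0] + u[0], v[1] + w * t[1] + u[1], v[2] + w * t[2] + u[2])


@dataclass
class CutPlane:
    point: Vec3   # any point on the plane (here the cube centre)
    normal: Vec3  # unit normal

    def signed_distance(self, p: Vec3) -> float:
        """Positive on the side the normal points to, negative on the other."""
        d = (p[0] - self.point[0], p[1] - self.point[1], p[2] - self.point[2])
        return dot(d, self.normal)


def plane_from_cube(position: Vec3, orientation: Quat,
                    local_normal: Vec3 = (0.0, 1.0, 0.0)) -> CutPlane:
    """Hypothetical mapping: the cube's rotated local axis becomes the plane normal."""
    n = rotate(orientation, local_normal)
    length = math.sqrt(dot(n, n))
    return CutPlane(position, (n[0] / length, n[1] / length, n[2] / length))
```

The sign of `signed_distance` is all a subsequent cut needs to decide on which side of the osteotomy a vertex lies.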

3.2 3DUI

Our application-specific 3DUI (Fig. 5) combines multiple UI principles [24] to aid decision-making and information visualisation, and is controlled with only two physical buttons, the Index Triggers and Hand Triggers. We combine three UI design paradigms to enable users to make treatment-related decisions and actions while having access to all required information at all times. The dynamic UI keeps relevant items and options visible and ready to select or view, depending on the state of the surgery simulation, without tedious searching through non-intuitive scroll-view menus.

3.2.1 Contextual UI

A contextual UI enables direct interaction with the lower limbs and appears where the bone is being segmented. The user adjusts the rotation of a limb segment by touching the limb and rotating their wrist. A protractor (Fig. 5A) indicates the rotation angle achieved for each bone segment. This UI provides essential data for decision-making, increasing situational awareness when manipulating bone structures.
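A protractor reading of this kind can be derived by isolating the component of the hand’s rotation about the bone’s long axis. A minimal sketch using swing-twist decomposition, assuming unit quaternions in (w, x, y, z) order and a known unit bone axis; this is an illustrative technique, not necessarily how OrthopedVR computes its angles:

```python
import math


def quat_mul(a, b):
    """Hamilton product a*b of quaternions in (w, x, y, z) order."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def quat_conj(q):
    """Conjugate, equal to the inverse for unit quaternions."""
    return (q[0], -q[1], -q[2], -q[3])


def from_axis_angle(axis, deg):
    """Unit quaternion for a rotation of `deg` degrees about a unit axis."""
    half = math.radians(deg) / 2.0
    s = math.sin(half)
    return (math.cos(half), axis[0] * s, axis[1] * s, axis[2] * s)


def twist_angle_deg(q, axis):
    """Signed angle of the twist of unit quaternion q about a unit axis.
    For q = (w, v), the twist component has angle 2 * atan2(v . axis, w)."""
    proj = q[1] * axis[0] + q[2] * axis[1] + q[3] * axis[2]
    angle = math.degrees(2.0 * math.atan2(proj, q[0]))
    # Wrap to [-180, 180) so that q and -q give the same reading
    return (angle + 180.0) % 360.0 - 180.0


def segment_rotation_deg(grab_q, current_q, bone_axis):
    """Rotation about the bone axis since the segment was grabbed (world frame)."""
    delta = quat_mul(current_q, quat_conj(grab_q))
    return twist_angle_deg(delta, bone_axis)
```

Discarding the swing component means that unintended wrist tilt while grabbing does not change the rotational angle shown on the protractor.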

3.2.2 World-positioned UI

A world-positioned UI displays key patient- and procedure-related data collected during the clinical examination that should be available at all times, e.g., rotational angles measured at different locations on both lower limbs (Fig. 5B). This UI is purposefully fixed in the world and always present.

3.2.3 Dynamic control panel UI

This point-and-click control panel is dynamically updated, visible at all times and attached to the left controller (the user’s left hand for right-handed users; this can be swapped for left-handed users), providing immediate access to the most essential functions (Fig. 5C). The point-and-click UI avoids mapping the large variety of tasks required during pre-operative planning onto several physical controller buttons. It contains simple call-to-action (CTA) buttons that execute functions essential to bone cutting. The right controller (also swappable for left-handed users) is used to point at the CTAs, and pressing the Secondary Index Trigger selects the indicated task.

3.3 Collaborative osteotomies

Once a tentative cutting plane has been positioned and a cut is initiated using the dynamic control panel UI, a series of processes is triggered that generates a new, segmented mesh. Our algorithm performs an exclusion check of the vertices intersecting the cutting plane in the limb vertex list. Two vertex lists are created at the intersection plane, and checks are performed to establish on which side of the cut each remaining original vertex lies. Two new meshes are then created, one from each vertex list. New vertex normals are calculated, along with the meshes’ bounds and tangents, for accurate lighting. A different material is assigned to each segment: green indicates the split-off part that should be rotated, and orange indicates the fixed part. To perform this osteotomy in a collaborative environment and calculate accurate angles, we addressed several technical challenges, such as the complex synchronisation required between meshes and environment states. Further technical details of our implementation are given in the supplemental document (Sect. 1).
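The side test at the heart of such a cut reduces to the sign of each vertex’s signed distance to the plane. The sketch below partitions a triangle mesh into two sub-meshes, re-indexes each into its own compact vertex list, and recomputes area-weighted vertex normals. It is a simplification under stated assumptions: triangles straddling the plane are assigned by their centroid rather than split and capped as a production cutter would do, and all function names are hypothetical.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Tri = Tuple[int, int, int]


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def _compact(vertices: List[Vec3], tris: List[Tri]):
    """Keep only referenced vertices and remap triangle indices to the new list."""
    remap, new_verts, new_tris = {}, [], []
    for tri in tris:
        new_tri = []
        for i in tri:
            if i not in remap:
                remap[i] = len(new_verts)
                new_verts.append(vertices[i])
            new_tri.append(remap[i])
        new_tris.append(tuple(new_tri))
    return new_verts, new_tris


def split_mesh(vertices, triangles, plane_point, plane_normal):
    """Return ((verts, tris) on the normal's side, (verts, tris) on the other side).
    Simplification: straddling triangles go to the side of their centroid."""
    d = [_dot(_sub(v, plane_point), plane_normal) for v in vertices]
    sides = ([], [])
    for tri in triangles:
        # Signed distance is linear, so the centroid's distance is the mean
        centroid_d = (d[tri[0]] + d[tri[1]] + d[tri[2]]) / 3.0
        sides[0 if centroid_d >= 0.0 else 1].append(tri)
    return _compact(vertices, sides[0]), _compact(vertices, sides[1])


def vertex_normals(vertices, tris):
    """Area-weighted vertex normals: sum of unnormalised face normals, then normalise."""
    acc = [[0.0, 0.0, 0.0] for _ in vertices]
    for a, b, c in tris:
        fn = _cross(_sub(vertices[b], vertices[a]), _sub(vertices[c], vertices[a]))
        for i in (a, b, c):
            for k in range(3):
                acc[i][k] += fn[k]
    out = []
    for n in acc:
        length = math.sqrt(_dot(n, n)) or 1.0  # isolated vertex: avoid division by zero
        out.append((n[0] / length, n[1] / length, n[2] / length))
    return out
```

In a Unity implementation, the equivalent of `vertex_normals` is typically delegated to the engine once the new vertex and index arrays are assigned to each mesh.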

3.4 Optimisations for standalone VR deployment

Scene lighting is a blend of “pre-baked” and real-time lights. The ambient lighting of the static scene (walls, ceiling and floor) is calculated using pre-computed light transport (“baked” light) into a single 1024×1024-pixel lightmap, which helps reduce the number of draw calls when static batching is enabled: objects that share the same material and lightmap are drawn in the same draw call. Lightmaps are generated by the Progressive CPU Lightmapper [40]. The pixel light count is set to 1 to maintain high performance on standalone VR headsets [28]. Global illumination is processed using the Shadowmask lighting mode [39]. Dynamic objects are lit using real-time calculations, and layer-based filtering prevents unnecessary real-time lighting calculations on static models. Real-time shadow casting is disabled, as it would increase draw calls and polygon counts because of the high-resolution lower limb segmentation.

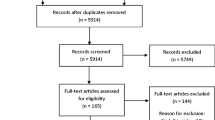

We split the evaluation cohort into two groups of two, enabling several comparisons despite the limited cohort and dataset size. In the clinical assessment and pre-operative planning studies, we directly compared the performance of respondents using a DICOM viewer and OrthopedVR on three cases. All data collected during the testing sessions were then included in the overall comparison of the DICOM viewer versus OrthopedVR, regardless of study type. Note that the illustration of the testing sequences does not follow the exact order in which respondents viewed the individual cases; the order was randomised for each participant.

4 Evaluation

We evaluated our VR system both quantitatively and qualitatively.

4.1 Materials and methods

Quantitatively, we focused on surgeons’ examination performance when manipulating patient CT data on a standard desktop DICOM viewer (as in a picture archiving and communication system, PACS) compared with our immersive 3D reconstruction of the data in OrthopedVR. Our objective performance metric was task completion time for each case and assessment method, which serves as a proxy for the respondent’s spatial understanding of the patient’s unique pathological anatomy. Our goal was not only to measure how much time our technique saves compared with PACS, but also to establish, in combination with the qualitative questionnaires and user feedback, whether significantly fewer cognitive resources are required to diagnose and plan a surgery in VR than with 2D viewing. System data such as CPU and GPU utilisation and average frame rate (FPS) were also recorded.

Qualitative evaluation data were collected in the form of post-session questionnaires and feedback, focusing particularly on the usability of our 3DUI. An additional, conventional UI usability study with non-experts would not provide valuable data for our purposes, as members of the general population without experience in derotational osteotomies would not be able to evaluate the UI in the context of surgical planning. More specifically, the qualitative questionnaire was designed to assess user experience when using OrthopedVR for osteotomy planning. Among other things, we obtained feedback on the visual fidelity, user interface, multi-user interaction capabilities, quality of the 3D data reconstruction, and perceived performance of the VR simulation. We also gauged the potential of VR in pre-operative orthopaedic assessment and planning, and assessed users’ willingness to adopt VR technology for pre-operative planning and to recommend it to colleagues and trainees, as an indicator of the potential acceptance and uptake of the technology in the orthopaedic community. Participants responded using an extended Likert scale (1 = “very negative” to 10 = “very positive”) and were also asked for insights beyond the pre-set questions. The full set of 15 questions can be found in the supplemental document (Sect. 3).

4.2 CT data acquisition

Following ethical approval by our department, we obtained five anonymised data sets of patients indicated to undergo lower limb corrective surgery from our country’s national health system. To reduce the X-ray radiation dose, the Modified Perth Protocol of our national health system records only the areas of the proximal femur, femoral condyles, and talus in the axial view (0.4–0.6 mm slice spacing in the coronal view and 0.4–0.5 mm in the sagittal view, with 3.3–3.6 mm axial slices). A visualisation of the non-continuous dataset is included in the supplemental document (Sect. 2). Because CT data between the proximal femur, femoral condyles and talus are missing, we model the missing structures synthetically; no leg joint data were interpolated, and no joint angles were affected. We also optimise the 3D models for standalone VR; our bone interpolation and optimisation technique is presented in Sect. 4.2.1. The CT data were segmented in 3D Slicer by thresholding the master volume with Yen’s method and by using the Robust Statistics Segmenter [36, 44]. Our CT data segmentation and processing were validated by a Lead Consultant Musculoskeletal Radiologist specialising in diagnostic, interventional musculoskeletal and sports imaging (co-author).
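Yen’s method picks the grey-level threshold that maximises the entropic correlation of the foreground and background distributions. A minimal pure-Python sketch over a flat list of intensities, modelled on the histogram formulation used by common image-processing libraries; 3D Slicer’s own implementation (bin count, range handling) may differ, and intensities above the returned value would be treated as bone:

```python
import math


def yen_threshold(values, nbins=256):
    """Yen's maximum-correlation threshold for a list of intensities.
    Values above the returned threshold are treated as foreground."""
    lo, hi = min(values), max(values)
    if lo == hi:
        return lo  # a constant image has no meaningful split
    width = (hi - lo) / nbins
    hist = [0] * nbins
    for v in values:
        hist[min(int((v - lo) / width), nbins - 1)] += 1
    total = float(len(values))
    p = [h / total for h in hist]

    # Forward sums: P1[k] = sum(p[:k+1]), S1[k] = sum of p[i]**2 for i <= k
    P1, S1 = [], []
    acc = acc_sq = 0.0
    for pk in p:
        acc += pk
        acc_sq += pk * pk
        P1.append(acc)
        S1.append(acc_sq)
    # Backward sum: S2[k] = sum of p[i]**2 for i >= k
    S2 = [0.0] * nbins
    acc_sq = 0.0
    for k in range(nbins - 1, -1, -1):
        acc_sq += p[k] * p[k]
        S2[k] = acc_sq

    # The first bin holds the minimum and the last bin the maximum, so the
    # terms below stay positive and the logarithm is defined.
    best_k, best_crit = 0, -math.inf
    for k in range(nbins - 1):
        crit = math.log((P1[k] * (1.0 - P1[k])) ** 2 / (S1[k] * S2[k + 1]))
        if crit > best_crit:
            best_k, best_crit = k, crit
    return lo + (best_k + 0.5) * width  # centre of the winning bin
```

On CT data, where cortical bone is much brighter than soft tissue, the criterion tends to place the threshold in the gap between the two intensity populations.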

4.2.1 Mesh augmentation and optimisation

Because of the aforementioned missing CT data between the proximal femur, femoral condyles and talus, the missing structures must be modelled synthetically. This is essential to provide doctors with a realistic 3D model enabling them to locate anatomical features. The remodelling does not affect or adjust the original bone parts as recorded on CT; it only adds structures between them. This additional geometry helps visualise how angle changes in various areas of the leg affect one another. As the base mesh for remodelling the in-between structures, we used a mesh of the lower limb generated from an open-source contiguous CT stack. The filling process was performed in Maya (Autodesk, USA); the mean data preparation time was 1 h 36 min (SD = 24 min).

3D Slicer produces 3D models with dense topology containing millions of polygons and saves them in the .stl format. Because of noise and artefacts in the CT slices, many unnecessary polygons are generated, and importing models with such dense topologies into VR significantly degrades rendering performance. To ensure a well-performing VR experience, the data first go through a re-meshing and decimation process. We used MeshLab [5], open-source software for processing and editing 3D triangular meshes. We unify neighbouring vertices that share exactly the same coordinates and reduce the polygon count of the segmented 3D models by applying surface simplification using quadric error metrics [9]. After this procedure, we obtain an optimised model made of a few thousand vertices instead of hundreds of thousands. During software testing, we observed that the polygon count of the model of both complete lower limbs decreased more than 90-fold, from 755,644 to 8,234 polygons, which ensures stable run-time performance on the Meta Quest 2. The initial dimensions and shape of the original segmentation are conserved by enabling MeshLab’s boundary-preservation option. We studied the possibility of accidentally deforming the anatomical structures, and thus decreasing anatomical accuracy, and found no significant proportional changes in the optimised model. Models from MeshLab are exported in the .obj file format. Following the mesh augmentation and optimisation processes, the collaborating radiologist (co-author of the paper) validated the reconstruction by using segmentation software to overlay the 3D reconstructed mesh on the CT volumetric data, so that any geometrical deviations of the 3D model from the CT image would be detected. The radiologist detected no inaccuracies in the 3D reconstruction.
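MeshLab’s quadric edge-collapse decimation is more involved (a priority queue of edge collapses, with boundary and normal preservation), but its two basic ingredients can be sketched briefly: welding vertices with identical coordinates (STL stores each triangle’s corners separately) and the Garland–Heckbert quadric that scores how far a vertex has moved from its original planes. The function names are hypothetical and this is not MeshLab’s code:

```python
import math


def weld_vertices(vertices, triangles):
    """Merge vertices with exactly identical coordinates and drop triangles
    that collapse (two or more corners mapped to the same vertex)."""
    index_of, welded, remap = {}, [], []
    for v in vertices:
        key = tuple(v)
        if key not in index_of:
            index_of[key] = len(welded)
            welded.append(key)
        remap.append(index_of[key])
    tris = []
    for a, b, c in triangles:
        t = (remap[a], remap[b], remap[c])
        if len(set(t)) == 3:
            tris.append(t)
    return welded, tris


def face_quadric(p0, p1, p2):
    """Fundamental error quadric K = q q^T for the triangle's plane
    q = (a, b, c, d), with unit normal (a, b, c) and ax + by + cz + d = 0."""
    u = [p1[i] - p0[i] for i in range(3)]
    w = [p2[i] - p0[i] for i in range(3)]
    n = [u[1] * w[2] - u[2] * w[1], u[2] * w[0] - u[0] * w[2], u[0] * w[1] - u[1] * w[0]]
    length = math.sqrt(sum(x * x for x in n))
    if length == 0.0:
        return [[0.0] * 4 for _ in range(4)]  # zero-area triangle: no plane
    n = [x / length for x in n]
    q = n + [-sum(n[i] * p0[i] for i in range(3))]
    return [[q[r] * q[c] for c in range(4)] for r in range(4)]


def vertex_quadrics(vertices, triangles):
    """Each vertex accumulates the quadrics of its incident triangles."""
    Q = [[[0.0] * 4 for _ in range(4)] for _ in vertices]
    for tri in triangles:
        K = face_quadric(*(vertices[i] for i in tri))
        for i in tri:
            for r in range(4):
                for c in range(4):
                    Q[i][r][c] += K[r][c]
    return Q


def quadric_error(Q, v):
    """v^T Q v for homogeneous v: sum of squared distances to Q's planes."""
    h = (v[0], v[1], v[2], 1.0)
    return sum(h[r] * Q[r][c] * h[c] for r in range(4) for c in range(4))
```

Edge collapses whose merged position has the smallest quadric error are performed first, which is why flat regions are simplified aggressively while curved anatomical detail is retained. For scale, 755,644 / 8,234 ≈ 91.8, consistent with the more than 90-fold reduction reported above.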

4.3 Participants

According to the most recent report of our national register for knee osteotomies, 49 surgeons are registered in the UK healthcare system [29]. Because derotational procedures are complicated and invasive, they are performed only by a very limited number of surgeons with extensive experience in the field. Despite the scarcity of experienced specialists in derotational osteotomies, we recruited 4 experienced orthopaedic surgeons (8.16% of the professional body in our country) with a mean of 20 years of experience in the field (SD = 2.36) and a mean age of 50 years (SD = 2.87). Two of the four surgeons perform corrective osteotomies regularly. The other two focus on primary and revision hip and knee replacement, and one of them partly performs corrective osteotomies. None of them had previous experience with VR. The surgeons were not involved in the development of our system and had no prior knowledge of how it worked.

4.4 Procedure

CT scans of five paediatric and young adult patients suffering from patellar mal-tracking caused by either femoral or tibial rotational abnormalities were selected, processed (Sect. 4.2.1), and used for the evaluation. To make the most of our evaluation cohort, we split the participants into two groups of two, each group running its own study (Fig. 6). The first group (clinical assessment study) comprised one surgeon with experience in hip and knee replacements and some corrective osteotomy experience, and one surgeon with experience in hip and knee replacements and in indicating patients with rotational abnormalities for treatment by expert surgeons who focus primarily on this area. The first group’s objectives were to examine and diagnose patients, initially based on the axial CT images and later on 3D reconstructions of the CT data in VR, and to provide a direct comparison of decision-making between the methods (Sect. 4.5.1). The second group (pre-operative planning study), consisting of two highly experienced osteotomy surgeons, provided a direct comparison of decision-making for surgical intervention and surgical planning between different cases and assessment methods, with and without prior examination of CT images (Sect. 4.5.2). Participants in this group had to explain their surgical plan.

4.5 Clinical assessment study and pre-operative planning study

During the first part of each study, participants were shown patient data on a desktop computer using a DICOM viewer with radiological annotations, including the femoral neck-horizontal axis angle, femoral condylar-horizontal axis angles, tibial condylar-horizontal axis angles, and bimalleolar-horizontal axis angles (Fig. 3). The presentation order of patient data was randomised for each participant and method. Participants were not aware that the cases presented in OrthopedVR and in the DICOM viewer could belong to the same patients. For each patient case, we asked participants to establish a diagnosis and an indication for a surgical procedure (and, in the pre-operative planning study, a surgical plan). We recorded task completion times and diagnoses.

Before the second part of each study (evaluation in VR), each participant underwent a tutorial session in collaborative VR with the session coordinator (primary author of this paper), during which they were trained to interact with the user interface and perform incisions on a 3D model of the lower limbs used specifically for training (not included in the testing set). Then, while examining patients’ 3D reconstructions in VR, participants were again asked to establish patients’ diagnoses. Task completion time and details of the diagnosis were recorded for each patient case. After the clinical assessment in OrthopedVR, participants were asked to fill in the qualitative questionnaire. The questionnaire was followed by an informal feedback discussion probing the participant’s experience with the VR system, specifically to identify its benefits and potential issues before implementation in clinical practice.

4.5.1 First group: clinical assessment study

The clinical assessment study aimed to compare the task completion times of Participant 1, who diagnosed patients based on the CT images before seeing them in OrthopedVR, with those of Participant 2, who could use only OrthopedVR, and to compare overall task completion times for examination and diagnosis on a desktop CT DICOM viewer versus OrthopedVR.

Participant 1 was presented with CT data of five patients. Participant 2 was presented with CT data of only three patients prior to the VR examination. This allowed us to compare the task completion times of a participant who could examine CT data before the visualisation in OrthopedVR with those of a participant who, with the exception of a single case, was not provided with CT images beforehand. Participant 2 saw only three of the five cases in order to limit possible bias when directly comparing both participants’ per-case performance across methods, i.e., we wanted to ensure that they did not see all cases in both systems. Participant 1 was presented with all five cases in both methods so that we could observe changes in understanding of the patients’ anatomy when cases are seen in both systems.

4.5.2 Second group: pre-operative planning study

The purpose of the pre-operative planning study was to compare the task completion times for surgical planning between Participant 3, who planned on the CT images before the visualisation in OrthopedVR, and Participant 4, who was able to use only OrthopedVR.

Participant 3 was presented with all five cases for clinical evaluation, first on the desktop DICOM viewer and later in the OrthopedVR system. Participant 3 examined the CT 3D reconstructions in OrthopedVR in a different, randomised order to reduce learning effects. Participant 4 was presented with three cases for clinical evaluation on each of the desktop DICOM viewer and the VR system, with one case overlapping between the two examination methods. As in the clinical assessment study group, presenting only three cases per method to Participant 4 was intended to minimise bias and learning effects caused by seeing the same patient’s case with a different visualisation method when comparing each participant’s performance per case.

The results of the qualitative questionnaire (scores out of 10; higher is better) (Sect. 5.2). Note that Q7 was an open-ended question (see the supplementary material for the full questionnaire).

5 Results and discussion

5.1 Quantitative measurements

Task completion times across all participants and both study groups indicated that participants completed the tasks considerably faster in VR. The mean completion time was shorter in VR (mean = 1 m 52 s, SD = 0 m 29 s) than on the desktop DICOM viewer (mean = 4 m 23 s, SD = 1 m 44 s) (Fig. 7). Because a Shapiro–Wilk test indicated that the completion times were not normally distributed (they appeared log-normal), we tested the difference between desktop-CT and OrthopedVR completion times over all participants and both study groups with a non-parametric Mann–Whitney U test. The difference was statistically significant (DICOM viewer: Mdn = 218 s; OrthopedVR: Mdn = 85 s; \(U=30\), \(p=.0001\)). Taken together with the very positive user feedback (see next subsection), these results suggest that VR enabled a better understanding of the patients’ pathological anatomy in the OrthopedVR system.
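The Mann–Whitney U statistic used above can be sketched in a few lines of Python. This is a minimal illustration, not the analysis code used in the study: the function names are hypothetical, tied values receive average ranks, and the two-sided p-value uses the normal approximation without tie or continuity correction. For samples as small as ours, an exact test (for example SciPy's `scipy.stats.mannwhitneyu` with `method="exact"`) may be preferable.

```python
import math


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test using the normal approximation.

    Returns (U, p), where U is the smaller of the two U statistics.
    """
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    r1 = sum(ranks[:n1])               # rank sum of the first sample
    u1 = r1 - n1 * (n1 + 1) / 2
    u2 = n1 * n2 - u1
    u = min(u1, u2)
    mu = n1 * n2 / 2                   # mean of U under H0
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mu) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return u, p
```

For instance, `mann_whitney_u([1, 2, 3], [4, 5, 6])` gives U = 0, because every value in the first sample is smaller than every value in the second; a small U relative to n1·n2/2 is what drives a small p-value.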

5.1.1 First group: clinical assessment study

Analyses of task completion times suggest a better understanding of patients’ anatomy in VR and improved decision-making. Participant 1 assessed three selected patients’ CT scans on a desktop DICOM viewer. Participant 2 examined the same three patients’ 3D reconstructions in OrthopedVR without first being able to examine the CT datasets in the DICOM viewer. Our measurements indicate that Participant 2’s understanding benefited from the 3D reconstructions (Participant 1: mean = 7 m 3 s, SD = 3 m 36 s vs Participant 2: mean = 1 m 10 s, SD = 0 m 16 s) (Fig. 8): Participant 2’s decision-making in OrthopedVR was about 6x faster than Participant 1’s on the DICOM viewer. Participant 1’s average task completion time for establishing a diagnosis across all five patients in OrthopedVR (mean = 3 m 25 s, SD = 1 m 15 s) was 46.3% shorter than when examining all patients’ data in the DICOM viewer (mean = 6 m 22 s, SD = 3 m 22 s). Comparing average task completion times across both participants in OrthopedVR (mean = 2 m 14 s, SD = 1 m 30 s) and on the desktop DICOM viewer (mean = 4 m 58 s, SD = 3 m 8 s) showed a 55% reduction (more than 2x faster) in favour of OrthopedVR.
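The relative differences reported in this subsection follow directly from the mean times once they are converted to seconds. The sketch below recomputes them from the rounded means quoted above (not from per-case data, which are not reproduced here); the helper names are illustrative.

```python
def to_seconds(minutes: int, seconds: int) -> int:
    """Convert a time given as 'm min s sec' into seconds."""
    return 60 * minutes + seconds


def percent_reduction(baseline_s: float, new_s: float) -> float:
    """Percentage by which new_s is shorter than baseline_s."""
    return 100.0 * (baseline_s - new_s) / baseline_s


def speedup(baseline_s: float, new_s: float) -> float:
    """How many times faster new_s is than baseline_s."""
    return baseline_s / new_s


# Mean times quoted in Sect. 5.1.1
p1_dicom_all = to_seconds(6, 22)   # Participant 1, all five cases, DICOM viewer
p1_vr_all = to_seconds(3, 25)      # Participant 1, all five cases, OrthopedVR
p1_dicom_three = to_seconds(7, 3)  # Participant 1, three selected cases, DICOM viewer
p2_vr_three = to_seconds(1, 10)    # Participant 2, same three cases, OrthopedVR
pooled_dicom = to_seconds(4, 58)   # both participants, DICOM viewer
pooled_vr = to_seconds(2, 14)      # both participants, OrthopedVR

print(f"P1 reduction: {percent_reduction(p1_dicom_all, p1_vr_all):.1f}%")
# P1 reduction: 46.3%
print(f"P2 vs P1 speed-up: {speedup(p1_dicom_three, p2_vr_three):.1f}x")
# P2 vs P1 speed-up: 6.0x
print(f"Pooled reduction: {percent_reduction(pooled_dicom, pooled_vr):.1f}% "
      f"({speedup(pooled_dicom, pooled_vr):.2f}x)")
# Pooled reduction: 55.0% (2.22x)
```

A 55% reduction in time and a 2.22x speed-up describe the same comparison; the first divides the saved time by the baseline, the second divides the baseline by the new time.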

5.1.2 Second group: pre-operative planning study

The results of the pre-operative planning study gave us insight into how experienced surgeons could benefit from surgical planning in an immersive VR environment. Task completion times were much shorter for Participant 4 during clinical examination and surgical planning in OrthopedVR (without first being able to examine the CT images) (mean = 2 m 29 s, SD = 0 m 56 s) than for Participant 3’s examination and planning in the DICOM viewer (mean = 4 m 37 s, SD = 1 m 3 s), a 46.2% difference in decision-making time overall (Fig. 8). During the clinical examination and surgical planning of all patients’ data, Participant 3 took 77.3% less time in OrthopedVR (mean = 1 m 5 s, SD = 0 m 17 s) than in the DICOM viewer (mean = 4 m 46 s, SD = 1 m 19 s).
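As a worked check, both percentages follow from the reported means converted to seconds (4 m 37 s = 277 s, 2 m 29 s = 149 s, 4 m 46 s = 286 s, 1 m 5 s = 65 s). Because they are computed from rounded means, they summarise average time savings rather than any single case.

```latex
\[
\frac{277\,\mathrm{s} - 149\,\mathrm{s}}{277\,\mathrm{s}} \approx 0.462 = 46.2\%,
\qquad
\frac{286\,\mathrm{s} - 65\,\mathrm{s}}{286\,\mathrm{s}} \approx 0.773 = 77.3\%.
\]
```

Expressed as a ratio, the second reduction corresponds to Participant 3 working about \(286/65 = 4.4\) times faster in OrthopedVR.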

With 2D CT scans, surgical planning appeared to be faster than clinical assessment. In VR, however, this trend was reversed: clinical assessment was faster than surgical planning. We attribute the faster CT-based planning to the greater experience of the respondents in the “Surgical Planning” group. In addition, surgical planning was expected to take more time in VR than clinical assessment, as the surgeons were asked to provide a more comprehensive report on how they would proceed.

5.1.3 VR system performance

OrthopedVR running on the Meta Quest 2 maintained consistently stable frame rates throughout clinical testing. CPU utilisation on the device was low (mean = 20%, SD = 5.76%) and GPU utilisation was moderate (mean = 50.75%, SD = 9.99%). The frame rate was capped at 72 frames per second (FPS) during testing and, supported by our optimisation methods, stayed close to that cap (mean = 71.8 FPS, SD = 1.3 FPS).
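A 72 FPS cap leaves roughly 13.9 ms to simulate and render each frame; frames that overrun this budget pull the mean below 72 FPS. The sketch below shows that arithmetic and one way to summarise a per-frame timing log. The paper does not describe how FPS was sampled, so `fps_stats` and its input (a list of frame times in milliseconds) are assumptions for illustration.

```python
import statistics


def frame_budget_ms(target_fps: float) -> float:
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / target_fps


def fps_stats(frame_times_ms: list[float]) -> tuple[float, float]:
    """Mean and sample SD of per-frame FPS, computed as 1000 / frame time.

    Hypothetical helper: assumes a log of frame times in milliseconds.
    """
    fps = [1000.0 / t for t in frame_times_ms]
    return statistics.mean(fps), statistics.stdev(fps)


print(f"Budget at 72 FPS: {frame_budget_ms(72):.2f} ms")
# Budget at 72 FPS: 13.89 ms

# Hypothetical frame-time log (ms); one frame slightly over budget
mean_fps, sd_fps = fps_stats([13.9, 13.9, 14.2, 13.9])
print(f"mean = {mean_fps:.1f} FPS, SD = {sd_fps:.1f} FPS")
```

Averaging per-frame FPS weights every frame equally; dividing the total frame count by the elapsed time would give a slightly different figure, so the method should be stated alongside reported FPS values.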

5.2 Qualitative measurements

Questionnaire responses indicate that participants felt OrthopedVR offered a better understanding of lower limb alignment and rotational profile than isolated 2D axial CT images (mean = 8.25/10, SD = 1.5) (Q10) (Fig. 9). They agreed that such a VR system can play a significant role in orthopaedic assessment (mean = 9/10, SD = 0.81) (Q8). Most participants felt neutral, and one felt positive, about reducing their reliance on conventional radiological manual measurement of angles on CT images (mean = 6.25/10, SD = 2.75, min. = 3, max. = 9) (Q11). Participants further indicated that VR-based orthopaedic planning will be used in the future (mean = 8.75/10, SD = 1.25) (Q9). They gave positive feedback on the increased reproducibility of lower limb rotational profile angle measurements as part of pre-operative planning and on the reduced variation in angles due to human factors (mean = 7.75/10, SD = 2.21) (Q12). Based on the respondents’ feedback, using VR software in conjunction with radiology reports could improve surgical planning, precision, and post-operative outcomes for patients (mean = 8.25/10, SD = 1.25) (Q13).
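With only four respondents, whether an SD is computed with an n − 1 (sample) or n (population) denominator changes the reported value noticeably. The text does not state which convention was used; the sketch below, using Python's standard `statistics` module and made-up scores (not the study's responses), shows the difference.

```python
import statistics


def describe_scores(scores: list[int]) -> tuple[float, float, float]:
    """Return mean, sample SD (n - 1) and population SD (n) of Likert scores."""
    return (
        statistics.mean(scores),
        statistics.stdev(scores),   # divides the squared deviations by n - 1
        statistics.pstdev(scores),  # divides the squared deviations by n
    )


# Hypothetical ratings from four participants (not the study's responses)
mean, sample_sd, population_sd = describe_scores([7, 8, 9, 10])
print(f"mean = {mean}, sample SD = {sample_sd:.2f}, population SD = {population_sd:.2f}")
# mean = 8.5, sample SD = 1.29, population SD = 1.12
```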

They were confident that VR should be incorporated into future clinical practice (mean = 8.25/10, SD = 0.95) (Q14) and would recommend VR rotational profile alignment software for pre-operative planning to their colleagues and trainees (mean = 8.25/10, SD = 0.95) (Q15). Participants found the collaborative functionality in OrthopedVR useful for training and cooperation (mean = 8.5/10, SD = 1.29) (Q3). They also stated that they preferred the collaborative tutorial they completed with the study coordinator before the OrthopedVR testing session to a pre-recorded video tutorial (mean = 8.5/10, SD = 0.57) (Q4). Participants found the VR environment visually appealing (mean = 8.25/10, SD = 1.25) (Q6).

Participants appreciated the contrast between the patient’s anatomical structures and the darker background, and none reported eye strain during testing. Participants also indicated general satisfaction with the visual performance during osteotomy planning (mean = 8.25/10, SD = 1.25) (Q1). They gave positive feedback on the combination of contextual, world-static, and dynamic control panel UIs (mean = 8/10, SD = 0.8) (Q5), and rated the application as stable during their individual testing sessions (mean = 9.25/10, SD = 0.95) (Q2).

5.3 Summary

Taken together, the quantitative and qualitative results suggest that reconstructing patients’ CT data in an immersive environment has a positive impact on diagnosis and surgical planning. Our framework enables surgeons to rehearse a surgery before performing it, try out different parameters, and observe the result. Moreover, OrthopedVR works with the current, limited clinical protocols of our health system, without requiring any additional data or radiation dose.

To our knowledge, this is the first study to focus on improving surgical planning for orthopaedic procedures of the lower limbs, for which the current gold standard for diagnosis and pre-operative planning is only a CT scan, an anterior-posterior X-ray, or a physical examination of the patient by an orthopaedic doctor.

5.4 Limitations

A limitation of this study is the small number of available datasets of patients with rotational abnormalities of the lower limbs. Obtaining paediatric cases was exceedingly difficult: our national registry reports only 620 cases between 2014 and 2017, and 70% of these patients were between 40 and 55 years of age [29]. These abnormalities are not widely diagnosed in the younger population, as patients are often told to wait until they are candidates for knee arthroplasty, even though knee osteotomy could delay the progression of arthritis. As a result, we were able to gather data from only five cases. A second limitation is that, because current clinical protocols aim to reduce radiation exposure, there are no post-operative CT datasets that could serve as a ground truth for the pre-operative planning. We relied on the experience of the four surgeons to validate their outputs. We did not ask the surgeons to cross-validate each other, as their preferred operative approaches to treating mal-tracking (angles and incision technique) differed substantially according to personal preference.

6 Conclusion and future work

We presented OrthopedVR, a VR system implementing an application-specific 3DUI to (i) visualise rotational abnormalities of the lower limbs and (ii) enable surgeons to rehearse a derotational osteotomy in VR before operating. Our quantitative and qualitative evaluation suggests that immersive surgical planning in VR can support a more accurate understanding of structural abnormalities and of the patient’s pathological anatomy, more efficient surgical planning, and potentially improved clinical outcomes. OrthopedVR works within existing clinical screening protocols without requiring additional data or radiation exposure. To our knowledge, this is the first system and study aimed at improving surgical planning for lower limb orthopaedic procedures, for which the gold standard for diagnosis and pre-operative planning is only a CT scan, an anterior-posterior X-ray, or a physical examination by an orthopaedic doctor.

Based on extensive discussions with the experienced surgeons, future work could involve: (i) creating a virtual model that shows the effect of additional interventions on patellar tracking; for example, as the distance between the lower pole of the patella and the tibial tuberosity remains constant, the femoral condyle moves around that point, so it would be beneficial for surgeons to view the knee in extension and at 30, 60, 90, and 120 degrees of flexion and observe the relation of the patella to the trochlear groove; (ii) providing the ability to mix and match techniques, so that surgeons can understand what happens with each intervention and how interventions affect one another (one surgeon also suggested implementing medial patellofemoral ligament reconstruction in the VR simulation); and (iii) developing tailored CT protocols to improve the overall quality of, and reduce the time needed to produce, 3D models for simulated VR environments. Finally, future work could validate our approach on a larger sample of patient data and compare the results of VR pre-operative planning with actual post-operative CT scans serving as the ground truth; this, however, will require a fundamental change to the clinical data acquisition protocols in our health system.

Data availability

The data that support the findings of this study are available from the NHS, UK, but restrictions apply to the availability of these data, which were used under licence for the current study, and so are not publicly available.

References

Ayoub, A., Pulijala, Y.: The application of virtual reality and augmented reality in oral & maxillofacial surgery. BMC Oral Health (2019)

Baur, W., Hönle, W., Schuh, A.: Tibiakopfosteotomie bei Varusgonarthrose [Proximal tibial osteotomy for osteoarthritis of the knee with varus deformity]. Operative Orthopädie und Traumatologie 17(3), 326–344 (2005)

Chaibi, Y., Cresson, T., Aubert, B., Hausselle, J., Neyret, P., Hauger, O., de Guise, J.A., Skalli, W.: Fast 3D reconstruction of the lower limb using a parametric model and statistical inferences and clinical measurements calculation from biplanar x-rays. Comput. Methods Biomech. Biomed. Engin. 15(5), 457–466 (2012)

Chheang, V., Saalfeld, P., Joeres, F., Boedecker, C., Huber, T., Huettl, F., Lang, H., Preim, B., Hansen, C.: A collaborative virtual reality environment for liver surgery planning. Comput. Graph. (Pergamon) 99, 234–246 (2021)

Cignoni, P., Callieri, M., Corsini, M., Dellepiane, M., Ganovelli, F., Ranzuglia, G.: MeshLab: An open-source mesh processing tool. In: 6th Eurographics Italian Chapter Conference 2008 - Proceedings, pp. 129–136 (2008)

Chauhan, S.K., Clark, G.W., Scott, R.G., Lloyd, S., Sikorski, J.M.: The Perth CT protocol for total knee arthroplasty. Orthopaedic Proc. 90(B) (2008)

Dobbe, A.M., Gibbons, P.J.: Common paediatric conditions of the lower limb. J. Paediatr. Child Health 53(11), 1077–1085 (2017)

Farr, J., Cole, B.J., Kercher, J., Batty, L., Bajaj, S.: Anteromedial Tibial Tubercle Osteotomy (Fulkerson Osteotomy). Anterior Knee Pain and Patellar Instability pp. 455–462 (2011)

Garland, M., Heckbert, P.S.: Surface Simplification Using Quadric Error Metrics. https://bit.ly/3kWnrOq

Goh, G.S., Lohre, R., Parvizi, J., Goel, D.P.: Virtual and augmented reality for surgical training and simulation in knee arthroplasty. Arch. Orthop. Trauma Surg. 141, 2303–2312 (2021)

Goldman, L.W.: Principles of CT and CT technology (2007). https://doi.org/10.2967/jnmt.107.042978

González Izard, S., Juanes Méndez, J.A., Ruisoto Palomera, P., García-Peñalvo, F.J.: Applications of virtual and augmented reality in biomedical imaging. J. Med. Syst. 43(4), 102 (2019)

Gruskay, J.A., Fragomen, A.T., Rozbruch, S.R.: Idiopathic rotational abnormalities of the lower extremities in children and adults. JBJS Rev. (2019). https://doi.org/10.2106/JBJS.RVW.18.00016

Guggenberger, R., Pfirrmann, C.W.A., Koch, P.P., Buck, F.M.: Assessment of lower limb length and alignment by biplanar linear radiography: comparison with supine CT and upright full-length radiography. Am. J. Roentgenol. 202(2), W161–W167 (2014)

Gustafsson, A., Pedersen, P.M., Rømer, T.B., Viberg, B., Palm, H., Konge, L.: Hip-fracture osteosynthesis training: exploring learning curves and setting proficiency standards. Acta Orthopaedica 90, 348–353 (2019)

Holland, C.L., Kamil, A., Puttanna, A.: CT assessment of children with in-toeing gait. ECR 2011, 1–13 (2011)

Hospital for Special Surgery: Hip/Femoral Anteversion: Causes, Symptoms, Treatment (2021). http://bit.ly/3ZziZUN

Hosseinzadeh, P., Ross, D.R., Walker, J.L., Talwalkar, V.R., Iwinski, H.J., Milbrandt, T.A.: Three methods of guided growth for pediatric lower extremity angular deformity correction. Iowa Orthop. J. 36, 123 (2016)

Jibri, Z., Jamieson, P., Rakhra, K.S., Sampaio, M.L., Dervin, G.: Patellar maltracking: an update on the diagnosis and treatment strategies. Insights Imaging 10(1), 1–11 (2019)

Kaiser, P., Attal, R., Kammerer, M., Thauerer, M., Hamberger, L., Mayr, R., Schmoelz, W.: Significant differences in femoral torsion values depending on the CT measurement technique. Arch. Orthop. Trauma Surg. 136(9), 1259–1264 (2016)

Kim, H.J., Jo, Y.J., Choi, J.S., Kim, H.J., Park, I.S., You, J.S., Oh, J.S., Moon, S.Y.: Virtual reality simulation and augmented reality-guided surgery for total maxillectomy: a case report. Appl. Sci. 10(18), 6288 (2020)

Kockro, R.A., Killeen, T., Ayyad, A., Glaser, M., Stadie, A., Reisch, R., Giese, A., Schwandt, E.: Aneurysm surgery with preoperative three-dimensional planning in a virtual reality environment: technique and outcome analysis. World Neurosurg. 96, 489–499 (2016)

Kuo, T.Y., Skedros, J.G., Bloebaum, R.D.: Measurement of femoral anteversion by biplane radiography and computed tomography imaging: Comparison with an anatomic reference. Invest. Radiol. 38(4), 221–229 (2003)

LaViola, J.J., Kruijff, E., McMahan, R.P., Bowman, D., Poupyrev, I.P.: 3D User Interfaces: Theory and Practice, 2nd edn. Addison-Wesley Usability and HCI Series. Addison-Wesley, Boston (2017)

Lohre, R., Bois, A.J., Pollock, J.W., Lapner, P., McIlquham, K., Athwal, G.S., Goel, D.P.: Effectiveness of immersive virtual reality on orthopedic surgical skills and knowledge acquisition among senior surgical residents. JAMA Netw. Open 3, e2031217 (2020)

Murphy, S.B.: Tibial osteotomy for genu varum: indications preoperative planning, and technique. Orthop. Clin. North Am. 25(3), 477–482 (1994)

Murray, R., Winkler, P.W., Shaikh, H.S., Musahl, V.: High tibial osteotomy for varus deformity of the knee. J. Am. Acad. Orthop. Surg. Global Res. Rev. 5(7), e21.00141 (2021)

Oculus: Configure Unity Settings | Oculus Developers (2021). bit.ly/3ZUDlaJ

Palmer, H., Elson, D., Baddeley, T., Porthouse, A.: The united kingdom knee osteotomy registry: the first annual report 2018 (2019)

Papagiannakis, G., Lydatakis, N., Kateros, S., Georgiou, S., Zikas, P.: Transforming medical education and training with VR using M.A.G.E.S. (2018). https://doi.org/10.1145/3283289.3283291

Pfeiffer, M., Kenngott, H., Preukschas, A., Huber, M., Bettscheider, L., Müller-Stich, B., Speidel, S.: IMHOTEP: virtual reality framework for surgical applications. Int. J. Comput. Assist. Radiol. Surg. 13(5), 741–748 (2018)

Quero, G., Lapergola, A., Soler, L., Shabaz, M., Hostettler, A., Collins, T., Marescaux, J., Mutter, D., Diana, M., Pessaux, P.: Virtual and augmented reality in oncologic liver surgery. Surg. Oncol. Clin. N. Am. 28(1), 31–44 (2019)

Ruwe, P.A., Gage, J.R., Ozonoff, M.B., DeLuca, P.A.: Clinical determination of femoral anteversion. A comparison with established techniques. J. Bone Joint Surg. Am. 74(6), 820–30 (1992)

Sadeghi, A.H., Taverne, Y.J., Bogers, A.J., Mahtab, E.A.: Immersive virtual reality surgical planning of minimally invasive coronary artery bypass for Kawasaki disease. Eur. Heart J. 41, 3279 (2020). https://doi.org/10.1093/eurheartj/ehaa518

Sereno, M., Wang, X., Besançon, L., McGuffin, M.J., Isenberg, T.: Collaborative work in augmented reality: a survey. IEEE Trans. Visual. Comput. Graph. 1–1 (2020)

Slicer: Slicer Wiki (2021). bit.ly/3ZTcX12

Stadie, A.T., Kockro, R.A., Reisch, R., Tropine, A., Boor, S., Stoeter, P., Perneczky, A.: Virtual reality system for planning minimally invasive neurosurgery: technical note. J. Neurosurg. 108(2), 382–394 (2008)

Thakrar, R.R., Al-Obaedi, O., Theivendran, K., Snow, M.: Assessment of lower limb rotational profile and its correlation with the tibial tuberosity-trochlea groove distance: a radiological study. J. Orthop. Surg. 27(3), 1–6 (2019)

Unity Technologies: Unity—Manual: Lighting Mode: Shadowmask (2021). https://bit.ly/3JoBPZ5

Unity Technologies: Unity—Manual: The Progressive Lightmapper (2021). http://bit.ly/3L77tvh

Verhey, J.T., Haglin, J.M., Verhey, E.M., Hartigan, D.E.: Virtual, augmented, and mixed reality applications in orthopedic surgery. Int. J. Med. Robot. Comput. Assisted Surg. (2020)

Vertemati, M., Cassin, S., Rizzetto, F., Vanzulli, A., Elli, M., Sampogna, G., Gallieni, M.: A virtual reality environment to visualize three-dimensional patient-specific models by a mobile head-mounted display. Surg. Innov. 26(3), 359–370 (2019)

Wang, D., Li, N., Luo, M., Chen, Y.k.: One visualization simulation operation system for distal femoral fracture. Medicine (2017)

Yen, J.C., Chang, F.J., Chang, S.: A new criterion for automatic multilevel thresholding. IEEE Trans. Image Process. 4(3), 370–378 (1995)

Zawy Alsofy, S., Sakellaropoulou, I., Stroop, R.: Evaluation of surgical approaches for tumor resection in the deep infratentorial region and impact of virtual reality technique for the surgical planning and strategy. J. Craniofac. Surg. 31(7), 1865–1869 (2020)

Acknowledgements

Grant: Clinical and Pre-operative Assessment of Lower Limb Rotational Profiles in Virtual and Augmented Reality. Funder: Engineering and Physical Sciences Research Council (EPSRC). Grant Number: 2456825.


Ethics declarations

Conflict of interest

No conflicts of interest to be declared.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information


Supplementary file 2 (mp4 32598 KB)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sibrina, D., Bethapudi, S. & Koulieris, G.A. OrthopedVR: clinical assessment and pre-operative planning of paediatric patients with lower limb rotational abnormalities in virtual reality. Vis Comput 39, 3621–3633 (2023). https://doi.org/10.1007/s00371-023-02949-0


DOI: https://doi.org/10.1007/s00371-023-02949-0