Abstract

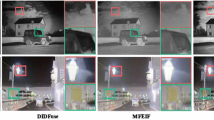

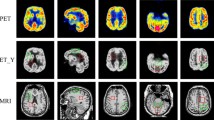

Multi-modality image fusion integrates a series of images acquired from different sensors into a single fused image that is expected to provide more comprehensive information. It plays a pivotal role in many computer vision tasks and improves the performance of subsequent applications. Most existing approaches design fusion rules for one specific image fusion task and therefore generalize poorly to other fusion tasks. In addition, the texture details in the fused images are commonly blurred by undesirable artifacts introduced from the different modalities. In this paper, we propose a generic multimodal image fusion framework that combines a visual saliency model with a flexible bilevel paradigm. Specifically, we decompose the input images into an intensity layer, representing large-scale intensity variations, and a detail layer, containing small texture changes. We then fuse the intensity layers through a visual saliency map to improve the contrast of the fused image, and design a bilevel paradigm for fusing the detail layers to obtain fine details. Furthermore, to make the fused result visually friendly, a deep prior is built into the bilevel paradigm. In addition, an elastic target-guided hyper-parameter is introduced to control the proportion of textural details taken from each source image, and it can be adjusted for different fusion tasks. We conducted experiments on three available datasets to demonstrate, quantitatively and qualitatively, the superiority of our framework over state-of-the-art methods in a variety of fusion tasks, including infrared and visible image fusion, near-infrared and visible image fusion, and multimodal medical image fusion.
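The two-layer idea described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the box filter, the mean-contrast saliency measure, and the convex combination of detail layers are simple stand-ins chosen for illustration, and the names `box_blur`, `decompose`, `saliency`, `fuse`, `radius`, and `alpha` are hypothetical. In particular, `alpha` only mimics the role of the target-guided hyper-parameter, and the bilevel optimization with a deep prior is not reproduced here.

```python
import numpy as np


def box_blur(img, radius):
    """Mean filter with edge padding (assumed stand-in for the smoothing step)."""
    k = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")
    # Integral image with a leading zero row and column, so that
    # integral[i, j] equals the sum of padded[:i, :j].
    integral = np.pad(padded, ((1, 0), (1, 0))).cumsum(axis=0).cumsum(axis=1)
    h, w = img.shape
    window_sum = (integral[k:k + h, k:k + w] - integral[:h, k:k + w]
                  - integral[k:k + h, :w] + integral[:h, :w])
    return window_sum / (k * k)


def decompose(img, radius=4):
    """Split an image into an intensity (smooth) layer and a detail (residual) layer."""
    intensity = box_blur(img, radius)
    detail = img - intensity
    return intensity, detail


def saliency(img):
    """Toy saliency map: absolute distance from the global mean, scaled to [0, 1]."""
    s = np.abs(img - img.mean())
    return s / (s.max() + 1e-12)


def fuse(a, b, alpha=0.5, radius=4):
    """Fuse two grayscale images with values in [0, 1].

    Intensity layers are blended with saliency-derived weights; detail layers
    are blended with a fixed ratio alpha (hypothetical stand-in for the
    bilevel detail fusion described in the abstract).
    """
    ia, da = decompose(a, radius)
    ib, db = decompose(b, radius)
    # Weight in [0, 1]: the more salient source contributes more intensity.
    wa = 0.5 + 0.5 * (saliency(a) - saliency(b))
    fused_intensity = wa * ia + (1.0 - wa) * ib
    fused_detail = alpha * da + (1.0 - alpha) * db
    return np.clip(fused_intensity + fused_detail, 0.0, 1.0)
```

In this sketch, raising `alpha` toward 1 keeps more texture from the first source and lowering it toward 0 keeps more from the second, which is one simple way to picture a task-dependent detail ratio.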

Funding

This work was funded by the Science Foundation of China under Grant Nos. 61922019, 61733002 and 616721255, and by LiaoNing Revitalization.

Ethics declarations

Conflict of interest

We declare that we have no financial or personal relationships with other people or organizations that could inappropriately influence our work, and that there is no professional or other personal interest of any nature or kind in any product, service, or company that could be construed as influencing the position presented in, or the review of, the manuscript entitled “A Unified Image Fusion Framework with Flexible Bilevel Paradigm Integration.”

About this article

Cite this article

Liu, J., Jiang, Z., Wu, G. et al. A unified image fusion framework with flexible bilevel paradigm integration. Vis Comput 39, 4869–4886 (2023). https://doi.org/10.1007/s00371-022-02633-9

DOI: https://doi.org/10.1007/s00371-022-02633-9