Abstract

For a sample of Exponentially distributed durations we aim at a point estimate and a confidence interval for the parameter. A duration is only observed if it has ended within a certain time interval, determined by a Uniform distribution. Hence, the data is a truncated empirical process that we can approximate by a Poisson process when only a small portion of the sample is observed, as is the case for our applications. We derive the likelihood from standard arguments for point processes, acknowledging the size of the latent sample as the second parameter, and derive the maximum likelihood estimator for both. Consistency and asymptotic normality of the estimator for the Exponential parameter are derived from standard results on M-estimation. We compare the design with a simple random sample assumption for the observed durations. Theoretically, the derivative of the log-likelihood is less steep in the truncation-design for small parameter values, indicating a larger computational effort for root finding and a larger standard error. In applications from the social and economic sciences and in simulations, we indeed find a moderately increased standard error when acknowledging truncation.


1 Introduction

Poor sample selection is a frequent basis for objection to the inferential quality of data. Hospital controls may be negatively selective, a student sample is a positive selection. Sampling from soldiers is selective, because a body height threshold truncates smaller recruits. Inference from the status quo of a loan portfolio can take into account the fact that earlier loan applications with too low a score had been rejected (see Bücker et al. 2013). Here we study de-selection on the basis of age being either too low or too high. An age is the duration between two events, denoted as “birth” and “death”, and Fig. 1 (left) shows the three possible situations.

Fig. 1 Left: Three cases of the date of 1st event (black bullet) and date of 2nd event (white circle): observed (solid) and truncated (dashed) durations. Right: Sets in the co-domain of \((X_i,T_i)'\) or \((\widetilde{X}_j, \widetilde{T}_j)'\) used in Lemma 1 (and proof): Example \(x \ge s\). (Explanation of panels and symbols is distributed over larger parts of text.)

We assume an Exponential distribution for the latent duration \(\widetilde{X}_j\), observed or truncated, and estimate its parameter \(\theta _0\). Our three applications will be the lifetime of a company (in Germany), the duration of a marriage (in the city of Rostock), and the waiting time, after the 50th birthday, until dementia onset (in Germany).

The parameter of an Exponential distribution is closely linked to the probability of the second event happening within one time unit, one year in all of our applications. In essence, one wants to estimate such an event probability by dividing the number of events (over a certain period) by the number of units at risk (at the beginning of the period), this being prohibited by the lack of denominator. We circumvent the missing data with the conditional distribution of the duration.

We distinguish, as three statistical masses, the population as all units with a first event in a period (of length G), the latent simple random sample and, after truncation, the observed data.

One can of course ask whether such simple random latent samples exist at all in practice. In survival analysis, the assumption of durations as independent identically distributed random variables can be defended, because independence and randomness are attributable to an unforeseeable staggered entry (see e.g. Weißbach and Walter 2010). Even more specifically, in labour economics, it is validated theoretically that market friction renders the entry into a new occupation for an employee random, and hence its duration until the new occupation.

Truncation is known to introduce a selection bias, which refers to a comparison of two models: the estimate of the correct model is compared with the estimate obtained by erroneously modelling the observed data as a simple random sample (srs-design). (We will later distinguish the selection bias from the statistical bias, the latter referring to only one model, namely comparing an estimate with the true parameter.) More important for us is that truncation is suspected to increase the standard error, according to Adjoudj and Tatachak (2019) due to dependence in the observed data, and we are interested in the extent to which truncation hinders statistical inference in terms of large sample properties.

As an early reference, Cox and Hinkley (1974) in their Example 2.25 consider the size of the truncated sample as an ancillary statistic, not acknowledging the size of the latent sample, n, as a parameter. The size of the truncated sample was subsequently considered again as random in Woodroofe (1985), and conditioning was used to prove consistency. Neighbouring contemporaneous work on truncation in survival analysis, mostly semi- and non-parametric, includes Shen (2010), Moreira and de Uña-Álvarez (2010), Weißbach et al. (2013), Emura et al. (2015, 2017), Frank et al. (2019) and Dörre (2020).

Here, we derive the maximum likelihood estimator (MLE) of n and \(\theta _0\) by representing the observed data as a truncated empirical process. We derive the likelihood with standard results for empirical processes (see e.g. Reiss 1993). The size of the data m will be shown to be such a process, seen as a point measure, evaluated at a certain set S. To the best of our knowledge the model is the first example of an exponential family with the space of point measures being the sample space.

2 Model and result

Before presenting the estimator and its asymptotic distribution, the data need to be described.

2.1 Sample selection

The unit j of the latent sample carries, as a second measure alongside its lifetime \(\widetilde{X}_j\) (\(\in \mathbb {R}_0^+\)), its birthdate (a calendar time). We, equivalently, measure the birthdate backwards from a specific time point (equal for all units of the latent sample) and denote it as \(\widetilde{T}_j\). We use the calendar date when our study period begins as this time point, so that \(\widetilde{T}_j\) has the interpretation of being the “age when the study begins”. We consider as population the units born within a pre-defined time window going back G time units from the study beginning, so that \(\widetilde{T}_j \in [0,G]\) (see Fig. 1 (left)). Define \(S:=\mathbb {R}_0^+ \times [0,G]\), with \(0<G < \infty \), the space for one outcome, and let it generate the \(\sigma \)-field \(\mathcal {B}\). In contrast to the example of soldiers, whose recruitment truncates all at the same height, in our survival analytic applications each unit is truncated at a different age. As illustrated in Fig. 1 (left), all units are truncated at the same time, when the study begins. Differently for each unit j, the time interval of observation truncates the sample unit when its age is too low or too high. Because \(\widetilde{T}_j\) is the (shifted) birth date, assuming a time-homogeneous Poisson process for the births renders the distribution of \(\widetilde{T}_j\) Uniform (see Dörre 2020, Lemma 2). Let us collect the following notation and assumptions:

-

(A1)

Let \(\Theta :=[ \varepsilon , 1 / \varepsilon ]\) for some “small” \(\varepsilon \in ]0,1[\).

-

(A2)

Let for \(\theta _0\) being an interior point of \(\Theta \), \(\widetilde{X}_j \sim Exp(\theta _0)\), i.e. with density \(f_E(\cdot /\theta _0)\) and CDF \(F_E(\cdot /\theta _0)\) of the Exponential distribution. Let \(\widetilde{T}_j \sim Uni[0,G]\), with density \(f^{\widetilde{T}}\) and CDF \(F^{\widetilde{T}}\) of the Uniform distribution.

-

(A3)

\(\widetilde{X}_j\) and \(\widetilde{T}_j\) are stochastically independent.

-

(A4)

For known constant \(s>0\), column vector \((\tilde{X}_j,\tilde{T}_j)'\) is observed if it is in

$$\begin{aligned} D:=\{ (x,t)' | 0 < t \le x \le t + s, t \le G \}. \end{aligned}$$

Assumption (A4) formalises that a sample unit is only observed when its second event falls into the observation period (of length s). For instance, in one of the applications, we will know the age-at-insolvency, i.e. the duration until insolvency, only for those companies that filed for insolvency within the \(s=3\) years 2014–2016. The parallelogram D is depicted in Fig. 1 (right). Following up on (A4), we denote an observation by \((X_i,T_i)'\), \(i=1, \ldots , m \le n\).
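The selection mechanism of (A2)–(A4) can be sketched in a few lines. The function name `simulate_truncated_sample`, the seed and the parameter values are illustrative assumptions, not taken from the applications; the sketch only draws a latent sample and keeps the pairs that fall into the parallelogram D.

```python
import numpy as np

def simulate_truncated_sample(n, theta0, G, s, seed=0):
    """Draw a latent sample under (A2)-(A3) and keep the pairs lying in D from (A4)."""
    rng = np.random.default_rng(seed)
    # Latent durations: Exponential with rate theta0 (NumPy expects the scale 1/theta0).
    x_lat = rng.exponential(scale=1.0 / theta0, size=n)
    # Age when the study begins: Uniform on [0, G].
    t_lat = rng.uniform(0.0, G, size=n)
    # (A4): the second event falls into the observation period of length s.
    observed = (t_lat <= x_lat) & (x_lat <= t_lat + s)
    return x_lat[observed], t_lat[observed]

# Hypothetical illustration values.
n = 100_000
x_obs, t_obs = simulate_truncated_sample(n=n, theta0=0.05, G=24.0, s=3.0)
m = x_obs.size
print(m, m / n)  # observed sample size and observed fraction
```

Only the retained pairs would be available to the analyst; n itself is not observed.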

The paper assumes a simple random sample for \((\widetilde{X}_j,\widetilde{T}_j)'\), \(j=1,\ldots ,n, n \in \mathbb {N}\), i.e. \(\text{ i.i.d. }\) random variables (r.v.) mapping from the probability space \((\Omega ,\mathcal {A},P)\) onto the measurable space \((S, \mathcal {B})\).

Define now for \(\theta \in \Theta \)

and note that for \(\theta =\theta _0\), by Fig. 1 (right), Fubini’s Lemma and the substitution rule, \(\alpha _{\theta _0}\) equals \(P\{\widetilde{T}_j \le \widetilde{X}_j \le \widetilde{T}_j +s\}\), i.e. the selection probability of the jth individual. The numerator is, due to \(\theta _0,s,G >0\), strictly positive and, as is to be expected, with a larger observation interval, i.e. increasing s, the selection becomes more likely. Additionally, for larger \(\theta _0\) (or smaller expected duration) the denominator increases faster than the numerator does, so that the selection becomes less likely. A shorter duration will not reach the observation interval. Seen as a function of G, \(\alpha _{\theta _0}\) is monotonically decreasing, with almost the same interpretation.
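As a worked check of the selection probability, the integral behind \(P\{\widetilde{T}_j \le \widetilde{X}_j \le \widetilde{T}_j +s\}\) can be evaluated directly under (A2)–(A4). The closed form below is recomputed from these assumptions rather than quoted, so it should be read as a sketch; it agrees with the density in Corollary 2(i) and with the derivative in Lemma 2(ii).

$$\begin{aligned} P\{\widetilde{T}_j \le \widetilde{X}_j \le \widetilde{T}_j +s\}&= \frac{1}{G} \int _0^G \int _t^{t+s} \theta _0 e^{-\theta _0 x} \, dx \, dt = \frac{1}{G} \int _0^G e^{-\theta _0 t} (1- e^{-\theta _0 s}) \, dt \\&= \frac{(1- e^{-\theta _0 s})(1- e^{-\theta _0 G})}{\theta _0 G}. \end{aligned}$$

In this form the numerator increases in s, and the ratio \((1- e^{-\theta _0 G})/(\theta _0 G)\) decreases in G, in line with the monotonicity statements above.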

The selection probability will occur in the likelihood, so that for maximisation, its first derivative will be needed. The second derivative of \(\alpha _{\theta }\) (with now variable \(\theta \)) will be needed for proving the asymptotic normality and thus calculating the standard error. The proof is elementary and omitted here.

Corollary 1

With Assumptions (A1)–(A4) the first and second derivatives of (1) in \(\theta \) are:

Obviously, the distribution of \((\widetilde{X}_j,\widetilde{T}_j)'\), conditional on being observed, will become important for deriving the likelihood.

Definition 1

Let \((X_1,T_1)'\), \((X_2,T_2)'\), \((X_3,T_3)'\), ..., be independent and identically distributed with CDF

In more detail, the distributions of \((X_i,T_i)'\) and \(X_i\) will be needed on the one hand later to define the precise stochastic description of the data, i.e. of the truncated sample as a truncated empirical process. On the other hand, we already need the distribution (and also moments) here to study the consistency and asymptotic normality of the maximum likelihood estimator. The proofs of Lemma 1 and Corollary 2 are elementary (and omitted), but it is useful to define sets (see Fig. 1 (right)):

Lemma 1

With Definition 1 and under Assumptions (A1)–(A4) it holds, for \((x,t)' \in D\), \(\alpha _{\theta _0} F^{X,T}(x,t) = (1- e^{- \theta _0 x}) t/G - R(x,t)\), with \(\frac{\partial ^2}{\partial x \partial t} R(x,t) =0\).

Corollary 2

With Definition 1 and under Assumptions (A1)–(A4):

-

(i)

For \((x,t)' \in D\) it holds \(f^{X,T}(x,t)= \frac{\theta _0}{G \alpha _{\theta _0}} e^{- \theta _0 x}\) (outside D it is zero).

-

(ii)

The marginal density of X for \(x \in [0,G+s]\) is

$$\begin{aligned} f^X(x)= & {} \frac{\theta _0}{G \alpha _{\theta _0}} e^{- \theta _0 x} \left( \mathbbm {1}_{[0,s]}(x) x + \mathbbm {1}_{]s,G]}(x) s + \mathbbm {1}_{]G,G+s]}(x) (G-x+s) \right) . \end{aligned}$$

-

(iii)

For the expectation of \(X_i\) it holds

$$\begin{aligned} \alpha _{\theta _0} E_{\theta _0}(X_i)&=A(s,G,\theta _0) e^{-\theta _0 s} + B(s,G,\theta _0) e^{-\theta _0 G} \\&\quad + C(s,G,\theta _0) e^{-\theta _0 (G+s)} + \frac{2}{G\theta _0^2}, \end{aligned}$$with \(A(s,G,\theta _0) := -\frac{s}{G\theta _0}- \frac{2}{G\theta _0^2}, B(s,G,\theta _0) := - \frac{1}{\theta _0} - \frac{2}{G \theta _0^2}\) and \(C(s,G,\theta _0) := \frac{G+s}{G \theta _0} + \frac{2}{G \theta _0^2}\).

-

(iv)

For the variance of \(X_i\) note that

$$\begin{aligned} E_{\theta _0}(X^2_i)&=A^q(s,G,\theta _0) e^{-\theta _0 s} + B^q(s,G,\theta _0) e^{-\theta _0 G} \\&\quad + C^q(s,G,\theta _0) e^{-\theta _0 (G+s)} + \frac{1}{4\theta _0^3} \end{aligned}$$ with \(A^q(s,G,\theta _0)= \frac{-s^2}{G \theta _0} - \frac{s}{6 \theta _0^2} - \frac{1}{4 \theta _0^3}\), \(B^q(s,G,\theta _0)=\frac{-G}{\theta _0} - \frac{4}{\theta _0^2} - \frac{1}{4 \theta _0^3}\) and \(C^q(s,G,\theta _0)= \frac{(G+s)^2}{G \theta _0} + \frac{G+s}{6 \theta _0^2} + \frac{1}{4 \theta _0^3}\).
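Parts (ii) and (iii) of Corollary 2 lend themselves to a numerical sanity check. The sketch below assumes \(s \le G\) and uses the product form \((1-e^{-\theta s})(1-e^{-\theta G})/(\theta G)\) for the selection probability, which is recomputed from (A2)–(A4) and is an assumption of this illustration. The parameter values and helper names are hypothetical.

```python
import numpy as np

def alpha(theta, s, G):
    """Selection probability; closed form recomputed from (A2)-(A4) (assumption)."""
    return (-np.expm1(-theta * s)) * (-np.expm1(-theta * G)) / (theta * G)

def marginal_density(x, theta, s, G):
    """f^X from Corollary 2(ii), assuming s <= G."""
    x = np.asarray(x, dtype=float)
    weight = np.where(x <= s, x, np.where(x <= G, s, G + s - x))
    weight = np.where((x >= 0) & (x <= G + s), weight, 0.0)
    return theta / (G * alpha(theta, s, G)) * np.exp(-theta * x) * weight

def trapezoid(y, x):
    """Plain trapezoidal rule, written out to avoid version-specific NumPy names."""
    return float(np.sum((y[1:] + y[:-1]) * np.diff(x)) / 2.0)

theta0, s, G = 0.05, 3.0, 24.0  # hypothetical illustration values
grid = np.linspace(0.0, G + s, 200_001)
dens = marginal_density(grid, theta0, s, G)

total = trapezoid(dens, grid)  # should be close to 1
alpha_mean_numeric = float(alpha(theta0, s, G)) * trapezoid(grid * dens, grid)

# Right-hand side of Corollary 2(iii).
A = -s / (G * theta0) - 2.0 / (G * theta0**2)
B = -1.0 / theta0 - 2.0 / (G * theta0**2)
C = (G + s) / (G * theta0) + 2.0 / (G * theta0**2)
alpha_mean_formula = (A * np.exp(-theta0 * s) + B * np.exp(-theta0 * G)
                      + C * np.exp(-theta0 * (G + s)) + 2.0 / (G * theta0**2))
print(total, alpha_mean_numeric, alpha_mean_formula)
```

The check covers only the density and the first moment; the second moment in (iv) is not verified here.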

We are now in the position to formulate the likelihood, maximise it and apply large sample theory.

2.2 Estimator and confidence interval

Similar to \(P\{A \cap B\}=P\{A|B\}P\{B\}\) and with detailed definitions following, we decompose the density of the observations and the random sample size, i.e. the likelihood \(\ell \), into the product of the conditional density of the data—conditional on observation—and the distribution of the observation count. If the observations—conditional on having been observed—are independent, the first factor of such product, again, is a product, namely over the conditional densities of each observation.

W.r.t. the second of such factors, note that the size of the observed sample has a Binomial distribution. We can approximate it by a Poisson distribution, when—as is usually argued with the probability generating function—the selection probability \(\alpha _{\theta }\) for each of the n i.i.d. latent Bernoulli experiments is small. This is the case when the width of the observation period (of length s) is “short”, relative to population period (of length G), as will be true for our applications. The description so far motivates
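How small the selection probability must be for the Poisson approximation to be reasonable can be gauged numerically. The sketch below computes the total variation distance between a Binomial(n, p) and a Poisson(np) distribution; the function names and the values of n and p are illustrative assumptions and are not taken from the applications.

```python
import math

def binom_logpmf(k, n, p):
    """Log of the Binomial(n, p) probability mass at k."""
    return (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
            + k * math.log(p) + (n - k) * math.log1p(-p))

def poisson_logpmf(k, lam):
    """Log of the Poisson(lam) probability mass at k."""
    return k * math.log(lam) - lam - math.lgamma(k + 1)

def total_variation(n, p):
    """Total variation distance between Bin(n, p) and Poi(n * p)."""
    lam = n * p
    abs_diff = 0.0
    poisson_mass = 0.0
    for k in range(n + 1):
        b = math.exp(binom_logpmf(k, n, p))
        q = math.exp(poisson_logpmf(k, lam))
        abs_diff += abs(b - q)
        poisson_mass += q
    # The Binomial has no mass beyond n; add the remaining Poisson tail.
    abs_diff += max(0.0, 1.0 - poisson_mass)
    return 0.5 * abs_diff

for p in (0.2, 0.08, 0.01):
    print(p, total_variation(1000, p))
```

The distance shrinks as the per-unit selection probability decreases, which is the regime of a short observation period relative to the population period.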

where we already use the “generic” parameter \(\theta \), as will be explained at the end of Sect. 3. The conditionally independent and Exponentially distributed observed durations \(X_i\) cause the first two factors in (2). The last two factors appear in the Poisson distribution of the observed sample size with parameter \(n \alpha _{\theta }\). Details for the likelihood construction will need a formulation of the data as a truncated empirical process and will be given in Sect. 3 (and in Theorem 3). The main point is that it is not necessary to formulate the conditional independence as a further assumption, but that it follows from the simple random sample assumption for the \((\widetilde{X}_j,\widetilde{T}_j)'\) and Assumptions (A1)–(A4). At first reading, Sect. 3 may be omitted without lack of coherence.

As a side remark, by inspection of (2), and long-known for random left-truncated durations, the likelihood does not include the (observed) \(t_i\), but it does include the (unobserved) n. Accordingly, n, which was not a parameter in the model (A1)–(A3), becomes a parameter after adding (A4).

As n is unknown in likelihood (2) (and equally in its rigorous counterpart to follow in Theorem 3), we obtain the approximate MLE for \((n,\theta _0)\) and use the \(\theta \)-coordinate of the bivariate zero as \(\hat{\theta }\). The logarithm of the likelihood has the derivative

Solving the bivariate equation for \(n \in \mathbb {R}^+\) results in \(m/\alpha _{\theta }\). In order to facilitate the proofs later on, we formulate the estimation as a minimization problem, and in detail as a minimization of an average. Define

with \(i_j\) as a realization of \(I_j := \mathbbm {1}_{[\tilde{T}_j,{\tilde{T}}_j + s]}({\tilde{X}}_j)\).

The derivative of the log-likelihood is now obviously related to (see van der Vaart 1998, Sect. 5)

The function is not observable, but it becomes observable after multiplication by n and hence its zero, \(\hat{\theta }\), is observable.

In order to account for boundary maxima, define the MLE \(\hat{\theta }\) now as the zero of \(\Psi _n(\theta )\) if it exists in (the open) \(\Theta \), as \(\varepsilon \) if \(\Psi _n(\theta )>0\), respectively as \(1/\varepsilon \) if \(\Psi _n(\theta )<0\), both for all \(\theta \in \Theta \). The following analytical properties (with proof in Appendix A) will be needed to prove the consistency and asymptotic normality of \(\hat{\theta }\).
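The definition of \(\hat{\theta }\), including the boundary cases, can be sketched as a bisection. The sketch assumes the per-observation estimating function \(x - 1/\theta + \dot{\alpha }_{\theta }/\alpha _{\theta }\) suggested by Lemma 2(iii), and the product form of \(\alpha _{\theta }\) recomputed from (A2)–(A4); both are assumptions of this illustration, as are the function names and the default \(\varepsilon \). Because \(\Psi _n\) is this function averaged over the observed durations (up to the positive factor m/n), only the observed mean is needed.

```python
import math

def log_alpha_derivative(theta, s, G):
    """d/dtheta log(alpha_theta) for alpha = (1-e^{-theta s})(1-e^{-theta G})/(theta G)."""
    term_s = s * math.exp(-theta * s) / (-math.expm1(-theta * s))
    term_G = G * math.exp(-theta * G) / (-math.expm1(-theta * G))
    return term_s + term_G - 1.0 / theta

def score(theta, x_mean, s, G):
    """Estimating function averaged over the observed durations."""
    return x_mean - 1.0 / theta + log_alpha_derivative(theta, s, G)

def truncation_mle(x_mean, s, G, eps=1e-3, tol=1e-13):
    """Zero of the increasing score on [eps, 1/eps], or the boundary if no zero exists."""
    lo, hi = eps, 1.0 / eps
    if score(lo, x_mean, s, G) > 0.0:   # positive on all of Theta
        return lo
    if score(hi, x_mean, s, G) < 0.0:   # negative on all of Theta
        return hi
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if score(mid, x_mean, s, G) < 0.0:
            lo = mid
        else:
            hi = mid
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)

# Hypothetical observed mean duration of 11 time units with s = 3 and G = 24.
print(truncation_mle(11.0, 3.0, 24.0))
```

Bisection is used here only because monotonicity (Lemma 2(ii)) makes it safe; any standard root finder would serve equally.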

Lemma 2

Under the Assumptions (A1)–(A4) it is

-

(i)

\(\psi _{\theta }(\tilde{x}_j,\tilde{t}_j)\) twice continuously differentiable in \(\theta \) for every \(({\tilde{x}}_j, {\tilde{t}}_j)'\),

-

(ii)

for \(({\tilde{x}}_j, {\tilde{t}}_j)' \in D\)

$$\begin{aligned} \dot{\psi }_{\theta }(\tilde{x}_j,\tilde{t}_j)= i_j \left( \frac{2}{\theta ^2} - \frac{s^2 e^{-\theta s}}{(1- e^{- \theta s})^2} - \frac{G^2 e^{-G \theta }}{(1- e^{- G \, \theta })^2} \right) > 0, \end{aligned}$$(6)

-

(iii)

\(E_{\theta _0}[\psi _{\theta }(\widetilde{X}_j,\widetilde{T}_j)] = \alpha _{\theta _0} E_{\theta _0}(X_i) - \frac{\alpha _{\theta _0}}{\theta } + \frac{\alpha _{\theta _0}\dot{\alpha }_{\theta }}{\alpha _{\theta }} =:\Psi (\theta )\),

-

(iv)

\(E_{\theta _0}[\psi _{\theta _0}(\widetilde{X}_j,\widetilde{T}_j)]=\Psi (\theta _0)=0\) and

-

(v)

\(\Psi _n(\hat{\theta }) {\mathop {\rightarrow }\limits ^{p}} 0\).

As a comparison, we consider the naïve approach to assume already for the observed data, \(X_1, \ldots , X_m {\mathop {\sim }\limits ^{iid}}Exp(\theta _0)\). This is even more tempting, as the necessity of a population definition seems to be redundant. Theoretically, under srs-assumption, the derivative of the log-likelihood—multiplied by minus one—has summands

being similar to the first two summands of (4) if \(i_j=1\). An interpretation of (ii) in Lemma 2 is now the srs-design as the limit, in the sense that, if \(i_j=1\), it is, \(\lim _{s \rightarrow \infty } \lim _{G \rightarrow \infty } \dot{\psi }_{\theta }(\tilde{x}_j,\tilde{t}_j) = \dot{\psi }^{srs}_{\theta }(x_i)\). Condition (v) is a tribute to boundary maxima: \(\Psi _n(\theta )\) has no zero in \(\Theta \) in case of a too high or too low “location” of \(\Psi _n\), in combination with a too small amplitude over the parameter space, meaning \(\Psi _n(1/\varepsilon ) - \Psi _n(\varepsilon )\). As \(\varepsilon \) can be chosen arbitrarily small, the amplitude depends on the limiting behaviour of \(\Psi _n\) towards the boundaries of \(\mathbb {R}^+\), on the left for \(\theta \rightarrow 0\) and on the right for \(\theta \rightarrow \infty \). Towards the left border, consider Taylor expansions for the numerator and denominator of \(\psi _{\theta }({\tilde{x}}_j, \tilde{t}_j)/i_j - {\tilde{x}}_j\) to show that the first two derivatives, using l’Hôspital’s rule for \(\theta \rightarrow 0\), are zero, but the third is not. The resulting finite limit is

Following up, note that

(see Definition 2 and Proof to Lemma 2(iii)). Note further  , from Corollary 2(iii), and  (see (1)).

Compare with  , to see that the reduced amplitude implies less information for truncation, due to the obviously reduced slope also at \(\theta _0\).

By contrast, on the right border, the limiting behaviour for \(\theta \rightarrow \infty \) is not affected by the change in design. To see when \(\psi _{1/\varepsilon }({\tilde{x}}_j,{\tilde{t}}_j)>0\), note that \(\lim _{\theta \rightarrow \infty }\psi _{\theta }({\tilde{x}}_j,{\tilde{t}}_j)/i_j - {\tilde{x}}_j=0\), using l’Hôspital’s rule once. For the srs-design, it is the same and finite, showing that a boundary maximum can occur when the observed durations are small, i.e. when \(\theta _0\) is large (compared to n). We will continue the comparison of designs in Monte Carlo simulation and applications of Sects. 4 and 5.
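The left-border behaviour can be made concrete with a short expansion. The sketch assumes the estimating function \(\psi _{\theta } = i_j ({\tilde{x}}_j - 1/\theta + \dot{\alpha }_{\theta }/\alpha _{\theta })\) suggested by Lemma 2(iii), the product form of \(\alpha _{\theta }\) recomputed from (A2)–(A4), and the usual Exponential score \(\psi ^{srs}_{\theta }(x_i) = x_i - 1/\theta \); these are assumptions of the illustration rather than quotations of (1), (4) and (7).

$$\begin{aligned} \frac{\psi _{\theta }({\tilde{x}}_j,{\tilde{t}}_j)}{i_j} - {\tilde{x}}_j&= -\frac{2}{\theta } + \frac{s}{e^{\theta s}-1} + \frac{G}{e^{\theta G}-1}, \qquad \frac{a}{e^{\theta a}-1} = \frac{1}{\theta } - \frac{a}{2} + \frac{\theta a^2}{12} + O(\theta ^3), \\ \lim _{\theta \rightarrow 0} \left( \frac{\psi _{\theta }({\tilde{x}}_j,{\tilde{t}}_j)}{i_j} - {\tilde{x}}_j \right)&= -\frac{G+s}{2}, \qquad \lim _{\theta \rightarrow 0} \left( \psi ^{srs}_{\theta }(x_i) - x_i \right) = \lim _{\theta \rightarrow 0} \left( -\frac{1}{\theta } \right) = -\infty . \end{aligned}$$

Under these assumptions the truncation-design has a finite left limit, whereas the srs-design diverges, which is one way to read the reduced amplitude described above.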

Theorem 1

Under assumptions (A1)–(A4) and for \(\theta _0 \in ]\varepsilon ,1/\varepsilon [\) holds \(\hat{\theta } {\mathop {\rightarrow }\limits ^{p}} \theta _0\).

Proof

Apply van der Vaart (1998), Lemma 5.10. \(]\varepsilon ,1/\varepsilon [\) is a subset of the real line, \(\Psi _n\) is a random function and \(\Psi \) a fixed, both in \(\theta \). It is \(\Psi _n(\theta ) {\mathop {\rightarrow }\limits ^{p}} \Psi (\theta )\) for every \(\theta \), roughly speaking due to Lemma 2(iii) and the LLN. Specifically, the Poisson property for M results in \(M/n {\mathop {\rightarrow }\limits ^{p}} \alpha _{\theta _0}\). Furthermore, \(\frac{1}{n} \sum _{j=1}^n I_j \widetilde{X}_j=\frac{1}{n} \sum _{i=1}^M X_i {\mathop {\rightarrow }\limits ^{p}} \alpha _{\theta _0} E_{\theta _0}(X_i)\) is a consequence of \(M \sim Poi(n \alpha _{\theta _0})\). Together with \(E_{\theta _0}(M)= Var_{\theta _0}(M) = n \alpha _{\theta _0}\) one has

as \(E_{\theta _0}(X_i)\) and \(Var_{\theta _0}(X_i)\) are finite by Corollary 2(iii+iv). Convergence follows in squared mean, and hence in probability.

For the next condition in Lemma 5.10, we need a short discussion about maxima at the boundary of \(\Theta \) for some—typically small—n. In these situations, there is no zero to \(\Psi _n(\theta )\). We will demonstrate that, using the boundary in these situations, the MLE is a “near zero”. That is, \(\Psi _n(\theta )\) is non-decreasing due to Lemma 2(ii) and Lemma 2(v) holds. Furthermore, \(\Psi (\theta )\) is obviously differentiable and \(\dot{\Psi }(\theta _0)>0\) with the same argument as for \(\dot{\psi }_{\theta }\) in Lemma 2(ii) for \(({\tilde{x}}_j, {\tilde{t}}_j)' \in D\), such that \(\Psi (\theta _0- \eta )< 0 < \Psi (\theta _0+ \eta )\) for every \(\eta >0\) when \(\Psi (\theta _0)=0\), which holds due to Lemma 2(iv). \(\square \)

Although \(\hat{\theta }\) is the MLE, we cannot study asymptotic normality with general results from maximum likelihood theory. This would only be possible if we had considered an estimator for the pair \((n,\theta _0)\). Nonetheless, \(\hat{\theta }\) is an M-estimator.

The main idea is to use the smoothness of \(\Psi _n(\theta )\) and apply a quadratic Taylor expansion of \(\Psi _n\) around \(\theta _0\) and evaluated at \(\hat{\theta }\), resulting in (see van der Vaart 1998, Equation (5.18))

with \({\tilde{\theta }}\) between \(\hat{\theta }\) and \(\theta _0\). We will need:

Lemma 3

It is \(E_{\theta _0}[\psi ^2_{\theta _0}(\widetilde{X}_j,\widetilde{T}_j)] < \infty \) and \(\ddot{\psi }_{\theta }(\tilde{x}_j,\tilde{t}_j) \le \ddot{\psi }(\tilde{x}_j,\tilde{t}_j)\) for all \(\theta \) and the subsequent bound integrable.

Proof

For the first half: It is \(I_j \widetilde{X}_j^2 \le (G+s)^2 \Rightarrow E_{\theta _0}(I_j \widetilde{X}_j^2) \le \alpha _{\theta _0} (G+s)^2\), \(I_j \widetilde{X}_j \ge 0 \Rightarrow E_{\theta _0}(I_j \widetilde{X}_j) \ge 0\) and \(I_j \widetilde{X}_j \le (G+s) \Rightarrow E_{\theta _0}(I_j \widetilde{X}_j) \le \alpha _{\theta _0} (G+s)\), so that

which is finite due to \(\theta _0 \in \Theta \), the finiteness and positivity of \(\alpha _{\theta _0}\) from (1) and the finiteness of \(\dot{\alpha }_{\theta _0}\) from Corollary 1(i). For the second half: In (9), we can replace the denominators by their (due to the arguments after (1)) positive minima. Then, all numerators are continuous functions on compact \(\Theta \) hence with finite maxima, that we may insert. So that \(\ddot{\psi }_{\theta }(\tilde{x}_j,\tilde{t}_j) \le i_j C=:\ddot{\psi }(\tilde{x}_j,\tilde{t}_j)\) (with \(C < \infty \)) having finite integral \(C \alpha _{\theta _0}\). \(\square \)

Theorem 2

Let \(\theta _0 \in ]\varepsilon , 1/\varepsilon [\). Then, under assumptions (A1)–(A4), it holds that \(\sqrt{n} (\hat{\theta } - \theta _0) {\mathop {\rightarrow }\limits ^{d}} N(0, \sigma ^2)\) with \( \sigma ^2 := E_{\theta _0}(\psi _{\theta _0}^2(\widetilde{X}_j,\widetilde{T}_j))/ [E_{\theta _0}(\dot{\psi }_{\theta _0}(\widetilde{X}_j,\widetilde{T}_j))]^2\) (see definitions (6) and (9)).

Proof

Use the classical assumptions of Fisher (here in the formulation from van der Vaart 1998, Theorem 5.41). The main assumption of consistency is Theorem 1. Now \(\psi _{\theta }({\tilde{x}}_j, {\tilde{t}}_j)\) is twice continuously differentiable in \(\theta \) for every \(({\tilde{x}}_j,{\tilde{t}}_j)\), due to Lemma 2(i). \(E_{\theta _0}[\psi _{\theta _0}(\widetilde{X}_j, \widetilde{T}_j)]=0\) due to Lemma 2(iv) with \(E_{\theta _0}[\psi ^2_{\theta _0}(\widetilde{X}_j, \widetilde{T}_j)] < \infty \) due to Lemma 3. The existence of \(E_{\theta _0}[\dot{\psi }_{\theta _0}(\widetilde{X}_j,\widetilde{T}_j)]\) follows from (4) and positivity from Lemma 2(ii) combined with \(E_{\theta _0}(I_j)=\alpha _{\theta _0}>0\). Dominance of the second derivative by a fixed integrable function around \(\theta _0\) is due to Lemma 3. \(\square \)

For the estimation of the standard error (SE) from Theorem 2, we replace expectations by averages over the latent sample (with \(\theta _0\) replaced by \(\hat{\theta }\)),

being observable, because indicators reduce sums up to m.
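The cancellation of n in the estimated standard error can be illustrated with a plug-in computation. The sketch follows the sandwich form of \(\sigma ^2\) in Theorem 2 with averages over the latent sample; since \(\psi \) and \(\dot{\psi }\) vanish for unobserved units, \(\hat{\sigma }^2/n\) reduces to \(\sum _{i \le m} \psi ^2 / (\sum _{i \le m} \dot{\psi })^2\). The form of \(\psi _{\theta }\) is the one suggested by Lemma 2(iii) with the recomputed product form of \(\alpha _{\theta }\), \(\dot{\psi }_{\theta }\) is taken from (6), and all names and data values are hypothetical.

```python
import math

def psi(theta, x, s, G):
    """Estimating function for an observed duration x (i_j = 1); assumed form."""
    term_s = s * math.exp(-theta * s) / (-math.expm1(-theta * s))
    term_G = G * math.exp(-theta * G) / (-math.expm1(-theta * G))
    return x - 2.0 / theta + term_s + term_G

def psi_dot(theta, s, G):
    """Derivative in theta for an observed unit, as in (6)."""
    term_s = s**2 * math.exp(-theta * s) / math.expm1(-theta * s) ** 2
    term_G = G**2 * math.exp(-theta * G) / math.expm1(-theta * G) ** 2
    return 2.0 / theta**2 - term_s - term_G

def standard_error(theta_hat, xs, s, G):
    """Plug-in standard error of theta_hat; the latent sample size n cancels."""
    sum_psi_sq = sum(psi(theta_hat, x, s, G) ** 2 for x in xs)
    sum_psi_dot = len(xs) * psi_dot(theta_hat, s, G)
    return math.sqrt(sum_psi_sq) / sum_psi_dot

# Hypothetical observed durations and estimate.
durations = [2.0, 9.5, 14.0, 21.0]
print(standard_error(0.05, durations, s=3.0, G=24.0))
```

Only observed durations enter, so the quantity is computable without knowing n.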

3 Likelihood approximation

In order to give a precise version and derivation of the likelihood (2), we now describe the truncated sample as a stochastic process as in Kalbfleisch and Lawless (1989), especially as a truncated empirical process, which in turn is approximated by a mixed empirical process. For the mixed process, deriving the likelihood is relatively simple.

Denote by \(\epsilon _a\) the Dirac measure concentrated at point \(a \in S\). Define the point measure \(\mu := \sum _{j=1}^n \epsilon _{(\widetilde{x}_j,\widetilde{t}_j)'}\), \(\mu : \mathcal {B} \mapsto \bar{\mathbb {N}}_0\), and denote the space of point measures on \(\mathcal {B}\) (with fixed n) by \(\mathbb {M}\). By inserting random variables, it becomes an empirical process \(N_n:= \sum _{j=1}^n \epsilon _{(\widetilde{X}_j,\widetilde{T}_j)'(\omega )}\) (\(\Omega \mapsto \mathbb {M}\)), measurable w.r.t. \(\sigma \)-algebras from \(\mathcal {A}\) to \(\mathcal {M}\), the \(\sigma \)-algebra for \(\mathbb {M}\). The data is now the truncated empirical process (for an illustration, see Fig. 2 (left))

for which we write \(X_1, \ldots , X_m\) in all but this section. The size of the truncated sample is \(N_{n,D}(S)\), for which we write M—and realised m—in all but this section, and is hence random and dependent on the sample size n.

In order to parametrize the data, i.e. the truncated empirical process, we write its intensity measure (only needed for sets \([0,x] \times [0,t]\)) as

due to Lemma 1. To see that, note that

Here, and in the following, the measure in the co-domain of a random variable is denoted \(\mathcal {L}\), e.g. \(\mathcal {L}(\widetilde{X}_j,\widetilde{T}_j)\). Note also that, \(\nu _{N_{n,D}}\) evaluated at S, is \(n \alpha _{\theta _0}\). One can show that \(N_{n,D}\) is equal in distribution to a Binomial-mixing empirical process. However, as our data in the applications (Sect. 5) will be relatively few, because s is relatively small, we will see shortly that it is enough to approximate the data with a Poisson-mixing empirical process.

Definition 2

Assume (A1)–(A4) and let Z be Poisson-distributed with parameter \(n \alpha _{\theta _0}\) and independent thereof \((X_i,T_i)'\) of Definition 1:

Due to \(\nu _{n,D}(S)=n \alpha _{\theta _0}<\infty \) and \(\mathcal {L}[(X_i,T_i)']=\nu _{n,D}/(n \alpha _{\theta _0})\) (by (11)) now \(N^{*}_n\) is a Poisson process with an intensity measure (see Reiss 1993, Theorem 1.2.1(i))

The latter is generally true for Poisson processes, (realized or not), so that Z is also observed.

The parallelogram D is “small” (in terms of \(\mathcal {L}(\widetilde{X}_j,\widetilde{T}_j)\)) relative to S, as long as the observation interval width s is relatively small compared to the width G of the population (and the typically long expected durations). Hence, \(N^{*}_n\) is “close” to \(N_{n,D}\) in Hellinger distance (see e.g. Reiss 1993, Approximation Theorem 1.4.2). We will now derive the likelihood for \(N^{*}_n\).
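A realisation of the Poisson-mixing empirical process of Definition 2 can be sketched as follows. The sampler uses a factorisation that follows from the density in Corollary 2(i): with \(w = x - t\), the density on D is proportional to \(e^{-\theta _0 t} e^{-\theta _0 w}\) on \([0,G] \times [0,s]\), so \(T_i\) and \(X_i - T_i\) can be drawn as independent truncated Exponential variables. This factorisation, the product form of \(\alpha _{\theta _0}\) and all names are assumptions of the illustration.

```python
import numpy as np

def truncated_exponential(rng, theta, upper, size):
    """Inverse-CDF draws from an Exponential(theta) restricted to [0, upper]."""
    u = rng.uniform(size=size)
    return -np.log1p(u * np.expm1(-theta * upper)) / theta

def sample_poisson_mixing_process(n, theta0, s, G, seed=0):
    """Draw Z ~ Poi(n * alpha) and then Z i.i.d. points (X_i, T_i) on D."""
    rng = np.random.default_rng(seed)
    # Selection probability, closed form recomputed from (A2)-(A4) (assumption).
    alpha = np.expm1(-theta0 * s) * np.expm1(-theta0 * G) / (theta0 * G)
    z = rng.poisson(n * alpha)
    t = truncated_exponential(rng, theta0, G, z)      # T_i on [0, G]
    x = t + truncated_exponential(rng, theta0, s, z)  # X_i = T_i + (X_i - T_i)
    return x, t

# Hypothetical illustration values.
x_star, t_star = sample_poisson_mixing_process(n=100_000, theta0=0.05, s=3.0, G=24.0, seed=1)
print(x_star.size)  # realised Z, i.e. the number of points of the process
```

In contrast to direct truncation of a latent sample, no unobserved points are generated here; the count Z is random by construction.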

The likelihood is the density of \(N^{*}_n\), evaluated at the realisation, denoted as \(n^{*}_n\), i.e. with inserted z and \((x_i,t_i)'\)’s. The density of \(N^{*}_n\) has as its domain the co-domain of \(N^{*}_n\), \(\mathbb {M}\), so that the density of \(N^{*}_n\) is a function of the point measure \(\mu \). Furthermore, a Radon–Nikodym density requires a dominating measure and we use the distribution of another Poisson process. We choose the 2-dim homogeneous Poisson process on \([0,A]^2\).

Definition 3

Let \(A \in \mathbb {N}\) be a number larger than the support of \(X_i\) or \(T_i\), e.g. the next natural number larger than \(G+s\) (see Definition 1). Let \(N_0\) be a Poisson process with \(Z_0 \sim Poi_{A^2}\) and independently thereof \((X^0_i,T^0_i)' \sim Uni([0,A]^2)\), \(i=1,2,3, \ldots \).

Note that \(N_0\) has a (finite) intensity measure, where \(\lambda _{[0,A]^2}\) denotes the Lebesgue measure restricted to \([0,A]^2\) (see Reiss 1993, Theorem 1.2.1(i))

The latter is different from a geometrically intuitive volume \(A^4\). \(\mathcal {L}(N_0)\) will now serve as the dominating measure in order to derive the Radon–Nikodym density of \(\mathcal {L}(N^{*}_n)\). But for that we will need the Radon–Nikodym density of \(\nu _{n,D}\) w.r.t. \(\nu _0\), so that (see Billingsley 2012, Formula (16.11)) one searches \(h_{\theta _0}:S \rightarrow \mathbb {R}_0^+\) with \(\forall B \in \mathcal {B}\) it is

For \(B=[0,x]\times [0,t]\) and \(x\le A, t \le A\) due to Fubini’s theorem, with \(\lambda \) as the univariate Lebesgue measure, due to the differentiability,

where (11) is used for the third equality, and Lemma 1 for the fourth together with \(\frac{\partial ^2}{\partial x \partial t} R(x,t) = 0\) from Lemma 1. Of course, for \((x,t)' \not \in D\) it is \(h_{\theta _0}(x,t) = 0\).

Theorem 3

For Assumptions (A1)–(A4) and \(\alpha _{\theta _0}\) from (1), the model \(N^{*}_n\) of Definition 2 has likelihood w.r.t. \(\mathcal {L}(N_0)\) from Definition 3:

The proof is in Appendix B. The main idea is to decompose the density of the data, i.e. of \(\mathcal {L}(N_n^*)\), into the product of the density, conditional on \(N_n^*(S)\), multiplied by the probability mass function of the Poisson distributed \(N_n^*(S)\). The latter causes the very last factor of (16) to include an exponential function in \(n \alpha _{\theta _0}\). Note that by Fisher-Neyman factorization \((N^{*}_n(S), \sum _{i=1}^{N^{*}_n(S)} X_i)\) is a sufficient statistic.

We maximise the likelihood as a function in its second argument, the “generic” parameter \(\theta \), being already the notation in (3). For a thorough discussion about the parameter notation, we refer the reader to the maximum likelihood estimator as posterior mode in a Bayesian analysis with uniform prior (see e.g. Robert 2001, Sect. 2.3). Finally note that, after taking the logarithm, the derivatives w.r.t. \(\theta \) and n of (16) are equal to those of its intuitive counterpart (2) with \(n^{*}_n(S)\) replaced by m (see (3)).

4 Monte Carlo simulations

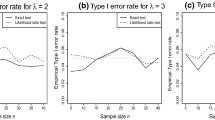

Our aim in this section is twofold. First, we illustrate the vanishing bias, i.e. consistency, stated theoretically by Theorem 1. Second, the notion of a “bias”, referring to one model so far, can be extended to the “selection bias” comparing two models. We will assess such a design effect compared to the srs-design, as motivated theoretically after Lemma 2.

We simulate \(n \in \{10^p, p=3, \ldots , 6 \}\) durations \(\widetilde{X}_j\) from Assumption (A2) with \(\theta _0 \in \{0.005, 0.01, 0.05, 0.1 \}\) according to (A1) and further \(\widetilde{T}_j\) according to (A2) with \(G \in \{24, 48 \}\), and we obey (A3). We then retain the m of the \(\tilde{x}_j\) that fulfil (A4) with \(s \in \{2,3,48\}\). We calculate for the data set v the MLE \(\hat{\theta }^{(v)}\) as zero of (5) by means of a standard algorithm. Boundary maxima do not occur because (8) is markedly negative for all simulation scenarios.

In order to illustrate consistency first, we assess the finite-sample bias as the average over the \(R =1000\) simulated \((\hat{\theta }^{(v)} - \theta _0)\). Table 1 (1st rows) lists the results, and it can be seen that the bias decreases to virtually zero. In order to show the decline in the mean squared error, consider the estimated standard error (10) of \(\hat{\theta }^{(v)}\). In Table 1 (2nd rows), the averages over the \(\left( \hat{\sigma }^{(v)}\right) ^2\) seem to have a finite limit for increasing n. Hence, the standard error decreases at the rate \(1/\sqrt{n}\).

A by-product of the simulations is that they allow us to confirm the representation of \(\sigma ^2\) (in Theorem 2). On the one hand, \(Var(\hat{\theta })\) can be approximated by the simulated variance \(\frac{1}{R} \sum _{v=1}^R (\hat{\theta }^{(v)} - \theta _0)^2\), so that \(\sigma ^2= Var(\sqrt{n} \hat{\theta }) =n Var(\hat{\theta })\) is approximated by n times the simulated variance (Table 1 (3rd rows)). On the other hand, in a simulation, but not in an application, \(\sigma ^2\) can be estimated as n times the square of (10), because n is known (Table 1 (2nd rows)). Both quantifications become equal for large n.
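The Monte Carlo quantities of this paragraph (bias and n times the simulated variance) can be sketched as follows. The sketch rests on assumptions that are not taken from the text: the selection rule \(\tilde{t}_j \le \tilde{x}_j \le \tilde{t}_j + s\), the score function derived from it in our own notation (not copied from (5)), bisection as the "standard algorithm", and smaller values of n and R than in Table 1 to keep the run time short. All names are hypothetical.

```python
import math
import random


def score(theta, xbar, s, G):
    """Averaged log-likelihood derivative under the ASSUMED selection rule."""
    return 2.0 / theta - xbar - s / math.expm1(theta * s) - G / math.expm1(theta * G)


def mle(xs, s, G, lo=1e-4, hi=10.0):
    """Bisection for the zero of the decreasing score; returns a boundary if none."""
    if not xs:
        raise ValueError("empty truncated sample")
    xbar = sum(xs) / len(xs)
    if score(lo, xbar, s, G) <= 0.0:
        return lo
    if score(hi, xbar, s, G) >= 0.0:
        return hi
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if score(mid, xbar, s, G) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def draw_observed_durations(n, theta0, s, G, rng):
    """Simulate n latent pairs and return the durations that are observed."""
    xs = []
    for _ in range(n):
        x = rng.expovariate(theta0)
        t = rng.uniform(0.0, G)
        if t <= x <= t + s:
            xs.append(x)
    return xs


rng = random.Random(2)
theta0, s, G, R = 0.1, 3, 24, 200
results = {}
for n in (1_000, 10_000):
    estimates = [mle(draw_observed_durations(n, theta0, s, G, rng), s, G)
                 for _ in range(R)]
    bias = sum(e - theta0 for e in estimates) / R
    n_times_var = n * sum((e - theta0) ** 2 for e in estimates) / R
    results[n] = (bias, n_times_var)
    print(n, bias, n_times_var)
```

If the estimator is consistent and \(\sqrt{n}\)-normal, the printed bias should shrink with n while n times the simulated variance should stabilise.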

The relation of the standard error to \(\alpha _{\theta _0}\) is also interesting. The standard error decreases in \(\alpha _{\theta _0}\), evidently because \(\alpha _{\theta _0}\) is linearly related to the size of the truncated sample by \(m=n \alpha _{\theta _0}\) (see again (11)). The relation of \(\alpha _{\theta _0}\) to \(\theta _0\), s and G is already explained after its definition (1), and its respective sensitivity is presented in Table 1. There is one exception: although \(\alpha _{\theta _0}\) is decreasing in G, the simulated \(\hat{\sigma }^2\) does not increase, but instead decreases for a given n (Table 1 (left panels)). The reason can be suspected to be as in the srs-design, where the estimated standard error (17) not only increases in m of order 1/2, but also decreases in \(\sum _{i=1}^m x _i\) of order 1, the latter being much larger for a large G (at given m).

Second, for the srs-design, applying (7) results in the MLE \(\hat{\theta }_{srs}= m/\sum _{i=1}^m x _i\) with standard error \(\sigma _{srs}/\sqrt{m}= \theta _0/\sqrt{n^{*}(S)}\) (i.e. \(\sigma _{srs}^2:=\theta _0^2\)). The latter can be estimated by inserting \(\hat{\theta }_{srs}\),

\[\frac{\hat{\theta }_{srs}}{\sqrt{m}} = \frac{\sqrt{m}}{\sum _{i=1}^m x _i}. \qquad (17)\]

The factor for “inflating” the variance from Theorem 2, denoted as Kish’s design effect, is

Illustrating the design effect with the variance inflation factor (VIF) is typical for the field of sampling techniques, especially survey sampling. (By contrast, in econometrics, variance inflation typically denotes the fact that the standard errors of regression coefficients increase when more covariates are included.) In the simulations, the VIF remains overall of quite moderate size, with a tendency to increase in \(\alpha _{\theta _0}\).

We will continue the comparison of designs in the applications of Sect. 5 where we will see a substantial variance inflation in all three applications.

5 Three empirical applications

5.1 Populations and data

Insolvency of corporates founded 1990–2013 The population of our first application consists of German companies founded after the last structural break in Germany, the re-unification, namely from the beginning of 1990. The first event is the foundation of the company, and the second considered event is the insolvency. We restrict attention to the \(G=24\) years until the end of 2013, after which we started observing. Let \(\widetilde{X}_j\sim Exp(\theta _0)\) denote the age-at-insolvency and \(\widetilde{T}_j\) the company's age at the beginning of 2014. We assume foundations to have occurred at a constant rate (over those \(G=24\) years), i.e. \(\widetilde{T}_j \sim Uni[0,24]\). The German federal ministry of finance publishes the age of each insolvent debtor. We stop observing in 2016, i.e. \(s=3\), after having collected a truncated sample of \(m=55,279\) companies.

Divorce of couples married 1993–2017 In our next application, the German bureau of statistics reports divorces together with marriage lengths. Of the marriages contracted between 1993 and 2017 in the German city of Rostock, \(m=327\) were divorced during 2018. Of these, 82 lasted less than 5 years, 112 lasted 6–10, 67 lasted 11–15, 40 lasted 16–20 and 26 lasted 21–25 years, i.e. \(G=25\) and \(s=1\). This small-sample example can help to understand the dependence of the variance inflation on the sample size.

Dementia onset of people born 1900–1954 Our final application is dementia incidence in Germany for the birth cohorts 1900 until 1954. The first event is the 50th birthday of a person, between 1950 and 2004, i.e. we have \(G=55\). An insurance company reported that between 2004 and 2013 (\(s=9\)), \(m=35,929\) insured persons had a dementia incidence (the second event) (for more information about the data see Weißbach et al. 2021).

5.2 Comparison of estimation results

The zero of (5), i.e. the point estimate \(\hat{\theta }\), is found graphically, for instance for the first application by Fig. 2 (right). For the estimated standard error see (10). All estimates are in Table 2, which also contains the estimates under srs-design (17).

It is evident that ignoring truncation overestimates the hazard \(\theta _0\) by, for example, 29% in the insolvency application, and also causes negative selection of units in the others. We observe that the standard error is underestimated by about 35% in all applications (equivalent to an average \(\widehat{VIF}=2.5\), as an estimate of (18)), presumably through ignoring the stochastic dependence between units (and thus measurements) within the truncated sample. Also, the variance inflation seems almost independent of the sample size.
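As a rough numerical illustration of the comparison, the following sketch computes both point estimates for the grouped divorce data of Sect. 5.1. Several ingredients are assumptions and not taken from the paper: the class midpoints used in place of the unreported individual marriage lengths, the selection rule \(\tilde{t}_j \le \tilde{x}_j \le \tilde{t}_j + s\), and the resulting score function (in our own notation, not copied from (5)). The numbers are therefore not those of Table 2, and all names are hypothetical.

```python
import math


def score(theta, xbar, s, G):
    """Averaged log-likelihood derivative under the ASSUMED selection rule."""
    return 2.0 / theta - xbar - s / math.expm1(theta * s) - G / math.expm1(theta * G)


def truncated_mle(xbar, s, G, lo=1e-4, hi=10.0):
    """Bisection for the zero of the decreasing score on [lo, hi]."""
    if score(lo, xbar, s, G) <= 0.0:
        return lo
    if score(hi, xbar, s, G) >= 0.0:
        return hi
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if score(mid, xbar, s, G) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Grouped marriage lengths from the text; the midpoints are an ASSUMPTION.
counts = [82, 112, 67, 40, 26]
midpoints = [2.5, 8.0, 13.0, 18.0, 23.0]
s, G = 1, 25

m = sum(counts)
total = sum(c * x for c, x in zip(counts, midpoints))

theta_srs = m / total               # srs-design MLE
se_srs = math.sqrt(m) / total       # its estimated standard error
theta_trunc = truncated_mle(total / m, s, G)

print(m, theta_srs, se_srs, theta_trunc)
```

Under these assumptions the srs value exceeds the truncation-adjusted one, which agrees with the direction of the selection bias described above.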

6 Discussion

The results are encouraging, as even after truncation, asymptotic normality holds, and standard errors do not increase too much. The considerable selection bias can be accounted for easily and identification of the parameters follows from standard results on the exponential family.

However, it is somewhat unfortunate that standard consistency proofs for the Exponential family fail, because compactness of the parameter space is violated, even when re-parametrising, due to the growing sample size being a parameter itself. A temptation to be resisted is to misinterpret the data as a simple random sample, only because statistical units are selected with equal probabilities (see (1)). This is especially tempting, because if the truncated sample were simple, not knowing n would be similar to not knowing the size of the population, requiring “finite-population corrections” only in the case of relatively many observations.

In practice, the considerable effort to account for truncation can even be circumvented in rich data situations by adjusting the population definition to start at the observation interval, however thereby excluding observable units (see e.g. Weißbach et al. 2009).

Of course, more advanced sampling designs exist, such as endogenous sampling, where units that have had a longer timeframe have a larger selection probability, in contrast to our model (see (1)). Also, truncation is typically analysed with counting process theory, focusing more on the role of the filtration as an information model (see e.g. Andersen et al. 1988). And with respect to robustness, the maximum likelihood method we use can be inferior to the method of moments (see e.g. Weißbach and Radloff 2020; Rothe and Wied 2020).

Nonetheless, we believe that our approach still offers some advantages, as we (i) directly recognize the second measurement, the age when observation starts, as random, (ii) model the sample size as random and (iii) distinguish explicitly between indices in the observed and the unobserved sample.

Two more minor points appear notable. First, the distance from the data to the mixed empirical process can be reduced to zero by changing from Poisson-mixing to Binomial-mixing, although little new insight can be expected, other than longer proofs. The same is true when proving the information equality for the standard error. And finally, one troublesome aspect should not be concealed. Compare the design effect with the theory of cluster samples where the VIF increases in the cluster size linearly, for given intra-cluster correlation. Considering the time as a classifier, truncation seems to introduce a very small intra-temporal correlation, because the increase in the VIF is small. However, for very small sample sizes, the VIF should then be even smaller. Non-linear behaviour of the dependence on the sample size is conceivable.

References

Adjoudj L, Tatachak A (2019) Conditional quantile estimation for truncated and associated data. Commun Stat 48:4598–4641

Andersen P, Borgan Ø, Gill R, Keiding N (1988) Censoring, truncation and filtering in statistical models based on counting processes. Contemp Math 80:1–31

Billingsley P (2012) Probability and measure, 4th edn. Wiley, Hoboken

Bücker M, van Kampen M, Krämer W (2013) Reject inference in consumer credit scoring with nonignorable missing data. J Bank Financ 37:1040–1045

Cox DR, Hinkley DV (1974) Theoretical statistics. CRC Press, Boca Raton

Dörre A (2020) Bayesian estimation of a lifetime distribution under double truncation caused by time-restricted data collection. Stat Pap 61:945–965

Emura T, Konno Y, Michimae H (2015) Statistical inference based on the nonparametric maximum likelihood estimator under double-truncation. Lifetime Data Anal 21:397–418

Emura T, Hu Y-H, Konno Y (2017) Asymptotic inference for maximum likelihood estimators under the special exponential family with double-truncation. Stat Pap 58:877–909

Frank G, Chae M, Kim Y (2019) Additive time-dependent hazard model with doubly truncated data. J Korean Stat Soc 48:179–193

Kalbfleisch JD, Lawless JF (1989) Inference based on retrospective ascertainment: an analysis of the data on transfusion-related AIDS. J Am Stat Assoc 84:360–372

Moreira C, de Uña-Álvarez J (2010) A semiparametric estimator of survival for doubly truncated data. Stat Med 29:3147–3159

Reiss R-D (1993) A course on point processes. Springer, New York

Robert CP (2001) The Bayesian choice. Springer, New York

Rothe C, Wied D (2020) Estimating derivatives of function-valued parameters in a class of moment condition models. J Econ 217:1–19

Shen P-S (2010) Nonparametric analysis of doubly truncated data. Ann Inst Stat Math 62:835–853

van der Vaart A (1998) Asymptotic statistics. Cambridge University Press, Cambridge

Weißbach R, Radloff L (2020) Consistency for the negative binomial regression with fixed covariate. Metrika 83:627–641

Weißbach R, Walter R (2010) A likelihood ratio test for stationarity of rating transitions. J Econ 155:188–194

Weißbach R, Tschiersch P, Lawrenz C (2009) Testing time-homogeneity of rating transitions after origination of debt. Empir Econ 36:575–596

Weißbach R, Poniatowski W, Krämer W (2013) Nearest neighbor hazard estimation with left-truncated duration data. Adv Stat Anal 97:33–47

Weißbach R, Kim Y, Dörre A, Fink A, Doblhammer G (2021) Left-censored dementia incidences in estimating cohort effects. Lifetime Data Anal 27:38–63

Woodroofe M (1985) Estimating a distribution function with truncated data. Ann Stat 13:163–177

Acknowledgements

The financial support from the Deutsche Forschungsgemeinschaft (DFG) of R. Weißbach is gratefully acknowledged (Grant WE 3573/3-1 “Multi-state, multi-time, multi-level analysis of health-related demographic events: Statistical aspects and applications”). We thank W. Lohse, D. Ollrogge and G. Doblhammer for support in the data acquisition process. For the support with data we thank the AOK Research Institute (WIdO). The linguistic and idiomatic advice of Brian Bloch is also gratefully acknowledged.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Appendices

Appendix A: Proof of Lemma 2

For (i), note first that by (A1), \(\theta _0 \in \Theta \), so is \(\theta \): For \((\tilde{x}_j,\tilde{t}_j) \not \in D\), \(\psi _{\theta }(\tilde{x}_j,\tilde{t}_j) \equiv 0\). Alternatively, due to Corollary 1, first and second derivatives of \(\alpha _{\theta }\) are continuous, and therefore, so will be the third. Also \(\alpha _{\theta }\), being—along with \(\theta \)—the only component of a denominator in the first or second derivative of \(\psi _{\theta }\), is strictly positive due to the quotient rule.

For (ii): For the equality, due to Corollary 1, it is

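For the positivity, we start by showing that for \(x>0\), or equivalently for \(y:=x/2>0\),

\[x e^{-x/2} < 1 - e^{-x} \quad \Leftrightarrow \quad g(y) := 1 - e^{-2y} - 2y e^{-y} > 0.\]

Study its slope, \( g'(y) = 2 e^{-2y} - (2e^{-y} - 2y e^{-y}) = 2 e^{-2y} - 2e^{-y} + 2y e^{-y}\), being equal to zero if and only if

\[e^{-y} = 1 - y.\]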

The latter is only fulfilled for \(y=0\), due to the known inequality \(e^y > 1+y\) for \(y \ne 0\), applied to \(-y\). Now, \(y=0\) is not in the domain and hence, g does not change the sign of the slope. It is \(g(\log (2))=0.06\) and \(g(1)= 0.13\), so that g is increasing and positive, due to \(\lim _{y \rightarrow 0} g(y)=0\). Now proceed to observe that from \(x e^{-x/2}< 1 - e^{-x} \Rightarrow x^2 e^{-x} < (1- e^{-x})^2\) follows

and similarly for G instead of s, both for \(i_j=1\).
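The elementary inequality can also be checked numerically. The sketch assumes \(g(y) = 1 - e^{-2y} - 2y e^{-y}\), which is the function whose derivative equals the slope \(g'\) stated above and whose limit at zero vanishes; the grid and its upper end are arbitrary choices, and a grid check is of course no substitute for the proof.

```python
import math


def g(y):
    """Assumed form of g, reconstructed from the stated slope and limit."""
    return 1.0 - math.exp(-2.0 * y) - 2.0 * y * math.exp(-y)


def g_prime(y):
    """Slope of g as stated in the proof."""
    return 2.0 * math.exp(-2.0 * y) - 2.0 * math.exp(-y) + 2.0 * y * math.exp(-y)


grid = [k / 100.0 for k in range(1, 2001)]  # y in (0, 20]

g_positive = all(g(y) > 0.0 for y in grid)
g_increasing = all(g_prime(y) > 0.0 for y in grid)

# The consequence used afterwards: x^2 e^{-x} < (1 - e^{-x})^2 for x > 0.
squared_ok = all(x * x * math.exp(-x) < (1.0 - math.exp(-x)) ** 2 for x in grid)

print(round(g(math.log(2.0)), 2), round(g(1.0), 2))
print(g_positive, g_increasing, squared_ok)
```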

For (iii):

For (iv): For the first equality, note that \(E_{\theta }[\psi _{\theta _0}(\widetilde{X}_j,\widetilde{T}_j)]=\Psi (\theta )\) due to (iii). Further, because of (1), Corollary 2(iii) and Corollary 1, we have:

The three terms add up to \(-\theta _0^2 \Psi (\theta _0)\) of (iii) and adding the coefficients of \(e^{-\theta _0 s}\), \(e^{-\theta _0 \, G}\) and \(e^{-\theta _0 (s+G)}\) (and the constants), we have \(\theta _0^2 \Psi (\theta _0)=0\). Finally, it is \(\theta _0 \ne 0\).

For (v): The main idea of the proof is that in the event of a boundary minimum, the distance from \(\Psi _n(\theta )\) to the \(\theta \)-axis is smaller than to \(\Psi (\theta )\), and that it will converge to the latter. Hence, after surpassing the axis, there will be a zero and \(\Psi _n(\hat{\theta })=0\).

We need to show, stressing the dependence of \(\hat{\theta }\) on n, that:

Denote the ’event’ of a boundary minimum on the left side as (recall the monotonicity of \(\Psi _n(\theta )\) from (ii)), \(A_n := \{\hat{\theta }_n = \varepsilon \} = \{ \Psi _n(\varepsilon ) > 0\}\), and on the right as \(B_n := \{\hat{\theta }_n = 1/\varepsilon \}=\{ \Psi _n(1/\varepsilon ) < 0\}\). Again due to the monotonicity of \(\Psi _n(\theta )\), the events are mutually exclusive, \( A_n \cap B_n = \emptyset \), with the consequence that \(P(A_n \cup B_n) = P(A_n) + P(B_n)\).

Recall that \(\Psi (\theta _0)=0\) (from (iv)). Also, \(\dot{\Psi }(\theta )> 0\) by the same calculation as for \(\dot{\psi }_{\theta }({\tilde{x}}_j,{\tilde{t}}_j)\) in the equality of (6) (for \(({\tilde{x}}_j,{\tilde{t}}_j) \in D\)) in (ii). Hence, for \(\theta _0 \in ]\varepsilon , 1/\varepsilon [\), \(\Psi (\theta )\) is ’away’ from zero at the boundary, i.e. \(-\Psi (\varepsilon ) > 0\) and \(\Psi (1/\varepsilon ) > 0\). Furthermore, in the event of \(A_n\), the distance from \(\Psi _n(\varepsilon )\) to the \(\theta \)-axis is smaller than to (the negative) \(\Psi (\varepsilon )\):

Similarly, in the event of \(B_n\), it is

We have \(|\Psi _n(\hat{\theta }_n)|> \eta \Leftrightarrow \Psi _n(\hat{\theta }_n) \ne 0 \Leftrightarrow \hat{\theta }_n \in \{\varepsilon , 1/\varepsilon \} \Leftrightarrow A_n \cup B_n \Leftrightarrow \{ \Psi _n(\varepsilon ) > 0\} \cup \{ \Psi _n(1/\varepsilon ) < 0\}\) and hence

where the last inequality is due to (19), (20) and the fact that, due to the very beginning of the proof, \(\Psi _n(\theta ) {\mathop {\rightarrow }\limits ^{p}} \Psi (\theta )\) for \(\theta \in \Theta \). \(\square \)

Appendix B: Proof of Theorem 3

First we derive the density of \(\mathcal {L}(N^{*}_n)\) w.r.t. \(\mathcal {L}(N_0)\) to be

and the display (16) results by replacing the true \(\theta _0\) by the generic \(\theta \), inserting \(h_{\theta }\) from (15) and evaluating at the argument (\(\mu \)) as the observation \(n_n^{*}\).

According to Theorem 3.1.1 in Reiss (1993), it suffices to derive the density only on \(\mathbb {M}_k:=\{\mu \in \mathbb {M} : \mu (S) =k \}\).

We obtain by (11) and (12) that \(\mathcal {L}[(X_i,T_i)']=\nu _n^{*}/\nu _n^{*}(S)\), and \(\mathcal {L}[(X^0_i,T^0_i)']=\nu _0/\nu _0(S)\) by (13). Both are mappings \(\mathcal {B} \rightarrow \mathbb {R}_0^+\) related by a density (being a mapping \(S \rightarrow \mathbb {R}_0^+\))

That \(\nu _0(S)\) and \(\nu _n^{*}(S)\) are constants leads to the first equality, the second equality is due to (14) and the third holds by (11) and (13). The product experiment \(\mathcal {L}[(X_i,T_i)']^k\) has \(\mathcal {L}[(X^0_i,T^0_i)']^k\)-density,

Define \(\iota _k: S^k \rightarrow \mathbb {M}_k\) with

so that \(h_{1,k}= f_k \circ \iota _k\) with

and \(\mu =\sum _{i=1}^k \epsilon _{(x_i, t_i)'}\). The seemingly double-used \(h_{1,k}\) represents two different mappings, due to the different domains (\(S^k\) in (22) and \(\mathbb {M}_k\) later). This means that \(f_k\) assigns to the point measure \(\mu \), built on \((x_1,t_1)', \ldots , (x_k,t_k)'\), the same value as \(h_{1,k}\) does to the vector \(((x_1,t_1)', \ldots , (x_k,t_k)')\). Now note that for \(M \in \mathcal {M}_k\) (with \(\mathcal {M}_k\) being the restriction of \(\mathcal {M}\) to \(\mathbb {M}_k\))

It is easiest to start reading the line from the centre, where \(\iota _k^{-1}(M)\) is short for \(\{ \iota _k^{-1}(\mu ), \mu \in M\}\). (Notation to be distinguished from sample size.) Similarly, \(\iota _k [\mathcal {L}(X_i^0,T_i^0)]^k = \mathcal {L}(\sum _{i=1}^k \epsilon _{(X^0_i,T^0_i)'})\). Hence by Lemma 3.1.1 in Reiss (1993), it is \(f_k \in d \mathcal {L}\left( \sum _{i=1}^k \epsilon _{(X_i,T_i)'} \right) /d \mathcal {L}\left( \sum _{i=1}^k \epsilon _{(X^0_i,T^0_i)'}\right) \). For \(M \in \mathcal {M}_k\),

In the first equality, the second condition, \(Z =k\), results from the fact that whatever \(\mu \), it must be in \(\mathcal {M}_k\). For the first condition, the largest index for summation is originally Z, but can be replaced by k due to the second condition. (The order of conditions is irrelevant.) The second equality is due to the independence (see Definitions 2). Similarly by Definitions 3 for \(N_0\):

Hence,

The last equality is due to (23). Now, due to Definitions 2 and 3, (13)(right), (12)(right) and (11) we have

So that

Hence, by the display (24) of the distribution of \(\mathcal {L}(N_n^{*})\), its density is, inserting (22),

for \(\mu = \sum _{i=1}^k \epsilon _{(X_i,T_i)'}\).

Concluding from k to \(\mu (S)\) and inserting the above displays, \(\mathcal {L}(N^{*}_n)\) (or more informally \(N^{*}_n\)) has \(\mathcal {L}(N_0)\)-density (21) (see Reiss 1993, Theorem 3.1.1 and Example 3.1.1). \(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Weißbach, R., Wied, D. Truncating the exponential with a uniform distribution. Stat Papers 63, 1247–1270 (2022). https://doi.org/10.1007/s00362-021-01272-x

DOI: https://doi.org/10.1007/s00362-021-01272-x