Abstract

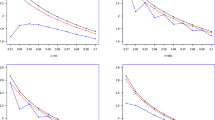

Conditional Value-at-Risk (CVaR) is an increasingly popular coherent risk measure in financial risk management. In this paper, a new nonparametric kernel estimator of CVaR is established, and a Bahadur type expansion of the estimator is given under \(\alpha \)-mixing sequences. Furthermore, the mean, variance, mean square error (MSE) and uniformly asymptotic normality of the new estimator are discussed, and optimal bandwidths are obtained. To better illustrate the performance of the new CVaR estimator, we conduct numerical simulations under some \(\alpha \)-mixing sequences and a GARCH model, and find that the new CVaR estimator is smoother and more accurate than estimators proposed by other scholars, because its bias and MSE are smaller. Finally, we use the new estimator to analyze the daily log-loss of real financial series.
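To make the idea of a kernel-based CVaR estimate concrete, the following is a minimal sketch and not necessarily the estimator established in this paper. It assumes a generic two-step plug-in: a kernel-smoothed VaR obtained by inverting a Gaussian-kernel distribution estimate at level \(1-p\), followed by the tail average \(v+p^{-1}n^{-1}\sum _i[X_i-v]^+\). The function names, the AR(1) test series and the bandwidth \(h\) are all hypothetical choices made for illustration.

```python
import numpy as np
from math import erf, sqrt

# Integrated Gaussian kernel (standard normal distribution function).
_phi = np.vectorize(lambda t: 0.5 * (1.0 + erf(t / sqrt(2.0))))


def smoothed_cdf(x, data, h):
    """Kernel-smoothed distribution function estimate at the point x."""
    return float(np.mean(_phi((x - data) / h)))


def kernel_var(data, p, h, tol=1e-10):
    """Solve smoothed_cdf(v) = 1 - p by bisection (p is the upper-tail level)."""
    lo, hi = data.min() - 10.0 * h, data.max() + 10.0 * h
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if smoothed_cdf(mid, data, h) < 1.0 - p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def cvar_plugin(data, p, h):
    """Plug-in CVaR: v + mean of the exceedances [X_i - v]^+ divided by p."""
    v = kernel_var(data, p, h)
    return v + np.mean(np.maximum(data - v, 0.0)) / p


def simulate_ar1(n, phi=0.5, seed=0, burn=500):
    """Gaussian AR(1) series, used here only as a simple dependent test sequence."""
    rng = np.random.default_rng(seed)
    e = rng.standard_normal(n + burn)
    x = np.empty(n + burn)
    x[0] = e[0]
    for t in range(1, n + burn):
        x[t] = phi * x[t - 1] + e[t]
    return x[burn:]


losses = simulate_ar1(5000, phi=0.5, seed=1)
h = losses.std() * len(losses) ** (-1.0 / 3.0)  # illustrative bandwidth only
print("VaR estimate :", kernel_var(losses, 0.05, h))
print("CVaR estimate:", cvar_plugin(losses, 0.05, h))
```

For this Gaussian AR(1) series the stationary distribution is \(N(0,4/3)\), so the estimates can be compared with the exact CVaR at \(p=0.05\), which is roughly 2.38.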


References

Acerbi C, Tasche D (2002) On the coherence of expected shortfall. J Bank Finance 26(7):1487–1503

Artzner P, Delbaen F, Eber J-M, Heath D (1999) Coherent measures of risk. Math Finance 9(3):203–228

Ben-Tal A, Teboulle M (1986) Expected utility, penalty functions and duality in stochastic nonlinear programming. Manag Sci 32(11):1445–1466

Ben-Tal A, Teboulle M (1987) Penalty functions and duality in stochastic programming via \(\phi \)-divergence functionals. Math Oper Res 12(2):224–240

Ben-Tal A, Teboulle M (2007) An old-new concept of convex risk measure: the optimized certainty equivalent. Math Finance 17(3):449–476

Bodnar T, Schmid W, Zabolotskyy T (2013) Asymptotic behavior of the estimated weights and of the estimated performance measures of the minimum VaR and the minimum CVaR optimal portfolios for dependent data. Metrika 76(8):1105–1134

Cai ZW, Wang X (2008) Nonparametric estimation of conditional VaR and expected shortfall. J Econom 147(1):120–130

Chen SX, Tang CY (2005) Nonparametric inference of value-at-risk for dependent financial returns. J Financ Econom 3(2):227–255

Chen SX (2008) Nonparametric estimation of expected shortfall. J Financ Econom 6(1):87–107

David BB (2007) Large deviations bounds for estimating conditional value-at-risk. Oper Res Lett 35(6):722–730

Follmer H, Schied A (2002) Convex measures of risk and trading constraints. Finance Stoch 6(4):429–447

Gourieroux C, Laurent JP, Scaillet O (2000) Sensitivity analysis of values at risk. J Empir Finance 7(3–4):225–245

Kato K (2012) Weighted Nadaraya–Watson estimation of conditional expected shortfall. J Financ Econom 10(2):265–291

Leorato S, Peracchi F, Tanase AV (2012) Asymptotically efficient estimation of the conditional expected shortfall. Comput Stat Data Anal 56(4):768–784

Liu J (2008) Two-step kernel estimation of expected shortfall for \(\alpha \)-mixing time series. Dissertation, Guangxi Normal University

Liu J (2009) Nonparametric estimation of expected shortfall. Chin J Eng Math 26(4):577–585

Luo ZD, Ou SD (2017) The almost sure convergence rate of the estimator of optimized certainty equivalent risk measure under \(\alpha \)-mixing sequences. Commun Stat Theory Methods 46(16):8166–8177

Luo ZD, Yang SC (2013) The asymptotic properties of CVaR estimator under \(\rho \) mixing sequences. Acta Mathematica Sinica (Chinese Series) 56(6):851–870

Mokkadem A (1988) Mixing properties of ARMA processes. Stoch Process Appl 29(2):309–315

Pavlikov K, Uryasev S (2014) CVaR norm and applications in optimization. Optim Lett 8(7):1999–2020

Peracchi F, Tanase AV (2008) On estimating the conditional expected shortfall. Appl Stoch Models Bus Ind 24(5):471–493

Pflug GC (2000) Some remarks on the value-at-risk and the conditional value-at-risk. In: Uryasev S (ed) Probabilistic constrained optimization: methodology and applications. Kluwer Academic Publishers, Dordrecht, pp 272–277

Rockafellar RT, Uryasev S (2000) Optimization of conditional value at risk. J Risk 2(3):21–41

Roussas GG, Ioannides DA (1987) Moment inequalities for mixing sequences of random variables. Stoch Anal Appl 5(1):60–120

Rueda M, Arcos A (2004) Improving ratio-type quantile estimates in a finite population. Stat Pap 45(2):231–248

Scaillet O (2004) Nonparametric estimation and sensitivity analysis of expected shortfall. Math Finance 14(1):115–129

Shao QM (1990) Exponential inequalities for dependent random variables. Acta Mathematicae Applicatae Sinica (English Series) 6(4):338–350

Takeda A, Kanamori T (2009) A robust optimization approach based on conditional value-at-risk measure and its applications to statistical learning problems. Eur J Oper Res 198(1):287–296

Trindade AA, Uryasev S, Shapiro A, Zrazhevsky G (2007) Financial prediction with constrained tail risk. J Bank Finance 31(11):3524–3538

Wang L (2010) Kernel type smoothed quantile estimation under long memory. Stat Pap 51(1):57–67

Yang SC (2000) Moment bounds for strong mixing sequences and their application. J Math Res Expo 20(3):349–359

Yang SC, Li YM (2006) Uniformly asymptotic normality of the regression weighted estimator for strong mixing samples. Acta Mathematica Sinica (Chinese Series) 49(5):1163–1170

Yang SC (2003) Uniformly asymptotic normality of the regression weighted estimator for negatively associated samples. Stat Probab Lett 62(2):101–110

Yu KM, Ally AK, Yang SC et al (2010) Kernel quantile-based estimation of expected shortfall. J Risk 12(4):15–32

Zhang Q, Yang W, Hu S (2014) On Bahadur representation for sample quantiles under \(\alpha \)-mixing sequence. Stat Pap 55(2):285–299

Acknowledgements

The research was financially supported by Guangxi Natural Science Foundation under Grant No. 2016GXNSFBA380069 and the Science and Technology Research Project of Guangxi Higher Education Institutions under Grant No. YB2014390. I also thank the anonymous referees for their valuable comments and suggestions.

Appendices

Appendix A: Related lemmas and proofs of Theorems 1–3

1.1 Related lemmas of Theorem 1

Lemma 1

(Shao 1990) Suppose \(\{X_n, n\ge 1\}\) is a stationary \(\alpha \)-mixing sequence with \(EX_1=0\), \(E|X_1|^r<\infty \) for some \(r>2\), and

then,

where \(S_n=\sum _{t=1}^{n}X_t\).

Denote

Lemma 2

If the Assumptions 1–4 are satisfied, then

Proof

Denote \(Z_i=I(X_i\le x)-EI(X_i\le x)\). Note \(EI(X_i\le x)=F(x)\), then \(F_n(x)-F(x)=n^{-1}\sum _{i=1}^{n}Z_i\), and it is clear that \(E|Z_i|^{2+\delta }\le 1\). Moreover, since \(\alpha (n)=O(n^{-\lambda })\) for \(\lambda >(2+\delta )/\delta \), let \(\varepsilon =\lambda -(2+\delta )/\delta >0\), then

From Lemma 1, we have

\(\square \)
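The identity \(F_n(x)-F(x)=n^{-1}\sum _{i=1}^{n}Z_i\) used in the proof can be checked numerically. The sketch below is only an illustration: it assumes a stationary Gaussian AR(1) series as a convenient dependent sequence for which \(F\) is known in closed form, and the function names are hypothetical. It also prints the deviation relative to \(n^{-1/2}\log n\), the rate quoted for \(F_n(x)-F(x)\) in the proof of Lemma 6.

```python
import numpy as np
from math import erf, sqrt, log


def simulate_ar1(n, phi=0.5, seed=0, burn=500):
    """Gaussian AR(1) series; the burn-in makes the start effectively stationary."""
    rng = np.random.default_rng(seed)
    e = rng.standard_normal(n + burn)
    x = np.empty(n + burn)
    x[0] = e[0]
    for t in range(1, n + burn):
        x[t] = phi * x[t - 1] + e[t]
    return x[burn:]


def edf_deviation(sample, x, F_x):
    """Return F_n(x) - F(x) computed as the average of Z_i = I(X_i <= x) - F(x)."""
    z = (sample <= x).astype(float) - F_x
    return float(z.mean())


phi = 0.5
sigma = 1.0 / sqrt(1.0 - phi ** 2)          # stationary standard deviation
x0 = 1.0
F_x0 = 0.5 * (1.0 + erf(x0 / (sigma * sqrt(2.0))))  # exact F(x0)

for n in (1000, 10000, 50000):
    dev = edf_deviation(simulate_ar1(n, phi, seed=n), x0, F_x0)
    # The ratio is expected to be small if the deviation is o(n^{-1/2} log n).
    print(n, dev, abs(dev) / (n ** -0.5 * log(n)))
```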

Lemma 3

(Liu 2008) If the Assumptions 1–4 are satisfied, then

Lemma 4

(Liu 2008) Suppose Assumptions 1–4 are satisfied. Denote \(b_K=\int _{-\infty }^{+\infty }uK(u)G(u)du\) and \(\sigma ^2_K=\int _{-\infty }^{+\infty }u^2K(u)du\), and let \(f(\cdot )\) be the probability density function of \(X_i\). Then

Let \(MSE(\hat{v}_{p,h})=E(\hat{v}_{p,h}-{v}_{p})^2\) be the mean square error (MSE) of \(\hat{v}_{p,h}\). Then

Lemma 5

Suppose the Assumptions 1–4 are satisfied, then

Proof

Note \(\hat{v}_{p,h}-v_p=o(n^{-1/2}\log n)\) a.s. and \(F_n(x)=F(x)+O(n^{-1/2}(\log \log n)^{1/2})\), then there exists \(0<\theta <1\) such that

Then, from Formulas (A.3) and (A.4), it is clear that

Moreover,

Since

Then,

It implies that

Combining Formulas (A.5), (A.6) and (A.7), we have

\(\square \)

Lemma 6

Suppose the Assumptions 1–4 are satisfied, then

Proof

Note \(\hat{v}_{p,h}-v_p=O(n^{-1/2}\log n)\) a.s. and \(F_n(x)=F(x)+o(n^{-1/2}\log n)\), then in the Eq. (A.3)

From Formula (A.3), we have

Moreover,

then

Then, \(J_{21}\) of Formula (A.6) satisfies

Combining Formulas (A.3), (A.6), (A.8) and (A.9), we have

\(\square \)

From Lemma 5, Theorems 1 and 2 can be easily proved as follows.

1.2 Proof of Theorem 1

Proof

Since \({\hat{v}}_{p,h}-{v}_{p}=o(n^{-1/2}\log n),\ a.s.\), then \(({\hat{v}}_{p,h}-{v}_{p})\cdot o(n^{-1/2}\log n)=o(n^{-1}\log ^2 n),\ a.s.\) So, from Lemma 5 we have

\(\square \)

1.3 Proof of Theorem 2

Proof

From Lemma 4, we know that \(E(\hat{v}_{p,h}-v_p)=O(h^2)\). Moreover, using the result of Lemma 5, we have

Besides, the formula \(Var(\hat{\mu }_{p,h})=p^{-2}n^{-1}\sigma ^2_0(p; n)+o(n^{-2}\log ^4n)\) follows directly from Theorem 1. Therefore,

\(\square \)

1.4 Proof of Theorem 3

Proof

From Lemma 4, we know that \(E(\hat{v}_{p,h}-v_p)=O(h^2)\). Utilizing the result of Lemma 6, we have

It means that

So, Theorem 3 holds. \(\square \)

Appendix B: Related lemmas and proof of Theorem 4

In this section, we give some necessary lemmas and the proof of Theorem 4.

1.1 Related lemmas of Theorem 4

Lemma 7

(Roussas and Ioannides 1987) Let \(\{X_i:i\ge 1\}\) be a sequence of \(\alpha \)-mixing random variables. Suppose that \(\xi \) and \(\eta \) are \({\mathcal {F}}_{1}^{k}\)-measurable and \({\mathcal {F}}_{k+n}^{\infty }\)-measurable random variables, respectively. If \(E|\xi |^s<\infty \), \(E|\eta |^t<\infty \ \ a.s.\), and \(1/s+1/t+1/q=1\), then

where, \(\Vert Y\Vert _r:=(E|Y|^r)^{1/r}.\)

Note 1

There exists a positive number C such that \(|\sigma ^2_0(p; n)|<C\) for any \(n\ge 1\). Indeed, letting \(q=(2+\delta )/\delta \) and \(s=t=(2+\delta )\) in Lemma 7, from Assumption 1 we have

Lemma 8

(Yang 2000) Let \(\{X_j : j\ge 1\}\) be a sequence of \(\alpha \)-mixing random variables with zero mean.

- (i) If \(E|X_j|^{2+\delta }<\infty \) for \(\delta >0\), then

$$\begin{aligned} E\left( \sum _{j=1}^{n}X_j\right) ^2\le \left( 1+20\sum _{m=1}^n\alpha ^{\delta /(2+\delta )}(m)\right) \sum _{j=1}^n\Vert X_j\Vert _{2+\delta }^2. \end{aligned}$$

- (ii) If \(E|X_j|^{r+\tau }<\infty \) and \(\alpha (n)=O(n^{-\lambda })\) for \(r>2\), \(\tau >0\) and \(\lambda >r(r+\tau )/(2\tau )\), then, for given \(\varepsilon >0\), there exists a positive constant \(C=C(r,\tau ,\lambda ,\varepsilon )\) which doesn’t depend on n such that

$$\begin{aligned} E\left| \sum _{j=1}^{n}X_j\right| ^r\le C\left\{ n^{\varepsilon }\sum _{j=1}^nE|X_j|^r+\left( \sum _{j=1}^n\Vert X_j\Vert _{r+\tau }^2\right) ^{r/2}\right\} . \end{aligned}$$
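The constant 20 in part (i) can be traced back to a covariance bound of the kind stated in Lemma 7. The following is only a sketch, under the assumption that this bound takes its commonly quoted form \(|E\xi \eta -E\xi E\eta |\le 10\,\alpha ^{1/q}(n)\Vert \xi \Vert _s\Vert \eta \Vert _t\); the constant 10 and the shorthand \(a_j:=\Vert X_j\Vert _{2+\delta }\) are assumptions introduced here for illustration. Taking \(s=t=2+\delta \) and \(q=(2+\delta )/\delta \), and using \(EX_j=0\), \(EX_j^2\le a_j^2\) and \(a_ia_j\le (a_i^2+a_j^2)/2\), one obtains

$$\begin{aligned} E\left( \sum _{j=1}^{n}X_j\right) ^2&=\sum _{j=1}^{n}EX_j^2+2\sum _{1\le i<j\le n}EX_iX_j\\&\le \sum _{j=1}^{n}a_j^2+20\sum _{1\le i<j\le n}\alpha ^{\delta /(2+\delta )}(j-i)\,a_ia_j\\&\le \sum _{j=1}^{n}a_j^2+10\sum _{m=1}^{n-1}\alpha ^{\delta /(2+\delta )}(m)\sum _{i=1}^{n-m}\left( a_i^2+a_{i+m}^2\right) \\&\le \left( 1+20\sum _{m=1}^{n}\alpha ^{\delta /(2+\delta )}(m)\right) \sum _{j=1}^{n}a_j^2. \end{aligned}$$

The last step uses \(\sum _{i=1}^{n-m}(a_i^2+a_{i+m}^2)\le 2\sum _{j=1}^{n}a_j^2\) for each lag \(m\).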

Denote \(Y_{i}:={n^{-1/2}}\sigma _{0}^{-1}(p, n)\{[X_i-v_p]^+ -E[X_i-v_p]^+\}\), \(i=1,2,\ldots ,n\), and \(S_n:=\sum _{i=1}^{n}Y_{i}\), then

First, we prove that \(S_n\) is uniformly asymptotically normal.

Let \(k=\lfloor n/(p_1+p_2)\rfloor \), then

where,

\(k_m=(m-1)(p_1+p_2)+1,\ l_m=(m-1)(p_1+p_2)+p_1+1,\ m=1,\ldots ,k.\)
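The index formulas for \(k_m\) and \(l_m\) describe a big-block/small-block partition of \(\{1,\ldots ,n\}\). The sketch below lists the blocks explicitly. It assumes the usual Bernstein blocking layout: big block \(m\) covers the \(p_1\) indices starting at \(k_m\), small block \(m\) covers the \(p_2\) indices starting at \(l_m\), \(m\) runs over \(1,\ldots ,k\), and the remaining indices after \(k(p_1+p_2)\) form a leftover block. The function name and return layout are hypothetical.

```python
def bernstein_blocks(n, p1, p2):
    """Split 1..n into k big blocks (length p1), k small blocks (length p2) and a remainder."""
    k = n // (p1 + p2)
    big, small = [], []
    for m in range(1, k + 1):
        k_m = (m - 1) * (p1 + p2) + 1        # first index of big block m
        l_m = (m - 1) * (p1 + p2) + p1 + 1   # first index of small block m
        big.append(list(range(k_m, k_m + p1)))
        small.append(list(range(l_m, l_m + p2)))
    remainder = list(range(k * (p1 + p2) + 1, n + 1))  # fewer than p1 + p2 indices
    return big, small, remainder


big, small, remainder = bernstein_blocks(n=23, p1=5, p2=2)
print("big blocks  :", big)
print("small blocks:", small)
print("remainder   :", remainder)
```

With \(n=23\), \(p_1=5\) and \(p_2=2\) this gives \(k=3\), big blocks starting at 1, 8 and 15, small blocks starting at 6, 13 and 20, and the remainder \(\{22,23\}\).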

Lemma 9

Suppose Assumptions 1–5 are satisfied, then

Proof

From Lemma 8(i), Assumption 1, Formula (3.1) and Note 1, we know

On the other hand, \(n-k(p_1+p_2)<(p_1+p_2)\), then

So Formula (B.3) holds. Moreover, Formula (B.4) follows by combining the Markov inequality with Formula (B.3). \(\square \)

Denote \(s_n^2:=\sum _{m=1}^k Var(y_{nm})\). We can obtain an inequality for \(s_n^2\) as follows.

Lemma 10

Suppose Assumptions 1–5 are satisfied, then

Proof

Note \(E|S_n|^2=Var(S_n)=1\), and

Then, using Lemma 9, we have

Moreover,

Combining Formula (B.5) with (B.6), we know Lemma 10 holds. \(\square \)

Suppose \(\{\xi _{nm}:m=1,2,\ldots ,k\}\) is a sequence of independent random variables such that \(\xi _{nm}\) and \(y_{nm}\) are identically distributed for each \(m=1,2,\ldots ,k\). Let \(T_n=\sum _{m=1}^k \xi _{nm}, D_n=\sum _{m=1}^k Var(\xi _{nm})\), and denote by \(H_n(u)\) and \(\tilde{H}_n(u)\) the distribution functions of \(T_n/\sqrt{D_n}\) and \(T_n\), respectively. Obviously,

Lemma 11

Suppose Assumptions 1–5 are satisfied, then

Proof

Let \(r=2+2\rho \) and \(\tau =\delta -2\rho \). From Formula (3.4), we have \(0<2\rho <\delta \), then \(\tau =\delta -2\rho >0\), moreover,

Using Lemma 8(ii) and taking \(\varepsilon =\rho \), we have

And using Lemma 10, we know \(D_n^2=s_n^2\rightarrow 1\), hence

Utilizing Berry–Esseen theorem, we obtain Formula (B.7). \(\square \)

Lemma 12

(Yang 2003) Suppose \(\{\xi _n:n\ge 1\}\) and \(\{\eta _n:n\ge 1\}\) are two sequences of random variables, and the sequence of positive constants \(\{\gamma _n:n\ge 1\}\) satisfies \(\gamma _n\rightarrow 0\) as \(n\rightarrow \infty \). If

then for any \(\epsilon >0\),

1.2 Proof of Theorem 4

Proof

Using proof methods similar to those of Yang and Li (2006, Theorem 2.1 and Lemma 4.4), we easily obtain

Moreover, applying Lemma 12 with \(\epsilon =n^{-1/4}\log n\), together with Formulas (B.1) and (B.8), we conclude that Theorem 4 holds. \(\square \)

1.3 Proof of Corollary 2

The proof of this corollary is similar to that of Yang and Li (2006, Corollary 2.3).

Luo, Z. Nonparametric kernel estimation of CVaR under \(\alpha \)-mixing sequences. Stat Papers 61, 615–643 (2020). https://doi.org/10.1007/s00362-017-0952-2

DOI: https://doi.org/10.1007/s00362-017-0952-2

Keywords

- Nonparametric kernel estimator

- CVaR

- \(\alpha \)-Mixing sequence

- Bahadur type expansion

- Uniformly asymptotic normality

- MSE

- Optimal bandwidth