Abstract

The paper addresses a shortcoming of current event-based vision (EBV) sensors in the context of particle imaging. Latency is introduced both at the pixel level and during read-out from the array, and results in systematic timing errors when processing the recorded event data. Using pulsed illumination, the overall latency can be quantified; it indicates an upper bound on the frequency response on the order of 10–20 kHz for the specific EBV sensor. In particle-based flow measurement applications, particles scattering the light from a pulsed light source operating below this upper frequency can be reliably tracked in time. Through the combination of event-based vision and pulsed illumination, flow field measurements are demonstrated at light pulsing rates up to 10 kHz in both water and air flows, including turbulence statistics and velocity spectra. The described EBV-based velocimetry system consists of only an EBV camera and a (low-cost) laser that can be directly modulated by the camera, making the system compact, portable and cost-effective.

1 Introduction

Event-based vision (EBV), also referred to as dynamic vision sensing (DVS) or neuromorphic imaging, constitutes a new approach to motion-related imaging. Unlike conventional frame-based image sensors, an EBV sensor only records intensity changes, that is, contrast change events, at the pixel level. As each pixel operates on its own, the resulting event data stream is completely asynchronous, ranging from near silence for static scenes to considerable data rates for scenes with highly dynamic content. Consequently, the associated event-data processing requires a partial departure from the established concepts of frame-based image processing.

The underlying concepts were originally proposed by Carver Mead and Misha Mahowald (Mahowald 1992) in the early 1990s and aimed at mimicking the functionality of the retina, hence the name “silicon retina” in their work. By the late 2000s the technology had matured enough to yield usable prototype cameras based on event-based sensors with pixel arrays on the order of 128\(\times\)128 elements (Lichtsteiner et al. 2008). More recently, several companies have introduced fully integrated, commercial-grade event cameras with detector arrays of up to 1 MPixel (Finateu et al. 2020). This improved accessibility of event cameras has greatly expanded the range of event-based vision applications and has made the present study possible. Comprehensive overviews of the current status of event-based vision, its range of applications and the associated data processing are provided by Gallego et al. (2022) as well as Tayarani-Najaran and Schmuker (2021). Beyond this, a GitHub repository maintained by the Robotics and Perception Group of the University of Zurich provides an extensive collection of literature and resources on the subject (Robotics and Perception Group 2022).

While the number of applications for EBV/DVS is continually increasing, the temporal delay between the contrast detection (CD) event and the actual time assigned to it by the detector hardware has been identified as one of the more important shortcomings (Bouvier 2021). This temporal delay is generally referred to as latency, with data sheets and literature quoting values on the order of 3 to 200 \(\upmu\)s (see Table 1 in Gallego et al. 2022). In practice, the event camera’s latency may be even higher and depends on lighting conditions, various adjustable detector parameters (biases) and the number of events per unit time. As a consequence, any data processing relying on the actual event time will be affected by temporal uncertainty. In the case of particle-based fluid flow measurement, the particle velocity estimate will have an uncertainty that is directly related to the uncertainty of the event time stamps.

In the following, the working principles of event-based imaging are briefly outlined along with a description of the different aspects contributing to the latency, followed by measurements of the latency of the hardware used here. The second part describes the use of pulsed illumination to obtain more precise information on the particle-produced events. The pulsed illumination event-based imaging concept (pulsed-EBIV) is demonstrated on simple water and air flows covering a wide range of flow dynamics. Along with comparative PIV measurements, the statistical and spectral analysis of the processed EBIV data allows an assessment of the overall measurement uncertainty of the proposed technique. The material presented herein extends a recent publication on the subject (Willert and Klinner 2022a).

2 Event sensor operation and signal processing

Each EBV pixel consists of a photodiode with an adjoining contrast change detection unit and a logical unit (Fig. 1). Photons collected by the photodiode induce a photocurrent that is converted to a voltage. The logarithm of this voltage is supplied to the contrast detection unit, allowing it to trigger contrast detection (CD) events at a fixed relative contrast threshold, typically on the order of 10–25%. A comparator unit distinguishes between positive and negative intensity change events, where positive refers to an intensity change from dark to bright (“On” event) and negative indicates a reduction of intensity (“Off” event). Once a pixel detects a positive or negative event, the logical unit sends a request for acknowledgment to the detector’s event monitors, termed arbiters, which then register the event and transmit its time stamp \(t_i\), polarity \(p_i\) and pixel coordinates \((x_i,y_i)\) to the output data stream. After a pending event has been acknowledged, the logical unit resets the detector part of the pixel, allowing a further event to be captured.
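The contrast-detection principle described above can be illustrated with an idealized pixel model. The following Python sketch is a deliberate simplification (not the sensor circuit and not a vendor API): it compares the log-intensity with a stored reference and emits one On (+1) or Off (−1) event per threshold crossing, moving the reference by one threshold step each time. The threshold is expressed in log units; a relative contrast of 10–25% corresponds to roughly \(\ln(1.1)\) to \(\ln(1.25)\), i.e., about 0.1–0.22. Latency, jitter, the refractory period and arbitration are ignored.

```python
import math
from typing import List, Tuple


def contrast_events(times: List[float], intensities: List[float],
                    threshold: float = math.log(1.2)) -> List[Tuple[float, int]]:
    """Idealized CD pixel: (time, polarity) events from sampled intensities.

    Assumption: the reference log-intensity moves by exactly one threshold
    step per emitted event, so a large change yields several events that
    share the same time stamp.
    """
    events: List[Tuple[float, int]] = []
    ref = math.log(intensities[0])          # reference set at the initial reset
    for t, intensity in zip(times[1:], intensities[1:]):
        diff = math.log(intensity) - ref
        while diff >= threshold:            # brightening: On events
            events.append((t, +1))
            ref += threshold
            diff = math.log(intensity) - ref
        while diff <= -threshold:           # darkening: Off events
            events.append((t, -1))
            ref -= threshold
            diff = math.log(intensity) - ref
    return events


# A brief flash: two On events at t=1, two Off events at t=2 (threshold 0.25)
print(contrast_events([0.0, 1.0, 2.0], [1.0, math.exp(0.6), 1.0], threshold=0.25))
```

Changes smaller than the threshold, such as a 10% increase at a 0.25 log-unit threshold, produce no event at all, which is why slowly varying scenes stay quiet.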

The asynchronous readout procedure is outlined in Fig. 2: an event-triggered pixel at position (x, y) sends out both a row request (RR) and a column request (CR). The requests are acknowledged (RA, CA) in succession by the respective row and column arbiters. Once the event is fully acknowledged, its coordinates, polarity and time stamp are forwarded to the output stream. Actual implementations differ between devices. For instance, the Gen4 device by Prophesee uses only a single arbiter and assigns pending events from the same row the same time stamp (Finateu et al. 2020). The “first-come-first-served” concept of current event-sensor readout architectures is one of the primary sources of the system-inherent latency.

The sensitivity of the detector array in triggering positive or negative CD events is controlled through a number of biases, which can be tuned by the user to suit a given application. The most relevant biases for the present application were found to be the positive and negative detection thresholds along with the refractory period, which controls the dead time of a given pixel after registering a CD event. A systematic study of the influence of the biases on event generation and the associated latency is challenging in itself, not only because of the large parameter space, but also because the lighting conditions and the motion of the imaged object(s) contribute. A detailed overview of event generation and of measurement schemes to determine the latency is provided by Joubert et al. (2019).

Fig. 2: Simplified schematic of the asynchronous readout system of an EBV sensor (following Fig. II.12 in Bouvier 2021). An event-triggered pixel at (x, y) sends row and column requests (RR, CR) that are acknowledged (RA, CA) by the arbiters.

2.1 Limitations of EBV sensors

Within the context of accurate event capture, the latency, that is, the time between the contrast change appearing on the pixel and its actual acknowledgment by the event processing unit, is the most relevant limitation. The total latency is a combination of the pixel latency and the system latency, the first of which is the delay of the pixel itself in responding to a contrast change appearing on its photodiode. The pixel’s sub-units outlined in Fig. 1 all have inherent response characteristics that contribute to the pixel latency. In addition, the response characteristics differ between pixels and result in temporal jitter, that is, pixels respond differently to otherwise identical lighting conditions.

With an increasing number of CD events per unit time, the arbiters begin to saturate and can no longer respond promptly to pending event requests. This results in an additional latency between the actual occurrence of an event and the time assigned to it, which may reach several hundred \(\upmu\)s. How event overload manifests itself depends on the sensor architecture, and handling it is one of the primary challenges in low-latency event-sensor design. For the hardware used here, arbiter saturation results in horizontal stripes of CD events that are all assigned the same event time (see Fig. 3). The effect can be monitored with histograms of the event arrival times. The event rate can be tuned to avoid arbiter overload by changing the light intensity (here, laser power and/or lens aperture) or by adjusting the particle seeding density. For the presently used Gen4 HD sensor (Prophesee EVK2-HD, \(1280 \!\times \! 720\) pixels), rates of up to 50–60 events/(pixel \(\cdot\) s) were found to produce useful particle event data. Alternatively, the region of interest (ROI) on the sensor, that is, the number of pixels being monitored, can be reduced to increase the event count per pixel, as the arbiters can process a reduced number of pixels more efficiently.
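As a rough screening aid, the per-pixel event rate of a recording can be compared with the 50–60 events/(pixel·s) found usable above. The helper names below are hypothetical and the calculation is plain arithmetic; it does not replace inspecting histograms of the event times, which remain the actual indicator of arbiter saturation.

```python
def event_rate_per_pixel(n_events: float, width: int, height: int,
                         duration_s: float) -> float:
    """Mean event rate over the active ROI in events/(pixel*s)."""
    return n_events / (width * height * duration_s)


def total_rate_for(width: int, height: int, ev_per_pixel_s: float = 50.0) -> float:
    """Total event rate (events/s) implied by a per-pixel rate on a given ROI."""
    return ev_per_pixel_s * width * height


# Hypothetical screening against the 50-60 events/(pixel*s) guideline
rate = event_rate_per_pixel(46_080_000, 1280, 720, 1.0)
print(f"{rate:.1f} events/(pixel*s)", "OK" if rate <= 60.0 else "check histograms")
```

For the full \(1280 \times 720\) array, 50 events/(pixel·s) corresponds to about \(46 \cdot 10^6\) events/s in total, whereas the same per-pixel rate on a \(1280 \times 160\) ROI amounts to roughly \(10 \cdot 10^6\) events/s.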

Fig. 3: Example of the loss of event timing due to saturation of the event-monitoring units (arbiters) within the sensor itself. The pseudo-image contains 2 ms of CD events acquired with a continuously operating laser in the wake of a circular cylinder in a small wind tunnel. The event data rate is about \(60\!\cdot \! 10^6\) events/s.

3 Event-based imaging with pulsed illumination

The combination of event-based imaging with pulsed illumination is not new and has already found several applications in the area of 3D reconstruction. Using a pulsed laser line at frequencies in the 100–500 Hz range, Brandli et al. (2014) reconstructed 3D surfaces from the events produced by the projected line. Huang et al. (2021) used a high-speed digital light projector to produce a blinking pattern at 1000 Hz that is imaged by an event camera to reconstruct 3D surfaces. By projecting a grid of laser dots onto surfaces, Muglikar et al. (2021) reconstruct 3D surfaces and state that scanning illumination at 60 Hz maximizes the spatiotemporal consistency, yielding considerably improved depth estimates. Event-based imaging in combination with two triangularly modulated lasers of different wavelengths is used for event-based bispectral photometry to perform surface reconstruction in water, making use of the wavelength-dependent absorption of the medium (Takatani et al. 2016). In this case, modulation frequencies of 1–120 Hz are applied.

In the present study, pulsed illumination of a flow seeded with light scattering particles intends to address several issues at the same time:

- With continuous illumination, stationary or slowly moving particles produce no or very few events. Light pulses make these particles visible.

- The number of events generated by a moving particle decreases as its image velocity on the sensor increases, simply because the respective pixels cannot collect a sufficient number of photoelectrons as the particle image passes by. With pulsed illumination, the position of the particles is essentially “frozen” in time until the next light pulse illuminates them at a different position.

- Events generated by pulses of light can be directly associated with the timing of the preceding light pulse, which mitigates the latency issues described in the previous section.

The concept of event-based imaging with pulsed illumination is outlined in Fig. 4. Due to latency, the events appear only after a light pulse illuminates the scene (or particle). If the latency is shorter than the pulsing interval, the events can be uniquely associated with the preceding light pulse, thereby mitigating the uncertainty introduced by the camera’s event-detection latency. When the latency exceeds the pulsing interval, events from a given light pulse will appear in the following pulsing interval(s). This overlap inevitably results in timing ambiguities.
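The association rule of Fig. 4 can be written down directly. The sketch below assumes that the pulse start times \(t_0 + k T\) are known (recorded or estimated) and that an upper bound on the event latency, `max_latency_us`, is available, for instance from histogram measurements such as those in Sect. 3.1. The function name and the rejection strategy are illustrative assumptions; times are in microseconds.

```python
import math
from typing import Optional


def assign_to_pulse(t_event_us: float, t0_us: float, period_us: float,
                    max_latency_us: float) -> Optional[int]:
    """Index k of the light pulse (starting at t0 + k*period) that triggered an event.

    The event is attributed to the most recent pulse start; events arriving
    later than max_latency_us after that pulse are rejected (None).
    """
    if max_latency_us >= period_us:
        # latency reaches into the next interval: the association is ambiguous
        raise ValueError("max_latency_us must be shorter than the pulsing interval")
    k = math.floor((t_event_us - t0_us) / period_us)
    delay = t_event_us - (t0_us + k * period_us)
    return k if delay <= max_latency_us else None


# 5 kHz pulsing (200 us period) with an assumed latency bound of 150 us
print(assign_to_pulse(320.0, 0.0, 200.0, 150.0))   # 1
print(assign_to_pulse(380.0, 0.0, 200.0, 150.0))   # None
```

Raising an error when the latency bound reaches the pulsing interval mirrors the ambiguity described above: beyond that point no unique assignment exists.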

3.1 Event latency measurements

The combined latency, consisting of the contributions of both the on-pixel delay and the latency introduced by the arbiters, is estimated by illuminating a particle-seeded fluid flow with a pulsed light source. The light pulses clearly define the time intervals during which the detector should produce events and should be sufficiently short to keep particle streaking minimal. In the present case, a small laser with pulse width modulation (PWM) is used to produce light pulses with a duty cycle of 1–10% at frequencies of 500 Hz \(< f_p <20\) kHz. The low-cost laser (NEJE Tool E30130) was originally designed for laser cutting and engraving. It has a mean output power of 5.2 W at a wavelength of 450 nm with a full width at half maximum (FWHM) spectral width of 2 nm, as measured with a spectrometer (Ocean Optics USB2000+, 0.5 nm resolution). The light pulses emitted by the laser have rise and fall times of about 3 \(\upmu\)s (see Fig. 5). For pulse widths \(\tau _p \!>\! 5\,\upmu\)s, the light pulses exhibit a sawtooth modulation of about 15% of the maximum pulse power at a modulation frequency of about 50 kHz. The pulsing frequency has no effect on the shape or amplitude of the emitted laser pulse. The finite pulse width may have to be taken into consideration, as it can introduce particle image streaking on the event detector for fast-moving particles. Here, a Q-switched high-speed solid-state laser with pulses in the sub-microsecond range would improve the measurements.
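The pulse width, a nominal pulse energy and the resulting particle image streak length follow from simple arithmetic. The sketch below treats the 5.2 W quoted above as the optical power during the pulse (an assumption) and ignores the roughly 3 \(\upmu\)s rise and fall times and the sawtooth modulation, so the energies are nominal upper estimates. The 1.5 m/s velocity and 27.0 pixel/mm magnification in the example are taken from the water-jet experiment described next.

```python
from typing import Tuple


def pulse_parameters(f_p_hz: float, duty: float, power_w: float,
                     velocity_m_s: float = 0.0,
                     mag_px_per_mm: float = 0.0) -> Tuple[float, float, float]:
    """Return pulse width (s), nominal pulse energy (J) and streak length (pixel).

    Assumes a rectangular pulse at constant power power_w (no rise/fall time).
    """
    tau_p = duty / f_p_hz                                   # duty cycle * period
    energy_j = power_w * tau_p                              # nominal energy per pulse
    streak_px = velocity_m_s * 1e3 * tau_p * mag_px_per_mm  # (mm/s) * s * (pixel/mm)
    return tau_p, energy_j, streak_px


for f_p in (2000.0, 5000.0):
    tau, e, s = pulse_parameters(f_p, 0.01, 5.2, velocity_m_s=1.5, mag_px_per_mm=27.0)
    print(f"{f_p / 1e3:.0f} kHz: {tau * 1e6:.1f} us, {e * 1e6:.1f} uJ, streak {s:.2f} px")
```

With a 1% duty cycle this gives \(\tau_p = 5\,\upmu\)s and about 26 \(\upmu\)J at 2 kHz, and \(\tau_p = 2\,\upmu\)s and about 10 \(\upmu\)J at 5 kHz, in line with the 10–25 \(\upmu\)J quoted in Sect. 5.1. A 1.5 m/s particle streaks by only about 0.2 pixel during a 5 \(\upmu\)s pulse at 27 pixel/mm.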

A water flow experiment (jet in water) is used for the assessment of the temporal event generation by pulsed light illumination. The laser light is spread into a parallel light sheet of about 80 mm height and 1 mm waist thickness using an \(f \!=\! -40\) mm plano-concave cylindrical lens along with an \(f \!=\! 40\) mm plano-convex cylindrical lens. A small pump is placed in one corner of a small glass water tank (\(300 \! \times \! 200 \!\times \! 60\) mm\(^3\), \(H \!\times \! W \!\times \! T\)) and produces a turbulent jet of about 1.5 m/s exit velocity (diameter 8 mm). The water is seeded with neutrally buoyant 10 \(\upmu\)m silver-coated glass spheres. Event recordings for different pulse frequencies are acquired near the jet nozzle. An objective lens with 55 mm focal length (Nikon Micro-Nikkor 55 mm 1:2.8) with the aperture set at \(f_\# \!=\! 5.6\) collects the scattered light at a magnification of 27.0 pixel/mm (\(m \!=\! 0.12\)). The camera event detection thresholds are adjusted to favor positive events. Camera biases, magnification, lens aperture and seeding concentration are held constant for the measurements.

Histograms of the recorded event data are presented in Fig. 6 for two laser pulsing frequencies, \(f_p \!=\! 2\) kHz and \(f_p \!=\! 5\) kHz. The event histograms in Fig. 6a, b exhibit distinct peaks at the laser modulation frequency, with the laser pulses indicated by the green bursts. Averaging over a larger number of pulse periods (Fig. 6c, d) yields the mean temporal distribution of the events generated by the pulsed light. A strong increase in events coincides with the beginning of the laser pulse, peaks after about \(100\,\upmu\)s and then decays. Most events associated with a laser pulse are captured within \(150-200\,\upmu\)s. This timescale determines the maximum laser pulsing rate at which reliable pulsed-EBIV data can be obtained. For the present event camera (Prophesee EVK2-HD), its inverse corresponds to about 5–7 kHz when recording at full sensor resolution (\(1280 \!\times \! 720\)) with the arbiters operating below saturation (i.e., at less than \(40 \!\cdot \! 10^6\) events/s).
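The averaged distributions of Fig. 6c, d amount to folding all event time stamps into a single pulsing period. The following NumPy sketch assumes that the pulse phase `t0_us` is known and that events do not spill over into the following period; bin width, quantile level and function names are illustrative choices, not the authors' processing.

```python
import numpy as np


def folded_event_histogram(t_us, period_us, t0_us=0.0, bin_us=2.0):
    """Histogram of event phases within one pulsing period (all periods superposed)."""
    phase = np.mod(np.asarray(t_us, dtype=float) - t0_us, period_us)
    n_bins = int(round(period_us / bin_us))
    counts, edges = np.histogram(phase, bins=n_bins, range=(0.0, period_us))
    centers = 0.5 * (edges[:-1] + edges[1:])
    return centers, counts


def event_window_us(t_us, period_us, t0_us=0.0, fraction=0.95):
    """Delay after pulse onset within which `fraction` of the events arrive.

    Only meaningful if events do not wrap around into the next period.
    """
    phase = np.mod(np.asarray(t_us, dtype=float) - t0_us, period_us)
    return float(np.quantile(phase, fraction))


def max_pulsing_rate_khz(event_window_us):
    """Highest pulsing rate whose period still contains the whole event burst."""
    return 1e3 / event_window_us


# Synthetic check: one event 101 us after each of 50 pulses at 5 kHz
t = np.arange(50) * 200.0 + 101.0
centers, counts = folded_event_histogram(t, period_us=200.0)
print(centers[np.argmax(counts)])            # 101.0
```

The inverse of the event window gives the maximum pulsing rate: 1/(200 \(\upmu\)s) = 5 kHz and 1/(150 \(\upmu\)s) ≈ 6.7 kHz, which brackets the 5–7 kHz stated above.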

By reducing the active area of the sensor (ROI), the performance of the arbiter can be enhanced, as the number of events per row and unit time is reduced while the particle image density within the ROI remains constant. Figure 7 shows histograms of event data obtained for a ROI of 1280(W) \(\times\) 160(H) pixels at pulsing frequencies of 10 kHz and 20 kHz, with corresponding event pulse widths of 26 \(\upmu\)s and 22 \(\upmu\)s (FWHM). This indicates that the motion of particles can be captured at pulsing frequencies of up to 20 kHz with this ROI.

The effect of increasing the event data rate is highlighted in Fig. 8. While the event pseudo-images in Fig. 8a, b look nearly the same, the corresponding event histograms (Fig. 8c, d) reveal that the clearly defined burst of events following a laser pulse disappears when the event rate increases from \(38 \!\cdot \! 10^6\) events/s to \(50 \!\cdot \! 10^6\) events/s. Due to arbiter saturation, the event data in Fig. 8b cannot be reliably processed to extract particle image displacement data. Online monitoring during event capture is therefore required to ensure adequate recordings.

As mentioned previously, continuous illumination results in event data rates that strongly depend on the particles’ velocity. This is illustrated in Fig. 9a, c for an air flow around a square rib (cf. Sect. 5.3), with data rates differing by nearly a factor of two between the fast outer flow and the slow flow in the wake region, even though the seeding distribution is uniform throughout the imaged domain. With pulsed illumination, here at 5 kHz, the event generation is nearly uniform across the imaged domain.

Fig. 6: Histograms of recorded event times for pulsed-EBIV measurements on a water jet at pulsing frequencies of \(f_p \!=\! 2\) kHz (a, c) and \(f_p \!=\! 5\) kHz (b, d). The green lines indicate the actual time and width of the pulses driving the laser. The top row (a, b) shows a random sample within the record, whereas the bottom row (c, d) provides an averaged temporal distribution of events for the respective pulsing frequencies. The vertical red line marks the peak in the event distribution.

Fig. 7: Histograms of recorded event times in a ROI of \(1280 \!\times \! 160\) pixels for laser pulse frequencies of \(f_p \!=\! 10\) kHz (a, c) and \(f_p \!=\! 20\) kHz (b, d). Pulse widths are 6 \(\upmu\)s and 3 \(\upmu\)s, respectively, producing event pulse widths of 26 \(\upmu\)s and 22 \(\upmu\)s (FWHM). The top row shows sequences of several pulses, the bottom row an average temporal distribution from several hundred pulse periods. The dashed red vertical lines mark the pulsing period duration. Green lines indicate the pulses driving the laser; the actual pulses of light may be longer (cf. Fig. 5).

Fig. 8: Sample of 2.5 ms of events recorded on a turbulent jet in water at \(f_p \!=\! 4\) kHz and event rates of \(38 \!\cdot \! 10^6\) events/s (a) and \(50 \!\cdot \! 10^6\) events/s (b). Sub-figures (c) and (d) show the respective temporal distributions of the events. The dashed red vertical lines indicate one laser pulsing period (\(250\,\upmu\)s). (Animations of the event data in (a) are provided in the supplementary material.)

4 Processing of pulsed event-data

Event data provided by the pulsed illumination of the particles suggest the use of established multi-frame particle image velocimetry (PIV) processing algorithms. This is achieved by first generating pseudo-images from the event data. These are accumulated from the events surrounding a given maximum in the event histograms (Fig. 6), which can be readily located if the pulsing frequency is known. An optimal association of events is possible when the timing of the laser pulses has been recorded as part of the event stream. All events within a given frame are assigned the same time stamp.

The off-line processing of the previously recorded event sequences involves several steps to convert the event data stream into distinct pseudo-images that can be handled by typical correlation-based algorithms designed for handling PIV recordings:

1. Using the known, fixed pulsing frequency, estimate the temporal position of the beginning of each light pulse (in case the laser trigger pulses are not recorded by the event camera).

2. Generate a pseudo-image sequence in which each image contains all the events for a preceding light pulse. Pixels associated with a CD event are given the same intensity irrespective of their actual time stamp, under the assumption that the given pixels were triggered by the same light pulse. The resulting stream of pseudo-images will have the same frequency as the laser pulses used to illuminate the particles.

3. Blur the images to reduce spiking of the cross-correlation signals and to ensure a correlation peak width suitable for sub-pixel peak position estimation. A smoothing filter with a kernel size of \(1-2\) pixels is considered adequate.

Modern PIV algorithms are optimized for particle image intensity distributions of approximately Gaussian shape and perform less well with single-pixel events (Dirac delta functions). Therefore, the pseudo-images generated from the event data are first low-pass filtered with a Gaussian kernel to generate suitable “particle images”.
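Steps 2 and 3 can be sketched in NumPy as follows. This is a hypothetical implementation, not the authors' code: events are assumed to be given as arrays `x, y, t, p` (pixel column, row, time stamp, polarity), the pulse phase `t0` and the `period` are assumed known, only positive events are used, and a separable Gaussian blur with \(\sigma = 1\) pixel stands in for the 1–2 pixel smoothing kernel. Image dimensions must exceed the kernel length (7 pixels for \(\sigma = 1\)).

```python
import numpy as np


def gaussian_kernel1d(sigma: float) -> np.ndarray:
    """Normalized 1D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian low-pass filter ('same' size, zero padding at borders)."""
    k = gaussian_kernel1d(sigma)
    out = np.apply_along_axis(lambda r: np.convolve(r, k, mode="same"), 1, img)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="same"), 0, out)


def pseudo_images(x, y, t, p, t0, period, n_frames, shape, sigma=1.0):
    """One blurred binary pseudo-image per light pulse from positive CD events."""
    x, y, p = np.asarray(x), np.asarray(y), np.asarray(p)
    t = np.asarray(t, dtype=float)
    k = np.floor((t - t0) / period).astype(int)     # index of the preceding pulse
    keep = (p > 0) & (k >= 0) & (k < n_frames)
    frames = np.zeros((n_frames,) + tuple(shape))
    frames[k[keep], y[keep], x[keep]] = 1.0         # equal intensity for every event
    return np.stack([blur(f, sigma) for f in frames])


# Two events within the first pulse period; the negative one is ignored
imgs = pseudo_images([5, 7], [4, 2], [30.0, 40.0], [1, -1],
                     t0=0.0, period=200.0, n_frames=2, shape=(10, 12))
print(imgs.shape)   # (2, 10, 12)
```

Setting every event pixel to the same value, rather than accumulating counts, reflects step 2: all events between two pulses are treated as belonging to the same instant.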

The sequence of pseudo-particle image distributions can be processed using standard correlation-based PIV algorithms, and significant improvement can be achieved through the use of multi-frame, pyramid-based cross-correlation schemes (Sciacchitano et al. 2012; Lynch and Scarano 2013; Willert et al. 2021). The analysis of the pseudo-image sequences obtained in the following experiments typically involves subsets of \(N_f \!=\! 5\) frames per time step, increased up to \(N_f \!=\! 9\) to reduce noise at the cost of a reduced frequency bandwidth due to temporal filtering.
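To illustrate how summing correlation planes over several frames works, the sketch below estimates a single displacement from an \(N_f\)-frame subset by adding the FFT-based cross-correlations of consecutive frame pairs and locating the peak with a three-point Gaussian fit. This simplified sum-of-correlation scheme is chosen for brevity; it is not the pyramid correlation of Sciacchitano et al. (2012) or Lynch and Scarano (2013), which also combines pairs with larger frame separations, and it omits window offsetting, iteration and validation. Circular correlation and a uniform displacement within the window are assumed.

```python
import numpy as np


def xcorr(a, b):
    """Circular cross-correlation R(d) = sum_x a(x) b(x + d), zero lag at the centre."""
    r = np.real(np.fft.ifft2(np.conj(np.fft.fft2(a)) * np.fft.fft2(b)))
    return np.fft.fftshift(r)


def gauss3(rm, r0, rp):
    """Three-point Gaussian sub-pixel offset; 0 if values are not all positive."""
    if min(rm, r0, rp) <= 0:
        return 0.0
    lm, l0, lp = np.log(rm), np.log(r0), np.log(rp)
    denom = lm - 2.0 * l0 + lp
    return 0.0 if denom == 0 else 0.5 * (lm - lp) / denom


def sum_of_correlation_displacement(frames):
    """Displacement (dy, dx) per frame from summed correlations of consecutive pairs."""
    r = sum(xcorr(frames[i], frames[i + 1]) for i in range(len(frames) - 1))
    ny, nx = r.shape
    iy, ix = np.unravel_index(np.argmax(r), r.shape)
    dy = iy - ny // 2 + gauss3(r[(iy - 1) % ny, ix], r[iy, ix], r[(iy + 1) % ny, ix])
    dx = ix - nx // 2 + gauss3(r[iy, (ix - 1) % nx], r[iy, ix], r[iy, (ix + 1) % nx])
    return float(dy), float(dx)
```

For example, five frames of a random pattern shifted by (2, 3) pixels from one frame to the next return a displacement of (2.0, 3.0) up to round-off. Summing the planes before peak detection is what attenuates random correlation noise as \(N_f\) grows. The mean is not subtracted here so that the correlation values stay positive for the logarithmic peak fit.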

5 Sample measurements performed with pulsed illumination EBIV

5.1 Turbulent jet in water

Both pulsed-EBIV and matching snapshot PIV measurements are taken on a turbulent jet in a small water tank as described in Sect. 3.1. The field of view is adjusted to achieve similar magnification factors for both measurement techniques, \(m \!=\! 27.0\) pixel/mm (37.0 \(\upmu\)m/pixel) for pulsed-EBIV and \(m \!=\! 28.75\) pixel/mm (34.8 \(\upmu\)m/pixel) for PIV, using the same lens for both (Nikon Micro-Nikkor 55 mm 1:2.8). Laser light sheet configuration, seeding concentration and operational conditions of the jet are held constant to achieve a common imaging configuration. A side-by-side comparison of the two measurements is given in Table 1.

For PIV, the laser is operated in double-pulse mode with a pulse separation of \(\tau \!=\! 500\,\upmu\)s and pulse duration of \(t_p \!=\! 100\,\upmu\)s (\(600\,\upmu\)J). PIV recordings are captured at about 4 Hz using a double-shutter CCD camera (PixelFly-PIV, PCO/ILA-5150) with a resolution of \(1392 \!\times \! 1040\) pixels at \(6.45\,\upmu\)m/pixel. The acquired PIV data set comprises 1000 image pairs which are processed with a standard multiple-pass, iterative grid-refining cross-correlation-based algorithm. As an initial step the mean intensity image is computed from the image set and subtracted from each image prior to processing, with negative intensity values set to zero. The final sampling window size covers \(32 \!\times \! 32\) pixels (\(1.11 \!\times \! 1.11\,\textrm{mm}^2\)). Validation is based on normalized median filtering with a threshold of 3 (Westerweel and Scarano 2005).

For pulsed-EBIV, the laser is operated at pulsing frequencies of 2, 4 and 5 kHz with a duty cycle of 1%, while the camera lens aperture is stepped down to \(f_\# \!=\! 5.6\). Pulse energies vary in the range of about \(10-25\,\upmu\)J, depending on the pulsing rate, which is a small fraction of the energy used for PIV. The event camera biases are adjusted for maximum sensitivity to positive CD events, given that negative CD events are not required in the subsequent processing. These parameters result in event data rates of \(35-38 \!\cdot \! 10^6\) events/s (100–120 MB/s). Additional data are acquired from a reduced ROI of \(320 \!\times \! 720\) pixels at similar effective data rates and pulsing frequencies of up to 10 kHz. Event sequences of 10 s duration are recorded for both sensor ROIs.

Processing of the event recordings first involves the conversion to pseudo-image sequences as described in Sect. 4, followed by displacement field estimation using a multi-frame cross-correlation scheme. The iterative \(N_f \!=\! 5\)-frame algorithm uses sampling windows of \(48 \!\times \! 48\) pixels for event data acquired on the full HD array (\(1280 \!\times \! 720\) pixels), reduced to \(32 \!\times \! 32\) pixels for a ROI of \(320 \!\times \! 720\) pixels. Additionally, the full HD event data obtained at 5 kHz are processed at \(32 \!\times \! 32\) pixels with an \(N_f \!=\! 9\)-frame scheme.
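The displacement between consecutive pseudo-images scales with the magnification and inversely with the pulsing frequency. The example below uses the nominal jet exit velocity of about 1.5 m/s (Sect. 3.1) as an upper bound near the nozzle together with the 27.0 pixel/mm EBIV magnification; local velocities further downstream are lower. The function name is illustrative.

```python
def displacement_per_pulse_px(velocity_m_s: float, mag_px_per_mm: float,
                              f_p_hz: float) -> float:
    """Particle image displacement between consecutive light pulses in pixels."""
    return velocity_m_s * 1e3 * mag_px_per_mm / f_p_hz   # (mm/s) * (pixel/mm) * s


for f_p in (2000.0, 4000.0, 5000.0, 10000.0):
    d = displacement_per_pulse_px(1.5, 27.0, f_p)
    print(f"{f_p / 1e3:.0f} kHz: {d:.2f} px ({100 * d / 48:.0f}% of a 48 px window)")
```

This gives about 20 pixels per pulse at 2 kHz, 10 pixels at 4 kHz and 8 pixels at 5 kHz, i.e., roughly 42%, 21% and 17% of a 48-pixel sampling window.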

Figure 10 provides a side-by-side comparison of the first- and second-order statistics for both PIV and EBIV data. Except for the region near the nozzle, there is good quantitative agreement between the two data sets. Asymmetry in the transverse velocity v is due to wall effects within the small water tank. Also, a deflecting plate located further downstream (at \(x/D \!\approx \! 20\)) was moved between the experiments, thereby slightly altering the cross-flow. The streamwise velocity variance \(\langle u u \rangle\) shows some irregular structures whose cause has not been identified. Profiles extracted at \(x/D \!=\! 2\) are shown in Fig. 11 and exhibit a good collapse for the mean values. The largest discrepancies are present in the variances \(\langle u u \rangle\) and \(\langle v v \rangle\) for the event data acquired at \(f_p \!=\! 2\) kHz. An explanation for this is that the \(N_f \!=\! 5\)-frame processing scheme insufficiently captures the flow dynamics in the jet’s shear layers. This is also reflected in the validation rate, which drops considerably in the shear layers for the \(f_p \!=\! 2\) kHz event data.

A considerable improvement in the convergence of the different data sets is achieved by reducing the ROI (Fig. 11b). This is attributed to the increase in particle image density by roughly a factor of 3–4 (cf. Table 2). At the same time, the spatial resolution could be increased by decreasing the sample size from \(48 \!\times \! 48\) pixels to \(32 \!\times \! 32\) pixels.

5.2 Channel boundary layer

Measurements of a developing boundary layer are taken in the square duct of a small wind tunnel at \(x/D \!\approx \! 20\), with \(D \!=\! 76\) mm being the cross-sectional dimension. Depending on the flow velocity, the flow switches from laminar to turbulent conditions, with transition occurring at about \(U_\infty \!=\! 3.0\) m/s. It should be noted that at \(x/D \!\approx \! 20\) (1475 mm) the turbulent duct flow is not yet fully developed.

Reduced sensor ROIs of \(320(W) \!\times \! 720(H)\) and \(1280(W) \!\times \! 320(H)\) pixels each capture a 7 mm wide strip of the flow field, extending up to wall-normal distances of \(y \!= \!15\) mm (\(y/D = 0.39\)) and \(y \!=\! 27\) mm (\(y/D \!=\! 0.73\)), respectively. The respective magnification is \(m \!=\! 46.6\) pixel/mm for the laminar condition and \(m \!=\! 45.9\) pixel/mm for the turbulent condition.

Under laminar conditions, the imaged particles produce event clusters with a mean size of 1.29 events per particle and light pulse. The particle image density, expressed in particles per pixel (ppp), is estimated at \(ppp \!=\! 0.014\) and includes a small fraction (O[1%]) of random events that are triggered on the sensor array by shot noise. Event data captured in the turbulent channel flow exhibit a similar event cluster size (1.45) at a slightly lower image density of \(ppp \!=\! 0.011\). (Details on the estimation of particle image density and size are provided in Appendix A.)
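Appendix A describes how the cluster size and particle image density were actually estimated; that procedure is not reproduced here. As a hypothetical illustration only, both quantities can be approximated from a single binary pseudo-image by labeling 8-connected event clusters: the mean cluster size gives events per particle and pulse, and the number of clusters per pixel gives ppp. Random noise events are counted as clusters as well.

```python
from collections import deque
from typing import List, Tuple


def cluster_stats(frame: List[List[int]]) -> Tuple[int, float, float]:
    """Label 8-connected event clusters in one binary pseudo-image.

    Returns (number of clusters, mean events per cluster, clusters per pixel).
    """
    h, w = len(frame), len(frame[0])
    seen = [[False] * w for _ in range(h)]
    sizes = []
    for y0 in range(h):
        for x0 in range(w):
            if not frame[y0][x0] or seen[y0][x0]:
                continue
            seen[y0][x0] = True
            queue, size = deque([(y0, x0)]), 0
            while queue:                         # breadth-first flood fill
                y, x = queue.popleft()
                size += 1
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        yy, xx = y + dy, x + dx
                        if 0 <= yy < h and 0 <= xx < w and frame[yy][xx] and not seen[yy][xx]:
                            seen[yy][xx] = True
                            queue.append((yy, xx))
            sizes.append(size)
    n = len(sizes)
    return n, (sum(sizes) / n if n else 0.0), n / (h * w)


frame = [[1, 1, 0, 0, 0],
         [0, 0, 0, 0, 1],
         [0, 0, 0, 0, 0],
         [0, 1, 0, 0, 0]]
print(cluster_stats(frame))   # (3, 1.3333333333333333, 0.15)
```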

The velocity profile of the laminar flow condition, determined from a 1.0 s event stream recorded at a laser pulse rate of \(f_p = 5\) kHz, is shown in Fig. 12a. The root mean square (rms) values of the velocity components (Fig. 12b, c) are determined by subtracting a moving average of \(N \!=\! 50\) samples (10 ms) from the time-resolved velocity profile to account for a slight unsteadiness of the mean tunnel flow. With the low-frequency part removed, the rms values are indicative of the measurement uncertainty that can be achieved with the present pulsed-EBIV implementation. While the choice of interrogation window size and the number of frames, \(N_f\), used per time step have an insignificant influence on the mean values, they do affect the rms values. Increasing the sample size reduces the rms values as more particle image event matches enter into the correlation estimate. The same can be achieved by increasing \(N_f\), in which case the underlying sum-of-correlation scheme attenuates noise in the correlation plane from which the particle image displacement is determined. At the same time, the rms values depend only weakly on the displacement magnitude, which is to be expected from the iterative processing scheme that offsets the sample windows according to the displacement (Westerweel et al. 1997; Westerweel 2002). The peak in the rms value of the streamwise velocity component u close to the wall near \(y \!=\! 1\) mm is of physical origin and is associated with the onset of weak instabilities of the near-wall flow.
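The detrending used for the rms values can be sketched as follows, assuming a simple boxcar running mean of \(N = 50\) samples (10 ms at 5 kHz). Only samples with a complete averaging window are kept; for even N the trend is aligned with a half-sample offset, which is negligible for slowly varying drifts. The function name is illustrative.

```python
import numpy as np


def detrended_rms(u, n_avg: int = 50) -> float:
    """RMS of a time series after subtracting a boxcar running mean of n_avg samples."""
    u = np.asarray(u, dtype=float)
    trend = np.convolve(u, np.ones(n_avg) / n_avg, mode="valid")  # len(u) - n_avg + 1
    start = n_avg // 2                       # align each mean with its window centre
    resid = u[start:start + trend.size] - trend
    return float(np.sqrt(np.mean(resid ** 2)))


# Example: slow drift plus alternating +-0.05 m/s noise sampled at 5 kHz
t = np.arange(5000) / 5000.0
u = 1.0 + 0.2 * np.sin(2 * np.pi * 0.5 * t) + 0.05 * (-1.0) ** np.arange(5000)
print(detrended_rms(u))
```

The slow 0.5 Hz drift is almost entirely absorbed by the running mean, so the result is close to the 0.05 m/s noise amplitude; this is the sense in which the rms values reflect measurement uncertainty rather than tunnel unsteadiness.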

At higher free-stream velocities (\(U_\infty \!>\! 3\) m/s) the channel flow transitions to turbulence. Event recordings are performed under imaging conditions similar to the laminar case at \(f_p \!=\! 10\) kHz and a ROI of \(1280 \!\times \! 160\) pixels at \(m \!=\! 32.7\) pixel/mm. Three event records of 10 s duration are processed with \(N_f \!=\! 5\) images per time step, resulting in 50,000 (correlated) vector fields each. Analysis is performed with a high-aspect-ratio sampling window of \(64(W)\!\times \!6(H)\) pixels (\(1.96\!\times \!0.183\,\textrm{mm}^2\)) to improve spatial resolution in the wall-normal direction, with sampling locations spaced at \(\Delta y\!=\!2\) pixels (\(61\,\upmu\)m). For post-processing, the profile of the unsteady velocity components u, v is extracted from the center of each 2D velocity map. This results in temporal records of the unsteady velocity profile \(u_i(y,t)\) at a fixed streamwise position (\(x_0\!=\!1475\) mm). A 200 ms sample of such a record is provided in Fig. 13 for the two velocity components \(u_i \in \{u, v\}\).

Profiles of the mean and higher-order statistics computed from the temporal velocity profiles are provided in Fig. 14. Owing to the high spatial resolution of the near-wall profile (Fig. 14a), the mean velocity gradient at the wall can be estimated, from which the friction velocity, \(u_\tau \!=\!0.30\) m/s, and the viscous length scale, \(\nu /u_\tau \!=\!49\,\upmu\)m, can be obtained (Willert 2015). Profiles of the velocity variances, normalized by viscous scaling, are presented in Fig. 14b together with DNS data for a turbulent boundary layer (TBL) flow of similar friction Reynolds number (Schlatter and Örlü 2010). For wall distances of \(y^+ \!<\!5\), the measured profiles of the higher moments deviate from the DNS, which can be attributed to spatial smoothing imposed by the finite-sized image sampling of the correlation-based algorithm. In viscous scaling, the \(64(W)\!\times \!6(H)\) pixel samples cover \(40^+ \times 3.7^+\). In the logarithmic layer up to \(y^+ \approx 200\), the wall-normal fluctuations \(\langle v v \rangle\) and, to a lesser degree, the Reynolds stress \(\langle u v \rangle\) are also attenuated due to spatial smoothing. The underestimation of the skewness at the outer edge of the boundary layer is due to the validation scheme, which removes data deviating by more than 6 standard deviations from the mean.
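As a sketch of the wall-gradient approach, the friction velocity follows from \(u_\tau = \sqrt{\nu\, \partial U/\partial y|_w}\). The code below fits a straight line to near-wall samples of U(y); it is a simplified illustration, not necessarily the procedure of Willert (2015), and the kinematic viscosity \(\nu = 1.5\cdot 10^{-5}\,\textrm{m}^2/\textrm{s}\) for air is an assumed value (the quoted \(\nu/u_\tau = 49\,\upmu\)m with \(u_\tau = 0.30\) m/s implies a slightly smaller \(\nu\)).

```python
import numpy as np


def friction_velocity(y_m, u_m_s, nu_m2_s=1.5e-5, y_max_m=None):
    """u_tau = sqrt(nu * dU/dy at the wall) from a linear fit of near-wall U(y).

    Only points with y <= y_max_m (assumed inside the viscous sublayer) are used.
    Returns (friction velocity in m/s, viscous length nu/u_tau in m).
    """
    y = np.asarray(y_m, dtype=float)
    u = np.asarray(u_m_s, dtype=float)
    if y_max_m is not None:
        sel = y <= y_max_m
        y, u = y[sel], u[sel]
    slope, _intercept = np.polyfit(y, u, 1)   # intercept absorbs a wall-position offset
    u_tau = float(np.sqrt(nu_m2_s * slope))
    return u_tau, nu_m2_s / u_tau


def to_wall_units(length_m, viscous_length_m):
    """Express a physical length in viscous units (the '+' superscript)."""
    return length_m / viscous_length_m
```

For a synthetic linear profile with \(\partial U/\partial y = 6000\,\textrm{s}^{-1}\), this returns \(u_\tau = 0.30\) m/s and \(\nu/u_\tau = 50\,\upmu\)m, so the 1.96 mm long sampling window spans about 39 viscous units, close to the \(40^+\) quoted above.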

Figure 15 further demonstrates the possibility of extracting spectral information from the time-resolved EBIV data. The spatially resolved spectrum of the streamwise velocity u is compiled from pre-multiplied power spectra of u(y, t) for each wall-normal distance y. The peak energy, with a dominant frequency of 83 Hz, resides at a wall distance of \(y^+\!=\!13.0\) (indicated by the horizontal dot-dashed line), slightly below the maximum of the variance \(\langle u u \rangle\) in Fig. 14b where it is located at \(y^+\!=\!14.5\).
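A pre-multiplied spectrum map of this kind can be sketched in a few lines of NumPy. The example uses a hand-written Welch estimate (Hann window, 50 % overlap) and synthetic data in which an 83 Hz fluctuation peaks at \(y^+ = 13\); the 5 kHz sampling rate, the segment length and the synthetic signal are assumptions for illustration only, not the processing used for Fig. 15.

```python
import numpy as np

def welch_psd(x, fs, nperseg):
    """One-sided Welch PSD along the last axis (Hann window, 50 % overlap)."""
    win = np.hanning(nperseg)
    step = nperseg // 2
    nseg = (x.shape[-1] - nperseg) // step + 1
    scale = 1.0 / (fs * np.sum(win**2))
    psd = 0.0
    for k in range(nseg):
        seg = x[..., k * step : k * step + nperseg]
        seg = (seg - seg.mean(axis=-1, keepdims=True)) * win
        psd = psd + np.abs(np.fft.rfft(seg, axis=-1)) ** 2 * scale
    psd = psd / nseg
    psd[..., 1:-1] *= 2.0          # fold negative frequencies (nperseg even)
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    return freqs, psd

fs = 5000.0                        # sampling rate of the velocity record (assumed)
t = np.arange(50_000) / fs         # 10 s record
yplus = np.arange(1, 41)           # wall-normal positions in wall units
rng = np.random.default_rng(0)

# Synthetic u'(y, t): an 83 Hz fluctuation whose amplitude peaks at y+ = 13
amp = np.exp(-(((yplus - 13) / 5.0) ** 2))
u = amp[:, None] * np.sin(2 * np.pi * 83.0 * t)
u = u + 0.05 * rng.standard_normal((yplus.size, t.size))

freqs, E_uu = welch_psd(u, fs, nperseg=4096)
premult = freqs * E_uu             # f * E_uu(f) for each wall distance
iy, jf = np.unravel_index(np.argmax(premult), premult.shape)
print(f"peak at y+ = {yplus[iy]}, f = {freqs[jf]:.1f} Hz")
```

Pre-multiplying by \(f\) makes equal areas under the curve correspond to equal energy on a logarithmic frequency axis, which is why the energetic peak is easy to locate in such maps.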

In the context of the present paper, Fig. 15 serves as a placeholder for further analyses that can be performed with data obtained by the pulsed EBIV technique, such as space-time (2-point) correlations and spectral modal analysis (see, e.g., Willert and Klinner 2022b).

Profiles of mean streamwise velocity U (a) and velocity variances \(\langle u u \rangle\), \(\langle v v \rangle\) and Reynolds stress \(\langle u v \rangle\) (b) obtained by pulsed light EBIV in a turbulent duct flow. Quantities are normalized by inner variables, friction velocity \(u_\tau\) and viscous unit \(\nu /u_\tau\). Sub-figure (c) provides the skewness and kurtosis of the measured velocity components. A kurtosis value of 3 represents a Gaussian distribution. (DNS data, gray lines, from Schlatter and Örlü 2010)

5.3 Flow around a square rib

Structures such as ribs, pins, fins or other surface features are typically placed onto surfaces to enhance convective heat transfer; the internal cooling of turbine blades is a common application. In the present case, a single square rib of height \(H \!=\! 8.18\) mm is placed spanwise in the square test section (\(D \!=\! 76\) mm) at \(x \!=\! 1700\) mm downstream of the channel entry. The flow upstream of the rib is laminar, with a free-stream velocity of \(U_\infty \!\approx \! 2.0\) m/s, a bulk Reynolds number of \(\textrm{Re}_b \!=\! 10\,000\) and a Reynolds number based on rib height of \(\textrm{Re}_H \!=\! 1\,100\).
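The quoted Reynolds numbers follow from \(\textrm{Re} = U_\infty L/\nu\). A quick check, assuming a kinematic viscosity of air of about \(1.5\cdot 10^{-5}\,\textrm{m}^2/\textrm{s}\) (not stated in the text) and using the free-stream velocity in place of the bulk velocity:

```python
nu_air = 1.5e-5   # m^2/s, air near room temperature (assumed value)
U_inf = 2.0       # m/s, free-stream velocity
D = 76e-3         # m, side length of the square test section
H = 8.18e-3       # m, rib height

Re_b = U_inf * D / nu_air   # based on channel size
Re_H = U_inf * H / nu_air   # based on rib height
print(f"Re_b = {Re_b:.0f}, Re_H = {Re_H:.0f}")
```

Both values agree with the rounded figures above to within a few percent.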

Image magnification is set at \(m \!=\! 31.4\) pixel/mm (\(31.8\,\upmu\)m/pixel). The laser pulsing rate is fixed at 5 kHz with pulse widths of \(25\,\upmu\)s (\(130\,\upmu\)J/pulse).

Event sequences of 10 s duration are captured on a field of view of \(41\!\times \!23\,\textrm{mm}^2\) at different streamwise positions by traversing camera and light sheet. Figure 16 provides sampled event data for a duration of 10 ms. Event rates vary between 42 MEv/s and 50 MEv/s, corresponding to data rates of 111 MB/s and 133 MB/s, respectively. With the light sheet coming from the top, laser flare on the anodized aluminum surface of the square rib results in significant event generation within the otherwise dark region of the rib, an effect comparable to flare-induced saturation (blooming) on CCD sensors. Where the laser light passes through the glass wall of the channel, less light is scattered, and mirror images of the particle event streaks can be observed. Due to the pulsed illumination, stationary particles and other dust-like features on the wall also become visible.

Under laminar inflow conditions a series of nearly stable vortices (rollers) form upstream of the rib. A shear layer is present above the rib, extends downstream and results in steady shedding of vortices about 3 rib heights downstream. The immediate wake is characterized by a rather stable recirculation zone with velocities about one order of magnitude lower than the external flow.

Analogous to the previously described boundary layer flow, the pseudo-image data of the flow around the square rib are processed with a multi-frame scheme (\(N_f \!=\! 5\) frames) using samples of \(40\! \times \!40\) pixels (\(1.3\!\times \!1.3\,\textrm{mm}^2\)). With a seeding density estimated at \(ppp \!=\! 0.0084\), about 13 particles are captured per sample on average (cf. Table 2).
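The particle count per sample is simply the particle image density multiplied by the window area. A minimal sketch (the helper name is hypothetical):

```python
def particles_per_window(ppp, width, height):
    """Expected number of particle images in a width x height pixel window."""
    return ppp * width * height

ppp = 0.0084                      # particle images per pixel (Table 2)
for w in (40, 64):                # square sampling windows in pixel
    print(f"{w}x{w} pixel: {particles_per_window(ppp, w, w):.1f} particle images")
```

For the larger \(64\times 64\) pixel windows used in the spectral comparison, the same density gives about 34 particle images per window.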

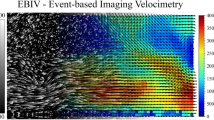

First- and second-order statistics of the flow field, compiled from 50 000 (correlated) samples, are plotted in Fig. 17.

Sample event data of the flow around a square rib of \(H \!=\! 8.2\) mm at \(\text {Re}_H \!=\! 1\,100\) with pulsed illumination at \(f_p \!=\! 5\) kHz. With the flow direction from left to right, the time-slices capture 5 ms of events, or 25 laser pulses, in the laminar upstream region (a) and in the turbulent recirculation region of the immediate wake (b). Animations of the raw event data are provided in the supplementary material.

Velocity statistics for the flow around a square rib with laminar inflow conditions, obtained from 4 sampling domains of \(36 \!\times \! 20\,\textrm{mm}^2\) (\(4.3\,H \!\times \! 2.4\,H\)). Statistics are computed from \(50\,000 \times 200\,\upmu\)s = 10 s of data. Black rectangles indicate the sampling domains used for the spectral estimates shown in Fig. 18.

Figure 18 shows spectra obtained both in the laminar region upstream of the rib and downstream in the shear layer of the turbulent wake, with the respective sampling domains indicated in Fig. 17. The pronounced peak at \(f \!=\! 35.4\) Hz, with a reduced frequency (Strouhal number) of \(\textrm{St} \!=\! f\,H\,U_\infty ^{-1} \!=\! 0.145\), is the signature of the periodic vortex shedding in the shear layer between outer flow and wake region. It is present in both velocity components and is also “felt” in the upstream region (Fig. 18a). A low-frequency modulation below 10 Hz is present in the streamwise velocity component u and is due to a slight oscillation (pumping) of the mean flow in the channel. Its magnitude is about 3 orders of magnitude weaker than the spectral content in the turbulent wake, so it cannot be observed in the spectra obtained in the wake flow (Fig. 18b).
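The reduced frequency can be obtained directly from the spectral peak. The sketch below locates the peak of a Hann-windowed periodogram and converts it to \(\textrm{St} = f H/U_\infty\); the synthetic signal (a 35.4 Hz sinusoid plus white noise, sampled at the 5 kHz pulsing rate) is an assumption standing in for the measured velocity record.

```python
import numpy as np

fs = 5000.0                          # pulsing rate = sampling rate [Hz]
t = np.arange(50_000) / fs           # 10 s record, frequency resolution 0.1 Hz
rng = np.random.default_rng(1)

# Synthetic v'(t): 35.4 Hz shedding signature plus white measurement noise
v = 0.3 * np.sin(2 * np.pi * 35.4 * t) + 0.05 * rng.standard_normal(t.size)

spec = np.abs(np.fft.rfft(v * np.hanning(v.size))) ** 2
freqs = np.fft.rfftfreq(v.size, d=1.0 / fs)
f_peak = freqs[1:][np.argmax(spec[1:])]   # skip the mean (f = 0) bin

H, U_inf = 8.18e-3, 2.0                   # rib height [m], free-stream velocity [m/s]
St = f_peak * H / U_inf
print(f"f = {f_peak:.1f} Hz, St = {St:.3f}")
```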

The frequency spectra permit an estimate of the noise level in the measurement. In the upstream laminar region (Fig. 18a) the noise floor is constant across the frequency spectrum, aside from the signatures of the vortex shedding and the slight modulation of the streamwise velocity component. Increasing the sampling window from \(40\!\times \!40\) pixel to \(64\times \!64\) pixel reduces the noise level by roughly a factor of two, a direct consequence of the larger number of events entering the velocity estimate. The spectrum obtained in the wake region (Fig. 18b) resembles that of a classical turbulent energy cascade overlaid with the strong signature of the vortex shedding. At a frequency of about 1 kHz the spectrum exhibits a roll-up indicating the presence of a noise floor at about \(10^{-6} (\text {m/s})^2 / \text {Hz}\), corresponding to an rms velocity fluctuation of 0.05 m/s. Increasing the sampling window size from \(40\!\times \!40\) to \(64\!\times \! 64\) pixel lowers the noise floor and steepens the slope in the dissipation range of the energy cascade. A maximum velocity of \(U_\infty = 2\) m/s and a noise level of 0.02 m/s in the quiescent (laminar) flow suggest a dynamic velocity range (DVR) of 100:1 for the present measurement. This agrees with the uncertainty estimates obtained from the laminar boundary layer (cf. Fig. 12b, c).
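The conversion from noise floor to rms value can be made explicit. Assuming white noise up to the Nyquist frequency \(f_p/2 = 2.5\) kHz, the noise variance of a one-sided spectrum is \(\sigma^2 = S_n\, f_p/2\), which reproduces the 0.05 m/s quoted above; the DVR is the ratio of the maximum velocity to the rms noise in the laminar region.

```python
import math

fs = 5000.0            # laser pulsing rate = sampling rate [Hz]
S_noise = 1e-6         # white noise floor read off the spectrum [(m/s)^2/Hz]

# For a one-sided PSD the variance is the integral from 0 to the Nyquist
# frequency fs/2; a flat floor therefore contributes S_noise * fs / 2.
sigma_noise = math.sqrt(S_noise * fs / 2)
print(f"rms noise from floor: {sigma_noise:.3f} m/s")

# Dynamic velocity range from the values given in the text
U_max, sigma_laminar = 2.0, 0.02
dvr = U_max / sigma_laminar
print(f"DVR = {dvr:.0f}:1")
```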

6 Discussion

Due to bandwidth limitations of the sensor/camera, the event rate that can be continuously acquired is limited. In contrast to continuous illumination, where particles generate events throughout their movement, pulsed illumination results in spatially confined event generation (“frozen” particles). Hence, the same number of particles will produce fewer events per unit time with pulsed illumination. The previous experiments have shown that up to \(40\!\cdot \! 10^6\) events/s are feasible with pulsed particle illumination. Above \(40\!\cdot \!10^6\) events/s the arbiters begin to saturate and can no longer process the pending events within the “framing” period imposed by the pulsed laser light. Compared to continuous illumination, more particles can therefore be captured; under ideal circumstances each particle would produce exactly one event per light pulse. Particle streaking due to the finite laser pulse duration, limited optical resolution (blurring) and non-uniform scattering intensity will result in particles producing multiple events per laser pulse.

Given that the number of reliably captured events per unit time is constant, the number of particles per pseudo-frame decreases in inverse proportion to the laser pulsing rate, which determines the effective frame rate of the pseudo-images. For the present hardware limit of about \(40\!\cdot \! 10^6\) events/s, at best 8 000 particles can be tracked simultaneously by the HD sensor at a laser pulsing rate of \(f_p \!=\! 5\) kHz, assuming that each particle produces one event per pulse on average. In practice, each particle was found to produce on average about 1.5 events in air and about 2.0 events in water (see Table 2). These values are strongly influenced by imaging conditions (focus, lens quality, optical distortion, and random event noise). Increasing the pulse energy or the particle image density (ppp) saturates the sensor’s arbiters, with consequential loss of timing information. Once the arbiters are saturated, events can no longer be assigned to specific light pulses; the histogram of event times becomes uniform, without distinct peaks corresponding to the light pulsing frequency (cf. Fig. 8d).
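The particle budget described above follows from dividing the sustainable event rate by the pulsing rate and by the average number of events per particle. A sketch with a hypothetical helper, ignoring random noise events:

```python
def max_particles_per_frame(event_rate, f_pulse, events_per_particle=1.0):
    """Upper bound on particles per pseudo-frame for a sustained event rate."""
    return event_rate / (f_pulse * events_per_particle)

R_max = 40e6  # events/s, approximate sustainable rate reported in the text
for medium, epp in (("ideal", 1.0), ("air", 1.5), ("water", 2.0)):
    n = max_particles_per_frame(R_max, 5e3, epp)
    print(f"{medium:>5}: {n:,.0f} particles per pulse at 5 kHz")
```

Doubling the pulsing rate to 10 kHz halves each of these numbers.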

Compared to continuous illumination, pulsed illumination results in a nearly constant particle image density, regardless of the flow velocity. This is best explained by considering that with continuous illumination faster-moving particles scatter less light per unit time onto a given pixel, such that the event rate decreases, eventually to the point where no events are produced. With pulsed illumination the respective pixels receive the same amount of light (photons) regardless of the particle’s velocity, provided the pulses are short enough to prevent particle image streaking. The finite pulse width of the low-cost (engraving) lasers used herein results in a certain amount of streaking for faster-moving particles; that is, multiple spatially adjoining events are produced during the laser pulse. While more costly, Q-switched high-speed lasers with pulse widths in the sub-microsecond range would further improve the quality of the acquired event data.
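The amount of streaking can be estimated from the particle image displacement during one pulse, \(\Delta x = U\,\tau_p\, m\). The sketch uses the rib-flow values (\(\tau_p = 25\,\upmu\)s, \(m = 31.4\) pixel/mm) and, for comparison, a hypothetical 100 ns pulse:

```python
def streak_length_px(velocity, pulse_width, magnification):
    """Particle image displacement during one light pulse, in pixel.

    velocity in m/s, pulse_width in s, magnification in pixel/mm.
    """
    return velocity * 1e3 * pulse_width * magnification   # m/s -> mm/s

print(streak_length_px(2.0, 25e-6, 31.4))    # ~1.6 pixel at U_inf = 2 m/s
print(streak_length_px(2.0, 100e-9, 31.4))   # hypothetical 100 ns pulse
```

At about 1.6 pixel, a particle moving at the free-stream velocity sweeps across one or two neighbouring pixels during a 25 \(\upmu\)s pulse, consistent with the multiple spatially adjoining events noted above.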

Pulsed light also makes stationary particles visible, which would normally remain hidden under constant illumination. In previous implementations of EBIV the lack of events in quiescent regions of a flow has resulted in data loss (Willert and Klinner 2022a). If timing and pulse energies are carefully adjusted, pulsed illumination does not result in an avalanche of events that would otherwise saturate the sensor. Strong background signal levels and light scattering from surfaces are less problematic than in PIV recordings under similar illumination conditions. Areas that would be saturated (overexposed) in conventional camera images can still produce CD events, provided that the intensity change is sufficiently high.

7 Conclusion

Being able to clearly associate captured events from the EBV sensor with a given light pulse permits more reliable particle tracking, because the timing uncertainty (i.e., latency) introduced by the sensor’s arbiters can be accounted for. This also opens the possibility of extending the method to 3d particle tracking using multiple synchronized cameras. In previous 3d tracking implementations, the timing uncertainty (i.e., latency and jitter) of the recorded events imposed significant uncertainties and required special filtering approaches in the 3d reconstruction of the space-time particle tracks (cf. Borer et al. 2017). More recent work by Rusch and Rösgen (2021, 2022) resulted in a 3d particle tracking velocimetry (PTV) system, called TrackAER, that is capable of reconstructing dense velocity fields of the imaged volume in real time with accuracies in the sub-percent range. Relying on continuous illumination (LED arrays) and hydrogen-filled soap bubble tracers, reliable measurements are possible on a field of view of about 1 m at wind tunnel speeds up to about 50 m/s, beyond which progressive signal loss becomes apparent. On the sensor this corresponds to about 50 000 pixel/s. Using the described pulsed illumination approach, measurements beyond 200 000 pixel/s become feasible (i.e., 20 pixel displacement at a 10 kHz light pulsing rate).

In this work the event data were analyzed offline using correlation-based algorithms that rely on pseudo-images rendered as an intermediate step. Alternatively, direct event-tracking schemes, derived, e.g., from established PTV algorithms, should be able to provide reliable Lagrangian tracking data of the imaged particles with considerably less computational effort. The track data could then be projected onto an Eulerian frame to obtain flow maps matching those produced by the correlation-based schemes presented herein (Gesemann et al. 2016; Godbersen and Schröder 2020). The possibility of performing event-based particle image tracking in real time has been demonstrated by Rusch and Rösgen (2021, 2022).
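As an illustration of the simplest kind of direct tracking, the sketch below links particle centroids of two consecutive pulses by greedy nearest-neighbour matching within a search radius. This is a hypothetical minimal scheme, not the algorithm of Rusch and Rösgen or of the cited track-fitting methods, which use multi-pulse predictors and track regularization; the example positions, pulsing rate and magnification are illustrative.

```python
import numpy as np

def link_nearest(p0, p1, max_disp):
    """Link particle positions of two consecutive pulses by nearest neighbour.

    p0, p1: (N, 2) and (M, 2) arrays of particle centroids in pixel.
    Returns (i, j) index pairs closer than max_disp; each particle is used
    at most once (greedy assignment, closest pairs first).
    """
    d = np.linalg.norm(p0[:, None, :] - p1[None, :, :], axis=-1)
    pairs, used0, used1 = [], set(), set()
    for flat in np.argsort(d, axis=None):
        i, j = (int(k) for k in np.unravel_index(flat, d.shape))
        if d[i, j] > max_disp:
            break                      # all remaining pairs are farther apart
        if i not in used0 and j not in used1:
            pairs.append((i, j))
            used0.add(i)
            used1.add(j)
    return pairs

p0 = np.array([[10.0, 10.0], [50.0, 20.0]])
p1 = (p0 + np.array([3.0, 0.5]))[::-1]    # shifted and listed in reverse order
pairs = link_nearest(p0, p1, max_disp=8.0)

f_p, m = 5e3, 31.4                          # pulsing rate [Hz], magnification [pixel/mm]
for i, j in pairs:
    u, v = (p1[j] - p0[i]) * f_p / m * 1e-3   # pixel per pulse -> m/s
    print(i, j, f"u = {u:.3f} m/s, v = {v:.3f} m/s")
```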

The high sensitivity of the EBV sensor reduces the demand for high-power laser illumination. The low cost of event-based cameras in comparison to high-speed cameras of similar frame rate (10 kHz at 1 MPixel) makes 4d Lagrangian particle tracking viable and affordable. Also, the EBV camera hardware has a low power consumption (1–5 W), is very compact (a cube of 30–40 mm, without lens) and is lightweight, with production versions of these cameras weighing about 50 g. Nonetheless, it should be mentioned that the lack of grayscale information along with the bandwidth limitations of currently available event cameras reduces the achievable particle image densities compared to imaging setups using conventional high-speed framing cameras.

Availability of code, data and materials

Sample event data can be obtained from the author upon request. Further code and data are available for download at https://github.com/cewdlr/ebiv.

References

Borer D, Delbruck T, Rösgen T (2017) Three-dimensional particle tracking velocimetry using dynamic vision sensors. Exp Fluids. https://doi.org/10.1007/s00348-017-2452-5

Bouvier M (2021) Study and design of an energy efficient perception module combining event-based image sensors and spiking neural network with 3D integration technologies. PhD thesis, Université Grenoble Alpes, https://tel.archives-ouvertes.fr/tel-03405455

Brandli C, Mantel T, Hutter M et al (2014) Adaptive pulsed laser line extraction for terrain reconstruction using a dynamic vision sensor. Front Neurosci. https://doi.org/10.3389/fnins.2013.00275

Finateu T, Niwa A, Matolin D, et al (2020) 5.10 - A 1280\(\times\)720 back-illuminated stacked temporal contrast event-based vision sensor with 4.86 \(\upmu\)m pixels, 1.066GEPS readout, programmable event-rate controller and compressive data-formatting pipeline. In: 2020 IEEE international solid-state circuits conference (ISSCC), pp 112–114, https://doi.org/10.1109/ISSCC19947.2020.9063149

Gallego G, Delbrück T, Orchard G et al (2022) Event-based vision: a survey. IEEE Trans Pattern Anal Mach Intell 44(1):154–180. https://doi.org/10.1109/TPAMI.2020.3008413

Gesemann S, Huhn F, Schanz D, et al (2016) From noisy particle tracks to velocity, acceleration and pressure fields using b-splines and penalties. In: 18th international symposium on applications of laser techniques to fluid mechanics, no. 186 in Conference Proceedings online, Book of Abstracts, pp 1–17, https://elib.dlr.de/101422/

Godbersen P, Schröder A (2020) Functional binning: improving convergence of Eulerian statistics from Lagrangian particle tracking. Meas Sci Technol 31(9):095304. https://doi.org/10.1088/1361-6501/ab8b84

Huang X, Zhang Y, Xiong Z (2021) High-speed structured light based 3d scanning using an event camera. Opt Express 29(22):35864–35876. https://doi.org/10.1364/OE.437944

Joubert D, Hébert M, Konik H et al (2019) Characterization setup for event-based imagers applied to modulated light signal detection. Appl Opt 58(6):1305–1317. https://doi.org/10.1364/AO.58.001305

Lichtsteiner P, Posch C, Delbruck T (2008) A 128\(\times\)128 120 dB 15 \(\upmu\)s latency asynchronous temporal contrast vision sensor. IEEE J Solid-State Circuits 43(2):566–576. https://doi.org/10.1109/JSSC.2007.914337

Lynch K, Scarano F (2013) A high-order time-accurate interrogation method for time-resolved PIV. Meas Sci Technol 24(3):035305. https://doi.org/10.1088/0957-0233/24/3/035305

Mahowald M (1992) VLSI analogs of neuronal visual processing: a synthesis of form and function. PhD thesis, California Institute of Technology, Pasadena (CA), https://resolver.caltech.edu/CaltechCSTR:1992.cs-tr-92-15

Muglikar M, Gallego G, Scaramuzza D (2021) ESL: event-based structured light. CoRR abs/2111.15510. https://arxiv.org/abs/2111.15510

Robotics and Perception Group (2022) Event-based vision resources. https://github.com/uzh-rpg/event-based_vision_resources

Rusch A, Rösgen T (2021) TrackAER: Real-time event-based particle tracking. In: 14th international symposium on particle image velocimetry (ISPIV 2021). Illinois Institute of Technology, Chicago, IL, p 176, https://doi.org/10.18409/ispiv.v1i1.176

Rusch A, Rösgen T (2022) Online event-based insights into unsteady flows with TrackAER. In: 20th international symposium on application of laser and imaging techniques to fluid mechanics, Lisbon, Portugal, https://www.research-collection.ethz.ch/handle/20.500.11850/588738

Schlatter P, Örlü R (2010) Assessment of direct numerical simulation data of turbulent boundary layers. J Fluid Mech 659:116–126. https://doi.org/10.1017/S0022112010003113

Sciacchitano A, Scarano F, Wieneke B (2012) Multi-frame pyramid correlation for time-resolved PIV. Exp Fluids 53:1087–1105. https://doi.org/10.1007/s00348-012-1345-x

Takatani T, Ito Y, Ebisu A, et al (2016) Event-based bispectral photometry using temporally modulated illumination. In: IEEE conference computer vision and pattern recognition (CVPR)

Tayarani-Najaran MH, Schmuker M (2021) Event-based sensing and signal processing in the visual, auditory, and olfactory domain: A review. Frontiers in Neural Circuits. https://doi.org/10.3389/fncir.2021.610446

Westerweel J (2002) Theoretical analysis of the measurement precision in particle image velocimetry. Exp Fluids 29(Suppl):S3–S12

Westerweel J, Scarano F (2005) Universal outlier detection for PIV data. Exp Fluids 39(6):1096–1100. https://doi.org/10.1007/s00348-005-0016-6

Westerweel J, Dabiri D, Gharib M (1997) The effect of a discrete window offset on the accuracy of cross-correlation analysis of digital PIV recordings. Exp Fluids 23:20–28. https://doi.org/10.1007/s003480050082

Willert C (2015) High-speed particle image velocimetry for the efficient measurement of turbulence statistics. Exp Fluids 56(1):17. https://doi.org/10.1007/s00348-014-1892-4

Willert C, Klinner J (2022a) Event-based imaging velocimetry: an assessment of event-based cameras for the measurement of fluid flows. Exp Fluids 63:101. https://doi.org/10.1007/s00348-022-03441-6

Willert C, Klinner J (2022b) Event-based imaging velocimetry applied to a cylinder wake flow in air. In: 20th international symposium on application of laser and imaging techniques to fluid mechanics, https://elib.dlr.de/187568/1/LISBON_LxSymp_2022_paper230.pdf

Willert C, Schanz D, Novara M, et al (2021) Multi-resolution, time-resolved PIV measurements of a decelerating turbulent boundary layer near separation. In: 14th international symposium on particle image velocimetry (ISPIV 2021), https://doi.org/10.18409/ispiv.v1i1.77

Funding

Open Access funding enabled and organized by Projekt DEAL. This work was made possible through DLR-internal funding.

Author information


Contributions

Not applicable due to single authorship; the material contained in this manuscript was prepared and written solely by the author.


Ethics declarations

Conflict of interest

There are no conflicts of interest to declare.

Ethics approval

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information


Supplementary file 1 (mp4 9719 KB)

Supplementary file 2 (mp4 14457 KB)

Supplementary file 3 (mp4 24118 KB)

Supplementary file 4 (mp4 20902 KB)

Appendices

Appendix A Estimation of particle image density

Compared to conventional frame-based imaging, estimating the particle image density from asynchronous event data is slightly different because particles appear at distinct times. Therefore, a measure of particle images per pixel and unit time would be more appropriate. In the case of pulsed illumination, events are assigned to the pulse timing as described in the main text (cf. Fig. 4), allowing the event data to be rendered as pseudo-images. These pseudo-images can then be used to estimate the particle image density in ppp, allowing a comparison to frame-based imaging approaches.
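The assignment of events to light pulses can be sketched as follows. This is a minimal illustration, not the exact procedure of Fig. 4: it assumes timestamps in microseconds, a known time \(t_0\) of the first pulse, polarity coded as \(\pm 1\), and a hypothetical latency window `max_latency` beyond which late events are discarded.

```python
import numpy as np

def render_pseudo_images(x, y, t, pol, t0, period, shape, max_latency=None):
    """Bin events into one pseudo-image per light pulse (positive events only).

    x, y: integer pixel coordinates; t: timestamps [us]; pol: polarity (+1/-1).
    t0: time of the first light pulse [us]; period: 1/f_p [us].
    Events arriving later than max_latency after their pulse are discarded.
    """
    phase = t - t0
    k = np.floor(phase / period).astype(int)     # pulse index of each event
    lag = phase - k * period                     # delay after that pulse
    keep = (pol > 0) & (k >= 0)
    if max_latency is not None:
        keep &= lag < max_latency
    n_frames = int(k[keep].max()) + 1 if keep.any() else 0
    frames = np.zeros((n_frames, *shape), dtype=np.int32)
    np.add.at(frames, (k[keep], y[keep], x[keep]), 1)   # count events per pixel
    return frames

x = np.array([5, 6, 5, 7])
y = np.array([3, 3, 4, 4])
t = np.array([10.0, 40.0, 215.0, 390.0])          # us
pol = np.array([1, 1, 1, -1])
frames = render_pseudo_images(x, y, t, pol, t0=0.0, period=200.0,
                              shape=(8, 8), max_latency=100.0)
print(frames.shape, frames.sum())
```

`np.add.at` is used instead of fancy-index assignment so that several events falling on the same pixel within one pulse are all counted.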

Depending on scattering behavior and imaging conditions, a given particle illuminated by a light pulse can produce multiple events, both spatially and temporally. Collected into pseudo-images, these events form clusters of pixels representing individual particle images that can be counted. The cluster count is then used to estimate the particle image density in ppp and the particle image size \(d_i\). Here, the mean particle image size \(d_i\) is defined as the square root of the average cluster size. The ppp estimate also includes random event noise, which varies with the EBV sensor’s bias settings. For the presented measurements this random noise is typically below one percent but may reach several percent (see, e.g., Fig. 8c). The random event contribution could be estimated by applying Lagrangian particle tracking, which separates noise from particle image tracks.
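A minimal version of the cluster count, assuming 8-connected clusters of non-zero pixels (the connectivity is an assumption) and counting random noise events as clusters, as the estimate above does:

```python
import numpy as np
from collections import deque

def cluster_stats(img):
    """Count 8-connected clusters of non-zero pixels in a pseudo-image.

    Returns (ppp, d_i): clusters per pixel and the mean particle image size,
    taken as the square root of the average cluster area in pixel.
    """
    occupied = img > 0
    seen = np.zeros_like(occupied)
    sizes = []
    rows, cols = occupied.shape
    for r0, c0 in zip(*np.nonzero(occupied)):
        if seen[r0, c0]:
            continue
        seen[r0, c0] = True
        queue, size = deque([(r0, c0)]), 0
        while queue:                      # breadth-first flood fill
            r, c = queue.popleft()
            size += 1
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if (0 <= rr < rows and 0 <= cc < cols
                            and occupied[rr, cc] and not seen[rr, cc]):
                        seen[rr, cc] = True
                        queue.append((rr, cc))
        sizes.append(size)
    ppp = len(sizes) / occupied.size
    d_i = float(np.sqrt(np.mean(sizes))) if sizes else 0.0
    return ppp, d_i

img = np.zeros((10, 10), dtype=int)
img[1:3, 1:3] = 1          # 2x2 particle image
img[5, 2] = img[6, 3] = 1  # diagonal pair, one cluster under 8-connectivity
img[7, 7] = 1              # single-pixel event (particle or noise)
ppp, d_i = cluster_stats(img)
print(f"ppp = {ppp:.3f}, d_i = {d_i:.2f} pixel")
```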

For the PIV data presented in Sect. 5.1 and Table 1, the particle image density is estimated by first subtracting the mean intensity of all images from each individual PIV recording. The residual image is then binarized, resulting in clusters that can be sized and counted as for the event-based pseudo-images. Because of the mean intensity subtraction, the actual ppp of the PIV recordings may be higher than estimated.
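The corresponding estimate for frame-based PIV recordings might look as follows; the threshold value and the simple stack-based cluster count are illustrative assumptions. Subtracting the ensemble mean also removes part of each particle's intensity, so dim particles can drop below the threshold, which is one reason the ppp tends to be underestimated.

```python
import numpy as np

def count_clusters(mask):
    """Number of 8-connected clusters in a boolean mask (stack-based fill)."""
    seen = np.zeros_like(mask)
    n = 0
    for r0, c0 in zip(*np.nonzero(mask)):
        if seen[r0, c0]:
            continue
        n += 1
        stack = [(r0, c0)]
        seen[r0, c0] = True
        while stack:
            r, c = stack.pop()
            for rr in range(max(r - 1, 0), min(r + 2, mask.shape[0])):
                for cc in range(max(c - 1, 0), min(c + 2, mask.shape[1])):
                    if mask[rr, cc] and not seen[rr, cc]:
                        seen[rr, cc] = True
                        stack.append((rr, cc))
    return n

def piv_ppp(images, threshold):
    """Estimate ppp of each PIV image after subtracting the ensemble mean image.

    images: (n_images, rows, cols) array; threshold applies to the residual.
    """
    residual = images - images.mean(axis=0)
    return np.array([count_clusters(res > threshold) / res.size for res in residual])

images = np.full((2, 10, 10), 100.0)   # uniform background
images[0, 2, 2] += 50
images[0, 7, 7] += 50
images[1, 4, 5] += 50
print(piv_ppp(images, threshold=10.0))
```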

Appendix B Supplementary material

Animated sequences of the acquired event data are provided as supplementary material. All sequences were recorded with a laser pulsing frequency of \(f_p \!=\! 5\) kHz, with pseudo-frames incremented by \(1/f_p \!=\! 200\,\upmu\)s. At a playback speed of 25 frames/s the events are displayed at \(0.005\times\) actual speed. All sequences have a duration of 50 ms. Only positive events are rendered.

- turbulent jet in water: 2 ms time-slices (10 pulses)
- laminar boundary layer: 1 ms time-slices (5 pulses)
- flow around rib (upstream location): 2 ms time-slices (10 pulses)
- flow around rib (downstream location): 2 ms time-slices (10 pulses)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Willert, C.E. Event-based imaging velocimetry using pulsed illumination. Exp Fluids 64, 98 (2023). https://doi.org/10.1007/s00348-023-03641-8
