Abstract

Objectives

This study aims to define consensus-based criteria for acquiring and reporting prostate MRI and to establish prerequisites for image quality.

Methods

A total of 44 leading urologists and urogenital radiologists who are experts in prostate cancer imaging from the European Society of Urogenital Radiology (ESUR) and the EAU Section of Urologic Imaging (ESUI) participated in a Delphi consensus process. Panellists completed two rounds of questionnaires with 55 items under three headings: image quality assessment, interpretation and reporting, and radiologists’ experience plus training centres. Of the 55 questions, 31 were rated for agreement on a 9-point scale, and 24 were multiple-choice or open. For agreement items, consensus agreement required that ≥ 70% of the panellists agreed (score 7–9) and ≤ 15% disagreed (score 1–3). For the other questions, consensus was defined as ≥ 50% of votes.

Results

Twenty-four of 31 agreement items and 11 of 16 other questions reached consensus. Agreement statements were (1) reporting of image quality should be performed and implemented into clinical practice; (2) for interpretation performance, radiologists should use self-performance tests with histopathology feedback, compare their interpretation with expert reading and use external performance assessments; and (3) radiologists must attend theoretical and hands-on courses before interpreting prostate MRI. Limitations are that the results are expert opinions and not based on systematic reviews or meta-analyses. There was no consensus on statements about using outcomes of prostate MRI assessment as a quality marker.

Conclusions

An ESUR and ESUI expert panel showed high agreement (74%) on issues improving prostate MRI quality. Checking and reporting of image quality are mandatory. Prostate radiologists should attend theoretical and hands-on courses, followed by supervised education, and must perform regular performance assessments.

Key Points

• Multi-parametric MRI in the diagnostic pathway of prostate cancer has a well-established upfront role in the recently updated European Association of Urology guideline and American Urological Association recommendations.

• Suboptimal image acquisition and reporting at an individual level will result in clinicians losing confidence in the technique and returning to the (non-MRI) systematic biopsy pathway. Therefore, it is crucial to establish quality criteria for the acquisition and reporting of mpMRI.

• To ensure high-quality prostate MRI, experts consider checking and reporting of image quality mandatory. Prostate radiologists must attend theoretical and hands-on courses, followed by supervised education, and must perform regular self- and external performance assessments.

Introduction

Multi-parametric MRI (mpMRI) in the diagnostic pathway of prostate cancer (PCa) has a well-established upfront role in the recently updated European Association of Urology (EAU) guideline and American Urological Association recommendations [1, 2]. For biopsy-naïve men with suspicion of PCa, based on an elevated serum prostate-specific antigen level or abnormal digital rectal examination, it is now recommended to undergo an mpMRI before biopsy. Incorporation of mpMRI in the diagnostic pathway of men with clinical suspicion of PCa has several advantages compared to a systematic transrectal ultrasonography-guided biopsy (TRUSGB) approach. MRI can rule out clinically significant (cs)PCa and, therefore, will result in fewer unnecessary prostate biopsies [3,4,5]. Also, mpMRI reduces overdiagnosis and overtreatment of low-grade cancer [5,6,7,8,9]. Finally, mpMRI allows targeted biopsies of those lesions assessed as suspicious, enabling better risk stratification [10].

If one wants to take advantage of the ‘MRI pathway’, annually 1,000,000 men in Europe need to have a pre-biopsy MRI [11]. Performing such a high number of mpMRIs with high-quality acquisition and high-quality reporting is a significant challenge for the uroradiological community. Fortunately, the recently updated Prostate Imaging-Reporting and Data System (PI-RADS) version 2.1 defines global standardization of reporting and recommends uniform acquisition [12]. However, there is a lack of consensus on how to assure and uphold mpMRI acquisition and reporting quality. There is also a need to define requirements for learning and accumulation of reporting experience for mpMRI.

Suboptimal image acquisition and reporting at an individual level will result in clinicians losing confidence in the technique and returning to the (non-MRI) TRUS biopsy pathway. Therefore, it is crucial to establish quality criteria for both acquisition and reporting of mpMRI. Thus, this study aims to define consensus-based criteria for acquiring and reporting mpMRI scans and determining the prerequisites for mpMRI quality.

Materials and methods

A Delphi consensus process was undertaken to formulate recommendations regarding three different areas in the diagnostic MRI pathway of PCa: (1) image quality assessment of mpMRI; (2) interpretation and reporting of mpMRI; and (3) reader experience and training requirements. The Delphi method is a technique of structured and systematic information gathering from experts on a specific topic using a series of questionnaires [13]. In this study, the diagnostic role of mpMRI in biopsy-naïve men with a suspicion of PCa was considered.

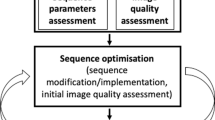

The Delphi process was carried out in four phases (Fig. 1). (1) Panellists from the European Society of Urogenital Radiology (ESUR) and EAU Section of Urologic Imaging (ESUI) were selected based on expertise and publication record in PCa diagnosis, and on their involvement in guideline development. (2) A questionnaire was created with items that were identified by a subcommittee of the ESUR, based on the statements from a recent UK consensus paper on implementation of mpMRI for PCa detection [14]. (3) Panel-based consensus findings were determined using an online Delphi process. For this purpose, an internet survey was created in Google Forms and sent by email to the members of the group. In the second round, a reminder to complete the questionnaires was sent by email. The panellists anonymously completed two rounds of a questionnaire consisting of 39 items (including 55 subquestions). Second-round voting was performed with knowledge of the entire group’s first-round responses. Outcomes of the multiple-choice and open questions were displayed graphically, so that panellists could reflect on the results before selecting a response in the second round. For inclusion in the final recommendations, each survey item was required to have reached group consensus by the end of the two survey rounds. (4) The items of the questionnaires were analysed, and consensus statements were formulated based on the outcomes. In total, 31 of 55 items were rated for agreement on a 9-point Likert scale.

An item scored as ‘agree’ (score 7–9) by ≥ 70% of participants and disagree (score 1–3) by ≤ 15% constituted ‘consensus agreement’ for an item. An item scored as ‘disagree’ (score 1–3) by ≥ 70% of participants and agree (score 7–9) by ≤ 15% was considered as ‘consensus disagreement’. The other items (24 of 55) were multiple-choice or open questions and were presented graphically. For the multiple-choice or open questions to reach consensus, a panel majority scoring of ≥ 50% was required.
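The consensus rules above can be expressed as a short sketch. The function names and the vote lists used to exercise them are hypothetical and only illustrate the thresholds stated in the text; this is not the authors' analysis code. Integer arithmetic is used so that the 70%, 15% and 50% boundaries are compared exactly.

```python
from collections import Counter
from typing import Optional, Sequence

AGREE_SCORES = range(7, 10)    # scores 7-9 count as 'agree'
DISAGREE_SCORES = range(1, 4)  # scores 1-3 count as 'disagree'


def classify_agreement_item(scores: Sequence[int]) -> str:
    """Classify one 9-point agreement item using the thresholds in the text."""
    n = len(scores)
    if n == 0:
        raise ValueError("at least one score is required")
    agree = sum(s in AGREE_SCORES for s in scores)
    disagree = sum(s in DISAGREE_SCORES for s in scores)
    # >= 70% agree and <= 15% disagree -> consensus agreement
    if 100 * agree >= 70 * n and 100 * disagree <= 15 * n:
        return "consensus agreement"
    # >= 70% disagree and <= 15% agree -> consensus disagreement
    if 100 * disagree >= 70 * n and 100 * agree <= 15 * n:
        return "consensus disagreement"
    return "no consensus"


def multiple_choice_consensus(votes: Sequence[str]) -> Optional[str]:
    """Return the winning option if it has >= 50% of votes, else None.

    Assumption: with an exact 50/50 split between two options, the option
    counted first is returned; the text does not say how ties were handled.
    """
    if not votes:
        raise ValueError("at least one vote is required")
    option, top = Counter(votes).most_common(1)[0]
    return option if 2 * top >= len(votes) else None


# Hypothetical panel of 44: 31 panellists score 7, 13 score 5.
print(classify_agreement_item([7] * 31 + [5] * 13))
```

Under these rules, an item with many neutral scores (4–6) can fail to reach consensus even when almost nobody disagrees.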

Results

The response rate for both rounds was 58% (44 of 76). The final panel comprised 44 urologists and urogenital radiologists who are experts in prostate cancer imaging. After the first round, eight subquestions were deleted based on comments from the panellists in the free-text fields because they considered these items either a duplication or not relevant (questions: 8b, 9b, 10b, 16c, 16d, 19b, 26b, 32b; Tables 1, 2 and 3). All deleted subquestions were questions without consensus in the first round.

After the first round of the Delphi process, consensus agreement was obtained in 19 of 31 (61%) questions that could be rated on a 1–9 scale. Consensus was obtained in 1 of 24 (4.2%) of multiple-choice/open questions. After the second round, this improved to 24 of 31 (77%) and 11 of 16 (69%), respectively; the second denominator is smaller because eight subquestions were deleted after the first round. None of the statements received consensus disagreement. Agreement statements combined with the outcomes of the multiple-choice/open questions were used to provide input for the recommendations regarding image quality and learning of prostate mpMRI and expertise of (training) centres (Tables 4 and 5).
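The percentages reported here can be re-derived from the counts. The sketch below does so with a hypothetical helper `pct`; the pooled figure at the end assumes that the 74% overall agreement quoted in the conclusions of the abstract comes from combining both question types after the second round, which the text does not state explicitly.

```python
def pct(count: int, total: int, ndigits: int = 0) -> float:
    """Percentage of count in total, rounded to ndigits decimals."""
    value = round(100 * count / total, ndigits)
    return int(value) if ndigits == 0 else value


# Counts taken from the text.
response_rate = pct(44, 76)          # panellists who responded
round1_agreement = pct(19, 31)       # agreement items, round 1
round1_other = pct(1, 24, ndigits=1) # multiple-choice/open, round 1
round2_agreement = pct(24, 31)       # agreement items, round 2
round2_other = pct(11, 16)           # multiple-choice/open, round 2

# Assumption: pooled consensus rate over all 47 remaining questions.
pooled = pct(24 + 11, 31 + 16)

print(response_rate, round1_agreement, round1_other,
      round2_agreement, round2_other, pooled)
```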

Section 1: Image quality assessment

The panellists consensually agreed on all five agreement statements in this section (Table 1). Consensus was reached on 2 out of 3 multiple-choice questions. Technical image quality measures should be checked (question (Q)1) and reported (Q2), which can be done qualitatively through visual assessment by radiologists (Q5). Checking image quality is realistic and should be implemented into clinical practice (Q3). A majority of the panellists voted for regular external and objective image quality assessment at intervals of 6 months or longer (70%; 31 of 44 panellists). There was no consensus on whether image quality assessment should be performed after a set number of cases; panellists chose an interval of 300 or ≥ 400 cases in 25% (11 of 44 panellists) and 41% (18 of 44), respectively. Image quality checks could also be performed on a randomly selected sample of cases; a majority (64%; 28 of 44 panellists) agreed that a selection of 5% of exams is most appropriate, but commented that this could depend on the number of cases per centre.

Furthermore, the use of a standardized phantom for apparent diffusion coefficient (ADC) measurements is advocated (Q4), to enable quantifiable ADC values that could be used as a threshold for the detection of csPCa in the peripheral zone.

Section 2: Interpretation and reporting of mpMRI

The panellists reached consensus on 5 of 10 agreement statements in this section (Table 2). There was no consensus on the multiple-choice questions in this section.

There was agreement on the use of self-performance tests to evaluate a radiologist’s performance (Q8a). Panellists did not agree upon the ideal frequency for this evaluation (Q8b; only answered in round 1). Consensus was reached that histopathologic feedback is mandatory to evaluate radiologists’ interpretation performance (Q11). Consensus was also reached that comparing radiologists’ performance with expert reading (Q12), external performance assessments (Q9a) and internet-based histologically validated cases (Q13) should be part of the quality assessment, to improve individual radiologists’ interpretation skills.

There was no consensus on using institution-based audits as part of the quality assessment on acquisition and reporting (Q10a). There was also no consensus on using the percentage of non-suspicious mpMRI (PI-RADS 1 or 2) as a marker for the quality of reporting (Q15a), on using the percentage of PI-RADS 3, 4 or 5 as a marker for the quality of interpretation (Q16a), or on the questions about monitoring the percentages of PI-RADS 1–2 (non-suspicious), 3 (equivocal) or 4–5 (suspicious) lesions as markers for the quality of scan interpretations. Multiple panellists commented that the percentages in Q15 and Q16 are highly dependent on the prevalence of csPCa in the population at risk. There was no agreement on making a database with MRI and correlative histology mandatory (Q14).

Section 3: Experience and training centres

This section comprised questions regarding general requirements for radiologists who interpret prostate mpMRI and statements on knowledge levels and experience (Table 3). Consensus was reached on 14 out of 16 agreement statements (88%) and 9 out of 11 multiple-choice/open questions (82%).

General requirements

Before independently reading prostate mpMRI, radiologists should undertake a combination of core theoretical prostate mpMRI courses with lectures on the existing knowledge about prostate cancer (imaging) and hands-on practice at workstations where experts supervise reporting (Q17). The panellists agreed upon certification of training (Q26a). However, there was no consensus on which body (national or European) should be the certifying organization (Q26b). For good prostate mpMRI quality, assessment of the technical quality measures should be in place (Q33), and minimal technical requirements according to PI-RADS v2 should be met (Q34). Panellists agreed that peer reviews of image quality should be organized (Q35). PI-RADS should be used as a basic assessment tool (Q37). There was no consensus about mandating double-reading (Q36).

A prerequisite for radiologists who interpret and report prostate mpMRI should be that they participate in the multidisciplinary team (MDT) meetings or attend MDT-type workshops where patient-based clinical scenarios are discussed (Q19a). There was no agreement on the number of MDT meetings that should be attended per year. An MDT must include mpMRI review with histology results from the biopsy and, if performed, radical prostatectomy specimens (Q20) and presence of representatives from the urology, radiology, pathology and medical and radiation oncology departments (Q21). Prostate radiologists should have roles in the MDT in shared decision-making on (how to perform) targeted biopsy (Q23). Within this MDT, they should be aware of alternative diagnostic methods (risk stratification algorithms in diagnostic and treatment work-up) (Q25). Prostate radiologists should know the added value of mpMRI and the consequences of false positive or false negative mpMRI (Q24).

Knowledge levels

There was no consensus about introducing several knowledge levels for prostate radiologists (Q32a), for instance, general (basic), good clinical (subspeciality) and top-level (reference centre). The panellists answered multiple-choice questions about the experience requirements for ‘basic’ versus ‘expert’ prostate radiologists and reached consensus on 8 out of 9 (sub)questions (see Table 4).

Novice prostate radiologists should begin with supervised reporting. A majority of the panellists favoured supervised reporting for at least 100 cases before independent reporting (57%; 25 of 44 panellists). In total, novice prostate radiologists should have read 400 cases to qualify as a ‘basic prostate radiologist’ (93%; 41 of 44 panellists). They should be carrying out a minimum of 150 cases/year (52%; 23 of 44 panellists) and perform an examination every year (57%; 25 of 44 panellists). In double-reads, basic prostate radiologists should have at least 80% agreement with an expert training centre read (52%; 23 of 44 panellists) on the assessment of PI-RADS 1–2 versus 3–5 lesions.

Expert prostate radiologists should have read at least 1000 cases (77%; 34 of 44 panellists). There was no consensus on how many exams an expert radiologist should read annually. Eighteen of 44 (41%) panellists favoured 200 cases/year, while 14 out of 44 (32%) panellists thought that expert radiologists should be carrying out a minimum of 500 cases/year. Expert radiologists should perform an examination every 4 years (75%; 33 of 44 panellists). They should have at least 90% agreement with an expert training centre read (64%; 28 of 44 panellists).

Fifty percent of the panellists (22 of 44) voted that a centre should perform at least 500 cases a year to give hands-on training. There was no consensus on the required number of cases per year a high-throughput centre should perform before being able to organize educational courses.

Discussion

There is a lack of evidence on how to assess prostate mpMR image quality and on the requirements for those reading the examinations, including learning and experience prerequisites for independent reporting. This Delphi consensus documented by expert radiologists and expert urologists from the ESUR and the ESUI provides a set of recommendations to address these issues. They are offered as a starting point to improve the acquisition and reporting quality of mpMR images.

Three headings summarize the outcomes: (1) image quality assessment, (2) interpretation and reporting and (3) experience and training centres.

Image quality assessment

There is a considerable variation in prostate MR image quality and compliance with recommendations on acquisition parameters. In a recent UK quality audit, 40% of patients did not have a prostate MRI that was adequate for interpretation, with a 38–86% compliance variation with recognized acquisition standards [12, 15,16,17]. The panellists agreed that reporting of image quality must be performed and implemented into clinical practice. Checking image quality was expected to improve mpMRI reproducibility. Before translating these recommendations into clinical practice, efforts are needed to develop qualitative and preferably also quantitative criteria to assess image quality.

Interpretation and reporting

The panellists reached consensus on using self-performance tests, with histopathologic feedback, preferably compared to expert reading as well as to external performance assessments to determine individual radiologists’ reporting accuracy. A lower level of PI-RADS 3 cases (indeterminate probability of csPCa) is seen in expert centres compared to non-expert centres in biopsy-naïve men [7, 18]. However, the panel did not reach consensus on the use of cut-off levels for the various PI-RADS categories (1–2, 3 and 4–5). A minority of panellists favoured the use of a percentage as an indicator for the interpretation quality; most of them suggested a minimal PI-RADS 1–2 percentage of 20%, a maximum PI-RADS 3 percentage of 20–30% and a minimum percentage of PI-RADS 4 and PI-RADS 5 of 20–30% each. The high dependence of the PI-RADS distribution on the prevalence of csPCa is the reason for this lack of consensus. Nonetheless, in specifically defined populations, e.g. European biopsy-naïve patients (average csPCa prevalence of 25–40%), the percentage of PI-RADS 3 is potentially an indication of the ‘certainty’ of diagnosis and thus of image quality and reading. Recent studies show that differences in PI-RADS 3 rates (6–28%) are also attributable to magnetic field strength (1.5 versus 3 T, thus image quality), to strict adherence to PI-RADS assessment and to the use of expert double-reading [7,8,9, 19, 20].

Experience and training centres

Only scarce data show a learning curve effect for mpMRI, the effect of a dedicated reader education program on PCa detection and diagnostic confidence and the effect of an online interactive case-based interpretation program [21,22,23,24]. Moreover, experienced urogenital radiologists show higher inter-reader agreement and better area under the receiver operating characteristic curve (AUC) values than radiologists with lower levels of experience [25,26,27,28,29]. In a study with a relatively small sample size, the AUC seems to remain stable after reading 300 cases but is significantly lower in readers who have read only 100 cases [27]. Nevertheless, thresholds for the number of prostate mpMRIs required before independent reporting and before reaching an expert level and the corresponding number of cases per year are not yet well established. Several previous studies suggested a dedicated training course followed by ≥ 100 expert-supervised mpMRI examinations [14, 22, 30]. For smaller centres or radiology groups that want to start a prostate MRI program, there are several existing (international) hands-on courses or possibilities to arrange (online) supervised readings by expert centres to facilitate this. The expert panel agreed that before interpreting mpMRI, in addition to the recommendations in sections 1 and 2, a course should be attended, including theoretical and hands-on practice. Also, the expert panel listed a set of criteria for ‘basic’ and ‘expert’ prostate radiologists (Table 4). Radiologists should have read 100 supervised cases before independent reporting, have read a minimum of 400 cases before being classified as a ‘basic’ prostate radiologist and continue to read a minimum of 150 cases a year. For being classified as an ‘expert’ prostate radiologist, a minimum number of 1000 cases should be read. Also, there should be an examination every year for a novice prostate radiologist, and every 4 years for an expert. The panel did not reach a consensus on the number of cases a year an expert prostate radiologist should read (200/≥ 500 cases).

Some limitations need to be recognized. One of the limitations of a Delphi consensus process is that the results reflect the opinions of a selection of experts and are not based on a systematic literature review or meta-analyses. The methodology captures what experts think, and not what the evidence indicates in data-poor areas of practice. Also, definitions for consensus are arbitrary, and other definitions could result in different recommendations. The opinions of the expert panel can represent the intuition of experienced, knowledgeable practitioners who anticipate what the evidence would or will show, but they also can be wrong. Conflicts of interest can also influence expert opinion. However, given the relatively large number of participants (44), the influence of these biases is likely to be minimal. This modified Delphi process used a rigorous methodology in which questions were carefully designed. The consensus process and its results should be used for structuring the discussions of important topics regarding prostate MR image quality that currently lack evidence in the literature.

Because the questions addressed by the consensus are highly relevant for daily clinical practice, we are careful to emphasise that agreement among experts does not mean that they are right. Nevertheless, this consensus contributes to our knowledge. It captures what experts in the field think today regarding the need to implement reliable, high-quality prostate mpMRI as a diagnostic examination in the diagnostic pathway of biopsy-naïve men at risk of csPCa. This consensus-based statement should be used as a starting point, from where specific (reporting) templates will be developed, and future studies should be performed to validate the criteria and recommendations.

Conclusion

This ESUR/ESUI consensus statement summarises in a structured way the opinions of recognized experts on diagnostic prostate mpMRI issues that are not adequately addressed by the existing literature. We focussed on recommendations on image quality assessment criteria and prerequisites for acquisition and reporting of mpMRI. Checking and reporting of prostate MR image quality are mandatory. Initially, prostate radiologists should have attended theoretical and hands-on courses, followed by supervised education, and must perform regular self- and external performance assessments, by comparing their diagnoses with histopathology outcomes.

Abbreviations

- csPCa: Clinically significant prostate cancer

- EAU: European Association of Urology

- ESUI: EAU Section of Urologic Imaging

- ESUR: European Society of Urogenital Radiology

- MDT: Multidisciplinary team

- mpMRI: Multi-parametric MRI

- PCa: Prostate cancer

- Q: Question

- TRUSGB: Transrectal ultrasound-guided biopsy

References

1. Mottet N, van den Bergh RCN, Briers E et al (2019) EAU – ESTRO – ESUR – SIOG guidelines on prostate cancer 2019 European Association of Urology guidelines 2019 Edition. European Association of Urology Guidelines Office, Arnhem, The Netherlands

2. Bjurlin MA, Carroll PR, Eggener S et al (2020) Update of the Standard Operating Procedure on the Use of Multiparametric Magnetic Resonance Imaging for the Diagnosis, Staging and Management of Prostate Cancer. J Urol 203:706–712

3. Moldovan PC, Van den Broeck T, Sylvester R et al (2017) What is the negative predictive value of multiparametric magnetic resonance imaging in excluding prostate cancer at biopsy? A systematic review and meta-analysis from the European Association of Urology Prostate Cancer guidelines panel. Eur Urol 72:250–266

4. Panebianco V, Barchetti G, Simone G et al (2018) Negative multiparametric magnetic resonance imaging for prostate cancer: what’s next? Eur Urol 74:48–54

5. Drost FH, Osses D, Nieboer D et al (2020) Prostate magnetic resonance imaging, with or without magnetic resonance imaging-targeted biopsy, and systematic biopsy for detecting prostate cancer: a Cochrane systematic review and meta-analysis. Eur Urol 77:78–94

6. van der Leest M, Cornel E, Israel B et al (2019) Head-to-head comparison of transrectal ultrasound-guided prostate biopsy versus multiparametric prostate resonance imaging with subsequent magnetic resonance-guided biopsy in biopsy-naive men with elevated prostate-specific antigen: a large prospective multicenter clinical study. Eur Urol 75:570–578

7. Kasivisvanathan V, Rannikko AS, Borghi M et al (2018) MRI-targeted or standard biopsy for prostate-cancer diagnosis. N Engl J Med 378:1767–1777

8. Rouviere O, Puech P, Renard-Penna R et al (2019) Use of prostate systematic and targeted biopsy on the basis of multiparametric MRI in biopsy-naive patients (MRI-FIRST): a prospective, multicentre, paired diagnostic study. Lancet Oncol 20:100–109

9. Ahmed HU, El-Shater Bosaily A, Brown LC et al (2017) Diagnostic accuracy of multi-parametric MRI and TRUS biopsy in prostate cancer (PROMIS): a paired validating confirmatory study. Lancet 389:815–822

10. Kasivisvanathan V, Stabile A, Neves JB et al (2019) Magnetic resonance imaging-targeted biopsy versus systematic biopsy in the detection of prostate cancer: a systematic review and meta-analysis. Eur Urol 76:284–303

11. Ferlay J, Colombet M, Soerjomataram I et al (2018) Cancer incidence and mortality patterns in Europe: estimates for 40 countries and 25 major cancers in 2018. Eur J Cancer 103:356–387

12. Turkbey B, Rosenkrantz AB, Haider MA et al (2019) Prostate imaging reporting and data system version 2.1: 2019 update of prostate imaging reporting and data system version 2. Eur Urol 76:340–351

13. Yeh JS, Van Hoof TJ, Fischer MA (2016) Key features of academic detailing: development of an expert consensus using the Delphi method. Am Health Drug Benefits 9:42–50

14. Brizmohun Appayya M, Adshead J, Ahmed HU et al (2018) National implementation of multi-parametric magnetic resonance imaging for prostate cancer detection - recommendations from a UK consensus meeting. BJU Int 122:13–25

15. Esses SJ, Taneja SS, Rosenkrantz AB (2018) Imaging facilities’ adherence to PI-RADS v2 minimum technical standards for the performance of prostate MRI. Acad Radiol 25:188–195

16. Burn PR, Freeman SJ, Andreou A, Burns-Cox N, Persad R, Barrett T (2019) A multicentre assessment of prostate MRI quality and compliance with UK and international standards. Clin Radiol 74:894 e819–894 e825

17. Engels RRM, Israel B, Padhani AR, Barentsz JO (2020) Multiparametric magnetic resonance imaging for the detection of clinically significant prostate cancer: what urologists need to know. part 1: acquisition. Eur Urol 77:457–468

18. van der Leest M, Israel B, Cornel EB et al (2019) High diagnostic performance of short magnetic resonance imaging protocols for prostate cancer detection in biopsy-naive men: the next step in magnetic resonance imaging accessibility. Eur Urol 76:574–581

19. Barentsz JO, van der Leest MMG, Israel B (2019) Reply to Jochen Walz. Let’s keep it at one step at a time: why biparametric magnetic resonance imaging is not the priority today. Eur Urol 2019;76:582-3: how to implement high-quality, high-volume prostate magnetic resonance imaging: Gd contrast can help but is not the major issue. Eur Urol 76:584–585

20. Barrett T, Slough R, Sushentsev N et al (2019) Three-year experience of a dedicated prostate mpMRI pre-biopsy programme and effect on timed cancer diagnostic pathways. Clin Radiol 74:894 e891–894 e899

21. Garcia-Reyes K, Passoni NM, Palmeri ML et al (2015) Detection of prostate cancer with multiparametric MRI (mpMRI): effect of dedicated reader education on accuracy and confidence of index and anterior cancer diagnosis. Abdom Imaging 40:134–142

22. Rosenkrantz AB, Ayoola A, Hoffman D et al (2017) The learning curve in prostate MRI interpretation: self-directed learning versus continual reader feedback. AJR Am J Roentgenol 208:W92–W100

23. Rosenkrantz AB, Begovic J, Pires A, Won E, Taneja SS, Babb JS (2019) Online interactive case-based instruction in prostate magnetic resonance imaging interpretation using prostate imaging and reporting data system version 2: effect for novice readers. Curr Probl Diagn Radiol 48:132–141

24. Gaziev G, Wadhwa K, Barrett T et al (2016) Defining the learning curve for multiparametric magnetic resonance imaging (MRI) of the prostate using MRI-transrectal ultrasonography (TRUS) fusion-guided transperineal prostate biopsies as a validation tool. BJU Int 117:80–86

25. Greer MD, Brown AM, Shih JH et al (2017) Accuracy and agreement of PIRADSv2 for prostate cancer mpMRI: a multireader study. J Magn Reson Imaging 45:579–585

26. Greer MD, Shih JH, Lay N et al (2019) Interreader variability of prostate imaging reporting and data system version 2 in detecting and assessing prostate cancer lesions at prostate MRI. AJR Am J Roentgenol 212:1197–1205

27. Gatti M, Faletti R, Calleris G et al (2019) Prostate cancer detection with biparametric magnetic resonance imaging (bpMRI) by readers with different experience: performance and comparison with multiparametric (mpMRI). Abdom Radiol (NY) 44:1883–1893

28. Girometti R, Giannarini G, Greco F et al (2019) Interreader agreement of PI-RADS v. 2 in assessing prostate cancer with multiparametric MRI: a study using whole-mount histology as the standard of reference. J Magn Reson Imaging 49:546–555

29. Hansen NL, Koo BC, Gallagher FA et al (2017) Comparison of initial and tertiary centre second opinion reads of multiparametric magnetic resonance imaging of the prostate prior to repeat biopsy. Eur Radiol 27:2259–2266

30. Puech P, Randazzo M, Ouzzane A et al (2015) How are we going to train a generation of radiologists (and urologists) to read prostate MRI? Curr Opin Urol 25:522–535

Acknowledgements

The authors would like to acknowledge all expert radiologists and urologists who participated as a panellist in the Delphi process, in particular the members of the European Society of Urogenital Radiology and the EAU Section of Urologic Imaging.

Funding

The authors state that this work has not received any funding.

Ethics declarations

Guarantor

The scientific guarantor of this publication is Professor Jelle O. Barentsz.

Conflict of interest

The authors of this manuscript declare no relationships with any companies, whose products or services may be related to the subject matter of the article.

Statistics and biometry

No complex statistical methods were necessary for this paper.

Informed consent

Institutional Review Board approval was not required because the research did not concern human or animal subjects.

Ethical approval

Institutional Review Board approval was not required because the research did not concern human or animal subjects.

Methodology

• prospective

• observational

• multicenter study

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

de Rooij, M., Israël, B., Tummers, M. et al. ESUR/ESUI consensus statements on multi-parametric MRI for the detection of clinically significant prostate cancer: quality requirements for image acquisition, interpretation and radiologists’ training. Eur Radiol 30, 5404–5416 (2020). https://doi.org/10.1007/s00330-020-06929-z

DOI: https://doi.org/10.1007/s00330-020-06929-z