Abstract

Allogeneic hematopoietic stem cell transplantation (allo-HSCT) is potentially curative for acute myeloid leukemia (AML). The inherent graft-versus-leukemia activity (GvL) may be optimized by donor lymphocyte infusions (DLI). Here we present our single-center experience of DLI use patterns and effectiveness, based on 342 consecutive adult patients receiving a first allo-HSCT for AML between 2009 and 2017. The median age at transplantation was 57 years (range 19–79), and the pre-transplant status was active disease in 58% and complete remission (CR) in 42% of cases. In a combined landmark analysis, patients in CR on day +30 and alive on day +100 were included. In this cohort (n=292), 93 patients received cryopreserved aliquots of peripheral blood-derived grafts for DLI (32%) and median survival was 55.7 months (2-year/5-year probability: 62%/49%). Median survival for patients receiving a first dose of DLI “preemptively,” in the absence of relapse and guided by risk marker monitoring (preDLI; n=42), or only after hematological relapse (relDLI; n=51) was 40.9 months (2-year/5-year: 64%/43%) vs 10.4 months (2-year/5-year: 26%/10%), respectively. Survival was inferior when preDLI was initiated at a time of genetic risk marker detection vs mixed chimerism or clinical risk only. Time to first-dose preDLI vs time to first-dose relDLI was similar, suggesting that early warning and intrinsically lower dynamics of AML recurrence may contribute to effectiveness of preDLI-modified GvL activity. Future refinements of the preemptive DLI concept will benefit from collaborative efforts to diagnose measurable residual disease more reliably across the heterogeneous genomic spectrum of AML.

Introduction

Allogeneic hematopoietic stem cell transplantation (allo-HSCT) is an effective, potentially curative treatment in patients with a range of acute and chronic leukemias, based on a potent graft-versus-leukemia (GvL) immune effect [1]. Moreover, when leukemic cells recur after allo-HSCT, single or repeated infusion of lymphocytes from the original donor in the absence of prophylactic immune suppression can lead to durable remissions. The flexibility of using donor lymphocyte infusions (DLI) has increased with the finding that cryopreserved aliquots of the donor cell preparation used for the original allo-HSCT, routinely prepared and stored under Good Manufacturing Practice conditions, retain the ability to induce GvL effects [2]. This proactive DLI strategy is applicable to graft preparations from granulocyte-colony stimulating factor (G-CSF)–primed and unprimed donors [3, 4].

The clinical outcome of allo-HSCT with optional DLI has been examined across a number of hematological cancer indications, as described in recent reviews [5,6,7]. Direct comparison of study results is hampered by the diversity of single-indication and mixed-indication basket designs used, with marked differences in patient selection and treatment settings. Thus, there is limited systematic information regarding allo-HSCT and DLI use patterns and outcome directly related to adult patients with AML. As a step toward future harmonized treatment strategies, a proposal for individualized, risk-adjusted use of DLI in AML patients has been published by the Acute Leukemia Working Party of the European Society for Blood and Marrow Transplantation [7].

The present study was designed to provide an update on our clinical experience by (i) focusing on AML in adults only; (ii) selecting a cohort that reflects the changes in the eligible patient population, notably older patients and those with comorbidities and extensive pretreatment, made possible by improved supportive care, inclusion of mismatched and unrelated donors, and a shift from myeloablative (MAC) toward reduced-intensity conditioning (RIC) [8]; and (iii) using a cohort with extensive follow-up that includes allo-HSCT with and without DLI from a single transplant center.

Patients, materials, and methods

Inclusion criteria and data collection

The electronic files of our unit at the Medical Center of the University of Freiburg, Germany, were used to identify all consecutive cases of allo-HSCT carried out between January 1, 2009, and December 31, 2017, in patients with confirmed diagnosis of AML and age ≥18 years. All patients had given their written informed consent to the treatment and use of information for research purposes. All analyses of human data were carried out in compliance with the relevant ethical regulations. All demographic data, treatment details, and information from regular clinical follow-up were prospectively collected in our dedicated allo-HSCT registry, with database lock for follow-up November 8, 2019, and analyzed retrospectively.

Allo-HSCT and DLI procedures

Indication setting, diagnostic procedures, and clinical protocols followed international guidelines and institutional policies. Allo-HSCT were carried out with allogeneic peripheral blood hematopoietic stem cells (PBHSC), collected after G-CSF stimulation and prepared under Good Manufacturing Practice conditions. HLA-identical donors were classified as HLA-matched sibling or unrelated donors, and non-identical donors (e.g., HLA-A, B, C, DRB1, DQB1: 9/10) as HLA-mismatched sibling or unrelated donors, respectively. Initiation of DLI treatment, for which no specific AML guidelines have been put forward [8], followed individualized assessment of clinical status after transplantation, discontinuation of prophylactic immunosuppression, history and current status of GvHD (no active GvHD), eligibility for other treatment options, laboratory and clinical risk factors, and patient consent. Frozen aliquots of the original G-CSF stimulated graft were routinely stored for future use in DLI [2]. After using up the original aliquots, 5 patients with extended DLI dosing received further DLI with unstimulated donor lymphocytes from the original donor, a sibling, or an unrelated second donor, respectively. The selected DLI dose regimens were consistent with previous reports and recommendations that emphasize individualized dose adjustments [2, 7, 9]. Institutional policies did not include any a priori upper limits on the number of DLI cycles that could be given or the duration of DLI treatment.

We also considered the fact that clinical decisions to perform DLI influence the choice of additional treatment modalities [5], including various chemotherapy regimens (CT), hypomethylating agents (HMA: azacytidine, decitabine), and FLT3-directed tyrosine-kinase inhibitors (TKI: sorafenib, midostaurin). The corresponding information was collected for all patients with DLI.

Genetic markers

Mixed chimerism in bone marrow or peripheral blood samples was assessed by fluorescence in situ hybridization (FISH) or by short tandem repeat (STR) analysis [10, 11]. For clinical assessment, mixed chimerism levels, with a conventional cut-off value of 5%, and kinetic profiles were considered risk indicators [7, 11]. The presence of leukemia cells in bone marrow or peripheral blood mononuclear cell preparations was defined by detection of specific mutations, rearrangements, or expression profiles for leukemia-associated genes (e.g., NPM1, FLT3, WT1, MLL, DEK, NUP214, CEBPA, CBFB, MYH11, RUNX1, RUNX1T1, EVI1, ETV6, AF6, AF9, AF10, RAEB-1, KMT2A, MLL3, PTD, PML-RARA, BCR-ABL, KIT, JAK2, TP53) [11] as part of the clinical laboratory practice at the Medical Center of the University of Freiburg, with continual updates of methods and procedures. The timing of mixed chimerism and genetic analyses was determined by routine clinical follow-up schedules, including bone marrow and peripheral blood analysis on day +30 post-transplant for remission assessment. Genetic data sets obtained with peripheral blood and bone marrow samples at the time of first diagnosis of AML in a given patient, when available, were aligned with the current version of the European LeukemiaNet risk classification scheme (ELN 2017) [8].

Response criteria and evaluation

The assessment of patient status followed international guidelines [8]. Briefly, CR was defined as bone marrow blasts < 5%, absence of circulating blasts and blasts with Auer rods, absence of extramedullary disease, absolute neutrophil count (ANC) ≥ 1000/μL, platelet count ≥ 100,000/μL, and transfusion independence. For the present study, we also included CR with incomplete hematological recovery in this category. Primary induction failure (PIF) was defined as failure to achieve any CR at any time despite treatment for AML. Progressive disease was defined as an increase in bone marrow blast percentage, increase of absolute blast counts in the blood, or new extramedullary disease. Relapse was defined as the recurrence of disease after CR, meeting one or more of the following criteria: ≥ 5% blasts in bone marrow or peripheral blood, extramedullary disease, or disease presence determined by clinical assessment. The temporal sequence of recurring remission and relapse events in a given patient, starting at time of AML diagnosis, was identified by sequential numbering (e.g., CR1, CR2, REL1).
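The CR criteria listed above can be read as a simple decision rule. The following is a minimal sketch, not the study's actual assessment procedure: the `Assessment` record and `remission_status` function are hypothetical names, and the handling of CR with incomplete hematological recovery (CRi) as "morphological criteria met, count recovery or transfusion independence not met" is an assumption, since the text only states that CRi was counted as CR.

```python
from dataclasses import dataclass


@dataclass
class Assessment:
    """Hypothetical record of one remission assessment."""
    marrow_blast_pct: float        # bone marrow blasts, percent
    circulating_blasts: bool       # blasts detected in peripheral blood
    auer_rods: bool                # blasts with Auer rods detected
    extramedullary_disease: bool
    anc_per_ul: float              # absolute neutrophil count per microliter
    platelets_per_ul: float        # platelet count per microliter
    transfusion_independent: bool


def remission_status(a: Assessment) -> str:
    """Return 'CR', 'CRi', or 'not in CR' from the criteria in the text."""
    morphologic_clearance = (
        a.marrow_blast_pct < 5
        and not a.circulating_blasts
        and not a.auer_rods
        and not a.extramedullary_disease
    )
    if not morphologic_clearance:
        return "not in CR"
    full_recovery = (
        a.anc_per_ul >= 1000
        and a.platelets_per_ul >= 100_000
        and a.transfusion_independent
    )
    # Assumption: CRi means clearance without full hematological recovery.
    return "CR" if full_recovery else "CRi"


def counts_as_cr(a: Assessment) -> bool:
    """In this study, CR and CRi were pooled into one category."""
    return remission_status(a) in ("CR", "CRi")
```

Pooling CR and CRi in `counts_as_cr` mirrors the study's stated choice. A stricter analysis would test for `"CR"` only.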

The presence of aGvHD was recorded with overall grade (0=none; grades I–IV) and organ-specific stages for skin, liver, and intestine (0=none; stages 1–4) using standard criteria [12]. The presence of cGvHD was assessed by organ-specific scores (1 to 3) and global assessment of severity (mild, moderate, severe) [13], with updates [14]. Subcategories of aGvHD and cGvHD, including overlap syndromes [13], were not evaluated separately in the present study.

Data analysis, endpoints, and statistics

The retrospective analysis of our single-center allo-HSCT database for adult AML patients included three hierarchically ordered steps for mutually exclusive and collectively exhaustive cohort partitioning (Online Resource 1a): (i) selection of first-time allo-HSCT only, since re-transplantation in AML is associated with a very different outcome [15]; (ii) selection of allo-HSCT meeting a combined landmark of CR on day +30 after transplantation and being alive without re-transplantation on day +100 vs those not meeting this landmark; and (iii) stratification of allo-HSCT meeting the combined day-100 landmark by DLI use, distinguishing between first-dose DLI given prior to any hematological relapse (preDLI), first-dose DLI given after relapse (relDLI), and no DLI during follow-up.
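The three partitioning steps can be sketched as a single classification function. This is an illustrative sketch only: the `Transplant` record, its field names, and the group labels are hypothetical and do not reflect the registry's actual schema. Two boundary choices are assumptions not stated in the text: an event on day +100 itself is treated as failing the landmark, and a first DLI dose given on the same day as relapse is counted as relDLI.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Transplant:
    """Hypothetical registry record; all day counts start at transplantation."""
    is_first_allo_hsct: bool
    cr_on_day_30: bool
    days_to_death: Optional[int]          # None if alive at last follow-up
    days_to_retransplant: Optional[int]   # None if never re-transplanted
    days_to_first_dli: Optional[int]      # None if no DLI during follow-up
    days_to_first_relapse: Optional[int]  # None if no hematological relapse


def meets_landmark(t: Transplant, landmark_day: int = 100) -> bool:
    """CR on day +30 and alive without re-transplantation on the landmark day."""
    events = [d for d in (t.days_to_death, t.days_to_retransplant) if d is not None]
    # Assumption: an event on the landmark day itself fails the landmark.
    return t.cr_on_day_30 and all(d > landmark_day for d in events)


def classify(t: Transplant) -> str:
    """Assign each transplant to exactly one group (steps i to iii)."""
    if not t.is_first_allo_hsct:
        return "not_first_allo_HSCT"
    if not meets_landmark(t):
        return "landmark_not_met"
    if t.days_to_first_dli is None:
        return "no_DLI"
    if t.days_to_first_relapse is None or t.days_to_first_dli < t.days_to_first_relapse:
        return "preDLI"
    # Assumption: DLI on or after the day of relapse counts as relDLI.
    return "relDLI"
```

Because each branch returns immediately, every record lands in exactly one group, which is what makes the partition mutually exclusive and collectively exhaustive.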

Patient-, disease-, and treatment-related variables of cohort subgroups were compared using Fisher’s exact test for categorical variables and the Mann–Whitney test for continuous variables. Baseline characteristics were summarized using median, interquartile range, and range for continuous measures and numbers and frequencies for categorical measures. As principal health outcome, we used survival with transplant [16, 17], defined here by the time from date of first allo-HSCT to death, from any cause, or date of re-transplantation, whichever occurred first, censored for status alive without re-transplantation at last follow-up. Overall survival, from date of first allo-HSCT to death, from any cause, censored for status alive at last follow-up, which aggregates benefit from first allo-HSCT and potential re-transplantation, was used for some comparative analyses. Conditional survival with first transplant was assessed with the day-100 landmark as described [18]. Follow-up times were assessed as described [19], accounting for death and re-transplantation. Probability of survival was estimated by the Kaplan–Meier method. Survival curves were compared by log-rank testing, and results were expressed as hazard ratios (HR) with 95% confidence intervals (95% CI). Cox proportional hazards regression models were used to assess impact of selected covariates at time of transplantation. Data were analyzed using GraphPad Prism version 8.3.0 (GraphPad Software, San Diego, CA, USA), accepting p<0.05 as indicating a statistically significant difference.
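To make the endpoint definition concrete, the sketch below derives a survival-with-transplant observation for one patient and computes a Kaplan–Meier estimate from a list of such observations. It is a plain-Python illustration under stated assumptions, not the GraphPad Prism analysis used in the study. The function names and the toy data in the usage note are hypothetical.

```python
from typing import List, Optional, Tuple

Observation = Tuple[float, bool]  # (time, event observed?)


def survival_with_transplant(
    days_to_death: Optional[int],
    days_to_retransplant: Optional[int],
    days_to_last_follow_up: int,
) -> Observation:
    """Time to death or re-transplantation, whichever comes first.

    Patients alive without re-transplantation are censored at last follow-up.
    """
    events = [d for d in (days_to_death, days_to_retransplant) if d is not None]
    if events:
        return min(events), True
    return days_to_last_follow_up, False


def kaplan_meier(observations: List[Observation]) -> List[Tuple[float, float]]:
    """Return (time, survival probability) at each time with at least one event."""
    curve = []
    survival = 1.0
    at_risk = len(observations)
    for t in sorted({time for time, _ in observations}):
        events = sum(1 for time, ev in observations if time == t and ev)
        leaving = sum(1 for time, _ in observations if time == t)
        if events:
            survival *= 1 - events / at_risk
            curve.append((t, survival))
        at_risk -= leaving  # events and censored cases both leave the risk set
    return curve


def median_survival(curve: List[Tuple[float, float]]) -> Optional[float]:
    """First time the estimate drops to 0.5 or below; None if not reached."""
    for t, s in curve:
        if s <= 0.5:
            return t
    return None
```

For example, with four toy observations `[(5, True), (8, False), (12, True), (20, False)]`, the estimate drops to 0.75 at time 5 and to 0.375 at time 12, so the median is 12. When the curve never falls to 0.5, `median_survival` returns `None`, which corresponds to a median that is "not reached".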

Results

Patient characteristics and GvL-directed therapy

A total of 342 adult patients with AML were treated with a first allo-HSCT at our unit between January 1, 2009, and December 31, 2017. The median age was 57 years (range 19–79), and the status at transplantation was active disease in 58% vs 42% with CR (Table 1). Toxicity-reduced conditioning was used in a majority of patients (79%), and graft donor selection showed a substantial contribution of unrelated HLA-matched (56%) and mismatched donors (22%). The median follow-up at time of database lock was 5.1 years.

Of the primary study cohort with first allo-HSCT, 292 of 342 patients (85%) met the combined day-100 landmark (CR on day +30; being alive without re-transplant on day +100) as the clinical setting most likely to qualify for future DLI use (Online Resource 1a). During follow-up, 93 patients (32%) received DLI, based on their clinical status, laboratory findings, availability of cryopreserved graft cells, and GvHD status. The first dose of DLI was given “preemptively”—prior to detecting any hematological relapse—in 42 patients (preDLI), based on routine monitoring for mixed chimerism and genetic markers combined with individual clinical risk assessment. Specifically, at the time of first-dose preDLI, 18 of 42 patients showed at least one molecular or cytogenetic risk marker, including 12 with molecular detection of target gene mutations, copy number changes, or increased expression, 5 with molecular genetic markers plus mixed chimerism, and one with cytogenetic changes plus mixed chimerism. Twenty-two patients showed mixed chimerism without reported genetic markers, and two had no reported genetic markers or mixed chimerism at the time of first preDLI dose. In addition, 51 patients received a first dose of DLI only after hematological relapse was detected (relDLI). As shown in Table 1, the clinical characteristics at time of transplantation are closely similar among total cohort, day-100 landmark cohort, and preDLI and relDLI cohorts, with no statistically significant differences identified.

Characteristics of DLI use and additional treatment

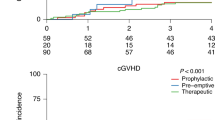

The characteristics of DLI use in first-time allo-HSCT patients meeting the day-100 landmark (Table 2) were closely similar between preDLI and relDLI groups, and the only statistically significant differences seen were related to DLI dose regimens. Thus, the preDLI group showed a higher number of DLI cycles per patient than the relDLI group [median (range), 6 (1–43) vs 3 (1–25); p=0.0002], and, for patients with ≥ 2 cycles, a longer interval from first-dose to last-dose DLI [median (range), 161 days (53–2114) vs 65 days (8–2472); p<0.0001] (Fig. 1 a, c). The proportion of patients with ≥3 DLI cycles was 86% for the preDLI group vs 61% for the relDLI group. There was no statistically significant difference in the number of cells per DLI between the preDLI and relDLI groups when comparing the respective first, second, and maximum doses given (Fig. 1 b). Regarding the temporal sequence, the median time from transplantation to discontinuation of prophylactic CsA, median time from discontinuing CsA to first-dose DLI, and median time from transplantation to first-dose DLI were comparable for the preDLI and relDLI groups (Fig. 1 d–f). Specifically, the time from transplantation to first-dose DLI was not statistically different in the preDLI and relDLI groups (median, 273 vs 191 days; p=0.13), or in the subset of preDLI-treated patients with subsequent hematological relapse (n=25) vs the relDLI group (median, 272 vs 191 days; p=0.37).

Fig. 1 Characteristics of preDLI and relDLI dose regimens in adult AML patients with first allo-HSCT. Box-and-whisker plots for numbers of DLI doses/patient given during the study period, comparing preDLI and relDLI (a); numbers of CD3 cells/kg body weight given per DLI infusion, separately for first, second and, if applicable, third or later maximum individual dose (b); time intervals between first and second and, if applicable, first and last dose of DLI in a given patient (c); time intervals from transplantation to cessation of prophylactic immunosuppression per patient (d); and time intervals from cessation of prophylactic immunosuppression to first dose DLI per patient (e). In panel f, the preDLI and relDLI cohorts are compared for time delay between transplantation and first dose DLI. Data in panels a–c are right-censored for patients alive without re-transplantation at the time of last follow-up. In panels a–e, boxes represent 1st quartile, median and 3rd quartile values, whiskers extend by 1.5 times interquartile range beyond the 1st and 3rd quartiles, and outliers are represented by circles (or numbers in parentheses, in panel e).

The incidence and severity of GvHD was monitored separately for the initial period after allo-HSCT, prior to first-dose DLI, and following DLI. The observed patterns for patients with preDLI and relDLI were not statistically different, as summarized in Table 2.

We also determined how commonly other treatment modalities, notably CT, HMA (azacytidine, decitabine), and FLT3-directed TKI (sorafenib, midostaurin), were introduced before or after a first dose of DLI. In the preDLI group (n=42), these added treatments were uncommon prior to first-dose DLI (TKI, 7% of patients; HMA, 5%), with a marked increase only when relapse occurred despite preDLI [subgroup with relapse (n=25): TKI, 24%; HMA, 52%; CT, 52%; HMA or CT, 80%]. In the relDLI group (n=51), the use of these treatments showed a similar pattern once relapse had occurred [TKI, n=13 (25%); HMA, n=32 (63%); CT, n=23 (45%); HMA or CT, n=44 (86%)], with the added treatment started either before or after patients received their first dose of relDLI in similar proportions: TKI start before/after first-dose relDLI in 5 vs 8 patients; HMA, 17 vs 15; CT, 11 vs 12; and HMA or CT, 26 vs 18.

Outcome

Survival with first transplant was selected as principal health outcome. For the total cohort of first-time allo-HSCT (n=342), median survival was 29.5 months, with 2-year and 5-year estimates of 53% and 43%, respectively. In patients meeting the day-100 landmark (n=292; Fig. 2), the median survival was 55.7 months (2-year/5-year: 62%/49%). As expected, failure to meet the day-100 landmark, indicative of very early treatment-related mortality or rapid leukemia progression, was associated with low median survival (1.7 months) and a 2-year estimated survival of 2%.

Fig. 2 Survival with first allo-HSCT in adult patients with AML. Composite view of Kaplan–Meier plots (a) and tabulated outcome parameters (b), matched for the entire study cohort (graph A1, column A2), the day 100 landmark cohort (graph B1, column B2), and the day 100 landmark subcohorts with preDLI and relDLI (graph C1, column C2), respectively. Survival times from day of transplantation. In the top panel, vertical tick marks along the survival curves indicate individual patients alive without re-transplantation at the respective time of last follow-up. The bottom panel includes types of observed endpoint events for each group.

Analysis of allo-HSCT that had met the day-100 landmark based on the type of DLI use revealed distinct outcomes (Fig. 2). Patients with preDLI (n=42) showed a median survival of 40.9 months (2-year/5-year: 64%/43%), and the observed endpoint events included relapse-related deaths (9 of 42; 21%), treatment-related deaths due to infection and GvHD (2 of 42; 5%), and re-transplantations (13 of 42; 31%), with 43% (18 of 42) alive without re-transplantation at last follow-up. In the relDLI setting (n=51), median survival was 10.4 months (2-year/5-year: 26%/10%). The observed endpoint events were relapse-related deaths (26 of 51; 51%), treatment-related death due to GvHD and infection subsequent to remission (1 of 51), death of unknown cause (1 of 51), and re-transplantation (20 of 51; 39%), with 6% (3 of 51) being alive without re-transplantation at last follow-up. The incremental benefit of re-transplantation in this setting, which was not the focus of our current study, is shown in Online Resource 2.

We further compared patients in the preDLI group who, just prior to receiving the first dose of DLI, were recorded either (i) with at least one of the more leukemia cell-specific genetic risk indicators (AML-typical gene mutations or expression patterns, or cytogenetic abnormalities), or (ii) only with less AML cell-specific risk indicators (mixed chimerism levels or kinetics; individualized physician’s judgement of clinical risk). As shown in Fig. 3, the subgroup with at least one genetic indicator (n=18) showed a median survival of 25.6 months (2-year/5-year: 56%/19.2%), and those without genetic indicator (n=24) did not reach median survival (2-year/5-year: 71%/58.0%). This difference was statistically significant [p=0.018; HR, 2.54 (95% CI, 1.10–5.86)].

Fig. 3 Survival with first allo-HSCT and preDLI by risk marker constellation at the time of first-dose preDLI. Kaplan–Meier plot and tabulated outcome parameters for the day 100 landmark cohort with preDLI use, stratified as follows: MC/Clin (n=24), includes 22 patients with detection of mixed chimerism (MC) and 2 with individual clinical risk assessment (Clin) at follow-up immediately preceding first-dose preDLI, without genetic risk markers at that time (non-specific risk indicators); Mol/CG ± MC (n=18), includes 17 patients with AML-typical molecular genetic findings (Mol), including 5 with concomitant mixed chimerism, and one with cytogenetic findings (CG), with concomitant mixed chimerism, reported at follow-up immediately preceding first-dose preDLI (leukemia-specific risk indicators). Survival times from day of transplantation.

For patients with no DLI during follow-up (n=199), median survival was not reached (2-year/5-year: 71%/62%). However, this group comprises two distinct clinical scenarios with very different prognosis and endpoint events. Thus, one subset of patients (37 of 199) relapsed after transplantation, received no DLI due to rapid progression or other treatment, and showed a low median survival of 8.4 months (2-year/5-year: 19%/7%). The observed endpoint events were relapse-related death (29 of 37; 78%), re-transplantation (6 of 37; 16%), unknown cause of death (1 of 37), or being alive without re-transplantation (1 of 37). The second subset with no DLI comprised patients who maintained CR (162 of 199) but remained at risk of delayed treatment-related mortality. In this setting, median survival was not reached (2-year/5-year: 83%/75%), 120 of 162 patients were alive at database lock, and 42 patients were reported with either treatment-related (38 of 42) or unknown (4 of 42) causes of death. The treatment-related causes (allowing multiple entries per patient) included GvHD in 14 of 38 patients; viral, bacterial, and fungal infections (21 of 38); post-transplant lymphoproliferative disorder (6 of 38); acute respiratory distress syndrome (3 of 38); and various types of single or multiple organ failure (10 of 38). As a cautionary note, this group with no DLI at database lock likely includes a proportion of patients who may receive DLI during their further clinical course, affecting our final outcome estimates. However, the median follow-up of 5.1 years achieved in this study (estimated 2-year follow-up: 95.2%; 95% CI 91.0–97), compared to the finding that most DLI are started within the first 2 years after allo-HSCT (Fig. 1 f), suggests that the necessary adjustments for DLI-based stratification, once follow-up is fully completed, should be moderate.
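The subgroup sizes reported in this section can be cross-checked with a few lines of arithmetic. The sketch below uses only counts stated in the text. The dictionary keys and helper names are hypothetical labels chosen for illustration.

```python
# Counts as reported in the Results text.
COUNTS = {
    "first_allo_hsct": 342,
    "landmark_met": 292,
    "preDLI": 42,
    "relDLI": 51,
    "no_DLI": 199,
    "no_DLI_relapsed": 37,
    "no_DLI_continued_CR": 162,
}


def percent(part: int, whole: int) -> int:
    """Percentage rounded to the nearest whole number."""
    return round(100 * part / whole)


def check_partition(counts: dict) -> bool:
    """True if the DLI groups sum to the landmark cohort and the
    two no-DLI subsets sum to the no-DLI group."""
    dli_groups = counts["preDLI"] + counts["relDLI"] + counts["no_DLI"]
    no_dli_subsets = counts["no_DLI_relapsed"] + counts["no_DLI_continued_CR"]
    return (
        dli_groups == counts["landmark_met"]
        and no_dli_subsets == counts["no_DLI"]
    )
```

With these counts, `check_partition(COUNTS)` holds, `percent(292, 342)` gives the 85% of patients meeting the landmark, and `percent(42 + 51, 292)` gives the 32% who received DLI.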

In a supplementary analysis, we examined all patients in the DLI-related subgroups who presented with hematological relapse during follow-up for the time from transplantation to relapse. The median time from transplantation to relapse was shorter in the relDLI subgroup (n=51; median 5.2 months) than the preDLI subgroup with relapse [n=25; 14 months; HR, 2.11 (95% CI, 1.34–3.3); p=0.0013]. In the no-DLI subgroup with relapse (n=37), median time to relapse was 5.4 months, similar to the relDLI subgroup. With regard to post-relapse survival with transplant, all three subgroups were closely similar (relDLI, median 3.7 months; preDLI, 3.5 months; no-DLI, 1.7 months).

Finally, we examined the correlation between disease status at the start of transplantation and outcome. As shown in Online Resource 3a, active disease vs CR at transplantation was associated with lower median survival in patients meeting the day-100 landmark [p=0.0017; HR, 1.68 (95% CI, 1.22–2.31)], with an estimated 5-year survival of 40.5% (95% CI 32.1–48.8) vs 59.3% (50.1–67.4). By contrast, no significant difference was seen for the preDLI and relDLI subgroups (Online Resource 3b-c). Instead, this difference can be traced to patients with continued CR and no DLI during follow-up [p=0.0059; HR, 2.38 (95% CI, 1.30–4.37)], the subgroup with mostly treatment-related mortality, with a 5-year estimated survival of 64.5% (95% CI 51.4–74.9) and 85.4% (75.1–91.7) for active disease status vs CR at the start of transplantation. Stratification of outcome according to the ELN 2017 classification was hampered by missing or partial data, notably for patients with early dates of initial AML diagnosis and no access to original diagnostic samples for re-analysis. Accepting these limitations, we assigned the DLI cohorts to favorable (preDLI: 7 of 37 evaluable; relDLI: 1 of 48 evaluable), intermediate (preDLI: 27 of 37; relDLI: 33 of 48), and adverse profiles at the time of initial AML diagnosis (preDLI: 3 of 37; relDLI: 14 of 48), respectively. With the caveat of small subgroup sizes, there was no statistically significant association between these risk categories and post-transplantation survival in either group.

Discussion

The role of allo-HSCT in AML treatment depends on two key questions: which patients are treated with allo-HSCT—as a nonrandom selection step [20]—and what is the effectiveness of allo-HSCT thereafter? In our primary study cohort of patients with first allo-HSCT, the age distribution and representation of active disease at transplantation, secondary AML, and reduced-toxicity conditioning are consistent with the nonrandom selection of patients linked to broader eligibility for allo-HSCT [8]. This clinical profile is largely maintained throughout our subgroup analyses. As we start from a prospective single-center allo-HSCT database, we suggest that the estimated 2-year/5-year probability of survival of 53%/43% reflects the current level of effectiveness of first allo-HSCT in adult patients with AML. Moreover, the 2-year/5-year probability of survival is 62%/49% for patients meeting the day-100 landmark. This early landmark separates clinical courses with rapid leukemia progression or immediate treatment-related mortality [21], overwhelming any initial GvL benefit of the transplant, from those that offer extended options for further GvL-modifying strategies.

In our day-100 landmark cohort, DLI were included in the clinical management of about one-third of patients (32%). There are several general criteria to be met when deciding on DLI eligibility, notably the availability of suitable graft donor cells, acceptable GvHD levels after allo-HSCT, and lack of more promising treatment choices [7]. In addition, there is an option, based on the individualized clinical assessment of each patient, to implement a first dose of DLI “preemptively,” based on preceding clinical and laboratory risk markers, or to implement a first dose of DLI after detecting a relapse. In our day-100 landmark cohort, these two options were selected in about equal proportions of patients (14% vs 17%). The 2-year/5-year probabilities of survival with first transplant are 64%/43% for the preDLI cohort, 26%/10% for the relDLI cohort, and 71%/62% for the cohort with no DLI during follow-up. Within the preDLI cohort, patients who presented prior to first-dose DLI with leukemia-specific molecular or cytogenetic risk markers showed inferior survival compared to those with less specific risk indicators, namely mixed chimerism and/or individual clinical risk ranking. This difference was statistically significant but due to limited group sizes and the heterogeneity of detected gene markers in our cohort, larger follow-up studies will be important.

The specific criteria selected for our preDLI/relDLI classification, namely comparing the date of first-dose DLI to the date of any potential post-transplantation relapse, reflect the need for an objective, unambiguous, and retrospectively retrievable set of parameters in existing allo-HSCT databases to allow cohort partitioning among patients with highly diversified treatment patterns (Online Resource 1 a). In this respect, we diverge slightly from proposed classifications [22] that assume differences in DLI intent of treating overt hematological relapse, treating measurable residual disease (MRD) [23, 24], or preventing both MRD and relapse with prospectively scheduled adjuvant DLI [25,26,27]. Lessons learned from chronic myeloid leukemia [22] show that the intent-based classification requires a robust and universally applicable set of laboratory markers to distinguish MRD-positive and MRD-negative patients and to serve as reliable mechanistic biomarkers for short-term DLI effects. Establishing a similar framework for AML is highly desirable but, as outlined by Ravandi et al. [23], not yet achieved in light of the distinctive heterogeneity and clonal architecture of AML. First, existing technology platforms (i.e., AML gene mutations and expression, cytogenetics, genome typing, multi-parameter flow cytometry, mixed chimerism) have so far failed to identify and standardize single-timepoint criteria for the MRD-positive status, and to distinguish between bona fide MRD-negative patients and AML without appropriate detection method; e.g., available targets for quantitative PCR may cover only about 50% of all AML cases [23]. Second, even specific gene markers may not distinguish leukemic cells that are biologically capable and likely to cause relapse from residual cells that are terminally differentiated or in senescence. Third, there is no randomized controlled trial evidence yet to recommend MRD monitoring in AML as a validated interim endpoint for patient benefit [23, 28, 29]. These limitations explain why previous studies in AML have differed markedly in their technical details to identify preemptive and prophylactic DLI use and select study cohorts, preventing direct comparison of the results [5,6,7]. For the purposes of our day-100 landmark design, which aims to exclude no patients, it is even more important that we define preDLI and relDLI in an objective and unambiguous manner. The limited ability to classify a total allo-HSCT cohort in AML by robust MRD-positive and MRD-negative status, as outlined above, also explains why our landmark design incorporates the genetic and non-specific risk factors at the level of the preDLI group only, and not as a first-tier diagnostic category (Online Resource 1 b).

Our study includes additional aspects that extend or diverge from previous reports of allo-HSCT with DLI in AML patients. For instance, the studies cited in recent reviews [5,6,7] do not yet provide a comprehensive cohort perspective for allo-HSCT, with and without DLI, or a linked analysis of preDLI and relDLI, to account for shifts in nonrandom patient selection and additional treatment modalities. In addition, few studies have concentrated on AML only. Instead, many have used basket designs, aggregating data for adult AML with, variously, pediatric AML, myelodysplastic syndrome, myeloproliferative neoplasm, acute lymphocytic leukemia, or other hematological conditions [2,3,4, 30,31,32,33]. Finally, exclusion criteria have been used widely but inconsistently to limit the analysis to specific clinical settings. Collectively, most of these studies advocate some form of DLI in AML [2,3,4, 15, 25, 27, 30, 33,34,35,36,37,38,39], but a uniform assessment of DLI effectiveness in adult AML has not yet emerged. The present study may help to fill some of these gaps specific to AML, and our day-100 landmark design is readily transferable to other existing allo-HSCT databases for direct comparison while retaining the contextual information of nonrandom patient selection, prevalence and type of DLI use, patterns of added modalities, and outcome.

There are several limitations to be considered. As a general caveat for studies in AML with allo-HSCT [28, 29], our findings do not establish specific efficacy claims for DLI; such claims would require randomized controlled trials. It also deserves emphasis that our results do not predict how outcomes would change with different decision criteria for preDLI or relDLI use. Importantly, the no-DLI group is not an internal control for preDLI and relDLI decision making but, instead, describes two distinct event sequences: relapsed patients ineligible for relDLI or prioritized for re-transplantation, and patients with continued CR who still face the risk of late treatment-related mortality [21, 40]. Finally, it is inherent to our cohort design that some of the patients without DLI who were censored at the time of database lock may still receive DLI during their subsequent clinical course. Since we and others [9, 36, 41] have found that most DLI decisions are taken within the first 2 years after allo-HSCT, it seems likely that these adjustments in final cohort assignment will decrease over time, and our median follow-up of 5.1 years should suffice to support our main study conclusions.

As an incentive to future research, we report an unexpected observation regarding the temporal alignment between date of allo-HSCT and dates of first-dose DLI and post-transplantation relapse, respectively. Previously, the benefit of preemptive DLI had been linked directly to an early-warning concept, with the assumption that a first dose of preemptive DLI would generally occur sooner after transplantation than a first dose of DLI triggered by hematological relapse [42]. However, previous studies have not examined this assumption in the context of uniform institutional policies for the clinical management of a common starting cohort of adult AML patients with allo-HSCT. We identified no statistically significant difference between median time from allo-HSCT to first-dose preDLI vs first-dose relDLI. While the preemptive DLI setting, by definition, describes an early (prior-to-relapse) intervention in a given patient, this may be counteracted at the cohort level. Conceivably, a priori differences among allo-HSCT recipients regarding clonal genetics of residual leukemic cells [43] or propensity for slow vs precipitous escape from GvL surveillance [44] may allow detection of an extended period of steady-state MRD in some patients, while the same monitoring schedule may fail to detect a discrete MRD phase in patients with a steep rise in leukemic cell burden. In this scenario, non-random selection of the preDLI and relDLI treatment options, reflecting as yet unidentified leukemic cell attributes, is superimposed on intrinsic DLI efficacy. Separating these elements will require dual progress in the efforts to establish MRD as a bona fide diagnostic entity in AML and to implement standardized MRD surveillance, as a justification for randomized controlled trials of routinely cryopreserved DLI in early phases after allo-HSCT.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Kolb H-J (2008) Graft-versus-leukemia effects of transplantation and donor lymphocytes. Blood 112:4371–4383. https://doi.org/10.1182/blood-2008-03-077974

Hasskarl J, Zerweck A, Wäsch R, Ihorst G, Bertz H, Finke J (2012) Induction of graft versus malignancy effect after unrelated allogeneic PBSCT using donor lymphocyte infusions derived from frozen aliquots of the original graft. Bone Marrow Transplant 47:277–282. https://doi.org/10.1038/bmt.2011.45

Lamure S, Paul F, Gagez A-L, Delage J, Vincent L, Fegueux N, Sirvent A, Gehlkopf E, Veyrune JL, Yang LZ, Kanouni T, Cacheux V, Moreaux J, Bonafoux B, Cartron G, de Vos J, Ceballos P (2020) A retrospective comparison of DLI and gDLI for post-transplant treatment. J Clin Med 9:2204. https://doi.org/10.3390/jcm9072204

Schneidawind C, Jahnke S, Schober-Melms I, Schumm M, Handgretinger R, Faul C, Kanz L, Bethge W, Schneidawind D (2019) G-CSF administration prior to donor lymphocyte apheresis promotes anti-leukaemic effects in allogeneic HCT patients. Br J Haematol 186:60–71. https://doi.org/10.1111/bjh.15881

Zeiser R, Beelen DW, Bethge W, Bornhäuser M, Bug G, Burchert A, Christopeit M, Duyster J, Finke J, Gerbitz A, Klusmann JH, Kobbe G, Lübbert M, Müller-Tidow C, Platzbecker U, Rösler W, Sauer M, Schmid C, Schroeder T, Stelljes M, Kröger N, Müller LP (2019) Biology-driven approaches to prevent and treat relapse of myeloid neoplasia after allogeneic hematopoietic stem cell transplantation. Biol Blood Marrow Transplant 25:e128–e140. https://doi.org/10.1016/j.bbmt.2019.01.016

Dickinson AM, Norden J, Li S, Hromadnikova I, Schmid C, Schmetzer H, Jochem-Kolb H (2017) Graft-versus-leukemia effect following hematopoietic stem cell transplantation for leukemia. Front Immunol 8. https://doi.org/10.3389/fimmu.2017.00496

Tsirigotis P, Byrne M, Schmid C, Baron F, Ciceri F, Esteve J, Gorin NC, Giebel S, Mohty M, Savani BN, Nagler A (2016) Relapse of AML after hematopoietic stem cell transplantation: methods of monitoring and preventive strategies. A review from the ALWP of the EBMT. Bone Marrow Transplant 51:1431–1438. https://doi.org/10.1038/bmt.2016.167

Döhner H, Estey E, Grimwade D, Amadori S, Appelbaum FR, Büchner T, Dombret H, Ebert BL, Fenaux P, Larson RA, Levine RL, Lo-Coco F, Naoe T, Niederwieser D, Ossenkoppele GJ, Sanz M, Sierra J, Tallman MS, Tien HF, Wei AH, Löwenberg B, Bloomfield CD (2017) Diagnosis and management of AML in adults: 2017 ELN recommendations from an international expert panel. Blood 129:424–448. https://doi.org/10.1182/blood-2016-08-733196

Schmid C, Labopin M, Nagler A, Bornhäuser M, Finke J, Fassas A, Volin L, Gürman G, Maertens J, Bordigoni P, Holler E, Ehninger G, Polge E, Gorin NC, Kolb HJ, Rocha V, EBMT Acute Leukemia Working Party (2007) Donor lymphocyte infusion in the treatment of first hematological relapse after allogeneic stem-cell transplantation in adults with acute myeloid leukemia: a retrospective risk factors analysis and comparison with other strategies by the EBMT acute leukem. J Clin Oncol 25:4938–4945. https://doi.org/10.1200/JCO.2007.11.6053

Waterhouse M, Kunzmann R, Torres M, Bertz H, Finke J (2013) An internal validation approach and quality control on hematopoietic chimerism testing after allogeneic hematopoietic cell transplantation. Clin Chem Lab Med 51:363–369. https://doi.org/10.1515/cclm-2012-0230

Waterhouse M, Pfeifer D, Duque-Afonso J, Follo M, Duyster J, Depner M, Bertz H, Finke J (2019) Droplet digital PCR for the simultaneous analysis of minimal residual disease and hematopoietic chimerism after allogeneic cell transplantation. Clin Chem Lab Med 57:641–647. https://doi.org/10.1515/cclm-2018-0827

Przepiorka D, Weisdorf D, Martin P, Klingemann HG, Beatty P, Hows J, Thomas ED (1995) 1994 Consensus Conference on Acute GVHD Grading. Bone Marrow Transplant 15:825–828

Filipovich AH, Weisdorf D, Pavletic S, Socie G, Wingard JR, Lee SJ, Martin P, Chien J, Przepiorka D, Couriel D, Cowen EW, Dinndorf P, Farrell A, Hartzman R, Henslee-Downey J, Jacobsohn D, McDonald G, Mittleman B, Rizzo JD, Robinson M, Schubert M, Schultz K, Shulman H, Turner M, Vogelsang G, Flowers MED (2005) National Institutes of Health Consensus Development Project on criteria for clinical trials in chronic graft-versus-host disease: I. diagnosis and staging working group report. Biol Blood Marrow Transplant 11:945–956. https://doi.org/10.1016/j.bbmt.2005.09.004

Jagasia MH, Greinix HT, Arora M et al (2016) National Institutes of Health Consensus Development Project on Criteria for Clinical Trials in Chronic Graft-versus-Host Disease: I. The 2014 Diagnosis and Staging Working Group Report. Biol Blood Marrow Transplant 21:389–401. https://doi.org/10.1016/j.bbmt.2014.12.001

Kharfan-Dabaja MA, Labopin M, Polge E, Nishihori T, Bazarbachi A, Finke J, Stadler M, Ehninger G, Lioure B, Schaap N, Afanasyev B, Yeshurun M, Isaksson C, Maertens J, Chalandon Y, Schmid C, Nagler A, Mohty M (2018) Association of second allogeneic hematopoietic cell transplant vs donor lymphocyte infusion with overall survival in patients with acute myeloid leukemia relapse. JAMA Oncol 4:1245–1253. https://doi.org/10.1001/jamaoncol.2018.2091

Lund LH, Edwards LB, Kucheryavaya AY, Benden C, Christie JD, Dipchand AI, Dobbels F, Goldfarb SB, Levvey BJ, Meiser B, Yusen RD, Stehlik J, International Society of Heart and Lung Transplantation (2014) The Registry of the International Society for Heart and Lung Transplantation: Thirty-first Official Adult Heart Transplant Report—2014; Focus Theme: Retransplantation. J Heart Lung Transplant 33:996–1008. https://doi.org/10.1016/j.healun.2014.08.003

Han SH, Go J, Park SC, Yun SS (2019) Long-term outcome of kidney retransplantation in comparison with first transplantation: a propensity score matching analysis. Transplant Proc 51:2582–2586. https://doi.org/10.1016/j.transproceed.2019.03.070

Hieke S, Kleber M, König C, Engelhardt M, Schumacher M (2015) Conditional survival: a useful concept to provide information on how prognosis evolves over time. Clin Cancer Res 21:1530–1536. https://doi.org/10.1158/1078-0432.CCR-14-2154

Schemper M, Smith TL (1996) A note on quantifying follow-up in studies of failure time. Control Clin Trials 17:343–346. https://doi.org/10.1016/0197-2456(96)00075-X

Juliusson G, Karlsson K, Lazarevic VL, Wahlin A, Brune M, Antunovic P, Derolf Å, Hägglund H, Karbach H, Lehmann S, Möllgård L, Stockelberg D, Hallböök H, Höglund M, for the Swedish Acute Leukemia Registry Group, the Swedish Acute Myeloid Leukemia Group, the Swedish Adult Acute Lymphoblastic Leukemia Group (2011) Hematopoietic stem cell transplantation rates and long-term survival in acute myeloid and lymphoblastic leukemia: real-world population-based data from the Swedish Acute Leukemia Registry 1997-2006. Cancer 117:4238–4246. https://doi.org/10.1002/cncr.26033

Styczyński J, Tridello G, Koster L et al (2020) Death after hematopoietic stem cell transplantation: changes over calendar year time, infections and associated factors. Bone Marrow Transplant 55:126–136. https://doi.org/10.1038/s41409-019-0624-z

Tomblyn M, Lazarus HM (2008) Donor lymphocyte infusions: The long and winding road: how should it be traveled? Bone Marrow Transplant 42:569–579. https://doi.org/10.1038/bmt.2008.259

Ravandi F, Walter RB, Freeman SD (2018) Evaluating measurable residual disease in acute myeloid leukemia. Blood Adv 2:1356–1366. https://doi.org/10.1182/bloodadvances.2018016378

Schuurhuis GJ, Heuser M, Freeman S, Béné MC, Buccisano F, Cloos J, Grimwade D, Haferlach T, Hills RK, Hourigan CS, Jorgensen JL, Kern W, Lacombe F, Maurillo L, Preudhomme C, van der Reijden BA, Thiede C, Venditti A, Vyas P, Wood BL, Walter RB, Döhner K, Roboz GJ, Ossenkoppele GJ (2018) Minimal/measurable residual disease in AML: a consensus document from the European LeukemiaNet MRD Working Party. Blood 131:1275–1291. https://doi.org/10.1182/blood-2017-09-801498

Jedlickova Z, Schmid C, Koenecke C, Hertenstein B, Baurmann H, Schwerdtfeger R, Tischer J, Kolb HJ, Schleuning M (2016) Long-term results of adjuvant donor lymphocyte transfusion in AML after allogeneic stem cell transplantation. Bone Marrow Transplant 51:663–667. https://doi.org/10.1038/bmt.2015.234

Eefting M, de Wreede LC, Halkes CJM, von dem Borne PA, Kersting S, Marijt EWA, Veelken H, Putter H, Schetelig J, Falkenburg JHF (2016) Multi-state analysis illustrates treatment success after stem cell transplantation for acute myeloid leukemia followed by donor lymphocyte infusion. Haematologica 101:506–514. https://doi.org/10.3324/haematol.2015.136846

Yan CH, Liu Q-F, Wu D-P et al (2017) Prophylactic donor lymphocyte infusion (DLI) followed by minimal residual disease and graft-versus-host disease–guided multiple DLIs could improve outcomes after allogeneic hematopoietic stem cell transplantation in patients with refractory/relapsed acute. Biol Blood Marrow Transplant 23:1311–1319. https://doi.org/10.1016/j.bbmt.2017.04.028

Medeiros BC (2018) Interpretation of clinical endpoints in trials of acute myeloid leukemia. Leuk Res 68:32–39. https://doi.org/10.1016/j.leukres.2018.02.002

Appelbaum FR, Rosenblum D, Arceci RJ, Carroll WL, Breitfeld PP, Forman SJ, Larson RA, Lee SJ, Murphy SB, O'Brien S, Radich J, Scher NS, Smith FO, Stone RM, Tallman MS (2007) End points to establish the efficacy of new agents in the treatment of acute leukemia. Blood 109:1810–1816. https://doi.org/10.1182/blood-2006-08-041152

Schmid C, Labopin M, Schaap N, Veelken H, Schleuning M, Stadler M, Finke J, Hurst E, Baron F, Ringden O, Bug G, Blaise D, Tischer J, Bloor A, Esteve J, Giebel S, Savani B, Gorin NC, Ciceri F, Mohty M, Nagler A, on behalf of the EBMT Acute Leukaemia Working Party (2018) Prophylactic donor lymphocyte infusion after allogeneic stem cell transplantation in acute leukaemia - a matched pair analysis by the Acute Leukaemia Working Party of EBMT. Br J Haematol 184:782–787. https://doi.org/10.1111/bjh.15691

Miyamoto T, Fukuda T, Nakashima M, Henzan T, Kusakabe S, Kobayashi N, Sugita J, Mori T, Kurokawa M, Mori SI (2017) Donor lymphocyte infusion for relapsed hematological malignancies after unrelated allogeneic bone marrow transplantation facilitated by the Japan Marrow Donor Program. Biol Blood Marrow Transplant 23:938–944. https://doi.org/10.1016/j.bbmt.2017.02.012

Yan CH, Liu DH, Liu KY, Xu LP, Liu YR, Chen H, Han W, Wang Y, Qin YZ, Huang XJ (2012) Risk stratification-directed donor lymphocyte infusion could reduce relapse of standard-risk acute leukemia patients after allogeneic hematopoietic stem cell transplantation. Blood 119:3256–3262. https://doi.org/10.1182/blood-2011-09-380386

Mo XD, Zhang XH, Xu LP, Wang Y, Yan CH, Chen H, Chen YH, Han W, Wang FR, Wang JZ, Liu KY, Huang XJ (2017) Comparison of outcomes after donor lymphocyte infusion with or without prior chemotherapy for minimal residual disease in acute leukemia/myelodysplastic syndrome after allogeneic hematopoietic stem cell transplantation. Ann Hematol 96:829–838. https://doi.org/10.1007/s00277-017-2960-7

Steinmann J, Bertz H, Wäsch R, Marks R, Zeiser R, Bogatyreva L, Finke J, Lübbert M (2015) 5-Azacytidine and DLI can induce long-term remissions in AML patients relapsed after allograft. Bone Marrow Transplant 50:690–695. https://doi.org/10.1038/bmt.2015.10

Takami A, Yano S, Yokoyama H, Kuwatsuka Y, Yamaguchi T, Kanda Y, Morishima Y, Fukuda T, Miyazaki Y, Nakamae H, Tanaka J, Atsuta Y, Kanamori H (2014) Donor lymphocyte infusion for the treatment of relapsed acute myeloid leukemia after allogeneic hematopoietic stem cell transplantation: a retrospective analysis by the adult acute myeloid leukemia working group of the Japan society for hematopoietic cell transplantation. Biol Blood Marrow Transplant 20:1785–1790. https://doi.org/10.1016/j.bbmt.2014.07.010

Hemmati P, Vogelsänger C, Terwey T, Jehn C, Vuong LG, Blau IW, Penack O, Dörken B, Arnold R (2016) Efficacy of adoptive immunotherapy by donor lymphocyte infusions after allogeneic stem cell transplantation from related and unrelated donors: a single center retrospective analysis. Blood 128:4556. https://doi.org/10.1182/blood.v128.22.4556.4556

Krishnamurthy P, Potter VT, Barber LD, Kulasekararaj AG, Lim ZY, Pearce RM, de Lavallade H, Kenyon M, Ireland RM, Marsh JCW, Devereux S, Pagliuca A, Mufti GJ (2013) Outcome of donor lymphocyte infusion after T cell-depleted allogeneic hematopoietic stem cell transplantation for acute myelogenous leukemia and myelodysplastic syndromes. Biol Blood Marrow Transplant 19:562–568. https://doi.org/10.1016/j.bbmt.2012.12.013

Bejanyan N, Weisdorf DJ, Logan BR, Wang HL, Devine SM, de Lima M, Bunjes DW, Zhang MJ (2015) Survival of patients with acute myeloid leukemia relapsing after allogeneic hematopoietic cell transplantation: a center for International Blood and Marrow Transplant Research Study. Biol Blood Marrow Transplant 21:454–459. https://doi.org/10.1016/j.bbmt.2014.11.007

Eefting M, von dem Borne PA, de Wreede LC, Halkes CJ, Kersting S, Marijt EW, Veelken H, Falkenburg JF (2014) Intentional donor lymphocyte-induced limited acute graft-versus-host disease is essential for long-term survival of relapsed acute myeloid leukemia after allogeneic stem cell transplantation. Haematologica 99:751–758. https://doi.org/10.3324/haematol.2013.089565

Gooley TA, Chien JW, Pergam SA, Hingorani S, Sorror ML, Boeckh M, Martin PJ, Sandmaier BM, Marr KA, Appelbaum FR, Storb R, McDonald GB (2010) Reduced mortality after allogeneic hematopoietic-cell transplantation. N Engl J Med 363:2091–2101. https://doi.org/10.1056/NEJMoa1004383

Mo XD, Zhang XH, Xu LP, Wang Y, Yan CH, Chen H, Chen YH, Han W, Wang FR, Wang JZ, Liu KY, Huang XJ (2016) Salvage chemotherapy followed by granulocyte colony-stimulating factor-primed donor leukocyte infusion with graft-vs-host disease control for minimal residual disease in acute leukemia/myelodysplastic syndrome after allogeneic hematopoietic stem cell tran. Eur J Haematol 96:297–308. https://doi.org/10.1111/ejh.12591

Orti G, Barba P, Fox L, Salamero O, Bosch F, Valcarcel D (2017) Donor lymphocyte infusions in AML and MDS: Enhancing the graft-versus-leukemia effect. Exp Hematol 48:1–11. https://doi.org/10.1016/j.exphem.2016.12.004

Ding L, Ley TJ, Larson DE, Miller CA, Koboldt DC, Welch JS, Ritchey JK, Young MA, Lamprecht T, McLellan MD, McMichael JF, Wallis JW, Lu C, Shen D, Harris CC, Dooling DJ, Fulton RS, Fulton LL, Chen K, Schmidt H, Kalicki-Veizer J, Magrini VJ, Cook L, McGrath SD, Vickery TL, Wendl MC, Heath S, Watson MA, Link DC, Tomasson MH, Shannon WD, Payton JE, Kulkarni S, Westervelt P, Walter MJ, Graubert TA, Mardis ER, Wilson RK, DiPersio JF (2012) Clonal evolution in relapsed acute myeloid leukaemia revealed by whole-genome sequencing. Nature 481:506–510. https://doi.org/10.1038/nature10738

Zeiser R, Vago L (2019) Mechanisms of immune escape after allogeneic hematopoietic cell transplantation. Blood 133:1290–1297

Acknowledgements

We thank Irmgard Matt for excellent support with data acquisition.

Code availability

Not applicable

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Contributions

Conceptualization: Andrés R. Rettig, Jürgen Finke; methodology: Andrés R. Rettig, Gabriele Ihorst, Jürgen Finke; formal analysis and investigation: Andrés R. Rettig, Gabriele Ihorst; writing—original draft preparation: Andrés R. Rettig. All authors reviewed, edited, and approved the final manuscript.

Ethics declarations

The research protocol was approved by the institutional ethics committee.

Consent to participate

Informed consent was obtained from all individual participants included in the study.

Consent for publication

Patients signed informed consent regarding publishing their data.

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

ESM 1

(PDF 1580 kb)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rettig, A.R., Ihorst, G., Bertz, H. et al. Donor lymphocyte infusions after first allogeneic hematopoietic stem-cell transplantation in adults with acute myeloid leukemia: a single-center landmark analysis. Ann Hematol 100, 2339–2350 (2021). https://doi.org/10.1007/s00277-021-04494-z

DOI: https://doi.org/10.1007/s00277-021-04494-z