Abstract

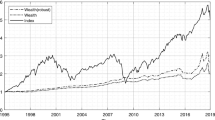

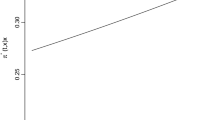

This paper studies stochastic linear-quadratic control with a time-inconsistent objective and worst-case drift disturbance. We allow the agent to introduce disturbances to reflect her uncertainty about the drift coefficient of the controlled state process. We adopt a two-step equilibrium control approach to characterize the robust time-consistent controls, which can preserve the order of preference. Under a general framework allowing random parameters, we derive a sufficient condition for equilibrium controls using the forward-backward stochastic differential equation approach. We also provide analytical solutions to mean-variance portfolio problems in various settings. Our empirical studies confirm that incorporating robustness improves portfolio performance in terms of the out-of-sample Sharpe ratio.

References

1. Anderson, E.W., Hansen, L.P., Sargent, T.J.: A quartet of semigroups for model specification, robustness, prices of risk, and model detection. J. Eur. Econ. Assoc. 1(1), 68–123 (2003)

2. Basak, S., Chabakauri, G.: Dynamic mean-variance asset allocation. Rev. Financ. Stud. 23(8), 2970–3016 (2010)

3. Björk, T., Khapko, M., Murgoci, A.: On time-inconsistent stochastic control in continuous time. Financ. Stoch. 21(2), 331–360 (2017)

4. Björk, T., Murgoci, A.: A theory of Markovian time-inconsistent stochastic control in discrete time. Financ. Stoch. 18(3), 545–592 (2014)

5. Björk, T., Murgoci, A., Zhou, X.Y.: Mean-variance portfolio optimization with state-dependent risk aversion. Math. Financ. 24(1), 1–24 (2014)

6. Ellsberg, D.: Risk, ambiguity, and the Savage axioms. Q. J. Econ. 75(4), 643–669 (1961)

7. Fouque, J.P., Pun, C.S., Wong, H.Y.: Portfolio optimization with ambiguous correlation and stochastic volatilities. SIAM J. Control Optim. 54(5), 2309–2338 (2016)

8. Han, B., Pun, C.S., Wong, H.Y.: Robust state-dependent mean–variance portfolio selection: a closed-loop approach. Financ. Stoch. 25, 1–33 (2021)

9. Hu, Y., Huang, J., Li, X.: Equilibrium for time-inconsistent stochastic linear-quadratic control under constraint. arXiv preprint arXiv:1703.09415 (2017)

10. Hu, Y., Jin, H., Zhou, X.Y.: Time-inconsistent stochastic linear-quadratic control. SIAM J. Control Optim. 50(3), 1548–1572 (2012)

11. Hu, Y., Jin, H., Zhou, X.Y.: Time-inconsistent stochastic linear-quadratic control: characterization and uniqueness of equilibrium. SIAM J. Control Optim. 55(2), 1261–1279 (2017)

12. Huang, J., Huang, M.: Robust mean field linear-quadratic-Gaussian games with unknown \(L^2\)-disturbance. SIAM J. Control Optim. 55(5), 2811–2840 (2017)

13. Ismail, A., Pham, H.: Robust Markowitz mean-variance portfolio selection under ambiguous covariance matrix. Math. Financ. 29(1), 174–207 (2019)

14. Jin, H., Zhou, X.Y.: Continuous-time portfolio selection under ambiguity. Math. Control Relat. Fields 5(3), 475–488 (2015)

15. Knight, F.H.: Risk, Uncertainty and Profit. Houghton Mifflin, New York (1921)

16. Kobylanski, M.: Backward stochastic differential equations and partial differential equations with quadratic growth. Ann. Probab. 28(2), 558–602 (2000)

17. Li, D., Ng, W.L.: Optimal dynamic portfolio selection: multiperiod mean-variance formulation. Math. Financ. 10(3), 387–406 (2000)

18. Lim, A.E., Zhou, X.Y.: Mean-variance portfolio selection with random parameters in a complete market. Math. Oper. Res. 27(1), 101–120 (2002)

19. Ma, J., Yin, H., Zhang, J.: On non-Markovian forward-backward SDEs and backward stochastic PDEs. Stoch. Process. Appl. 122(12), 3980–4004 (2012)

20. Maenhout, P.J.: Robust portfolio rules and asset pricing. Rev. Financ. Stud. 17(4), 951–983 (2004)

21. Moon, J., Yang, H.J.: Linear-quadratic time-inconsistent mean-field type Stackelberg differential games: time-consistent open-loop solutions. IEEE Trans. Autom. Control 66(1), 375–382 (2020)

22. Morlais, M.A.: Quadratic BSDEs driven by a continuous martingale and applications to the utility maximization problem. Financ. Stoch. 13(1), 121–150 (2009)

23. Peng, S.: A general stochastic maximum principle for optimal control problems. SIAM J. Control Optim. 28(4), 966–979 (1990)

24. Pun, C.S.: Robust time-inconsistent stochastic control problems. Automatica 94, 249–257 (2018)

25. Pun, C.S., Wong, H.Y.: Robust investment-reinsurance optimization with multiscale stochastic volatility. Insurance 62, 245–256 (2015)

26. Richou, A.: Numerical simulation of BSDEs with drivers of quadratic growth. Ann. Appl. Probab. 21(5), 1933–1964 (2011)

27. Richou, A.: Markovian quadratic and superquadratic BSDEs with an unbounded terminal condition. Stoch. Process. Appl. 122(9), 3173–3208 (2012)

28. Sun, J.: Mean-field stochastic linear quadratic optimal control problems: open-loop solvabilities. ESAIM 23(3), 1099–1127 (2017)

29. Sun, J., Li, X., Yong, J.: Open-loop and closed-loop solvabilities for stochastic linear quadratic optimal control problems. SIAM J. Control Optim. 54(5), 2274–2308 (2016)

30. Van Den Broek, W., Engwerda, J., Schumacher, J.M.: Robust equilibria in indefinite linear-quadratic differential games. J. Optim. Theory Appl. 119(3), 565–595 (2003)

31. Wald, A.: Statistical decision functions which minimize the maximum risk. Ann. Math. 57, 265–280 (1945)

32. Wang, T.: Equilibrium controls in time inconsistent stochastic linear quadratic problems. Appl. Math. Optim. 81(2), 591–619 (2020)

33. Wang, T.: On closed-loop equilibrium strategies for mean-field stochastic linear quadratic problems. ESAIM 26, 41 (2020)

34. Wei, Q., Yu, Z.: Time-inconsistent recursive zero-sum stochastic differential games. Math. Control Relat. Fields 8(3&4), 1051 (2018)

35. Yan, T., Han, B., Pun, C.S., Wong, H.Y.: Robust time-consistent mean-variance portfolio selection problem with multivariate stochastic volatility. Math. Financ. Econ. 14(4), 699–724 (2020). https://doi.org/10.1007/s11579-020-00271-0

36. Yong, J.: Linear-quadratic optimal control problems for mean-field stochastic differential equations. SIAM J. Control Optim. 51(4), 2809–2838 (2013)

37. Yong, J.: Linear-quadratic optimal control problems for mean-field stochastic differential equations-time-consistent solutions. Trans. Am. Math. Soc. 369(8), 5467–5523 (2017)

38. Yong, J., Zhou, X.Y.: Stochastic Controls: Hamiltonian Systems and HJB Equations, vol. 43. Springer Science & Business Media, New York (1999)

39. Zhou, X.Y., Li, D.: Continuous-time mean-variance portfolio selection: a stochastic LQ framework. Appl. Math. Optim. 42(1), 19–33 (2000)

Acknowledgements

The authors would like to thank the anonymous referee and the editors for their careful reading and valuable comments, which have greatly improved the manuscript.

Funding

The authors have not disclosed any funding.

Ethics declarations

Conflicts of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Bingyan Han is supported by UIC Start-up Research Fund (Reference No: R72021109). Chi Seng Pun gratefully acknowledges the Ministry of Education (MOE), AcRF Tier 2 Grant (Reference No: MOE2017-T2-1-044) for the funding of this research. Hoi Ying Wong acknowledges the support from the Research Grants Council of Hong Kong via GRF 14303915.

Appendices

Appendix A: Proofs of Results in Section 3

A.1 Proof of Lemma 3.5

Proof

Let k be a positive constant. For \(j=1,...,d\),

Note that \(C^j\), \(j=1,\ldots ,d\), are essentially bounded. For a positive constant k, we have

We have

By the stability results for BSDEs (see, e.g., Theorem 3.3, Chapter 7 in [38]), we have

Hence, we have

The other two terms in (A.1) can be treated in a similar manner, and the result follows. \(\square \)

A.2 Proof of Theorem 3.7

Proof

Let \(X^{t,\varepsilon ,v}\) be the state process corresponding to \(u^{t,\varepsilon ,v}, h^*\). Define \(Y\equiv Y^{t,\varepsilon ,v}\) and \(Z\equiv Z^{t,\varepsilon ,v}\) satisfying

Since Assumption 3.6 holds, by Lemma 1 in [23], we have the following moment estimates,

Furthermore, since \(\mu ^*_x (X^*_s, u^*_s, s)\) is deterministic, taking conditional expectations on both sides of the SDE for Y shows that \({\mathbb {E}}^{\mathbb {P}}_t[Y_s]\) satisfies an ODE whose unique solution is 0. Therefore, \({\mathbb {E}}^{\mathbb {P}}_t[Y_s]=0, \; s\in [t,T]\).
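The ODE step can be written out explicitly. As a sketch, assume that \(Y_t = 0\), that the drift of Y is \(A_s Y_s\) with \(A_s = \mu ^*_x (X^*_s, u^*_s, s)\) bounded, and that the stochastic integrals in the SDE for Y are true martingales by the moment estimates above. These are assumptions read off from the surrounding argument, and the symbols \(A_s\) and \(m(s) = {\mathbb {E}}^{\mathbb {P}}_t[Y_s]\) are notation introduced only for this illustration. Since \(A_s\) is deterministic, it can be pulled out of the conditional expectation, which gives

$$\begin{aligned} m(s) = \int _t^s A_r\, m(r)\, dr, \qquad \text {so} \qquad \Vert m(s)\Vert _2 \le \Vert A\Vert _\infty \int _t^s \Vert m(r)\Vert _2\, dr, \quad s\in [t,T]. \end{aligned}$$

Gronwall's inequality then yields \(m \equiv 0\) on \([t,T]\), which is the stated conclusion.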

Then, we obtain the following

Using the definition of \(({\tilde{p}}(\cdot ; t), {\tilde{k}}(\cdot ; t))\) and \(({\tilde{P}}(\cdot ; t), {\tilde{K}}(\cdot ; t))\) in (3.7) and (3.8) and applying Itô’s lemma to the last two terms, we have

and

After simplifications, we prove (3.11). \(\square \)

A.3 Proof of Theorem 3.9

Proof

Since \(H^\varepsilon (s;t)\preceq 0\) and \( {\tilde{H}}(s;t) \succeq 0\), from Theorems 3.2 and 3.7, \((h^*, u^*)\) is an equilibrium control pair if and only if

Using the notations in (3.13) and (3.14), we only need to show

The first step is to prove

The proof is analogous to that of Proposition 3.3 in [11]. Define \(\varPsi (\cdot )\) as the solution of

\(I_n\) is the \(n\times n\) identity matrix. Since \(\alpha _s\) is bounded and deterministic, \(\varPsi (\cdot )\) is invertible, and \(\varPsi (\cdot ), \varPsi ^{-1}(\cdot )\) are bounded.
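The invertibility and boundedness of \(\varPsi \) can be checked directly. As a sketch, assume that the equation for \(\varPsi \) is a linear matrix ODE of the form \(d\varPsi (s) = \varPsi (s)\alpha _s ds\) with \(\varPsi (T) = I_n\); this specific form is an assumption made here for illustration and is not quoted from the display above. Liouville's formula and Gronwall's inequality then give

$$\begin{aligned} \det \varPsi (s) = \exp \Big ( -\int _s^T \mathrm {tr}(\alpha _r)\, dr \Big ) > 0, \qquad \Vert \varPsi (s)\Vert _2 \le \exp \Big ( \int _s^T \Vert \alpha _r\Vert _2\, dr \Big ) \le e^{\Vert \alpha \Vert _\infty T}. \end{aligned}$$

Under the same assumption, \(\varPsi ^{-1}\) solves \(d\varPsi ^{-1}(s) = -\alpha _s \varPsi ^{-1}(s) ds\) with \(\varPsi ^{-1}(T) = I_n\), so it satisfies the same bound.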

Denote \({\hat{p}}(s;t) = \varPsi (s) p(s;t) + \nu {\mathbb {E}}^{\mathbb {P}}_t[X^*_T] + \mu _1 X_t^* + \mu _2\) and \({\hat{k}}^j(s;t) = \varPsi (s) k^j(s;t)\). Then

has a unique solution that does not depend on t, so we can write \({\hat{p}}(s;t) = {\hat{p}}(s)\) and \({\hat{k}}^j(s;t) = {\hat{k}}^j(s)\). Therefore, \(p(s;t) = \varPsi ^{-1}(s){\hat{p}}(s) + \varPsi ^{-1}(s) w_t\), where \(w_t = - \nu {\mathbb {E}}^{\mathbb {P}}_t[X^*_T] - \mu _1 X_t^* - \mu _2\). Then \(\lambda (s;t) = f_1(s) + f_2(s) w_t\), where

Since \({\mathbb {E}}^{\mathbb {P}}_t\left[ \sup _{s\in [t,T]} \Vert f_2(s)\Vert ^2_2 \right] < \infty \), we have

Then (A.3) is proved.

For the equivalence between (3.12) and (A.2), we note that

- If (3.12) is true, then \({\mathbb {E}}^{\mathbb {P}}_t[\lambda (s;s)] = 0\). Therefore,

  $$\begin{aligned} \lim _{\varepsilon \downarrow 0} \frac{1}{\varepsilon } \int _t^{t+\varepsilon } {\mathbb {E}}^{\mathbb {P}}_t[\lambda (s;t)]ds = \lim _{\varepsilon \downarrow 0} \frac{1}{\varepsilon } \int _t^{t+\varepsilon } {\mathbb {E}}^{\mathbb {P}}_t[\lambda (s;s)]ds = 0. \end{aligned}$$

- If \(\lim _{\varepsilon \downarrow 0} \frac{1}{\varepsilon } \int _t^{t+\varepsilon } {\mathbb {E}}^{\mathbb {P}}_t[\lambda (s;t)]ds = 0\), then \(\lim _{\varepsilon \downarrow 0} \frac{1}{\varepsilon } \int _t^{t+\varepsilon } {\mathbb {E}}^{\mathbb {P}}_t[\lambda (s;s)]ds = 0\). By Lemma 3.5 in [9], the stochastic Lebesgue differentiation theorem, we have (3.12).

\(\square \)

Appendix B: Proofs of Results in Section 4

B.1 Proof of Lemma 4.3

Proof

With a slight abuse of notation, suppose there is another solution pair (X, h) and the corresponding adjoint process is p(s; t). Since \( X_T - {\mathbb {E}}^{\mathbb {P}}_t[ X_T] - \mu _2 \in L^2_{{{\mathcal {F}}}_T}(\varOmega ; {\mathbb {R}}, {\mathbb {P}})\), by [38, Chapter 7, Theorem 2.2] we still have a unique adapted solution

Therefore, \(p(t;t)= -\mu _2 e^{\int ^T_t r_s ds}\) and we have the same solution for \( h_t = - \xi e^{\int ^T_t r_s ds} u^*_t = h^*_t\). \(\square \)

B.2 Proof of Lemma 4.5

Proof

Suppose there is another solution pair \((X, {\bar{u}})\), and \({\bar{u}}\) is admissible. Let

By \({\tilde{\varLambda }}(t;t)=0\), we derive

Then

Finally,

Taking conditional expectations on both sides and noting that \(r_s\) is deterministic, we obtain \({\bar{p}}(t;t)=0\). So,

Then \({\bar{k}}(t;t)\) should be essentially bounded, and

The existence of a solution is obvious. For uniqueness, consider \(({\bar{p}}(\cdot ;t), {\bar{k}}(\cdot ; t) )\) in the space \(L^2_{{\mathcal {F}}}(\varOmega ; \,C(t,T;{\mathbb {R}}), {\mathbb {P}}) \) \(\times \) \( L^\infty _{{\mathcal {F}}}(t, T;\, {\mathbb {R}}^d)\).

Without loss of generality, let \(r=0\). As \({\bar{k}}(s;t)\) does not depend on t, we denote \({\bar{k}}(s) = {\bar{k}}(s;s) = {\bar{k}}(s;t)\). Moreover, we introduce \(a_1(s), a_2(s)\) to rewrite \(d{\bar{p}}(s;t)\) as follows, noting that \(a_1(s), a_2(s)\) are essentially bounded.

Define

Then

As \({\bar{p}}(\cdot ;t) \in L^2_{{\mathcal {F}}}(\varOmega ; \,C(t,T;{\mathbb {R}}), {\mathbb {P}})\), \(p_0(\cdot ;t) \in L^2_{{\mathcal {F}}}(\varOmega ; \,C(t,T;{\mathbb {R}}), {\mathbb {P}})\).

Suppose there are two solutions \((p^{(1)}_0 , {\bar{k}}^{(1)})\) and \((p^{(2)}_0 , {\bar{k}}^{(2)})\). Denote \(p_\varDelta (s;t) = p^{(1)}_0(s;t) - p^{(2)}_0(s;t)\), \(k_\varDelta (s) = {\bar{k}}^{(1)}(s) - {\bar{k}}^{(2)}(s)\), then

Applying Itô’s lemma to \(\Vert p_\varDelta (s;t)\Vert ^2_2\) on s, taking expectation, and noting that \({\bar{k}}^{(1)}(s)\) and \({\bar{k}}^{(2)}(s)\) are essentially bounded, we have

By Gronwall’s inequality, \({\mathbb {E}}^{\mathbb {P}}\big [ \Vert p_\varDelta (s;t)\Vert ^2_2 \big ] = 0\). Therefore, \({\bar{p}}(s;t) = 0,~{\bar{k}}(s;t) = 0\). \(\square \)
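For reference, the backward form of Gronwall's inequality behind this last step can be stated as follows. The function \(\varphi \) and the constant C are generic notation introduced here, with \(\varphi (s)\) standing in for \({\mathbb {E}}^{\mathbb {P}}\big [ \Vert p_\varDelta (s;t)\Vert ^2_2 \big ]\), and it is assumed that the preceding estimate has the form of the first inequality below. If \(\varphi : [t,T] \rightarrow [0,\infty )\) is bounded and measurable, then iterating that inequality n times gives the second bound:

$$\begin{aligned} \varphi (s) \le C \int _s^T \varphi (r)\, dr \quad \Longrightarrow \quad \varphi (s) \le \Big ( \sup _{r\in [t,T]} \varphi (r) \Big ) \frac{C^n (T-s)^n}{n!} \xrightarrow [n\rightarrow \infty ]{} 0, \qquad s \in [t,T]. \end{aligned}$$

Hence \(\varphi \equiv 0\) on \([t,T]\), without any smallness condition on \(T-t\).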

B.3 Proof of Proposition 4.7

Proof

A direct calculation shows

Since \(\theta \) is essentially bounded, we can introduce a new probability measure \({\tilde{{\mathbb {Q}}}}\) under which \({\tilde{W}}\) is a standard Brownian motion,

Under \({\tilde{{\mathbb {Q}}}}\), the driver of \({\tilde{\varGamma }}^{(2)}_t\) has no cross term \(\theta '_t {\tilde{\gamma }}^{(2)}_t\) and

Then it is straightforward to verify the conditions in [27, Theorem 2.5]. The Lipschitz constant in [27, Assumption (F.1)] is \(K_b + \frac{|\xi \mu _2 - 1|\Vert \sigma _\vartheta \Vert _\infty }{(\xi \mu _2 + 1)^2}\). We can take constants in [27, Assumption (B.1)] as follows.

In particular, [27, Assumption (B.1)(4)] becomes Condition (2) above. Therefore, we can apply [27, Theorem 2.5] under measure \({\tilde{{\mathbb {Q}}}}\). \({\tilde{\gamma }}^{(2)}_t\) is essentially bounded since \(\theta _t\) is still essentially bounded under \({\tilde{{\mathbb {Q}}}}\). \(\square \)

B.4 Proof of Proposition 4.8

Proof

The proof is a direct application of [27, Proposition 3.1]. With the truncation function \(\rho _M(\cdot )\) in [27, Proposition 3.1], our truncated driver (corresponding to \(f_M\) in [27, Proposition 3.1]) is Lipschitz in \(\vartheta \) with constant \(\frac{|\xi \mu _2 - 1|}{(\xi \mu _2 + 1)^2} M + \frac{2\mu _2}{(\xi \mu _2 + 1)^2} e^{\int ^T_0 r_s ds} ( C_\theta + 1) \), Lipschitz in \({\tilde{\gamma }}^{(2)}\) with constant \(\frac{|\xi \mu _2 - 1|}{(\xi \mu _2 + 1)^2} ( C_\theta + 1) + \frac{2\xi }{(\xi \mu _2 + 1)^2} M\), and Lipschitz in \({\tilde{\varGamma }}^{(2)}\) with constant \( \Vert r\Vert _\infty \). The remaining proof follows exactly from [27, Proposition 3.1]. \(\square \)

B.5 Proof of Lemma 4.13

Proof

Suppose there is another solution pair (X, h) and the corresponding adjoint process is p(s; t). With the same idea as in the proof of Lemma 4.3, we have a unique adapted solution

Therefore, we have \(h_t = h^*_t\).

\(\square \)

B.6 Proof of Lemma 4.14

Proof

Let \(J=\frac{{\tilde{M}}}{{\tilde{N}}}\), \(K=\frac{J}{{\tilde{M}}} {\tilde{U}}- \frac{J^2}{{\tilde{M}}} {\tilde{V}}\), then to prove the existence and uniqueness of \(({\tilde{M}}, {\tilde{U}})\), \(({\tilde{N}}, {\tilde{V}})\), we only need to show the existence and uniqueness of \(({\tilde{M}}, {\tilde{U}}), (J, K)\). It is easy to show

We consider \({\tilde{M}}^c = {\tilde{M}}\vee c\), \( J^c = J \vee c\), where \(c\le 1\) is a constant. The corresponding diffusion terms are denoted by \({\tilde{K}}^c\), \({\tilde{U}}^c\). Then,

(B.8) is a standard quadratic BSDE system, so there exists a solution pair \(({\tilde{M}}^c, {\tilde{U}}^c) \in L^\infty _{{\mathcal {F}}}(0,T;{\mathbb {R}}) \times L^2_{{\mathcal {F}}}(0,T;{\mathbb {R}}^d, {\mathbb {P}})\) and \((J^c, K^c) \in L^\infty _{{\mathcal {F}}}(0,T;{\mathbb {R}}) \times L^2_{{\mathcal {F}}}(0,T;{\mathbb {R}}^d, {\mathbb {P}})\). Moreover, \({\tilde{U}}^c\cdot W^{\mathbb {P}}\) and \(K^c\cdot W^{\mathbb {P}}\) are BMO martingales; see [16, 22]. Since \(1-\frac{1}{J^c}\le 0\), by the comparison principle for quadratic BSDEs in [16], \({\tilde{M}}^c \ge {\hat{M}}^c\), where \({\hat{M}}^c\) is the solution to the following BSDE,

Since \(\big [(2-\frac{1}{J^c}- \frac{\tilde{\varGamma }}{\hat{M}^c})\theta + \frac{{\hat{U}}^c}{\hat{M}^c}\big ]\cdot W^{\mathbb {P}}\) is a BMO martingale, we can introduce a new probability measure \({\mathbb {Q}}\) and, under \({\mathbb {Q}}\), define a new Brownian motion \(W^{\mathbb {Q}}_s\) by \(W^{\mathbb {Q}}_s = W^{\mathbb {P}}_s + \int ^s_0 \big [ (2-\frac{1}{J^c}- \frac{\tilde{\varGamma }}{\hat{M}^c} )\theta + \frac{{\hat{U}}^c}{\hat{M}^c} \big ] dt\). Then

Therefore, \({\tilde{M}}^c \ge {\underline{l}}\), where \({\underline{l}}\) does not depend on c. Since \(\frac{\alpha ^2}{\xi {\tilde{M}}^c} \le \frac{\alpha ^2}{\xi {\underline{l}}}\), the comparison principle for quadratic BSDEs in [16] gives \(J^c_s \ge \exp (-\int ^T_s\frac{\alpha ^2_t}{\xi {\underline{l}}} dt)\). This lower bound also does not depend on c. Finally, choosing a constant c with \(0<c< \min \big \{\exp (-\int ^T_0 \frac{\alpha ^2_t}{\xi {\underline{l}}}dt), \;\; {\underline{l}}\big \}\), we have \({\tilde{M}} = {\tilde{M}}^c\), \( J =J^c\), \({\tilde{U}} = {\tilde{U}}^c\), \({\tilde{K}} = {\tilde{K}}^c\). This establishes the existence of solutions.

Next, we prove the uniqueness. Since \({\tilde{M}}, J \ge c >0\), define \(Y=\frac{1}{{\tilde{M}}}, Z=-\frac{{\tilde{U}}}{{\tilde{M}}^2}, G = \frac{1}{J}\), \(E=-\frac{K}{J^2}\). Y, G are essentially bounded. Then

Suppose there are two solutions \((Y^{(1)},Z^{(1)}),(G^{(1)},E^{(1)})\) and \((Y^{(2)},Z^{(2)}),(G^{(2)},E^{(2)})\). Denote \({\bar{Y}} = Y^{(1)} - Y^{(2)}, {\bar{Z}} = Z^{(1)} - Z^{(2)}, {\bar{G}} = G^{(1)} - G^{(2)}, {\bar{E}} = E^{(1)} - E^{(2)}\). Then

Applying Itô’s lemma to \(\Vert {\bar{G}}_s\Vert ^2_2+\Vert {\bar{Y}}_s\Vert ^2_2\) and taking conditional expectation, we have

By Hölder's inequality, we have

Other terms can be treated in a similar way. Finally,

Consider \(s\in [T-\delta ,T]\) and denote \(G_\delta = \Vert {\bar{G}}_\cdot \Vert _{L^\infty _{{\mathcal {F}}}(T -\delta , T; {\mathbb {R}})}\), \(Y_\delta = \Vert {\bar{Y}}_\cdot \Vert _{L^\infty _{{\mathcal {F}}}(T -\delta , T; {\mathbb {R}})}\). We obtain

Let \(I_\delta = G_\delta \vee Y_\delta \). Taking supremum on the left-hand side over \(s\in [T-\delta ,T]\) yields

By choosing sufficiently small \(\delta \) such that \(C\sqrt{\delta } < 1\), we have \(G^2_\delta = Y^2_\delta = 0\). The same steps are repeated on \([T-2\delta ,T-\delta ], [T-3\delta ,T-2\delta ],...\), until time 0 is reached. The uniqueness follows. \(\square \)
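The final step is a short absorption argument. As a sketch, assume that the estimate obtained after taking the supremum has the form \(I_\delta ^2 \le C\sqrt{\delta }\, I_\delta ^2\) with C independent of \(\delta \); this form is an assumption consistent with the condition \(C\sqrt{\delta } < 1\) and is not quoted from the display above. Since Y and G are essentially bounded, \(I_\delta < \infty \), and therefore

$$\begin{aligned} (1 - C\sqrt{\delta })\, I_\delta ^2 \le 0 \quad \text {with} \quad 1 - C\sqrt{\delta } > 0 \quad \Longrightarrow \quad I_\delta = 0 \quad \Longrightarrow \quad G_\delta = Y_\delta = 0. \end{aligned}$$

Because \(\delta \) is chosen in terms of C only, the same \(\delta \) can be reused on each subinterval, and \(\lceil T/\delta \rceil \) steps cover \([0,T]\).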

B.7 Proof of Lemma 4.16

Proof

Suppose there is another solution pair \((X, {\bar{u}})\), let

By \({\tilde{\varLambda }}(t;t)=0\), we derive

Then

Finally, we can show

This BSDE has exactly the same form as in [11], so it admits a unique solution \(({\bar{p}}(\cdot ;t), {\bar{k}}(\cdot ; t) ) \) in the space \(L^q_{{\mathcal {F}}}(\varOmega ; \,C(t,T;{\mathbb {R}}), {\mathbb {P}})\) \(\times \) \(L^q_{{\mathcal {F}}}(t, T;\, {\mathbb {R}}^d, {\mathbb {P}}) \) for any \(q \in (1,2)\). Therefore, \({\bar{p}}(s;t) = 0, {\bar{k}}(s;t) = 0\). \(\square \)

B.8 Proof of Proposition 4.18

Proof

Consider the BSDE (B.10) for (Y, G, Z, E). For notational simplicity, introduce functions \(f^y\) and \(f^g\) for the drivers to rewrite (B.10) as

Lemma 4.14 shows \(0 < Y, G \le \frac{1}{c}\) for a constant \(0< c < 1\). Indeed, c depends on the value of T. Therefore, we use notation c(T) to highlight this dependence. As in [27, Proposition 3.1], consider a truncation function \(\rho _\kappa \) which is a smooth modification of the centered Euclidean ball of radius \(\kappa \). Denote \((Y^\kappa , G^\kappa , Z^\kappa , E^\kappa )\) as the solution to the truncated BSDE

where \(f^y_\kappa \triangleq f^y(\cdot , \varphi (\cdot ), \cdot , \rho _\kappa (\cdot ), \cdot )\) and \(f^g_\kappa \triangleq f^g(\cdot , \varphi (\cdot ), \cdot , \rho _\kappa (\cdot ), \cdot )\) truncate on (Z, E). First consider that \(b_\vartheta \) is differentiable. Then \((\vartheta , Y, G, Z, E)\) is differentiable with respect to \(\zeta \) in (4.12) and

where \(\nabla \vartheta _t = (\partial \vartheta _{it}/ \partial \zeta _{jt} )_{1 \le i, j \le d}\), etc., are defined as their counterparts in [26, Theorem 3.1], and we omit the arguments of \(f^y_\kappa \) and \(f^g_\kappa \) for simplicity. One difficulty is that \(\nabla Y^\kappa _t\) and \(\nabla G^\kappa _t\) are coupled. The idea is to truncate \(\nabla Y^\kappa _t\) in the equation for \(\nabla G^\kappa _t\) and \(\nabla G^\kappa _t\) in the equation for \(\nabla Y^\kappa _t\). Without loss of generality, we use the same constant \(\kappa \), and the truncation function is denoted by \({\bar{\rho }}_\kappa \). Denote \((\nabla {\bar{Y}}^\kappa , \nabla {\bar{G}}^\kappa , \nabla {\bar{Z}}^\kappa , \nabla {\bar{E}}^\kappa )\) as the solution to

Note the BSDE above uses \((Y^\kappa , G^\kappa , Z^\kappa , E^\kappa )\). Since \(\nabla _z f^y_\kappa \) and \(\nabla _e f^g_\kappa \) are essentially bounded, we can apply Girsanov’s theorem to introduce a Brownian motion \(W^y_t \triangleq W^{\mathbb {P}}_t - \int ^t_0 \nabla _z f^y_\kappa ds\) under measure \({\mathbb {Q}}^y\) and \(W^g_t \triangleq W^{\mathbb {P}}_t - \int ^t_0 \nabla _e f^g_\kappa ds\) under \({\mathbb {Q}}^g\). Then

Since \(\Vert \nabla \vartheta _t\Vert _2 \le e^{K_b T}\) and

it is straightforward to show

Then, for T sufficiently small, the right-hand sides of the two inequalities are smaller than \(\kappa \). Therefore, the truncation \({\bar{\rho }}_\kappa \) is not binding and \((\nabla {\bar{Y}}^\kappa , \nabla {\bar{G}}^\kappa , \nabla {\bar{Z}}^\kappa , \nabla {\bar{E}}^\kappa ) =(\nabla Y^\kappa , \nabla G^\kappa , \nabla Z^\kappa , \nabla E^\kappa )\). With Malliavin calculus as in [26, Theorem 3.1], a version of \((Z^\kappa _t, E^\kappa _t)\) is given by \((\nabla Y^\kappa _t (\nabla \vartheta _t)^{-1}\sigma _\vartheta (t), \nabla G^\kappa _t (\nabla \vartheta _t)^{-1}\sigma _\vartheta (t))\). Since \(\Vert (\nabla \vartheta _t)^{-1}\sigma _\vartheta (t)\Vert _2 \le C_\sigma e^{K_b T}\), we can further select T small enough that the truncation \(\rho _\kappa \) is also not binding. It follows that (Z, E) is essentially bounded, and therefore \({\tilde{U}}\) is essentially bounded.
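The exponential bounds on \(\nabla \vartheta \) and its inverse used in this argument follow from Gronwall's inequality. As a sketch, assume that the factor process in (4.12) has the form \(d\vartheta _t = b_\vartheta (t,\vartheta _t)dt + \sigma _\vartheta (t) dW_t\) with initial value \(\zeta \), that \(b_\vartheta \) is differentiable and Lipschitz in \(\vartheta \) with constant \(K_b\), and that \(\Vert \sigma _\vartheta (t)\Vert _2 \le C_\sigma \); this form is assumed here for illustration and is not quoted from (4.12). Because \(\sigma _\vartheta \) does not depend on \(\vartheta \), the derivative with respect to the initial value solves a linear equation pathwise:

$$\begin{aligned} \nabla \vartheta _t = I_d + \int _0^t \nabla _\vartheta b_\vartheta (s,\vartheta _s)\, \nabla \vartheta _s\, ds \quad \Longrightarrow \quad \Vert \nabla \vartheta _t\Vert _2 \le 1 + K_b \int _0^t \Vert \nabla \vartheta _s\Vert _2\, ds \quad \Longrightarrow \quad \Vert \nabla \vartheta _t\Vert _2 \le e^{K_b t} \le e^{K_b T}. \end{aligned}$$

Under the same assumptions, \((\nabla \vartheta _t)^{-1}\) solves the analogous linear equation with coefficient \(-\nabla _\vartheta b_\vartheta \), so it obeys the same bound, and hence \(\Vert (\nabla \vartheta _t)^{-1}\sigma _\vartheta (t)\Vert _2 \le C_\sigma e^{K_b T}\).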

When \(b_\vartheta \) is not differentiable, the result can be proved by a standard approximation and stability results for Lipschitz BSDEs as noted in [26, 27]. \(\square \)

Cite this article

Han, B., Pun, C.S. & Wong, H.Y. Robust Time-Inconsistent Stochastic Linear-Quadratic Control with Drift Disturbance. Appl Math Optim 86, 4 (2022). https://doi.org/10.1007/s00245-022-09871-2

DOI: https://doi.org/10.1007/s00245-022-09871-2

Keywords

- Robust control

- Stochastic linear-quadratic control

- Time-inconsistent preference

- Forward-backward stochastic differential equation