Abstract

The brain tends to associate specific features of stimuli across sensory modalities. For example, the pitch of a sound is associated with spatial elevation: higher-pitched sounds are perceived as being “up” in space and lower-pitched sounds as being “down.” Here, we investigated whether changes in the pitch of sounds, like changes in their location, could influence visual motion perception. We demonstrated that sounds alternating between upper and lower locations induced illusory vertical motion of a static visual stimulus, whereas sounds alternating between higher and lower pitch did not induce this illusion. Pitch did not even modulate the visual motion perception induced by sounds alternating in up/down location. Interestingly, however, sounds alternating in higher/lower pitch could drive visual motion perception if they had been paired with vertical visual apparent motion in a preceding exposure phase. Thus, only after prolonged exposure did the pitch of a sound become an inducer of upward/downward visual motion. This occurred even when the pitch and location of the sounds were paired incongruently during exposure. These findings indicate that pitch–space correspondence is not strong enough to drive or modulate visual motion perception. However, associative exposure could increase the salience of pitch–space relationships, after which pitch alone could induce visual motion perception.

Notes

We confirmed that the higher- and lower-pitched stimuli could be discriminated by pitch but not by amplitude. We sequentially presented a higher- and a lower-pitched tone, in either order, separated by a 1,000-ms interstimulus interval (ISI), and asked 10 participants to judge which tone was higher in pitch or larger in amplitude (the judgment dimension was randomly assigned on each trial). Pitch discrimination performance was nearly perfect: the percentage of correct responses (standard error of the mean) was 94.5 % (1.9 %) and 93 % (2.1 %) for monaural and binaural presentation, respectively. In contrast, amplitude discrimination performance did not differ significantly from chance: 54.5 % (9.0 %) and 55.0 % (8.9 %), t(9) = 0.50 and 0.58, for monaural and binaural presentation, respectively.
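The t values in the note can be checked against the summary statistics. Below is a minimal sketch in Python, assuming (the note does not state the test explicitly) that each t comes from a one-sample t test of percentage correct against the 50 % chance level of the two-alternative judgment, so that t = (mean − 50) / SEM with n − 1 = 9 degrees of freedom. The helper names are hypothetical, and the inputs are the rounded values reported in the note.

```python
"""Recompute one-sample t statistics against chance from reported summary values.

Assumption (not stated in the note): each t value comes from a one-sample
t test of percentage correct against the 50 % chance level of a
two-alternative judgment, with n = 10 participants.
"""
from math import sqrt

CHANCE = 50.0  # percent correct expected by guessing in a two-alternative task
N = 10         # participants in the control experiment


def t_against_chance(mean_pct: float, sem_pct: float) -> float:
    """One-sample t: (mean - chance) / SEM, where SEM = SD / sqrt(n)."""
    return (mean_pct - CHANCE) / sem_pct


def sd_from_sem(sem_pct: float, n: int = N) -> float:
    """Recover the between-participant SD implied by a reported SEM."""
    return sem_pct * sqrt(n)


# Rounded amplitude-discrimination summaries from the note: (mean %, SEM %).
amplitude = {"monaural": (54.5, 9.0), "binaural": (55.0, 8.9)}

for label, (mean_pct, sem_pct) in amplitude.items():
    t = t_against_chance(mean_pct, sem_pct)
    sd = sd_from_sem(sem_pct)
    print(f"{label}: t({N - 1}) = {t:.2f}, implied SD = {sd:.1f} %")
```

Run on the rounded summaries, this prints t(9) = 0.50 for the monaural condition, matching the note, and t(9) = 0.56 for the binaural condition, close to the reported 0.58; the remaining difference cannot be resolved from the rounded summary values alone. The implied between-participant SD of about 28 percentage points also shows why mean performance near 55 % is far from significant with 10 participants.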

References

Adams WJ, Graf EW, Ernst MO (2004) Experience can change the “light-from-above” prior. Nat Neurosci 7:1057–1058

Ahissar M, Hochstein S (1993) Attentional control of early perceptual learning. Proc Natl Acad Sci USA 90:5718–5722

Arman AC, Ciaramitaro VM, Boynton GM (2006) Effects of feature-based attention on the motion aftereffect at remote locations. Vision Res 46:2968–2976

Bernstein IH, Edelstein BA (1971) Effects of some variations in auditory input upon visual choice reaction time. J Exp Psychol 87:241–247

Brainard DH (1997) The psychophysics toolbox. Spat Vis 10:433–436

Calvert GA, Spence C, Stein BE (eds) (2004) The handbook of multisensory processing. MIT Press, Cambridge

Cavanagh P, Favreau OE (1985) Color and luminance share a common motion pathway. Vision Res 25:1595–1601

Chiou R, Rich AN (2012) Cross-modality correspondence between pitch and spatial location modulates attentional orienting. Perception 41:339–353

Dolscheid S, Shayan S, Majid A, Casasanto D. (2011) The thickness of musical pitch: psychophysical evidence for the Whorfian hypothesis. In: Proceedings of the 33rd Annual Conference of the Cognitive Science Society, pp 537–542

Ernst MO (2005) A Bayesian view on multimodal cue integration. In: Knoblich G, Thornton I, Grosjean M, Shiffrar M (eds) Perception of the human body perception from the inside out. Oxford University Press, New York, pp 105–131

Ernst MO (2007) Learning to integrate arbitrary signals from vision and touch. J Vis 7(7):1–14

Ernst MO, Bülthoff HH (2004) Merging the senses into a robust percept. Trends Cogn Sci 8:162–169

Evans KK, Treisman A (2010) Natural cross-modal mappings between visual and auditory features. J Vis 10(1):6: 1–12

Guzman-Martinez E, Ortega L, Grabowecky M, Mossbridge J, Suzuki S (2012) Interactive coding of visual spatial frequency and auditory amplitude-modulation rate. Curr Biol 22:383–388

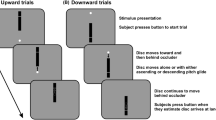

Hidaka S, Manaka Y, Teramoto W, Sugita Y, Miyauchi R, Gyoba J, Suzuki Y, Iwaya Y (2009) Alternation of sound location induces visual motion perception of a static object. PLoS ONE 4:e8188

Hidaka S, Teramoto W, Kobayashi M, Sugita Y (2011a) Sound-contingent visual motion aftereffect. BMC Neurosci 12:44

Hidaka S, Teramoto W, Sugita Y, Manaka Y, Sakamoto S, Suzuki Y (2011b) Auditory motion information drives visual motion perception. PLoS ONE 6:e17499

Klapetek A, Ngo MK, Spence C (2012) Does crossmodal correspondence modulate the facilitatory effect of auditory cues on visual search? Atten Percept Psychophys 74:1154–1167

Kobayashi M, Teramoto W, Hidaka S, Sugita Y (2012a) Indiscriminable sounds determine the direction of visual motion. Sci Rep 2:365

Kobayashi M, Teramoto W, Hidaka S, Sugita Y (2012b) Sound frequency and aural selectivity in sound-contingent visual motion aftereffect. PLoS ONE 7:e36803

Ludwig VU, Adachi I, Matsuzawa T (2011) Visuoauditory mappings between high luminance and high pitch are shared by chimpanzees (Pan troglodytes) and humans. Proc Natl Acad Sci USA 108:20661–20665

Macmillan NA, Creelman CD (2004) Detection theory: a user’s guide, 2nd edn. Lawrence Erlbaum Associates Inc, New Jersey

Maeda F, Kanai R, Shimojo S (2004) Changing pitch induced visual motion illusion. Curr Biol 14:R990–R991

Marks LE (2004) Cross-modal interactions in speeded classification. In: Calvert GA, Spence C, Stein BE (eds) Handbook of multisensory processes. MIT Press, Cambridge, pp 85–105

Mateeff S, Hohnsbein J, Noack T (1985) Dynamic visual capture: apparent auditory motion induced by a moving visual target. Perception 14:721–727

Mossbridge JA, Grabowecky M, Suzuki S (2011) Changes in auditory frequency guide visual-spatial attention. Cognition 121:133–139

Mudd SA (1963) Spatial stereotypes of four dimensions of pure tone. J Exp Psychol 66:347–352

Parise C, Spence C (2008) Synesthetic congruency modulates the temporal ventriloquism effect. Neurosci Lett 442:257–261

Parise CV, Spence C (2009) “When birds of a feather flock together”: synesthetic correspondences modulate audiovisual integration in non-synesthetes. PLoS ONE 4:e5664

Parise CV, Spence C (2012) Audiovisual crossmodal correspondences and sound symbolism: a study using the implicit association test. Exp Brain Res 220:319–333

Pelli DG (1997) The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat Vis 10:437–442

Pratt CC (1930) The spatial character of high and low tones. J Exp Psychol 13:278–285

Roffler SK, Butler RA (1968) Factors that influence the localization of sound in the vertical plane. J Acoust Soc Am 43:1255–1259

Rusconi E, Kwan B, Giordano BL, Umiltà C, Butterworth B (2006) Spatial representation of pitch height: the SMARC effect. Cognition 99:113–129

Sadaghiani S, Maier JX, Noppeney U (2009) Natural, metaphoric, and linguistic auditory direction signals have distinct influences on visual motion processing. J Neurosci 29:6490–6499

Spence C (2011) Crossmodal correspondences: a tutorial review. Atten Percept Psychophys 73:971–995

Spence C, Deroy O (2012) Crossmodal correspondences: innate or learned? Iperception 3:316–318

Sweeny TD, Guzman-Martinez E, Ortega L, Grabowecky M, Suzuki S (2012) Sounds exaggerate visual shape. Cognition 124:194–200

Teramoto W, Hidaka S, Sugita Y (2010a) Sounds move a static visual object. PLoS ONE 5:e12255

Teramoto W, Manaka Y, Hidaka S, Sugita Y, Miyauchi R, Sakamoto S, Gyoba J, Iwaya Y, Suzuki Y (2010b) Visual motion perception induced by sounds in vertical plane. Neurosci Lett 479:221–225

Walker R (1987) The effects of culture, environment, age, and musical training on choices of visual metaphors for sound. Percept Psychophys 42:491–502

Walker P, Bremner JG, Mason U, Spring J, Mattock K, Slater A, Johnson SP (2010) Preverbal infants’ sensitivity to synaesthetic cross-modality correspondences. Psychol Sci 21:21–25

Zangenehpour S, Zatorre RJ (2010) Crossmodal recruitment of primary visual cortex following brief exposure to bimodal audiovisual stimuli. Neuropsychologia 48:591–600

Acknowledgments

We thank Wouter D.H. Stumpel for his technical support. We are grateful to the anonymous reviewers for their valuable and insightful comments and suggestions on earlier versions of the manuscript. This research was supported by the Ministry of Education, Culture, Sports, Science and Technology, Grant-in-Aid for Specially Promoted Research (No. 19001004) and the Rikkyo University Special Fund for Research.

Electronic supplementary material

Supplementary material 1 (MOV 5970 kb)

Supplementary material 2 (MOV 1671 kb)

Supplementary material 3 (MOV 3285 kb)

About this article

Cite this article

Hidaka, S., Teramoto, W., Keetels, M. et al. Effect of pitch–space correspondence on sound-induced visual motion perception. Exp Brain Res 231, 117–126 (2013). https://doi.org/10.1007/s00221-013-3674-2
