Abstract

People have a variety of sources of information (cues) about surface slant at their disposal. We used a simple placing task to evaluate the relative importance of three such cues (motion parallax, binocular disparity and texture) within the space in which people normally manipulate objects. To do so, we projected a stimulus onto a rotatable screen. This allowed us to manipulate texture cues independently of binocular disparity and motion parallax. We asked people to stand in front of the screen and place a cylinder on the screen. We analysed the cylinder’s orientation just before contact. Participants mainly relied on binocular cues (weight between 50 and 90%), in accordance with binocular cues being known to be reliable when the stimulus surface is nearby and almost frontal. Texture cues contributed between 2 and 18% to the estimated slant. Motion parallax was given a weight between 1 and 9%, despite the fact that it only provided information when the head began to move, which was just before the arm did. Thus motion parallax is used to judge surface slant, even when one is under the impression of standing still.


Introduction

It is often important to accurately judge the slant of surfaces in our nearby environment. Whether placing our foot on the ground when we walk or climb stairs, or our fingers on an object when we grasp it and place it elsewhere, the interaction always involves making contact with surfaces. In order to interact successfully, we need to know the orientation of these surfaces. We have many ways to judge a surface’s orientation, including ones based on texture gradients, binocular disparity and motion parallax.

One important cue that contributes to most people’s slant perception is binocular disparity (see Howard and Rogers 1995 for an extensive review of the literature on binocular vision). The small differences between the images in the two eyes suffice to obtain information about the slant in depth. From the literature on grasping it could be inferred that binocular cues normally dominate our actions. For example, Servos and Goodale (1994) claim that binocular vision is the principal source of information for reaching and grasping movements. However, binocular information does not guarantee correct grasping (Hibbard and Bradshaw 2003), so there is reason to expect other cues to also play a role in guiding our actions.

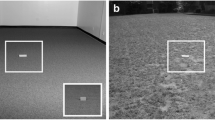

A second cue that contributes to slant perception is the deformation of any surface texture and of shapes’ outlines as a result of perspective. We will refer to the combined information from all such sources as the texture cue. This is the cue that allows us to have a powerful and striking impression of surface slant from a flat image, as exemplified in Fig. 1. It is well known that surface texture provides valuable information on slant perception (Gibson 1950; Stevens 1981; Buckley et al. 1996; Landy and Graham 2004) and motor control (Knill 1998a; Watt and Bradshaw 2003).

Retrieving the slant of a surface from texture cues is based on the assumption that the distribution of texture elements over the surface is more or less even and that shapes are more or less symmetrical, see Rosenholtz and Malik (1997). This assumption holds for a wide variety of natural and artificial objects.

The third cue that we will consider is motion parallax (Rogers and Collett 1989; Rogers and Graham 1979, 1982; Ono and Steinbach 1990; Gillam and Rogers 1991; Ujike and Ono 2001). For a review of the widespread use of motion parallax in the animal kingdom, see Kral (2003). Movement of the head relative to a surface generates changes in the surface’s retinal image over time. These changes depend on the motion of the head relative to the items in the surroundings, and on the items’ relative distances. The latter dependency can be used to obtain information about depth and slant. Watt and Bradshaw (2003) have shown that motion parallax can guide human movements when binocular cues are not available.

When more than one cue is available the cues are combined. It is generally accepted that the estimated slant is a weighted average of the slants indicated by various cues, and that the weight of a cue is related to its accuracy, although some details of the mechanism are still under debate (Landy et al. 1995; Hillis et al. 2002; Hogervorst and Brenner 2004; Rosas et al. 2005; Muller et al. 2007). The relative contribution of binocular cues and texture cues depends on the distance (Hillis et al. 2004) and surface orientation (Knill 1998b; Buckley and Frisby 1993; Ryan and Gillam 1994), because binocular vision is better nearby and texture gradients change least rapidly with the angle of slant for near-frontal surfaces (for purely geometrical reasons). Knill (2005) measured how binocular cues and texture cues to surface orientation are combined to guide motor behaviour. Cue weights were found to be dependent on surface slant and also on the task: more weight was given to binocular cues for controlling hand movements than for making perceptual judgements. Knill used cue-consistent and cue-conflict stimuli in a virtual reality environment.
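The weighted-average rule described above can be illustrated with a minimal sketch. It assumes, as in optimal cue combination (Landy et al. 1995), that each weight is proportional to the inverse of the variance of the cue's estimate; this is only one way of relating weight to accuracy. The function name `combine_cues` and the example variances are hypothetical values chosen for illustration, not measurements.

```python
def combine_cues(estimates, variances):
    """Combine slant estimates (deg) as a reliability-weighted average.

    Assumption: weight = 1 / variance for each cue (inverse-variance weighting).
    Returns the combined slant and the relative weight of each cue.
    """
    weights = {cue: 1.0 / variances[cue] for cue in estimates}
    total = sum(weights.values())
    slant = sum(weights[cue] * estimates[cue] for cue in estimates) / total
    relative = {cue: w / total for cue, w in weights.items()}
    return slant, relative


# Hypothetical cue-conflict example: disparity signals 0 deg, texture 10 deg.
estimates = {"binocular": 0.0, "texture": 10.0}
variances = {"binocular": 1.0, "texture": 4.0}  # made-up variances (deg^2)
slant, relative = combine_cues(estimates, variances)
print(slant, relative)
```

With these made-up numbers the less variable binocular cue receives four times the weight of the texture cue, so the combined estimate lies much closer to the binocular slant than to the midpoint between the two cues.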

We wanted to find out whether motion parallax contributes to judgement of slant in the presence of other cues (such as binocular and texture cues) under more or less natural conditions. To do so, we used a setup in which the physical slant of a surface could be manipulated independently of the slant indicated by a pronounced texture. Thus a conflict between the texture cue and all the other cues was created by violating the assumption that the distribution of texture elements is homogeneous. The judged surface slant was determined by asking participants to swiftly place a flat cylindrical probe on the slanted surface (as in Knill 2005). Our main interest was in the extent to which head movements and the resulting motion parallax contribute to the perceived surface slant. So, conditions in which the head could move freely were compared with one in which a head restraint was used. We compared conditions with and without binocular vision in order to be able to evaluate the importance of motion parallax in relation to this cue.

Methods

Participants

Five people, four of whom were male, participated in the experiment. All participants gave their informed consent prior to their inclusion in the study. The experiment was part of an ongoing research program that was approved by the local ethics committee. All participants were right-handed, had normal or corrected-to-normal vision and good binocular vision (stereo acuity <40 arcseconds).

Experimental setup

Participants stood upright in front of a large rotatable screen. A sketch of the setup is given in Fig. 2. The screen was a plexiglass plate covered with projection foil. Images were projected from below by a Hitachi CP-X325 LCD projector with a resolution of 1024 × 768 pixels. The screen and the projector could be rotated as a whole. Participants wore computer-controlled PLATO shutter-glasses (Milgram 1987), with which we could alternate between monocular and binocular vision. A chessboard pattern was projected onto the screen (see Fig. 3). Slants were defined relative to the gravity-defined horizontal. A grey ring, 104 mm in diameter, indicated the target position for the probe. Two positions of the ring, one near the participant (the target’s centre 100 mm below the stimulus centre) and one further away (100 mm above the stimulus centre), were displayed in random order to make sure that participants did not simply repeat the previous movement. Figure 3 shows the stimuli with 0° and 10° texture slant relative to the surface’s physical slant. The geometry of the stimulus was calculated by projecting the texture-defined slant onto the physically rotated surface. The projection was calculated from the position of the observer’s eyes. The 0° stimulus was a 40 cm square, with 40 cm corresponding to a visual angle of about 27°. A dark grey rim was drawn around the stimulus to mask any real edges that could become visible due to reflections within the set-up. To avoid illuminating objects around the set-up, the luminance of the image at the position of the eyes was limited to 0.4 cd m⁻². This was achieved by placing filters in front of the projector.
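The projection step described above can be sketched as a ray–plane intersection: each point of the simulated (texture-defined) plane is moved along the line of sight until it meets the physical screen. This is only an illustration under stated assumptions, not the software used in the experiment. The coordinate frame, the sign convention for slant, the use of a single viewpoint and the eye position in the example are all hypothetical, as is the function name `project_to_screen`.

```python
import math


def project_to_screen(u, v, texture_slant_deg, surface_slant_deg, eye):
    """Map a point (u, v) of the simulated texture plane to screen coordinates.

    Assumptions: both planes pass through the stimulus centre (the origin) and
    are rotated about the left-right x-axis; x points right, y away from the
    participant, z up; positive slant raises the far edge. `eye` is the
    (x, y, z) position of a single viewpoint, in the same units as u and v.
    """
    theta = math.radians(texture_slant_deg)
    phi = math.radians(surface_slant_deg)
    # Point on the simulated (texture-defined) plane.
    q = (u, v * math.cos(theta), v * math.sin(theta))
    # Unit normal of the physical screen.
    n = (0.0, -math.sin(phi), math.cos(phi))
    n_dot_eye = sum(a * b for a, b in zip(n, eye))
    n_dot_q = sum(a * b for a, b in zip(n, q))
    denom = n_dot_eye - n_dot_q
    if abs(denom) < 1e-12:
        raise ValueError("line of sight is parallel to the screen")
    # Intersection of the ray eye -> q with the screen plane (n . p = 0).
    t = n_dot_eye / denom
    p = tuple(e + t * (qk - e) for e, qk in zip(eye, q))
    # Express the intersection in coordinates within the screen plane.
    return p[0], p[1] * math.cos(phi) + p[2] * math.sin(phi)


eye = (0.0, -0.5, 0.65)  # hypothetical eye position in metres
consistent = project_to_screen(0.1, 0.2, 10.0, 10.0, eye)
conflict = project_to_screen(0.0, 0.2, 10.0, 0.0, eye)
print(consistent, conflict)
```

When the texture slant equals the surface slant the mapping leaves the point unchanged, which corresponds to the consistent stimuli. In the conflict case the image is stretched or compressed on the screen so that, from the assumed viewpoint, it matches a surface with the texture-defined slant.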

Fig. 2 Participants were standing upright, facing the screen. They moved a probe from a starting position 50 cm to the right of the surface midline to a target position on the surface (indicated by the grey ring). Participants wore PLATO glasses with which we could switch between no, monocular and binocular vision. During the experiment, only the slanted surface was visible.

Fig. 3 Examples of consistent (left) and conflict (right) images. The left panel shows the 0° texture slant on a 0° surface slant as seen from above. The right panel shows the 10° texture slant on the 0° surface slant. The deformation of the stimulus is consistent with the actual viewing geometry but for clarity the image is presented as seen from above. The target (grey ring) indicates the position at which participants have to place the probe. The targets are shown at the ‘near’ position. The dark grey rim’s shape is in accordance with the texture cue.

The probe was a flat cylinder (diameter = 104 mm, height = 22 mm, mass = 0.2 kg). Movements of the probe were registered by an Optotrak 3020 system (Northern Digital Inc., Waterloo, ON, Canada). This system tracks the position of active infrared markers with an accuracy better than 0.5 mm. The 3D positions of five markers on the probe were tracked at a rate of 200 Hz. The position, orientation and velocity of the probe were calculated from these data.

Procedure

Experiments were performed in a completely dark room. The dark environment and the low intensity of the stimuli ensured that there was no visible external reference frame. Participants were instructed to place the probe at the indicated target position on the surface. They were to start moving as soon as the target was visible. Stimuli were shown for 2.5 s. All movements were completed well within this interval.

In order to avoid dark adaptation a bright lamp was turned on for 5 s immediately after each trial. During this period participants placed the probe at the starting position, 50 cm to the right of the midline of the screen. Then the light was turned off for about 5 s, during which time the experimenter adjusted the orientation of the screen in preparation for the next trial.

Figure 4 shows the six combinations of surface slant and texture slant that were used in the experiment. The different combinations could be viewed monocularly or binocularly. The four consistent combinations (on the diagonal in Fig. 4) were used as a standard. The physical surface slant (see Fig. 2) was −10°, 0°, 10° or 20°, and the image on the surface was as shown on the left in Fig. 3. The two conflict combinations either involved presenting the image shown on the right in Fig. 3 on a horizontal surface (surface slant 0°; texture slant 10°) or presenting a similarly transformed image (slanted in the opposite direction) on a surface with 10° slant. All six stimuli were presented under various conditions. In total there were four experimental conditions, each consisting of 192 trials, divided into 3 blocks of 64 trials. Within every condition 75% of the trials were without conflict and 25% involved a conflict between texture and the other cues present in that condition.

Fig. 4 The six combinations of physical surface slant (continuous lines) and slant suggested by texture (dashed lines) that were used in the experiment. In four cases there was no conflict between the cues (solid disks). In two cases there was a conflict (open disks). The slants have been exaggerated for clarity.

We used four conditions in which different combinations of the available cues were presented. The choice of conditions will become clear when we describe the data analysis. We chose three conditions with which we could calculate the five parameters of our model, and one condition to test one of our assumptions.

In the ‘binocular’ condition viewing was binocular and head-free in both conflict and consistent trials. In this condition all cues to slant perception were available. In the ‘monocular’ condition the conflict and consistent trials were both presented monocularly and head-free. Stimuli were viewed with the left or right eye in random order. No binocular cues were available, but all other cues were present. In the ‘biteboard’ condition the head was fixed in combination with monocular viewing. The biteboards were made individually with an impression of the participant’s teeth. The biteboard severely limits head movements, removing information from motion parallax.

In the consistent trials of the ‘mixed’ condition the screen was viewed monocularly (75% of all trials), but in the conflict trials (25%) it was viewed binocularly. This condition was included to evaluate whether participants adapt their strategy at the level of a session rather than per trial. In the ‘binocular’ condition binocular information was always reliable, so participants could have learnt to use this cue. In the ‘mixed’ condition, in contrast, binocular cues were absent in the majority of trials, so participants could have learnt to use texture or motion parallax. Note that the 25% binocular conflict trials in the ‘mixed’ condition are identical to the conflict trials in the ‘binocular’ condition, whereas the 75% consistent trials are identical to the consistent trials in the ‘monocular’ condition. Thus the ‘mixed’ condition serves as a control condition to test whether the weight given to the cues stays about the same when the viewing conditions on other trials change.

Analysis

During some moments of some trials the participant’s fingers or hand occluded one or more of the five markers. The position of each marker relative to the centre of the probe is known, so the position and orientation of the probe can be calculated from any set of at least three markers. Frames in which fewer than three marker positions were known were not analysed. Figure 5 shows a schematic side-view of the path followed by the probe. The end of the movement was defined as the first sample at which the centre of the probe was less than 2 mm from the screen. The probe orientation was averaged over all samples at which the centre of the probe was between 100 and 20 mm from its position at the end of the movement (the grey line segments in Fig. 5). The last 20 mm of the path were excluded to avoid considering moments at which the edge of the probe could be in contact with the real surface of the projection screen.
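The selection and averaging of samples described above can be sketched as follows. This is a simplified illustration rather than the analysis code used in the study. It assumes that the three markers passed to `plane_slant_deg` lie in a plane parallel to the probe's flat face, that slant is a rotation about the left-right x-axis with z pointing up, and that each frame already carries the probe centre, its distance to the screen and its slant (`None` when fewer than three markers were visible). All function names and the frame layout are hypothetical.

```python
import math


def plane_slant_deg(p1, p2, p3):
    """Slant (deg, about the x-axis) of the plane through three marker positions."""
    a = [p2[k] - p1[k] for k in range(3)]
    b = [p3[k] - p1[k] for k in range(3)]
    # Plane normal from the cross product of two in-plane vectors.
    n = [a[1] * b[2] - a[2] * b[1],
         a[2] * b[0] - a[0] * b[2],
         a[0] * b[1] - a[1] * b[0]]
    if n[2] < 0:  # make the normal point upwards
        n = [-c for c in n]
    return math.degrees(math.atan2(-n[1], n[2]))


def average_slant(frames, contact_mm=2.0, near_mm=20.0, far_mm=100.0):
    """Mean probe slant over samples 100 to 20 mm before the end of the movement.

    The end of the movement is the first frame in which the probe centre is
    closer than `contact_mm` to the screen.
    """
    end = next((f for f in frames if f["screen_dist"] < contact_mm), None)
    if end is None:
        return None
    selected = []
    for f in frames:
        if f is end:
            break
        if f["slant"] is None:  # fewer than three markers visible
            continue
        d = math.dist(f["centre"], end["centre"])
        if near_mm <= d <= far_mm:
            selected.append(f["slant"])
    return sum(selected) / len(selected) if selected else None


# Synthetic trial: the probe descends vertically; all values are made up.
heights = [150.0, 90.0, 70.0, 50.0, 10.0, 1.0]
slants = [0.0, 4.0, None, 8.0, 12.0, 16.0]
frames = [{"centre": (0.0, 0.0, z), "screen_dist": z, "slant": s}
          for z, s in zip(heights, slants)]
mean_slant = average_slant(frames)
tilt = plane_slant_deg((0, 0, 0), (1, 0, 0),
                       (0, math.cos(math.radians(10)), math.sin(math.radians(10))))
print(mean_slant, tilt)
```

In the synthetic trial only the samples 89 and 49 mm from the end position fall inside the window, so the frame without orientation data and the samples within the last 20 mm do not affect the average.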

Fig. 5 The upper left panel gives a schematic side view of the probe’s path (curved thin line) towards the slanted surface (straight thick line). The position and orientation of the probe were measured by the Optotrak system. The upper right panel shows a side view of a few paths towards the ‘far’ target position. The average probe orientation is determined during the last 100 to 20 mm before the end of the movement (indicated schematically by the dark-grey line segments). The lower panels show examples of the probe orientation with time intervals of 25 ms.

To understand our method for determining the cue weights, consider the two extreme hypothetical outcomes shown in Fig. 6. At the one extreme, if information from the texture cue is not used at all, probe orientations will always follow the slant of the physical surface (left panel). At the other extreme, if the observer only relies on texture cues the orientation of the probe will follow the texture-defined slant (right panel). In the latter case the line connecting the cue-conflict conditions has approximately the opposite slope of that for consistent conditions. Note that the probe orientations in the consistent trials may differ from the physical surface slant (grey line). Some flattening may arise because the hand may still be rotating towards the surface slant during the last 100 mm of its trajectory. Participants may also rely to some extent on previous slopes that they encountered during the experiment. We will model these effects as a prior for a single surface slant for all conditions.

We assume that the weights that participants give to the different cues are the same in all conditions. This would, for instance, be the case if observers base the weights on the cues’ reliability, as in optimal cue combination (Landy et al. 1995). We can therefore model the estimated slant ($S$) as a weighted average of the slants estimated from each of the available cues ($s_i$), which are all assumed to give veridical estimates except for the prior for a fixed slant (as mentioned in the previous paragraph):

$$S = \frac{\sum_i w_i s_i}{\sum_i w_i} \tag{1}$$

where $w_i$ is the weight (in arbitrary units) given to each available cue, with $i \in \{b, m, t, r, p\}$ indicating binocular vision, motion parallax, texture, a rest category containing any other valid cues, and the prior. Note that we predict that the cue weights ($w_i$) of all available cues will be the same in all conditions, although the relative weight given to a cue ($w_i/\sum_i w_i$) will differ between conditions because it depends on the cues that are available in that condition.

The slant of the prior is a constant. Its value is likely to be near the mean of the slants in all previous conditions, but this is not essential for our analysis:

$$s_p = \text{constant} \tag{2}$$

If $s$ denotes the simulated slant, then the slants indicated by all other available cues are:

$$s_b = s_m = s_t = s_r = s \tag{3}$$

except for the conflict trials, for which the texture differs 10° from all other available cues (see Fig. 4; the texture indicates 10° on the 0° surface and 0° on the 10° surface):

$$s_t = 10^\circ - s \tag{4}$$
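Combining these equations gives, for the consistent trials:

$$S_{\text{consistent}} = \frac{s \sum_{i \neq p} w_i + w_p s_p}{\sum_i w_i} \tag{5}$$

and, for the conflict trials, in which the texture-defined slant is $10^\circ - s$:

$$S_{\text{conflict}} = \frac{s \sum_{i \neq t,p} w_i + w_t\,(10^\circ - s) + w_p s_p}{\sum_i w_i} \tag{6}$$

The sums in Eqs. 5 and 6 are only over cues that were available in each condition. The slopes ($\beta$) of the regression lines in Fig. 6 are given by the first derivatives of the estimated slant with respect to $s$:

$$\beta_{\text{consistent}} = \frac{\mathrm{d}S_{\text{consistent}}}{\mathrm{d}s} = \frac{\sum_{i \neq p} w_i}{\sum_i w_i} \tag{7}$$

$$\beta_{\text{conflict}} = \frac{\mathrm{d}S_{\text{conflict}}}{\mathrm{d}s} = \frac{\sum_{i \neq t,p} w_i - w_t}{\sum_i w_i} \tag{8}$$

Combining Eqs. 7 and 8 yields:

$$\frac{w_t}{\sum_i w_i} = \frac{\beta_{\text{consistent}} - \beta_{\text{conflict}}}{2} \tag{9}$$

and

$$\frac{w_p}{w_t} = \frac{2\,(1 - \beta_{\text{consistent}})}{\beta_{\text{consistent}} - \beta_{\text{conflict}}} \tag{10}$$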
Equations 9 and 10 apply to all experimental conditions as modified for cue availability, except for the ‘mixed’ condition. In the ‘binocular’ condition all cues yield information about the surface’s slant:

$$\left[\frac{\beta_{\text{consistent}} - \beta_{\text{conflict}}}{2}\right]_{\text{binocular}} = \frac{w_t}{w_b + w_m + w_t + w_r + w_p} \tag{11}$$

Similar equations can be written for the other conditions. Binocular information is not available in the ‘monocular’ condition, so $w_b$ does not occur in the equation:

$$\left[\frac{\beta_{\text{consistent}} - \beta_{\text{conflict}}}{2}\right]_{\text{monocular}} = \frac{w_t}{w_m + w_t + w_r + w_p} \tag{12}$$

Similarly, motion parallax ceases to contribute to the estimated slant in the ‘biteboard’ condition, giving:

$$\left[\frac{\beta_{\text{consistent}} - \beta_{\text{conflict}}}{2}\right]_{\text{biteboard}} = \frac{w_t}{w_t + w_r + w_p} \tag{13}$$

Since the weights are in arbitrary units, we are free to define them in such a way that the sum of all weights is one:

$$w_b + w_m + w_t + w_r + w_p = 1 \tag{14}$$

First we determine the weight of the prior relative to that of texture ($w_p/w_t$) for each condition, using Eq. 10. Then, the weighted average of these ratios is calculated for each participant. We found no clear evidence against the assumption that $w_p/w_t$ is the same across conditions. Next, determining the ratios between the slopes in the ‘binocular’, ‘monocular’ and ‘biteboard’ conditions from our data allows us to use Eqs. 11 to 14 to determine the values of the weights ($w_b$, $w_t$, $w_m$ and $w_r$).
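The procedure for obtaining the weights can be sketched in code. This is one possible reading of the model, not the authors' analysis script. It assumes that the texture-defined slant in conflict trials is 10° minus the surface slant, so that half the difference between the consistent and conflict slopes equals the relative weight of texture in that condition, and that one minus the consistent slope equals the relative weight of the prior. It also uses a plain mean of the prior-to-texture ratios where the text specifies a weighted average. The function names are hypothetical. The example checks the inversion by generating slopes from the pooled weights reported in the Results (71, 8, 8, 3 and 10%) and recovering them.

```python
def slopes_from_weights(weights, available):
    """Forward model: predicted (consistent, conflict) slopes for one condition.

    `available` lists the cues present in the condition; the prior 'p' is
    always included.
    """
    total = sum(weights[c] for c in available)
    beta_consistent = (total - weights["p"]) / total
    beta_conflict = (total - weights["p"] - 2.0 * weights["t"]) / total
    return beta_consistent, beta_conflict


def weights_from_slopes(slopes):
    """Invert the model for the 'binocular', 'monocular' and 'biteboard' slopes."""
    rel_texture = {}
    ratios = []
    for cond, (b_cons, b_conf) in slopes.items():
        rel_texture[cond] = (b_cons - b_conf) / 2.0  # w_t / sum of available weights
        ratios.append(2.0 * (1.0 - b_cons) / (b_cons - b_conf))  # w_p / w_t
    ratio_p_t = sum(ratios) / len(ratios)  # plain mean: a simplification
    w_t = rel_texture["binocular"]  # all weights sum to one in this condition
    w_p = ratio_p_t * w_t
    sum_mono = w_t / rel_texture["monocular"]  # w_m + w_t + w_r + w_p
    sum_bite = w_t / rel_texture["biteboard"]  # w_t + w_r + w_p
    return {"b": 1.0 - sum_mono, "m": sum_mono - sum_bite,
            "t": w_t, "r": sum_bite - w_t - w_p, "p": w_p}


# Round trip using the pooled weights reported in the Results.
pooled = {"b": 0.71, "m": 0.08, "t": 0.08, "r": 0.03, "p": 0.10}
cues = {"binocular": "bmtrp", "monocular": "mtrp", "biteboard": "trp"}
slopes = {cond: slopes_from_weights(pooled, avail) for cond, avail in cues.items()}
recovered = weights_from_slopes(slopes)
print(slopes)
print(recovered)
```

Because the forward model uses the same prior-to-texture ratio in every condition, the round trip recovers the input weights. With measured slopes the per-condition ratios differ somewhat, which is why they are averaged before the remaining weights are computed.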

The data of the ‘mixed’ condition were analysed by comparing the slopes (β_conflict and β_consistent) with the matching slopes in the ‘binocular’ and the ‘monocular’ conditions. Data for ‘near’ and ‘far’ target positions were pooled before calculating the slopes. The weights of the cues were calculated for individual participants. We also pooled the data of all participants before calculating the slopes, which yields the weights for ‘All’ participants.

Results

We determined average probe orientations for each participant and condition. The slopes of probe orientation as a function of surface orientation for conflict and consistent conditions enable us to calculate the cue weights, as explained in the ‘methods’ section.

Conditions

The upper panel of Fig. 7 shows the probe orientations in the ‘binocular’ condition. Conflict (open symbols) and consistent (closed symbols) probe orientations are almost the same. The difference between the slopes is small but significant (P < 0.05). The second panel of Fig. 7 shows the probe orientations in the ‘monocular’ condition. The slopes clearly differ between the conflict and the consistent trials (P < 0.01). In the ‘biteboard’ condition (third panel of Fig. 7) the slope for the conflict trials is almost opposite to that for the consistent trials. The difference between the slopes is significant (P < 0.01). The cue weights were calculated using Eqs. 10 to 14 and the values of the regression slopes.

Fig. 7 Pooled data for all participants. Each panel is for one of the four experimental conditions. Average probe orientations are shown for surfaces with (open symbols) and without (closed symbols) conflicts between real slant and texture slant. The error bars show the overall standard deviation. Correct probe orientations for the consistent trials (grey lines), linear regression (black lines) and regression slopes, β (numbers in lower right corner) are also shown. Asterisks indicate whether the difference between the regression slopes is significant (*P < 0.05; **P < 0.01).

The ‘mixed’ condition was included as a control to ascertain that the cue weights do not depend on the viewing conditions on other trials. We compared the monocular consistent trials with the identical trials in the ‘monocular’ condition, and the binocular conflict trials with the identical trials in the ‘binocular’ condition. The regression slope for monocular, consistent trials (βconsistent = 0.72) is not significantly different (P > 0.05) from the same trials in the ‘monocular’ condition (βconsistent = 0.76). The slope of the (binocular) conflict trials in the ‘mixed’ condition (βconflict = 0.61) is slightly but significantly lower (P = 0.04) than for conflict trials in the ‘binocular’ condition (βconflict = 0.72). Thus the weights may not be completely independent of the conditions. The difference was small enough to accept the calculation of the weights on the basis of the assumption that the condition is irrelevant. Our analysis may however underestimate the weight given to the texture cue.

Cue weights

The weight of the binocular cue lies between 50 and 90%, for individual participants (see Fig. 8). The average standard error is 11%. The errors in the cue weights are calculated by the method of propagation of errors based on the errors in the regression slopes. If we determine the slopes across all participants, the binocular weight is 71 ± 6%. The weight of the texture cue lies between 2 and 18% with an average standard error of 3% for individual participants. The weight given to the texture cue is 8 ± 1% across all participants. The weight given to motion parallax lies between 1 and 9% for individual participants with an average standard error of 6%. The weight given to motion parallax is 8 ± 3% across all participants. The weight attributed to the rest category of cues was only 3 ± 2% across all participants. The prior contributed between 6 and 23% for individual participants with an average standard error of 3%. The weight of the prior varies between 7 and 13% across conditions. Across all participants the weight of the prior is 10 ± 2%.

Fig. 8 The weights given to the binocular, texture, motion parallax and other cues and to the prior, for each participant (horizontal axis). The weights are obtained by substituting the slopes of the conflict and consistent data into Eqs. 10 to 14. For ‘All’, the data of all participants in each condition were first pooled and the weights were then determined; these weights are all significantly different from zero. For individual participants the weight of the texture cue was not significantly different from zero in one case, that of the motion cue in four cases and that of the rest category in three cases.

Head movements

Head movements were measured in the monocular viewing condition for three of the participants. Participants move their head considerably when placing the cylinder: EB moved on average 103 mm, JG 53 mm and DdG 37 mm in the lateral direction. Interestingly, the head movement only started just before the arm movement. Shortly before (100 ms) the onset of arm movement (when the probe was 10 mm from the starting position), the head had only moved 10 mm (EB), 4 mm (JG) or 6 mm (DdG). Thus the information from motion parallax is mainly picked up during the arm movement. None of the participants were aware of having made head movements.

Discussion

We used a physically rotatable screen as a surface. The projected stimulus was viewed in a completely dark environment within a space in which objects are normally manipulated. In our analysis systematic deformations that affect a single cue (like depth compression resulting from an erroneous depth estimate) were not considered. Moreover, we assume that the cue weights are the same in all conditions, so that their contributions to the percept only depend on which cues are available. We determined the contributions of binocular disparity, texture cues, motion parallax, a rest category and a prior. Under these conditions and based on these assumptions we conclude that participants mainly relied on binocular information (between 50 and 90%). Texture cues contributed between 2 and 18% to the estimated slant. Motion parallax contributed up to 9%. The prior contributed between 6 and 23%. Residual cues may account for up to 9%.

Comparing conditions with and without head movement revealed that motion parallax plays a role in slant perception. This is evident from the weights (Fig. 8) but also from a comparison of performance in the ‘monocular’ and ‘biteboard’ conditions (Fig. 7). It is not unusual to move the whole body, including the head, when making large arm movements. Besides the mechanical reasons for doing so, we show here that it may also have perceptual advantages.

We included in our analysis a rest category of cues that might contain information about slant that was not manipulated. The results suggest that this category indeed includes cues that yield some information about slant. Accommodation, or the rate at which the image becomes blurred with distance from fixation, might provide such information (Mather 1997; Watt et al. 2005). However, artefacts of our setup such as the possible visible micro texture (fibres in the projection foil or pixels on the screen) and the angular distribution of the light scattered from the surface could play a role too. Taken together in a rest category such cues contribute only a few percent to the estimated slant.

The probe orientations in the consistent trials in Fig. 7 are not equal to the physical surface slant. We incorporated a prior in our model to take into account behaviour that is not related to the instantaneous information, like visual or haptic information from previous trials. The slant indicated by the prior cue is a constant; i.e. it does not depend on the stimulus. Flatter slopes indicate that the prior plays a relatively large role. The weight of the prior is about as large as the weight of the texture cue. One component of the prior could be that the hand orientation is still changing towards its final value at the moment that we sample, which is slightly before contact (Cuijpers et al. 2004). Any biases towards a certain orientation of the hand or towards a certain perceived slant will also contribute to the weight of the prior.

The purpose of having the ‘mixed’ viewing condition was to check whether the weights change under different conditions. Ernst et al. (2000) have shown that haptic feedback can increase the weight given to a visual slant cue that is consistent with the feedback. In our study the haptic feedback was always consistent with the physical slant of the surface, so only the texture cue was sometimes unreliable. With a conflict between surface slant and texture, the haptic feedback may therefore yield a bias towards cues other than texture. Our results show a small difference between the ‘mixed’ condition and the comparable trials in the ‘monocular’ and ‘binocular’ conditions. Thus here too the extent to which cues are used probably depends to some extent on experience in previous trials. This indicates that the estimated slant does not depend only on the accuracy of the presented cues, as is often assumed in theories of optimal cue combination (Landy et al. 1995; Hillis et al. 2002; Muller et al. 2007). It is however possible that the difference arises from less use of motion parallax when binocular information was always available, perhaps because participants move less when there is enough information from sources other than motion parallax. We do not know whether this is the case because we did not measure head movements in all conditions. In any case, these differences are too small to support firm conclusions without further research.

In our study binocular disparity is given most weight, which is in accordance with binocular cues being known to be reliable when the stimulus surface is nearby and almost frontal. Because experimental conditions were all in favour of binocular disparity, the role of motion parallax and texture cues is probably smaller here than in natural viewing conditions. Motion parallax and texture cues both contribute to a small but significant extent to slant perception, although marked differences between participants were observed. Motion parallax was available only briefly and began relatively late, as the head began to move only just before the onset of the arm movement. From animal studies it is known that a range of animals gain depth information by moving from side to side just before the performance of an action (see Kral 2003). We have shown that humans are able to use motion parallax during an action. It was known that monocular depth information can be used to guide our actions (Marotta et al. 1998; Dijkerman et al. 1999; Watt and Bradshaw 2003). It was not known, however, that motion parallax plays a role when other, dominant cues are available and when the head is not actively moved before starting the action. We conclude that motion parallax is used as a cue to manipulate objects in our nearby environment, even when one is under the impression of holding one’s head still. Motion parallax should therefore not be ignored in a ‘static’ task unless the head is really fixed.

References

Buckley D, Frisby JP (1993) Interaction of stereo, texture and outline cues in the shape perception of three-dimensional ridges. Vision Res 33:919–933. doi:10.1016/0042-6989(93)90075-8

Buckley D, Frisby JP, Blake A (1996) Does the human visual system implement an ideal observer theory of slant from texture? Vision Res 36:1163–1176. doi:10.1016/0042-6989(95)00177-8

Cuijpers RH, Smeets JB, Brenner E (2004) On the relation between object shape and grasping kinematics. J Neurophysiol 91:2598–2606. doi:10.1152/jn.00644.2003

Dijkerman HC, Milner AD, Carey DP (1999) Motion parallax enables depth processing for action in a visual form agnosic when binocular vision is unavailable. Neuropsychologia 37:1505–1510. doi:10.1016/S0028-3932(99)00063-9

Ernst MO, Banks MS, Bülthoff HH (2000) Touch can change visual slant perception. Nat Neurosci 3:69–73. doi:10.1038/71140

Gibson JJ (1950) The perception of visual surfaces. Am J Psychol 63:367–384

Gillam B, Rogers B (1991) Orientation disparity, deformation and stereoscopic slant perception. Perception 20:441–448. doi:10.1068/p200441

Hibbard PB, Bradshaw MF (2003) Reaching for virtual objects: binocular disparity and the control of prehension. Exp Brain Res 148:196–201. doi:10.1007/s00221-002-1295-2

Hillis JM, Ernst MO, Banks MS, Landy MS (2002) Combining sensory information: mandatory fusion within, but not between, senses. Science 298:1627–1630. doi:10.1126/science.1075396

Hillis JM, Watt SJ, Landy MS, Banks MS (2004) Slant from texture and disparity cues: optimal cue combination. J Vis 4:967–992. doi:10.1167/4.12.1

Hogervorst MA, Brenner E (2004) Combining cues while avoiding perceptual conflicts. Perception 33:1155–1172. doi:10.1068/p5253

Howard IP, Rogers BJ (1995) Binocular vision and stereopsis. Oxford University Press, New York

Knill DC (1998a) Surface orientation from texture: ideal observers, generic observers and the information content of texture cues. Vision Res 38:1655–1682. doi:10.1016/S0042-6989(97)00324-6

Knill DC (1998b) Discrimination of planar surface slant from texture: human and ideal observers compared. Vision Res 38:1683–1711. doi:10.1016/S0042-6989(97)00325-8

Knill DC (2005) Reaching for visual cues to depth: the brain combines depth cues differently for motor control and perception. J Vis 5:103–115. doi:10.1167/5.2.2

Kral K (2003) Behavioural-analytical studies of the role of head movements in depth perception in insects, birds and mammals. Behav Processes 64:1–12. doi:10.1016/S0376-6357(03)00054-8

Landy MS, Graham N (2004) Visual perception of texture. In: Chalupa LM, Werner JS (eds) The visual neurosciences. MIT Press, Cambridge, pp 1106–1118

Landy MS, Maloney LT, Johnston EB, Young M (1995) Measurement and modeling of depth cue combination: in defense of weak fusion. Vision Res 35:389–412. doi:10.1016/0042-6989(94)00176-M

Marotta JJ, Kruyer A, Goodale MA (1998) The role of head movements in the control of manual prehension. Exp Brain Res 120:134–138. doi:10.1007/s002210050386

Mather G (1997) The use of image blur as a depth cue. Perception 26:1147–1158. doi:10.1068/p261147

Milgram P (1987) A spectacle-mounted liquid-crystal tachistoscope. Behav Res Methods Instrum Comput 19:449–456

Muller CPM, Brenner E, Smeets JBJ (2007) Living up to optimal expectations. J Vis 7:1–10. doi:10.1167/7.3.2

Ono H, Steinbach MJ (1990) Monocular stereopsis with and without head movement. Percept Psychophys 48:179–187

Rogers BJ, Collett TS (1989) The appearance of surfaces specified by motion parallax and binocular disparity. Q J Exp Psychol A 41:697–717. doi:10.1080/14640748908402390

Rogers BJ, Graham ME (1979) Motion parallax as an independent cue for depth perception. Perception 8:125–134. doi:10.1068/p080125

Rogers BJ, Graham ME (1982) Similarities between motion parallax and stereopsis in human depth perception. Vision Res 22:261–270

Rosas P, Wagemans J, Ernst MO, Wichmann FA (2005) Texture and haptic cues in slant discrimination: reliability-based cue weighting without statistically optimal cue combination. J Opt Soc Am A Opt Image Sci Vis 22:801–809

Rosenholtz R, Malik J (1997) Surface orientation from texture: isotropy or homogeneity (or both)? Vision Res 37:2283–2293. doi:10.1016/S0042-6989(96)00121-6

Ryan C, Gillam B (1994) Cue conflict and stereoscopic surface slant about horizontal and vertical axes. Perception 23:645–658. doi:10.1068/p230645

Servos P, Goodale MA (1994) Binocular vision and the on-line control of human prehension. Exp Brain Res 98:119–127. doi:10.1007/BF00229116

Stevens KA (1981) The information content of texture gradients. Biol Cybern 42:95–105

Ujike H, Ono H (2001) Depth thresholds of motion parallax as a function of head movement velocity. Vision Res 41:2835–2843. doi:10.1016/S0042-6989(01)00164-X

Watt SJ, Bradshaw MF (2003) The visual control of reaching and grasping: binocular disparity and motion parallax. J Exp Psychol Hum Percept Perform 29:404–415. doi:10.1037/0096-1523.29.2.404

Watt SJ, Akeley K, Ernst MO, Banks MS (2005) Focus cues affect perceived depth. J Vis 5:834–862. doi:10.1167/5.10.7

Acknowledgments

This research was supported by vidi grant 452-02-007 of the Netherlands Organization for Scientific Research (NWO). We thank Michael Landy for his suggestions.

Rights and permissions

Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License ( https://creativecommons.org/licenses/by-nc/2.0 ), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Louw, S., Smeets, J.B.J. & Brenner, E. Judging surface slant for placing objects: a role for motion parallax. Exp Brain Res 183, 149–158 (2007). https://doi.org/10.1007/s00221-007-1043-8

DOI: https://doi.org/10.1007/s00221-007-1043-8