Abstract

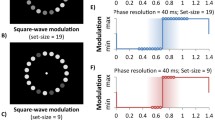

Temporal synchrony is a critical condition for integrating information presented in different sensory modalities. To gain insight into the mechanism underlying synchrony perception of audio-visual signals, we examined the temporal limits within which human participants can detect synchronous audio-visual stimuli. Specifically, we measured the percentage of correct synchrony–asynchrony discriminations as a function of audio-visual lag while changing the temporal frequency and/or modulation waveforms. The audio-visual stimuli were a luminance-modulated Gaussian blob and amplitude-modulated white noise. The results indicated that synchrony–asynchrony discrimination became nearly impossible for periodic pulse trains at temporal frequencies higher than 4 Hz, even when the lag was large enough for discrimination with single pulses (Experiment 1). This temporal limitation cannot be ascribed to peripheral low-pass filters in either vision or audition (Experiment 2), which suggests that the temporal limit reflects a property of a more central mechanism located at or before cross-modal signal comparison. We also found that the functional behaviour of this central mechanism could not be approximated by a linear low-pass filter (Experiment 3). These results are consistent with the hypothesis that the perception of audio-visual synchrony is based on a comparison of salient temporal features individuated from within-modal signal streams.
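For periodic pulse trains, a given audio-visual lag corresponds to a phase shift that depends on the temporal frequency. The following is a minimal sketch of that arithmetic only, not a description of the authors' stimulus code or of their explanation for the 4 Hz limit. The function names and the example lag of 125 ms are illustrative assumptions and do not come from the paper.

```python
def lag_to_phase_deg(lag_ms: float, freq_hz: float) -> float:
    """Phase shift in degrees, wrapped to [0, 360), that a lag produces
    between two periodic trains of the same temporal frequency."""
    period_ms = 1000.0 / freq_hz
    return (lag_ms % period_ms) / period_ms * 360.0


def half_period_ms(freq_hz: float) -> float:
    """Lag in ms that places one train in counter-phase (180 deg) with the other."""
    return 1000.0 / freq_hz / 2.0


if __name__ == "__main__":
    # Hypothetical lag chosen for illustration; not a value reported in the paper.
    example_lag_ms = 125.0
    for freq_hz in (1.0, 2.0, 4.0):
        phase = lag_to_phase_deg(example_lag_ms, freq_hz)
        print(f"{freq_hz} Hz: period {1000.0 / freq_hz} ms, "
              f"lag {example_lag_ms} ms -> {phase} deg")
```

At 4 Hz the period is 250 ms, so a lag of a full period returns the two trains to the same phase relationship, and half a period puts them in counter-phase.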

Notes

An alternative method, i.e. measuring the probability of reporting apparent synchrony as a function of the audio-visual time lag (e.g. Dixon and Spitz 1980), could suffer severely from variation in the participants’ criteria of “simultaneity”. When a generous criterion is applied, the participants would judge different time lags as belonging to the same “synchrony” category even though they could discriminate one lag from another. This is also a serious problem under conditions in which auditory driving induces the perception of an illusory audio-visual synchrony irrespective of the audio-visual relationship.

Why we did not find any effect of spatial location is not obvious, but a few points are worth mentioning. First, past studies showing positional facilitation employed tasks that are not free from response bias. If the effect of spatial location is to stabilise the response bias, it will not affect synchrony–asynchrony discrimination performance. Second, Keetels and Vroomen (2004) reported that the effect of location was small for participants who performed well. Because the participants in the subsidiary experiment were all well-trained, their performance might have already saturated.

In a preliminary observation, we measured the rate of perception of synchrony as a function of audio-visual lag. When the stimulus waveform was a square-wave modulation whose mean was equal to the background, some participants made a significant number of false synchrony responses at a ~180° shift. This error, however, disappeared when we reduced the background luminance to make the visual stimulus invisible during the off phase. To reduce the potential contribution of offset responses, we used a similar background setting for all the stimuli in the main experiments (except for the low-cut single pulse). This could be an objection to the argument that the offset response had some effect in the case of sinusoidal modulation.

Another line of support comes from the finding that audio-visual synchrony detection is nearly impossible for randomly generated pulse trains when the pulse temporal density is high (Fujisaki and Nishida 2004).
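The criterion problem raised in the first note can be illustrated with a standard equal-variance Gaussian signal detection model of the kind described by Green and Swets (1966). This is a sketch under that assumed model, not the analysis used in the paper; the function names, the sensitivity value, and the criterion values are hypothetical. It shows that shifting the criterion changes how often an asynchronous stimulus is reported as "synchronous", while the sensitivity recovered from the two report rates stays the same.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # zero mean, unit variance


def report_rates(d_prime: float, criterion: float) -> tuple[float, float]:
    """Return (P("sync" | synchronous), P("sync" | asynchronous)).

    Assumed model: internal asynchrony evidence is N(0, 1) for synchronous
    stimuli and N(d_prime, 1) for asynchronous ones; the observer reports
    "synchronous" whenever the evidence falls below the criterion.
    """
    p_sync_given_sync = _STD_NORMAL.cdf(criterion)
    p_sync_given_async = _STD_NORMAL.cdf(criterion - d_prime)
    return p_sync_given_sync, p_sync_given_async


def d_prime_from_rates(p_sync_given_sync: float, p_sync_given_async: float) -> float:
    """Recover sensitivity from the two report rates (z-score difference)."""
    return _STD_NORMAL.inv_cdf(p_sync_given_sync) - _STD_NORMAL.inv_cdf(p_sync_given_async)


if __name__ == "__main__":
    true_d_prime = 1.5  # hypothetical sensitivity, for illustration only
    for criterion in (0.5, 2.0):  # strict versus generous "synchrony" criterion
        rates = report_rates(true_d_prime, criterion)
        print(criterion, rates, d_prime_from_rates(*rates))
```

With the generous criterion, the asynchronous stimulus is called "synchronous" far more often even though the underlying discriminability is unchanged, which is why a report-rate measure alone can understate what participants can discriminate.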

References

Bushara KO, Hanakawa T, Immisch I, Toma K, Kansaku K, Hallett M (2003) Neural correlates of cross-modal binding. Nat Neurosci 6:190–195

Dixon NF, Spitz L (1980) The detection of auditory visual desynchrony. Perception 9:719–721

Efron B, Tibshirani RJ (1994) An introduction to the bootstrap. Monographs on statistics and applied probability, no. 5. Chapman & Hall, New York

Fendrich R, Corballis PM (2001) The temporal cross-capture of audition and vision. Percept Psychophys 63:719–725

Forte J, Hogben JH, Ross J (1999) Spatial limitations of temporal segmentation. Vision Res 39:4052–4061

Fujisaki W, Nishida S (2004) Temporal characteristics of synchrony detection of audiovisual signals. Paper presented at the Fifth Annual Meeting of the International Multisensory Research Forum, Universitat de Barcelona, Barcelona, Spain

Fujisaki W, Shimojo S, Kashino M, Nishida S (2004) Recalibration of audiovisual simultaneity. Nat Neurosci 7:773–778

Gebhard JW, Mowbray GH (1959) On discriminating the rate of visual flicker and auditory flutter. Am J Psychol 72:521–529

Green DM, Swets JA (1966) Signal detection theory and psychophysics. Krieger, Huntington

Guski R (2004) Spatial congruity in audiovisual synchrony judgements. Proceedings of 18th International Congress on Acoustics, pp 2059–2062

Holcombe AO, Cavanagh P (2001) Early binding of feature pairs for visual perception. Nat Neurosci 4:127–128

Johnston A, Nishida S (2001) Time perception: brain time or event time? Curr Biol 11:R427–R430

Keetels M, Vroomen J (2004) Spatial separation affects audio-visual temporal synchrony. Paper presented at the Fifth Annual Meeting of the International Multisensory Research Forum, Universitat de Barcelona, Barcelona, Spain

Kelly DH (1979) Motion and vision: II. Stabilized spatio-temporal threshold surface. J Opt Soc Am 69:1340–1349

Lewald J, Guski R (2003) Cross-modal perceptual integration of spatially and temporally disparate auditory and visual stimuli. Brain Res Cogn Brain Res 16:468–478

Lewkowicz DJ (1996) Perception of auditory-visual temporal synchrony in human infants. J Exp Psychol Hum Percept Perform 22:1094–1106

Lu ZL, Sperling G (1995) The functional architecture of human visual motion perception. Vision Res 35:2697–2722

McGurk H, MacDonald J (1976) Hearing lips and seeing voices. Nature 264:746–748

Meredith MA, Nemitz JW, Stein BE (1987) Determinants of multisensory integration in superior colliculus neurons. I. Temporal factors. J Neurosci 7:3215–3229

Morein-Zamir S, Soto-Faraco S, Kingstone A (2003) Auditory capture of vision: examining temporal ventriloquism. Brain Res Cogn Brain Res 17:154–163

Moutoussis K, Zeki S (1997) A direct demonstration of perceptual asynchrony in vision. Proc R Soc Lond B Biol Sci 264:393–399

Munhall KG, Gribble P, Sacco L, Ward M (1996) Temporal constraints on the McGurk effect. Percept Psychophys 58:351–362

Nishida S, Johnston A (2002) Marker correspondence, not processing latency, determines temporal binding of visual attributes. Curr Biol 12:359–368

Recanzone GH (2003) Auditory influences on visual temporal rate perception. J Neurophysiol 89:1078–1093

Roufs JA, Blommaert FJ (1981) Temporal impulse and step responses of the human eye obtained psychophysically by means of a drift-correcting perturbation technique. Vision Res 21:1203–1221

Sanabria D, Soto-Faraco S, Chan J, Spence C (2003) Does perceptual grouping precede multisensory integration? Evidence from the crossmodal dynamic capture task. Paper presented at 4th Annual Meeting of the International Multisensory Research Forum, McMaster University, Hamilton, Ontario, Canada

Sekuler R, Sekuler AB, Lau R (1997) Sound alters visual motion perception. Nature 385:308

Shimojo S, Shams L (2001) Sensory modalities are not separate modalities: plasticity and interactions. Curr Opin Neurobiol 11:505–509

Shipley T (1964) Auditory flutter-driving of visual flicker. Science 145:1328–1330

Spence C, Baddeley R, Zampini M, James R, Shore DI (2003) Multisensory temporal order judgements: when two locations are better than one. Percept Psychophys 65:318–328

Victor JD, Conte MM (2002) Temporal phase discrimination depends critically on separation. Vision Res 42:2063–2071

Vroomen J, de Gelder B (2000) Sound enhances visual perception: cross-modal effects of auditory organization on vision. J Exp Psychol Hum Percept Perform 26:1583–1590

Wada Y, Kitagawa N, Noguchi K (2003) Audio-visual integration in temporal perception. Int J Psychophysiol 50:117–124

Watanabe K, Shimojo S (2001) When sound affects vision: effects of auditory grouping on visual motion perception. Psychol Sci 12:109–116

Welch RB, Warren DH (1980) Immediate perceptual response to intersensory discrepancy. Psychol Bull 88:638–667

Welch RB, DuttonHurt LD, Warren DH (1986) Contributions of audition and vision to temporal rate perception. Percept Psychophys 39:294–300

Zampini M, Shore DI, Spence C (2003a) Audiovisual temporal order judgments. Exp Brain Res 152:198–210

Zampini M, Shore DI, Spence C (2003b) Multisensory temporal order judgments: the role of hemispheric redundancy. Int J Psychophysiol 50:165–180

Acknowledgements

We thank Makio Kashino (NTT), Shinsuke Shimojo (CalTech), Alan Johnston (UCL), Derek Arnold (UCL), and the Human Frontier Science Program (RGP0070/2003-C).

Cite this article

Fujisaki, W., Nishida, S. Temporal frequency characteristics of synchrony–asynchrony discrimination of audio-visual signals. Exp Brain Res 166, 455–464 (2005). https://doi.org/10.1007/s00221-005-2385-8

DOI: https://doi.org/10.1007/s00221-005-2385-8