Abstract

For complex Wigner-type matrices, i.e. Hermitian random matrices with independent, not necessarily identically distributed entries above the diagonal, we show that at any cusp singularity of the limiting eigenvalue distribution the local eigenvalue statistics are universal and form a Pearcey process. Since the density of states typically exhibits only square root or cubic root cusp singularities, our work complements previous results on the bulk and edge universality and it thus completes the resolution of the Wigner–Dyson–Mehta universality conjecture for the last remaining universality type in the complex Hermitian class. Our analysis holds not only for exact cusps, but approximate cusps as well, where an extended Pearcey process emerges. As a main technical ingredient we prove an optimal local law at the cusp for both symmetry classes. This result is also the key input in the companion paper (Cipolloni et al. in Pure Appl Anal, 2018. arXiv:1811.04055) where the cusp universality for real symmetric Wigner-type matrices is proven. The novel cusp fluctuation mechanism is also essential for the recent results on the spectral radius of non-Hermitian random matrices (Alt et al. in Spectral radius of random matrices with independent entries, 2019. arXiv:1907.13631), and the non-Hermitian edge universality (Cipolloni et al. in Edge universality for non-Hermitian random matrices, 2019. arXiv:1908.00969).

1 Introduction

The celebrated Wigner–Dyson–Mehta (WDM) conjecture asserts that local eigenvalue statistics of large random matrices are universal: they only depend on the symmetry type of the matrix and are otherwise independent of the details of the distribution of the matrix ensemble. This remarkable spectral robustness was first observed by Wigner in the bulk of the spectrum. The correlation functions are determinantal and they were computed in terms of the sine kernel via explicit Gaussian calculations by Dyson, Gaudin and Mehta [59]. Wigner’s vision continues to hold at the spectral edges, where the correct statistics were identified by Tracy and Widom for both symmetry types in terms of the Airy kernel [70, 71]. These universality results were originally formulated and proven [17, 35, 36, 67,68,69] for traditional Wigner matrices, i.e. Hermitian random matrices with independent, identically distributed (i.i.d.) entries and their diagonal [55, 57] and non-diagonal [51] deformations. More recently they have been extended to Wigner-type ensembles, where the identical distribution is not required, and even to a large class of matrices with general correlated entries [7, 8, 11]. In different directions of generalization, sparse matrices [1, 32, 47, 56], adjacency matrices of regular graphs [14] and band matrices [19, 20, 66] have also been considered. In parallel developments bulk and edge universal statistics have been proven for invariant \(\beta \)-ensembles [12, 15, 17, 18, 29, 30, 52, 61, 62, 64, 65, 73] and even for their discrete analogues [13, 16, 41, 48], often with very different methods.

A precondition for the Tracy-Widom distribution in all these generalizations of Wigner’s original ensemble is that the density of states vanishes as a square root near the spectral edges. The recent classification of the singularities of the solution to the underlying Dyson equation indeed revealed that at the edges only square root singularities appear [6, 10]. The density of states may also form a cusp-like singularity in the interior of the asymptotic spectrum, i.e. single points of vanishing density with a cubic root growth behaviour on either side. Under very general conditions, no other type of singularity may occur. At the cusp a new local eigenvalue process emerges: the correlation functions are still determinantal but the Pearcey kernel replaces the sine- or the Airy kernel.

The Pearcey process was first established by Brézin and Hikami for the eigenvalues close to a cusp singularity of a deformed complex Gaussian Wigner (GUE) matrix. They considered the model of a GUE matrix plus a deterministic matrix (“external source”) having eigenvalues \(\pm 1\) with equal multiplicity [21, 22]. The names Pearcey kernel and Pearcey process were coined in [72] in reference to related functions introduced by Pearcey in the context of electromagnetic fields [63]. As with the universal sine and Airy processes, it was later observed that Pearcey universality also extends beyond the realm of random matrices. Pearcey statistics have been established for non-intersecting Brownian bridges [3] and in skew plane partitions [60], always at criticality. We remark, however, that a critical cusp-like singularity does not always induce a Pearcey kernel, see e.g. [31].

In random matrix theory there are still only a handful of rather specific models for which the emergence of the Pearcey process has been proven. This has been achieved for deformed GUE matrices [2, 4, 23] and for Gaussian sample covariance matrices [42,43,44] by a contour integration method based upon the Brézin–Hikami formula. Beyond linear deformations, the Riemann-Hilbert method has been used for proving Pearcey statistics for a certain two-matrix model with a special quartic potential with appropriately tuned coefficients [40]. All these previous results concern only specific ensembles with a matrix integral representation. In particular, Wigner-type matrices are out of the scope of this approach.

The main result of the current paper is the proof of the Pearcey universality at the cusps for complex Hermitian Wigner-type matrices under very general conditions. Since the classification theorem excludes any other singularity, this is the third and last universal statistics that emerges from natural generalizations of Wigner’s ensemble.

This third universality class has received somewhat less attention than the other two, presumably because cusps are not present in the classical Wigner ensemble. We also note that the most common invariant \(\beta \)-ensembles do not exhibit the Pearcey statistics, as their densities do not feature cubic root cusps but are instead 1/2-Hölder continuous for somewhat regular potentials [28]. Where their density vanishes, it does so either as the 2k-th or as the \((2k +\frac{1}{2})\)-th power of the distance, each case with its own local statistics (see [26] also for the persistence of these statistics under small additive GUE perturbations before the critical time). Cusp singularities, hence Pearcey statistics, however, naturally arise within any one-parameter family of Wigner-type ensembles whenever two spectral bands merge as the parameter varies. The classification theorem implies that cusp formation is the only possible way for bands to merge, so in that sense Pearcey universality is ubiquitous as well.

Bulk and edge universality are characterized by the symmetry type alone: up to a natural shift and rescaling there is only one bulk and one edge statistic. In contrast, the cusp universality has a much richer structure: it is naturally embedded in a one-parameter family of universal statistics within each symmetry class. In the complex Hermitian case these are given by the one-parameter family of (extended) Pearcey kernels, see (2.5) later. Thinking in terms of fine-tuning a single parameter in the space of Wigner-type ensembles, the density of states already exhibits a universal local shape right before and right after the cusp formation; it features a tiny gap or a tiny nonzero local minimum, respectively [5, 10]. When the local lengthscale \(\ell \) of these almost cusp shapes is comparable with the local eigenvalue spacing \(\delta \), the general Pearcey statistics, with a parameter determined by the ratio \(\ell /\delta \), are expected to emerge. Thus the full Pearcey universality typically appears in a double scaling limit.

Our proof follows the three step strategy that is the backbone of the recent approach to the WDM universality, see [38] for a pedagogical exposé and for a detailed history of the method. The first step in this strategy is a local law that identifies, with very high probability, the empirical eigenvalue distribution on a scale slightly above the typical eigenvalue spacing. The second step is to prove universality for ensembles with a tiny Gaussian component. Finally, in the third step this Gaussian component is removed by perturbation theory. The local law is used for precise a priori bounds in the second and third steps.

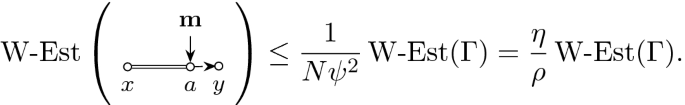

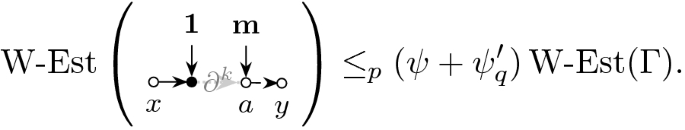

The main novelty of the current paper is the proof of the local law at optimal scale near the cusp. To put the precision in proper context, we normalize the \(N\times N\) real symmetric or complex Hermitian Wigner-type matrix H to have norm of order one. As is customary, the local law is formulated in terms of the Green function \(G(z):=(H-z)^{-1}\) with spectral parameter z in the upper half plane. The local law then asserts that G(z) becomes deterministic in the large N limit as long as \(\eta :=\mathfrak {I}z\) is much larger than the local eigenvalue spacing around \(\mathfrak {R}z\). The deterministic approximant M(z) can be computed as the unique solution of the corresponding Dyson equation (see (2.2) and (3.1) later). Near the cusp the typical eigenvalue spacing is of order \(N^{-3/4}\); compare this with the \(N^{-1}\) spacing in the bulk and \(N^{-2/3}\) spacing near the edges. We remark that a local law at the cusp on the non-optimal scale \(N^{-3/5}\) has already been proven in [8]. In the current paper we improve this result to the optimal scale \(N^{-3/4}\) and this is essential for our universality proof at the cusp.
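The three spacing scales quoted above follow from a simple heuristic: if the density behaves like a pure power near a reference point \(\tau _0\) (constants suppressed), the typical spacing \(\delta \) is the length of an interval carrying about one eigenvalue, i.e.

$$\begin{aligned} \rho (\tau _0+x)\sim \left| x\right| ^{p}\quad \Longrightarrow \quad N\int _0^{\delta }\rho (\tau _0+x)\,\mathrm {d}x\sim N\delta ^{1+p}\sim 1\quad \Longrightarrow \quad \delta \sim N^{-1/(1+p)}. \end{aligned}$$

The exponents \(p=0\) (bulk), \(p=1/2\) (square root edge) and \(p=1/3\) (cubic root cusp) give \(\delta \sim N^{-1}\), \(N^{-2/3}\) and \(N^{-3/4}\), and the corresponding local densities \(\rho \sim \delta ^{p}\) are of order 1, \(N^{-1/3}\) and \(N^{-1/4}\), respectively.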

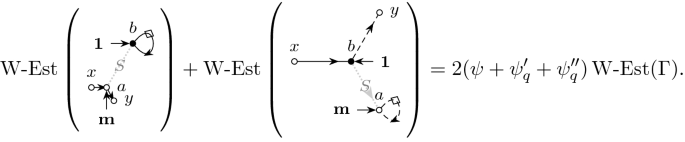

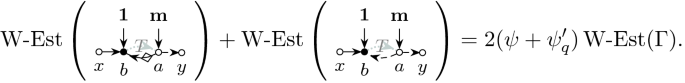

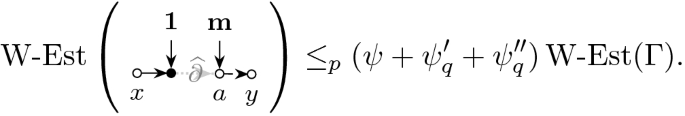

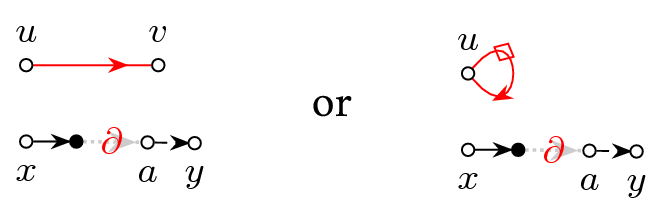

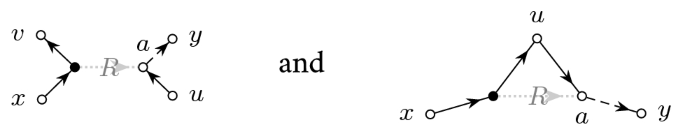

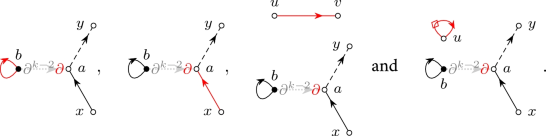

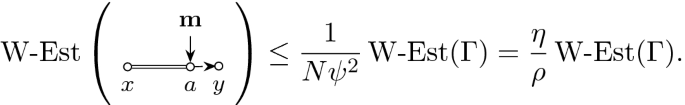

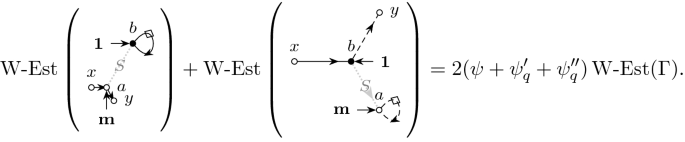

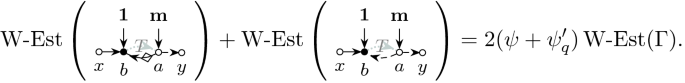

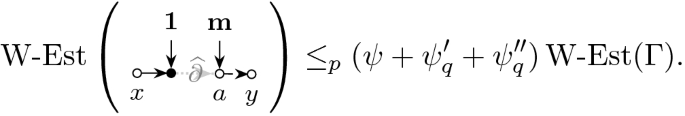

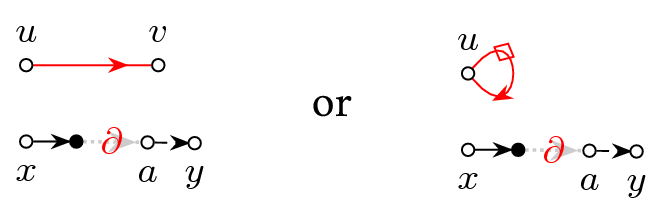

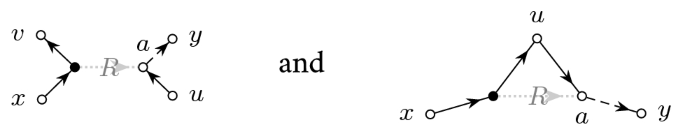

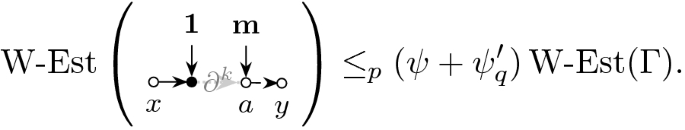

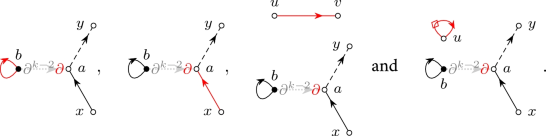

The main ingredient behind this improvement is an optimal estimate of the error term D (see (3.4) later) in the approximate Dyson equation that G(z) satisfies. The difference \(M-G\) is then roughly estimated by \({{\mathcal {B}}}^{-1} (MD)\), where \({{\mathcal {B}}}\) is the linear stability operator of the Dyson equation. Previous estimates on D (in an averaged sense) were of order \(\rho /N\eta \), where \(\rho \) is the local density; roughly speaking \(\rho \sim 1\) in the bulk, \(\rho \sim N^{-1/3}\) at the edge and \(\rho \sim N^{-1/4}\) near the cusp. While this estimate cannot be improved in general, our main observation is that, to leading order, we need only the projection of MD onto the single unstable direction of \({{\mathcal {B}}}\). We found that this projection carries an extra hidden cancellation due to a special local symmetry at the cusp, and thus the estimate on D effectively improves to \(\rho ^2/N\eta \). Customary power counting is not sufficient; we need to compute this error term explicitly, at least to leading order. We call this subtle mechanism cusp fluctuation averaging since it combines the well-established fluctuation averaging procedure with the additional cancellation at the cusp. Similar estimates extend to the vicinity of exact cusps. We identify a key quantity, denoted by \(\sigma (z)\) (in (3.5b) later), that measures the distance from the cusp in a canonical way: \(\sigma (z)=0\) characterizes an exact cusp, while \(\left| \sigma (z)\right| \ll 1\) indicates that z is near an almost cusp. Our final estimate on D is of order \((\rho +\left| \sigma \right| )\rho /N\eta \). Since the error term D is random and we need to control it in a high moment sense, we need to lift this idea to a high moment calculation, meticulously extracting the improvement from every single term. This is performed in Sect. 4, the technically most involved part of the paper, where we use a Feynman diagrammatic formalism to keep track of the contributions of all terms.
We originally developed this language in [34] to handle random matrices with slow correlation decay, based on the revival of the cumulant expansion technique in [45] after [50]. In the current paper we incorporate the cusp into this analysis. We identify a finite set of Feynman subdiagrams, called \(\sigma \)-cells (Definition 4.10), with value \(\sigma \) that embody the cancellation effect at the cusp. To exploit the full strength of the cusp fluctuation averaging mechanism, we need to trace the fate of the \(\sigma \)-cells along the high moment expansion. The key point is that \(\sigma \)-cells are local objects in the Feynman graphs; thus their cancellation effects act simultaneously and the corresponding gains are multiplicative.
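For a sense of the size of this gain, here is a rough power count at an exact cusp, with constants and \(N^\epsilon \) factors suppressed. Substituting \(x=\eta u\) in the Poisson integral shows that (2.4a) gives \(\rho (\mathfrak {c}+\mathrm {i}\eta )\sim \eta ^{1/3}\) for the harmonic extension, so at \(\eta =N^{-3/4+\zeta }\)

$$\begin{aligned} \frac{\rho }{N\eta }\sim \frac{N^{-1/4+\zeta /3}}{N^{1/4+\zeta }}=N^{-1/2-2\zeta /3},\qquad \frac{\rho ^2}{N\eta }\sim \frac{N^{-1/2+2\zeta /3}}{N^{1/4+\zeta }}=N^{-3/4-\zeta /3}, \end{aligned}$$

that is, the improved order \(\rho ^2/N\eta \) is smaller than \(\rho /N\eta \) by exactly one factor \(\rho \sim N^{-1/4+\zeta /3}\). This only illustrates the orders quoted above; it is not a substitute for the high moment analysis.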

Formulated in the jargon of diagrammatic field theory, extracting the deterministic Dyson equation for M from the resolvent equation \((H-z)G(z)=1\) corresponds to a consistent self-energy renormalization of G. One way or another, such a procedure is behind every proof of the optimal local law with high probability. Our \(\sigma \)-cells conceptually correspond to a next order resummation of certain Feynman diagrams carrying a special cancellation.

We remark that we prove the optimal local law only for Wigner-type matrices and not yet for general correlated matrices, unlike in [11, 34]. In fact, we use the simpler setup only for the estimate on D (Theorem 3.7); the rest of the proof is already formulated for the general case. This simpler setup allows us to present the cusp fluctuation averaging mechanism with the least amount of technicalities. The extension to the correlated case is based on the same mechanism, but it requires considerably more involved diagrammatic manipulations, which are better developed in a separate work to limit the length of this paper.

Our cusp fluctuation averaging mechanism has further applications. It is used in [9] to prove an optimal cusp local law for the Hermitization of non-Hermitian random matrices with a variance profile, demonstrating that the technique is also applicable in settings where the flatness assumption is violated. The cusp of the Hermitization corresponds to the edge of the non-Hermitian model via Girko’s formula, thus the optimal cusp local law leads to an optimal bound on the spectral radius [9] and ultimately also to edge universality [25] for non-Hermitian random matrices.

Armed with the optimal local law we then perform the other two steps of the three step analysis. The third step, relying on the Green function comparison theorem, is fairly standard and previous proofs used in the bulk and at the edge need only minor adjustments. The second step, extracting universality from an ensemble with a tiny Gaussian component, can be done in two ways: (i) Brézin–Hikami formula with contour integration or (ii) Dyson Brownian Motion (DBM). Both methods require the local law as an input. In the current work we follow (i) mainly because this approach directly yields the Pearcey kernel, at least for the complex Hermitian symmetry class. In the companion work [24] we perform the DBM analysis, adapting the methods of [37, 53, 54] to the cusp. The main novelty in the current work and in [24] is the rigidity at the cusp on the optimal scale provided below. Once this key input is given, the proof of the edge universality from [53] is modified in [24] to the cusp setting, proving universality for the real symmetric case as well. We remark, however, that, to the best of our knowledge, the analogue of the Pearcey kernel for the real symmetric case has not yet been explicitly identified.

We now explain some novel aspects of the contour integration method. We first note that a similar approach was initiated in the fundamental work of Johansson on the bulk universality for Wigner matrices with a large Gaussian component in [49]. This method was later generalised to Wigner matrices with a small Gaussian component in [35], and it also inspired the proof of bulk universality via the moment matching idea [68] once the necessary local law became available. The double scaling regime, where the density is very small but the Gaussian component compensates for it, has also been studied [27]. More recently, the same approach was extended to the cusp for deformed GUE matrices [23, Theorem 1.3] and for sample covariance matrices, but only for a large Gaussian component [42,43,44]. For our cusp universality, we need to perform a similar analysis but with a small Gaussian component. We represent our matrix H as \({\widehat{H}} + \sqrt{t} U\), where U is GUE and \({\widehat{H}}\) is an independent Wigner-type matrix. The contour integration analysis (Sect. 5.1) requires a Gaussian component of size at least \(t\gg N^{-1/2}\).

The input of the analysis in Sect. 5.1 for the correlation kernel of H is a very precise description of the eigenvalues of \({\widehat{H}}\) on scales just above \(N^{-3/4}\), the typical spacing between eigenvalues; this information is provided by our optimal local law. While in the bulk and in the regime of the regular edge finding an appropriate \(\widehat{H}\) is a relatively simple matter, in the vicinity of a cusp point the issue is very delicate. The main reason is that the cusp, unlike the bulk or the regular edge, is unstable under small perturbations; in fact it typically disappears and turns into a small positive local minimum if a small GUE component is added. Conversely, a cusp emerges if a small GUE component is added to an ensemble that has a density with a small gap. In particular, even if the density function \(\rho (\tau )\) of H exhibits an exact cusp, the density \(\widehat{\rho }(\tau )\) of \(\widehat{H}\) will have a small gap: in fact \(\rho \) is given by the evolution of the semicircular flow up to time t with initial data \(\widehat{\rho }\). Unlike in the bulk and edge cases, here one cannot match the density of H and \(\widehat{H}\) by a simple shift and rescaling. Curiously, the contour integral analysis for the local statistics of H at the cusp relies on an optimal local law for \(\widehat{H}\), whose density has a small gap and is thus far from having a cusp.

Thus we need an additional ingredient: the precise analysis of the semicircular flow \(\rho _s:=\widehat{\rho } \boxplus \rho _{\mathrm {sc}}^{(s)}\) near the cusp up to relatively long times \(s\lesssim N^{-1/2+\epsilon }\); note that \(\rho _t=\rho \) is the original density with the cusp. Here \(\rho _{\mathrm {sc}}^{(s)}\) is the semicircular density with variance s and \(\boxplus \) indicates the free convolution. In Sects. 5.2–5.3 we will see that the edges of the support of the density \(\rho _s\) typically move linearly in the time s while the gap closes at a much slower rate. Already times \(s\gg N^{-3/4}\) lie beyond the simple perturbative regime of the cusp, whose natural lengthscale is \(N^{-3/4}\). Thus we need a very careful tuning of the parameters: the analysis of a cusp for H requires constructing a matrix \(\widehat{H}\) that is far from having a cusp but that after a relatively long time \(t=N^{-1/2+\epsilon }\) will develop a cusp exactly at the right location. In the estimates we heavily rely on various properties of the solution to the Dyson equation established in the recent paper [10]. These results go well beyond the precision of the previous work [5] and they apply to a very general class of Dyson equations, including a non-commutative von Neumann algebraic setup.
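How a gap closes into a cusp under the semicircular flow can be seen in the Brézin–Hikami external-source model recalled above, used here purely as a toy example (it is not the construction of \(\widehat{H}\) used in the proof). Take \(\widehat{\rho }=\frac{1}{2}(\delta _{-1}+\delta _{+1})\), the spectral measure of a deterministic matrix with eigenvalues \(\pm 1\) of equal multiplicity. The Stieltjes transform \(m_s\) of \(\rho _s=\widehat{\rho }\boxplus \rho _{\mathrm {sc}}^{(s)}\) satisfies \(m_s(z)=\widehat{m}(z+s\,m_s(z))\) with \(\widehat{m}(w)=w/(1-w^2)\), which reduces to a cubic in \(m_s\). At \(z=0\) one finds \(\rho _s(0)=\sqrt{s-1}/(\pi s)\) for \(s>1\) and \(\rho _s(0)=0\) for \(s\le 1\): a gap around 0 for \(s<1\), an exact cusp at \(s=1\) and a positive minimum for \(s>1\). The sketch below evaluates \(\rho _s\) numerically; the function names are ours, and picking the root with the largest imaginary part is a heuristic that selects the physical branch in this example, not a general recipe.

```python
import math

import numpy as np


def m_external_source(z: complex, s: float) -> complex:
    """Stieltjes transform of (1/2)(delta_{-1} + delta_{+1}) boxplus semicircle(variance s).

    Solves m = w / (1 - w**2) with w = z + s*m, i.e. the cubic
        s^2 m^3 + 2 s z m^2 + (z^2 + s - 1) m + z = 0,
    and returns the root with the largest imaginary part (heuristic branch choice).
    """
    roots = np.roots([s * s, 2 * s * z, z * z + s - 1, z])
    return complex(max(roots, key=lambda r: r.imag))


def density(x: float, s: float, eta: float = 1e-12) -> float:
    """Approximate rho_s(x) by Im m(x + i*eta) / pi for a tiny eta > 0."""
    return m_external_source(complex(x, eta), s).imag / math.pi


for s in (0.5, 1.0, 2.0):
    # gap (s < 1), exact cusp (s = 1), positive local minimum (s > 1) at x = 0
    print(s, density(0.0, s))
```

At \(s=1\) the ratio \(\rho _1(x)/\left| x\right| ^{1/3}\) tends to \(\sqrt{3}/(2\pi )\) as \(x\rightarrow 0\), i.e. the toy cusp has the profile (2.4a) with \(\gamma =1\).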

Notations. We now introduce some custom notations we use throughout the paper. For non-negative functions f(A, B), g(A, B) we use the notation \(f \lesssim _A g\) if there exists a constant C(A) such that \(f(A,B)\le C(A) g(A,B)\) for all A, B. Similarly, we write \(f\sim _A g\) if \(f\lesssim _A g\) and \(g\lesssim _A f\). We do not indicate the dependence of constants on basic parameters that will be called model parameters later. If the implied constants are universal, we instead write \(f\lesssim g\) and \(f\sim g\). Similarly we write \(f \ll g\) if \(f\le c g\) for some tiny absolute constant \(c>0\).

We denote vectors by bold-faced lower case Roman letters \(\mathbf {x},\mathbf {y}\in \mathbb {C}^N\), and matrices by upper case Roman letters \(A,B\in \mathbb {C}^{N\times N}\) with entries \(A=(a_{ij})_{i,j=1}^N\). The standard scalar product and Euclidean norm on \(\mathbb {C}^N\) will be denoted by \(\left\langle \mathbf {x},\mathbf {y}\right\rangle :=N^{-1}\sum _{i\in [N]}\overline{x_i}y_i\) and \(\Vert \mathbf {x}\Vert \), while we also write \(\left\langle A,B\right\rangle :=N^{-1}{{\,\mathrm{Tr}\,}}A^*B\) for the scalar product of matrices, and \(\left\langle A\right\rangle :=N^{-1}{{\,\mathrm{Tr}\,}}A\), \(\left\langle \mathbf {x}\right\rangle :=N^{-1}\sum _{a\in [N]}x_a\). We write \({{\,\mathrm{diag}\,}}R\), \({{\,\mathrm{diag}\,}}{\mathbf {r}}\) for the diagonal vector of a matrix R and the diagonal matrix obtained from a vector \({\mathbf {r}}\), and \(S\odot R\) for the entrywise (Hadamard) product of matrices R, S. The usual operator norm induced by the vector norm \(\Vert \cdot \Vert \) will be denoted by \(\Vert A\Vert \), while the Hilbert-Schmidt (or Frobenius) norm will be denoted by \(\Vert A\Vert _\text {hs}:=\sqrt{\left\langle A,A\right\rangle }\). For integers n we define \([n]:=\{1,\ldots ,n\}\).
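The normalised conventions above differ from the usual ones by a factor \(N^{-1}\); for instance \(\left\langle I\right\rangle =\Vert I\Vert _\text {hs}=1\) for the identity matrix. A small NumPy sketch of these definitions (the helper names are ours, chosen for illustration):

```python
import numpy as np


def avg(A: np.ndarray) -> complex:
    """<A> := N^{-1} Tr A, the normalised trace."""
    return np.trace(A) / A.shape[0]


def inner_vec(x: np.ndarray, y: np.ndarray) -> complex:
    """<x, y> := N^{-1} sum_i conj(x_i) y_i (np.vdot conjugates its first argument)."""
    return np.vdot(x, y) / x.shape[0]


def inner_mat(A: np.ndarray, B: np.ndarray) -> complex:
    """<A, B> := N^{-1} Tr A^* B."""
    return np.trace(A.conj().T @ B) / A.shape[0]


def hs_norm(A: np.ndarray) -> float:
    """||A||_hs := sqrt(<A, A>), the normalised Hilbert-Schmidt norm."""
    return float(np.sqrt(inner_mat(A, A).real))


# diag R (vector) and diag r (matrix) are both np.diag; S ⊙ R is S * R;
# the operator norm ||A|| is np.linalg.norm(A, 2).
```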

2 Main Results

2.1 The Dyson equation

Let \(W=W^* \in \mathbb {C}^{N \times N}\) be a self-adjoint random matrix and \(A={{\,\mathrm{diag}\,}}({\varvec{a}})\) be a deterministic diagonal matrix with entries \({\varvec{a}}=(a_i)_{i=1}^N \in \mathbb {R}^N\). We say that W is of Wigner-type [8] if its entries \(w_{ij}\) for \(i \le j\) are centred, \({{\,\mathrm{\mathbf {E}}\,}}w_{ij} =0\), independent random variables. We define the variance matrix or self-energy matrix \(S=(s_{ij})_{i,j=1}^N\) by

$$\begin{aligned} s_{ij}:={{\,\mathrm{\mathbf {E}}\,}}\left| w_{ij}\right| ^2,\qquad i,j\in [N]. \end{aligned}$$(2.1)

This matrix is symmetric with non-negative entries. In [8] it was shown that as N tends to infinity, the resolvent \(G(z):=(H-z)^{-1}\) of the deformed Wigner-type matrix \(H=A+W\) entrywise approaches a diagonal matrix

$$\begin{aligned} M(z):={{\,\mathrm{diag}\,}}({\mathbf {m}}(z)). \end{aligned}$$

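The entries \({\mathbf {m}}=(m_1, \ldots , m_N):\mathbb {H}\rightarrow \mathbb {H}^N\) of M have positive imaginary parts and solve the Dyson equation

$$\begin{aligned} -\frac{1}{m_i(z)}=z-a_i+\sum _{j=1}^N s_{ij}m_j(z),\qquad z\in \mathbb {H},\quad i\in [N]. \end{aligned}$$(2.2)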

We call M or \({\mathbf {m}}\) the self-consistent Green’s function. The normalised trace of M is the Stieltjes transform of a unique probability measure on \(\mathbb {R}\) that approximates the empirical eigenvalue distribution of \(A+W\) increasingly well as \(N \rightarrow \infty \), motivating the following definition.
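As an illustration of how \({\mathbf {m}}(z)\) can be computed in practice, the following minimal sketch solves \(-1/m_i=z-a_i+\sum _j s_{ij}m_j\) for a given vector \({\varvec{a}}\), variance matrix S and spectral parameter z by a damped fixed-point iteration. The iteration scheme and the name `solve_dyson` are our choices for illustration, not a method taken from the paper. Every iterate stays in the upper half plane, and convergence is fast when \(\mathfrak {I}z\) is of order one but can be slow for small \(\mathfrak {I}z\). For the constant profile \(s_{ij}=1/N\) and \({\varvec{a}}=0\) every \(m_i\) equals the Stieltjes transform of the semicircle law, which serves as a check.

```python
import numpy as np


def solve_dyson(a, S, z, damping=0.5, tol=1e-13, max_iter=10_000):
    """Solve -1/m_i = z - a_i + sum_j S_ij m_j for m in the upper half plane.

    Damped fixed-point iteration m <- (1 - damping) m + damping * F(m) with
    F(m) = -1 / (z - a + S m). If Im z > 0, S >= 0 entrywise and Im m > 0,
    then Im F(m) > 0, so every iterate stays in the upper half plane.
    """
    a = np.asarray(a, dtype=float)
    m = np.full(a.shape, 1j, dtype=complex)  # initial guess m_i = i
    for _ in range(max_iter):
        m_next = (1 - damping) * m - damping / (z - a + S @ m)
        if np.max(np.abs(m_next - m)) < tol:
            return m_next
        m = m_next
    raise RuntimeError("Dyson fixed-point iteration did not converge")
```

By Definition 2.1, \(\mathfrak {I}\left\langle {\mathbf {m}}(x+\mathrm {i}\eta )\right\rangle /\pi \) computed this way approximates the self-consistent density at x when \(\eta \) is small.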

Definition 2.1

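(Self-consistent density of states). The unique probability measure \(\rho \) on \(\mathbb {R}\), defined through

$$\begin{aligned} \left\langle M(z)\right\rangle =\frac{1}{N}\sum _{i=1}^N m_i(z)=\int _{\mathbb {R}}\frac{\rho (\mathrm {d}x)}{x-z},\qquad z\in \mathbb {H}, \end{aligned}$$

is called the self-consistent density of states (scDOS). Accordingly, its support \({{\,\mathrm{supp}\,}}\rho \) is called the self-consistent spectrum.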

2.2 Cusp universality

We make the following assumptions:

Assumption (A)

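(Bounded moments). The entries of the Wigner-type matrix \(\sqrt{N}W\) have bounded moments and the expectation A is bounded, i.e. there are positive \(C_k\) such that

$$\begin{aligned} {{\,\mathrm{\mathbf {E}}\,}}\left| \sqrt{N}w_{ij}\right| ^k\le C_k,\qquad \Vert A\Vert \le C_0,\qquad i,j\in [N],\quad k\in \mathbb {N}. \end{aligned}$$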

Assumption (B)

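(Fullness). If the matrix \(W = W^* \in \mathbb {C}^{N \times N}\) belongs to the complex Hermitian symmetry class, then we assume

$$\begin{aligned} \begin{pmatrix} {{\,\mathrm{\mathbf {E}}\,}}(\mathfrak {R}w_{ij})^2 & {{\,\mathrm{\mathbf {E}}\,}}(\mathfrak {R}w_{ij})(\mathfrak {I}w_{ij})\\ {{\,\mathrm{\mathbf {E}}\,}}(\mathfrak {R}w_{ij})(\mathfrak {I}w_{ij}) & {{\,\mathrm{\mathbf {E}}\,}}(\mathfrak {I}w_{ij})^2 \end{pmatrix}\ge \frac{c}{N} \end{aligned}$$(2.3)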

as quadratic forms, for some positive constant \(c>0\). If \(W = W^T \in \mathbb {R}^{N \times N}\) belongs to the real symmetric symmetry class, then we assume \({{\,\mathrm{\mathbf {E}}\,}}w_{ij}^2 \ge \frac{c}{N}\).

Assumption (C)

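(Bounded self-consistent Green’s function). In a neighbourhood of some fixed spectral parameter \(\tau \in \mathbb {R}\) the self-consistent Green’s function is bounded, i.e. for positive \(C,\kappa \) we have

$$\begin{aligned} \left| m_i(z)\right| \le C,\qquad i\in [N],\quad z\in [\tau -\kappa ,\tau +\kappa ]+\mathrm {i}(0,\infty ). \end{aligned}$$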

We call the constants appearing in Assumptions (A)–(C) model parameters. All generic constants C in this paper may implicitly depend on these model parameters. Dependence on further parameters, however, will be indicated.

Remark 2.2

The boundedness of \({\mathbf {m}}\) in Assumption (C) can be ensured by assuming some regularity of the variance matrix S. For more details we refer to [5, Chapter 6].

From the extensive analysis in [10] we know that the self-consistent density \(\rho \) is described by explicit shape functions in the vicinity of local minima with small value of \(\rho \) and around small gaps in the support of \(\rho \). The density in such almost cusp regimes is given by precisely one of the following three asymptotics:

(i) Exact cusp. There is a cusp point \(\mathfrak {c}\in \mathbb {R}\) in the sense that \(\rho (\mathfrak {c})=0\) and \(\rho (\mathfrak {c}\pm \delta )>0\) for \(0\ne \delta \ll 1\). In this case the self-consistent density is locally around \(\mathfrak {c}\) given by

$$\begin{aligned} \rho (\mathfrak {c}\pm x) = \frac{\sqrt{3}\gamma ^{4/3}}{2\pi } x^{1/3} \Big [1+{\mathcal {O}}\,\left( x^{1/3}\right) \Big ],\qquad x\ge 0 \end{aligned}$$(2.4a)

for some \(\gamma >0\).

(ii) Small gap. There is a maximal interval \([\mathfrak {e}_-,\mathfrak {e}_+]\) of size \(0<\Delta :=\mathfrak {e}_+-\mathfrak {e}_-\ll 1\) such that \(\rho |_{[\mathfrak {e}_-,\mathfrak {e}_+]}\equiv 0\). In this case the density around \(\mathfrak {e}_\pm \) is, for some \(\gamma >0\), locally given by

$$\begin{aligned} \rho (\mathfrak {e}_\pm \pm x)=\frac{\sqrt{3}(2\gamma )^{4/3}\Delta ^{1/3}}{2\pi }\Psi _{\mathrm {edge}}(x/\Delta )\left[ 1+{\mathcal {O}}\,\left( \Delta ^{1/3}\Psi _{\mathrm {edge}}(x/\Delta )\right) \right] ,\qquad x\ge 0 \end{aligned}$$(2.4b)

where the shape function around the edge is given by

$$\begin{aligned} \Psi _{\mathrm {edge}}(\lambda ):=\frac{\sqrt{\lambda (1+\lambda )}}{(1+2\lambda +2\sqrt{\lambda (1+\lambda )})^{2/3}+(1+2\lambda -2\sqrt{\lambda (1+\lambda )})^{2/3}+1},\quad \lambda \ge 0. \end{aligned}$$(2.4c)

(iii) Non-zero local minimum. There is a local minimum at \(\mathfrak {m}\in \mathbb {R}\) of \(\rho \) such that \(0<\rho (\mathfrak {m})\ll 1\). In this case there exists some \(\gamma >0\) such that

$$\begin{aligned} \rho (\mathfrak {m}+ x) = \rho (\mathfrak {m}) + \rho (\mathfrak {m}) \Psi _{\mathrm {min}}\left( \frac{3\sqrt{3} \gamma ^4 x}{2(\pi \rho (\mathfrak {m}))^3 }\right) \left[ 1+{\mathcal {O}}\,\left( \rho (\mathfrak {m})^{1/2}+ \frac{\left| x\right| }{\rho (\mathfrak {m})^3}\right) \right] ,\quad x\in \mathbb {R} \end{aligned}$$(2.4d)

where the shape function around the local minimum is given by

$$\begin{aligned} \Psi _{\mathrm {min}}(\lambda ) :=\frac{\sqrt{1+\lambda ^2}}{(\sqrt{1+\lambda ^2}+\lambda )^{2/3}+(\sqrt{1+\lambda ^2}-\lambda )^{2/3}-1}-1,\qquad \lambda \in \mathbb {R}. \end{aligned}$$(2.4e)

We note that the parameter \(\gamma \) in (2.4a) is chosen in a way which is convenient for the universality statement. We also note that the choices for \(\gamma \) in (2.4b)–(2.4d) are consistent with (2.4a) in the sense that in the regimes \(\Delta \ll x\ll 1\) and \(\rho (\mathfrak {m})^3\ll \left| x\right| \ll 1\) the respective formulae asymptotically agree. Depending on the three cases (i)–(iii), we define the almost cusp point \(\mathfrak {b}\) as the cusp \(\mathfrak {c}\) in case (i), the midpoint \((\mathfrak {e}_-+\mathfrak {e}_+)/2\) in case (ii), and the minimum \(\mathfrak {m}\) in case (iii). When the local length scale of the almost cusp shape starts to match the eigenvalue spacing, i.e. if \(\Delta \lesssim N^{-3/4}\) or \(\rho (\mathfrak {m})\lesssim N^{-1/4}\), then we call the local shape a physical cusp. This terminology reflects the fact that the shape becomes indistinguishable from the exact cusp with \(\rho (\mathfrak {c})=0\) when resolved with a precision above the eigenvalue spacing. In this case we call \(\mathfrak {b}\) a physical cusp point.
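The claim that the three profiles agree in the intermediate regimes \(\Delta \ll x\ll 1\) and \(\rho (\mathfrak {m})^3\ll \left| x\right| \ll 1\) can be checked numerically. The sketch below evaluates only the leading terms of (2.4a), (2.4b) and (2.4d), dropping the \({\mathcal {O}}(\cdot )\) corrections; the function names and sample parameters are ours. It uses the identities \((1+2\lambda +2\sqrt{\lambda (1+\lambda )})(1+2\lambda -2\sqrt{\lambda (1+\lambda )})=1\) and \((\sqrt{1+\lambda ^2}+\lambda )(\sqrt{1+\lambda ^2}-\lambda )=1\) to avoid cancellation for large \(\lambda \).

```python
import math


def psi_edge(lam: float) -> float:
    """Shape function (2.4c), lam >= 0."""
    r = math.sqrt(lam * (1.0 + lam))
    big = 1.0 + 2.0 * lam + 2.0 * r
    small = 1.0 / big  # equals 1 + 2*lam - 2*r, since the two factors multiply to 1
    return r / (big ** (2 / 3) + small ** (2 / 3) + 1.0)


def psi_min(lam: float) -> float:
    """Shape function (2.4e), lam real; the denominator only depends on |lam|."""
    q = math.sqrt(1.0 + lam * lam)
    big = q + abs(lam)
    small = 1.0 / big  # equals q - |lam|, since (q + |lam|)(q - |lam|) = 1
    return q / (big ** (2 / 3) + small ** (2 / 3) - 1.0) - 1.0


def rho_cusp(x: float, gamma: float) -> float:
    """Leading term of (2.4a) at distance |x| from the cusp."""
    return math.sqrt(3) * gamma ** (4 / 3) / (2 * math.pi) * abs(x) ** (1 / 3)


def rho_gap(x: float, gamma: float, delta: float) -> float:
    """Leading term of (2.4b) at distance x >= 0 from an edge of a gap of length delta."""
    pref = math.sqrt(3) * (2 * gamma) ** (4 / 3) * delta ** (1 / 3) / (2 * math.pi)
    return pref * psi_edge(x / delta)


def rho_min(x: float, gamma: float, rho_m: float) -> float:
    """Leading term of (2.4d) at signed distance x from a minimum of height rho_m."""
    lam = 3 * math.sqrt(3) * gamma ** 4 * x / (2 * (math.pi * rho_m) ** 3)
    return rho_m + rho_m * psi_min(lam)


g, x = 1.3, 1e-3
print(rho_gap(x, g, 1e-9) / rho_cusp(x, g), rho_min(x, g, 1e-3) / rho_cusp(x, g))
```

With \(\Delta =10^{-9}\) and \(\rho (\mathfrak {m})=10^{-3}\) both ratios are within about \(10^{-4}\) of 1 at \(x=10^{-3}\).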

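The extended Pearcey kernel with a real parameter \(\alpha \) (often denoted by \(\tau \) in the literature) is given by

$$\begin{aligned} K_\alpha (x,y):=\frac{1}{(2\pi \mathrm {i})^2}\int _\Xi \mathrm {d}z\int _\Phi \mathrm {d}w\, \frac{\exp \big (-w^4/4+\alpha w^2/2-yw+z^4/4-\alpha z^2/2+xz\big )}{w-z}, \end{aligned}$$(2.5)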

where \(\Xi \) is a contour consisting of rays from \(\pm \infty e^{\mathrm {i}\pi /4}\) to 0 and rays from 0 to \(\pm \infty e^{-\mathrm {i}\pi /4}\), and \(\Phi \) is the ray from \(-\mathrm {i}\infty \) to \(\mathrm {i}\infty \). The simple Pearcey kernel with parameter \(\alpha =0\) was first observed in the context of random matrix theory in [21, 22]. We note that (2.5) is a special case of a more general extended Pearcey kernel defined in [72, Eq. (1.1)].

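It is natural to express universality in terms of a rescaled k-point function \(p_k^{(N)}\) which we define implicitly, in terms of the eigenvalues \(\lambda _1,\ldots ,\lambda _N\) of H, by

$$\begin{aligned} {{\,\mathrm{\mathbf {E}}\,}}\binom{N}{k}^{-1}\sum _{\{i_1,\ldots ,i_k\}\subset [N]}f(\lambda _{i_1},\ldots ,\lambda _{i_k})=\int _{\mathbb {R}^k}f({\varvec{x}})\,p_k^{(N)}({\varvec{x}})\,\mathrm {d}{\varvec{x}} \end{aligned}$$(2.6)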

for test functions f, where the summation is over all subsets of k distinct integers from [N].

Theorem 2.3

Let H be a deformed Wigner-type matrix of the complex Hermitian symmetry class satisfying Assumptions (A)–(C). Assume that the self-consistent density \(\rho \) within \([\tau -\kappa ,\tau +\kappa ]\) from Assumption (C) has a physical cusp, i.e. that \(\rho \) is locally given by (2.4) for some \(\gamma >0\) and \(\rho \) either (i) has a cusp point \(\mathfrak {c}\), or (ii) has a small gap \([\mathfrak {e}_-,\mathfrak {e}_+]\) of size \(\Delta :=\mathfrak {e}_+-\mathfrak {e}_-\lesssim N^{-3/4}\), or (iii) has a local minimum at \(\mathfrak {m}\) of size \(\rho (\mathfrak {m})\lesssim N^{-1/4}\). Then for any smooth compactly supported test function \(F:\mathbb {R}^k\rightarrow \mathbb {R}\) it holds that

where

\({\varvec{x}}=(x_1,\ldots ,x_k)\), \(\mathrm{d}{\varvec{x}}=\mathrm{d}x_1\ldots \mathrm{d}x_k\), and \(c(k)>0\) is a small constant only depending on k.

2.3 Local law

We emphasise that the proof of Theorem 2.3 requires a very precise a priori control on the fluctuation of the eigenvalues even at singular points of the scDOS. This control is expressed in the form of a local law with an optimal convergence rate down to the typical eigenvalue spacing. We now define the scale on which the eigenvalues are predicted to fluctuate around the spectral parameter \(\tau \).

Definition 2.4

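(Fluctuation scale). We define the self-consistent fluctuation scale \(\eta _{\mathrm {f}}=\eta _{\mathrm {f}}(\tau )\) through

$$\begin{aligned} \int _{-\eta _{\mathrm {f}}}^{\eta _{\mathrm {f}}}\rho (\tau +x)\,\mathrm {d}x=\frac{1}{N} \end{aligned}$$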

if \(\tau \in {{\,\mathrm{supp}\,}}\rho \). If \(\tau \not \in {{\,\mathrm{supp}\,}}\rho \), then \(\eta _{\mathrm {f}}\) is defined as the fluctuation scale at a nearby edge. More precisely, let I be the largest (open) interval with \(\tau \in I \subseteq \mathbb {R}{\setminus } {{\,\mathrm{supp}\,}}\rho \) and set \(\Delta :=\min \{\left| I\right| ,1\}\). Then we define

$$\begin{aligned} \eta _{\mathrm {f}}:=\frac{\Delta ^{1/9}}{N^{2/3}}. \end{aligned}$$(2.7)

We will see later (cf. (A.8b)) that (2.7) is the fluctuation of the edge eigenvalue adjacent to a spectral gap of length \(\Delta \) as predicted by the local behaviour of the scDOS. The control on the fluctuation of eigenvalues is expressed in terms of the following local law.
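To make the fluctuation scale concrete, here is a sketch that computes \(\eta _{\mathrm {f}}\) for \(\tau \) in the support by bisection, assuming \(\eta _{\mathrm {f}}\) is fixed by requiring the \(\rho \)-mass of \([\tau -\eta _{\mathrm {f}},\tau +\eta _{\mathrm {f}}]\) to be \(1/N\), and that evaluates \(\Delta ^{1/9}N^{-2/3}\) with \(\Delta =\min \{\left| I\right| ,1\}\) for \(\tau \) in a gap. That exponent can be read off from (2.4b)–(2.4c): since \(\Psi _{\mathrm {edge}}(\lambda )\approx \sqrt{\lambda }/3\) for small \(\lambda \), the density next to a gap of length \(\Delta \) behaves like \(\Delta ^{-1/6}x^{1/2}\), and \(N\Delta ^{-1/6}\eta ^{3/2}\sim 1\) gives \(\eta \sim \Delta ^{1/9}N^{-2/3}\). The midpoint quadrature, the bisection and all names are illustrative choices.

```python
import math
from typing import Callable


def mass_near(rho: Callable[[float], float], tau: float, eta: float, n: int = 2000) -> float:
    """Midpoint-rule approximation of the rho-mass of [tau - eta, tau + eta]."""
    h = 2.0 * eta / n
    return h * sum(rho(tau - eta + (k + 0.5) * h) for k in range(n))


def fluctuation_scale(rho: Callable[[float], float], tau: float, N: int,
                      hi: float = 1.0, iters: int = 100) -> float:
    """Bisection for eta with mass_near(rho, tau, eta) = 1/N (tau inside the support).

    Assumes rho >= 0, so the mass is increasing in eta, and mass_near(..., hi) >= 1/N.
    """
    lo, target = 0.0, 1.0 / N
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mass_near(rho, tau, mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def gap_fluctuation_scale(gap_length: float, N: int) -> float:
    """Delta^{1/9} N^{-2/3} with Delta = min(gap_length, 1), for tau inside a gap."""
    return min(gap_length, 1.0) ** (1 / 9) * N ** (-2 / 3)
```

For the exact cusp profile \(\rho (x)=\frac{\sqrt{3}}{2\pi }\left| x\right| ^{1/3}\) the computed value is a constant multiple of \(N^{-3/4}\), and a gap of length \(N^{-3/4}\) gives exactly \(N^{-3/4}\), consistent with the notion of a physical cusp.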

Theorem 2.5

(Local law). Let H be a deformed Wigner-type matrix of the real symmetric or complex Hermitian symmetry class. Fix any \(\tau \in \mathbb {R}\) and assume (A)–(C). Then for any \(\epsilon ,\zeta >0\) and \(\nu \in \mathbb {N}\) the local law holds uniformly for all \(z=\tau + \mathrm {i}\eta \) with \({{\,\mathrm{dist}\,}}(z,{{\,\mathrm{supp}\,}}\rho ) \in [N^\zeta \eta _{\mathrm {f}}(\tau ),N^{100}]\) in the form

for any \({\mathbf {u}},\mathbf {v}\in \mathbb {C}^{N}\) and

for any \(B \in \mathbb {C}^{N \times N}\). Here \(\rho (z):=\left\langle \mathfrak {I}M(z)\right\rangle /\pi \) denotes the harmonic extension of the scDOS to the complex upper half plane. The constant \(C>0\) in (2.8) depends only on \(\epsilon ,\zeta ,\nu \) and the model parameters.

We remark that later we will prove the local law also in a form which is uniform in \(\tau \in [-N^{100},N^{100}]\) and \(\eta \in [ N^{-1+\zeta }, N^{100}]\), albeit with a more complicated error term, see Proposition 3.11. The local law Theorem 2.5 implies a large deviation result for the fluctuation of eigenvalues on the optimal scale uniformly for all singularity types.

Corollary 2.6

(Uniform rigidity). Let H be a deformed Wigner-type matrix of the real symmetric or complex Hermitian symmetry class satisfying Assumptions (A)–(C) for \(\tau \in {{\,\mathrm{int}\,}}({{\,\mathrm{supp}\,}}\rho )\). Then

for any \(\epsilon >0\) and \(\nu \in \mathbb {N}\) and some \(C=C(\epsilon ,\nu )\), where we defined the (self-consistent) eigenvalue index \(k(\tau ):=\lceil N\rho ((-\infty , \tau ))\rceil \), and where \(\lceil x\rceil =\min \{k\in \mathbb {Z}|k\ge x\}\).

In particular, the fluctuation of the eigenvalue whose expected position is closest to the cusp location does not exceed \(N^{-3/4+\epsilon }\) for any \(\epsilon >0\) with very high probability. The following corollary specialises Corollary 2.6 to the neighbourhood of a cusp.

Corollary 2.7

(Cusp rigidity). Let H be a deformed Wigner-type matrix of the real symmetric or complex Hermitian symmetry class satisfying Assumptions (A)–(C) and let \(\tau =\mathfrak {c}\) be the location of an exact cusp. Then \( N\rho ((-\infty , \mathfrak {c})) = k_\mathfrak {c}\) for some \(k_\mathfrak {c}\in [N]\), which we call the cusp eigenvalue index. For any \(\epsilon >0\), \(\nu \in \mathbb {N}\) and \(k \in [N]\) with \(\left| k-k_\mathfrak {c}\right| \le c N\) we have

where \(C=C(\epsilon ,\nu )\) and \(\gamma _k\) are the self-consistent eigenvalue locations, defined through \( N\rho ((-\infty , \gamma _k)) = k\).
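The two bookkeeping devices used in Corollaries 2.6 and 2.7, the self-consistent eigenvalue index \(k(\tau )=\lceil N\rho ((-\infty ,\tau ))\rceil \) and the locations \(\gamma _k\) defined by \(N\rho ((-\infty ,\gamma _k))=k\), only require the distribution function of \(\rho \). The sketch below is illustrative only: it uses the semicircle law of a standard Wigner matrix (\(s_{ij}=1/N\)) as a stand-in density, which has no cusp, and the helper names are hypothetical. Since the distribution function is nondecreasing, \(\gamma _k\) can be found by bisection.

```python
import math

def semicircle_cdf(x):
    """rho((-inf, x)) for the semicircle density sqrt(4 - x^2) / (2 pi) on [-2, 2]."""
    if x <= -2.0:
        return 0.0
    if x >= 2.0:
        return 1.0
    return 0.5 + x * math.sqrt(4.0 - x * x) / (4.0 * math.pi) + math.asin(x / 2.0) / math.pi

def eigenvalue_index(tau, N, cdf=semicircle_cdf):
    """Self-consistent eigenvalue index k(tau) = ceil(N * rho((-inf, tau)))."""
    return math.ceil(N * cdf(tau))

def classical_location(k, N, cdf=semicircle_cdf, lo=-2.0, hi=2.0, iters=200):
    """Solve N * rho((-inf, gamma_k)) = k for gamma_k by bisection."""
    target = k / N
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if cdf(mid) < target:
            lo = mid   # gamma_k lies to the right of mid
        else:
            hi = mid   # gamma_k lies at or to the left of mid
    return 0.5 * (lo + hi)

N = 1000
print(eigenvalue_index(0.0, N))  # 500
print(classical_location(500, N))  # essentially 0, the median of the semicircle
```

For a density obtained numerically (e.g. from the Dyson equation), the same two functions apply once `cdf` is replaced by a numerical integral of \(\rho \).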

We remark that a variant of Corollary 2.7 holds more generally for almost cusp points. It is another consequence of Corollary 2.6 that with high probability there are no eigenvalues much further than the fluctuation scale \(\eta _{\mathrm {f}}\) away from the spectrum. We note that the following corollary generalises [11, Corollary 2.3] by also covering internal gaps of size \(\ll 1\).

Corollary 2.8

(No eigenvalues outside the support of the self-consistent density). Let \(\tau \not \in {{\,\mathrm{supp}\,}}\rho \). Under the assumptions of Theorem 2.5 we have

for any \(\epsilon ,\nu >0\), where c and C are positive constants depending on the model parameters; C also depends on \(\epsilon \) and \(\nu \).

Remark 2.9

Theorem 2.5 and its consequences, Corollaries 2.6, 2.7 and 2.8, also hold for both symmetry classes if Assumption (B) is replaced by the condition that there exist an \(L \in \mathbb {N}\) and \(c>0\) such that \(\min _{i,j}(S^L)_{ij} \ge c/N\). A variance profile S satisfying this condition is called uniformly primitive (cf. [6, Eq. (2.5)] and [5, Eq. (2.11)]). Note that uniform primitivity is weaker than condition (B) in two respects. First, it involves only the variance matrix \({{\,\mathrm{\mathbf {E}}\,}}\left| w_{ij}\right| ^2\), unlike (2.3), which in the complex Hermitian case also involves \({{\,\mathrm{\mathbf {E}}\,}}w_{ij}^2\). Second, uniform primitivity allows certain matrix elements of W to vanish. The proof under these more general assumptions follows the same strategy but requires minor modifications within the stability analysis.
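Uniform primitivity can be tested directly by computing powers of S. The sketch below (helper name hypothetical) returns the smallest \(L\) with \(\min _{i,j}(S^L)_{ij}\ge c/N\). The two profiles are our own illustrative choices: vanishing diagonal variances, which fail the entrywise lower bound for \(L=1\) but are uniformly primitive with \(L=2\), and a bipartite profile, whose powers always keep a vanishing block and which is therefore never uniformly primitive.

```python
import numpy as np

def primitivity_exponent(S, c, max_power=10):
    """Smallest L <= max_power with min_{i,j} (S^L)_{ij} >= c/N, or None."""
    N = S.shape[0]
    P = np.eye(N)
    for L in range(1, max_power + 1):
        P = P @ S  # P now equals S^L
        if P.min() >= c / N:
            return L
    return None

N = 200
# Vanishing diagonal variances: s_ij = 1/N for i != j and s_ii = 0.
S_zero_diag = (np.ones((N, N)) - np.eye(N)) / N
# Bipartite profile: only the two off-diagonal blocks are non-zero.
S_bipartite = np.zeros((N, N))
S_bipartite[: N // 2, N // 2 :] = 2.0 / N
S_bipartite[N // 2 :, : N // 2] = 2.0 / N

print(primitivity_exponent(S_zero_diag, c=0.5))  # 2
print(primitivity_exponent(S_bipartite, c=0.5))  # None
```

For the first profile \((S^2)_{ij}=(N-2+\delta _{ij})/N^2\ge (N-2)/N^2\), which explains why \(L=2\) suffices.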

3 Local Law

In order to directly appeal to recent results on the shape of the solution to the Matrix Dyson Equation (MDE) from [10] and to the flexible diagrammatic cumulant expansion from [34], we first reformulate the Dyson equation (2.2) for N-vectors \({\mathbf {m}}\) into a matrix equation that will approximately be satisfied by the resolvent G. This viewpoint also allows us to treat diagonal and off-diagonal elements of G on the same footing. In fact, (2.2) is a special case of

for a matrix \(M=M(z) \in \mathbb {C}^{N \times N}\) with positive definite imaginary part, \(\mathfrak {I}M =(M-M^*)/2\mathrm {i}>0\). The uniqueness of the solution M with \(\mathfrak {I}M>0\) was shown in [46]. Here the linear (self-energy) operator \({\mathcal {S}}:\mathbb {C}^{N \times N} \rightarrow \mathbb {C}^{N \times N}\) is defined as \({\mathcal {S}}[R]:={{\,\mathrm{\mathbf {E}}\,}}WRW\) and it preserves the cone of positive definite matrices. Definition 2.1 of the scDOS and its harmonic extension \(\rho (z)\) (cf. Theorem 2.5) directly generalises to the solution to (3.1), see [10, Definition 2.2].
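In the vector case the harmonic extension \(\rho (z)=\left\langle \mathfrak {I}{\mathbf {m}}(z)\right\rangle /\pi \) can be approximated numerically. The sketch below assumes that (2.2) takes the form \(-1/{\mathbf {m}}=z-{\mathbf {a}}+S{\mathbf {m}}\) with entrywise inversion, where \({\mathbf {a}}\) is the vector of diagonal deformations (our assumption about the notation of Sect. 2), and solves it by plain fixed-point iteration, which preserves \(\mathfrak {I}{\mathbf {m}}>0\) when \(\mathfrak {I}z>0\). The test case \({\mathbf {a}}=(\pm 1)\) with equal multiplicities and \(s_{ij}=1/N\) is our own choice: with \(w:=z+\left\langle {\mathbf {m}}\right\rangle \) it reduces to \(w^3=z(w^2-1)\), which has a triple root at \(z=0\), so 0 is an exact cusp and \(\rho (\mathrm {i}\eta )\approx \eta ^{1/3}/\pi \), in line with (3.7b).

```python
import numpy as np

def solve_vector_dyson(z, a, S, tol=1e-13, max_iter=100_000):
    """Fixed-point iteration for -1/m = z - a + S m (assumed form of (2.2)), Im z > 0."""
    m = np.full(a.shape, 1j, dtype=complex)  # start in the upper half plane
    for _ in range(max_iter):
        m_new = -1.0 / (z - a + S @ m)  # Im m_new > 0 whenever Im m > 0
        if np.max(np.abs(m_new - m)) < tol:
            return m_new
        m = m_new
    raise RuntimeError("fixed-point iteration did not converge")

def density(z, a, S):
    """Harmonic extension rho(z) = <Im m(z)> / pi."""
    return np.mean(solve_vector_dyson(z, a, S).imag) / np.pi

N = 200
a = np.concatenate([-np.ones(N // 2), np.ones(N // 2)])
S = np.full((N, N), 1.0 / N)
for eta in (1e-2, 1e-3, 1e-4):
    rho = density(1j * eta, a, S)
    print(eta, rho, rho / eta ** (1 / 3))  # the ratio approaches 1/pi ~ 0.318
```

Near the cusp the iteration slows down (its contraction factor tends to 1 as \(\eta \rightarrow 0\)), which is a numerical shadow of the small stability eigenvalue \(\beta \) discussed below.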

In the special case of Wigner-type matrices the self-energy operator is given by

where \({\mathbf {r}}:=(r_{ii})_{i=1}^N\), S was defined in (2.1), \(T = (t_{ij})_{i,j=1}^N \in \mathbb {C}^{N \times N}\) with \(t_{ij}={{\,\mathrm{\mathbf {E}}\,}}w_{ij}^2 \mathbb {1}(i \ne j)\) and \(\odot \) denotes the entrywise Hadamard product. The solution to (3.1) is then given by \(M={{\,\mathrm{diag}\,}}({\mathbf {m}})\), where \({\mathbf {m}}\) solves (2.2). Note that the action of \({\mathcal {S}}\) on diagonal matrices is independent of T; hence the Dyson equation (2.2) for Wigner-type matrices is determined solely by the matrix S, and T plays no role in it. However, T does play a role in analyzing the error matrix D, see (3.4) below.
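The Wigner-type self-energy can be checked directly from \({\mathcal {S}}[R]={{\,\mathrm{\mathbf {E}}\,}}WRW\): entrywise \((WRW)_{ij}=\sum _{k,l}w_{ik}r_{kl}w_{lj}\), and for Hermitian W with independent entries above the diagonal the only non-vanishing second moments are \({{\,\mathrm{\mathbf {E}}\,}}w_{ik}w_{ki}=s_{ik}\) and \({{\,\mathrm{\mathbf {E}}\,}}w_{ik}^2=t_{ik}\) (\(i\ne k\)). The sketch below builds the full tensor \(\kappa (ab,cd)={{\,\mathrm{\mathbf {E}}\,}}w_{ab}w_{cd}\) for a small random profile (S and T chosen by us, with \(t_{ji}=\overline{t_{ij}}\) and \(\left| t_{ij}\right| \le s_{ij}\), as needed for a genuine covariance) and compares \({{\,\mathrm{\mathbf {E}}\,}}WRW\) with \({{\,\mathrm{diag}\,}}(S{\mathbf {r}})+T\odot R^t\). That closed form is our reading of (3.2); it is consistent with the relation \({\mathcal {S}}[G_\mathrm {o}]=T\odot G^t\) and with the independence of the diagonal action from T used in this section.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 5
s = rng.uniform(0.5, 1.5, (N, N))
s = (s + s.T) / (2 * N)                   # symmetric variance profile s_ij = E|w_ij|^2
phi = rng.uniform(0.0, 2 * np.pi, (N, N))
t = 0.5 * s * np.exp(1j * (phi - phi.T))  # t_ji = conj(t_ij) and |t_ij| <= s_ij
np.fill_diagonal(t, 0.0)                  # T carries the indicator 1(i != j)

# kappa[a, b, c, d] = E w_ab w_cd for Hermitian W with independent entries above the diagonal
kappa = np.zeros((N, N, N, N), dtype=complex)
for i in range(N):
    for j in range(N):
        kappa[i, j, j, i] += s[i, j]      # cross part: E w_ij w_ji = E|w_ij|^2
        if i != j:
            kappa[i, j, i, j] += t[i, j]  # direct part: E w_ij^2

def self_energy_from_cumulants(R):
    """(E W R W)_{ij} = sum_{k,l} E[w_ik w_lj] r_kl."""
    return np.einsum("iklj,kl->ij", kappa, R)

def self_energy_formula(R):
    """diag(S r) + T (Hadamard) R^t with r the diagonal of R."""
    return np.diag(s @ np.diag(R)) + t * R.T

R = rng.standard_normal((N, N)) + 1j * rng.standard_normal((N, N))
print(np.allclose(self_energy_from_cumulants(R), self_energy_formula(R)))  # True
```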

The proof of the local law consists of three largely separate arguments. The first part concerns the analysis of the stability operator

and the shape analysis of the solution M to (3.1). The second part consists of proving that the resolvent G is indeed an approximate solution to (3.1) in the sense that the error matrix

is small. In previous works [8, 11, 34] it was sufficient to establish smallness of D in an isotropic form \(\left\langle \mathbf {x},D\mathbf {y}\right\rangle \) and averaged form \(\left\langle BD\right\rangle \) with general bounded vectors/matrices \(\mathbf {x},\mathbf {y},B\). In the vicinity of a cusp, however, it becomes necessary to establish an additional cancellation when D is averaged against the unstable direction of the stability operator \(\mathcal {B}\). We call this new effect cusp fluctuation averaging. Finally, the third part of the proof consists of a bootstrap argument starting far away from the real axis and iteratively lowering the imaginary part \(\eta =\mathfrak {I}z\) of the spectral parameter while maintaining the desired bound on \(G-M\).

Remark 3.1

We remark that the proofs of Theorem 2.5, and Corollaries 2.6 and 2.8 use the independence assumption on the entries of W only very locally. In fact, only the proof of a specific bound on D (see (3.15) later), which follows directly from the main result of the diagrammatic cumulant expansion, Theorem 3.7, uses the vector structure and the specific form of \(\mathcal {S}\) in (3.2) at all. Therefore, assuming (3.15) as an input, our proof of Theorem 2.5 remains valid also in the correlated setting of [11, 34], as long as \(\mathcal {S}\) is flat (see (3.6) below), and Assumption (C) is replaced by the corresponding assumption on the boundedness of \(\Vert M\Vert \).

For brevity we will carry out the proof of Theorem 2.5 only in the vicinity of almost cusps as the local law in all other regimes was already proven in [8, 11] to optimality. Therefore, within this section we will always assume that \(z = \tau +\mathrm {i}\eta =\tau _0+\omega +\mathrm {i}\eta \in \mathbb {H}\) lies inside a small neighbourhood

of the location \(\tau _0\) of a local minimum of the scDOS within the self-consistent spectrum \({{\,\mathrm{supp}\,}}\rho \). Here c is a sufficiently small constant depending only on the model parameters. We will further assume that either (i) \(\rho (\tau _0)\ge 0\) is sufficiently small and \(\tau _0\) is the location of a cusp or internal minimum, or (ii) \(\rho (\tau _0)=0\) and \(\tau _0\) is an edge adjacent to a sufficiently small gap of length \(\Delta >0\). The results from [10] guarantee that these are the only possibilities for the shape of \(\rho \), see (2.4). In other words, we assume that \(\tau _0 \in {{\,\mathrm{supp}\,}}\rho \) is a local minimum of \(\rho \) with a shape close to a cusp (cf. (2.4)). For concreteness we will also assume that if \(\tau _0\) is an edge, then it is a right edge (with a gap of length \(\Delta >0\) to the right) and \(\omega \in (-c, \frac{\Delta }{2}]\). The case when \(\tau _0\) is a left edge has the same proof.

We now introduce a quantity that will play an important role in the cusp fluctuation averaging mechanism. We define

where \(\mathfrak {R}M:=(M+M^*)/2\) is the real part of \(M=M(z)\). It was proven in [10, Lemma 5.5] that \(\sigma (z)\) extends to the real line as a 1/3-Hölder continuous function wherever the scDOS \(\rho \) is smaller than some threshold \(c\sim 1\), i.e. \(\rho \le c\). In the specific case of \(\mathcal {S}\) as in (3.2) the definition simplifies to

since \(M={{\,\mathrm{diag}\,}}({\mathbf {m}})\) is diagonal, where multiplication and division of vectors are understood entrywise. When evaluated at the location \(\tau _0\) the scalar \(\sigma (\tau _0)\) provides a measure of how far the shape of the singularity at \(\tau _0\) is from an exact cusp. In fact, if \(\sigma (\tau _0)=0\) and \(\rho (\tau _0)=0\), then \(\tau _0\) is a cusp location. To see the relationship between the emergence of a cusp and the limit \(\sigma (\tau _0) \rightarrow 0\), we refer to [10, Theorem 7.7 and Lemma 6.3]. The analogues of the quantities \({\mathbf {f}},{\mathbf {p}}\) and \(\sigma \) in (3.5b) are denoted by \(f_u,s\) and \(\sigma \) in [10], respectively. The significance of \(\sigma \) for the classification of singularity types in Wigner-type ensembles was first realised in [5]. Although in this paper we will use only [10] and will not rely on [5], we remark that the definition of \(\sigma \) in [5, Eq. (8.11)] differs slightly from the definition (3.5b). However, both definitions equally fulfil the purpose of classifying singularity types, since the ensuing scalar quantities \(\sigma \) are comparable inside the self-consistent spectrum. For the interested reader, we briefly relate our notations to the respective conventions in [10] and [5]. The quantity denoted by f in both [10] and [5] is the normalized eigendirection of the saturated self-energy operator F in the respective settings and is related to \({\mathbf {f}}\) from (3.5b) via \(f={\mathbf {f}}/ \Vert {\mathbf {f}}\Vert +{\mathcal {O}}\,\left( \eta /\rho \right) \). Moreover, \(\sigma \) in [5] is defined as \(\left\langle f^3 {{\,\mathrm{sgn}\,}}\mathfrak {R}{\mathbf {m}}\right\rangle \), justifying the comparability to \(\sigma \) from (3.5b).

3.1 Stability and shape analysis

From (3.1) and (3.4) we obtain the quadratic stability equation

for the difference \(G-M\). In order to apply the results of [10] to the stability operator \(\mathcal {B}\), we first have to check that the flatness condition [10, Eq. (3.10)] is satisfied for the self-energy operator \(\mathcal {S}\). We claim that \(\mathcal {S}\) is flat, i.e.

as quadratic forms for any positive semidefinite \(R \in \mathbb {C}^{N \times N}\). We remark that in the earlier paper [8] in the Wigner-type case only the upper bound \(s_{ij}\le C/N\) defined the concept of flatness. Here with the definition (3.6) we follow the convention of the more recent works [10, 11, 34], which is more conceptual. We also warn the reader that in the complex Hermitian Wigner-type case the condition \(c/N\le s_{ij}\le C/N\) implies (3.6) only if \(t_{ij}\) is bounded away from \(-s_{ij}\).

However, the flatness (3.6) is an immediate consequence of the fullness Assumption (B). Indeed, (B) is equivalent to the condition that the covariance operator \(\Sigma \) of all entries above and on the diagonal, defined as \(\Sigma _{ab,cd}:={{\,\mathrm{\mathbf {E}}\,}}w_{ab} w_{cd}\), is uniformly strictly positive definite. This implies that \(\Sigma \ge c \Sigma _{\mathrm {G}}\) for some constant \(c\sim 1\), where \(\Sigma _{\mathrm {G}}\) is the covariance operator of a GUE or GOE matrix, depending on the symmetry class we consider. This means that \({\mathcal {S}}\) can be split into \({\mathcal {S}}={\mathcal {S}}_0+c {\mathcal {S}}_{\mathrm {G}}\), where \({\mathcal {S}}_\mathrm {G}\) and \(\mathcal {S}_0\) are the self-energy operators corresponding to \(\Sigma _\mathrm {G}\) and \(\Sigma -c\Sigma _\mathrm {G}\), respectively. It is now an easy exercise to check that \({\mathcal {S}}_{\mathrm {G}}\) and thus \({\mathcal {S}}\) is flat.

In particular, [10, Proposition 3.5 and Lemma 4.8] are applicable implying that [10, Assumption 4.5] is satisfied. Thus, according to [10, Lemma 5.1] for spectral parameters z in a neighbourhood of \(\tau _0\) the operator \({\mathcal {B}}\) has a unique isolated eigenvalue \(\beta \) of smallest modulus and associated right \(\mathcal {B}[V_\mathrm {r}]=\beta V_\mathrm {r}\) and left \({\mathcal {B}}^*[V_\mathrm {l}]= \overline{\beta } V_{\mathrm {l}}\) eigendirections normalised such that \(\Vert V_\mathrm {r}\Vert _{\mathrm {hs}} =\langle {V_\mathrm {l}} \,, {V_\mathrm {r}}\rangle =1\). We denote the spectral projections to \(V_\mathrm {r}\) and to its complement by \(\mathcal {P}:=\left\langle V_\mathrm {l},\cdot \right\rangle V_\mathrm {r}\) and \(\mathcal {Q}:=1-\mathcal {P}\). For convenience of the reader we now collect some important quantitative information about the stability operator and its unstable direction from [10].
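The objects \(\beta ,V_\mathrm {r},V_\mathrm {l},\mathcal {P},\mathcal {Q}\) have a direct finite-dimensional analogue. The sketch below treats a generic square matrix as a stand-in for the stability operator acting on vectorised matrices, with the Euclidean inner product in place of \(\langle \cdot ,\cdot \rangle \). The test matrix \(1-0.999F\), with F entrywise positive and Perron eigenvalue 1, is our own choice, made so that it has exactly one isolated eigenvalue close to 0; the helper name is hypothetical.

```python
import numpy as np

def unstable_projections(B):
    """beta, V_l, V_r, P, Q for the eigenvalue of smallest modulus of a matrix B."""
    evals, right = np.linalg.eig(B)
    k = np.argmin(np.abs(evals))
    beta = evals[k]
    v_r = right[:, k] / np.linalg.norm(right[:, k])    # ||V_r|| = 1
    evals_adj, left = np.linalg.eig(B.conj().T)        # B^* V_l = conj(beta) V_l
    v_l = left[:, np.argmin(np.abs(evals_adj - np.conj(beta)))]
    v_l = v_l / np.conj(np.vdot(v_l, v_r))             # <V_l, V_r> = 1
    P = np.outer(v_r, v_l.conj())                      # P[x] = <V_l, x> V_r
    Q = np.eye(B.shape[0]) - P
    return beta, v_l, v_r, P, Q

rng = np.random.default_rng(1)
F = rng.uniform(0.0, 1.0, (20, 20))
F /= np.max(np.abs(np.linalg.eigvals(F)))  # normalise the Perron eigenvalue to 1
B = np.eye(20) - 0.999 * F                 # one eigenvalue close to 0
beta, v_l, v_r, P, Q = unstable_projections(B)
print(abs(beta))  # approximately 1e-3
print(np.allclose(P @ P, P), np.allclose(B @ P, beta * P), np.allclose(Q @ v_r, 0))
```

Since \(\mathcal {B}\) commutes with \(\mathcal {P}\), it acts on the range of \(\mathcal {Q}\) with the remaining, well-separated eigenvalues; this is the finite-dimensional content of the bound on \(\mathcal {B}^{-1}\mathcal {Q}\) in (3.7a).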

Proposition 3.2

(Properties of the MDE and its solution). The following statements hold true uniformly in \(z=\tau _0+\omega +\mathrm {i}\eta \in \mathbb {D}_\mathrm {cusp}\) assuming flatness as in (3.6) and the uniform boundedness of \(\Vert M\Vert \) for \(z\in \tau _0+(-\kappa ,\kappa )+\mathrm {i}\mathbb {R}_+\),


(i)

The eigendirections \(V_\mathrm {l},V_\mathrm {r}\) are norm-bounded and the operator \(\mathcal {B}^{-1}\) is bounded on the complement to its unstable direction, i.e.

$$\begin{aligned} \Vert \mathcal {B}^{-1}\mathcal {Q}\Vert _{\mathrm {hs}\rightarrow \mathrm {hs}}+\Vert V_\mathrm {r}\Vert +\Vert V_\mathrm {l}\Vert \lesssim 1.\end{aligned}$$(3.7a)

(ii)

The density \(\rho \) is comparable with the explicit function \(\rho (\tau _0+\omega +\mathrm {i}\eta )\sim \widetilde{\rho }(\tau _0+\omega +\mathrm {i}\eta )\) given by

$$\begin{aligned} \widetilde{\rho } :={\left\{ \begin{array}{ll} \rho (\tau _0)+(\left| \omega \right| +\eta )^{1/3},&{}\text {in cases (i),(iii) if }\tau _0=\mathfrak {m},\mathfrak {c},\\ (\left| \omega \right| +\eta )^{1/2}(\Delta +\left| \omega \right| +\eta )^{-1/6},&{}\text {in case (ii) if }\tau _0=\mathfrak {e}_-,\; \omega \in [-c,0],\\ \eta (\Delta +\left| \omega \right| +\eta )^{-1/6}(\left| \omega \right| +\eta )^{-1/2},&{}\text {in case (ii) if }\tau _0=\mathfrak {e}_-,\; \omega \in [0,\Delta /2].\\ \end{array}\right. } \end{aligned}$$(3.7b)

(iii)

The eigenvalue \(\beta \) of smallest modulus satisfies

$$\begin{aligned} \left| \beta \right| \sim \frac{\eta }{\rho } + \rho (\rho +\left| \sigma \right| ), \end{aligned}$$(3.7c)

and we have the comparison relations

$$\begin{aligned} \begin{aligned}&\left| \left\langle V_\mathrm {l}, M \mathcal {S}[V_\mathrm {r}]V_\mathrm {r}\right\rangle \right| \sim \rho +\left| \sigma \right| , \\&\left| \left\langle V_\mathrm {l},M\mathcal {S}[V_\mathrm {r}]\mathcal {B}^{-1}\mathcal {Q}[M\mathcal {S}[V_\mathrm {r}]V_\mathrm {r}]+M\mathcal {S}\mathcal {B}^{-1}\mathcal {Q}[M\mathcal {S}[V_\mathrm {r}]V_\mathrm {r}]V_\mathrm {r}\right\rangle \right| \sim 1. \end{aligned} \end{aligned}$$(3.7d)

(iv)

The quantities \(\eta /\rho +\rho (\rho +\left| \sigma \right| )\) and \(\rho +\left| \sigma \right| \) in (3.7c)–(3.7d) can be replaced by the following more explicit auxiliary quantities

$$\begin{aligned} \begin{aligned} \widetilde{\xi }_1(\tau _0+\omega +\mathrm {i}\eta )&:={\left\{ \begin{array}{ll} (\left| \omega \right| +\eta )^{1/2} (\left| \omega \right| +\eta +\Delta )^{1/6}, &{}\text {if } \tau _0=\mathfrak {e}_-,\\ (\rho (\tau _0)+(\left| \omega \right| +\eta )^{1/3})^2, &{}\text {if }\tau _0=\mathfrak {m},\mathfrak {c}, \end{array}\right. }\\ \widetilde{\xi }_2(\tau _0+\omega +\mathrm {i}\eta )&:={\left\{ \begin{array}{ll} (\left| \omega \right| +\eta +\Delta )^{1/3}, &{}\text {if } \tau _0=\mathfrak {e}_-,\\ \rho (\tau _0)+(\left| \omega \right| +\eta )^{1/3}, &{}\text {if }\tau _0=\mathfrak {m},\mathfrak {c}. \end{array}\right. } \end{aligned} \end{aligned}$$(3.7e)

which are monotonically increasing in \(\eta \). More precisely, it holds that \(\eta /\rho + \rho (\rho +\left| \sigma \right| ) \sim \widetilde{\xi }_1\) and, in the case where \(\tau _0=\mathfrak {c},\mathfrak {m}\) is a cusp or a non-zero local minimum, we also have that \(\rho +\left| \sigma \right| \sim \widetilde{\xi }_2\). For the case when \(\tau _0=\mathfrak {e}_-\) is a right edge next to a gap of size \(\Delta \) there exists a constant \(c_*\) such that \(\rho +\left| \sigma \right| \sim \widetilde{\xi }_2\) in the regime \(\omega \in [-c,c_*\Delta ]\) and \(\rho +\left| \sigma \right| \lesssim \widetilde{\xi }_2\) in the regime \(\omega \in [c_*\Delta ,\Delta /2]\).

Proof

We first explain how to translate the notations from the present paper to the notations in [10]: The operators \(\mathcal {S},\mathcal {B},\mathcal {Q}\) are simply denoted by S, B, Q in [10]; the matrices \(V_l,V_r\) here are denoted by \(l/\langle {l} \,, {b}\rangle ,b\) there. The bound on \(\mathcal {B}^{-1}\mathcal {Q}\) in (3.7a) follows directly from [10, Eq. (5.15)]. The bounds on \(V_\mathrm {l},V_\mathrm {r}\) in (3.7a) follow from the definition of the stability operator (3.3) together with the fact that \(\Vert M\Vert \lesssim 1\) (by Assumption (C)) and \(\Vert {\mathcal {S}}\Vert _{\mathrm {hs} \rightarrow \Vert \cdot \Vert } \lesssim 1\), following from the upper bound in flatness (3.6). The asymptotic expansion of \(\rho \) in (3.7b) follows from [10, Remark 7.3] and [5, Corollary A.1]. The claims in (iii) follow directly from [10, Proposition 6.1]. Finally, the claims in (iv) follow directly from [10, Remark 10.4]. \(\square \)
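The explicit proxies in (3.7b) and (3.7e) are elementary functions, so their claimed monotonicity in \(\eta \) can be checked directly. The sketch below encodes them with hypothetical helper names; we read the first line of \(\widetilde{\xi }_1\) as the edge case \(\tau _0=\mathfrak {e}_-\) and the second as the case \(\tau _0=\mathfrak {m},\mathfrak {c}\), matching \(\widetilde{\xi }_2\). The comparison relations \(\sim \) hold only up to model-dependent constants, which the code does not model.

```python
def rho_tilde(omega, eta, kind, rho0=0.0, Delta=0.0):
    """Explicit proxy for rho from (3.7b); kind is "min_or_cusp" or "right_edge"."""
    r = abs(omega) + eta
    if kind == "min_or_cusp":
        return rho0 + r ** (1 / 3)
    if omega <= 0:  # right edge, omega inside the support
        return r ** 0.5 * (Delta + r) ** (-1 / 6)
    return eta * (Delta + r) ** (-1 / 6) * r ** -0.5  # omega inside the gap

def xi_tilde(omega, eta, kind, rho0=0.0, Delta=0.0):
    """Explicit proxies (xi_1, xi_2) from (3.7e)."""
    r = abs(omega) + eta
    if kind == "min_or_cusp":
        base = rho0 + r ** (1 / 3)
        return base ** 2, base
    return r ** 0.5 * (r + Delta) ** (1 / 6), (r + Delta) ** (1 / 3)

etas = [10.0 ** (-k) for k in range(6, 0, -1)]  # 1e-6, ..., 1e-1 (increasing)
for kind, params in [("min_or_cusp", {"rho0": 0.0}), ("right_edge", {"Delta": 1e-3})]:
    xs = [xi_tilde(-0.01, e, kind, **params) for e in etas]
    increasing = all(p[0] < q[0] and p[1] < q[1] for p, q in zip(xs, xs[1:]))
    print(kind, increasing)  # True in both cases
```

At an exact cusp (\(\rho (\tau _0)=0\), \(\omega =0\)) the first helper returns \(\eta ^{1/3}\), the cube-root behaviour that produces the \(N^{-3/4}\) fluctuation scale.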

The following lemma establishes simplified lower bounds on \(\widetilde{\xi }_1,\widetilde{\xi }_2\) whenever \(\eta \) is much larger than the fluctuation scale \(\eta _\mathrm {f}\). We defer the proof of this technical lemma, which distinguishes between various regimes, to the Appendix.

Lemma 3.3

Under the assumptions of Proposition 3.2 we have uniformly in \(z=\tau _0+\omega +\mathrm {i}\eta \in \mathbb {D}_\mathrm {cusp}\) with \(\eta \ge \eta _\mathrm {f}\) that

We now define an appropriate matrix norm in which we will measure the distance between G and M. The \(\Vert \cdot \Vert _*\)-norm is defined exactly as in [11] and is similar to the one first introduced in [34]. It is a norm comparing matrix elements on a large but finite set of vectors with a hierarchical structure. To define this set we introduce some notation. For second order cumulants of matrix elements \(\kappa (w_{ab},w_{cd}):={{\,\mathrm{\mathbf {E}}\,}}w_{ab}w_{cd}\) we use the short-hand notation \(\kappa (ab,cd)\). We also use the short-hand notation \(\kappa (\mathbf {x}b,cd)\) for the \(\mathbf {x}=(x_a)_{a\in [N]}\)-weighted linear combination \(\sum _a x_a \kappa (ab,cd)\) of such cumulants. We use the notation that replacing an index in a scalar quantity by a dot (\(\cdot \)) refers to the corresponding vector, e.g. \(A_{a\cdot }\) is a short-hand notation for the vector \((A_{ab})_{b\in [N]}\). Matrices \(R_{\mathbf {x}\mathbf {y}}\) with vector subscripts \(\mathbf {x},\mathbf {y}\) are understood as short-hand notations for \(\left\langle \mathbf {x},R\mathbf {y}\right\rangle \), and matrices \(R_{\mathbf {x}a}\) with mixed vector and index subscripts are understood as \(\left\langle \mathbf {x},R e_a\right\rangle \), with \(e_a\) being the a-th standard basis vector, normalised such that \(\Vert e_a\Vert =1\). We fix two vectors \(\mathbf {x},\mathbf {y}\) and some large integer K and define the sets of vectors

Here the cross and the direct part \(\kappa _\mathrm {c},\kappa _\mathrm {d}\) of the 2-cumulants \(\kappa (\cdot ,\cdot )\) refer to the natural splitting dictated by the Hermitian symmetry. In the specific case of (3.2) we simply have \(\kappa _\mathrm {c}(ab,cd)=\delta _{ad}\delta _{bc}s_{ab}\) and \(\kappa _\mathrm {d}(ab,cd)=\delta _{ac}\delta _{bd}t_{ab}\). Then the \(\Vert \cdot \Vert _*\)-norm is given by

We remark that the sets \(I_k\), and hence also \(\Vert \cdot \Vert _*\), depend on z via \(M=M(z)\). We omit this dependence from the notation as it plays no role in the estimates.

In terms of this norm we obtain the following estimate on \(G-M\) in terms of its projection \(\Theta =\left\langle V_\mathrm {l},G-M\right\rangle \) onto the unstable direction of the stability operator \(\mathcal {B}\). It is a direct consequence of a general expansion of approximate quadratic matrix equations whose linear stability operators have a single eigenvalue close to 0, as given in Lemma A.1.

Proposition 3.4

(Cubic equation for \(\Theta \)). Fix \(K\in \mathbb {N}\), \(\mathbf {x},\mathbf {y}\in \mathbb {C}^N\) and use \(\Vert \cdot \Vert _*=\Vert \cdot \Vert _*^{K,\mathbf {x},\mathbf {y}}\). For fixed \(z \in \mathbb {D}_{\mathrm {cusp}}\) and on the event that \(\Vert G-M\Vert _*+\Vert D\Vert _*\lesssim N^{-10/K}\) the difference \(G-M\) admits the expansion

with an error matrix E and the scalar \(\Theta :=\left\langle V_\mathrm {l}, G-M\right\rangle \) that satisfies the approximate cubic equation

Here, the error \(\epsilon _*\) satisfies the upper bound

where R is a deterministic matrix with \(\Vert R\Vert \lesssim 1\) and the coefficients of the cubic equation satisfy the comparison relations

Proof

We first establish some important bounds involving the \(\Vert \cdot \Vert _*\)-norm. We claim that for any matrices \(R,R_1,R_2\)

The proof of (3.9) follows verbatim as in [11, Lemma 3.4] with (3.7a) as an input. Moreover, the bound on \(\left\langle V_\mathrm {l}, \cdot \right\rangle \) follows directly from the bound on \({\mathcal {Q}}\). Obviously, we also have \(\Vert \cdot \Vert _*\le 2 \Vert \cdot \Vert \).

Next, we apply Lemma A.1 from the Appendix with the choices

The operator \({\mathcal {B}}\) in Lemma A.1 is chosen as the stability operator (3.3). Then (A.1) is satisfied with \(\lambda :=N^{1/2K}\) according to (3.9) and (3.7a). With \(\delta :=N^{-25/4K}\) we verify (3.8a) directly from (A.5), where \(\Theta = \left\langle V_\mathrm {l}, G-M\right\rangle \) satisfies

Here we used \(\left| \Theta \right| \le \Vert G-M\Vert _*\lesssim N^{-10/K}\) and \(\Vert MD\Vert _*\lesssim N^{1/2K}\Vert D\Vert _*\). The coefficients \(\mu _0, \mu _2, \mu _3\) are defined through (A.4) and R is given by

Now we bound \( \left| \left\langle R, D\right\rangle \Theta \right| \le N^{-1/4K} \left| \Theta \right| ^3 + N^{1/8K} \left| \left\langle R, D\right\rangle \right| ^{3/2}\) by Young’s inequality, absorb the resulting error terms of order \(N^{-1/4K}\left| \Theta \right| ^3\) into the cubic term, \(\mu _3 \Theta ^3 + \mathcal {O}(N^{-1/4K} \left| \Theta \right| ^3) = \widetilde{\mu }_3 \Theta ^3\), by introducing a modified coefficient \(\widetilde{\mu }_3\), and use that \(\left| \mu _3\right| \sim \left| \widetilde{\mu }_3\right| \sim 1\) for any \(z \in \mathbb {D}_{\mathrm {cusp}}\). Finally, we safely divide (3.10) by \(\widetilde{\mu }_3\) to verify (3.8b) with \(\xi _1:=-\beta / \widetilde{\mu }_3\) and \(\xi _2 :=\mu _2 / \widetilde{\mu }_3\). For the fact \(\left| \mu _3\right| \sim 1\) on \(\mathbb {D}_{\mathrm {cusp}}\) and the comparison relations (3.8d) we refer to (3.7c)–(3.7d). \(\square \)
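The Young’s inequality step in the preceding proof uses the conjugate exponents 3 and 3/2, i.e. \(ab\le a^3/3+2b^{3/2}/3\) for \(a,b\ge 0\), after splitting \(1=N^{-1/12K}N^{1/12K}\):

$$\begin{aligned} \left| \left\langle R,D\right\rangle \Theta \right|&=\bigl (N^{-1/12K}\left| \Theta \right| \bigr )\bigl (N^{1/12K}\left| \left\langle R,D\right\rangle \right| \bigr )\le \frac{1}{3}\bigl (N^{-1/12K}\left| \Theta \right| \bigr )^3+\frac{2}{3}\bigl (N^{1/12K}\left| \left\langle R,D\right\rangle \right| \bigr )^{3/2}\\&\le N^{-1/4K}\left| \Theta \right| ^3+N^{1/8K}\left| \left\langle R,D\right\rangle \right| ^{3/2}. \end{aligned}$$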


3.2 Probabilistic bound

We now collect bounds on the error matrix D from [34, Theorem 4.1] and Sect. 4. We first introduce the notion of stochastic domination.

Definition 3.5

(Stochastic domination). Let \(X=X^{(N)}, Y=Y^{(N)}\) be sequences of non-negative random variables. We say that X is stochastically dominated by Y (and use the notation \(X \prec Y\)) if

for any \(\epsilon >0, \nu \in \mathbb {N}\) and some family of positive constants \(C(\epsilon ,\nu )\) that is uniform in N and other underlying parameters (e.g. the spectral parameter z in the domain under consideration).
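Assuming the omitted display in Definition 3.5 is the standard condition \(\mathbf {P}(X>N^{\epsilon }Y)\le C(\epsilon ,\nu )N^{-\nu }\), the sum and product rules for \(\prec \) follow from a union bound: if \(X_1+X_2>N^{\epsilon }(Y_1+Y_2)\), then \(X_1>N^{\epsilon }Y_1\) or \(X_2>N^{\epsilon }Y_2\), and if \(X_1\le N^{\epsilon }Y_1\) and \(X_2\le N^{\epsilon }Y_2\), then \(X_1X_2\le N^{2\epsilon }Y_1Y_2\). Hence

$$\begin{aligned} \mathbf {P}\bigl (X_1+X_2>N^{\epsilon }(Y_1+Y_2)\bigr )&\le \mathbf {P}(X_1>N^{\epsilon }Y_1)+\mathbf {P}(X_2>N^{\epsilon }Y_2)\le \frac{C_1(\epsilon ,\nu )+C_2(\epsilon ,\nu )}{N^{\nu }},\\ \mathbf {P}\bigl (X_1X_2>N^{2\epsilon }Y_1Y_2\bigr )&\le \mathbf {P}(X_1>N^{\epsilon }Y_1)+\mathbf {P}(X_2>N^{\epsilon }Y_2)\le \frac{C_1(\epsilon ,\nu )+C_2(\epsilon ,\nu )}{N^{\nu }}, \end{aligned}$$

and since \(\epsilon >0\) is arbitrary, replacing \(2\epsilon \) by \(\epsilon \) in the second line gives \(X_1X_2\prec Y_1Y_2\).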

It can be checked (see [33, Lemma 4.4]) that \(\prec \) satisfies the usual arithmetic properties, e.g. if \(X_1\prec Y_1\) and \(X_2\prec Y_2\), then also \(X_1+X_2\prec Y_1 +Y_2\) and \(X_1X_2\prec Y_1 Y_2\). Furthermore, to formulate bounds on a random matrix R compactly, we introduce the notations

for random matrices R and a deterministic control parameter \(\Lambda =\Lambda (z)\). We also introduce high moment norms

for \(p\ge 1\), scalar valued random variables X and random matrices R. To translate high moment bounds into high probability bounds and vice versa we have the following easy lemma [11, Lemma 3.7].

Lemma 3.6

Let R be a random matrix, \(\Phi \) a deterministic control parameter such that \(\Phi \ge N^{-C}\) and \(\Vert R\Vert \le N^C\) for some \(C>0\), and let \(K\in \mathbb {N}\) be a fixed integer. Then we have the equivalences

Expressed in terms of the \(\Vert \cdot \Vert _p\)-norm we have the following high-moment bounds on the error matrix D. The bounds (3.11a)–(3.11b) have already been established in [34, Theorem 4.1]; we just list them for completeness. The bounds (3.11c)–(3.11d), however, are new; they capture the additional cancellation at the cusp and constitute the core novelty of the present paper. The additional smallness comes from averaging against the specific weights \({\mathbf {p}},{\mathbf {f}}\) from (3.5b).

Theorem 3.7

(High moment bound on D with cusp fluctuation averaging). Under the assumptions of Theorem 2.5 for any compact set \(\mathbb {D}\subset \{z\in \mathbb {C}|\mathfrak {I}z\ge N^{-1}\}\) there exists a constant C such that for any \(p\ge 1,\epsilon >0\), \(z\in \mathbb {D}\) and matrices/vectors \(B,\mathbf {x},\mathbf {y}\) it holds that

Moreover, for the specific weight matrix \(B={{\,\mathrm{diag}\,}}({\mathbf {p}}{\mathbf {f}})\) we have the improved bound

and the improved bound on the off-diagonal component

where we defined the following z-dependent quantities

and \(q=Cp^3/\epsilon \).

Theorem 3.7 will be proved in Sect. 4. We now translate the high moment bounds of Theorem 3.7 into high probability bounds via Lemma 3.6 and use those to establish bounds on \(G-M\) and the error in the cubic equation for \(\Theta \). To simplify the expressions we formulate the bounds in the domain

Lemma 3.8

(High probability error bounds). Fix \(\zeta ,c>0\) sufficiently small and suppose that \(\left| G-M\right| \prec \Lambda \), \(\left| \mathfrak {I}(G-M)\right| \prec \Xi \) and \(\left| \Theta \right| \prec \theta \) hold at fixed \(z \in \mathbb {D}_\zeta \), and assume that the deterministic control parameters \(\Lambda , \Xi ,\theta \) satisfy \(\Lambda +\Xi +\theta \lesssim N^{-c}\). Then for any sufficiently small \(\epsilon >0\) it holds that

as well as

where the coefficients \(\xi _1,\xi _2\) are those from Proposition 3.4, and we recall that \(\Theta =\left\langle V_l,G-M\right\rangle \).

Proof

We translate the high moment bounds (3.11a)–(3.11b) into high probability bounds using Lemma 3.6 and \(\left| G\right| \prec \Vert M\Vert + \Lambda \lesssim 1\) to find

In particular, these bounds together with the assumed bounds on \(G-M\) guarantee the applicability of Proposition 3.4. Now we use (3.14) and (3.9) in (3.8a) to get (3.13b). Here we used (3.9), translated \(\Vert \cdot \Vert _p\)-bounds into \(\prec \)-bounds on \(\Vert \cdot \Vert _*\) and vice versa via Lemma 3.6, and absorbed the \(N^{1/K}\) factors into \(\prec \) by using that K can be chosen arbitrarily large. It remains to verify (3.13a). In order to do so, we first claim that

for any sufficiently small \(\epsilon >0\).

Proof of (3.15)

We first collect two additional ingredients from [10] specific to the vector case.


(a)

The imaginary part \(\mathfrak {I}{\mathbf {m}}\) of the solution \({\mathbf {m}}\) is comparable \(\mathfrak {I}{\mathbf {m}}\sim \left\langle \mathfrak {I}{\mathbf {m}}\right\rangle =\pi \rho \) to its average in the sense \(c \left\langle \mathfrak {I}{\mathbf {m}}\right\rangle \le \mathfrak {I}m_i\le C \left\langle \mathfrak {I}{\mathbf {m}}\right\rangle \) for all \(i\) and some \(c,C>0\), and, in particular, \({\mathbf {m}}=\mathfrak {R}{\mathbf {m}}+{\mathcal {O}}\,\left( \rho \right) \).


(b)

The eigendirections \(V_\mathrm {l},V_\mathrm {r}\) are diagonal and are approximately given by

$$\begin{aligned} V_\mathrm {l} = c{{\,\mathrm{diag}\,}}({\mathbf {f}}/\left| {\mathbf {m}}\right| ) + {\mathcal {O}}\,\left( \rho +\eta /\rho \right) ,\qquad V_\mathrm {r}=c' {{\,\mathrm{diag}\,}}({\mathbf {f}}\left| {\mathbf {m}}\right| )+ {\mathcal {O}}\,\left( \rho +\eta /\rho \right) \end{aligned}$$(3.16)

for some constants \(c,c'\sim 1\).

Indeed, (a) follows directly from [10, Proposition 3.5] and the approximations in (3.16) follow directly from [10, Corollary 5.2]. The fact that \(V_\mathrm {l},V_\mathrm {r}\) are diagonal follows from the simplicity of the eigendirections in the matrix case, from the fact that \(M={{\,\mathrm{diag}\,}}({\mathbf {m}})\) is diagonal, and from the fact that \({\mathcal {B}}\) preserves the space of diagonal matrices as well as the space of off-diagonal matrices. On the latter \({\mathcal {B}}\) acts stably as \(1+\mathcal {O}_{\mathrm {hs}\rightarrow \mathrm {hs}}(N^{-1})\). Thus the unstable directions lie inside the space of diagonal matrices.
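The stability of \({\mathcal {B}}\) on off-diagonal matrices can be seen concretely: for off-diagonal R the diagonal part of \({\mathcal {S}}[R]\) vanishes, so \({\mathcal {B}}[R]_{ij}=R_{ij}-m_it_{ij}m_jR_{ji}\), i.e. \({\mathcal {B}}=1-K\) where K swaps \((i,j)\leftrightarrow (j,i)\) and rescales by \(m_it_{ij}m_j\). Hence \(\Vert K\Vert _{\mathrm {hs}\rightarrow \mathrm {hs}}=\max _{i\ne j}\left| m_it_{ij}m_j\right| \lesssim N^{-1}\) whenever \(\left| m_i\right| \lesssim 1\) and \(\left| t_{ij}\right| \le s_{ij}\lesssim N^{-1}\). The sketch below builds this restriction explicitly for randomly chosen \({\mathbf {m}}\) and T (our own test data) and checks that all singular values lie in \([1-\kappa ,1+\kappa ]\) with \(\kappa =\Vert K\Vert \).

```python
import numpy as np

rng = np.random.default_rng(2)
N = 20
# |m_i| <= 1 and Im m_i > 0 (arbitrary bounded test vector)
m = rng.uniform(0.2, 1.0, N) * np.exp(1j * rng.uniform(0.05, np.pi - 0.05, N))
phi = rng.uniform(0.0, 2 * np.pi, (N, N))
t = (0.9 / N) * np.exp(1j * (phi - phi.T))  # |t_ij| = 0.9/N and t_ji = conj(t_ij)
np.fill_diagonal(t, 0.0)

# Restriction of B[R] = R - M S[R] M to off-diagonal R, where S[R] = T (Hadamard) R^t.
off = [(i, j) for i in range(N) for j in range(N) if i != j]
pos = {ij: k for k, ij in enumerate(off)}
K = np.zeros((len(off), len(off)), dtype=complex)
for (i, j), k in pos.items():
    K[k, pos[(j, i)]] = m[i] * t[i, j] * m[j]  # B[R]_ij picks up R_ji
B_off = np.eye(len(off)) - K

kappa = np.max(np.abs(np.outer(m, m) * t))  # operator norm of K
sv = np.linalg.svd(B_off, compute_uv=False)
print(kappa <= 1.0 / N, sv.min() >= 1 - kappa - 1e-12, sv.max() <= 1 + kappa + 1e-12)  # True True True
```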

We now turn to the proof of (3.15) and first note that, according to (a) and (b) we have

with errors in \(\Vert \cdot \Vert \)-norm-sense, for some constant \(c \sim 1\) to see

where \({\mathbf {w}}_1\in \mathbb {C}^N\) is a deterministic vector with uniformly bounded entries. Since \(\left| \left\langle {{\,\mathrm{diag}\,}}({\mathbf {w}}_1)D\right\rangle \right| \prec (\rho +\Xi )/N\eta \) by (3.14), the bound on the first term in (3.15) follows together with (3.11c) via Lemma 3.6. Now we consider the second term in (3.15). We split \(D = D_\mathrm {d} + D_\mathrm {o}\) into its diagonal and off-diagonal components. Since \({\mathcal {B}}\) and \({\mathcal {S}}\) preserve the space of diagonal and the space of off-diagonal matrices we find

with an appropriate deterministic matrix \(u_{ij}\) having bounded entries. In particular, the cross terms vanish and the first term is bounded by

according to (3.14). By taking the off-diagonal part of (3.8a) and using the fact that M and \(V_\mathrm {r}\) and therefore also \(\mathcal {B}^{-1}\mathcal {Q}[M\mathcal {S}[V_\mathrm {r}]V_\mathrm {r}]\) are diagonal (cf. (b) above) we have

for any \(\epsilon \) such that \(\theta \lesssim N^{-\epsilon }\) by Young’s inequality in the last step. Together with (3.17), (3.14) and the assumption that \(\left| G_\mathrm {o}\right| =\left| (G-M)_\mathrm {o}\right| \prec \Lambda \) we then compute

Thus the bound on the second term on the lhs. in (3.15) follows together with (3.18)–(3.19) by \({\mathcal {S}}[G_\mathrm {o}] = T \odot G^t\) and (3.11d) via Lemma 3.6. This completes the proof of (3.15).\(\square \)

With (3.14) and (3.15) the upper bound (3.8c) on the error \(\epsilon _*\) of the cubic equation (3.8b) takes the same form as the rhs. of (3.15) if K is sufficiently large depending on \(\epsilon \). By the first estimate in (3.13b) we can redefine the control parameter \(\Lambda \) on \(\left| G-M\right| \) as \(\Lambda :=\theta +((\rho +\Xi )/N \eta )^{1/2}\) and the claim (3.13a) follows directly with (3.15), thus completing the proof of Lemma 3.8. \(\square \)

3.3 Bootstrapping

Now we will show that the difference \(G-M\) converges to zero uniformly for all spectral parameters \(z \in \mathbb {D}_\zeta \) as defined in (3.12). For convenience we refer to existing bounds on \(G-M\) far away from the real line to establish a rough bound on \(G-M\) in, say, \(\mathbb {D}_1\). We then iteratively lower the threshold on \(\eta \) by appealing to Proposition 3.4 and Lemma 3.8 until we establish the rough bound in all of \(\mathbb {D}_\zeta \). As a second step we then improve the rough bound iteratively until we obtain Theorem 2.5.
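Lowering \(\eta \) by a factor \(N^{\zeta _\mathrm {s}}\) costs at most a factor \(N^{\zeta _\mathrm {s}}\) because of monotonicity in \(\eta \). As a simplified illustration we use the operator norm instead of the \(\Vert \cdot \Vert _p\)-norms of the proof: for Hermitian H, \(\Vert G(\tau +\mathrm {i}\eta )\Vert =1/\min _j\left| \lambda _j-\tau -\mathrm {i}\eta \right| \), so \(\eta \Vert G(\tau +\mathrm {i}\eta )\Vert =\max _j\eta /((\lambda _j-\tau )^2+\eta ^2)^{1/2}\) is nondecreasing in \(\eta \), and therefore \(\Vert G(\tau +\mathrm {i}\eta ')\Vert \le (\eta /\eta ')\Vert G(\tau +\mathrm {i}\eta )\Vert \) for \(\eta '\le \eta \). The random test matrix below is our own choice.

```python
import numpy as np

rng = np.random.default_rng(3)
N = 300
X = rng.standard_normal((N, N)) + 1j * rng.standard_normal((N, N))
H = (X + X.conj().T) / (2 * np.sqrt(N))  # Hermitian test matrix, entries of variance 1/N
lam = np.linalg.eigvalsh(H)

def resolvent_norm(tau, eta):
    """Operator norm ||(H - tau - i eta)^{-1}|| = 1 / min_j |lambda_j - tau - i eta|."""
    return 1.0 / np.min(np.abs(lam - tau - 1j * eta))

tau = 0.3
etas = np.logspace(0, -4, 50)  # eta decreasing from 1 to 1e-4
scaled = np.array([eta * resolvent_norm(tau, eta) for eta in etas])
print(bool(np.all(np.diff(scaled) <= 1e-12)))  # True: eta*||G|| does not increase as eta decreases
print(resolvent_norm(tau, 1e-3) <= 10 * resolvent_norm(tau, 1e-2))  # True
```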

Lemma 3.9

(Rough bound). For any \(\zeta >0\) there exists a constant \(c>0\) such that on the domain \(\mathbb {D}_\zeta \) we have the rough bound

Proof

The rough bound (3.20) in a neighbourhood of a cusp was first established for Wigner-type random matrices in [8]. For the convenience of the reader we present a streamlined proof adapted to the current setting. The lemma is an immediate consequence of the following statement. Let \(\zeta _\mathrm {s}>0\) be a sufficiently small step size, depending on \(\zeta \). Then for any \(\mathbb {N}_0\ni k\le 1/\zeta _\mathrm {s}\) on the domain \(\mathbb {D}_{\max \{1-k\zeta _\mathrm {s}, \zeta \}}\) we have

We prove (3.21) by induction over k. For sufficiently small \(\zeta \) the base case \(k=0\) holds due to the local law away from the self-consistent spectrum, see e.g. [34, Theorem 2.1].
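The bookkeeping of this induction can be tabulated. The Python sketch below is only an illustration and rests on two readings that are not displayed in this section: that \(\mathbb {D}_a\) consists of spectral parameters with \(\eta \ge N^{a-1}\) (as the later choice \(\eta _*=N^{\zeta -1}\) suggests), and that (3.21) at step k reads \(\Vert G-M\Vert _*\prec N^{-4^{-k}\zeta }\) (as the conclusion of the induction step suggests). The function name `bootstrap_schedule` is hypothetical. It shows that the number of steps depends only on \(\zeta \) and \(\zeta _\mathrm {s}\), not on N, which is what keeps the stochastic domination bounds stable along the induction.

```python
def bootstrap_schedule(zeta: float, zeta_s: float) -> list[tuple[int, float, float]]:
    """Tabulate the induction steps in the proof of Lemma 3.9 (illustration only).

    All exponents are base N. Assumed, not displayed in the text: step k works on
    eta >= N^(max(1 - k*zeta_s, zeta) - 1) and proves ||G - M||_* prec N^(-4^(-k) * zeta).
    Returns a list of tuples (k, log_N of the eta threshold, bound exponent).
    """
    if zeta_s <= 0:
        raise ValueError("step size zeta_s must be positive")
    steps = []
    k = 0
    while True:
        threshold = max(1 - k * zeta_s, zeta)
        steps.append((k, threshold - 1, zeta * 4.0 ** (-k)))
        if threshold <= zeta:  # the final domain D_zeta has been reached
            break
        k += 1
    return steps


if __name__ == "__main__":
    for k, eta_exp, bound_exp in bootstrap_schedule(zeta=0.25, zeta_s=0.25):
        print(f"k={k}: eta >= N^({eta_exp:+.2f}), ||G-M||_* prec N^(-{bound_exp:.5f})")
```

The exponent of the bound shrinks by a factor 4 per step but stays positive, and at most \(1/\zeta _\mathrm {s}\) steps are needed.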

Now, as induction hypothesis, suppose that (3.21) holds on \(\widetilde{\mathbb {D}}_{k} :=\mathbb {D}_{\max \{1-k\zeta _\mathrm {s}, \zeta \}}\), and in particular that \(\left| G\right| \prec 1\) and \(\Vert G\Vert _p\le _{\epsilon ,p}N^{\epsilon }\) for any \(\epsilon ,p\) according to Lemma 3.6. The monotonicity of the function \(\eta \mapsto \eta \Vert G(\tau +\mathrm {i}\eta )\Vert _p\) (see e.g. [34, proof of Prop. 5.5]) implies \(\Vert G\Vert _p\le _{\epsilon ,p} N^{\epsilon +\zeta _\mathrm {s}}\le N^{2 \zeta _\mathrm {s}}\) for \(\epsilon <\zeta _\mathrm {s}\) and therefore, according to Lemma 3.6, that \(\left| G\right| \prec N^{2\zeta _\mathrm {s}}\) on \(\widetilde{\mathbb {D}}_{k+1}\). This, in turn, implies \(\left| D\right| \prec N^{-\zeta /3}\) on \(\widetilde{\mathbb {D}}_{k+1}\) by (3.11a) and Lemma 3.6, provided \(\zeta _\mathrm {s}\) is chosen small enough. We now fix \(\mathbf {x},\mathbf {y}\) and a large integer K as the parameters of \(\Vert \cdot \Vert _*=\Vert \cdot \Vert _*^{\mathbf {x},\mathbf {y},K}\) for the rest of the proof and omit them from the notation, but we stress that all estimates will be uniform in \(\mathbf {x},\mathbf {y}\). We find \(\sup _{z \in {\widetilde{\mathbb {D}}}_{k+1}}\Vert D(z)\Vert _*\prec N^{-\zeta /3}\) by a simple union bound over a sufficiently fine grid, using \(\Vert \partial _z D\Vert \le N^C\) for some \(C>0\). Thus, for K large enough, we can use (3.8a), (3.8b), (3.8c) and (3.9) to infer

on the event \(\Vert G-M\Vert _*+\Vert D\Vert _*\lesssim N^{-10/K}\), and on \(\widetilde{\mathbb {D}}_{k+1}\). Now we use the following lemma [10, Lemma 10.3] to translate the first estimate in (3.22) into a bound on \(\left| \Theta \right| \). For the rest of the proof we keep \(\tau =\mathfrak {R}z\) fixed and consider the coefficients \(\xi _1,\xi _2\) and \(\Theta \) as functions of \(\eta \).
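The monotonicity step in the induction above can be written out explicitly. In this worked sketch \(\eta ':=N^{-k\zeta _\mathrm {s}}\) (our notation) is the lowest imaginary part in \(\widetilde{\mathbb {D}}_k\), assuming that \(\mathbb {D}_a\) consists of spectral parameters with \(\eta \ge N^{a-1}\) and that \(1-k\zeta _\mathrm {s}\ge \zeta \) (otherwise \(\mathbb {D}_\zeta \) has already been reached); \(\eta \in [N^{-(k+1)\zeta _\mathrm {s}},\eta ']\) is a point of the new strip.

```latex
\begin{aligned}
\eta\,\Vert G(\tau+\mathrm{i}\eta)\Vert_p
&\le \eta'\,\Vert G(\tau+\mathrm{i}\eta')\Vert_p
&&\text{(monotonicity, } \eta\le\eta'\text{)}\\
\Vert G(\tau+\mathrm{i}\eta)\Vert_p
&\le \frac{\eta'}{\eta}\,\Vert G(\tau+\mathrm{i}\eta')\Vert_p
\le N^{\zeta_\mathrm{s}}\,\Vert G(\tau+\mathrm{i}\eta')\Vert_p
&&\text{(}\eta\ge N^{-(k+1)\zeta_\mathrm{s}}\text{)}\\
&\le_{\epsilon,p} N^{\epsilon+\zeta_\mathrm{s}}\le N^{2\zeta_\mathrm{s}}
&&\text{(induction hypothesis, } \epsilon<\zeta_\mathrm{s}\text{)}
\end{aligned}
```

Losing a factor \(N^{\zeta _\mathrm {s}}\) per step is harmless because the step size \(\zeta _\mathrm {s}\) can be chosen small compared to \(\zeta \).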

Lemma 3.10

(Bootstrapping cubic inequality). For \(0<\eta _*<\eta ^*<\infty \) let \(\xi _1,\xi _2:[\eta _*,\eta ^*] \rightarrow \mathbb {C}\) be complex-valued functions and \(\widetilde{\xi }_1,\widetilde{\xi }_2, d:[\eta _*,\eta ^*] \rightarrow \mathbb {R}^+ \) be continuous functions such that at least one of the following holds true:

(i) \(\left| {\xi }_1\right| \sim \widetilde{\xi }_1\), \(\left| {\xi }_2\right| \sim \widetilde{\xi }_2\), and \(\widetilde{\xi }_2^3/d,\widetilde{\xi }_1^3/d^2,\widetilde{\xi }_1^2/d\widetilde{\xi }_2\) are monotonically increasing, and \(d^2/\widetilde{\xi }_1^3+d\widetilde{\xi }_2/\widetilde{\xi }_1^2\ll 1\) at \(\eta ^*\),

(ii) \(\left| {\xi }_1\right| \sim \widetilde{\xi }_1\), \(\left| {\xi }_2\right| \lesssim \widetilde{\xi }_1^{1/2}\), and \(\widetilde{\xi }_1^3/d^2\) is monotonically increasing.

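Then any continuous function \(\Theta :[\eta _*,\eta ^*] \rightarrow \mathbb {C}\) that satisfies the cubic inequality \(\left| \Theta ^3+\xi _2\Theta ^2+\xi _1\Theta \right| \lesssim d\) on \([\eta _*,\eta ^*]\) has the property

\[\left| \Theta (\eta ^*)\right| \lesssim \min \Big \{d^{1/3},\frac{d^{1/2}}{\widetilde{\xi }_2^{1/2}},\frac{d}{\widetilde{\xi }_1}\Big \} \quad \Longrightarrow \quad \left| \Theta \right| \lesssim \min \Big \{d^{1/3},\frac{d^{1/2}}{\widetilde{\xi }_2^{1/2}},\frac{d}{\widetilde{\xi }_1}\Big \}\ \text {on } [\eta _*,\eta ^*]. \tag{3.23}\]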

By direct computation we can now verify that the coefficients \(\xi _1,\xi _2\) in (3.8b) and the auxiliary coefficients \(\widetilde{\xi }_1,\widetilde{\xi }_2\) defined in (3.7e) satisfy the assumptions of Lemma 3.10 with the constant function \(d=N^{-4^{-k}\zeta +\delta }\) for any \(\delta >0\), using only the information on \(\xi _1,\xi _2\) given by the comparison relations (3.8d). For example, in the regime where \(\tau _0\) is a right edge and \(\omega \sim \Delta \), we have \(\widetilde{\xi }_1 \sim (\eta +\Delta )^{2/3}\) and \(\widetilde{\xi }_2 \sim (\eta +\Delta )^{1/3}\), and both functions are monotonically increasing in \(\eta \). Hence Assumption (ii) of Lemma 3.10 is satisfied. All other regimes are handled similarly.
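In the edge example the check of Assumption (ii) reduces to comparing powers of \(\eta +\Delta \). The following worked sketch assumes, as we read (3.8d), that \(\left| \xi _1\right| \sim \widetilde{\xi }_1\) and \(\left| \xi _2\right| \sim \widetilde{\xi }_2\).

```latex
\widetilde{\xi}_1\sim(\eta+\Delta)^{2/3},\qquad
\widetilde{\xi}_2\sim(\eta+\Delta)^{1/3}=\bigl((\eta+\Delta)^{2/3}\bigr)^{1/2}
\;\Longrightarrow\;
\left|\xi_2\right|\sim\widetilde{\xi}_2\lesssim\widetilde{\xi}_1^{1/2},
\qquad
\frac{\widetilde{\xi}_1^{3}}{d^{2}}\sim\frac{(\eta+\Delta)^{2}}{N^{-2\cdot 4^{-k}\zeta+2\delta}} .
```

Since d does not depend on \(\eta \) and \(\widetilde{\xi }_1\) is increasing in \(\eta \), the ratio \(\widetilde{\xi }_1^3/d^2\) is increasing, which is the remaining requirement in (ii).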

We now set \(\eta ^*:=N^{-k\zeta _\mathrm {s}}\) and

By the induction hypothesis we have \(\left| \Theta (\eta ^*)\right| \lesssim d \lesssim \min \{ d^{1/3}, d^{1/2}\widetilde{\xi }_2^{-1/2},d \widetilde{\xi }_1^{-1}\}\) with overwhelming probability, so that the condition in (3.23) holds, and we conclude \(\left| \Theta (\eta )\right| \prec d^{1/3}=N^{-(4^{-k}\zeta -\delta )/3}\) for \(\eta \in [\eta _*,\eta ^*]\). For small enough \(\delta >0\) the second bound in (3.22) implies \(\Vert G-M\Vert _*\prec N^{-4^{-(k+1)}\zeta }\). By continuity and the definition of \(\eta _*\) we conclude \(\eta _*=N^{-(k+1) \zeta _\mathrm {s}}\), finishing the proof of (3.21). \(\square \)
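How small \(\delta \) has to be can be made explicit by comparing exponents. The computation below only concerns the bound on \(\left| \Theta \right| \); the passage to \(\Vert G-M\Vert _*\) goes through the second bound in (3.22), which is not reproduced here.

```latex
d^{1/3}=N^{-(4^{-k}\zeta-\delta)/3}\le N^{-4^{-(k+1)}\zeta}
\iff \frac{4^{-k}\zeta-\delta}{3}\ge 4^{-(k+1)}\zeta
\iff 4^{-k}\zeta-\delta\ge 3\cdot 4^{-(k+1)}\zeta
\iff \delta\le 4^{-(k+1)}\zeta .
```

So any fixed \(\delta \in (0,4^{-(k+1)}\zeta ]\) produces the exponent \(4^{-(k+1)}\zeta \) required at the next step, and such a choice is available at each of the finitely many steps \(k\le 1/\zeta _\mathrm {s}\).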

Proof of Theorem 2.5

The bounds within the proof hold true uniformly for \(z\in \mathbb {D}_\zeta \), unless explicitly specified otherwise. We therefore suppress this qualifier in the following statements. First we apply Lemma 3.8 with the choice \(\Xi =\Lambda \), i.e. we do not treat the imaginary part of the resolvent separately. With this choice the first inequality in (3.13b) becomes self-improving and after iteration shows that

and, in other words, (3.13a) holds with \(\Xi =\theta + (\rho /N\eta )^{1/2}+ 1/N\eta \). This implies that if \(\left| \Theta \right| \prec \theta \lesssim N^{-c}\) for some arbitrarily small \(c>0\), then

holds for all sufficiently small \(\widetilde{\epsilon }\) with overwhelming probability, where we defined

For this conclusion we used the comparison relations (3.8d), Proposition 3.2(iv) as well as (3.7b), and the bound \(\sqrt{\eta /\rho }\sim \sqrt{\eta /\widetilde{\rho }}\lesssim \widetilde{\xi }_2\). \(\square \)

The bound (3.25) is a self-improving estimate on \(\left| \Theta \right| \) in the following sense. For \(k \in \mathbb {N}\) and \(l \in \mathbb {N}\cup \{*\}\) let

Then (3.25) with \(\left| \Theta \right| \prec \theta _k\) implies that \(\left| \Theta ^3+ \xi _2 \Theta ^2 +\xi _1 \Theta \right| \lesssim N^{-\widetilde{\epsilon }} d_k\). Applying Lemma 3.10 with \(d=N^{-\widetilde{\epsilon }}{d_k}\), \(\eta ^*\sim 1\), \(\eta _*=N^{\zeta -1}\) yields the improvement \(\left| \Theta \right| \prec \theta _{k+1}\). Here we need to check the condition in (3.23); since \(\widetilde{\xi }_1\sim 1\) at \(\eta ^*\sim 1\), we have \(\left| \Theta \right| \lesssim N^{-\widetilde{\epsilon }}d_k\le d_{k+1}\sim \theta _{k+1}\) there. After k iteration steps, with k chosen such that \(N^{-k\widetilde{\epsilon }}\) becomes smaller than \(N^{6\widetilde{\epsilon }}d_*\), we find \(\left| \Theta \right| \prec \theta _*\), where we used that \(\widetilde{\epsilon }\) can be chosen arbitrarily small. We are now ready to prove the following bound, which we record as a proposition for convenience.

Proposition 3.11

For any \(\zeta >0\) we have the bounds

where \(\theta _*:=\min \{d_*^{1/3},d_*^{1/2}/\widetilde{\xi }_2^{1/2},d_*/\widetilde{\xi }_1\}\), \(d_*\) is given in (3.26), \(\widetilde{\rho }\) in (3.7b), and \(\widetilde{\xi }_1,\widetilde{\xi }_2\) in (3.7e).

Proof

Using \(\left| \Theta \right| \prec \theta _*\) proven above, we apply (3.24) with \(\theta =\theta _*\) to conclude the first inequality in (3.27). For the second inequality in (3.27) we use the estimate on \(\left| G-M\right| _{\mathrm {av}}\) from (3.13b) with \(\theta = \theta _*\) and \(\Xi = (\rho /N\eta )^{1/2}+ 1/N\eta \). \(\square \)
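The scale \(\theta _*=\min \{d_*^{1/3},d_*^{1/2}/\widetilde{\xi }_2^{1/2},d_*/\widetilde{\xi }_1\}\) has a transparent meaning in a toy version of the cubic inequality. The Python sketch below is our own illustration and not Lemma 3.10 itself: it takes real, non-negative coefficients (the lemma allows complex \(\xi _1,\xi _2\) and relies on a continuity argument in \(\eta \)) and solves \(r^3+\xi _2r^2+\xi _1r=d\). At the root all three terms are non-negative, so each is at most d, giving \(r\le m:=\min \{d^{1/3},(d/\xi _2)^{1/2},d/\xi _1\}\); one of them is at least d/3, giving \(r\ge m/3\). The helper names `positive_root` and `theta_scale` are hypothetical.

```python
def positive_root(xi1: float, xi2: float, d: float) -> float:
    """Root r >= 0 of r**3 + xi2*r**2 + xi1*r = d, for xi1, xi2 >= 0 and d > 0.

    The left-hand side vanishes at 0 and is strictly increasing on [0, inf),
    so the root is unique and bisection converges to it.
    """
    def f(r: float) -> float:
        return r**3 + xi2 * r**2 + xi1 * r - d

    lo, hi = 0.0, 1.0
    while f(hi) < 0:  # enlarge the bracket until it contains the root
        hi *= 2.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if f(mid) < 0:
            lo = mid
        else:
            hi = mid
    return hi  # f(hi) >= 0, so hi is an upper approximation of the root


def theta_scale(xi1: float, xi2: float, d: float) -> float:
    """min{d^(1/3), d^(1/2)/xi2^(1/2), d/xi1}, dropping terms with a zero coefficient."""
    candidates = [d ** (1 / 3)]
    if xi2 > 0:
        candidates.append((d / xi2) ** 0.5)
    if xi1 > 0:
        candidates.append(d / xi1)
    return min(candidates)


if __name__ == "__main__":
    # here the linear term dominates, so the root is close to d / xi1
    print(positive_root(1e-2, 0.5, 1e-6), theta_scale(1e-2, 0.5, 1e-6))
```

In this toy setting the minimum simply records which of the three terms of the cubic dominates at the root.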

The bound on \(\left| G-M\right| \) from (3.27) implies complete delocalisation of eigenvectors, uniformly at singularities of the scDOS. The following corollary was already established in [8, Corollary 1.14] and, given (3.27), its proof follows the same line of reasoning.

Corollary 3.12

(Eigenvector delocalisation). Let \({\mathbf {u}}\in \mathbb {C}^N\) be an eigenvector of H corresponding to an eigenvalue \(\lambda \in \tau _0+(-c,c)\) for some sufficiently small positive constant \(c \sim 1\). Then for any deterministic \(\mathbf {x}\in \mathbb {C}^N\) we have

The bounds (3.27) simplify in the regime \(\eta \ge N^\zeta \eta _\mathrm {f}\) above the typical eigenvalue spacing to

using Lemma 3.3 which implies \(\theta _*\le d_*/\widetilde{\xi }_1\le 1/N\eta \). The bound on \(\left| G-M\right| _{\mathrm {av}}\) is further improved in the case when \(\tau _0=\mathfrak {e}_-\) is an edge and, in addition to \(\eta \ge N^\zeta \eta _\mathrm {f}\), we assume \(N^{\delta }\eta \le \omega \le \Delta /2\) for some \(\delta >0\), i.e. if \(\omega \) is well inside a gap of size \(\Delta \ge N^{\delta +\zeta }\eta _\mathrm {f}\). Then we find \(\Delta >N^{-3/4}\) by the definition of \(\eta _\mathrm {f}=\Delta ^{1/9}/N^{2/3}\) in (2.7) and use Lemma 3.3 and (3.7b), (3.7e) to conclude

In the last bound we used \(1/N\omega \le N^{-\delta }/N\eta \) and \(\Delta ^{1/6}/(N\eta \omega ^{1/2})\le N^{-\delta /2}\). Using (3.29) in (3.27) yields the improvement

The bounds on \(\left| G-M\right| _{\mathrm {av}}\) from (3.28) and (3.30), inside and outside the self-consistent spectrum, allow us to show the uniform rigidity, Corollary 2.6. We postpone these arguments until after we finish the proof of Theorem 2.5. The uniform rigidity implies that for \({{\,\mathrm{dist}\,}}(z, {{\,\mathrm{supp}\,}}\rho ) \ge N^{\zeta }\eta _\mathrm {f}\) we can estimate the imaginary part of the resolvent via

for any normalised \(\mathbf {x}\in \mathbb {C}^{N}\), where \({\mathbf {u}}_\lambda \) denotes the normalised eigenvector corresponding to \(\lambda \). For the first inequality in (3.31) we used Corollary 3.12, and for the second we applied Corollary 2.6, which allows us to replace the Riemann sum with an integral since \([\eta ^2+(\tau _0+\omega -\lambda )^2]^{1/2}=\left| z-\lambda \right| \ge N^\zeta \eta _\mathrm {f}\).
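The elementary exponent comparisons used in the edge regime leading to (3.29) and (3.30) can be checked directly. This worked sketch uses \(\eta _\mathrm {f}=\Delta ^{1/9}/N^{2/3}\) from (2.7) together with the standing assumptions \(\eta \ge N^\zeta \eta _\mathrm {f}\), \(N^\delta \eta \le \omega \le \Delta /2\) and \(\Delta \ge N^{\delta +\zeta }\eta _\mathrm {f}\).

```latex
\begin{aligned}
&\Delta\ge N^{\delta+\zeta}\eta_\mathrm{f}=N^{\delta+\zeta-2/3}\Delta^{1/9}
\iff \Delta^{8/9}\ge N^{\delta+\zeta-2/3}
\iff \Delta\ge N^{9(\delta+\zeta)/8-3/4}>N^{-3/4},\\
&\omega\ge N^{\delta}\eta \implies \frac{1}{N\omega}\le\frac{N^{-\delta}}{N\eta},\\
&\eta\ge N^{\zeta}\eta_\mathrm{f} \implies \eta^{3/2}\ge N^{3\zeta/2}\eta_\mathrm{f}^{3/2}=N^{3\zeta/2-1}\Delta^{1/6},\\
&N\eta\,\omega^{1/2}\ge N^{1+\delta/2}\eta^{3/2}\ge N^{\delta/2+3\zeta/2}\Delta^{1/6}
\implies \frac{\Delta^{1/6}}{N\eta\,\omega^{1/2}}\le N^{-\delta/2-3\zeta/2}\le N^{-\delta/2}.
\end{aligned}
```

The last line even gains an extra factor \(N^{-3\zeta /2}\), which the argument does not need.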

Using (3.31), we apply Lemma 3.8 again, repeating the strategy from the beginning of the proof, but this time we can choose the control parameter \(\Xi =\rho \). In this way we find

where we defined

Note that the estimates in (3.32) are simpler than those in (3.27). The reason is that the additional terms \(1/N\eta \), \(1/(N\eta )^2\) and \(1/(N\eta )^3\) in (3.27) stem from the presence of \(\Xi \) in (3.13a), (3.13b). With \(\Xi =\rho \) these are immediately absorbed into \(\rho \) and are no longer present. The second term in the definition of \(d_\#\) can be dropped since we still have \(\widetilde{\xi }_2\gtrsim (\rho /N\eta )^{1/2}\) (this follows from Lemma 3.3 if \(\eta \ge N^\zeta \eta _\mathrm {f}\), and directly from (3.7b), (3.7e) if \(\omega \ge N^\zeta \eta _\mathrm {f}\)). This implies \(\theta _\#\lesssim d_\#^{1/2}/\widetilde{\xi }_2^{1/2}\lesssim (\rho /N\eta )^{1/2}\), so the first bound in (3.32) proves (2.8a).

Now we turn to the proof of (2.8b). Given the second bound in (3.28), it is sufficient to consider the case when \(\tau =\mathfrak {e}_-+\omega \) and \(\eta \le \omega \le \Delta /2\) with \(\omega \ge N^\zeta \eta _\mathrm {f}\). In this case Proposition 3.2 yields \(\widetilde{\xi }_2\widetilde{\rho }/\widetilde{\xi }_1+\widetilde{\rho }\lesssim \eta /\omega \sim \eta /{{\,\mathrm{dist}\,}}(z,{{\,\mathrm{supp}\,}}\rho )\). Thus we have

and therefore the second bound in (3.32) implies (2.8b). This completes the proof of Theorem 2.5. \(\square \)

3.4 Rigidity and absence of eigenvalues

The proofs of Corollaries 2.6 and 2.8 rely on the bounds on \(\left| G-M\right| _{\mathrm {av}}\) from (3.28) and (3.30). As before, we may restrict ourselves to the neighbourhood of a local minimum \(\tau _0 \in {{\,\mathrm{supp}\,}}\rho \) of the scDOS which is either an internal minimum with a small value of \(\rho (\tau _0)>0\), a cusp location or a right edge adjacent to a small gap of length \(\Delta >0\). All other cases, namely the bulk regime and regular edges adjacent to large gaps, have been treated prior to this work [8, 11].

Proof of Corollary 2.8

Let us denote the empirical eigenvalue distribution of H by \(\rho _H = \frac{1}{N} \sum _{i=1}^N \delta _{\lambda _i}\) and consider the case when \(\tau _0=\mathfrak {e}_-\) is a right edge, \(\Delta \ge N^{\delta } \eta _\mathrm {f}\) for any \(\delta >0\) and \(\eta _\mathrm {f}=\eta _\mathrm {f}(\mathfrak {e}_-)\sim \Delta ^{1/9}N^{-2/3}\). Then we show that there are no eigenvalues in \(\mathfrak {e}_-+[N^{\delta }\eta _\mathrm {f}, \Delta /2]\) with overwhelming probability. We apply [8, Lemma 5.1] with the choices

for any \(\omega \in [N^{\delta }\eta _\mathrm {f}, \Delta /2]\) and some \(\zeta \in (0,\delta /4)\). We use (3.30) to estimate the error terms \(J_1, J_2\) and \(J_3\) from [8, Eq. (5.2)] by \(N^{2\zeta -\delta /2-1}\) and obtain \((\rho _H-\rho )([\tau _1,\tau _2]) =\rho _H([\tau _1,\tau _2]) \prec N^{2\zeta -\delta /2-1}\). Since \(\zeta <\delta /4\), we have \(2\zeta -\delta /2<0\), so this bound is much smaller than \(1/N\), whereas \(\rho _H([\tau _1,\tau _2])\) is an integer multiple of \(1/N\). Hence with overwhelming probability the interval \([\tau _1,\tau _2]\) does not contain any eigenvalues. A simple union bound finishes the proof of Corollary 2.8. \(\square \)
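The final step of this proof uses only that \(\rho _H\) assigns mass in multiples of \(1/N\). The minimal pure-Python sketch below, with hypothetical helper names, makes this explicit for a given list of eigenvalues: any deterministic bound on \(\rho _H([a,b])\) below \(1/N\) forces the interval to be empty. It ignores the \(N^{\epsilon }\) loss hidden in \(\prec \), which the argument absorbs by choosing \(\epsilon <\delta /2-2\zeta \).

```python
from fractions import Fraction


def empirical_mass(eigenvalues: list[float], a: float, b: float) -> Fraction:
    """rho_H([a, b]) = #{i : a <= lambda_i <= b} / N for rho_H = (1/N) sum_i delta_{lambda_i}."""
    n = len(eigenvalues)
    count = sum(1 for lam in eigenvalues if a <= lam <= b)
    return Fraction(count, n)


def bound_forces_empty(bound: float, n: int) -> bool:
    """True if rho_H([a, b]) <= bound is only possible when [a, b] holds no eigenvalue."""
    # rho_H([a, b]) is either 0 or at least 1/n, so any bound below 1/n forces it to be 0
    return bound < 1 / n


if __name__ == "__main__":
    n, zeta, delta = 1000, 0.05, 0.5  # illustrative values with zeta < delta / 4
    print(bound_forces_empty(n ** (2 * zeta - delta / 2 - 1), n))
```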

Proof of Corollary 2.6

Now we establish Corollary 2.6 around a local minimum \(\tau _0 \in {{\,\mathrm{supp}\,}}\rho \) of the scDOS. Its proof has two ingredients. First we follow the strategy of the proof of [8, Corollary 1.10] to see that

for any \(\left| \omega \right| \le c \), i.e. we have a very precise control on \(\rho _H\). In contrast to the statement in that corollary we have a local law (3.28) with uniform \(1/N\eta \) error and thus the bound (3.33) does not deteriorate close to \(\tau _0\). We warn the reader that the standard argument inside the proof of [8, Corollary 1.10] has to be adjusted slightly to arrive at (3.33). In fact, when inside that proof the auxiliary result [8, Lemma 5.1] is used with the choice \(\tau _1=-10\), \(\tau _2 =\tau \), \(\eta _1=\eta _2=N^{\zeta -1}\) for some \(\zeta >0\), this choice should be changed to \(\tau _1=-C\), \(\tau _2 =\tau \), \(\eta _1=N^{\zeta -1}\) and \(\eta _2=N^{\zeta }\eta _{\mathrm {f}}(\tau )\), where \(C>0\) is chosen sufficiently large such that \(\tau _1\) lies far to the left of the self-consistent spectrum.