Abstract

The construction of a novel protein force field called FFLUX, which uses topological atoms, is founded on high-rank and fully polarizable multipolar electrostatics. The machine learning method kriging successfully predicts multipole moments of a given atom with as only input the nuclear coordinates of the atoms surrounding this given atom. Thus, trained kriging models accurately capture the polarizable multipolar electrostatics of amino acids. Here we show that successful kriging models can also be constructed for non-electrostatic energy contributions. As a result, the full potential energy surface of the (molecular) system trained for can be predicted by the corresponding set of atomic kriging models. In particular, we report on the performance of kriging models for each atom’s (A) (1) total atomic energy (E AIQA ), (2) intra-atomic energy (E Aintra ) (both kinetic and potential energy), (3) exchange energy (\(V_{XC}^{{AA^{\prime } }}\)) and (4) electrostatic energy (\(V_{\text{cl}}^{{AA^{{\prime }} }}\)) of atom A with the rest of the system (A′), and (5) interatomic energy (\(V_{\text{inter}}^{{AA^{{\prime }} }}\)). The total molecular energy can be reconstructed from the kriging predictions of these atomic energies. For the three case studies investigated (i.e. methanol, N-methylacetamide and peptide-capped glycine), the molecular energies were produced with mean absolute errors under 0.4, 0.8 and 1.1 kJ mol−1, respectively.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

There is a consensus that traditional force fields, which are instrumental in the vast majority of modern molecular simulations, need further improvement. Their limiting accuracy is regularly pointed out in the literature, as a cause for discrepancies between a given force field’s predictions and experimental results. Indeed, if the sampling during the simulation is adequate, then only the potential can be blamed if predictions fail to be trustworthy. In order to improve their energy prediction, popular force fields such as AMBER and CHARMM have been modified on several occasions. Fairly recent modifications were extensively tested [1] in 2011 with the most powerful dedicated molecular simulation hardware in the world. The four force fields tested in this protein folding work were Amber ff03, Amber ff99SB*-ILDN, CHARMM27 and CHAMRMM22*. It was found that the folding mechanism and the properties of the unfolded state depended substantially on the choice of force field. Another extensive and more recent study [2] concluded, from a millisecond of simulations on intrinsically disordered proteins, that eight well-known force fields generate unexpectedly huge differences in chain dimension, hydrogen bonding and secondary structure content. In fact, discrepancies are so serious that changing the force field has a stronger effect on secondary structure content than changing the entire peptide sequence. Such comparisons are quite rare but precious because they clearly demonstrate, while eliminating any sampling issues or hardware limitations, that much more work needs to be done. The question is which type of work.

Over the last decade our strategy has been to re-examine and challenge the core architecture of classical force fields. The type of work that accompanies such a bold strategy can be characterized as arduous, and above all, systematic. At the outset of this long term project, the ubiquitous atomic point charges (one on each nucleus) were replaced by nucleus-centred atomic multipole moments. This step is shared by other next-generation force fields such as AMOEBA [3], XED [4], SIBFA [5] and ACKS2 [6] and is driven by a clearly justified desire towards increasingly accurate electrostatics [7, 8].

The current approach embraces so-called topological atoms as the “entity of information” from which any system (molecular or ionic) is built. Much work has been carried out [9–17] in order to obtain a deep understanding of the convergence behaviour and accuracy of the electrostatic interaction between topological atoms, as well as the electrostatic potential they generate. Topological atoms are defined by the quantum theory of atoms in molecules (QTAIM) [18–21] as finite-volume fragments in real 3D space. As quantum atoms [20, 22], topological atoms are deeply rooted in quantum mechanics [23]. These atoms result from a parameter-free partitioning of the electron density, introducing sharp boundaries whose shape responds to any variation in nuclear geometry. The finite size of topological atoms prevents penetration effects and the associated correction in the form of damping functions. Topological multipolar electrostatics proved to be successful in the description of electrostatic interaction in proteins [24].

The next step in the construction of a topological force field is the inclusion of electrostatic polarization. In principle, the multipole moments of any given atom are influenced by all the atoms surrounding it, but this influence typically decreases the further away the surrounding atoms are. In order to be able to handle the full complexity of this influence, we invoked machine learning early on. Initially we used neural networks [25] and applied it to water clusters [26]. In 2009 it turned out [27] that a completely different machine learning method called kriging performed more accurately than neural networks. Although kriging was computationally more expensive, it coped better with the larger number of molecules surrounding the atom of interest. Kriging [28], which is also known as Gaussian regression analysis [29], originates in geostatistics but has been used in very different application areas, including the prediction [30] of atomic properties when inside molecules.

The essence of our kriging approach is the establishment of a direct mapping between an atomic multipole moment (output) and the nuclear coordinates (input) of the surrounding atoms. This mapping is obtained after training to a training set of (molecular or cluster) geometries, and is able to make a prediction to a previously unseen geometry of the surrounding molecules. For this purpose we take advantage of the renowned interpolative power of kriging. Moreover, when using kriging models in an extrapolative way, the model returns the average value of the atomic property of interest observed over the training set. Finally, it must be pointed out that a kriging model is not returning an atomic polarizability but the atomic multipole moment itself, after the polarization process is complete. This strategy has an important advantage when the kriging models are used during a molecular dynamics simulation: the atomic moments do not need to be computed (typically iteratively) from the polarizabilities. Instead, the multipole moments are predicted “on the fly”, directly from the nuclear coordinates of the surroundings at any given time step.

Most attention has been devoted to modelling the electrostatic interaction at long-range by means of kriged atomic multipole moments. As this procedure is understood and works well [31–37], the next step is how to combine this electrostatic energy with the non-electrostatic energy contributions. Preliminary and unpublished work expressed the latter in the traditional manner, i.e. with Hooke-like potentials reinforced with anharmonic extensions. The parameterization of these potentials, in the presence of kriged electrostatics, turned out to be inadequate. For this reason, a more satisfactory and elegant alternative strategy was carried out, which is to combine kriged electrostatics with kriged non-electrostatics. In this streamlined procedure the machine learning method is trained for energy quantities that are obtained from the same topological energy partitioning [38] that yields the atomic multipole moments. In 2014, the atomic kinetic energy was successfully kriged [39] as the first non-electrostatic energy contribution. That work presented proof-of-concept based on four molecules of increasing complexity (methanol, N-methylacetamide, glycine and triglycine). For all atoms tested, the mean atomic kinetic energy errors fell below 1.5 kJ mol−1, and far below this value in most cases.

In the current article, we go further and deliver proof-of-concept for the kriging of non-electrostatic atomic energy contributions. For that purpose we have adopted the interacting quantum atoms (IQA) scheme proposed by Blanco et al. [40]. This is a topological energy partitioning scheme, inspired by early work [11] on atom–atom partitioning of intramolecular and intermolecular Coulomb energy. In IQA, the kinetic energy is subsumed in the intra-atomic energy (or sometimes called “self energy”), which also contains the potential energy of the electrons interacting with themselves and with the nucleus, both within a given atom. This intra-atomic energy plays a pivotal role in stereo-electronic effects, including intermolecular Pauli-like repulsion. Furthermore, IQA can calculate the electrostatic interaction between two atoms that are so close that their multipolar expansion diverges. The IQA method achieves this goal by using a variant of the six-dimensional volume integration (over two atoms) proposed in Ref. [11], which avoids the multipolar expansion altogether. Similarly, IQA does not multipole-expand the (inter-atomic) exchange energy, although this can be done [15]. However, this route is not followed by our topological force field. This energy contribution expresses covalent bonding energy. Within the Hartree–Fock ansatz, these three energy contributions (self, Coulomb and exchange) complete an IQA partitioning. However, post-Hartree–Fock methods introduce a fourth (non-vanishing) contribution that is associated with electron correlation. In the current work, we use a version of IQA [41] that is compatible with DFT with an eye on including electron correlation effects. We invoke the use of DFT level with the largest of systems studied here, capped glycine.

Three molecules were chosen here for this proof-of-concept investigation: methanol, N-methylacetamide (NMA) and glycine, which is capped by a peptide bond both at its C and N terminus. These systems were chosen to represent a progressive sequence of molecular complexity, while being relevant to biomolecular modelling: methanol features as the side residue in the amino acid serine, NMA is the smallest system modelling a peptide bond, while capped glycine represents an amino acid in an oligopeptide. This work is the first report of combining machine learning with a full topological energy partitioning.

2 Methodological background

2.1 The interacting quantum atoms (IQA) approach

Figure 1 shows examples of topological atoms appearing in N-methylacetamide, which were generated by in-house software [42, 43]. QTAIM essentially defines a topological atom as a three-dimensional subspace determined by the bundle of gradient paths (of a system’s electron density) that are attracted to the atom’s nucleus. This partitioning idea also features in other topological approaches [44], such as that in connection with the electron localization function (ELF). The topological energy partitioning method IQA is a third approach that uses the central idea of the so-called gradient vector field to extract chemical information from a system. Quantum chemical topology (QCT) [45] is a collective name to gather all topological approaches (10 so far, see Box 8.1 in Ref. [20]) that share the abovementioned central idea. The acronym QCT resurfaces in QCTFF, the force field under construction here [22], which uses topological atoms. The new name for QCTFF is FFLUX, for which a very recent and accessible perspective [46] can be consulted. We also note here that it has been shown before [47] that atom types that can be computed using the atomic properties of topological atoms in amino acids.

It is clear from Fig. 1 that QCT partitions a molecule into well-defined non-overlapping atoms [48]. Moreover, these topological atoms do not show any gaps between them; in other words, they partition space exhaustively. It is important to pause and briefly discuss the full consequence of this property. Exhaustive partitioning infers that each point in space belongs to a topological atom: all space is accounted for. In principle, this property must have repercussions [49] for docking studies, as will become clear when QCT starts being used at this larger molecular scale. Classical drug design (e.g. [50]) thinks of both ligand and the protein’s active site as bounded by artificial surfaces (e.g. solvent accessible surface) based on standard van der Waals radii and an image of overlapping hard spheres. This view necessarily introduces “gaps of open space”, which belong neither to the ligand nor to the protein. However, quantum mechanically we know that electron density resides in those gaps, no matter how small or thin they are. Electron density generates an electrostatic potential, and hence, also generates electrostatic interaction energy contributions. If a gap is not accounted for, then energy will be missing, which interferes with the energy balance during the docking process. However, if there is no gap, as in QCT, then all energy is properly accounted for.

In brief, IQA quantitatively describes the total energy of an atom, even if the system is not at a stationary point in the potential energy surface. In other words, unlike in orthodox QTAIM, there is no need to invoke the atomic virial theorem [20, 51], the application of which is restricted to stationary points (such as equilibrium geometries). This total energy is comprised of the energy associated with the atom itself (intra-atomic), and with energy resulting from the interaction between the atoms (interatomic). We will explain each type of energy in turn, beginning with the decomposition of the molecular energy, E molecIQA , into the atomic energies, one for each atom A, denoted E AIQA , followed by its breakdown into intra-atomic (or ‘self’) and interatomic interaction energies,

where E Aintra and V ABinter are the intra-atomic (of atom A) and inter-atomic (between atoms A and B) energies, respectively. The intra-atomic energy can be further partitioned,

where T A is the kinetic energy of the electrons associated with atom A, V AAee is the (repulsive) electron–electron potential energy, and V AAen is the (attractive) electron–nuclear potential energy. Together, these three energies comprise the intra-atomic energy possessed by a single atom.

The interatomic energy attributed to a pair of atoms can also be further partitioned,

where V ABen , V ABne and V ABee were described above but now with the ordering of the subscript components being allied to the ordering of the atoms in the superscript. For example, subscript ‘en’ and superscript ‘AB’ refers to the electrons of atom A and the nucleus of atom B. In addition to the electronic energy components, V ABnn is the repulsive nucleus–nucleus potential energy.

The electron–electron energy V ABee can be even further partitioned to give the components in Eq. (4),

where V ABCoul represents the Coulombic interaction between the electrons in atoms A and B. V AB X represents the inter-electron exchange potential energy and V ABcorr the inter-electron correlation potential energy. Combining the bracketed terms in Eq. (3) with the Coulomb energy only, leads to the total electrostatic energy between two atoms, or V ABelec , which is often written as V ABcl because of the “classical” nature of the electrostatic potential energy (devoid from any purely quantum mechanical exchange energy),

We have extensively studied this energy V ABcl in terms of its multipolar convergence behaviour. The quantity V ABcl incorporates the widely reported electrostatic multipole moments’ contribution of the long-ranged electrostatic energy, in addition to the short-range electrostatic contribution obtained from IQA. Equation (3) can be rewritten as Eq. (6). This is done by first substituting the bracketed expression by \(V_{\text{cl}}^{AB} - V_{\text{Coul}}^{AB} ,\) as obtained from Eq. (5), and then by substituting V ABee using Eq. (4), such that after cancellation of V ABCoul we obtain,

The new expression separates the interatomic energy into the interplay of ionic-like (V ABcl ), covalent (V AB X ) and correlation (V ABcorr ) energies. Note that it is often convenient to combine exchange and correlation in one term. These three energies along with the intra-atomic energy compose the four primary energies that FFLUX is built upon.

Until recently [41] the inclusion of any computationally affordable correlation energy has been lacking because IQA is incompatible with both perturbation theory and density functional theory (DFT) methods. Indeed, the methods that are compatible with the original IQA (i.e. full configuration interaction (FCI), complete active space (CAS), configuration interaction with single and double excitations (CISD) or coupled cluster with single and double excitations (CCSD) levels of theory) demand much greater computational expense. Neither perturbation theory nor standard DFT methods provide a well-defined second-order reduced density matrix, and hence IQA cannot be straightforwardly applied to them. Together with Dr TA Keith, the main author of the QCT computer program AIMAll [52], a DFT-based IQA method that incorporates at least some correlation was validated [41] by our group. The solution involved incorporating the explicit B3LYP atomic exchange functional in order to correctly calculate an atom’s total atomic exchange, thereby recovering the ab initio energy of the whole molecule. However, the fact that the functional cannot be used to calculate interatomic exchange (see Ref. [41] for details) led us to calculate the interatomic component using the Hartree–Fock-like expression but then with Kohn–Sham orbitals inserted. The remaining intra-atomic exchange–correlation is then calculated as the difference between the atomic exchange–correlation directly obtained from the B3LYP functional and the Hartree–Fock-like interatomic exchange.

In this investigation, we will again make use AIMALL. This program is able to return a useful quantity, denoted \(V_{\text{inter}}^{{AA^{{\prime }} }}\), which is defined as follows:

where A′ represents every atom other than atom A. Note that Eq. (7) defines the atom-centred interatomic energy contribution \(V_{\text{inter}}^{{AA^{{\prime }} }}\). In other words, it summarizes how an atom interacts in full with all other atoms. Note that the quantity \(V_{\text{inter}}^{{AA^{{\prime }} }}\) is obtained computationally cheaper than by summing over the individual pair-wise atomic contributions V ABinter . However, the computational advantage of \(V_{\text{inter}}^{{AA^{{\prime }} }}\) is offset by a reduction in the chemical insight that we obtain from being able to inspect each atom pair individually. This loss occurs over and above that caused by lumping together the electrostatic, exchange or correlation energy contributions (see Eq. 6). Instead, the interaction energy is defined in terms of a given atom A experiencing the entire surrounding molecular environment. Equally, \(V_{\text{inter}}^{{AA^{{\prime }} }}\) can be decomposed into \(V_{\text{cl}}^{{AA^{{\prime }} }}\) and \(V_{X}^{{AA^{{\prime }} }}\) components, much like the pair-wise AB energies. For our current purpose, we are satisfied with this formulation in spite of the reduced chemical insight it gives because our prime motivation is to predict atomic energies, and not to predict chemical insight.

Returning to the IQA formalism, we obtain Eq. (8), which expresses three ways (“approaches”) to break up the molecular energy into atomic contributions. Approach A was already present in Eq. (1) while substituting \(V_{\text{inter}}^{{AA^{{\prime }} }}\) of Eq. (7) into Eq. (1) leads to Approach B. Finally, Approach C follows from Eq. (6) and now applying the idea behind Eq. (7) to V ABcl and V AB XC , yielding

which is the key equation for the analysis in this paper. Note that \(V_{\text{inter}}^{{AA^{{\prime }} }}\) is always halved when used in Eq. (8), attributing half of the energy to a single atom A, in order to prevent double-counting of the interatomic energy in the molecule.

We aim for a greater understanding of both the quantitative nature of these five types of energy (E AIQA , E Aintra , \(V_{\text{inter}}^{{AA^{{\prime }} }}\), \(V_{XC}^{{AA^{{\prime }} }}\) and \(V_{\text{cl}}^{{AA^{{\prime }} }}\)) and their suitability in FFLUX after being kriged. As suggested in Eq. (8), the molecular energy can be recovered through three different approaches, each incorporating the use of different IQA energies. Approach A uses only the total atomic energy of an atom, denoted E AIQA . The atomic energy E AIQA is a sum of the intra- and inter-atomic energy and hence expresses their resulting “trade-off” by the single quantity that it is. This final energy, E AIQA , suffices by itself for FFLUX being able to predict the structure and dynamics of a system, because the latter only depend on the total atomic energy, not its breakdown. Approach B exposes the separation of the intra-atomic and interatomic energies for insight into how an atom itself experiences the environment it is in. Finally, Approach C takes the separation one step further, using the individual exchange and electrostatic energies in the interatomic description of an atom.

In order to clarify the strategy for the complete treatment of energy contributions in FFLUX a comment about \(V_{\text{corr}}^{AB}\) in Eq. (4) is necessary. This energy contribution covers dynamic correlation and hence dispersion. Our preferred route is to treat \(V_{\text{corr}}^{AB}\) in exactly the same way as \(V_{\text{cl}}^{AB}\) and \(V_{X}^{AB} .\) This approach, for which proof-of-concept has been reached in our lab, will guarantee a seemless integration of dispersion in the FFLUX ansatz. This strategy will thereby avoid the typical problems (e.g. the need for damping functions) that alternative dispersion methods introduce.

For a more exhaustive description of the IQA partitioning scheme including additional formulae, its capabilities and previous applications, we refer to the original literature [40, 53–58].

2.2 Kriging (Gaussian regression analysis)

As a machine learning method, kriging has its roots in geostatistics where it has been used to predict the location of precious material after being taught these locations [28]. Within FFLUX, kriging is used to map geometrical change within a molecule, obtained from nuclear coordinates, to a corresponding atomic property, which can be an IQA energy or atomic multipole moment. The atomic property is the machine learning output and the coordinates are the input. Although the full details are given elsewhere [33, 34] we explain here how these coordinates are constructed. It is advantageous that the coordinates are internal in nature (so only 3N − 6 for a nonlinear N-atom system). On each nucleus we install a so-called atomic local frame (ALF), which enables the definition of a polar angle and an azimuthal angle to describe the position of each nucleus in the system, except for the three nuclei required in defining the ALF. The distance between the ALF’s origin and the nucleus completes the triplet of (spherical polar) coordinates for a given nucleus in the system (other than that on which the ALF is installed). Machine learning language calls these coordinates features, as they are the input variables to kriging in this case. Finally we note that the first three features of the vector of 3N − 6 features (necessary to describe unambiguously a molecular geometry) consists of (1) the distance between the origin and the first nucleus, which fixes the ALF’s x-axis, (2) the distance between the origin and the second nucleus (fixing the ALF’s xy-plane), and (3) the angle suspended by the first nucleus, the origin and the second nucleus.

Before introducing the mathematics involved, it is useful to understand the kriging procedure qualitatively. Before a kriging model can predict a quantity, it must first be trained for using a number of molecular geometries with corresponding atomic properties. These data form the training set while the test set will consist of data that do not belong to the training set. The external character of the test set makes the assessment of the kriging predictions meaningful, because predictions of the training set data would be exact anyway (with the type of kriging used here). The molecular geometries used to build each set are obtained from sampling a molecular energy well that surrounds an energy minimum. The details of this sampling are given below, in Sect. 2.3.

Figure 2 summarizes the kriging approach, showing the key formula that maps the input (left panel, features) to the output (right panel, atomic energies). The feature vector F collects all N feat features f k , which have been detailed above. Note that N feat is the dimensionality of the feature space in which all N ex molecular geometries are expressed. In the formula of Fig. 2, N ex is the number of training examples, μ represents the mean of the observed value (also known as a constant “background” value) while w i is the kriging “weight” (obtained from the so-called correlation matrix [33]). Note that the name weight should not conjure up an image of arbitrary adjustments because each weight is computed exactly as explained in Ref. [33].

Summary of kriging method at the heart of FFLUX. Atomic energies (intra-atomic, inter-atomic, or total sum) of a given topological atom (right panel, output) are mapped onto the features {f k }, which describe the nuclear geometry of the environment surrounding this given atom. The kriging parameter θ k and p k are optimized (see main text)

Again, instead of giving the full mathematical details [33, 59] of the kriging procedure, we here explain the crux in words. Imagine a coin being tossed a number of times and the outcomes recorded. If the coin is fair, then the parameter, which governs the outcomes and which is called t h , is exactly 0.5 for tossing a head. This parameter is analogous to parameters θ k and p k in the formula of Fig. 2. Now suppose that we observe a statistical bias towards the outcome of “heads up”. We can then ask to what the extent the coin is biased. In other words, given the observations made t h differs from 0.5. In fact, one can find the value of the parameter t h such that the likelihood is maximal of again observing the outcomes that were observed. For example, one could find that t h = 2/3 is this value. Similarly, when this idea is applied to the kriging problem at hand, the so-called likelihood function is maximized against the observed data, which are the atomic energies. This procedure then returns the optimal values of the parameters θ k and p k . The technical details of this optimization are complex and extensively researched in our laboratory [59]. The next paragraph provides a very brief summary. With regard to using the key kriging formula in Fig. 2 (after training), previously unseen features F = {f k } are inserted, returning the atomic property f(F). It is clear that the argument of the exponential is a distance function, which is not necessarily Euclidean (i.e. \(p_{k} \ne 2\)).

In terms of the optimization procedure, first the concentrated log-likelihood is calculated analytically. The function is then maximized by a different machine learning method because this cannot be achieved analytically. We have successfully used particle swarm optimization (PSO), the mathematical details of which can be found in Ref. [60]. The optimization of the parameters θ k and p k via PSO is the most computationally expensive step in the overall kriging process. However, optimizing these parameters allows the user to obtain the highest possible concentrated log-likelihood function, ensuring that the best possible model is obtained. The time for the PSO optimization is proportional to the number of geometries in the training set and the number of atoms in the molecule. The result is an analytical formula (see Fig. 2) linking the geometrical features of a molecule and the atomic property of choice. For a more comprehensive description of the kriging protocol, the reader is invited to refer to our previous publications [33, 35–37, 59, 61].

2.3 Sampling of distorted geometries

The selection of training examples with which to construct a kriging model is of great importance. The geometries of the training set should be representative of the physically realistic regions of conformational space. This representation ensures that predictions corresponding to relevant molecular geometries are always made in areas of conformational space that have been trained for in the kriging model. Conventional methods will use some form of molecular mechanics, parameterized by a classical force field, to generate the training geometries. However, it has been shown that molecular mechanics does not necessarily sample the relevant areas of conformational space over the course of a typical trajectory [62–64].

Our approach attempts to locally approximate the ab initio molecular potential energy surface about a “seeding” conformation, which in this work is the global energetic minimum of the molecule. Whilst more than one seeding conformation can be used, granting a greater exploration of the potential energy surface, we found that for the molecules considered in this work, the Boltzmann weight of the global minimum exceeded 0.75 in all cases. By evaluating the first- and second-order spatial derivatives of the potential energy (Jacobian and Hessian, respectively) at the seeding geometry, one can construct an approximate local potential energy surface through a Taylor expansion. The dynamics on this local approximation to the potential energy surface are then governed by a set of harmonic equations of motion, referred to as the molecular normal modes [65].

Here we outline the major features of our methodology, whilst a more thorough description of is given elsewhere [66, 67]. For an N-atom molecular system, we can define a 3 N × 3 N transformation matrix, \({\mathbf{\mathcal{D}}}\), that converts a mass-weighted Cartesian state vector, \(\varvec{q},\) to an internal coordinate state vector, \(\varvec{s}\). Given \({\mathbf{\mathcal{D}}}\), we can transform the mass-weighted Cartesian Hessian, \(\varvec{H}_{q}\), to an internal coordinate basis through

The frequencies of the molecular normal modes are then given by diagonalising \(\varvec{H}_{s}\)

where \({\mathbf{\mathcal{E}}}\) correspond to the eigenvectors of \(\varvec{H}_{s}\), \(\varvec{I}\) is the identity matrix, and the eigenvalues of \(\varvec{H}_{s}\) are given by the 3 N diagonal elements \(\left( {\varvec{I}\lambda } \right)_{ii} = \lambda_{i} .\) The ith normal mode frequency, \(\nu_{i}\), is related to the ith eigenvalue through the expression

where c is a conversion factor incorporating the speed of light and a conversion from atomic units to reciprocal centimetres. Six of these normal mode frequencies are equal to zero in an internal coordinate basis, corresponding to the global translational and global rotational degrees of freedom of the molecular system.

The amplitude of vibration of the ith normal mode, \(A_{i} ,\) is given by the standard expression for a simple harmonic oscillator,

where \(k_{i}\) is the force constant of the ith normal mode (calculable from \(\nu_{i}\)), and \(E_{i}\) is the energy available to it. Each normal mode is allocated an amount of energy given by a standard equipartition, \(E_{i} = k_{\text{B}} T /2,\) where \(k_{\text{B}}\) is the Boltzmann constant and T is the temperature at which the simulation is performed. To allow for a little more flexibility, each of the \(E_{i}\) is subjected to a stochastic Gaussian fluctuation. Given the amplitude and frequency of the oscillator, each normal mode can evolved in discrete time.

The final matter requiring discussion is how time is discretized for our equations of motion. Given the frequency of the oscillator, we can compute its time period, \(T_{i} = 1 /\nu_{i} .\) We choose a discrete timestep, \(\Delta t_{i}\), such that for each time period, \(T_{i} =\Delta t_{i} n_{\text{cycle}} ,\) where \(n_{\text{cycle}}\) is a user-defined parameter. After every \(n_{\text{cycle}}\) timesteps, we also perturb the energy available to each normal mode by a new Gaussian-distributed number. To reduce the correlation between samples, a final user-defined parameter, \(n_{\text{out}}\) is also defined. We define \(n_{\text{out}}\) to correspond to the number of discrete timesteps that we allow to pass before outputting a sample to the training set. For the work conducted here, we set \(n_{\text{cycle}} = 10\) and \(n_{\text{out}} = 100.\)

3 Computational methods

3.1 The GAIA protocol

Three molecules (methanol, NMA and peptide-capped glycine) have been selected as case examples. Initially, the methanol and NMA molecules were generated in GaussView and optimized to a minimum energy geometry using the Gaussian 09 program, at HF/6-31+G(d,p) level of theory. Single-point energy calculations were performed on the resulting structures, outputting the respective molecular wavefunction and Hessian of the potential energy, calculated at the same level. For the capped glycine molecule, we selected the global minimum conformation from the nine known energetic minima described in a previous publication [68]. For glycine, the calculations were performed at B3LYP/apc-1 [69] level of theory, in-keeping with the level of theory used in previous research [68]. Working at B3LYP level complements a recent publication [41] that validates the extension of the IQA approach to the B3LYP density functional. Prior to such work, the typical IQA partitioning restrictions demanded a well-defined second-order density matrix, thus ruling out correlation-inclusive and approximate Hamiltonian theories, including the density functional theory (DFT) functionals [40, 70–72].

After obtaining the molecular wavefunctions, the process of sampling, performing the energy partitioning and building the kriging models was achieved using the in-house pipeline software, known as the GAIA protocol. The GAIA protocol is outlined in Fig. 3, which displays the sequence of steps, flowing left to right, starting with development of sample geometries and terminating with analysing the models.

GAIA automates the passing of information between two in-house Fortran 90 programs (TYCHE and FEREBUS—orange boxes in Fig. 3) and two commercially available programs (GAUSSIAN09 [73] and AIMAll [52]—green boxes). The output data from one step subsequently forms the input for the following step, until a seeding geometry (or set of seeding geometries) has been converted into a fully trained kriging model. Each program’s role within GAIA can be summarized in a few lines:

-

1.

TYCHE: distorts an input seed geometry, using the molecular normal modes, to create a broad range of sample geometries that collectively describe a local patch on the molecular potential energy surface (around the seed).

-

2.

Gaussian 09 [73]: performs single-point energy calculations and outputs the wavefunction of each molecule.

-

3.

AIMAll [52] (version 15.09.12): starts from the wavefunction of a molecule to obtain the IQA energy partition values: E AIQA , E Aintra , \(V_{\text{inter}}^{{AA^{{\prime }} }}\), \(V_{XC}^{{AA^{{\prime }} }}\) and \(V_{\text{cl}}^{{AA^{{\prime }} }}\).

-

4.

FEREBUS: uses a training set of molecular geometries to build kriging models of atomic energy (any of the five types above). FEREBUS then validates each model by predicting a test set and comparing the models’ predicted value to the known true value.

The GAIA protocol outlined here is a slight deviation to that reported [22] before for the parameterization procedure of FFLUX. The deviation is a result of the current exclusion of atomic multipole moments but incorporation of the IQA atomic energy components instead. Thus, Fig. 3 represents the protocol tailored to this investigation only.

A set of 4000 initial samples were generated for each molecule by TYCHE from the distortion of a single energy minimum, at a user-defined temperature of 450 K. After single-point energy calculations and wavefunctions were obtained from Gaussian 09 for every sample, IQA energy contributions were obtained from AIMAll (with default quadrature and integration grid options). We set to the value of 3 the AIMAll parameter ‘-encomp’ referring to the IQA energies to be computed. As soon as one atom attains a Lagrangian integration error, L(Ω), greater than the user-defined threshold of 0.001 Hartree, then this atom is removed from the training set, as well as all remaining atoms of the molecular geometry in which the offending atom occurred. This process is known as scrubbing in GAIA. Scrubbing ensures that samples with “noisy” atomic energies (i.e. large L(Ω) value) are excluded from the development of the model. From the samples remaining in the pool after scrubbing, 500 are set aside as the test set and the remaining number of samples (to the nearest hundred) are used for the training set. The resulting training sets were 3400, 3300 and 3000 for methanol, NMA and capped glycine, respectively. These training sets were then employed to generate kriging models for each molecule using the in-house program FEREBUS. The kriging parameter, p k , was optimized in the development of all the models. The settings in FEREBUS were as follows: noisy kriging was not requested, tolerance set to 10−9, convergence to 200 and the swarm-specifier to “dynamic”. Finally, so-called S-curves are produced to illustrate the energies errors on each molecular model. The development and meaning of an S-curve is described in the next section.

3.2 Energy error analysis

As announced earlier, each molecule will be modelled using three approaches, resulting in a tiered level of chemical information being incorporated into the molecular models:

-

Approach A: Modelling the molecule using only the total unpartitioned atomic energy, E AIQA .

-

Approach B: Modelling the molecule using the intra-atomic (E Aintra ) and interatomic (\(V_{\text{inter}}^{{AA^{{\prime }} }}\)) atomic energies.

-

Approach C: Modelling the molecule using the intra-atomic energy (E Aintra ) and the two key interatomic energies: exchange–correlation (\(V_{XC}^{{AA^{{\prime }} }}\)) and classical electrostatic (\(V_{\text{cl}}^{{AA^{{\prime }} }}\)).

Approach A provides the fastest (computationally) and simplest model of a molecule, at the atomic level. Approach B offers a chemically intuitive separation of the intra-atomic and overall interatomic energies and provides models for both. Approach C offers the highest level of chemical detail (in this investigation), separating the interatomic interaction energy into the covalent-like exchange and ionic-like electrostatic components, and again returns models for each.

Moving on to the analysis of the models, we should reintroduce how S-curves can be used to fully convey the quality of a kriging model. The S-curve is a cumulative distribution function (up to 100 %) of absolute energy errors for each test point within the test set. An S-curve plots the absolute (energy) error over the whole molecule (x-axis) versus the test set data point (i.e. molecular geometry) (y-axis) as represented as a percentage (100 %/500 data points = 0.2 % per data point). Thus, each test set molecular geometry point corresponds to one point on the S-curve. In order to plot the total molecular energy error (x-axis), it must be calculated the generalized expression appearing in Eq. (13),

where ‘Y’ is a general notation representing any of five possible IQA energy contributions (E AIQA , E Aintra , \(V_{\text{inter}}^{{AA^{{\prime }} }}\), \(V_{XC}^{{AA^{{\prime }} }}\) and \(V_{\text{cl}}^{{AA^{{\prime }} }}\)), and n is the number of atomic energies being used to describe an atom (or the total atomic model). In this work n can be one, two or three only (hence the upper limit n ≤ 3 in Eq. 13). The value of n depends on the modelling approach. In particular, for approach A we have that n = 1 (E AIQA ), for approach B n = 2 (E Aintra and \(V_{\text{inter}}^{{AA^{{\prime }} }}\)) and approach C leads to n = 3 (E Aintra , \(V_{XC}^{{AA^{{\prime }} }}\) and \(V_{\text{cl}}^{{AA^{{\prime }} }}\)). Before summing over the atoms, counted by index A, a sum over n atomic energies must take place in order to obtain the atomic model. When a model is tested, the predictions of the model can be averaged and compared to the average value of the true values. As a result, a model can be said to, on average, slightly over- or under-predict as determined by a positive or negative difference between the averaged predicted and true values. Therefore, summing over these energy models allows for a cancellation of such errors across the models in two possible ways: (1) across the atomic energies that together constitute a single atom, which is atomic cancellation, and (2) across the atomic models that together constitute the molecule, molecular cancellation. The value obtained for \(\Delta E_{\text{IQA}}^{\text{Molec}} ,\) as plotted on the S-curve x-axis, represents the final error for the molecular energy.

The mean absolute error (MAE) for the molecular model is calculated according to Eq. (14). The MAE can be used as a simple measure of the model quality and can be calculated for a single energy model or for a collection of models (such as the resulting molecular model, see Eq. 13),

where the sum runs over the N test = 500 molecular geometries of the test set (of methanol, NMA or glycine).

A final measure, the MAE percentage error, MAE%, can also be calculated by dividing the MAE by the size of the energy well range of the test set. This error is given in Eq. (15),

where ‘MAX’ refers to the highest molecular energy in the test set, and ‘MIN’ to the lowest. Note that the Electronic Supplementary Material reports atomic MAE% values, which are defined in Eq. S1 there, by simply replacing \(\Delta E_{\text{MAE}}^{\text{Molec}}\) by \(\Delta E_{\text{MAE}}^{\text{Atom}} .\) Converting the error into a percentage allows a transferable measure, independent of the energy range of the sampling well, thereby making the MAEs from different molecules more comparable. The MAEs can also be used to compare the quality of individual atomic energy models (also in Eq. 15), which individually may experience a broad variety of energy ranges.

Finally, this work shows, for the first time, S-curves of the complete molecular energy \((\Delta E_{\text{IQA}}^{\text{Molec}} )\) rather than only the multipolar electrostatic energy or later the kinetic energy. Because multipole moments are not used in this work all atoms can interact with each other electrostatically (without concerns about possible divergence). In other words, the complete electrostatic interaction is subject to kriging here, for the first time, covering all 1,2; 1,3 and 1,4 interactions.

4 Results

4.1 Methanol

The 4000 samples (i.e. molecular geometries) generated by TYCHE (see Fig. 4) were subject to scrubbing in GAIA, followed by 500 samples then set aside as the test set. After rounding down to the nearest hundred, 3400 samples remained and formed the training set. These 3400 training set samples sampled an energy well with an energy range of ~115 kJ mol−1.

Figure 5 plots the S-curves for methanol for each of the three modelling approaches (A = E AIQA , B = E Aintra and \(V_{\text{inter}}^{{AA^{{\prime }} }}\), C = E Aintra , \(V_{XC}^{{AA^{{\prime }} }}\) and \(V_{\text{cl}}^{{AA^{{\prime }} }}\)).

It is clear that over 95 % of the test set geometries have \(\Delta E_{\text{MAE}}^{\text{Molec}}\) energy errors below 1 kJ mol−1, across all modelling approaches. Such a low error is a pleasing result and an encouraging start to this analysis. An analysis of each molecular model (i.e. approach) is given in Table 1. Interestingly, the simplest model, approach A, performs the best out of the three approaches with a MAE% error of 0.3 %. Approaches B and C perform very similarly with an MAE% error of 0.4 % each, respectively.

Notably, the maximum absolute error observed for approach B is 2.3 kJ mol−1, larger than that for approach C, which returns 1.7 kJ mol−1. One would expect that the most chemically insightful approach, which is C, is the one that accumulates the highest error. This presumption follows from the fact that approach C (which has 18 models, or 3 energies for each of the 6 atoms) has 6 additional models compared to approach B (with 12 models, or 2 energies for each of the 6 atoms), and the extra models accrue additional kriging errors with each model. This matter will be discussed in Sect. 5.2.

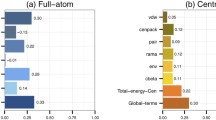

At the atomic level, Fig. 6 shows the MAEs for each atomic energy type (E AIQA , E Aintra , \(V_{\text{inter}}^{{AA^{{\prime }} }}\), \(V_{XC}^{{AA^{{\prime }} }}\) and \(V_{\text{cl}}^{{AA^{{\prime }} }}\)) for each atom in methanol. At first glance, carbon influences the accuracy of the model. In general, C1 has the highest MAEs and is therefore the least accurately modelled atom overall. Following C1, O2 has the next highest errors, followed by the methyl hydrogens (H3/4/5), and finally the most accurately modelled atom, the alcoholic hydrogen H6. In assessing how the IQA atomic energy types compare, the story is also clear. Without exception, the following order appears, starting with the least accurate: E Aintra & \(V_{\text{cl}}^{{AA^{{\prime }} }}\) < \(V_{\text{inter}}^{{AA^{{\prime }} }}\) < E AIQA < \(V_{XC}^{{AA^{{\prime }} }}\). The errors for all energies for any atom never exceed 0.8 kJ mol−1. The interplay between these energies will be discussed in the Sect. 5.

Only looking at the MAE ignores the range of energy that a particular energy has been subjected to in the sampling stage. The MAE percentage error (MAE%) makes the MAEs more comparable when assessing the difficulty for the kriging engine. The MAE percentage errors, along with the MAEs and energy ranges, are tabulated in Table S1 in the Electronic Supplementary Material. Approach A corresponds to S1 (a), approach B to S1 (b) and approach C to S1 (c). The analysis for the E Aintra model [used in both approaches B and C and given in S1 (b)] is not repeated in S1 (c), as the same model is used for each approach. Figure S1 plots MAE versus atomic energy range in order to observe any correlation between them. Some weak correlation can be seen, but nothing strong enough to validate such a relationship.

4.2 NMA

The NMA models were trained using 3300 training set samples (Fig. 7), sampling an energy well with an energy range of ~84 kJ mol−1.

Figure 8 plots the S-curve for each NMA molecular model.

In Fig. 8, almost 95 % of the all test set samples have the E MolecIQA energy correctly predicted within 2.5 kJ mol−1 (across all models). This time there is a clearer separation of the S-curve models, with again approach A (E AIQA ) being the best modelled of the 3 approaches. Interestingly and unexpectedly, approach C (E Aintra , \(V_{XC}^{{AA^{{\prime }} }}\) and \(V_{\text{cl}}^{{AA^{{\prime }} }}\)) performs better than approach B (E Aintra and \(V_{\text{inter}}^{{AA^{{\prime }} }}\)) for the NMA system. Given this result, we must now consider whether the dual-cancellation (atomic and molecular) allowed in Eq. (13), prevents a straightforward correlation between the number of atomic models composing a molecular model and the quality of the molecular model. Glycine will further aid our understanding of this, and the topic will be discussed further in the Sect. 5.

The MAE percentage errors for approaches A, B and C are 0.6, 1.3 and 0.9 %, respectively (see Table 2). Again, this is a pleasing result considering the molecular complexity has risen from (3 × 6) − 6 = 12 geometrical features for methanol, to (3 × 12) − 6 = 30 features for NMA. While the number of geometrical features increased by a factor 2.5, the errors for approaches A and C barely doubled. This favourable behaviour stimulates a further upscaling of features. With the S-curves being less entangled for NMA, the maximum absolute error falls in line with the shape and position of the respective S-curve.

Figure 9 is the counterpart of Fig. 6, this time for NMA. Here, we can further confirm that the atomic energy MAEs appear directly related to both the element and energy type being modelled. Initially, the atoms forming NMA can be immediately separated into their elements for the carbon, oxygen and hydrogen atoms, but the nitrogen atoms have very similar errors to the methyl carbons. In NMA, the MAEs also separate the atoms into atom types for carbon (carbonyl carbon and methyl-cap carbons are easily distinguishable). In fact, the oxygen is also modelled so well it is close to being indistinguishable from the hydrogens. Following this, the same trend in energy prediction accuracy is present in NMA as it was in methanol, that is, the sequence E Aintra & \(V_{\text{cl}}^{{AA^{{\prime }} }}\) < \(V_{\text{inter}}^{{AA^{{\prime }} }}\) < E AIQA < \(V_{XC}^{{AA^{{\prime }} }}\) (most accurate) remains valid.

The MAE percentage errors, MAEs and energy ranges are all reported for each atom in Table S2. Figure S3 once more confirms the weak correlation between MAE and energy range, this time for NMA. Figure S4 is analogous to Fig. 9, but plotting MAE% instead of MAE. In going from MAE to MAE%, the range of the energy is now incorporated. As we can see in Figure S4, the trend previously identified for the MAE (E Aintra & \(V_{\text{cl}}^{{AA^{{\prime }} }}\) < \(V_{\text{inter}}^{{AA^{{\prime }} }}\) < E AIQA < \(V_{XC}^{{AA^{{\prime }} }}\)) is no longer true, and no clear trend is seen.

4.3 Glycine (Gly)

The capped glycine models were trained using 3000 training set samples (Fig. 10), sampling an energy range of ~163 kJ mol−1.

Figure 11 shows the S-curve for glycine.

The inset glycine conformation in Fig. 11 is the global minimum identified by TYCHE as forming ~75 % of the Boltzmann distribution when all 9 energy minima were used as seeds. The predominance of the global minimum determined our choice to sample only around this conformation. Almost 95 % of the all test set samples have the E MolecIQA energy correctly predicted within ~2.9 kJ mol−1 (across all models). The S-curves show a resemblance to those in Fig. 5 but shifted to a higher prediction error. Here, there is no longer a clear separation of the S-curves for modelling approaches B and C. Approach A (E AIQA ) still has the lowest errors of the three approaches. Surprisingly approaches B (E Aintra and \(V_{\text{inter}}^{{AA^{{\prime }} }}\)) and C (E Aintra , \(V_{XC}^{{AA^{{\prime }} }}\) and \(V_{\text{cl}}^{{AA^{{\prime }} }}\)) is that they again result in the same MAEs of 1.1 kJ mol−1. These data are presented in Table 3.

The MAE percentage errors for approaches A, B and C are 0.6, 0.9 and 0.9 %, respectively (see Table 3). Again, this is a very pleasing result considering the molecular complexity has once more risen from (3 × 12) − 6 = 30 geometrical features for NMA, to (3 × 19) − 6 = 51 features for glycine. In spite of a near doubling of the number of geometrical features, there is little change in the MAEs going from NMA to glycine.

Figure 12 reconfirms the trends identified in Fig. 6 and Fig. 9. Indeed, MAEs are related to the element being modelled and IQA energy type, with atom typing appearing even more evident. Within the carbons, three types are present but only two classes are distinguishable: CC=O > Cα ≡ Cmethyl. Once more, the nitrogen atoms are fairly indistinguishable from the latter class of carbons. This time, the amino hydrogens (H5 and H11) also appear with slightly higher errors than seen for the aliphatic hydrogens. When looking at the trends in the energies themselves, the trends previously seen for methanol and NMA are once more observed in Gly, where E Aintra & \(V_{\text{cl}}^{{AA^{{\prime }} }}\) < \(V_{\text{inter}}^{{AA^{{\prime }} }}\) < E AIQA < \(V_{XC}^{{AA^{{\prime }} }}\) (most accurate). A direct comparison of the errors on the atoms present in both NMA and Gly will be given in the Sect. 5.

Table S3 and the corresponding plots of Figures S5 and S6 are analogous to Table S2 and Figures S3 and S4, this time for glycine. The same observation of a weak correlation between energy range and MAE for each IQA energy type is made in Figure S5. Figure S6 displays the MAE% values for capped glycine. It is clear that 13 out 19 atoms show E AIQA standing out as the least accurate energy to model. For previous systems this majority trend was not seen. However, this conclusion can be rationalized by remembering that E AIQA is the total atomic energy, and hence influenced by every type of energy change within the atom. Hence, it would be reasonable for it to be the most sensitive when the energy is considered relative to the energy range.

5 Discussion

The discussion is divided into four subsections covering key topics that have either been postulated at the beginning of the research or have arisen during the analysis of the results. The first two subsections each correspond to an objective.

5.1 Feasibility of modelling IQA energies

The first objective of this investigation is to assess the suitability and quality of modelling the five IQA energies that can be used in the formulation of FFLUX. In short, we have successfully kriged models and made good molecular energy predictions using three possible combinations of the IQA energies, making them all suitable for use in FFLUX. The resulting molecular models having excellent errors, of less than ±0.4, 1.3 and 0.7 kJ mol−1 for methanol, NMA and Gly, respectively. Qualitatively speaking, Figs. 5, 8 and 11 display behaviour analogous to previous S-curves in previous literature [31, 39], leading to an overall successful prediction of both multipolar electrostatic and non-electrostatic energetics.

In order to quantitatively compare the quality of the results, we need to draw on a previous paper [39] where a component of E Aintra , namely the kinetic energy T A, was kriged for every atom in a similar set of systems (methanol, NMA, glycine and triglycine). We decided to compare our results with the kinetic energy results, only for methanol and NMA, because their training set sizes match best. In that work [39], MAEs for the atomic kinetic energy were obtained of 0.8 kJ mol−1 (0.1 %) and 0.7 kJ mol−1 (0.3 %) for a methanol–carbon and the carbonyl–carbon in NMA, respectively. In our work, we have presented differing training set sizes (3400 and 3300 for methanol and NMA, respectively), but it is still useful to compare the results. For E Aintra we obtain MAEs of 0.7 kJ mol−1 (0.3 %) and 1.5 kJ mol−1 (0.4 %), respectively, and for \(V_{\text{inter}}^{{AA^{{\prime }} }}\) we have MAEs of 0.5 kJ mol−1 (0.3 %) and 1.0 kJ mol−1 (0.2 %) for the equivalent atoms, respectively. Hence, despite our training sets being larger, the MAE errors remain slightly higher than those observed for the kinetic energy. This confirms an initial suspicion that the summative nature of both E Aintra and \(V_{\text{inter}}^{{AA^{{\prime }} }}\) results in a more complicated kriging problem than an example of the subcomponents (T A) forming these energies. However, our overall similar performance is still very promising given that we have the complete energy of an atom A (and thus a molecule when summing over A) being modelled with comparable errors, albeit using larger training sets. Another advantage of this investigation is the ability to krige only one, two or three energies, yet still capturing the energetic behaviour of the whole molecule. Hence, this design saves substantial computational time by not needing to krige every individual IQA atomic energy (T A, V AAen , V AAee , \(V_{\text{en}}^{{AA^{{\prime }} }}\), \(V_{\text{ne}}^{{AA^{{\prime }} }}\), \(V_{\text{nn}}^{{AA^{{\prime }} }}\) and \(V_{\text{ee}}^{{AA^{{\prime }} }}\)) (should V ee still remain unpartitioned). It is also noted that across all atoms in all three systems investigated here, the MAE error never exceeded 1.5 kJ mol−1 (with the majority under 1 kJ mol−1), or a MAE percentage error over 1.4 %, for any energy.

Another measure of quality to loosely compare our results with are the previously kriged electrostatic multipole moments, which describe the classical electrostatic interaction energy for 1,4 and higher order interactions [33]. Here, the notation ‘1,4’ describes the interaction between atoms separated by 3 covalent bonds. A 1,5 interaction has 4 separating covalent bonds, and so on. For 1,4 and higher interactions (i.e. 1, n > 4) in a capped histidine system (29 atoms), the MAE for the intramolecular electrostatic energy calculated through kriged multipole moments, was 2.5 kJ mol−1. In our investigation \(V_{\text{cl}}^{{AA^{{\prime }} }}\) and \(V_{\text{inter}}^{{AA^{{\prime }} }}\) never exceed an MAE of 1.4 kJ mol−1. Is this MAE respectable compared to the multipolar electrostatic energy error? Yes, because \(V_{\text{cl}}^{{AA^{{\prime }} }}\) (and \(V_{\text{inter}}^{{AA^{{\prime }} }}\) too) accounts for all electrostatic interactions and the multipolar electrostatic analysis only for 1,4 and higher interactions (due to convergence limitations). Admittedly, a training set of only 600 training set geometries [33] was used for the latter analysis.

Finally, we point out that we are currently investigating a potential reduction in the number of training set samples needed to obtain suitably accurate atomic and molecular models. This research is focussed on the selective building of training sets, and a variety of approaches are currently being investigated to achieve this.

5.2 Cancellation of errors

A second objective of the current investigation is to observe to what extent any cancellation of errors takes place within the summative combination of kriging models described in Eq. 9. As previously described, this approach offers the potential to benefit from the fortuitous cancellation of errors, but also equally the unfortunate accumulation of errors as a result of the machine learning. In particular, an atomic energy component may be, on average, predicted to be more stable than the true energy (i.e. overestimated). Another atomic energy component may be, on average, predicted to be less stable than the true energy (i.e. underestimated). As a result, the summative combination of both an over- and underestimated result allows for some cancellation, resulting in an overall energy prediction being more accurate, when only these two energies are considered. Accumulating these cancellations further across many energy models and across many atoms increases this effect dramatically.

Despite the cancellation appearing to rely on chance, since there is no control for the over- or underestimation of the energy models, the prediction of the kriging models will always be consistent, i.e. the same geometrical features are used to map all atomic energies within a single atom, and each atom’s geometrical features are related to every other atom in the molecule. Hence, if one energy is overestimated and the another is underestimated with those identical or related features, then this same interplay between the kriging models will always be present. Naturally, the opposite can also occur where, for example, two overestimating models result in a summed higher total error instead of cancelling. However, from the results in Figs. 6, 9 and 12, it is evident that the summative energies (E AIQA and \(E_{\text{inter}}^{{AA^{{\prime }} }}\)) generally have lower errors than by simply summing the absolute errors of the components that make up these energies (E Aintra + \(E_{\text{inter}}^{{AA^{{\prime }} }}\) and \(V_{XC}^{{AA^{{\prime }} }}\)+ \(V_{\text{cl}}^{{AA^{{\prime }} }}\), respectively). Evidence of the molecular cancellation (described in Sect. 3.2) can also be seen, given that atomic MAE often are between 0.5 and 1.5 kJ mol−1, but the resulting molecular MAE is always ≤1 kJ mol−1 (for NMA).

5.3 S-curve analysis

The MAEs (and MAE percentage errors) of the total E MolecIQA molecular energy, as given in Tables 1, 2 and 3 for each respective molecule, are arguably the most important values obtained in this investigation. Therefore, these values are representative of an overall quality check for this investigation. Through averaging the MAE of the three approaches (A, B and C) for each molecule, we obtain a single MAE error for each molecule: 0.3 kJ mol−1 (methanol), 0.7 kJ mol−1 (NMA) and 0.9 kJ mol−1 (Gly). These <1 kJ mol−1 results become more impressive when the total energy for each system is compared: methanol has a molecular energy of ~302,000 kJ mol−1, NMA ~648,700 kJ mol−1 and Gly ~1,191,500 kJ mol−1.

Of the three approaches, approach A consistently is the most accurate for each molecule. This is a result of the minimal approach incorporating only a single model per atom in the molecule. However, the performance of approaches B and C were less distinguishable or predictable. Either approach was capable of being slightly more accurate than the other. However, the molecular error when calculated using either approach B or C was always within 0.3 kJ mol−1 of one another. In conclusion, we consider all three routes as suitable candidates for modelling the molecular energy, each incorporating a different level of chemical insight.

One further point to note in the analysis of our S-curves, are the “rogue points” present near the 100 % ceiling of the plots. Few rogue points occur for approach B in methanol, but more noticeably for approaches B and, in particular, approach A for Gly. These points are considered rogue due to the large gap that appears separating these points from the almost continuous S-like shape of the plot. Figure 13 (on capped glycine) sheds lights on how rogue points arise.

In Fig. 13, the glycine geometries that passed GAIA’s scrubbing procedure are plotted according to their molecular energies and separated according to which set they belong to, that is, the training set in blue or the test set in red. The information shown in Fig. 13 is essentially a one-dimensional distribution of molecular energies (y-axis) but spread out in two dimensions by introducing an x-axis that merely counts the 3000 training set samples and the 500 test set samples. The test set remains identical for all three modelling approaches.

A large vertical gap between the blue points in Fig. 13 indicates a lack of training points in that energy region. On the other hand, continuous lines indicate a high density of points, covering well the corresponding energy region. The point in the test set at −1,198,412.1 kJ mol−1 (encircled green in Fig. 13) is the geometry corresponding to the maximum predicted error (8.9 kJ mol−1) seen in the S-curve of approach A (utmost right point in the red curve in Fig. 11), denoted RTP1 (Rogue Test Point 1). The point in the test set at −1,198,406.7 kJ mol−1 (encircled orange in Fig. 13) is the geometry corresponding to the maximum error (7.9 kJ mol−1) seen in the S-curve of approach B (utmost right point in blue curve in Fig. 11), denoted RTP2. Both these molecular energies appear to be reasonably well sampled in the training set, with nearby (blue) points of −1,198,412.5 and −1,198,406.9 kJ mol−1. Unusually, there was no problem for approaches B and C in predicting the molecular energy for RTP1, with errors of 3.4 and 1.3 kJ mol−1, respectively. Similarly, for RTP2, errors of 1.1 and 3.1 kJ mol−1 were obtained for approaches A and C. This suggests that it is generally not a lack of training geometries that are causing the large rogue errors. Instead it could be any one (or combination) of the following three effects: (1) the potential energy surface around these points is undulant (in general for the molecule, or for a particular IQA energy) and the training geometries included are not enough to fully capture this landscape adequately, (2) the test points are outside of the domain of applicability, defined as the region of conformational space that can be interpolated by the training points of the kriging model. In other words, points lying outside of the domain of applicability correspond to points that lie outside of the training set, and so the kriging model is required to perform an extrapolation to make a prediction [74], (3) when summing across models, the balance of accumulating errors results in reduced cancellation of errors. All these reasons would also be supported by working with the higher-energy geometries where there are fewer samples in the training set, compared to those closer to the energy of the seed minimum.

Another measure that is useful when analysing the cause of a poor prediction within FEREBUS is the mean signed error (MSE) (or mean signed deviation, MSD). In statistics, the MSE is a measure of how close a predicted value matches the true quantity. Having a high MSE for a particular prediction indicates that the model is not well trained for in that region, and is a hallmark of working outside the domain of applicability. Taking glycine’s approach A as an example, the C12 atom stands out as an atom with a particularly poor E AIQA prediction for RTP1 (an error of 5.2 kJ mol−1). C12 also has an MSE approximately five times the average across all of the test geometries. Some of the other atoms in glycine also indicate a slightly increased difficulty in predicting E AIQA for this test geometry, but not to the same degree as for C12. Hence, it can be concluded that C12 is the source of the error for RTP1, due to the model operating outside of its domain of applicability. Evidently, as approaches B and C perform well in predicting this molecular energy, this MSE explanation either is irrelevant when using E Aintra , \(V_{\text{inter}}^{{AA^{{\prime }} }}\), \(V_{\text{cl}}^{{AA^{{\prime }} }}\), and/or \(V_{XC}^{{AA^{{\prime }} }}\), or the effect is dampened by the cancellation of errors. This type of analysis can be applied to any rogue point on an S-curve.

In contrast, the point at −1,198,380.1 kJ mol−1 (encircled black in Fig. 13) in the test set (TP1) (which is not a rogue point), appears to be the least well sampled in the training set, but the predicted errors for this sample are 0.3 kJ mol−1 (approach A), 2.4 kJ mol−1 (approach B) and 2.0 kJ mol−1 (approach C), thus, not near the maximal points on each of the S-curves. The unexpectedly good prediction of TP1 is credited to kriging’s impressive interpolation between two largely spaced training points.

5.4 Evidence for atom typing

Figures 6, 9 and 12 illustrated the ‘difficulty’ of modelling each atomic energy, according to the MAE. From this analysis, we learned that atoms belonging to a particular functional group had a MAE that distinguished some from others, independently of the IQA energies being used for this observation. Across our three systems, the carbonyl carbons were the most difficult atoms to model, with a maximum MAE value of ~1.2–1.3 kJ mol−1, in both NMA and Gly. The carbonyl carbon was followed by similar maximum MAEs values for the α-carbon, the amino nitrogens and methyl carbons of ~0.5 kJ mol−1, in methanol, NMA and Gly. The oxygens were easily distinguishable with a much lower maximum MAE of around 0.1 kJ mol−1, followed by the consistently very accurately modelled hydrogens with maximal MAEs of <0.1 kJ mol−1. It is interesting to note that the N1 atom in NMA, resulted in similar errors to that of the corresponding atoms N4 and N10 in Gly. The same is true of the NMA atoms carbonylic C2 (with C6 and C8 in Gly) and O3 (with O7 and O9 in Gly), within ±0.2 kJ mol−1. Hence, with atoms having comparable MAEs across multiple molecules, there is some basic evidence of atom typing. However, it is not a rule that can be used in distinguishing all present functional groups, as evidenced by the difficulty in distinguishing α-carbon, methyl carbon and the amino nitrogen groups, using only the MAEs. It would be interesting to see which further trends are observed when a broader range of functional groups are studied.

The discussion above is not the first time atom typing has been considered within the study of an energy partitioning. A recent article by Patrikeev et al. [75]. investigated the performance of several density functionals in their evaluation of both Kohn–Sham and correlation kinetic energies of topological atoms, and also commented on discriminating atom types through such atomic descriptors. Initial findings for the Kohn–Sham energies indicated a strong link between some of the tested functionals and the atomic number (or element) of an atom. A further finding in the assessment of correlation kinetic energies allowed aromatic and aliphatic hydrogens to be separated. It should also be reiterated that IQA within DFT is a little tricky since the Kohn–Sham approach does not lead to exact correlated reduced density matrices [76].

6 A note on dispersion and transferability

The only type of energy contribution that is lacking in the current kriging treatment of all IQA energy contributions is that associated with dispersion. Admittedly, the current treatment includes electron correlation, but because we used B3LYP this electron correlation does not cover dispersion effects. However, soon-to-be-published work of our group successfully kriges the IQA intra-atomic, E Aintra , and interatomic, \(V_{\text{inter}}^{{AA^{{\prime }} }}\), energies at the M06-2X/aug-cc-pVDZ level of theory. This functional describes (or mimics) some mid-range dispersion effects, but the ultimate goal of FFLUX is to invoke a post-Hartree–Fock method (non-DFT) to cover dispersion properly.

A second note concerns the transferability of the obtained model with respect to an exchange–correlation functional other than B3LYP. The only other exchange–correlation functionals implemented in the program AIMALL are LSDA and M06-2X. The current investigation has not been mirrored using any other functional from which we could directly compare transferability results. However, there is potential for transferability to be considered in the aforementioned work to be published, where M06-2X was used. Like in the current work, IQA atomic energy predictions made for multiple different systems could be compared. Some understanding of the transferability of another exchange–correlation functional can come from earlier work [77] from our laboratory in which the electrostatic energy, obtained through atomic multipole moments, was kriged at three levels of theory, namely HF, B3LYP and M06-2X. From that work, one would expect that M06-2X will perform similarly to B3LYP.

7 Conclusion

The development of the novel force field FFLUX now moves beyond its machine learning treatment of multipolar electrostatics. We demonstrate that short-range non-multipolar electrostatics can now also be kriged successfully. Moreover, (non-multipolar) exchange energies as well as intra-atomic energies (beyond just the kinetic energy) are now also kriged with promising energy errors. As a result, chemical bonding and stereo-electronic effects are now, by way of principle, incorporated in FFLUX. This achievement is realized within the context of the methodology of interacting quantum atoms (IQA).

Three approaches (A, B, and C), incorporating five IQA atomic energies (E AIQA , E Aintra , \(V_{\text{inter}}^{{AA^{{\prime }} }}\), \(V_{XC}^{{AA^{{\prime }} }}\) and \(V_{\text{cl}}^{{AA^{{\prime }} }}\)), were successfully used to develop molecular models offering control in balancing accuracy and chemical insight. The most accurate and least expensive molecular model (approach A) was built using the total atomic energy E AIQA , with MAEs of ±0.3, 0.4 and 0.6 kJ mol−1 for methanol, NMA and capped glycine, respectively. Interestingly, the more insightful formalisms involving the intra- and interatomic components (approach B), and the interatomic exchange and electrostatic contributions (approach C), resulted in similar MAEs. These errors are on a par with previous literature and are a result of the combination of models benefitting from cancellation of errors. The latter occur both within an atom’s total energy modelling (atomic cancellation), and also when summing across total atomic models (molecular cancellation) to obtain the molecular model.

In summary, the novel strategy and results were a successful proof-of-concept approach, developed to be integrated into FFLUX. Future work will build upon the method presented here employing the models in the application of geometry optimization, initially without, but later with, the incorporation of multipolar electrostatics. Future research is also focussing on creating intelligent training sets, designed to reduce the number of samples used in the building of kriging models.

References

Piana S, Lindorff-Larsen K, Shaw DE (2011) How robust are protein folding simulations with respect to force field parameterization? Biophys J 100:L47–L49

Rauscher S, Gapsys V, Gajda MJ, Zweckstetter M, de Groot BL, Grubmüller H (2015) Structural ensembles of intrinsically disordered proteins depend strongly on force field: a comparison to experiment. J Chem Theor Comput 11:5513–5524

Ponder JW, Wu C, Pande VS, Chodera JD, Schnieders MJ, Haque I, Mobley DL, Lambrecht DS, DiStasio RAJ, Head-Gordon M, Clark GNI, Johnson ME, Head-Gordon T (2010) Current status of the AMOEBA polarizable force field. J Phys Chem B 114:2549–2564

Vinter JG (1994) Extended electron distributions applied to the molecular mechanics of some intermolecular interactions. J Comput Aided Mol Des 8:653–668

Gresh N, Cisneros GA, Darden TA, Piquemal J-P (2007) Anisotropic, polarizable molecular mechanics studies of inter- and intramoecular interactions and ligand-macromolecule complexes. A bottom-up strategy. J Chem Theory Comput 3:1960–1986

Verstraelen T, Vandenbrande S, Ayers PW (2014) Direct computation of parameters for accurate polarizable force fields. J Chem Phys 141:194114

Cardamone S, Hughes TJ, Popelier PLA (2014) Multipolar electrostatics. Phys Chem Chem Phys 16:10367–10387

Kramer C, Spinn A, Liedl KR (2014) Charge anisotropy: where atomic multipoles matter most. J Chem Theory Comput 10:4488–4496

Kosov DS, Popelier PLA (2000) Convergence of the multipole expansion for electrostatic potentials of finite topological atoms. J Chem Phys 113:3969–3974

Popelier PLA, Joubert L, Kosov DS (2001) Convergence of the electrostatic interaction based on topological atoms. J Phys Chem A 105:8254–8261

Popelier PLA, Kosov DS (2001) Atom-atom partitioning of intramolecular and intermolecular Coulomb energy. J Chem Phys 114:6539–6547

Popelier PLA, Rafat M (2003) The electrostatic potential generated by topological atoms: a continuous multipole method leading to larger convergence regions. Chem Phys Lett 376:148–153

Rafat M, Popelier PLA (2005) The electrostatic potential generated by topological atoms. Part II: inverse multipole moments. J Chem Phys 123(204103–204101):204107

Rafat M, Popelier PLA (2006) A convergent multipole expansion for 1,3 and 1,4 Coulomb interactions. J Chem Phys 124(144102):1–7

Rafat M, Popelier PLA (2007) Topological atom-atom partitioning of molecular exchange energy and its multipolar convergence. In: Matta CF, Boyd RJ (eds) Quantum theory of atoms in molecules, vol 5. Wiley-VCH, Weinheim, pp 121–140

Rafat M, Popelier PLA (2007) Long range behaviour of high-rank topological multipole moments. J Comput Chem 28:832–838

Joubert L, Popelier PLA (2002) The prediction of energies and geometries of hydrogen bonded DNA base-pairs via a topological electrostatic potential. Phys Chem Chem Phys 4:4353–4359

Bader RFW (1990) Atoms in molecules. A quantum theory. Oxford University Press, Oxford

Popelier PLA (2000) Atoms in molecules. An introduction. Pearson Education, London

Popelier PLA (2014) The quantum theory of atoms in molecules, Chapter 8. In: Frenking G, Shaik S (eds) The nature of the chemical bond revisited. Wiley-VCH, Weinheim, pp 271–308

Matta CF, Boyd RJ (2007) The quantum theory of atoms in molecules. From solid state to DNA and drug design. Wiley-VCH, Weinheim

Popelier PLA (2015) QCTFF: on the construction of a novel protein force field. Int J Quantum Chem 115:1005–1011

Bader RFW, Popelier PLA (1993) Atomic theorems. Int J Quantum Chem 45:189–207

Yuan Y, Mills MJL, Popelier PLA (2014) Multipolar electrostatics for proteins: atom–atom electrostatic energies in crambin. J Comput Chem 35:343–359

Handley CM, Popelier PLA (2010) Potential energy surfaces fitted by artificial neural networks. J Phys Chem A 114:3371–3383

Handley CM, Popelier PLA (2009) A dynamically polarizable water potential based on multipole moments trained by machine learning. J Chem Theory Comput 5:1474–1489

Handley CM, Hawe GI, Kell DB, Popelier PLA (2009) Optimal construction of a fast and accurate polarisable water potential based on multipole moments trained by machine learning. Phys Chem Chem Phys 11:6365–6376

Cressie N (1993) Statistics for spatial data. Wiley, New York

Rasmussen CE, Williams CKI (2006) Gaussian processes for machine learning. The MIT Press, Cambridge

Rupp M, Ramakrishnan R, von Lilienfeld OA (2015) Machine learning for quantum mechanical properties of atoms in molecules. J Phys Chem Lett 6:3309–3313

Fletcher TL, Davie SJ, Popelier PLA (2014) Prediction of intramolecular polarization of aromatic amino acids using kriging machine learning. J Chem Theory Comput 10:3708–3719

Fletcher TL (2014) Polarizable multipolar electrostatics driven by kriging machine learning or a peptide force field: assessment, improvement and up-scaling. Ph.D. Thesis, School of Chemistry, University of Manchester

Kandathil SM, Fletcher TL, Yuan Y, Knowles J, Popelier PLA (2013) Accuracy and tractability of a kriging model of intramolecular polarizable multipolar electrostatics and its application to histidine. J Comput Chem 34:1850–1861

Yuan Y, Mills MJL, Popelier PLA (2014) Multipolar electrostatics based on the kriging machine learning method: an application to serine. J Mol Model 20:2172–2186

Mills MJL, Popelier PLA (2012) Polarisable multipolar electrostatics from the machine learning method kriging: an application to alanine. Theor Chem Acc 131:1137–1153

Yuan Y (2012) A polarisable multipolar force field for pepides based on kriging: towards application in protein crystallography and enzymatic reactions. Ph.D. thesis, School of Chemistry, University of Manchester

Mills MJL, Popelier PLA (2011) Intramolecular polarisable multipolar electrostatics from the machine learning method kriging. Comput Theor Chem 975:42–51