Abstract

In this paper, it is shown that any well-posed 2nd order PDE can be reformulated as a well-posed first order least squares system. This system will be solved by an adaptive wavelet solver in optimal computational complexity. The applications that are considered are second order elliptic PDEs with general inhomogeneous boundary conditions, and the stationary Navier–Stokes equations.

1 Introduction

In this paper, a wavelet method is constructed for the optimal adaptive solution of stationary PDEs. We develop a general procedure to write any well-posed 2nd order PDE as a well-posed first order least squares system. The (natural) least squares formulations contain dual norms, which, however, pose no difficulties for a wavelet solver. The advantages of the first order least squares system formulation are twofold.

Firstly, regardless of the original problem, the least squares problem is symmetric and positive definite, which opens the possibility to develop an optimal adaptive solver. The obvious use of the least-squares functional as an a posteriori error estimator, however, is not known to yield a convergent method (see, however, [16] for an alternative for Poisson’s problem). As we will see, the use of the (approximate) residual in wavelet coordinates as an a posteriori error estimator does give rise to an optimal adaptive solver.

Secondly, as we will discuss in more detail in the following subsections, the optimal application of a wavelet solver to a first order system reformulation allows for a simpler, and quantitatively more efficient approximate residual evaluation than with the standard formulation of second order. Moreover, it applies equally well to semi-linear equations, as e.g. the stationary Navier–Stokes equations, and it applies to wavelets that have only one vanishing moment.

The approach to apply the wavelet solver to a well-posed first order least squares system reformulation also applies to time-dependent PDEs in simultaneous space-time variational formulations, such as parabolic problems or the instationary Navier–Stokes equations. With those problems, the wavelet bases consist of tensor products of temporal and spatial wavelets. Consequently, they require a different procedure for the approximate evaluation of the residual in wavelet coordinates, which will be the topic of a forthcoming work.

1.1 Adaptive wavelet schemes, and the approximate residual evaluation

Adaptive wavelet schemes can solve well-posed linear and nonlinear operator equations at the best possible rate allowed by the basis in linear complexity [7, 8, 9, 29, 31, 32, 34]. Schemes with those properties will be called optimal. The schemes can be applied to PDEs, which we study in this work, as well as to integral equations [18].

There are two kinds of adaptive wavelet schemes. One approach is to apply some convergent iterative method to the infinite system in wavelet coordinates, with decreasing tolerances for the inexact evaluations of residuals [8, 9]. These schemes rely on the application of coarsening to achieve optimal rates.

The other approach is to solve a sequence of Galerkin approximations from spans of nested sets of wavelets. The (approximate) residual in wavelet coordinates of the current approximation is used as an a posteriori error estimator to make an optimal selection of the wavelets to be added to form the next set [7]. With this scheme, which is studied in the current work, the application of coarsening can be avoided [23, 34], and it turns out to be quantitatively more efficient. This approach is restricted to partial differential operators (PDOs) whose Fréchet derivatives are symmetric and positive definite (compact perturbations can be added though, see [22]).

A key computational ingredient of both schemes is the approximate evaluation of the residual in wavelet coordinates. Let us discuss this for a linear operator equation \(A u =f\), with, for some separable Hilbert spaces \(\mathscr {H}\) and \(\mathscr {K}\), for convenience over \(\mathbb {R}\), \(f \in \mathscr {K}'\) and \(A \in \mathcal {L}\mathrm {is}(\mathscr {H},\mathscr {K}')\) (i.e., \(A \in \mathcal {L}(\mathscr {H},\mathscr {K}')\) and \(A^{-1} \in \mathcal {L}(\mathscr {K}',\mathscr {H})\)).

Equipping \(\mathscr {H}\) and \(\mathscr {K}\) with Riesz bases \(\Psi ^\mathscr {H}\), \(\Psi ^\mathscr {K}\), formally viewed as column vectors, \(A u =f\) can be equivalently written as a bi-infinite system of coupled scalar equations \(\mathbf{A} \mathbf{u}=\mathbf{f}\), where \(\mathbf{f}=f(\Psi ^\mathscr {K})\) is the infinite ‘load vector’, \(\mathbf{A}=(A \Psi ^\mathscr {H})(\Psi ^\mathscr {K})\) is the infinite ‘stiffness’ or system matrix, and \(u=\mathbf{u}^\top \Psi ^\mathscr {H}\).

Here we made use of the following notation:

Notation 1.1

For countable collections of functions \(\Sigma \) and \(\Upsilon \), we write \(g(\Sigma )=[g(\sigma )]_{\sigma \in \Sigma }\), \(M(\Sigma )(\Upsilon )=[M(\sigma )(\upsilon )]_{\upsilon \in \Upsilon , \sigma \in \Sigma }\), and \(\langle \Upsilon , \Sigma \rangle = [\langle \upsilon , \sigma \rangle ]_{\upsilon \in \Upsilon , \sigma \in \Sigma }\), assuming g, M, and \(\langle \,,\,\rangle \) are such that the expressions at the right-hand sides are well-defined.

The space of square summable vectors of reals indexed over a countable index set \(\vee \) will be denoted as \(\ell _2(\vee )\) or simply as \(\ell _2\). The norm on this space will be simply denoted as \(\Vert \cdot \Vert \).

As a consequence of \(A \in \mathcal {L}\mathrm {is}(\mathscr {H},\mathscr {K}')\), we have that \(\mathbf{A} \in \mathcal {L}\mathrm {is}(\ell _2,\ell _2)\). For the moment, let us additionally assume that \(\mathbf{A}\) is symmetric and positive definite, as when \(\mathscr {K}=\mathscr {H}\), \((Au)(v)= (Av)(u)\) and \((Au)(u) \gtrsim \Vert u\Vert _\mathscr {H}^2\) (\(u,v \in \mathscr {H}\)). If this is not the case, then the following can be applied to the normal equations \(\mathbf{A}^\top \mathbf{A} \mathbf{u}=\mathbf{A}^\top \mathbf{f}\).

For the finitely supported approximations \({\tilde{\mathbf{u}}}\) to \(\mathbf{u}\) that are generated inside the adaptive wavelet scheme, the residual \(\mathbf{r}=\mathbf{f} -\mathbf{A} {\tilde{\mathbf{u}}}\) has to be approximated within a sufficiently small relative tolerance. The resulting scheme has been shown to converge with the best possible rate: Whenever \(\mathbf{u}\) can be approximated at rate s, i.e. \(\mathbf{u} \in \mathcal {A}^s\), meaning that for any \(N \in \mathbb {N}\) there exists a vector of length N that approximates \(\mathbf{u}\) within tolerance \({\mathcal {O}}(N^{-s})\), the approximations produced by the scheme converge with this rate s. Moreover, the scheme has linear computational complexity under the cost condition that

The lower bound on \(\varepsilon \) reflects the fact that inside the adaptive scheme, it is never needed to approximate a residual more accurately than within a sufficiently small, but fixed relative tolerance. The validity of (1.1) will require properties of \(\Psi ^\mathscr {H}\) and \(\Psi ^\mathscr {K}\) beyond their being Riesz bases. For that reason we consider wavelet bases.
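The notion of an approximation class \(\mathcal {A}^s\) can be made concrete with a small experiment. The following is a minimal sketch, assuming unconstrained best N-term approximation in \(\ell _2\) (keeping the N largest entries in modulus); the function names and the model coefficient vector are hypothetical and chosen only for illustration.

```python
import math

def best_n_term_error(u, n):
    """l2 error after keeping only the n largest entries (in modulus) of u."""
    tail = sorted((abs(x) for x in u), reverse=True)[n:]
    return math.sqrt(sum(x * x for x in tail))

def estimated_rate(u, n1, n2):
    """Observed algebraic rate s between N = n1 and N = n2, from error ~ N^{-s}."""
    e1, e2 = best_n_term_error(u, n1), best_n_term_error(u, n2)
    return math.log(e1 / e2) / math.log(n2 / n1)

# Hypothetical model vector: entries decaying like k^{-(s + 1/2)} with s = 1,
# truncated to finitely many entries so that it can be stored.
s = 1.0
u = [k ** -(s + 0.5) for k in range(1, 200001)]

# The observed rate should be close to s.
print(estimated_rate(u, 100, 1000))
```

For this model vector the tail sums behave like \(N^{-2s}\), so the observed rate is close to the prescribed value of s.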

The standard way to approximate the residual within tolerance \(\varepsilon \) is to approximate both \(\mathbf{f}\) and \(\mathbf{A} {\tilde{\mathbf{u}}}\) separately within tolerance \(\varepsilon /2\). Under reasonable assumptions, \(\mathbf{f}\) can be approximated within tolerance \(\varepsilon /2\) by a vector of length \({\mathcal {O}}(\varepsilon ^{-1/s})\).

For the approximation of \(\mathbf{A} {\tilde{\mathbf{u}}}\), it is used that, thanks to the properties of the wavelets as having vanishing moments, each column of \(\mathbf{A}\), although generally infinitely supported, can be well approximated by finitely supported vectors. In the approximate matrix-vector multiplication routine introduced in [7], known as the APPLY-routine, the accuracy with which a column is approximated is judiciously chosen depending on the size of the corresponding entry in the input vector \({\tilde{\mathbf{u}}}\). It has been shown to realise a tolerance \(\varepsilon /2\) at cost \({\mathcal {O}}(\varepsilon ^{-1/s}|{\tilde{\mathbf{u}}}|_{\mathcal {A}^{s}}^{1/s}+\#\,\mathrm{supp}\,{\tilde{\mathbf{u}}})\), for any s in some range \((0,s^*]\). For wavelets that have sufficiently many vanishing moments, this range was shown to include the range of \(s \in (0,s_{\max }]\) for which, in view of the order of the wavelets, \(\mathbf{u} \in \mathcal {A}^s\) can possibly be expected (cf. [28]). Using that for the approximations \({\tilde{\mathbf{u}}}\) to \(\mathbf{u}\) that are generated inside the adaptive wavelet scheme, it holds that \(|{\tilde{\mathbf{u}}}|_{\mathcal {A}^{s}} \lesssim |\mathbf{u}|_{\mathcal {A}^{s}}\), in those cases the cost condition is satisfied, and so the adaptive wavelet scheme is optimal.

The APPLY-routine, however, is quite difficult to implement. Note, in particular, that its outcome depends nonlinearly on the input vector \({\tilde{\mathbf{u}}}\). Furthermore, in experiments, the routine turns out to be quantitatively expensive. Finally, although it has been generalized to certain classes of semi-linear PDEs, in those cases it has not been shown that \(s^* \ge s_{\max }\), meaning that for nonlinear problems the issue of optimality is actually open.

1.2 An alternative for the APPLY routine

A main goal of this paper is to develop a quantitatively efficient alternative for the APPLY-routine, that, moreover, gives rise to provably optimal adaptive wavelet schemes for classes of semi-linear PDEs, and applies to wavelets with only one vanishing moment. As an introduction, we consider the model problem of Poisson’s equation in one space dimension \( \left\{ \begin{array}{ll} -\,u''=f &{}\text { on } (0,1),\\ u=0 &{}\text { at }\{0,1\}, \end{array} \right. \) that, in standard variational form, reads as finding \(u \in \mathscr {H}:=H^1_0(0,1)\) such that

\[ \langle u',v'\rangle _{L_2(0,1)}=\langle f,v\rangle _{L_2(0,1)} \quad (v \in H^1_0(0,1)), \]

where, by identifying \(L_2(0,1)'\) with \(L_2(0,1)\) and using that \(H_0^1(0,1) \hookrightarrow L_2(0,1)\) is dense, \(\langle \,,\, \rangle _{L_2(0,1)}\) is also used to denote the duality pairing on \(H^{-1}(0,1)\times H^1_0(0,1)\). We consider piecewise polynomial, locally supported wavelet Riesz bases \(\Psi ^\mathscr {H}\) and \(\Psi ^\mathscr {K}\) for \(H^1_0(0,1)\). Let us exclusively consider admissible approximations \({\tilde{\mathbf{u}}}\) to \(\mathbf{u}\) in the sense that their finite supports form trees, meaning that if \(\lambda \in \mathrm{supp}\,{\tilde{\mathbf{u}}}\), then there exists a \(\mu \in \mathrm{supp}\,{\tilde{\mathbf{u}}}\), whose level is one less than the level of \(\lambda \), and \({{\mathrm{meas}}}(\mathrm{supp}\,\psi ^\mathscr {H}_\lambda \cap \mathrm{supp}\,\psi ^\mathscr {H}_\mu )>0\). It is known that the approximation classes \(\mathcal {A}^s\) become only ‘slightly’ smaller by this restriction to tree approximation compared to unconstrained approximation (cf. [11]). What is more, the restriction to tree approximation seems mandatory anyway to construct an optimal algorithm for nonlinear operators. The benefit of tree approximation is that \(\tilde{u}:={\tilde{\mathbf{u}}}^\top \Psi ^\mathscr {H}\) has an alternative, ‘single-scale’ representation as a piecewise polynomial w.r.t. a partition \({\mathcal {T}}_1\) of (0, 1) with \(\# {\mathcal {T}}_1 \lesssim \#{\mathrm{supp}\,{\tilde{\mathbf{u}}}}\).
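The tree constraint on supports can be sketched in a few lines. The code below is an illustration under a simplifying assumption that is not made in the paper: indices are pairs (level, k) whose supports are the dyadic intervals \([k2^{-\ell },(k+1)2^{-\ell }]\), so that the only index one level lower with overlapping support is (level - 1, k // 2). For wavelets with wider supports several such indices may exist. All names are hypothetical.

```python
def parent(index):
    """Index one level lower whose dyadic support contains that of `index`."""
    level, k = index
    return (level - 1, k // 2)

def is_tree(support, min_level=0):
    """True if every index above the coarsest level has its parent in the set."""
    s = set(support)
    return all(level == min_level or parent((level, k)) in s for level, k in s)

def complete_to_tree(support, min_level=0):
    """Smallest superset of `support` that is a tree, obtained by adding ancestors."""
    tree = set()
    for index in support:
        # Walk towards the coarsest level until an index already present is met.
        while index not in tree:
            tree.add(index)
            if index[0] == min_level:
                break
            index = parent(index)
    return tree

print(is_tree({(0, 0), (1, 1), (2, 3)}))   # parents are present
print(is_tree({(0, 0), (2, 3)}))           # (1, 1) is missing
print(sorted(complete_to_tree({(2, 3)})))
```

Completing an arbitrary finite index set in this way adds at most one ancestor per level for each index.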

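For the moment, let us make the additional assumption that \(\Psi ^\mathscr {H}\) is selected inside \(H^2(0,1)\). Then, for an admissible \({\tilde{\mathbf{u}}}\), with its support denoted as \(\Lambda ^\mathscr {H}\), integration-by-parts shows that

\[ \mathbf{r}=\langle \Psi ^\mathscr {K},f\rangle _{L_2(0,1)}-\langle (\Psi ^\mathscr {K})',\tilde{u}'\rangle _{L_2(0,1)}=\langle \Psi ^\mathscr {K},f+\tilde{u}''\rangle _{L_2(0,1)}, \]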

where \(\tilde{u}''\) is piecewise polynomial w.r.t. \({\mathcal {T}}_1\). If \(\mathbf{u} \in \mathcal {A}^s\), then for any \(\varepsilon >0\) there exists a piecewise polynomial \(f_\varepsilon \) w.r.t. a partition \(\mathcal {T}_2\) of (0,1) into \({\mathcal {O}}(\varepsilon ^{-1/s})\) subintervals such that \(\Vert f-f_\varepsilon \Vert _{H^{-1}(0,1)} \le \varepsilon \).\(^{1}\) The term \(f-f_\varepsilon \) is commonly referred to as data oscillation.

The function \(f_\varepsilon +\tilde{u}''\) is piecewise polynomial w.r.t. the smallest common refinement \(\mathcal {T}\) of \(\mathcal {T}_1\) and \(\mathcal {T}_2\). Thanks to this piecewise smoothness of \(f_\varepsilon +\tilde{u}''\) w.r.t. \(\mathcal {T}\), and the property of \(\psi _\lambda ^\mathscr {K}\) having one vanishing moment, \(|\langle \psi _\lambda ^\mathscr {K}, f_\varepsilon +\tilde{u}''\rangle _{L_2(0,1)}|\) is decreasing as a function of the minimal difference of the level of \(\psi _\lambda ^\mathscr {K}\) and that of any subinterval in \(\mathcal {T}\) that has non-empty intersection with \(\mathrm{supp}\,\psi _\lambda ^\mathscr {K}\). Here with the level of \(\psi _\lambda ^\mathscr {K}\) or that of an interval \(\omega \), we mean an \(\ell \in \mathbb {N}_0\) such that \(2^{-\ell } \eqsim {{\mathrm{diam}}}(\mathrm{supp}\,\psi _\lambda ^\mathscr {K})\) or \(2^{-\ell } \eqsim {{\mathrm{diam}}}\,\omega \), respectively. In particular, given a constant \(\varsigma >0\), there exists a constant k, such that by dropping all \(\lambda \) for which the aforementioned minimal level difference exceeds k, the remaining indices form a tree \(\Lambda ^\mathscr {K}\) with \(\# \Lambda ^\mathscr {K}\lesssim \# \mathcal {T}\lesssim \varepsilon ^{-1/s}+\# \Lambda ^\mathscr {H}\) (dependent on k), and (see Proposition A.1)

and so, using \(\Vert \mathbf{r}\Vert \eqsim \Vert u-\tilde{u}\Vert _{H^1(0,1)}\) and \(\Vert \mathbf{f}-\mathbf{f}_\varepsilon \Vert \eqsim \Vert f-f_\varepsilon \Vert _{H^{-1}(0,1)}\),

Note that for \(\varsigma \) being sufficiently small, and so k sufficiently large, by choosing \(\varepsilon \) suitably, the approximate residual will meet any accuracy that is required in the cost Condition (1.1).
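The role of the vanishing moment in the decay of the residual entries can be illustrated by a toy computation. The sketch below uses the \(L_2\)-normalised Haar wavelet as a stand-in, which is an assumption made purely for illustration: the test wavelets considered here are normalised in \(H^1_0(0,1)\), so the actual decay factor differs. The function name is hypothetical.

```python
def haar_coefficient_linear(a, b, level, k):
    """Inner product of g(x) = a*x + b with the L2-normalised Haar wavelet
    supported on [k * 2^-level, (k + 1) * 2^-level]."""
    h = 2.0 ** -level
    x0, xm, x1 = k * h, (k + 0.5) * h, (k + 1) * h

    def antiderivative(x):
        return 0.5 * a * x * x + b * x

    left = antiderivative(xm) - antiderivative(x0)    # wavelet is positive here
    right = antiderivative(x1) - antiderivative(xm)   # wavelet is negative here
    return 2.0 ** (level / 2) * (left - right)

# A constant integrand is annihilated by the single vanishing moment.
print(haar_coefficient_linear(0.0, 1.0, 3, 1))

# For a linear integrand the coefficients shrink geometrically with the level.
coarse = haar_coefficient_linear(2.0, 1.0, 3, 1)
fine = haar_coefficient_linear(2.0, 1.0, 4, 3)
print(fine / coarse)
```

In this toy setting a wavelet whose support lies inside one cell of the partition sees a smooth integrand, and its coefficient shrinks by a fixed factor with every additional level, which is the mechanism that allows all indices beyond a level difference k to be dropped.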

By selecting ‘single scale’ collections \(\Phi ^\mathscr {H}\) and \(\Phi ^\mathscr {K}\) with \({{\mathrm{span}}}\, \Phi ^\mathscr {H}\supseteq {{\mathrm{span}}}\,\Psi ^\mathscr {H}|_{\Lambda ^\mathscr {H}}\) and \({{\mathrm{span}}}\,\Phi ^\mathscr {K}\supseteq {{\mathrm{span}}}\,\Psi ^\mathscr {K}|_{\Lambda ^\mathscr {K}}\), and \(\# \Phi ^\mathscr {H}\lesssim \# \Lambda ^\mathscr {H}\) and \(\# \Phi ^\mathscr {K}\lesssim \# \Lambda ^\mathscr {K}\), this approximate residual \(\mathbf{r}|_{\Lambda ^\mathscr {K}}\) can be computed in \({\mathcal {O}}(\# \Lambda ^\mathscr {K})\) operations as follows: First express \(\tilde{u}\) in terms of \(\Phi ^\mathscr {H}\) by applying a multi-to-single scale transformation to \({\tilde{\mathbf{u}}}\), then apply to this representation the sparse stiffness matrix \(\langle (\Phi ^\mathscr {K})',(\Phi ^\mathscr {H})' \rangle _{L_2(0,1)}\), subtract \(\langle \Phi ^\mathscr {K},f \rangle _{L_2(0,1)}\), and finally apply the transpose of the multi-to-single scale transformation involving \(\Psi ^\mathscr {K}|_{\Lambda ^\mathscr {K}}\) and \(\Phi ^\mathscr {K}\). This approximate residual evaluation thus satisfies the cost condition for optimality, it is relatively easy to implement, and it is observed to be quantitatively much more efficient.

It furthermore generalizes to semi-linear operators, in any case for nonlinear terms that are multivariate polynomials in u and derivatives of u. Indeed, as an example, suppose that instead of \(-u''=f\) the equation reads as \(-u'' +u^3=f\). Then the residual is given by \(\langle \Psi ^\mathscr {K},f+\tilde{u}''-\tilde{u}^3 \rangle _{L_2(0,1)}\). Since \(f_\varepsilon +\tilde{u}''-\tilde{u}^3\) is a piecewise polynomial w.r.t. \(\mathcal {T}\), the same arguments show that \(\langle \Psi ^\mathscr {K},f+\tilde{u}''-\tilde{u}^3 \rangle _{L_2(0,1)}\big |_{\Lambda ^\mathscr {K}}\) is a valid approximate residual.
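That the nonlinear term does not change the partition, but only raises the polynomial degree on each cell, can be checked on a single cell. The following is a minimal sketch, assuming polynomials are stored as coefficient lists in increasing powers of x; the helper names are hypothetical and the representation is chosen only for illustration.

```python
def poly_mul(p, q):
    """Product of two polynomials given as coefficient lists [c0, c1, ...]."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

def poly_add(p, q):
    """Sum of two polynomials, padding the shorter coefficient list with zeros."""
    n = max(len(p), len(q))
    return [(p[i] if i < len(p) else 0) + (q[i] if i < len(q) else 0) for i in range(n)]

def poly_diff(p):
    """Derivative of a polynomial; the zero polynomial is returned as [0]."""
    return [i * c for i, c in enumerate(p)][1:] or [0]

def residual_integrand(f_cell, u_cell):
    """Coefficients of f_eps + u'' - u^3 on one cell of the common partition."""
    u_second = poly_diff(poly_diff(u_cell))
    u_cubed = poly_mul(u_cell, poly_mul(u_cell, u_cell))
    return poly_add(poly_add(f_cell, u_second), [-c for c in u_cubed])

# On a cell where f_eps = 1 and u = x: u'' = 0 and u^3 = x^3.
print(residual_integrand([1], [0, 1]))

# On a cell where u = x - x^2, the integrand has degree 6 on the same cell.
print(len(residual_integrand([1], [0, 1, -1])) - 1)
```

Applied cell by cell, this yields a piecewise polynomial with respect to the same partition \(\mathcal {T}\), which is what the argument for the linear case requires.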

The essential idea behind our approximate residual evaluation is that, after the replacement of f by \(f_\varepsilon \), the different terms that constitute the residual are expressed in a common dictionary, before the residual, as a whole, is integrated against \(\Psi ^\mathscr {K}\). In our simple one-dimensional example this was possible by selecting \(\Psi ^\mathscr {H}\subset H^2(0,1)\), so that the operator could be applied to the wavelets in strong, or more precisely, mild sense, meaning that the result of the application lands in \(L_2(0,1)\). It required piecewise smooth, globally \(C^1\)-wavelets. Although the same approach applies in more dimensions, there, except on product domains, the construction of \(C^1\)-wavelet bases is cumbersome. For that reason, our approach will be to write a PDE of second order as a system of PDEs of first order. It will turn out that there are several possibilities to do so.

1.3 A common first order system least squares formulation

To introduce ideas, let us again consider the model problem of Poisson’s equation in one dimension. By introducing the additional unknown \(\theta =u'\), for given \(f \in L_2(0,1)\) this PDE can be written as the first order system of finding \((u,\theta ) \in H^1_0(0,1) \times H^1(0,1)\) such that

The corresponding homogeneous operator\(^{2}\) \(\vec {H}^\mathrm{h}:=(v,\eta )\mapsto (-\eta ',-v'+\eta )\) is in \(\mathcal {L}\mathrm {is}(H^1_0(0,1) \times H^1(0,1), L_2(0,1) \times L_2(0,1))\) (cf. [30, (proof of) Thm. 3.1]). To arrive at a symmetric and positive definite system, we consider the least squares problem of solving

Its solution solves the Euler–Lagrange equations

which in this setting are known as the normal equations.
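For the reader's convenience, the passage from the least squares functional to the normal equations can be sketched as follows. This is a derivation under the assumption that the functional is half the squared \(L_2(0,1) \times L_2(0,1)\)-norm of the first order system with homogeneous part \(\vec {H}^\mathrm{h}\); the symbol Q is introduced here only for illustration.

```latex
% Assumed form of the least squares functional for the model problem.
\[
Q(u,\theta) := \tfrac12\Big(\|\theta'+f\|_{L_2(0,1)}^2+\|u'-\theta\|_{L_2(0,1)}^2\Big),
\qquad (u,\theta)\in H^1_0(0,1)\times H^1(0,1).
\]
% Directional derivative at (u,\theta) in the direction (v,\eta).
\[
DQ(u,\theta)(v,\eta)
=\langle \theta'+f,\eta'\rangle_{L_2(0,1)}
+\langle u'-\theta,v'-\eta\rangle_{L_2(0,1)}.
\]
% Setting this to zero for all (v,\eta) and moving f to the right-hand side.
\[
\langle \vec{H}^{\mathrm h}(v,\eta),\vec{H}^{\mathrm h}(u,\theta)\rangle_{L_2(0,1)\times L_2(0,1)}
=\langle f,-\eta'\rangle_{L_2(0,1)}
\qquad \big((v,\eta)\in H^1_0(0,1)\times H^1(0,1)\big).
\]
```

The last identity uses \(\vec {H}^\mathrm{h}(v,\eta )=(-\eta ',-v'+\eta )\), so that the left-hand side equals \(\langle \eta ',\theta '\rangle _{L_2(0,1)}+\langle v'-\eta ,u'-\theta \rangle _{L_2(0,1)}\).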

To these equations we apply the adaptive wavelet scheme, so with ‘\(\mathscr {H}\)’\(=\)‘\(\mathscr {K}\)’\(=H^1_0(0,1) \times H^1(0,1)\), (‘A’\((u,\theta ))(v,\eta ):=\langle \vec {H}^\mathrm{h}(v,\eta ),\vec {H}^\mathrm{h}(u,\theta )\rangle _{L_2(0,1) \times L_2(0,1)}\) and right-hand side ‘f’\((v,\eta ):=\langle f, -\eta ' \rangle _{L_2(0,1)}\). From \(\vec {H}^\mathrm{h}\) being a homeomorphism with its range, i.e.,

being a consequence of \(\vec {H}^\mathrm{h}\) being even boundedly invertible between the full spaces, it follows that the bilinear form is bounded, symmetric, and coercive. After equipping \(H_0^1(0,1)\) and \(H^1(0,1)\) with wavelet Riesz bases \(\Psi ^{H^1_0}\) and \(\Psi ^{H^1}\), for admissible \({\tilde{\mathbf{u}}}\) and \(\varvec{\tilde{\theta }}\), with \(\tilde{u}:={\tilde{\mathbf{u}}}^\top \Psi ^{H^1_0}\) and \(\tilde{\theta }:=\varvec{\tilde{\theta }}^\top \Psi ^{H^1}\) the residual reads as

The construction of an approximate residual follows the same lines as described before for the standard variational formulation.\(^{3}\) The functions \(\tilde{u}',\,\tilde{\theta },\,\tilde{\theta }'\) are piecewise polynomials w.r.t. a partition \(\mathcal {T}_1\) of (0, 1) into \({\mathcal {O}}(\#\,\mathrm{supp}\,{\tilde{\mathbf{u}}}+\#\,\mathrm{supp}\,\varvec{\tilde{\theta }})\) subintervals. If \((\mathbf{u},\varvec{\theta }) \in \mathcal {A}^s\), then there exists a piecewise polynomial \(f_\varepsilon \) w.r.t. a partition \(\mathcal {T}_2\) of (0, 1) into \({\mathcal {O}}(\varepsilon ^{-1/s})\) subintervals such that \(\Vert f-f_\varepsilon \Vert _{L_2(0,1)} \le \varepsilon \). Thanks to the piecewise smoothness of \(\tilde{u}'-\tilde{\theta }\) and \(\tilde{\theta }'+f_\varepsilon \), there exist trees \(\Lambda ^{H^1_0}\) and \(\Lambda ^{H^1}\), with \(\# \Lambda ^{H^1_0}+ \# \Lambda ^{H^1} \lesssim \#\mathcal {T}_1+\#\mathcal {T}_2\) (dependent on \(\varsigma \)), such that

Since the approximate residual can be evaluated in \({\mathcal {O}}(\# \Lambda ^{H^1_0} \cup \Lambda ^{H^1})\) operations, we conclude that it satisfies the cost Condition (1.1) for optimality of the adaptive wavelet scheme.

Remark 1.2

Recall that, as always with least squares formulations, the same results are valid when lower order, possibly non-symmetric terms are added to the second order PDE, as long as the standard variational formulation remains well-posed. Furthermore, as we will discuss, least squares formulations allow one to handle inhomogeneous boundary conditions. Finally, as we will see, the approach of reformulating a 2nd order PDE as a first order least squares problem, and then optimally solving the normal equations, applies to any well-posed PDE, not necessarily being elliptic.

In [17] we applied the adaptive wavelet scheme to a least squares formulation of the current, common type. Disadvantages of this formulation are that (i) it requires that \(f \in L_2(0,1)\), instead of \(f \in H^{-1}(0,1)\) as allowed in the standard variational formulation. Related to that, and more importantly, for a semi-linear equation \(-u''+N(u)=f\), (ii) it is needed that N maps \(H^1_0(0,1)\) into \(L_2(0,1)\), instead of into \(H^{-1}(0,1)\). Finally, with the generalization of this least squares formulation to more than one space dimension, (iii) the space \(H^1(0,1)\) for \(\theta \) reads as \(H({{\mathrm{div}}};\Omega )\). In [17], for two-dimensional connected polygonal domains \(\Omega \), we managed to construct a wavelet Riesz basis for \(H({{\mathrm{div}}};\Omega )\). This construction, however, relied on the fact that, in two dimensions, any divergence-free function is the curl of an \(H^1\)-function. To the best of our knowledge, wavelet Riesz bases for \(H({{\mathrm{div}}};\Omega )\) for non-product domains in three and more dimensions have not been constructed.

In the next subsection, we describe a prototype of a least-squares formulation with which these disadvantages (i)–(iii) are avoided.

1.4 A seemingly unpractical least squares formulation

The first order system least squares formulation that will be studied in this paper reads, for the model problem, as follows: again we introduce \(\theta =u'\), but now consider the first order system of finding \((u,\theta ) \in H^1_0(0,1) \times L_2(0,1)\) such that

where \((D'\theta )(v):=-\langle \theta ,v'\rangle _{L_2(0,1)}\), i.e., \(D'\theta \) is the distributional derivative of \(\theta \).

In ‘primal’ mixed form, this system reads as

The corresponding homogeneous operator \(\vec {H}^\mathrm{h}\) is in \(\mathcal {L}\mathrm {is}(H^1_0(0,1) \times L_2(0,1), H^{-1}(0,1) \times L_2(0,1))\), and the least squares problem reads as solving

with normal equations reading as

(\((v,\eta ) \in H^1_0(0,1) \times L_2(0,1)\)).

In the terminology from [3], our current least squares problem is identified as being unpractical because of the appearance of the dual norm. To deal with this, as in [19], we select some wavelet Riesz basis \(\Psi ^{\hat{H}^1_0}\) for \(H^1_0(0,1)\), and replace the norm on \(H^{-1}(0,1)\) in (1.3) by the equivalent norm defined by \(\Vert g(\Psi ^{\hat{H}^1_0})\Vert \) for \(g \in H^{-1}(0,1)\). Correspondingly, in (1.4) we replace the inner product \(\langle g,h\rangle _{H^{-1}(0,1)}\) by \(g(\Psi ^{\hat{H}^1_0})^\top h(\Psi ^{\hat{H}^1_0})\), so that the resulting normal equations read as finding \((u,\theta ) \in H^1_0(0,1) \times L_2(0,1)\) such that

for all \((v,\eta ) \in H^1_0(0,1) \times L_2(0,1)\).
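The effect of replacing the dual norm by the \(\ell _2\)-norm of wavelet coefficients can be sketched as follows. This is a derivation under assumptions: the functional Q and the sign convention for the first equation of the system are introduced here for illustration, and the vector \(\mathbf{z}\) is defined within the sketch.

```latex
% Assumed form of the functional after replacing the H^{-1}(0,1)-norm
% by the equivalent norm defined through the Riesz basis \Psi^{\hat{H}^1_0}.
\[
Q(u,\theta):=\tfrac12\Big(\big\|(f+D'\theta)(\Psi^{\hat{H}^1_0})\big\|^2
+\|\theta-u'\|_{L_2(0,1)}^2\Big).
\]
% Differentiating in the direction (v,\eta) and setting the result to zero.
\[
(D'\eta)(\Psi^{\hat{H}^1_0})^\top (f+D'\theta)(\Psi^{\hat{H}^1_0})
+\langle \theta-u',\eta-v'\rangle_{L_2(0,1)}=0 .
\]
% Since (D'\eta)(\psi)=-\langle \eta,\psi'\rangle_{L_2(0,1)}, the first term equals
\[
-\big\langle \eta,\mathbf{z}^\top(\Psi^{\hat{H}^1_0})'\big\rangle_{L_2(0,1)},
\qquad \mathbf{z}:=(f+D'\theta)(\Psi^{\hat{H}^1_0}).
\]
```

In this form the dual norm no longer appears explicitly: only \(L_2(0,1)\)-inner products with the wavelets from \(\Psi ^{\hat{H}^1_0}\) and their derivatives have to be evaluated.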

To apply the adaptive wavelet scheme to these normal equations, we equip \(H^1_0(0,1)\) and \(L_2(0,1)\) with wavelet Riesz bases \(\Psi ^{H^1_0}\) and \(\Psi ^{L_2}\), respectively. When these bases have order \(p+1\) and p, the best possible convergence rate \(s_{\max }\) will be equal to p (p / n on an n-dimensional domain). Note that the order of the basis \(\Psi ^{\hat{H}^1_0}\) is irrelevant.

For approximations \((\tilde{u},\tilde{\theta })=({\tilde{\mathbf{u}}}^\top \Psi ^{H^1_0},\varvec{\tilde{\theta }}^\top \Psi ^{L_2})\) for admissible \({\tilde{\mathbf{u}}}\) and \(\varvec{\tilde{\theta }}\), the residual \(\mathbf{r}\) of \(({\tilde{\mathbf{u}}},\varvec{\tilde{\theta }})\) reads as

that, under the additional condition that \(\Psi ^{L_2} \subset H^1(0,1)\), and thus \(\tilde{\theta } \in H^1(0,1)\), is equal to

This last step is essential because it allows us, after the replacement of f by a piecewise polynomial \(f_\varepsilon \), to express \(\tilde{\theta }'+f_\varepsilon \) in a common dictionary. The additional condition is satisfied by piecewise polynomial, globally continuous wavelets, which are available on general domains in multiple dimensions.

In view of the previous discussions, to describe the approximate residual evaluation, it suffices to consider the term \(\langle \Psi ^{L_2}, \mathbf{z}^\top (\Psi ^{\hat{H}^1_0})'\rangle _{L_2(0,1)}\) with \(\mathbf{z}:= \langle \Psi ^{\hat{H}^1_0},f+\tilde{\theta }'\rangle _{L_2(0,1)}\). The by now familiar approach is applied twice: the function \(\tilde{\theta }'\) is piecewise polynomial w.r.t. a partition \(\mathcal {T}_1\) into \({\mathcal {O}}(\#\,\mathrm{supp}\,\varvec{\tilde{\theta }})\) subintervals. If \((\mathbf{u},\varvec{\theta }) \in \mathcal {A}^s\), then there exists a piecewise polynomial \(f_\varepsilon \) w.r.t. a partition \(\mathcal {T}_2\) of (0,1) into \({\mathcal {O}}(\varepsilon ^{-1/s})\) subintervals such that \(\Vert f-f_\varepsilon \Vert _{H^{-1}(0,1)} \le \varepsilon \). Consequently, there exists a tree \(\Lambda ^{\hat{H}^1_0}\) with \(\# \Lambda ^{\hat{H}^1_0} \lesssim \#\,\mathrm{supp}\,\varvec{\tilde{\theta }}+\varepsilon ^{-1/s}\) (dependent on \(\varsigma \)) such that \(\Vert \mathbf{z}-\mathbf{z}|_{\Lambda ^{\hat{H}^1_0}}\Vert \lesssim \varsigma \Vert f+\tilde{\theta }'\Vert _{H^{-1}(0,1)}+\varepsilon \). The function \(\tilde{z}:=\mathbf{z}|_{\Lambda ^{\hat{H}^1_0}}^\top \Psi ^{\hat{H}^1_0}\) is piecewise polynomial w.r.t. a partition \(\mathcal {T}_2\) of (0,1) into \({\mathcal {O}}(\# \Lambda ^{\hat{H}^1_0})\) subintervals. Consequently, there exists a tree \(\Lambda _1^{L_2}\) with \(\# \Lambda _1^{L_2} \lesssim \# \Lambda ^{\hat{H}^1_0}\) (dependent on \(\varsigma \)) such that \(\Vert \langle \Psi ^{L_2}, \tilde{z}'\rangle _{L_2(0,1)}-\langle \Psi ^{L_2}, \tilde{z}'\rangle _{L_2(0,1)}\big |_{\Lambda _1^{L_2}}\Vert \le \varsigma \Vert \tilde{z}\Vert _{H^1(0,1)} \lesssim \varsigma (\Vert \mathbf{z}\Vert +\Vert \mathbf{z}-\mathbf{z}|_{\Lambda ^{\hat{H}^1_0}}\Vert ) \lesssim \varsigma (\Vert f+\tilde{\theta }'\Vert _{H^{-1}(0,1)}+\varepsilon )\).
Combining this with the approximations for the other two terms that constitute the residual, we infer that there exist trees \(\Lambda ^{H^1_0}\) and \(\Lambda ^{L_2}\) with \(\# \Lambda ^{H^1_0}+\# \Lambda ^{L_2} \lesssim \#\,\mathrm{supp}\,{\tilde{\mathbf{u}}}+\#\,\mathrm{supp}\,\varvec{\tilde{\theta }} +\varepsilon ^{-1/s}\) (dependent on \(\varsigma \)), such that

where \(\tilde{\mathbf{r}}_2\) is constructed from \(\mathbf{r}_2\) by replacing \(\mathbf{z}\) by \(\mathbf{z}|_{\Lambda ^{\hat{H}^1_0}}\). This approximate residual evaluation satisfies the cost Condition (1.1) for optimality.

As we will see in Sect. 2, the advantage of the current construction of a first order system least squares problem is that it applies to any well-posed (semi-linear) second order PDE. The two instances of the spaces \(H^1_0(0,1)\) represent the trial and test spaces in the standard variational formulation, and well-posedness of the latter implies well-posedness of the least squares formulation. The additional space \(L_2(0,1)\) reads in general as an \(L_2\)-type space. So, in particular, with this formulation, \(H({{\mathrm{div}}})\)-spaces do not enter. The price to be paid is that (1.5) is somewhat more complicated than (1.2), and that therefore its approximation is somewhat more costly to compute.

Remark 1.3

The more popular ‘dual’ mixed formulation of our model problem reads as finding \((u,\theta ) \in L_2(0,1) \times H^1(0,1)\) such that \(\langle -\theta ',v\rangle _{L_2(0,1)}+\langle \theta ,\eta \rangle _{L_2(0,1)}+\langle u,\eta '\rangle _{L_2(0,1)}=\langle f,v\rangle _{L_2(0,1)}\) (\((v,\eta ) \in L_2(0,1) \times H^1(0,1)\)). The resulting least squares formulation has the combined disadvantages of both other formulations that we considered. It requires \(f \in L_2(0,1)\), possibly nonlinear terms should map into \(L_2(0,1)\), in more than one dimension the space \(H^1(0,1)\) reads as an \(H({{\mathrm{div}}})\)-space, and one of the norms involved in the least squares minimization is a dual norm.

Remark 1.4

With the aim to avoid both a dual norm in the least squares minimization, and \(H({{\mathrm{div}}})\) or other vectorial Sobolev spaces as trial spaces, in our first investigations of this least squares approach in [31], we considered the ‘extended div-grad’ first order system least squares formulation studied in [14]. A sufficient and necessary [30], but restrictive condition for its well-posedness is \(H^2\)-regularity of the homogeneous boundary value problem.

1.5 Layout of the paper

In Sect. 2, a general procedure is given to reformulate any well-posed semi-linear 2nd order PDE as a well-posed first order least squares problem. As we will see, this procedure gives an effortless derivation of well-posed first order least squares formulations of elliptic 2nd order PDEs, and that of the stationary Navier–Stokes equations. The arising dual norm can be replaced by the equivalent \(\ell _2\)-norm of a functional in wavelet coordinates.

In Sect. 3, we recall properties of the adaptive wavelet Galerkin method (awgm). Operator equations of the form \(F(z)=0\), where, for some Hilbert space \(\mathscr {H}\), \(F:\mathscr {H}\rightarrow \mathscr {H}'\) and DF(z) is symmetric and positive definite, are solved by the awgm at the best possible rate from a Riesz basis for \(\mathscr {H}\). Furthermore, under a condition on the cost of the approximate residual evaluations, the method has optimal computational complexity.

In the short Sect. 4, it is shown that the awgm applies to the normal equations that result from the first order least squares problems as derived in Sect. 2.

In Sect. 5, we apply the awgm to a first order least squares formulation of a semi-linear 2nd order elliptic PDE with general inhomogeneous boundary conditions. Under a mild condition on the wavelet basis for the trial space, the efficient approximate residual evaluation that was outlined in Sect. 1.4 applies, and it satisfies the cost condition, so that the awgm is optimal. Wavelet bases that satisfy the assumptions are available on general polygonal domains. Some technical results needed for this section are given in “Appendix A”.

In Sect. 6 the findings from Sect. 5 are illustrated by numerical results.

In Sect. 7, we consider the so-called velocity–pressure–velocity gradient and the velocity–pressure–vorticity first order system formulations of the stationary Navier–Stokes equations. Results analogous to those demonstrated for the elliptic problem will be shown to be valid here as well.

2 Reformulation of a semi-linear second order PDE as a first order system least squares problem

In an abstract framework, we give a procedure to write semi-linear second order PDEs that have well-posed standard variational formulations as well-posed first order system least squares problems. A particular instance of this approach has been discussed in Sect. 1.4.

For some separable Hilbert spaces \(\mathscr {U}\) and \(\mathscr {V}\), for convenience over \(\mathbb {R}\), consider a differentiable mapping

Remark 2.1

In applications G is the operator associated to a variational formulation of a PDO with trial space \(\mathscr {U}\) and test space \(\mathscr {V}\).

For \(\mathscr {T}\) being another separable Hilbert space, let

i.e., \(G_1\) and \(G_2\) are bounded linear operators.

Remark 2.2

In applications, as those discussed in Sects. 5 and 7, \(G_1 G_2\) will be a factorization of the leading second order part of the PDO (possibly modulo terms that vanish at the solution, cf. Sect. 7.2) into a product of first order PDOs.

Obviously, u solves

if and only if it is the first component of the solution \((u,\theta )\) of

where \(\vec {H}:\mathscr {U}\times \mathscr {T}\supset \mathrm {dom}(G)\times \mathscr {T}=\mathrm {dom}(\vec {H}) \rightarrow \mathscr {V}' \times \mathscr {T}\).

The following lemma shows that well-posedness of the original formulation implies that of the reformulation as a system.

Lemma 2.3

Let \(DG(u) \in \mathcal {L}(\mathscr {U},\mathscr {V}')\) be a homeomorphism with its range, i.e., \(\Vert DG(u) v\Vert _{\mathscr {V}'} \eqsim \Vert v\Vert _\mathscr {U}\) \((v \in \mathscr {U})\). Then

is a homeomorphism with its range, being \(\{(f,g) \in \mathscr {V}'\times \mathscr {T}:f-G_1g \in \mathrm{ran}\,DG(u)\}\). In particular, with \(r, R>0\) such that \(r \Vert v\Vert _\mathscr {U}\le \Vert DG(u)(v)\Vert _{\mathscr {V}'} \le R \Vert v\Vert _\mathscr {U}\), it holds that

Remark 2.4

Since \(\mathrm{ran}\,DG(u)=\mathscr {V}'\) iff \(\mathrm{ran}\,D\vec {H}(u,\theta )=\mathscr {V}'\times \mathscr {T}\), in particular we have that \(DG(u) \in \mathcal {L}\mathrm {is}(\mathscr {U},\mathscr {V}')\) implies that \(D\vec {H}(u,\theta ) \in \mathcal {L}\mathrm {is}(\mathscr {U}\times \mathscr {T},\mathscr {V}' \times \mathscr {T})\).

Proof

We have \(D\vec {H}(u,\theta )\left[ \begin{array}{@{}l@{}} v \\ \eta \end{array} \right] =\left[ \begin{array}{@{}l@{}} DG_0(u)v+G_1\eta \\ \eta -G_2 v \end{array} \right] =\left[ \begin{array}{@{}l@{}} DG(u) v+G_1(\eta -G_2v) \\ \eta -G_2 v \end{array} \right] \) by \(DG_0(\cdot )=DG(\cdot )-G_1 G_2\). So

By estimating \(\Vert D\vec {H}(u,\theta ) \left[ \begin{array}{@{}l@{}} v \\ \eta \end{array} \right] \Vert _{\mathscr {V}' \times \mathscr {T}} \le \Vert DG(u) v+G_1(\eta -G_2v)\Vert _{\mathscr {V}'}+\Vert \eta -G_2 v\Vert _\mathscr {T}\), one easily arrives at (2.3).

For (f, g) being in the set at the right-hand side in (2.4), consider the system

This system has a unique solution, so that the \(\subseteq \)-symbol in (2.4) reads as an equality sign, and \(r\Vert v\Vert _\mathscr {U}\le \Vert f\Vert _{\mathscr {V}'}+\Vert G_1\Vert \Vert g\Vert _{\mathscr {T}}\) and \(\Vert \eta \Vert _\mathscr {T}\le \Vert g\Vert _\mathscr {T}+\Vert G_2\Vert \Vert v\Vert _\mathscr {U}\). By estimating \(\Vert (v,\eta )\Vert _{\mathscr {U}\times \mathscr {T}} \le \Vert v\Vert _\mathscr {U}+\Vert \eta \Vert _\mathscr {T}\) one easily arrives at (2.2). \(\square \)

In the following, we will always assume that

- (i) there exists a solution u of \(G(u)=0\);

- (ii) G is two times continuously Fréchet differentiable in a neighborhood of u;

- (iii) \(DG(u) \in \mathcal {L}(\mathscr {U},\mathscr {V}')\) is a homeomorphism with its range.

Then

- (a) \((u,\theta )=(u,G_2 u)\) solves \(\vec {H}(u,\theta )=\vec {0}\);

- (b) \(\vec {H}\) is two times continuously Fréchet differentiable in a neighborhood of \((u,\theta )\);

- (c) \(D\vec {H}(u,\theta ) \in \mathcal {L}(\mathscr {U}\times \mathscr {T},\mathscr {V}' \times \mathscr {T})\) is a homeomorphism with its range,

the latter by Lemma 2.3. In summary, when the equation \(G(u)=0\) is well-posed [(i)–(iii) are valid], then so is \(\vec {H}(u,\theta )=\vec {0}\) [(a)–(c) are valid], and solving one equation is equivalent to solving the other.

Remark 2.5

Actually, one might dispute whether these equations should be called well-posed when \(\mathrm{ran}\,DG(u) \subsetneq \mathscr {V}'\) and so \(\mathrm{ran}\,D\vec {H}(u,\theta ) \subsetneq \mathscr {V}' \times \mathscr {T}\). In any case, under conditions (i)–(iii), and so (a)–(c), the corresponding least-squares problems and resulting (nonlinear) normal equations are well-posed, as we will see next.

A solution \((u,\theta )\) of \(\vec {H}(u,\theta )=\vec {0}\) is a minimizer of the least squares functional

In particular, it holds that

Lemma 2.6

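For \(\vec {H}:\mathscr {U}\times \mathscr {T}\supset \mathrm {dom}(\vec {H}) \rightarrow \mathscr {V}' \times \mathscr {T}\), and \(\vec {H}\) being Fréchet differentiable at a root \((u,\theta )\), property (c) is equivalent to the property that for \((\tilde{u},\tilde{\theta }) \in \mathscr {U}\times \mathscr {T}\) in a neighbourhood of \((u,\theta )\),

\[\Vert u-\tilde{u}\Vert _\mathscr {U}+\Vert \theta -\tilde{\theta }\Vert _\mathscr {T}\eqsim \Vert \vec {H}(\tilde{u},\tilde{\theta })\Vert _{\mathscr {V}' \times \mathscr {T}}.\]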

Proof

This is a consequence of \(\vec {H}(\tilde{u},\tilde{\theta })=D\vec {H}(u,\theta ) (\tilde{u}-u,\tilde{\theta }-\theta )+o(\Vert \tilde{u}-u\Vert _\mathscr {U}+\Vert \tilde{\theta }-\theta \Vert _\mathscr {T})\). \(\square \)

A minimizer \((u,\theta )\) of Q is a solution of the Euler-Lagrange equations

that, in this setting, are usually called (nonlinear) normal equations. Using (a)–(b), one computes that

We conclude the following: Under the assumptions (a)–(c), it holds that

- (1) DQ is a mapping from a subset of a separable Hilbert space, viz. \(\mathscr {U}\times \mathscr {T}\), to its dual;

- (2) there exists a solution of \(DQ(u,\theta )=0\) (viz. any solution of \(\vec {H}(u,\theta )=0\));

- (3) DQ is continuously Fréchet differentiable in a neighborhood of \((u,\theta )\);

- (4) \(0<D^2 Q(u,\theta )= D^2 Q(u,\theta )' \in \mathcal {L}\mathrm {is}(\mathscr {U}\times \mathscr {T},(\mathscr {U}\times \mathscr {T})')\).

As a consequence of the last property, one infers that in a neighborhood of \((u,\theta )\), \(DQ(u,\theta )=0\) has exactly one solution.

In view of the above findings, in order to solve \(G(u)=0\), for a G that satisfies (i)–(iii), we are going to solve the (nonlinear) normal equations \(DQ(u,\theta )=0\). A major advantage of DQ over G is that its derivative is symmetric and coercive.
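The passage from \(G(u)=0\) to the first order system and then to normal equations can be checked on a small finite-dimensional example. The following is a minimal sketch under strong simplifying assumptions: all spaces are \(\mathbb {R}^2\) with Euclidean norms (so the dual norm on \(\mathscr {V}'\) plays no role), G is affine, and the matrices `G0`, `G1`, `G2` and the vector `f` are hypothetical data chosen only for illustration.

```python
from fractions import Fraction as Fr

def matvec(A, x):
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]

def matmul(A, B):
    return [[sum(a * B[k][j] for k, a in enumerate(row))
             for j in range(len(B[0]))] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def solve(A, b):
    """Gauss-Jordan elimination in exact rational arithmetic."""
    n = len(b)
    M = [[Fr(v) for v in row] + [Fr(rhs)] for row, rhs in zip(A, b)]
    for c in range(n):
        p = next(r for r in range(c, n) if M[r][c] != 0)
        M[c], M[p] = M[p], M[c]
        piv = M[c][c]
        M[c] = [v / piv for v in M[c]]
        for r in range(n):
            if r != c:
                fac = M[r][c]
                M[r] = [vr - fac * vc for vr, vc in zip(M[r], M[c])]
    return [row[n] for row in M]

# Hypothetical data: G(u) = (G0 + G1 G2) u - f on R^2.
G0 = [[1, 0], [0, 3]]
G1 = [[1, 2], [0, 1]]
G2 = [[2, 0], [1, 1]]
f = [1, 2]

# Original equation G(u) = 0.
G1G2 = matmul(G1, G2)
A = [[G0[i][j] + G1G2[i][j] for j in range(2)] for i in range(2)]
u = solve(A, f)

# First order system: G0 u + G1 theta = f, theta - G2 u = 0.
B = [G0[0] + G1[0],
     G0[1] + G1[1],
     [-G2[0][0], -G2[0][1], 1, 0],
     [-G2[1][0], -G2[1][1], 0, 1]]
rhs = f + [0, 0]
w = solve(B, rhs)          # w = (u, theta)

# Normal equations B^T B w = B^T rhs: symmetric positive definite matrix.
Bt = transpose(B)
N = matmul(Bt, B)
w_ls = solve(N, matvec(Bt, rhs))
```

In this toy setting the first two entries of `w` coincide with `u`, the last two with `G2 u`, and the matrix `N` is symmetric even though `A` is not.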

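A concern, however, is whether, for given \((u,\theta ), (v,\eta ) \in \mathscr {U}\times \mathscr {T}\), \(DQ(u,\theta )(v,\eta )\) as given by (2.5) is evaluable. We will think of the inner product on \(\mathscr {T}\) as being evaluable. In our applications, \(\mathscr {T}\) will be of the form \(L_2(\Omega )^N\). To deal with the dual norm on \(\mathscr {V}'\), we equip \(\mathscr {V}\) with a Riesz basis

\[\Psi ^{\mathscr {V}}=\{\psi ^{\mathscr {V}}_\lambda :\lambda \in \vee _\mathscr {V}\},\]

meaning that the analysis operator

\[\mathcal {F}_\mathscr {V}:g \mapsto [g(\psi ^{\mathscr {V}}_\lambda )]_{\lambda \in \vee _\mathscr {V}} \in \mathcal {L}\mathrm {is}(\mathscr {V}',\ell _2(\vee _\mathscr {V})),\]

and so its adjoint, known as the synthesis operator,

\[\mathcal {F}_\mathscr {V}':\mathbf{v} \mapsto \mathbf{v}^\top \Psi ^{\mathscr {V}} \in \mathcal {L}\mathrm {is}(\ell _2(\vee _\mathscr {V}),\mathscr {V}).\]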

In the definition of the least squares functional Q, and consequently in that of DQ, we now replace the standard dual norm on \(\mathscr {V}'\) by the equivalent norm \(\Vert \mathcal {F}_\mathscr {V}\cdot \Vert _{\ell _2(\vee _\mathscr {V})}\). Then in view of the definition of \(\vec {H}\) and the expression for \(D\vec {H}\), we obtain that

where (1)–(4) are still valid.

Remark 2.7

We refer to [4] for an alternative approach to solve least squares problems that involve dual norms.

To solve the obtained (nonlinear) normal equations \(DQ(u,\theta )=0\) we are going to apply the adaptive wavelet Galerkin method (awgm). Note that the definition of \(DQ(u,\theta )(v,\eta )\) still involves an infinite sum over \(\vee _\mathscr {V}\) that later, inside the solution process, is going to be replaced by a finite one.

3 The adaptive wavelet Galerkin method (awgm)

In this section, we summarize findings about the awgm from [17, 31]. Let

- (I) \(F:\mathscr {H}\supset \mathrm {dom}(F) \rightarrow \mathscr {H}'\), with \(\mathscr {H}\) being a separable Hilbert space;

- (II) \(F(z)=0\);

- (III) F be continuously differentiable in a neighborhood of z;

- (IV) \(0<DF(z)=DF(z)' \in \mathcal {L}\mathrm {is}(\mathscr {H},\mathscr {H}')\).

In our applications, the triple \((F,\mathscr {H},z)\) will read as \((DQ,\mathscr {U}\times \mathscr {T},(u,\theta ))\), so that (I)–(IV) are guaranteed by (1)–(4).

Let \(\Psi =\{\psi _\lambda :\lambda \in \vee \}\) be a Riesz basis for \(\mathscr {H}\), with analysis operator \(\mathcal {F}:g \mapsto [g(\psi _\lambda )]_{\lambda \in \vee } \in \mathcal {L}\mathrm {is}(\mathscr {H}',\ell _2(\vee ))\), and so synthesis operator \(\mathcal {F}':\mathbf{v} \mapsto \mathbf{v}^\top \Psi :=\sum _{\lambda \in \vee } v_\lambda \psi _\lambda \in \mathcal {L}\mathrm {is}(\ell _2(\vee ),\mathscr {H})\). For any \(\Lambda \subset \vee \), we set

To satisfy the forthcoming Condition 3.5, which concerns the computational cost, it will be relevant that \(\Psi \) is a basis of wavelet type.

Writing \(z=\mathcal {F}' \mathbf{z}\), and with

\[\mathbf{F}:=\mathcal {F} F \mathcal {F}',\]

an equivalent formulation of \(F(z)=0\) is given by

\[\mathbf{F}(\mathbf{z})=0.\]

We are going to approximate \(\mathbf{z}\), and so z, by a sequence of Galerkin approximations from the spans of increasingly larger sets of wavelets, which sets are created by an adaptive process. Given \(\Lambda \subset \vee \), the Galerkin approximation \(\mathbf{z}_\Lambda \), or equivalently, \(z_\Lambda :=\mathbf{z}_\Lambda ^\top \Psi \), are the solutions of \(\langle \mathbf{F}(\mathbf{z}_\Lambda ),\mathbf{v}_\Lambda \rangle _{\ell _2(\vee )}=0\) (\(\mathbf{v}_\Lambda \in \ell _2(\Lambda )\)), i.e., \(\mathbf{F}(\mathbf{z}_\Lambda )|_{\Lambda }=0\), and \(F(z_\Lambda )(v_\Lambda )=0\) (\(v_\Lambda \in {{\mathrm{span}}}\{\psi _\lambda :\lambda \in \Lambda \}\)), respectively. These solutions exist uniquely when \(\inf _{{\tilde{\mathbf{z}}}_\Lambda \in \ell _2(\Lambda )} \Vert \mathbf{z}-{\tilde{\mathbf{z}}}_\Lambda \Vert \) is sufficiently small [26, 31].

In order to be able to construct efficient algorithms, in particular when F is non-affine, it will be necessary to consider only sets \(\Lambda \) from a certain subset of all finite subsets of \(\vee \). In our applications, this collection of so-called admissible \(\Lambda \) will consist of (Cartesian products of) finite trees. For the moment, it suffices when the collection of admissible sets is such that the union of any two admissible sets is again admissible.

To provide a benchmark to evaluate our adaptive algorithm, for \(s>0\), we define the nonlinear approximation class

A vector \(\mathbf{z}\) is in \(\mathcal {A}^s\) if and only if there exists a sequence of admissible \((\Lambda _i)_i\), with \(\lim _{i \rightarrow \infty } \#\Lambda _i=\infty \), such that \(\sup _i \inf _{\mathbf{z}_i \in \ell _2(\Lambda _i)} (\#\Lambda _i)^s \Vert \mathbf{z}-\mathbf{z}_i\Vert <\infty \). This means that \(\mathbf{z}\) can be approximated in \(\ell _2(\vee )\) at rate s by vectors supported on admissible sets, or, equivalently, z can be approximated in \(\mathscr {H}\) at rate s from spaces of type \({{\mathrm{span}}}\{\psi _\lambda :\lambda \in \Lambda ,\,\Lambda \text { is admissible}\}\).

The adaptive wavelet Galerkin method (awgm) defined below produces a sequence of increasingly more accurate Galerkin approximations \(\mathbf{z}_\Lambda \) to \(\mathbf{z}\). The residual \(\mathbf{F}(\mathbf{z}_\Lambda )\), which generally has infinitely many non-zero entries, is used as an a posteriori error estimator. A motivation for the latter is given by the following result.

Lemma 3.1

For \(\Vert \mathbf{z}-{\tilde{\mathbf{z}}}\Vert \) sufficiently small, it holds that \(\Vert \mathbf{F}({\tilde{\mathbf{z}}})\Vert \eqsim \Vert \mathbf{z}-{\tilde{\mathbf{z}}}\Vert \).

Proof

With \(\tilde{z}={\tilde{\mathbf{z}}}^\top \Psi \), it holds that \(\Vert \mathbf{F}({\tilde{\mathbf{z}}})\Vert \eqsim \Vert F(\tilde{z})\Vert _{\mathscr {H}'}\). From (II)–(III), we have \(F(\tilde{z})=DF(z)(\tilde{z}-z)+o(\Vert \tilde{z}-z\Vert _\mathscr {H})\). The proof is completed by \(\Vert DF(z)(\tilde{z}-z)\Vert _{\mathscr {H}'} \eqsim \Vert \tilde{z}-z\Vert _\mathscr {H}\) by (IV). \(\square \)

This a posteriori error estimator guides an appropriate enlargement of the current set \(\Lambda \) using a bulk chasing strategy, so that the sequence of approximations converges with the best possible rate to \(\mathbf{z}\). To arrive at an implementable method, that is even of optimal computational complexity, both the Galerkin solution and its residual are allowed to be computed inexactly within sufficiently small relative tolerances.

Algorithm 3.2

(awgm)

In step (R), by means of a loop in which an absolute tolerance is decreased, the true residual \(\mathbf{F}(\mathbf{z}_{\Lambda _i})\) is approximated within a relative tolerance \(\delta \). In step (B), bulk chasing is performed on the approximate residual. The idea is to find a smallest admissible \(\Lambda _{i+1} \supset \Lambda _i\) with \(\Vert \mathbf{r}_i|_{\Lambda _{i+1}}\Vert \ge \mu _0 \Vert \mathbf{r}_i\Vert \). In order to be able to find an implementation that is of linear complexity, the condition of having a truly smallest \(\Lambda _{i+1}\) has been relaxed. Finally, in step (G), a sufficiently accurate approximation of the Galerkin solution w.r.t. the new set \(\Lambda _{i+1}\) is determined.
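The interplay of the steps (R), (B) and (G) can be illustrated on a toy problem. The sketch below is not the algorithm of this paper: it assumes a finite index set, an affine \(\mathbf{F}(\mathbf{z})=\mathbf{A}\mathbf{z}-\mathbf{b}\) with a symmetric positive definite matrix, every index set being admissible, exact residuals and exact Galerkin solves, and exact sorting in the bulk chasing step. All function names, the matrix `A`, the vector `b` and the parameter values are hypothetical.

```python
import math

def solve(A, b):
    """Dense Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(map(float, row)) + [float(rhs)] for row, rhs in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            fac = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= fac * M[c][k]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x

def norm(v):
    return math.sqrt(sum(x * x for x in v))

def residual(A, b, z):
    return [sum(a * zj for a, zj in zip(row, z)) - bi for row, bi in zip(A, b)]

def bulk_chase(r, Lam, mu0):
    """Enlarge Lam by the largest residual entries until a mu0-fraction is captured."""
    total = sum(x * x for x in r)
    captured = sum(r[i] ** 2 for i in Lam)
    new = set(Lam)
    outside = sorted((i for i in range(len(r)) if i not in Lam),
                     key=lambda i: -abs(r[i]))
    for i in outside:
        if captured >= mu0 ** 2 * total:
            break
        new.add(i)
        captured += r[i] ** 2
    return new

def galerkin(A, b, Lam):
    """Solve the system restricted to the index set Lam; zero elsewhere."""
    idx = sorted(Lam)
    z = [0.0] * len(b)
    if idx:
        sub = solve([[A[i][j] for j in idx] for i in idx], [b[i] for i in idx])
        for i, v in zip(idx, sub):
            z[i] = v
    return z

def awgm(A, b, mu0=0.5, tol=1e-6):
    Lam, z, history = set(), [0.0] * len(b), []
    for _ in range(len(b) + 1):
        r = residual(A, b, z)              # (R) residual, exact in this sketch
        history.append((len(Lam), norm(r)))
        if norm(r) <= tol:
            break
        Lam = bulk_chase(r, Lam, mu0)      # (B) bulk chasing
        z = galerkin(A, b, Lam)            # (G) Galerkin solve on the new set
    return z, Lam, history

n = 40
A = [[2.0 if i == j else (-0.5 if abs(i - j) == 1 else 0.0) for j in range(n)]
     for i in range(n)]
b = [2.0 ** (-i) for i in range(n)]
z, Lam, history = awgm(A, b)
```

Each entry of `history` pairs the size of the current index set with the norm of the corresponding residual, so the trade-off between support size and accuracy can be read off directly.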

Convergence of the adaptive wavelet Galerkin method, with the best possible rate, is stated in the following theorem.

Theorem 3.3

[31, Thm. 3.9] Let \(\mu _1, \gamma , \delta \), \(\inf _{\mathbf{v}_{\Lambda _0} \in \ell _2(\Lambda _0)}\Vert \mathbf{z}-\mathbf{v}_{\Lambda _0}\Vert \), \(\Vert \mathbf{F}(\mathbf{z}_{\Lambda _0})|_{\Lambda _0}\Vert \), and the neighborhood \(\mathbf{Z}\) of the solution \(\mathbf{z}\) all be sufficiently small. Then, for some \(\alpha =\alpha [\mu _0]<1\), the sequence \((\mathbf{z}_{\Lambda _i})_i\) produced by awgm satisfies

If, for whatever \(s>0\), \(\mathbf{z} \in \mathcal {A}^s\), then \(\# (\Lambda _{i+1} {\setminus } \Lambda _0) \lesssim \Vert \mathbf{z}-\mathbf{z}_{\Lambda _{i}}\Vert ^{-1/s}\).

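The computation of the approximate Galerkin solution \(\mathbf{z}_{\Lambda _{i+1}}\) can be implemented by performing the simple fixed point iteration

\[\mathbf{z}_{\Lambda _{i+1}}^{(j+1)}=\mathbf{z}_{\Lambda _{i+1}}^{(j)}-\omega \, \mathbf{F}(\mathbf{z}_{\Lambda _{i+1}}^{(j)})|_{\Lambda _{i+1}}.\]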

Taking \(\omega >0\) to be a sufficiently small constant and starting with \(\mathbf{z}_{\Lambda _{i+1}}^{(0)}=\mathbf{z}_{\Lambda _{i}}\), a fixed number of iterations suffices to meet the condition \(\Vert \mathbf{F}(\mathbf{z}^{(j+1)}_{\Lambda _{i+1}})|_{\Lambda _{i+1}}\Vert \le \gamma \Vert \mathbf{r}_i\Vert \). This holds also true when each of the \(\mathbf{F}()|_{\Lambda _{i+1}}\) evaluations is performed within an absolute tolerance that is a sufficiently small fixed multiple of \(\Vert \mathbf{r}_i\Vert \).
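As an illustration, and assuming that the fixed point iteration is the damped Richardson update \(\mathbf{z} \leftarrow \mathbf{z}-\omega \mathbf{F}(\mathbf{z})|_{\Lambda }\), the following sketch applies it to a hypothetical affine \(\mathbf{F}(\mathbf{z})=\mathbf{A}\mathbf{z}-\mathbf{b}\) with a symmetric positive definite tridiagonal matrix. The data, the index set `Lam` and the value of `omega` are assumptions made for this example only.

```python
import math

def restricted_residual(A, b, z, Lam):
    """Entries of F(z) = A z - b on Lam (z is supported on Lam); zero elsewhere."""
    r = [0.0] * len(b)
    for i in Lam:
        r[i] = sum(A[i][j] * z[j] for j in Lam) - b[i]
    return r

def richardson(A, b, Lam, z0, omega, steps):
    """Fixed point iteration z <- z - omega * F(z)|_Lam."""
    z = list(z0)
    for _ in range(steps):
        r = restricted_residual(A, b, z, Lam)
        z = [zi - omega * ri for zi, ri in zip(z, r)]
    return z

n = 16
A = [[2.0 if i == j else (-0.5 if abs(i - j) == 1 else 0.0) for j in range(n)]
     for i in range(n)]
b = [1.0] * n
Lam = list(range(8))

# The eigenvalues of A restricted to Lam lie in (1, 3), so omega = 0.5
# gives a contraction factor below 1/2 per step.
z = richardson(A, b, Lam, [0.0] * n, omega=0.5, steps=60)
res = math.sqrt(sum(x * x for x in restricted_residual(A, b, z, Lam)))
```

For a nonlinear F the same loop applies with `restricted_residual` replaced by an evaluation of \(\mathbf{F}(\cdot )|_{\Lambda }\), in which case the admissible range of `omega` depends on \(DF\) near the solution.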

Optimal computational complexity of the awgm, meaning that the work to obtain an approximation within a given tolerance \(\varepsilon >0\) can be bounded by some constant multiple of the bound on its support length from Theorem 3.3, is guaranteed under the following two conditions concerning the cost of the “bulk chasing” process, and that of the approximate residual evaluation, respectively. Indeed, apart from some obvious computations, these are the only two tasks that have to be performed in awgm.

Condition 3.4

The determination of \(\Lambda _{i+1}\) in Algorithm 3.2 is performed in \({\mathcal {O}}(\#\,\mathrm{supp}\,\mathbf{r}_i+\# \Lambda _i)\) operations.

In case of unconstrained approximation, i.e., any finite \(\Lambda \subset \vee \) is admissible, this condition is satisfied by collecting the largest entries in modulus of \(\mathbf{r}_i\), where, to avoid a suboptimal complexity, an exact sorting should be replaced by an approximate sorting based on binning. With tree approximation, the condition is satisfied by the application of the so-called Thresholding Second Algorithm from [2]. We refer to [31, §3.4] for a discussion.
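For the unconstrained case, the replacement of exact sorting by binning can be sketched as follows. This is a minimal illustration under assumptions that are not taken from the paper: entries are grouped into dyadic bins relative to the largest entry in modulus, the number of bins `nbins` is a fixed hypothetical cut-off, and the function name is made up. Entries within one bin differ by at most a factor 2, so the selected set is larger than a smallest one only by a bounded factor, while the cost is linear in the length of `r` plus the number of bins.

```python
import math

def bulk_chase_binned(r, mu, nbins=40):
    """Return indices capturing at least a mu-fraction of the norm of r."""
    total = sum(x * x for x in r)
    if total == 0.0:
        return []
    biggest = max(abs(x) for x in r)
    bins = [[] for _ in range(nbins)]
    for i, x in enumerate(r):
        if x != 0.0:
            # bin j collects entries with |x| / biggest in (2^-(j+1), 2^-j]
            j = min(nbins - 1, int(math.floor(-math.log2(abs(x) / biggest))))
            bins[j].append(i)
    chosen, captured = [], 0.0
    for bucket in bins:                 # from large to small entries
        for i in bucket:
            if captured >= mu * mu * total:
                return chosen
            chosen.append(i)
            captured += r[i] ** 2
    return chosen

r = [0.1, -3.0, 0.5, 2.0, 0.0, -0.05]
selected = bulk_chase_binned(r, 0.9)
```

Here the entries \(-3.0\) and \(2.0\) fall into the same bin and together carry more than 90% of the norm, so no further bins are visited.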

To understand the second condition, that in the introduction was referred to as the cost Condition (1.1), note that inside the awgm it is never needed to approximate a residual more accurately than within a sufficiently small, but fixed relative tolerance.

Condition 3.5

For a sufficiently small, fixed \(\varsigma >0\), there exists a neighborhood \(\mathbf{Z}\) of the solution \(\mathbf{z}\) of \(\mathbf{F}(\mathbf{z})=0\), such that for all admissible \(\Lambda \subset \vee \), \({\tilde{\mathbf{z}}}\in \ell _2(\Lambda ) \cap \mathbf{Z}\), and any \(\varepsilon >0\), there exists an \(\mathbf{r} \in \ell _2(\vee )\) with

that one can compute in \({\mathcal {O}}(\varepsilon ^{-1/s}+\#\Lambda )\) operations. Here \(s>0\) is such that \(\mathbf{z} \in \mathcal {A}^s\).

Under both conditions, the awgm has optimal computational complexity:

Theorem 3.6

In the setting of Theorem 3.3, and under Conditions 3.4 and 3.5, not only \(\# \mathbf{z}_{\Lambda _i}\), but also the number of arithmetic operations required by awgm for the computation of \(\mathbf{z}_{\Lambda _i}\) is \({\mathcal {O}}(\Vert \mathbf{z}-\mathbf{z}_{\Lambda _i}\Vert ^{-1/s})\).

4 Application to normal equations

As discussed in Sect. 2, we will apply the awgm to the (nonlinear) normal equations \(DQ(u,\theta )=0\), with DQ from (2.6). That is, we apply the findings collected in the previous section for the general triple \((F,\mathscr {H},z)\) now reading as \((DQ,\mathscr {U}\times \mathscr {T},(u,\theta ))\).

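For \(\Psi ^\mathscr {U}=\{\psi _\lambda ^\mathscr {U}:\lambda \in \vee _\mathscr {U}\}\) and \(\Psi ^\mathscr {T}=\{\psi _\lambda ^\mathscr {T}:\lambda \in \vee _\mathscr {T}\}\) being Riesz bases for \(\mathscr {U}\) and \(\mathscr {T}\), respectively, we equip \(\mathscr {U}\times \mathscr {T}\) with the Riesz basis

\[\Psi :=(\Psi ^{\mathscr {U}},0_{\mathscr {T}}) \cup (0_{\mathscr {U}},\Psi ^{\mathscr {T}}), \quad \text {with index set } \vee :=\vee _\mathscr {U}\cup \vee _\mathscr {T}\]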

(w.l.o.g. we assume that \(\vee _\mathscr {U}\cap \vee _\mathscr {T}=\emptyset \)). With \(\mathcal {F}\in \mathcal {L}\mathrm {is}((\mathscr {U}\times \mathscr {T})',\ell _2(\vee ))\) being the corresponding analysis operator, and \(D\mathbf{Q}:=\mathcal {F}DQ \mathcal {F}'\), for \([{\tilde{\mathbf{u}}}^\top ,\varvec{\tilde{\theta }}^\top ]^\top \in \ell _2(\vee )\), and with \((\tilde{u},\tilde{\theta }):=[{\tilde{\mathbf{u}}}^\top ,\varvec{\tilde{\theta }}^\top ]\Psi \), we have

In this setting, using Lemma 3.1, Condition 3.5 can be reformulated as follows:

Condition 3.5*

For a sufficiently small, fixed \(\varsigma >0\), there exists a neighborhood of the solution \((u,\theta )\) of \(DQ(u,\theta )=0\), such that for all admissible \(\Lambda \subset \vee \), all \([{\tilde{\mathbf{u}}}^\top ,\varvec{\tilde{\theta }}^\top ]^\top \in \ell _2(\Lambda )\), with \((\tilde{u},\tilde{\theta }):=[{\tilde{\mathbf{u}}}^\top ,\varvec{\tilde{\theta }}^\top ] \Psi \) being in this neighborhood, and any \(\varepsilon >0\), there exists an \(\mathbf{r} \in \ell _2(\vee )\) with

that one can compute in \({\mathcal {O}}(\varepsilon ^{-1/s}+\#\Lambda )\) operations, where \(s>0\) is such that \([\mathbf{u}^\top , \mathbf{\theta }^\top ]^\top \in \mathcal {A}^s\).

To verify this condition, we will use the additional property, i.e. on top of (1)–(4), that \(\Vert u-\tilde{u}\Vert _\mathscr {U}+\Vert \theta -\tilde{\theta }\Vert _\mathscr {T}\eqsim \Vert G_0(\tilde{u})+G_1\tilde{\theta }\Vert _{\mathscr {V}'}+\Vert \tilde{\theta }-G_2\tilde{u}\Vert _{\mathscr {T}}\), which is provided by Lemma 2.6.

5 Semi-linear 2nd order elliptic PDE

We apply the solution method outlined in Sects. 2, 3 and 4 to the example of a semi-linear 2nd order elliptic PDE with general (inhomogeneous) boundary conditions. The main task will be to verify Condition 3.5*.

5.1 Reformulation as a first order system least squares problem

Let \(\Omega \subset \mathbb {R}^n\) be a bounded domain, \(\Gamma _N \cup \Gamma _D=\partial \Omega \) with \({{\mathrm{meas}}}(\Gamma _N \cap \Gamma _D)=0\), \({{\mathrm{meas}}}(\Gamma _D)>0\) when \(\Gamma _D \ne \emptyset \), and \(A:\Omega \rightarrow \mathbb {R}^{n\times n}_\mathrm{symm}\) with \(\xi ^\top A(\cdot ) \xi \eqsim \Vert \xi \Vert ^2\) (\(\xi \in \mathbb {R}^n\), a.e.). We set

and

For \(N:\mathscr {U}\supset \mathrm {dom}(N) \rightarrow \mathscr {V}_1'\), \(f \in \mathscr {V}_1'\), \(g \in H^{\frac{1}{2}}(\Gamma _D)\), and \(h \in H^{-\frac{1}{2}}(\Gamma _N)\), we consider the semi-linear boundary value problem

that in standard variational form reads as finding \(u \in \mathscr {U}\) such that

\((v=(v_1,v_2) \in \mathscr {V})\).

We assume that this variational problem has a solution u, and that G, i.e., N, is two times continuously Fréchet differentiable in a neighborhood of u, and \(DG(u) \in \mathcal {L}(\mathscr {U},\mathscr {V}')\) is a homeomorphism with its range, i.e., we assume that conditions (i)–(iii) formulated in Sect. 2 hold.

Remarks 5.1

Because \(\mathscr {U}\rightarrow H^{\frac{1}{2}}(\Gamma _D):u \mapsto u|_{\Gamma _D}\) is surjective, from [30, Thm. 2.1] it follows that condition (iii) is satisfied when

(actually, L being a homeomorphism with its range is already sufficient).

By writing \(g=u_0|_{\Gamma _D}\) for some \(u_0 \in \mathscr {U}\), one infers that for linear N, existence of a (unique) solution u, i.e. (i), follows from \(L \in \mathcal {L}\mathrm {is}(\mathscr {V}_1,\mathscr {V}_1')\). For \(g=0\), the conditions of N being monotone and locally Lipschitz are sufficient for having a (unique) solution u. Relaxed conditions on N suffice to have a (locally unique) solution. We refer to [5].

Using the framework outlined in Sect. 2, we write this second order elliptic PDE as a first order system least squares problem. Putting

, we define

by

The results from Sect. 2 show that the solution u can be found as the first component of the minimizer \((u,\vec {\theta }) \in \mathscr {U}\times \mathscr {T}\) of

being the solution of the normal equations \(DQ(u,\vec {\theta })=0\), and furthermore, that these normal equations are well-posed in the sense that they satisfy (1)–(4).

To deal with the ‘unpractical’ norm on \(\mathscr {V}'\), as in Sect. 1.4, at the end of Sect. 2, and in Sect. 4, we equip \(\mathscr {V}_1\) and \(\mathscr {V}_2\) with wavelet Riesz bases

and replace, in the definition of Q, the norms on their duals by the equivalent norms defined by \(\Vert g(\Psi ^{\mathscr {V}_1})\Vert \) or \(\Vert g(\Psi ^{\mathscr {V}_2})\Vert \), for \(g \in \mathscr {V}_1'\) or \(g \in \mathscr {V}_2'\), respectively.

Next, after equipping \(\mathscr {U}\) and \(\mathscr {T}\) with Riesz bases

and so \(\mathscr {U}\times \mathscr {T}\) with \(\Psi =(\Psi ^{\mathscr {U}},0_{\mathscr {T}}) \cup (0_{\mathscr {U}},\Psi ^{\mathscr {T}})\), we apply the awgm to the resulting system

where \((u,\vec {{\theta }}):=[\mathbf{{u}}^\top ,\varvec{{\theta }}^\top ] \Psi \).

To express the three terms in \(v\mapsto \langle v, N({u})-f\rangle _{L_2(\Omega )}-\langle v,h \rangle _{L_2(\Gamma _N)} +\langle \nabla v, \vec {{\theta }} \rangle _{L_2(\Omega )^n} \in \mathscr {V}_1'\) w.r.t. one dictionary of functions on \(\Omega \) and one dictionary of functions on \(\Gamma _N\), similarly to Sect. 1.4 we impose the additional, but in applications easily realisable condition that

Then for finitely supported approximations \([{\tilde{\mathbf{u}}}^\top ,\varvec{\tilde{\theta }}^\top ]^\top \) to \([\mathbf{{u}}^\top ,\varvec{{\theta }}^\top ]^\top \), for \((\tilde{u},\vec {\tilde{\theta }}):=[{\tilde{\mathbf{u}}}^\top ,\varvec{\tilde{\theta }}^\top ] \Psi \in \mathscr {U}\times H({{\mathrm{div}}};\Omega )\), we have

where we used the vanishing traces of \(v \in \mathscr {V}_1 \) at \(\Gamma _D\), to write \(\langle \nabla v, \vec {\tilde{\theta }}\rangle _{L_2(\Omega )^n}\) as \(\langle v,- {{\mathrm{div}}}\vec {\tilde{\theta }}\rangle _{L_2(\Omega )}+\langle v, \vec {\tilde{\theta }}\cdot \mathbf{n}\rangle _{L_2(\Gamma _N)}\).

Each of the terms \(A \nabla {\tilde{u}} -\vec {\tilde{\theta }}\), \({\tilde{u}}-g\), \(N({\tilde{u}})-f-{{\mathrm{div}}}\vec {\tilde{\theta }}\), and \(\vec {\tilde{\theta }}\cdot \mathbf{n}-h\) corresponds, in strong form, to a term of the least squares functional, and therefore their norms can be bounded by a multiple of the norm of the residual, which is the basis of our approximate residual evaluation. In order to verify Condition 3.5*, we have to collect some assumptions on the wavelets, which will be done in the next subsection.

Remark 5.2

If \(\Gamma _D=\emptyset \), then obviously (5.4) should be read without the second term involving \(\Psi ^{\mathscr {V}_2}\). If \(\Gamma _D\ne \emptyset \) and homogeneous Dirichlet boundary conditions are prescribed on \(\Gamma _D\), i.e., \(g=0\), it is simpler to select \(\mathscr {U}=\mathscr {V}_1=\{u \in H^1(\Omega ):u|_{\Gamma _D}=0\}\), and to omit the integral over \(\Gamma _D\) in the definition of G, so that again (5.4) should be read without the second term involving \(\Psi ^{\mathscr {V}_2}\).

5.2 Wavelet assumptions and definitions

We formulate conditions on \(\Psi ^{\mathscr {V}_1}\), \(\Psi ^{\mathscr {V}_2}\), \(\Psi ^{\mathscr {U}}\), and \(\Psi ^{\mathscr {T}}\), in addition to being Riesz bases for \(\mathscr {V}_1\), \(\mathscr {V}_2\), \(\mathscr {U}\), and \(\mathscr {T}\), respectively.

Recalling that \(\mathscr {T}=\mathscr {T}_1\times \cdots \times \mathscr {T}_n\), we select \(\Psi ^\mathscr {T}\) of canonical form

where \(\Psi ^{\mathscr {T}_q}=\{\psi ^{\mathscr {T}_q}_\lambda :\lambda \in \vee _{\mathscr {T}_q}\}\) is a Riesz basis for \(\mathscr {T}_q\) (with \(\vee _{\mathscr {T}_{q'}} \cap \vee _{\mathscr {T}_{q''}} =\emptyset \) when \(q'\ne q''\)).

For \(*\in \{\mathscr {U},\mathscr {T}_1,\ldots ,\mathscr {T}_n,\mathscr {V}_1\}\), we collect a number of (standard) assumptions, (\(w_{1}\))–(\(w_{4}\)), on the scalar-valued wavelet collections \(\Psi ^*=\{\psi _\lambda ^*:\lambda \in \vee _*\}\) on \(\Omega \). Corresponding assumptions on the wavelets \(\Psi ^{\mathscr {V}_2}\) on \(\Gamma _D\) will be formulated at the end of this subsection. To each \(\lambda \in \vee _*\), we associate a value \(|\lambda | \in \mathbb {N}_0\), which is called the level of \(\lambda \). We will assume that the elements of \(\Psi ^*\) have one vanishing moment, and are locally supported, piecewise polynomial of some degree m, w.r.t. dyadically nested partitions in the following sense:

- (\(w_{1}\)): There exists a collection \(\mathcal {O}_\Omega :=\{\omega :\omega \in \mathcal {O}_\Omega \}\) of closed polytopes, such that, with \(|\omega |\in \mathbb {N}_0\) being the level of \(\omega \), \({{\mathrm{meas}}}(\omega \cap \omega ')=0\) when \(|\omega |=|\omega '|\) and \(\omega \ne \omega '\); for any \(\ell \in \mathbb {N}_0\), \(\bar{\Omega }=\cup _{|\omega |=\ell } \omega \); \(\mathrm{diam}\,\omega \eqsim 2^{-|\omega |}\); and \(\omega \) is the union of \(\omega '\) for some \(\omega '\) with \(|\omega '|=|\omega |+1\). We call \(\omega \) the parent of its children \(\omega '\). Moreover, we assume that the \(\omega \in \mathcal {O}_\Omega \) are uniformly shape regular, in the sense that they satisfy a uniform Lipschitz condition, and \({{\mathrm{meas}}}(F_\omega )\eqsim {{\mathrm{meas}}}(\omega )^{\frac{n-1}{n}}\) for \(F_\omega \) being any facet of \(\omega \).

- (\(w_{2}\)): \(\mathrm{supp}\,\psi ^*_\lambda \) is contained in a connected union of a uniformly bounded number of \(\omega \)’s with \(|\omega |=|\lambda |\), and, restricted to each of these \(\omega \)’s, \(\psi ^*_\lambda \) is a polynomial of degree m.

- (\(w_{3}\)): Each \(\omega \) is intersected by the supports of a uniformly bounded number of \(\psi ^*_\lambda \)’s with \(|\lambda |=|\omega |\).

- (\(w_{4}\)): \(\int _\Omega \psi ^*_\lambda \,dx =0\), possibly with the exception of those \(\lambda \) with \(\mathrm{dist}(\mathrm{supp}\,\psi ^*_\lambda ,\Gamma _D) \lesssim 2^{-|\lambda |}\), or with \(|\lambda |=0\).

Generally, the polynomial degree m will be different for the different bases, but otherwise fixed. The collection \(\mathcal {O}_\Omega \) is shared among all bases. Note that the conditions that \(\Psi ^\mathscr {U}\) is a basis for \(\mathscr {U}\) and consists of piecewise polynomials imply that \(\Psi ^\mathscr {U}\subset C(\bar{\Omega })\). Wavelets of in principle arbitrary order that satisfy these assumptions can be found in e.g. [20, 25].

Definition 5.3

A collection \({\mathcal {T}} \subset \mathcal {O}_\Omega \) such that \(\overline{\Omega }=\cup _{\omega \in {\mathcal {T}}} \omega \), and for \(\omega _1 \ne \omega _2 \in {\mathcal {T}}\), \({{\mathrm{meas}}}(\omega _1 \cap \omega _2)=0\) will be called a tiling. With \(\mathcal {P}_m(\mathcal {T})\), we denote the space of piecewise polynomials of degree m w.r.t. \(\mathcal {T}\). The smallest common refinement of tilings \({\mathcal {T}}_1\) and \({\mathcal {T}}_2\) is denoted as \({\mathcal {T}}_1 \oplus {\mathcal {T}}_2\).

To be able to find, in linear complexity, a representation of a function, given as a linear combination of wavelets, as a piecewise polynomial w.r.t. a tiling, which is mandatory for an efficient evaluation of nonlinear terms, we will impose a tree constraint on the underlying set of wavelet indices. A similar approach was followed earlier in [6, 10, 21, 33, 35].

Definition 5.4

To each \(\lambda \in \vee _*\) with \(|\lambda |>0\), we associate one \(\mu \in \vee _*\) with \(|\mu |=|\lambda |-1\) and \({{\mathrm{meas}}}(\mathrm{supp}\,\psi ^*_\lambda \cap \mathrm{supp}\,\psi ^*_\mu )>0\). We call \(\mu \) the parent of \(\lambda \), and so \(\lambda \) a child of \(\mu \).

To each \(\lambda \in \vee _*\), we associate some neighbourhood \(\mathcal {S}(\psi ^*_\lambda )\) of \(\mathrm{supp}\,\psi ^*_\lambda \), with diameter \(\lesssim 2^{-|\lambda |}\), such that \(\mathcal {S}(\psi ^*_\lambda ) \subset \mathcal {S}(\psi ^*_\mu )\) when \(\lambda \) is a child of \(\mu \).

We call a finite \(\Lambda \subset \vee _*\) a tree, if it contains all \(\lambda \in \vee _*\) with \(|\lambda |=0\), as well as the parent of any \(\lambda \in \Lambda \) with \(|\lambda |>0\).

Note that we now have tree structures on the set \(\mathcal {O}_\Omega \) of polytopes, as well as on the wavelet index sets \(\vee _*\). We trust that no confusion will arise when we speak about parents or children.

For some collections of wavelets, as the Haar or more generally, Alpert wavelets [1], it suffices to take \(\mathcal {S}(\psi ^*_\lambda ):=\mathrm{supp}\,\psi ^*_\lambda \). The next result shows that, thanks to (\(w_{1}\))-(\(w_{2}\)), a suitable neighbourhood \(\mathcal {S}(\psi ^*_\lambda )\) as meant in Definition 5.4 always exists.

Lemma 5.5

With \(C:=\sup _{\lambda \in \vee _*} 2^{|\lambda |} {{\mathrm{diam}}}\, \mathrm{supp}\,\psi ^*_\lambda \), a valid choice of \(\mathcal {S}(\psi ^*_\lambda )\) is given by \(\{x \in \Omega :{{\mathrm{dist}}}(x,\mathrm{supp}\,\psi _\lambda ^*) \le C 2^{-|\lambda |}\}\).

Proof

For \(\mu ,\lambda \in \vee _*\) with \(|\mu |=|\lambda |-1\) and \({{\mathrm{meas}}}(\mathrm{supp}\,\psi ^*_\lambda \cap \mathrm{supp}\,\psi ^*_\mu )>0\), and \(x \in \Omega \) with \({{\mathrm{dist}}}(x,\mathrm{supp}\,\psi _\lambda ^*) \le C 2^{-|\lambda |}\), it holds that \({{\mathrm{dist}}}(x,\mathrm{supp}\,\psi _\mu ^*) \le {{\mathrm{dist}}}(x,\mathrm{supp}\,\psi _\lambda ^*)+{{\mathrm{diam}}}(\mathrm{supp}\,\psi ^*_\lambda ) \le C 2^{-|\lambda |}+C 2^{-|\lambda |} = C2^{-|\mu |}\). Here the first inequality uses that the two supports intersect, and the second uses \({{\mathrm{diam}}}(\mathrm{supp}\,\psi ^*_\lambda ) \le C 2^{-|\lambda |}\), which holds by definition of C. \(\square \)

A proof of the following proposition, as well as an algorithm to apply the multi-to-single-scale transformation that is mentioned, is given in [31, §4.3].

Proposition 5.6

Given a tree \(\Lambda \subset \vee _*\), there exists a tiling \({\mathcal {T}}({\Lambda }) \subset \mathcal {O}_\Omega \) with \(\# {\mathcal {T}}({\Lambda }) \lesssim \# \Lambda \) such that \({{\mathrm{span}}}\{ \psi ^*_\lambda :\lambda \in \Lambda \}\subset \mathcal {P}_{m}({\mathcal {T}}({\Lambda }))\). Moreover, equipping \(\mathcal {P}_{m}(\mathcal {T}(\Lambda ))\) with a basis of functions, each of which supported in \(\omega \) for one \(\omega \in \mathcal {T}(\Lambda )\), the representation of this embedding, known as the multi- to single-scale transform, can be applied in \({\mathcal {O}}(\#\Lambda )\) operations.

The benefit of the definition of \(\mathcal {S}(\psi _\lambda ^*)\) appears from the following lemma.

Lemma 5.7

Let \(\overline{\Omega }=\Sigma _0 \supseteq \Sigma _1 \supseteq \cdots \). Then

is a tree.

Proof

The set \(\Lambda ^*\) contains all \(\lambda \in \vee _*\) with \(|\lambda |=0\), as well as, by definition of \(\mathcal {S}(\cdot )\), the parent of any \(\lambda \in \Lambda ^*\) with \(|\lambda |>0\). \(\square \)

In Proposition 5.6 we saw that for each tree \(\Lambda \) there exists a tiling \({\mathcal {T}}(\Lambda )\), with \(\# {\mathcal {T}}(\Lambda ) \lesssim \#\Lambda \), such that \({{\mathrm{span}}}\{ \psi ^*_\lambda :\lambda \in \Lambda \}\subset \mathcal {P}_{m}({\mathcal {T}}({\Lambda }))\). Conversely, in the following, given a tiling \({\mathcal {T}}\), and a constant \(k \in \mathbb {N}_0\), we construct a tree \(\Lambda ^*({{\mathcal {T}},k})\) with \(\# \Lambda ^*({{\mathcal {T}},k}) \lesssim \# {\mathcal {T}}\) (dependent on k) such that a kind of reverse statement holds: in “Appendix A”, statements of type \(\lim _{k \rightarrow \infty } \sup _{0 \ne g \in \mathcal {P}_{m}({\mathcal {T}})} \frac{\Vert \langle \Psi ^*,g\rangle _{L_2(\Omega )}|_{\vee _*{\setminus } \Lambda ^*({{\mathcal {T}},k})}\Vert }{\Vert g\Vert _{*'}} = 0\) will be shown, meaning that for any tolerance there exists a k such that for any \(g \in \mathcal {P}_{m}({\mathcal {T}})\) the relative error in dual norm in the best approximation from the span of the corresponding dual wavelets with indices in \(\Lambda ^*({{\mathcal {T}},k})\) is less than this tolerance.

Definition 5.8

Given a tiling \({\mathcal {T}} \subset \mathcal {O}_\Omega \), let \(t({\mathcal {T}}) \subset \mathcal {O}_\Omega \) be its enlargement by adding all ancestors of all \(\omega \in {\mathcal {T}}\). Given a \(k \in \mathbb {N}_0\), we set

Proposition 5.9

The set \(\Lambda ^*({{\mathcal {T}},k})\) is a tree, and \(\# \Lambda ^*({{\mathcal {T}},k}) \lesssim \# {\mathcal {T}}\) (dependent on \(k \in \mathbb {N}_0\)).

Proof

The first statement follows from Lemma 5.7. Since the number of children of any \(\omega \in \mathcal {O}_\Omega \) is uniformly bounded, it holds that \(\# t({\mathcal {T}})\lesssim \# {\mathcal {T}}\), and so \(\# \Lambda ^*({{\mathcal {T}},k}) \lesssim \# {\mathcal {T}}\) as a consequence of the wavelets being locally supported. \(\square \)
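The counting step \(\# t({\mathcal {T}})\lesssim \# {\mathcal {T}}\) can be checked on a toy case. The following is a minimal sketch under assumptions that are not part of the text: dyadic subintervals of \([0,1]\) play the role of \(\mathcal {O}_\Omega \) (as in Example 5.10), a tile is stored as a pair of exact fractions, and the helper names `parent` and `with_ancestors` are hypothetical. In this binary setting every tile of \(t({\mathcal {T}})\) that is not in \({\mathcal {T}}\) has exactly two children, so one expects \(\# t({\mathcal {T}}) = 2\,\# {\mathcal {T}}-1\).

```python
from fractions import Fraction as F

def parent(tile):
    """Dyadic parent of the interval tile = (a, b) inside [0, 1]."""
    a, b = tile
    width = 2 * (b - a)
    left = (a // width) * width  # left endpoint of the enclosing interval
    return (left, left + width)

def with_ancestors(tiling):
    """t(T): the tiling together with all ancestors of its tiles."""
    tiles = set()
    for tile in tiling:
        # Walk upwards until we meet a tile that was already collected.
        while tile not in tiles:
            tiles.add(tile)
            if tile == (0, 1):
                break
            tile = parent(tile)
    return tiles

# A locally refined tiling and a uniform one (both illustrative choices).
graded = [(F(0), F(1, 8)), (F(1, 8), F(1, 4)), (F(1, 4), F(1, 2)), (F(1, 2), F(1))]
uniform = [(F(j, 8), F(j + 1, 8)) for j in range(8)]

graded_count = len(with_ancestors(graded))
uniform_count = len(with_ancestors(uniform))
```

In higher dimensions or with other refinement rules the constant changes, but the same argument (a uniformly bounded number of children per tile) applies.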

Example 5.10

Let \(\Psi =\{\psi _\lambda :\lambda \in \vee \}\) be the collection of Haar wavelets on \(\Omega =(0,1)\), i.e., the union of the function \(\psi _{0,0} \equiv 1\), and, for \(\ell \in \mathbb {N}\) and \(k=0,\ldots ,2^{\ell -1}-1\), the functions \(\psi _{\ell ,k}:=2^{\frac{\ell -1}{2}}\psi (2^{\ell -1}\cdot -k)\), where \(\psi \equiv 1\) on \([0,\frac{1}{2}]\) and \(\psi \equiv -1\) on \((\frac{1}{2},1]\). Writing \(\lambda =(\ell ,k)\), we set \(|\lambda |=\ell \). The parent of \(\lambda \) with \(|\lambda |>0\) is \(\mu \) with \(|\mu |=|\lambda |-1\) and \(\mathrm{supp}\,\psi _\lambda \subset \mathrm{supp}\,\psi _\mu \), and \(\mathcal {S}(\psi _\lambda ):=\mathrm{supp}\,\psi _\lambda \).

Let \(\mathcal {O}_{\Omega }\) be the union, for \(\ell \in \mathbb {N}_0\) and \(k=0,\ldots ,2^\ell -1\), of the intervals \(2^{-\ell }[k,k+1]\) to which we assign the level \(\ell \).

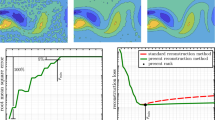

Now as an example let \(\Lambda \subset \vee \) be the set \(\{(0,0),(1,0),(2,0),(3,0)\}\), which is a tree in the sense of Definition 5.4. It corresponds to the solid parts in the left picture in Fig. 1.

The (minimal) tiling \({\mathcal {T}}(\Lambda )\) as defined in Proposition 5.6 is given by \(\{[0,\frac{1}{8}],[\frac{1}{8},\frac{1}{4}], [\frac{1}{4},\frac{1}{2}], [\frac{1}{2},1]\}\).
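This tiling can be reproduced with a few lines of code. The sketch below is an illustration only and rests on an assumption: "minimal" is read as the coarsest dyadic tiling of \([0,1]\) on which every Haar wavelet with index in \(\Lambda \) is constant, obtained by bisecting a tile as long as some wavelet has a jump in its interior. The function names are hypothetical.

```python
from fractions import Fraction as F

def breakpoints(level, k):
    """Points where the Haar wavelet psi_{level,k} may jump."""
    if level == 0:
        return [F(0), F(1)]          # psi_{0,0} is constant on (0, 1)
    h = F(1, 2 ** (level - 1))       # length of the support
    a = k * h
    return [a, a + h / 2, a + h]

def is_constant_on(level, k, a, b):
    """True if psi_{level,k} has no jump strictly inside (a, b)."""
    return not any(a < p < b for p in breakpoints(level, k))

def minimal_tiling(tree, a=F(0), b=F(1)):
    """Coarsest dyadic tiling of [a, b] on which all wavelets in `tree` are constant."""
    if all(is_constant_on(level, k, a, b) for level, k in tree):
        return [(a, b)]
    mid = (a + b) / 2
    return minimal_tiling(tree, a, mid) + minimal_tiling(tree, mid, b)

tree = [(0, 0), (1, 0), (2, 0), (3, 0)]
tiling = minimal_tiling(tree)
```

For the tree above, `tiling` consists of the four intervals listed in the example, ordered from left to right.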

Conversely, taking \({\mathcal {T}}:={\mathcal {T}}(\Lambda )\), the set \(\Lambda (\mathcal {T},1) \subset \vee \) as defined in Definition 5.8 is given by \(\{(0,0),(1,0),(2,0),(2,1),(3,0),(3,1), (4,0), (4,1)\}\) and is illustrated in the right picture in Fig. 1.
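The index set stated above can be recomputed as follows. This sketch relies on an assumption about the selection rule of Definition 5.8 that should be checked against the definition itself: an index \(\lambda \) is taken when \(\mathcal {S}(\psi _\lambda )\) overlaps, in a set of positive measure, some tile of \(t({\mathcal {T}})\) of level \(\max (|\lambda |-k,0)\). Under this assumed rule the code returns exactly the eight indices listed in the example; all function names are hypothetical.

```python
from fractions import Fraction as F

def level_of(tile):
    """Level l of a dyadic interval of length 2**(-l)."""
    a, b = tile
    return (1 / (b - a)).numerator.bit_length() - 1

def with_ancestors(tiling):
    """t(T): the tiling together with all ancestors of its tiles."""
    tiles = set()
    for a, b in tiling:
        while (a, b) not in tiles:
            tiles.add((a, b))
            if (a, b) == (0, 1):
                break
            width = 2 * (b - a)
            a = (a // width) * width
            b = a + width
    return tiles

def support(level, k):
    """Support of the Haar wavelet psi_{level,k}, used here as S(psi)."""
    if level == 0:
        return (F(0), F(1))
    h = F(1, 2 ** (level - 1))
    return (k * h, (k + 1) * h)

def tree_from_tiling(tiling, k):
    """Indices selected by the ASSUMED rule described in the lead-in."""
    tiles = with_ancestors(tiling)
    deepest = max(level_of(t) for t in tiles)
    tree = []
    for level in range(deepest + k + 1):  # deeper levels select nothing
        target = max(level - k, 0)
        region = [t for t in tiles if level_of(t) == target]
        count = 1 if level == 0 else 2 ** (level - 1)
        for j in range(count):
            s0, s1 = support(level, j)
            # Positive-measure overlap of the support with a tile of the region.
            if any(max(s0, a) < min(s1, b) for a, b in region):
                tree.append((level, j))
    return tree

tiling = [(F(0), F(1, 8)), (F(1, 8), F(1, 4)), (F(1, 4), F(1, 2)), (F(1, 2), F(1))]
selected = tree_from_tiling(tiling, 1)
```

Note that touching at a single point does not count as overlap here; with that convention \((3,2)\) and \((4,2)\) are excluded, in agreement with the example.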

Definition 5.11

Recalling from (4.1) and the first lines of this subsection that the Riesz basis \(\Psi \) for \(\mathscr {U}\times \mathscr {T}\) is of canonical form

with index set \(\vee :=\vee _{\mathscr {U}} \cup \vee _{\mathscr {T}_1} \cup \cdots \cup \vee _{\mathscr {T}_n}\), we call \(\Lambda \subset \vee \) admissible when each of \(\Lambda \cap \vee _{\mathscr {U}},\Lambda \cap \vee _{\mathscr {T}_1},\,\ldots ,\Lambda \cap \vee _{\mathscr {T}_n}\) is a tree. The tiling \({\mathcal {T}}(\Lambda )\) is defined as the smallest common refinement \({\mathcal {T}}(\Lambda \cap \vee _{\mathscr {U}}) \oplus {\mathcal {T}}(\Lambda \cap \vee _{\mathscr {T}_1})\oplus \cdots \oplus {\mathcal {T}}(\Lambda \cap \vee _{\mathscr {T}_n})\). Conversely, given a tiling \({\mathcal {T}} \subset \mathcal {O}_\Omega \) and a \(k \in \mathbb {N}_0\), we define the admissible set \(\Lambda ({\mathcal {T}},k) \subset \vee \) by \(\Lambda ^\mathscr {U}({\mathcal {T}},k)\cup \Lambda ^{\mathscr {T}_1}({\mathcal {T}},k) \cup \cdots \cup \Lambda ^{\mathscr {T}_n}({\mathcal {T}},k)\).

Remark 5.12

Let \(\Psi ^{\mathscr {U}}\) be a wavelet basis for \(\mathscr {U}\) of order \(d_\mathscr {U}>1\) (i.e., all wavelets \(\psi _\lambda ^{\mathscr {U}}\) up to level \(\ell \) span all piecewise polynomials in \(\mathscr {U}\) of degree \(d_\mathscr {U}-1\) w.r.t. \(\{\omega :\omega \in \mathcal {O}_\Omega ,\,|\omega |=\ell \}\)), and similarly, for \(1 \le q \le n\), let \(\Psi ^{\mathscr {T}_q}\) be a wavelet basis for \(\mathscr {T}_q\) of order \(d_\mathscr {T}>0\). Recalling the definition of an approximation class given in (3.1), a sufficiently smooth solution \((u,\vec {\theta })\) is in \(\mathcal {A}^s\) for \(s=s_{\max }:=\min (\frac{d_\mathscr {U}-1}{n},\frac{d_\mathscr {T}}{n})\), whereas membership of \(\mathcal {A}^s\) for \(s>s_{\max }\) cannot be expected under any smoothness condition.

For \(s \le s_{\max }\), a sufficient and ‘nearly’ necessary condition for \((u,\vec {\theta })\in \mathcal {A}^s\) is that \((u,\vec {\theta }) \in B^{s n+1}_{p,\tau }(\Omega ) \times B^{s n}_{p,\tau }(\Omega )^n\) for \(\frac{1}{p}<s+\frac{1}{2}\) and arbitrary \(\tau >0\), see [11]. This mild smoothness condition in the ‘Besov scale’ has to be compared to the condition \((u,\vec {\theta }) \in H^{s n+1}(\Omega ) \times H^{s n}(\Omega )^n\) that is necessary to obtain a rate s with approximation from the spaces of type \({{\mathrm{span}}}\{\psi ^\mathscr {U}_\lambda :|\lambda |\le L\} \times \prod _{q=1}^n {{\mathrm{span}}}\{\psi ^{\mathscr {T}_q}_\lambda :|\lambda |\le L\}\).
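As a worked instance of these formulas, with parameter values chosen purely for illustration (they are not taken from the text), consider \(n=2\), \(d_\mathscr {U}=3\) and \(d_\mathscr {T}=2\). Then

$$\begin{aligned} s_{\max }=\min \left( \tfrac{3-1}{2},\tfrac{2}{2}\right) =1, \end{aligned}$$

so that for \(s=s_{\max }\) the Besov condition reads \((u,\vec {\theta }) \in B^{3}_{p,\tau }(\Omega ) \times B^{2}_{p,\tau }(\Omega )^2\) with \(\frac{1}{p}<\frac{3}{2}\), whereas the same rate with the non-adaptive spaces would require \((u,\vec {\theta }) \in H^{3}(\Omega ) \times H^{2}(\Omega )^2\).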

We pause to add three more assumptions on our PDE: We assume that w.r.t. the coarsest possible tiling \(\{\omega :\omega \in \mathcal {O}_\Omega ,\,|\omega |=0\}\) of \(\bar{\Omega }\),

Remark 5.13

The subsequent analysis can easily be generalized to A being piecewise smooth. With some more effort, other nonlinear terms N can be handled as well. For example, for N(u) of the form \(n_1(u) u\), it will be needed that for some \(m \in \mathbb {N}\), for each \(\omega \in \mathcal {O}_\Omega \), there exists a subspace \(V_\omega \subset H^1_0(\omega )\) with \(\Vert \cdot \Vert _{H^1(\omega )} \lesssim 2^{|\omega |} \Vert \cdot \Vert _{L_2(\omega )}\) on \(V_\omega \), and

(cf. proof of Lemma A.2).

Finally in this subsection we formulate our assumptions on the wavelet Riesz basis \(\Psi ^{\mathscr {V}_2}=\{\psi ^{\mathscr {V}_2}_\lambda :\lambda \in \vee _{\mathscr {V}_2}\}\) for \(H^{-\frac{1}{2}}(\Gamma _D)\). We assume that it satisfies the assumptions (\(w_{2}\)) and (\(w_{3}\)) with \(\mathcal {O}_\Omega \) reading as

Furthermore, we impose that

which, for biorthogonal wavelets, is essentially (\(w_{4}\)) (cf. [20, lines following (A.2)]). (To relax the smoothness conditions on \(\Gamma _D\) needed for the definition of \(H^{-1}(\Gamma _D)\), one can replace (5.8) by \(\Vert \psi ^{\mathscr {V}_2}_\lambda \Vert _{H^s(\Gamma _D)} \lesssim 2^{|\lambda |(s+\frac{1}{2})}\) for some \(s \in [-1,-\frac{1}{2})\).)

The definition of a boundary tiling \({\mathcal {T}}_{\Gamma _D} \subset \mathcal {O}_{\Gamma _D}\) is similar to Definition 5.3. Also similar to the corresponding preceding definitions are those of a tree \(\Lambda \subset \vee _{\mathscr {V}_2}\), and of the boundary tiling \({\mathcal {T}}_{\Gamma _D}(\Lambda ) \subset \mathcal {O}_{\Gamma _D}\) for \(\Lambda \subset \vee _{\mathscr {V}_2}\) being a tree. Conversely, for a boundary tiling \({\mathcal {T}}_{\Gamma _D}\subset \mathcal {O}_{\Gamma _D}\) and \(k \in \mathbb {N}_0\), for \(*\in \{\mathscr {U},\mathscr {V}_2\}\) we define the tree

5.3 An appropriate approximate residual evaluation

Given an admissible \(\Lambda \subset \vee \), \([{\tilde{\mathbf{u}}}^\top ,\varvec{\tilde{\theta }}^\top ]^\top \in \ell _2(\Lambda )\) with \((\tilde{u},\vec {\tilde{\theta }}):=[{\tilde{\mathbf{u}}}^\top ,\varvec{\tilde{\theta }}^\top ] \Psi \) sufficiently close to \((u,\vec {\theta })\), and an \(\varepsilon >0\), our approximate evaluation of \(D\mathbf{Q}([{\tilde{\mathbf{u}}}^\top ,\varvec{\tilde{\theta }}^\top ]^\top )\), given in (5.4), is built in the following steps, where \(k \in \mathbb {N}_0\) is a sufficiently large constant:

(s1) Find a tiling \({\mathcal {T}}(\varepsilon ) \subset \mathcal {O}_\Omega \), such that

$$\begin{aligned}&\inf _{(g_\varepsilon ,f_\varepsilon ,\vec {h}_\varepsilon ) \in \mathcal {P}_m({\mathcal {T}}(\varepsilon )\cap \Gamma _D) \cap C(\Gamma _D) \times \mathcal {P}_m({\mathcal {T}}(\varepsilon )) \times \mathcal {P}_m({\mathcal {T}}(\varepsilon ))^n} \left( \Vert g-g_\varepsilon \Vert _{H^{\frac{1}{2}}(\Gamma _D)} \right. \\&\left. \quad +\,\Vert v_1 \mapsto \int _\Omega (f-f_\varepsilon ) v_1\,dx+\int _{\Gamma _N}(h-\vec {h}_\varepsilon \cdot \mathbf{n}) v_1 \,ds\Vert _{\mathscr {V}_1'}\right) \le \varepsilon . \end{aligned}$$

Set \({\mathcal {T}}(\Lambda ,\varepsilon ):={\mathcal {T}}(\Lambda ) \oplus {\mathcal {T}}(\varepsilon )\).

(s2)

(a) Approximate

$$\begin{aligned} \mathbf{r}^{(\frac{1}{2})}_1:=\langle \Psi ^{\mathscr {V}_1}, N(\tilde{u})-f- {{\mathrm{div}}}\vec {\tilde{\theta }}\rangle _{L_2(\Omega )}+\langle \Psi ^{\mathscr {V}_1}, \vec {\tilde{\theta }}\cdot \mathbf{n}-h\rangle _{L_2(\Gamma _N)} \end{aligned}$$

by

$$\begin{aligned} {\tilde{\mathbf{r}}}^{\left( \frac{1}{2}\right) }_1:=\mathbf{r}^{\left( \frac{1}{2}\right) }_1|_{\Lambda ^{\mathscr {V}_1}({\mathcal {T}}(\Lambda ,\varepsilon ),k)}. \end{aligned}$$

(b) With \(\tilde{r}_1^{(\frac{1}{2})}:=({\tilde{\mathbf{r}}}^{(\frac{1}{2})}_1)^\top \Psi ^{\mathscr {V}_1}\), approximate

$$\begin{aligned} \mathbf{r}_1=\left[ \begin{array}{@{}l@{}} \mathbf{r}_{11} \\ \mathbf{r}_{12}\end{array} \right] :=\left[ \begin{array}{@{}l@{}} \langle DN(\tilde{u}) \Psi ^{\mathscr {U}}, \tilde{r}_1^{(\frac{1}{2})} \rangle _{L_2(\Omega )}\\ \langle \Psi ^{\mathscr {T}},\nabla \tilde{r}_1^{(\frac{1}{2})} \rangle _{L_2(\Omega )^n} \end{array} \right] \end{aligned}$$

by \({\tilde{\mathbf{r}}}_1= \left[ \begin{array}{@{}l@{}} {\tilde{\mathbf{r}}}_{11} \\ {\tilde{\mathbf{r}}}_{12}\end{array} \right] := \mathbf{r}_1|_{\Lambda ({\mathcal {T}}(\Lambda ^{\mathscr {V}_1}({\mathcal {T}}(\Lambda ,\varepsilon ),k)),k)}\).

(s3) Approximate

$$\begin{aligned} \mathbf{r}_2:=\left\langle \left[ \begin{array}{@{}l@{}} -\,A \nabla \Psi ^{\mathscr {U}}\\ \Psi ^{\mathscr {T}} \end{array} \right] ,\vec {\tilde{\theta }}-A \nabla \tilde{u} \right\rangle _{L_2(\Omega )^n} \end{aligned}$$

by \({\tilde{\mathbf{r}}}_2:=\mathbf{r}_2|_{\Lambda ({\mathcal {T}}(\Lambda ),k)}\).

(s4)

(a) Approximate \(\mathbf{r}^{(\frac{1}{2})}_3:=\langle \Psi ^{\mathscr {V}_2}, \tilde{u}-g\rangle _{L_2(\Gamma _D)}\) by

$$\begin{aligned} {\tilde{\mathbf{r}}}^{\left( \frac{1}{2}\right) }_3:=\mathbf{r}^{\left( \frac{1}{2}\right) }_3|_{\Lambda ^{\mathscr {V}_2}({\mathcal {T}}(\Lambda ,\varepsilon )\cap \Gamma _D,k)}. \end{aligned}$$

(b) With \(\tilde{r}_3^{\left( \frac{1}{2}\right) }:=({\tilde{\mathbf{r}}}^{(\frac{1}{2})}_3)^\top \Psi ^{\mathscr {V}_2}\), approximate \(\mathbf{r}_3:=\left[ \begin{array}{@{}l@{}} \langle \Psi ^\mathscr {U},\tilde{r}_3^{(\frac{1}{2})}\rangle _{L_2(\Gamma _D)}\\ 0_{\vee _\mathscr {T}} \end{array}\right] \) by

$$\begin{aligned} {\tilde{\mathbf{r}}}_3:=\mathbf{r}_3|_{\Lambda ({\mathcal {T}}_{\Gamma _D}(\Lambda ^{\mathscr {V}_2}({\mathcal {T}}(\Lambda ,\varepsilon )\cap \Gamma _D,k)),k)}. \end{aligned}$$

Although (s2)–(s4) may look involved at first glance, the same kind of approximation is used at all instances. Each term in (5.4) consists essentially of a wavelet basis that is integrated against a piecewise polynomial, or more precisely, a function that can be sufficiently accurately approximated by a piecewise polynomial thanks to the control of the data oscillation by the refinement of the partition performed in (s1). In each of these terms, all wavelets are neglected whose levels exceed locally the level of the partition plus a constant k.
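The truncation principle can be made concrete in a deliberately simplified setting. The sketch below is a toy illustration under assumptions that differ from the setting of (s2)–(s4): the wavelets are the Haar wavelets of Example 5.10, the inner product is the plain \(L_2(0,1)\) one, and \(g\) is a piecewise constant on the tiling of that example with arbitrarily chosen values. Since Haar wavelets have one vanishing moment, every wavelet whose support lies inside a single tile yields a zero coefficient, so here nothing is lost by dropping the wavelets on locally finer levels. In the dual-norm setting of the text the neglected part is only small, not zero, which is why the constant k enters.

```python
from fractions import Fraction as F

# Tiling from Example 5.10 and illustrative (hypothetical) values of g on it.
TILES = [(F(0), F(1, 8)), (F(1, 8), F(1, 4)), (F(1, 4), F(1, 2)), (F(1, 2), F(1))]
VALUES = [F(1), F(2), F(3), F(4)]

def integral_g(a, b):
    """Exact integral of the piecewise constant g over [a, b]."""
    return sum(v * max(F(0), min(b, t1) - max(a, t0))
               for (t0, t1), v in zip(TILES, VALUES))

def haar_coefficient(level, k):
    """<psi_{level,k}, g> up to the positive normalisation factor."""
    if level == 0:
        return integral_g(F(0), F(1))
    h = F(1, 2 ** (level - 1))
    a = k * h
    mid = a + h / 2
    return integral_g(a, mid) - integral_g(mid, a + h)

# Scan a few more levels than the tiling has, to see that the tail vanishes.
nonzero = [(level, k)
           for level in range(7)
           for k in range(1 if level == 0 else 2 ** (level - 1))
           if haar_coefficient(level, k) != 0]
```

With these values, the only nonzero coefficients belong to \((0,0),(1,0),(2,0),(3,0)\), all of which lie in the set \(\Lambda (\mathcal {T},1)\) given in Example 5.10.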

In the next theorem it is shown that this approximate residual evaluation satisfies the condition for optimality of the adaptive wavelet Galerkin method.

Theorem 5.14

For an admissible \(\Lambda \subset \vee \), \([{\tilde{\mathbf{u}}}^\top ,\varvec{\tilde{\theta }}^\top ]^\top \in \ell _2(\Lambda )\) with \((\tilde{u},\vec {\tilde{\theta }}):=[{\tilde{\mathbf{u}}}^\top ,\varvec{\tilde{\theta }}^\top ] \Psi \) sufficiently close to \((u,\vec {\theta })\), and an \(\varepsilon >0\), consider the steps (s1)–(s4). With \(s>0\) such that \([\mathbf{u}^\top ,\varvec{\theta }^\top ]^\top \in \mathcal {A}^s\), let \({\mathcal {T}}(\varepsilon )\) from (s1) satisfy \(\# {\mathcal {T}}(\varepsilon ) \lesssim \varepsilon ^{-1/s}\). Then

where the computation of \({\tilde{\mathbf{r}}}_1+{\tilde{\mathbf{r}}}_2+{\tilde{\mathbf{r}}}_3\) requires \({\mathcal {O}}(\# \Lambda +\varepsilon ^{-1/s})\) operations. So by taking k sufficiently large, Condition 3.5* is satisfied.

Before giving the proof of this theorem, let us discuss matters related to step (s1). First of all, existence of tilings \({\mathcal {T}}(\varepsilon )\) as mentioned in the theorem is guaranteed. Indeed, because of \([\mathbf{u}^\top ,\varvec{\theta }^\top ]^\top \in \mathcal {A}^s\), given \(C>0\), for any \(\varepsilon >0\) there exists a \([{\tilde{\mathbf{u}}}_\varepsilon ^\top ,\varvec{\tilde{\theta }}_\varepsilon ^\top ]^\top \in \ell _2(\Lambda _\varepsilon )\), where \(\Lambda _\varepsilon \) is admissible and \(\#\Lambda _\varepsilon \lesssim \varepsilon ^{-1/s}\), such that \(\Vert [\mathbf{u}^\top ,\varvec{\theta }^\top ]^\top -[{\tilde{\mathbf{u}}}_\varepsilon ^\top ,\varvec{\tilde{\theta }}_\varepsilon ^\top ]^\top \Vert \le \varepsilon /C\). By taking a suitable C, from Lemma 2.6, (5.2) and (5.3) we infer that with \((\tilde{u}_\varepsilon ,\vec {\tilde{\theta }}_\varepsilon ):=[{\tilde{\mathbf{u}}}_\varepsilon ^\top ,\varvec{\tilde{\theta }}_\varepsilon ^\top ] \Psi \),