Abstract

The effective large-scale properties of materials with random heterogeneities on a small scale are typically determined by the method of representative volumes: a sample of the random material is chosen—the representative volume—and its effective properties are computed by the cell formula. Intuitively, for a fixed sample size it should be possible to increase the accuracy of the method by choosing a material sample which captures the statistical properties of the material particularly well; for example, for a composite material consisting of two constituents, one would select a representative volume in which the volume fraction of the constituents matches closely with their volume fraction in the overall material. Inspired by similar attempts in materials science, Le Bris, Legoll and Minvielle have designed a selection approach for representative volumes which performs remarkably well in numerical examples of linear materials with moderate contrast. In the present work, we provide a rigorous analysis of this selection approach for representative volumes in the context of stochastic homogenization of linear elliptic equations. In particular, we prove that the method essentially never performs worse than a random selection of the material sample and may perform much better if the selection criterion for the material samples is chosen suitably.

1 Introduction

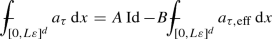

The most widely employed method for determining the effective large-scale properties of a material with random heterogeneities on a small scale is the method of representative volumes. It proceeds by taking a small sample of the material, a “representative volume element” (RVE), and determining the properties of the sample by the cell formula. The criteria for the choice of the representative volume have been the subject of an ongoing debate; while in principle increasing the size of the material sample increases the accuracy of the approximation of the material properties, this comes at a correspondingly larger computational cost. It has been conjectured that for a fixed size of the material sample, selecting a material sample which captures certain statistical properties of the material in a particularly good way may be beneficial; for example, for a composite material consisting of two constituent materials, one would try to select a material sample for which the volume fraction of each constituent material within the sample matches the overall volume fraction of this constituent in the composite as closely as possible (see Fig. 1). Alternatively, for linear materials one might try to match the averaged material coefficient in the sample with the average taken over the full material. There have been efforts in materials science and mechanics towards replicating further statistical properties of the material in a representative volume, an approach called “special quasirandom structures” [82, 83, 86] or “statistically similar representative volume elements” [15,16,17,18, 28, 81]. A particularly successful approach in this direction has been developed for linear materials by Le Bris et al. [64]; their method proceeds by considering a large number of material samples, evaluating one or more cheaply computable statistical quantities of the samples (for example, the spatial average of the coefficient), and then choosing as the representative volume the sample that is most representative of the material as measured by these quantities. In the present work, in the context of stochastic homogenization of linear elliptic PDEs, we provide the first rigorous justification of these approaches (see Footnote 1).

Fig. 1. Among the six depicted material samples, the method of Le Bris, Legoll, and Minvielle in its simplest realization would choose either the first sample or the fifth sample as the representative volume element and discard the others, as the volume fraction of the inclusions in the first and the fifth sample is closest to the overall material average. Note that in the depicted material samples the volume fraction of the inclusions is proportional to the number of inclusions, as all inclusions are of equal size. For a better illustration of the method, both the size and the number of the depicted samples have been chosen much smaller than in actual computations.

For materials with random heterogeneities on small scales, the approximation of the effective material coefficient by the method of representative volumes is a random quantity itself, as the outcome depends on the sample of the material. In the setting of linear elliptic PDEs with random coefficient fields (which corresponds to the setting of heat conduction, electrical currents, or electrostatics in a material with random microstructure), Gloria and Otto [48, 53, 54] have investigated the structure of the error of the approximation of the effective material coefficient by the method of representative volumes: the leading-order contribution to the error (with respect to the size of the RVE) consists of random fluctuations; in expectation the approximation of effective coefficients by the method of representative volumes is accurate to higher order, that is, the systematic error of the RVE method is of higher order (see Footnote 2). For a given size of the RVE, which corresponds to a fixed computational effort, the accuracy of the RVE method may therefore be increased significantly by reducing the variance of the approximations of the effective coefficient. It is precisely such a reduction of the variance by which the selection approach for representative volumes of Le Bris et al. [64] achieves its gain in accuracy.

For linear elliptic PDEs with random coefficients and moderate ellipticity contrast, the reduction of the variance by the ansatz of Le Bris et al. [64] is particularly remarkable; by selecting the representative volume according to the criterion that the averaged coefficient in the RVE should be particularly close to the averaged coefficient in the overall material, in numerical examples with ellipticity contrast \(\sim 5\) they observed a variance reduction by a factor of \(\sim 10\). Going beyond this simple selection criterion, they devised a criterion based on an expansion of the effective coefficient in the regime of small ellipticity contrast, which numerically achieves a remarkable variance reduction factor of \(\sim 60\) even for a moderate ellipticity contrast \(\sim 5\). Note that this basically corresponds to the gain of about one order of magnitude in accuracy for a negligible additional computational cost and implementation effort.

However, the analysis of the selection approach for representative volumes has been restricted to the one-dimensional setting [64], in which the homogenization of linear elliptic PDEs is linear in the inverse coefficient and therefore independent of the geometry of the material. Besides the highly nonlinear dependence of the effective coefficient on the heterogeneous coefficient field in dimensions \(d\geqq 2\), one of the main challenges in the analysis of the selection method for representative volumes is the fact that it is only expected to increase the accuracy by a (though often very large) constant factor, at least for a fixed set of statistical quantities by which the selection is performed. At the same time, the available error estimates for the representative volume element method in stochastic homogenization are only optimal up to constant factors. For this reason, the analysis of the selection approach for representative volumes necessitates a fine-grained analysis of the structure of fluctuations in stochastic homogenization.

1.1 Stochastic Homogenization of Linear Elliptic PDEs: A Brief Outline

The subject of the present contribution is the rigorous justification of the selection method for representative volumes by Le Bris et al. [64] in the context of linear elliptic equations

$$\begin{aligned} -\nabla \cdot (a\nabla u)=f \end{aligned}\tag{1}$$

with random coefficient fields a on \(\mathbb {R}^d\) for arbitrary spatial dimension d. Note that this setting describes, for example, heat conduction or electrostatics in a random material. Our assumptions on the probability distribution of the coefficient field a are standard in the theory of stochastic homogenization; we assume just uniform ellipticity and boundedness, stationarity, and finite range of dependence (see conditions (A1)–(A3) below). In particular, our analysis includes the case of a two-material composite with random non-overlapping inclusions as depicted in Fig. 1.

The theory of stochastic homogenization of linear elliptic PDEs predicts that for coefficient fields with only short-range correlations on a scale \(\varepsilon \ll 1\) the solution u to the equation with random coefficient field (1) may be approximated by the solution \(u_{\mathsf {hom}}\) of an effective equation of the form

$$\begin{aligned} -\nabla \cdot (a_{\mathsf {hom}}\nabla u_{\mathsf {hom}})=f \end{aligned}\tag{2}$$

where \(a_{\mathsf {hom}}\in \mathbb {R}^{d\times d}\) is a constant effective coefficient which describes the effective behavior of the material. In this context of linear materials, the method of representative volumes is employed to compute the effective coefficient \(a_{\mathsf {hom}}\).

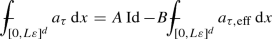

Let us describe the method of representative volumes for the approximation of the effective material coefficient \(a_{\mathsf {hom}}\) in more detail. It proceeds by choosing a sample of the material, say, a cube with side length \(L\varepsilon \) for some \(L\gg 1\), uniformly at random. Roughly speaking (for the moment passing silently over the question of boundary conditions), by solving the equation for the homogenization corrector \(\phi _i\) associated with the i-th coordinate direction on the representative volume

$$\begin{aligned} -\nabla \cdot (a(e_i+\nabla \phi _i))=0 \end{aligned}\tag{3}$$

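(\(e_i\in \mathbb {R}^d\) denoting the i-th vector of the standard basis) one may obtain an approximation \(a^{{\text {RVE}}}\) for the effective coefficient \(a_{\mathsf {hom}}\) in terms of the averaged fluxes

$$\begin{aligned} a^{{\text {RVE}}}e_i:=\frac{1}{(L\varepsilon )^d}\int _{[0,L\varepsilon ]^d} a(e_i+\nabla \phi _i)\,dx. \end{aligned}\tag{4}$$
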
This expression is also known in homogenization as the cell formula. As already mentioned, the approximation \(a^{{{\text {RVE}}}}\) for the effective material coefficient \(a_{\mathsf {hom}}\) is a random variable itself, as it depends on the realization of the random coefficient field a on the sample volume \([0,L\varepsilon ]^d\). It has been proven by Gloria and Otto [54, 55] and also observed in numerical computations that the main contribution to the error of the RVE method is caused by the random fluctuations of the approximation \(a^{{\text {RVE}}}\), while the systematic error is of higher order: For spatial dimensions \(d\geqq 1\) one has

$$\begin{aligned} \mathbb {E}\big [|a^{{\text {RVE}}}-a_{\mathsf {hom}}|^2\big ]^{1/2}\lesssim L^{-d/2} \end{aligned}\tag{5}$$

but

$$\begin{aligned} \big |\mathbb {E}\big [a^{{\text {RVE}}}\big ]-a_{\mathsf {hom}}\big |\lesssim L^{-d}|\log L|^d. \end{aligned}\tag{6}$$

As a consequence, a reduction of the fluctuations of the approximations \(a^{{\text {RVE}}}\) would lead to an increase in accuracy of the approximation for the effective coefficient \(a_{\mathsf {hom}}\). It has been observed numerically by Le Bris et al. [64], and shall be proven below rigorously, that the selection approach for representative volumes achieves its gain in accuracy precisely by reducing the fluctuations of the approximations for the effective coefficients.

1.2 Informal Summary of Our Main Results

In the present work, we prove that in the setting of stochastic homogenization of linear elliptic equations the selection approach for representative volumes by Le Bris et al. [64]

- essentially never performs worse than a completely random selection of the representative volume element, but may perform much better for suitable selection criteria,
- basically maintains the order of the systematic error of the approximation for the effective coefficient, and
- also reduces the error in the approximation for the effective coefficient that may occur with a given low probability, that is, it also reduces the “outliers” of the approximation for the effective coefficient.

As mentioned before, in the setting of linear elliptic PDEs the method of representative volumes is employed to obtain an approximation \(a^{{\text {RVE}}}\) for the effective (homogenized) coefficient \(a_{\mathsf {hom}}\). The role of “material samples” is assumed by realizations of the random coefficient field \(a:[0,L\varepsilon ]^d\rightarrow \mathbb {R}^{d\times d}\), on which the computation of the approximations \(a^{{\text {RVE}}}\) is based.

The selection approach for representative volumes proposed in [64] then proceeds as follows: at first, one or more statistical quantities \(\mathcal {F}\) are chosen which assign a real number \(\mathcal {F}(a)\in \mathbb {R}\) to any realization \(a:[0,L\varepsilon ]^d \rightarrow \mathbb {R}^{d\times d}\). Note that the simplest statistical quantity proposed in [64] is the spatial average \(\frac{1}{(L\varepsilon )^d}\int _{[0,L\varepsilon ]^d} a\,dx\). Next, one considers a sequence of independent samples of the random coefficient field until a sample meets the selection criterion

$$\begin{aligned} \big |\mathcal {F}(a)-\mathbb {E}[\mathcal {F}(a)]\big |\leqq \delta \sqrt{{{\text {Var}}~}\mathcal {F}(a)} \end{aligned}\tag{7}$$

for some chosen parameter \(\delta \) with \(CL^{-d/2} |\log L|^C \leqq \delta \leqq 1\). Finally, the approximation for the effective coefficient is computed by solving the equation for the homogenization corrector (3) and using the cell formula (4) for this sample of the random coefficient field.
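The selection procedure just described can be illustrated by a small numerical sketch. This is a toy model, not the computation of [64]: it assumes a one-dimensional coefficient field made of L iid cells with values uniform in [1, 5], for which the cell formula reduces to the harmonic mean, and it uses the exact mean and variance of the spatial average for this toy distribution. All function names and parameter values are hypothetical.

```python
import random
import statistics

def sample_coefficient(L, rng, low=1.0, high=5.0):
    """Toy 1D material sample (assumption): L iid cells, uniform in [low, high]."""
    return [rng.uniform(low, high) for _ in range(L)]

def cell_formula_1d(a):
    """In one dimension the cell formula reduces to the harmonic mean."""
    return len(a) / sum(1.0 / v for v in a)

def spatial_average(a):
    """Statistical quantity F(a): the spatial average of the coefficient."""
    return sum(a) / len(a)

def select_sample(L, delta, mean_F, std_F, rng):
    """Draw independent samples until |F(a) - E[F]| <= delta * sqrt(Var F)."""
    while True:
        a = sample_coefficient(L, rng)
        if abs(spatial_average(a) - mean_F) <= delta * std_F:
            return a

def compare(L=64, delta=0.25, trials=2000, seed=0, low=1.0, high=5.0):
    """Return (variance without selection, variance with selection)."""
    rng = random.Random(seed)
    # Exact moments of F for iid uniform cells (valid for this toy model only).
    mean_F = 0.5 * (low + high)
    std_F = (high - low) / (12.0 * L) ** 0.5
    plain = [cell_formula_1d(sample_coefficient(L, rng)) for _ in range(trials)]
    selected = [
        cell_formula_1d(select_sample(L, delta, mean_F, std_F, rng))
        for _ in range(trials)
    ]
    return statistics.pvariance(plain), statistics.pvariance(selected)

if __name__ == "__main__":
    var_plain, var_sel = compare()
    print("variance without selection:", var_plain)
    print("variance with selection:   ", var_sel)
```

In this toy model the harmonic and arithmetic means are strongly correlated, so the selected samples should show a clearly smaller variance; in higher dimensions the harmonic mean would have to be replaced by an actual corrector solve.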

To give a flavor of our main result, let us formulate it informally in the case of a single statistical quantity \(\mathcal {F}(a)\). We denote the approximation for the effective coefficient by the standard representative volume element method (without selection of material samples) by \(a^{{\text {RVE}}}\) and the approximation for the effective coefficient by the selection approach for representative volumes by \(a^{{\text {sel-RVE}}}\). In this case, our main results, Theorems 2 and 3, may be summarized as follows:

- The systematic error of the approximation \(a^{{\text {sel-RVE}}}\) is essentially (up to powers of \(\log L\) and some prefactors) of the same order as the systematic error of the standard representative volume element method \(a^{{\text {RVE}}}\): We have

  $$\begin{aligned} \big |\mathbb {E}\big [a^{{\text {sel-RVE}}}\big ]-a_{\mathsf {hom}}\big | \leqq \frac{C\kappa ^{3/2}}{\delta } L^{-d} |\log L|^C. \end{aligned}$$

  The quantity \(\kappa \) will be discussed below.

-

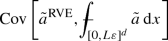

The fluctuations of the approximation \(a^{{\text {sel-RVE}}}\) are reduced by the fraction of the variance of \(a^{{\text {RVE}}}\) that is explained by \(\mathcal {F}(a)\). More precisely, we derive the estimate

$$\begin{aligned} \frac{{{\text {Var}}~}a^{{\text {sel-RVE}}}}{{{\text {Var}}~}a^{{\text {RVE}}}} \leqq&1-(1-\delta ^2)|\rho _{\mathcal {F}(a),a^{{\text {RVE}}}}|^2 +\frac{C \kappa ^{3/2} r_{{\text {Var}}}}{\delta } L^{-d/2} |\log L|^C \end{aligned}$$where \(\rho _{\mathcal {F}(a),a^{{\text {RVE}}}} \in [-1,1]\) denotes the correlation coefficient of \(\mathcal {F}(a)\) and \(a^{{\text {RVE}}}\), given by

$$\begin{aligned} \rho _{\mathcal {F}(a),a^{{\text {RVE}}}}:= \frac{{\text {Cov}}[a^{{\text {RVE}}},\mathcal {F}(a)]}{\sqrt{{{\text {Var}}~}\mathcal {F}(a) {{\text {Var}}~}a^{{\text {RVE}}}}}, \end{aligned}$$and where \(r_{{\text {Var}}}:=\frac{L^{-d}}{{{\text {Var}}~}a^{{\text {RVE}}}}\) denotes the ratio between the expected order of fluctuations of \(a^{{\text {RVE}}}\) and the actual magnitude of fluctuations. Note that the last term in the estimate on \({{\text {Var}}~}a^{{\text {sel-RVE}}}\) converges to zero as the size L of the representative volume increases.

- The probability of “outliers” is reduced by the selection method just as suggested by the variance reduction, at least in an “intermediate” region between the “bulk” and the “outer tail” of the probability distribution: One has a moderate-deviations-type estimate of the form

  $$\begin{aligned}&\mathbb {P}\Bigg [\frac{\big |a^{{\text {sel-RVE}}}_{ij}-a_{{\mathsf {hom}},ij}\big |}{\sqrt{\big (1-|\rho _{\mathcal {F}(a),a^{{\text {RVE}}}}|^2+\delta ^2\big ){{\text {Var}}~}a^{{\text {RVE}}}_{ij}+L^{-d/2-\beta }}}\geqq s\Bigg ]\\&\quad \leqq \Big (1+\frac{C\delta }{\sqrt{1-|\rho |^2} s}+\frac{C}{\delta L^\beta }\Big )\mathbb {P}\big [|\mathcal {N}_1|\geqq s\big ]+\frac{C}{\delta }\exp (-L^{2\beta }) \end{aligned}$$

  for any \(s\geqq C\max \{(1-|\rho |^2)^{1/2} \delta ^{-1},\delta (1-|\rho |^2)^{-1/2}\}\) and some \(\beta =\beta (d)>0\), where \(\mathcal {N}_1\) denotes the centered normal distribution with unit variance.

- In the above bounds, \(\kappa :=(1-|\rho _{\mathcal {F}(a),a^{{\text {RVE}}}}|^2)^{-1}\) denotes (essentially) the condition number of the covariance matrix \({{\text {Var}}~}(a^{{\text {RVE}}},\mathcal {F}(a))\). For the case that the correlation \(|\rho _{\mathcal {F}(a),a^{{\text {RVE}}}}|\) is close to one, we derive bounds which are independent of \(\kappa \) but come at the cost of a lower rate of convergence in L, namely

  $$\begin{aligned} \big |\mathbb {E}\big [a^{{\text {sel-RVE}}}\big ]-a_{\mathsf {hom}}\big | \leqq \frac{C}{\delta } L^{-d/2-d/8} |\log L|^C \end{aligned}$$

  and

  $$\begin{aligned} \frac{{{\text {Var}}~}a^{{\text {sel-RVE}}}}{{{\text {Var}}~}a^{{\text {RVE}}}} \leqq&1-(1-\delta ^2)\big |\rho _{\mathcal {F}(a),a^{{\text {RVE}}}}\big |^2 +\frac{C r_{{\text {Var}}}}{\delta } L^{-d/8} |\log L|^C. \end{aligned}$$

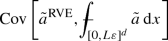

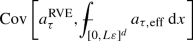

Our estimate on the variance reduction achieved by the selection approach for representative volumes is implicit in the sense that it is determined by the correlation coefficient \(\rho _{\mathcal {F}(a),a^{{\text {RVE}}}}\). In fact, the failure of the correlation coefficient \(\rho _{\mathcal {F}(a),a^{{\text {RVE}}}}\) to be nonzero also implies the failure of gaining accuracy by the selection approach for the representative volumes (see Theorem 4): In such a case of vanishing correlation, the method of Le Bris et al. [64] is not superior (but essentially also not inferior) to the standard method of choosing a representative volume randomly.

This raises the question whether such a degeneracy of the correlation coefficient can occur for “natural” choices of the statistical quantity \(\mathcal {F}(a)\). In Theorem 4, we shall prove that even for a “natural” choice like the spatial average \(\frac{1}{(L\varepsilon )^d}\int _{[0,L\varepsilon ]^d} a\,dx\) there is a priori no guarantee that there is a nonzero correlation between \(a^{{\text {RVE}}}\) and \(\mathcal {F}(a)\): We construct an example of a probability distribution of a for which the covariance of \(a^{{\text {RVE}}}\) and the average of the coefficient field \(\frac{1}{(L\varepsilon )^d}\int _{[0,L\varepsilon ]^d} a\,dx\) in fact vanishes, while the variances \({{\text {Var}}~}\frac{1}{(L\varepsilon )^d}\int _{[0,L\varepsilon ]^d} a\,dx\) and \({{\text {Var}}~}a^{{\text {RVE}}}\) are nondegenerate.

However, the failure of the variance reduction approaches to effectively reduce the variance is presumably limited to rather artificial examples: we prove that the covariance of \(a^{{\text {RVE}}}\) and the average of the coefficient field \(\frac{1}{(L\varepsilon )^d}\int _{[0,L\varepsilon ]^d} a\,dx\) is positive for coefficient fields which are obtained from iid random variables by applying a “monotone” function, see Proposition 5.

Fig. 2. For a multivariate Gaussian probability distribution, conditioning on the event of one variable being close to its expectation reduces the variance of the other variable, provided that the two random variables are nontrivially correlated. In our setting, conditioning on the event “spatial average of coefficient field is close to its expectation” reduces the variance of the random variable “approximation for the effective conductivity” \(a^{{\text {RVE}}}\), as their joint probability distribution is close to a multivariate Gaussian.

1.3 Outline of Our Strategy

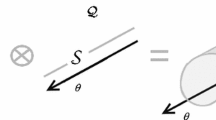

The basic idea underlying our analysis of the selection approach for representative volumes is the observation that the joint probability distribution of the approximation for the effective coefficient \(a^{{\text {RVE}}}\) and one or more statistical quantities \(\mathcal {F}(a)\) like the average of the coefficient field \(\frac{1}{(L\varepsilon )^d}\int _{[0,L\varepsilon ]^d} a\,dx\) is close to a multivariate Gaussian, up to an error of the order \(L^{-d} |\log L|^C\) in a suitable notion of distance between probability measures. The selection of representative volumes by the criterion (7), which amounts to conditioning on the event \(|\mathcal {F}(a)-\mathbb {E}[\mathcal {F}(a)]|\leqq \delta \sqrt{{{\text {Var}}~}\mathcal {F}(a)}\), then reduces the variance of the probability distribution of \(a^{{\text {RVE}}}\) by the variance explained by the statistical quantity \(\mathcal {F}(a)\), up to error terms due to the deviation of the probability distribution from a multivariate Gaussian and the imperfection of the conditioning \(\delta >0\), see Fig. 2. Note that for an ideal multivariate Gaussian distribution, the expected value of the approximation \(a^{{\text {RVE}}}\) would be left unchanged under conditioning since the criterion (7) is symmetric around \(\mathbb {E}[\mathcal {F}(a)]\), that is, the conditioning would not introduce a bias. As a consequence, for our approximate multivariate Gaussian \((a^{{\text {RVE}}},\mathcal {F}(a))\) the expectation of \(a^{{\text {RVE}}}\) is changed under conditioning only by the distance of our probability distribution to a multivariate Gaussian, which is a higher-order term. Note that both the reduction of the variance by conditioning and the estimate on the bias introduced by the conditioning rely crucially on the fact that our probability distribution is close to a multivariate Gaussian (and not another probability distribution); it is obvious from the picture in Fig. 2 that a probability distribution other than a multivariate Gaussian could introduce a large bias under conditioning and even an increase in variance. Our analysis of the selection approach for representative volumes by Le Bris et al. [64] is a first practical application of the beautiful theory of fluctuations in stochastic homogenization, which has been developed in recent years and which our work both draws ideas from and contributes to.
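The idealized Gaussian computation behind this heuristic can be written out in a few lines. The following is a sketch under simplifying assumptions and not the proof given in this work: X and Y stand for the normalized quantities \(a^{{\text {RVE}}}\) and \(\mathcal {F}(a)\), assumed here to be exactly bivariate Gaussian with zero mean, unit variance and correlation \(\rho \); the auxiliary variable Z is introduced only for this illustration.

$$\begin{aligned} X&=\rho Y+\sqrt{1-\rho ^2}\,Z,\qquad Z\sim \mathcal {N}(0,1)\text { independent of } Y,\\ \mathbb {E}\big [X\,\big |\,|Y|\leqq \delta \big ]&=\rho \,\mathbb {E}\big [Y\,\big |\,|Y|\leqq \delta \big ]=0,\\ {\text {Var}}\big [X\,\big |\,|Y|\leqq \delta \big ]&=(1-\rho ^2)+\rho ^2\,\mathbb {E}\big [Y^2\,\big |\,|Y|\leqq \delta \big ]\leqq 1-(1-\delta ^2)\rho ^2. \end{aligned}$$

The second line uses the symmetry of the conditioning event, and the last bound uses \(Y^2\leqq \delta ^2\) on that event. The right-hand side agrees with the leading term of the variance estimate stated in the informal summary; the remaining terms there account for the deviation from an exact Gaussian.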

The underlying reason for the convergence of the joint probability distribution of \(a^{{\text {RVE}}}\) and one or more functionals \(\mathcal {F}(a)\) towards a multivariate Gaussian is a central limit theorem for suitable collections of vector-valued random variables. We show that the approximation \(a^{{\text {RVE}}}\) for the effective coefficient \(a_{\mathsf {hom}}\)—and also the functionals \(\mathcal {F}(a)\) that are used in the work of Le Bris et al. [64]—may be written as a sum of random variables with a local dependence structure with multiple levels, see Definition 6 and Proposition 7. For such sums of vector-valued random variables with multilevel local dependence, a proof of quantitative normal approximation is provided in the companion article [43] (see also Theorem 9 below). To the best of our knowledge such quantitative normal approximation results were previously known only for sums of random variables with local dependence structure [33, 34, 80] (corresponding more or less to just the lowest level of random variables in Fig. 4 below), a framework into which the approximation for the effective coefficient \(a^{{\text {RVE}}}\) does not fit. Note that the sharp boundaries of the region defined by the selection criterion (7) (see also the sharp boundaries in Fig. 2) necessitate the use of a rather strong (though standard) distance between probability measures for our quantitative normal approximation result (see Definition 8); in particular, a stronger notion of distance between probability measures than the 1-Wasserstein distance must be used.

As a by-product, our work also provides a proof of quantitative normal approximation for \(a^{{\text {RVE}}}\) in a different setting than available in the literature so far. To the best of our knowledge, the results on quantitative normal approximation for \(a^{{\text {RVE}}}\) in the literature always rely on an assumption that the coefficient field a is obtained as a function of iid random variables [39, 52, 77] or that the probability distribution of a is subject to a second-order Poincaré inequality like in [38]. In contrast, our result holds under the assumption of finite range of dependence, in which to the best of our knowledge only a qualitative normal approximation result had been known [6].

The companion article [43] also provides a result on moderate deviations in the sense of Kramers for sums of random variables with multilevel local dependence structure, see Theorem 10. Our result on the reduction of the error by the selection approach for representative volumes in the case of unlikely events (Theorem 3) is based on this moderate deviations theorem.

Our counterexample for the variance reduction, which shows that even “natural” statistical quantities like the spatial average \(\frac{1}{(L\varepsilon )^d}\int _{[0,L\varepsilon ]^d} a\,dx\) do not necessarily explain a positive fraction of the variance of \(a^{{\text {RVE}}}\), is based on the nonlinear dependence of the effective coefficient in periodic homogenization on the underlying coefficient field. More precisely, our counterexample consists of an interpolation between a standard random checkerboard and a random checkerboard with two types of tiles, one tile type being a constant coefficient field and one tile type being a second-order laminate microstructure; see Section 6 for details of the construction.

1.4 Computation of Effective Properties of Random Materials: A More Detailed Look

In the homogenization of periodic linear materials (that is, in the homogenization of the linear elliptic PDE (1) with periodic coefficient field a in the sense \(a(x)=a(x+\varepsilon k)\) for all \(k\in \mathbb {Z}^d\)) it is possible to compute the effective coefficient \(a_{\mathsf {hom}}\) by exploiting the periodicity of the coefficient field, basically reducing the problem to solving a PDE, namely the PDE for the homogenization corrector, on a single periodicity cell: for a period of length \(\varepsilon \), the effective coefficient is given by the cell formula

$$\begin{aligned} a_{\mathsf {hom}}e_i:=\frac{1}{\varepsilon ^d}\int _{[0,\varepsilon ]^d} a(e_i+\nabla \phi _i)\,dx \end{aligned}$$

with the homogenization corrector \(\phi _i\) defined as the unique \(\varepsilon \)-periodic solution with zero average to the PDE

$$\begin{aligned} -\nabla \cdot (a(e_i+\nabla \phi _i))=0. \end{aligned}$$

As a consequence, in periodic homogenization the numerical computation of the effective coefficient \(a_{\mathsf {hom}}\) typically requires only modest effort.
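In one spatial dimension the periodic cell problem can be solved by hand, which makes the structure of the cell formula concrete. The following worked sketch assumes the standard one-dimensional form of the corrector equation, \(-(a(1+\phi '))'=0\), and of the cell formula as the average of the flux \(a(1+\phi ')\) over one period; the constant c is introduced only for this illustration.

$$\begin{aligned} -\big (a(1+\phi ')\big )'=0&\;\Longrightarrow \; a(1+\phi ')=c\;\Longrightarrow \;\phi '=\frac{c}{a}-1,\\ 0=\frac{1}{\varepsilon }\int _0^\varepsilon \phi '\,dx&\;\Longrightarrow \; c=\Big (\frac{1}{\varepsilon }\int _0^\varepsilon \frac{1}{a}\,dx\Big )^{-1},\\ a_{\mathsf {hom}}&=\frac{1}{\varepsilon }\int _0^\varepsilon a(1+\phi ')\,dx=c. \end{aligned}$$

The second line is the periodicity of \(\phi \). Hence for \(d=1\) the effective coefficient is the harmonic mean of a over one period, which is the sense in which one-dimensional homogenization is linear in the inverse coefficient. No comparable closed form is available for \(d\geqq 2\), where the corrector equation has to be solved numerically.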

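In contrast, in stochastic homogenization this simplification is no longer possible due to the absence of a periodic structure in the random coefficient field \(a^{\mathbb {R}^{d}}:\mathbb {R}^{d}\rightarrow \mathbb {R}^{d\times d}\), and the computation of the effective coefficient becomes a computationally costly problem. The effective coefficient in stochastic homogenization is given by the infinite volume limit cell formula (see Footnote 3)

$$\begin{aligned} a_{\mathsf {hom}}e_i:=\lim _{L\rightarrow \infty }\frac{1}{(L\varepsilon )^d}\int _{[0,L\varepsilon ]^d} a^{\mathbb {R}^{d}}\big (e_i+\nabla \phi _i^{{\text {L,Dir}}}\big )\,dx \end{aligned}$$

with \(\phi _i^{{\text {L,Dir}}}\) denoting the solution to the corrector problem with Dirichlet boundary conditions

$$\begin{aligned} -\nabla \cdot \big (a^{\mathbb {R}^{d}}(e_i+\nabla \phi _i^{{\text {L,Dir}}})\big )&=0\quad \text {in } [0,L\varepsilon ]^d,\\ \phi _i^{{\text {L,Dir}}}&=0\quad \text {on } \partial [0,L\varepsilon ]^d. \end{aligned}$$

In practice, in order to approximate the effective coefficient \(a_{\mathsf {hom}}\) a representative volume \([0,L\varepsilon ]^d\) of finite size must be chosen. However, the approximation of the effective coefficient by the standard cell formula with Dirichlet boundary conditions for the corrector

$$\begin{aligned} a^{{\text {RVE}}}_{{\text {Dir}}}e_i:=\frac{1}{(L\varepsilon )^d}\int _{[0,L\varepsilon ]^d} a^{\mathbb {R}^{d}}\big (e_i+\nabla \phi _i^{{\text {L,Dir}}}\big )\,dx \end{aligned}$$
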
is only of first-order accuracy \(\mathbb {E}[|a^{{\text {RVE}}}_{{\text {Dir}}}-a_{\mathsf {hom}}|^2]^{1/2}\lesssim L^{-1}\) due to the presence of a boundary layer: the artificial Dirichlet boundary condition leads to the creation of a boundary layer in an \(O(\varepsilon )\)-neighborhood of the boundary \(\partial [0,L\varepsilon ]^d\). The limitation to first-order accuracy is present even in the systematic error \(\mathbb {E}[a^{{\text {RVE}}}]-a_{\mathsf {hom}}\). Note that while replacing the volume average in the cell formula by an average taken strictly in the interior of the representative volume typically increases the accuracy [84], for general probability distributions it does not increase the order of convergence due to global effects of the boundary layer. To achieve the convergence rates \(|\mathbb {E}[a^{{\text {RVE}}}]-a_{\mathsf {hom}}|\lesssim L^{-d}|\log L|^d\) and \(\mathbb {E}[|a^{{\text {RVE}}}-a_{\mathsf {hom}}|^2]^{1/2} \lesssim L^{-d/2}\) stated in (6) and (5), the boundary layer phenomenon must necessarily be addressed by the use of a more careful approximation technique than the method of correctors with Dirichlet boundary data.

One possibility of avoiding the creation of boundary layers is the use of a so-called “periodization” of the probability distribution: Given a probability distribution of coefficient fields \(a^{\mathbb {R}^d}\), one first fixes the size \(L\varepsilon \) of the desired representative volume and then attempts to construct a probability distribution of \(L\varepsilon \)-periodic coefficient fields a such that the law of \(a|_{x+[0,\frac{1}{2} L\varepsilon ]^d}\) (i.e., the law of a restricted to some box of half the size of the representative volume) coincides with the law of \(a^{\mathbb {R}^d}|_{x+[0,\frac{1}{2} L\varepsilon ]^d}\) for any \(x\in \mathbb {R}^d\). For one realization of the periodized probability distribution of coefficient fields a one may then solve the corrector equation \(-\nabla \cdot (a(e_i+\nabla \phi _i))=0\) with periodic boundary conditions on \(\partial [0,L\varepsilon ]^d\) and define the approximation \(a^{{\text {RVE}}}\) for the effective coefficient \(a_{\mathsf {hom}}\) as

$$\begin{aligned} a^{{\text {RVE}}}e_i:=\frac{1}{(L\varepsilon )^d}\int _{[0,L\varepsilon ]^d} a(e_i+\nabla \phi _i)\,dx. \end{aligned}$$

This approximation \(a^{{\text {RVE}}}\) then has the desired approximation properties (5) and (6). Note that this construction requires the knowledge of the probability distribution of \(a^{\mathbb {R}^{d}}\) and must be done on a case-by-case basis; it is therefore not feasible in all practical situations.

To give an example, random non-overlapping inclusions like in Fig. 1 may be constructed by considering a Poisson point process on \(\mathbb {R}^d\times [0,1]\), ordering the points \((x_k,y_k)\in \mathbb {R}^d\times [0,1]\) with respect to their last coordinate \(y_k\), and then successively placing inclusions in \(\mathbb {R}^d\) centered at the \(x_k\) and with diameter \(\varepsilon \) if the “previous” points \(x_l\), \(l<k\), have a distance of at least \(\varepsilon \) from \(x_k\) (that is \(|x_l-x_k|\geqq \varepsilon \)). The result of such a construction is shown in Fig. 3a. For this probability distribution, one may define a periodization in a natural way by considering a Poisson point process on \([0,L\varepsilon )^d\times [0,1]\) and defining an \(L\varepsilon \)-periodic coefficient field with non-overlapping inclusions in the obvious way, replacing the Euclidean distance \(|x_l-x_k|\) by the periodicity-adjusted distance \(|x_l-x_k|_{{{\text {per}}}}:= \inf _{z\in \mathbb {Z}^d} |x_l-x_k+L\varepsilon z|\). A sample from the periodized probability distribution is shown in Fig. 3b.
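The periodized construction just described can be illustrated by a short Python sketch. It is a minimal illustration under our own assumptions and not the procedure used to produce Fig. 3: the names `periodic_distance` and `sample_periodized_inclusions`, the parameter `intensity` of the Poisson point process, and the reading that a point is kept only if all earlier points (whether kept or not) lie at periodicity-adjusted distance at least \(\varepsilon \) are choices made for this sketch.

```python
import numpy as np


def periodic_distance(x, y, period):
    """Periodicity-adjusted Euclidean distance |x - y|_per on the torus [0, period)^d."""
    diff = np.abs(x - y) % period
    diff = np.minimum(diff, period - diff)   # shortest way around in each coordinate
    return np.sqrt((diff ** 2).sum(axis=-1))


def sample_periodized_inclusions(L, eps, intensity, dim=2, rng=None):
    """Centers of non-overlapping inclusions of diameter eps on the torus [0, L*eps)^dim.

    `intensity` (expected number of Poisson points per unit volume) is a
    hypothetical parameter of this sketch.
    """
    rng = np.random.default_rng() if rng is None else rng
    period = L * eps
    # Poisson point process on [0, L*eps)^dim x [0, 1]
    n = rng.poisson(intensity * period ** dim)
    x = rng.uniform(0.0, period, size=(n, dim))
    y = rng.uniform(0.0, 1.0, size=n)
    x = x[np.argsort(y)]                     # order points by their last coordinate
    keep = np.ones(n, dtype=bool)
    for k in range(1, n):
        # keep x_k only if every "previous" point is at periodic distance >= eps
        keep[k] = np.all(periodic_distance(x[:k], x[k], period) >= eps)
    return x[keep]


if __name__ == "__main__":
    centers = sample_periodized_inclusions(L=10, eps=0.1, intensity=50.0,
                                           rng=np.random.default_rng(0))
    print(len(centers), "inclusions placed")
```

Under this reading, whether an inclusion is placed at \(x_k\) depends only on the points of the process within periodicity-adjusted distance \(\varepsilon \) of \(x_k\), so the dependence stays localized on a scale comparable to \(\varepsilon \).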

If no periodization of the probability distribution is available (for example, if the underlying probability distribution is not known and only samples from it are available, as in applications where one has access to samples of the material), one has to resort to an alternative means of increasing the rate of convergence of the method of representative volumes. One feasible option is to “screen” the effect of the boundary by introducing a “massive” term in the PDE for the homogenization corrector [24, 47, 54]: Fixing a scale \(\sqrt{T} \sim \frac{L}{\log L}\), one replaces the equation for the homogenization corrector by the PDE

and approximates the effective coefficient \(a_{\mathsf {hom}}\) by

where \(\eta \) is a smooth nonnegative weight supported in the slightly smaller box \([\frac{1}{8} L\varepsilon ,(1-\frac{1}{8})L\varepsilon ]^d\). In up to four spatial dimensions \(d\leqq 4\), this approximation also admits error estimates of the form

and

Due to the already substantial length of the present paper, we shall limit ourselves to the analysis of the selection approach for representative volumes in the context of periodizations of the probability distribution and defer the analysis of the screening approach to a future work.

Generally speaking, in the method of representative volumes the equation for the homogenization corrector may be solved by any numerical algorithm that is feasible for the given size of the representative volume; for example, standard finite element methods may be employed for representative volumes of moderate size, while for very large representative volumes one may use appropriate instances of modern computational homogenization methods like the multiscale finite element method, heterogeneous multiscale methods, and related approaches (see for example [1, 14, 29, 40, 60, 61, 71]) or the local orthogonal decomposition method by Målqvist and Peterseim [70].

Note that besides the modern numerical homogenization methods—which are in principle applicable to any elliptic PDE involving a heterogeneous coefficient field—there have been numerous numerical works on the more specific problem of the approximation of effective coefficients in stochastic homogenization, see for example [13, 32, 41, 42, 62, 72, 79].

1.5 The Selection Approach for Representative Volumes by Le Bris, Legoll and Minvielle

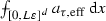

Let us describe the selection approach for representative volumes by Le Bris et al. [64] in more detail. The selection approach for representative volumes achieves its gain in accuracy of approximations \(a^{{\text {RVE}}}\) for the effective coefficient \(a_{\mathsf {hom}}\) (as compared to the standard representative volume element method with completely random choice of the material sample) by selecting only those realizations of the random coefficient field \(a|_{[0,L\varepsilon ]^d}\) which capture some important statistical properties of the coefficient field a in an exceptionally good way. For example, in the simplest setting Le Bris et al. [64] propose to restrict one’s attention to realizations of the coefficient field a for which the average on \([0,L\varepsilon ]^d\) is exceptionally close to its expected value in the sense that

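$$\begin{aligned} \bigg |\fint _{[0,L\varepsilon ]^d} a \,\mathrm{d}x - \mathbb {E}\bigg [\fint _{[0,L\varepsilon ]^d} a \,\mathrm{d}x\bigg ]\bigg | \leqq \delta L^{-d/2} \end{aligned}$$(9)

for some \(\delta \ll 1\). Note that for generic realizations of a only

$$\begin{aligned} \bigg |\fint _{[0,L\varepsilon ]^d} a \,\mathrm{d}x - \mathbb {E}\bigg [\fint _{[0,L\varepsilon ]^d} a \,\mathrm{d}x\bigg ]\bigg | \lesssim L^{-d/2} \end{aligned}$$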

is true by the central limit theorem for the averages \(\fint _{[0,L\varepsilon ]^d} a \,\mathrm{d}x\) and the finite range of dependence \(\varepsilon \).

On a numerical level, such a selection approach typically provides an increase in computational efficiency if the accuracy is indeed increased by conditioning on the event (9): usually, the most expensive step in the computation of the approximations \(a^{{\text {RVE}}}\) is the computation of the homogenization corrector as the solution to the PDE (3). In contrast, the generation of random coefficient fields a and the evaluation of the average of a are typically cheap. Therefore it is often worth generating about \(\frac{1}{\delta }\) independent realizations of a to obtain on average one realization of a which satisfies (9); for this single realization, the corrector equation (3) is solved numerically and the approximation \(a^{{\text {RVE}}}\) for the effective coefficient is computed. This strategy is also applicable to situations in which the probability distribution of the coefficient field is not known, but one only has access to a large number of samples of the coefficient field, as in applications in which one has access to data from actual material samples.
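The following Python sketch illustrates this selection step in a toy setting of our own choosing, not one taken from [64]: a one-dimensional periodic coefficient field with \(L\) cells whose values are i.i.d. and uniformly distributed on \([1,5]\). In one dimension the periodic corrector problem can be solved by hand, and \(a^{{\text {RVE}}}\) is the harmonic mean of the cell values, so no PDE solver is needed. All function names and parameter values are assumptions of this sketch.

```python
import random
import statistics


def sample_cells(L, rng):
    """Cell values of one periodic 1D coefficient field (toy law: i.i.d. uniform on [1, 5])."""
    return [rng.uniform(1.0, 5.0) for _ in range(L)]


def spatial_average(a):
    """Cheap statistical quantity used for the selection."""
    return sum(a) / len(a)


def a_rve_1d(a):
    """Periodic cell formula in d = 1: the harmonic mean of the cell values."""
    return len(a) / sum(1.0 / v for v in a)


def compare_selection(L=50, delta=0.3, n_samples=4000, seed=0):
    """Return (variance without selection, variance with selection, acceptance rate)."""
    rng = random.Random(seed)
    expected_average = 3.0            # mean of the uniform law on [1, 5]
    tolerance = delta * L ** -0.5     # delta * L^{-d/2} with d = 1
    all_values, selected_values = [], []
    for _ in range(n_samples):
        a = sample_cells(L, rng)
        value = a_rve_1d(a)
        all_values.append(value)
        # selection criterion: the spatial average is close to its expectation
        if abs(spatial_average(a) - expected_average) <= tolerance:
            selected_values.append(value)
    return (statistics.variance(all_values),
            statistics.variance(selected_values),
            len(selected_values) / n_samples)


if __name__ == "__main__":
    var_all, var_sel, rate = compare_selection()
    print(f"variance ratio: {var_sel / var_all:.3f}, acceptance rate: {rate:.3f}")
```

In an actual computation one would evaluate the average first and solve the corrector problem only for the accepted realizations; the sketch evaluates \(a^{{\text {RVE}}}\) for every realization solely in order to compare the two variances. The returned acceptance rate indicates how many realizations have to be generated per accepted sample.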

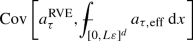

The selection criterion (9) based on the average of the coefficient field in the material sample is the first out of two selection criteria proposed by Le Bris et al. [64]. In order to reduce the variance of \(a^{{\text {RVE}}}\) further, they propose to consider several such statistical quantities at the same time, for example in addition to the spatial average

the quantities

for some (approximation of the) solution \(v_i\) to the constant-coefficient equation

and to require that all of these statistical quantities be close to their expectation at the same time. The quantities (10) arise as a second-order correction to the effective conductivity \(a^{{\text {RVE}}}\) in the expansion in the regime of small ellipticity contrast: Expanding the homogenization corrector \(\phi _i\) and the approximate effective conductivity \(a^{{\text {RVE}}}\) as a power series in \(\nu \) for the family of coefficient fields

we deduce

with \(\phi _i^0\equiv 0\), \(\phi _i^1=v_i\), and \(\phi _i^2\) defined as the solution to another PDE. As a consequence, for the approximation of the effective conductivity we obtain

where in the last step we have used the periodicity of \(\phi _i^2\). To see that the contribution of \(v_i\) is actually of second order in \(\nu \), one uses again \(a={\text {Id}}+\nu {\hat{a}}\) and the periodicity of \(v_i\).

By selecting the representative volumes by the two criteria (9) and

at the same time, in the model problem of the random checkerboard with an ellipticity ratio of 5 Le Bris, Legoll, and Minvielle were able to reduce the variance of the approximations \(a^{{\text {sel-RVE}}}\) for the effective conductivity by a factor of 60, compared to the approximations \(a^{{\text {RVE}}}\) by the standard representative volume element method.

Another remarkable feature of the selection approach for representative volumes by Le Bris, Legoll, and Minvielle is its compatibility with the vast majority of numerical homogenization methods: As the selection approach for representative volumes operates at the level of the choice of the coefficient field a, it may be combined with essentially any numerical discretization method for the corrector problem (59). Note that there exist many numerical homogenization methods that are particularly well-adapted to certain geometries of the microstructure; the selection approach for representative volumes may be employed in most of these methods to achieve a further speedup.

The selection approach for representative volumes is only one of several variance reduction concepts in the context of stochastic homogenization: Blanc et al. [22, 23, 25] have succeeded in reducing the variance by the method of antithetic variables; note, however, that for this approach the achievable variance reduction factor is much more limited. The method of control variates has also been demonstrated to be successful in the context of the computation of effective coefficients in stochastic homogenization [25, 65].

1.6 A Brief Overview of Quantitative Stochastic Homogenization

For the sake of completeness, let us give a short overview of the tremendous progress that has been achieved in the quantitative theory of stochastic homogenization in recent years. The earliest (non-optimal) quantitative homogenization results for linear elliptic equations are due to Yurinskiĭ [85]. A decade later, Naddaf and Spencer [76] introduced the use of spectral gap inequalities in stochastic homogenization and derived optimal fluctuation estimates in the regime of small ellipticity contrast \(||a-{\text {Id}}||_{L^\infty } \ll 1\), that is in a perturbative setting. Another decade later, Caffarelli and Souganidis derived the first (though only logarithmic) rates of convergence for nonlinear stochastic homogenization problems [31]. Gloria and Otto [53, 54] and Gloria et al. [49] succeeded in the derivation of optimal homogenization rates for discrete linear elliptic equations with i.i.d. random conductances. Subsequently, these results were generalized to elliptic equations on \(\mathbb {R}^d\) and correlated probability distributions by Gloria et al. [50, 51]. For coefficient fields a whose correlations decay quickly on scales larger than \(\varepsilon >0\), these quantitative estimates for the homogenization error (that is, for the difference between the solutions to the PDE with the random coefficient field (1) and its homogenized approximation (2)) read

with \(\mathcal {C}(a)\) satisfying stretched exponential moment bounds and for suitable \(p=p(d)\). Armstrong and Smart [9] were the first to obtain power-law rates of convergence for nonlinear equations, deriving and employing an Avellaneda–Lin type regularity estimate [12]; see also Armstrong and Mourrat [8]. Their estimates also come with optimal (almost Gaussian) stochastic moment bounds. Recently, the progress in stochastic homogenization culminated in the derivation of the optimal homogenization rates with optimal stochastic moment bounds by Armstrong et al. [5] and Gloria and Otto [55]: For finite range of dependence \(\varepsilon \), a quantitative error bound for the homogenization error of the form (12) holds true with a random constant \(\mathcal {C}(a)\) with almost Gaussian moments \(\mathbb {E}[\exp (\mathcal {C}(a)^{2-\delta }/C(\delta ))]\leqq 2\) for any \(\delta >0\).

Higher-order approximation results in terms of homogenized problems have been derived in [19,20,21, 56, 69], relying on the concept of higher-order correctors which was first used in the stochastic homogenization context in [44] to establish Liouville principles of arbitrary order in the spirit of Avellaneda and Lin’s result in periodic homogenization [11]. Further works in quantitative stochastic homogenization include the analysis of nondivergence form equations [7], a regularity theory up to the boundary [45], degenerate elliptic equations [2, 46], and the homogenization of parabolic equations [3, 66]. Recently, Armstrong and Dario [4] and Dario [36] succeeded in establishing quantitative homogenization for supercritical Bernoulli bond percolation on the standard lattice.

The fluctuations of the mathematical objects arising in the stochastic homogenization of linear elliptic PDEs have been the subject of a beautiful series of works, starting with the work of Nolen [77] and a subsequent work of Gloria and Nolen [52] on quantitative normal approximation for (a single component of) the approximation of the effective conductivity \(a^{{\text {RVE}}}\) and a work of Mourrat and Otto [74] on the correlation structure of fluctuations in the homogenization corrector \(\phi _i\). Mourrat and Nolen [73] have shown a quantitative normal approximation result for the fluctuations of the corrector. Gu and Mourrat [57] have derived a description of fluctuations in the solutions to the equation with random coefficient field (1). Recently, a pathwise description of fluctuations of the solutions to the equation with random coefficient field (1), namely in terms of deterministic linear functionals of the so-called homogenization commutator \(\Xi :=(a-a_{\mathsf {hom}})({\text {Id}}+\nabla \phi )\), a random field converging (for \(\varepsilon \rightarrow 0\)) towards white noise, was developed by Duerinckx et al. [39]. The scaling limit of certain energetic quantities (related to the homogenization commutator) as well as the scaling limit of the homogenization corrector has been identified in the setting of finite range of dependence by Armstrong et al. [5]. As far as quantitative normal approximation results are concerned, all of these works operate under the assumption of i.i.d. coefficients (in the discrete setting) or second-order Poincaré inequalities. To the best of our knowledge, the present work provides the first quantitative description of fluctuations (though so far limited to the approximation of the effective conductivity \(a^{{\text {RVE}}}\)) when the decorrelation in the coefficient field is quantified by the assumption of finite range of dependence instead of functional inequalities.

Note that despite its long history [35, 63, 67, 78], the qualitative theory of stochastic homogenization has also been a very active area of research in the past years, see for example [10, 27, 58, 59]; however, due to the substantial length of the present manuscript we shall not provide a more detailed discussion and refer the reader to these references instead.

Notation Throughout the paper, we shall use standard notation for Sobolev spaces and weak derivatives; for a space-time function v(x, s), we denote by \(\nabla v\) its spatial gradient (in the weak sense) and by \(\partial _s v\) its (weak) time derivative. The notation \(\fint _B\) is used for the average integral over a set B of positive but finite Lebesgue measure. The space of measurable functions f with \(||f||_{L^p}:=(\int _{\mathbb {R}^d} |f|^p \,\mathrm{d}x)^{1/p}<\infty \) will be denoted by \(L^p\). By \(L^p_{loc}\) we denote the space of functions f with \(f\chi _{\{|x|\leqq R\}}\in L^p\) for all \(R<\infty \). We shall also use the weighted space \(L^p_{h}\) of functions with \(||f||_{L^p_h}:=(\int _{\mathbb {R}^d} |f(x)|^p h(x) \,\mathrm{d}x)^{1/p}<\infty \) for a nonnegative measurable weight function h. By \(H^1(\mathbb {R}^d)\) we denote as usual the Sobolev space of functions \(v\in L^2(\mathbb {R}^d)\) with \(\nabla v\in L^2(\mathbb {R}^d)\); similarly, \(H^1_{loc}(\mathbb {R}^d)\) is the space of functions v with \(v\in L^2_{loc}(\mathbb {R}^d)\) and \(\nabla v\in L^2_{loc}(\mathbb {R}^d)\). For a Banach space X we denote by \(L^p([0,T];X)\) the usual Lebesgue–Bochner space.

As usual, we shall denote by C and c constants whose value may change from occurrence to occurrence. We are going to use the notation \(\mathcal {C}(a)\) and similar expressions to denote a random constant subject to suitable moment bounds; again, the precise value of \(\mathcal {C}(a)\) may change from occurrence to occurrence.

For a vector \(v\in \mathbb {R}^m\) we denote by |v| its Euclidean norm. We denote the identity matrix in \(\mathbb {R}^{N\times N}\) by \({\text {Id}}\) or \({\text {Id}}_N\). For a matrix \(A\in \mathbb {R}^{m\times m}\) we shall denote by |A| its natural norm \(|A|:=\max _{v,w\in \mathbb {R}^m,|v|=|w|=1} |v\cdot A w|\) and by \(A^*\) its transpose (as all our matrices are real). For \(x\in \mathbb {R}^d\) we denote by \(|x|_\infty =\max _i |x_i|\) its supremum norm. By \(|x-y|_{{\text {per}}}\) respectively (for sets) \({\text {dist}}_{{\text {per}}}(U,V)\), we denote the periodicity-adjusted distance (in the context of the torus \([0,L\varepsilon ]^d\)). By \(|x-y|_\infty ^{{{\text {per}}}}\) and \({\text {dist}}^{{\text {per}}}_\infty (x,y)\), we denote the corresponding distances associated with the maximum norm. For a positive definite matrix A, we denote by \(\kappa (A)\) its condition number.

Given a positive definite symmetric matrix \(\Lambda \in \mathbb {R}^{N\times N}\), we denote the Gaussian with covariance matrix \(\Lambda \) by

For \(\gamma >0\), we equip the space of random variables X with stretched exponential moment \(\mathbb {E}[\exp (|X|^\gamma /a)]<\infty \) for some \(a=a(X)>0\) with the norm \(||X||_{\exp ^\gamma }:=\sup _{p\geqq 1} p^{-1/\gamma } \mathbb {E}[|X|^p]^{1/p}\). For a discussion of this choice of norm, see Appendix B.

For a map \(f:\mathbb {R}^N\rightarrow V\) into a normed vector space V, we denote for any \(r>0\) by \({{\text {osc}}}_r f(x_0):=\sup _{x,y\in \{|x-x_0|\leqq r\}} |f(x)-f(y)|_V\) its oscillation in the ball of radius r around \(x_0\).

The conditional expectation of a random variable X given Y is denoted by \(\mathbb {E}[X|Y]\).

2 Main Results

In the present work, we establish a rigorous justification of the selection approach for representative volumes by Le Bris et al. [64] in the context of stochastic homogenization of linear elliptic PDEs for quite general probability distributions of the coefficient field \(a^{\mathbb {R}^d}\). Our only assumptions on the probability distribution of the coefficient field \(a^{\mathbb {R}^d}:\mathbb {R}^d\rightarrow \mathbb {R}^{d\times d}\) are uniform ellipticity and boundedness, stationarity, and finite range of dependence, which is a standard set of assumptions in stochastic homogenization [9, 55] (note that we equip the space of uniformly elliptic and bounded coefficient fields with the topology of Murat and Tartar’s H-convergence [75]). Let us remark that all of our results and proofs are also valid in the case of strongly elliptic systems, upon adapting the notation in the obvious way:

- (A1) Uniform ellipticity of a coefficient field a as usual means that there exists a positive real number \(\lambda >0\) such that almost surely we have \(a(x)v\cdot v \geqq \lambda |v|^2\) for almost every \(x\in \mathbb {R}^d\) and every \(v\in \mathbb {R}^d\). Furthermore we assume uniform boundedness in the sense that almost surely \(|a(x)v|\leqq \frac{1}{\lambda }|v|\) holds for almost every \(x\in \mathbb {R}^d\) and every \(v\in \mathbb {R}^d\).

- (A2) Stationarity means that the law of the shifted coefficient field \(a(\cdot +x)\) must coincide with the law of \(a(\cdot )\) for every \(x\in \mathbb {R}^d\). On a heuristic level, this means that “the probability distribution of a is everywhere the same” or, in other words, that the material is spatially statistically homogeneous.

- (A3) Finite range of dependence \(\varepsilon \) means that for any two Borel sets \(A,B\subset \mathbb {R}^d\) with \({\text {dist}}(A,B)\geqq \varepsilon \) the restrictions \(a|_A\) and \(a|_B\) must be stochastically independent. In particular, this assumption restricts the correlations in the coefficient field to the scale \(\varepsilon \ll 1\).

Note that these assumptions include for example the case of a two-material composite with random (either overlapping or non-overlapping) inclusions of diameter \(\varepsilon \), the centers distributed according to a Poisson point process (up to removal in case of overlap); see Fig. 3a. Further examples include coefficient fields \(a^{\mathbb {R}^d}(x):=\xi ({\tilde{a}}(x))\) that arise by pointwise application of a nonlinear function \(\xi :\mathbb {R}^{d\times d}\rightarrow \mathbb {R}^{d\times d}\) to a (tensor-valued) stationary Gaussian random field \({\tilde{a}}\) with finite range of dependence \(\varepsilon \) and integrable correlations, provided that the function \(\xi \) is Lipschitz and takes values in the set of uniformly elliptic and bounded matrices.

For the approximation of the effective coefficient \(a_{\mathsf {hom}}\), it is of advantage to work with a so-called periodization of the stationary ensemble of random coefficient fields \(a^{\mathbb {R}^d}\) (employing terminology from statistical mechanics, a probability measure on the space of coefficient fields shall also be called an ensemble of coefficient fields). By a periodization of an ensemble of coefficient fields \(a^{\mathbb {R}^d}\) we understand an ensemble of coefficient fields a which are almost surely \(L\varepsilon \mathbb {Z}^d\)-periodic for some \(L\gg 1\) and for which the probability distribution of a on each cube of size of half the period \(\frac{L\varepsilon }{2}\) coincides with the probability distribution of the original coefficient field \(a^{\mathbb {R}^d}\), that is for which the probability distribution of \(a|_{x+[0,L\varepsilon /2]^d}\) coincides with the distribution of \(a^{\mathbb {R}^d}|_{x+[0,L\varepsilon /2]^d}\) for all \(x\in \mathbb {R}^d\). For such a periodization, the condition (A3) is replaced by the following conditions (A3\(_a\)), (A3\(_b\)), (A3\(_c\)):

- (\(\hbox {A3}_a\)) The coefficient field a is almost surely \(L \varepsilon \mathbb {Z}^d\)-periodic.

- (\(\hbox {A3}_b\)) There exists a finite range of dependence \(\varepsilon >0\) such that for any two measurable \(L \varepsilon \mathbb {Z}^d\)-periodic sets \(A,B\subset \mathbb {R}^d\) with \({\text {dist}}(A,B)\geqq \varepsilon \) the restrictions \(a|_A\) and \(a|_B\) are stochastically independent.

- (\(\hbox {A3}_c\)) For any \(x_0\in \mathbb {R}^d\) the law of the restriction \(a|_{x_0+[-\frac{L\varepsilon }{4},\frac{L\varepsilon }{4}]^d}\) coincides with the corresponding law for some (non-periodic) ensemble of coefficient fields \(a^{\mathbb {R}^d}\) satisfying (A1)–(A3).

Furthermore, to include examples like the random checkerboard in our analysis, we need the following notion of discrete stationarity:

- (A2’) We say that our probability distribution of coefficient fields a satisfies discrete stationarity if the law of the shifted coefficient field \(a(\cdot +x)\) coincides with the law of \(a(\cdot )\) for every shift \(x\in \varepsilon \mathbb {Z}^d\).

Our main assumptions stated in Assumption 1 below consist of two parts. First, we assume that the probability distribution of coefficient fields \(a^{\mathbb {R}^d}\) satisfies the standard assumptions from stochastic homogenization and that there exists a suitable periodization a of the probability distribution. Second, we require the statistical quantities \(\mathcal {F}(a)\) to admit a “multilevel local dependence structure decomposition” as introduced in Definition 6 below. Let us remark that both the spatial average

$$\begin{aligned} \mathcal {F}_{avg}(a) := \fint _{[0,L\varepsilon ]^d} a \,\mathrm{d}x \end{aligned}$$

and the higher-order quantity \(\mathcal {F}_{2-\mathrm{point}}(a)\) considered by Le Bris et al. [64] as defined in (10) satisfy the conditions in Definition 6; a proof of this fact is provided in Proposition 7 below. As a consequence, both the spatial average \(\mathcal {F}_{avg}(a)\) and the higher-order quantity \(\mathcal {F}_{2-\mathrm{point}}(a)\) may be chosen as the statistical quantities by which the selection of representative volumes is performed in our main theorems Theorem 2 and Theorem 3.

Assumption 1

(Assumptions and Notation) Consider a probability distribution of random coefficient fields \(a^{\mathbb {R}^d}\) on \(\mathbb {R}^d\), \(d\geqq 1\), which satisfies the conditions of ellipticity, stationarity, and finite range of dependence (A1)–(A3). Let \(L\geqq 2\) and suppose that there exists an \(L\varepsilon \)-periodization a of the probability distribution of \(a^{\mathbb {R}^d}\) subject to (A1), (A2), (A3\(_a\))–(A3\(_c\)). Denote by \(a^{{\text {RVE}}}\) the approximation for the effective coefficient \(a_{\mathsf {hom}}\) by the standard representative volume element method with a material sample of size \([0,L\varepsilon ]^d\), that is set

$$\begin{aligned} a^{{\text {RVE}}} e_i := \fint _{[0,L\varepsilon ]^d} a(e_i+\nabla \phi _i) \,\mathrm{d}x \end{aligned}$$

with \(\phi _i\) being the unique \(L\varepsilon \)-periodic solution with vanishing average to the corrector equation

$$\begin{aligned} -\nabla \cdot (a(e_i+\nabla \phi _i))=0. \end{aligned}$$

Let \(\mathcal {F}(a)=(\mathcal {F}_1(a),\ldots ,\mathcal {F}_N(a))\) be a collection of statistical quantities of the coefficient field a which are subject to the conditions of Definition 6 with \(K\leqq C_0\), \(B\leqq C_0 |\log L|^{C_0}\), and \(\gamma \geqq c_0\) for some \(0<c_0,C_0<\infty \). Suppose that the covariance matrix of \(\mathcal {F}(a)\) is nondegenerate and bounded in the natural scaling in the sense

For any \(1\leqq i,j\leqq d\) introduce the condition number \(\kappa _{ij}\) of the covariance matrix of \((a^{{\text {RVE}}}_{ij},\mathcal {F}(a))\)

and the ratio \(r_{{\text {Var}},ij}\) between the expected order of fluctuations and the actual fluctuations of the approximation \(a^{{\text {RVE}}}_{ij}\)

Denote by C a constant depending on d, \(\lambda \), \(\gamma \), N, and \(C_0\).

Under the above assumptions, the selection approach for representative volumes to capture certain statistical properties of the material in the representative volume particularly well—as proposed by Le Bris et al. [64]—leads to the following increase in accuracy of the computed material coefficients:

Theorem 2

(Justification of the Selection Approach for Representative Volumes) Let the assumptions and notations of Assumption 1 be in place. Denote by \(a^{{\text {sel-RVE}}}\) the approximation for the effective coefficient \(a_{\mathsf {hom}}\) by the selection approach for representative volumes introduced by Le Bris et al. [64] in the case of a representative volume of size \(L\varepsilon \). Suppose that the representative volumes \(a|_{[0,L\varepsilon ]^d}\) are selected from the periodized probability distribution according to the criterion

$$\begin{aligned} |\mathcal {F}(a)-\mathbb {E}[\mathcal {F}(a)]| \leqq \delta L^{-d/2} \end{aligned}$$(14)

for some \(\delta \in (0,1]\). Let the selection criterion be chosen not too strict in the sense that \(\delta ^N \geqq C L^{-d/2} |\log L|^{C(d,\gamma ,C_0)}\). Then the selection approach for representative volumes is subject to the following error analysis:

- (a) The systematic error of the approximation \(a^{{\text {sel-RVE}}}\) satisfies the estimate

$$\begin{aligned} \big |\mathbb {E}\big [a^{{\text {sel-RVE}}}\big ]-a_{\mathsf {hom}}\big | \leqq \frac{C \kappa _{ij}^{3/2}}{\delta ^N} L^{-d} |\log L|^{C(d,\gamma )}. \end{aligned}$$(15)

- (b) The variance of the approximation \(a^{{\text {sel-RVE}}}\) is estimated from above by

$$\begin{aligned} \frac{{{\text {Var}}~}a^{{\text {sel-RVE}}}_{ij}}{{{\text {Var}}~}a^{{\text {RVE}}}_{ij}} \leqq 1-(1-\delta ^2) |\rho |^2 + \frac{C \kappa _{ij}^{3/2}r_{{\text {Var}},ij}}{\delta ^N} L^{-d/2} |\log L|^{C(d,\gamma )}, \end{aligned}$$(16)

where \(|\rho |^2\) is the fraction of the variance of \(a^{{\text {RVE}}}_{ij}\) explained by the \(\mathcal {F}(a)\), that is, \(|\rho |^2\) is the maximum of the squared correlation coefficient between \(a^{{\text {RVE}}}_{ij}\) and any linear combination of the \(\mathcal {F}_n(a)\). The explained fraction of the variance is given by the formula

$$\begin{aligned} |\rho |^2 := \frac{{\text {Cov}}[a^{{\text {RVE}}}_{ij},\mathcal {F}(a)] \cdot ({{\text {Var}}~}\mathcal {F}(a))^{-1} {\text {Cov}}[\mathcal {F}(a),a^{{\text {RVE}}}_{ij}]}{{{\text {Var}}~}a^{{\text {RVE}}}_{ij}}. \end{aligned}$$(17)

- (c) The probability that a randomly chosen coefficient field a satisfies the selection criterion (14) is at least

$$\begin{aligned} \mathbb {P}\big [|\mathcal {F}(a)-\mathbb {E}\big [\mathcal {F}(a)\big ]|\leqq \delta L^{-d/2}\big ] \geqq c(N) \delta ^N. \end{aligned}$$(18)

- (d) The systematic error and the variance of \(a^{{\text {sel-RVE}}}\) may be estimated independently of \(\kappa _{ij}\) at the price of a lower rate of convergence in L:

$$\begin{aligned} \big |\mathbb {E}\big [a^{{\text {sel-RVE}}}\big ]-a_{\mathsf {hom}}\big | \leqq \frac{C}{\delta ^N} L^{-d/2-d/8} |\log L|^{C(d,\gamma )} \end{aligned}$$(19)

and

$$\begin{aligned} \frac{{{\text {Var}}~}a^{{\text {sel-RVE}}}_{ij}}{{{\text {Var}}~}a^{{\text {RVE}}}_{ij}} \leqq 1-(1-\delta ^2) |\rho |^2 + \frac{Cr_{{\text {Var}},ij}}{\delta ^N} L^{-d/8} |\log L|^{C(d,\gamma )}. \end{aligned}$$(20)

The previous theorem states that the approximation of effective coefficients by the selection approach for representative volumes is essentially at least as accurate as a random selection of samples (except for a possible additional relative error of the order \(C L^{-d/2} |\log L|^C\), which however converges to zero quickly as L increases), at least when measuring the mean-square error. If the selection is based on a statistical quantity \(\mathcal {F}(a)\) which is capable of explaining a large part of the variance of \(a^{{\text {RVE}}}_{ij}\), the selection approach achieves a much better accuracy than a random selection of samples (namely, by a factor of about \(\sqrt{1-|\rho |^2}\)).
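Given Monte Carlo samples of \(a^{{\text {RVE}}}_{ij}\) and of \(\mathcal {F}(a)\), the explained fraction of the variance (17) can be estimated by replacing the covariances with empirical covariances. The following Python sketch does this; the function name and the use of NumPy are assumptions of the sketch, the data in the usage example are synthetic, and the resulting estimate is of course subject to sampling error.

```python
import numpy as np


def explained_variance_fraction(a_rve, F):
    """Empirical version of formula (17).

    a_rve : array of shape (n,), samples of one entry a^RVE_ij
    F     : array of shape (n,) or (n, N), samples of the statistical quantities
    """
    y = np.asarray(a_rve, dtype=float)
    F = np.asarray(F, dtype=float)
    if F.ndim == 1:
        F = F[:, None]                   # treat a single quantity as N = 1
    n = y.shape[0]
    yc = y - y.mean()
    Fc = F - F.mean(axis=0)
    var_y = yc @ yc / (n - 1)            # Var a^RVE_ij
    cov_yF = Fc.T @ yc / (n - 1)         # Cov[F(a), a^RVE_ij], shape (N,)
    var_F = Fc.T @ Fc / (n - 1)          # Var F(a), shape (N, N)
    # Cov . (Var F)^{-1} Cov, via a linear solve instead of an explicit inverse
    return float(cov_yF @ np.linalg.solve(var_F, cov_yF) / var_y)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    F = rng.normal(size=(10_000, 2))
    # synthetic stand-in for a^RVE_ij: a linear combination of F plus independent noise
    y = F[:, 0] - 0.5 * F[:, 1] + rng.normal(size=10_000)
    print(explained_variance_fraction(y, F))
```

A value close to 1 indicates that the chosen quantities \(\mathcal {F}(a)\) explain most of the fluctuations of \(a^{{\text {RVE}}}_{ij}\), which is the regime in which (16) predicts a substantial variance reduction.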

However, the previous theorem only provides a statement about the reduction of the mean-square error by the selection approach for representative volumes. A natural question is whether this reduction of the error also applies to rare events: More precisely, if we fix a small probability \(p>0\), is the bound on the error \(|a^{{\text {sel-RVE}}}_{ij}-a_{{\mathsf {hom}},ij}|\) which holds with probability \(1-p\) also improved as suggested by the variance reduction estimate (16)? The following theorem shows that this is in fact true for “moderate deviations”, that is basically for probabilities \(p\gtrsim \exp (-L^\beta )\) for some \(\beta >0\). More precisely, the theorem is to be read as follows: up to error terms that converge to zero as \(L\rightarrow \infty \) and \(s\rightarrow \infty \), the probability of \(a^{{\text {sel-RVE}}}_{ij}\) deviating from \(a_{{\mathsf {hom}},ij}\) by more than s times the ideally reduced standard deviation \(\sqrt{(1-|\rho |^2){{\text {Var}}~}a^{{\text {RVE}}}_{ij}}\) behaves like the probability of a normal distribution deviating from its mean by more than s standard deviations, at least in some regime \(s\leqq L^{\beta /3}\).

Theorem 3

Let the assumptions and notations of Theorem 2 be in place. Suppose in addition \(L\geqq C\). Then the selection approach for representative volumes leads to a reduction of the “outliers” of the probability distribution of \(a^{{\text {sel-RVE}}}\) in the sense of the moderate-deviations-type bound

for any \(s\geqq \max \big \{1,\frac{\delta }{\sqrt{1-|\rho |^2}}\big \}\) and some \(\beta =\beta (d)>0\).

We have shown in the preceding two theorems that the selection approach for representative volumes by Le Bris et al. essentially does not increase the error; it succeeds in reducing the fluctuations of the approximations as soon as the functionals \(\mathcal {F}(a)\) and the approximation \(a^{{\text {RVE}}}\) have a nonzero covariance.

However, as we shall show in the next theorem, there exist cases in which the selection approach for representative volumes in fact fails to reduce the variance significantly, even for a “natural” statistical quantity like the average of the coefficient field \(\mathcal {F}_{avg}(a)\).

Theorem 4

(Possible Failure of the Reduction of the Variance) Suppose that the assumptions of Theorem 2 hold. Then the estimate (16) on the reduction of the variance is sharp in the sense

Furthermore, for \(d\geqq 2\) there exist \(L\varepsilon \)-periodic probability distributions of coefficient fields a which satisfy the conditions of ellipticity, discrete stationarity, and finite range of dependence (A1), (A2’), (A3\(_a\))–(A3\(_c\)) with the following property: the covariance of \(a^{{\text {RVE}}}\) and the spatial average \(\mathcal {F}_{avg}(a)\) vanishes

while the fluctuations of \(a^{{\text {RVE}}}\) and \(\mathcal {F}_{avg}(a)\) are nondegenerate in the sense that

for some universal constant c. These coefficient fields may be chosen to be of the form \(a(x)={\tilde{a}}(x){\text {Id}}\) for some scalar random field \({\tilde{a}}\).

As a consequence, for these probability distributions of coefficient fields the selection approach for representative volumes based on the spatial average \(\mathcal {F}_{avg}(a)\) fails to efficiently reduce the variance in the sense that

Let us note that it is presumably not too difficult to replace the random checkerboard in our construction of the counterexample featuring (23) by random spherical inclusions distributed according to a Poisson point process (with overlaps of the inclusions). This would yield a counterexample subject to the continuous stationarity (A2).

The next proposition suggests that the failure of effective variance reduction is atypical and may be limited to rather artificial examples. For a large class of random coefficient fields, namely for coefficient fields that are obtained from a collection of iid random variables \(\xi _{k}\), \(k\in \varepsilon \mathbb {Z}^d\), by applying a stationary monotone map with finite range of dependence, the correlation coefficient between \(a^{{\text {RVE}}}\) and the average \(\mathcal {F}_{avg}(a)\) is bounded from below by a positive number. Therefore, for such (ensembles of) coefficient fields both the method of special quasirandom structures and the method of control variates in fact reduce the variance by some factor \(\tau <1\) when applied with the choice \(\mathcal {F}(a):=\mathcal {F}_{avg}(a)\).
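A positive correlation of this kind can be observed directly in one space dimension, where the periodic cell formula reduces to the harmonic mean of the coefficient field. The following sketch is an illustration under simplifying assumptions and not the construction used in the proofs: the coefficient field is piecewise constant with iid cell values drawn uniformly from \([1,4]\) (a trivially monotone map of the underlying iid variables), and the distribution, cell count, and tolerance `tol` are arbitrary choices.

```python
import math
import random
import statistics

def sample_pair(n_cells, rng):
    """One sample of a 1D piecewise-constant coefficient field with iid cell
    values; returns (a_rve, spatial_average)."""
    values = [rng.uniform(1.0, 4.0) for _ in range(n_cells)]
    spatial_average = sum(values) / n_cells          # arithmetic mean
    a_rve = n_cells / sum(1.0 / v for v in values)   # 1D cell formula: harmonic mean
    return a_rve, spatial_average

def correlation(xs, ys):
    """Empirical Pearson correlation coefficient."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

rng = random.Random(1)
pairs = [sample_pair(32, rng) for _ in range(20_000)]
a_rve_all = [p[0] for p in pairs]
avg_all = [p[1] for p in pairs]
rho = correlation(a_rve_all, avg_all)

# Selection: keep samples whose spatial average is close to its exact
# expectation 2.5 (the mean of the uniform distribution on [1, 4]).
tol = 0.01
a_rve_selected = [x for x, f in pairs if abs(f - 2.5) <= tol]
ratio = statistics.pstdev(a_rve_selected) / statistics.pstdev(a_rve_all)
print(f"correlation {rho:.3f}, std ratio after selection {ratio:.3f}")
```

In this toy setting the correlation is close to one, so selecting samples by their spatial average markedly reduces the fluctuations of the harmonic mean.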

Proposition 5

(Reduction of the Variance for a Large Class of Coefficient Fields) Let \(\varepsilon >0\) and let \(L\geqq 2\) be an integer and let V denote some measure space. Let \((\Gamma _k)\), \(k\in \varepsilon \mathbb {Z}^d\cap [0,L\varepsilon )^d\), be a collection of independent identically distributed V-valued random variables, and denote by \(({\tilde{\Gamma }}_k)\) an independent copy. Extend \(\Gamma _k\) to \(k\in \varepsilon \mathbb {Z}^d\) by \(L\varepsilon \)-periodicity. For \(k\in \varepsilon \mathbb {Z}^d\) and \(z\in V\), denote by \(\Delta _{k,z} \Gamma \) the collection \(({\tilde{\Gamma }}_k)\) obtained by setting \({\tilde{\Gamma }}_k:=z\) and \({\tilde{\Gamma }}_j=\Gamma _j\) for all \(j\ne k\).

Let \(a=a(x,\Gamma )\) be a measurable map into the uniformly elliptic \(L\varepsilon \)-periodic symmetric coefficient fields with the property that \(a(x,\Gamma )\) depends only on the \(\Gamma _k\) with \(|x-k|_{{\text {per}}}\leqq K\varepsilon \) for some \(K\geqq 1\) (in a measurable way). Suppose that the map is stationary in the sense that \(a(x+y,\Gamma )=a(x,\Gamma _{\cdot +y})\) for any \(y\in \varepsilon \mathbb {Z}^d\).

Suppose that the dependence of a on \(\Gamma \) is monotone in the sense that for every \(k\in \varepsilon \mathbb {Z}^d\) and every pair \(z_1,z_2 \in V\), either for all x the inequality

holds, or for all x the reverse inequality

holds. Suppose furthermore that there exists \(\nu >0\) such that we have the quantified monotonicity

for all \(x\in [0,L \varepsilon )^d\) and all \(\Gamma \), where \(\big (a(x,\Gamma )-a(x,\Delta _{k,{\tilde{\Gamma }}_k}\Gamma )\big )_+^{1/2}\) denotes the matrix square root and where \({\tilde{\Gamma }}\) denotes an independent copy of \(\Gamma \).

Then the probability distribution of \(a=a(x,\Gamma )\) satisfies the conditions of ellipticity, periodicity, and finite range of dependence (A1), (A3\(_a\)), and (A3\(_b\)) (with \(\varepsilon \) replaced by \(4K\varepsilon \)), as well as the discrete stationarity (A2’). Furthermore, for such coefficient fields a the correlation between \(\xi \cdot a^{{\text {RVE}}}\xi \) (where \(\xi \in \mathbb {R}^d\) is any nonzero vector) and the average

is bounded from below by a positive number in the sense

In the statements of our main theorems, we have made use of the following notion of “multilevel local dependence decomposition”; this structure will also be at the heart of the proof of our main results (an illustration of this decomposition is provided in Fig. 4):

Definition 6

(Sums of Random Variables with Multilevel Local Dependence Structure) Let \(d\geqq 1\), \(N\in \mathbb {N}\), \(\varepsilon >0\), and \(L\geqq 2\). Consider a probability distribution of coefficient fields a on \(\mathbb {R}^d\) subject to the assumptions of ellipticity and boundedness, stationarity, and finite range of dependence \(\varepsilon \) (A1), (A2), and (A3), or the periodization of such an ensemble subject to the conditions (A1), (A2), and (A3\(_a\))–(A3\(_c\)). Let \(X=X(a)\) be an \(\mathbb {R}^N\)-valued random variable.

We then say that X is a sum of random variables with multilevel local dependence if there exist random variables \(X_y^m=X_y^m(a)\), \(0\leqq m\leqq 1+\log _2 L\) and \(y\in 2^m \varepsilon \mathbb {Z}^d\cap [0,L\varepsilon )^d\), and constants \(K\geqq 1\), \(\gamma \in (0,2]\), and \(B\geqq 1\) with the following properties:

- The random variable \(X_y^m(a)\) only depends on \(a|_{y+K \log L \, [-2^m \varepsilon ,2^m \varepsilon ]^d}\). More precisely, \(X_y^m(a)\) is a measurable function of \(a|_{y+K \log L \, [-2^m \varepsilon ,2^m \varepsilon ]^d}\) equipped with the topology of H-convergence.

- We have

$$\begin{aligned} X=\sum _{m=0}^{1+\log _2 L} \sum _{y\in 2^m \varepsilon \mathbb {Z}^d\cap [0,L\varepsilon )^d} X_y^m. \end{aligned}$$

- The random variables \(X_y^m\) satisfy the bound

$$\begin{aligned} ||X_y^m||_{\exp ^\gamma } \leqq B L^{-d}. \end{aligned}\tag{26}$$

Fig. 4 An illustration of the “multilevel local dependence structure” introduced in Definition 6 (in a one-dimensional setting). At the bottom, a sample of the random coefficient field a is depicted; the \(X_y^k\) may depend not only on the values of the coefficient field directly below their box, but on the coefficient field in a region that is wider by a factor of \(K \log L\).
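The index set over which the summands \(X_y^m\) in Definition 6 range can be enumerated explicitly. The sketch below only lists the pairs \((m,y)\) and does not construct the random variables themselves; it assumes that L is a power of two, so that \(\log _2 L\) is an integer, and the function name is hypothetical.

```python
import itertools
import math

def multilevel_indices(L, d, eps=1.0):
    """Enumerate all pairs (m, y) with 0 <= m <= 1 + log2(L) and y in the
    lattice 2^m * eps * Z^d intersected with the box [0, L*eps)^d.
    Assumes L is a power of two (an assumption made for this sketch)."""
    max_level = 1 + int(math.log2(L))
    indices = []
    for m in range(max_level + 1):
        step = 2 ** m
        # Lattice points of spacing 2^m * eps along one coordinate axis
        axis = [k * eps for k in range(0, L, step)]
        for y in itertools.product(axis, repeat=d):
            indices.append((m, y))
    return indices

count_1d = len(multilevel_indices(8, 1))  # levels contribute 8 + 4 + 2 + 1 + 1
count_2d = len(multilevel_indices(8, 2))  # levels contribute 64 + 16 + 4 + 1 + 1
print(count_1d, count_2d)
```

The number of summands is dominated by the finest level \(m=0\), which contributes \(L^d\) terms; each coarser level contributes fewer terms by a factor of \(2^d\) until only the single point \(y=0\) remains.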

The next proposition shows that the approximation \(a^{{\text {RVE}}}\) of the effective coefficient by the method of representative volumes may indeed be rewritten as a sum of random variables with a multilevel local dependence structure. We establish the same result for the spatial average of the coefficient field \(\mathcal {F}_{avg}(a)\) and the second-order term \(\mathcal {F}_{2-\mathrm{point}}(a)\) in the low ellipticity contrast expansion of \(\smash {a^{{\text {RVE}}}}\) given by (10).

Furthermore, the last result of the next proposition shows that the fraction of the variance of \(a^{{\text {RVE}}}\) that is explained by the statistical quantities \(\mathcal {F}_{avg}(a)\) and \(\mathcal {F}_{2-\mathrm{point}}(a)\)—that is, the gain in accuracy achieved by the selection approach for representative volumes when employing these statistical quantities—stabilizes as the size L of the representative volume increases; more precisely, it converges to some limit with rate \(L^{-d/2}|\log L|^C\).

Proposition 7

Let the assumptions (A1), (A2), (A3\(_a\))–(A3\(_c\)) be satisfied, that is, consider the periodization of a stationary ensemble of random coefficient fields. For any coefficient field a, denote by \(\phi _i\) the unique (up to additions of constants) periodic solution to the corrector equation

Then the approximation \(a^{{\text {RVE}}}\) of the effective coefficient \(a_{\mathsf {hom}}\) by the representative volume element method, given by

is a sum of a family of random variables with multilevel local dependence. More precisely, \(a^{{\text {RVE}}}\) satisfies the criteria of Definition 6 for any \(\gamma <1\) with \(K:=C(d,\lambda )\) and \(B:=C(d,\gamma ,\lambda ) |\log L|^{C(d,\gamma )}\).

Furthermore, the spatial average

is also a sum of a family of random variables with multilevel local dependence. The criteria of Definition 6 are satisfied by \(\mathcal {F}_{avg}(a)\) for any \(\gamma <\infty \) with \(K:=C(d)\) and \(B:=C(d,\gamma )\).

Additionally, the second-order correction to the effective conductivity in the setting of small ellipticity contrast \(\mathcal {F}_{2-\mathrm{point}}\), given by

with \(v_i\) denoting the solution to

is a sum of random variables with multilevel local dependence structure: the random variable \(\mathcal {F}_{2-\mathrm{point}}(a)\) satisfies the criteria of Definition 6 for any \(\gamma <1\) with \(K:=C(d,\lambda )\) and \(B:=C(d,\gamma ,\lambda ) |\log L|^{C(d,\gamma )}\).

Finally, the rescaled variances and covariances of \(a^{{\text {RVE}}}\) and the statistical quantities \(\mathcal {F}_{avg}(a)\) and \(\mathcal {F}_{2-\mathrm{point}}(a)\) converge as \(L\rightarrow \infty \). There exist positive semidefinite matrices \(V_{{{\text {RVE}}}}\), \(V_{avg}\), \(V_{2-\mathrm{point}}\) and matrices \(V_{c,{{\text {RVE}}},avg}\), \(V_{c,{{\text {RVE}}},2-\mathrm{point}}\), \(V_{c,avg,2-\mathrm{point}}\) independent of L such that the estimates

and

hold true.

It is interesting to compare our approach to the quantitative normal approximation of \(a^{{\text {RVE}}}\) with concepts employed in the derivation of optimal error estimates in stochastic homogenization [5, 6, 55]. A central theme in [5] is the approximate additivity of certain energetic quantities: the energy quantity on a certain scale may approximately be written as a sum of the energy quantities on smaller scales, allowing for an application of the central limit theorem. In [55], the application of the central limit theorem is facilitated by the homogenization of the flux propagation in the parabolic semigroup associated with the random elliptic operator. In our context, while we also introduce an additive decomposition of \(a^{{\text {RVE}}}\), we do not require the summands to be of the same structure as \(a^{{\text {RVE}}}\) and allow for a multilevel structure. This enables us to derive an optimal-order normal approximation result for the fluctuations.

Note that in [5, 6] a certain localization property of the considered energy quantity has been established. In principle, sufficiently strong localization properties of a random field allow for a multilevel decomposition of (linear functionals of) the random field in the sense of Definition 6 and therefore for an application of our quantitative normal approximation result in Theorem 9; see, in particular, the proof of [43, Theorem 2] for such a construction. However, the locality of the energy quantity established in [5, 6] is non-optimal and in general not sufficient for our purposes. In the forthcoming work [37], an optimal-order localization result for (linear functionals of) the homogenization commutator \(\Xi :=(a-a_{\mathsf {hom}})({\text {Id}}+\nabla \phi )\) will be provided, implying an optimal-order normal approximation result.

3 Strategy of the Proof and Intermediate Results

Our main result relies on a quantitative normal approximation result for the joint probability distribution of the approximation of the effective conductivity \(a^{{\text {RVE}}}\) and auxiliary random variables \(\mathcal {F}(a)\) like the spatial average \(\mathcal {F}_{avg}(a)\). The distance of the probability distribution to a multivariate Gaussian will be quantified through the following notion of distance between probability measures. Note that this distance is a standard choice in the theory of multivariate normal approximation, see for example [33] and the references therein.

Definition 8

Given a symmetric positive definite matrix \(\Lambda \in \mathbb {R}^{N\times N}\) and some \({\bar{L}}<\infty \), we consider the classes \(\Phi _{\Lambda }^{{\bar{L}}}\) of functions \(\phi :\mathbb {R}^N\rightarrow \mathbb {R}\) subject to the following properties:

- \(\phi \) is smooth and its first derivative is bounded in the sense \(|\nabla \phi (x)| \leqq {\bar{L}}\) for all \(x\in \mathbb {R}^N\).

- For any \(r>0\) and any \(x_0\in \mathbb {R}^N\), we have

$$\begin{aligned} \int _{\mathbb {R}^N} {{\text {osc}}}_r \phi (x) ~\mathcal {N}_{\Lambda }(x-x_0) \,\mathrm{d}x \leqq r, \end{aligned}\tag{29}$$

where \({{\text {osc}}}_r \phi (x)\) is the oscillation of \(\phi \) defined as

$$\begin{aligned} {{\text {osc}}}_r\phi (x):=\sup _{|z|\leqq r}\phi (x+z)-\inf _{|z|\leqq r} \phi (x+z) \end{aligned}$$

and where

$$\begin{aligned} \mathcal {N}_{\Lambda }(x):=\frac{1}{(2\pi )^{N/2}\sqrt{\det \Lambda }} \exp \bigg (-\frac{1}{2}\Lambda ^{-1} x \cdot x\bigg ). \end{aligned}$$

The class \(\Phi _\Lambda \) is defined as

Furthermore, we introduce the distance \(\mathcal {D}\) between the law of an \(\mathbb {R}^N\)-valued random variable X and the N-variate Gaussian \(\mathcal {N}_\Lambda \) as