Abstract

We analyze a model of competition in Bayesian persuasion in which two senders vie for the patronage of a receiver by disclosing information about the qualities of their respective proposals, which are positively correlated. The information externality—the news disclosed by one sender contains information about the other sender’s proposal—generates two effects on the incentives for information disclosure. The first effect, which we call the underdog-handicap effect, arises because the receiver is endogenously biased toward choosing the ex ante stronger sender. The second effect, which we call the good-news curse, arises because a sender’s favorable signal realization implies that the rival is more likely to generate a strong competing signal realization. While the underdog-handicap effect encourages more aggressive disclosure, the good-news curse can lower disclosure incentives. If the senders’ ex ante expected qualities are different, and the qualities of their two proposals are highly correlated, then the underdog-handicap effect dominates. Furthermore, as the correlation approaches its maximum possible value, the competition becomes so intense that both senders engage in full disclosure in the unique limit equilibrium.

Notes

Kolotilin (2018) analyzes the situation where there is only one university, but a potential employer can acquire information not only from the university but also from other sources. He characterizes the optimal disclosure mechanism and derives the necessary and sufficient conditions for full and no information revelation.

Information transmission with multiple senders has been studied using frameworks different from Bayesian persuasion. For example, Milgrom and Roberts (1986) study a multi-sender persuasion game in which the receiver is unsophisticated. There is also a large literature that examines conflicts of interest among senders in cheap-talk settings. Krishna and Morgan (2001) extend Crawford and Sobel (1982) to a setting with two senders and show that a full-revelation equilibrium exists if the senders have opposing biases. In addition, Battaglini (2002) shows that with two senders and a multi-dimensional state space, a full-revelation equilibrium generically exists. Kawai (2015) generalizes the finding of Krishna and Morgan (2001) to a multi-dimensional state space.

We thank Raphael Boleslavsky for bringing these papers to our attention.

In a related investigation, Gentzkow and Kamenica (2017a) consider a more specialized setting in which each sender can choose a signal that is arbitrarily correlated with the signals of the other senders. They provide a simple algorithm for finding the set of equilibria and show that an increase in the intensity of competition (according to the notions mentioned above) never makes the set of equilibrium outcomes less informative in set orders.

Li and Norman (2018) provide an example showing that, if only conditionally independent mechanisms are feasible for each sender, the equilibrium outcome can be less informative with an additional sender.

In the context of our model, a necessary, though not sufficient, condition for Blackwell-connectedness is that every sender can disclose information about the proposal quality of the competing sender. In many applications in which competing senders are endowed with differentiated proposals, including our motivating example of universities’ competition in grading policies, it is quite natural that an individual sender does not have direct control over the revelation of information about competitors’ proposals.

Formally, \({\mathcal {G}}_{i}\equiv \{ G_{i}\in \varDelta ( [ 0,1] ) :{\mathbb {E}}_{G_{i}}[ p_{i}] =\mu _{i}\} \).

Notice that by definition, \(p_{i}=\Pr ( U_{i}=u_{h} |p_{i}) =\Pr ( U_{i}=u_{h}|p_{i},\mu _{j}) \).

The concave closure \(\operatorname{con}[ \varPi _{i}] \) of \(\varPi _{i}\) is defined as the smallest concave function that is everywhere weakly greater than \(\varPi _{i}\). That is, \(\operatorname{con}[ \varPi _{i}] ( p) \equiv \sup \{ z|( p,z) \in \operatorname{co} ( \varPi _{i}) \} \), where \(\operatorname{co}( \varPi _{i}) \) stands for the convex hull of the graph of \(\varPi _{i}\).
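As a rough numerical illustration of this definition, the sketch below computes the concave closure of a payoff function sampled on a finite grid by taking the upper boundary of the convex hull of the sampled graph. The function name `concave_closure` and the step-function example are assumptions made for illustration only; they do not come from the paper.

```python
from bisect import bisect_right


def concave_closure(xs, ys):
    """Evaluate the concave closure of the points (xs[k], ys[k]) at each xs[k].

    xs must be strictly increasing and contain at least two points. The
    closure is the upper boundary of the convex hull of the sampled graph,
    found with a left-to-right monotone-chain scan.
    """
    hull = []  # vertices of the upper hull, ordered left to right
    for x, y in zip(xs, ys):
        # Drop the last vertex while it lies on or below the chord that joins
        # the vertex before it to the new point (cross product >= 0).
        while len(hull) >= 2:
            (x1, y1), (x2, y2) = hull[-2], hull[-1]
            if (x2 - x1) * (y - y1) - (y2 - y1) * (x - x1) >= 0:
                hull.pop()
            else:
                break
        hull.append((x, y))

    hull_x = [v[0] for v in hull]
    values = []
    for x in xs:
        # Locate the hull segment containing x and interpolate linearly.
        k = min(bisect_right(hull_x, x) - 1, len(hull) - 2)
        (x1, y1), (x2, y2) = hull[k], hull[k + 1]
        values.append(y1 + (y2 - y1) * (x - x1) / (x2 - x1))
    return values


# Hypothetical step payoff: 0 below the posterior 0.5, and 1 from 0.5 onward.
grid = [0.0, 0.25, 0.5, 0.75, 1.0]
payoff = [0.0, 0.0, 1.0, 1.0, 1.0]
closure = concave_closure(grid, payoff)
```

On this example the closure replaces the flat segment below 0.5 with the chord from (0, 0) to (0.5, 1), which is the usual picture of a sender gaining from splitting a low prior between the posteriors 0 and 0.5.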

In the context of competition between universities in the Introduction, the unobservable state \(\omega \) could be interpreted as some “soft” skills of the admitted cohorts, such as passion for learning, emotional resilience, or grit. These soft skills play a significant role in determining the students’ productivity at the time of graduation, but they cannot be directly and objectively tested. The commonality of these skills among students could be reasonably attributed to the common education system, as well as the social environment in which the students are brought up. It is also quite reasonable that the grading policies of universities are designed by focusing on revealing their graduates’ productivities without explicitly taking into account their actual level of these soft skills.

To see this, note first that by definition, \(G_{j}( \cdot ) =\mu _{j}G_{j}( \cdot |U_{j}=u_{h}) +( 1-\mu _{j}) G_{j}( \cdot |U_{j} =u_{l}) \) and \(p_{j}=\Pr ( U_{j}=u_{h}|p_{j}) =\left( 1+\frac{1-\mu _{j}}{\mu _{j}}\frac{g_{j}\left( p_{j}|U_{j}=u_{l}\right) }{g_{j}\left( p_{j}|U_{j}=u_{h}\right) }\right) ^{-1}\), where \(g_{j}\left( \cdot |U_{j}\right) \) is the density function of \(G_{j}\left( \cdot |U_{j}\right) \). Solving for the conditional distributions gives

$$\begin{aligned} G_{j}\left( p|U_{j}=u_{h}\right) =\frac{\int _{0}^{p}s\mathrm{d}G_{j}\left( s\right) }{\mu _{j}}\text {, and }G_{j}\left( p|U_{j}=u_{l}\right) =\frac{\int _{0} ^{p}\left( 1-s\right) \mathrm{d}G_{j}\left( s\right) }{1-\mu _{j}}. \end{aligned}$$

Furthermore, \(\Pr ( U_{2}=u_{h}|p_{1}) =\mu _{2}+\frac{\rho ( p_{1}-\mu _{1}) }{\mu _{1}( 1-\mu _{1}) }\). Substituting these conditional distributions into Eq. (3) gives Eq. (4).
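The expression for \(\Pr ( U_{2}=u_{h}|p_{1}) \) can be checked numerically. The sketch below assumes that \(\rho \) is the covariance of the two high-quality indicators, so that \(\Pr ( U_{1}=u_{h},U_{2}=u_{h}) =\mu _{1}\mu _{2}+\rho \), and that sender 1's signal is informative about \(U_{2}\) only through \(U_{1}\). Both assumptions, as well as the function names, are introduced here for illustration.

```python
def joint_prior(mu1, mu2, rho):
    """Joint law of (U1, U2) on {h, l}^2, assuming rho is the covariance
    of the indicators 1{U1 = u_h} and 1{U2 = u_h}."""
    return {
        ("h", "h"): mu1 * mu2 + rho,
        ("h", "l"): mu1 * (1 - mu2) - rho,
        ("l", "h"): (1 - mu1) * mu2 - rho,
        ("l", "l"): (1 - mu1) * (1 - mu2) + rho,
    }


def prob_u2_high_given_p1(p1, mu1, mu2, rho):
    """Pr(U2 = u_h | p1), averaging over U1 with weights p1 and 1 - p1."""
    joint = joint_prior(mu1, mu2, rho)
    given_high = joint[("h", "h")] / mu1        # Pr(U2 = u_h | U1 = u_h)
    given_low = joint[("l", "h")] / (1 - mu1)   # Pr(U2 = u_h | U1 = u_l)
    return p1 * given_high + (1 - p1) * given_low


def closed_form(p1, mu1, mu2, rho):
    """The expression stated in the note."""
    return mu2 + rho * (p1 - mu1) / (mu1 * (1 - mu1))


# Illustrative parameter values (not taken from the paper).
mu1, mu2, rho = 0.6, 0.4, 0.1
gaps = [
    abs(prob_u2_high_given_p1(p, mu1, mu2, rho) - closed_form(p, mu1, mu2, rho))
    for p in (0.0, 0.2, 0.6, 0.9, 1.0)
]
```

Under these assumptions the two computations agree up to rounding, and the posterior about the rival equals \(\mu _{2}\) exactly when \(p_{1}=\mu _{1}\).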

Note that \({\overline{\varPi }}_{i}( p) \) cannot be flat because \(\varPi _{i}( p;G_{j}) \) is not constant throughout [0, 1].

\(\operatorname{cl}( A) \) is the closure of set A.

Recall by Lemma 2, \(\delta _{i}( 1) =1\).

As shown by the second and the third panels of Fig. 4, a sender’s payoff can be decreasing in his own signal realization when \(\rho >0\).

See the second and the third panels of Fig. 4.

To see this, note that by differentiating Eq. (4) twice, we have

$$\begin{aligned} \frac{\partial ^{2}\varGamma _{2}\left( p_{1}|G_{2},p_{1}\right) }{\partial p_{1}^{2}}=G_{2}^{\prime \prime }\left( p_{1}\right) +\frac{\rho \left( p_{1}-\mu \right) }{\mu ^{2}\left( 1-\mu \right) ^{2}}\left[ 3G_{2}^{\prime }\left( p_{1}\right) +\left( p_{1}-\mu \right) G_{2}^{\prime \prime }\left( p_{1}\right) \right] . \end{aligned}$$

If \(\frac{\partial ^{2}\varGamma _{2}\left( p_{1}|G_{2},p_{1}\right) }{\partial p_{1}^{2}}=0\), then at a higher normalized covariance \(\rho ^{\prime }>\rho \), we have \(\frac{\partial ^{2}\varGamma _{2}\left( p_{1}|G_{2},p_{1}\right) }{\partial p_{1}^{2}}<0\) for \(p_{1}<\mu \) and \(\frac{\partial ^{2}\varGamma _{2}\left( p_{1}|G_{2},p_{1}\right) }{\partial p_{1}^{2}}>0\) for \(p_{1}>\mu \).

Competition in price and information disclosure shares a number of similarities. First, aggressive pricing and disclosure are both beneficial to the consumer/receiver. Second, an increase in the number of competing sellers/senders leads to a lower price/more transparent disclosure as shown in Perloff and Salop (1985) and Au and Kawai (2018), respectively. Moreover, as the competitor engages in more disclosure, it convexifies the sender’s payoff function, calling for a more informative disclosure in response. In other words, disclosure policies are strategic complements, like price-setting in a Bertrand model of differentiated products. A notable difference between the two settings, however, is that while our model of competitive disclosure is a zero-sum game between the senders, the Bertrand model of differentiated products is a nonzero-sum game between the sellers.

This is because given a full-disclosure strategy \(G_{2}\), \(\varPi _{1}( 0;G_{2}) =\frac{1}{2}\), and \(\varPi _{1}( p_{1};G_{2}) =1-\frac{\mu _{1}p_{1}}{2}\) for all \(p_{1}>0\).

In the case of independent proposals, Boleslavsky and Cotton (2018) analyze a game with an exogenously given “reserve” expected proposal quality.

Suppose there are two senders with \(U_{1}\) and \(U_{2}\) being independently distributed. Suppose also that \(U_{1}\in \{ 0,1\} \) with prior \(\Pr ( U_{1}=1) =0.7\), whereas \(U_{2}\in \{ 0,2\} \) with prior \(\Pr ( U_{2}=2) =0.1\). Then, it is not difficult to see that there exists no equilibrium. Intuitively, given the relatively high prior of sender 1, an atom at the top is needed for Bayes-plausibility. The atom in turn implies a jump in sender 2’s payoff function at \(p_{2}=0.5\), resulting in no best response for sender 2.

If we maintain the tie-breaking rule adopted, i.e., the receiver randomly selects one sender with equal probabilities, a subgame-perfect equilibrium does not exist.

The reason is as follows. If the first sender’s signal realization \(p_{1}\) exceeds \(\mu \), the second sender would always maximize the probability of matching \(p_{1}\) by choosing a disclosure mechanism with support \(\{ 0,p_{1}\} \). Thus, the first sender can back out the payoff of inducing each posterior \(p_{1}\): \(\varPi ( p_{1}) =1-\frac{\mu }{p_{1}}\) for \(p_{1}>\mu \) and \(\varPi ( p_{1}) =0\) for \(p_{1}\le \mu \). It is straightforward that the first sender’s optimal mechanism has a binary support \(\{ 0,\min \{ 2\mu ,1\} \} \).
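The last step can be illustrated with a small grid search. The sketch below takes the payoff \(\varPi ( p_{1}) =1-\frac{\mu }{p_{1}}\) from the note as given, restricts attention to binary mechanisms with support \(\{ 0,q\} \), and looks for the value of q that maximizes the first sender's expected payoff. The restriction to this family, the grid resolution, and the function names are assumptions of the sketch; it does not verify optimality among all mechanisms.

```python
def first_mover_value(q, mu):
    """Expected payoff of the first sender from the support {0, q}."""
    if q <= mu:
        return 0.0
    weight_on_q = mu / q  # Bayes-plausibility: weight_on_q * q = mu
    return weight_on_q * (1 - mu / q)


def best_top_posterior(mu, grid_size=10_000):
    """Grid search for the best upper posterior q in (0, 1]."""
    grid = [k / grid_size for k in range(1, grid_size + 1)]
    return max(grid, key=lambda q: first_mover_value(q, mu))


# Illustrative priors: one below 1/2 and one above 1/2.
interior_choice = best_top_posterior(0.3)   # compare with min{2 * 0.3, 1}
corner_choice = best_top_posterior(0.7)     # compare with min{2 * 0.7, 1}
```

Writing \(x=\mu /q\), the objective is \(x( 1-x) \), which peaks at \(x=\frac{1}{2}\); this is why the search lands near \(2\mu \) when \(2\mu \le 1\) and at the corner \(q=1\) otherwise.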

For recent progress, see Li and Norman (2017), who use a linear programming approach to analyze sequential persuasion.

The choice of densities ensures that Bayes-plausibility is preserved.

To see this, define \(W( p_{j}) \) as the probability that sender i wins by using strategy \({\tilde{G}}_{i}\), conditional on the posterior realization of the rival sender being \(p_{j} \in \triangle \{ u_{l},u_{h}\} \). By definition, \(V_{i}( {\tilde{G}}_{i},G_{j}) =\int W( p_{j}) \mathrm{d}G_{j}( p_{j}) \). As \({\tilde{G}}_{i}\) is continuous on [0, 1), \(W( p_{j}) \) is lower semicontinuous in \(p_{j}\). Therefore, by the Portmanteau theorem, for every sequence \(\{ G_{j}^{k}\} _{k\in {\mathbb {N}} }\) that converges in the weak* topology to \(G_{j}\), we have \(\lim \inf V_{i}( {\tilde{G}}_{i},G_{j}^{k}) \ge V_{i}( {\tilde{G}} _{i},G_{j}) \). That is, \(V_{i}( {\tilde{G}}_{i},G_{j}^{\prime }) \) is lower semicontinuous with respect to \(G_{j}^{\prime }\).

References

Au, P.H.: Dynamic information disclosure. RAND J. Econ. 46(4), 791–823 (2015)

Au, P.H., Kawai, K.: Competitive information disclosure by multiple senders. Working Paper, UNSW (2018)

Battaglini, M.: Multiple referrals and multidimensional cheap talk. Econometrica 70, 1379–1401 (2002)

Board, S., Lu, J.: Competitive information disclosure in search markets. J. Polit. Econ. 126(5), 1965–2010 (2018)

Boleslavsky, R., Cotton, C.: Grading standards and education quality. Am. Econ. J. Microecon. 7(2), 248–279 (2015). https://doi.org/10.1257/mic.20130080

Boleslavsky, R., Cotton, C.: Limited capacity in project selection: competition through evidence production. Econ. Theory 65(2), 385–421 (2018). https://doi.org/10.1007/s00199-016-1021-0

Crawford, V.P., Sobel, J.: Strategic information transmission. Econometrica 50(6), 1431–1451 (1982)

Gentzkow, M., Kamenica, E.: Bayesian persuasion with multiple senders and rich signal spaces. Games Econ. Behav. 104, 411–429 (2017a). https://doi.org/10.1016/j.geb.2017.05.004

Gentzkow, M., Kamenica, E.: Competition in persuasion. Rev. Econ. Stud. 84(1), 300–322 (2017b). https://doi.org/10.1093/restud/rdw052

Hoffmann, F., Inderst, R., Ottaviani, M.: Persuasion through selective disclosure: implications for marketing, campaigning, and privacy regulation. Working Paper, University of Bonn (2014)

Kamenica, E., Gentzkow, M.: Bayesian persuasion. Am. Econ. Rev. 101(6), 2590–2615 (2011). https://doi.org/10.1257/aer.101.6.2590

Kawai, K.: Sequential cheap talks. Games Econ. Behav. 90, 128–133 (2015)

Kolotilin, A.: Optimal information disclosure: a linear programming approach. Theor. Econ. 13(2), 607–635 (2018)

Krishna, V., Morgan, J.: A model of expertise. Q. J. Econ. 116(2), 747–775 (2001)

Li, F., Norman, P.: Sequential persuasion. Working Paper, UNC Chapel-Hill (2017)

Li, F., Norman, P.: On Bayesian persuasion with multiple senders. Econ. Lett. 170, 66–70 (2018)

Milgrom, P., Roberts, J.: Relying on the information of interested parties. RAND J. Econ. 17(1), 18–32 (1986)

Ostrovsky, M., Schwarz, M.: Information disclosure and unraveling in matching markets. Am. Econ. J. Microecon. 2(2), 34–63 (2010). https://doi.org/10.1257/mic.2.2.34

Perloff, J.M., Salop, S.C.: Equilibrium with product differentiation. Rev. Econ. Stud. 52(1), 107–120 (1985)

Reny, P.J.: On the existence of pure and mixed strategy Nash equilibria in discontinuous games. Econometrica 67(5), 1029–1056 (1999)

Simon, L.K., Zame, W.R.: Discontinuous games and endogenous sharing rules. Econometrica 58(4), 861–872 (1990)

We are grateful to Richard Holden, Anton Kolotilin, Hongyi Li, Peter Norman, Carlos Pimienta, Marek Pycia, Satoru Takahashi, Yosuke Yasuda and three anonymous referees for the useful discussions and valuable comments. We would also like to thank seminar participants at University of Technology Sydney, UNSW Sydney, Singapore Management University, National University of Singapore, Econometric Society Asian Meeting, Korea University, Japan-Taiwan-HK Contract Theory Workshop and Australian National University. Keiichi Kawai gratefully acknowledges the financial support from UNSW Sydney and Australian Research Council (DECRA Grant RG160734).

Appendix

Proof of Lemma 2

Suppose \(p_{1},p_{2}\in ( 0,1) \). By a direct application of Bayes’ rule,

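It is straightforward to show that \(\Pr ( U_{1}=u_{h}|p_{1},p_{2}) \ge \Pr ( U_{2}=u_{h}|p_{1},p_{2}) \) holds if and only if \(p_{2}\le \frac{p_{1}}{p_{1}+k( 1-p_{1}) }\), where \(k\equiv \frac{\mu _{1}}{1-\mu _{1}}\frac{1-\mu _{2}}{\mu _{2}}\frac{( 1-\mu _{1}) \mu _{2}-\rho }{\mu _{1}( 1-\mu _{2}) -\rho }\).

Observe that \(k<1\) if and only if \(\rho >0\). Also, define a function \(\delta :[ 0,1] \rightarrow [ 0,1] \) by \(\delta ( p) \equiv \frac{p}{p+k( 1-p) }\).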

With these definitions, if \(p_{1}\in ( 0,1) \) or \(p_{2}\in ( 0,1) \), then \(\Pr ( U_{1}=u_{h}|p_{1},p_{2}) \ge \Pr ( U_{2}=u_{h}|p_{1},p_{2}) \) holds if and only if \(p_{2}\le \delta ( p_{1}) \).

It remains to consider \(p_{1},p_{2}\in \{ 0,1\} \times \{ 0,1\} \). If \(p_{1}=0\), then \(\Pr ( U_{1}=u_{h}|p_{1},p_{2}) =0\ge \Pr ( U_{2}=u_{h}|p_{1},p_{2}) \) holds if and only if \(p_{2}=0\). If \(p_{1}=1\), then \(\Pr ( U_{1}=u_{h}|p_{1},p_{2}) =1\ge \Pr ( U_{2}=u_{h}|p_{1},p_{2}) \) holds for all \(p_{2} \in [ 0,1] \). Finally, if \(p_{2}=1\), then \(\Pr ( U_{1} =u_{h}|p_{1},p_{2}) \ge 1=\Pr ( U_{2}=u_{h}|p_{1},p_{2}) \) holds if and only if \(p_{1}=1\). In sum, for all \(p_{1},p_{2}\in [ 0,1] \), \(\Pr ( U_{1}=u_{h}|p_{1},p_{2}) \ge \Pr ( U_{2}=u_{h}|p_{1},p_{2}) \) holds if and only if \(p_{2}\le \delta ( p_{1}) \).

The function \(\delta \) is increasing and concave because

Furthermore, the function \(\delta \) is increasing in \(\rho \):

Finally, if \(\rho =0\), then \(k=1\) and \(\delta ( p) =p\). As \(\rho \rightarrow ( 1-\mu _{1}) \mu _{2}\), \(k\rightarrow 0\) and \(\delta ( p) \) converges pointwise to \(1_{\{ p>0\} } \). \(\square \)
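The properties of \(\delta \) listed in this proof can be checked numerically. The sketch below assumes the functional form \(\delta ( p) =\frac{p}{p+k( 1-p) }\), with k given by the expression for \(k_{l}\) in the proof of Theorem 3. This form is an assumption of the sketch: it is consistent with the limits \(\delta ( p) =p\) at \(\rho =0\) and \(\delta ( p) \rightarrow 1_{\{ p>0\} }\) as \(k\rightarrow 0\), but it should be compared against the paper's own definition. The parameter values are illustrative.

```python
def k_ratio(mu1, mu2, rho):
    """The constant k, following the expression for k_l in the proof of Theorem 3."""
    return (mu1 / (1 - mu1)) * ((1 - mu2) / mu2) * (
        ((1 - mu1) * mu2 - rho) / (mu1 * (1 - mu2) - rho)
    )


def delta(p, k):
    """Assumed threshold function: delta(p) = p / (p + k * (1 - p))."""
    return p / (p + k * (1 - p))


mu1, mu2 = 0.6, 0.4                      # illustrative priors with mu1 > mu2
grid = [i / 100 for i in range(101)]

k_zero = k_ratio(mu1, mu2, 0.0)          # rho = 0 should give k = 1
k_low = k_ratio(mu1, mu2, 0.05)
k_high = k_ratio(mu1, mu2, 0.10)
k_limit = k_ratio(mu1, mu2, 0.159999)    # rho close to (1 - mu1) * mu2 = 0.16

values = [delta(p, k_high) for p in grid]
first_diffs = [b - a for a, b in zip(values, values[1:])]
second_diffs = [b - a for a, b in zip(first_diffs, first_diffs[1:])]
```

Positive first differences and non-positive second differences correspond to \(\delta \) being increasing and concave for \(\rho >0\), and comparing `delta(p, k_low)` with `delta(p, k_high)` illustrates the monotonicity in \(\rho \).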

Proof of Theorem 1

The sufficiency is straightforward. Suppose sender i’s payoff function \(\varPi _{i}( p_{i};G_{j}) \) satisfies the linear structure. If it has a jump at \(p_{i}=1\), then any strategy with support \([ 0,p_{i}] \cup \{ 1\} \) is optimal for sender i. If it does not have a jump at \(p_{i}=1\), then any strategy with support \([ 0,p_{i}] \) is optimal for sender i.

To see the necessity, suppose that \(( G_{1},G_{2}) \) is a pair of equilibrium strategies. We first make a few preliminary observations. For notational simplicity, let \({\tilde{\rho }}\equiv \frac{\rho }{\mu _{1}\mu _{2}( 1-\mu _{1}) ( 1-\mu _{2}) }\). Then

If \(G_{j}\) is differentiable at \(p_{i}\), then

\(\square \)

Lemma 3

Suppose \(( q_{0},q_{1}) \cap \operatorname{supp}G_{j}=\emptyset \). (i) \(\varPi _{i}( p;G_{j}) \) is linear and weakly decreasing on \(( \delta _{j}( q_{0}) ,\delta _{j}( q_{1}) ) \). (ii) If \(G_{j}( \delta _{i}( q_{1}) ) <G_{j}( \delta _{i}( q_{2}) ) \) for \(q_{2}>q_{1}\), and \(G_{j}( \cdot ) \) is continuous at \(\delta _{i}( q_{2}) \), then \(\frac{\varPi _{i}( q_{1};G_{j}) -\varPi _{i}( q_{0};G_{j}) }{q_{1}-q_{0}}<\frac{\varPi _{i}( q_{2};G_{j}) -\varPi _{i}( q_{0};G_{j}) }{q_{2}-q_{0}}\). Consequently, \(\operatorname{con}[ \varPi _{i}|G_{j}] ( q_{1}) >\varPi _{i}( q_{1} ;G_{j}) \).

Proof

(i) By Eq. (9), for each \(p_{i}\in \left( \delta _{j}\left( q_{0}\right) ,\delta _{j}\left( q_{1}\right) \right) \), we have \(d\varPi _{i}\left( p_{i};G_{j}\right) /dp_{i}={\tilde{\rho }}\int _{0}^{\delta _{i}\left( p_{i}\right) }\left( s-\mu _{j}\right) \mathrm{d}G_{j}\left( s\right) \le 0\).

(ii) Define \(D\equiv \frac{\varPi _{i}\left( q_{2};G_{j}\right) -\varPi _{i}\left( q_{0};G_{j}\right) }{q_{2}-q_{0}}-\frac{\varPi _{i}\left( q_{1};G_{j}\right) -\varPi _{i}\left( q_{0};G_{j}\right) }{q_{1}-q_{0}}\). Then, for some \(\tilde{q}\in \left[ \delta _{i}\left( q_{0}\right) ,\delta _{i}\left( q_{2}\right) \right] \),

$$\begin{aligned} D&=\frac{G_{j}\left( \delta _{i}\left( q_{2}\right) \right) -G_{j}\left( \delta _{i}\left( q_{0}\right) \right) }{q_{2}-q_{0}} +\frac{{\tilde{\rho }}\left( q_{2}-\mu _{i}\right) \left( \int _{\delta _{i}\left( q_{0}\right) }^{\delta _{i}\left( q_{2}\right) }\left( s-\mu _{j}\right) \mathrm{d}G_{j}\left( s\right) \right) }{q_{2}-q_{0}}\\&=\frac{G_{j}\left( \delta _{i}\left( q_{2}\right) \right) -G_{j}\left( \delta _{i}\left( q_{0}\right) \right) }{q_{2}-q_{0}}\left( 1+\tilde{\rho }\left( q_{2}-\mu _{i}\right) \left( {\tilde{q}}-\mu _{j}\right) \right) \\&>\frac{G_{j}\left( \delta _{i}\left( q_{2}\right) \right) -G_{j}\left( \delta _{i}\left( q_{0}\right) \right) }{q_{2}-q_{0}}\left( 1+\tilde{\rho }\left( -\mu _{1}\left( 1-\mu _{2}\right) \right) \right) \ge 0 . \end{aligned}$$

\(\square \)

Define \({\bar{p}}_{i}\equiv \sup ( \operatorname{supp}G_{i}) \) and \({\hat{p}}_{i}\equiv \sup ( \operatorname{supp}G_{i}\cap ( 0,1) ) \).

Lemma 4

The following holds in an equilibrium. (i) \({\bar{p}}_{j}=\delta _{i}( {\bar{p}}_{i}) \); (ii) \(G_{i}( p) \) does not have an atom at any \(p\in ( 0,1) \) and \(G_{1}( 0) \times G_{2}( 0) =0\); (iii) \(G_{i}( p) \) is strictly increasing in \(( 0,\hat{p}_{i}) \); and (iv) \({\hat{p}}_{j}=\delta _{i}( {\hat{p}}_{i}) \).

Proof

Let \({\mathcal {P}}_{i}\) be the set of linear upper envelopes of \(\varPi _{i}( p_{i};G_{j}) \) at \(p_{i}=\mu _{i}\). Then, \(\operatorname{con}[ \varPi _{i}|G_{j}] ( p_{i}) \le {\bar{\varPi }}_{i}( p_{i} ;G_{j}) \) for all \(p_{i}\in [ 0,1] \) and \({\bar{\varPi }}_{i} \in {\mathcal {P}}_{i}\) by definition, and

(i) \({\bar{p}}_{j}=\delta _{i}\left( {\bar{p}}_{i}\right) \): Suppose \({\bar{p}}_{i}>\delta _{j}\left( {\bar{p}}_{j}\right) \). By (8), \(\varPi _{i}\left( p_{i} ;G_{j}\right) =1\) for all \(p_{i}\in \left( \delta _{j}\left( {\bar{p}} _{j}\right) ,1\right] \). Therefore by (10), \(\left( \delta _{j}\left( \bar{p}_{j}\right) ,1\right] \cap \operatorname{supp}G_{i}=\emptyset \), a contradiction.

(ii) No atom at any \(p\in \left( 0,1\right) \) and \(G_{1}\left( 0\right) \times G_{2}\left( 0\right) =0\): Suppose \(G_{i}\) assigns an atom at \({\tilde{p}}\in \left( 0,1\right) \). Then, \(\varPi _{j}\) exhibits an upward jump at \(\delta _{i}\left( {\tilde{p}}\right) \). Therefore, there exists an \(\varepsilon >0\) such that \(\varPi _{j}\left( p;G_{i}\right) <\operatorname{con}\left[ \varPi _{j}|G_{i}\right] \left( p\right) \) for all \(p\in \left[ \delta _{i}\left( {\tilde{p}}\right) -\varepsilon ,\delta _{i}\left( \tilde{p}\right) \right] \), i.e., \(\left[ \delta _{i}\left( {\tilde{p}}\right) -\varepsilon ,\delta _{i}\left( {\tilde{p}}\right) \right] \cap \operatorname{supp}G_{j}=\emptyset \). By Lemma 3, however, this implies that \(\varPi _{i}\left( {\tilde{p}};G_{j}\right) <{\bar{\varPi }}_{i}\left( {\tilde{p}};G_{j}\right) \) for all \({\bar{\varPi }}_{i}\in {\mathcal {P}}_{i}\), a contradiction. Next, if \(G_{i}\) assigns an atom at 0, then \(\varPi _{j}\left( 0;G_{i}\right) <{\bar{\varPi }} _{j}\left( 0;G_{i}\right) \) for all \({\bar{\varPi }}_{j}\in {\mathcal {P}}_{j}\). Therefore, \(G_{i}\left( 0\right) >0\) implies \(G_{j}\left( 0\right) =0\).

(iii) \(G_{i}\left( p\right) \) is strictly increasing on \(\left( 0,{\hat{p}}_{i}\right) \): This immediately follows from Lemma 3.

(iv) \({\hat{p}}_{j}=\delta _{i}\left( {\hat{p}}_{i}\right) \): Suppose \({\hat{p}}_{j}>\delta _{i}\left( {\hat{p}}_{i}\right) \). By part (i), \({\bar{p}}_{j}=\delta _{i}\left( {\bar{p}}_{i}\right) \). Therefore, \(\delta _{i}\left( {\bar{p}}_{i}\right) >\delta _{i}\left( {\hat{p}}_{i}\right) \), or equivalently, \({\bar{p}}_{i}>{\hat{p}}_{i}\). Then, by the definition of \(\hat{p}_{i}\), we have \({\bar{p}}_{i}={\bar{p}}_{j}=1\), and \(G_{i}\) assigns an atom at \({\bar{p}}_{i}\). Also, this implies that for all \(p\in \left[ {\hat{p}} _{i},1\right) \), \(G_{i}\left( p\right) =G_{i}\left( {\hat{p}}_{i}\right) \). Then, Lemma 3 implies that \(\varPi _{j}\left( p_{j};G_{i}\right) <{\bar{\varPi }}_{j}\left( p_{j};G_{i}\right) \) for all \(p_{j}\in \left( \delta _{i}\left( {\hat{p}}_{i}\right) ,1\right) \) for some \({\bar{\varPi }}_{j}\in {\mathcal {P}}_{j}\), i.e., \({\hat{p}}_{j}\le \delta _{i}\left( \hat{p}_{i}\right) \), a contradiction.

\(\square \)

By (10), \(p_{i}\in \operatorname{supp}G_{i}\) only if \({\bar{\varPi }}_{i}( p_{i};G_{j}) =\varPi _{i}( p_{i};G_{j}) \). Therefore, properties (i)–(iv) together imply the linear structure of the payoff functions. \(\square \)

Proof of Corollary 1

Suppose \(( G_{1},G_{2}) \) is an equilibrium. By Theorem 1, \(\varPi _{i}\left( p_{i};G_{j}\right) \) is linear on the interval \((0,{\hat{p}}_{i}]\). Therefore,

The solution to the differential equation above is

for some integration constant \(C_{j}\) and \(\varLambda _{j}( s) \equiv -\frac{\rho ( ( s-\mu _{i}) \delta _{i}^{\prime }( s) +2( \delta _{i}( s) -\mu _{j}) ) }{\mu _{1}\mu _{2}( 1-\mu _{1}) ( 1-\mu _{2}) +\rho ( s-\mu _{i}) ( \delta _{i}( s) -\mu _{j}) }\). Substituting \(p_{i}={\hat{p}}_{i}\) in the solution above, we get \(C_{j}=\frac{G_{j}( {\hat{p}}_{j}) -G_{j}( 0) }{\int _{0}^{\delta _{j}( {\hat{p}}_{j}) }\varPhi _{j}( s^{\prime }) \mathrm{d}s^{\prime }}\). Equation (6) is obtained by substituting the integration constant into the solution above.

Next, using Eq. (6), the Bayes-plausibility condition for sender j can be simplified as follows.

This gives the simplified Bayes-plausibility condition.

The slope of \(\varPi _{i}( p_{i};G_{j}) \) on \([ 0,{\hat{p}} _{i}] \) is

Lastly,

Therefore, if \(G_{j}( {\hat{p}}_{j}) <1\), then

The other conditions follow from Theorem 1. \(\square \)

Proof of Theorem 2

Equilibrium Existence: The strategy space is compact (with respect to weak*-topology). Sender i’s payoff (as a function of strategy profile) \(V_{i}( G_{i},G_{j}) \equiv E_{G_{i}}[ \varPi _{i}( p;G_{j}) ] \ \)is linear in \(G_{i}\) and hence is quasiconcave in \(G_{i}\). The game is zero sum, i.e., \(V_{1}( G_{1},G_{2}) +V_{2}( G_{2},G_{1}) =1\), and hence satisfies reciprocal upper semicontinuity. Therefore, if we show that the payoff function satisfies payoff security, Corollary 3.3 of Reny (1999) guarantees the existence of a pure-strategy equilibrium. Fix an arbitrary strategy profile \(( G_{i},G_{j}) \) and \(\varepsilon >0\).

We show below that there exists a strategy \({\tilde{G}}_{i}\) of sender i that is continuous on \([ 0,1) \ \)and \(V_{i}( {\tilde{G}}_{i} ,G_{j}) >V_{i}( G_{i},G_{j}) -\varepsilon /2\). Note that the set of discontinuous points \(D\subset [ 0,1] \) of \(G_{i}\) is countable. We thus can denote \(D=\{ d_{1},d_{2},\cdots ,d_{l} ,\cdots \} \), where \(d_{l}<d_{l+1}\). Let \(t_{l}\) be the size of atom at \(d_{l}\). First, suppose \(\varPi _{i}( p_{i};G_{j}) \) is continuous at \(d_{l}\). Then, there exists an interval \(( d_{l}-\varepsilon _{l} ,d_{l}+\varepsilon _{l}) \) such that \(\varPi _{i}( p_{i};G_{j}) >\varPi _{i}( d_{l};G_{j}) -( \frac{\varepsilon }{2+\varepsilon }) ^{l}\) for all \(p_{i}\in ( d_{l}-\varepsilon _{l},d_{l} +\varepsilon _{l}) \). Now replacing the atom \(t_{l}\) at \(d_{l}\) with a uniform distribution over the interval \(( d_{l}-\varepsilon _{l} ,d_{l}+\varepsilon _{l}) \) gives a new distribution \(G_{i}^{\prime }\) such that \(G_{i}^{\prime }\) does not have an atom at \(d_{l}\) and \(V_{i}( G_{i}^{\prime },G_{j}) >V_{i}( G_{i},G_{j}) -( \frac{\varepsilon }{2+\varepsilon }) ^{l}\). Next suppose \(\varPi _{i}( p_{i};G_{j}) \) is discontinuous at \(d_{l}\). Recall that while \(\varPi _{i}( p_{i};G_{j}) \) may be decreasing, it cannot jump downward. Thus, it is necessary that \(\lim _{p_{i}\rightarrow d_{l}^{-}}\varPi _{i}( p_{i};G_{j})<\varPi _{i}( d_{l};G_{j}) <\lim _{p_{i} \rightarrow d_{l}^{+}}\varPi _{i}( p_{i};G_{j}) \). Choose a pair \(\varepsilon _{l},\varepsilon _{l}^{\prime }>0\) such that (i) for all \(p^{\prime }\in ( d_{l},d_{l}+\varepsilon _{l}) \), \(\varPi _{i}( d_{l} ;G_{j}) <\varPi _{i}( p^{\prime };G_{j}) \), and (ii) \(\frac{\varepsilon _{l}^{\prime }}{\varepsilon _{l}}>t_{l}( \frac{2+\varepsilon }{\varepsilon }) ^{l}-1\) and (iii) \(d_{l}-\varepsilon _{l}^{\prime }>0\). 
Replace the atom at \(d_{l}\) with a uniform distribution with density \(\frac{\varepsilon _{l}}{\varepsilon _{l}^{\prime }( \varepsilon _{l}+\varepsilon _{l}^{\prime }) }t_{l}\) over the interval \(( d_{l}-\varepsilon _{l}^{\prime },d_{l}) \), as well as a uniform distribution with density \(\frac{\varepsilon _{l}^{\prime }}{\varepsilon _{l}( \varepsilon _{l}+\varepsilon _{l}^{\prime }) }t_{l}\) over the interval \(( d_{l},d_{l}+\varepsilon _{l}) \) (see footnote 25). The new strategy \(G_{i}^{\prime }\) thus obtained has no atom at \(d_{l}\). Moreover, as the additional mass assigned to the interval \(( d_{l}-\varepsilon _{l}^{\prime },d_{l}) \) is \(\frac{\varepsilon _{l}}{\varepsilon _{l}^{\prime }( \varepsilon _{l}+\varepsilon _{l}^{\prime }) } t_{l}\times \varepsilon _{l}^{\prime }<( \frac{\varepsilon }{2+\varepsilon }) ^{l}\), we have \(V_{i}( G_{i}^{\prime },G_{j}) >V_{i}( G_{i},G_{j}) -( \frac{\varepsilon }{2+\varepsilon }) ^{l}\).
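The two-sided smoothing step can be checked with a few lines of arithmetic. The sketch below splits an atom of size \(t_{l}\) at \(d_{l}\) using the two densities stated above and reports the mass and mean of each uniform piece, so that one can confirm that total mass and the mean (Bayes-plausibility) are unchanged. It also sums the per-atom loss bounds \(( \frac{\varepsilon }{2+\varepsilon }) ^{l}\) over l; reading the \(\varepsilon /2\) in the payoff-security argument as the value of this geometric series is an interpretation made here, and the function names and numerical values are illustrative.

```python
def spread_atom(d, t, eps_right, eps_left):
    """Split an atom of size t at d into two uniform pieces.

    eps_right plays the role of epsilon_l and eps_left of epsilon_l' in the
    proof. Returns (mass, mean) for the piece on (d - eps_left, d) and for
    the piece on (d, d + eps_right).
    """
    total = eps_right + eps_left
    density_left = eps_right / (eps_left * total) * t
    density_right = eps_left / (eps_right * total) * t
    mass_left = density_left * eps_left
    mass_right = density_right * eps_right
    return (mass_left, d - eps_left / 2), (mass_right, d + eps_right / 2)


def total_loss_bound(eps, terms=200):
    """Partial sum of (eps / (2 + eps)) ** l over l = 1, ..., terms."""
    ratio = eps / (2 + eps)
    return sum(ratio ** l for l in range(1, terms + 1))


# Illustrative atom: size 0.2 at posterior 0.5, spread 0.04 left and 0.01 right.
(mass_l, mean_l), (mass_r, mean_r) = spread_atom(0.5, 0.2, 0.01, 0.04)
mass_after = mass_l + mass_r
first_moment_after = mass_l * mean_l + mass_r * mean_r
loss_bound = total_loss_bound(0.5)
```

In this example most of the mass moves to the right of \(d_{l}\), where the payoff is weakly higher, and only the small share `mass_l` moves to the left, which is the part that condition (ii) keeps below \(( \frac{\varepsilon }{2+\varepsilon }) ^{l}\).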

Next, as \({\tilde{G}}_{i}\) is continuous on [0, 1), \(V_{i}( {\tilde{G}}_{i},G_{j}) \) is lower semicontinuous with respect to \(G_{j}\) (see footnote 26). Consequently, there exists a neighborhood \(O( G_{j}) \) of \(G_{j}\) such that for all \(G_{j}^{\prime }\in O( G_{j}) \), \(V_{i}( \tilde{G}_{i},G_{j}^{\prime }) >V_{i}( {\tilde{G}}_{i},G_{j}) -\varepsilon /2\). We thus have payoff security at \(( G_{i},G_{j}) \).

Equilibrium Uniqueness: Suppose there are two equilibria \(( G_{1},G_{2}) \) and \(( G_{1}^{\prime },G_{2}^{\prime }) \). Define \({\hat{p}}_{i}\equiv \sup ( \operatorname{supp}G_{i}\cap ( 0,1) ) \) and \({\bar{p}}_{i}\equiv \sup ( \operatorname{supp} G_{i}) \); \({\hat{p}}_{i}^{\prime }\) and \({\bar{p}}_{i}^{\prime }\) are defined accordingly for the equilibrium \(( G_{1}^{\prime },G_{2}^{\prime }) \). By the interchangeability property of zero-sum games, \(( G_{1} ,G_{2}^{\prime }) \) and \(( G_{1}^{\prime },G_{2}) \) are also equilibria. As a result, \(G_{1}\) and \(G_{1}^{\prime }\) have a common support, i.e., \({\hat{p}}_{1}={\hat{p}}_{1}^{\prime }\) and \({\bar{p}}_{1}={\bar{p}}_{1}^{\prime }\). Similarly, \(G_{2}\) and \(G_{2}^{\prime }\) have a common support, i.e., \({\hat{p}}_{2}={\hat{p}}_{2}^{\prime }\) and \({\bar{p}}_{2}={\bar{p}}_{2}^{\prime }\). In other words, the support of equilibrium strategies is unique. Finally, we explain why this, together with Bayes-plausibility and the necessity of the linear structure of equilibrium strategy, uniquely pins down the equilibrium strategy. If \(G_{i}( {\hat{p}}_{i}) =1\) (and hence \(G_{j}( {\hat{p}} _{j}) =1\)), then the simplified Bayes-plausibility condition in Corollary 1 implies that \(G_{i}( 0) =G_{i}^{\prime }( 0) \) and \(G_{j}( 0) =G_{j}^{\prime }( 0) \). Next, suppose \(G_{1}( {\hat{p}}_{1}) <1\). For each \(i\in \{ 1,2\} \), fixing a \({\hat{p}}_{i}\), the simplified Bayes-plausibility condition and the atom condition give a system of two equations with two unknowns (\(G_{i}( 0) \) and \(G_{i}( {\hat{p}}_{i}) \)). It is straightforward to verify that there exists a unique solution to the system, so \(G_{i}( 0) =G_{i}^{\prime }( 0) \) and \(G_{i}( {\hat{p}} _{i}) =G_{i}^{\prime }( {\hat{p}}_{i}) \). \(\square \)

Proof of Theorem 3

For expositional simplicity, we refer to an equilibrium such that \(G_{1}( {\hat{p}}_{1}) =G_{2}( {\hat{p}}_{2}) =1\) as a type-N equilibrium, and to an equilibrium such that \(\max \{ G_{1}( {\hat{p}}_{1}) ,G_{2}( {\hat{p}}_{2}) \} <1\) as a type-A equilibrium.

Lemma 5

A strategy profile \(( G_{1},G_{2}) \) is a type-N equilibrium if and only if there exist (i) \({\hat{p}}_{1}\in [ 0,1] \) and \(\hat{p}_{2}=\delta _{1}( {\hat{p}}_{1}) \); and (ii) \(G_{1}( 0) =0\) and \(G_{2}( 0) \ge 0\) such that

A strategy profile \(( G_{1},G_{2}) \) is a type-A equilibrium if and only if there exist (i) \({\hat{p}}_{1}\in [ 0,1] \) and \(\hat{p}_{2}=\delta _{1}( {\hat{p}}_{1}) \); and (ii) \(G_{1}( 0) =0\) and \(G_{2}( 0) \ge 0\) such that

Proof

The linear structure of payoff functions implies that there exist \({\hat{p}} _{1}\in [ 0,1] \) and \({\hat{p}}_{2}=\delta _{1}( {\hat{p}} _{1}) \) such that we can express \({\tilde{\varPi }}_{i}\) as

for \(i=1,2\) and \(j\ne i\). Since \(G_{j}( \delta _{i}( p) ) ={\tilde{\varPi }}_{i}( p;G_{j}) \) for \(p\in ( 0,\hat{p}_{i}) \), we have \(G_{j}( {\tilde{p}}) ={\tilde{\varPi }} _{i}( \delta _{j}( {\tilde{p}}) ;G_{j}) \) for \({\tilde{p}}\in ( 0,\delta _{j}( {\hat{p}}_{i}) ) =( 0,{\hat{p}}_{j}) \). Therefore,

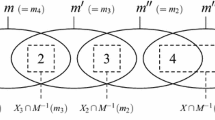

A few remarks are useful for our subsequent analysis. First, \(\delta _{1}( p) \) is an increasing concave function, while \(\delta _{2}( p) \) is an increasing convex function. Therefore, as illustrated in Fig. 5, \(G_{1}( p_{1}) \) is concave on the interval \((0,{\hat{p}}_{1}]\), whereas \(G_{2}( p_{2}) \) is convex on the interval \((0,{\hat{p}}_{2}]\). Second, \({\hat{p}}_{i}=\delta _{i}( {\hat{p}}_{j}) \); and \(\delta _{i}( p) =1\) if and only if \(p=1\). Third, if an equilibrium is type-A, i.e., \(\max \{ G_{1}( {\hat{p}}_{1}) ,G_{2}( {\hat{p}}_{2}) \} <1\), then the linear structure of payoff functions requires that for \(i=1,2\) and \(j\ne i\),

As \(\lim _{p\rightarrow 0^{+}}{\tilde{\varPi }}_{i}\left( p;G_{j}\right) =G_{j}\left( 0\right) \), \({\tilde{\varPi }}_{i}\left( 1;G_{j}\right) =\frac{1}{2}\left( 1+G_{j}\left( {\hat{p}}_{j}\right) \right) \) and \(\tilde{\varPi }_{i}\left( {\hat{p}}_{i};G_{j}\right) =G_{j}\left( {\hat{p}}_{j}\right) \), the equation above can be written as

We are left to show that \(G_{1}( 0) =0\). If \(G_{1}( 0) >0\), then \(G_{2}( 0) =0\). Suppose that there exists a type-N equilibrium, i.e., an equilibrium such that \(G_{1}( {\hat{p}} _{1}) =1\). Recall that \({\hat{p}}_{2}=\delta _{1}( {\hat{p}} _{1}) >{\hat{p}}_{1}\). As \(G_{1}\) is concave on the interval \((0,\hat{p}_{1}]\), whereas \(G_{2}\) is convex on the interval \((0,{\hat{p}}_{2}]\), we have that \(G_{1}( p) \ge G_{2}( p) \) for all p with strict inequality on the interval \(( 0,{\hat{p}}_{1}) \). This implies \(G_{2}\) first-order stochastically dominates \(G_{1}\), contradicting that \(\mu _{1}>\mu _{2}\). Next, suppose there exists a type-A equilibrium, i.e., an equilibrium such that \(G_{1}( {\hat{p}}_{1}) <1\). Rearranging condition (12) and using the hypothesis that \(G_{1}( 0) >0=G_{2}( 0) \), we get

As \({\hat{p}}_{2}>{\hat{p}}_{1}\), we have \(G_{1}( {\hat{p}}_{1}) >G_{2}( {\hat{p}}_{2}) \). Consequently, \(G_{1}( p) \ge G_{2}( p) \) with strict inequality on the interval (0, 1) . This implies \(G_{2}\) first-order stochastically dominates \(G_{1}\), contradicting that \(\mu _{1}>\mu _{2}\). Therefore, we have the required result.

Finally, the conditions \(\mu _{i}=E_{i}^{A}({\hat{p}}_{1}) \) for type-A equilibrium, and \(\mu _{i}=E_{i}^{N}( {\hat{p}}_{1}) \) for type-N equilibrium, are obtained by the Bayes-plausibility condition for sender i. \(\square \)

Now we prove the comparative statics result. Take a pair of normalized quality covariances \(\rho _{L}\) and \(\rho _{H}>\rho _{L}\). Let \(k_{l}=\frac{\mu _{1} }{1-\mu _{1}}\frac{1-\mu _{2}}{\mu _{2}}\frac{( 1-\mu _{1}) \mu _{2}-\rho _{l}}{\mu _{1}( 1-\mu _{2}) -\rho _{l}}\), \(l=L,H\). It is immediate that \(\rho _{L}<\rho _{H}\) is equivalent to \(k_{L}>k_{H}\). Denote by \(( G_{1l},G_{2l}) \) the unique equilibrium under normalized covariance \(\rho _{l}\), for \(l\in \{ L,H\} \). Other functions such as \(\delta _{il}\), \({\tilde{\varPi }}_{il}\), \(E_{il}^{N},\) and \(E_{il}^{A}\), as well as parameters such as \({\hat{p}}_{il}\) are also defined accordingly for \(l\in \{ L,H\} \).
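The equivalence between \(\rho _{L}<\rho _{H}\) and \(k_{L}>k_{H}\), and the resulting ordering \(\delta _{1L}( p) <\delta _{1H}( p) \) used in the cases below, can be checked numerically. The following is a minimal sketch rather than part of the proof: the priors \(\mu _{1}=0.6\), \(\mu _{2}=0.4\) and the grid of \(\rho \) values are illustrative assumptions, and the function names `k_ratio` and `delta1` are hypothetical. The formula for \(\delta _{1}\) is the one recalled later in this proof.

```python
def k_ratio(mu1, mu2, rho):
    """k_l as defined in the text, for priors mu1, mu2 and normalized covariance rho."""
    odds = (mu1 / (1 - mu1)) * ((1 - mu2) / mu2)
    return odds * ((1 - mu1) * mu2 - rho) / (mu1 * (1 - mu2) - rho)


def delta1(p, k):
    """delta_1(p) = (1 + k (1 - p) / p)^(-1), rearranged so that p = 0 is allowed."""
    return p / (p + k * (1 - p))


# Illustrative (assumed) priors with mu1 > mu2; rho ranges over [0, mu2 * (1 - mu1)).
mu1, mu2 = 0.6, 0.4
rhos = [0.0, 0.04, 0.08, 0.12, 0.159]
ks = [k_ratio(mu1, mu2, r) for r in rhos]

for r, k in zip(rhos, ks):
    print(f"rho = {r:.3f}  k = {k:.4f}  delta_1(0.5) = {delta1(0.5, k):.4f}")
```

In this example \(k\) falls from 1 at \(\rho =0\) toward 0 as \(\rho \) approaches \(\mu _{2}( 1-\mu _{1}) \), and \(\delta _{1}( 0.5) \) rises accordingly.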

Case 1 \(\mu _{1}\in ( 0,1-\int _{0}^{1}\delta _{1H}( p) \mathrm{d}p) \): As \(\delta _{1L}( p) <\delta _{1H}( p) \) for all \(p\in ( 0,1) \), the equilibria are of type-N for both \(\rho _{L}\) and \(\rho _{H}\), i.e., \(G_{1L}( {\hat{p}}_{1L}) =G_{1H}( {\hat{p}}_{1H}) =1\). Furthermore, as \(E_{1L}^{N}( \cdot ) >E_{1H}^{N}( \cdot ) \) and \(E_{il}^{N}( \cdot ) \) is a strictly increasing function, we have \({\hat{p}}_{1L} <{\hat{p}}_{1H}\) because of the equilibrium condition \({\hat{p}}_{1l}=( E_{il}^{N}) ^{-1}( \mu _{1}) \). Next, note that on the interval \([ 0,{\hat{p}}_{2L}] \), while \({\tilde{\varPi }}_{2L}( p;G_{1L}) =G_{1L}( \delta _{2L}( p) ) \) is linear, \({\tilde{\varPi }}_{2H}( \delta _{1H}( \delta _{2L}( p) ) ;G_{1H}) =G_{1H}( \delta _{2L}( p) ) \) is concave. Furthermore, as \(G_{1L}( \delta _{2L}( 0) ) =G_{1H}( \delta _{2L}( 0) ) =0\), and \(1=G_{1L}( \delta _{2L}( {\hat{p}}_{2L}) ) >G_{1H}( \delta _{2L}( {\hat{p}}_{2L}) ) \), \(G_{1L}( p) \) and \(G_{1H}( p) \) cross exactly once on \((0,{\hat{p}}_{1L}]\). This proves \(G_{1H}\succ G_{1L}\).

Next, we consider sender 2’s strategy. First \({\hat{p}}_{1L}<{\hat{p}}_{1H}\) implies \({\hat{p}}_{2L}<{\hat{p}}_{2H}\). We show below that \(G_{2L}( 0) <G_{2H}( 0) \). Suppose not, then \(G_{2L}( 0) \ge G_{2H}( 0) \), together with the linear structure of \({\tilde{\varPi }}_{1}\), implies \({\tilde{\varPi }}_{1}( p;G_{2L}) \ge {\tilde{\varPi }}_{1}( p;G_{2H}) \) on \([ 0,{\hat{p}} _{1L}] \). Since for all \(p>0\), \({\tilde{\varPi }}_{1L}( p;G_{2L} ) =G_{2L}( \delta _{1L}( p) ) \) and \({\tilde{\varPi }}_{1H}( p;G_{2H}) =G_{2H}( \delta _{1H}( p) ) >G_{2H}( \delta _{1L}( p) ) \), we have that \(G_{2H}\) first-order stochastically dominates \(G_{2L}\), a contradiction. Next, on \((0,{\hat{p}}_{1L}]\), while \(G_{2L}( \delta _{1L}( p) ) ={\tilde{\varPi }}_{1L}( p;G_{2L}) \) is linear, \(G_{2H}( \delta _{1L}( p) ) ={\tilde{\varPi }} _{1L}( \delta _{2H}( \delta _{1L}( p) ) ;G_{2H}) \) is strictly convex in p. Also \(G_{2L}( \delta _{1L}( 0) ) =G_{2L}( 0) <G_{2H}( 0) =G_{2H}( \delta _{1L}( 0) ) \), and \(1=G_{2L}( \delta _{1L}( {\hat{p}}_{1L}) ) >G_{2H}( \delta _{1L}( {\hat{p}}_{1L}) ) \). Hence, \(G_{2L}( p) \) and \(G_{2H}( p) \) cross exactly once on the interval \((0,{\hat{p}}_{2L}]\). We thus have \(G_{2H}\succ G_{2L}\).

Case 2 \(\mu _{1}\in [ 1-\int _{0}^{1}\delta _{1L}( p) \mathrm{d}p,1) \): In this case, both \(G_{1H}\) and \(G_{1L}\) are of type-A. We start with the proof that \({\hat{p}}_{1H}<{\hat{p}}_{1L}\) and \({\hat{p}}_{2H} <{\hat{p}}_{2L}\). Suppose that \({\hat{p}}_{2H}\ge {\hat{p}}_{2L}\). Then, the linear structure of \({\tilde{\varPi }}_{2l}\) requires that \(\frac{{\tilde{\varPi }}_{2L}( {\hat{p}}_{2L};G_{1L}) }{{\hat{p}}_{2L}}<\frac{{\tilde{\varPi }}_{2H}( {\hat{p}}_{2H};G_{1H}) }{{\hat{p}}_{2H}}\). This implies that \(\tilde{\varPi }_{2L}( p;G_{1L}) \le {\tilde{\varPi }}_{2H}( p;G_{1H}) \) for all \(p\in [ 0,{\hat{p}}_{2L}] \). Notice, however, that \({\tilde{\varPi }}_{2L}( p;G_{1L}) =G_{1L}( \delta _{2L}( p) ) >G_{1L}( \delta _{2H}( p) ) \) and \({\tilde{\varPi }}_{2H}( p;G_{1H}) =G_{1H}( \delta _{2H}( p) ) \). Therefore, \(G_{1L}\) first-order stochastically dominates \(G_{1H}\), a contradiction. Since \(\delta _{1L}( p) <\delta _{1H}( p) \), \({\hat{p}}_{2H}<{\hat{p}}_{2L}\) implies \({\hat{p}} _{1H}<{\hat{p}}_{1L}\).

Notice that \({\hat{p}}_{2H}<{\hat{p}}_{2L}\) and the linear structure of \({\tilde{\varPi }}_{2}\) together imply \({\tilde{\varPi }}_{2L}( p;G_{1L}) >{\tilde{\varPi }}_{2H}( p;G_{1H}) \) and \({\tilde{\varPi }}_{2L}( {\hat{p}}_{2L};G_{1L}) >{\tilde{\varPi }}_{2H}( {\hat{p}}_{2H} ;G_{1H}) \). On the interval \([ 0,{\hat{p}}_{2H}] \), \(G_{1L}( \delta _{2L}( p) ) ={\tilde{\varPi }}_{2L}( p;G_{1L}) \) is linear while \(G_{1H}( \delta _{2L}( p) ) ={\tilde{\varPi }}_{2H}( \delta _{1H}( \delta _{2L}( p) ) ;G_{1H}) \) is strictly concave. Furthermore, \(G_{1L}( \delta _{2L}( 0) ) =G_{1H}( \delta _{2L}( 0) ) =0\) and \(G_{1L}( \delta _{2L}( {\hat{p}}_{2H}) ) >G_{1H}( \delta _{2L}( {\hat{p}}_{2H}) ) \). Therefore, \(G_{1L}( p) \) and \(G_{1H}( p) \) cross exactly once on \((0,{\hat{p}}_{1H}]\). Since \(G_{1H}( p) <G_{1L}( p) \) for all \(p\in ( {\hat{p}}_{1H},1) \), we have \(G_{1H}\succ G_{1L}\).

Next, we establish that \(G_{2H}\succ G_{2L}\). To this end, we first show \(G_{2H}( 0) >G_{2L}( 0) \) and \(G_{2H}( \hat{p}_{2H}) <G_{2L}( {\hat{p}}_{2L}) \). Recall from the lemma above that the simplified Bayes-plausibility condition for sender 2 is \(\mu _{2}=( 1-G_{2l}( 0) ) B( {\hat{p}} _{1l},\delta _{1l}) \), where \(B( {\hat{p}}_{1l},\delta _{1l}) \equiv \frac{\int _{0}^{{\hat{p}}_{1l}}\delta _{1l}( s) \mathrm{d}s+2( 1-{\hat{p}}_{1l}) }{2-{\hat{p}}_{1l}}\). Furthermore, \(B( {\hat{p}} _{1L},\delta _{1H}) <B( {\hat{p}}_{1H},\delta _{1H}) \) because \({\hat{p}}_{1H}<{\hat{p}}_{1L}\) and \(\frac{\partial B( {\hat{p}}_{1l} ,\delta _{1l}) }{\partial {\hat{p}}_{1l}}=\frac{-2( 1-\delta ( {\hat{p}}_{1l}) ) -\int _{0}^{{\hat{p}}_{1}}[ \delta _{1l}( {\hat{p}}_{1}) -\delta _{1l}( s) ] \mathrm{d}s}{( 2-{\hat{p}}_{1l}) ^{2}}<0\). As \(B( {\hat{p}}_{1L} ,\delta _{1L}) <B( {\hat{p}}_{1L},\delta _{1H}) \), \(G_{2H}( 0) >G_{2L}( 0) \). Next, we show that \(G_{2H}( {\hat{p}}_{2H}) <G_{2L}( {\hat{p}}_{2L}) \). Observe that the simplified Bayes-plausibility condition, together with the linear structure of the equilibrium, implies that \(G_{2l}( {\hat{p}} _{2l}) =1-\mu _{2}( 1+\frac{\int _{0}^{\delta _{2l}( \hat{p}_{2l}) }\delta _{1l}( s) \mathrm{d}s}{2( 1-\delta _{2l}( {\hat{p}}_{2l}) ) }) ^{-1}\). Recall that \(\delta _{1l}( p) \equiv ( 1+k_{l}\frac{1-p}{p}) ^{-1}\). Evaluating the integral gives \(\frac{\int _{0}^{\delta _{2l}( {\hat{p}}_{2l}) }\delta _{1l}( s) \mathrm{d}s}{2( 1-\delta _{2l}( {\hat{p}}_{2l}) ) }=\frac{\frac{k_{l}}{1-k_{l}} {\hat{p}}_{2l}+\frac{k_{l}}{( 1-k_{l}) ^{2}}( 1-( 1-k_{l}) {\hat{p}}_{2l}) \ln ( 1-( 1-k_{l}) {\hat{p}}_{2l}) }{1-{\hat{p}}_{2l}}\equiv Q( {\hat{p}}_{2l} ,k_{l}) \). 
By direct computation, \(\frac{\partial Q}{\partial \hat{p}_{2l}}=\frac{k_{l}( {\hat{p}}_{2l}( 1-k_{l}) +k_{l} \ln ( 1-( 1-k_{l}) {\hat{p}}_{2l}) ) }{( 1-{\hat{p}}_{2l}) ^{2}( k_{l}-1) ^{2}}>0\) and \(\frac{\partial Q}{\partial k_{l}}=\frac{( 1-k_{l}^{2}) {\hat{p}} _{2l}+( 1-( 1-k_{l}) {\hat{p}}_{2l}+k_{l}) \ln ( 1-( 1-k_{l}) {\hat{p}}_{2l}) }{( 1-{\hat{p}} _{2l}) ( 1-k_{l}) ^{3}}>0\). We thus have \(Q( \hat{p}_{2L},k_{L})>Q( {\hat{p}}_{2L},k_{H}) >Q( \hat{p}_{2H},k_{H}) \), so \(G_{2L}( {\hat{p}}_{2L}) >G_{2H}( {\hat{p}}_{2H}) \).
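The two partial derivatives obtained "by direct computation" can be sanity-checked against finite differences. The sketch below takes \(Q\) exactly as written in the closed form above and compares the stated expressions for \(\partial Q/\partial {\hat{p}}_{2l}\) and \(\partial Q/\partial k_{l}\) with central differences. The function names, the evaluation grid, and the step size are assumptions made for illustration, and the check covers only \(k\in ( 0,1) \).

```python
import math


def Q(p, k):
    """Q(p, k) as written in the text (closed form), for p in (0, 1), k in (0, 1)."""
    a = 1 - k
    num = (k / a) * p + (k / a**2) * (1 - a * p) * math.log(1 - a * p)
    return num / (1 - p)


def dQ_dp(p, k):
    """Stated expression for the partial derivative of Q with respect to p."""
    a = 1 - k
    return k * (a * p + k * math.log(1 - a * p)) / ((1 - p) ** 2 * a**2)


def dQ_dk(p, k):
    """Stated expression for the partial derivative of Q with respect to k."""
    a = 1 - k
    num = (1 - k**2) * p + (1 - a * p + k) * math.log(1 - a * p)
    return num / ((1 - p) * a**3)


def central_diff(f, x, h=1e-6):
    """Second-order central finite difference of a scalar function."""
    return (f(x + h) - f(x - h)) / (2 * h)


for p in (0.2, 0.5, 0.8):
    for k in (0.2, 0.5, 0.8):
        num_dp = central_diff(lambda x: Q(x, k), p)
        num_dk = central_diff(lambda x: Q(p, x), k)
        print(f"p={p}, k={k}: dQ/dp {dQ_dp(p, k):.6f} vs {num_dp:.6f}, "
              f"dQ/dk {dQ_dk(p, k):.6f} vs {num_dk:.6f}")
```

On this grid both stated derivatives agree with the numerical ones and are positive, which is what the chain \(Q( {\hat{p}}_{2L},k_{L}) >Q( {\hat{p}}_{2L},k_{H}) >Q( {\hat{p}}_{2H},k_{H}) \) requires.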

The linear structure of \({\tilde{\varPi }}_{1l}\), together with the facts that \({\tilde{\varPi }}_{1L}( 0;G_{2L}) <{\tilde{\varPi }}_{1H}( 0;G_{2H}) \), \({\hat{p}}_{1H}<{\hat{p}}_{1L}\), and \({\tilde{\varPi }}_{1L}( {\hat{p}}_{1L};G_{2L}) >{\tilde{\varPi }}_{1H}( {\hat{p}}_{1H};G_{2H}) \), implies \({\tilde{\varPi }}_{1L}( 1;G_{2L}) >{\tilde{\varPi }}_{1H}( 1;G_{2H}) \). Next, denote by \(\operatorname{con}[ {\tilde{\varPi }}_{1L}( p;G_{2L}) ] \) the concave closure of \({\tilde{\varPi }}_{1L}( p;G_{2L}) \). Note that both \(\operatorname{con}[ {\tilde{\varPi }}_{1L}( p;G_{2L}) ] \) and \(\operatorname{con}[ {\tilde{\varPi }}_{1H}( \delta _{2H}( \delta _{1L}( p) ) ;G_{2H}) ] \) are linear in \(p\in [ 0,1] \). Moreover, \(\operatorname{con}[ {\tilde{\varPi }}_{1H}( \delta _{2H}( \delta _{1L}( 0) ) ;G_{2H}) ] =\operatorname{con}[ {\tilde{\varPi }}_{1H}( 0;G_{2H}) ] =G_{2H}( 0) >G_{2L}( 0) =\operatorname{con}[ {\tilde{\varPi }}_{1L}( 0;G_{2L}) ] \), and \(\operatorname{con}[ {\tilde{\varPi }}_{1H}( \delta _{2H}( \delta _{1L}( 1) ) ;G_{2H}) ] =\operatorname{con}[ {\tilde{\varPi }}_{1H}( 1;G_{2H}) ] =\frac{1}{2}( 1+G_{2H}( {\hat{p}}_{2H}) ) <\frac{1}{2}( 1+G_{2L}( {\hat{p}}_{2L}) ) =\operatorname{con}[ {\tilde{\varPi }}_{1L}( 1;G_{2L}) ] \). Therefore, \(\operatorname{con}[ {\tilde{\varPi }}_{1L}( p;G_{2L}) ] \) and \(\operatorname{con}[ {\tilde{\varPi }}_{1H}( \delta _{2H}( \delta _{1L}( p) ) ;G_{2H}) ] \) cross exactly once. Consequently, it is necessary that \(G_{2L}( p) \) and \(G_{2H}( p) \) cross exactly once, and \(G_{2H}\succ G_{2L}\).

Case 3 \(\mu _{1}\in [ 1-\int _{0}^{1}\delta _{1H}( p) \mathrm{d}p,1-\int _{0}^{1}\delta _{1L}( p) \mathrm{d}p) \): In this case, while \(G_{1L}\) is of type-N, \(G_{1H}\) is of type-A. An argument identical to that for Cases 1 and 2 above implies that \(G_{iH}\succ G_{iL}\).
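The three cases partition the range of \(\mu _{1}\) by the thresholds \(1-\int _{0}^{1}\delta _{1H}( p) \mathrm{d}p\) and \(1-\int _{0}^{1}\delta _{1L}( p) \mathrm{d}p\). As a rough illustration, the sketch below computes these thresholds and reports which case applies. It assumes \(\delta _{1}( p) =p/( k+( 1-k) p) \), an algebraic rearrangement of the definition recalled above, for which \(\int _{0}^{1}\delta _{1}( p) \mathrm{d}p=\frac{1}{1-k}+\frac{k\ln k}{( 1-k) ^{2}}\) when \(k\ne 1\). The function names and the parameter values are hypothetical.

```python
import math


def k_ratio(mu1, mu2, rho):
    """k_l as defined in the text."""
    odds = (mu1 / (1 - mu1)) * ((1 - mu2) / mu2)
    return odds * ((1 - mu1) * mu2 - rho) / (mu1 * (1 - mu2) - rho)


def threshold(k):
    """1 - integral over [0, 1] of delta_1(p) = p / (k + (1 - k) p), for k > 0."""
    if abs(1 - k) < 1e-6:
        return 0.5  # delta_1(p) = p when k = 1
    integral = 1 / (1 - k) + k * math.log(k) / (1 - k) ** 2
    return 1 - integral


def classify(mu1, mu2, rho_L, rho_H):
    """Return which of the three cases of the proof applies (requires rho_L < rho_H)."""
    t_H = threshold(k_ratio(mu1, mu2, rho_H))
    t_L = threshold(k_ratio(mu1, mu2, rho_L))
    if mu1 < t_H:
        return "Case 1"  # type-N under both rho_L and rho_H
    if mu1 >= t_L:
        return "Case 2"  # type-A under both rho_L and rho_H
    return "Case 3"      # type-N under rho_L, type-A under rho_H


print(classify(0.3, 0.2, 0.01, 0.02))
print(classify(0.6, 0.4, 0.04, 0.08))
print(classify(0.3, 0.2, 0.01, 0.135))
```

Because \(k_{H}<k_{L}\), the threshold under \(\rho _{H}\) is the smaller of the two, so the three intervals are ordered as in the text. The threshold also tends to 0 as \(k\rightarrow 0\), which is the fact used in the limit argument that follows.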

We now discuss the limit of the unique equilibrium of the auxiliary game when \(\rho \) approaches \({\bar{\rho }}\equiv \mu _{2}( 1-\mu _{1}) \). Note that for a sufficiently large \(\rho \), the unique equilibrium is of type-A, i.e., \(\mu _{1}\in ( 1-\int _{0}^{1}\delta ( p) \mathrm{d}p,1) \), because \(1-\int _{0}^{1}\delta ( p) \mathrm{d}p\rightarrow 0\) as \(\rho \rightarrow {\bar{\rho }}\). Consider the limit of sender 1’s strategy. Note first that \(\lim _{\rho \rightarrow {\bar{\rho }}}{\hat{p}}_{1}=0\). For otherwise, there exists an increasing sequence \(\{ \rho _{N}\} \) with \(\rho _{N}\rightarrow {\bar{\rho }}\) such that in the associated sequence of equilibria, we have \({\hat{p}}_{2}\rightarrow 1\). This contradicts that \(\hat{p}_{2}\) is decreasing in \(\rho \). The Bayes-plausibility condition with respect to \(\mu _{1}\) implies that \(\mu _{1}\ge ( 1-G_{1}( \hat{p}_{1}) ) ( 1-{\hat{p}}_{1}) \) and \(1-\mu _{1}\ge G_{1}( {\hat{p}}_{1}) ( 1-{\hat{p}}_{1}) \). Rearranging the two inequalities above gives \(1-\frac{\mu _{1}}{1-{\hat{p}}_{1}}\le G_{1}( {\hat{p}}_{1}) \le \frac{1-\mu _{1}}{1-{\hat{p}}_{1}}\), which implies that \(G_{1}( {\hat{p}}_{1}) \rightarrow 1-\mu _{1}\). Therefore, \(G_{1}( p) \) converges to \(G_{1,F}( p) \) in distribution as \(\rho \rightarrow {\bar{\rho }}\).
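The squeeze step for sender 1 can be illustrated numerically. The sketch below evaluates the two bounds \(1-\frac{\mu _{1}}{1-{\hat{p}}_{1}}\) and \(\frac{1-\mu _{1}}{1-{\hat{p}}_{1}}\) obtained from the two Bayes-plausibility inequalities and shows that both approach \(1-\mu _{1}\) as \({\hat{p}}_{1}\rightarrow 0\). The value \(\mu _{1}=0.6\), the grid of \({\hat{p}}_{1}\) values, and the function name are assumptions made for illustration.

```python
def squeeze_bounds(mu1, p_hat):
    """Lower and upper bounds on G_1(p_hat_1) implied by the two inequalities."""
    lower = 1 - mu1 / (1 - p_hat)
    upper = (1 - mu1) / (1 - p_hat)
    return lower, upper


mu1 = 0.6  # illustrative prior
for p_hat in (0.2, 0.05, 0.01, 0.001):
    lower, upper = squeeze_bounds(mu1, p_hat)
    print(f"p_hat_1 = {p_hat:<6} lower = {lower:.5f}  upper = {upper:.5f}")
```

The gap between the two bounds equals \({\hat{p}}_{1}/( 1-{\hat{p}}_{1}) \), so it vanishes at the same rate as \({\hat{p}}_{1}\).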

Next, consider sender 2’s limit strategy. Since \({\hat{p}}_{1}=\frac{G_{2}( {\hat{p}}_{2}) -G_{2}( 0) }{\frac{1}{2}( 1+G_{2}( {\hat{p}}_{2}) ) -G_{2}( 0) }\), and \({\hat{p}}_{1}\rightarrow 0\), it is necessary that \(G_{2}( {\hat{p}} _{2}) -G_{2}( 0) \rightarrow 0\). Thus, \(G_{2}( p) \) converges to \(G_{2,F}( p) \) in distribution as \(\rho \rightarrow {\bar{\rho }}\). \(\square \)

Proof of Theorem 4

Since the game is zero sum and symmetric, Theorem 2 implies that the equilibrium is unique and symmetric. Moreover, \(\mu _{1}=\mu _{2}\equiv \mu \) implies that \(\delta _{1}( p) =\delta _{2}( p) =p\). Therefore, \(\varLambda ( s) =-\frac{3\rho ( s-\mu ) }{\mu ^{2}( 1-\mu ) ^{2}+\rho ( s-\mu ) ^{2}}\). By Lemma 4, \(G( 0) =0\) in a symmetric equilibrium. By Lemma 1, in an equilibrium such that \(G( {\hat{p}}) =1\), we have

Also, in an equilibrium such that \(G( {\hat{p}}) <1\), we have \(\mu \varPi ( 1) =\frac{1}{2}\) by the linear structure of the payoff function. Then, using Lemma 4, we obtain

Let \(G_{{\tilde{\rho }}}( p) \) be the equilibrium strategy when \(\rho ={\tilde{\rho }}\). Similarly, define \({\hat{p}}_{{\tilde{\rho }}}\equiv \sup ( \operatorname{supp}G_{{\tilde{\rho }}}\cap ( 0,1) ) \). Take a pair \(\rho ,\rho ^{\prime }\in [ 0,\mu ( 1-\mu ) ] \) with \(\rho ^{\prime }>\rho \).

First, suppose \(\mu \le 1/2\). By (13), for \(p<2\mu \),

Thus, \(G_{\rho }( p) >G_{\rho ^{\prime }}( p) \) for all \(p\in ( 0,\mu ) \), and \(G_{\rho }( p) <G_{\rho ^{\prime }}( p) \) for all \(p\in ( \mu ,1) \). Therefore, \(G_{\rho }\succ G_{\rho ^{\prime }}\).

Next, suppose \(\mu >1/2\). Observe that both \({\hat{p}}\) and \(G( \hat{p}) \), as given by Eq. (14), are increasing in \(\rho \). Moreover, for \(p<{\hat{p}}\), \(\frac{\partial G( p) }{\partial \rho }\) is given by Eq. (15) above. Therefore, if \({\hat{p}}_{\rho }\ge \mu \), then \(G_{\rho }( p) >G_{\rho ^{\prime }}( p) \) for all \(p\in ( 0,\mu ) \) and \(G_{\rho }( p) <G_{\rho ^{\prime }}( p) \) for all \(p\in ( \mu ,1) \). On the other hand, if \({\hat{p}}_{\rho }<\mu \), then \(G_{\rho }( p) >G_{\rho ^{\prime } }( p) \) for all \(p\in ( 0,{\hat{p}}_{\rho }) \). Thus, \(G_{\rho }\succ G_{\rho ^{\prime }}\).

Finally, as \(\lim _{\rho \rightarrow {\bar{\rho }}}{\hat{p}}_{\rho }>0\) for all possible values of \(\mu \), the limiting strategy \(G( p) \) as \(\rho \rightarrow {\bar{\rho }}\) is informative. \(\square \)

Proof of Theorem 5

In a game in which the normalized covariance of proposal qualities is \(\rho \), denote by \(\varPi _{i}^{\rho }( p_{i};G_{j}) \) the payoff function of sender i, by \(G_{i,\rho }\) the equilibrium strategy of sender i, and by \(\delta _{i}^{\rho }( p) \) the transformation function identified in Lemma 2. Define \({\hat{p}}_{i}^{\rho }\equiv \sup ( \operatorname{supp}( G_{i,\rho }) \backslash \{ 1\} ) \).

We first show that \(G_{1,\rho }( {\hat{p}}_{1}^{\rho }) <1\) for \(\rho \) sufficiently close to \({\bar{\rho }}\). Suppose not. Then, there exists a sequence \(\{ \rho _{n}\} \) such that \(\lim _{n\rightarrow \infty }\rho _{n}={\bar{\rho }}\), and for all n, \(G_{1,\rho _{n}}( {\hat{p}}_{1}^{\rho _{n}}) =\varPi _{2}^{\rho _{n}}( 1;G_{1,\rho _{n}}) =1\). Then, the linear structure of \(\varPi _{2}\) implies that for any \(p_{2}\in ( 0,1) \), \(\varPi _{2}^{\rho _{n}}( p_{2};G_{1,\rho _{n}}) /p_{2}>1\). Recall that \(\delta _{2}^{\rho _{n}}( p_{2}) \rightarrow 0\) as \(\rho _{n}\rightarrow {\bar{\rho }}\) and

Therefore, for an arbitrary pair \(\varepsilon _{1},\varepsilon _{2}>0\) and for \(p_{2}\in \left( 1-\varepsilon _{1},1\right) \), there exists an \({\bar{n}}_{1}\) such that \(n>{\bar{n}}_{1}\) implies

and hence \(\varPi _{2}^{\rho _{n}}( p_{2};G_{1,\rho _{n}}) /p_{2}<1\), which is a contradiction.

Next, let \(\{ \rho _{n}\} \) be a sequence such that \(\lim _{n\rightarrow \infty }\rho _{n}={\bar{\rho }}\). Since \(\{ {\hat{p}}_{2}^{\rho _{n}}\} _{n}\) is increasing, \(\lim _{n\rightarrow \infty }{\hat{p}}_{2}^{\rho _{n}}\) exists. We show that \(\lim _{n\rightarrow \infty }{\hat{p}}_{2}^{\rho _{n}}<1\) and \(\lim _{n\rightarrow \infty }{\hat{p}}_{1}^{\rho _{n}}=0\). If \(\lim _{n\rightarrow \infty }{\hat{p}}_{2}^{\rho _{n}}=1\), then by the argument of the preceding paragraph, for any \(\varepsilon _{1}>0\), \(\varepsilon _{2}>0\), and \(p_{2}>1-\varepsilon _{1}\), there exists \({\bar{n}}_{2}\) such that \(n>{\bar{n}}_{2}\) implies \({\hat{p}}_{2}^{\rho _{n}}>p_{2}\) and \(\varPi _{2}^{\rho _{n}}( p_{2};G_{1,\rho _{n}}) <\varepsilon _{2}\), and hence

However, the linear structure of \(\varPi _{2}\) implies \(\varPi _{2}^{\rho _{n}}( 0;G_{1,\rho _{n}}) =\frac{\varPi _{2}^{\rho _{n}}( p_{2};G_{1,\rho _{n}}) -p_{2}\varPi _{2}^{\rho _{n}}( 1;G_{1,\rho _{n}}) }{1-p_{2}}\). Therefore, we have \(\varPi _{2}^{\rho _{n}}( 0;G_{1,\rho _{n}}) <0\), a contradiction. Furthermore, since \({\hat{p}}_{1}^{\rho _{n}}=\delta _{2}^{\rho _{n}}( {\hat{p}}_{2}^{\rho _{n}}) \), we have \(\lim _{n\rightarrow \infty }{\hat{p}}_{1}^{\rho _{n}}=0\).

We are ready to show that \(G_{1,\rho _{n}}\) converges to \(G_{1,F}\) in distribution. Observe that

Therefore, the simplified Bayes-plausibility condition for sender 1 implies

and hence \(1-\mu _{1}\ge \lim _{n\rightarrow \infty }G_{1,\rho _{n}}( \hat{p}_{1}^{\rho _{n}}) \). At the same time, the simplified Bayes-plausibility condition implies \(1-\mu _{1}\le G_{1,\rho _{n}}( {\hat{p}}_{1}^{\rho _{n}}) ( 1-T_{1}( {\hat{p}}_{2}^{\rho _{n} }) ) \le G_{1,\rho _{n}}( {\hat{p}}_{1}^{\rho _{n}}) \). Therefore, \(\lim _{n\rightarrow \infty }G_{1,\rho _{n}}( {\hat{p}} _{1}^{\rho _{n}}) =\) \(1-\mu _{1}\), and \(G_{1,\rho _{n}}\) converges to \(G_{1,F}\) in distribution.

We now show that \(G_{2,\rho _{n}}\) converges to \(G_{2,F}\) in distribution. We first show that \(G_{1,\rho }( 0) =0\) for all \(\rho \) sufficiently close to \({\bar{\rho }}\). Suppose not. Then, there exists a sequence \(\{ \rho _{n}\} \) such that \(\lim _{n\rightarrow \infty }\rho _{n}={\bar{\rho }}\) and \(G_{1,\rho _{n}}( 0) >0\) for all n. For each \(\varepsilon _{3}\in ( 0,\mu _{1}) \), \(\varepsilon _{4}>0\), and \(p_{1}\in ( 0,\varepsilon _{3}) \), there exists an \({\bar{n}}_{3}\) such that \(n>{\bar{n}}_{3}\) implies that

is bounded from below by \(1-\varepsilon _{4}\). However, \(G_{1,\rho _{n}}\left( 0\right) >0\) implies \(G_{2,\rho _{n}}\left( 0\right) =0\) and hence \(\varPi _{1}^{\rho _{n}}\left( 0;G_{2,\rho _{n}}\right) =0\). Furthermore, \(\varPi _{1}^{\rho _{n}}\left( 1;G_{2,\rho _{n}}\right) \ge 1/2\). Therefore, \(\varPi _{1}^{\rho _{n}}\left( 0;G_{2,\rho _{n}}\right) <\varPi _{1}^{\rho _{n}}\left( p_{1};G_{2,\rho _{n}}\right) \) and \(\varPi _{1}^{\rho _{n}}\left( 0;G_{2,\rho _{n}}\right) <\varPi _{1}^{\rho _{n}}\left( 1;G_{2,\rho _{n}}\right) \). Hence, \(0\notin \operatorname{supp}G_{1,\rho _{n}}\), which contradicts \(G_{1,\rho _{n}}\left( 0\right) >0\).

It remains to show that \({\hat{p}}_{2}^{\rho }\rightarrow 0\) as \(\rho \rightarrow {\bar{\rho }}\); given this, an argument similar to that for the convergence of \(G_{1}\) establishes that \(G_{2,\rho }\) converges to \(G_{2,F}\) in distribution. To see that \(\lim _{\rho \rightarrow {\bar{\rho }}}{\hat{p}}_{2}^{\rho }=0\), suppose to the contrary that there exists a sequence \(\{ \rho _{n}\} \) that converges to \({\bar{\rho }}\) with \(\lim _{n\rightarrow \infty }{\hat{p}}_{2}^{\rho _{n}}={\tilde{p}}_{2}\in ( 0,1) \). Note that we have already established that \(G_{1,\rho _{n}}( 0) =0\) for all n sufficiently large. Thus, for sufficiently large n, the atom condition at the top, together with \(\varPi _{2}^{\rho _{n}}( 1;G_{1,\rho _{n}}) \ge 1/2\), implies \(\varPi _{2}^{\rho _{n}}( {\tilde{p}}_{2};G_{1,\rho _{n}}) \ge {\tilde{p}}_{2}/2\). On the other hand, \(\lim _{n\rightarrow \infty }{\hat{p}}_{1}^{\rho _{n}}=0\) and \(\lim _{n\rightarrow \infty }G_{1,\rho _{n}}( \delta _{2}^{\rho _{n}}( {\tilde{p}}_{2}) ) =0\). Therefore, for any \(\varepsilon _{5}>0\), there exists an \({\bar{n}}_{4}\) such that \(n>{\bar{n}}_{4}\) implies \(\varPi _{2}^{\rho _{n}}( {\tilde{p}}_{2};G_{1,\rho _{n}}) \le \frac{1-{\tilde{p}}_{2}}{1-\mu _{2}}\varepsilon _{5}\), which contradicts \(\varPi _{2}^{\rho _{n}}( {\tilde{p}}_{2};G_{1,\rho _{n}}) \ge {\tilde{p}}_{2}/2\). \(\square \)

Cite this article

Au, P.H., Kawai, K. Competitive disclosure of correlated information. Econ Theory 72, 767–799 (2021). https://doi.org/10.1007/s00199-018-01171-7
